#Open Source Serverless Computing Platforms

Link

Serverless computing is the future. Early adopters are already reaping the benefits; get started with a proprietary or open-source serverless platform today.

#Cloud Computing Model#Cloud Computing Experts#Serverless Architecture#Serverless Computing#Open Source Serverless Computing Platforms#Cloud-Native Application Development

Text

The Evolution of PHP: Shaping the Web Development Landscape

In the dynamic world of web development, PHP has emerged as a true cornerstone, shaping the digital landscape over the years. As an open-source, server-side scripting language, PHP has played a pivotal role in enabling developers to create interactive and dynamic websites. Let's take a journey through time to explore how PHP has left an indelible mark on web development.

1. The Birth of PHP (1994)

PHP (Hypertext Preprocessor) came into being in 1994, thanks to Rasmus Lerdorf. Initially, it was a simple set of Common Gateway Interface (CGI) binaries used for tracking visits to his online resume. However, Lerdorf soon recognized its potential for web development, and PHP evolved into a full-fledged scripting language.

2. PHP's Role in the Dynamic Web (Late '90s to Early 2000s)

In the late '90s and early 2000s, PHP began to gain prominence due to its ability to generate dynamic web content. Unlike static HTML, PHP allowed developers to create web pages that could interact with databases, process forms, and provide personalized content to users. This shift towards dynamic websites revolutionized the web development landscape.

3. The Rise of PHP Frameworks (Mid-2000s to 2010s)

As PHP continued to grow in popularity, developers sought ways to streamline and standardize their development processes. This led to the emergence of PHP frameworks such as Symfony (2005), CodeIgniter (2006), and later Laravel (2011). These frameworks provided structured, reusable code and a wide range of pre-built functionality, significantly accelerating the development of web applications.

4. PHP and Content Management Systems (CMS) (Early 2000s)

Content Management Systems, such as WordPress, Joomla, and Drupal, rely heavily on PHP. These systems allow users to create and manage websites with ease. PHP's flexibility and extensibility make it the backbone of numerous plugins, themes, and customization options for CMS platforms.

5. E-Commerce and PHP (2000s to Present)

PHP has played a pivotal role in the growth of e-commerce. Platforms like Magento, WooCommerce (built on top of WordPress), and OpenCart are powered by PHP. These platforms provide robust solutions for online retailers, allowing them to create and manage online stores efficiently.

6. PHP's Contribution to Server-Side Scripting (Throughout)

PHP is renowned for its server-side scripting capabilities. It allows web servers to process requests and deliver dynamic content to users' browsers. This server-side scripting is essential for applications that require user authentication, data processing, and real-time interactions.
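The request-to-response cycle described above can be sketched in a few lines. This is a minimal illustration in Python rather than PHP, using the standard WSGI interface; the function name `app` and the `name` query parameter are assumptions made for the example, not anything from the original post.

```python
from html import escape
from urllib.parse import parse_qs


def app(environ, start_response):
    """WSGI application: builds the page per request instead of serving a static file."""
    query = parse_qs(environ.get("QUERY_STRING", ""))
    # Fall back to a generic greeting when no name is supplied.
    name = query.get("name", ["visitor"])[0]
    # Escape user input before placing it in HTML to avoid script injection.
    body = f"<h1>Hello, {escape(name)}!</h1>".encode("utf-8")
    start_response("200 OK", [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]
```

To try it locally, the callable can be passed to `wsgiref.simple_server.make_server("127.0.0.1", 8000, app)` from the standard library (port 8000 is an arbitrary choice). The same idea, with output computed on the server for each request, is what a PHP script does when it mixes markup with values read from the request.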

7. PHP's Ongoing Evolution (Throughout)

PHP has not rested on its laurels. It continues to evolve with each new version, introducing enhanced features, better performance, and improved security. PHP 7, for instance, brought significant speed improvements and reduced memory consumption, making it more efficient and appealing to developers.

8. PHP in the Modern Web (Present)

Today, PHP remains a key player in the web development landscape. It is the foundation of countless websites, applications, and systems. From popular social media platforms to e-commerce giants, PHP continues to power a significant portion of the internet.

9. The PHP Community (Throughout)

One of PHP's strengths is its vibrant and active community. Developers worldwide contribute to its growth by creating libraries, extensions, and documentation. The PHP community fosters knowledge sharing, making it easier for developers to learn and improve their skills.

10. The Future of PHP (Ongoing)

As web technologies continue to evolve, PHP adapts to meet new challenges. Its role in serverless computing, microservices architecture, and cloud-native applications is steadily increasing. The future holds exciting possibilities for PHP in the ever-evolving web development landscape.

In conclusion, PHP's historical journey is interwoven with the evolution of web development itself. From its humble beginnings to its current status as a web development powerhouse, PHP has not only shaped but also continues to influence the internet as we know it. Its versatility, community support, and ongoing evolution ensure that PHP will remain a vital force in web development for years to come.

#PHP#WebDevelopment#WebDev#Programming#ServerSide#ScriptingLanguage#PHPFrameworks#CMS#ECommerce#WebApplications#PHPCommunity#OpenSource#Technology#Evolution#DigitalLandscape#WebTech#Coding#Youtube

Text

Azure Data Engineering Tools For Data Engineers

Azure is Microsoft's cloud computing platform, and it offers an extensive set of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that can be tailored to an organization's specific requirements.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully-managed, serverless data integration tool designed to handle data at scale. It enables data engineers to acquire, analyze, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.
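One common building block of this kind of stream processing is the tumbling window: a fixed-size, non-overlapping time bucket over which events are aggregated. The sketch below shows the idea in plain Python; it is not the Stream Analytics query language or SDK, and the function name, event shape, and sensor IDs are hypothetical.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per (window_start, key) over fixed, non-overlapping windows.

    `events` is an iterable of (timestamp_seconds, key) pairs.
    """
    counts = Counter()
    for timestamp, key in events:
        # Integer division assigns each event to exactly one window.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


# Hypothetical sensor readings: (seconds since start, device id).
events = [(1, "sensor-a"), (4, "sensor-a"), (7, "sensor-b"), (12, "sensor-a")]
result = tumbling_window_counts(events, window_seconds=10)
# result == {(0, "sensor-a"): 2, (0, "sensor-b"): 1, (10, "sensor-a"): 1}
```

A real engine computes these aggregates incrementally as events arrive and handles late or out-of-order data; the batch version here only illustrates how events map to windows.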

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, serverless distributed database service that supports multiple database APIs, including PostgreSQL, MongoDB, and Apache Cassandra. It offers automatic and instant scalability, single-digit-millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source applications such as WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure Database for PostgreSQL

Azure Database for PostgreSQL is a fully managed open-source database service that lets teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers strong security, AI-driven performance optimization, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering
Classes: 200 hours of live classes
Lectures: 199 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 70% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps
Classes: 180+ hours of live classes
Lectures: 300 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 67% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training

Text

Why Learning Microsoft Azure Can Transform Your Career and Business

Microsoft Azure is a cloud computing platform and service created by Microsoft. It offers a comprehensive array of cloud services, including computing, analytics, storage, networking, and more. Organizations utilize Azure to build, deploy, and manage applications and services through data centers managed by Microsoft.

Why Choose Microsoft Azure?

Microsoft Azure stands out as a leading cloud computing platform, providing businesses and individuals with powerful tools and services.

Here are some reasons why it’s an excellent choice:

Scalability

Easily add or reduce resources to align with your business growth.

Global Reach

Available in over 60 regions, making it accessible around the globe.

Cost-Effective

Only pay for what you use, with flexible pricing options.

Strong Security

Safeguard your data with enterprise-level security and compliance.

Seamless Microsoft Integration

Integrates smoothly with Office 365, Dynamics 365, and hybrid environments.

Wide Range of Services

Covers everything from Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS) to advanced AI and IoT tools.

Developer-Friendly

Supports tools like Visual Studio, GitHub, and popular programming languages.

Reliable Performance

Guarantees high availability and robust disaster recovery.

AI and IoT

Create intelligent applications and leverage edge computing for smarter solutions.

Open-Source Friendly

Works well with various frameworks and open-source technologies.

Empower Your Business

Azure provides the flexibility to innovate, scale globally, and maintain competitiveness, all backed by reliable and secure cloud solutions.

Why Learn Microsoft Azure?

Boost Your Career

Unlock opportunities for in-demand roles such as Cloud Engineer or Architect.

Obtain recognized certifications to enhance your visibility in the job market.

Help Your Business

Reduce expenses by crafting efficient cloud solutions.

Automate processes to increase productivity and efficiency.

Create Amazing Apps

Easily develop and deploy web or mobile applications.

Utilize Azure Functions for serverless architecture and improved scalability.

Work with Data

Handle extensive data projects using Azure's robust tools.

Ensure your data remains secure and easily accessible with Azure Storage.

Dive into AI

Develop AI models and train them using Azure Machine Learning.

Leverage pre-built tools for tasks like image recognition and language translation.

Streamline Development

Accelerate software delivery with Azure DevOps pipelines.

Automate the setup and management of your infrastructure.

Improve IT Systems

Quickly establish virtual machines and networks.

Integrate on-premises and cloud systems to enjoy the best of both environments.

Start a Business

Launch and grow your startup with Azure’s adaptable pricing.

Utilize tools specifically designed for entrepreneurs.

Work Anywhere

Empower remote teams with Azure Virtual Desktop and Teams.

Learning Azure equips you with valuable skills, fosters professional growth, and enables you to create meaningful solutions for both work and personal projects.

Tools you can learn in our course

Azure SQL Database

Azure Data Lake Storage

Azure Databricks

Azure Synapse Analytics

Azure Stream Analytics

Global Teq’s Free Demo Offer!

Don’t Miss Out!

This is your opportunity to experience Global Teq’s transformative technology without any commitment. Join hundreds of satisfied clients who have leveraged our solutions to achieve their goals.

Sign up today and take the first step toward unlocking potential.

Click here to register for your free demo now!

Let Global Teq partner with you in driving innovation and success.

Text

Top Computer Science Projects for Final-Year Students

Final-year projects are the culmination of years of coursework and a chance for aspiring computer scientists to demonstrate their ingenuity. Below are some project ideas, grouped by domain, to inspire students:

1. AI-Related Projects

• Chatbots: Build a virtual assistant for a specific domain, such as education or healthcare.

• Image Recognition: Build a system that detects and labels objects in images using a convolutional neural network (CNN).

2. Web Development Projects

• E-Learning Platform: Design a learning platform with tutorials, quizzes, and progress tracking.

• Crowdfunding Website: Develop a secure, easy-to-use platform for funding projects.

3. Data Science and Analytics

• Predictive Analysis: Use machine learning algorithms to forecast trends in areas such as stock prices or weather.

• Customer Segmentation: Use e-commerce data to group customers into segments for targeted marketing.

4. Cybersecurity Projects

• Vulnerability Scanner: Build a tool that identifies weak points in a network or system.

• Secure Authentication System: Develop multi-factor account security using biometrics or one-time passwords (OTP).
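As a starting point for the OTP idea, here is a minimal sketch of counter-based and time-based one-time passwords following RFC 4226 (HOTP) and RFC 6238 (TOTP), using only the Python standard library. The function names and the `verify` helper are illustrative choices, and the secret shown is the published RFC test value, not something to use in practice.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick a 4-byte slice.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, step: int = 30, now: Optional[float] = None) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    current = time.time() if now is None else now
    return hotp(secret, int(current // step))


def verify(secret: bytes, submitted: str, now: Optional[float] = None) -> bool:
    # Constant-time comparison avoids leaking how many digits matched.
    return hmac.compare_digest(totp(secret, now=now), submitted)


rfc_secret = b"12345678901234567890"  # test secret published in RFC 4226
first_code = hotp(rfc_secret, 0)  # "755224", the first RFC 4226 test vector
```

A production system would also need per-user secret storage, rate limiting, and tolerance for clock drift (for example, accepting the adjacent time step), all of which are left out of this sketch.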

5. Blockchain Applications

• Cryptocurrency Wallet: Create a secure wallet for storing and exchanging cryptocurrency.

• Voting System: Build a transparent, tamper-resistant voting system on blockchain technology.

6. IoT (Internet of Things) Projects

• Smart Home Automation: Control home appliances through a mobile application.

• IoT-Based Health Monitoring: Design and develop fog computing platforms and applications that use wearable devices to monitor health parameters in real time.

7. Mobile App Development

• Fitness Tracker: Create an app that tracks physical activity, diet, and personal goals and achievements.

• AR Shopping App: Use augmented reality features to improve the shopping experience.

8. Game Development

• 2D/3D Games: Take a game from concept to playable build, with an engaging storyline and interesting mechanics, using Unity or Unreal Engine.

• Educational Games: Build game-based learning applications for children or adults.

9. Cloud Computing Projects

• Serverless Applications: Design and build apps on serverless computing platforms for scalability.

• Cloud Resource Optimization: Create tools to optimize costs and resources in cloud environments.

10. Open-Source Contributions

• Contribute to open-source projects on platforms like GitHub to gain real-world experience and recognition.

• Post your own code on sites such as GitHub so that others can review it and give feedback.

These ideas are not only technical exercises; each one tries to solve a practical problem. Selecting a relevant and challenging project helps students make a real impact and strengthen their portfolios. Don't wait for tomorrow: start your project today and launch yourself into the world of technology!

#cse#computerscience#cloudcomputing#mobileappdevelopment#cybersecurity#iotprojects#takeoffprojects#takeoffedugroup

Text

Presenting Azure AI Agent Service: Your AI-Powered Assistant

Azure has announced managed capabilities that let developers build secure, stateful, autonomous AI agents that automate business processes.

Organizations require adaptable, safe platforms for the development, deployment, and monitoring of autonomous AI agents in order to fully exploit their potential.

Use Azure AI Agent Service to enable autonomous agent capabilities

At Ignite 2024, Azure announced the upcoming public preview of Azure AI Agent Service, a suite of feature-rich, managed capabilities that brings together all the models, data, tools, and services that businesses require to automate any kind of business operation. This announcement is motivated by the needs of its customers and the potential of autonomous AI agents.

Azure AI Agent Service is adaptable and independent of use case. Whether it’s personal productivity agents that send emails and set up meetings, research agents that continuously track market trends and generate reports, sales agents that can investigate leads and automatically qualify them, customer service agents that follow up with personalized messages, or developer agents that can update your code base or evolve a code repository interactively, this represents countless opportunities to automate repetitive tasks and open up new avenues for knowledge work.

What distinguishes Azure AI Agent Service?

After speaking with hundreds of organizations, Microsoft identified four essential components needed to quickly produce safe, dependable agents:

Develop and automate processes quickly: In order to carry out deterministic or non-deterministic operations, agents must smoothly interact with the appropriate tools, systems, and APIs.

Integrate with extensive memory and knowledge connectors: In order to have the appropriate context to finish a task, agents must connect to internal and external knowledge sources and track conversation state.

Flexible model selection: Agents that are constructed using the right model for the job at hand can improve the integration of data from many sources, produce better outcomes for situations unique to the task at hand, and increase cost effectiveness in scaled agent deployments.

Built-in enterprise readiness: Agents must be able to scale with an organization’s needs, meet its specific data privacy and compliance requirements, and finish tasks with high quality and dependability.

Azure AI Agent Service offers these components for end-to-end agent development through a single product surface, building on the user-friendly interface and extensive toolkit of the Azure AI Foundry SDK and portal.

Let’s now examine the capabilities of Azure AI Agent Service in more detail.

Fast agent development and automation with powerful integrations

Azure AI Agent Service, based on OpenAI’s powerful yet flexible Assistants API, allows rapid agent development with built-in memory management and a sophisticated interface to seamlessly integrate with popular compute platforms and bridge LLM capabilities with general purpose, programmatic actions.

Allow your agent to act with 1400+ Azure Logic Apps connectors: Use Logic Apps' extensive connector ecosystem to let your agent complete tasks and act on behalf of users. Logic Apps simplifies building workflow business logic in the Azure portal to connect your agent to external systems, tools, and APIs. Connectors include Azure App Service, Dynamics 365 Customer Voice, Microsoft Teams, M365 Excel, MongoDB, Dropbox, Jira, Gmail, Twilio, SAP, Stripe, ServiceNow, and others.

Use Azure Functions to provide stateless or stateful code-based actions beyond chat mode: Allow your agent to call APIs and to send and wait for events. Azure Functions and Azure Durable Functions let you execute serverless code for synchronous, asynchronous, long-running, and event-driven tasks such as invoice approval with a human in the loop, long-term product supply chain monitoring, and more.

Run code with Code Interpreter: Code Interpreter lets your agent write and run Python code in a secure environment, handle several data types, and generate data and visual files. Unlike the Code Interpreter in the Assistants API, this tool can work with data in your own storage.

Standardize your tool library with OpenAPI: Use a tool described by an OpenAPI 3.0 specification to connect your AI agent to an external API, so that tools stay compatible across applications as you scale. Custom tools can authenticate access and connections with managed identities (Microsoft Entra ID) for enhanced security, making this a good fit for infrastructure or web services integration.

Add cloud-hosted tools to Llama Stack agents: Azure AI Agent Service supports the agent protocol for developers using the Llama Stack SDK. Scalable, cloud-hosted, enterprise-grade tools will be wire compatible with Llama Stack.

Anchor agent outputs with a large knowledge environment

Easily establish a comprehensive ecosystem of enterprise knowledge sources so that agents can access and interpret data from different sources, improving their responses to user queries. These data connectors work within your network configuration and integrate smoothly with your data. Built-in data sources are:

Real-time web data: Grounding with Bing lets your agent give users the latest information. This addresses LLMs' inability to answer prompts about current events, such as "top news headlines," factually.

Microsoft SharePoint private data: SharePoint internal documents can help your agent provide accurate responses. By using on-behalf-of (OBO) authentication, agents can only access SharePoint data that the end user has permissions for.

Talk to structured data in Microsoft Fabric: Power data-driven decision making in your organization without SQL or data context knowledge. The built-in Fabric AI Skills allow your agent to develop generative AI-based conversational Q&A systems on Fabric data. Fabric provides secure data connection with OBO authentication.

Add private data from Azure AI Search, Azure Blob, and local files to agent outputs: Azure reinvented the File Search tool in the Assistants API so that you can bring an existing Azure AI Search index or build a new one from Blob Storage or local storage using a built-in data ingestion pipeline. With files stored in your Azure storage account and search indexes in your Azure Search resource, this new file search gives you full control over your private data.

Gain a competitive edge with licensed data: Add licensed data from private data suppliers like Tripadvisor to your agent responses to provide them with the latest, best data for your use case. Microsoft plans to add more licensed data sources from other industries and professions.

In addition to enterprise information, AI agents need thread or conversation state management to preserve context, deliver tailored interactions, and improve performance over time. By managing and obtaining conversation history from each end-user, Azure AI Agent Service simplifies thread management and provides consistent context for better interactions. This also helps you overcome AI agent model context window restrictions.
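To make the idea of thread state concrete, the sketch below keeps per-thread conversation history and returns only the most recent messages that fit a size budget, which is one simple way to stay inside a model's context window. It is an illustration only: `ThreadStore`, its methods, and the character-based budget are hypothetical and are not part of the Azure AI Agent Service API (real systems would typically count tokens, not characters).

```python
from collections import defaultdict


class ThreadStore:
    """In-memory conversation history, keyed by thread id."""

    def __init__(self, max_chars=200):
        self.max_chars = max_chars
        self._threads = defaultdict(list)

    def append(self, thread_id, role, text):
        self._threads[thread_id].append((role, text))

    def context(self, thread_id):
        """Return the newest messages that fit the budget, oldest first."""
        selected, used = [], 0
        # Walk backwards so the most recent messages are kept first.
        for role, text in reversed(self._threads.get(thread_id, [])):
            if used + len(text) > self.max_chars:
                break
            selected.append((role, text))
            used += len(text)
        return list(reversed(selected))


store = ThreadStore(max_chars=11)
store.append("user-1", "user", "hello")
store.append("user-1", "assistant", "hi there")
store.append("user-1", "user", "bye")
recent = store.context("user-1")
# recent == [("assistant", "hi there"), ("user", "bye")]
```

Dropping the oldest messages is the simplest policy; summarizing older turns instead is a common alternative when early context still matters.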

Use GPT-4o, Llama 3, or another model that suits the job

Developers love constructing AI assistants with Azure OpenAI Service Assistants API’s latest OpenAI GPT models. Azure now offers cutting-edge models from top model suppliers so you can design task-specific agents, optimize TCO, and more.

Leverage Models-as-a-Service: Azure AI Agent Service will support models from Azure AI Foundry and use cross-model compatible, cloud-hosted tools for code execution, retrieval-augmented generation, and more. The Azure Models-as-a-Service API lets developers create agents with Meta Llama 3.1, Mistral Large, and Cohere Command R+ in addition to Azure OpenAI models.

Multi-modal support lets AI agents process and respond to data formats other than text, broadening the range of use cases. GPT-4o's image and audio modalities will be supported so you can analyze and combine data in different formats to gain insights, make decisions, and give user-specific outputs.

Designed for building secure, enterprise-ready agents from the ground up

Azure AI Agent Service provides enterprise tools to protect sensitive data and meet regulatory standards.

Bring your own storage: Unlike Assistants API, you can now link enterprise data sources to safely access enterprise data for your agent.

BYO virtual network: Design agent apps with strict no-public-egress data traffic to protect network interactions and data privacy.

Keyless setup, OBO authentication: Keyless setup and on-behalf-of authentication simplify agent configuration and authentication, easing resource management and deployment.

Unlimited scope: Azure AI Agent Service on provisioned deployments offers unlimited performance and scaling. Agent-powered apps can now be flexible while delivering predictable latency and high throughput.

Use OpenTelemetry to track agent performance: Understand your AI agent’s reliability and performance. The Azure AI Foundry SDK lets you add OpenTelemetry-compatible metrics to your monitoring dashboard for offline and online agent output review.

Content filtering and XPIA mitigation help you build responsibly: Azure AI Agent Service detects harmful content at various severity levels with prebuilt and custom content filters, and prompt shields protect agents from malicious cross-prompt injection attacks (XPIA).

As with Azure OpenAI Service, prompts and completions in Azure AI Agent Service are not used to train, retrain, or improve Microsoft or third-party products or services without your permission. Customer data can be deleted at will.

Use Azure AI Agent Service to orchestrate effective multi-agent systems

Azure AI Agent Service is pre-configured with multi-agent orchestration frameworks natively compatible with the Assistants API, including Semantic Kernel, an enterprise AI SDK for Python, .NET, and Java, and AutoGen, a cutting-edge research SDK for Python developed by Microsoft Research.

To get the most dependable, scalable, and secure agents when developing a new multi-agent solution, begin by creating individual agents with Azure AI Agent Service. These agents can then be coordinated by AutoGen, which continues to evolve toward the most effective collaboration patterns for agents (and humans). If you want non-breaking updates and production support, you can then move features that demonstrate production value in AutoGen into Semantic Kernel.

Read more on Govindhtech.com

#AzureAI#AIAgent#AIAgentService#AI#OpenAPI#Llama#SDK#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

Text

Node.js Development: Everything You Need to Know in 2025

As we approach 2025, Node.js development continues to be one of the most popular choices for backend development and scalable web applications. It’s a JavaScript runtime environment that has revolutionized server-side programming, enabling developers to build scalable, fast, and efficient applications. In this blog, we will explore what Node.js is, why it’s still relevant in 2025, and what trends and best practices developers should keep in mind.

What is Node.js?

Node.js is an open-source, cross-platform runtime environment that allows developers to execute JavaScript on the server side. Built on the V8 JavaScript engine (developed by Google for Chrome), it’s designed to be lightweight and efficient, especially for I/O-heavy tasks. Unlike traditional server-side environments, which often use blocking I/O, Node.js uses non-blocking, event-driven architecture, making it ideal for building scalable network applications.
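The non-blocking, event-driven model can be illustrated with a small event-loop example. The sketch below uses Python's `asyncio` rather than Node.js itself, purely to show the concept: three simulated I/O waits overlap on a single thread, so the total time is close to one wait rather than three. The function names and the 0.2-second delay are arbitrary choices for the example.

```python
import asyncio
import time


async def handle_request(request_id, io_delay):
    # asyncio.sleep stands in for a network or disk wait; while this
    # coroutine is suspended, the event loop is free to run the others.
    await asyncio.sleep(io_delay)
    return f"request {request_id} done"


async def serve_all():
    # Start all three handlers, then wait for every one to finish.
    return await asyncio.gather(*(handle_request(i, 0.2) for i in range(3)))


start = time.perf_counter()
results = asyncio.run(serve_all())
elapsed = time.perf_counter() - start
# The waits overlap: elapsed is roughly 0.2 s, not the 0.6 s a blocking loop would take.
```

In Node.js the equivalent pattern would use Promises with `async`/`await`; the underlying idea, a single loop dispatching work as I/O completes, is the same.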

Why Node.js is Still Relevant in 2025

High Performance with Non-Blocking I/O

Node.js is known for high performance, especially when handling many simultaneous connections. With non-blocking I/O, Node.js handles requests asynchronously, which increases the efficiency of data-heavy applications like real-time chat apps, streaming services, and collaborative platforms. In 2025, this continues to be one of its main advantages, ensuring it remains a top choice for developers.

Large Ecosystem of Libraries and Tools

npm (Node Package Manager), the package manager for Node.js, offers a vast ecosystem of over a million packages, making it easier to integrate functionality such as authentication, data processing, and communication protocols. This extensive library ecosystem continues to be a game-changer for JavaScript developers in 2025, reducing development time and improving productivity.

Full-Stack JavaScript Development One of the main reasons developers continue to choose Node.js is the ability to use JavaScript on both the front end and back end. This full-stack JavaScript development approach not only streamlines development but also reduces the learning curve for developers. With frameworks like Express.js, Node.js makes it easy to build robust RESTful APIs and microservices, making it an ideal choice for modern web applications.

Strong Community and Industry Adoption Node.js benefits from a large, active community that contributes to its development, constantly improving its functionality. From enterprise-level applications to startups, Node.js is widely adopted, with major companies like Netflix, LinkedIn, and Walmart utilizing it for their systems. The support from large organizations ensures that Node.js will continue to evolve and stay relevant.
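As a rough illustration of the RESTful API point above, the sketch below uses Node's built-in http module rather than Express.js, so it runs without installing anything. The users data, the route function, and the /users paths are all hypothetical.

```javascript
const http = require('http');

// Hypothetical in-memory data, for illustration only.
const users = [{ id: 1, name: 'Ada' }, { id: 2, name: 'Linus' }];

// Pure routing function: maps a method and path to a status code and JSON body.
function route(method, path) {
  if (method === 'GET' && path === '/users') {
    return { status: 200, body: users };
  }
  const match = /^\/users\/(\d+)$/.exec(path);
  if (method === 'GET' && match) {
    const user = users.find((u) => u.id === Number(match[1]));
    return user
      ? { status: 200, body: user }
      : { status: 404, body: { error: 'user not found' } };
  }
  return { status: 404, body: { error: 'no such route' } };
}

// Thin HTTP wrapper around the routing function.
const server = http.createServer((req, res) => {
  const { pathname } = new URL(req.url, 'http://localhost');
  const { status, body } = route(req.method, pathname);
  res.writeHead(status, { 'Content-Type': 'application/json' });
  res.end(JSON.stringify(body));
});

// Call server.listen(3000) to start serving; it is left out so the sketch exits immediately.
```

Keeping the routing logic in a plain function makes it easy to test without opening a socket. A framework such as Express.js would replace the hand-written matching with its own router.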

Trends in Node.js Development for 2025

Microservices Architecture: As businesses continue to shift towards microservices, Node.js plays a significant role. Its lightweight nature and ease of building APIs make it well suited to independent services that scale horizontally. In 2025, more companies are expected to adopt microservices with Node.js to build and manage distributed applications.

Serverless Computing: Serverless platforms such as AWS Lambda allow developers to build and run applications without managing servers. Node.js is a good fit for serverless development because of its fast startup time and efficient handling of event-driven workloads. In 2025, serverless computing with Node.js is expected to keep growing, offering developers cost-effective and scalable solutions.

Edge Computing: With the rise of IoT and edge computing, there is greater demand for processing data closer to its source. Node.js, with its lightweight footprint and real-time capabilities, suits deployments that process data at the edge of the network. This trend is expected to become even more prominent in 2025.

Real-Time Applications: Real-time applications such as messaging platforms, live-streaming services, and collaborative tools rely on constant communication between the server and the client. Node.js, with libraries like Socket.io, enables low-latency, two-way data transfer that keeps applications responsive. In 2025, real-time applications will remain a significant use case for Node.js.
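The real-time pattern described above comes down to pushing each message to every connected listener as soon as it arrives. The sketch below shows that idea with Node's built-in EventEmitter standing in for a network library such as Socket.io; the ChatRoom class and its join and send methods are hypothetical names, and no actual sockets are involved.

```javascript
const { EventEmitter } = require('events');

// A hypothetical chat room: clients subscribe, and every message is pushed to all of them.
class ChatRoom extends EventEmitter {
  join(name, onMessage) {
    // Register the listener first so the new member also sees their own join notice.
    this.on('message', onMessage);
    this.emit('message', { from: 'system', text: `${name} joined` });
  }

  send(from, text) {
    this.emit('message', { from, text });
  }
}

const room = new ChatRoom();
const aliceInbox = [];
const bobInbox = [];

room.join('alice', (msg) => aliceInbox.push(msg.text));
room.join('bob', (msg) => bobInbox.push(msg.text));
room.send('alice', 'hello');

console.log(aliceInbox, bobInbox);
```

In a real deployment the listeners would be open client connections rather than in-process callbacks, but the broadcast shape is the same.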

Best Practices for Node.js Development in 2025

Asynchronous Programming with Promises and Async/Await: Asynchronous programming is central to Node.js performance, so it pays to use current syntax and patterns. Promises and async/await make asynchronous code more readable and easier to manage, helping to avoid callback hell.

Monitoring and Performance Optimization: In a production environment, it’s essential to monitor the performance of Node.js applications. Tools like PM2 and New Relic help track application performance, manage uptime, and optimize resource usage so that applications run efficiently at scale.

Security Best Practices: Security is a critical consideration for every Node.js developer. Best practices include validating input, using HTTPS, managing dependencies securely, and guarding against common vulnerabilities such as SQL injection and cross-site scripting (XSS).

Modular Code and Clean Architecture: A clean, modular architecture is essential for long-term maintainability. Developers should organize their Node.js applications into reusable modules, which promotes code reuse and makes debugging easier.
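The async/await practice above can be sketched as follows. The fetchUser function is a hypothetical callback-style API standing in for older code; util.promisify wraps it so it can be awaited, and Promise.all runs several lookups concurrently.

```javascript
const { promisify } = require('util');

// Hypothetical callback-style API, standing in for an older library function.
function fetchUser(id, callback) {
  setTimeout(() => {
    if (id <= 0) return callback(new Error('invalid id'));
    callback(null, { id, name: `user-${id}` });
  }, 10);
}

// Wrap the callback API so it returns a promise instead.
const fetchUserAsync = promisify(fetchUser);

async function loadNames(ids) {
  try {
    // Start all lookups at once and wait for every result.
    const users = await Promise.all(ids.map((id) => fetchUserAsync(id)));
    return users.map((u) => u.name);
  } catch (err) {
    // One failed lookup rejects the whole batch; handle it in a single place.
    return [`error: ${err.message}`];
  }
}

loadNames([1, 2, 3]).then((names) => console.log(names));
```

Compared with nesting three callbacks, the control flow reads top to bottom and error handling lives in one try/catch block.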

Conclusion

Node.js remains a powerhouse for backend development in 2025, thanks to its speed, scalability, and developer-friendly ecosystem. From building real-time applications to serverless computing, Node.js continues to evolve and support the changing needs of the tech world. Whether you're building microservices or implementing edge computing, Node.js is the runtime to watch in 2025. Keep an eye on Node.js trends and best practices to stay ahead in the fast-paced world of backend web development.

0 notes

Text

The cloud computing arena is a battleground where titans clash, and none are mightier than Amazon Web Services (AWS) and Google Cloud Platform (GCP). While AWS has long held the crown, GCP is rapidly gaining ground, challenging the status quo with its own unique strengths. But which platform reigns supreme? Let's delve into this epic clash of the titans, exploring their strengths, weaknesses, and the factors that will determine the future of the cloud.

A Tale of Two Giants: Origins and Evolution

AWS, the veteran, pioneered the cloud revolution. From humble beginnings offering basic compute and storage, it has evolved into a sprawling ecosystem of services, catering to every imaginable need. Its long history and first-mover advantage have allowed it to build a massive and loyal customer base.

GCP, the contender, entered the arena later but with a bang. Backed by Google's technological prowess and innovative spirit, GCP has rapidly gained traction, attracting businesses with its cutting-edge technologies, data analytics capabilities, and developer-friendly tools.

Services: Breadth vs. Depth

AWS boasts an unparalleled breadth of services, covering everything from basic compute and storage to AI/ML, IoT, and quantum computing. This vast selection allows businesses to find solutions for virtually any need within the AWS ecosystem.

GCP, while offering a smaller range of services, focuses on depth and innovation. It excels in areas like big data analytics, machine learning, and containerization, offering powerful tools like BigQuery, TensorFlow, and Kubernetes (which originated at Google).

The Data Advantage: GCP's Forte

GCP has a distinct advantage when it comes to data analytics and machine learning. Google's deep expertise in these fields is evident in GCP's offerings. BigQuery, a serverless, highly scalable, and cost-effective multicloud data warehouse, is a prime example. Combined with tools like TensorFlow and Vertex AI, GCP provides a powerful platform for data-driven businesses.

AWS, while offering its own suite of data analytics and machine learning services, hasn't quite matched GCP's prowess in this domain. While services like Amazon Redshift and SageMaker are robust, GCP's offerings often provide a more seamless and integrated experience for data scientists and analysts.

Kubernetes: GCP's Home Turf

Kubernetes, the open-source container orchestration platform, was born at Google. GCP's Google Kubernetes Engine (GKE) is widely considered the most mature and feature-rich Kubernetes offering in the market. For businesses embracing containerization and microservices, GKE provides a compelling advantage.

AWS offers its own managed Kubernetes service, Amazon Elastic Kubernetes Service (EKS). While EKS is a solid offering, it lags behind GKE in terms of features and maturity.

Pricing: A Complex Battleground

Pricing in the cloud is a complex and ever-evolving landscape. Both AWS and GCP offer competitive pricing models, with various discounts, sustained use discounts, and reserved instances. GCP has a reputation for aggressive pricing, often undercutting AWS on certain services.

However, comparing costs requires careful analysis. AWS's vast array of services and pricing options can make it challenging to compare apples to apples. Understanding your specific needs and usage patterns is crucial for making informed cost comparisons.

The Developer Experience: GCP's Developer-Centric Approach

GCP has gained a reputation for being developer-friendly. Its focus on open source technologies, its command-line interface, and its well-documented APIs appeal to developers. GCP's commitment to Kubernetes and its strong support for containerization further enhance its appeal to the developer community.

AWS, while offering a comprehensive set of tools and SDKs, can sometimes feel less developer-centric. Its console can be complex to navigate, and its vast array of services can be overwhelming for new users.

Global Reach: AWS's Extensive Footprint

AWS boasts a global infrastructure with a presence in more regions than any other cloud provider. This allows businesses to deploy applications closer to their customers, reducing latency and improving performance. AWS also offers a wider range of edge locations, enabling low-latency access to content and services.

GCP, while expanding its global reach, still has some catching up to do. This can be a disadvantage for businesses with a global presence or those operating in regions with limited GCP availability.

The Verdict: A Close Contest

The battle between AWS and GCP is a close contest. AWS, with its vast ecosystem, mature services, and global reach, remains a dominant force. However, GCP, with its strengths in data analytics, machine learning, Kubernetes, and developer experience, is a powerful contender.

The best choice for your business will depend on your specific needs and priorities. If you prioritize breadth of services, global reach, and a mature ecosystem, AWS might be the better choice. If your focus is on data analytics, machine learning, containerization, and a developer-friendly environment, GCP could be the ideal platform.

Ultimately, the cloud wars will continue to rage, driving innovation and pushing the boundaries of what's possible. As both AWS and GCP continue to evolve, the future of the cloud promises to be exciting, dynamic, and full of possibilities.

0 notes

Text

Top Data Analytics Tools in 2024: Beyond Excel, SQL, and Python

Introduction

As the field of data analytics continues to evolve, new tools and technologies are emerging to help analysts manage, visualize, and interpret data more effectively. While Excel, SQL, and Python remain foundational, 2024 brings platforms that enhance productivity and open new possibilities for data analysis. This overview comes from the Data Analytics Course in Chennai.

Key Data Analytics Tools for 2024

Tableau: A powerful data visualization tool that helps analysts create dynamic dashboards and reports, making complex data easier to understand for stakeholders.

Power BI: This Microsoft tool integrates with multiple data sources and offers advanced analytics features, making it a go-to for business intelligence and real-time data analysis.

Apache Spark: Ideal for big data processing, Apache Spark offers fast and efficient data computation, making it suitable for handling large datasets.

Alteryx: Known for its user-friendly interface, Alteryx allows data analysts to automate workflows and perform advanced analytics without extensive programming knowledge.

Google BigQuery: A serverless data warehouse that allows for quick querying of massive datasets using SQL, ideal for handling big data with speed.

Conclusion

In 2024, the landscape of data analytics tools is broader than ever, providing new capabilities for handling larger datasets, creating richer visualizations, and simplifying complex workflows. Data analysts who stay current with these tools will be better equipped to deliver impactful insights.

0 notes

Text

The Future of AWS Careers: Emerging Trends and Opportunities

As cloud computing continues to revolutionize industries worldwide, Amazon Web Services (AWS) remains at the forefront of this digital transformation. With its wide array of services, from storage and databases to machine learning and IoT, AWS has become a vital tool for businesses of all sizes. As a result, career opportunities in AWS are expanding rapidly, and professionals with the right skill sets are in high demand. Let’s explore the emerging trends in the AWS ecosystem and the opportunities they bring for those pursuing a future in cloud computing. https://internshipgate.com

1. Cloud-Native Development and Serverless Architectures

One of the most exciting trends in AWS is the shift towards cloud-native development and serverless architectures. AWS Lambda, for instance, allows developers to run code without provisioning or managing servers, which reduces overhead and improves scalability. This trend opens up opportunities for software developers, DevOps engineers, and cloud architects who specialize in designing and maintaining applications that are optimized for the cloud.

Career Opportunities:

Cloud Software Developer

Serverless Architect

Cloud Infrastructure Engineer

2. AI and Machine Learning (ML) Integration

The integration of AI and ML into AWS services is another game-changer for cloud professionals. AWS offers a suite of AI/ML tools like SageMaker, Rekognition, and Comprehend, making it easier for organizations to build intelligent applications. The growing importance of AI/ML skills in the cloud space means that professionals with expertise in data science and machine learning have new avenues to explore within the AWS ecosystem.

Career Opportunities:

Machine Learning Engineer

Data Scientist (AWS)

AI Solutions Architect

3. Edge Computing and IoT

With the rise of Internet of Things (IoT) devices and the need for real-time data processing, edge computing is becoming increasingly important. AWS offers solutions such as AWS IoT Core and AWS IoT Greengrass, which allow businesses to process data closer to the source rather than sending it all to centralized cloud servers. This trend is generating demand for professionals who can design and manage distributed computing systems and IoT architectures.

Career Opportunities:

IoT Cloud Engineer

Edge Computing Specialist

Cloud Network Engineer

4. Cybersecurity and Compliance

As organizations migrate more sensitive data to the cloud, cybersecurity and compliance have become top priorities. AWS provides various security tools, including AWS Shield, AWS WAF, and AWS Identity and Access Management (IAM), but ensuring robust cloud security still requires human expertise. Professionals skilled in cloud security, data privacy, and regulatory compliance are becoming indispensable to organizations aiming to safeguard their AWS environments.

Career Opportunities:

AWS Security Specialist

Cloud Compliance Analyst

Cybersecurity Architect (Cloud)

5. Hybrid Cloud and Multi-Cloud Architectures

While AWS remains a dominant player in the cloud market, many organizations are adopting hybrid cloud or multi-cloud strategies to avoid vendor lock-in or to meet specific business needs. This trend means there is a growing demand for professionals who can design and manage environments that integrate AWS with other cloud platforms like Microsoft Azure or Google Cloud.

Career Opportunities:

Multi-Cloud Architect

Cloud Migration Specialist

Hybrid Cloud Solutions Engineer

6. Automation and DevOps

DevOps has been a driving force behind the efficient deployment and management of cloud applications. AWS offers several tools like AWS CodePipeline, CloudFormation, and Elastic Beanstalk to streamline the DevOps lifecycle. As businesses continue to adopt agile practices, professionals skilled in automating workflows, managing CI/CD pipelines, and maintaining scalable infrastructure are in high demand.

Career Opportunities:

AWS DevOps Engineer

Automation Specialist (Cloud)

Cloud Infrastructure Developer

7. Big Data and Analytics

Data has become the currency of the digital age, and AWS offers comprehensive services for big data storage, processing, and analytics through services like Redshift, EMR, and Glue. Professionals with a background in data engineering, analytics, and database management have the opportunity to leverage these tools to help organizations extract actionable insights from their data.

Career Opportunities:

AWS Data Engineer

Cloud Database Administrator

Big Data Architect

How to Prepare for a Career in AWS

The future of AWS careers is bright, but staying competitive requires continuous learning and skill development. Here are a few ways to stay ahead:

Get Certified: AWS offers a range of certifications that validate your expertise in specific areas, such as AWS Certified Solutions Architect, AWS Certified Developer, and AWS Certified Security - Specialty.

Hands-On Experience: Practical experience in using AWS services is critical. Engage in real-world projects or contribute to open-source initiatives to build your portfolio.

Stay Updated: The AWS landscape is constantly evolving. Stay informed about new services, features, and best practices by attending AWS events, webinars, and reading official AWS blogs.

Conclusion

As AWS continues to expand its offerings and influence across industries, the career possibilities in the AWS ecosystem are limitless. Whether you’re a software developer, data scientist, cybersecurity expert, or network engineer, there’s a growing demand for professionals who can harness the power of AWS to drive innovation and business growth. Embrace the trends, stay adaptable, and position yourself for success in this ever-evolving field of cloud computing. https://internshipgate.com

#career#virtualinternship#internship#internshipgate#internship in india#aws cloud#job opportunities#aws course

1 note

Text

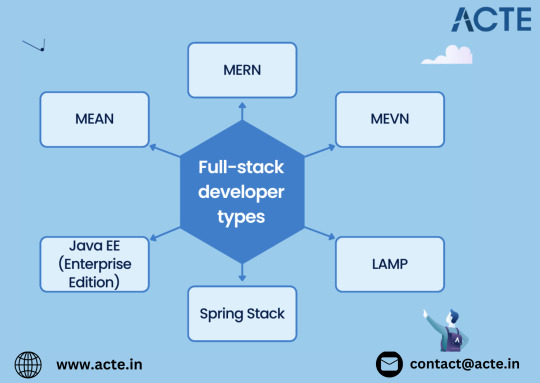

Exploring the Varieties of Full Stack Developers: A Comprehensive Guide

In today’s digital age, full-stack developers are at the forefront of web development, wielding skills that encompass both front-end and back-end technologies. For those looking to master the art of Full Stack, enrolling in a reputable Full Stack Developer Training in Pune can provide the essential skills and knowledge needed for navigating this dynamic landscape effectively.

Their versatility allows them to handle various aspects of application development, making them highly sought after in the tech industry. However, not all full-stack developers are created equal; they often specialize in specific technologies or frameworks. This blog will delve into the various types of full-stack developers and what distinguishes them in the field.

1. MEAN Stack Specialists

Core Technologies: MongoDB, Express.js, Angular, Node.js

MEAN stack specialists are adept at utilizing JavaScript across the entire development stack. They create dynamic web applications by leveraging MongoDB for data storage, Express.js for server-side logic, Angular for building user interfaces, and Node.js for handling server operations. This cohesive use of JavaScript facilitates a smoother development process and simplifies debugging.

2. MERN Stack Experts

Core Technologies: MongoDB, Express.js, React, Node.js

MERN stack experts share similarities with MEAN developers but opt for React in their front-end development. React’s component-based structure allows for the creation of highly interactive user interfaces. By combining MongoDB, Express.js, and Node.js, MERN developers can build efficient and responsive applications.

3. LAMP Stack Professionals

Core Technologies: Linux, Apache, MySQL, PHP

LAMP stack professionals are well-versed in open-source technologies. They utilize PHP for server-side programming alongside MySQL for database management. The combination of Linux as the operating system and Apache as the web server makes LAMP a reliable choice for many web applications, emphasizing stability and flexibility.

4. Django Full-Stack Developers

Core Technologies: Django (Python), HTML, CSS, JavaScript

Django full-stack developers focus on building robust applications using the Django framework, which is based on Python. Their proficiency extends to HTML, CSS, and JavaScript for front-end development. This skill set allows them to develop scalable and efficient web applications quickly. Here’s where getting certified with the Top Full Stack Online Certification can help a lot.

5. Ruby on Rails Developers

Core Technologies: Ruby, Rails, JavaScript, HTML, CSS

Ruby on Rails developers specialize in using the Ruby programming language along with the Rails framework to create dynamic web applications. Their expertise spans both front-end and back-end technologies, and they utilize the conventions of Rails to streamline and expedite the development process.

6. Serverless Full-Stack Developers

Core Technologies: AWS Lambda, Azure Functions, Firebase

Serverless full-stack developers leverage cloud computing to create applications without needing to manage physical servers. They typically work with languages like JavaScript or Python and utilize cloud services to build scalable and cost-effective applications, simplifying infrastructure management.
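For a sense of what serverless code looks like in practice, here is a minimal sketch of a Node.js function in the shape AWS Lambda expects: an async handler that receives an event and returns a response. The event fields assume the API Gateway proxy format, and the greeting logic is hypothetical.

```javascript
// Async handler in the shape AWS Lambda uses for Node.js functions.
// Assumes an API Gateway proxy event; the greeting itself is made up for illustration.
const handler = async (event) => {
  const params = event.queryStringParameters || {};
  const name = params.name || 'world';
  return {
    statusCode: 200,
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ message: `Hello, ${name}!` }),
  };
};

// Export under the name the function configuration would point to (for example "index.handler").
module.exports = { handler };
```

There is no server setup in the file at all. The platform decides when to start the code, how many copies to run, and when to shut them down.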

7. Mobile-Focused Full-Stack Developers

Core Technologies: React Native, Flutter, Ionic

Mobile-focused full-stack developers specialize in creating applications that function seamlessly on various mobile platforms. They are skilled in both mobile front-end and back-end development, often employing APIs to support their applications. With the increasing demand for mobile apps, their expertise is particularly valuable.

Conclusion

Full-stack development encompasses a wide array of specializations, each with its own unique technologies and frameworks. By understanding the different types of full-stack developers, businesses can make informed decisions when hiring, ensuring they find the right talent for their projects. For aspiring developers, recognizing these specialties can help guide their learning and career paths in the ever-evolving tech landscape.

#full stack course#full stack developer#full stack software developer#full stack training#full stack web development

0 notes
