#Mark Andreesen

Text

BROLIGARCHS:

Ross Douthat, a New York Times columnist, interviews Marc Andreessen, the Silicon Valley investor, and Steve Bannon, Donald Trump's former chief strategist, in conversations that reveal something of the various currents that make up the Trump administration.

According to Annie-Rose Strasser, the paper's executive producer for audio, "Reading between the lines of the various groups that make up the American right can feel mind-bending. There's the new contingent of the tech right. There's the populist wing that originally brought President Trump to power. There are the social conservatives, the isolationists, the hawks, the old-school Wall Streeters. Political divisions have never in my life seemed more inscrutable. Understanding them is crucial to understanding our political moment and the stream of news coming out of Trump's Washington."

Check it out:

[YouTube video]

[YouTube video]

Text

Drones have changed war. Small, cheap, and deadly robots buzz in the skies high above the world’s battlefields, taking pictures and dropping explosives. They’re hard to counter. ZeroMark, a defense startup based in the United States, thinks it has a solution. It wants to turn the rifles of frontline soldiers into “handheld Iron Domes.”

The idea is simple: Make it easier to shoot a drone out of the sky with a bullet. The problem is that drones are fast and maneuverable, making them hard for even a skilled marksman to hit. ZeroMark’s system would add aim assistance to existing rifles, ostensibly helping soldiers put a bullet in just the right place.

“We’re mostly a software company,” ZeroMark CEO Joel Anderson tells WIRED. He says the system works by placing a sensor on the rail mount at the front of a rifle, the same place you might put a scope. The sensor interacts with an actuator in either the stock or the foregrip of the rifle, which adjusts the soldier’s aim while they’re pointing the rifle at a target.

A soldier beset by a drone would point their rifle at the target, turn on the system, and let the actuators solidify their aim before pulling the trigger. “So there’s a machine perception, computer vision component. We use lidar and electro-optical sensors to detect drones, classify them, and determine what they’re doing,” Anderson says. “The part that is ballistics is actually quite trivial … It’s numerical regression, it’s ballistic physics.”

According to Anderson, ZeroMark’s system is able to do things a human can’t. “For them to be able to calculate things like the bullet drop and trajectory and windage … It’s a very difficult thing to do for a person, but for a computer, it’s pretty easy,” he says. “And so we predetermined where the shot needs to land so that when they pull the trigger, it’s going to have a high likelihood of intersecting the path of the drone.”
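
Anderson calls the ballistics "quite trivial," while Michel argues below that the physics is hard. To make the lead-and-drop calculation concrete, here is a minimal sketch under heavy simplifying assumptions: the bullet keeps its muzzle speed (no drag), there is no wind, and the drone flies in a straight line at constant velocity. It is not ZeroMark's method, and the function name `lead_solution` and its parameters are hypothetical. The sketch repeatedly estimates the flight time, predicts where the drone will be at that time, and raises the aim point by the distance the bullet falls in that time.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def lead_solution(p, u, v, iterations=20):
    """Estimate where to aim so a bullet meets a moving target.

    Simplified model (an assumption, not any vendor's algorithm):
    no air drag, no wind, constant bullet speed v, constant target velocity u.
    p: target position relative to the shooter (x, y, z) in metres, z pointing up
    u: target velocity (x, y, z) in m/s
    v: bullet speed in m/s, assumed much larger than the target's speed
    Returns (aim_point, flight_time).
    """
    aim = tuple(p)
    t = math.hypot(*p) / v  # first guess: time to reach the target's current position
    for _ in range(iterations):
        # Predicted target position after t seconds of straight-line flight.
        future = [pi + ui * t for pi, ui in zip(p, u)]
        # Aim above that point by the distance the bullet drops in t seconds.
        aim = (future[0], future[1], future[2] + 0.5 * G * t * t)
        # Refine the flight time using the distance to the new aim point.
        t = math.hypot(*aim) / v
    return aim, t


# Example: drone 150 m out and 40 m up, crossing at 20 m/s; bullet at 850 m/s.
aim, t = lead_solution((150.0, 0.0, 40.0), (0.0, 20.0, 0.0), 850.0)
print(f"aim at {tuple(round(c, 2) for c in aim)}, flight time {t:.3f} s")
```

In this idealized model the fixed point is an exact hit. Real fire control would also have to handle drag, windage, sensor error, and a drone that maneuvers to avoid fire, which is where Michel's skepticism applies.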

ZeroMark makes a tantalizing pitch, one so attractive that venture capital firm Andreessen Horowitz invested $7 million in the project. The reasons why are obvious for anyone paying attention to modern war. Cheap and deadly flying robots define the conflict between Russia and Ukraine. Every month, both sides send thousands of small drones to drop explosives, take pictures, and generate propaganda.

With the world’s militaries looking for a way to fight back, counter-drone systems are a growth industry. There are hundreds of solutions, many of them not worth the PowerPoint slide they’re pitched from.

Can a machine-learning aim-assist system like what ZeroMark is pitching work? It remains to be seen. According to Anderson, ZeroMark isn’t on the battlefield anywhere, but the company has “partners in Ukraine that are doing evaluations. We’re hoping to change that by the end of the summer.”

There’s good reason to be skeptical. “I’d love a demonstration. If it works, show us. Till that happens, there are a lot of question marks around a technology like this,” Arthur Holland Michel, a counter-drone expert and senior fellow at the Carnegie Council for Ethics in International Affairs, tells WIRED. “There’s the question of the inherent unpredictability and brittleness of machine-learning-based systems that are trained on data that is, at best, only a small slice of what the system is likely to encounter in the field.”

Anderson says that ZeroMark’s training data is built from “a variety of videos and drone behaviors that have been synthesized into different kinds of data sets and structures. But it’s mostly empirical information that’s coming out of places like Ukraine.”

Michel also contends that the physics, which Anderson says are simple, are actually quite hard. ZeroMark’s pitch is that it will help soldiers knock a fast-moving object out of the sky with a bullet. “And that is very difficult,” Michel says. “It’s a very difficult equation. People have been trying to shoot drones out of the sky [for] as long as there have been drones in the sky. And it’s difficult, even when you have a drone that is not trying to avoid small arms fire.”

That doesn’t mean ZeroMark doesn’t work—just that it’s good to remain skeptical in the face of bold claims from a new company promising to save lives. “The only truly trustworthy metric of whether a counter-drone system works is if it gets used widely in the field—if militaries don’t just buy three of them, they buy thousands of them,” Michel says. “Until the Pentagon buys 10,000, or 5,000, or even 1,000, it’s hard to say, and a little skepticism is very much merited.”

Text

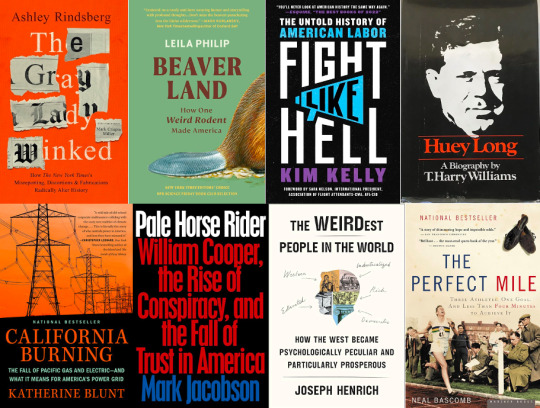

2023 Year in Review

Books by GPA

The Perfect Mile - 3.47

Huey Long - 3.33

California Burning - 3.13

Pale Horse Rider - 3.07

The WEIRDest People in the World - 3.00

Beaverland - 2.53

Fight Like Hell - 2.33

The Gray Lady Winked - 2.13

Andy's Top Three

The Perfect Mile

Pale Horse Rider

Huey Long

Gabe's Top Three

The Perfect Mile

Huey Long

The WEIRDest People in the World

Jachles's Top Three

California Burning

Pale Horse Rider

Huey Long

Paul's Top Three

Huey Long

The Perfect Mile

Pale Horse Rider

Tommy's Top Three

The Perfect Mile

The WEIRDest People in the World

California Burning

Non Book Club Books and Articles We Enjoyed in 2023

Andy:

You're Not Listening by Kate Murphy

Finding Ultra by Rich Roll

Attention Span by Gloria Mark

The Self-Driven Child by William Stixrud and Ned Johnson

Gabe:

The Last Action Heroes by Nick de Semlyen

Billionaire Wilderness by Justin Farrell

Labyrinth of Ice by Buddy Levy

Jachles:

Did Demolition Man Predict the Millennial? by Kabir Chibber from The New York Times

Paul:

Stay True by Hua Hsu

How to Read Nancy by Mark Newgarden and Paul Karasik

Wail: The Life of Bud Powell by Peter Pullman

The Number Ones by Tom Breihan

Left Back by Diane Ravitch

Other Minds by Peter Godfrey-Smith

The Garden State Parkway's Jon Bon Jovi Rest Stop is Playing Fast and Loose With Famous Quotes by Dan McQuade from Defector

Tommy:

Helgoland by Carlo Rovelli

Scarcity Brain by Michael Easter

We're all Stochastic Parrots by Goutham Kurra

Why AI Will Save the World by Marc Andreessen

A Pointed Angle by Meera Subramanian from Orion Magazine

Text

Did you ever wonder why the 21st century feels like we're living in a bad cyberpunk novel from the 1980s? It's because these guys read those cyberpunk novels and mistook a dystopia for a road map. They're rich enough to bend reality to reflect their desires. But we're not futurists, we're entertainers! We like to spin yarns about the Torment Nexus because it's a cool setting for a noir detective story, not because we think Mark Zuckerberg or Andreessen Horowitz should actually pump several billion dollars into creating it.

#charles stross#the torment nexus#The modern era is run by unimaginative assholes who stole their ideas from sf paperbacks and the usborne book of the future

Text

Did you ever wonder why the 21st century feels like we're living in a bad cyberpunk novel from the 1980s?

It's because these guys read those cyberpunk novels and mistook a dystopia for a road map. They're rich enough to bend reality to reflect their desires. But we're not futurists, we're entertainers! We like to spin yarns about the Torment Nexus because it's a cool setting for a noir detective story, not because we think Mark Zuckerberg or Andreessen Horowitz should actually pump several billion dollars into creating it. And that's why I think you should always be wary of SF writers bearing ideas.

Text

We are used to thinking of our ideological divide as cleaving conservatives from liberals. I think the Republican Party’s collapse into incoherence reflects the fact that much of the modern right is reactionary, not conservative. This is what connects figures as disparate as Jordan Peterson and J.D. Vance and Peter Thiel and Donald Trump. These are the ideas that unite both the mainstream and the weirder figures of the so-called postliberal right, from Patrick Deneen to the writer Bronze Age Pervert. This is not a coalition that cares about tax cuts. It’s a coalition obsessed with where we went wrong: the weakness, the political correctness, the liberalism, the trigger warnings, the smug elites. It’s a coalition that believes we were once hard and have become soft; worse, we have come to lionize softness and punish hardness.

The story of the reactionary follows a template across time and place. It “begins with a happy, well-ordered state where people who know their place live in harmony and submit to tradition and their God,” Mark Lilla writes in his 2016 book, “The Shipwrecked Mind: On Political Reaction.” He continues:

Then alien ideas promoted by intellectuals — writers, journalists, professors — challenge this harmony, and the will to maintain order weakens at the top. (The betrayal of elites is the linchpin of every reactionary story.) A false consciousness soon descends on the society as a whole as it willingly, even joyfully, heads for destruction. Only those who have preserved memories of the old ways see what is happening. Whether the society reverses direction or rushes to its doom depends entirely on their resistance.

The Silicon Valley cohort Andreessen belongs to has added a bit to this formula. In their story, the old way that is being lost is the appetite for risk and inequality and dominance that drives technology forward and betters human life. What the muscled ancients knew and what today’s flabby whingers have forgotten is that man must cultivate the strength and will to master nature, and other men, for the technological frontier to give way. But until now, you had to squint to see it, reading small-press books or following your way down into the meme holes that have become the preferred form of communication among this crew.

–Ezra Klein, "The Reactionary Futurism of Marc Andreessen," The New York Times, October 26, 2023

Text

The Four Billionaires Who Want to Control the Universe

SCHEERPOST, September 8, 2023 In Jonathan Taplin’s new book, “The End of Reality: How Four Billionaires are Selling a Fantasy Future of the Metaverse, Mars and Crypto,” the internet innovation expert delves into the activities of a gang of four powerful oligarchs: Elon Musk, Peter Thiel, Mark Zuckerberg and Marc Andreessen, breaking down their increasing profits and infinite ambitions to control…

Text

stop gaslighting me. they already made a puss in boots movie it had the weird egg guy that looks like mark andreesen in it. they didnt make a second shrek spinoff movie and anyone who tries to tell me they did is lying and ill kill them

Text

Google bans anticompetitive vocabularies

Google is facing anti-monopoly enforcement action in the EU and the USA and the UK, with more to come, and the company is starting to get nervous.

It's just issued guidance to employees advising them against using words in their internal comms that would be smoking guns in these investigations: "market," "barriers to entry," and "network effects" among them.

https://themarkup.org/google-the-giant/2020/08/07/google-documents-show-taboo-words-antitrust

The memo detailing the new linguistic rules, which bears the anodyne title "Five Rules of Thumb for Written Communications," leaked to The Markup.

https://www.documentcloud.org/documents/7016657-Five-Rules-of-Thumb-for-Written-Communications.html

The memo cites other companies that got into trouble when internal memos came to light, such as Microsoft's infamous stated internal goal of "cutting off Netscape's air supply." It advises workers to discuss their plans in pro-competition terms.

Googlers are advised to discuss customer retention through product quality, not through "stickiness" or other euphemisms for lock-in; to explain how new products will succeed without "leveraging" existing successes, and to steer clear of "scale effects" as corporate goals.

They're enjoined from celebrating Google's market dominance, from discussing how Google "dominates or controls" its markets, and even from estimating what share of a market Google controls. They're also not allowed to define their markets, which would let others estimate their share.

This is going to make for some tortured backflips! Google's going to struggle to (say) improve "search" without defining what "search" is.

Ironically for a leaked doc, the doc advises the reader to assume every doc they produce will leak (!), but OTOH, points for realism.

For me, the most interesting thing about the memo is that it marks a serious turning point in the way that business leaders talk about competition. Peter Thiel famously said that "competition is for losers."

https://www.alleywatch.com/2015/08/according-peter-thiel-competition-loser/

Thiel's the quiet-part-aloud guy, but he's not alone. Famous investors like Warren Buffett and Andreessen Horowitz openly boast of a preference for investing in monopolies, and creating monopolies is SoftBank's stated purpose.

Every pirate wants to be an admiral. Capitalists who dream of dominance want competition. Capitalists who attain dominance want to abolish it.

Text

okay but i have to laugh at how dustin called eduardo a tax evader on twitter, so eduardo doesn’t follow him but follows chris, mark’s sister, one of mark’s closest friends marc andreesen, peter thiel, sheryl sandberg, and sean parker but no one follows eduardo except mark’s friend marc who was the one who called eduardo a sucker when he first invested in facebook and the one mark got in trouble with for messaging shit about the winklvei and eduardo to. and what’s full on trippy is the first person he followed out of all of them was sean and he followed sean, randi, chris, and peter all before he followed facebook and he doesn’t follow the winklevoss twins or divya. like sweetie you’re so obvious.

#he is such mark trash still someone go collect him and help him#but he so obviously isn't following dustin because of that and i'm laughing#cause he follows literally everyone else#eduardo saverin#tsn

Quote

The Observer has also learned that a Facebook board member and confidant of its CEO Mark Zuckerberg met Christopher Wylie, the Cambridge Analytica whistleblower, in the summer of 2016 just as the data firm started working for the Trump campaign. Facebook has repeatedly refused to say when its senior executives, including Zuckerberg, learned of how Cambridge Analytica had used harvested data from millions of people across the world to target them with political messages without their consent. But Silicon Valley insiders have told the Observer that Facebook board member Marc Andreessen, the founder of the venture capital firm Andreessen Horowitz and one of the most influential people in Silicon Valley, attended a meeting with Wylie held in Andreessen Horowitz’s office two years before he came forward as a whistleblower. ... Individuals who attended the meeting with Wylie and Andreessen claim it was set up to learn what Cambridge Analytica was doing with Facebook’s data and how technologists could work to “fix” it. It is unclear in what capacity Andreessen Horowitz hosted and who attended the meeting but it is nonetheless a hugely embarrassing revelation for Facebook, which was revealed last week to be the subject of a criminal investigation into whether it had covered up the extent of its involvement with Cambridge Analytica.

Facebook faces fresh questions over when it knew of data harvesting | Technology | The Guardian

#carole cadwalladr#the guardian#facebook#cambridge analytica#christopher wylie#mark zuckerber#marc andreessen

Photo

New Post has been published on https://coinprojects.net/three-potentially-profitable-crypto-trades-as-we-head-into-the-weekend/

Three potentially profitable crypto trades as we head into the weekend

It has been a rollercoaster week for cryptocurrencies. After a rally earlier in the week, the market has nosedived, and many top cryptocurrencies have shed most of the gains they made. However, some cryptocurrencies have good odds of doing well in the coming days because they have significant news coming up.

Bitcoin, too, is holding strong above the $23k mark despite the market correction and could help give the broader market momentum in the coming days. If you want to trade the market today, here are some of the top cryptocurrencies that are likely to do well soon.

Cronos (CRO)

Cronos (CRO) is one of the top cryptocurrencies likely to do well this week. This has a lot to do with the news that Crypto.com is powering a system allowing users to pay for fuel in crypto at gas stations across Australia.

While the payments will be made in Bitcoin, the impact will also be felt in the price of Cronos. That’s because it is the cryptocurrency that powers the Crypto.com ecosystem. As news of the Australian gas station deals filters into the market, Cronos (CRO) will likely record some positive price action.

Cronos also recently got FCA approval, a factor that could add to its adoption levels in the U.K. This is likely to add to the favourable price momentum, especially if the broader market turns bullish again.

Flow (FLOW)

Flow (FLOW) was one of the best cryptocurrency performers recently. It is still one of the top cryptocurrencies in the green. Its recent rally has much to do with confusion with Flow, the real estate venture backed by Marc Andreessen's firm, Andreessen Horowitz.

That said, a lot is going on with the FLOW cryptocurrency that could play well into its price action. One is the rising number of NFTs launching on the Flow blockchain, which is adding to the demand for FLOW. If the tide turns and the broader market becomes bullish again, FLOW will likely outperform most top cryptocurrencies by a considerable margin.

Ethereum (ETH)

Ethereum (ETH) has recently been a top cryptocurrency performer, reaching a high of $2,000 on August 14. In the short term, Ethereum will likely outperform the broader market, because investors are still counting on an even bigger price rally as the Merge draws closer.

Many analysts are already optimistic that the Merge will positively influence the price of Ethereum. For instance, Arthur Hayes, the co-founder of BitMEX, believes that the price of Ethereum could rise due to a mix of investor expectations and Ethereum's now deflationary nature. He added that the price would continue increasing for years until every human has an Ethereum wallet.

With such potential and the fact that Ethereum has been in a correction recently, this cryptocurrency could see FOMO buying if the broader market turns green again in the coming days.

By Motiur Rahman

#Altcoin #Bitcoin #BlockChain #BlockchainNews #Crypto #ETH #Etherium

Text

ARPANET, then the WWW

To my surprise, the Internet began much earlier than I thought, and I believe most of us assumed the same; perhaps the 1990s were when it started to settle into our homes. It emerged from the merging of several individual computer networks, the oldest of which is ARPANET.

In 1966, ARPA (the Advanced Research Projects Agency) ran this as one of its research programs. This is where the concept of the Internet as we know it was born: a network connecting several computers to increase computing power and decentralize the storage of information. The government needed a way to access and distribute any information in the event of a catastrophe.

The team that designed, built, and installed ARPANET was very diverse, consisting of electrical engineers, computer scientists, mathematicians, and advanced students. They recorded the results of their studies and research in a series of documents called RFCs (Request for Comments), which are available to anyone who wants to consult them.

In 1972, ARPANET was presented at the First International Conference on Computers and Communication in Washington, DC. The ARPANET scientists demonstrated that the system was operational by creating a network of 40 connected points at different locations. This stimulated research in the field, and other networks were created.

Then, in the early 1980s, computer development began to accelerate exponentially. Growth was so fast that there were fears the networks would become congested by the large number of users and the volume of information transmitted, driven by the e-mail phenomenon. The network kept growing exponentially, as the graph shows.

WWW

The World Wide Web (WWW) is a network of “sites” that can be searched and displayed using a protocol called HyperText Transfer Protocol (HTTP).
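
To make the idea of a "protocol" concrete: an HTTP exchange is plain text sent over a network connection. The sketch below builds a minimal GET request and reads the status line of a reply. It uses the later HTTP/1.1 message format rather than the simpler protocol of 1991, and the helper names `build_get_request` and `parse_status_line` are hypothetical.

```python
def build_get_request(host: str, path: str = "/") -> bytes:
    """Build a minimal HTTP/1.1 GET request as raw bytes."""
    lines = [
        f"GET {path} HTTP/1.1",  # request line: method, resource, protocol version
        f"Host: {host}",         # the Host header is mandatory in HTTP/1.1
        "Connection: close",     # ask the server to close the connection after replying
        "", "",                  # joining adds the last header's CRLF plus the empty line ending the headers
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_status_line(response: bytes) -> tuple:
    """Split the first line of a response into (version, status code, reason)."""
    first_line = response.split(b"\r\n", 1)[0].decode("ascii")
    parts = first_line.split(" ", 2)
    reason = parts[2] if len(parts) > 2 else ""  # the reason phrase may be empty
    return parts[0], int(parts[1]), reason


print(build_get_request("example.com"))
print(parse_status_line(b"HTTP/1.1 200 OK\r\nContent-Type: text/html\r\n\r\n"))
```

To try it against a real server, the request bytes could be sent over a socket opened with Python's `socket.create_connection((host, 80))`, and the first bytes of the reply passed to `parse_status_line`.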

The concept of the WWW was designed by Tim Berners-Lee and several other scientists at CERN (Conseil Européen pour la Recherche Nucléaire) in Geneva. These scientists were very interested in being able to easily search and display documentation over the Internet. The CERN scientists designed a browser/editor and named it WorldWideWeb. The program was free. It is not well known today, but many scientific communities began using it at the time.

The technology was presented to the public in 1991, but adoption was initially modest: at the end of 1992 there were only 50 websites in the world, and in 1993 there were 150.

In 1993, Marc Andreessen, of the National Center for Supercomputing Applications (NCSA) in Illinois, released Mosaic X, an easy-to-install, easy-to-use browser. It markedly improved the way graphics were displayed and was very similar to a present-day browser.

Once the WWW technology and browsers were released, the Internet began to open up to a wider public: commercial activities, personal pages, and so on. This growth accelerated with the arrival of new, cheaper, and more powerful computers.

Text

The Continuous Economy

Value

The Internet is now more than 20 years old, and so, after two decades of what we quaintly called e-commerce and now just call “business,” it’s appropriate to consider whether the concept of value has changed.

In a world of pervasive information devices, a reduction of many orders of magnitude in the cost of computing since the birth of the Internet, and more transactions occurring online than through physical exchange, can value still be the same?

Marc Andreessen famously observed that “software is eating the world,” and software has indeed become the dominant means by which consumers select, consume, and pay for goods and services.

So how is one piece of information more valuable than another? Or, more specifically, how is one piece of software more valuable than another?

A Brief – But Essential – Digression on the History of Value

In Medieval times, the value of a good was thought to consist of its utility, or usefulness, and its scarcity. So, wood in deserts would be more valuable than wood in rainforests. The problem with this view was that it did not take into account what it cost to produce something. Given a marketplace full of wooden wagons, which wagon would have the most value?

In the 17th and early 18th centuries, theorists like Petty and Cantillon tried to solve this problem by rooting value in the basic factors of production: labor and land, respectively. Some wood is harder to chop than others (apparently). Philosophers like John Locke, however, countered that utility remained a decisive factor, a point echoed by the economist John Law, who framed the water-diamond paradox: water is essential to life yet cheap, while diamonds are of little practical use yet dear, and to a parched man in the desert a diamond has no utility at all.

Notwithstanding this, throughout most of the 18th and 19th centuries, economists adopted a labor-centered theory of value. This made sense politically and socially as land was claimed for industrial-scale development and skilled tradesmen from the countryside were drawn into factory work.

But how much should they be paid? Karl Marx famously claimed that, “All commodities are only definite masses of congealed labor time.” Capital, like machines (and, much later, software), locked up value and released it to those who owned the capital rather than to those who made it, the laborers.

The problem with labor- and land-based theories of value, though, was that they did not explain why prices rose with demand even for goods whose supply always stayed the same; for instance, a cooper can only make so many barrels.

In the 19th century, Jevons and Menger revisited the utility theory of value, independently claiming that all value was based on utility. Jevons summed up the scale of the shift in a single chain of reasoning: “Cost of production determines supply, supply determines final degree of utility, final degree of utility determines value.” This was the so-called “marginalist” revolution.
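
Jevons' "final degree of utility" is what economists now call marginal utility: the extra satisfaction from one more unit of a good. The small sketch below assumes a hypothetical logarithmic utility function (chosen only because it is concave, not because Jevons used it) to show how the value of the last unit falls as supply rises, which is how the marginalists resolved the water-diamond paradox.

```python
import math


def total_utility(quantity: float) -> float:
    """Hypothetical concave utility: each additional unit adds less than the one before."""
    return math.log(1 + quantity)


def marginal_utility(quantity: int) -> float:
    """Utility added by the last unit when `quantity` units are available."""
    return total_utility(quantity) - total_utility(quantity - 1)


# The same utility function at different levels of supply:
# scarce (think diamonds) versus abundant (think water).
for supply in (1, 10, 1000):
    print(supply, round(marginal_utility(supply), 4))
```

Water as a whole is indispensable, but because it is abundant its last unit adds little; diamonds are scarce, so their last unit adds a lot. In Jevons' chain, supply sets the final degree of utility, and that in turn sets value.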

We finally arrive at the turn of the 20th century and the work of Alfred Marshall, who attempted to reconcile the labor and utility theories of value by considering “time effects,” i.e., the time each factor takes to have an impact on the final value.

So we now find ourselves with a basic model of value that is determined by the factors of production (land, labor and capital), utility and time.

This time dimension is critical to our story in the 21st century.

Indeed, 20 years ago, customers chose products based on product features. To some extent, that’s still true today. But it’s more important to recognize that customers in 2016 are continuously looking for new products, embracing them, and then continuously switching. In fact, the willingness to switch is so strong that, according to Harris Interactive, 86 percent of consumers quit doing business with a company because of a single bad experience.

If you think this is an outlier that only applies to hip Web properties, think again. Over 40 UK banks guarantee the switching of bank accounts at no cost to the customer.

So, it appears that we are now experiencing an economy with a very short memory for value.

The Frictional Value of a Product

We call the perceived value associated with being able to switch at no cost the frictional value of a product. Frictionless is better, and of higher utility, because it allows customers to embrace a product and then discard it much more easily when they find a better one.

Take this to the next logical business level and you see that some transformation of your organization is required.

Why is this an economic imperative right now? Is this just an academic idea that has no bearing on us working stiffs?

Consider a simple example. Two decades ago, you would choose a car based on your ideal product characteristics (make, size, etc.) and how much you could afford to spend. You would live with your choice while you paid it off, or until you could justify changing it. The idea of choosing products based on product features is, of course, at the core of all consumer choice. If this weren’t true, how would we decide what to buy?

But what happens when we relax the assumption that you have to live with your choice for some period of time? What would happen to your choice of car if any choice you made today could be rescinded tomorrow and a new choice made? What if your car was updated every week instead of every year? What if there was an April 2016 model of the new Ford instead of just the 2016 model?

Well, the customer now has the opportunity to make feature choices at a greater frequency than was possible in the past. This is already happening at the software level as car operating systems are updated wirelessly and provide new features, through software updates, to existing owners. In this case, the car becomes a platform for software. The physical choice of platform (car) is also beginning to change, though. For those who wish to change their car regularly, there are many services (e.g., Zipcar, http://www.zipcar.com) that allow you to rent access to a car and return it (or just leave it somewhere) when you’re done.

The contrasting cases, a car you can easily swap because you don’t own it and a car you own that receives regular software updates, are instructive: both aim to reduce the friction of making changes. The former reduces the friction of changing the physical product itself; the latter speeds up the addition of new features, independent of the physical platform. In both cases, though, these features are accessed through software: the car’s operating system or a car app.

Let’s apply this to software itself.

Imagine if, for a particular piece of software, say a task management application, you could get it for nothing (or at a very low price) – such that changing it becomes unconstrained by its cost to you. Add to this that new features are being bolted on to the application – and its competitors’ products, too – very frequently. The only feature limiting your ability to move to another application is the application’s ability to let you transport your personalization data (e.g. your tasks in the task app) to the new app. If your requirements changed very slowly, and other products changed very slowly, it is likely – as in the past – that the value of switching would be low. But if you were constantly on the lookout for new features and wanted to try them out, and new features were continuously being added, it follows that every app you chose to move to would need to enable you to move “back” again. This is because the prevailing assumption of the software product consumer is that other applications will conceivably meet their needs better in future.
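
The key condition here, being able to carry your personalization data out of one app and back in again, amounts to a lossless export and import. Here is a minimal sketch, assuming a hypothetical task schema and a made-up `tasks-v1` JSON format tag; neither comes from any real product.

```python
import json
from dataclasses import asdict, dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Task:
    """Hypothetical task record: the user's personalization data in a task app."""
    title: str
    due: Optional[date] = None
    done: bool = False


def export_tasks(tasks: List[Task]) -> str:
    """Serialize tasks to a neutral JSON document that another app could read."""
    return json.dumps(
        {
            "format": "tasks-v1",  # hypothetical format tag, not a real standard
            "tasks": [
                # Dates are not JSON-native, so store them as ISO 8601 strings.
                {**asdict(t), "due": t.due.isoformat() if t.due else None}
                for t in tasks
            ],
        }
    )


def import_tasks(document: str) -> List[Task]:
    """Rebuild tasks from an exported JSON document, rejecting unknown formats."""
    data = json.loads(document)
    if data.get("format") != "tasks-v1":
        raise ValueError("unsupported export format")
    return [
        Task(
            title=item["title"],
            due=date.fromisoformat(item["due"]) if item["due"] else None,
            done=item["done"],
        )
        for item in data["tasks"]
    ]


tasks = [Task("Write report", date(2016, 4, 1)), Task("Call bank", done=True)]
document = export_tasks(tasks)
print(document)
print(import_tasks(document) == tasks)  # the round trip should lose nothing
```

The round trip is the point: if importing an export reproduces the original data, a user can leave the app and come back without cost, which is the low-friction property the author argues products now need.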

Obviously, every software product has a set of threshold features without which consumers will tend not to choose it. A car needs to be waterproof (!) with airbags and ABS brakes. A task app needs to allow you to add dates to your tasks. This set of features starts off as what is often termed a minimum viable product.

I believe that this set now includes the ability to switch away from the product and back again. In other words, software products are being designed to reduce utility friction.

Value and Time in Software

What does this mean in terms of defining value for software?

For economists of the marginalist persuasion, this supports the view that value is driven by utility. But it also shows that utility increases as the time it takes for a feature to have an effect in the market shrinks.

It therefore follows that we need product development processes that are lean and high velocity, enabling the translation of new product features into customer choice as easily as possible.

> I call this trend the “continuous economy.”

To return to the issue of labor value, though, companies simply can’t compete today unless they’re continuously shipping innovative software products and improving the way they produce them.

The reason for this is easy to see – increased value is dependent on increased labor productivity as well as increasing utility at a specific time. Or, put another way, engineers need care and feeding if they’re to produce the goods.

Indeed, the organization, and its people and processes producing software, must be able to pivot with agility in what seems like a nanosecond to keep pace with the quicksilver velocity of the 21st century’s continuous economy.

This is all very different from what we’ve experienced, even in the recent past.

Two decades ago, transformation was a discrete event. An organization confronted a new competitor in the market, so it responded by developing and deploying new operations, organization or software to meet the challenge.

Today, we need to continuously develop and deploy new software as part of an embedded and sustained organizational culture.

If you develop and deploy discretely in the continuous economy, you won’t be in business very long. The type of skills you need in this economy are those developed continuously as you learn from your customer.

Summary Equation

Continuous Software Delivery = Continuous Product Delivery = Continuous Organizational Transformation

Every organization today is a software organization because every organization’s products are at least partly software. Therefore, continuous software delivery enables continuous product delivery, which is what business requires right now.

And, taking this a step further, once an organization truly embraces continuous delivery, it must also embrace continuous testing and continuous integration.

There are tremendous benefits for businesses here.

If you’re able to pull all this together – the continuous shipping of code, along with continuous testing and continuous integration – you’ll almost certainly get more stable and easily understood systems, systems that can be broken down into smaller increments, and systems that customers can readily interact with.

On a macro-level, this continuous economy, created by Web innovators, threatens to fundamentally re-shape traditional enterprises, which are now being called upon to continuously transform.

But, more specifically, the continuous economy means that enterprises must continuously reshape and refashion their offers to customers.

Disruption and Value

In the continuous economy, companies that learn more slowly than their customers are doomed, because they will be disrupted.

Obviously, this means acquiring new knowledge fast. But, more profoundly, in order to continuously improve your teams and your products, and meet customers where they are today, you need to learn how to learn.

Let’s consider why this is the case.

We use the term “disruptive innovation” so often these days that its real meaning has been obscured. And, from my perspective, it’s a great example of companies learning in the wrong way.

In the mid-1990s, Clayton Christensen coined this term. The basic idea was that competitors challenging the established leaders in a market fly under the radar of those leaders because their products are initially of much lower quality.

Of course, these competitors also produce at much lower cost and typically invest a great deal of effort increasing quality over time. So, as they attack the established market from the bottom with low-price products, they’re also innovating the quality of their products until they can capture the high-end with high-quality products at a fraction of the production cost of the established companies.

If ever there was an example of disruption, it’s the personal computer: initially hard to use and configured by specialists, by the 1990s it had replaced most of the word processing hardware and typewriters in industry.

Disruption occurs because companies learn using established methods; in other words, they listen to the customers they have, who buy the most from them. The process of responding to a customer in this way could be called single-loop learning – your customer tells you that the noise of the typewriter is deafening so you make a quieter one.

There is another way, however.

Learning

A simple example from Chris Argyris explains the concept of “double-loop learning” –

> “[A] thermostat that automatically turns on the heat whenever the temperature in a room drops below 68°F is a good example of single-loop learning. A thermostat that could ask, ‘why am I set to 68°F?’ and then explore whether or not some other temperature might more economically achieve the goal of heating the room would be engaged in double-loop learning.”
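To make Argyris’s distinction concrete, here is a toy sketch in Python. It is an illustration, not Argyris’s own model: the linear heating-cost function, the candidate range of setpoints and the “minimum comfortable temperature” goal are all assumptions introduced here. The single-loop function only compares the room against a fixed 68°F setpoint; the double-loop function steps back and asks which setpoint still meets the underlying goal (a comfortable room) most economically.

```python
# Toy model of Argyris's thermostat example. The cost model, candidate
# setpoints and comfort threshold are illustrative assumptions.


def single_loop(room_temp_f: float, setpoint_f: float = 68.0) -> bool:
    """Single-loop: turn the heat on whenever the room is below a fixed setpoint."""
    return room_temp_f < setpoint_f


def heating_cost(setpoint_f: float, outdoor_f: float, cost_per_degree: float = 1.0) -> float:
    """Hypothetical cost: proportional to how far the setpoint sits above outdoors."""
    return max(0.0, setpoint_f - outdoor_f) * cost_per_degree


def double_loop(outdoor_f: float, min_comfortable_f: float,
                candidates: range = range(60, 76)) -> float:
    """Double-loop: question the setpoint itself.

    Among candidate setpoints that still meet the underlying goal (a room
    warm enough to be comfortable), pick the one that is cheapest to maintain.
    """
    acceptable = [t for t in candidates if t >= min_comfortable_f]
    return min(acceptable, key=lambda t: heating_cost(t, outdoor_f))
```

With 40°F outside and a comfort floor of 65°F, `double_loop(40.0, 65.0)` picks 65, so a 66°F room that the fixed single-loop rule would heat is now left alone. The point is not the arithmetic but the shift in question: from “am I below my setpoint?” to “is this the right setpoint?”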

So, what if typewriter manufacturers had continuously reflected on whether they were serving the right customers? They might have seen the massive shift in usage of the PC in academic institutions and the reduction in the use of their hardware for traditional use in these places.

But, to be fair, this is not an easy process – learning what you still need to learn.

Organizations that transform at speed need to have an awareness of where they need to develop so that they can transform “toward” it.

So, sure, you have to learn from customers and gain an understanding of what they want and need.

Every organization does that now.

But it’s not enough to simply grasp that insight and intelligence and run with it.

No, you have to continuously improve how and what you learn from customers in this fluid and frictionless world.

Let’s see what this means in real-world organizational terms for a software company (and we’re all working for software companies).

Assume, for a moment, that you’re shipping an update of 1,000 lines of software code each week. That’s great. Maybe you are using continuous delivery, DevOps or some other unicorn methods.

But it’s not continuous learning – not unless you’re continuously improving how you’re learning from customers and continuously improving the teams inside your organization.

The Continuity Principle

If you can’t continuously improve how you’re learning from customers and continuously improve the teams inside your organization, you can’t ship continuously improved products. So, you need to improve what you ship and how you ship to continuously transform your organization.

Here’s an example of how this works.

Let’s say that your software development team thinks that the customer wants an improved red button that’s square instead of round. Okay, development of an improved red button proceeds and is completed in one week. But, at the same time, the team creates an automated test for the red button as well as clear metrics for determining if the red button is actually the problem. As we will discuss in more depth in Part 3, the idea that the button needed changing is a hypothesis until it has been tested with customers.

So, in addition to shipping the new square button to some customers, the team keeps some subset of customers using the round button. The customer test is then simply whether the click rate of the square and round button differs. This is classic A/B testing used every day by Web companies.
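The square-versus-round test can be sketched in a few lines of Python. The specifics here are assumptions, not details from the text: customers are bucketed by hashing a customer ID (so each customer always sees the same button), 10 percent see the new square button, and the two click rates are compared with a standard two-proportion z-test rather than any particular vendor’s tooling.

```python
# Sketch of an A/B test for the square vs. round button. The hashing scheme,
# the 10% share and the z-test are illustrative choices, not a prescribed method.
import hashlib
import math


def assign_variant(customer_id: str, square_share: float = 0.10) -> str:
    """Deterministically bucket a customer so they always see the same button."""
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    return "square" if bucket < square_share else "round"


def two_proportion_z(clicks_a: int, views_a: int,
                     clicks_b: int, views_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both buttons have the same click rate."""
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal tail: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, 120 clicks from 1,000 square-button views against 100 clicks from 1,000 round-button views gives z of about 1.43 and p of about 0.15: a higher click rate for the square button, but not yet strong evidence that the button was the problem. That is exactly the kind of result that should feed the next cycle rather than be declared a win.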

If the processes are not currently in place for the development team to receive these metrics (many teams are kept in splendid isolation from the results of their work), then establishing this relationship, perhaps with the Web operations team, will also be required. Agile retrospectives and blameless post-mortems are also great examples of activities that promote double-loop learning.

This is as much a requirement of successful deployment of these features as the features themselves.

The focus on the delivery of product features, as well as developing internal capability to understand the success of the features, is a core principle of continuous learning.

Following directly from this is the insight that the capability of the team in general precedes the capability of the product being developed.

Therefore, we need to ensure that our teams are improving continuously, too. We know that we have to release new features frequently. And note – we learn very little of value by deploying to production without releasing to customers.

This results in “The Continuity Principle”:

> We build to release, we release to learn, we learn to build.

To recap, we need to build software fast, always improving both it and the teams that produce it; creating an environment that supports learning is of equal importance to delivery.

Underpinning the Continuity Principle is another principle that gets to the heart of how we can enable teams to learn and build at velocity.

## The Two-Product Principle and the IOTA Model

This is the “Two-Product Principle.”

In a nutshell, the Two-Product Principle says that you always ship twice – every time you release to customers, you’re releasing the next iteration of your team’s capability to learn from its customers.

How does a team accomplish this?

Let’s get practical.

To illustrate the approach, I’ll introduce a model called IOTA that we use in product development and that ties many of the concepts discussed in this series together. The model is diagrammed below and the remainder of this article uses an example to show its use “in the wild.”

Think of the product we ship to the customer as “Product 1” – this is all about realizing our hypotheses of what we think the customer wants. But it isn’t practical action until we have actually delivered it and received feedback from the customer.

Meanwhile, the internal capabilities we wish to develop – “Product 2” – are our view of what we need to improve while we build. These capabilities are, therefore, not theoretical in the same way as “Product 1.” We can see their effectiveness, and our effectiveness at building them, the very minute we decide we need them. “Product 2” is at hand immediately.

How often, though, do we release software features that were “urgent,” but whose effectiveness is never actually measured?

Every feature should be measured.

And every feature is the realization of a hypothesis concerning capabilities the customer not only needs but will value.

What do we do with the results of our measures?

These inform the next cycle (or sprint), where we either build on our success when it comes to accurately providing for the customer or decide whether to remediate our miscalculation.

A great example of this, which will be familiar to software practitioners in banks everywhere, is the budgeting application that many banks have built into their online offerings. The hypothesis was that customers needed help budgeting. This is almost certainly true, but in most cases it was tested by providing a budgeting solution and then measuring its use, which, incidentally, is very low across the board.

Instead, consider this simplified IOTA model for the budgeting problem –

+ Hypotheses

Customers want to budget using historical data from their bank accounts rather than by controlling future spending (which is how most people understand the term “budget”).

Customers understand how they spend money by categorizing their transactions.

+ Capabilities (Features)

Create an alert that notifies the customer whenever spending in a category goes over a budgeted amount, e.g. $100 on Amazon.

Enable the categorization of transactions.

+ Conditions

Obtain from the data warehouse team any attributes they already use to analyze transactions so that we can provide categories for users as a starter set.

Determine if we can deduce categories of things bought using the Amazon transaction reference.

+ Targets

Within a month 5 percent of Internet banking customers have categorized some transactions.

More than 75 percent of those customers have activated a budget alert.
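The simplified model above can be captured as a small data structure, which also makes the end-of-cycle check explicit. This is a minimal sketch, not a definitive representation of IOTA: the field names mirror the four headings, while the `Target` class, the metric names and the “met when measured value is at least the threshold” rule are hypothetical simplifications.

```python
# Minimal sketch of the simplified IOTA model as data. Metric names and the
# ">= threshold" rule are hypothetical simplifications.
from dataclasses import dataclass, field


@dataclass
class Target:
    metric: str       # hypothetical metric name used to look up a measurement
    threshold: float  # simplification: met when measured value >= threshold
    description: str = ""


@dataclass
class IotaModel:
    hypotheses: list[str]
    capabilities: list[str]  # features shipped to customers (Product 1)
    conditions: list[str]    # internal capabilities the team builds (Product 2)
    targets: list[Target] = field(default_factory=list)

    def unmet_targets(self, measured: dict[str, float]) -> list[Target]:
        """Return targets that were missed; a missing measurement counts as missed."""
        return [t for t in self.targets
                if measured.get(t.metric, float("-inf")) < t.threshold]

    def next_step(self, measured: dict[str, float]) -> str:
        # Missing any target sends the next cycle back to the hypotheses.
        if self.unmet_targets(measured):
            return "revisit hypotheses"
        return "build on success"


# The budgeting example, expressed with this structure.
budgeting = IotaModel(
    hypotheses=[
        "Customers want to budget using historical data from their accounts",
        "Customers understand spending by categorizing transactions",
    ],
    capabilities=["Category budget alert", "Transaction categorization"],
    conditions=[
        "Starter categories from the data warehouse team",
        "Deduce categories from the Amazon transaction reference",
    ],
    targets=[
        Target("pct_customers_categorized", 5.0, "Within a month, 5% have categorized"),
        Target("pct_categorizers_with_alert", 75.0, "More than 75% of those activated an alert"),
    ],
)
```

Fed the kind of mixed result discussed below, for instance `{"pct_customers_categorized": 20.0, "pct_categorizers_with_alert": 10.0}`, `budgeting.next_step(...)` returns `"revisit hypotheses"` because the alert target is missed even though the categorization target is comfortably exceeded.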

A few things to note –

If the targets are not met, it automatically results in a questioning of the entire cycle from the start (hypotheses) again. In the next sprint, we may decide to further refine our target because we got mixed results – for instance, 20 percent signed up, but only 10 percent used alerts.

Notice also the conditions (Product 2). These actions will not necessarily improve the team if the basic hypotheses are false, i.e. if customers really do not want to budget using their online banking service, but they may still be useful. Using Product 2 to investigate more deeply and probe outside the current scope of the hypotheses can be a useful means of avoiding the disruption trap.

To see why this is the case, consider a bank that wanted a budgeting application because it was afraid of disintermediation by apps like Mint.com. Its response was to create features similar to those shown above. After seeing the results above, the bank decided to test the ability to decline transactions by SMS, based on the budget categories, implemented as an MVP that enabled this only for Amazon purchases. On release, the adoption of both categorization and alerts spiked sharply.
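The decision logic behind the alert and the Amazon-only SMS MVP might look something like the sketch below. Everything in it is hypothetical: the `Transaction` shape, the keyword-based starter categorizer and the decision labels are illustrative assumptions, and a real bank would draw categories from its own transaction attributes (the Conditions above).

```python
# Hypothetical sketch of the category budget alert plus the Amazon-only
# "decline by SMS" MVP. Names, keywords and labels are illustrative only.
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Transaction:
    merchant_ref: str  # raw merchant reference as it appears on the statement
    amount: Decimal


# Hypothetical starter mapping from merchant-reference keywords to categories.
STARTER_CATEGORIES = {"AMAZON": "Amazon", "AMZN": "Amazon", "GROCERY": "Groceries"}


def categorize(tx: Transaction) -> str:
    """Assign a category by keyword match on the merchant reference."""
    ref = tx.merchant_ref.upper()
    for keyword, category in STARTER_CATEGORIES.items():
        if keyword in ref:
            return category
    return "Uncategorized"


def check_transaction(tx: Transaction, spent_so_far: dict[str, Decimal],
                      budgets: dict[str, Decimal]) -> str:
    """Decide what to do with an incoming transaction.

    "approve": no budget for its category, or the budget is not exceeded.
    "alert": the budget would be exceeded (the original alert capability).
    "ask_to_decline_by_sms": exceeded, and the category is Amazon (MVP scope).
    """
    category = categorize(tx)
    budget = budgets.get(category)
    if budget is None:
        return "approve"
    new_total = spent_so_far.get(category, Decimal("0")) + tx.amount
    if new_total <= budget:
        return "approve"
    return "ask_to_decline_by_sms" if category == "Amazon" else "alert"
```

The design difference the bank stumbled on is visible in the last line: the same over-budget condition either produces a passive notification or hands the customer control over the transaction itself.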

For those in banking, there are many variables in this; but for the purposes of elaboration here, it’s clear that the ability to control the transaction seems important to the value perceived by the customer. And the basis for disintermediation – flashy graphical features (like budget pie charts) – seems less valuable than transaction control.

As a result, in the next cycle, we’re forced to look at other transaction types, or perhaps payment methods, that are more transparent. In this way, we use the customer to investigate value more openly. The basis for this will often be those conditions built up in Product 2 activities.

There are many Product 2 examples that are very mundane, however, like getting more powerful laptops for the team so they can run full local testing, or writing automated tests for dependent APIs.

Either way, ensuring that Product 2 features are given the same priority as Product 1 features means that, over time, the team improves the conditions within which it works, as well as its understanding of the broader ecosystem within which the product functions.

If you take this model to heart, then your organization will do all it can to shorten the time to deployment and action in order to shorten the time to gain new learning in the market. This limits the length of the costly business guessing game.

Change

I have yet to find a team that did not embrace the IOTA model as a method for sprint planning (accompanying their existing methods).

If we look deeply into what it means to operate at velocity, we see that it has to do with ensuring that those who are in control of the factors of production (i.e. the engineers in control of the code) are also intimate with the needs of the customers.

Indeed, we are in the midst of a maker-driven revolution. The makers, and not the managers, are at the sharp end of deciding what will win in the market.

This is difficult to absorb for the majority of large corporate teams.

The secret in driving this transformation is to realize that the principles we use for software development – small batches, frequent iterations, continual value delivery – apply equally to effective organizational transformation.

The key here is picking a project, then assembling as close to a full-stack team as you can, with a product owner, or at least a business representative who is open to dialogue about what to develop next.

Even in the case of a fairly traditional project manager who wants his or her 500 stories delivered in the next 18 months (no arguments), the practice of stating hypotheses and measuring success is difficult for any commercially minded manager to refute.

So choose an organizational barrier to overcome, one that the whole organization will agree is important but thinks insurmountable. In some companies this is as simple as provisioning a VM in less than a month (!), but in others it might be more complex. The problem could be the massive drag some compliance requirement puts on value delivery.

Either way, the combination of a project that delivers fast, actively mines Product 2, and also shows a solution to a recurrent organizational nightmare, is unstoppable.

And here’s another hard lesson – Individuals don’t change organizations (even if they’re the CIO), events do.

Every project you work on should be as much a value event for your customers as it is for your organization.

At the same time, every project iteration is an opportunity to create new capabilities, both for your customer and for your organization.

An event in this definition has a special meaning. An event is the visible solution to a problem the organization thought was unsolvable. An event triggers a questioning process within an organization, and the questions lead to conversations among teams about what is possible. We move from a language of impossibility to a language of possibility. So, for example, “What would it take for us to reduce our infrastructure provisioning process from two weeks to two minutes?”

The ELSA Model

This requires the organization to communicate in a language of possibility, which is where our ELSA (Event Language Structure Agency) Model comes in.

And when people at all levels of the company communicate in this language of possibility, when the impetus for transformation doesn’t just come from the top down, everyone wants to pitch in, take responsibility for change and help solve recurrent problems – not just anointed, or self-anointed, individuals.

Continuous transformation relies on effective communication that breaks down silos, because this is the only way to get insight from everybody on what needs to be built now, what the design interaction should be today and what organizational changes are needed immediately – not in the future, and not based on the past. Too often, we try to anticipate the future in software development, but in continuous transformation the priority is to act on the present.

This is the real science of action.

Conway’s Law and Anticipating the Outcome

Conway’s Law was formulated by Melvin Conway in the late 1960s, and it has had the same impact on how we think of technology transformation as Moore’s Law has had on how we build computers. Conway’s Law is usually paraphrased as “Organizations will tend to build systems that reflect their communication structures.” This simple idea has some very powerful implications.

Consider the typical separation between the functions required to deliver software in a technology organization. For simplicity, let’s say it’s Development to Testing to Quality Assurance to Deployment.

If you are solely concerned with your functional role, say development, and not the final outcome – successful software the customer loves using – then you will tend to optimize for that. Testing is somebody else’s problem. We see this with the frightening “systems integration testing” phase where our individually crafted units all come together. The feedback from this testing phase to the developers is often imprecise. This is because the testers test the outcome, as defined in their tests, and use this language to describe success, whereas the developers need to understand which part of their code is not functioning correctly.

Consider what happens when we collapse unit and integration testing into development itself. Developers take responsibility for the quality of the tests and the code across the full scope of the application being developed. They are responsible for creating working software, not just delivering code for testing. Another outcome is the increased velocity that arises from removing the hand-off between development and testing.

The most pernicious effect of Conway’s Law is that the silos we create promote supervision of the hand-off between the silos. If you were a tester and were measured on the success of your team at testing, you would want to ensure that you received code in a manner that optimized your ability to test it. Not meeting a standard format or method would mean that you would be held responsible for the low-quality inputs of another team! Therefore somebody has to keep an eye on this and measure how often this failure occurs. Of course, with all these “managers” across the functions, there need to be additional managers who collate their findings and report back up to executives on the overall quality of delivery. In lean terms, this is all “waste” – not adding to the actual quality of the outcome for the customer.

Our organizations have many smart and experienced people, so why do we do it this way? Consider a case where the project we were doing required a single line of code to be deployed to production. In this extreme example, the ability of a group of people (even in their silos) to quickly agree on what was good enough and promote it to production would be much greater than for a much larger block of code or functionality. Why do we pass such large functional units between the silo functions at one time; why do we not break them up into smaller units? The answer lies in another key aspect of large enterprise planning.

We want to do big things.

Doing big things entails a great deal of risk. We want to ensure that we do each piece of the big thing well, but we also want to ensure that each piece is done by those who can do it best. So we create specialized silos with experts in each. Each of these experts lets us know what could possibly go wrong with “the big thing.” We look into the future and try to anticipate every eventuality. This is called risk management. We then create processes to check for these risks as the project progresses.

Of course, we are subject to confirmation bias here, as we tend to look for data that confirms our risk hypotheses. Because of these processes, we believe that we can do big things. After all, with all these resources focused on managing risk, we should be able to do really big things! The reality, as anyone who has managed very large projects will know, is that the risks that sink a project are either completely unexpected and impossible to anticipate, or emerge from the internal dynamics of the project as its requirements evolve. This is why the research shows very strongly that large, year-long projects seldom, if ever, deliver as predicted or required, on the planned budget or timeline.

Even with great risk management processes, large blocks or units of work are very difficult to evaluate for quality. If you develop and integrate months’ worth of work, it is likely that things have changed since you specified the work, and once problems are found, it is very difficult to separate what is functioning correctly from what is causing a specific error.

What we are trying to establish is an organization capable of setting a big vision but breaking its delivery into small pieces, using teams that are unconstrained by the traditional functional silos.

Many approaches have been developed to help us get to this point.

Some address specifically the issue of trying to remediate the complex interdependent code bases that traditional organizations create:

Brian Foote and Joseph Yoder described the “Big Ball of Mud,” a pattern that captures the fact that many legacy systems in the enterprise are haphazardly structured and, thus, difficult to integrate, grow elegantly or replace.

In Domain-Driven Design, Eric Evans identified the concept of the “Bounded Context,” the practice of breaking complex systems down into well-defined areas with explicit, walled-off boundaries.

In response to these problems, some practices have emerged and begun to attain great currency –

+ Continuous Delivery described by Jez Humble and Dave Farley in their book of the same name.

+ DevOps as defined by Adam Jacob at Chef Software

+ Agile Practices as defined in the Agile Manifesto

We need to respect this thinking, but if we’re really interested in transformation, we can’t be imprisoned by lineage. And we can’t try to reconcile 15 different languages and 10 vague organizing principles to create a hybrid. We also need to make sure we’re not bogged down in process.

The bottom line is that we have to be like Bruce Lee (to borrow the metaphor from Adam Jacob), who stripped martial arts down to their simplest essence in order to conquer an opponent. For our part, we have to focus on the core idea of continuous transformation – continuously changing the way we deploy our people, processes, skills and resources to satisfy customers. And, right now, that means acquiring new knowledge fast. The same old knowledge, the knowledge of the past, and thinking that applies solely to the software development processes, just doesn’t work in the world of now.

Every Act of Design Is an Act of Feedback

Technology companies frequently throw a ball up in the air and walk away – before it actually lands. This prevents them from obtaining crucial feedback. We see this in software development when an organization releases a new quarterly product or feature and returns four months later; sadly, this approach doesn’t allow for much effectiveness tracking, learning, or improvement.

That is why every single action in the continuous transformation process needs to be an act of design, and every act of design is an act of feedback. In continuous transformation, you’re continuously getting feedback, whether you’re shipping product externally to a customer or making changes inside your organization. You can see this in the IOTA Model, which encourages small, very fast increments so you can test your assumptions about the world as well as your effectiveness in responding to those assumptions.

Entering the Crucible

In this approach, we get people and organizations geared up for continuous transformation by putting them through an exercise we call the Crucible. Basically, once you’re in the Crucible, we ask you and your team to think hard about what software you can build in two weeks – what your assumptions are, what the product improvement is, what the organizational learning change is, and how you’ll measure success.

The Crucible starts with a two-minute orientation during which the group discusses what’s possible in two weeks. Then, once a decision about what’s going to be built has been made, the team has five minutes to construct an IOTA Model. After that, it reflects for five minutes on how it worked and what it created. Finally, there’s a rest period, followed by non-work conversation.

We run this exercise 10 times in a row. At the end of 10 iterations, the team is incredibly clear about what can be built in two weeks, what problem can be solved in two weeks and what can be fixed within the organization in two weeks. It’s simple, stripped down and perfectly clear, so there’s no need for estimation.
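The Crucible’s timing is simple enough to lay out as a timetable. This sketch takes the 2-, 5- and 5-minute phases and the 10 iterations from the description above; the length of the rest period is not given there, so `rest_minutes` is a hypothetical parameter, and the non-work conversation is folded into the rest period.

```python
# Sketch of a Crucible timetable. Phase lengths and iteration count come from
# the text; rest_minutes is a hypothetical parameter.
from datetime import datetime, timedelta

WORK_PHASES = [("orientation", 2), ("build IOTA model", 5), ("reflection", 5)]


def crucible_schedule(start: datetime, rest_minutes: int,
                      iterations: int = 10) -> list[tuple[int, str, datetime, datetime]]:
    """Return (iteration, phase, start, end) tuples for the whole exercise."""
    schedule = []
    t = start
    for i in range(1, iterations + 1):
        for phase, minutes in WORK_PHASES + [("rest", rest_minutes)]:
            end = t + timedelta(minutes=minutes)
            schedule.append((i, phase, t, end))
            t = end
    return schedule
```

With a 3-minute rest, for example, the whole exercise takes 10 × (2 + 5 + 5 + 3) = 150 minutes, so ten full planning cycles fit comfortably into an afternoon.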

Think of the Organization as a Product Venture

If you have 20 teams operating at this speed and cadence – and with this much clarity – you will have continuous transformation in your organization. And the continuous change will actually address and solve recurrent problems that exist for customers right now.

There’s one last part of continuous transformation, and that’s mindset. Organizations that want to continuously change need to think of themselves as product ventures – a collection of products and a collection of decisions to invest in those products.

And every team in a continuously transforming organization is a product team; so each team must include people who reflect the entire stack – infrastructure people, architecture people, apps people, code people, people who talk to customers and people who ask customers about prototype features, for example.

Team members also need to understand the economics and value of what they create. We must make their work visible. You write the software, you see the software in the world and you know it made a difference in the world – for the organization and the customer. This is the link to reality, and it is essential to creating better software faster in an organization that is continuously transforming.


INTERNET

TELEGRAPH: Before the Internet was created, the main way to communicate digitally was the telegraph. The telegraph, invented around 1840, sent electrical signals along wires connecting an origin and a destination, and used Morse code to encode the information.

It made long-distance communication efficient: using coded signals, it transmitted messages very cheaply.

ARPANET: In 1958 the United States, through the Department of Defense, founded the Advanced Research Projects Agency (ARPA). ARPA was made up of some 200 top-level scientists and had a large budget. It focused on creating direct communication between computers so that its different research centers could communicate with each other. In 1962, ARPA created a computer research program under the direction of J. C. R. Licklider, a scientist from MIT (Massachusetts Institute of Technology). By 1967 enough work had been done for ARPA to publish a plan for a computer network called ARPANET. ARPANET brought together the best ideas from teams at MIT, the National Physical Laboratory (UK) and the RAND Corporation. The network kept growing, and by 1971 ARPANET had 23 connected hosts.

From ARPANET to the Internet: In 1972 ARPANET was presented at the First International Conference on Computer Communication in Washington, DC. The ARPANET scientists demonstrated that the system worked by setting up a network of 40 connected points in different locations. This stimulated research in the field, and other networks were created.

At that time the world of networks was somewhat chaotic, although ARPANET was still the “standard.” In 1982, ARPANET adopted the TCP/IP protocol, and at that moment the Internet was born (the name is short for “internetwork,” a network of networks).

From ARPANET to the WWW: In the early 1980s, computers began to spread exponentially. Growth was so fast that there were fears the networks would become congested by the large number of users and the volume of information transmitted, driven by the rise of e-mail. The network kept growing exponentially, as the graph shows.

WWW: The World Wide Web (WWW) is a network of “sites” that can be searched and displayed using a protocol called HyperText Transfer Protocol (HTTP). The concept of the WWW was designed by Tim Berners-Lee and a group of scientists at CERN (Conseil Européen pour la Recherche Nucléaire) in Geneva. These scientists were very interested in being able to search for and display documentation easily over the Internet. The CERN scientists designed a browser/editor and named it WorldWideWeb. The program was free. It is not well known today, but many scientific communities began using it at the time. In 1991 this technology was presented to the public, although its uptake was not spectacular: at the end of 1992 there were only 50 websites in the world, and in 1993 there were 150. In 1993 Marc Andreessen, of the National Center for Supercomputing Applications (NCSA) in Illinois, released Mosaic for X, a browser that was easy to install and use. It brought a notable improvement in the way graphics were displayed and was very similar to a present-day browser. Following the release of WWW technology and of browsers, the Internet began to open up to a wider public: commercial activities, personal pages and so on. This growth accelerated with the arrival of new, cheaper and more powerful computers.

TIM BERNERS-LEE: In 1989 Berners-Lee created the World Wide Web (WWW), a global hypertext project that would, for the first time, allow the world to work together over the network. Berners-Lee wrote HTML (HyperText Markup Language), which establishes links to other documents on a computer, and devised an addressing scheme that gave each page on the Web a unique location, or URL (Uniform Resource Locator).

He then established a set of rules called HTTP (HyperText Transfer Protocol) for transmitting information across the Web. In 1991 he gave Internet users around the world free access to the software. Over the next two years he refined the design of the Web based on feedback from people using the Internet.

30/09/21


In 1998, the urban planning student Mohammed Atta handed in his master's thesis at Hamburg's University of Technology. Examining in depth the architecture of Aleppo's historic Bab al-Nasr district, Atta's thesis presented a picture of the human-scale "Islamic-Oriental city," whose winding cobbled streets, shaded souks and alleys carved from honey-coloured stone had been violated by the concrete and glass boxes of liberal modernity.

Le Corbusier’s rectangular forms, the alien importation of French colonial planners, were aped by Syrian planners after independence, Atta’s thesis observed, an architectural symbol of Islamic civilisation’s total subjection to the West. Three years later, Atta’s critique of modernist architecture as a symbol of Western domination assumed its final form when, as the leader of the 9/11 hijackers, he flew American Airlines Flight 11 into the World Trade Center, the glittering towers in the heart of the liberal empire standing as a symbol for Western modernity itself.

The Syrian architect Marwa al-Sabouni, a student and admirer of the late Sir Roger Scruton, likewise sees in the Middle East’s modern architecture a tragic symbol of “a region where even the application of modernism has failed,” where “we traded our close-knit neighborhoods, our modest and inward houses, our unostentatious mosques and their neighbouring churches, our collaborative and shared spaces, and our shaded courtyards and knowledge-cultivating corners, leaving us with isolated ghettos and faceless boxes.”

For al-Sabouni, the anomie of liberal modernity was built into its very architecture, bringing desolation in its wake. An opponent of Islamism, she nevertheless shares the Islamist analysis that the Middle East's instability is not inherent but stems from the West's export of the structures of liberal modernity into the somnolent peace of Islamic civilisation, setting chaos in train.

“Losing our identity in exchange for the Western idea of ‘progress’ has proved to have greater consequences than we could predict,” she claims. “This vacuum in our identity could not be filled by imported ‘middle grounds’, as was once naively thought; this vacuum was instead filled by horrors and radicalizations, by sectarianisms and corruption, by crime and devastation — in one word, by war.”

It is natural to read a culture’s attitudes to its monuments as expressions of its social health. They are the symbolic repository of any given culture, and deeply imbued with political meaning. When civilisations fall and their literature is lost to time, it is their monuments that serve as testaments to their values, to their greatest heroes and their highest aspirations. Statues, great building projects and monuments are stories we tell about ourselves, expressions in stone and bronze of the Burkean compact between generations past and those to come. As Atta’s thesis states, the architecture of the past is imbued with moral meaning: “if we think about the maintenance of urban heritage,” he wrote, “then this is a maintenance of the good values of the former generations for the benefit of today’s and future generations.”

It is only logical, then, that the terminal crisis of liberal modernity should play out in culture wars over monuments, as the fate of a monument stands as a metaphor for the civilisation that erected it. It is for this reason that conquerors of a civilisation so often pull down the monuments of their predecessors and replace them with their own, a powerful act of symbolic domination.

The wave of statue-toppling spreading across the Western world from the United States is not an aesthetic act, but a political one, the disfigured monuments in bronze and stone standing for the repudiation of an entire civilisation. No longer limiting their rage to slave-owners, American mobs are pulling down and disfiguring statues of abolitionists, writers and saints in an act of revolt against the country’s European founding, now reimagined as the nation’s original sin, a moral and symbolic shift with which we Europeans will soon be forced to reckon.

On our own continent, the symbolic, civilisational value of architectural monuments was expressed last year when the world gathered together online to watch Notre Dame in flames. The collective grief that for one evening united so many people was not just for the cathedral itself but for the civilisation that created it, a sudden jolt of loss and pain that came with the realisation that the skill and self-belief it took to erect it had vanished forever.

Like Dark Age farmers tilling their crops in the crumbling ruins of a Roman city, we realise we are already squatting inside the monuments of a lost and greater civilisation, viewing the work of our forebears with the wonder and sadness of the Anglo-Saxon poet of The Ruin:

This masonry is wondrous; fates broke it
courtyard pavements were smashed; the work of giants is decaying.
Far and wide the slain perished, days of pestilence came,
death took all the brave men away;

For many observing from outside liberalism, the current iconoclasm of the West is just such a cautionary tale of civilisational collapse. "Statues are being toppled, conditions are deplorable and there are gang wars on the beautiful streets of small towns in civilized Western European countries," Hungary's defiantly non-liberal leader Viktor Orban remarked in an interview last week. "I look at the countries advising us how to conduct our lives properly and I don't know whether to laugh or cry."

In contrast, in a recent speech to mark the centenary of the hated Treaty of Trianon, Orban specifically cited the historical monuments of the Carpathian region as a testament to the endurance of the Hungarian people throughout history: “the indelible evidence, churches and cathedrals, cities and town squares still stand everywhere today. They proclaim that we Hungarians are a great, culture-building and state-organising nation.”

It is notable that this speech, laden with architectural metaphors, saw Orban for the first time situate Hungary in a separate Central European civilisation outside the West, adopting the language of anti-colonialism in a marker, perhaps, of his shift towards China as a geopolitical patron. Raging against the “arrogant” French and British and “hypocritical American empire”, Orban claimed that “the West raped the thousand-year-old borders and history of Central Europe… just as the borders of Africa and the Middle East were redrawn. We will never forget that they did this.” But these days are over, Orban exulted: “the world is changing. The changes are tectonic. The United States is no longer alone on the throne of the world, Eurasia is rebuilding with full throttle… A new order is being born.”

It is striking, and meaningful, that the self-conscious civilisation states rising to challenge the collapsing liberal order express their neo-traditionalist value systems in reimagined forms of their pre-modernist architecture, with stone and brick giving concrete expression to the ideal.

In Budapest, Scruton’s city “full of monuments” where “in every park some bearded gentleman stands serenely on a plinth, testifying to the worth of Hungarian poetry, to the beauty of Hungarian music, to the sacrifices made in some great Hungarian cause,” Orban is engaged in an ongoing project to erase the modernist architecture of the communist era. The concrete boxes of the rejected order are now shrouded with neoclassical facades and the long-demolished monuments of the glorious past are being re-erected stone by carved stone.

In Russia, Putin’s new Military Cathedral, an archaeofuturistic confection fusing Orthodox church and state in an intimidating expression of raw power, symbolises the country’s apartness from collapsing liberal modernity. The cathedral is a symbol of a civilisation that links its present with its past and future, expressed by Putin in overtly Burkean terms in his recent essay on the Second World War as the “shared historical memory” that foregrounds “the living connection and the blood ties between generations”.

Erdogan’s Turkey similarly expresses its desire to return to its imperial heyday in the elaborate mosques, palaces and barracks in neo-Ottoman style springing up across Turkey itself and its former imperial dominions. In architecture as in political order, the stylings of modernity already seem old-fashioned and stripped of all vitality, the sunlit optimism of the Bauhaus degenerated over one bloody century to the vision of Grenfell Tower in flames.

Like liberalism, the architecture of the postwar order conquered the world, for a time, and is now being rejected by the liberal order’s challengers in favour of the styles that immediately preceded modernity, a civilisational kitsch rejected by al-Sabouni as merely “mimicking the creations of our ancestors”.

In a recent essay, the influential software engineer and thinker Marc Andreessen urged the West to start building again, to recover civilisational confidence, but what should we build? What does our architecture reveal about our political order, in the greater West and here in Britain? What are the greatest recent building projects of our times? Perhaps the glass and steel skyscrapers, temples to our financialised economy, that dominate our capital? The Nightingale hospitals, hurriedly converted from conference centres to deal with the mass casualties of a disease of globalisation?

Perhaps the airport is the fullest architectural expression of liberalism: the liminal symbol, both within the nation state and outside it, of global travel and optimism; a temple of bored consumption where the religions of the pre-liberal order were tucked away, all together, in the bland anonymity of the prayer room; until, that is, Mohammed Atta’s blowback to globalisation soured this post-historical dream, forcing the architecture of liberalism back to its early roots in Bentham’s surveilled and paranoid Panopticon.

The problem, at heart, is we can take down our monuments but have nothing to replace them with. As Scruton notes, monuments “commemorate the nation, raise it above the land on which it is planted, and express an idea of public duty and public achievement in which everyone can share. Their meaning is not ‘he’ or ‘she’ but ‘we’” — but perhaps there is no We any more, with the nihilism of the American mob an expression of a far deeper malaise.

It is surely no accident that this is a moral panic driven by millennials, an evanescent generation without property or progeny, barred from creating a future, who now reject their own past in its entirety. This is the endpoint of liberalism, trapped in the eternal present, a shallow growth with no roots from which to draw succour, and bringing forth no seeds of future life.

For Scruton’s pupil al-Sabouni, the salvation of Islamic civilisation is to be found in an architecture rooted in its past, where, she states, “we must be sincere in our own intentions toward our own identity. We must realise the desire to regain it in order to regain peace. And in order to do so, we simply have to do exactly what any dedicated farmer does to a plant: cultivate the roots and carefully prune the branches.”

But a civilisation that uproots itself will soon wither and die. Able to destroy but not to build, the fading civilisation of liberalism is now a grand, crumbling old edifice whose imminent collapse is wilfully ignored by its occupants, because they have too much invested in it, and can’t imagine what can possibly replace it. But as its unstable masonry keeps falling onto the streets below, the risk of a more spectacular, uncontrolled collapse gathers every day.
