# Limiting

Text

u ever jus wonder why you cant do shit right?

ya me too. what will it take for me to gain the ability to fly 😞😞😞 without airplanes or flying vehicles

#funkyglitch.txt#its jus so...so.. . . ..#LIMITING#like why cant i walk AND fly bro#maybe then ill like#idk help one of my best friends out with her fear of heights#or something like that#like drop my problems into the nearest active volcano i find#that would help a ton bc imagine#ya sorry teacher my homework got melted in lava so idk wat u want me to do abt it#i can swim in the lava for you if im at my limit though hmu anytime

2 notes

Text

Amity: how did your first T shot go, Luz?

Luz, still shaking like a leaf after breaking a blood vessel: I’m a pro at it!

#based on real life experience except it’s my third week I shouldn’t still be shaky enough with the needle to make it bleed#the owl house#limiting#Luz noceda#amity blight#gnc bi-gender Luz physically transitioning because she feels more comfortable is not lesbian erasure#that’s just how I headcanon them#gnc bigender Luz physically transitioning because she feels more comfortable that way isn’t lesbian erasure that’s just how I headcanon them

19 notes

Text

These were impositions, defining categories that failed to recognize the muddle that is us, human beings.

Siri Hustvedt, from The Blazing World

#oversimplification#categorization#defies categorization#complex#i contain multitudes#human nature#humans#humanity#limitations#limiting#quotes#lit#words#excerpts#quote#literature#siri hustvedt#the blazing world

3 notes

Quote

It's so limiting to say what something is. It becomes nothing more than that.

David Lynch

3 notes

Photo

The best way i know to complete a to-do list is limiting it to three monosyllabic tasks.

#to do list#to-do-list#list#shower#load#loading#leave#leaving#departure#three#3#short#tasks#travel#limited#limiting#complete#completed#success#achievement#monosyllabic

3 notes

Text

[guy who doesnt watch shows voice] yeah ive been meaning to watch that show

#spitblaze says things#i mean i do. but my issue is that i play/watch/read things at a snails pace#and have a bad habit of starting something and never finishing it#so i end up limiting myself#also also adhd doesnt like it when i have to give one thing my undivided attention if its not taking up as much processing power#as something like a video game#doin numbers

63K notes

Text

Nissan Harlecube, 2009. A special edition of the third generation (Z12) Cube

#Nissan#Nissan Harlecube#Nissan Cube#special edition#limited edition#2009#third generation#harlequin#multicoloured#Z12

24K notes

Text

as an aroace person with limited sexual experience, no interest in watching porn, and poor sex ed as a teen, there IS something simultaneously funny and vaguely tragic about being 28 adult years old and realising how extremely tiny your frame of reference is for genitalia and deciding you should expand this to better understand bodies (yours and others). and then you're just there like "okay so what the fuck do I even google right now, anyway"

#vivid flashbacks to being 19 and going on scarleteen like 'help what's a clitoris'#anyway society (by which i mean repressed evangelical white brits lol) really marked a whole area of anatomy as off limits huh#and the modern advertising friendly internet does not counteract it

55K notes

Text

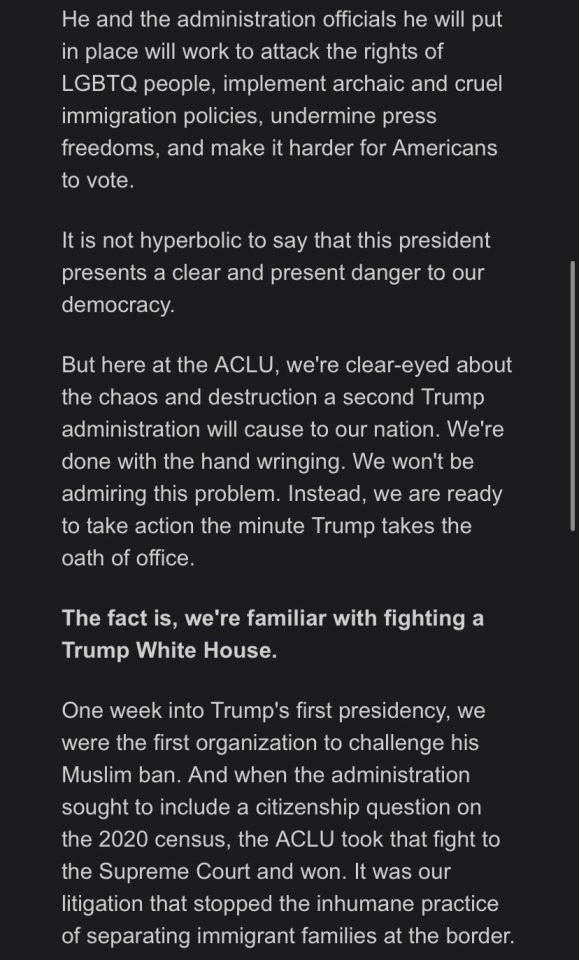

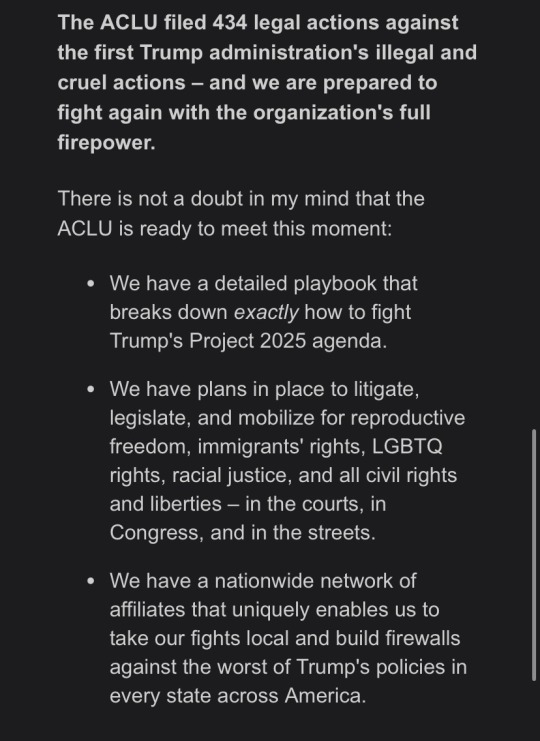

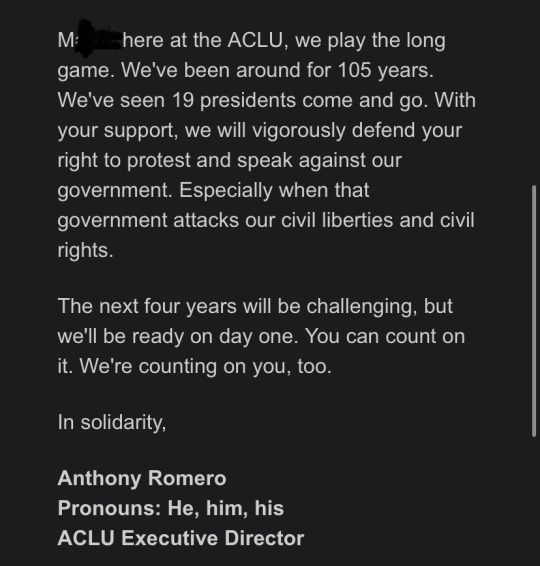

A pragmatic and surprisingly comforting perspective on Trump's second presidency from the ACLU

***Apologies if this is how you found out the 2024 election results***

Blacked out part is my name.

I’m not going to let this make me give up. It’s disheartening, and today I will wallow, probably tomorrow too

AND

I will continue to do my part in my community to spread the activism and promote change for the world I want to live in. I want to change the world AND help with the dishes.

And I won’t let an orange pit stain be what stops me from trying to be better.

A link to donate to the ACLU if able and inclined. I know I am

#us politics#donald trump#election 2024#aclu#a promise to myself#how is this comforting you may ask#bc we are not fighting alone or uninformed#we have good and strong groups in our corners defending what we believe in#it’s not over yet#we have to try and push back#added alt image descriptions since this is leaving containment#happy to see many engaging with this to either donate time or money or both#really warms this cold heart of mine#wow this broke containment#overall it’s been pretty nice seeing people engaging with it ready to roll up their sleeves and get to work#they fought the travel ban right at the beginning of the previous presidency too#also every major civil rights battle in the last century#Brown v. Board of Education- the one that desegregated schools#Loving v. Virginia- legalized interracial marriage#Roe v. Wade- legalized abortion#United States v. Nixon- watergate scandal WHICH LIMITED US PRESIDENTIAL POWER#Edwards v. Aguillard- helped allow schools to teach evolution#Planned Parenthood v. Casey- another abortion case#Wikimedia Foundation v. NSA- to stop the NSA spying on wikipedia users#Ingersoll v. Arlene's Flowers- fought to stop LGBTQ discrimination from businesses#Obergefell v. Hodges- case that legalized gay marriage#literally WAY MORE GUYS#so don’t fall into despair! these are literally one of the good ones!

26K notes

Text

alternate take on my other steve comic.

help me afford new socks

#Steve#ferret#ferrets#not snakes#art#comic#artists on tumblr#kofi#Steve is really very sweet. but his kindness has its limits

31K notes

Text

i propose that instead of pride month, we have queer year (queer people are treated like actual people all year long)

edit: @ilackhumanqualities wins best addition to this post

#queer#pride month#pride#asexual#aromantic#aroace#agender#aspec#acespec#arospec#lgbt#lgbtq#lgbtplus#lgbt+#lgbtq+#lgbtqia+#transgender#nonbinary#genderfluid#genderqueer#intersex#aplatonic#gay#lesbian#bisexual#pansexual#multigender#i don’t usually tag things with 40 billion tags#i started quizzing myself on how many i could name but so many got deleted bc of tag limit#described

35K notes

Text

Thank god for Sketchfab. Drawing the car was so worth it

#smallishbeans#joel smallishbeans#jimmy solidarity#solidaritygaming#grian#bad boys#trafficblr#limited life#wild life#Listen. It's my art and I get to decide who Joel hugs. But also I do think Grian would do everything to weasel out of it#tubby art#cw blood

15K notes

Text

Can’t stop drawing grian I hate him

10K notes

Text

A quote on being your wild, wonderful weirdo self!

#pastormike1976#you are awesome#don't forget it#words#quote#quotes#fear not#judgement#others#living#life#wild#wonderful#weirdo#self#guilty#concerned#limiting#less than#be better#progressive clergy#progressive pastor#progressive christian#lgbt#lgbtq#lgbtqia#gay#love is love#all means all#encouragement

1 note

Text

Dual Action WIDOWSPEAK, breiter audio (April 2022)

#widowspeak#killertimes#breiteraudio#breiter#optocompressor#micpre#limiting#handbuilt#solidstate#LA2A#1176N#classic#distressor#fairchild#fyp#whocooksforyou#vactrol#rackmount#studio

0 notes