#LAION

Text

where my fellow monster fuckers at 👅👅👅👅👅👅

#dungeon meshi fanart#dungeon meshi#dunmeshi#laios touden#farcille#marcille donato#falin#not going to lie labru is the worst thing to happen to me because damn everyones lining up for yaoi and not laios x monsters sniff sniff#sorry cough im. normal#my artwork#rkgk#winged lion#theres not even a ship tag for them im sick#lios..?#laion#😭

26K notes

Text

The biggest dataset used for AI image generators had CSAM in it

Link to the original tweet with more info

The LAION dataset has had ethical concerns raised over its contents before, but the public now has proof that there was CSAM used in it.

The dataset was essentially created by scraping the internet and using a mass tagger to label what was in the images. Many of the images were already known to contain identifying or personal information, and several people have been able to use EU privacy laws to get images removed from the dataset.

However, LAION itself has known about the CSAM issue since 2021.

LAION was a pretty bad data set to use anyway, and I hope researchers drop it for something more useful that was created more ethically. I hope that this will lead to more ethical databases being created, and to companies getting punished for using unethical databases. I hope the people responsible for this are punished, and the victims get healing and closure.

12 notes

Text

Okay, tech people:

Can anybody tell me what the LAION-5B data set is in layman's terms, as well as how it is used to train actual models?

Everything I have read online is either so technical that it provides zero information to me, or so dumbed down that it provides almost zero information to me.

Here is what I *think* is going on (and I already have enough information to know that in some ways this is definitely wrong.)

LAION uses a web crawler to essentially randomly check publicly accessible web pages. When this crawler finds an image, it creates a record of the image URL, a set of descriptive words from the image ALT text, (and other sources I think?) and some other stuff.

This is compiled into a big giant list of image URLs and descriptive text associated with the URL.

When a model is trained on this data it... I guess... essentially goes to every URL in the list, checks the image, extracts some kind of data from the image file itself, and then associates the data extracted from the image with the descriptive text that LAION has already associated with the image URL?
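For what it's worth, the record-building step described above can be sketched in a few lines. This is only a rough illustration, assuming the crawler keeps each image's `src` and `alt` attributes; the class name `ImageAltCollector`, the sample HTML and the example.com URLs are made up for the example, and this is not LAION's actual pipeline.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageAltCollector(HTMLParser):
    """Collects (image URL, alt text) pairs from one HTML page."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.records = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        src = attr_map.get("src")
        alt = (attr_map.get("alt") or "").strip()
        # Keep only images that have both a URL and some descriptive text.
        if src and alt:
            # Resolve relative paths against the page address.
            self.records.append({"url": urljoin(self.page_url, src), "text": alt})


# Hypothetical page content, standing in for one crawled web page.
sample_html = """
<html><body>
  <img src="/img/cat.jpg" alt="A grey cat asleep on a sofa">
  <img src="spacer.gif" alt="">
  <img src="https://cdn.example.com/dog.png" alt="Dog catching a frisbee">
</body></html>
"""

collector = ImageAltCollector("https://example.com/gallery/")
collector.feed(sample_html)
records = collector.records  # list of {"url": ..., "text": ...} dicts
```

The result is a list of links and captions only. No image files are stored in it, which matches the "big giant list of image URLs and descriptive text" picture above.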

The big pitfall, apparently, is that there are a lot of images that have been improperly or even illegally posted on the internet publicly with the ability to let crawlers access them even though they shouldn't be public (e.g. medical records or CSAM) and the dataset is too large to actually hand-curate every single entry? So that therefore models trained on the dataset contain some amount of data that legally they should not have, outside and beyond copyright considerations. A secondary problem is that the production of image ALT text is extremely opaque to ordinary users, so certain images that a user might be comfortable posting may, unbeknownst to them, contain ALT text that the user would not like to be disseminated.

Am I even in the ballpark here? It is incredibly frustrating to read multiple news stories about this stuff and still lack the basic knowledge you would need to think about this stuff systematically.

7 notes

Note

psst ai art is not real art and hurts artists

Real life tends to be far more nuanced than sweeping statements, emotional rhetoric, or conveniently fuzzy definitions. “Artists” are not a monolithic entity and neither are companies. There are different activities with different economics.

I’ll preface the rest of my post with sharing my own background, for personal context:

👩‍🎨 I am an artist. I went to/graduated from an arts college and learned traditional art-making (sculpture to silkscreen printing), and my specialism was in communication design (using the gamut of requisite software like Adobe Illustrator, InDesign, Photoshop, Lightroom, Dreamweaver etc). Many of my oldest friends are career artists, two of whom served as official witnesses to my marriage. Friends of friends have shown at the Venice Biennale, stuff like that. Many are in fields like games, animation, VFX, 3D etc. In the formative years of my life, I’ve worked & collaborated in a wide range of creative endeavours and pursuits. I freelanced under a business which I co-created, ran commercial/for-profit creative events for local musicians & artists, did photography (both digital & analog film, some of which I hand-processed in a darkroom), did some modelling, styling, appeared in student films… the list goes on. I’ve also dabbled with learning 3D using Blender, a free, open source software (note: Blender is an important example I’ll come back to, below).

💸 I am a (budding) patron of the arts. On the other side of the equation, I sometimes buy art: small things like buying friends’ work. I’m also currently holding (very very tiny) stakes in “real” art, as in actual fine art: a few pieces by Basquiat, Yayoi Kusama, Joan Mitchell.

👩‍💻 I am a software designer & engineer. I spent about an equal number of years in tech: took some time to re-skill in a childhood passion and dive into a new field, then went off to work at small startups (not “big tech”), to design and write software every day.

So I’m quite happy to talk art, tech, and the intersection. I’m keeping tabs on the debate around the legal questions and the lawsuits.

Can an image be stolen if only used in training input, and is never reproduced as output? Can a company be vicariously liable for user-generated content? Legally, style isn’t copyrightable, and for good reason. Copyright law is not one-size-fits-all. Claims vary widely per case.

Flaws in the Andersen vs Stability AI case, aka “stolen images” argument

Read this great simple breakdown by a copyright lawyer that covers reproduction vs. derivative rights, model inputs and outputs, derivative works, style, and vicarious liability https://copyrightlately.com/artists-copyright-infringement-lawsuit-ai-art-tools/

“Getty’s new complaint is much better than the overreaching class action lawsuit I wrote about last month. The focus is where it should be: the input stage ingestion of copyrighted images to train the data. This will be a fascinating fair use battle.”

“Surprisingly, plaintiffs’ complaint doesn’t focus much on whether making intermediate stage copies during the training process violates their exclusive reproduction rights under the Copyright Act. Given that the training images aren’t stored in the software itself, the initial scraping is really the only reproduction that’s taken place.”

“Nor does the complaint allege that any output images are infringing reproductions of any of the plaintiffs’ works. Indeed, plaintiffs concede that none of the images provided in response to a particular text prompt “is likely to be a close match for any specific image in the training data.””

“Instead, the lawsuit is premised upon a much more sweeping and bold assertion—namely that every image that’s output by these AI tools is necessarily an unlawful and infringing “derivative work” based on the billions of copyrighted images used to train the models.”

“There’s another, more fundamental problem with plaintiffs’ argument. If every output image generated by AI tools is necessarily an infringing derivative work merely because it reflects what the tool has learned from examining existing artworks, what might that say about works generated by the plaintiffs themselves? Works of innumerable potential class members could reflect, in the same attenuated manner, preexisting artworks that the artists studied as they learned their skill.”

My thoughts on generative AI: how anti-AI rhetoric helps Big Tech (and harms open-source/independents), how there’s no such thing as “real art”

The AI landscape is still evolving and being negotiated, but fear-mongering and tighter regulations seldom serve anyone’s favour besides big companies. It’s the oldest trick in the book to preserve monopoly and all big corps in major industries have done this. Get a sense of the issue in this article: https://www.forbes.com/sites/hessiejones/2023/04/19/amid-growing-call-to-pause-ai-research-laion-petitions-governments-to-keep-agi-research-open-active-and-responsible/?sh=34b78bae62e3

“AI field is progressing at unprecedented speed; however, training state-of-art AI models such as GPT-4 requires large compute resources, not currently available to researchers in academia and open-source communities; the ‘compute gap’ keeps widening, causing the concentration of AI power at a few large companies.”

“Governments and businesses will become completely dependent on the technologies coming from the largest companies who have invested millions, and by definition have the highest objective to profit from it.”

“The “AGI Doomer” fear-mongering narrative distracts from actual dangers, implicitly advocating for centralized control and power consolidation.”

Regulation & lawsuits benefit massive monopolies: Adobe (which owns Adobe Stock), Microsoft, Google, Facebook et al. Fighting lawsuits, licensing with stock image companies for good PR—like OpenAI (which Microsoft invested $10billion in) and Shutterstock—is a cost which they have ample resources to pay, to protect their monopoly after all that massive investment in ML/AI R&D. The rewards outweigh the risks. They don't really care about ethics, only when it annihilates competition. Regulatory capture means these mega-corporations will continue to dominate tech, and nobody else can compete. Do you know what happens if only Big Tech controls AI? It ain’t gonna be pretty.

Open-source is the best alternative to Big Tech. Pro-corporation regulation hurts open-source. Which hurts indie creators/studios, who will find themselves increasingly shackled to Big Tech’s expensive software. Do you know who develops & releases the LAION dataset? An open-source research org. https://laion.ai/about/ Independent non-profit research orgs & developers cannot afford harsh anti-competition regulatory rigmarole, or multi-million dollar lawsuits, or being deprived of training data, which is exactly what Big Tech wants. Free professional industry-standard software like Blender is open-source, copyleft GNU General Public License. Do you know how many professional 3D artists and businesses rely on it? (Now its development fund is backed by industry behemoths.) The consequences of this kind of specious “protest” masquerading as social justice will ultimately screw over these “hurt artists” even harder. It’s shooting the foot. Monkey’s paw. Be very careful what you wish for.

TANSTAAFL: Visual tradespeople have no qualms using tons of imagery/content floating freely around the web to develop their own for-profit output—nobody’s sweating over source provenance or licensing whenever they whip out Google Images or Pinterest. Nobody decries how everything is reposted/reblogged to death when it benefits them. Do you know how Google, a for-profit company, and its massively profitable search product works? “Engines like the ones built by OpenAI ingest giant data sets, which they use to train software that can make recommendations or even generate code, art, or text. In many cases, the engines are scouring the web for these data sets, the same way Google’s search crawlers do, so they can learn what’s on a webpage and catalog it for search queries.”[1] The Authors Guild v. Google case found that Google’s wholesale scanning of millions of books to create its Google Book Search tool served a transformative purpose that qualified as fair use. Do you still use Google products? No man is an island. Free online access at your fingertips to a vast trove of humanity’s information cuts both ways. I’d like to see anyone completely forgo these technologies & services in the name of “ethics”. (Also. Remember that other hyped new tech that’s all about provenance, where some foot-shooting “artists” rejected it and self-excluded/self-harmed, while savvy others like Burnt Toast seized the opportunity and cashed in.)

There is no such thing as “real art.” The definition of “art” is far from a universal, permanent concept; it has always been challenged (Duchamp, Warhol, Kruger, Banksy, et al) and will continue to be. It is not defined by the degree of manual labour involved. A literal banana duct-taped to a wall can be art. (The guy who ate it claimed “performance art”). Nobody in Van Gogh’s lifetime considered his work to be “real art” (whatever that means). He died penniless, destitute, believing himself to be an artistic failure. He wasn’t the first nor last. If a soi-disant “artist” makes “art” and nobody values it enough to buy/commission it, is it even art? If Martin Shkreli buys Wu Tang Clan’s “Once Upon a Time in Shaolin” for USD$2 million, is it more art than their other albums? Value can be ascribed or lost at a moment’s notice, by pretty arbitrary vicissitudes. Today’s trash is tomorrow’s treasure—and vice versa. Whose opinion matters, and when? The artist’s? The patron’s? The public’s? In the present? Or in hindsight?

As for “artists” in the sense of salaried/freelance gig economy trade workers (illustrators, animators, concept artists, game devs, et al), they’ll have to adapt to the new tech and tools like everyone else, to remain competitive. Some are happy that AI tools have improved their workflow. Some were struggling to get paid for heavily commoditised, internationally arbitraged-to-pennies work long before AI, in dehumanising digital sweatshop conditions (dime-a-dozen hands-for-hire who struggled at marketing & distributing their own brand & content). AI is merely a tool. Methods and tools come and go, inefficient ones die off, niches get eroded. Over-specialisation is an evolutionary risk. The existence of AI tooling does not preclude anyone from succeeding as visual creators or Christie’s-league art-world artists, either. Beeple uses AI. The market is information about what other humans want and need, how much it’s worth, and who else is supplying the demand. AI will get “priced in.” To adapt and evolve is to live. There are much greater crises we're facing as a species.

I label my image-making posts as #my art, relative to #my fic, mainly for navigation purposes within my blog. Denoting a subset of my pieces with #ai is already generous on this hellsite entropy cesspool. Anti-AI rhetoric will probably drive some people to conceal the fact that they use AI. I like to be transparent, but not everyone does. Also, if you can’t tell, does it matter? https://youtu.be/1mR9hdy6Qgw

I can illustrate, up to a point, but honing the skill of hand-crafted image-making isn’t worth my remaining time alive. The effort-to-output ratio is too high. Ain’t nobody got time fo dat. I want to tell stories and bring my visions to life, and so do many others. It’s a creative enabler. The democratisation of image-making means that many more people, like the disabled, or those who didn’t have the means or opportunity to invest heavily in traditional skills, can now manifest their visions and unleash their imaginations. Visual media becomes a language more people can wield, and that is a good thing.

Where I’m personally concerned, AI tools don’t replace anything except some of my own manual labour. I am incredibly unlikely to commission a visual piece from another creator—most fanart styles or representations of the pair just don’t resonate with me that much. (I did once try to buy C/Fe merch from an artist, but it was no longer available.) I don’t currently hawk my own visual wares for monetary profit (tips are nice though). No scenario exists which involves me + AI tools somehow stealing some poor artist’s lunch by creating my tchotchkes. No overlap regarding commercial interests. No zero-sum situation. Even if there was, and I was competing in the same market, my work would first need to qualify as a copy. My blog and content is for personal purposes and doesn’t financially deprive anyone. I’ll keep creating with any tool I find useful.

AI art allegedly not being “real art” (which means nothing) because it's perceived as zero-effort? Not always the case. It may not be a deterministic process but some creators like myself still add a ton of human guidance and input—my own personal taste, judgement, labour. Most of my generation pieces require many steps of in-painting, manual hand tweaking, feeding it back as img2img, in a back and forth duet. If you've actually used any of these tools yourself with a specific vision in mind, you’ll know that it never gives you exactly what you want—not on the first try, nor even the hundredth… unless you're happy with something random. (Which some people are. To each their own.) That element of chance, of not having full control, just makes it a different beast. To achieve desired results with AI, you need to learn, research, experiment, iterate, combine, refine—like any other creative process.

If you upload content to the web (aka “release out in the wild”), then you must, by practical necessity, assume it’s already “stolen” in the sense that whatever happens to it afterwards is no longer under your control. Again, do you know how Google, a for-profit company, and its massively profitable search product works? Plagiarism has always been possible. Mass data scraping or AI hardly changed this fact. Counterfeits or bootlegs didn’t arise with the web.

As per blog title and Asimov's last major interview about AI, I’m optimistic about AI overall. The ride may be bumpy for some now, but general progress often comes with short-term fallout. This FUD about R’s feels like The Caves of Steel, like Lije at the beginning [insert his closing rant about humans not having to fear robots]. Computers are good at some things, we’re good at others. They free us up from incidental tedium, so we can do the things we actually want to do. Like shipping these characters and telling stories and making pretty pictures for personal consumption and pleasure, in my case. Most individuals aren’t that unique/important until combined into a statistical aggregate of humanity, and the tools trained on all of humanity’s data will empower us to go even further as a species.

You know what really hurts people? The pandemic which nobody cares about; which has a significant, harmful impact on my body/life and millions of others’. That cost me a permanent expensive lifestyle shift and innumerable sacrifices, that led me to walk away from my source of income and pack up my existence to move halfway across the planet. If you are not zero-coviding—the probability of which is practically nil—I’m gonna have to discount your views on “hurt”, ethics, or what we owe to each other.

We are a non-profit organization with members from all over the world, aiming to make large-scale machine learning models, datasets and related code available to the general public. OUR BELIEFS: We believe that machine learning research and its applications have the potential to have huge positive impacts on our world and therefore should be democratized. PRINCIPLE GOALS: Releasing open datasets, code and machine learning models. We want to teach the basics of large-scale ML research and data management. By making models, datasets and code reusable without the need to train from scratch all the time, we want to promote an efficient use of energy and computing ressources to face the challenges of climate change. FUNDING: Funded by donations and public research grants, our aim is to open all cornerstone results from such an important field as large-scale machine learning to all interested communities.

2 notes

Text

LAION, Peugeot's digital assistant that makes it easy to get to know the new Peugeot 2008 SUV

Peugeot has taken an innovative step in the Argentine automotive industry by launching LAION, a digital assistant with artificial intelligence designed to offer a fast and complete user experience. This virtual assistant gives users access to detailed information about the new Peugeot 2008, turning the search into an agile, precise, 24/7 interaction. LAION, designed with…

0 notes

Text

I'm going to set something on fire

1 note

Text

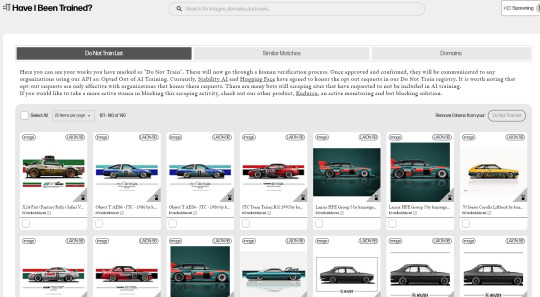

Just a heads up to any non-AI artists that use Redbubble (among many more). They are allowing your work to be used by the LAION-5B data set for use in AI training. haveibeentrained.com is free to use

9 notes

Text

Will you be my new mommy? 🥺👉👈

3 notes

Text

BITCHHH IM IN A PHILOSOPHY CLASS WHY AM I KNEE DEEP IN THE DOCUMENTATION FOR LAION-5B*

*LAION-5B is the dataset for the Stable Diffusion text-to-image generator and currently the world's largest open-access image-text dataset. grins at you

#shows dedication to my craft *flips hair over shoulder*#anyways its cringe because its scraped from the internet#without credit#and get this: people have found CSAM in it.....#bias in LAION -> bias in stable diffusion#and if there's bias in this generative model imagine the biases in other generative models.....#yap

3 notes

Text

"For decades datasets were constructed by human intervention. This generally yielded datasets that are of high quality but too small to make today's LLM’s yield meaningful results.

LAION set out to build a dataset for these newer, hungrier models. They built a dataset that is purely constructed by machine processes, by running models and tweaking thresholds: LAION-5B is made by measure.

But what is getting measured? The quality of data? The capacities of CLIP? The success of a model against a benchmark? The benchmark itself?

[...]

Openness in the AI field matters, not just for model biases, but for the structural biases in the ecosystem. An ongoing problem is that curation by statistics amplifies many of those structural biases."

Models all the way down, Christo Buschek and Jer Thorp.

0 notes

Text

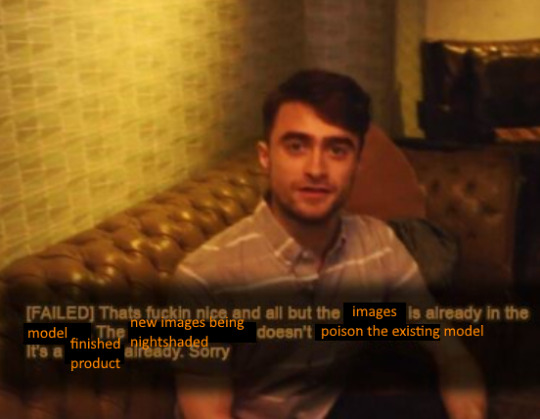

re: why nightshade/glaze is useless, aka "the chicken is already in the nugget", from the perspective of an Actual Machine Learning Researcher

a bunch of people have privately asked me to answer this aspect of the five points i raised, and i tire of repeating myself, so

the fundamental oversight here is a lack of recognition that these AI models are not dynamic entities constantly absorbing new data; they are more akin to snapshots of the internet at the time they were trained, which, for the most part, was several years ago.

to put it simply, Nightshade's efforts to alter images and introduce them to the AI in hopes of affecting the model's output are based on an outdated concept of how these models function. the belief that the AI is actively scraping the internet and updating its dataset with new images is incorrect. the LAION datasets, which are the foundation of most if not all modern image synthesis models, were compiled and solidified into the AI's 'knowledge base' long ago. The process is not ongoing; it's historical.

i think it's important for people to understand that Nightshade is fighting an already concluded war. the datasets have been created, the models have been trained, and the 'internet scraping' phase is not an ongoing process for these AI. the notion that AI is an ever-updating Skynet seeking to cannibalize all your art (or that the companies using it are constantly seeking out new art to add to the pile) is a science fiction myth, not a reality.

(for the many other reasons why it won't work see my other post. really i just wanted an excuse to make and post these two sloppy meme edits).

cheers

1K notes

Note

Hi! Genuine question, how do you know when one of your pieces has been stolen by AI dudebros?

You can search in haveibeentrained! It searches through LAION-5B, the biggest database of internet images that AI companies have been using. They now allow you to search by URL too, so if you have a website you can use that to see if they took something from it.

279 notes

Text

ChatGPT Bot Block

Hey Pillowfolks!

We know many of you are still waiting on our official stance regarding AI-Generated Images (also referred to as “AI Art”) being posted to Pillowfort. We are still deliberating internally on the best approach for our community as well as how to properly moderate AI-Generated Images based on the stance we ultimately decide on. We’re also in contact with our Legal Team for guidance regarding additions to the Terms of Service we will need to include regarding AI-Generated Images. This is a highly divisive issue that continues to evolve at a rapid pace, so while we know many of you are anxious to receive a decision, we want to make sure we carefully consider the options before deciding. Thank you for your patience as we work on this.

As of today, 9/5/2023, we have blocked the ChatGPT bot from scraping Pillowfort. This means any writings you post to Pillowfort cannot be retrieved for use in ChatGPT’s Dataset.
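The announcement doesn't say how the block was implemented. One common mechanism is a robots.txt rule, so here is a minimal sketch under that assumption, using Python's standard `urllib.robotparser` to check what a rule set allows. The `GPTBot` user-agent token, the `SomeOtherBot` name and the example.com URLs are assumptions for illustration and are not taken from the announcement. A robots.txt rule only affects crawlers that choose to honor it.

```python
from urllib.robotparser import RobotFileParser

# Assumed robots.txt contents: block one AI crawler, leave everyone else alone.
robots_txt = """\
User-agent: GPTBot
Disallow: /
"""

parser = RobotFileParser()
# parse() accepts the file's lines directly, so no network request is made.
parser.parse(robots_txt.splitlines())

# True when the named crawler is not permitted to fetch the URL.
blocked = not parser.can_fetch("GPTBot", "https://example.com/posts/123")

# Crawlers that match no rule fall through to "allowed".
others_allowed = parser.can_fetch("SomeOtherBot", "https://example.com/posts/123")
```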

Our team is still looking for ways to provide the same protection for images uploaded to the site, but keeping scrapers from accessing images seems to be less straightforward than for text content. The biggest AI generators such as Stable Diffusion use datasets such as LAION, and as far as our team has been able to discern, it is not known what means those datasets use to scrape images or how to prevent them from doing so. Some sources say that websites can add metadata tags to images to prevent the img2dataset bot (which is apparently used by many generative image tools) from scraping images, but it is unclear which AI image generators use this bot vs. a different bot or technology. The bot can also be configured to simply disregard these directives, so it is unknown which scrapers would obey the restriction if it were added.

For artists looking to protect their art from AI image scrapers you may want to look into Glaze, a tool designed by the University of Chicago, to protect human artworks from being processed by generative AI.

We are continuing to monitor this topic and encourage our users to let us know if you have any information that can help our team decide the best approach to managing AI-Generated Images and Generative AI going forward. Again, we appreciate your patience, and we are working to have a decision on the issue of moderating AI-Generated Images soon.

Best, Pillowfort.social Staff

873 notes

Text

"how do I keep my art from being scraped for AI from now on?"

if you post images online, there's no 100% guaranteed way to prevent this, and you can probably assume that there's no need to remove/edit existing content. you might contest this as a matter of data privacy and workers' rights, but you might also be looking for smaller, more immediate actions to take.

...so I made this list! I can't vouch for the effectiveness of all of these, but I wanted to compile as many options as possible so you can decide what's best for you.

Discouraging data scraping and "opting out"

robots.txt - This is a file placed in a website's home directory to "ask" web crawlers not to access certain parts of a site. If you have your own website, you can edit this yourself, or you can check which crawlers a site disallows by adding /robots.txt at the end of the URL. This article has instructions for blocking some bots that scrape data for AI.

HTML metadata - DeviantArt (i know) has proposed the "noai" and "noimageai" meta tags for opting images out of machine learning datasets, while Mojeek proposed "noml". To use all three, you'd put the following in your webpages' headers:

<meta name="robots" content="noai, noimageai, noml">

Have I Been Trained? - A tool by Spawning to search for images in the LAION-5B and LAION-400M datasets and opt your images and web domain out of future model training. Spawning claims that Stability AI and Hugging Face have agreed to respect these opt-outs. Try searching for usernames!

Kudurru - A tool by Spawning (currently a Wordpress plugin) in closed beta that purportedly blocks/redirects AI scrapers from your website. I don't know much about how this one works.

ai.txt - Similar to robots.txt. A new type of permissions file for AI training proposed by Spawning.

ArtShield Watermarker - Web-based tool to add Stable Diffusion's "invisible watermark" to images, which may cause an image to be recognized as AI-generated and excluded from data scraping and/or model training. Source available on GitHub. Doesn't seem to have updated/posted on social media since last year.
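To check whether a page actually carries the opt-out directives from the HTML metadata item above, something like the following would do. It is a sketch only: the names `RobotsMetaParser` and `find_ai_opt_outs` are hypothetical, the sample page is made up, and finding the tag says nothing about whether a given scraper respects it.

```python
from html.parser import HTMLParser

# The three proposed directives named in the list above.
AI_OPT_OUT_DIRECTIVES = {"noai", "noimageai", "noml"}


class RobotsMetaParser(HTMLParser):
    """Gathers every directive found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        if (attr_map.get("name") or "").lower() != "robots":
            return
        content = attr_map.get("content") or ""
        # Directives are comma-separated; normalise spacing and case.
        for part in content.split(","):
            directive = part.strip().lower()
            if directive:
                self.directives.add(directive)


def find_ai_opt_outs(html):
    """Returns the AI opt-out directives present in the page's robots meta tags."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives & AI_OPT_OUT_DIRECTIVES


page = '<html><head><meta name="robots" content="noai, noimageai, noml"></head></html>'
found = find_ai_opt_outs(page)
```

An empty result means none of the three directives is set on that page.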

Image processing... things

these are popular now, but there seems to be some confusion regarding the goal of these tools; these aren't meant to "kill" AI art, and they won't affect existing models. they won't magically guarantee full protection, so you probably shouldn't loudly announce that you're using them to try to bait AI users into responding

Glaze - UChicago's tool to add "adversarial noise" to art to disrupt style mimicry. Devs recommend glazing pictures last. Runs on Windows and Mac (Nvidia GPU required)

WebGlaze - Free browser-based Glaze service for those who can't run Glaze locally. Request an invite by following their instructions.

Mist - Another adversarial noise tool, by Psyker Group. Runs on Windows and Linux (Nvidia GPU required) or on web with a Google Colab Notebook.

Nightshade - UChicago's tool to distort AI's recognition of features and "poison" datasets, with the goal of making it inconvenient to use images scraped without consent. The guide recommends that you do not disclose whether your art is nightshaded. Nightshade chooses a tag that's relevant to your image. You should use this word in the image's caption/alt text when you post the image online. This means the alt text will accurately describe what's in the image-- there is no reason to ever write false/mismatched alt text!!! Runs on Windows and Mac (Nvidia GPU required)

Sanative AI - Web-based "anti-AI watermark"-- maybe comparable to Glaze and Mist. I can't find much about this one except that they won a "Responsible AI Challenge" hosted by Mozilla last year.

Just Add A Regular Watermark - It doesn't take a lot of processing power to add a watermark, so why not? Try adding complexities like warping, changes in color/opacity, and blurring to make it more annoying for an AI (or human) to remove. You could even try testing your watermark against an AI watermark remover. (the privacy policy claims that they don't keep or otherwise use your images, but use your own judgment)

given that energy consumption was the focus of some AI art criticism, I'm not sure if the benefits of these GPU-intensive tools outweigh the cost, and I'd like to know more about that. in any case, I thought that people writing alt text/image descriptions more often would've been a neat side effect of Nightshade being used, so I hope to see more of that in the future, at least!

242 notes

Text

Meta ordered to stop training its AI on Brazilian personal data

Following similar pushback in the EU, Meta now faces daily fines if it uses Brazilian Facebook and Instagram data for AI training.

Brazil’s data protection authority (ANPD) has banned Meta from training its artificial intelligence models on Brazilian personal data, citing the “risks of serious damage and difficulty to users.” The decision follows an update to Meta’s privacy policy in May in which the social media giant granted itself permission to use public Facebook, Messenger, and Instagram data from Brazil — including posts, images, and captions — for AI training.

The decision follows a report published by Human Rights Watch last month which found that LAION-5B — one of the largest image-caption datasets used to train AI models — contains personal, identifiable photos of Brazilian children, placing them at risk of deepfakes and other exploitation.

As reported by The Associated Press, ANPD told the country’s official gazette that the policy carries “imminent risk of serious and irreparable or difficult-to-repair damage to the fundamental rights” of Brazilian users. The region is one of Meta’s largest markets, with 102 million Brazilian user accounts found on Facebook alone according to the ANPD. The notification published by the agency on Tuesday gives Meta five working days to comply with the order, or risk facing daily fines of 50,000 reais (around $8,808).

Continue reading.

#brazil#brazilian politics#politics#digital rights#artificial intelligence#national data protection agency#facebook#mod nise da silveira#image description in alt

54 notes
