#IBM study

Text

A Groundbreaking Study Conducted by IBM Tells Workers to Embrace AI

The Comprehensive Study by IBM: Illuminating the AI Landscape

A Vision Beyond Apprehension: Augmenting Roles with AI

Strategic Reskilling and AI Integration: A Recipe for Success

Evolving Skills: From Technical Proficiency to Interpersonal Acumen

Seizing the Future: Leveraging AI for Unprecedented Growth

The rapid advancements in artificial intelligence (AI) have ignited both excitement and…

#AI augmentation#AI impact on businesses#AI integration#embracing AI revolution#IBM study#innovation and growth#reskilling for success#revenue growth#shifting skill priorities#technology and human qualities#workforce evolution

0 notes

Text

USA 1993

49 notes

Text

Digital Science announces new AI patent study: IBM leads Google and Microsoft race to next AI generation

Major players are vying for top position as Generative AI drives technology patent grants up by 16% in the last five years and applications by 31%, according to a new patent study on trends in artificial intelligence by IFI CLAIMS, a Digital Science company. U.S. commercial giants IBM, Google and Microsoft lead the way as the companies with the most patent applications in Generative AI (GenAI), with…

2 notes

Text

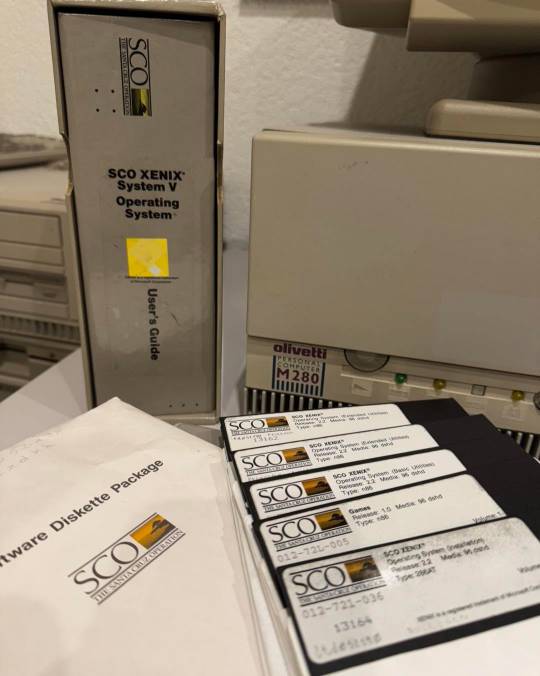

boxed software and manuals my beloved. i want this so bad.

#objectum#so cute#im in love#if you got me an IBM DOS manual set#id melt into you#like id turn into love itself#i love studying the things i love#i also love floppy disks#5 1/4" love#i need to give xenix a try#i wonder how many wholly unique tags ive typed out on tumblr.

63 notes

Text

IBM Study: Widespread Discontent in Retail Experiences, Consumers Signal Interest in AI-Driven Shopping Amid Economic Strain

Only 9% of respondents say they are satisfied with the in-store shopping experience; only 14% say the same for online shopping. Roughly 80% of consumers surveyed who haven’t used AI for shopping expressed an interest in using the technology for various aspects of their shopping journey. Manila, Philippines — As the retail landscape faces mounting pressure from evolving consumer expectations and…

0 notes

Text

The discovery that hypnotic states could be used for market regulatory purposes was nothing short of a revolution for the Federal Reserve. In June 1968, initial experiments with "financial clairvoyance" were conducted.

The original methodology was fairly simple: fully trained subjects would be placed in a sensory deprivation tank and undergo hypnosonic neuro-induction to the point of sub-financial emanation. Subjects would remain attuned for 24 hours, at which point they would be de-emanated and their experiences recorded via interview.

This methodology proved to be an expensive disaster. Repeated cycles of emanation and de-emanation had a catastrophic effect on mental cohesion. On average, subjects would begin to show signs of neuro-depatterning within the first 50 dives, and would slip into permanent catatonia by 350 dives.

Additionally, recovered documents from the period show that information from a single Plutophant was only accurate to within 2.2i Murdoch deviations, and the interview method introduced a further 5.1i of uncertainty. While experimental attempts to record brain activity directly were underway, the technology was primitive, often harmful to the subject, or required invasive surgical modification. Even then, transchronological brain activity proved uniquely difficult to record.

Then, a breakthrough.

The 1960 nationwide upgrade of the Minuteman nuclear system was underway, which created a surplus of IBM Drum Storage Drives, most of which were donated to research institutions under the purview of Project Clover. With some modifications, the cyclical nature of these drum drives proved ideal for recording changes in transchronological neuropatterning.

These "Radio-Magnetic Neurological Sensory Arrays" were the predecessor to the modern neuroscope. The first production example, the IBM Y-2, was the size of an entire room, requiring enormous amounts of power and several trained technicians to process the thoughts of a single Plutophant into a human-readable form.

Study is ongoing.

685 notes

Note

I think modern society has major issues with mistaking correlation for causation and it's causing a lot of problems.

For example, some years back, there was a study showing that young children from households with a lot of books were scoring higher on literacy tests and doing better academically, whether or not their parents read to them. So everybody decided that books were magical and their mere presence improved kids' ability to learn, and they started a charity to distribute children's books to low-income families.

Now, on a moral level, there is nothing wrong with this. Very few people would argue that it's bad to give books to poor kids. And it probably did some good for some of those kids. But it didn't have the huge dramatic impact that many people were hoping for, because higher literacy rates were not caused by the presence of books. Both of those things were caused by the same third factor.

What kind of person owns a lot of books? What attributes do they value? What traits would they encourage in their children?

It was never about the books. It was always about the parents.

Now for a more disastrous example:

Decades ago, people noticed that college graduates were getting better jobs and earning more money, and they decided that meant everyone should go to college and then everyone would be more successful.

But that's not what happened.

If a particular achievement is seen as optional, then having that achievement says something about you. Back then, a college degree told employers that a prospective hire was someone who went above and beyond, who was willing to work harder to improve their skills and knowledge.

Once college is treated like it's mandatory, a college degree is scarcely more meaningful than a high school diploma.

And the presence of a degree cannot confer upon you the attitude and work ethic that leads to success any more than the presence of books can bestow literacy skills.

Now we have millions of people who took out massive student loans on the promise of success that are left with mountains of debt and mediocre prospects, and we keep shoveling millions more into increasingly corrupted and worthless schools with that same empty promise.

But it was never about the degree. It was always about the kind of person that earned one.

So, my dad was working for IBM back when corporations started listing college degrees as a requirement for employment. He was a data entry guy for the old-style punch card computers, which means that when someone wanted to ask the computer something, they came to him; he set up the punch card, fed it into the computer, and read out the answer. When all these college graduates started getting hired, his job changed. Now it was his responsibility to train them to do his job.

But, you sensibly ask, didn't they have college degrees? Didn't they learn all this in college? The answer is yes, they did have college degrees. They all had MBAs, which taught them nothing about how to work computers. IBM just listed "MBA" as a requirement for every non-secretarial/custodial job because they thought having a large number of college graduates on staff sounded good.

So these kids spent four years in college, only to come out and land a low-paying data entry job instead of the middle manager job they were expecting. And once they got that job, they needed my dad to give them the on-the-job training they could have gotten four years earlier, without spending any money on college, if the job listing hadn't required an MBA. In the stories my dad told me, most of these people quit after a year because they had been told in college that this degree would get them a better job, and they didn't want to be lowly data entry people.

And nothing's really changed. Jobs that could easily be taught through on-the-job training or an apprenticeship model require college degrees. Colleges and guidance counselors lie about what kind of job a graduate can expect. And now you have overeducated people loading up the Keurig machine at Starbucks to pay off their student debt, because there are too many college graduates all going after the same jobs and not enough of those jobs to go around. Mandatory college has always been a scam. It's an artificial requirement that only exists because businesses think it looks good to hire people who have a piece of paper they can hang on the wall. The fact is, only very specialized jobs where on-the-job training wouldn't work need a college graduate. But there are billions of dollars at stake in the college racket, so on it goes.

230 notes

Text

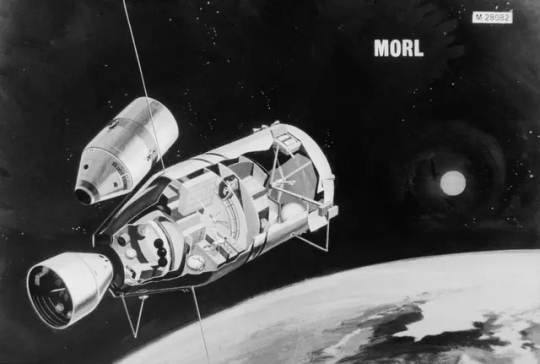

Cancelled Missions/Station: Manned Orbital Research Laboratory (MORL)

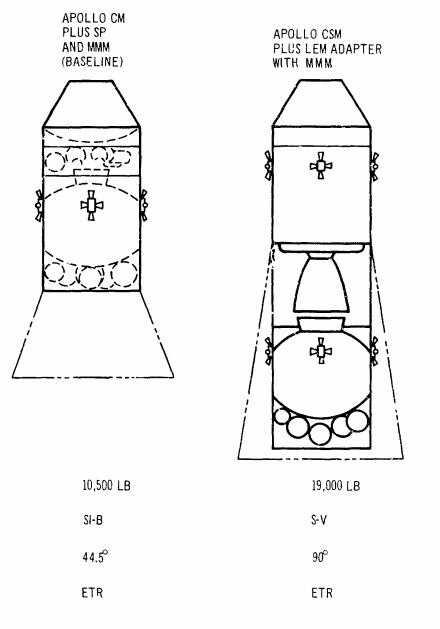

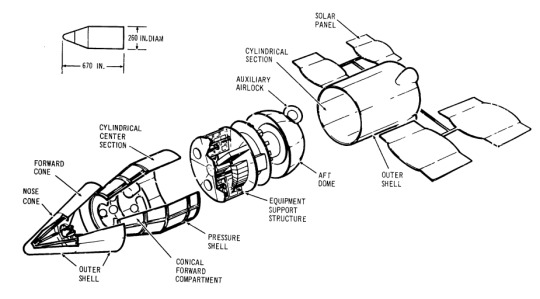

This was a study, initiated in 1962, of space station designs using the Gemini spacecraft and later the Apollo CSM. Boeing and Douglas received Phase I contracts in June 1964.

MORL/S-IVB Concept

"A 5 metric ton 'dry' space station, launched by Saturn IB, with Gemini or Apollo being used for crew rotation. The 6.5 meter diameter and 12.6 meter long station included a docking adapter, hangar section, airlock, and a dual-place centrifuge. Douglas was selected by NASA LaRC for further Phase 2 and 3 studies in 1963 to 1966. Although MORL was NASA's 'baseline station' during this period, it was dropped by the late 1960's in preference to the more capable station that would become Skylab.

Different docking concepts studied.

The Manned Orbital Research Laboratory was the brainchild of Carl M Houson and Allen C. Gilbert, two engineers at Douglas. In 1963 they proposed a Mini Space Station using existing hardware, to be launched by 1965. A Titan II or Atlas would be launched with a payload consisting of a control system, docking adapter and hangar module. The visiting crew would use the payload to transform the empty fuel tank of the rocket's last stage into a pressurized habitat (a so-called 'wet' space station). Provisions were available for 4 astronauts for a 100-day stay. Crew members would arrive two at a time aboard Gemini spacecraft. Equipment included a two-place centrifuge for the astronauts to readapt to gravity before their return to Earth.

An early MORL concept. Artwork by Gordon Phillips.

In June 1964 Boeing and Douglas received Phase I contracts for further refinement of MORL station designs. The recommended concept was now a 13.5 metric ton 'dry' space station, launched by Saturn IB, with Gemini or Apollo used for crew rotation. The 6.5 meter diameter, 12.6 meter long station included a docking adapter, hangar section, airlock, and a dual-place centrifuge.

"Medium-sized orbiting lab is this Manned Orbital Research Laboratory (MORL) developed for NASA's Langley Lab by Douglas Missiles & Spacecraft Division. The lab which weighs about 35,000 pounds, could maintain 3 to 6 men in orbit for a year.

Orbiting Stations: Stopovers to Space Travel by Irwin Stambler, G.P. Putnam's Sons, 1965."

Douglas was selected by NASA LaRC for further Phase 2 and 3 studies in 1963 to 1966. The major system elements of the baseline that emerged included:

A 660-cm-diameter laboratory launched by the Saturn IB into a 370-km orbit inclined at 28.72 degrees to the equator

A Saturn IB launched Apollo logistics vehicle, consisting of a modified Apollo command module, a service pack for rendezvous and re-entry propulsion, and a multi-mission module for cargo, experiments, laboratory facility modification, or a spacecraft excursion propulsion system.

Supporting ground systems.

MORL Phase IIb examined the utilization of the MORL for space research in the 1970s. Subcontractors included:

Eclipse-Pioneer Division of Bendix, stabilization and control

Federal Systems Division of IBM, communications, data management, and ground support systems

Hamilton Standard Division of the United Aircraft Corporation, environmental control/life support

Stanford Research Institute, priority analysis of space-related objectives

Bissett-Berman, oceanography

Marine Advisors, oceanography

Aero Services, cartography and photogrammetry

Marquardt, orientation propulsion

TRW, main engine propulsion.

The original MORL program envisioned one or two Saturn IB and three Titan II launches. The crew would be 6 to 9 astronauts. After each Gemini docked to the MORL at the nose of the adapter, the crew would shut down the Gemini systems, put the spacecraft into hibernation, and transfer by EVA to the MORL airlock. The Gemini would then be moved by a small manipulator to the side of the station to clear the docking adapter for the arrival of the next crew."

"Docking was to have 3 ports, all Nose Dock config, with spacecraft modifications totaling +405 lbs over the baseline Gemini spacecraft (structure beef-up, dock provisions, added retro-rockets, batteries, a data link for rendezvous, temp. control equip. for long-term, unoccupied Gemini storage on-orbit and removal of R&D instruments)."

"Later concepts included docking a Saturn IB-launched space telescope to MORL. At 4 meters in diameter and 15 meters long, this would be the same size as the later Hubble Space Telescope. The crew would have to make EVAs to recover the film from the camera.

In 1965 Robert Sohn, head of the Technical Requirements Staff, TRW Space Technology Laboratories, proposed a detailed plan for early manned flight to Mars using MORL. The enlarged MORL-derived mission module would house six to eight men and be hurled on a Mars flyby by a single Saturn MLV-V-1 launch. MORL-derived Mars mission modules cropped up in other Douglas Mars studies until superseded by the 10-m diameter Planetary Mission Module in 1969.

MORL/Space Telescope

Why was MORL never launched?

NASA had a need for a Space Station and MORL was little, easy and cheap. But NASA had more ambitious plans, embodied in the Apollo Applications Orbital Workshop (later called Skylab)."

-information from astronautix.com: link

source, source, source

NARA: 6375661, S66-17592

Posted on Flickr by Numbers Station: link

#Manned Orbital Research Laboratory#MORL#Space Station#Gemini#Gemini Program#Project Gemini#Apollo CSM Block II#Apollo Program#Saturn IB#Saturn I#S-IV#S-IVB#Apollo Applications Program#Cancelled#Study#1962#June#1965#my post

82 notes

Text

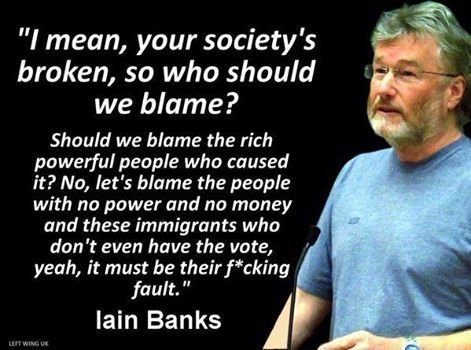

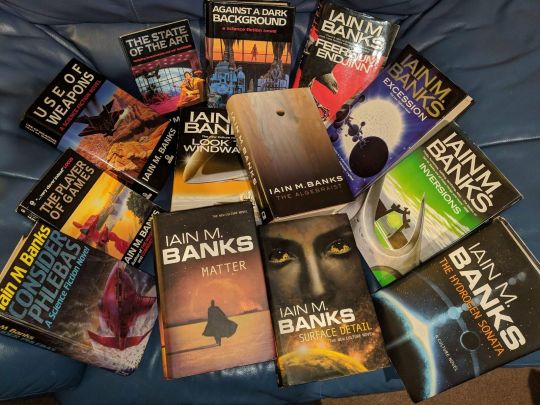

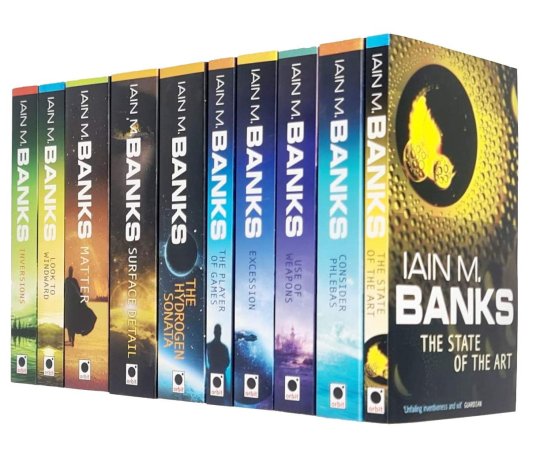

On February 16th, 1954, the writer Iain Banks was born in Dunfermline, Fife.

Banks was the son of a professional ice skater and an Admiralty officer. He spent his early years in North Queensferry and later moved to Gourock because of his father's work. He was educated at Gourock and Greenock High Schools, and at the young age of eleven he decided to pursue a career in writing. He penned his first novel, The Hungarian Lift-Jet, as a teenager. He then enrolled at the University of Stirling, where he studied English, philosophy and psychology. During his first year there, he wrote his second novel, TTR.

After earning his bachelor's degree, Banks worked a succession of jobs that left him some free time to write, and these jobs supported him financially throughout his twenties. He even managed to travel through Europe, North America and Scandinavia. During this period he was employed as an analyzer for IBM, a technician, and a costing clerk in a London law firm. At the age of thirty he got his big break when his debut novel, The Wasp Factory, was published in 1984, and from then on he wrote full-time. It is considered one of the most inspiring teenage novels. The book's instant success restored his confidence as a writer, and that's when he took up science fiction.

In 1987, he published his first science fiction novel, Consider Phlebas, a space opera. The title is taken from a line in T. S. Eliot's classic poem The Waste Land. The novel is set in a fictional interstellar anarchist-socialist utopian society called the Culture. The book focuses on the ongoing war between the Culture and the Idiran Empire, which the author depicts through smaller-scale conflicts. The protagonist, Bora Horza Gobuchul, is unlike stereotypical heroes: he is portrayed as a morally ambiguous individual, which appeals to readers. The grand scenery and the variety of literary devices also contributed to the book's very positive reception. Its sequel, The Player of Games, came out the very next year and paved the way for seven more volumes in the Culture series.

Besides the Culture series, Banks wrote several stand-alone novels, some of which were adapted for television, radio and theatre. BBC television adapted his novel The Crow Road (1992), and BBC Radio 4 broadcast Espedair Street. The literary influences on his work include Isaac Asimov, Dan Simmons, Arthur C. Clarke, and M. John Harrison. He was the subject of a South Bank Show television documentary, The Strange Worlds of Iain Banks, which discussed his writing. In 2003, he published a non-fiction book, Raw Spirit, a travelogue of Scotland. Banks's last novel, The Quarry, appeared posthumously. He also wrote a collection of poetry but could not publish it in his lifetime; it is expected to be released in 2015. He received a multitude of titles and accolades in honour of his contribution to literature, including the British Science Fiction Association Award, the Arthur C. Clarke Award, the Locus Poll Award, the Prometheus Award and the Hugo Award.

Iain Banks was diagnosed with terminal cancer of the gallbladder and died at the age of 59 in the summer of 2013.

341 notes

Note

Okay so let’s say you have a basement just full of different computers. Absolute hodgepodge. Ranging in make and model from a 2005 Dell laptop with a landline phone plug to a 2025 Apple with exactly one USB-C port, to an IBM.

And you want to use this absolute clusterfuck to, I don’t know, store/run a sentient AI! How do you link this mess together (and plug it into a power source) in a way that WON’T explode? Be as outlandish and technical as possible.

Oh.

Oh, you want to take Caine home with you, don't you! You want to make the shittiest, most fucked-up homemade server setup by fucking daisy-chaining PCs together until you have enough processing power to do something. You want to try running Caine in your basement, with absolutely no care for the power draw that this man demands.

Holy shit, what have you done? Really long post under the cut.

Slight disclaimer: I never actually work with this kind of computing, so none of this should be taken as actual, usable advice. That being said, I will cite sources as I go along for easy further research.

First of all, the idea of just stacking computers together HAS BEEN DONE BEFORE!!! This is known as a computer cluster! Sometimes, this is referred to as a supercomputer. (technically the term supercomputer is outdated but I won't go into that)

Did you know that the US Air Force Research Laboratory got the idea to wire 1,760 PS3s together to make a supercomputer? It was called the Condor Cluster! (tragically it kinda sucked, but watch the video for that story)

Now, building a computer cluster at home is pretty rare, because computing power doesn't simply scale up with every computer you add. It takes time for the machines to communicate with each other, so trying to run something like a video game on multiple PCs doesn't work. But let's say we have a massive amount of data collected from some research study that needs to be processed. A cluster can divide that work among the PCs for comparatively faster computing times. And yes! People have been using setups like this to run/train their own AI, so hypothetically Caine could run on one.
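To make "dividing the computing" concrete, here's a minimal Python sketch of the scatter/gather idea, assuming the job splits cleanly into independent pieces. Threads stand in for the separate PCs, and `process_chunk` is a hypothetical placeholder job; a real cluster would ship each chunk over the network instead (MPI is the usual tool for that). The `estimated_runtime` formula is also just an illustrative toy model, not a measurement: compute time shrinks as you add nodes, but the communication cost grows with every node, which is why chatty workloads like games don't speed up.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, n_nodes):
    """Split a list into n_nodes nearly equal, contiguous chunks."""
    size, extra = divmod(len(data), n_nodes)
    chunks, start = [], 0
    for i in range(n_nodes):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def process_chunk(chunk):
    """Hypothetical per-node job: sum of squares of one slice of the data."""
    return sum(x * x for x in chunk)


def run_on_cluster(data, n_nodes):
    """Scatter chunks to 'nodes' (threads here), then gather and combine the results."""
    chunks = split_into_chunks(data, n_nodes)
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = list(pool.map(process_chunk, chunks))
    return sum(partials)


def estimated_runtime(total_work_s, n_nodes, comm_cost_per_node_s):
    """Toy model: compute shrinks as work / n, but communication grows with n."""
    return total_work_s / n_nodes + comm_cost_per_node_s * n_nodes


print(run_on_cluster(list(range(1000)), 4))
for n in (1, 10, 100):
    print(n, "nodes:", estimated_runtime(100, n, 1), "s")
```

With 100 seconds of work and 1 second of overhead per node, the toy model gets faster going from 1 to 10 nodes and then slower again at 100 nodes. That is the "doesn't just scale" problem in miniature.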

Let's talk about the external hardware first. There are basically only two things we need to worry about: power (like ya pointed out) and communication.

Power supply is actually easier than you think! Most PCs have an internal power supply, so all you need to do is stick the plug into the wall! That is, if we weren't stacking an unknowable number of computers together. I have a friend who had the great idea to run a whole-ass server rack in his dorm at my college and, yeah, he popped a fuse and everyone in that section of the building lost power. But that's a good thing: if you plug too many computers into the same circuit, nothing should catch fire, because the fuse breaks the circuit (yay for safety!). So how did my friend get his server running in his closet after all? Turns out there was a plug underneath his bed that was on its own circuit with a higher limit (I'm not going to explain how that works, this is long enough already).

So! To do this at home, start by plugging everything into an extension cord, plug that into a wall outlet, and see if the lights go out. I'm serious, blowing a fuse won't break anything. If the fuse doesn't blow, yay, it works! Move on to the next step. If it does, take every other device off that circuit and try again. If it still doesn't work, then it's time to get weird.
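If you'd rather check on paper before flipping the switch, here's a back-of-the-envelope sketch. It assumes a typical North American 120 V, 15 A circuit and the common rule of thumb of keeping loads that run for hours (like a cluster) under 80% of the fuse/breaker rating. The wattages are made-up placeholders, so read each machine's power-supply label or measure it with a plug-in watt meter. It also assumes the extension cord itself is rated for the total load.

```python
# All wattages below are hypothetical estimates; check each machine's
# power-supply label or measure it with a plug-in watt meter.
MACHINES_W = {
    "dell_laptop_2005": 65,
    "old_desktop": 250,
    "ibm_tower": 300,
    "apple_2025": 150,
}


def circuit_load_amps(watts, volts=120.0):
    """Current drawn by a set of loads on one circuit: I = P / V."""
    return sum(watts) / volts


def fits_on_circuit(watts, breaker_amps=15.0, volts=120.0, continuous_factor=0.8):
    """True if the total draw stays under 80% of the fuse/breaker rating.

    The 80% margin is a rule of thumb for loads that run for hours at a time.
    """
    return circuit_load_amps(watts, volts) <= breaker_amps * continuous_factor


total = circuit_load_amps(MACHINES_W.values())
print(f"{total:.2f} A of a 12 A budget:", fits_on_circuit(MACHINES_W.values()))
```

With these placeholder numbers the whole basement pulls a bit over 6 A, so it fits. Add a couple of power-hungry gaming towers and it won't.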

Some houses do have higher-duty outlets (again, not going to explain how your house electricity works here), so you could try that next. But remember that each computer has its own plug, so why try to fit everything into one outlet? Wire this bad boy across multiple circuits to distribute the load! This can be a bit of a pain, though, since the outlets for different circuits typically aren't close to each other. An electrician can come in and change which outlet goes to which fuse, or you can just get some long extension cords. Now, I'm only mentioning this next option because you said wild and outlandish: WIRING DIRECTLY INTO THE POWER GRID. If you do that, the computers can draw enough power to set themselves on fire, and it's no longer possible to pop a fuse because the fuse is gone. (Please do not do this in real life, this can kill you in many horrible ways)
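Splitting machines across circuits is basically a packing puzzle. Here's a minimal greedy sketch: place the hungriest machine first, always on the circuit with the most headroom left. Assumptions: each circuit's usable budget is 1440 W (80% of 15 A × 120 V), and the machine wattages and circuit names are hypothetical. Greedy placement usually works fine for a handful of machines, but it isn't guaranteed to find a fit whenever one exists.

```python
def assign_to_circuits(machine_watts, circuit_capacity_w):
    """Greedy 'largest machine first' placement across several circuits.

    machine_watts: {machine_name: estimated watts} (hypothetical numbers)
    circuit_capacity_w: {circuit_name: usable watts}
    Each machine goes on whichever circuit currently has the most headroom.
    """
    remaining = dict(circuit_capacity_w)
    plan = {circuit: [] for circuit in circuit_capacity_w}
    for name, watts in sorted(machine_watts.items(), key=lambda kv: kv[1], reverse=True):
        best = max(remaining, key=remaining.get)
        if remaining[best] < watts:
            raise ValueError(f"No circuit has room for {name} ({watts} W)")
        plan[best].append(name)
        remaining[best] -= watts
    return plan


machines = {"a": 1000, "b": 900, "c": 500, "d": 400}
circuits = {"c1": 1440, "c2": 1440}  # hypothetical: two separate 15 A circuits
print(assign_to_circuits(machines, circuits))
```

Here the 2800 W total would blow any single 15 A circuit, but split across two circuits each one stays under its 1440 W budget.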

Communication (as in, between the PCs) is where things start getting complex. All of those nasty pictures of wires pouring out of server racks? Those are usually communication cables. The essential piece of hardware that all of these computers are wired into is the switch box (a network switch), which handles communication between the individual computers. Software decides which computer in the cluster gets which task. This is known as the Dynamic Resource Manager, sometimes called the scheduler (it may run on one of the devices in the cluster, but it can also have its own dedicated machine). Once the software has scheduled a task, the switch box handles the actual work of getting the data to each machine. That's why speed and capacity matter so much for switch boxes: they are the bottleneck in a system like this.
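To give a feel for what the scheduler is deciding, here's a toy version: each task goes to whichever node would finish it soonest, given how busy that node already is and how fast it is. This greedy "earliest finish time" rule is just one simple heuristic picked for illustration. Real resource managers (Slurm is a common one) are far more involved. The task sizes and node speeds are hypothetical.

```python
def schedule(tasks, node_speeds):
    """Toy resource manager using a greedy earliest-finish-time rule.

    tasks: list of (task_name, work_units), hypothetical sizes
    node_speeds: {node_name: work_units per second}, hypothetical benchmarks
    Returns ({node_name: [task_name, ...]}, estimated makespan in seconds).
    """
    free_at = {node: 0.0 for node in node_speeds}  # when each node becomes idle
    plan = {node: [] for node in node_speeds}
    # Handing out the biggest tasks first tends to balance the load better.
    for name, work in sorted(tasks, key=lambda t: t[1], reverse=True):
        node = min(free_at, key=lambda n: free_at[n] + work / node_speeds[n])
        free_at[node] += work / node_speeds[node]
        plan[node].append(name)
    return plan, max(free_at.values())


plan, makespan = schedule([("t1", 4), ("t2", 2), ("t3", 2)], {"fast": 2.0, "slow": 1.0})
print(plan, makespan)
```

The fast machine ends up with two tasks and the slow one with one, and the whole batch finishes in about 3 seconds instead of the 8 it would take on the slow machine alone.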

Uhh, connecting this all to an IBM server rack? That's not needed in this theoretical setup. Choose one computer to be the 'head node', the user access point, and you're set. (sorry, I'm not exactly sure what you mean by connecting everything to an IBM)

To picture what all of this put together would look like, here’s a great if distressingly shaky video of an actual computer cluster! Power cables aren't shown but they are there.

But what about cable management? Well, things shouldn't get too bad given that fixing disordered cables can be as easy as scheduling the maintenance and ordering some cables. Some servers can't go down, so bad management piles up until either it has to go down or another server is brought in to take the load until the original server can be fixed. Ideally, the separate computers should be wired together, labeled, then neatly run into a switch box.

Now, depending on your level of knowledge, the next question might be "what about the firewall?" A firewall is not necessary in a setup like this. If no connections are being made outside the cluster's own network (if the machines are even connected to a network at all), then there is no reason to monitor or block who is connecting to them.
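"Out of network" just means "not on the cluster's own subnet." Here's a small sketch using Python's standard `ipaddress` module to flag any peer that falls outside it. The `192.168.50.0/24` subnet is a hypothetical choice, and collecting the list of peer addresses (for example from `ss` on Linux) is left out.

```python
import ipaddress

# Hypothetical cluster subnet; use whatever range your switch/router hands out.
CLUSTER_NET = ipaddress.ip_network("192.168.50.0/24")


def is_in_cluster(addr, cluster_net=CLUSTER_NET):
    """True if addr belongs to the cluster's own subnet."""
    return ipaddress.ip_address(addr) in cluster_net


def outside_connections(peer_addrs, cluster_net=CLUSTER_NET):
    """Return the peers that are NOT on the cluster subnet.

    If this is always empty (or the cluster isn't plugged into anything else),
    there's nothing for a firewall to filter.
    """
    return [a for a in peer_addrs if not is_in_cluster(a, cluster_net)]


print(outside_connections(["192.168.50.3", "192.168.50.20", "8.8.8.8"]))
```

If that list ever shows something like `8.8.8.8`, the cluster is talking to the outside world, and that's when a firewall starts to matter again.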

That's all of the info about hardware around the computers, let's talk about the computers themselves!

I'm assuming that these things are a little fucked. First things first would be testing all machines to make sure that they still function! General housekeeping like blasting all of the dust off the motherboard and cleaning out those ports. Also, putting new thermal paste on the CPU. Refresh your thermal paste people.

The hardware in the PCs themselves can, and maybe should, be upgraded. More PCs than you think can be upgraded! I'm talking about extra slots for RAM and a spare SATA connection for another storage drive. Also, some PCs still have a DVD drive. You can just take that out and put a hard drive in there! Upgrades aren't essential, but extra memory is always recommended. Redundancy is your friend.

Once the hardware is set, factory reset each computer and... OK, this is the part where my inexperience really shows. Computer clusters are almost always built from the exact same make and model of computer, because essentially this is taking several computers and treating them as one. When you mix hardware, things can get fucked. There is a Linux cluster toolkit, OSCAR (Open Source Cluster Application Resources), for setting up and managing clusters, so it is possible. Would it be a massive headache to do in real life, and would it behave in unpredictable ways? Without a doubt. But it could work, so I'll leave it at that. (but maybe ditch the Mac, Apple doesn't like to play nice with anything)
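One way to soften the mixed-hardware headache is to give faster machines bigger slices of the work, so the old laptop isn't the one everyone waits on. Here's a minimal sketch that splits work in proportion to a per-machine benchmark score. The scores are hypothetical (you'd get real ones by timing the same small test job on every machine), and largest-remainder rounding makes sure the shares always add up to the total.

```python
def split_by_speed(n_items, node_scores):
    """Split n_items of work in proportion to each node's benchmark score.

    node_scores: {node_name: relative speed}, hypothetical numbers.
    Uses largest-remainder rounding so the shares always sum to n_items.
    """
    total = sum(node_scores.values())
    exact = {node: n_items * score / total for node, score in node_scores.items()}
    shares = {node: int(x) for node, x in exact.items()}
    leftover = n_items - sum(shares.values())
    # Hand the remaining items to the nodes with the biggest fractional parts.
    by_remainder = sorted(exact, key=lambda node: exact[node] - shares[node], reverse=True)
    for node in by_remainder[:leftover]:
        shares[node] += 1
    return shares


print(split_by_speed(100, {"ibm": 1, "dell": 1, "mac": 2}))
```

A machine that benchmarks twice as fast gets twice as many items, so everyone should finish at roughly the same time.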

Extra things to consider: noise level, cooling, and humidity! Each of these machines has fans! If it's in a basement, it's probably going to be humid. Server rooms are climate controlled for a reason. It would be a good idea to stick an AC unit and a dehumidifier in there to maintain that sweet spot in temperature.
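Humidity becomes a real problem when any surface in the room (a cold basement wall, a pipe, a chilled case) is at or below the dew point, because that's where water condenses. Here's a small sketch using the Magnus approximation with the commonly used coefficients b = 17.62 and c = 243.12 °C. It's good enough for room conditions but isn't lab-grade, and the readings in the example are hypothetical.

```python
import math


def dew_point_c(temp_c, rel_humidity_pct):
    """Approximate dew point (°C) via the Magnus formula (b=17.62, c=243.12 °C)."""
    b, c = 17.62, 243.12
    gamma = math.log(rel_humidity_pct / 100.0) + b * temp_c / (c + temp_c)
    return c * gamma / (b - gamma)


def condensation_risk(temp_c, rel_humidity_pct, coldest_surface_c):
    """True if the coldest surface in the room is at or below the dew point."""
    return coldest_surface_c <= dew_point_c(temp_c, rel_humidity_pct)


# Hypothetical basement: 25 °C air at 50% humidity, with a 12 °C concrete wall.
print(round(dew_point_c(25, 50), 1), condensation_risk(25, 50, 12))
```

At 25 °C and 50% humidity the dew point is roughly 13.9 °C, so a 12 °C wall would collect water. A dehumidifier lowers the dew point until nothing in the room is that cold.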

All links in one spot:

What's a cluster?

Wiki computer cluster

The PS3 was a ridiculous machine

I built an AI supercomputer with 5 Mac Studios

The worst patch rack I've ever worked on.

Building the Ultimate OpenSees Rig: HPC Cluster SUPERCOMPUTER Using Gaming Workstations!

What is a firewall?

Your old PC is Your New Server

Open Source Cluster Application Resources (OSCAR)

Buying a SERVER - 3 things to know

A Computer Cluster Made With BROKEN PCs

@fratboycipher feel free to add to this or correct me in any way

#Good news!#It's possible to do in real life what you are asking!#Bad news#you would have to do it VERY wrong for it to explode#Not really outlandish but very technical#...I may prefer youtube videos over reading#can you tell that I know more about the hardware than the software?#holy fuck it's not the way that this would be wired that would make this setup bad#connecting them is the easy part!#getting the computers to actually TALK to each other?#oh god oh fuck#i love technology#tadc caine#I'm tagging this as Caine#stemblr#ask#spark#computer science#computer cluster

32 notes

Text

USA 1997

91 notes

Text

Richard R John’s “Network Nation”

THIS SATURDAY (July 20), I'm appearing in CHICAGO at Exile in Bookville.

The telegraph and the telephone have a special place in the history and future of competition and Big Tech. After all, they were the original tech monopolists. Every discussion of tech and monopoly takes place in their shadow.

Back in 2010, Tim Wu published The Master Switch, his bestselling, wildly influential history of "The Bell System" and the struggle to de-monopolize America from its first telecoms barons:

https://memex.craphound.com/2010/11/01/the-master-switch-tim-net-neutrality-wu-explains-whats-at-stake-in-the-battle-for-net-freedom/

Wu is a brilliant writer and theoretician. Best known for coining the term "Net Neutrality," Wu went on to serve in both the Obama and Biden administrations as a tech trustbuster. He accomplished much in those years. Most notably, Wu wrote the 2021 executive order on competition, laying out a 72-point program for using existing powers vested in the administrative agencies to break up corporate power and get the monopolist's boot off Americans' necks:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

The Competition EO is basically a checklist, and Biden's agency heads have been racing down it, ticking off box after box on or ahead of schedule, making meaningful technical changes in how companies are allowed to operate, each one designed to make material improvements to the lives of Americans.

A decade and a half after its initial publication, Wu's Master Switch is still considered a canonical account of how the phone monopoly was built – and dismantled.

But somewhat lost in the shadow of The Master Switch is another book, written by the accomplished telecoms historian Richard R John: "Network Nation: Inventing American Telecommunications," published a year after The Master Switch:

https://www.hup.harvard.edu/books/9780674088139

Network Nation flew under my radar until earlier this year, when I found myself speaking at an antitrust conference where both John and Wu were also on the bill:

https://www.youtube.com/watch?v=2VNivXjrU3A

During John's panel – "Case Studies: AT&T & IBM" – he took a good-natured dig at Wu's book, claiming that Wu, not being an historian, had been taken in by AT&T's own self-serving lies about its history. Wu – also on the panel – didn't dispute it, either. That was enough to pique my interest. I ordered a copy of Network Nation and put it in my suitcase for my vacation earlier this month.

Network Nation is an extremely important, brilliantly researched, deep history of America's love/hate affair with not just the telephone, but also the telegraph. It is unmistakably a history book, one that aims at a definitive takedown of various neat stories about the history of American telecommunications. As Wu writes in his New Republic review of John's book:

Generally he describes the failure of competition not so much as a failure of a theory, but rather as the more concrete failure of the men running the competitors, many of whom turned out to be incompetent or unlucky. His story is more like a blow-by-blow account of why Germany lost World War II than a grand theory of why democracy is better than fascism.

https://newrepublic.com/article/88640/review-network-nation-richard-john-tim-wu

In other words, John thinks that the monopolies that emerged in the telegraph and then the telephone weren't down to grand forces that made them inevitable, but rather, to the errors made by regulators and the successful gambits of the telecoms barons. At many junctures, things could have gone another way.

So this is a very complicated story, one that uses a series of contrasts to make the point that history is contingent and owes much to a mix of random chance and the actions of flawed human beings, and not merely great economic or historical laws. For example, John contrasts the telegraph with the telephone, posing them against one another as a kind of natural experiment in different business strategies and regulatory responses.

The telegraph's early promoters, including Samuel Morse (as in "Morse code"), believed that the natural way to roll out the telegraph was to sell the patents to the federal government and have an agency like the post office operate it. There was a widespread view of the post office as a paragon of excellent technical management and a necessity for knitting together the large American nation. Moreover, everyone could see that when the post office partnered with private sector tech companies (like the railroads that became essential to the postal system), the private sector inevitably figured out how to gouge the American public, leading regulators to ever-more extreme measures to rein in the ripoffs.

The telegraph skated close to federalization on several occasions, but kept getting snatched back from the brink, ending up instead as a privately operated system that primarily served deep-pocketed business customers. This meant that telegraph companies were forever jostling to get the right to string wires along railroad tracks and public roads, creating a "political economy" that tried to balance out highway regulators and rail barons (or play them off against each other).

But the leaders of the telegraph companies were largely uninterested in "popularizing" the telegraph – that is, figuring out how ordinary people could use telegraphs in place of the hand-written letters that were the dominant form of long-distance communications at the time. By turning their backs on "popularization," telegraph companies largely freed themselves from municipal oversight, because they didn't need to get permission to string wires into every home in every major city.

When the telephone emerged, its inventors and investors initially conceived of it as a tool for business as well. But while the telegraph had ushered in a boom in instantaneous, long-distance communications (for example, by joining ports and distant cities where financiers bought and sold the ports' cargo), the telephone proved far more popular as a way of linking businesses within city limits. Brokers and financiers and businesses that were only a few blocks from one another found the telephone to be vastly superior to the system of dispatching young boys to race around urban downtowns with slips bearing messages.

So from the start, the phone was much more bound up in city politics, and that only deepened with popularization, as phones worked their way into the homes of affluent families and local merchants like druggists, who offered free phone calls to customers as a way of bringing trade through the door. That created a great number of local phone carriers, who had to fend off Bell's federally enforced patents and aldermen and city councilors who solicited bribes and favors.

To make things even more complex, municipal phone companies had to fight with other sectors that wanted to fill the skies over urban streets with their own wires: streetcar lines and electrical lines. The unregulated, breakneck race to install overhead wires led to an epidemic of electrocutions and fires, and also degraded service, with rival wires interfering with phone calls.

City politicians eventually demanded that lines be buried, creating another source of woe for telephone operators, who had to contend with private or quasi-private operators who acquired a monopoly over the "subways" – tunnels where all these wires eventually ended up.

The telegraph system and the telephone system were very different, but both tended to monopoly, often from opposite directions. Regulations that created some competition in telegraphs extinguished competition when applied to telephones. For example, Canada federalized the regulation of telephones, with the perverse effect that everyday telephone users in cities like Toronto had much less chance of influencing telephone service than Chicagoans, whose phone carrier had to keep local politicians happy.

Nominally, the Canadian Members of Parliament who oversaw Toronto's phone network were big leaguers who understood prudent regulation and were insulated from the daily corruption of municipal politics. And Chicago's aldermen were pretty goddamned corrupt. But Bell starved Toronto of phone network upgrades for years, while Chicago's gladhanding political bosses forced Chicago's phone company to build and build, until Chicago had more phone lines than all of France. Canadian MPs might have been more remote from rough-and-tumble politics, but that made them much less responsive to a random Torontonian's bitter complaint about their inability to get a phone installed.

As the Toronto/Chicago story illustrates, the fact that there were so many different approaches to phone service tried in the US and Canada gives John more opportunities to contrast different business strategies and regulations. Again, we see how there was never one rule that governments could have used if they wanted to ensure that telecoms were well-run, widely accessible, and reasonably priced. Instead, it was always "horses for courses" – different rules to counter different circumstances and gambits from telecoms operators.

As John traces the decades during which the telegraph and telephone were established in America, he draws heavily on primary sources to chart the ebb and flow of public and elite sentiment towards public ownership, regulation, and trustbusting. In John's hands, we see some of the most spectacular failures not merely as a mismatch of regulatory strategy to corporate gambit, but as a mismatch of political will and corporate gambit. If a company's power would be best reined in by public ownership, but the political vogue is for regulation, then lawmakers end up trying to make rules for a company they should simply be buying (or giving to the post office to buy).

This makes John's history into a history of the Gilded Age and trustbusters. Notorious vulture capitalists like Jay Gould shocked the American conscience by declaring that businesses had no allegiance to the public good, and were put on this Earth to make as much money as possible no matter what the consequences. Gould repeatedly "raided" Western Union, acquiring shares and forcing the company to buy him out at a premium to end his harassment of the board and the company's managers.

By the time the feds were ready to buy out Western Union, Gould was a massive shareholder, meaning that any buyout of the telegraph would make Gould infinitely wealthier, at public expense, in a move that would have been electoral poison for the lawmakers who presided over it. In this highly contingent way, Western Union lived on as a private company.

Americans, including prominent businesspeople who would be considered "conservatives" by today's standards, were deeply divided on the question of monopoly. The big, successful networks of national telegraph lines and urban telephone lines were marvels, and it was easy to see how they benefited from coordinated management. Monopolists and their apologists weaponized this public excitement about telecoms to defend their monopolies, insisting that their achievement owed its existence to the absence of "wasteful competition."

The economics of monopoly were still nascent. Ideas like "network effects" (where the value of a service increases as it adds users) were still controversial, and the bottlenecks posed by telephone switching and human operators meant that the cost of adding new subscribers sometimes went up as the networks grew, in a weird diseconomy of scale.

Patent rights were controversial, especially patents related to natural phenomena like magnetism and electricity, which were viewed as "natural forces" and not "inventions." Business leaders and rabble-rousers alike decried patents as a federal grant of privilege, leading to monopoly and its ills.

Telecoms monopolists – telephone and telegraph alike – had different ways to address this sentiment at different times (for example, the Bell System's much-vaunted commitment to "universal service" was part of a campaign to normalize the idea of federally protected, privately owned monopolies).

Most striking about this book were the parallels to contemporary fights over Big Tech trustbusting, in our new Gilded Age. Many of the apologies offered for Western Union or AT&T's monopoly could have been uttered by the Renfields who carry water for Facebook, Apple and Google. John's book is a powerful and engrossing reminder that variations on these fights have occurred in the not-so-distant past, and that there's much we can learn from them.

Wu isn't wrong to say that John is engaging with a lot of minutiae, and that this makes Network Nation a far less breezy read than The Master Switch. I get the impression that John is writing first for other historians, and writers of popular history like Wu, in a bid to create the definitive record of all the complexity that is elided when we create tidy narratives of telecoms monopolies, and tech monopolies in general. Bringing Network Nation on my vacation as a beach read wasn't the best choice – it demands a lot of serious attention. But it amply rewards that attention, too, and makes an indelible mark on the reader.

Support me this summer on the Clarion Write-A-Thon and help raise money for the Clarion Science Fiction and Fantasy Writers' Workshop!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/07/18/the-bell-system/#were-the-phone-company-we-dont-have-to-care

#pluralistic#books#reviews#history#the bell system#monopoly#att#western union#gift guide#tim wu#richard r john#the master switch#antitrust#trustbusting


The Great College Lie: Why Degrees Don’t Mean Success

For years, we’ve been fed the same script: Go to college, get a degree, and the world will roll out a red carpet to your success. Sounds simple, right? Except, for many, that “red carpet” feels more like a never-ending hamster wheel of debt, underemployment, and job applications that go straight into the void. So, what happened? Did college lie to us, or did we buy into a dream that was never designed to include everyone?

Let’s dissect The Great College Lie—why the degree doesn’t guarantee success, and what you can do to thrive despite the system.

1. The Promise vs. Reality

The Promise:

College is marketed as the “great equalizer.” They told us education would unlock the American Dream: a steady career, financial security, and a house with a white picket fence. And sure, for some, it worked. But for many others, here’s the reality:

The Reality:

Student Loan Debt: The average college graduate in the U.S. owes $37,000+ in student loans, which can take decades to pay off.

Underemployment: Over 40% of college graduates work jobs that don’t require a degree (hello, barista jobs with a philosophy major).

No Guarantees: That diploma doesn’t protect you from layoffs, market crashes, or a rapidly evolving job market that now demands experience over credentials.

2. Why Degrees Don’t Equal Success

1. It’s About Who You Know, Not What You Know

Networking often outranks education. Studies have shown that up to 70% of jobs are never even posted publicly—they’re filled through connections. Translation? You can have a degree from Harvard, but Chad with zero qualifications might get the job because his dad plays golf with the CEO.

2. Degrees are Losing Their Edge

A bachelor’s degree used to set you apart. Now? It’s almost like having a high school diploma. Everyone has one, which means the competition is fiercer, and employers are raising their standards to include master’s degrees and certifications.

3. The Skills Gap is Real

A piece of paper doesn’t always mean you have the skills employers need. A 2021 survey revealed that 46% of employers feel recent grads aren’t prepared for the workforce. Critical thinking, problem-solving, and real-world experience often trump textbook knowledge.

3. The Student Loan Scam

Let’s call it what it is: a scam. The system was designed to profit off your dreams. Here’s how it works:

Colleges Overpromise: They lure students with flashy marketing, luxurious dorms, and vague promises of a “bright future.”

Loans Trap You: The government and private lenders make it easy to borrow, but repayment terms keep you financially enslaved for decades.

Inflated Costs: College tuition has skyrocketed over 1200% since 1980, far outpacing wage growth. So, you’re borrowing more while your earnings lag behind.

4. “But College is Still Worth It, Right?”

It depends. For some fields—like medicine, law, and engineering—a degree is non-negotiable. But for many careers, it’s becoming clear that skills and experience matter more than credentials.

Here’s the Shift:

Trade Schools and Certifications: Electricians, plumbers, and tech professionals often earn as much as (or more than) degree holders, with a fraction of the debt.

Freelance and Entrepreneurial Skills: The internet has opened doors to self-taught careers in writing, design, coding, and more.

On-the-Job Learning: Companies like Google, Tesla, and IBM no longer require degrees for many positions—they value skills instead.

5. So, What Should You Do Instead?

1. Learn Marketable Skills

Platforms like Coursera, Udemy, and Khan Academy offer affordable (sometimes free) courses on coding, graphic design, marketing, and more.

The ROI on these courses often far exceeds that of a traditional degree.

2. Network, Network, Network

Attend local events, join LinkedIn groups, and connect with mentors in your field.

Remember: Jobs often go to those with connections—not just qualifications.

3. Embrace Lifelong Learning

The job market evolves constantly. Staying ahead means continually updating your skills, whether through certifications or self-study.

4. Question the Narrative

Don’t blindly follow the “go to college” script. Ask yourself: What do I want to do, and is college the best path to get there?

6. The Humble Truth About Success

Here’s the real kicker: Success isn’t tied to a degree—it’s tied to your grit, adaptability, and willingness to hustle smart.

Degrees can help, but they aren’t the golden ticket we were promised.

Building real-world skills, learning to market yourself, and forming relationships will often get you farther than any diploma can.

What They Don’t Want You to Know

The Great College Lie isn’t just about the myth of guaranteed success—it’s about the systems that profit from your hopes and dreams. College can be a valuable tool, but it’s not the only path to success.

The sooner we stop glorifying degrees and start valuing skills, effort, and innovation, the better off we’ll all be. In the meantime, let’s admit one thing: We were all sold a dream. But it’s not too late to wake up and rewrite the story.

#CollegeDebt#HigherEducationScam#CareerSuccess#StudentLoans#EducationCritique#LifeAfterCollege#Humor#TruthBombs#trends#news#world news#ModernCulture#SocialCommentary#CulturalCritique#EchoChamberCulture#MoralOutrage#fitness#please share#ReflectionRegret#RelatableTrash#funny post#funny memes#funny stuff#funny shit#humor#jokes#memes#lol#haha#societyandculture


Friday, 31st of May.

Had to pull a sort of all-nighter the day before to finish some French units before the deadline.

Had a private French class in order to analyse my written compositions with my professor

Finished the first course of the IBM Data Science certificate

Started planning out some essays and writing I need to submit during the summer

Notes of the day:

- I’ve been feeling quite fatigued recently. Though it is not the first time I have prepared for a language certificate, I still feel a bit nervous and must discipline myself to deal with these emotions rationally.

- A part of me is quite envious when I see my colleagues enjoying their vacations and time off university while I have to deal with additional examinations/studies and an internship, but I should recognise that this extra work will pay off in the future and not let it discourage me.

- I wish I had more time to do readings for the next semester, but for now 2-3 hours a day will have to suffice. I am a bit anxious about the opening of the application process for next summer’s internships. I need to land a research internship in my field of choice, and I am not sure I’ll get one at my own university because of the competition among 4 different stages of study for the same internships…

- Academia aside, I’ve been spending most of my days either in libraries or alone in coffee shops doing some work. It is not the first time I have spent a summer by myself, and I think I’ve learnt to enjoy my own company.

#dark academia#study#studyblr#classic academia#academia aesthetic#aesthetic#studyspo#books#literature#books and reading#study goals#studyspiration#study blog#study motivation#study hard#study aesthetic#stem academia#chaotic academia#dark academism#books & libraries


Dr. Evelyn Boyd Granville (May 1, 1924 - June 27, 2023) was the second African American woman to receive a Ph.D. in mathematics from an American university; she earned it from Yale University. She performed pioneering work in the field of computing.

She entered Smith College. She majored in mathematics and physics but also took a keen interest in astronomy. She was elected to Phi Beta Kappa and Sigma Xi and graduated summa cum laude. Encouraged by a graduate scholarship from the Smith Student Aid Society of Smith College, she applied to graduate programs in mathematics and was accepted by both Yale University and the University of Michigan; she chose Yale because of the financial aid they offered. She studied functional analysis, finishing her doctorate. Her dissertation was “On Laguerre Series in the Complex Domain”.

She later moved from Washington to New York City, and then to Los Angeles. There she worked for the US Space Technology Laboratories and later for the North American Aviation Space and Information Systems Division, where she worked on various projects for the Apollo program, including celestial mechanics, trajectory computation, and “digital computer techniques”.

Forced to move because of restructuring at IBM, she took a position at California State University, Los Angeles as a full professor of mathematics. After retiring from CSULA she taught at Texas College for four years and then joined the faculty of the University of Texas at Tyler as the Sam A. Lindsey Professor of Mathematics. There she developed elementary school math enrichment programs. She remained a strong advocate for women’s education in tech. #africanhistory365 #africanexcellence #phibetakappa


hiya! i'm 21 (they), in cdt, and i'm looking for a novella-style writing partner around my age (19–25) who's just as hopelessly in love with character-driven storytelling as i am. if you like your scenes to read like a movie (moody lighting, visual descriptions, silence doing more than dialogue) then we might be on the same page already!

rn i'm only interested in mxm, ocxoc, and historical romantic fiction set in 20th century america, ideally from the prohibition era through to the early space race (i'm most interested in a ww2 plot but down to basically anything from the 20s to 60s). i'm obsessed with the 'why' behind things and people: their contradictions, their longings, the private things they'll never say out loud. thus, i gravitate toward characters that feel bigger than the plot itself. something elegiac, character studies wrapped in narrative. so yeah, my style is very detailed, consistently over discord's character limit (i know that's not for everyone!). but if you like writing books together, collaborating closely, world-building with care, and really inhabiting your characters psychologically, we'll probably get along. i come from a love of literature and cinema, and try to bring that sensibility into every scene.

i usually don't use drawn face claims (i would if i knew how to draw lmao), but i'm great with vivid written descriptions or real-life references. i don't have many triggers either, though that's something we can discuss as we go. in that same line, i don't mind nsfw/smut, so long as it's there to serve the story. however, i tend to focus on the poetry of it rather than the choreography (so no explicit scenes please). i'm very slow-burn by nature, and love tension/restraint. give me nostalgia, misery, pain, longing, enemies-to-lovers, and i'll be yours! cause i rlly want to write characters who shouldn't fall in love, and then make it hurt when they do.

to wrap things up, i may get busy from time to time, but usually reply quickly when i'm inspired. and when i am, i really reply.

so yeah, if you're still reading this, we may just be a great pair! please add me on discord and share your ideas with me: ibm.7094.

Message if interested!

#fandomless rp#oc rp#oc roleplay#mxm rp#mxm roleplay#historical rp#historical roleplay#19+ rp#19+ roleplay
