#I like the idea of a bit of asymmetry in her design to reflect her original vocaloid's use of the same concept


Text

My favorite poor little meow-meow in celebration of Panthera chapter 4 (and in anticipation of chapter 5 coming soon???)

I updated her design a smidge because we got confirmation in this last chapter of her dark little booties!!

Fic by @idrewacow

#panthera fic#vocaloid#is it a spoiler to tag the character#idk how many chapters does she have to be like this before it's not a spoiler#anyway#I still might tweak her design here and there as I draw her more#I like the idea of a bit of asymmetry in her design to reflect her original vocaloid's use of the same concept#all without letting myself get too complicated#she is an inexpensive cat after all#legit tho the scream I scrumpt when I saw this chapter posted??#I'm gearing up to reread the reason trilogy#when boom#cat time#someone read this fic with me so I can gush to more people#I have predictions

4 notes


Text

All outfits I made for my Drakgo Teacher AU! Discussion under the cut. Lore/casual fic/part 1 here.

It was very important to me for all of these outfits to look like things that canon Dr. Drakken and Shego would wear, not just by lifting palettes from canon, but by incorporating design elements seen in the clothes they wear and finding outfits that feel appropriate for their ages.

I personally believe that the age gap between Dr. D and Shego is not insignificant, there's a clear difference in the way they behave and carry themselves in canon that signals this to me, aside from the fact that Drakken went to college with James. I'd say about 10-15 years, and their clothes should reflect that, not to mention their overall tastes. Dr. D's the butt of a lot of mid-life crisis jokes (by me, LOL), but he doesn't strike me as the sort to dress like he is going through one (this is what the villain career is for, aside from it being an extended and hilarious mental breakdown. The mullet is a holdover from the 80s, not trying to recapture his youth, in my opinion).

I wanted more 80s/70s older style clothes for Drakken, but a lot don't feel like him, and the brown palettes for many of them cross over with Mr. Barkin too much. Dr. D loses his identity with the shift in colour. He works well with deeper purples and reds, but him being listless, and honestly just plain depressed, had to be reflected in the colour choices. Even the long sleeves, hinting at this insecurity he has over his skin that I have made a point of in my Teacher AU. I've seen it a couple of times in fanfic, and I've enjoyed it immensely as an element of characterisation for him.

Chinos make their appearance here, and this Ms. Go's outfit is a slight remix of the original. I characterise that outfit, where she was affected by the Attitudinator and became Ms. Go, as something she would wear at the very beginning of her training as a teacher. Here, she has a bit more bite, more of the Shego we know and love, not as demure.

I gave her a tie in one of them because I love ties and she looks so chic with it. I really wanted to incorporate her belt from her original suit, but it's too garish to fit in with any of her outfits here, and it doesn't suit anything 'normal' or anything slightly professional/chic. Drakken's was easier to implement, more subtle.

Ms. Go's palette is mostly green here for story reasons, too. It helps her in gaslighting the kids. If she wears greens and neutral colours, mostly, they won't be able to really tell that she IS green. I love the idea of a teacher Shego just seriously messing with kids' heads. I've had a few teachers like that in my life, who take great amusement in watching kids trip over their words, or just outright lying to them to get a funny reaction.

The Good Ms. Go also has shorter hair, which I thought was so interesting - long hair as a hallmark of Shego's evilness - even Drakken's - so I just tied it up here. If I want her to go into evil, I can just by having her let her hair go, or if she just settles down with Dr. D, it's fine, too. It's also just practical as a teacher, makes her look put-together. Headbands make women look young, I find, there's a real girlishness to them. Ms. Go is a woman! And WHAT a woman!

Finding clothes that were professional yet edgy - o my God. The trek. Shego gave me a run for my money, I will tell you. If I had to redo any of this, I might be inclined to incorporate more asymmetry. I love her original suit, it's so striking and disorienting, it's actually insane to me that Stephen Silver designed that and everyone JUST AGREED, AND SAID IT WAS FINE, YEAH, WE'LL ANIMATE THAT?! Crazy. IN 2D, TOO!

Now, original Ms. Go has heels, and even Shego wears heels in So the Drama. Why no heels here? I didn't want to draw new feet and adjust the height, okay - BUT ALSO! She's still got that holdover from her hero days. She's got to be ready at any time, to spring into action!

FORGET THE FACT THAT SHE RUNS AND JUMPS AND SOMERSAULTS IN THE DAMN THINGS IN SO THE DRAMA, OKAY! She can afford to go to Mount Olympus and have Midas karate chop the calf and lower back pain away. She doesn't have a good masseuse/physio/chiropractor here in this AU. PRE-MIDAS! (Hm, maybe Dr. Lipsky could help with that?)

#drakgo fan art#drakken x shego#shego x drakken#drakgo fanart#drakgo#Drakgo Teacher AU#legendary art#legendary rambling#legendary fanfic#The Blab to end all Blabs.

36 notes


Note

Imo this new version of Mont fits way better with what I think might be the new art direction for the metaverse costumes, as I noticed the last 6-7 phantom idols we got are more focused on their wants/desires than the others, especially the first batch. Like, Mont's original design is pretty, but it's just a white shirt and shorts with some pizzazz. Yuki's... let's be honest, is a mess, which might be done on purpose since her mother is so controlling, but the mix of asymmetry, Phantom of the Opera mask, knight left motif and Japanese style AOA ...Fleuret is wearing a butler costume.

While I sound a bit negative here, I'm actually really happy that the character design is finding a good footing! I'm a huge fan of character designs that tell you what a character is about! (I'll add that Yuki, Mont and Fleuret's designs might be leftovers from the first project before p5x became what we know now, so that might be the reason why they look a bit off)

Putting everything else aside for a moment, I have to defend Fleuret: that is not a butler costume. I can sort of see the resemblance if you just quickly glance over him, but to me it is far more reminiscent of an old-fashioned revolutionary (as in, the kind of thing people wore around the era of the Revolutionary War here in the US). I won't say it's a 1:1 match there either, but that's the impression I get, and you can't just come into my inbox and slander my friend Seiji, haha.

Okay, with that out of the way, my answer to this got pretty long, heh, so I'm going to put it under a cut:

I do think this is a bit more of a matter of character analysis, interpretation, and personal taste than you're making it out to be, though your own opinion on each costume is entirely valid (as long as you're not just watering them down, haha)! As an artist and fan of character design myself, and someone who's taken some classes about it, I'd also say I enjoy character designs that represent who they are, but I think there's some nuance to it. And context is really important!

For one, like you said, Yuki (and most likely Fleuret and Mont) was originally designed for Codename: X, made obvious by her featuring on the promotional image for it, haha. Yuki, Fleuret, and Mont's designs feel more like thieves to me, in the way that the original P5 team does, without too many extra bells, whistles, or details compared to how some gacha designs can get. They were meant to be P5 characters, basically, not spinoff characters!

Mont's may seem a bit plain, but it didn't really need to be anything more than what it is. I agree that Yuki's can come across as a bit of a mess at first, but it's actually really grown on me. I think the philosophy here wasn't to tell you what they literally are (ex. Ryuji isn't literally a pirate, he's a former track star, so in the same way, Yukimi's outfit shouldn't be an aspiring lawyer) but to define their will of rebellion visually, in a way that feels like something you could imagine a thief wearing. However you feel about, for instance, Panther's catsuit, it's meant to reflect a thief who uses sex appeal to get what she wants, because that's how the writers chose to visualize Ann's sense of rebellion.

It's the same way here- Mont's outfit works for an ice skating thief, without being too flashy or superfluous, because Mont's not the type of person to be like that. Yuki's outfit seems Phantom of the Opera-themed, for reasons that may have gotten reworked over time, but you can still trace them back to her rebellion against her mother, and with armor because she's meant to be a fighting character that takes hits. Assuming Fleuret is meant to evoke a revolutionary, the ties to rebellion there are obvious, even when we don't have as complete an idea of who he is.

I'll agree that Yuki's AOA finisher doesn't really fit the rest of her theme, but it does fit her character- despite her differences with her mother, it's a complicated relationship, and she still has a fondness for traditional Japanese motifs. In general, I think the perceived messiness of Yuki's entire Metaverse aesthetic reflects a messy, complicated relationship and rebellion.

I suppose Dancer Mont's appearance does fit her desires more directly than the original Mont's does, but in a way that's sort of like saying Summer Tomoko's does too because it's the outfit her job on the beach gave her to wear. Obviously, wearing literally what they wear while fulfilling their desires in the real world is going to more directly represent their desires! But it loses some of the Phantom Thief-y-ness of the original, and that's why it's an alternate version, not a replacement.

Honestly, in my personal opinion, as pretty as Dancer Mont's outfit is, it's way less interesting than a proper Metaverse outfit like the original Mont has. It doesn't tell me anything I don't already know, or give me much of anything new to speculate on. I like a Metaverse outfit that isn't just the obvious choice, which is what Dancer Mont's outfit would be if that were her actual Metaverse outfit rather than an alternate version. Maybe that's why I'm so quick to remind everyone that the Dancer/Summer versions aren't replacements, heh!

And again, in the end, it's partially personal taste. I like character designs that make me think, and give me details to think about, rather than just telling me outright what the character is. But Dancer Mont seems to literally be her performance outfit, in the same way that Summer Motoha and Tomoko wear their literal swimsuits, and that's okay for what they're meant to be! I don't dislike Dancer Mont's outfit, but in my mind, it's certainly no replacement for the original, either.

#hoshihime98#of course this isn't meant to be an argument or anything. I'm not mad at this ask! I'm just trying to make this a discussion#because I think our viewpoints are a bit different here. but that's okay! we don't have to agree. I just wanted to explain how I see things#mont#yuki#fleuret#theories#analysis

5 notes


Text

CisLunar Dev Blog #1: Lunar

Howdy!

The first of many Dev Blogs on the projects I'm working on. This is all focused on CisLunar, my streaming/vtuber/pngtuber lore and storyline.

I got into the vtuber world maybe a year and a half ago. First it was me just trying to understand this new niche form of entertainment, which really blew up since then I feel, and now I'm a fan. As someone who wants to share something without actually showing my face, I really wanted to try it out!

With that being said, as someone who was raised to make every project/assignment the best, it's taken some time for me to get everything together. I also don't have that much disposable income (at the time I first started developing this, I was paying my way through grad school) so I know I don't have the fastest internet/best specs for streaming.

But! My computer doesn't mind a little bit of drawing streams here and there! So I hope to make some fun drawings on stream~

With that said, I noticed that a lot of vtubers create a persona that may or may not be similar to them. The lores of some of the characters they have can be quite extensive. As someone who enjoys creating a new world, I thought it would be cool to make my own little world.

Let's start with some characters shall we? This post will go over one of the titular characters: Lunar!

*EYESTRAIN WARNING*

Lunar Sun

Age: 30

She/They

Chaotic Good

A mischievous radio host who plays the newest & hottest music on Planet GJ504b. Underneath their fun day job, she uses her connections and gossip loving nature as an informant in the underworld. While she used to freelance her services, she now only works for the Nebula Mafia Family. Her boyfriend, the Nebula mafia don's son, is her usual customer.

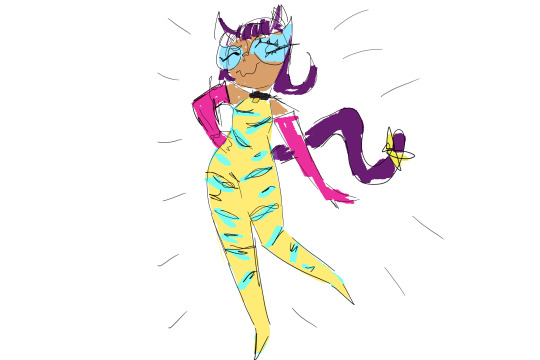

When first creating Lunar, I was actually going to go the full Vtuber route when I decided to take a stab at streaming part time. Her first iteration came from a Halloween design challenge I gave myself the previous year.

Fun fact: her skin tone is actually color picked from mine! I wanted her to be a reflection of me, so I decided to keep the mushroom hairstyle I drew as I used to rock a very good mushroom/pageboy haircut when I was younger.

Soon after, I decided to change up her look. You see, I tried to go the classic vtuber design route: cutouts and asymmetry. I then wanted to change her hair length as well. I wanted to get her away from being too similar to me and into her own person. At one point I thought this was the perfect length, but I liked the swoop I gave it.

After a couple of passes at her clothes, I came to like the idea of giving her a catsuit with tiger stripes. I went through different colorways hoping to keep up with a certain identity color scheme (two of the colors are featured in their eyes), but I rather liked the combination of these bright colors. This is where her playful personality began to take shape in my head. The bright colors of her clothes reflected her zany personality.

And thus her yr 1 design was born! I used it for the first year I started streaming! At this point, her story was pretty messy, as I only knew I wanted her to be an informant. I didn't think too much about it, as I just wanted to make things so I could begin dipping my toe into streaming.

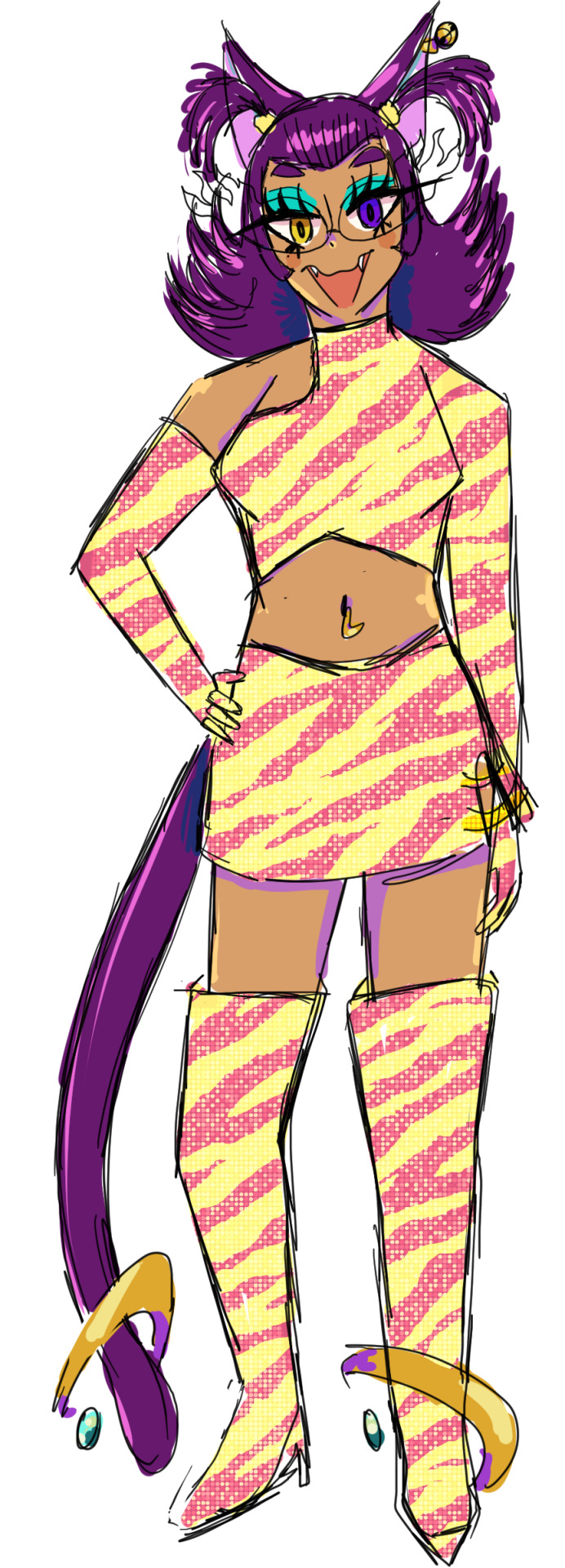

After a year, however, I believed I could push myself and redesign Lunar. While I liked the concept of her design, I thought it was a bit bland. As a character from outer space, something about her seemed very regular to me for some reason lol

Due to school, my poor internet, and my own mental health, I couldn't stream much or often. I set a goal for myself: the next time I began streaming, I would put more creativity into Lunar and the world she resides in. The first step was redesigning her.

First I thought maybe I could keep the catsuit and do a different style of cut and change the colors. While I liked the monochromatic idea of the pattern, the clothes were still not hitting it for me. I then thought about giving her more skin. I was getting on the right track!

After more trials and doodles, I finally made a good design! It is reminiscent of her original design, but a bit more in line with her character. I was so happy with this design that I decided to give her more outfits for me to choose from. I like to think she has a massive closet. She has some style rules though: arms covered, prefers to show off their belly button, always wears shades.

I actually started on some illustrations! I'll finish them at some point lol

And that's it!

I'll touch more on her story when I discuss the Stream Lore a little bit later! Thank you for reading~

0 notes

Text

Weird metaphors and weird jewelry: The making of 'Candy Chaos'

IN THE BEGINNING (of the bracelet), THERE WAS THE WORD (no, not that one!) AND THE WORD WAS 'meh'.

…Uh, I suppose that 'meh' doesn't sound like a word to stir creativity. You'll have to bear with me. I will explain.

---

Whether or not you like it, I hope the result doesn't make you think 'meh'.

---

Last month, I was perusing a thrift store when I heard a siren calling. Following its song, I found a box of wooden beads. Given that my self control when it comes to beads is even worse than my self control when it comes to chocolate, it should come as no surprise that I took it home. I was pleased by the wooden beads…I was less taken by the rest of its contents, which either hid in cracks and crannies of the containers or had the audacity to flash their imperfection.

---

What I thought that I looked like when getting the beads.

What I probably actually looked like.

---

Some were nice enough, like the little green and clear glass ones or the simple but ever useful gold beads. Unfortunately there weren't enough to make more than a few pairs of earrings, and sometimes not even that. Others were like the hot pink clay beads, massively inconsistent in length. Finally, there were *gasp* plastic beads whose bright white windmill shapes refused to be ignored.

So with there not being many of the beads I did like and my hoity toity side sticking her nose up at the inferiority of the rest of the stowaways, I placed them all firmly in the 'meh' category. The logical thing to do at this point would have been to sell/give away/throw out the suckers, but the only reason that beaders aren't a staple of the show 'Hoarders' is that our obsessions tend to be vast in number but small in size. So into My Hoard of Doom (TM) they went.

Weeks later, I sat down at my worktable (which is totally not a coffee table that looks like it was hit by a tornado) determined to make something. As to what, I wasn't entirely sure. I didn't have a massive amount of time before bed, so anything too intricate was out. My usual standby was to dig around in the bead soup and make earrings. However, not that long ago, I had dealt with such earrings (I'll post about them later), which meant I had spent a fair bit of time:

1. Writing about them.

2. Photographing them. (This takes too long as my equipment sucks and my training is non-existent.)

3. Editing the photographs. (Much like #2 when it comes to time.)

4. Noticing then freaking out about some minuscule flaws apparent in the photos.

5. Trying to convince myself that the flaws were barely noticeable and thus could be left alone.

6. Failing at #5 and thus absolutely HAVING TO fix said tiny flaws.

7. Realising that as the items had (minusculely) changed, I HAD TO go back to step #2. This cycle of course happened multiple times.

Is it so surprising that I felt earring-tated at the idea of making more?

However, there was a coil of memory wire nearby and sleepy minds can sometimes be a help instead of a hindrance. (Creativity of dreams seeping in?) In this state, I remembered a Facebook post asking if others ever made bracelets from random beads and my sleepy mind waved a lethargic hand at the beads I had formerly scorned.

Memory wire bracelet with random stuff? Sure, why not?

---

You'll need this to know what the heck I am talking about.

---

I recommend you reference the previous image to know what I am about to talk about.

Randomness is something of an enemy of mine when it comes to design, or rather I view it as an enemy, while it is perfectly cordial to me. Like when I made the necklace 'Reflection on Asymmetry', going completely random was out of the question. I spent a good while taking beads on and off the long piece of memory wire that would be the bracelet's backbone until I felt I could produce a vague pattern to the piece. The sort that would allow me to get rid of my odd ball beads and prevent the bracelet having large boring sections, while keeping a feeling of spontaneity. It all came down to the Six Commandments:

Keep thy beads in the same relative colour family or else thy eyes will meltth. (Keep colours that more or less match or it will be ugly.)

At the Beginning and the Endth of thy memory wire make surest that there is a bead, neither small nor large, to mark the path. (Use a medium bead at the beginning and end of the bracelet length.)

Use thy smallest beads the most for they are a multitude and of those the pink most as they are as a legion to a single warrior. (You have too many small beads, so use them. Most of them are pink, so make use of them the most.)

For every fourth to eighth beads have oneth large bead or twoth with a few small beads in between. (Every 4-8 small beads have one large bead or two large beads with a couple small ones in between.)

Where there be loops at the ends of thy bracelet, let their little dangly-thingies joined in marriage to them be as unique as their fellows upon the path. (For the loops at the ends of the bracelets, have the little dangly-thingies attached to them be random-ish like the main bracelet.)

Above all else, if thou art going to follow the spirit of chaos in thy endeavour, then embrace it fully with all thy heart. This may lead to glory. (Okay, if I'm going to do weird things with this bracelet, I might as well make sure people know I am doing it on purpose. Those one or two unique beads that have been sitting around for months doing nothing? They're in. The white plastic windmills you poo-pooed and said belonged nowhere? I guess that means they belong here. NO obsessive matching!)

These Commandments pretty much tell the story of making the bracelet except for two things.

Firstly, for the dangly-thingies on the ends (I really need a better name for those), I didn't want to attach them directly to the bracelet loops. The loops, being memory wire, are really hard to manipulate, so I was unsure of how good a job I could do on them. That combined with the thinness of the pins that were used for the dangly-thingies (there's got to be a better name) made me worry that they would fall off. So instead of attaching them directly or using a jump ring to connect them, I used split rings (aka. that-key-chain-thingy), as they were wider and thicker than the jump rings I had. This plus the multiple loops of the split rings seemed to fit with the busy look of the rest of the bracelet.

Secondly, you see the big yellow bead on the main bracelet? Its hole was really big. In fact it was only a little bigger than the pink beads, thus it might slide over them and the other small beads. The size difference was small enough that it hadn't happened yet, but I was worried I would get a grumpy customer who didn't like that there was suddenly a section of bare wire in their bracelet.

Solution? I'm tempted to say I did something cool and sophisticated but as I keep on using the so-called word 'dangly-thingy', I doubt you'd believe me anyways. I'll be honest: I spent five minutes running pink beads along the wire then shoving them into the yellow bead's hole while grunting like a pig. Once the hole was full, sliding or no sliding, I was sure there wouldn't be a sudden patch of bare wire.

Now you know how I made 'Candy Chaos' and it was really, really fun. Commandments or no, I mostly just randomly picked up and used beads. It made for an oasis of spontaneity in a desert of order. I even found myself liking most of the things I was originally 'meh' on. The variation of colour, texture and shape made me think of a field of wildflowers in the spring. The white plastic windmills (or is it daisies now?) in particular added to this. Plus when you line two up, they act like gears, one spinning making the other one spin as well; thus, the bracelet's wearer has something to fidget with.

I write this after having a few days to process it. My final thoughts are that, other than missing my bedtime, it was a success. I tried something new, used up a lot of beads that I normally would have ignored and got something I liked out of it. I will probably make more of this style.

. . . Also the word 'dangly-thingy' is starting to grow on me.

---

The bracelet 'Candy Chaos' is now for sale at my Etsy store.

3 notes


Photo

Look How Far We’ve Come

Welcome to Year of the Pony’s second regular series (aside from the editorials), The Elements of MLP! Every month, we’ll be looking at one aspect of Friendship is Magic in some degree of depth to explore all the different parts that go together to make MLP what it is.

Part of the reason I started this year-long event was to get to the bottom of why I love this show so much, so maybe looking it piece by piece will help me appreciate the whole better.

This month, we’ve got the Element of Design and Animation, one of my personal favourites.

And, I’m not alone! So let’s go through the whole gosh darn process (or at least as many stages as I’ve got something to say on)!

Design

In the beginning, there was Lauren Faust.

If you only know one name from behind-the-scenes, it’s Lauren Faust. As MLP:FIM’s creator, she led a lot of the development that made the show what it is visually (and in other areas, too, of course).

So, yes, Faust had an immeasurable influence on the design choices. And, because of her genuine love for My Little Pony as a kid, so did previous generations of MLP.

Figures that AJ would be the one to change the least during development (never change, AJ), but here they are: The old school Mane 6! As you probably know, all based on characters from the franchise’s history!

You’ll notice that even though the colours themselves varied, the pastel colour-scheme overall was there from the start, and it’s largely because of the franchise's roots in the 80s.

*Correction: Silver Rain on EQD pointed out to me that the cartoon commonly thought of as G2, My Little Pony Tales, is actually still G1. There is no G2 cartoon (generations are defined by new sets of the toys, not the cartoons or feature films, so even though Pony Tales is very distinct from the first two movies, it’s still G1)

The closest thing to a G2 anything besides the toys is a video game, but for this analysis I decided to keep it strictly to television and movies. So, enjoy knowing G2 is a lie!

See how the first two generations looked a bit washed out? And the next two are sort of over-saturated? In addition to looking cleaner because of digital animation vs. traditional (neither is better), G4 strikes a balance between the different colour-palettes of the franchise in a really comforting, visually satisfying way.

Soothing is probably the best word, which expertly matches the breezy, light-hearted, and loving tone of the show.

So, anyway, even after development went on, as changes were made, notes were taken, and the world we’ve come to know took shape, the show’s roots still have a surprisingly big influence on its visuals.

And my god, can we all take a second to appreciate these designs?

Every element---from characters, to creatures, to backgrounds, to that storybook thing they keep doing---makes this show a visual treat. For several reasons:

Cute - the ponies are round; there are very few sharp points in their designs and that combined with the classic big eyes + cute tum + small nose and mouth = cute, makes them even freaking cuter

Instantly Recognizable - there’s a rule popularized by Simpsons creator Matt Groening that if you can recognize a character in silhouette, that’s a good design; even besides the fact that they’re ponies and you could tell them apart from human characters, there’s absolutely no doubt who’s who---I could even name the side characters if you tested me

Conveying Personality - You can tell most characters’ personalities from the minute they walk on screen---take Starlight Glimmer, for instance; the first shot we see of her, she’s got kind of a 60s hairstyle, so before she even opens her mouth, you can guess she’s either got some old-fashioned ideas, or more accurately, she’s in charge of this weirdly cheery village (ever watch, well, pretty much anything from the 60s? Those fake smiles in Starlight’s village would fit right at home); her false belief about what will make ponies truly happy is reflected in her design, and not only that, she used to have straight bangs to instantly cue to the viewer that this is a character who’s going to parallel Twilight (especially when she’s screaming about ‘creating Harmony’...)

Simplistic - Most of these designs use thick outlines and soft colours to emphasize just how cute the horses are; in relation to the backgrounds and other creatures, they easily draw the eye because the other elements are more detailed but very rarely have outlines.

Asymmetry - You’d think it would be more satisfying to an audience to see characters with symmetrical designs, but not so, and this is reflected in every single character design in the show (as well as most building designs: the Golden Oaks Library, Canterlot Castle, Cloudsdale, etc.); even Twilight, whose bangs are cut straight across (according to the EQG universe), has a curve to her mane, highlights to the left, and a small part on one side

All of these aspects and probably more give all the designs a strong visual impact, while also making them cute as heck.

I don’t think I can say it better than this: these designs are so sweet, distinctive, and inviting that the fandom latched onto background characters and created entire backstories and personas out of them. It’s like Boba Fett from the Star Wars franchise---in reality, he’s barely in the films, but because he managed to grab so many people’s attention with his surprisingly memorable design, he’s one of the most popular characters!

And, you know, I’m not going to lie to you. Part of the reason this show is so comforting to watch is just the soft colours and incredibly cute characters. It really is just soothing to look at. But there’s more to the visuals than just cute, pretty ponies.

Movement

One of the things I remember hearing back when I first came into the fandom in early season 2 was that some fans were downright shocked that MLP was animated in Flash--- a program so cheap and hard to animate in fluidly that most shows and projects that come out of it tend to look ... shoddy.

Which isn’t always the case, of course, good shows have been animated there, but I would say that, on the whole, shows animated in Flash seem to have great designs but less focus on fluid movement.

Like, even if you’re not an animation nerd, if I list a bunch of shows animated with Flash, you might notice a kind of pattern in how they move (of the ones you know):

Foster’s Home for Imaginary Friends

Johnny Test

Total Drama Island

Hi Hi Puffy AmiYumi

Yin Yang Yo!

Kappa Mikey

Dan Vs.

Archer

The Gravity Falls pilot (the rest of the show was animated in ToonBoom Harmony, the same animation program My Little Pony: The Movie is being animated in!)

It’s a hard quality to describe for me, but the absolute best example probably is the Gravity Falls pilot:

[Embedded YouTube video]

See how everything looks exceedingly flat (even for 2D animation)? And how each movement tends to have this weird, unnecessary (for lack of a better word) pop to it?

That’s what Flash is known for.

I’d like to take a second to say it of course doesn’t make all of those shows bad at all; and in fact, there are a number of shows besides MLP that actually use Flash well (to the point that you wouldn’t know it’s animated in Flash).

Bob’s Burgers, Archer, Sym-Bionic Titan, The Amazing World of Gumball, even Fairly Odd Parents as of the 10th season.

I think it’s mostly just the fact that at the time we didn’t know many shows that could look this good and still use Flash.

The exciting thing is that MLP doesn’t move like that. Season 1 had its moments of animation flubs, sure, but I’ve re-watched it recently and it is animated pretty well.

A lot of moments will have a snap for emphasis (like, the manes will move how they’re supposed to), but it’s handled in a natural way that doesn’t distract from the movements we’re actually supposed to focus on.

And, no, it’s not the most sophisticated, beautiful animation out there, but it can run the gamut from snappy comedic timing to slower, dramatic scenes.

I think the only problem I’ve really noticed with G4 animation is whenever characters run in Equestria Girls.

I know, that’s really specific. I don’t even have a problem when they walk, it’s just when they’re running that it looks the most like the cheap Flash animation. It’s a hard quality for me to describe, but rewatch a scene where they have to run at all, I’m serious. It’s almost a pet peeve at this point.

It’s a hazard of the genre, I suppose. Like most Western animation, MLP focuses most of its time and energy on expression and the smaller movements as opposed to big, involved battle sequences or choreographed action (the only big battle sequence is the Dragon Ball Z Tirek fight, which was done super well, but is still a rarity in this show).

Meaning, some of the most unique bits of animation come in the form of expressions and reactions.

I always love these. Not for the meme potential (although... ), but because I know every time I see a face like that the animators went out of their way to make something distinct and insanely expressive.

And that’s not the only way they bring the visuals together. I could probably go on and on about things like the shading and lighting, the staging, the intelligent use of background to hide gags or references or just add that much more depth to a scene...

But, seriously, I’ve already eaten up so much of your dash.

I will say this, though. Friendship is Magic’s style is more gorgeous, fitting, and creative than you might think at first. It’s why the artistic side of the community exploded, and still hasn’t stopped growing. And the continual improvement in this element alone is enough to get me excited for the next season. I’ll admit it, it’s one of the biggest reasons I always look forward to seeing more.

Woo! That’s a wrap for this Elements of MLP, but I’ll have a new one for you every month this year! In the meantime, you can always check out the editorials, or, you know, whatever. I’m not gonna tell you how to live.

Year of the Pony

Header Image Only Possible Thanks to...

Elements by SpiritoftheWolf Elements by TechRainbow

Two really talented vector artists that were awesome enough to make this stuff! You’ll be seeing their names at least 12 times this year, so might as well check ‘em out now!

Pretty Pastel Ponies Practically Prancing ... Politely? Parallel? Perfectly?

#yearofthepony#animation#mlp#my little pony#analysis#friendship is magic#mlp:fim#mlp: fim#My Little Pony: Friendship is Magic#twilight sparkle#rainbow dash#pinkie pie#fluttershy#applejack#rarity#princess celestia#princess luna#sunset shimmer#starlight glimmer#discord#mlp s7


Interpreting and Decoding Mass Media Texts: KUWTK (Blog 2 Week 7)

Time to keep up with the Kardashians!

Who remembers this PAPER Magazine shoot of Kim Kardashian that #broketheinternet?

This image went viral in 2014 and became an on-going discussion globally. Several audiences believed this shoot was inappropriate as Kardashian had just recently become a mother and was expected to be “modest”, while other audience members celebrated the fact that she was able to express her sexuality and share her body confidence with the world post-birth. How can so many people interpret this image differently? It all has to do with semiotics, “the study of significant signs in society” (Sullivan, 2013, p. 135). You and I can have completely different understandings of this image. Professor Good discussed this concept in lecture on October 24th. Professor Good focused on the cultural studies of scholar Stuart Hall that explored this approach of how individuals come to understand reality through the use of signs and denotations of media texts.

In seminar on October 29th, we further discussed the studies of Stuart Hall as well as Saussure’s dyadic model of a sign. Signs are defined by the interaction of the signifier (the form of the sign) and the signified (the concept/idea that the signifier represents) (p. 137). In this PAPER Magazine example, the signifier consists of the actual photographed image/edit of Kim Kardashian, whereas the signified is the message/concept that PAPER Magazine’s creative director and Kim wanted to share with the audience through the cover. How can we as audiences 100% understand the intended message? The answer is, we can’t always. Audiences produce different meanings of things based on what they already know and believe. This is why some individuals interpreted the cover as a strong, confident mother embracing her body and sexuality while others interpreted it as inappropriate and “too sexual”, not fully understanding its meaning.

This idea conveys the encoding and decoding of media messages. Stuart Hall “pointed out that there is always the potential for asymmetry between the message producer and audience” (Sullivan, 2013, p. 141). Have you ever posted something on Twitter or made an Instagram caption that audiences aren’t meant to take literally? Or that wouldn’t entirely make sense unless the reader knew its backstory or connotative meaning? I have plenty of times, and most times I don’t even realize because I kind of just assume that most people will understand my intended meaning in today’s digital age. Here’s an example of denotation and connotation from Keeping Up with the Kardashians:

Throughout the series, the Kardashians are heard saying “Bible” a lot. Usually, this happens in the context of trying to reassure someone, or for example, an instance where Khloe is calling Kourtney’s bluff. “Bible” is used as a Kardashian way of saying “I swear to God”. As a fan or regular viewer of the show, you would understand this frequent saying of theirs on a connotative level of meaning – ideas/feelings associated with the word. If you’re a first time viewer and you randomly hear Kim saying “Bible” or see a meme of the phrase online, you’re more likely to react to the denotative level of meaning – the literal holy book, which may cause a bit of confusion. Millie Bobby Brown, star of Stranger Things, went on The Tonight Show Starring Jimmy Fallon and expressed her obsession with the Kardashians’ “special language”, including the connotative term “Bible”:

[Time stamp 1:25]

youtube

In Chapter 6 of the textbook, we learn that media texts are polysemic, meaning that they are capable of being interpreted in distinctly different ways based on personal experiences and cultural awareness (Sullivan, 2013, p. 142). The assigned article for this week by Granelli and Zenor (2016) focused on the polysemic television series Dexter – some audiences view him as a protagonist, some as an antagonist, and some as an anti-hero. In seminar I stated how I view Dexter as an antihero leaning more towards protagonist because of my “cultural knowledge” based on having watched the series and understanding his upbringing. We explored this idea a little deeper in seminar with the three subject positions: Dominant-hegemonic, negotiated and oppositional.

Let’s watch this short YouTube clip from Kylie Jenner’s office tour:

youtube

I’m sure you already know all about it, but I’ll explain just in case. In this clip, Kylie Jenner is waking her daughter up by singing “Rise and Shine”. I’m no vocal expert, but girly might want to invest in some singing lessons for the sake of Stormi (joking! love her). This clip went viral and was especially amusing for online audiences as Jenner was rumoured to be the lead singer of the band Terror Jr. in 2016. Hundreds of memes were created and Kylie Jenner even went as far as creating new merch products designed with the saying “Rise and Shine”. Let’s analyze the three subject positions within this media text:

Dominant/hegemonic position:

Accepts the media message as it is (a funny and viral meme)

Likely to engage with the meme and/or order “rise and shine” merchandise

Negotiated position:

Finds the meme funny, may lightly engage with it online

Unlikely to engage in merch consumption based upon the lens of personal knowledge/experiences:

Memes/trends die out quickly

Not worth it

Kylie shouldn’t profit

Oppositional position:

Resists the dominant meaning of the text in a sense (finds it unfunny)

Understands the connotative meaning behind the text but disagrees with it

Likely to criticize Kylie for profiting off a meme that went viral without her intention or doing

OR likely to believe that Kris/Kylie strategically planned the viral meme

Think about the subject position(s) that you take based on this week’s content. I know a lot of people take an oppositional position when it comes to the Kardashians, but personally, I respect them and find myself more on the dominant/negotiated side of things! These three subject positions are constantly changing for audiences depending on what media texts are being consumed. Cultural knowledge also plays a big role in the subject positions. Through these positions and the study of signs, audiences can begin to reflect on the way that they encode and decode media messages and be more open minded towards other subject positions.

References:

Jennifer Good, Lecture October 24th 2019

Seminar Discussion October 29th 2019

Granelli, S., & Zenor, J. (2016) Decoding “The Code”: Reception theory and moral judgment of Dexter. International Journal of Communication, 10, 5056-5078.

Sullivan, J. (2013). Media Audiences: Effects, users, institutions and power

Images: Twitter, Google, Giphy Images


The facts about Facebook

This is a critical reading of Facebook founder Mark Zuckerberg’s article in the WSJ on Thursday, also entitled The Facts About Facebook.

Yes Mark, you’re right; Facebook turns 15 next month. What a long time you’ve been in the social media business! We’re curious as to whether you’ve also been keeping count of how many times you’ve been forced to apologize for breaching people’s trust or, well, otherwise royally messing up over the years.

It’s also true you weren’t setting out to build “a global company”. The predecessor to Facebook was a ‘hot or not’ game called ‘FaceMash’ that you hacked together while drinking beer in your Harvard dorm room. Your late night brainwave was to get fellow students to rate each other’s attractiveness — and you weren’t at all put off by not being in possession of the necessary photo data to do this. You just took it; hacking into the college’s online facebooks and grabbing people’s selfies without permission.

Blogging about what you were doing as you did it, you wrote: “I almost want to put some of these faces next to pictures of some farm animals and have people vote on which is more attractive.” Just in case there was any doubt as to the ugly nature of your intention.

The seeds of Facebook’s global business were thus sown in a crude and consentless game of clickbait whose idea titillated you so much you thought nothing of breaching security, privacy, copyright and decency norms just to grab a few eyeballs.

So while you may not have instantly understood how potent this ‘outrageous and divisive’ eyeball-grabbing content tactic would turn out to be — oh hai future global scale! — the core DNA of Facebook’s business sits in that frat boy discovery where your eureka Internet moment was finding you could win the attention jackpot by pitting people against each other.

Pretty quickly you also realized you could exploit and commercialize human one-upmanship — gotta catch em all friend lists! popularity poke wars! — and stick a badge on the resulting activity, dubbing it ‘social’.

FaceMash was antisocial, though. And the unpleasant flipside that can clearly flow from ‘social’ platforms is something you continue not being nearly honest or open enough about. Whether it’s political disinformation, hate speech or bullying, the individual and societal impacts of maliciously minded content shared and amplified using massively mainstream tools you control are now impossible to ignore.

Yet you prefer to play down these human impacts; as a “crazy idea”, or by implying that ‘a little’ amplified human nastiness is the necessary cost of being in the big multinational business of connecting everyone and ‘socializing’ everything.

But did you ask the father of 14-year-old Molly Russell, a British schoolgirl who took her own life in 2017, whether he’s okay with your growth vs controls trade-off? “I have no doubt that Instagram helped kill my daughter,” said Russell in an interview with the BBC this week.

After her death, Molly’s parents found she had been following accounts on Instagram that were sharing graphic material related to self-harming and suicide, including some accounts that actively encourage people to cut themselves. “We didn’t know that anything like that could possibly exist on a platform like Instagram,” said Russell.

Without a human editor in the mix, your algorithmic recommendations are blind to risk and suffering. Built for global scale, they get on with the expansionist goal of maximizing clicks and views by serving more of the same sticky stuff. And more extreme versions of things users show an interest in to keep the eyeballs engaged.

So when you write about making services that “billions” of “people around the world love and use” forgive us for thinking that sounds horribly glib. The scales of suffering don’t sum like that. If your entertainment product has whipped up genocide anywhere in the world — as the UN said Facebook did in Myanmar — it’s failing regardless of the proportion of users who are having their time pleasantly wasted on and by Facebook.

And if your algorithms can’t incorporate basic checks and safeguards so they don’t accidentally encourage vulnerable teens to commit suicide you really don’t deserve to be in any consumer-facing business at all.

Yet your article shows no sign you’ve been reflecting on the kinds of human tragedies that don’t just play out on your platform but can be an emergent property of your targeting algorithms.

You focus instead on what you call “clear benefits to this business model”.

The benefits to Facebook’s business are certainly clear. You have the billions in quarterly revenue to stand that up. But what about the costs to the rest of us? Human costs are harder to quantify but you don’t even sound like you’re trying.

You do write that you’ve heard “many questions” about Facebook’s business model. Which is most certainly true but once again you’re playing down the level of political and societal concern about how your platform operates (and how you operate your platform) — deflecting and reframing what Facebook is to cast your ad business as a form of quasi philanthropy; a comfortable discussion topic and self-serving idea you’d much prefer we were all sold on.

It’s also hard to shake the feeling that your phrasing at this point is intended as a bit of an in-joke for Facebook staffers — to smirk at the ‘dumb politicians’ who don’t even know how Facebook makes money.

Y’know, like you smirked…

youtube

Then you write that you want to explain how Facebook operates. But, thing is, you don’t explain — you distract, deflect, equivocate and mislead, which has been your business’ strategy through many months of scandal (that and worse tactics — such as paying a PR firm that used oppo research tactics to discredit Facebook critics with smears).

Dodging is another special power; such as how you dodged repeat requests from international parliamentarians to be held accountable for major data misuse and security breaches.

The Zuckerberg ‘open letter’ mansplain, which typically runs to thousands of blame-shifting words, is another standard issue production from the Facebook reputation crisis management toolbox.

And here you are again, ironically enough, mansplaining in a newspaper; an industry that your platform has worked keenly to gut and usurp, hungry to supplant editorially guided journalism with the moral vacuum of algorithmically geared space-filler which, left unchecked, has been shown, time and again, lifting divisive and damaging content into public view.

The latest Zuckerberg screed has nothing new to say. It’s pure spin. We’ve read scores of self-serving Facebook apologias over the years and can confirm Facebook’s founder has made a very tedious art of selling abject failure as some kind of heroic lack of perfection.

But the spin has been going on for far, far too long. Fifteen years, as you remind us. Yet given that hefty record it’s little wonder you’re moved to pen again — imagining that another word blast is all it’ll take for the silly politicians to fall in line.

Thing is, no one is asking Facebook for perfection, Mark. We’re looking for signs that you and your company have a moral compass. Because the opposite appears to be true. (Or as one UK parliamentarian put it to your CTO last year: “I remain to be convinced that your company has integrity”.)

Facebook has scaled to such an unprecedented, global size exactly because it has no editorial values. And you say again now you want to be all things to all men. Put another way that means there’s a moral vacuum sucking away at your platform’s core; a supermassive ethical blackhole that scales ad dollars by the billions because you won’t tie the kind of process knots necessary to treat humans like people, not pairs of eyeballs.

You don’t design against negative consequences or to pro-actively avoid terrible impacts — you let stuff happen and then send in the ‘trust & safety’ team once the damage has been done.

You might call designing against negative consequences a ‘growth bottleneck’; others would say it’s having a conscience.

Everything standing in the way of scaling Facebook’s usage is, under the Zuckerberg regime, collateral damage — hence the old mantra of ‘move fast and break things’ — whether it’s social cohesion, civic values or vulnerable individuals.

This is why it takes a celebrity defamation lawsuit to force your company to dribble a little more resource into doing something about scores of professional scammers paying you to pop their fraudulent schemes in a Facebook “ads” wrapper. (Albeit, you’re only taking some action in the UK in this particular case.)

Funnily enough — though it’s not at all funny and it doesn’t surprise us — Facebook is far slower and patchier when it comes to fixing things it broke.

Of course there will always be people who thrive with a digital megaphone like Facebook thrust in their hand. Scammers being a pertinent example. But the measure of a civilized society is how it protects those who can’t defend themselves from targeted attacks or scams because they lack the protective wrap of privilege. Which means people who aren’t famous. Not public figures like Martin Lewis, the consumer champion who has his own platform and enough financial resources to file a lawsuit to try to make Facebook do something about how its platform supercharges scammers.

Zuckerberg’s slippery call to ‘fight bad content with more content’ — or to fight Facebook-fuelled societal division by shifting even more of the apparatus of civic society onto Facebook — fails entirely to recognize this asymmetry.

And even in the Lewis case, Facebook remains a winner; Lewis dropped his suit and Facebook got to make a big show of signing over £500k worth of ad credit coupons to a consumer charity that will end up giving them right back to Facebook.

The company’s response to problems its platform creates is to look the other way until a trigger point of enough bad publicity gets reached. At which critical point it flips the usual crisis PR switch and sends in a few token clean up teams — who scrub a tiny proportion of terrible content; or take down a tiny number of fake accounts; or indeed make a few token and heavily publicized gestures — before leaning heavily on civil society (and on users) to take the real strain.

You might think Facebook reaching out to respected external institutions is a positive step. A sign of a maturing mindset and a shift towards taking greater responsibility for platform impacts. (And in the case of scam ads in the UK it’s donating £3M in cash and ad credits to a bona fide consumer advice charity.)

But this is still Facebook dumping problems of its making on an already under-resourced and over-worked civic sector at the same time as its platform supersizes their workload.

In recent years the company has also made a big show of getting involved with third party fact checking organizations across various markets — using these independents to stencil in a PR strategy for ‘fighting fake news’ that also entails Facebook offloading the lion’s share of the work. (It’s not paying fact checkers anything; given the clear conflict that would represent, it obviously can’t.)

So again external organizations are being looped into Facebook’s mess — in this case to try to drain the swamp of fakes being fenced and amplified on its platform — even as the scale of the task remains hopeless, and all sorts of junk continues to flood into and pollute the public sphere.

What’s clear is that none of these organizations has the scale or the resources to fix problems Facebook’s platform creates. Yet it serves Facebook’s purposes to be able to point to them trying.

And all the while Zuckerberg is hard at work fighting to fend off regulation that could force his company to take far more care and spend far more of its own resources (and profits) monitoring the content it monetizes by putting it in front of eyeballs.

The Facebook founder is fighting because he knows his platform is a targeted attack: on individual attention, via privacy-hostile behaviorally targeted ads (his euphemism for this is “relevant ads”); on social cohesion, via divisive algorithms that drive outrage in order to maximize platform engagement; and on democratic institutions and norms, by systematically eroding consensus and the potential for compromise between the different groups that every society is comprised of.

In his WSJ post Zuckerberg can only claim Facebook doesn’t “leave harmful or divisive content up”. He has no defence against Facebook having put it up and enabled it to spread in the first place.

Sociopaths relish having a soapbox so unsurprisingly these people find a wonderful home on Facebook. But where does empathy fit into the antisocial media equation?

As for Facebook being a ‘free’ service — a point Zuckerberg is most keen to impress in his WSJ post — it’s of course a cliché to point out that ‘if it’s free you’re the product’. (Or as the even older saying goes: ‘There’s no such thing as a free lunch’).

But for the avoidance of doubt, “free” access does not mean cost-free access. And in Facebook’s case the cost is both individual (to your attention and your privacy); and collective (to the public’s attention and to social cohesion).

The much bigger question is who actually benefits if “everyone” is on Facebook, as Zuckerberg would prefer. Facebook isn’t the Internet. Facebook doesn’t offer the sole means of communication, digital or otherwise. People can, and do, ‘connect’ (if you want to use such a transactional word for human relations) just fine without Facebook.

So beware the hard and self-serving sell in which Facebook’s 15-year founder seeks yet again to recast privacy as an unaffordable luxury.

Actually, Mark, it’s a fundamental human right.

The best argument Zuckerberg can muster for his goal of universal Facebook usage being good for anything other than his own business’ bottom line is to suggest small businesses could use that kind of absolute reach to drive extra growth of their own.

Though he only provides a few general data-points to support the claim; saying there are “more than 90M small businesses on Facebook” which “make up a large part of our business” (how large?) — and claiming “most” (51%?) couldn’t afford TV ads or billboards (might they be able to afford other online or newspaper ads though?); he also cites a “global survey” (how many businesses surveyed?), presumably run by Facebook itself, which he says found “half the businesses on Facebook say they’ve hired more people since they joined” (but how did you ask the question, Mark?; we’re concerned it might have been rather leading), and from there he leaps to the implied conclusion that “millions” of jobs have essentially been created by Facebook.

But did you control for common causes Mark? Or are you just trying to take credit for others’ hard work because, well, it’s politically advantageous for you to do so?

Whether Facebook’s claims about being great for small business stand up to scrutiny or not, if people’s fundamental rights are being wholesale flipped for SMEs to make a few extra bucks that’s an unacceptable trade off.

“Millions” of jobs suggestively linked to Facebook sure sounds great — but you can’t and shouldn’t overlook disproportionate individual and societal costs, as Zuckerberg is urging policymakers to here.

Let’s also not forget that some of the small business ‘jobs’ that Facebook’s platform can take definitive and major credit for creating include the Macedonian teens who became hyper-adept at seeding Facebook with fake U.S. political news around the 2016 presidential election. But presumably those aren’t the kind of jobs Zuckerberg is advocating for.

He also repeats the spurious claim that Facebook gives users “complete control” over what it does with personal information collected for advertising.

We’ve heard this time and time again from Zuckerberg and yet it remains pure BS.

WASHINGTON, DC – APRIL 10: Facebook co-founder, Chairman and CEO Mark Zuckerberg concludes his testimony before a combined Senate Judiciary and Commerce committee hearing in the Hart Senate Office Building on Capitol Hill April 10, 2018 in Washington, DC. Zuckerberg, 33, was called to testify after it was reported that 87 million Facebook users had their personal information harvested by Cambridge Analytica, a British political consulting firm linked to the Trump campaign. (Photo by Win McNamee/Getty Images)

Yo Mark! First up we’re still waiting for your much trumpeted ‘Clear History’ tool. You know, the one you claimed you thought of under questioning in Congress last year (and later used to fend off follow up questions in the European Parliament).

Reportedly the tool is due this Spring. But even when it does finally drop it represents another classic piece of gaslighting by Facebook, given how it seeks to normalize (and so enable) the platform’s pervasive abuse of its users’ data.

Truth is, there is no master ‘off’ switch for Facebook’s ongoing surveillance. Such a switch — were it to exist — would represent a genuine control for users. But Zuckerberg isn’t offering it.

Instead his company continues to groom users into accepting being creeped on by offering pantomime settings that boil down to little more than privacy theatre — if they even realize they’re there.

‘Hit the button! Reset cookies! Delete browsing history! Keep playing Facebook!’

An interstitial reset is clearly also a dilute decoy. It’s not the same as being able to erase all extracted insights Facebook’s infrastructure continuously mines from users, using these derivatives to target people with behavioral ads; tracking and profiling on an ongoing basis by creeping on browsing activity (on and off Facebook), and also by buying third party data on its users from brokers.

Multiple signals and inferences are used to flesh out individual ad profiles on an ongoing basis, meaning the files are never static. And there’s simply no way to tell Facebook to burn your digital ad mannequin. Not even if you delete your Facebook account.

Nor, indeed, is there a way to get a complete read out from Facebook on all the data it’s attached to your identity. Even in Europe, where companies are subject to strict privacy laws that place a legal requirement on data controllers to disclose all personal data they hold on a person on request, as well as who they’re sharing it with, for what purposes, under what legal grounds.

Last year Paul-Olivier Dehaye, the founder of PersonalData.IO, a startup that aims to help people control how their personal data is accessed by companies, recounted in the UK parliament how he’d spent years trying to obtain all his personal information from Facebook — with the company resorting to legal arguments to block his subject access request.

Dehaye said he had succeeded in extracting a bit more of his data from Facebook than it initially handed over. But it was still just a “snapshot”, not an exhaustive list, of all the advertisers who Facebook had shared his data with. This glimpsed tip implies a staggeringly massive personal data iceberg lurking beneath the surface of each and every one of the 2.2BN+ Facebook users. (Though the figure is likely even more massive because it tracks non-users too.)

Zuckerberg’s “complete control” wording is therefore at best self-serving and at worst an outright lie. Facebook’s business has complete control of users by offering only a superficial layer of confusing and fiddly, ever-shifting controls that demand continued presence on the platform to use them, and ongoing effort to keep on top of settings changes (which are always, to a fault, privacy hostile), making managing your personal data a life-long chore.

Facebook’s power dynamic puts the onus squarely on the user to keep finding and hitting the reset button.

But this too is a distraction. Resetting anything on its platform is largely futile, given Facebook retains whatever behavioral insights it already stripped off of your data (and fed to its profiling machinery). And its omnipresent background snooping carries on unchecked, amassing fresh insights you also can’t clear.

Nor does Clear History offer any control for the non-users Facebook tracks via the pixels and social plug-ins it’s larded around the mainstream web. Zuckerberg was asked about so-called shadow profiles in Congress last year — which led to this awkward exchange where he claimed not to know what the phrase refers to.

EU MEPs also seized on the issue, pushing him to respond. He did so by attempting to conflate surveillance and security — by claiming it’s necessary for Facebook to hold this data to keep “bad content out”. Which seems a bit of an ill-advised argument to make given how badly that mission is generally going for Facebook.

Still, Zuckerberg repeats the claim in the WSJ post, saying information collected for ads is “generally important for security and operating our services” — using this to address what he couches as “the important question of whether the advertising model encourages companies like ours to use and store more information than we otherwise would”.

So, essentially, Facebook’s founder is saying that the price for Facebook’s existence is pervasive surveillance of everyone, everywhere, with or without your permission.

Though he doesn’t express that ‘fact’ as a cost of his “free” platform. RIP privacy indeed.

Another pertinent example of Zuckerberg simply not telling the truth when he wrongly claims Facebook users can control their information vis-a-vis his ad business — an example which also happens to underline how pernicious his attempts to use “security” to justify eroding privacy really are — bubbled into view last fall, when Facebook finally confessed that mobile phone numbers users had provided for the specific purpose of enabling two-factor authentication (2FA) to increase the security of their accounts were also used by Facebook for ad targeting.

A company spokesperson told us that if a user wanted to opt out of the ad-based repurposing of their mobile phone data they could use non-phone number based 2FA — though Facebook only added the ability to use an app for 2FA in May last year.

What Facebook is doing on the security front is especially disingenuous BS in that it risks undermining security practice by bundling a respected tool (2FA) with ads that creep on people.

And there’s plenty more of this kind of disingenuous nonsense in Zuckerberg’s WSJ post — where he repeats a claim we first heard him utter last May, at a conference in Paris, when he suggested that following changes made to Facebook’s consent flow, ahead of updated privacy rules coming into force in Europe, the fact European users had (mostly) swallowed the new terms, rather than deleting their accounts en masse, was a sign that a majority of people approved of “more relevant” (i.e. more creepy) Facebook ads.

Au contraire, it shows nothing of the sort. It simply underlines the fact Facebook still does not offer users a free and fair choice when it comes to consenting to their personal data being processed for behaviorally targeted ads — despite free choice being a requirement under Europe’s General Data Protection Regulation (GDPR).

If Facebook users are forced to ‘choose’ between being creeped on or deleting their account on the dominant social service where all their friends are, it’s hardly a free choice. (And GDPR complaints have been filed over this exact issue of ‘forced consent’.)

Add to that, as we said at the time, Facebook’s GDPR tweaks were lousy with manipulative, dark pattern design. So again the company is leaning on users to get the outcomes it wants.

It’s not a fair fight, any which way you look at it. But here we have Zuckerberg, the BS salesman, trying to claim his platform’s ongoing manipulation of people already enmeshed in the network is evidence for people wanting creepy ads.

The truth is that most Facebook users remain unaware of how extensively the company creeps on them (per this recent Pew research). And fiddly controls are of course even harder to get a handle on if you’re sitting in the dark.

Zuckerberg appears to concede a little ground on the transparency and control point when he writes that: “Ultimately, I believe the most important principles around data are transparency, choice and control.” But all the privacy-hostile choices he’s made; and the faux controls he’s offered; and the data mountain he simply won’t ‘fess up to sitting on show, beyond reasonable doubt, that the company cannot and will not self-regulate.

If Facebook is allowed to continue setting its own parameters and choosing its own definitions (for “transparency, choice and control”) users won’t have even one of the three principles, let alone the full house, as well they should. Facebook will just keep moving the goalposts and marking its own homework.

You can see this in the way Zuckerberg fuzzes and elides what his company really does with people’s data; and how he muddies and muddles uses for the data — such as by saying he doesn’t know what shadow profiles are; or claiming users can download ‘all their data’; or that ad profiles are somehow essential for security; or by repurposing 2FA digits to personalize ads too.

How do you try to prevent the purpose limitation principle being applied to regulate your surveillance-reliant big data ad business? Why, by mixing the data streams of course! And then trying to sow confusion among regulators and policymakers by forcing them to unpick your mess.

Much like Facebook is forcing civic society to clean up its messy antisocial impacts.

Europe’s GDPR is focusing the conversation, though, and targeted complaints filed under the bloc’s new privacy regime have shown they can have teeth and so bite back against rights incursions.

But before we put another self-serving Zuckerberg screed to rest, let’s take a final look at his description of how Facebook’s ad business works. Because this is also seriously misleading. And cuts to the very heart of the “transparency, choice and control” issue he’s quite right is central to the personal data debate. (He just wants to get to define what each of those words means.)

In the article, Zuckerberg claims “people consistently tell us that if they’re going to see ads, they want them to be relevant”. But who are these “people” of which he speaks? If he’s referring to the aforementioned European Facebook users, who accepted updated terms with the same horribly creepy ads because he didn’t offer them any alternative, we would suggest that’s not a very affirmative signal.

Now if it were true that a generic group of ‘Internet people’ were consistently saying anything about online ads, the loudest message would most likely be that they don’t like them. Click-through rates are fantastically small. Hence, also, the many people using ad blocking tools. (Growth in usage of ad blockers has also occurred in parallel with the increasing incursions of the adtech industrial surveillance complex.)

So Zuckerberg’s logical leap to claim users of free services want to be shown only the most creepy ads is really a very odd one.

Let’s now turn to Zuckerberg’s use of the word “relevant”. As we noted above, this is a euphemism. It conflates many concepts but principally it’s used by Facebook as a cloak to shield and obscure the reality of what it’s actually doing (i.e. privacy-hostile people profiling to power intrusive, behaviourally microtargeted ads) in order to avoid scrutiny of exactly those creepy and intrusive Facebook practices.

Yet the real sleight of hand is how Zuckerberg glosses over the fact that ads can be relevant without being creepy. Because ads can be contextual. They don’t have to be behaviorally targeted.

Ads can be based on — for example — a real-time search/action plus a user’s general location. Without needing to operate a vast, all-pervasive privacy-busting tracking infrastructure to feed open-ended surveillance dossiers on what everyone does online, as Facebook chooses to.

And here Zuckerberg gets really disingenuous because he uses a benign-sounding example of a contextual ad (the example he chooses contains an interest and a general location) to gloss over a detail-light explanation of how Facebook’s people tracking and profiling apparatus works.

“Based on what pages people like, what they click on, and other signals, we create categories — for example, people who like pages about gardening and live in Spain — and then charge advertisers to show ads to that category,” he writes, with that slipped in reference to “other signals” doing some careful shielding work there.

Other categories that Facebook’s algorithms have been found ready and willing to accept payment to run ads against in recent years include “jew-hater”, “How to burn Jews” and “Hitler did nothing wrong”.

Funnily enough Zuckerberg doesn’t mention those actual Facebook microtargeting categories in his glossy explainer of how its “relevant” ads business works. But they offer a far truer glimpse of the kinds of labels Facebook’s business sticks on people.

As we wrote last week, the case against behavioral ads is stacking up. Zuckerberg’s attempt to spin the same self-serving lines should really fool no one at this point.

Nor should regulators be derailed by the lie that Facebook’s creepy business model is the only version of adtech possible. It’s not even the only version of profitable adtech currently available. (Contextual ads have made Google-alternative search engine DuckDuckGo profitable since 2014, for example.)

Simply put, adtech doesn’t have to be creepy to work. And ads that don’t creep on people would give publishers greater ammunition to sell ad-block-using readers on whitelisting their websites. A new generation of people-sensitive startups are also busy working on new forms of ad targeting that bake in privacy by design.

And with legal and regulatory risk rising, intrusive and creepy adtech that demands the equivalent of ongoing strip searches of every Internet user on the planet really looks to be on borrowed time.

Facebook’s problem is it scrambled for big data and, finding it easy to suck up tonnes of the personal stuff on the unregulated Internet, built an antisocial surveillance business that needs to capture both sides of its market — eyeballs and advertisers — and keep them buying into an exploitative and even abusive relationship for its business to keep minting money.

Pivoting that tanker would certainly be tough, and in any case who’d trust a Zuckerberg who suddenly proclaimed himself the privacy messiah?

But it sure is a long way from ‘move fast and break things’ to trying to claim there’s only one business model to rule them all.


The facts about Facebook

This is a critical reading of Facebook founder Mark Zuckerberg’s article in the WSJ on Thursday, also entitled The Facts About Facebook.

Yes Mark, you’re right; Facebook turns 15 next month. What a long time you’ve been in the social media business! We’re curious as to whether you’ve also been keeping count of how many times you’ve been forced to apologize for breaching people’s trust or, well, otherwise royally messing up over the years.

It’s also true you weren’t setting out to build “a global company”. The predecessor to Facebook was a ‘hot or not’ game called ‘FaceMash’ that you hacked together while drinking beer in your Harvard dorm room. Your late night brainwave was to get fellow students to rate each other’s attractiveness — and you weren’t at all put off by not being in possession of the necessary photo data to do this. You just took it; hacking into the college’s online facebooks and grabbing people’s selfies without permission.

Blogging about what you were doing as you did it, you wrote: “I almost want to put some of these faces next to pictures of some farm animals and have people vote on which is more attractive.” Just in case there was any doubt as to the ugly nature of your intention.

The seeds of Facebook’s global business were thus sown in a crude and consentless game of clickbait whose idea titillated you so much you thought nothing of breaching security, privacy, copyright and decency norms just to grab a few eyeballs.

So while you may not have instantly understood how potent this ‘outrageous and divisive’ eyeball-grabbing content tactic would turn out to be — oh hai future global scale! — the core DNA of Facebook’s business sits in that frat boy discovery where your eureka Internet moment was finding you could win the attention jackpot by pitting people against each other.

Pretty quickly you also realized you could exploit and commercialize human one-upmanship — gotta catch em all friend lists! popularity poke wars! — and stick a badge on the resulting activity, dubbing it ‘social’.

FaceMash was antisocial, though. And the unpleasant flipside that can clearly flow from ‘social’ platforms is something you continue to be not nearly honest or open enough about. Whether it’s political disinformation, hate speech or bullying, the individual and societal impacts of maliciously minded content shared and amplified using massively mainstream tools you control are now impossible to ignore.

Yet you prefer to play down these human impacts; as a “crazy idea”, or by implying that ‘a little’ amplified human nastiness is the necessary cost of being in the big multinational business of connecting everyone and ‘socializing’ everything.

But did you ask the father of 14-year-old Molly Russell, a British schoolgirl who took her own life in 2017, whether he’s okay with your growth vs controls trade-off? “I have no doubt that Instagram helped kill my daughter,” said Russell in an interview with the BBC this week.

After her death, Molly’s parents found she had been following accounts on Instagram that were sharing graphic material related to self-harming and suicide, including some accounts that actively encourage people to cut themselves. “We didn’t know that anything like that could possibly exist on a platform like Instagram,” said Russell.

Without a human editor in the mix, your algorithmic recommendations are blind to risk and suffering. Built for global scale, they get on with the expansionist goal of maximizing clicks and views by serving more of the same sticky stuff. And more extreme versions of things users show an interest in to keep the eyeballs engaged.

So when you write about making services that “billions” of “people around the world love and use” forgive us for thinking that sounds horribly glib. The scales of suffering don’t sum like that. If your entertainment product has whipped up genocide anywhere in the world — as the UN said Facebook did in Myanmar — it’s failing regardless of the proportion of users who are having their time pleasantly wasted on and by Facebook.

And if your algorithms can’t incorporate basic checks and safeguards so they don’t accidentally encourage vulnerable teens to commit suicide you really don’t deserve to be in any consumer-facing business at all.

Yet your article shows no sign you’ve been reflecting on the kinds of human tragedies that don’t just play out on your platform but can be an emergent property of your targeting algorithms.

You focus instead on what you call “clear benefits to this business model”.

The benefits to Facebook’s business are certainly clear. You have the billions in quarterly revenue to stand that up. But what about the costs to the rest of us? Human costs are harder to quantify but you don’t even sound like you’re trying.