#High-throughput Sequencing


#lncRNA expression#follicular fluid#exosomes#obesity#polycystic ovary syndrome#PCOS#high-throughput sequencing#gene expression profiling#molecular mechanisms#biomarkers#metabolic syndrome#reproductive health#fertility research#ovarian function#non-coding RNA#genomics#endocrine disorders#therapeutic targets#diagnostic tools#personalized medicine#Youtube


Tech Breakdown: What Is a SuperNIC? Get the Inside Scoop!

Generative AI is the latest development in the rapidly evolving digital realm, and the SuperNIC, a relatively new term, names one of the revolutionary inventions that make it feasible.

What Is a SuperNIC?

To accelerate hyperscale AI workloads on Ethernet-based clouds, a new class of network accelerators called SuperNICs was created. Using remote direct memory access (RDMA) over Converged Ethernet (RoCE), a SuperNIC provides extremely fast GPU-to-GPU network connectivity at speeds of up to 400Gb/s.

SuperNICs incorporate the following special qualities:

High-speed packet reordering, which ensures that data packets are received and processed in the same order in which they were originally transmitted, keeping the sequential integrity of the data flow intact.

Advanced congestion management, which uses real-time telemetry data and network-aware algorithms to regulate and prevent congestion in AI networks.

Programmable computation on the input/output (I/O) path, which makes it possible to adapt and extend the network architecture in AI cloud data centers.

A low-profile, power-efficient design that handles AI workloads effectively within constrained power budgets.

Optimization for full-stack AI, encompassing system software, communication libraries, application frameworks, networking, computing, and storage.
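Packet reordering happens in SuperNIC hardware, but the underlying idea can be illustrated in software. The sketch below is a minimal reorder buffer, assuming each packet carries a sequence number starting at 0; the `ReorderBuffer` class and its methods are hypothetical and do not reflect NVIDIA's actual design.

```python
from dataclasses import dataclass, field


@dataclass
class ReorderBuffer:
    """Holds out-of-order packets and releases them strictly by sequence number.

    Hypothetical illustration only; not NVIDIA's hardware implementation.
    """
    next_seq: int = 0  # sequence number the consumer expects next
    pending: dict[int, bytes] = field(default_factory=dict)  # early arrivals

    def receive(self, seq: int, payload: bytes) -> list[bytes]:
        """Accept one packet and return every payload that is now in order."""
        if seq < self.next_seq or seq in self.pending:
            return []  # duplicate or already-delivered packet: drop it
        self.pending[seq] = payload
        delivered = []
        # Drain the contiguous run of packets starting at next_seq.
        while self.next_seq in self.pending:
            delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return delivered


buf = ReorderBuffer()
out = []
for seq, data in [(1, b"B"), (0, b"A"), (3, b"D"), (2, b"C")]:
    out.extend(buf.receive(seq, data))
print(out)  # [b'A', b'B', b'C', b'D']
```

Packets that arrive early are parked until the gap before them is filled, so the consumer always sees the original transmission order.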

Recently, NVIDIA revealed the first SuperNIC in the world designed specifically for AI computing, built on the BlueField-3 networking architecture. It is a component of the NVIDIA Spectrum-X platform, which allows for smooth integration with the Ethernet switch system Spectrum-4.

The NVIDIA Spectrum-4 switch system and BlueField-3 SuperNIC work together to provide an accelerated computing fabric optimized for AI applications. Spectrum-X outperforms conventional Ethernet environments by consistently delivering high levels of network efficiency.

“In a world where AI is driving the next wave of technological innovation, the BlueField-3 SuperNIC is a vital cog in the machinery,” said Yael Shenhav, vice president of DPU and NIC products at NVIDIA. “SuperNICs are essential components for enabling the future of AI computing because they guarantee that your AI workloads are executed with efficiency and speed.”

The Changing Environment of Networking and AI

Large language models and generative AI are causing a seismic change in the area of artificial intelligence. These potent technologies have opened up new avenues and made it possible for computers to perform new functions.

GPU-accelerated computing plays a critical role in the development of AI by processing massive amounts of data, training huge AI models, and enabling real-time inference. While this increased computing capacity has created opportunities, it has also put Ethernet cloud networks to the test.

Traditional Ethernet, the internet’s foundational technology, was designed to link loosely coupled applications and provide broad compatibility. It was not designed for the demanding requirements of contemporary AI workloads, which involve rapidly moving large volumes of data, tightly coupled parallel processing, and unusual communication patterns, all of which call for optimized network connectivity.

Basic network interface cards (NICs) were created with interoperability, universal data transfer, and general-purpose computing in mind. They were never intended to handle the special difficulties brought on by the high processing demands of AI applications.

The necessary characteristics and capabilities for effective data transmission, low latency, and the predictable performance required for AI activities are absent from standard NICs. In contrast, SuperNICs are designed specifically for contemporary AI workloads.

Benefits of SuperNICs in AI Computing Environments

Data processing units (DPUs) offer high-throughput, low-latency network connectivity along with many other advanced capabilities. Since their introduction in 2020, DPUs have become increasingly common in cloud computing, mostly because of their ability to offload, accelerate, and isolate computation from data center hardware.

SuperNICs and DPUs share many characteristics and functions; however, SuperNICs are designed specifically to accelerate networks for artificial intelligence.

The performance of distributed AI training and inference communication flows depends heavily on available network bandwidth. With their streamlined design, SuperNICs scale more effectively than DPUs and can deliver 400Gb/s of network bandwidth per GPU.

When GPUs and SuperNICs are matched 1:1 in a system, AI workload efficiency may be greatly increased, resulting in higher productivity and better business outcomes.

SuperNICs are intended solely to accelerate networking for AI cloud computing. As a result, they use less processing power than a DPU, which needs substantial compute to offload applications from a host CPU.

The reduced compute requirements also mean lower power consumption, which is especially important in systems containing up to eight SuperNICs.
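With a 1:1 GPU-to-SuperNIC ratio, a server's aggregate network bandwidth is simple arithmetic. The sketch below assumes an eight-GPU server with one SuperNIC per GPU running at the 400Gb/s per-GPU figure quoted above; the function name is hypothetical, and real throughput will be lower once protocol overhead is counted.

```python
def aggregate_bandwidth(num_gpus: int, gbps_per_nic: float = 400.0) -> tuple[float, float]:
    """Return (total Gb/s, total GB/s) for a server with one SuperNIC per GPU.

    Assumes every NIC runs at line rate; protocol overhead is ignored.
    """
    total_gbps = num_gpus * gbps_per_nic
    total_gbytes = total_gbps / 8  # 8 bits per byte
    return total_gbps, total_gbytes


gbps, gbytes = aggregate_bandwidth(8)
print(f"{gbps:.0f} Gb/s = {gbps / 1000:.1f} Tb/s, about {gbytes:.0f} GB/s")
# 3200 Gb/s = 3.2 Tb/s, about 400 GB/s
```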

Another of the SuperNIC’s unique selling points is its specialized AI networking capabilities. When tightly coupled with an AI-optimized NVIDIA Spectrum-4 switch, it provides optimized congestion control, adaptive routing, and out-of-order packet handling. These technologies accelerate Ethernet-based AI cloud environments.

Transforming cloud computing with AI

The NVIDIA BlueField-3 SuperNIC is essential for AI-ready infrastructure because of its many advantages.

Maximum efficiency for AI workloads: The BlueField-3 SuperNIC is perfect for AI workloads since it was designed specifically for network-intensive, massively parallel computing. It guarantees bottleneck-free, efficient operation of AI activities.

Performance that is consistent and predictable: In multi-tenant data centers, where many jobs run concurrently, the BlueField-3 SuperNIC ensures that each job and tenant is isolated, predictable, and unaffected by other network operations.

Secure multi-tenant cloud infrastructure: Security is a top priority for data centers that handle sensitive data. The BlueField-3 SuperNIC maintains high security levels, allowing different tenants to coexist with separate data and processing.

Broad network infrastructure: The BlueField-3 SuperNIC is very versatile and can be easily adjusted to meet a wide range of different network infrastructure requirements.

Wide compatibility with server manufacturers: The BlueField-3 SuperNIC integrates easily with the majority of enterprise-class servers without using an excessive amount of power in data centers.


Fascinating Role of Genomics in Drug Discovery and Development

This article dives deep into the significance of genomics in drug discovery and development, highlighting well-known genomic-based drug development services that are driving the future of pharmaceutical therapies. #genomics #drugdiscovery

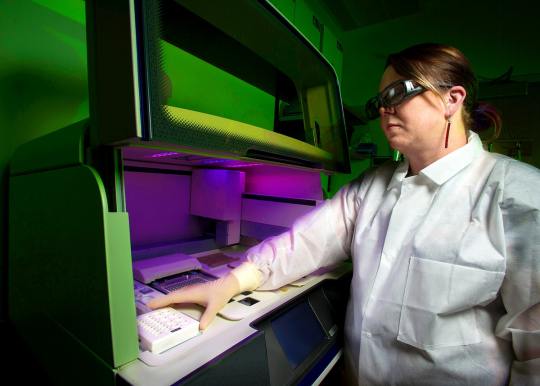

A scientist using a whole genome DNA sequencer to determine the “DNA fingerprint” of a specific bacterium. Original image sourced from a US Government department: Public Health Image Library, Centers for Disease Control and Prevention. Under US law this image is copyright free; please credit the government department whenever you can. Image by Centers for Disease Control and Prevention is…

#AI Tools for Predicting Risk of Genetic Diseases#Artificial Intelligence and Genomics#Role of Genomics and Companion Diagnostics#Role of Genomics in Biomarker Discovery#Role of Genomics in Drug Discovery and Development#Role of Genomics in Drug Repurposing#Role of Genomics in Personalized Medicine#Role of Genomics in Target Identification and Validation#Role of High-Throughput Sequencing


CRISPR screening libraries are powerful tools for high-throughput gene function research. Ubigene offers comprehensive CRISPR screening services, from library construction to data analysis. Our services include:

CRISPR-iScreen™ Technology: A proprietary platform for efficient CRISPR screening with high coverage and uniformity.

Library Plasmid Preparation: Amplification and validation of CRISPR library plasmids.

Custom CRISPR Library: Design and construction of tailored CRISPR libraries.

Library Virus Packaging: High-titer lentivirus production for library transduction.

Cell Pool Construction: Establishment of stable cell pools for screening.

Functional Screening and NGS Analysis: Execution of screening experiments and next-generation sequencing analysis.

Ubigene has 35+ ready-to-use libraries and provides one-stop services for your CRISPR screening needs. Explore our offerings and learn how we can accelerate your research via the link!


“The DeFi Game Changer on Solana: Unlocking Unprecedented Opportunities”

Introduction

In the dynamic world of decentralized finance (DeFi), new platforms and innovations are constantly reshaping the landscape. Among these, Solana has emerged as a game-changer, offering unparalleled speed, low costs, and robust scalability. This blog delves into how Solana is revolutionizing DeFi, why it stands out from other blockchain platforms, and what this means for investors, developers, and users.

What is Solana?

Solana is a high-performance blockchain designed to support decentralized applications and cryptocurrencies. Launched in 2020, it addresses some of the most significant challenges in blockchain technology, such as scalability, speed, and high transaction costs. Solana’s architecture allows it to process thousands of transactions per second (TPS) at a fraction of the cost of other platforms.

Why Solana is a DeFi Game Changer

1. High-Speed Transactions

One of Solana’s most remarkable features is its transaction speed. Solana is designed to handle over 65,000 transactions per second (TPS) in theory, far exceeding the capabilities of many other blockchains, including Ethereum. This throughput is enabled in part by Proof of History (PoH), a cryptographic clock that timestamps transactions so they can be ordered and processed quickly and efficiently.

2. Low Transaction Fees

Transaction fees on Solana are very low, typically a fraction of a cent. This affordability is crucial for DeFi applications, where high transaction volumes can lead to significant costs on other platforms. Low fees make Solana accessible to a broader range of users and developers, promoting more widespread adoption of DeFi solutions.
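To make “a fraction of a cent” concrete: Solana's base fee is 5,000 lamports per signature, and 1 SOL equals 1,000,000,000 lamports. The sketch below converts that into US dollars; the SOL price is a hypothetical input rather than a quote, and optional priority fees are ignored.

```python
LAMPORTS_PER_SOL = 1_000_000_000
BASE_FEE_LAMPORTS_PER_SIGNATURE = 5_000  # base fee only; priority fees are extra


def base_fee_usd(num_signatures: int, sol_price_usd: float) -> float:
    """Base transaction fee in US dollars, ignoring optional priority fees."""
    lamports = num_signatures * BASE_FEE_LAMPORTS_PER_SIGNATURE
    return lamports / LAMPORTS_PER_SOL * sol_price_usd


# Hypothetical SOL price of $150 and a single-signature transaction.
fee = base_fee_usd(1, 150.0)
print(f"${fee:.5f}")  # $0.00075
```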

3. Scalability

Solana’s architecture is designed to scale without compromising performance. This scalability ensures that as the number of users and applications on the platform grows, Solana can handle the increased load without experiencing slowdowns or high fees. This feature is essential for DeFi projects that require reliable and consistent performance.

4. Robust Security

Security is a top priority for any blockchain platform, and Solana is no exception. It employs advanced cryptographic techniques to ensure that transactions are secure and tamper-proof. This high level of security is critical for DeFi applications, where the integrity of financial transactions is paramount.

Key Innovations Driving Solana’s Success in DeFi

Proof of History (PoH)

Solana’s Proof of History (PoH) is a novel mechanism that timestamps transactions before they are processed. Rather than being a consensus algorithm in its own right, it acts as a cryptographic clock: it creates a historical record proving that transactions occurred in a specific sequence, which makes the network faster and more efficient. PoH reduces the computational burden on validators, allowing Solana to achieve high throughput and low latency.
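The core idea behind Proof of History can be sketched as a sequential SHA-256 hash chain: each entry hashes the previous one, so producing N entries takes N sequential steps, and mixing an event's data into the chain proves it was recorded after everything before it. This is a simplified illustration with hypothetical function names, not Solana's actual entry format.

```python
import hashlib
from typing import Optional


def tick(state: bytes, event: Optional[bytes] = None) -> bytes:
    """Advance the hash chain one step, optionally mixing in event data."""
    data = state if event is None else state + hashlib.sha256(event).digest()
    return hashlib.sha256(data).digest()


def build_history(seed: bytes, events: dict[int, bytes], length: int) -> list[bytes]:
    """Produce `length` sequential hashes; events[i] is mixed in at step i."""
    state = hashlib.sha256(seed).digest()
    history = []
    for i in range(length):
        state = tick(state, events.get(i))  # each step depends on the previous one
        history.append(state)
    return history


def verify(seed: bytes, events: dict[int, bytes], history: list[bytes]) -> bool:
    """Recompute the chain; a reordered or altered event changes every later hash."""
    return build_history(seed, events, len(history)) == history


events = {2: b"tx: alice->bob 5", 5: b"tx: bob->carol 2"}
history = build_history(b"genesis", events, 8)
print(verify(b"genesis", events, history))   # True
swapped = {2: events[5], 5: events[2]}
print(verify(b"genesis", swapped, history))  # False
```

This sketch verifies by replaying the whole chain sequentially. Because each recorded hash marks a checkpoint, a real verifier can check separate segments of the chain in parallel, which is why verification is much cheaper than generation.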

Tower BFT

Tower Byzantine Fault Tolerance (BFT) is Solana’s implementation of a consensus algorithm designed to maximize speed and security. Tower BFT leverages the synchronized clock provided by PoH to achieve consensus quickly and efficiently. This approach ensures that the network remains secure and resilient, even as it scales.
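Tower BFT's key idea is that each vote locks a validator out of switching to a conflicting fork for a period that doubles as more votes are stacked on top of it. The simplified model below follows that doubling rule (lockout = 2^confirmations); it omits the vote-stack size limit, rooting, and fork checks, and the `Vote` class and `push_vote` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Vote:
    slot: int
    confirmations: int = 1

    @property
    def lockout(self) -> int:
        return 2 ** self.confirmations  # lockout doubles with each confirmation

    @property
    def locked_until(self) -> int:
        return self.slot + self.lockout


def push_vote(stack: list[Vote], slot: int) -> None:
    """Record a vote for `slot`, expiring old votes and doubling lockouts."""
    # Pop votes from the top of the stack whose lockout has already expired.
    while stack and stack[-1].locked_until < slot:
        stack.pop()
    stack.append(Vote(slot))
    # A vote gains a confirmation once enough votes sit above it in the stack.
    depth = len(stack)
    for i, vote in enumerate(stack):
        if depth > i + vote.confirmations:
            vote.confirmations += 1


stack: list[Vote] = []
for s in (1, 2, 3, 4):
    push_vote(stack, s)
print([v.lockout for v in stack])  # [16, 8, 4, 2]
push_vote(stack, 10)  # the votes for slots 3 and 4 have expired by slot 10
print([(v.slot, v.lockout) for v in stack])  # [(1, 16), (2, 8), (10, 2)]
```

Older votes accumulate exponentially long lockouts, so abandoning a fork becomes progressively more costly for a validator the longer it has voted on that fork.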

Sealevel

Sealevel is Solana’s parallel processing engine, which enables the simultaneous execution of thousands of smart contracts. Because each Solana transaction declares in advance which accounts it will read and write, transactions that touch non-overlapping state can run at the same time. On blockchains that execute smart contracts sequentially, contracts often face bottlenecks due to limited processing capacity; Sealevel lets Solana process many contracts concurrently. This capability is crucial for complex DeFi applications that require high performance and reliability.
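The scheduling idea behind Sealevel can be sketched by grouping transactions into conflict-free batches based on their declared read and write sets. This sketch assumes a simple greedy, in-order batching strategy, which is not Sealevel's actual scheduler; `Tx`, `conflicts`, and `schedule_batches` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Tx:
    name: str
    reads: set[str] = field(default_factory=set)
    writes: set[str] = field(default_factory=set)


def conflicts(a: Tx, b: Tx) -> bool:
    """Two transactions conflict if either writes an account the other touches."""
    return bool(a.writes & (b.reads | b.writes) or b.writes & (a.reads | a.writes))


def schedule_batches(txs: list[Tx]) -> list[list[Tx]]:
    """Greedily pack transactions, in order, into conflict-free batches.

    Transactions within a batch could run in parallel. A transaction that
    conflicts with the current batch starts a new one, so conflicting
    transactions keep their original order.
    """
    batches: list[list[Tx]] = []
    for tx in txs:
        if batches and not any(conflicts(tx, other) for other in batches[-1]):
            batches[-1].append(tx)
        else:
            batches.append([tx])
    return batches


txs = [
    Tx("t1", reads={"usdc_mint"}, writes={"alice"}),
    Tx("t2", reads={"usdc_mint"}, writes={"bob"}),  # shared read: no conflict
    Tx("t3", writes={"alice"}),                     # writes alice: conflicts with t1
    Tx("t4", writes={"carol"}),
]
batches = schedule_batches(txs)
print([[t.name for t in batch] for batch in batches])  # [['t1', 't2'], ['t3', 't4']]
```

Shared reads are safe to run together; only overlapping writes force transactions into separate batches.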

Gulf Stream

Gulf Stream is Solana’s mempool-less transaction forwarding protocol. It enables validators to forward transactions to the next set of validators before the current set of transactions is finalized. This feature reduces confirmation times, enhances the network’s efficiency, and supports high transaction throughput.
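Gulf Stream works because the leader schedule is known in advance, so a validator can forward a transaction directly to the validators expected to lead the upcoming slots instead of holding it in a mempool. The sketch below assumes a simple round-robin rotation in which each leader produces blocks for four consecutive slots; the real schedule is stake-weighted and computed per epoch, and `upcoming_leaders` and the validator names are hypothetical.

```python
SLOTS_PER_LEADER = 4  # assumed number of consecutive slots per leader


def upcoming_leaders(rotation: list[str], current_slot: int, lookahead_slots: int) -> list[str]:
    """Distinct leaders scheduled from current_slot through the lookahead window."""
    leaders: list[str] = []
    for slot in range(current_slot, current_slot + lookahead_slots):
        leader = rotation[(slot // SLOTS_PER_LEADER) % len(rotation)]
        if leader not in leaders:
            leaders.append(leader)
    return leaders


rotation = ["validator-A", "validator-B", "validator-C"]  # hypothetical schedule
print(upcoming_leaders(rotation, current_slot=6, lookahead_slots=8))
# ['validator-B', 'validator-C', 'validator-A']
```

A transaction forwarded to these validators can be waiting in the next leader's queue before that leader's slots begin.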

Solana’s DeFi Ecosystem

Leading DeFi Projects on Solana

Solana’s ecosystem is rapidly expanding, with numerous DeFi projects leveraging its unique features. Some of the leading DeFi projects on Solana include:

Serum: A decentralized exchange (DEX) that offers lightning-fast trading and low transaction fees. Serum is built on Solana and provides a fully on-chain order book, enabling users to trade assets efficiently and securely.

Raydium: An automated market maker (AMM) and liquidity provider built on Solana. Raydium integrates with Serum’s order book, allowing users to access deep liquidity and trade at competitive prices.

Saber: A cross-chain stablecoin exchange that facilitates seamless trading of stablecoins across different blockchains. Saber leverages Solana’s speed and low fees to provide an efficient and cost-effective stablecoin trading experience.

Mango Markets: A decentralized trading platform that combines the features of a DEX and a lending protocol. Mango Markets offers leverage trading, lending, and borrowing, all powered by Solana’s high-speed infrastructure.
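Automated market makers such as Raydium price trades algorithmically rather than through an order book. The classic constant-product rule (x · y = k), used by many AMMs, can be sketched as follows; the 0.25% fee and the pool sizes are hypothetical parameters, not quoted Raydium values.

```python
def swap_out(amount_in: float, reserve_in: float, reserve_out: float,
             fee: float = 0.0025) -> float:
    """Tokens received from a constant-product (x * y = k) pool.

    The fee is deducted from the input first; only the remainder enters the
    invariant. (In many real pools the fee stays in the pool, so k grows.)
    """
    if amount_in <= 0 or reserve_in <= 0 or reserve_out <= 0:
        raise ValueError("amount and reserves must be positive")
    if not 0 <= fee < 1:
        raise ValueError("fee must be in [0, 1)")
    effective_in = amount_in * (1 - fee)
    k = reserve_in * reserve_out
    new_reserve_out = k / (reserve_in + effective_in)
    return reserve_out - new_reserve_out


# Hypothetical pool: 1,000 SOL and 150,000 USDC (spot price 150 USDC per SOL).
out = swap_out(10, 1_000, 150_000)
print(f"{out:.2f}")  # 1481.47, less than 10 * 150 = 1500 due to fee and price impact
```

The gap between the spot value and the amount received grows with trade size relative to the pool, which is why deep liquidity matters for competitive prices.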

The Future of DeFi on Solana

The future of DeFi on Solana looks incredibly promising, with several factors driving its continued growth and success:

Growing Developer Community: Solana’s developer-friendly environment and comprehensive resources attract a growing community of developers. This community is constantly innovating and creating new DeFi applications, contributing to the platform’s vibrant ecosystem.

Strategic Partnerships: Solana has established strategic partnerships with major players in the crypto and tech industries. These partnerships provide additional resources, support, and credibility, driving further adoption of Solana-based DeFi solutions.

Cross-Chain Interoperability: Solana is actively working on cross-chain interoperability, enabling seamless integration with other blockchain networks. This capability will enhance the utility of Solana-based DeFi applications and attract more users to the platform.

Institutional Adoption: As DeFi continues to gain mainstream acceptance, institutional investors are increasingly looking to platforms like Solana. Its high performance, low costs, and robust security make it an attractive option for institutional use cases.

How to Get Started with DeFi on Solana

Step-by-Step Guide

Set Up a Solana Wallet: To interact with DeFi applications on Solana, you’ll need a compatible wallet. Popular options include Phantom and Solflare. These wallets provide a user-friendly interface for managing your SOL tokens and interacting with DeFi protocols.

Purchase SOL Tokens: SOL is the native cryptocurrency of the Solana network. You’ll need SOL tokens to pay transaction fees and interact with DeFi applications. You can purchase SOL on major cryptocurrency exchanges such as Binance and Coinbase.

Explore Solana DeFi Projects: Once you have SOL tokens in your wallet, you can start exploring the various DeFi projects on Solana. Visit platforms like Serum, Raydium, Saber, and Mango Markets to see what they offer and how you can benefit from their services.

Provide Liquidity: Many DeFi protocols on Solana offer opportunities to provide liquidity and earn rewards. By depositing your assets into liquidity pools, you can earn a share of the trading fees generated by the protocol.

Participate in Governance: Some Solana-based DeFi projects allow token holders to participate in governance decisions. By staking your tokens and voting on proposals, you can have a say in the future development and direction of the project.
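The “share of the trading fees” earned by providing liquidity is proportional to a provider's stake in the pool. The sketch below assumes fees are distributed pro rata by pool share; actual protocols may differ (some route part of each fee elsewhere), and the numbers are hypothetical.

```python
def lp_fee_earnings(lp_tokens: float, total_lp_tokens: float, pool_fees: float) -> float:
    """Pro-rata share of accumulated pool fees for one liquidity provider."""
    if total_lp_tokens <= 0:
        raise ValueError("pool has no liquidity")
    return pool_fees * lp_tokens / total_lp_tokens


# Hypothetical: you hold 2% of a pool that collected 10,000 USDC in fees.
print(lp_fee_earnings(200, 10_000, 10_000))  # 200.0
```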

Conclusion

Solana is undoubtedly a game-changer in the DeFi space, offering unparalleled speed, low costs, scalability, and security. Its innovative features and growing ecosystem make it an ideal platform for developers, investors, and users looking to leverage the benefits of decentralized finance. As the DeFi landscape continues to evolve, Solana is well-positioned to lead the charge, unlocking unprecedented opportunities for financial innovation and inclusion.

Whether you’re a developer looking to build the next big DeFi application or an investor seeking high-growth opportunities, Solana offers a compelling and exciting path forward. Dive into the world of Solana and discover how it’s transforming the future of decentralized finance.

#solana#defi#dogecoin#bitcoin#token creation#blockchain#crypto#investment#currency#token generator#defib#digitalcurrency#ethereum


Navigating the Complexity of Alternative Splicing in Eukaryotic Gene Expression: A Molecular Odyssey

Embarking on the journey of molecular biology exposes students to the marvels and intricacies of life at the molecular level. One captivating aspect within this domain is the phenomenon of alternative splicing, where a single gene orchestrates a symphony of diverse protein isoforms. As students grapple with questions related to this molecular intricacy, the role of a reliable molecular biology Assignment Helper becomes indispensable. This blog delves into a challenging question, exploring the mechanisms and consequences of alternative splicing, shedding light on its pivotal role in molecular biology.

Question: Explain the mechanisms and consequences of alternative splicing in eukaryotic gene expression, highlighting its role in generating proteomic diversity and the potential impact on cellular function. Additionally, discuss any recent advancements or discoveries that have provided insight into the regulation and functional significance of alternative splicing.

Answer: Alternative splicing, a maestro in the grand composition of gene expression, intricately weaves the fabric of molecular diversity. Mechanistically, this phenomenon employs exon skipping, intron retention, and alternative 5' or 3' splice sites to sculpt multiple mRNA isoforms from a single gene.
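Exon skipping alone already shows how one gene can yield several transcripts. The toy sketch below assumes a gene with constitutive first and last exons and a few “cassette” exons that can each be included or skipped; the exon names are hypothetical, and real splicing is constrained by regulatory factors rather than freely combinatorial.

```python
from itertools import product


def cassette_isoforms(first: str, cassettes: list[str], last: str) -> list[list[str]]:
    """Enumerate transcripts when each cassette exon is independently kept or skipped."""
    isoforms = []
    for choices in product([True, False], repeat=len(cassettes)):
        middle = [exon for exon, keep in zip(cassettes, choices) if keep]
        isoforms.append([first, *middle, last])
    return isoforms


for iso in cassette_isoforms("E1", ["E2", "E3"], "E4"):
    print("-".join(iso))
# E1-E2-E3-E4
# E1-E2-E4
# E1-E3-E4
# E1-E4
```

With n independent cassette exons, this model yields 2^n possible isoforms, a simple picture of how splicing multiplies proteomic diversity.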

The repercussions of alternative splicing resonate deeply within the proteomic landscape. Proteins, diverse in function, emerge as a consequence, adding layers of complexity to cellular processes. Tissue-specific expression, another outcome, paints a vivid picture of the nuanced orchestration of cellular differentiation.

Regulating this intricate dance of alternative splicing involves an ensemble cast of splicing factors, enhancers, silencers, and epigenetic modifications. In the ever-evolving landscape, recent breakthroughs in high-throughput sequencing techniques, notably RNA-seq, offer a panoramic view of splicing patterns across diverse tissues and conditions. CRISPR/Cas9 technology, a molecular tool of precision, enables the manipulation of splicing factor expression, unraveling their roles in the intricate regulation of alternative splicing.

In the dynamic realm of molecular biology, alternative splicing emerges as a linchpin. Specific splicing events, linked to various diseases, beckon researchers towards therapeutic interventions. The complexities embedded in this molecular tapestry underscore the perpetual need for exploration and comprehension.

Conclusion: The odyssey through alternative splicing unveils its prominence as a cornerstone in the narrative of molecular biology. From sculpting proteomic diversity to influencing cellular functions, alternative splicing encapsulates the essence of molecular intricacies. For students navigating this terrain, the exploration of questions like these not only deepens understanding but also propels us into a realm of limitless possibilities.

#molecular biology assignment help#biology assignment help#university#college#assignment help#pay to do assignment


I kinda wanna try sage because I have so many complaints about scholar's job design. even without much experience with the others, I've heard that it's the healer with the most "depth" out of the four, but I wonder how much of that is just due to how many actions that are almost always pressed in sequence are spread across 2 or 3 buttons. as I unlock more of my kit, it increasingly feels like, in an attempt to give the player more freedom in how they use it, much of it ends up in conflict with itself and even becomes a distraction, because you have to weave so many oGCDs to do things that realistically should be tied to a single button, or that carry implicit added complexity from being tied to your pet for class fantasy reasons, but not in a way that feels "organic"

pet management is the bane of all pet classes in every MMO, but it feels particularly salient with scholar because so much of their kit is tied to your pet being:

within range of target

in the middle of an action, or queued for any other actions

currently spawned and capable of accepting commands

and there are zero elements in the UI to indicate the state of any of these conditions. you just kinda have to memorize that fairy abilities run on a completely different tempo that doesn't follow the same rules as the player's oGCD weaving, hope you can keep track of where you placed it, and remember to place it again every time you use dissipation. and if the fight features stage transitions, also remember to summon it again! it's just completely pointless mental load for the healer. pet abilities would be overpowered if they didn't have limited ranges, but your pet shouldn't lag behind you so much that it's usually best to lock it in one place at the start of the fight for fear that it will be too far away to be useful during a mechanic where players need to spread. it shouldn't require so much babysitting, and if it gets told to move somewhere more convenient for dishing out heals when the healer must be separated from the tanks, or to minimize line-of-sight issues, it shouldn't despawn when you get too far away and cost you an oGCD to summon it again. this is not a punishment for not playing your job well, it's a punishment for trying to plan ahead in a fight and wrestling with the limitations of a poorly implemented pet system

the fairy gauge is woefully underwhelming. spend heals, to gain more heals, tied to a single ability that requires manually selecting a target and an oGCD, that does zero instant healing but instead ticks as a very powerful regen, after a substantial delay. oh, and every time you use any of your five fairy abilities, or dissipation, this stops and you need to re-select the target and then wait several seconds for it to start again! it's baffling. I understand the niche it is trying to fulfill, and I want to use it more often, but it involves so many steps and has such a long delay between when you press the key to when it actually starts doing something that nearly every time the co-healer has already topped them off and it is wasted. for one there shouldn't be any delay between you pressing the button and the first tick, it should function just like a medica regen. it also feels extremely involved to manage:

you press aetherflow -> you spend all your aetherflow stacks using energy drain or oGCD heals -> you do this 3 times over the course of at least 2 minutes -> you can now choose a tank to sustain for a few seconds as long as you don't press any of your other fairy abilities (which are an extremely important part of your kit)

sages get Kardia, which just passively provides the same function of high-throughput single-target regen while they DPS, without conflicting with the rest of their kit or requiring it be constantly monitored by selecting a target and then turning it on and off to not be wasteful. it just works.

like, it's not that aetherpact singularly annoys me, because ultimately it's such a tiny speck of what defines the job, it's just that the only way to use it efficiently is kind of the culmination of all the contradictions inherent to playing scholar: you're spending resources to create resources which you barely use because they are mutually exclusive with the rest of your kit and rely on a clunky pet system. oh, Eos despawned again. it's just like. annoying. and it particularly feels like a slap in the face getting that at level 70, seeing the job gauge tutorial for the first time, and then learning that ONLY this ability uses the entire gauge and you can't use it for anything else. the idea of trading heals for damage when you're comfortable with a fight is something they should lean on further, and it's painful seeing that gauge full most of the fight because I barely have to use it, while white mages get to use their lilies offensively


Maximizing Efficiency: Best Practices for Using Sequencing Consumables

Sequencing Consumables play a crucial role in genetic research, facilitating the preparation, sequencing, and analysis of DNA samples. To achieve optimal results and maximize efficiency in sequencing workflows, it's essential to use these consumables effectively. By implementing the best practices below, researchers can streamline sequencing workflows, increase throughput, and achieve more consistent and reproducible results in genetic research.

Proper planning and organization are essential for maximizing efficiency when using Sequencing Consumables. Before starting a sequencing experiment, take the time to carefully plan out the workflow, including sample preparation, library construction, sequencing runs, and data analysis. Ensure that all necessary consumables, reagents, and equipment are readily available and properly labeled to minimize disruptions and delays during the experiment.

Optimizing sample preparation workflows is critical for maximizing efficiency in sequencing experiments. When working with Sequencing Consumables for sample preparation, follow manufacturer protocols and recommendations closely to ensure consistent and reproducible results. Use high-quality consumables and reagents, and perform regular quality control checks to monitor the performance of the workflow and identify any potential issues early on.

Utilizing automation technologies can significantly increase efficiency when working with Sequencing Consumables. Automated sample preparation systems and liquid handling robots can streamline repetitive tasks, reduce human error, and increase throughput. By automating sample processing and library construction workflows, researchers can save time and resources while improving consistency and reproducibility in sequencing experiments.

Get More Insights On This Topic: Sequencing Consumables

#Sequencing Consumables#DNA Sequencing#Laboratory Supplies#Genetic Analysis#Next-Generation Sequencing#Molecular Biology#Research Tools#Bioinformatics


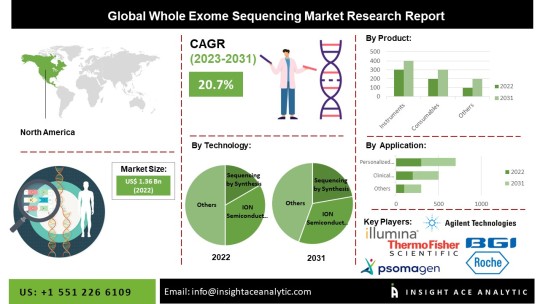

Whole Exome Sequencing Market Projected to Reach $7.30 Billion by 2031: Key Trends and Insights

InsightAce Analytic Pvt. Ltd. announces the release of a market assessment report on the "Global Whole Exome Sequencing Market Size, Share & Trends Analysis Report By Product (Instruments, Consumables, Services), By Technology (synthesis, ion semiconductor sequencing), By Workflow (pre-sequencing, sequencing and data analysis), By Application (Clinical Diagnostics, Drug Discovery & Development, Personalized Medicine), By End-User (Academic & Research Institutes, Hospitals & Clinics, Pharmaceutical & Biotechnology Companies)- Market Outlook And Industry Analysis 2031"

The Global Whole Exome Sequencing Market is estimated to reach over USD 7.30 billion by 2031, exhibiting a CAGR of 20.7% during the forecast period.
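The growth figure follows the standard compound annual growth rate (CAGR) relation, where $V_0$ is the market size at the start of the forecast period, $V_n$ the size after $n$ years, and $r = 0.207$. The release does not state the base-year value or the exact length of the forecast period, so $n$ is left symbolic here.

$$
V_n = V_0\,(1 + r)^n
\qquad\Longrightarrow\qquad
V_0 = \frac{V_n}{(1 + r)^n} = \frac{7.30\ \text{billion USD}}{(1.207)^n}
$$

For illustration only, assuming an eight-year period ($n = 8$), $(1.207)^8 \approx 4.50$, so the market would grow roughly 4.5-fold over that period.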

Exome sequencing is a technique for sequencing all of the protein-coding genes in a genome (the exome). The DNA segments that code for proteins, the exons, are first selected, and the exonic DNA is then sequenced using high-throughput DNA sequencing techniques. Genetic changes linked to illnesses such as Miller syndrome and Alzheimer's disease can be found using the exome sequencing approach.

Get Free Access to Demo Report, Excel Pivot and ToC: https://www.insightaceanalytic.com/request-sample/1770

The main factors propelling the global whole exome sequencing market are the desire to identify uncommon diseases, the expansion of genomics and next-generation sequencing R&D, and the demand for personalized medication. Global market revenue growth will continue to be fueled by increasing funding in research-based projects, expanding alliances among top companies and research institutions for drug discovery, and a growing preference for next-generation sequencing techniques for diagnosing, treating, and monitoring chronic diseases.

New exome sequencing kits, software, and systems are projected to be available over the projection period, and ongoing research on rare illnesses is another factor that will support global market expansion. Additionally, the market's expansion is supported by whole-exome sequencing technology's low cost and quick speed, technological advances in whole-exome sequencing methodology, and strategic alliances among major research institutes worldwide.

List of Prominent Players in the Whole Exome Sequencing Market:

Thermo Fisher Scientific, Inc.

Illumina, Inc.

Agilent Technologies, Inc.

BGI

Psomagen

Hoffmann-La Roche Ltd

Azenta US Inc. (GENEWIZ)

CD Genomics

Novogene Co., Ltd.

Eurofins Genomics

Market Dynamics:

Drivers-

The primary reasons propelling the worldwide Whole Exome Sequencing Market are growing applications in clinical diagnostics, increased demand for the detection of rare diseases, expanding genomics and next-generation sequencing R&D, and a rising need for personalized medicine. The industry is also driven by the expanding acceptance of next-generation sequencing methods for the diagnosis, prognosis, therapy, and follow-up of numerous chronic diseases, including cancer.

Challenges:

Challenges facing the market include a lack of qualified professionals, high investment costs, and ethical and legal questions concerning the market's practices and results in various countries. High reliance on government funding also restricts the growth of the whole exome sequencing sector.


Regional Trends:

North America is predicted to account for a major share of whole exome sequencing market revenue and to grow at a high CAGR over the forecast period. The main drivers of regional growth are the rising prevalence of hereditary and chronic diseases, such as cancer, the increasing demand for personalized medicine, and supportive government initiatives. Asia Pacific also held a substantial market share, owing to increased healthcare spending and the growing prevalence of chronic diseases such as diabetes, HIV, and neurological disorders. Favorable government measures for adopting and advancing whole-exome sequencing technology will also boost regional expansion.

Recent Developments:

In February 2023: Illumina, Inc. announced that the Broad Institute had received the company's first NovaSeq X Plus system. The platform will assist parties wishing to access the company's human whole genome product, blended genome/exome product, and sequencing service.

In May 2022: Thermo Fisher Scientific and The Qatar Genome Programme (QGP), a Qatar Foundation (QF) division, partnered to advance genomic research and clinical applications of predictive genomics in Qatar as a step towards expanding the advantages of precision medicine to Arab populations.

Segmentation of Whole Exome Sequencing Market-

By Product

Instruments

Consumables

Services

By Technology

Sequencing by Synthesis

Ion Semiconductor Sequencing

Others

By Workflow

Pre-sequencing

Sequencing

Data Analysis

By Application

Clinical Diagnostics

Drug Discovery & Development

Personalized Medicine

Others


By End-use

Academic & Research Institutes

Hospitals & Clinics

Pharmaceutical & Biotechnology Companies

Others

By Region-

North America-

The US

Canada

Mexico

Europe-

Germany

The UK

France

Italy

Spain

Rest of Europe

Asia-Pacific-

China

Japan

India

South Korea

South East Asia

Rest of Asia Pacific

Latin America-

Brazil

Argentina

Rest of Latin America

Middle East & Africa-

GCC Countries

South Africa

Rest of Middle East and Africa

Empower Your Decision-Making with 180 Pages Full Report @ https://www.insightaceanalytic.com/buy-report/1770

About Us:

InsightAce Analytic is a market research and consulting firm that enables clients to make strategic decisions. Our qualitative and quantitative market intelligence solutions help businesses meet their market and competitive intelligence needs as they expand. We help clients gain a competitive advantage by identifying untapped markets, exploring new and competing technologies, segmenting potential markets, and repositioning products. Our expertise is in providing syndicated and custom market intelligence reports that offer in-depth analysis and key market insights in a timely, cost-effective manner.


Lab Automation Market Business Overview, New Share, Trends Analysis, And Forecast To 2032

The global lab automation market is poised for substantial growth, with a projected compound annual growth rate (CAGR) of 7% between 2022 and 2032. It is expected to expand from US$1.8 billion in 2021 to US$4 billion by 2032.
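The quoted figures can be sanity-checked with the standard CAGR formula, CAGR = (end value / start value)^(1 / years) - 1. The minimal Python sketch below uses only the two dollar figures stated above; the function name is illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the text: US$1.8 billion (2021) to US$4 billion (2032).
implied = cagr(1.8, 4.0, 2032 - 2021)
print(f"Implied 2021-2032 CAGR: {implied:.1%}")  # Implied 2021-2032 CAGR: 7.5%
```

This gives roughly 7.5% per year over 2021 to 2032, a little above the headline 7%. The report states its 7% over a 2022 to 2032 window from a base-year value that is not given here, so the two rates are not directly comparable.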

In recent years, the laboratory automation market has witnessed remarkable growth, driven by advancements in technology, increasing demand for efficiency, and the need for precise and reliable results. As laboratories across various industries continue to embrace automation to streamline processes and enhance productivity, a closer examination of the market dynamics reveals numerous emerging trends and opportunities shaping its trajectory.

Download a Sample Copy of This Report: https://www.factmr.com/connectus/sample?flag=S&rep_id=5672

Automation Revolutionizing Laboratory Operations

Automation has revolutionized laboratory operations by replacing manual tasks with automated processes, thereby minimizing errors, reducing turnaround times, and improving overall efficiency. From sample handling and preparation to data analysis and reporting, automation solutions are being adopted across diverse laboratory settings, including pharmaceuticals, biotechnology, clinical diagnostics, and academic research.

Integration of Robotics and Artificial Intelligence (AI)

One of the key trends driving the evolution of the lab automation market is the integration of robotics and AI technologies. Robotics enable precise and repetitive tasks, such as pipetting and sample handling, while AI algorithms analyze complex datasets, optimize workflows, and provide actionable insights. This combination of robotics and AI not only enhances efficiency but also enables laboratories to handle larger volumes of samples and data with greater accuracy.

Rise of Modular and Scalable Solutions

Modular and scalable automation solutions are gaining popularity among laboratories of all sizes. These systems offer flexibility to adapt to changing needs and accommodate growth, allowing laboratories to scale their automation capabilities as required. Additionally, modular platforms enable integration with existing laboratory infrastructure, minimizing disruption and maximizing return on investment.

Focus on Data Management and Integration

With the proliferation of high-throughput technologies generating vast amounts of data, effective data management and integration have become paramount in laboratory automation. Laboratories are investing in comprehensive informatics solutions that facilitate seamless data capture, analysis, and sharing across multiple platforms and systems. Integration with laboratory information management systems (LIMS) and electronic laboratory notebooks (ELNs) further enhances data integrity and compliance.

Competitive Landscape:

The primary expansion strategy of key players in this market is introducing new automation equipment and software tailored to industry-specific needs. Strategic alliances, collaborations with academic and research institutions, governmental partnerships, and acquisitions of smaller players are also frequently employed.

For instance:

Thermo Fisher Scientific, Inc. unveiled the Rapid EZ DNA-Seq library preparation kit in February 2020, providing PCR-free generation of sequencing-ready libraries for Next-Generation Sequencing (NGS) applications.

Danaher Corporation's Beckman Coulter, Inc. entered into a marketing agreement with Clever Culture Systems (CCS), a pioneer in microbiology automation utilizing Artificial Intelligence (AI), in July 2020. This collaboration aimed to promote the APAS Independence, an advanced lab automation solution, within their product portfolio.

Becton, Dickinson and Company announced in February 2020 that the BD Kiestra ReadA had received approval from the US Food and Drug Administration (FDA). This standalone device improves operational efficiency in clinical microbiology laboratories by automating repetitive plate management tasks and ensuring accuracy through standardized digital image acquisition.

Key Segments Covered in the Lab Automation Market Report

By Product:

Lab Automation Equipment

Automated Workstations

Automated Liquid Handling

Automated Integrated Workstations

Pipetting Systems

Reagent Dispensers

Microplate Washers

Automated Microplate Readers

Multi-mode Microplate Readers

Single-mode Microplate Readers

Automated ELISA Systems

Automated Nucleic Acid Purification Systems

Lab Automation Software & Informatics

Workstation/Unit Automation Software

Laboratory Information Management Systems (LIMS)

Electronic Laboratory Notebook

Scientific Data Management System

By Application:

Drug Discovery

Clinical Diagnostics

Genomics Solutions

Proteomics Solutions

By End User:

Biotechnology & Pharmaceutical Industries

Research & Academic Institutes

Hospitals & Diagnostic Laboratories

Forensic Laboratories

Environmental Testing Labs

Food & Beverage Industry

By Region:

North America

Latin America

Europe

Asia Pacific

Middle East & Africa

The lab automation market is poised for continued growth and innovation, driven by emerging trends such as robotics integration, modular solutions, and advanced data management capabilities. Laboratories that embrace automation stand to benefit from improved efficiency, enhanced accuracy, and accelerated workflows, ultimately driving advancements in scientific research, healthcare, and beyond. As technology continues to evolve and new opportunities emerge, the future of lab automation holds immense promise for transforming laboratory operations and advancing scientific discovery.

Contact:

US Sales Office 11140 Rockville Pike Suite 400 Rockville, MD 20852 United States Tel: +1 (628) 251-1583, +353-1-4434-232 Email: [email protected]


Unlocking the Future of Cancer Diagnosis: Genomic Cancer Panel and Profiling Market Insights

Introduction: Cancer, a complex and multifaceted group of diseases, continues to challenge the healthcare industry. However, advancements in genomics have opened new doors for personalized and targeted cancer treatments. One of the groundbreaking tools in this field is genomic cancer panel testing and profiling. In this blog, we'll delve into the key aspects of the Genomic Cancer Panel and Profiling market and explore how it is revolutionizing cancer diagnosis and treatment strategies.

Understanding Genomic Cancer Panel and Profiling:

Genomic cancer panels and profiling involve the comprehensive analysis of a patient's DNA, RNA, and other molecular markers to understand the genetic alterations driving cancer growth. This approach provides a detailed molecular profile of the tumor, allowing healthcare professionals to tailor treatment plans based on the unique genetic makeup of each patient's cancer.

The market was valued at $9.90 billion in 2022 and is projected to grow at a CAGR of 9.43% from 2023 to 2032.
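As a rough illustration of what that rate implies, the minimal Python sketch below compounds the stated 2022 value at 9.43% for the ten years 2023 through 2032. This is plain arithmetic on the two stated figures, assuming the rate applies uniformly from the 2022 base; it is not a figure quoted from the underlying report.

```python
def project(value: float, rate: float, years: int) -> float:
    """Compound `value` forward at a constant annual `rate` for `years` years."""
    return value * (1 + rate) ** years


base_2022 = 9.90  # USD billion, as stated in the text
rate = 0.0943     # stated CAGR for 2023-2032
value_2032 = project(base_2022, rate, 2032 - 2022)
print(f"Implied 2032 value: ${value_2032:.1f} billion")
```

Under that assumption the market would reach roughly $24 billion by 2032, about two and a half times its 2022 size.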

Market Growth and Trends:

The genomic cancer panel and profiling market has witnessed significant growth in recent years, driven by increasing cancer incidence, technological advancements, and a growing understanding of the role of genetics in cancer development. The market is characterized by a surge in collaborations between biotechnology companies, academic institutions, and healthcare providers to enhance research capabilities and develop innovative solutions.

Key Players and Technologies:

Several key players dominate the genomic cancer panel and profiling market, offering cutting-edge technologies and solutions. Illumina, Thermo Fisher Scientific, and QIAGEN are among the prominent companies leading the way in developing sequencing platforms and analysis tools. Next-generation sequencing (NGS) technologies play a pivotal role, enabling high-throughput and cost-effective analysis of large genomic datasets.

Clinical Applications:

The clinical applications of genomic cancer panel and profiling are diverse and impactful. These include identifying potential therapeutic targets, predicting treatment response, and uncovering resistance mechanisms. Additionally, this approach aids in the early detection of hereditary cancer syndromes, facilitating preventive measures for at-risk individuals.

Challenges and Opportunities:

While the genomic cancer panel and profiling market holds immense promise, it is not without challenges. Data interpretation, standardization of testing protocols, and the integration of genomic information into clinical practice pose ongoing hurdles. However, these challenges also present opportunities for further research, technological innovation, and collaboration to overcome barriers and improve patient outcomes.

Future Prospects:

The future of genomic cancer panels and profiling looks promising, with ongoing advancements in artificial intelligence, machine learning, and data analytics enhancing our ability to extract meaningful insights from vast genomic datasets. Integrating these technologies into routine clinical practice could streamline decision-making processes, optimize treatment strategies, and contribute to the broader field of precision medicine.

Conclusion:

The Genomic Cancer Panel and Profiling market is at the forefront of transforming cancer care by providing personalized and targeted approaches to diagnosis and treatment. As we continue to unlock the mysteries of the human genome, the synergy between technology, research, and clinical application holds the key to a future where cancer is not just treated but understood at its genetic core, paving the way for more effective and tailored therapies.


The real thing people don’t always understand is that even ethically trained, incredibly domain-specific generative AI has a purpose in scientific research.

I use machine learning extensively in my research, and people’s conflation of any machine learning with generative AI is my biggest pet peeve.

Because ML is a single branch of the incredibly vast research area that is artificial intelligence! I took an entire course on AI in 2018 before the large language model boom, and we covered so much of the history of the field. A big chunk of that class was just game theory because so much of AI algorithm development has been focused on playing and winning games. (See AlphaGo)

But like I said, some generative AI has a worthwhile scientific application! Not all generative AI is generating pointless text or weird images. Generative models are being used to explore chemical space in search of molecules with a variety of properties, because there are simply too many possible molecules to enumerate. Considering only potential drug-like molecules, the number is estimated at ~10^60. Generative models, such as variational autoencoders, can train on what we know works and suggest new candidates to make computational high-throughput screening more effective. Paired with reinforcement learning techniques, these can extrapolate to regions of parameter space where we previously had no known candidates. Additionally, generative models (e.g. diffusion models) can be used to predict protein sequences that will produce a desired structure, to create synthetic proteins for purposes like catalysis.

I hate generative AI being forced into consumer products as much as any reasonable person should, but blanket statements calling for bans of all generative AI are also bad.

(Source)


Top Instruments Used in Advanced Genetic Testing Labs

Behind every accurate genetic test is a sophisticated network of high-precision instruments working seamlessly to extract, analyze, and interpret DNA. As genetic testing becomes central to diagnosing diseases and personalizing treatment, understanding the tools behind the science is essential.

At Greenarray Genomics Research and Solutions Pvt. Ltd., Pune, our state-of-the-art lab is equipped with some of the world’s most advanced instruments to ensure clinical-grade accuracy, efficiency, and reliability.

1. 🔬 Next-Generation Sequencers (NGS Machines)

Function: Sequence millions of DNA fragments simultaneously
Used for: Whole genome, whole exome, targeted gene panels

NGS platforms (such as those from Illumina, Thermo Fisher, or MGI) have revolutionized the speed and scale at which DNA can be decoded. These machines form the backbone of high-throughput genetic testing.
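A common back-of-the-envelope calculation when planning NGS runs is the Lander-Waterman estimate of mean coverage: coverage = number of reads × read length / target size. The Python sketch below implements it in both directions; the genome size, exome target size, read counts, and read length are hypothetical planning numbers, not specifications of any Illumina, Thermo Fisher, or MGI instrument.

```python
import math


def mean_coverage(num_reads: int, read_length_bp: int, target_size_bp: int) -> float:
    """Expected mean depth: total sequenced bases divided by the target size."""
    return num_reads * read_length_bp / target_size_bp


def reads_needed(target_coverage: float, read_length_bp: int, target_size_bp: int) -> int:
    """Reads required to reach a desired mean coverage, rounded up."""
    return math.ceil(target_coverage * target_size_bp / read_length_bp)


# Hypothetical: a ~3.1 Gb human genome at 30x with 150 bp reads.
print(reads_needed(30, 150, 3_100_000_000))        # 620000000
# Hypothetical: 40 million 150 bp reads over a 50 Mb exome target.
print(mean_coverage(40_000_000, 150, 50_000_000))  # 120.0
```

The estimate counts each read of a pair separately and ignores duplicates and off-target reads, so real runs are usually planned with some headroom above these numbers.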

2. 💠 Real-Time PCR (qPCR) Systems

Function: Amplify and quantify DNA or RNA in real time
Used for: Mutation detection, infectious disease testing (e.g., COVID-19), gene expression studies

qPCR is a gold standard for targeted, single-gene testing. It’s fast, accurate, and widely used for diagnostics and research.
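For gene expression studies, qPCR results are often reported as a relative fold change using the 2^-ΔΔCt (Livak) method, which assumes close to 100% amplification efficiency for both the target gene and the reference (housekeeping) gene. A minimal Python sketch, with hypothetical Ct values:

```python
def fold_change_ddct(ct_target_treated: float, ct_ref_treated: float,
                     ct_target_control: float, ct_ref_control: float) -> float:
    """Relative expression by the 2^-ddCt method (assumes ~100% PCR efficiency)."""
    # Normalize the target gene to the reference gene within each sample.
    dct_treated = ct_target_treated - ct_ref_treated
    dct_control = ct_target_control - ct_ref_control
    # Compare the normalized values between treated and control samples.
    ddct = dct_treated - dct_control
    return 2 ** (-ddct)


# Hypothetical Ct values: relative to the reference gene, the target crosses
# threshold 2 cycles earlier in the treated sample than in the control.
print(fold_change_ddct(22.0, 18.0, 24.0, 18.0))  # 4.0
```

Each cycle earlier roughly doubles the starting template, so a ΔΔCt of -2 corresponds to about a fourfold increase in expression.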

3. 🧪 Automated DNA/RNA Extractors

Function: Isolate nucleic acids from blood, saliva, or tissue samples
Used for: Sample prep for sequencing, PCR, or microarray analysis

Automated extractors reduce manual errors and increase throughput, ensuring high-quality input for downstream applications.

4. 🧬 Bioanalyzers and Fragment Analyzers

Function: Assess DNA/RNA quality and fragment size
Used for: Library prep QC, gene expression profiling, miRNA analysis

These tools ensure that only high-quality samples are sent for sequencing or PCR, minimizing test failure rates.

5. 🌈 Fluorescence In Situ Hybridization (FISH) Systems

Function: Detect specific DNA sequences or chromosomal abnormalities using fluorescent probes
Used for: Cancer genetics, prenatal testing, cytogenetic studies

FISH is a critical tool in cytogenetics, providing rapid results for targeted chromosomal conditions.

6. 🔍 Microscopes for Karyotyping

Function: Visualize chromosomes under high magnification
Used for: Detecting large-scale chromosomal anomalies like trisomies, deletions, and translocations

Karyotyping remains a core diagnostic method for genetic syndromes, infertility, and recurrent miscarriage cases.

7. 📊 High-Performance Bioinformatics Workstations

Function: Analyze large genomic datasets and interpret DNA variants
Used for: Clinical reporting, variant calling, annotation

With NGS generating gigabytes of raw data, advanced computational power and bioinformatics tools are vital for producing clear, actionable reports.
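Variant calling is far more involved than any short example, but one quantity commonly reported alongside each call is the variant allele fraction (VAF): the share of reads at a position that support the alternate allele. The Python sketch below computes VAF and applies a simple depth and VAF filter. The `Variant` record, the read counts, and the thresholds (minimum depth 20, minimum VAF 0.2) are hypothetical illustrations, not clinical criteria.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    """Hypothetical minimal variant record: position plus read counts."""
    chrom: str
    pos: int
    ref_reads: int
    alt_reads: int

    @property
    def depth(self) -> int:
        return self.ref_reads + self.alt_reads

    @property
    def vaf(self) -> float:
        # Fraction of reads supporting the alternate allele.
        return self.alt_reads / self.depth if self.depth else 0.0


def passes_filter(v: Variant, min_depth: int = 20, min_vaf: float = 0.2) -> bool:
    """Illustrative filter: enough coverage and enough alternate-allele support."""
    return v.depth >= min_depth and v.vaf >= min_vaf


calls = [
    Variant("chr1", 12_345, ref_reads=48, alt_reads=52),  # VAF 0.52
    Variant("chr2", 67_890, ref_reads=95, alt_reads=5),   # VAF 0.05
    Variant("chr3", 11_111, ref_reads=6, alt_reads=6),    # depth only 12
]
kept = [v for v in calls if passes_filter(v)]
print([(v.chrom, v.pos, round(v.vaf, 2)) for v in kept])  # [('chr1', 12345, 0.52)]
```

Germline heterozygous variants are expected near a VAF of 0.5, while somatic tumor variants can sit much lower, so real thresholds depend on the assay and its validation.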

8. 🧫 Tissue Culture Hoods and CO₂ Incubators

Function: Grow and maintain live cells for cytogenetic testing
Used for: Amniotic fluid culture, bone marrow cytogenetics, cancer cell studies

These are essential for chromosome-based tests, ensuring healthy, dividing cells for analysis.

9. 💡 Spectrophotometers and Fluorometers

Function: Quantify DNA/RNA and assess purity
Used for: Quality control before PCR or sequencing

These instruments help maintain accuracy and reproducibility in molecular workflows.
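As a worked example of spectrophotometric QC: for double-stranded DNA, an absorbance of 1.0 at 260 nm (1 cm path length) corresponds to roughly 50 ng/µL (about 40 ng/µL for RNA and 33 ng/µL for single-stranded DNA), and an A260/A280 ratio near 1.8 is conventionally read as DNA without significant protein contamination (about 2.0 for RNA). The Python sketch below applies those conventions; the absorbance readings and the 1.7 to 2.0 acceptance window are hypothetical.

```python
# Conventional conversion factors (ng/uL per A260 unit, 1 cm path length).
CONVERSION_NG_PER_UL = {"dsDNA": 50.0, "RNA": 40.0, "ssDNA": 33.0}


def concentration_ng_per_ul(a260: float, sample_type: str = "dsDNA",
                            dilution_factor: float = 1.0) -> float:
    """Estimate nucleic acid concentration from A260 (Beer-Lambert approximation)."""
    return a260 * CONVERSION_NG_PER_UL[sample_type] * dilution_factor


def purity_ok(a260: float, a280: float, low: float = 1.7, high: float = 2.0) -> bool:
    """Hypothetical acceptance window on the A260/A280 ratio."""
    return low <= a260 / a280 <= high


# Hypothetical reading of a DNA sample diluted 1:10 before measurement.
a260, a280 = 0.25, 0.135
print(concentration_ng_per_ul(a260, "dsDNA", dilution_factor=10))  # 125.0
print(round(a260 / a280, 2), purity_ok(a260, a280))                 # 1.85 True
```

Fluorometers, which use dyes that bind nucleic acids specifically, are often preferred for concentration when contaminants also absorb at 260 nm; the ratio check above only flags purity problems.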

10. 🗂️ LIMS (Laboratory Information Management System)

Function: Manage sample tracking, test data, reporting, and compliance
Used for: Workflow automation and secure data handling

A robust LIMS ensures that testing is not just accurate — but also efficient, traceable, and patient-safe.
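At its core, LIMS sample tracking is a record per sample plus an append-only history of who changed what and when, which is what makes results traceable. The Python sketch below models that idea with a fixed sequence of workflow stages that cannot be skipped. The class names, stage names, sample ID, and user names are all hypothetical and do not correspond to any particular LIMS product.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical workflow stages, in the order every sample must pass through them.
STAGES = ["received", "extracted", "qc_passed", "sequenced", "reported"]


@dataclass(frozen=True)
class AuditEntry:
    """One immutable line in a sample's chain-of-custody history."""
    timestamp: datetime
    user: str
    action: str


@dataclass
class Sample:
    sample_id: str
    status: str = "received"
    history: list[AuditEntry] = field(default_factory=list)

    def advance(self, new_status: str, user: str) -> None:
        """Move to the next stage only, recording who made the change and when."""
        current = STAGES.index(self.status)
        if new_status not in STAGES or STAGES.index(new_status) != current + 1:
            raise ValueError(
                f"{self.sample_id}: cannot move from {self.status!r} to {new_status!r}"
            )
        self.history.append(
            AuditEntry(datetime.now(timezone.utc), user, f"{self.status} -> {new_status}")
        )
        self.status = new_status


# Usage with a hypothetical sample ID and user names.
s = Sample("GA-0001")
s.advance("extracted", user="tech_a")
s.advance("qc_passed", user="tech_b")
print(s.status, [e.action for e in s.history])
```

Rejecting out-of-order transitions (for example, jumping from QC straight to reporting) is one simple way a system can enforce that every reported result passed through each required step.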

Why It Matters

The right instruments enable:
✅ Faster turnaround times
✅ Lower error rates
✅ Better diagnostic precision
✅ Integration with digital health records
✅ Scalable research and testing capacity

At Greenarray Genomics, We Invest in Excellence

Our Pune-based lab combines global-standard instruments with expert human insight. From automated extraction to real-time analysis and genetic counseling, every step is powered by top-tier technology and a patient-first approach.


Engineering Excellence: Comparing Spline and Welded Roll Connections for Maximum Plate Rolling Efficiency

In the realm of heavy-duty metal forming, the drive for precision, durability, and efficiency is paramount. Industries around the world rely on advanced machinery to shape thick plates into perfectly curved structures used in construction, shipbuilding, oil and gas, and many other sectors. At the heart of this technology lies the plate rolling machine, a robust piece of engineering designed to roll and form metal plates into cylindrical or conical shapes.

Among the key design aspects that determine the performance of these machines are the connections that transmit power to the rolls — particularly the spline and welded roll connections. Each method has its strengths and potential limitations. This article delves into how these connection types impact plate rolling operations and how choices made by plate rolling machine manufacturers directly influence efficiency and product quality.

Understanding Plate Rolling Machines

Before comparing spline and welded roll connections, it’s important to understand the core function of plate rolling machines. A plate rolling machine operates by passing a flat metal plate between rolls. The pressure and movement induce plastic deformation, bending the plate into a desired curvature.

There are various configurations, including:

2-roll machines: Typically used for thin sheets.

3-roll machines: The traditional workhorse, suitable for a wide range of thicknesses.

4 roll plate rolling machine: A modern solution offering improved pre-bending capability and reduced handling.

The 4 roll plate rolling machine has gained popularity for its ability to clamp and square the plate automatically, minimizing operator intervention and errors. This machine also excels at creating consistent cylindrical parts with precise roundness. Such advancements cater to industries demanding high productivity and accuracy.
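A basic calculation behind rolling any cylinder is the flat blank length. For the large radius-to-thickness ratios typical of plate rolling, the material at mid-thickness (the neutral axis) neither stretches nor compresses, so the blank length is approximately π × mean diameter, where mean diameter = inside diameter + plate thickness. A minimal Python sketch with hypothetical dimensions; in practice manufacturers' own allowances for pre-bend flats and weld gaps also apply.

```python
import math


def blank_length_mm(inside_diameter_mm: float, thickness_mm: float) -> float:
    """Approximate flat length needed to roll a full cylinder, measured at mid-thickness."""
    mean_diameter = inside_diameter_mm + thickness_mm
    return math.pi * mean_diameter


# Hypothetical shell: 2000 mm inside diameter rolled from 20 mm plate.
print(round(blank_length_mm(2000, 20), 1))  # 6346.0
```

Measuring at the inside or outside diameter instead would misjudge the blank by about π × thickness (roughly 63 mm here), which is why roundness on thick shells depends on getting this figure right before rolling starts.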

The Rise of Automation in Plate Bending

As industries push for increased throughput and consistent quality, automation has become central to modern metal forming. An Automatic bending machine integrates CNC systems that control roll positioning, pressure, and rotation, allowing for repeatable and highly accurate bending cycles.

Automated systems significantly cut down cycle times, reduce reliance on skilled manual adjustments, and ensure that each product matches tight tolerances. They are also essential for handling complex shapes or sequences like variable-radius bending.

The choice of internal mechanical design — including how the rolls are connected and powered — plays a vital role in ensuring that automated systems operate reliably and efficiently over long periods.

Spline vs Welded Roll Connections: The Basics

What is a Spline Connection?

A spline is essentially a series of ridges (or teeth) machined onto a shaft that fit into corresponding grooves in a mating hub (in this case, inside the roll). When torque is applied, the splines engage and transmit rotational force effectively without slippage.

Advantages include:

Even distribution of torque across multiple contact surfaces.

The ability to absorb minor misalignments or deflections.

Easier maintenance and replacement since the roll can be slid off the shaft without cutting or grinding.

What is a Welded Connection?

In a welded connection, the roll is directly welded to the driving shaft. This creates a solid, permanent bond that transmits torque through the entire welded area.

Advantages include:

A rigid connection with zero backlash.

Lower manufacturing cost since it eliminates machining splines and matching hubs.

Compact design, often allowing for slightly shorter assemblies.

However, welded joints are subject to fatigue over time, especially under cyclic loading. Inspection methods such as ultrasonic or dye penetrant tests are often needed to monitor weld integrity.

Performance and Efficiency Considerations

Torque Transmission

One of the primary tasks of the roll connection is to transmit torque from the drive system to the roll. In heavy-duty applications, especially when rolling thick or high-strength steel, the torque demands are enormous.

Spline connections shine here due to:

Their ability to spread torque loads across many surfaces, reducing localized stress.

Less susceptibility to micro-cracks that can originate at a welded joint.

Welded connections handle torque effectively but can become points of stress concentration. Over time, repeated heavy loading may cause micro-fissures, leading to eventual failure if not detected early.
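To put rough numbers on where the stress concentrates, standard machine-design texts (such as Shigley's Mechanical Engineering Design) estimate the nominal shear stress in a circumferential fillet weld of leg size h around a shaft of diameter d under torque T as τ = 2T / (π × 0.707h × d²), compared with τ = 16T / (π d³) at the surface of the solid shaft itself. The Python sketch below compares the two, assuming the weld runs around the shaft circumference; the torque, shaft diameter, and weld size are hypothetical. It gives nominal stresses only and ignores the stress concentration and fatigue factors that actually govern weld life.

```python
import math


def shaft_shear_mpa(torque_nm: float, shaft_d_mm: float) -> float:
    """Maximum torsional shear stress at the surface of a solid round shaft."""
    d = shaft_d_mm / 1000.0  # mm -> m
    return 16 * torque_nm / (math.pi * d**3) / 1e6  # Pa -> MPa


def fillet_weld_shear_mpa(torque_nm: float, shaft_d_mm: float, leg_mm: float) -> float:
    """Nominal throat shear stress in a circumferential fillet weld under torsion."""
    d = shaft_d_mm / 1000.0
    throat = 0.707 * leg_mm / 1000.0  # effective throat of an equal-leg fillet weld, m
    return 2 * torque_nm / (math.pi * throat * d**2) / 1e6


# Hypothetical roll drive: 100 kN*m torque, 300 mm shaft, 20 mm fillet weld.
T, d, h = 100_000.0, 300.0, 20.0
print(f"shaft surface: {shaft_shear_mpa(T, d):.1f} MPa")
print(f"weld throat:   {fillet_weld_shear_mpa(T, d, h):.1f} MPa")
```

With these numbers the weld throat carries roughly 50 MPa against about 19 MPa at the shaft surface, before any stress concentration at the weld toe is considered, which is consistent with the weld being the likely origin of fatigue cracks.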

Alignment and Concentricity

Proper alignment between the roll and the drive shaft is critical for producing uniformly bent plates. Even slight eccentricities can lead to oval or inconsistent products.

Spline systems, due to precision machining, maintain excellent concentricity. Moreover, if wear develops, a roll can be re-sleeved or replaced without disturbing the drive shaft.

In welded designs, any misalignment introduced during welding is permanent unless the entire assembly is cut out and redone. Skilled welding is essential to avoid warping during cooling.

Maintenance and Downtime

When downtime costs thousands of dollars per hour, easy maintenance becomes vital. This is a key area where spline connections typically outperform welded designs.

With splines, the roll can be easily removed for resurfacing or replacement.

Welded rolls require cutting the weld, machining off remnants, and welding a new roll — a much longer process.

For operations using an Automatic bending machine, quick maintenance ensures the CNC system can return to its scheduled cycles rapidly, maintaining productivity targets.

Lifecycle Cost Analysis

While welded designs might seem attractive due to their lower upfront cost, the long-term economics can favor spline connections. This is due to:

Lower costs of repairs and shorter downtimes.

Longer lifespan of rolls and shafts since each component can be replaced individually.

Reduced risks of catastrophic failure, which might damage expensive machine bases or gear systems.

Leading plate rolling machine manufacturers often provide options for both connection types, letting buyers weigh capital costs against lifecycle benefits.

Innovations by Plate Rolling Machine Manufacturers

Global plate rolling machine manufacturers have pushed the envelope in both spline and welded designs. Advances include:

Case-hardened spline shafts that resist wear even under intense use.

Automated monitoring of torque loads and vibrations to predict maintenance needs.

Hybrid designs where rolls have spline hubs but are also secured with high-strength locking elements, blending the best of both worlds.

For high-volume operations, investing in a 4 roll plate rolling machine with splined connections can be a strategic choice. The four-roll system allows for faster cycle times, reduced re-rolling, and tight diameter tolerances, while splines ensure long service intervals.

The Role of CNC and Automation in Modern Plate Bending

Today, an Automatic bending machine paired with advanced roll connection technology delivers unmatched productivity. Features include:

Automatic calculation of roll positions based on plate thickness and desired radius.

Memory functions that store programs for repeat jobs.

Adaptive bending where sensors detect actual material spring-back and adjust rolls in real time.
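The automatic calculation of roll positions can be illustrated with idealized geometry for a three-roll pyramid arrangement: the top roll sits inside the curve against the plate's inner surface, and two bottom rolls, centred a distance a either side of it, support the outer surface. If the plate bends to an exact circle with no springback, right-triangle geometry gives how far the top roll must travel below its flat-plate contact position. This is a hypothetical, simplified model for illustration only; real CNC controllers (including those on 4-roll machines) add springback compensation and material data that this sketch ignores, and the roll dimensions below are made up.

```python
import math


def top_roll_travel_mm(inner_radius_mm: float, thickness_mm: float,
                       top_roll_r_mm: float, bottom_roll_r_mm: float,
                       half_spacing_mm: float) -> float:
    """Idealized top-roll travel below flat-plate contact for a 3-roll pyramid.

    Assumes the plate forms a perfect arc with no springback: the top roll touches
    the inner surface, and the bottom rolls, centred +/- half_spacing_mm from the
    top roll's axis, touch the outer surface.
    """
    r_i = inner_radius_mm
    r_o = inner_radius_mm + thickness_mm
    if r_i <= top_roll_r_mm or r_o + bottom_roll_r_mm <= half_spacing_mm:
        raise ValueError("target radius is outside this geometry's valid range")
    # Vertical offset between top and bottom roll centres while rolling the arc.
    bent = math.sqrt((r_o + bottom_roll_r_mm) ** 2 - half_spacing_mm ** 2) - (r_i - top_roll_r_mm)
    # The same offset when the flat plate just touches all three rolls.
    flat = bottom_roll_r_mm + thickness_mm + top_roll_r_mm
    return flat - bent


# Hypothetical machine: 150 mm top roll, 125 mm bottom rolls 300 mm off centre,
# rolling 20 mm plate to a 1000 mm inside radius.
print(top_roll_travel_mm(1000, 20, 150, 125, 300))  # 40.0
```

Tighter radii need more travel and larger radii need less, approaching zero for a flat plate; a controller would then add an empirically determined springback allowance on top of this geometric figure.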

A strong, precisely aligned connection like a spline helps ensure that these CNC commands translate directly into consistent material movement, with minimal backlash or play.

Case Study: Heavy Vessel Manufacturing

Consider a plant producing thick-walled pressure vessels for petrochemical use. They employ a 4 roll plate rolling machine with spline-connected rolls. This setup allows them to:

Pre-bend and roll large plates in a single pass.

Quickly swap out worn rolls without affecting the drive shafts.

Maintain concentric rolling under varying loads, critical for vessels that must pass stringent roundness inspections.

Their older line, equipped with welded roll machines, required full shutdowns and days of work to replace a roll, dramatically impacting delivery schedules. Over a five-year period, the savings from reduced downtime alone justified the higher initial cost of the spline machines.

Choosing the Right Connection for Your Needs

When evaluating a new plate rolling system, decision-makers should consult closely with plate rolling machine manufacturers. Key questions include:

What is the typical thickness and material strength you will roll?

How many hours per day will the machine run?

How critical is quick maintenance and minimal downtime?

Are you planning for high automation levels with an Automatic bending machine?

For small workshops or lower utilization, welded roll connections might still make economic sense. For high-capacity shops or demanding specifications, investing in spline-connected rolls often delivers superior long-term value.

The Future: Intelligent Monitoring and Predictive Maintenance

Looking ahead, the integration of Industry 4.0 principles will reshape even the fundamentals of roll connections. Sensors embedded in spline hubs can now monitor:

Micro-vibrations indicating wear.

Load spikes that could signal impending failure.

Temperature anomalies pointing to lubrication or alignment problems.

Paired with the control systems of an Automatic bending machine, this creates a self-learning ecosystem where maintenance is scheduled based on real data, extending machine life and optimizing production schedules.
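Scheduling maintenance from real sensor data often starts with something as simple as flagging readings that deviate sharply from recent behaviour. The Python sketch below applies a rolling z-score to a stream of vibration readings; the window size, the threshold of 3 standard deviations, and the readings themselves are hypothetical, and production condition-monitoring systems use considerably richer models.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings, window: int = 5, threshold: float = 3.0):
    """Yield (index, value) for readings far outside the recent rolling window."""
    recent = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), stdev(recent)
            # Flag values more than `threshold` standard deviations from the recent mean.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield i, value
        recent.append(value)


# Hypothetical vibration amplitudes (mm/s) with one spike at index 7.
vibration = [2.1, 2.0, 2.2, 2.1, 2.0, 2.2, 2.1, 6.5, 2.1, 2.0]
print(list(flag_anomalies(vibration)))  # [(7, 6.5)]
```

One limitation is visible in the example: once the spike enters the window it inflates the standard deviation, so for the next few readings the detector is less sensitive. Real systems typically use robust statistics or separate baselines to avoid this.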

Conclusion: Engineering Excellence in Plate Rolling

The choice between spline and welded roll connections is more than a mechanical decision; it reflects a philosophy of engineering excellence. Spline connections embody precision, serviceability, and longevity, perfectly suited to the demands of automated, high-output facilities. Welded connections offer simplicity and lower upfront costs, fitting well in smaller or specialized operations.

By working closely with experienced plate rolling machine manufacturers, businesses can align their equipment choices with operational goals. Whether selecting a robust 4 roll plate rolling machine for high-volume production or an advanced Automatic bending machine for intricate shapes, ensuring the right roll connection is key to maximizing efficiency, minimizing lifecycle costs, and maintaining top-tier quality.

In a competitive global market, these engineering decisions define who leads and who follows. As technology continues to advance, the pursuit of optimal roll connections remains at the very heart of bending innovation — shaping not just metal, but the future of modern manufacturing.

#3 roll plate bending machine#3 roll bending machine#3 roll plate rolling machine#roll bending machine


Engineered Systems for Carton Accumulation and Packaging Line Optimization

Efficient carton accumulation is critical in any manufacturing facility where uninterrupted flow and minimal downtime are essential. In high-speed environments, such as food and beverage packaging, pharmaceuticals, and consumer goods production, properly engineered accumulation systems can significantly improve throughput while maintaining product integrity.

An engineered system for carton handling incorporates accumulation conveyors, guide rails, automated stops, and intelligent controls to buffer and sequence products precisely. These systems serve as a safeguard against equipment hiccups by holding cartons during brief line stoppages or slowdowns, thus preventing costly shutdowns and product waste.

At Dillin, we understand that accumulation isn’t a one-size-fits-all solution. Our modular accumulation conveyors are designed to accommodate various packaging formats, including shrink-wrapped bundles, cases, and individual cartons. Features like zero-pressure accumulation, zone-based control, and seamless integration with upstream and downstream equipment enable smarter and more scalable operations.
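Zero-pressure, zone-based accumulation can be pictured as a row of zones that each hold at most one carton, where a carton moves forward only when the zone ahead of it is empty, so cartons never push against each other. The discrete-time Python sketch below illustrates that rule. It is a hypothetical simplification, not Dillin's control logic; real systems use photo-eyes and independently driven zones.

```python
from __future__ import annotations


def step(zones: list[bool], discharge_open: bool, new_carton: bool) -> list[bool]:
    """Advance a zero-pressure accumulation conveyor by one time step.

    zones[0] is the infeed zone and zones[-1] the discharge zone; True means the
    zone currently holds a carton. A carton only moves into an empty zone.
    """
    zones = zones.copy()
    # Release the lead carton if the downstream machine is accepting product.
    if discharge_open and zones[-1]:
        zones[-1] = False
    # Scan from discharge back to infeed so each carton moves at most one zone.
    for i in range(len(zones) - 2, -1, -1):
        if zones[i] and not zones[i + 1]:
            zones[i], zones[i + 1] = False, True
    # Accept a new carton only if the infeed zone is free; otherwise upstream must hold.
    if new_carton and not zones[0]:
        zones[0] = True
    return zones


# Downstream machine stopped while one carton per step keeps arriving.
zones = [False] * 4
for _ in range(5):
    zones = step(zones, discharge_open=False, new_carton=True)
print(zones)  # [True, True, True, True]

# Downstream restarts: the lead carton leaves and the queue indexes forward.
zones = step(zones, discharge_open=True, new_carton=False)
print(zones)  # [False, True, True, True]
```

With four zones and one arrival per step, the conveyor absorbs a four-step downstream stop; on the fifth step the infeed is full and upstream equipment has to hold. This is why accumulation length is sized against the expected duration of downstream stoppages.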

Moreover, pairing carton accumulation with air conveyor systems enhances the entire material handling process. Products move efficiently from one phase to another, maintaining proper spacing and orientation for labeling, inspection, or palletizing. This combination forms a cohesive, reliable, and intelligent packaging conveyor system that can adapt to changing production demands.

Our engineered systems are developed with durability, precision, and future expansion in mind. Whether you are retrofitting an existing line or building a new facility, our customized solutions ensure maximum ROI and consistent performance.

Source URL: https://techtechnogear.info/engineered-systems-for-carton-accumulation-and-packaging-line-optimization/


Postdoctoral Research Associate Positions in Alzheimer’s disease: Genetics

Washington University School of Medicine

Postdoc for Whole-Genome Sequencing data in Alzheimer's disease and dementia research on biomarkers and therapeutic targets

See the full job description on jobRxiv: https://jobrxiv.org/job/washington-university-school-of-medicine-27778-postdoctoral-research-associate-positions-in-alzheimers-disease-genetics/?feed_id=97322

#Aging_Biology #alzheimers_disease #biomarkers #ft #genomics #ScienceJobs #hiring #research
