#High-speed CMOS circuitry


Text

74 series logic, transistor–transistor logic (TTL), 74HC AND gate

74HC Series 10 V SMT Triple 2-Channel Analog Multiplexer/Demultiplexer - TSSOP-16

#Logic#74 Series#A/HC/T#74HC4053PW#118#Nexperia#74 series logic#transistor–transistor logic (TTL)#74hc and gate#display drivers#74 Series Logic ICs including#74hc buffer#cmos buffer circuit#High-speed CMOS circuitry#logic gates#74HC Series

Text

Bus-hold devices, 74HC family High-speed CMOS circuitry, Logic Circuit

74HC Series 6 V 3-State Surface Mount Shift Register - SOIC-16

#Logic#74 Series#A/HC/T#74HC595D#118#Nexperia#Bus-hold devices#74HC family High-speed CMOS circuitry#Logic Circuit#what is a 74-series chip#7400-series integrated circuits#LED lighting#74HC TTL#logic integrated circuits#7400 logic gates

Text

74 A/HC/T Series Logic Chips, Types of 74 A/HC/T Series Logic Chips

74HC Series 6 V Dual Retriggerable Monostable Multivibrator with Reset - TSSOP-16

#118#Nexperia#74HC123PW#Logic#74 Series A/HC/T#Series Logic Chips#Types of 74 A/HC/T Series Logic Chips#74HC High-speed CMOS circuitry#Three-state output#combining the speed of TTL#TC74HC/HCT Series#Logic Circuit#NAND Gate

Text

Understanding the Different Types of X-Ray Detectors in Medical Imaging

X-ray detectors are essential components in medical imaging, playing a critical role in capturing images that help diagnose a wide range of conditions. Over the years, advancements in technology have led to the development of various types of X-ray detectors, each with its unique features and applications. In this blog, we will explore the different types of X-ray detectors used in medical imaging and their significance.

1. Film-Based X-Ray Detectors

Film-based X-ray detectors, the earliest form of X-ray detection, involve the use of photographic films to capture images. When X-rays pass through a patient's body, they expose the film, which is then developed to produce an image. While film-based detectors offer high image resolution, they are gradually being replaced by digital technologies due to their time-consuming processing and the need for chemical development.

2. Computed Radiography (CR) Detectors

Computed Radiography (CR) detectors are an intermediate step between traditional film and fully digital systems. CR detectors use photostimulable phosphor plates to capture X-ray images. After exposure, the plate is scanned by a laser, releasing the stored energy as light, which is then converted into a digital image. CR detectors offer several advantages over film, including the ability to digitally enhance images and store them electronically. However, they still require an additional step of processing the phosphor plates.

3. Digital Radiography (DR) Detectors

Digital Radiography (DR) detectors represent the latest in X-ray detection technology, offering significant improvements in image quality, speed, and efficiency. DR detectors come in two main types: indirect and direct.

Indirect DR Detectors: These detectors use a scintillator material, typically cesium iodide or gadolinium oxysulfide, to convert X-rays into visible light. The light is then detected by a photodiode array or a charge-coupled device (CCD), which converts it into an electrical signal and finally into a digital image. Indirect DR detectors are widely used due to their high sensitivity and relatively low cost.

Direct DR Detectors: Unlike indirect detectors, direct DR detectors do not use a scintillator. Instead, they employ a photoconductor material, such as amorphous selenium, to directly convert X-rays into an electrical signal. This direct conversion process results in higher image resolution and reduced blur, making direct DR detectors ideal for applications requiring detailed imaging, such as mammography.

4. Flat-Panel Detectors (FPDs)

Flat-Panel Detectors (FPDs) are a type of DR detector and are increasingly popular in modern medical imaging systems. FPDs are available in both indirect and direct configurations, offering the benefits of high image quality, rapid image acquisition, and digital workflow integration. FPDs are lightweight, compact, and have a large imaging area, making them versatile for various medical imaging applications, including radiography, fluoroscopy, and cone-beam computed tomography (CBCT).

5. Charge-Coupled Device (CCD) Detectors

Charge-Coupled Device (CCD) detectors are used in some specialized X-ray imaging systems. CCD detectors use a scintillator to convert X-rays into visible light, which is then captured by the CCD sensor. The sensor converts the light into an electrical charge, which is processed to create a digital image. CCD detectors are known for their high sensitivity and ability to capture images with low noise, making them suitable for applications like dental X-rays and small animal imaging.

6. Complementary Metal-Oxide-Semiconductor (CMOS) Detectors

Complementary Metal-Oxide-Semiconductor (CMOS) detectors are another type of digital X-ray detector, similar to CCD detectors but with some distinct advantages. CMOS detectors integrate the sensor and processing circuitry on a single chip, resulting in lower power consumption and faster readout speeds. These detectors are compact and cost-effective, making them ideal for portable and handheld X-ray imaging systems. CMOS detectors are also increasingly used in dental and mammography imaging due to their high image quality and efficiency.

Conclusion

X-ray detectors are a critical component of medical imaging, with various types available to suit different applications and needs. From traditional film-based detectors to advanced digital systems like DR, FPDs, and CMOS detectors, each type offers unique benefits that contribute to the accurate diagnosis and treatment of patients. As technology continues to evolve, we can expect further advancements in X-ray detection, leading to even better imaging capabilities and improved patient outcomes.

Whether you're a healthcare professional or simply interested in medical technology, understanding the different types of X-ray detectors can help you appreciate the innovations that make modern medical imaging possible.

Text

Analog Devices HMCAD1511TR Comprehensive Guide

Analog Devices HMCAD1511 High Speed Multi-Mode 8-Bit 30 MSPS to 1 GSPS A/D Converter

General Description

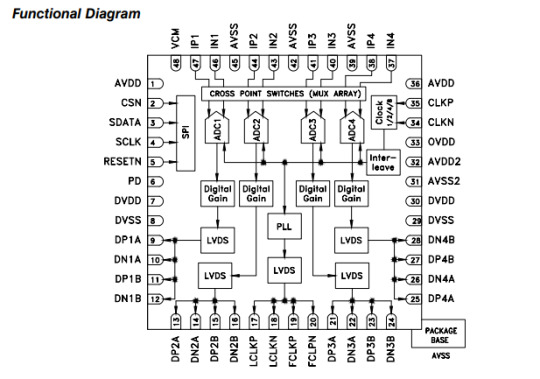

The HMCAD1511 is a versatile, high-performance, low-power analog-to-digital converter (ADC) that uses time-interleaving to increase its sampling rate. Integrated cross-point switches route the user-selected input to each ADC channel.

In single channel mode, any one of the four inputs can be selected as the input to the single ADC channel. In dual channel mode, any of the four inputs can be selected for each of the two ADC channels. In quad channel mode, any input can be assigned to any ADC channel.

An internal, low jitter and programmable clock divider makes it possible to use a single clock source for all operational modes.

The HMCAD1511 is based on a proprietary structure and employs internal reference circuitry, a serial control interface, and serial LVDS/RSDS output data. Data and frame synchronization clocks are supplied for data capture at the receiver. Internal 1X to 50X digital coarse gain, with ENOB > 7.5 up to 16X gain, allows digital implementation of oscilloscope gain settings. Internal digital fine gain can be set separately for each ADC to calibrate for gain errors.

Various modes and configuration settings can be applied to the ADC through the serial control interface (SPI). Each channel can be powered down independently and data format can be selected through this interface. A full chip idle mode can be set by a single external pin. Register settings determine the exact function of this pin.

HMCAD1511 is designed to easily interface with Field Programmable Gate Arrays (FPGAs) from several vendors.

Key Features and Specifications

Multi-Mode Operation:

· Single Channel Mode: Maximum sampling rate of 1000 MSPS

· Dual Channel Mode: Maximum sampling rate of 500 MSPS

· Quad Channel Mode: Maximum sampling rate of 250 MSPS
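As a quick sanity check on the mode list, the aggregate conversion rate is 1 GSPS in every mode, consistent with a fixed pool of interleaved conversion capacity being shared among 1, 2, or 4 channels. The Python sketch below does only that arithmetic. The rates come from the list above; the raw output bit rate ignores frame and clock overhead, and the LVDS lane count passed to `per_lane_rate_gbps` is a hypothetical parameter, not a datasheet value.

```python
# Maximum sampling rate per channel for each HMCAD1511 mode, in MSPS
# (values from the "Multi-Mode Operation" list).
MAX_RATE_MSPS = {1: 1000, 2: 500, 4: 250}
RESOLUTION_BITS = 8


def per_channel_rate_msps(channels: int) -> int:
    """Maximum per-channel sampling rate for a 1-, 2- or 4-channel mode."""
    if channels not in MAX_RATE_MSPS:
        raise ValueError("the HMCAD1511 runs in 1-, 2- or 4-channel mode")
    return MAX_RATE_MSPS[channels]


def aggregate_rate_msps(channels: int) -> int:
    """Total conversions per second summed over all active channels."""
    return channels * per_channel_rate_msps(channels)


def output_bit_rate_gbps(channels: int) -> float:
    """Raw payload bit rate in Gbit/s (frame and clock overhead ignored)."""
    return aggregate_rate_msps(channels) * RESOLUTION_BITS / 1000


def per_lane_rate_gbps(channels: int, lanes: int) -> float:
    """Payload bit rate per LVDS lane; `lanes` is a hypothetical input."""
    return output_bit_rate_gbps(channels) / lanes


for ch in (1, 2, 4):
    print(f"{ch} ch: {per_channel_rate_msps(ch)} MSPS each, "
          f"{aggregate_rate_msps(ch)} MSPS total, "
          f"{output_bit_rate_gbps(ch):.1f} Gbit/s raw")
```

Whatever the mode, the receiver (typically an FPGA) has to absorb roughly 8 Gbit/s of sample data plus framing.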

Performance Highlights:

· Signal-to-Noise Ratio (SNR): Up to 49.8 dB in single channel mode

· Power Dissipation: Ultra-low at 710 mW including I/O at 1000 MSPS

· Start-Up Time: 0.5 µs from sleep and 15 µs from power down
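The SNR and power figures can be turned into two commonly used derived numbers: an approximate effective number of bits from the ideal-quantizer relation SNR = 6.02·N + 1.76 dB, and an energy per conversion. This Python sketch is illustrative only. A proper ENOB uses SINAD (which includes distortion), so an SNR-only estimate is an upper bound, and the Walden figure of merit P / (2^ENOB · fs) is a general comparison metric rather than something quoted in the list above.

```python
def enob_from_snr(snr_db: float) -> float:
    """Effective bits from the ideal-quantizer relation SNR = 6.02*N + 1.76 dB."""
    return (snr_db - 1.76) / 6.02


def energy_per_sample_pj(power_mw: float, rate_msps: float) -> float:
    """Energy per conversion in pJ: mW / MSPS gives nJ, so scale by 1000."""
    return power_mw * 1000 / rate_msps


def walden_fom_pj(power_mw: float, rate_msps: float, enob: float) -> float:
    """Walden figure of merit P / (2**ENOB * fs), in pJ per conversion step."""
    return energy_per_sample_pj(power_mw, rate_msps) / 2 ** enob


enob = enob_from_snr(49.8)  # single-channel SNR from the list above
print(f"ENOB ~ {enob:.2f} bits")
print(f"Energy per sample ~ {energy_per_sample_pj(710, 1000):.0f} pJ")
print(f"Walden FOM ~ {walden_fom_pj(710, 1000, enob):.2f} pJ/step")
```

With 49.8 dB and 710 mW at 1000 MSPS this gives roughly 8 effective bits (an upper bound, as noted), 710 pJ per sample, and about 2.8 pJ per conversion step.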

Integration and Control:

· Integrated cross-point switches (MUX array) for flexible input selection

· Internal low jitter programmable clock divider

· Digital gain control from 1X to 50X with no missing codes up to 32X

· Serial LVDS/RSDS output for high-speed data transfer

Power and Environmental Specifications:

· Supply Voltage: 1.8 V

· Logic Levels: 1.7–3.6V CMOS logic on control interface pins

· Operating Temperature: -40°C to 85°C

Compatible Electronic Component Series

To maximize the functionality and performance of the HMCAD1511TR, it is essential to pair it with compatible electronic components. Here are five recommended series:

1. Texas Instruments THS4500 Series (Differential Amplifiers)

· Category: Differential Amplifiers

· Parameter Values: Gain bandwidth: 2 GHz, Slew rate: 4200 V/µs, Supply voltage: 5V

· Application Scenarios: Ideal for interfacing with high-speed ADCs in communications systems

· Recommended Models: THS4503, THS4508

2. Analog Devices AD8138 Series (Differential Drivers)

· Category: Differential Drivers

· Parameter Values: Bandwidth: 270 MHz, Slew rate: 1400 V/µs, Supply voltage: ±5V

· Application Scenarios: Suitable for high-speed data acquisition systems and ADC driver applications

· Recommended Models: AD8138, AD8139

3. Linear Technology (Analog Devices) LT6200 Series (Operational Amplifiers)

· Category: Operational Amplifiers

· Parameter Values: Gain bandwidth: 165 MHz, Slew rate: 500 V/µs, Supply voltage: 3V to 12.6V

· Application Scenarios: High-speed signal conditioning and ADC input buffering

· Recommended Models: LT6200-5, LT6200-10

4. Maxim Integrated MAX9644 Series (Voltage References)

· Category: Voltage References

· Parameter Values: Output voltage: 1.25V, Initial accuracy: ±0.2%, Temperature coefficient: 10 ppm/°C

· Application Scenarios: Provides stable reference voltage for high-precision ADCs

· Recommended Models: MAX9644, MAX6071

5. Microchip Technology MCP6V2X Series (Instrumentation Amplifiers)

· Category: Instrumentation Amplifiers

· Parameter Values: Gain bandwidth: 1.3 MHz, Slew rate: 0.4 V/µs, Supply voltage: 1.8V to 6V

· Application Scenarios: Precision measurement systems and sensor signal conditioning

· Recommended Models: MCP6V27, MCP6V28

Text

10 Fun and Easy Electronic Circuit Projects for Beginners

Start an interesting electronics journey with these beginner projects! Learn about potentiometers, LED blinkers, and simple amplifiers, and get hands-on with how electronics actually work. Novices will enjoy these projects, as they are both fun and a good way to learn about circuitry.

1. Low Power 3-Bit Encoder Design using Memristor

This work presents the design of an encoder in three distinct configurations: CMOS, memristor, and pseudo-NMOS. The encoder is a 3-bit design. The proposed 3-bit encoder using memristor logic consumes less power than the CMOS and pseudo-NMOS versions. The complete encoder schematic is simulated in LTspice in all three configurations.
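The post does not say exactly what its 3-bit encoder computes; a common reading is an 8-to-3 binary encoder (eight one-hot inputs, three output bits). Under that assumption, each configuration (CMOS, memristor, pseudo-NMOS) has to implement the three OR functions below. This Python model is a behavioral reference for checking a schematic's truth table, not the project's circuit.

```python
from typing import List, Tuple


def encode_8_to_3(d: List[int]) -> Tuple[int, int, int]:
    """8-to-3 binary encoder: one-hot inputs d[0]..d[7] -> (Y2, Y1, Y0).

    Assumes exactly one input is 1 (a plain, non-priority encoder).
    """
    if len(d) != 8 or sorted(d) != [0] * 7 + [1]:
        raise ValueError("expected exactly one active input out of eight")
    y2 = d[4] | d[5] | d[6] | d[7]
    y1 = d[2] | d[3] | d[6] | d[7]
    y0 = d[1] | d[3] | d[5] | d[7]
    return y2, y1, y0


print(encode_8_to_3([0, 0, 0, 0, 0, 1, 0, 0]))  # (1, 0, 1): input 5
```

A priority encoder, which tolerates several active inputs, would need extra gating and is a different design.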

2. A Reliable Low Standby Power 10T SRAM Cell with Expanded Static Noise Margins

This work investigates a low standby power 10T (LP10T) SRAM cell with strong read stability and write-ability (RSNM/WSNM/WM). The robust cross-coupled structure of the proposed LP10T cell is made up of a Schmitt-trigger inverter with a double-length pull-up transistor and a regular inverter with a stacking transistor. Read disturbance is eliminated because the read path is isolated from the internal storage nodes. The cell also uses a write-assist approach that writes in pseudo-differential form using a write bit line and a control signal. The entire design was simulated with HSPICE/Tanner in 16 nm CMOS technology.

3. A Unified NVRAM and TRNG in Standard CMOS Technology

The True Random Number Generator (TRNG) provides the keys needed for cryptography and device authentication. The TRNG is usually integrated into a system as a stand-alone module, which adds to the size and complexity of the implementation. Furthermore, to support various applications, the system must store the key produced by the TRNG in non-volatile memory. However, building a Non-Volatile Random Access Memory (NVRAM) requires additional process capabilities that are either costly or unavailable.

4. High-Speed Grouping and Decomposition Multiplier for Binary Multiplication

The study introduces a high-speed grouping and decomposition multiplier as a novel method of binary multiplication. To reduce the number of partial products and the critical path delay, the proposed multiplier combines the Wallace tree and Dadda multiplier with an innovative grouping and decomposition method. The entire design is built in gate diffusion input (GDI) logic. The proposed design is compared against recent binary multipliers using 180 nm CMOS technology.
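The Wallace-tree idea that the project builds on can be sketched behaviorally: generate one partial product per multiplier bit, repeatedly compress groups of three rows into two with carry-save (3:2) adders until only two rows remain, then do a single carry-propagate addition. This Python model shows only that generic reduction. It does not reproduce the project's grouping-and-decomposition step, its GDI gates, or the Dadda variant (which differs mainly in how many compressors it places at each stage), and Python's `sum` stands in for the final hardware adder.

```python
from typing import List, Tuple


def partial_products(a: int, b: int, n: int) -> List[int]:
    """One partial-product row per bit of b: a shifted left by i, or 0."""
    return [(a << i) if (b >> i) & 1 else 0 for i in range(n)]


def carry_save_add(x: int, y: int, z: int) -> Tuple[int, int]:
    """3:2 compression of three rows: bitwise full adders, no carry chain."""
    sum_row = x ^ y ^ z
    carry_row = ((x & y) | (x & z) | (y & z)) << 1
    return sum_row, carry_row


def wallace_multiply(a: int, b: int, n: int = 8) -> int:
    """Multiply two n-bit unsigned integers with Wallace-style reduction."""
    if a < 0 or b < 0 or a >> n or b >> n:
        raise ValueError(f"operands must be unsigned {n}-bit integers")
    rows = partial_products(a, b, n)
    while len(rows) > 2:
        next_rows: List[int] = []
        # Each full group of three rows becomes two rows (sum and carry).
        for i in range(0, len(rows) - 2, 3):
            next_rows.extend(carry_save_add(*rows[i:i + 3]))
        # Rows that do not fill a group of three pass through unchanged.
        next_rows.extend(rows[len(rows) - len(rows) % 3:])
        rows = next_rows
    # Final carry-propagate addition (a fast adder in hardware).
    return sum(rows)


print(wallace_multiply(200, 123))  # 24600
```

For 8-bit operands the row count shrinks 8, 6, 4, 3, 2, so only four compressor stages sit on the critical path before the final adder, which is where the speed advantage over a row-by-row array multiplier comes from.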

5. Novel Memristor-based Nonvolatile D Latch and Flip-flop Designs

Sequential devices are the basic components of practically all digital electronic systems with memory. Because instantaneous data recovery after unforeseen data loss, such as an unplanned power outage, is critical, sequential devices need to be nonvolatile; this need motivates recent research and practice in integrating nonvolatile memristors into CMOS devices.
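To make the latch/flip-flop distinction and the value of nonvolatility concrete, here is a behavioral Python sketch: a level-sensitive D latch, and a positive-edge-triggered D flip-flop built from two latches in master-slave form. The `nonvolatile` flag and `power_cycle` method are hypothetical modeling devices standing in for memristor-backed storage; they say nothing about how the project's circuits actually save or restore state.

```python
class DLatch:
    """Level-sensitive D latch: transparent while enable is 1, holds otherwise."""

    def __init__(self, nonvolatile: bool = False):
        self.q = 0
        self.nonvolatile = nonvolatile  # hypothetical flag for memristor backup

    def update(self, d: int, enable: int) -> int:
        if enable:
            self.q = d
        return self.q

    def power_cycle(self) -> None:
        """Power loss and restore: a volatile latch loses its bit (modeled as 0)."""
        if not self.nonvolatile:
            self.q = 0


class DFlipFlop:
    """Positive-edge-triggered D flip-flop from two latches (master-slave)."""

    def __init__(self, nonvolatile: bool = False):
        self.master = DLatch(nonvolatile)
        self.slave = DLatch(nonvolatile)

    def update(self, d: int, clk: int) -> int:
        self.master.update(d, enable=1 - clk)  # master follows D while clk is low
        return self.slave.update(self.master.q, enable=clk)  # slave copies while clk is high

    def power_cycle(self) -> None:
        self.master.power_cycle()
        self.slave.power_cycle()


ff = DFlipFlop(nonvolatile=True)
ff.update(1, clk=0)  # master captures 1; output is still 0
ff.update(1, clk=1)  # rising edge: output becomes 1
ff.power_cycle()
print(ff.slave.q)    # 1: the stored bit survives the power cycle
```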

6. Ultra-Efficient Nonvolatile Approximate Full-Adder with Spin-Hall-Assisted MTJ Cells for In-Memory Computing Applications

Approximate computing seeks to lower the power usage and design complexity of digital systems at the cost of a tolerable error rate. This project presents two highly efficient magnetic approximate full adders for computing-in-memory applications. To provide non-volatility, the proposed ultra-efficient full adder blocks are connected to a memory cell based on a Magnetic Tunnel Junction (MTJ).
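The post does not give the logic of its approximate adders. To show the kind of trade-off involved, the Python sketch below uses one simple approximation, chosen here purely for illustration and not necessarily the project's design: keep the exact carry-out (a majority function) and approximate the sum as NOT Cout. Enumerating all eight input patterns shows that the sum is wrong only for 000 and 111, an error rate of 2/8 on the sum bit, while the three-input XOR is no longer needed.

```python
from itertools import product
from typing import List, Tuple

Bits = Tuple[int, int, int]


def exact_full_adder(a: int, b: int, cin: int) -> Tuple[int, int]:
    """Reference full adder: returns (sum, carry_out)."""
    return a ^ b ^ cin, (a & b) | (a & cin) | (b & cin)


def approx_full_adder(a: int, b: int, cin: int) -> Tuple[int, int]:
    """Illustrative approximation: exact majority carry, Sum = NOT Cout."""
    cout = (a & b) | (a & cin) | (b & cin)
    return 1 - cout, cout


def sum_error_cases() -> List[Bits]:
    """Input patterns (a, b, cin) where the approximate sum is wrong."""
    return [bits for bits in product((0, 1), repeat=3)
            if approx_full_adder(*bits)[0] != exact_full_adder(*bits)[0]]


errors = sum_error_cases()
print(errors, f"sum error rate = {len(errors)}/8")  # [(0, 0, 0), (1, 1, 1)] sum error rate = 2/8
```

Because the carry stays exact, errors do not ripple into higher bit positions, which keeps the arithmetic error of a multi-bit adder bounded.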

7. Improved High Speed or Low Complexity Memristor-based Content Addressable Memory (MCAM) Cell

This study proposes a novel nonvolatile Memristor-based Content Addressable Memory (MCAM) cell that combines CMOS processing technology with memristors to provide low power dissipation, high packing density, and fast read/write operations. The proposed cell uses CMOS control circuitry with latching to reduce write time, and its memory cell contains only two memristors.

8. Data Retention based Low Leakage Power TCAM for Network Packet Routing

Ternary Content Addressable Memory (TCAM) is frequently used in routing tables because of its excellent lookup performance, but its large transistor count results in significant power consumption. To reduce the leakage power wasted in TCAM, this study proposes a new state-preserving technique called Data Retention based TCAM (DR-TCAM). Based on the continuous characteristic of the mask data, the DR-TCAM can dynamically adjust the power supply of the mask cells to lower TCAM leakage power; in particular, the DR-TCAM does not erase the mask data. Simulation results show that the DR-TCAM outperforms state-of-the-art designs and consumes less power than the conventional TCAM architecture.
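The lookup a TCAM performs can be modeled in a few lines: each entry stores a value and a mask (mask bit 1 = compare, 0 = don't care), and the first matching entry wins, the way a TCAM's priority encoder picks the lowest-index hit. For routing, entries are ordered longest prefix first so that the first hit is the longest-prefix match. This Python sketch models only the search function, not leakage or the DR-TCAM power scheme; the addresses and next-hop labels are made up for the example.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TcamEntry:
    value: int   # stored bit pattern
    mask: int    # 1 = compare this bit, 0 = "don't care" (X)
    result: str  # data returned on a hit (hypothetical next-hop label)


def prefix_entry(prefix: str, length: int, result: str) -> TcamEntry:
    """Encode an IPv4 route prefix: the mask is `length` ones followed by zeros."""
    mask = ((1 << length) - 1) << (32 - length)
    return TcamEntry(int(ipaddress.IPv4Address(prefix)), mask, result)


def tcam_lookup(entries: List[TcamEntry], key: int) -> Optional[str]:
    """Return the result of the first matching entry, or None on a miss.

    Hardware compares the key with every entry in parallel and a priority
    encoder selects the lowest-index hit; this loop models that ordering.
    """
    for entry in entries:
        if ((key ^ entry.value) & entry.mask) == 0:
            return entry.result
    return None


# Entries ordered longest prefix first, so the first hit is the longest match.
table = [
    prefix_entry("10.1.0.0", 16, "port-B"),
    prefix_entry("10.0.0.0", 8, "port-A"),
    prefix_entry("0.0.0.0", 0, "default"),
]

for addr in ("10.1.2.3", "10.2.0.1", "192.168.0.1"):
    print(addr, "->", tcam_lookup(table, int(ipaddress.IPv4Address(addr))))
```

Note that every route mask here is a contiguous run of ones followed by zeros, and masks change only when routes are added or removed.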

9. One-Sided Schmitt-Trigger-Based 9T SRAM Cell for Near-Threshold Operation

This study presents a one-sided Schmitt-trigger-based 9T static random access memory (SRAM) cell that supports a bit-interleaving structure without a write-back scheme, with excellent read stability, write ability, and hold stability yields and low energy consumption. The proposed cell uses a one-sided Schmitt-trigger inverter with a single-bit-line topology to achieve a high read stability yield. Furthermore, the write ability yield is enhanced by selective power gating and a Schmitt-trigger inverter write-assist technique that regulates the inverter's trip voltage.
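The hysteresis that makes a Schmitt-trigger inverter attractive for read stability can be shown behaviorally: the output switches low only when the input rises above an upper threshold and switches back only when it falls below a lower one, so a disturbance that stays inside that window does not flip the stored value. The thresholds in this Python sketch are hypothetical numbers, not values from the study.

```python
class SchmittInverter:
    """Behavioral inverting Schmitt trigger with hysteresis.

    The output goes low only when the input rises above `v_high` and goes
    high again only when the input falls below `v_low`; between the two
    thresholds it keeps its previous state.
    """

    def __init__(self, v_low: float, v_high: float, out_high: bool = True):
        if not v_low < v_high:
            raise ValueError("v_low must be below v_high")
        self.v_low = v_low
        self.v_high = v_high
        self.out_high = out_high

    def step(self, v_in: float) -> bool:
        """Apply one input sample and return the new output (True = high)."""
        if self.out_high and v_in > self.v_high:
            self.out_high = False
        elif not self.out_high and v_in < self.v_low:
            self.out_high = True
        return self.out_high


# Hypothetical thresholds for a low supply voltage, in volts.
inv = SchmittInverter(v_low=0.3, v_high=0.5)
outputs = [inv.step(v) for v in (0.0, 0.4, 0.6, 0.4, 0.2)]
print(outputs)  # [True, True, False, False, True]
```

The 0.4 V samples fall inside the hysteresis window, so the output keeps whatever state it already had; a plain inverter with a single trip point would have no such window.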

10. Effective Low Leakage 6T and 8T FinFET SRAMs: Using Cells With Reverse-Biased FinFETs, Near-Threshold Operation, and Power Gating

Power gating is frequently used to lower SRAM leakage current, which significantly affects SRAM energy usage. After reviewing power-gated FinFET SRAMs, this project assesses three methods for lowering the energy-delay product (EDP) and leakage power of six- and eight-transistor (6T, 8T) FinFET SRAM cells. It examines the differences in EDP savings among (1) power-gating FinFETs, (2) near-threshold operation, and (3) alternative SRAM cells with low-power (LP) and shorted-gate (SG) FinFET configurations; the LP configuration reverse-biases the back gate of a FinFET and can cut leakage current by as much as 97%. SRAM cells with higher leakage benefit most from power gating, since their leakage current is reduced the most. Several SRAM cells can save more leakage current by sharing power-gating transistors.

Text

Unlocking the Digital Revolution: Understanding Digital Logic Families

Introduction

The digital revolution has transformed countless aspects of our lives, from the way we communicate to the way we access information. At the heart of this revolution lies the concept of digital logic families, the building blocks that power our advanced technologies. In this article, we will explore the significance of digital logic families and delve into the intricate world of their evolution, types, practical applications, and future advancements.

What are Digital Logic Families?

In order to comprehend the complexity of digital logic families, it is crucial to first understand the concept of digital logic itself. Digital logic refers to the fundamental building blocks of electronic circuits that manipulate binary signals through logic gates. These logic gates perform operations such as AND, OR, and NOT, allowing for intricate manipulation of binary information.

A digital logic family is a collection of integrated circuits (ICs) that share similar characteristics and use the same basic circuit technology to process and transmit digital signals. Logic families serve as the foundation for the digital devices we rely on every day, enabling efficient and reliable data processing.

Historical Evolution of Digital Logic Families

The birth of digital logic families can be traced back to the mid-20th century, when scientists and engineers began exploring the possibilities of using electronic circuits to process digital information. Milestones during this period include diode-based logic gates, followed by transistor-based families such as resistor-transistor logic (RTL), diode-transistor logic (DTL), and, later, transistor-transistor logic (TTL).

Common Types of Digital Logic Families

Transistor-Transistor Logic (TTL)

TTL is one of the earliest and most widely used digital logic families. It utilizes bipolar junction transistors to perform logic operations. TTL has various subfamilies, including low-power Schottky TTL (LS-TTL) and advanced Schottky TTL (AS-TTL). Each subfamily offers different characteristics and trade-offs, such as varying power consumption and switching speed.

Pros of TTL:

High noise immunity

Standardized 5 V supply and logic levels, widely supported by other parts

Rugged and reliable

Cons of TTL:

Higher power consumption compared to some newer logic families

Limited fan-out capability

Emitter-Coupled Logic (ECL)

ECL is a high-speed logic family based on current steering: a differential transistor pair switches a nearly constant current from one side to the other. Because its transistors never enter saturation, ECL avoids the storage delay that slows saturating logic such as TTL, which makes it well-suited for high-speed and high-frequency applications. ECL has subfamilies like positive emitter-coupled logic (PECL) and low-voltage positive emitter-coupled logic (LVPECL).

Advantages of ECL:

Extremely fast switching speed

Low power supply noise sensitivity

Excellent signal integrity

Disadvantages of ECL:

Higher power consumption compared to some other logic families

More complex design requirements

Complementary Metal-Oxide-Semiconductor (CMOS)

The CMOS logic family is widely used in modern digital circuits due to its low power consumption and ability to operate at lower voltages. It consists of complementary pairs of metal-oxide-semiconductor field-effect transistors (MOSFETs). Popular CMOS variants include low-power CMOS (LP-CMOS) and high-speed CMOS (HCMOS).

Overview of CMOS:

Low power consumption

Wide range of operating voltages

Higher resistance to noise

Benefits of CMOS:

Lower power consumption compared to TTL and ECL

Greater noise immunity

Compatibility with various IC technologies

Drawbacks of CMOS:

Reduced speed compared to ECL

Limited driving capability
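Noise immunity can be quantified with noise margins: NM_H = V_OH(min) - V_IH(min) and NM_L = V_IL(max) - V_OL(max). The Python sketch below compares standard 5 V TTL with 74HC CMOS at V_CC = 4.5 V using commonly quoted datasheet limits (the 74HC output levels are the light-load, 20 µA values); check the datasheet of the exact part before relying on these numbers.

```python
from typing import Dict, NamedTuple, Tuple


class LogicLevels(NamedTuple):
    voh_min: float  # lowest guaranteed output HIGH voltage (V)
    vol_max: float  # highest guaranteed output LOW voltage (V)
    vih_min: float  # lowest input voltage guaranteed to read as HIGH (V)
    vil_max: float  # highest input voltage guaranteed to read as LOW (V)


def noise_margins(levels: LogicLevels) -> Tuple[float, float]:
    """Return (NM_H, NM_L) in volts."""
    return levels.voh_min - levels.vih_min, levels.vil_max - levels.vol_max


FAMILIES: Dict[str, LogicLevels] = {
    "TTL (5 V)": LogicLevels(voh_min=2.4, vol_max=0.4, vih_min=2.0, vil_max=0.8),
    "74HC (VCC = 4.5 V)": LogicLevels(voh_min=4.4, vol_max=0.1, vih_min=3.15, vil_max=1.35),
}

for name, levels in FAMILIES.items():
    nm_h, nm_l = noise_margins(levels)
    print(f"{name}: NM_H = {nm_h:.2f} V, NM_L = {nm_l:.2f} V")
```

With these limits TTL has about 0.4 V of margin on each side, while 74HC has about 1.25 V, which is the sense in which CMOS offers greater noise immunity.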

Practical Applications of Digital Logic Families

Digital logic families find a wide range of practical applications in various devices, particularly through digital integrated circuits (ICs).

Digital integrated circuits (ICs)

ICs play a crucial role in the implementation of digital logic families in diverse devices. They provide the necessary circuitry for processing and transmitting digital signals efficiently. Examples of IC applications utilizing different logic families include microprocessors, microcontrollers, memory chips, and communication devices.

Role of ICs:

Integration of complex logic functions

Miniaturization of circuitry

Enhanced reliability and performance

Microprocessors and microcontrollers

Microprocessors and microcontrollers are key components in modern computing systems and embedded devices. The logic family they use depends on the application's requirements, such as power consumption, speed, and complexity. Modern microcontrollers are built almost entirely in CMOS, although some provide TTL-compatible I/O levels for interfacing with legacy logic.

Fundamentals of microprocessors:

Execution of instructions

Data processing and manipulation

Interface with peripherals and memory

Comparison of logic families used in microcontrollers:

CMOS: Lower power consumption, compatibility with various IC technologies

TTL: Robustness, easier compatibility with legacy systems

Advancements and Future Trends in Digital Logic Families

The field of digital logic families constantly evolves to meet the demands of emerging technologies and applications.

Recent developments in logic families

Recent advancements in digital logic families include the development of advanced CMOS technologies, such as FinFET and nanosheet transistors, enabling higher performance and energy efficiency. Additionally, research and innovations in emerging technologies, such as quantum computing and neuromorphic engineering, hold promising prospects for future logic families.

Exploring emerging technologies

Emerging technologies, like spin-based computing and molecular electronics, show potential for revolutionizing the field of digital logic families. These cutting-edge technologies aim to overcome the limitations of current logic families and pave the way for faster, smaller, and more energy-efficient digital devices.

Summary and Future Outlook

In summary, digital logic families are the backbone of the digital revolution, providing the essential building blocks for advanced digital technologies. As technology continues to evolve, logic families will play a crucial role in driving further advancements in areas like artificial intelligence, internet of things, and robotics.

Looking ahead, the future of digital logic families holds immense potential for transformative breakthroughs. The continued exploration of emerging technologies and the ongoing pursuit of higher performance and energy efficiency will shape the next chapters of the digital revolution.

FAQs

A. What is the role of propagation delay in digital logic families?

Propagation delay refers to the time taken for a signal to propagate through a logic gate. It affects the overall speed and timing of digital circuits. Minimizing propagation delay is crucial for achieving faster processing speeds and ensuring reliable signal transmission.
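Propagation delay sets the speed limit of a synchronous design: the clock period must cover the launching flip-flop's clock-to-Q delay, every gate delay along the longest (critical) path, and the capturing flip-flop's setup time. The Python sketch below turns that budget into a maximum clock frequency; the delay values in the example are hypothetical, and the model ignores clock skew and wiring delay.

```python
from typing import Sequence


def max_clock_mhz(gate_delays_ns: Sequence[float], clk_to_q_ns: float,
                  setup_ns: float) -> float:
    """Highest clock frequency (MHz) for one register-to-register path."""
    period_ns = clk_to_q_ns + sum(gate_delays_ns) + setup_ns
    return 1000.0 / period_ns  # 1/ns is GHz, so scale by 1000 for MHz


# Hypothetical path: four gates of 8 ns each between two flip-flops.
f_max = max_clock_mhz([8, 8, 8, 8], clk_to_q_ns=15, setup_ns=5)
print(f"{f_max:.1f} MHz")  # 52 ns period -> about 19.2 MHz
```

Halving each gate delay in this example only raises the limit to about 27.8 MHz, because the flip-flop overhead stays fixed; shortening the critical path (fewer gate levels) is often the bigger lever.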

B. Which logic family is ideal for high-speed applications?

Emitter-Coupled Logic (ECL) is often preferred for high-speed applications due to its fast switching speed and excellent signal integrity. However, it comes with higher power consumption and more complex design requirements compared to other logic families.

C. How does logic family selection affect signal integrity?

The choice of logic family can have a significant impact on signal integrity. Factors such as noise immunity, voltage levels, and switching characteristics of the logic family influence the quality and reliability of the transmitted signals. Selecting a logic family with better noise immunity and voltage margins improves overall signal integrity.

D. Are there any emerging logic families that might replace the current ones?

The field of digital logic families is constantly evolving, and emerging technologies like spin-based computing and molecular electronics hold the potential to introduce new logic families in the future. While these technologies are still in their early stages of development, they offer promising alternatives that could potentially replace or augment current logic families.

Photo

The Lisa Hardware

Reporting on the technical specifications of a computer toward the end of an article is unusual for BYTE, but it emphasizes that the why of Lisa is more important than the what. For part of the market, at least, the Lisa computer will change the emphasis of microcomputing from “How much RAM does it have?” to “What can it do for me?” For example, it is almost misleading to say that the Lisa comes with one megabyte of RAM, even though the fact itself is true. That doesn’t mean that the Lisa is sixteen times better than machines that have 64K bytes of RAM. Nor does it necessarily mean that the Lisa can work on much larger data files than other computers; its application programs each take 200K to 300K bytes, which significantly reduces the memory available for data. It’s more instructive to say, for example, that the Lisa with one megabyte can hold a 100-row by 50-column spreadsheet (as its advertisements state). With this in mind, let’s take a look at the Lisa.

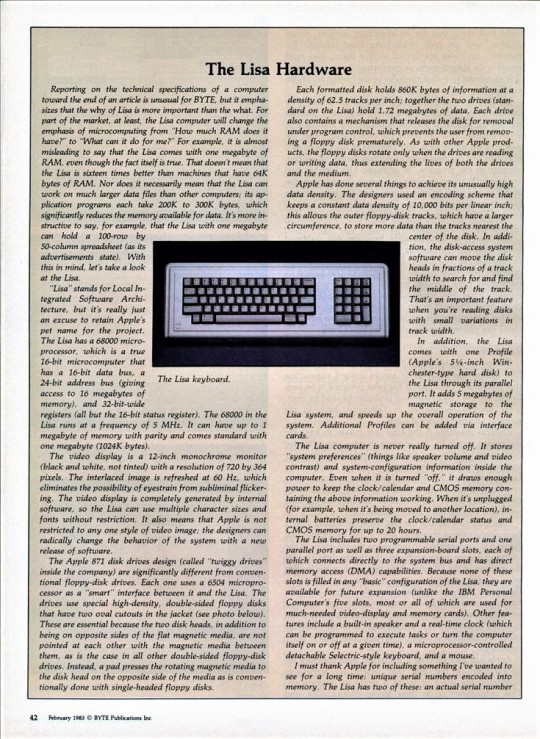

“Lisa” stands for Local Integrated Software Architecture, but it’s really just an excuse to retain Apple’s pet name for the project. The Lisa has a 68000, a true 16-bit microprocessor with a 16-bit data bus, a 24-bit address bus (giving access to 16 megabytes of memory), and 32-bit-wide registers (all but the 16-bit status register). The 68000 in the Lisa runs at a frequency of 5 MHz. The Lisa can have up to 2 megabytes of memory with parity and comes standard with one megabyte (1024K bytes).

The video display is a 12-inch monochrome monitor (black and white, not tinted) with a resolution of 720 by 364 pixels. The noninterlaced image is refreshed at 60 Hz, which eliminates the possibility of eyestrain from subliminal flickering. The video display is completely generated by internal software, so the Lisa can use multiple character sizes and fonts without restriction. It also means that Apple is not restricted to any one style of video image; the designers can radically change the behavior of the system with a new release of software.

The Apple 871 disk drives (called “twiggy drives” inside the company) are significantly different from conventional floppy-disk drives. Each one uses a 6504 microprocessor as a “smart” interface between the drive and the Lisa. The drives use special high-density, double-sided floppy disks that have two oval cutouts in the jacket (see photo below). These cutouts are essential because the two disk heads, in addition to being on opposite sides of the flat magnetic media, are not pointed at each other with the magnetic media between them, as is the case in all other double-sided floppy-disk drives. Instead, a pad presses the rotating magnetic media against each disk head from the opposite side of the media, as is conventionally done with single-headed floppy disks.

Each formatted disk holds 860K bytes of information at a density of 62.5 tracks per inch; together the two drives (standard on the Lisa) hold 1.72 megabytes of data. Each drive also contains a mechanism that releases the disk for removal under program control, which prevents the user from removing a floppy disk prematurely. As with other Apple products, the floppy disks rotate only when the drives are reading or writing data, thus extending the lives of both the drives and the medium.

Apple has done several things to achieve its unusually high data density. The designers used an encoding scheme that keeps a constant data density of 10,000 bits per linear inch; this allows the outer floppy-disk tracks, which have a larger circumference, to store more data than the tracks nearest the center of the disk. In addition, the disk-access system software can move the disk heads in fractions of a track width to search for and find the middle of the track. That’s an important feature when you’re reading disks with small variations in track width.

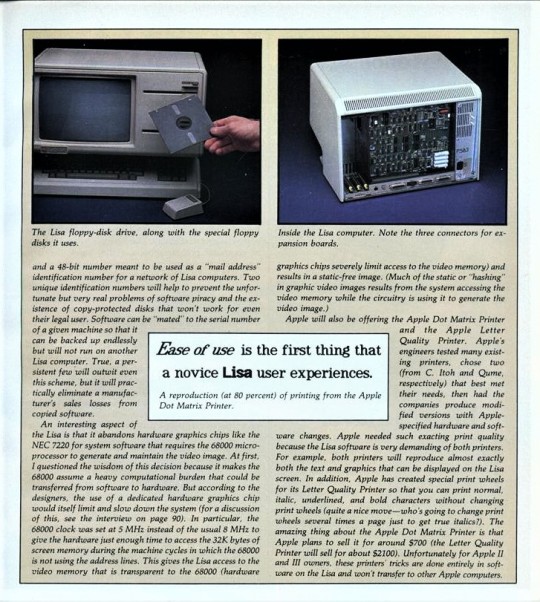

In addition, the Lisa comes with one Profile (Apple’s 5-1/4-inch Winchester-type hard disk), which connects to the Lisa through its parallel port. It adds 5 megabytes of magnetic storage to the Lisa system and speeds up the overall operation of the system. Additional Profiles can be added via interface cards.

The Lisa computer is never really turned off. It stores “system preferences” (things like speaker volume and video contrast) and system-configuration information inside the computer. Even when it is turned “off,” it draws enough power to keep the clock/calendar and CMOS memory containing the above information working. When it’s unplugged (for example, when it’s being moved to another location), internal batteries preserve the clock/calendar status and CMOS memory for up to 20 hours.

The Lisa includes two programmable serial ports and one parallel port as well as three expansion-board slots, each of which connects directly to the system bus and has direct memory access (DMA) capabilities. Because none of these slots is filled in any “basic” configuration of the Lisa, they are available for future expansion (unlike the IBM Personal Computer’s five slots, most or all of which are used for much-needed video-display and memory cards). Other features include a built-in speaker and a real-time clock (which can be programmed to execute tasks or turn the computer itself on or off at a given time), a microprocessor-controlled detachable Selectric-style keyboard, and a mouse.

I must thank Apple for including something I’ve wanted to see for a long time: unique serial numbers encoded into memory. The Lisa has two of these: an actual serial number …

An interesting aspect of the Lisa is that it abandons hardware graphics chips like the NEC 7220 in favor of system software that requires the 68000 microprocessor to generate and maintain the video image. At first, I questioned the wisdom of this decision because it makes the 68000 assume a heavy computational burden that could be transferred from software to hardware. But according to the designers, a dedicated hardware graphics chip would itself limit and slow down the system (for a discussion of this, see the interview on page 90). In particular, the 68000 clock was set at 5 MHz instead of the usual 8 MHz to give the hardware just enough time to access the 32K bytes of screen memory during the machine cycles in which the 68000 is not using the address lines. This gives the Lisa access to the video memory that is transparent to the 68000 (hardware graphics chips severely limit access to the video memory) and results in a static-free image. (Much of the static or “hashing” in graphic video images results from the system accessing the video memory while the circuitry is using it to generate the video image.)

Apple will also be offering the Apple Dot Matrix Printer and the Apple Letter Quality Printer. Apple’s engineers tested many existing printers and chose two (from C. Itoh and Qume, respectively) that best met their needs. They then had those companies produce modified versions with Apple-specified hardware and software changes. Apple needed such exacting print quality because the Lisa software is very demanding of both printers. For example, both printers reproduce almost exactly the text and graphics that can be displayed on the Lisa screen. In addition, Apple has created special print wheels for its Letter Quality Printer so that you can print normal, italic, underlined, and bold characters without changing print wheels (quite a nice move: who’s going to change print wheels several times a page just to get true italics?). The amazing thing about the Apple Dot Matrix Printer is that Apple plans to sell it for around $700 (the Letter Quality Printer will sell for about $2100). Unfortunately for Apple II and III owners, these printers’ tricks are done entirely in software on the Lisa and won’t transfer to other Apple computers.

Daily inspiration. Discover more photos at http://justforbooks.tumblr.com


N1-§1 What Is a Solid-State Relay (SSR)?

A solid-state relay (also known as an SSR, SS relay, SSR relay or SSR switch) is an integrated, contactless electronic switching device. It is compactly assembled from an integrated circuit (IC) and discrete components. It relies on the switching characteristics of its electronic components, such as switching transistors, bidirectional thyristors and other semiconductor devices. Through this electronic circuit, an SSR switches its load "ON" and "OFF" very quickly, performing the same function as a traditional mechanical relay. The older "coil-reed contact" relay is the electromechanical relay (EMR). Unlike the EMR, an SSR has no moving mechanical parts inside and performs no mechanical action while switching. For this reason, the solid-state relay is also called a "non-contact switch".

Its structure gives the SSR several advantages over the EMR. The main advantages of solid-state relays are as follows:

● Semiconductor components act as the relay's switch, giving it a compact size and a long service life.

● Better electromagnetic compatibility than an EMR: immunity to radio-frequency interference (RFI) and electromagnetic interference (EMI), and low electromagnetic emissions.

● No moving parts, so there is no mechanical wear, no operating noise and no mechanical failure, giving high reliability.

● No sparking, arcing or contact burning, no contact bounce, and no contact wear.

● A "zero-voltage turn-on, zero-current turn-off" function makes "zero-voltage" switching easy to achieve.

● Fast switching (an SSR switches about 100 times faster than a typical EMR) and a high operating frequency.

● High sensitivity and low control-signal levels (an SSR can drive large load currents directly from a small control current), compatibility with logic circuits (TTL, CMOS, DTL and HTL), and easy implementation of multiple functions.

● Usually encapsulated in insulating material, giving good resistance to moisture, mildew, corrosion, vibration and mechanical shock, as well as explosion-proof performance.
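The logic-circuit compatibility listed above means a logic output can often drive an SSR input directly. The sketch below roughly sizes a series resistor for an SSR whose input is a bare optocoupler LED. The forward voltage and LED current are hypothetical. Many SSRs include their own input current limiting, so check the datasheet before adding a resistor:

```python
def input_resistor_ohms(v_logic: float, v_led_forward: float, i_led_a: float) -> float:
    """Series resistor that sets the LED current of an optocoupled SSR input.

    Ohm's law across the resistor: R = (V_logic - V_f) / I_f.
    """
    if v_logic <= v_led_forward:
        raise ValueError("logic high level is too low to forward-bias the input LED")
    return (v_logic - v_led_forward) / i_led_a

# Hypothetical values: 5 V logic output, 1.2 V LED forward drop, 10 mA LED current.
r = input_resistor_ohms(5.0, 1.2, 0.010)
p_input = 5.0 * 0.010  # power drawn from the 5 V logic supply, in watts
print(f"R = {r:.0f} ohm, input power = {p_input * 1000:.0f} mW")
```

The control side draws only tens of milliwatts, while the isolated output side may switch a load of many amperes.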


Furthermore, the amplification and drive capability of the solid-state relay makes it well suited to driving high-power actuators, where it is more reliable than an electromagnetic relay (EMR). An SSR's control input requires very little power, so a low control current can switch a high load current. The SSR also uses mature, reliable optoelectronic isolation between its input and output terminals. The low-current control device (connected to the SSR input) and the high-power supply (connected to the SSR output) are therefore electrically isolated. As a result, the output of a low-power device can be connected directly to the SSR's control input to switch a high-power load, with no additional circuitry to protect the low-current device. In addition, AC solid-state relays use zero-crossing detection. An AC SSR can therefore be driven safely from a computer's output interface without causing interference, or even serious failures, in the computer. An EMR cannot provide these features. Thanks to these inherent characteristics, SSRs have been widely used in many fields since they appeared in 1974. They have replaced electromagnetic relays in many applications where EMRs are unsuitable. This is especially true in computer-based automatic control systems. Because an SSR needs very little drive power and is compatible with logic circuits, it can be connected directly to the output circuit without an additional intermediate digital buffer.
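The zero-crossing behaviour described above can be modelled simply. When the control input is asserted, a zero-crossing AC SSR waits for the next zero crossing of the mains voltage before turning on. The sketch below assumes an ideal sine-wave supply with zero phase at t = 0. It ignores the small detection window that real devices use, and the command time is hypothetical:

```python
import math

def next_zero_crossing(t_command_s: float, mains_hz: float = 50.0) -> float:
    """Time at which an ideal zero-crossing SSR would actually turn on.

    v(t) = Vpk * sin(2*pi*f*t) crosses zero every half period, at t = k / (2f).
    """
    half_period = 1.0 / (2.0 * mains_hz)
    k = math.ceil(t_command_s / half_period)
    return k * half_period

def voltage_at(t_s: float, v_peak: float = 325.0, mains_hz: float = 50.0) -> float:
    """Instantaneous mains voltage (325 V peak is roughly 230 V RMS)."""
    return v_peak * math.sin(2 * math.pi * mains_hz * t_s)

t_cmd = 0.0042  # control signal arrives 4.2 ms into the cycle (hypothetical)
t_on = next_zero_crossing(t_cmd)
print(f"command at {t_cmd * 1e3:.1f} ms, turn-on at {t_on * 1e3:.1f} ms, "
      f"voltage then {voltage_at(t_on):.2e} V")
```

Because the load never sees a sudden voltage step at turn-on, the relay generates little interference. The cost is a turn-on delay of up to half a mains period (10 ms at 50 Hz).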

At present, solid-state relays perform well in many fields: military, chemical, industrial automation, electric mobility, telecommunications, civil electronic control, security and instrumentation. Examples include electric furnace heating systems, computer numerical control (CNC) machines, remote-controlled machinery, solenoid valves and medical equipment. Others are lighting control systems (such as traffic lights, flashers and stage lighting), home appliances (such as washing machines, electric stoves, ovens, refrigerators and air conditioners), office equipment (such as photocopiers, printers, fax machines and multifunction printers), fire safety systems, electric vehicle charging systems and so on. In short, solid-state relays suit any application that needs high stability (optical isolation, high noise immunity), high performance (fast switching, high load current) and a small package. Solid-state relays also have disadvantages, however:

● an on-state voltage drop and output leakage current
● the need for heat sinking
● a higher purchase cost than EMRs
● DC and AC relays are not interchangeable
● a single control state and a small number of contact groups
● poor overload capability

Some custom solid-state relays can solve some of these problems. Even so, these disadvantages should be weighed when designing circuits with SSRs, to get the most out of them.
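The on-state voltage drop listed among the disadvantages is what makes heat sinking necessary. The relay dissipates roughly P = V_on × I_load. The back-of-the-envelope sketch below estimates the resulting heatsink requirement. All thermal resistances, temperatures and the 1.6 V drop are hypothetical placeholders, and real designs should follow the manufacturer's derating curves:

```python
def dissipation_w(v_on: float, i_load_a: float) -> float:
    """Approximate conduction loss of the SSR output stage: P = V_on * I_load."""
    return v_on * i_load_a

def max_heatsink_resistance(p_w: float, t_junction_max: float, t_ambient: float,
                            r_jc: float, r_cs: float) -> float:
    """Largest heatsink-to-ambient thermal resistance (degC/W) that keeps the
    junction at or below its limit, from Tj = Ta + P * (R_jc + R_cs + R_sa).
    A negative result means no heatsink is good enough at this load."""
    budget = (t_junction_max - t_ambient) / p_w
    return budget - r_jc - r_cs

# Hypothetical: 25 A load, 1.6 V on-state drop, 40 degC ambient.
p = dissipation_w(1.6, 25.0)
r_sa = max_heatsink_resistance(p, t_junction_max=125.0, t_ambient=40.0,
                               r_jc=0.5, r_cs=0.2)
print(f"dissipation {p:.0f} W, heatsink must be at most {r_sa:.2f} degC/W")
```

A mechanical relay's closed contacts dissipate far less at the same current, so this heat is one of the main practical costs of choosing an SSR.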


Why Camera Sensors Matter and How They Keep Improving


What is the most important aspect of a camera to consider when buying a new one? In this video, Engadget puts camera sensors in the spotlight, reviewing how they have improved and what role they play in today’s photographic equipment.

Camera brands regularly release new models, each improving on its predecessors. However, Engadget video producer Chris Schodt points out in the company’s latest YouTube video that, apart from higher resolution, camera sensors may appear not to have progressed very rapidly in recent years. This is because cameras such as the Canon EOS 5D, released in 2005, could already produce high-quality images over a decade ago and still do so today.

In technical terms, a camera sensor can be described as a grid of photodiodes, which act as one-way valves for electrons. In CMOS sensors, which are used in most of the digital cameras photographers use today, each pixel has additional circuitry built into it alongside the photodiode.

These on-pixel electronics make CMOS sensors fast, because each pixel can be read and reset quickly. In the past, however, this design could also contribute to fixed-pattern noise. With improved manufacturing processes, this side effect has been largely eliminated in modern cameras.

Schodt explains that noise control is crucial to a camera’s low-light performance and dynamic range. Dynamic range measures the range of light an image captures between its maximum and minimum values. In a photograph, these are white (for example, when a pixel clips or is overexposed) and black, respectively.
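Dynamic range is often quoted in photographic stops, that is, powers of two between the brightest value a pixel can record before clipping and the noise floor in the shadows. The sketch below assumes the common engineering definition of full-well capacity divided by read noise. The electron counts are hypothetical:

```python
import math

def dynamic_range_stops(full_well_e: float, read_noise_e: float) -> float:
    """Dynamic range in stops: log2(max signal / noise floor)."""
    return math.log2(full_well_e / read_noise_e)

def dynamic_range_db(full_well_e: float, read_noise_e: float) -> float:
    """The same ratio in decibels (20 * log10 for an amplitude ratio)."""
    return 20 * math.log10(full_well_e / read_noise_e)

# Hypothetical sensor: 50,000 electron full well, 3 electron read noise.
print(f"{dynamic_range_stops(50_000, 3):.1f} stops, "
      f"{dynamic_range_db(50_000, 3):.1f} dB")
```

Halving the read noise adds one full stop of dynamic range, which is why noise control matters so much here.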

Clipped or overexposed pixels in an image

Ideally, light, which arrives as photons, would reach the sensor uniformly, and the sensor would reconstruct a perfectly clean image. That isn’t the case, because photons hit the sensor randomly, and this randomness shows up as noise.

One way to deal with this is to build larger sensors with larger pixels. However, that comes with a high production cost and an equally large camera body. An example is the Hasselblad H6D-100c digital back, which has a 100MP CMOS sensor and a $26,500 price tag.
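The random arrival of photons is known as photon shot noise and follows Poisson statistics. If a pixel collects N photons on average, the standard deviation is √N, so the signal-to-noise ratio is also √N. That is why larger pixels, which gather more light, produce cleaner images. The small simulation below assumes an idealized pixel with no read noise and hypothetical mean photon counts:

```python
import numpy as np

def simulated_snr(mean_photons: float, n_pixels: int = 100_000, seed: int = 0) -> float:
    """Estimate the SNR of a uniformly lit patch limited only by shot noise."""
    rng = np.random.default_rng(seed)
    counts = rng.poisson(mean_photons, size=n_pixels)  # photons per pixel
    return float(counts.mean() / counts.std())

for photons in (100, 400, 1600):  # e.g. pixel area growing 4x at each step
    print(photons, round(simulated_snr(photons), 1), "theory:", photons ** 0.5)
```

Quadrupling the light a pixel collects only doubles its SNR.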

Other solutions include backside-illuminated (BSI) sensors, such as those introduced first by Sony in 2015 and by Nikon in 2017, which improve low-light performance and speed. Stacked CMOS sensors provide even faster speeds, such as the Sony Micro Four Thirds sensor announced earlier in 2021.

Smartphones, on the other hand, capture multiple images and average them together to reduce noise and improve dynamic range. Google’s HDR+ with Bracketing technology is one example, and several modern video cameras have taken the same direction.
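Averaging a burst works because independent noise partly cancels. The mean of N frames keeps the signal but reduces random noise by about √N. The sketch below uses synthetic frames: a flat gray scene plus Gaussian noise. It is a simplification, since real pipelines such as HDR+ must also align frames and reject motion, which is not modelled here:

```python
import numpy as np

def residual_noise(n_frames: int, signal: float = 100.0, noise_sigma: float = 10.0,
                   shape: tuple = (256, 256), seed: int = 1) -> float:
    """Noise (standard deviation) left after averaging n noisy frames."""
    rng = np.random.default_rng(seed)
    frames = signal + rng.normal(0.0, noise_sigma, size=(n_frames, *shape))
    stacked = frames.mean(axis=0)  # per-pixel average across the burst
    return float(stacked.std())

for n in (1, 4, 16):
    print(n, round(residual_noise(n), 2))  # roughly 10 / sqrt(n)
```

Sixteen frames cut the noise to about a quarter. This is equivalent to collecting sixteen times the light in a single exposure, without a larger sensor.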

Looking toward the future of sensor development, Schodt explains that silicon, the material currently used to make sensors, is likely to stay, although alternative materials such as gallium arsenide and graphene have been used. Another possible direction is curved sensors. However, they would be inconvenient for users, because a curved sensor must be paired with precisely manufactured lenses. In practical terms, photographers would have to buy into a particular system with no option of using third-party lenses.

In the future, the focus is likely to be on computational photography. For example, faster sensors and more on-camera processing could bring smartphone-style image stacking to dedicated cameras, along with AI-assisted image processing.

In the video above, Schodt explains in more detail how sensors are built and how their characteristics affect the resulting images. More Engadget educational videos can be found on the company’s YouTube page.

Image credits: Photos of camera sensors licensed via Depositphotos.

from PetaPixel https://ift.tt/3BZ0xZs


Small Modulator for Big Data

Fiber-optic networks, the backbone of the internet, depend on high-fidelity conversion of information from the electrical to the optical domain. The researchers combined the best optical material with innovative nanofabrication and design techniques. The result is energy-efficient, high-speed, low-loss electro-optic converters for quantum and classical communications. (Image courtesy of Second Bay Studios/Harvard SEAS)

Conventional lithium niobate modulators, the longtime workhorse of the optoelectronics industry, may soon go the way of the vacuum tube and the floppy disk. Researchers from the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) have developed a new technique to design and fabricate integrated, on-chip modulators. These modulators are 100 times smaller and 20 times more efficient than existing lithium niobate (LN) modulators.

Conventional lithium niobate modulators [right] are the backbone of modern telecommunications but are bulky, expensive and power hungry. This integrated, on-chip modulator [center] is 100 times smaller and 20 times more efficient. (Image courtesy of the Loncar Lab/Harvard SEAS)

The research is described in Nature.

“This research demonstrates a fundamental technological breakthrough in integrated photonics,” said Marko Loncar, the Tiantsai Lin Professor of Electrical Engineering at SEAS and senior author of the paper. “Our platform could lead to large-scale, very fast and ultra-low-loss photonic circuits, enabling a wide range of applications for future quantum and classical photonic communication and computation.”

Harvard’s Office of Technology Development (OTD) has worked closely with the Loncar Lab on the formation of a startup, HyperLight, which plans to commercialize a portfolio of foundational intellectual property related to this research. Preparing the technology for the launch of HyperLight has been aided by funding from OTD’s Physical Sciences & Engineering Accelerator. The accelerator provides translational funding for research projects that show potential for significant commercial impact.

Lithium niobate modulators are the backbone of modern telecommunications, converting electronic data into optical information carried over fiber-optic cables. However, conventional LN modulators are bulky, expensive and power hungry. They require a drive voltage of 3 to 5 volts, significantly higher than the roughly 1 volt supplied by typical CMOS circuitry. As a result, separate, power-consuming amplifiers are needed to drive the modulators, which severely limits chip-scale optoelectronic integration.

“We show that by integrating lithium niobate on a small chip, the drive voltage can be reduced to a CMOS-compatible level,” said Cheng Wang, co-first author of the paper. Wang is a former PhD student and postdoctoral fellow at SEAS and currently Assistant Professor at City University of Hong Kong. “Remarkably, these tiny modulators can also support data transmission rates up to 210 Gbit/s. It’s like Ant-Man: smaller, faster and better.”
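The textbook transfer function of a Mach-Zehnder electro-optic modulator, a common configuration for lithium niobate modulators, shows why the drive voltage matters. Output intensity follows cos²(πV/2Vπ), where Vπ is the voltage needed to swing from full transmission to extinction. The article does not state the modulator geometry or its Vπ, so treat the model and values below as illustrative assumptions:

```python
import math

def mzm_transmission(v_drive: float, v_pi: float) -> float:
    """Normalized optical power out of an ideal, lossless Mach-Zehnder
    modulator biased at full transmission: T = cos^2(pi * V / (2 * V_pi))."""
    return math.cos(math.pi * v_drive / (2 * v_pi)) ** 2

def modulation_depth(v_swing: float, v_pi: float) -> float:
    """Fraction of the full on/off intensity swing reached with a given drive swing."""
    return 1.0 - mzm_transmission(v_swing, v_pi)

cmos_swing = 1.0  # roughly the ~1 V available from CMOS circuitry, per the text
for v_pi in (1.0, 3.0, 5.0):  # hypothetical half-wave voltages
    print(f"V_pi = {v_pi} V -> {modulation_depth(cmos_swing, v_pi):.0%} of full swing")
```

With Vπ between 3 and 5 V, a 1 V CMOS swing reaches only a small fraction of the available modulation depth. This is why conventional designs need separate driver amplifiers.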

“Highly integrated yet high-performance optical modulators are very important for the closer integration of optics and digital electronics, paving the way towards future fiber-in-fiber-out opto-electronic processing engines,” said Peter Winzer, Director of Optical Transmission Research at Nokia Bell Labs. Nokia Bell Labs is the industry partner in this project, and Winzer is a coauthor of the paper. “We see this new modulator technology as a promising candidate for such solutions.”

Many in the field consider lithium niobate difficult to work with at small scales, a barrier that has until now ruled out practical integrated, on-chip applications. In previous research, Loncar and his team demonstrated a way to fabricate high-performance lithium niobate microstructures. They used standard plasma etching to physically shape microresonators in thin lithium niobate films.

By combining that technique with specially designed electrical components, the researchers can now design and fabricate an integrated, high-performance on-chip modulator.

“Previously, if you wanted to make modulators smaller and more integrated, you had to compromise their performance,” said Mian Zhang, a postdoctoral fellow at SEAS and co-first author of the paper. “For example, existing integrated modulators can easily lose the majority of the light as it propagates on the chip. In contrast, we have reduced losses by more than an order of magnitude. Essentially, we can control light without losing it.”
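Optical loss is usually expressed in decibels, which makes claims such as "more than an order of magnitude" easy to check. The quick conversion sketch below uses hypothetical loss figures and a hypothetical 2 cm path length, not values reported in the paper:

```python
def transmitted_fraction(loss_db_per_cm: float, length_cm: float) -> float:
    """Fraction of optical power remaining after propagating length_cm.

    Total loss in dB = alpha * L, and P_out / P_in = 10 ** (-dB / 10).
    """
    return 10 ** (-(loss_db_per_cm * length_cm) / 10)

length = 2.0  # cm of waveguide (hypothetical)
for alpha in (3.0, 0.3):  # dB/cm: a lossy guide vs. one ten times better
    print(f"{alpha} dB/cm -> {transmitted_fraction(alpha, length):.0%} of the light survives")
```

At 6 dB of total loss, three quarters of the light is gone, matching "losing the majority of the light". Cutting the loss tenfold leaves most of it.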

“Because a modulator is such a fundamental component of communication technology, with a role equivalent to that of a transistor in computation technology, the applications are enormous,” said Zhang. “The fact that these modulators can be integrated with other components on the same platform could provide practical solutions for next-generation long-distance optical networks, data center optical interconnects, wireless communications, radar, sensing and so on.”

This research study was co-authored by Xi Chen, Maxime Bertrand, Amirhassan Shams-Ansari, and Sethumadhavan Chandrasekhar.

Source: Harvard John A. Paulson School of Engineering and Applied Sciences

New post published on: https://www.livescience.tech/2018/09/25/small-modulator-for-big-data/


RF mixed signal design | Mixed Signal Design

The Combination of Art and Science of RF and Mixed-signal Design

Over the past couple of years, mixed-signal integrated circuit (IC) design has been one of the most technically challenging and interesting segments of the semiconductor industry. Despite every advance the semiconductor industry has made over that period, one constant requirement remains: seamlessly connecting the analog world we live in with the digital world of computing. That requirement is driven particularly by today's pervasive mobile environment and the rapidly emerging Internet of Things (IoT) "re-evolution."

Digital and memory ICs make up about two-thirds of today's roughly $320 billion worldwide semiconductor market. These ICs are driven by Moore's Law and advanced CMOS process technology, which lower the cost and increase the integration of semiconductor devices every year. Discrete and analog semiconductors account for about one-fifth of the global semiconductor market. They are mostly served by older process technologies, because core analog components are costly to manufacture in newer process nodes.

Mixed-signal ICs account for about one-tenth of the global semiconductor market. This estimate depends on how you define a mixed-signal IC. One definition is a semiconductor device that integrates substantial analog and digital functionality to provide an interface to the analog world. Typical mixed-signal ICs include:

● system-on-chip (SoC) devices
● cellular, Wi-Fi, Bluetooth and wireless personal area network (WPAN) transceivers
● GPS, TV and AM/FM receivers
● audio and video converters
● advanced clock and oscillator devices
● networking interfaces
● more recently, low-rate WPAN (LR-WPAN) wireless MCUs

Highly integrated mixed-signal IC solutions often displace legacy technologies in established semiconductor markets. This happens when the required functionality and analog performance can be achieved at lower cost than with discrete or other analog approaches. More importantly, a high level of mixed-signal integration greatly simplifies the engineering required of system manufacturers. This lets them focus on their core applications and reach the market faster.

Designing Mixed-signal ICs:

Mixed-signal ICs are difficult to design and manufacture, especially if they include RF functionality. A large standalone analog and discrete IC market exists because integrating analog functions with digital ICs is not a simple process. Analog and RF design has often been called a "black art", because much of it is done by trial and error and very often by intuition. Modern mixed-signal design should be treated as far more science than alchemy. "Brute force" analog integration should always be avoided, because trial and error is a very costly process in IC development.

The real "art" in mixed-signal design should come from a deep understanding of how the underlying physical interactions manifest in complex systems. That understanding should be combined with a robust and elegant design methodology based on a digital-centric approach. The ideal strategy combines mixed-signal design with digital signal processing. It allows complex, high-performance and highly sensitive analog and digital circuits to be integrated without the expected trade-offs. The powerful capabilities of digital processing in fine-line digital CMOS processes can be used to compensate and calibrate for analog imperfections and to mitigate unwanted interactions. This improves the speed, accuracy and power consumption, and ultimately the cost and usability, of the mixed-signal device.
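Two-point calibration of an ADC is one concrete example of digital processing compensating for analog imperfections. The converter measures two known reference inputs, solves for its gain and offset error, and then corrects every later sample in the digital domain. This is a generic, illustrative technique rather than a method the article describes, and the error values below are hypothetical:

```python
from dataclasses import dataclass

@dataclass
class LinearCal:
    gain: float    # ideal codes per measured code
    offset: float  # correction added after scaling

    @classmethod
    def from_two_points(cls, raw_lo: float, raw_hi: float,
                        ideal_lo: float, ideal_hi: float) -> "LinearCal":
        """Fit ideal = gain * raw + offset through two reference measurements."""
        gain = (ideal_hi - ideal_lo) / (raw_hi - raw_lo)
        offset = ideal_lo - gain * raw_lo
        return cls(gain, offset)

    def correct(self, raw: float) -> float:
        return self.gain * raw + self.offset

def imperfect_adc(ideal_code: float) -> float:
    """Hypothetical analog front end with a 2% gain error and a +12 code offset."""
    return 1.02 * ideal_code + 12

# Calibrate with two known inputs (10% and 90% of a 12-bit range).
cal = LinearCal.from_two_points(imperfect_adc(409.6), imperfect_adc(3686.4), 409.6, 3686.4)
print(round(cal.correct(imperfect_adc(2048.0)), 6))  # recovers ~2048.0
```

The analog path keeps its error. The correction costs only a multiply and an add per sample, which is cheap in a fine-line digital process.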

Moore's Law has held remarkably well for digital circuit design, doubling the number of transistors in a given area roughly every two years. It still partly applies in the era of deep-submicron technologies. The law generally does not apply as well to analog circuits, so analog ICs adopt scaled technologies with a considerable lag. It is not unusual for analog devices to still be designed and manufactured in 180 nm technologies and above. Process scaling only partly drives area and power scaling in analog circuits and often becomes a design obstacle. In fact, analog scaling is more often driven by the reduction of undesirable effects, such as statistical device mismatch or noise caused by defects at material interfaces. That reduction comes from quality improvements in the process itself. Consequently, mixed-signal designers prefer processes a few steps behind the cutting edge, which can still offer better device quality by drawing on some of the latest technological advances. Put simply, the analog side of Moore's Law lags behind the standard digital one. The situation is more dynamic than that, however. The digital/analog technology gap could be partly closed if it remains worth the investment for IC technology suppliers.
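Pelgrom's widely used mismatch model makes the point about device mismatch quantitative. In it, the standard deviation of the threshold-voltage difference between two nominally identical transistors scales as A_VT / √(W·L). The model is brought in here as an illustration and is not part of the original article. The matching coefficient below is a hypothetical value:

```python
import math

def sigma_delta_vt_mv(a_vt_mv_um: float, width_um: float, length_um: float) -> float:
    """Pelgrom model: sigma(dVt) = A_VT / sqrt(W * L), in millivolts."""
    return a_vt_mv_um / math.sqrt(width_um * length_um)

def area_for_sigma(a_vt_mv_um: float, target_sigma_mv: float) -> float:
    """Gate area (um^2) each transistor needs to meet a matching target."""
    return (a_vt_mv_um / target_sigma_mv) ** 2

A_VT = 3.5  # mV*um, hypothetical matching coefficient for some process
print(f"{sigma_delta_vt_mv(A_VT, 1.0, 1.0):.2f} mV for a 1 um x 1 um pair")
print(f"{area_for_sigma(A_VT, 0.5):.0f} um^2 needed for 0.5 mV matching")
```

The required area is set by the matching target rather than the minimum feature size. A precision analog pair therefore does not shrink much on a newer node unless A_VT itself improves, which is the kind of quality-driven improvement the paragraph describes.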

The ideal manufacturing process node for mixed-signal IC design lags behind the leading edge of process technology. The choice of node is a compromise among several factors and ultimately depends on how much analog and mixed-signal circuitry the device contains. A more digital-centric mixed-signal design approach lets the designer use advanced process nodes to solve one of the toughest commercial problems in analog circuit integration: integrating analog functions to reduce cost while increasing functionality. Design teams at several leading semiconductor companies are actively pushing the limits of mixed-signal design. They are addressing this challenge with novel solutions in which logic gates and switching components replace amplifiers and large passive devices.

The IoT and Mixed-signal Design:

The Internet of Things aggregates networks of IoT nodes. These are very low-cost, intelligent, connected sensors and actuators used for data collection and monitoring in myriad applications. Those applications improve energy efficiency, security, healthcare, environmental monitoring, industrial process control, transportation, and livability in general. IoT nodes are forecast to reach 50 billion devices by 2020, perhaps passing the one-trillion mark just a few years later. These astronomical numbers impose significant constraints on engineering, manufacturability, energy consumption, maintenance, and ultimately the health of our environment. Besides being produced in astonishingly high volumes, IoT nodes must be very small, energy-efficient and secure, and customers usually cannot easily access them for maintenance. IoT nodes often need to run for years on tiny coin-cell batteries, or even rely on energy-harvesting techniques.
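The "years on a coin cell" requirement comes down to average current. A duty-cycled node sleeps most of the time and wakes briefly to sense and transmit, so its average current is a time-weighted mean. The rough sketch below uses hypothetical currents and timings and a 225 mAh capacity, a typical nominal CR2032 rating. Real batteries also lose capacity to self-discharge and pulse loading:

```python
def average_current_ma(sleep_ua: float, active_ma: float, active_ms: float,
                       period_s: float) -> float:
    """Time-weighted average current of a node that wakes once per period."""
    active_fraction = (active_ms / 1000.0) / period_s
    return active_ma * active_fraction + (sleep_ua / 1000.0) * (1 - active_fraction)

def battery_life_years(capacity_mah: float, avg_ma: float) -> float:
    """Ideal runtime, ignoring self-discharge and temperature effects."""
    hours = capacity_mah / avg_ma
    return hours / (24 * 365)

# Hypothetical wireless MCU: 2 uA asleep, 10 mA for 5 ms every 60 s.
i_avg = average_current_ma(sleep_ua=2.0, active_ma=10.0, active_ms=5.0, period_s=60.0)
print(f"average {i_avg * 1000:.2f} uA -> {battery_life_years(225.0, i_avg):.1f} years")
```

In this example the sleep current dominates the average. A low-leakage sleep mode therefore matters as much as an efficient radio.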

These application requirements make the IoT node the ultimate candidate for highly advanced digital-centric mixed-signal design methods. The ideal IoT node needs modern mixed-signal circuits to interface with sensors and actuators. It must include RF connectivity, use very power-efficient wireless protocols, and require minimal external components. It also needs power converters to optimize power efficiency and to handle various battery chemistries or energy sources, all capabilities generally available in mature process nodes. At the same time, these IoT nodes need relatively complex, ultra-low-power computing resources and memories to store and execute application and network-protocol software. Those are better addressed with finer process technologies. The current embodiment of this paradigm is a mixed-signal IC commonly known as a wireless MCU: an easy-to-use, small-footprint, energy-efficient and highly integrated connected computing device with sensing and actuating capabilities.

The proliferation of ultra-low-power wireless MCUs is crucial to the growth of the IoT. Wireless MCUs provide the brains, sensing and connectivity for IoT nodes, from wireless security sensors to digital lighting controls. The combination of art and science in mixed-signal design is the key enabler for next-generation wireless MCUs. These MCUs bridge the analog, RF and digital worlds and take maximum advantage of Moore's Law, without compromising efficiency, cost, power consumption or size.

Faststream helped one of its clients design a next-generation vending machine solution using IoT and AI. It supports functions such as predictive maintenance, customer-centric loyalty programs and cashless transactions. Feel free to contact us for more details: Email: [email protected]


Taking the new Mission RGO One transceiver to the field!

SWLing Post readers might recall that last year at the 2018 Hamvention, I met with radio engineer, Boris Sapundzhiev (LZ2JR), who was debuting the prototype of his 50 watt transceiver kit: the Mission RGO One (click here to read that post).

I’ve been in touch with Boris since last year and we arranged to meet again at the 2019 Hamvention so I could take a closer look at the RGO One especially since he has started shipping the first limited production run.

The RGO One is everything Boris promised last year and he’s on schedule having finished all of the hardware and implementing frequent firmware updates to add functionality.

Excellent first impressions

I’ll be honest: I think the RGO One was one of the most exciting little radios to come out of the Hamvention this year. Why?

First of all, in contrast to some radios I’ve tested and evaluated over the past two years, I can tell immediately that the Mission RGO One was developed by an active ham radio operator and DXer.

Here are some of the RGO One features and highlights lifted from the preliminary product manual (PDF):

QRP/QRO output 5 – 50W [can actually be lowered to 0 watts out in 1 watt increments]

All mode shortwave operation – coverage of all HAM HF bands (160m/60m optional)

High dynamic range receiver design including high IP3 monolithic linear amplifiers in the front end and diode ring RX mixer or H-mode first mixer (option).

Low phase noise first LO – SI570 XO/VCXO chip.

Full/semi (delay) QSK on CW; PTT/VOX operation on SSB. Strict RX/TX sequencing scheme. No click sounds at all!

Down conversion superhet topology with popular 9MHz IF

Custom made crystal filters for SSB and CW and variable crystal 4 pole filter – Johnson type 200…2000Hz

Fast acting AGC (fast and slow) with 134kHz dedicated IF

Compact and lightweight body [only 5 lbs!]

Custom made multicolor backlit FSTN LCD

Custom molded front panel with ergonomic controls.

Silent operation with no clicking relays inside – solid state GaAs PHEMT SPDT switches on RX (BPF and TX to RX switching) and ultrafast rectifying diodes (LPF)

Modular construction – Main board serves as a “chassis” also fits all the external connectors, daughter boards, inter-connections and acts as a cable harness.

Optional modules – Noise Blanker (NB), Audio Filter (AF), ATU, XVRTER, PC control via CAT protocol; USB UART – FTDI chipset

Double CPU circuitry control for front panel and main board – both field programmable via USB interface.

Memory morse code keyer (Curtis A, CMOS B); 4 Memory locations 128 bytes each
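The "down conversion superhet topology with popular 9MHz IF" implies some simple frequency arithmetic. The sketch below assumes high-side local-oscillator injection (f_LO = f_RF + f_IF), which the manual excerpt does not specify. It shows where the first LO must sit and where the image frequency, which the front-end band-pass filters must reject, would fall:

```python
IF_HZ = 9_000_000  # 9 MHz first IF from the feature list

def lo_frequency(f_rf_hz: int, if_hz: int = IF_HZ) -> int:
    """High-side injection (assumed): the LO sits one IF above the signal."""
    return f_rf_hz + if_hz

def image_frequency(f_rf_hz: int, if_hz: int = IF_HZ) -> int:
    """The unwanted input that also mixes to the IF: one IF above the LO."""
    return lo_frequency(f_rf_hz, if_hz) + if_hz

for f_rf in (3_573_000, 7_074_000, 14_074_000):  # example HF frequencies
    print(f"RF {f_rf / 1e6:.3f} MHz -> LO {lo_frequency(f_rf) / 1e6:.3f} MHz, "
          f"image {image_frequency(f_rf) / 1e6:.3f} MHz")
```

Under this assumption, the image always sits 18 MHz above the wanted signal, far enough away for the band-pass filters ahead of the mixer to attenuate it.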

What really sets the Mission RGO apart from its competitors is the fact that it’s compact, lightweight (only 5 lbs–!), and has a power output of up to 50 watts. Most other rigs in this class have a maximum output of 10 to 15 watts and require an external amplifier for anything higher.

The RGO One should also play for a long time on battery power as the receive current drain is a modest 0.65A with receiver preamp on.
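A rough runtime estimate puts the 0.65 A receive figure in context. Only the receive current comes from the text. The battery capacity, the transmit current, the usable-capacity fraction and the share of time spent transmitting are all hypothetical assumptions:

```python
RX_CURRENT_A = 0.65  # receive drain with preamp on, from the text

def runtime_hours(capacity_ah: float, tx_current_a: float, tx_fraction: float,
                  rx_current_a: float = RX_CURRENT_A, usable: float = 0.8) -> float:
    """Hours of operation for a given transmit duty cycle.

    usable: fraction of rated capacity assumed usable before the battery
    voltage sags too low (hypothetical 80%).
    """
    avg_a = tx_fraction * tx_current_a + (1 - tx_fraction) * rx_current_a
    return capacity_ah * usable / avg_a

# Hypothetical: 10 Ah battery, 8 A drawn while transmitting, 10% transmit time.
print(f"{runtime_hours(10.0, 8.0, 0.10):.1f} h of mixed operation")
print(f"{runtime_hours(10.0, 8.0, 0.0):.1f} h listening only")
```

Even a modest amount of transmitting dominates the average drain, so the low receive current pays off mainly during long listening periods.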

The RGO is also designed to encourage a comfortable operating position.

The bail lifts the front of the radio so that the faceplate and backlit screen are easily viewed at any angle.

The keypad is intuitive and (hold your applause) all of the important functions are within one button or knob press–!

The front panel design is so simple and clean. There are no embedded menus to navigate to change, for example, filter width, power levels, RF gain, keyer speed, mic gain, preamp, and audio monitor levels. Knob spacing is excellent and I believe I could even operate the RGO while wearing gloves.

Even split operation is designed so that, with one button press, you can easily monitor a pile-up and position your transmit frequency where the DX station last worked a contact (similar to the Icom XFC button). The user interface is intuitive–it’s obvious to me that Boris built this radio around working DX at home and in the field.

Speaking of the field…

Parks On The Air (POTA) with the Mission RGO One

At my request, Boris has kindly loaned me one of the first production run units to test and review over the next few months. I intend to evaluate this radio at home, in the field and (especially) on Field Day. By July, I should have a very good idea how well this Bulgaria-born transceiver performs under demanding radio conditions!

I had planned to begin my RGO One evaluation after returning home from Hamvention, but I couldn’t resist taking it to the field, even though the propagation forecast was dismal.

The first leg of my journey home took me to Columbus, Ohio on Monday, so I scheduled a POTA (Parks On The Air) activation of the Delaware State Park (K-1946).

Delaware State Park (POTA K-1946) in Delaware, Ohio.

My buddy Miles (KD8KNC) and I met with our friend Mike (K8RAT) at the park entrance and quickly found a great site with tall trees, a little shade and a large picnic table.

We set up both the RGO One and (for comparison) my Elecraft KX2 for the POTA activation.

I won’t lie: band conditions were horrible. Propagation was incredibly weak, QRN was high and QSB was deep. Yuck.

Still, this activation gave me a chance to actually test the RGO One in proper field conditions.

I was limited to SSB because the only CW key I had with me was the paddle set designed specifically to attach to the front panel of the Elecraft KX2. I was also using the LnR Precision EFT Trail-Friendly end-fed antenna, which can only handle up to 25 watts, so I didn’t run the RGO One above that level.

Although I had never operated the radio before, I was able to sort out most of its functions and features quickly.

The receiver audio was excellent and the noise floor seemed quite low to my ears. The internal speaker does a fine job, producing audio that is more than ample for a field setting. Still, I prefer operating with earphones in the field, which is especially important on days like this one, when poor propagation means a lot of weak signals.

Although I couldn’t make a total of ten contacts to claim a proper POTA activation, I was pleased with offering up K-1946 to seven lucky POTA hunters/chasers. I simply didn’t have enough time allotted to work three more at such a slow QSO rate.

Of course, my signal reports averaged “5 by 5” and were never better than “5 by 7,” regardless of whether I used the KX2 or the RGO One. Audio reports for the RGO One were great.

Stay tuned!

I will publish my first review of the Mission RGO One in The Spectrum Monitor Magazine, likely in August or September. In the meantime, I will post updates here as I put the RGO One through its paces. I’m especially excited about operating it during Field Day with my buddy Vlado (N3CZ) and seeing how it holds up in such an RF-dense environment.

And now that the POTA bug has bitten me? Expect to catch me on the air with the RGO One over the next few weeks!

If you’re interested in following the Mission RGO One, bookmark the tag: RGO ONE.


from DXER ham radio news http://bit.ly/2VYBrcG via IFTTT


Smaller, faster and more efficient modulator set to revolutionize optoelectronic industry | Soukacatv.com

A research team comprising members from City University of Hong Kong (City), Harvard University and the renowned information technology laboratory Nokia Bell Labs has successfully fabricated a tiny on-chip lithium niobate modulator, an essential component for the optoelectronic industry. The modulator is smaller and more efficient, transmits data faster and costs less. The technology is set to revolutionize the industry.


The electro-optic modulator produced in this breakthrough research is only 1 to 2 cm long, and its surface area is about 100 times smaller than that of traditional modulators. It is also highly efficient: its data bandwidth nearly triples, from 35 GHz to 100 GHz, while it consumes less energy and has ultra-low optical losses. The invention will pave the way for future high-speed, low-power and cost-effective communication networks as well as quantum photonic computation.

The research project is titled "Integrated lithium niobate electro-optic modulators operating at CMOS-compatible voltages" and was published in the latest issue of the journal Nature.

Electro-optic modulators are critical components in modern communications. They convert high-speed electronic signals from computational devices such as computers into optical signals for transmission through optical fibers. But the lithium niobate modulators in common use today require a high drive voltage of 3 to 5 V, significantly higher than the roughly 1 V that typical CMOS (complementary metal-oxide-semiconductor) circuitry provides. An electrical amplifier is therefore needed, and it makes the whole device bulky, expensive and power-hungry.
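To see why the drive voltage matters so much for energy use, here is a rough sketch. It assumes (this is not from the article) that the modulator electrode behaves like a matched 50 Ω resistive load driven by a sinusoid of a given peak-to-peak swing. Under that simplification the average drive power grows with the square of the voltage, so the 50 Ω value and the function name are illustrative choices only.

```python
"""Rough estimate of RF drive power versus modulator drive voltage.

Illustrative assumption only: the electrode is treated as a matched
resistive load of R ohms driven by a sinusoid with peak-to-peak swing v_pp.
"""

LOAD_OHMS = 50.0  # assumed typical RF termination, not taken from the article


def drive_power_watts(v_pp: float, r_ohms: float = LOAD_OHMS) -> float:
    """Average power of a sinusoid with peak-to-peak amplitude v_pp into r_ohms.

    V_rms = V_pp / (2 * sqrt(2)), so P = V_rms**2 / R = V_pp**2 / (8 * R).
    """
    return v_pp ** 2 / (8.0 * r_ohms)


if __name__ == "__main__":
    cmos_level = drive_power_watts(1.0)  # roughly what CMOS can supply directly
    for v in (1.0, 3.0, 5.0):
        p = drive_power_watts(v)
        print(f"{v:.0f} V drive: {p * 1e3:.1f} mW ({p / cmos_level:.0f}x the 1 V case)")
```

In this simplified model, a 3 to 5 V modulator needs roughly 9 to 25 times the drive power of a 1 V one, before counting the amplifier's own losses. Real electrodes are distributed, lossy structures, so treat this only as a scaling argument.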

Dr Wang Cheng, Assistant Professor in the Department of Electronic Engineering at City and co-first author of the paper, together with the research teams at Harvard University and Nokia Bell Labs, has developed a new way to fabricate lithium niobate modulators that operate at ultra-high electro-optic bandwidths with CMOS-compatible voltages.

"In the future, we will be able to put the CMOS right next to the modulator, so they can be more integrated, with less power consumption. The electrical amplifier will no longer be needed," said Dr Wang.

Thanks to the advanced nanofabrication approaches developed by the team, this tiny modulator can transmit data at rates up to 210 Gbit/s, with optical losses about 10 times lower than those of existing modulators.

"The electrical and optical properties of lithium niobate make it the best material for modulators. But it is very difficult to fabricate at the nanoscale, which limits the reduction of modulator size," Dr Wang explains. "Since lithium niobate is chemically inert, conventional chemical etching does not work well with it. While people generally think physical etching cannot produce smooth surfaces, which are essential for optical transmission, we have proved otherwise with our novel nanofabrication techniques."

With optical fibers becoming ever more common worldwide, the size, performance, power consumption and cost of lithium niobate modulators are becoming more important, especially as data centers in the information and communications technology (ICT) industry are forecast to become one of the largest electricity users in the world.

This revolutionary invention is now on its way to commercialization. Dr Wang believes that those seeking the best-performing modulators for long-distance data transmission will be among the first to adopt this photonics infrastructure.

Dr Wang began this research in 2013, when he joined Harvard's John A. Paulson School of Engineering and Applied Sciences as a PhD student. He recently joined City and, together with the research team at City's State Key Laboratory of Terahertz and Millimeter Waves, is exploring applications in upcoming 5G communications.

"Millimeter wave will be used to transmit data in free space, but to and from and within base stations, for example, it can be done in optics, which will be less expensive and less loss," he explains. He believes the invention can enable applications in quantum photonics, too.

Established in 2000, Soukacatv.com's main products are analog and digital modulators, amplifiers and combiners. We were the very first manufacturer of headend systems in China, and our 16-in-1 and 24-in-1 units are now among the most popular products of their kind worldwide.

For more information, please visit https://www.soukacatv.com.

CONTACT US

Company: Dingshengwei Electronics Co., Ltd

Address: Bldg A, the first industry park of Guanlong, Xili Town, Nanshan, Shenzhen, Guangdong, China

Tel: +86 0755 26909863

Fax: +86 0755 26984949

Mobile: 13410066011


Source: science daily


New Post has been published on http://www.updatedc.com/2018/11/01/canon-advanced-image-sensors-now-available-for-industrial-application/

Canon Advanced Image Sensors Now Available For Industrial Application

Canon’s advanced and highly specialized image sensors, including the sensor that can see in the dark, will be available to businesses for all sorts of applications. Canon’s advanced sensor technology is quite impressive.

Press release:

Canon Announces a New CMOS Sensor Business Platform

Patented Sensor Technology Now Available for Industrial Vision Applications

MELVILLE, N.Y., November 1, 2018 – Image sensors are an important driving force behind many of today’s successful brands, ranging from consumer products to industrial solutions. Today, Canon U.S.A., a leader in digital imaging solutions, announces that it is now offering select CMOS (complementary metal-oxide semiconductor) sensor products for sale to the industrial marketplace. Manufacturers, solutions providers and integrators who are searching for advanced components to create their own unique products and solutions can now use Canon’s patented technology to build them and expand their business potential.

“For several decades, Canon has been a leader in developing and manufacturing advanced CMOS sensors with state-of-the-art technologies, which until now, were for exclusive use in Canon products,” said Kazuto Ogawa, president and chief operating officer, Canon U.S.A., Inc. “It was a natural evolution to expand into a new business platform that leverages our expertise in sensor manufacturing to target the growing market demands for high-quality industrial imaging solutions.”

Launching this new business, Canon will be showcasing its CMOS sensors at VISION, the world’s leading trade fair for machine vision, on Tuesday, November 6 through Thursday, November 8, 2018, in Stuttgart, Germany. Attendees are invited to visit Hall 1 – Stand 1G74 to explore Canon’s CMOS sensor products and the variety of applications that can potentially be enhanced, including machine vision, inspection, surveillance, drones, traffic-monitoring systems and other industrial applications.

Canon sensors on display include:

3U5MGXS CMOS Sensor – with an electronic global shutter and all-pixel progressive readout at 120 fps, the Canon 3U5MGXS 5 MP CMOS sensor offers fast image capture with low power consumption. This helps it accurately capture images of fast-moving subjects without distortion, meeting industrial needs such as medical imaging and inspecting parts during the manufacturing process. The Canon 3U5MGXS is now available.

35MMFHDXSCA CMOS Sensor – featuring an enormous 19 µm pixel pitch, the 35MMFHDXSCA CMOS sensor is capable of capturing color images in exceptionally low-light environments where the naked eye struggles to distinguish objects. Using new pixel and readout circuitry technologies that deliver a 2.76 megapixel resolution, these sensors can support a wide range of applications which require ultra-high sensitivity image capture, including defense, astronomy, surveillance and industrial operations. The Canon 35MMFHDXSCA is now available.

120MXS CMOS Sensor – by incorporating close to the same number of pixels as photoreceptors in the human eye, the Canon 120MXS CMOS sensor delivers ultra-high 120 MP resolution at 9.4fps in a compact APS-H format. This sensor targets the needs of the inspection, aerial mapping, life sciences, digital archiving and transportation industries. The Canon 120MXS is now available.

2U250MRXS CMOS Sensor – with a readout speed of 1.25 billion pixels per second, the prototype 2U250MRXS CMOS sensor delivers ultra-high 250 MP resolution in a compact APS-H format. Through advancements in circuit miniaturization and enhanced signal processing, this sensor delivers high resolution with incredible sensitivity and low noise.
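The specs above mix frame rates and readout rates, so a quick back-of-envelope comparison can help. This sketch uses only the nominal figures quoted in the press release; the `SensorSpec` class is a hypothetical helper, and because marketing megapixel counts are rounded, the results are approximate rather than Canon's published numbers.

```python
"""Back-of-envelope pixel throughput from the nominal specs quoted above.

Megapixel counts are rounded marketing figures, so results are approximate;
real sensors have slightly different active pixel counts.
"""

from dataclasses import dataclass
from typing import Optional


@dataclass
class SensorSpec:  # hypothetical helper, not a Canon API
    name: str
    megapixels: float
    fps: Optional[float] = None                # frames per second, if quoted
    pixels_per_second: Optional[float] = None  # readout rate, if quoted

    def throughput(self) -> float:
        """Pixels read out per second (quoted directly, or MP x fps)."""
        if self.pixels_per_second is not None:
            return self.pixels_per_second
        if self.fps is None:
            raise ValueError(f"{self.name}: need fps or pixels_per_second")
        return self.megapixels * 1e6 * self.fps

    def frame_rate(self) -> float:
        """Frames per second (quoted directly, or throughput / pixel count)."""
        if self.fps is not None:
            return self.fps
        return self.throughput() / (self.megapixels * 1e6)


SENSORS = [
    SensorSpec("3U5MGXS", megapixels=5, fps=120),
    SensorSpec("120MXS", megapixels=120, fps=9.4),
    SensorSpec("2U250MRXS", megapixels=250, pixels_per_second=1.25e9),
]

if __name__ == "__main__":
    for s in SENSORS:
        print(f"{s.name:>10}: {s.throughput() / 1e6:7.0f} Mpixel/s, "
              f"{s.frame_rate():5.1f} fps")
```

On these nominal numbers, the 250 MP prototype's 1.25 billion pixels per second works out to about 5 full frames per second, while the 120MXS at 9.4 fps reads out roughly 1.1 billion pixels per second, so the two large sensors have similar raw throughput. The 35MMFHDXSCA is left out because no frame rate is quoted for it.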

For more information on Canon sensors, please visit canon-cmos-sensors.com.

Source: canonwatch


Silicon Photonics Stumbles at the Last Meter

We have fiber to the home, but fiber to the processor is still a problem

Photo: Getty Images

If you think we’re on the cusp of a technological revolution today, imagine what it felt like in the mid-1980s. Silicon chips used transistors with micrometer-size features. Fiber-optic systems were zipping trillions of bits per second around the world.

With the combined might of silicon digital logic, optoelectronics, and optical–fiber communication, anything seemed possible.

Engineers envisioned all of these advances continuing and converging to the point where photonics would merge with electronics and eventually replace it. Photonics would move bits not just across countries but inside data centers, even inside computers themselves. Fiber optics would move data from chip to chip, they thought. And even those chips would be photonic: Many expected that someday blazingly fast logic chips would operate using photons rather than electrons.

It never got that far, of course. Companies and governments plowed hundreds of millions of dollars into developing new photonic components and systems that link together racks of computer servers inside data centers using optical fibers. And indeed, today, those photonic devices link racks in many modern data centers. But that is where the photons stop. Within a rack, individual server boards are still connected to each other with inexpensive copper wires and high-speed electronics. And, of course, on the boards themselves, it’s metal conductors all the way to the processor.

Attempts to push the technology into the servers themselves, to directly feed the processors with fiber optics, have foundered on the rocks of economics. Admittedly, there is an Ethernet optical transceiver market of close to US $4 billion per year that’s set to grow to nearly $4.5 billion and 50 million components by 2020, according to market research firm LightCounting. But photonics has never cracked those last few meters between the data-center computer rack and the processor chip.

Nevertheless, the stupendous potential of the technology has kept the dream alive. The technical challenges are still formidable. But new ideas about how data centers could be designed have, at last, offered a plausible path to a photonic revolution that could help tame the tides of big data.

Inside a Photonics Module

Photo: Luxtera