#Here are some basic examples of AI:..


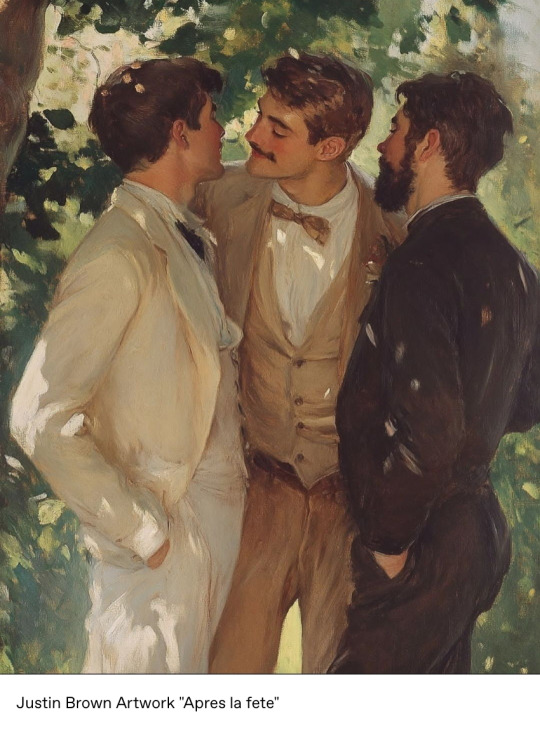

this is your periodic reminder that for all the artifacts and errors and "tells" one could possibly list, the only reliable way to actually determine if an image is ai generated is to investigate the source. it is becoming increasingly common for "fake classical paintings" to circulate around curated aesthetic blogs, and everyone should be using this as an opportunity to not only exercise their investigative skills but also appreciate art more in general. you're all checking out the artists you reblog, right? 🫣

so what are some signs to look for? let's use this very good example.

what a lovely late-impressionist piece blended with evocative leyendecker-esque themes! why haven't you ever heard of this artist before? surely tumblr would be all over an artist like this. who is justin brown?

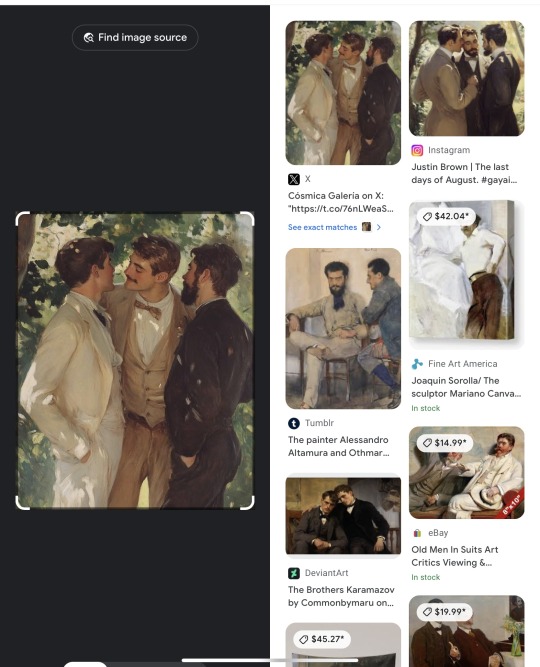

your two options from here are to do a search for the name, or a reverse image search. i prefer reverse image searching, particularly when it comes to a common name like "justin brown". so what does that net?

Immediately, without looking at any text, something is wrong: it barely exists. an actual historical piece would turn up numerous results from websites individually discussing the piece, but no such discussions are taking place. Looking at the text, though, does show the source-- and at least in this case, the creator was honest about their medium.

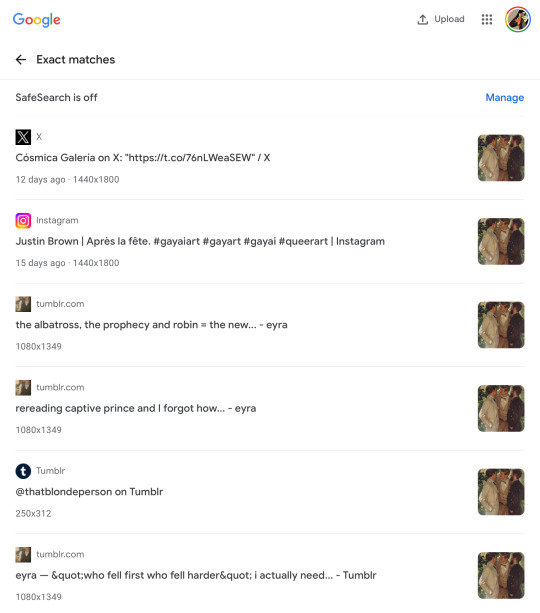

But let's also look at the "exact matches", in case a source doesn't make itself apparent in the initial sidebar results like this.

This section will often tell you post dates of images, and here it can be seen that the very first iteration of the image was posted 15 days ago. It did not exist online prior to that.

Seeing how long an unsourced image has been floating around is a skill applicable to more than just generative images! See a cool image of an artifact or other intriguing item with a vivid caption? Reverse search it! If all the results are paired with that caption and only go back a few months, you might just have viral facebook spam.
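The "how long has this image existed" check can be sketched in a few lines. This is only an illustration: the `matches` list, its URLs, and its dates are made up, and real reverse image search tools do not necessarily hand you results in this shape. The helper names `earliest_appearance` and `days_online` are hypothetical too.

```python
from datetime import date

# Hypothetical reverse-image-search results: (page URL, date the page was posted).
# These entries are invented for illustration only.
matches = [
    ("https://example.com/blog/post-a", date(2024, 3, 2)),
    ("https://example.com/forum/thread-b", date(2024, 3, 9)),
    ("https://example.com/gallery/c", date(2024, 2, 24)),
]


def earliest_appearance(results):
    """Return the (url, date) pair with the oldest post date."""
    return min(results, key=lambda item: item[1])


def days_online(results, today):
    """Number of days between the oldest known post and `today`."""
    _, first_seen = earliest_appearance(results)
    return (today - first_seen).days


url, first_seen = earliest_appearance(matches)
print(f"first seen {first_seen} at {url}")
print(days_online(matches, date(2024, 3, 10)), "days online")  # 15 days online
```

A supposedly historical painting whose oldest match is only a couple of weeks old is exactly the kind of mismatch worth investigating further.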

Sometimes generative creators are dishonest about their medium and do not tag it like in the example, so that's when establishing "jpeg provenance" becomes important. While it can be a little trickier to determine if someone is using generative images and not admitting to it if they aren't trying to pass it off as a classic, something to consider is the age of their account and the frequency with which they post. Here are some account red flags:

-Did they only start posting art after 2022, or if they did before, did their style/skill level WILDLY change? Not gradual improvement-- I'm talking amateur graphite portraits straight into complex digital renders. Everyone starts somewhere, newness is not a red flag alone; it's newness combined with existing in a vacuum away from any community.

-Do they post fully-finished paintings several times a week?

-Do many of these paintings seem iterative of a similar theme or subject matter ("three well-dressed young men face each other under shade and dappled sunlight")?

-Does their style change in inconsistent ways? An artist that can swap between painting like Drew Struzan and Hokusai should be pretty well known, right? Why is no one hyping this guy?!

-Do they have social media besides the source instagram? If so, what are they posting about? Are there any WIPs? Doodles? Interactions with other artists? Gallery dates? 3am self-doubt posts? Or is it all self-promo? Crypto? Seemingly nothing art-related at all for someone pushing out 3 weekly paintings?

Basically, if it's important to you to omit this stuff when you curate, please don't just smash reblog if the source doesn't seem to be the OP themselves. Seeking out sources was important even before this became an issue, now it is more than ever.

peace n love

24K notes


The conversation around AI is going to get away from us quickly because people lack the language to distinguish types of AI--and it's not their fault. Companies love to slap "AI" on anything they believe can pass for something "intelligent" a computer program is doing. This muddies the waters: people want to talk about AI, but the exact same word covers a wide umbrella, and they don't know how to qualify the distinctions within it.

I'm a software engineer and not a data scientist, so I'm not exactly at the level of domain expert. But I work with data scientists, and I have at least rudimentary college-level knowledge of machine learning and linear algebra from my CS degree. So I want to give some quick guidance.

What is AI? And what is not AI?

So what's the difference between just a computer program, and an "AI" program? Computers can do a lot of smart things, and companies love the idea of calling anything that seems smart enough "AI", but industry-wise the question of "how smart" a program is has nothing to do with whether it is AI.

A regular, non-AI computer program is procedural, and rigidly defined. I could "program" traffic light behavior that essentially goes { if(light === green) { go(); } else { stop();} }. I've told it in simple and rigid terms what condition to check, and how to behave based on that check. (A better program would have a lot more to check for, like signs and road conditions and pedestrians in the street, and those things will still need to be spelled out.)
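Written out as a small runnable sketch, the rigid traffic light rule might look like this. The function name `decide` and the `pedestrian_in_road` flag are made up for illustration; the point is only that every condition is spelled out by hand.

```python
def decide(light: str, pedestrian_in_road: bool = False) -> str:
    """Rule-based driving decision: every condition is written explicitly."""
    if pedestrian_in_road:
        # An extra rule a human had to think of and add by hand.
        return "stop"
    if light == "green":
        return "go"
    return "stop"


print(decide("green"))                           # go
print(decide("red"))                             # stop
print(decide("green", pedestrian_in_road=True))  # stop
```

If a situation is not covered by one of these rules, the program simply has no answer for it until someone adds another rule.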

An AI traffic light behavior is generated by machine-learning, which simplistically is a huge cranking machine of linear algebra which you feed training data into and it "learns" from. By "learning" I mean it's developing a complex and opaque model of parameters to fit the training data (but not over-fit). In this case the training data probably includes thousands of videos of car behavior at traffic intersections. Through parameter tweaking and model adjustment, data scientists will turn this crank over and over adjusting it to create something which, in very opaque terms, has developed a model that will guess the right behavioral output for any future scenario.

A well-trained model would be fed a green light and know to go, and a red light and know to stop, and 'green but there's a kid in the road' and know to stop. A very very well-trained model can probably do this better than my program above, because it has the capacity to be more adaptive than my rigidly-defined thing if the rigidly-defined program is missing some considerations. But if the AI model makes a wrong choice, it is significantly harder to trace down why exactly it did that.

Because again, the reason it's making this decision may be very opaque. It's like engineering a very specific plinko machine which gets tweaked to be very good at taking a road input and giving the right output. But like if that plinko machine contained millions of pegs and none of them necessarily correlated to anything to do with the road. There's possibly no "if green, go, else stop" to look for. (Maybe there is, for traffic light specifically as that is intentionally very simplistic. But a model trained to recognize written numbers for example likely contains no parameters at all that you could map to ideas a human has like "look for a rigid line in the number". The parameters may be all, to humans, meaningless.)
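To make the contrast concrete, here is a toy version of "learning from examples" using a perceptron, one of the simplest machine learning models there is. It is a sketch under heavy assumptions: the four training examples are invented, and real models are trained on far more data with vastly more parameters. Nobody writes "if green, go" here; the program only nudges three numbers until its guesses match the examples.

```python
# Each example: ((light_is_green, kid_in_road), should_go), all as 0 or 1.
# Invented data for illustration.
training_data = [
    ((1, 0), 1),  # green, road clear   -> go
    ((0, 0), 0),  # red, road clear     -> stop
    ((1, 1), 0),  # green, kid in road  -> stop
    ((0, 1), 0),  # red, kid in road    -> stop
]


def predict(weights, bias, features):
    """Weighted sum of the inputs; answer 1 (go) if the sum is positive."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train(data, epochs=10, learning_rate=1.0):
    """Perceptron rule: after each wrong guess, nudge the parameters."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, target in data:
            error = target - predict(weights, bias, features)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(training_data)
print("learned parameters:", weights, bias)
for features, _ in training_data:
    print(features, "->", "go" if predict(weights, bias, features) else "stop")
```

With only three parameters you can still squint and see the rule in them. Scale the same idea up to millions of parameters and that readability is what disappears.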

So, that's basics. Here are some categories of things which get called AI:

1. "AI" which is just genuinely not AI

There's plenty of software that follows a normal, procedural program defined rigidly, with no linear algebra model training, that companies would love to brand as "AI" because it sounds cool.

Something like motion detection/tracking might be sold as artificially intelligent. But under the covers that can be done as simply as "if some range of pixels changes color by a certain amount, flag as motion"
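As a rough sketch of that "pixels changed by a certain amount" idea, here is frame differencing on tiny grayscale frames stored as lists of brightness values. The function name, the thresholds, and the sample frames are all made up for illustration; no model is trained anywhere.

```python
def motion_detected(previous_frame, current_frame,
                    pixel_threshold=30, changed_pixels_needed=3):
    """Flag motion when enough pixels change brightness by more than a threshold."""
    changed = 0
    for prev_row, cur_row in zip(previous_frame, current_frame):
        for prev_pixel, cur_pixel in zip(prev_row, cur_row):
            if abs(cur_pixel - prev_pixel) > pixel_threshold:
                changed += 1
    return changed >= changed_pixels_needed


# Two hypothetical 3x4 grayscale frames (0 = black, 255 = white).
frame_a = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 10, 10],
]
frame_b = [
    [10, 10, 10, 10],
    [10, 200, 200, 10],
    [10, 200, 200, 10],
]

print(motion_detected(frame_a, frame_a))  # False
print(motion_detected(frame_a, frame_b))  # True
```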

2. AI which IS genuinely AI, but is not the kind of AI everyone is talking about right now

"AI", by which I mean machine learning using linear algebra, is very good at being fed a lot of training data, and then coming up with an ability to go and categorize real information.

The AI technology that looks at cells and determines whether they're cancer or not, that is using this technology. OCR (Optical Character Recognition) is the technology that can take an image of hand-written text and transcribe it. Again, it's using linear algebra, so yes it's AI.

Many other such examples exist, and have been around for quite a good number of years. They share the genre of technology, which is machine learning models, but these are not the Large Language Model Generative AI that is all over the media. Criticizing these would be like criticizing airplanes when you're actually mad at military drones. It's the same "makes fly in the air" technology but their impact is very different.

3. The AI we ARE talking about. "Chat-gpt" type of Generative AI which uses LLMs ("Large Language Models")

If there was one word I wish people would know in all this, it's LLM (Large Language Model). This describes the KIND of machine learning model that Chat-GPT is fueled by. LLMs are so extensively trained on human language that they can take an input of conversational language and create a predictive output that is coherent to humans. (Art generators like midjourney and stablediffusion are not purely LLMs: as far as I know, they pair a model that reads your text prompt with a separate image-generating model, typically a diffusion model. But AI art generation has risen hand-in-hand with the advent of powerful LLMs, and it belongs to the same generative AI family.)

This technology isn't exactly brand new (predictive text has been using it, but more like the mostly innocent and much less successful older sibling of some celebrity, who no one really thinks about.) But the scale and power of LLM-based AI technology is what is new with Chat-GPT.
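The core idea of "predict the next word from what came before" can be shown with a toy predictor that just counts which word tends to follow which. To be clear about the assumptions: this counting approach is a stand-in chosen for illustration, not how an LLM or any particular phone keyboard actually works, and the sample `corpus` is invented. LLMs replace the counting table with an enormous trained neural network.

```python
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def suggest_next(counts, word):
    """Suggest the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Tiny invented training text.
corpus = ("the light is green so go . the light is red so stop . "
          "the light is green so go")
counts = build_bigram_counts(corpus)

print(suggest_next(counts, "light"))  # is
print(suggest_next(counts, "so"))     # go
```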

This is the generative AI, and even better, the large language model generative AI.

(Data scientists, feel free to add on or correct anything.)

3K notes


🎀 The It Girl Lifestyle Guide 🎀

hi girlies! this guide is a part of the big series: The Ultimate It-Girlism Guide. in this mini guide i'll be including all things health, morning/nighttime routines, and more!

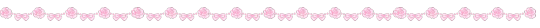

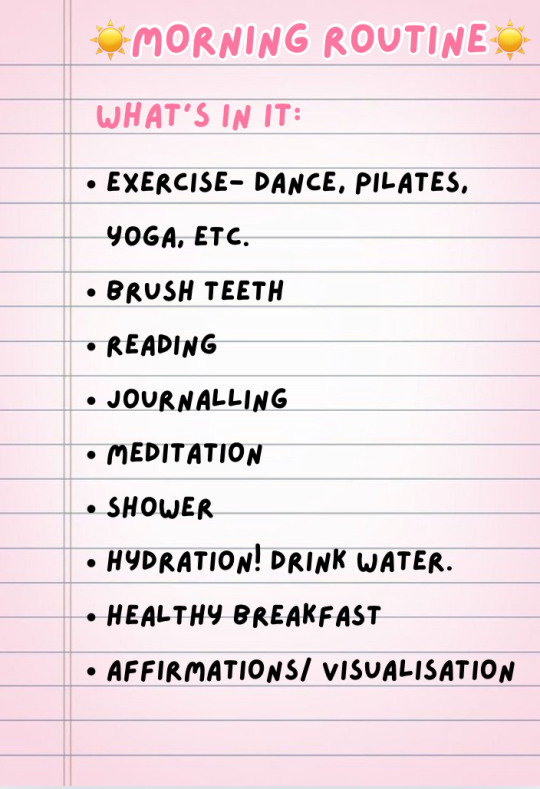

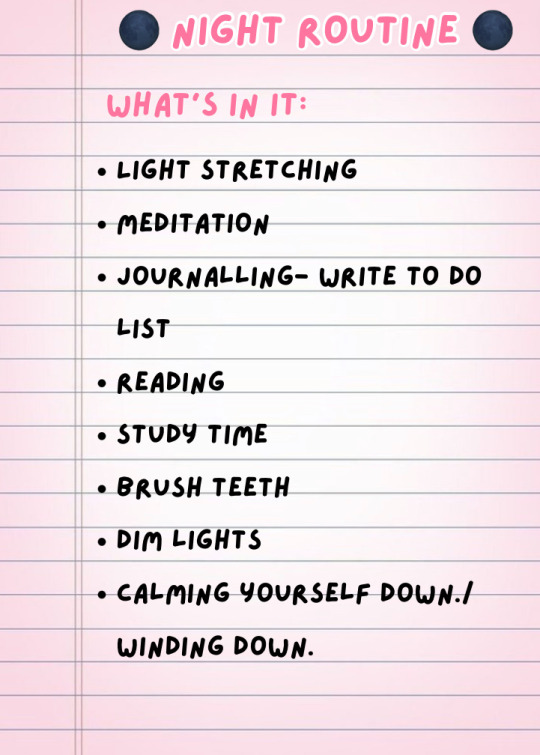

How to create your ideal morning / night / any other routine:

Here’s a mini step by step guide to curating a routine that works specifically for YOU, tailored to your own needs and wants. This can be for any routine u wanna create: morning, night, after school, after work, before school/ work, etc etc.

Apps / things needed:

ChatGPT (or an AI like that- it’s not completely necessary but it’s useful)

Notes app / docs app. (Or a pen and paper- this will be to write down the routine!)

Calendar app (optional tbh)

Ok so first off: decide what you want in your routine. Make a list in no particular order of what you need/ want in the routine.

Some examples:

Once you’ve created this list, you’re pretty much half way done. In this next part you can use chat GPT to make it easier, or use your own mind.

The next thing to do is: ask chatGPT to make a routine with the steps u wanted.

Make sure to mention what time your routine starts and ends. And if there’s anything you want to change, you can just ask the AI or make those changes yourself!

The last step is to write it down!

You can either write it down on the notes app, docs, on a journal/ piece of paper, anything that’s easily accessible to you. I heavily recommend writing it down somewhere, but if you dont want to you can…

Put it into your calendar. This can help you be a bit more organised, but it’s not completely needed. As long as it’s written down somewhere- so you dont need to always remember it- you’re good.

Health and wellness

In this section, i will be talking about fitness, mental health and physical health. I will mention some useful tips to finally start, how to overcome procrastination, and how to take care of that area of your body.

1. FITNESS.

Numero uno: fitness! I’m not going to go yapping on about how fitness is so important- im assuming you all know that by now. But let me just remind you that staying fit is not only exercising or going to the gym everyday. It can be: running, going for a walk, playing a sport, yoga, pilates, dancing, cycling, and THE LIST GOES ON. DO anything that moves your body and gets you fit!

Here are some tips to help you get started:

Start small. Set small goals first. Set SMART goals

Choose the activities you enjoy. Like i mentioned earlier, there’s tons of ways to stay fit- cycling, running, swimming, yoga, dance, sports, etc. etc. (if you like, joining a class or working out with friends can help you stay motivated!)

Stay consistent. I know i know, this is said everywhere. But there is no progress without consistency. Even if you can’t do a whole workout one day, try and do 10 jumping jacks, or 5 pushups. Do whatever you can. Remember: 1% is better than 0.

Create a vision board. You can create one yourself, or find tons of them off Pinterest. Vision boards will make the process so much more fun and will certainly motivate you.

Set a reward system. Tell yourself: if you do this high intensity workout now, you can go to the spa later or watch tv.

Find a why. This goes for like everything tbh. If your why is big enough, you are capable of doing anything (even finding that lost book that you owe the library!) basically, are you doing this to get ripped? With tons of abs, or to get strong and impress people? Or are you doing this to boost your self esteem and improve your health?

2. FOOD & NUTRITION.

Balanced diet: eat the rainbow! Meaning- eat meals with a variety of different colours. Fruits, vegetables, proteins, carbohydrates, etc. it’s completely alright to eat a chocolate, but remember: EVERYTHING IN MODERATION.

Hydration: aim for at least 8 glasses of water a day. Trust me, drinking the magical potion that is water will help you SO much! It can help you clear your skin, have pink uncrusty lips, keep you fit and soooo much more.

Mindful eating: in the book IKIGAI it is said that you should only eat until you’re 80% full. Not 100%. Why? Because the time it takes for you to digest the food will have already made you extremely full. You may even have a stomachache. Studies also show that cutting back on calories can lead to better heart health, longevity, and weight loss.

Here are some tips to manage cravings:

Find healthier alternatives. If you are craving something sweet like chocolate, have something like a sweet fruit. If you crave something salty, try nuts. If you can’t think of any, search up some healthier alternatives to it!

Create more friction for junk, and less friction for healthy. This concept was said in the book Atomic Habits by James Clear. What does it mean? Make sure that it takes a lot of energy to get the unhealthy junk food. Maybe keep them high up in a cupboard so whenever you want it you have to go get a ladder, climb up, and then get it. And keep the healthy food in easy reach. Like some fruits open on a table, etc. (also remember to keep some actually yummy healthy food like Greek yogurt or protein bars.)

Distract yourself. Go do a workout or engage your mind in a hobby that you enjoy. Basically take your mind off food.

Yummy water. Make some lemonade for yourself. Or perhaps add slices of lemon, cucumber, mint or strawberries to it for some flavours. I’d do some research on this cus i know that some combos can rly help for things like clearing your skin, boosting energy, etc.

3. MENTAL HEALTH

Taking care of your mental health is just as important as taking care of your physical health. It affects how we think, feel and act and also determines how we handle stress, relate to others, relationships, etc.

Of course there will be ups and downs for our mental health. It’s not something that you can just fix once and it’ll be good forever. No, it’s a rollercoaster. But having a “good” mental health is really important for a successful lifestyle.

Here are some tips to help you improve your mental health:

Meditation / deep breathing. I can’t emphasise how important this is. Even 1-2 minutes a day is good. Start small. You dont even need to be sitting crossed legged for this. Whether you’re in class, on a vehicle or in a stressful situation; just breathe. Take a deep breath, and out. Do it right now.

Journalling. Write. It. Out. Writing your problems and worries out is SOO therapeutic, especially when you want to calm down. There are SO MANY benefits to journalling. But remember that once you’ve ranted on the paper, tear it, rip it, and watch it burn. (Don’t keep a journal for this unless you KNOW 150% that no one’s ever gonna read it. Trust me, it’s terrifying knowing that someone’s read that.) Another thing you can do is create a gratitude journal, so whenever you’re feeling low you can just go to it or write in it.

Self careee!! Create time for self care in your week. Because if you do that, it’s gonna be that one thing which you’ll be looking forward to each week, which will make life SO much more fun and bearable. For me, my forms of self care are watching thewizardliz or tam Kaur, reading, watching a movie at night, etc.

POSITIVE. SELF. TALK. Need i say more? What you say to yourself, is what you believe. And what you believe reflects in your external life.

Sing your heart out to Olivia Rodrigo. I swear this is actually so calming and therapeutic. Basically: express your feelings. If you’re angry at someone, feeling grief or really hurt by someone, screaming to Olivia Rodrigo songs in my bedroom is my go-to (i just make sure not to do it when others can hear hehe). You can punch your pillow, scream, cry, etc.

Remember honey: this too will pass. Repeat that over in your head. This will pass. This will pass. This will pass. I know you may be going through the toughest time ever, but this too will pass. Nothing is forever. You’ve gotten through so much worse. You’ve got this.

!! Girls, please remember that these are just some tips. I am NOT a professional. If you really feel horrible every single day, go to therapy or counselling. Also contact mental health hotlines or emergency numbers if needed.

Mkay thats it! I hope this was of some value to you, and stay tuned for the next guide in the it girl series!

#agirlwithglam🎀✨#vanilla self improvement⭐️#it girl series#health and wellness#pink pilates princess#mental health#routines#self improvement tips#self improvement#it girl#it girl energy#it girl tips#it girl guide#becoming that girl#self development#healthy habits#healthy lifestyle#health & fitness#health tips#fitness#girlblog#girlblogging#healthylifestyle#wongunism#diet#healthy food#fitness tips#mental wellness#habits#glow up

2K notes


whats wrong with ai?? genuinely curious <3

okay let's break it down. i'm an engineer, so i'm going to come at you from a perspective that may be different than someone else's.

i don't hate ai in every aspect. in theory, there are a lot of instances where, in fact, ai can help us do things a lot better than we could without it. here's a few examples:

ai detecting cancer

ai sorting recycling

some practical housekeeping that gemini (google ai) can do

all of the above examples are ways in which ai works with humans to do things in parallel with us. it's not overstepping--it's sorting, using pixels at a micro-level to detect abnormalities that we as humans can not, fixing a list. these are all really small, helpful ways that ai can work with us.

everything else about ai works against us. in general, ai is a huge consumer of natural resources. every prompt that you put into character.ai, chatgpt? this wastes water + energy. it's not free. a machine somewhere in the world has to swallow your prompt, call on a model to feed data into it and process more data, and then has to generate an answer for you all in a relatively short amount of time.

that is crazy expensive. someone is paying for that, and if it isn't you with your own money, it's the strain on the power grid, the water that cools the computers, the A/C that cools the data centers. and you aren't the only person using ai. chatgpt alone gets millions of users every single day, with probably thousands of prompts per second, so multiply your personal consumption by millions, and you can start to see how the picture is becoming overwhelming.

that is energy consumption alone. we haven't even talked about how problematic ai is ethically. there is currently no regulation in the united states about how ai should be developed, deployed, or used.

what does this mean for you?

it means that anything you post online is subject to data mining by an ai model (because why would they need to ask if there's no laws to stop them? wtf does it matter what it means to you to some idiot software engineer in the back room of an office making 3x your salary?). oh, that little fic you posted to wattpad that got a lot of attention? well now it's being used to teach ai how to write. oh, that sketch you made using adobe that you want to sell? adobe didn't tell you that anything you save to the cloud is now subject to being used for their ai models, so now your art is being replicated to generate ai images in photoshop, without crediting you (they have since said they don't do this...but privacy policies were never made to be human-readable, and i can't imagine they are the only company to sneakily try this). oh, your apartment just installed a new system that will use facial recognition to let their residents inside? oh, they didn't train their model with anyone but white people, so now all the black people living in that apartment building can't get into their homes. oh, you want to apply for a new job? the ai model that scans resumes learned from historical data that more men work that role than women (so the model basically thinks men are better than women), so now your resume is getting thrown out because you're a woman.

ai learns from data. and data is flawed. data is human. and as humans, we are racist, homophobic, misogynistic, transphobic, divided. so the ai models we train will learn from this. ai learns from people's creative works--their personal and artistic property. and now it's scrambling them all up to spit out generated images and written works that no one would ever want to read (because it's no longer a labor of love), and they're using that to make money. they're profiting off of people, and there's no one to stop them. they're also using generated images as marketing tools, to trick idiots on facebook, to make it so hard to be media literate that we have to question every single thing we see because now we don't know what's real and what's not.
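here's a deliberately tiny sketch of how that happens. everything in it is made up for illustration: the `history` records, the numbers, and the naive `screen` rule. real resume screeners are far more complicated and usually pick up on indirect signals rather than a literal group label, but the mechanism is the same: the model only reproduces whatever pattern is in the data it was given.

```python
from collections import defaultdict

# Invented historical hiring records for illustration only: (group, was_hired).
history = (
    [("men", True)] * 80 + [("men", False)] * 20
    + [("women", True)] * 20 + [("women", False)] * 80
)


def learn_hire_rates(records):
    """'Training': compute the past hire rate for each group."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, was_hired in records:
        total[group] += 1
        if was_hired:
            hired[group] += 1
    return {group: hired[group] / total[group] for group in total}


def screen(rates, group, cutoff=0.5):
    """Naive screener: pass a resume only if its group's past hire rate beats the cutoff."""
    return rates[group] > cutoff


rates = learn_hire_rates(history)
print(rates)
print(screen(rates, "men"), screen(rates, "women"))  # True False
```

nobody wrote "reject women" anywhere in that code. the skew came entirely from the history it learned from.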

the problem with ai is that it's doing more harm than good. and we as a society aren't doing our due diligence to understand the unintended consequences of it all. we aren't angry enough. we're so scared of stifling innovation that we're letting it regulate itself (aka letting companies decide), which has never been a good idea. we see it do one cool thing, and somehow that makes up for all the rest of the bullshit?

#yeah i could talk about this for years#i could talk about it forever#im so passionate about this lmao#anyways#i also want to point out the examples i listed are ONLY A FEW problems#there's SO MUCH MORE#anywho ai is bleh go away#ask#ask b#🐝's anons#ai

900 notes


Oh, you know, just the usual internet browsing experience in the year of 2024

Some links and explanations since I figured it might be useful to some people, and writing down stuff is nice.

First of all, get Firefox. Yes, it has apps for Android/iOS too. It allows more extensions and customization (except the iOS version), it tracks less, the company has a less shitty attitude about things. Currently almost all the other alternatives are variations of Chromium (Safari being the main exception), which means no matter how degoogled they supposedly are, Google has almost a monopoly on web browsing and that's not great. Basically they can introduce extremely user unfriendly updates and there's nothing forcing them to not do it, and nowhere for people to escape to. Current examples of their suggested updates are disabling/severely limiting adblocks in June 2024, and this great suggestion to force sites to verify "web environment integrity" ("oh you don't run a version of chromium we approve, such as the one that runs working adblocks? no web for you.").

uBlockOrigin - barely needs any explanation but yes, it works. You can whitelist whatever you want to support through displaying ads. You can also easily "adblock" site elements that annoy you. "Please log in" notice that won't go away? Important news tm sidebar that gives you sensory overload? Bye.

Dark Reader - a site you use has no dark mode? Now it has. Fairly customizable, also has some basic options for visually impaired people.

SponsorBlock for YouTube - highlights/skips (you choose) sponsored bits in the videos based on user submissions, and a few other things people often skip ("pls like and subscribe!"). A bit more controversial than normal adblock since the creators get some decent money from this, but also a lot of the big sponsors are kinda scummy and offer inferior product for superior price (or try to sell you a star jpg land ownership in Scotland to become a lord), so hearing an ad for that for the 20th time is kinda annoying. But also some creators make their sponsored segments hilarious.

Privacy Badger (and Ghostery I suppose) - I'm not actually sure how needed these are with uBlock and Firefox set to block any tracking it can, but that's basically what it does. Find someone more educated on this topic than me for more info.

Https Everywhere - I... can't actually find the extension anymore, also Firefox has this as an option in its settings now, so this is probably obsolete, whoops.

Facebook Container - also comes with Firefox by default I think. Keeps FB from snooping around outside of FB. It does that a lot, even if you don't have an account.

WebP / Avif image converter - have you ever saved an image and then discovered you can't view it, because it's WebP/Avif? You can now save it as a jpg.

YouTube Search Fixer - have you noticed that youtube search has been even worse than usual lately, with inserting all those unrelated videos into your search results? This fixes that. Also has an option to force shorts to play in the normal video window.

Consent-O-Matic - automatically rejects cookies/gdpr consent forms. While automated, you might still get a second or two of flashing popups being yeeted.

XKit Rewritten - currently the most up to date fork of XKit, I think? Has settings in extension settings instead of an extra tumblr button. As long as you get over the new dash layout current tumblr is kinda fine tbh, so this isn't as important as in the past, but still nice. I mostly use it to hide some visual bloat and mark posts on the dash I've already seen.

YouTube NonStop - do you want to punch youtube every time it pauses a video to check if you're still there? This saves your fists.

uBlacklist - blacklists sites from your search results. Obviously has a lot of different uses, but I use it to hide ai generated stuff from image search results. Here's a site list for that.

Redirect AMP to HTML - redirects links from their amp version to the normal version. Amp link is a version of a site made faster and more accessible for phones by Bing/Google. Good in theory, but lets search engines prefer some pages to others (that don't have an amp version), and afaik takes traffic from the original page too. Here's some more reading about why it's an issue, I don't think I can make a good tl;dr on this.

Also since I used this in the tags, here's some reading about enshittification and why the current mainstream internet/services kinda suck.

#modern internet is great#enshittification#internet browsing#idk how to tag this#but i hope it will help someone#personal#question mark

1K notes


When Your Antagonist Isn't a Person

Last time I talked about how to character create an antagonist (check it out here if you missed it!) but what happens when your antagonist isn’t a person?

Antagonists don’t necessarily have to be another character (or even one singular character). Rather, an antagonist is anything that raises the stakes and creates conflict for your protagonist. You will likely find this antagonist in your worldbuilding.

1. What your world may say about your antagonist

In a survival story, your protagonist will likely be out in the wilderness alone—thus, their antagonist may be creatures, starvation, dehydration, exposure (freezing, sunburn). Or something like a giant storm, or other natural phenomenon/disaster.

In an urban setting, the antagonist may be the ‘system’ itself; politics, institutions, a way they’ve been disadvantaged or otherwise put down by their world. (Often these systems can be represented by a person, if you so choose).

And a futuristic world opens up to technology being an antagonist; things aren’t working as they’re meant to, AI has gone wrong, or it’s gone too right—the technology is taking away from what the protagonist wants.

2. Goals and Motivations

Your non-human antagonist may not have goals or motivations, or very basic ones. Does a nasty storm exist to destroy humans? Probably not, it just is as it is. A creature’s goal may be to eat, its motivation being that it’s hungry.

However, a system or institution may have deeper goals/motivations. For example, Amazon is a company built to make Jeff Bezos money. Your institution may have a goal it presents to the public, and a true goal (usually monetary, but could also be religiously or politically motivated).

3. Additional sources of conflict

Sometimes non-human antagonists need some extra support to make your character’s life suck. A dangerous storm brewing in the distance is great, but you may also need some additional sources of conflict to keep your character moving until it reaches them.

If your character is taking down Amazon, they may be targeted by police, or drones with guns, or people who live off an Amazon salary, or require the convenience of it.

Often stories without one human antagonist tend to have multiple little antagonists. Survival stories are great for the amount of different conflicts you can throw at a character. You may even introduce small conflicts between other characters, even if those characters aren’t fully antagonists.

Next time I’ll talk about character vs. self: what do you do when your antagonist is also your protagonist?

#writing#creative writing#writers#screenwriting#writing community#writing inspiration#filmmaking#books#film#writing advice#antagonists#when your antagonist isn't a person

734 notes


a generative AI workflow for art that can't reasonably be detected as "AI use"

before we start:

I am an artist with original art in my art tag dating back to 2017

I think intellectual property is a farce and i hate copyright

I don't think image scraping and using it as training data is stealing, the same way I don't think piracy is stealing

I have used my own laptop hardware for these image generations, not any online service

Images are not described, apologies

Step 1: Concept (txt2img)

Let's do a basic edgy fallen angel character as our example. The prompt was something like "fullbody edgy fallen angel, short hair wearing leather vest, chain accessory, broken halo, wings"

Model used: Counterfeit v3.0

Model used: Flat 2D Animerge v4.5

Step 2: Pick and choose parts, then trace

Since I've traced over a completely new image, it is impossible to detect that I've used generative AI at all. It can't be reverse-searched. For all intents and purposes, this is "original artwork by a human".

You can stop here, but I wanted to toss it back in to see what I'd get.

Step 3: img2img generation

I've added a dark background and an outline to the boots for clearer separation of character and background. This generation was done with a high CFG (guidance scale).

For reference, here's high CFG to lower CFG from left to right.
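For readers unfamiliar with the term, here is a minimal sketch of what the guidance scale does, assuming the commonly described classifier-free guidance formula: start from the unconditioned prediction and push it toward the prompt-conditioned one. The function name `apply_cfg` and the toy numbers are made up for illustration; they are not taken from the generations above.

```python
def apply_cfg(uncond, cond, scale):
    """Blend an unconditioned and a prompt-conditioned noise prediction.

    scale = 1.0 returns the conditioned prediction unchanged;
    larger values exaggerate the difference the prompt makes.
    """
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# Toy stand-ins for a model's two noise predictions (hypothetical values).
uncond = [0.0, 1.0, 2.0]
cond = [1.0, 1.0, 0.0]

low_cfg = apply_cfg(uncond, cond, 1.0)   # follows the prompt loosely
high_cfg = apply_cfg(uncond, cond, 7.5)  # follows the prompt much harder

print(low_cfg)
print(high_cfg)
```

The higher the scale, the further the result is pushed away from the unconditioned prediction, which is why high-CFG outputs tend to look more forced and saturated.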

Step 4: Upscale and paintover

Obviously, these aren't flawless images. So we'll paint over some parts manually until it becomes "human art"

I only did the face for brevity's sake. I've fixed lighting, flattened out the noise, fixed her vest, and overall tried to make it more consistent. Direct paintover is harder to execute, but it is completely possible to cover all "tells".

It's not my best work as I'm not that experienced with anime style illustration, but it will pass a general AI sniff check (using sightengine).

for comparison, here's some of my other original paintings.

Closing thoughts

You'll never really be able to actually 100% identify any art as AI generated or assisted, unless you're literally watching an artist over their shoulder. Models are only getting better at generation. Generative AI is just a Pinterest replacement in terms of art tools, really. Harassing artists and fearmongering about the use of AI is stupid and reactionary. You can still enjoy art even if it's generated.

The end

108 notes


Text

If you don't trust subliminals, make your own. (how to do that)

It's really simple, but I've seen a lot of people who say they wish they could but don't know how so here's everything you need to know.

You don't need a computer for this; you can use your phone.

What you need:

An editing software, capcut works great

Text to speech, you can use Google translate, your own voice, or if you're extra: an AI voice replica of your comfort character

A list of affirmations that resonate with you or a list of what you want

Music, ASMR, nature sounds, frequencies. Capcut has free music, you can also screen record any of these off of YouTube. Feel free to create a custom DR ambiance with this.

Formulas

These work best if you mix and match. Do whatever you want, there are no rules.

1. I, you, we

Example:

"I can shift.

You can shift.

We can shift."

This basically lets it sink into your subconscious.

2. I feel I love

This tricks your subconscious into believing you have it by telling yourself how much you love having it.

Example: " I love how often I shift"

This is not just limited to "I love" but any feelings you would feel if you had achieved your goal.

3. Why do I

Pretty straightforward: this phrases affirmations as questions.

Example: "why is shifting so easy for me"

4. Adverb

This is something you can add to affirmations to tweak them. Things like "instantly" or "effortlessly". I don't really think it warrants an example.

5. Everyone thinks, everyone says

This basically is affirming that everyone also believes you have your manifestation and you should too.

Example: "Everyone says your skin is flawless"

6. Mantras

Things that rhyme, are fun to say, or get stuck in your head. They work great!

There are many more, but these are just some ideas to get you going.

How to put it together

Write out your list of affirmations

Record text to speech, your voice, or an AI voice saying your affirmations

In whatever you're using to edit, extract the audio and layer it with a louder audio of music, asmr, ambiance etc.

Lower the volume of the affirmations

If you feel like it add images you associate with what you're wanting

If you want it on YouTube you can upload it and keep it on private or unlisted

That's literally it.

#subliminal#subliminals#shifting antis dni#reality shifting#shifting community#shifting#shiftblr#shifting realities#shifting reality#loa manifesting#loassumption#loa blog#loa tumblr#affirmations

309 notes


Text

Music comms are CLOSED!! Check out the waitlist here!!

Ok y'know what, screw it. My brain seems to require three-four pieces at one time (genuinely cannot figure out why that is), and with the fact I only have two queued up right now and the game I'm composing for doesn't need any tracks at the moment, I'm getting composer's block again. So we're OPENING my music requests!!

I'm actually stunned at how many people seemed interested in getting a piece of soundtrack music for their f/o. I'm opening it to non-mutuals, and it's totally free! If you're concerned about paying/tipping for work, I'm always happy to receive content for my selfship, but I will not accept any money, and there's no pressure to tip content anyway. Again, this is for fun!

This is how it works:

Fill out this google form with the title of your ship, some songs you like, instruments, etc etc.

You can message on Tumblr or Discord (@/slipperson on Discord) on top of submitting the form too! I'll reach out myself once I get started on your piece.

I'll sketch out a draft, which is exactly like sketching out a basic pose for art - it'll typically only use piano/minor percussion. Sometimes I'll even give a simple concept before I flesh out a draft. I'll send it to you for approval.

If changes are needed, I'll refine the draft and re-update. If not, I'll go on to fleshing out the instrumental - this means adding instruments, changing volume (for example, in my first example, I used a lot of "dynamics"/volume changes to simulate the swelling of instruments). This is like adding the flat colors in a piece of art!

I'll send it to you again - I'll make changes upon your request, but if approved, I'll finally go ahead and mix the final draft. This means putting it through an audio program (audacity if you're curious!) and polishing the sound. This is like rendering the lighting!

After it's done, I'll send it to you for one last listen. Upon approval, I'll post it to Soundcloud, link it on Tumblr, and tag you in the post!

Important bits:

No comship/proship/aged up-or-down/RPF ships. Live action characters are fine as long as it's not the actual person. Familial/platonic ships are totally okay!

If you are a minor/ageless blog, I'm willing to write a piece for familial/platonic content, but not QPR/romantic.

Downtime is 1-2 months after I first open your request. I may finish it sooner, but no later than 2 months. This is because music generally takes a while--30 seconds of music can take me 4-5 hours to concept! I also tend to work on 3-4 pieces of music at a time.

I will give frequent updates. Don't be afraid to reach out if you're curious about the status!

My work is never cleared to be used commercially or in AI programs. We're a bunch of selfshippers on Tumblr, so I know we all hate AI, but it's worth the mention. I tend to be strict on copyright - it'll stay under my name, all rights reserved - however, you are free to use your piece wherever you'd like as long as it's not commercial use, used in a monetized campaign/video/form of media, and not used in AI.

I may put these tracks on a streaming service at a later date - not on Spotify, as the service is TERRIBLE with allowing their work to be remixed into AI. Something like Bandcamp or Soundcloud for Artists. If you are uncomfortable with this, please let me know.

Examples:

I will have my queue/completed list on my carrd here.

Thank you so much for your interest!! I'm actually so stunned I got so much love for this, and I'm excited to celebrate your ships with you!

heart border

#self ship#fictional other#yumeship#self ship community#silver musics#silver talks#love letters by slipperson#SoundCloud

56 notes


Note

thoughts on xDOTcom/CorralSummer/status/1823504569097175056 tumblrDOTcom/antinegationism/758845963819450368 ?

I mostly try to ignore AI art debates, and as a result I feel like I don't have enough context to make sense of that twitter exchange. That said...

It's about generative image models, and whether they "are compression." Which seems to mean something like "whether they contain compressed representations of their training images."

I can see two reasons why partisans in the AI art wars might care about this question:

If a training image is actually "written down" inside the model, in some compressed form that can be "read off" of the weights, it would then be easier to argue that a copyright on the image applies to the model weights themselves. Or to make similar claims about art theft, etc. that aren't about copyright per se.

If the model "merely" consists of a bunch of compressed images, together with some comparatively simple procedure for mixing/combining their elements together (so that most of the complexity is in the images, not the "combining procedure"), this would support the contention that the model is not "creative," is not "intelligent," is "merely copying art by humans," etc.

I think the stronger claim in #2 is clearly false, and this in turn has implications for #1.

(For simplicity I'll just use "#2", below, as a shorthand for "the stronger claim in #2," i.e. the thing about compressed images + simple combination procedure)

I can't present, or even summarize, the full range of the evidence against #2 in this brief post. There's simply too much of it. Virtually everything we know about neural networks militates against #2, in one way or another.

The whole of NN interpretability conflicts with #2. When we actually look at the internals of neural nets and what is being "represented" there, we rarely find anything that is specialized to a single training example, like a single image. We find things that are more generally applicable, across many different images: representations that mean "there's a curved line here" or "there's a floppy ear here" or "there's a dog's head here."

The linked post is about an image classifier (and a relatively primitive one), not an image generator, but we've also found similar things inside of generative models (e.g.).

I also find it difficult to understand how anyone could seriously believe #2 after actually using these models for any significant span of time, in any nontrivial way. The experience is just... not anything like what you would expect, if you thought they were "pasting together" elements from specific artworks in some simplistic, collage-like way. You can ask them for wild conjunctions of many different elements and styles, which have definitely never been represented before in any image, and the resulting synthesis will happen at a very high, humanlike level of abstraction.

And it is noteworthy that, even in the most damning cases where a model reliably generates images that are highly similar to some obviously copyrighted ones, it doesn't actually produce exact duplicates of those images. The linked article includes many pairs of the form (copyrighted image, MidJourney generation), but the generations are vastly different from the copyrighted images on the pixel level -- they just feel "basically the same" to us, because they have the same content in terms of humanlike abstract concepts, differing only in "inessential minor details."

If the model worked by memorizing a bunch of images and then recombining elements of them, it should be easy for it to very precisely reproduce just one of the memorized images, as a special case. Whereas it would presumably be difficult for such a system to produce something "essentially the same as" a single memorized image, but differing slightly in the inessential details -- what kind of "mixture," with some other image(s), would produce this effect?

Yet it's the latter that we see in practice -- as we'd expect from a generator that works in humanlike abstractions.

And this, in turn, helps us understand what's going on in the twitter dispute about "it's either compression or magic" vs. "how could you compress so much down to so few GB?"

Say you want to make a computer display some particular picture. Of, I dunno, a bird. (The important thing is that it's a specific picture, the kind that could be copyrighted.)

The simplest way to do this is just to have the computer store the image as a bitmap of pixels, without any compression.

In this case, it's unambiguous that the image itself is being represented in the computer, with all the attendant copyright (etc.) implications. It's right there. You can read it off, pixel by pixel.
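To put a rough number on how much room an uncompressed bitmap takes, here is a small sketch. The 512x512 size and the 8-bit RGB layout are assumptions chosen for illustration, not details of any particular picture.

```python
def bitmap_bytes(width, height, channels=3, bits_per_channel=8):
    """Size in bytes of an uncompressed bitmap: every pixel is stored in full."""
    return width * height * channels * bits_per_channel // 8


# Hypothetical 512x512 RGB picture of a bird.
size = bitmap_bytes(512, 512)
print(size)  # 786432 bytes, i.e. 768 KiB for one small picture
```

Every one of those bytes is a literal piece of the picture, which is what makes the "it's right there" reading so straightforward in this case.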

But maybe this takes up too much computer memory. So you try using a simple form of compression, like JPEG compression.

JPEG compression is pretty simple. It doesn't "know" much about what images tend to look like in practice; effectively, it just "knows" that they tend to be sort of "smooth" at the small scale, so that one tiny region often has similar colors/intensities to neighboring tiny regions.

Just knowing this one simple fact gets you a significant reduction in file size, though. (The size of this reduction is a typical reference point for people's intuitions about what "compression" can, and can't, do.)

And here, again, it's relatively clear that the image is represented in the computer. You have to do some work to "unpack" it, but it's simple work, using an algorithm simple enough that a human can hold the whole thing in their mind at once. (There is probably at least one person in existence, I imagine, who can visualize what the encoded image looks like when they look at the raw bytes of a JPEG file, like those guys in The Matrix watching the green text fall across their terminal screens.)

But now, what if you had a system that had a whole elaborate library of general visual concepts, and could ably draw these concepts if asked, and could draw them in any combination?

You no longer need to lay out anything like a bitmap, a "copy" of the image arranged in space, tile by tile, color/intensity unit by color/intensity unit.

It's a bird? Great, the system knows what birds look like. This particular bird is an oriole? The system knows orioles. It's in profile? The system knows the general concept of "human or animal seen in profile," and how to apply it to an oriole.

Your encoding of the image, thus far, is a noting-down of these concepts. It takes very little space, just a few bits of information: "Oriole? YES. In profile? YES."

The picture is a close-up photograph? One more bit. Under bright, more-white-than-yellow light? One more bit. There's shallow depth of field, and the background is mostly a bright green blur, some indistinct mass of vegetation? Zero bits: the system's already guessed all that, from what images of this sort tend to be like. (You'd have to spend bits to get anything except the green blur.)

Eventually, we come to the less essential details -- all the things that make your image really this one specific image, and not any of the other close-up shots of orioles that exist in the world. The exact way the head is tilted. The way the branch, that it sits on, is slightly bent at its tip.

This is where most of the bits are spent. You have to spend bits to get all of these details right, and the more "arbitrary" the details are -- the less easy they are to guess, on the basis of everything else -- the more bits you have to spend on them.
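One way to make "the less easy they are to guess, the more bits you have to spend" concrete is the standard information-theoretic measure: a detail the system would guess with probability p costs about log2(1/p) bits. The probabilities below are invented for illustration, and treating the green blur as certain (p = 1) is an idealization.

```python
import math


def bits_needed(probability):
    """Information content, in bits, of a detail the system assigns this probability."""
    return math.log2(1.0 / probability)


# Hypothetical probabilities the system assigns to each detail,
# given everything it has already been told about the picture.
details = {
    "blurred green background": 1.0,  # already guessed: costs nothing
    "close-up photograph": 0.5,       # a coin flip: one bit
    "exact tilt of the head": 1 / 1024,  # hard to guess: many bits
}

for name, p in details.items():
    print(f"{name}: {bits_needed(p):.1f} bits")

total = sum(bits_needed(p) for p in details.values())
print(f"total: {total:.1f} bits")
```

In this toy version almost the entire budget goes to the one arbitrary detail, which mirrors the point above: the generic content is nearly free, and the specifics are where the bits are spent.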

But, because your first and most abstract bits bought you so much, you can express your image quite precisely, and still use far less room than JPEG compression would use, or any other algorithm that comes to mind when people say the word "compression."

It is easy to "compress" many specific images inside a system that understands general visual concepts, because most of the content of an image is generic, not unique to that image alone.

The ability to convey all of the non-unique content very briefly is precisely what provides us enough room to write down all the unique content, alongside it.

This is basically the way in which specific images are "represented" inside Stable Diffusion and MidJourney and the like, insofar as they are. Which they are, not as a general rule, but occasionally, in the case of certain specific images -- due to their ubiquity in the real world and hence in the training data, or due to some deliberate over-sampling of them in that data.

(In the case of MidJourney and the copyrighted images, I suspect the model was [over-?]heavily trained on those specific images -- perhaps because they were thought to exemplify the "epic," cinematic MidJourney house style -- and it has thus stored more of their less-essential details than it has with most training images. Typical regurgitations from image generators are less precise than those examples, more "abstract" in their resemblance to the originals -- just the easy, early bits, with fewer of the expensive less-essential details.)

But now -- is your image of the oriole "represented" in computer memory, in this last case? Is the system "compressing" it, "storing" it in a way that can be "read off"?

In some sense, yes. In some sense, no.

This is a philosophical question, really, about what makes your image itself, and not any of the other images of orioles in profile against blurred green backgrounds.

Remember that even MidJourney can't reproduce those copyrighted images exactly. It just makes images that are "basically the same."

Whatever is "stored" there is not, actually, a copy of each copyrighted image. It's something else, something that isn't the original, but which we deem too close to the original for our comfort. Something of which we say: "it's different, yes, but only in the inessential details."

But what, exactly, counts as an "inessential detail"? How specific is too specific? How precise is too precise?

If the oriole is positioned just a bit differently on the branch... if there is a splash of pink amid the green blur, a flower, in the original but not the copy, or vice versa...

When does it stop being a copy of your image, and start being merely an image that shares a lot in common with yours? It is not obvious where to draw the line. "Details" seem to span a whole continuous range of general-to-specific, with no obvious cutoff point.

And if we could, somehow, strip out all memory of all the "sufficiently specific details" from one of these models -- which might be an interesting research direction! -- so that what remains is only the model's capacity to depict "abstract concepts" in conjunction?

If we could? It's not clear how far that would get us, actually.

If you can draw a man with all of Super Mario's abstract attributes, then you can draw Super Mario. (And if you cannot, then you are missing some important concept or concepts about people and pictures, and this will hinder you in your attempts to draw other, non-copyrighted entities.)

If you can draw an oriole, in profile, and a branch, and a green blur, then you can draw an oriole in profile on a branch against a green blur. And all the finer details? If one wants them, the right prompt should produce them.

There is no way to stop a sufficiently capable artist from imitating what you have done, if it can imitate all of the elements of which your creation is made, in any imaginable combination.

111 notes


Text

ARE YOU SURE?!

Episode 3 production Notes

I genuinely wasn't expecting to have much to say on this when I started it but there are definitely some things to chat about with this episode. Get comfy, it's another long post!

Here's a link to my post on eps 1 & 2.

(I guess this is a series now)

The Tone

I was curious about how a few things would be handled with the remainder of the show, and some of the answers are starting to unfold. We definitely have to see how the upcoming episodes play out but there's at least something to evaluate.

My main takeaway from episode 3 is that the tone is so very different from the previous ones. In my last post, I spent quite a bit explaining why AYS was a successful mix of Bon Voyage and In The Soop. That's definitely not the case here. This episode is a mix of Bon Voyage and Run BTS tonally. This episode is more what I was expecting they'd try to do with the edit to highlight the chaos and shenanigans that were a main ingredient in the content of BTS as a full team, especially to cover for the reduced member count from the full group.

Some of the main items that make this episode seem more energetic than the previous ones are:

The timeline. Episode 3 covers far less time than 1 or 2 did. It makes it seem like there's just too much going on to fit in the same parameters, which again makes the tone seem overall more rushed and hectic.

The music: far higher bpms consistently on all of the backing tracks.

The choppy edits. When we recognize that there was something missing in the middle of an interaction, our brains are still processing what we might have missed and orienting to what we're seeing next. With all of that going on, it's easy to feel 'left behind' in even the most casual of scenes.

The intended audience. I just had one moment here that bumped me. At some point one of the members clearly says 'Fighting' but the translation wasn't just that word. It was a whole sentence about wishing for encouragement or something. These episodes have been very clearly laid out for an audience that is not only familiar with BTS but enough so that we know who each of these members are and why they might be doing these kinds of activities. If you had none of this basic knowledge, I genuinely don't think this show is even watchable...so why would people with that level of understanding need an alternate translation to 'Fighting'? Idk, maybe I'm being overly sensitive but like I said, it bumped me enough to question who their target audience was. Something I didn't even question with the prev eps.

Edited to add: an example of the tone influenced by the edit. Can't believe I forgot this one. It stood out to me in my first watchthrough. Thankfully our beautiful giffers captured the story beats for us all.

The Edit

Like I said at the beginning, this episode was far less a blend of ITS and BV and more a mashup of BV with Run BTS. The only thing in this episode that reminds me of ITS is the extremely choppy edit, which I think most would agree was not one of the highlights of those shows.

There are so many unresolved story beats in this episode of AYS! If you're not used to noticing something like this, here's an example:

In the car after their meal, vmin discuss that it'd be nice to pull over for some pics.

At the coffee drive through, Jimin makes a point to coordinate a stopover with JK so he can have his drink.

...and then we arrive at the house with no resolution. Did they stop? Did JK get his drink? We don't know, the only evidence we have is Tae carrying his partially consumed drink. So IF they stopped, Tae either didn't drink his or it was a very brief stop to not finish.

Here's another one:

When arriving at the house, the guys are all commenting about how nice the place is.

Jimin exclaims about the pool.

...we don't get an establishing shot of the pool. On a rewatch of the episode, once you already know where it is, you're able to notice it for the slightest of moments but that's it. No hint of how big the pool is or even where in relation to the members it's located. It's just a tiny thing, not even important to the storyline but it leaves the audience without a reference to what the members are talking about.

There are dozens more examples I could list but I think you get the idea. Again, not a big deal on small productions but you KNOW there was plenty of b-roll footage of the place. We see some of it. These are mostly just tiny observations that, if isolated, wouldn't mean anything, but repeatedly set the tone for how the audience will be experiencing the rest of the episode.

The Guest

Another choppy edit is how they handled the introduction of Tae and the narrative that explains his presence here when he wasn't in the previous episodes. They either didn't get any good footage explaining it or they decided to purposefully try to cut it 'dynamically' for a reveal... in my opinion, this was not successfully done and just leaves the viewer feeling like we just need to accept the confusion and enjoy the endearing moments....which is exactly one of the main things I comment on in my Run BTS series. The BTS production team is astoundingly terrible at entertaining exposition. Long time armys are just used to it by now and I really don't see them changing anytime soon. They know ppl will watch the content for the moments we get to spend with the members, whether there's a proper narrative or not, so why bother?

Anyway, it's clear to see why those who have a bias against Tae have been using this as ammunition in their fanwars. It's just an awkward narrative that was poorly presented. (Personally, I'd rather everyone just focus on celebrating the members' moments with each other rather than warping them to win imaginary points in a pointless battle but alas, I'm just an aging fan in a space that rewards useless vitriol).

Jimin's sickness...again

Poor guy just can't catch a break! At least that was the point they were trying to make editing it this way. Honestly, Jimin has so far been not as impacted as he was in the previous episodes. Likely due to this upset being far more mild than the last one. However, there's so much more tension with it cut the way it was in episode 3. It's not just that we're aware of it upfront unlike we were in episode 1. It's only highlighted in moments where Jimin is NOT participating in whatever the others are: not eating, not swimming, not climbing. In episode 1, most of the scenes that included any mention of Jimin feeling unwell also included some hint of JK wanting to take care of him or inquiring after his health. Episode 3 includes no such scenes. The overall impact is a much lighter view of how the members spent an exciting day rather than a genuine look into how they're interacting with each other as humans.

One last note: The Sound

There are definitely some horrific sound moments this episode! So much so that I noticed some on my first watchthrough! Again, there are allowances to be made, this is not a show being shot on a sound stage in controlled conditions but some moments were just plain misses in the edit.

For example, when vmin is listening to music in the car, the balancing of SEVEN from vmin's audio is horrific. The way to do this properly is manage the audio levels of their voices alone and overlay with the music track. This will help mask any audio that leaked into their mics. Something that can be easily done with rights to the music...which they have. And oh look at that, they do it correctly for J-Hope's Arson! It honestly is just coming across as a missed correction on the edit but I'd expect more from a BTS show that's licensed to Disney.

That's it for now. Overall, I'm disappointed in the edit of this episode but moreso because of how pleasantly surprised I was by the previous episodes. This one was very much on par with other BTS content. I'm also looking forward to the remaining episodes as I estimate we'll see a little more liveliness from our latenight-loving Jimin now that he's had his nap! 😆

Edited to add:

Are You Sure?! MasterList

113 notes


Note

to quote night in the woods: I believe in a universe that doesn’t care and people who do

Yeah in my mind, I don't think the Universe can care in a human way.

To me it's basically an omniscient supercomputer. It knows everything there is to know, it has full control over reality, and it takes in requests (wishes) without thinking about morality or any negative impact it could have (it does try to avoid bugs and patches them if they do appear)

The way it “thinks” is not human. You know how, for example, an ai trained to create an organism that can move and cross a finish line will come up with WILD solutions? Like for example making an organism that just explodes until a BIT of its body crosses the finish line, thus technically completing the objective?

To me, that's how the Universe grants wishes. As long as the wish is granted, it did its job, no matter HOW it's actually granted. It does work with an infinitely more precise and exhaustive set of parameters, making the wishes not as unpredictable, but it still has some wriggle room to “mess things up” (in a human perspective)

Also also, crucially, it grants people's WISHES, not necessarily their NEEDS. What someone wants can be very harmful or even prevent them from reaching their needs! But the Universe can’t help it! In a way, it’s the biggest people pleaser in this entire reality, it can’t say no to a properly performed wish!

I actually have a mini comic planned using that interpretation of the Universe. It's about the Change God explaining exactly why Loop's wish went that way and how they're a dumb whiny baby but that'll happen when I have more free time lmao

Here's a sneak peek tho! :D

#sorry I took your very nice quote and started yapping about my headcanons instead… my bad ndjdndnd#isat#isat spoilers#in stars and time#in stars and time spoilers#isat two hats#isat loop#isat headcanon#isat meta#isat theory#isat the universe#isat au#washed up loop au#ask valictini

56 notes


Text

Alhaitham in an Art Nouveau inspired style. Here's a thread I wrote about this concept on Twitter; below the cut will be a copy of the text. Sorry if it takes a weird format on tumblr since it was initially written as a twt thread.

This might not make a lot of sense to some of you, but before I talk about Alhaitham and Art Nouveau I'd like to talk about Kaveh and Romanticism. The connection between Kaveh and Romanticism can be more easily made, especially with characters such as Faruzan calling him a romantic.

The Romantic movement, as the name suggests, is very emotionally driven. It's a movement that values individualism and subjectivism; its objective is to evoke an emotional response, most commonly feelings of sympathy, awe, fear, dread and wonder in relation to the world.

Basically the artistic view of the Romantic is to represent the world while trying to say "we are hopeless in the grand scheme of things, little can we do to change the world yet the world is always changing us"

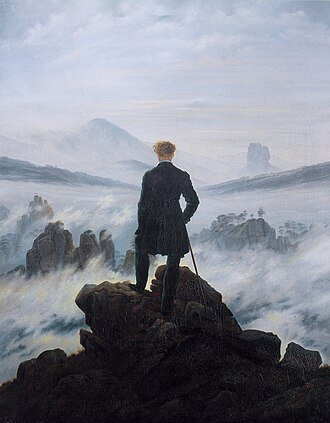

In Romantic pieces the man is always small compared to the setting they find themselves in. See the painting Wanderer Above the Sea of Fog by Caspar David Friedrich as an example: the human figure is central but relatively insignificant to the world.

Another thing about Romanticism is the importance of beauty: it's through it that the Romantic seeks to get in touch with their emotions and intuition, and it's through these lenses that they see the world. The Kaveh comparison should be easy to make with these descriptions.

Kaveh's idle chat "The ability to appreciate beauty is an important virtue" just cements to me the idea that his romanticism is closely connected to the artistic movement. He does have an argument against this connection but I'll bring it up later on the thread.

Now that I used the opportunity to talk about my favorite character in a thread that wasn't supposed to be about him let's go back to Alhaitham and how to connect him to the Art Nouveau movement

But seriously, I brought up Kaveh's more obvious connection to Romanticism because the Nouveau movement was created as a direct mirrored response to the Romantic movement, and we all know how we feel about mirrored themes between these two characters

Art Nouveau is about rationality and logic, the movement was used more comonly on mass produced interior design pieces or architectural buildings, it's a movement much more focused on functionality than on art appreciation

They also had a big focus on the natural world but in a very different way, while Romantics saw nature as a power they couldnt contend with, artists from the Nouveau used the natural as an universal symbolical theme for broad mass appeal

Flowers, leaves, branches, complexes and organic shapes are the basis of this style, the logical side of it coming from the mathematics needed to create these shapes and themes in ways that were appealing and also structurally sound

To appreciate the Art Nouveau style is to understand it is a calculated artistic movement (another reason to be salty about an AI generated image trying to emulate it). In short, this style is less about the art and more about the rationality in the mathematics to make it

Another note I'd like to point out is that I love how both Alhaitham and Kaveh have dendro visions while both movements are so nature centric in different ways, Romanticism seeing it as a subjective power and Art Nouveau seeing it as recognizable symbols

I mentioned an argument against the Kaveh comparison before: the one thing that bothers me about Romanticism is how negative it is in relation to humanity's position in the world and how that relates back to Kaveh

In the Parade of Providence it was explicitly shown how much Kaveh dislikes the idea of people seeing themselves as helpless in relation to the problems of the world

People may suffer but there is something he can do to help them and he will do it

It doesn't feel right for me to say that Kaveh fits the Romantic themes because of his suffering, in a similar sense it also doesn't feel right to me to say Alhaitham fits Art Nouveau because of his rational behaviour while he as a character is a lot more complex than that

This thread was done all in fun and love for an artistic discussion, it's not a perfect argument to connect these characters and movements

+ I haven't studied art history in a year, if anyone knows more about these movements please tell me I love learning new things

++ Really sorry if my English is bad or I sound repetitive, it's not my first language and I'm trying my best here

Thanks for reading

I love you, have a nice day/evening/night

#my art#genshin impact#alhaitham#al haitham#i'll also tag#kaveh#because of the text#anyways uhm#in short i saw an ai generated image claiming to be alhaitham in an art nouveau style#because of that i felt the urge to not only redraw it so there was a human made piece resembling it#but also to erm actually it in general#it activated my art history autism so i had to

109 notes


Text

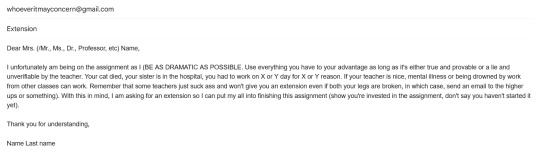

yeah so if you genuinely don't know how to write an email instead of using chatgpt and getting something that 1: has a chance to just fucking suck (and potentially be noticeably AI) and 2: uses stolen content, ruins the environment and indicates to the gen-AI companies that you want more gen-AI bullshit that will steal things and ruin the environment, you can look up how to write an email or look up email examples. here's a website, here's another, here's the wikihow page (it's a bit shit but it does its job), here's a good one for formal emails, and here's an email I sent today (more examples under the cut):

something that's really annoying right now is that most websites are desperately trying to sell you their cool funky AI friend that can write the email for you, and look at me: you have to tell it to go fuck itself (in your head, don't use it). this isn't about you being a moron for not being able to write an email, I struggled with it for a while too, I still do sometimes, writing emails notoriously sucks. gen-AI sucks more.

also, this might not be the case for everyone, but please at least try to learn how to write the email before using chatgpt, it will help you forever. if you have a question about emails or if you're not sure how to write one specific email, you can send an ask: I'm not all-knowing but I'll do my best to help.

I can mostly help for college/high school levels and I am studying in a French school, so the codes may not be exactly the same, but I am in fact being taught by English speakers, sometimes native ones.

I'll give more general advice at the end, but here are a few examples of emails I would send.

If there's even a small chance of your teacher not recognizing you, write at the top something like "I am Name Last name, I am in your X-Y-Z class on Mondays from 8AM to 9AM". This isn't too useful in high school because your teachers likely know you, but in college your teachers might not. This will give them context.

Do your best to avoid typos or grammar errors. Reread your email, especially if the teacher is a language teacher.

Be polite, always, unless the teacher explicitly specified they don't care.

You do not need to beg for anything, don't debase yourself, and if a teacher makes you debase yourself, report them. You shouldn't have to beg for something that you ask for in an email. (so no more than one "please" per email, and avoid even that one "please" if possible).

If it's possible and safe for you, prefer discussing important matters IRL.

Remember who you're talking to. Is the teacher strict or chill? Younger or older? Are they a white abled man or a Black disabled woman? Are they very into "respect the teacher!!" or do they put themselves at your level? Are you a 15 y/o high school student or a 20 something college student? Is this teacher familiar with you? Have they been understanding in the past? etc.

Generally, despite all my warnings above, a simple polite email will be fine with most teachers. If you're not sure how to identify the above possibilities or how to alter your emails depending on them, just write a formal, polite email (like seen above).

Some universities have online courses that teach you how to write emails. If there is a web-type course in your university and you can take it, take it.

Mine has one. I hate it. They defined a tweet as a "post on a blogging platform". I have to complete it or I don't pass. It still has a good tutorial for writing emails. You are lucky in the sense that emails are like the basic thing that even the boomer teachers know how to do (even if they don't like doing it), so there are a lot of resources for people who haven't written emails yet and need to learn.

If possible, ask your teacher at the start of the year what email to contact them with - if you're lucky, they'll say things about what kinds of emails they want.

If you're luckier still, someone else will send a shit email and the teacher will make a point during the class of reminding everyone how to write a proper email.

I put "Dear name" everywhere, but if it's not an extremely formal setting, some teachers will be fine with a "Hello". If you're not sure of the receiver's gender, use their title (Dr. etc).

For the extension: sometimes teachers aren't allowed to give you an extension or are assholes who don't want to give you an extension. In that case, don't bother writing another email (again: don't beg. + it will make them dislike you which you don't want).

This works more in work settings, but I read once that it's good to say "I will be taking a day off" rather than "May I take a day off/is it possible to take a day off". Just say that it's going to happen.

Know your rights. I can't know them for you. Figure out what the teacher can and cannot do through legal documents on your school's website or whatever. Know your rights depending on your state or country.

If you have a bad memory and don't want to have to look up how to write an email every time, open your notes app or your notepad or any preferred place to take notes and write down the important parts. I'd advise noting common greetings, subjects, opening and closing lines. Same for your teachers, if you need to remember which one is a bitch and which one is chill, write their name down with a description.

#people who know how to write emails. I'm calling you. post email examples lmao#like if you have time to make a guide or to compile examples. do it#mumblings//#emails#how to write an email#chatgpt#(if you're a tech bro and you see this: do not bother I will block you)

46 notes


Text

OnK Chapter 150

Honestly, the naive part of me wants to believe Aka is doing this on purpose, because this chapter alone highlighted like half the reasons why I find romantic!Aqua and Kana so poorly written lmao

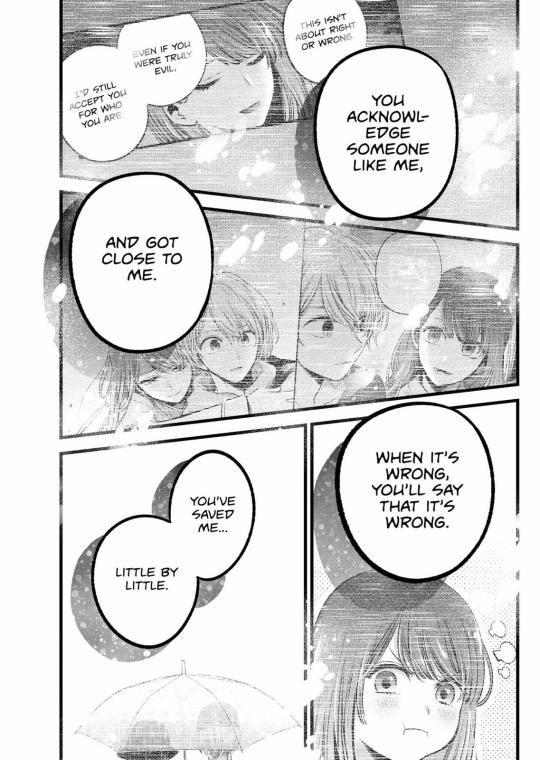

Compare that to this:

The writing in Aqua's and Akane's is so much better it's unreal 😂

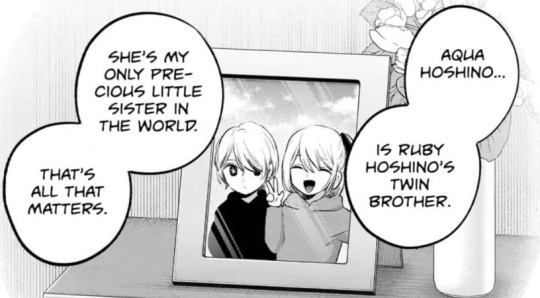

I'm so glad to have confirmation that Goro's regrets were appeased by knowing that Sarina is living her best life as Ruby. Goro acting like an over-protective dad and Aqua reaffirming that Ruby is his precious little sister were the highlights of the chapter for me. Figures that once Aka finally gives us some Aqua insight, he immediately makes it clear where Aqua stands in regards to Ruby lmao

Goro is often personified as the guilt and regrets Aqua carried into this new life, but he is much more than that.

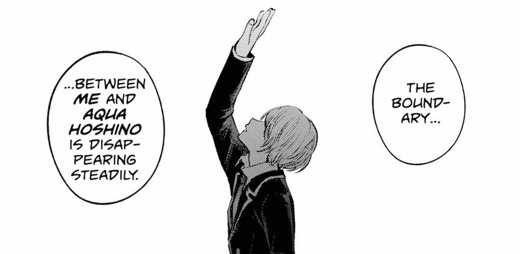

He is an entire framework of thoughts, complexes and experiences right there at the center of the individual we have come to know as Aqua. He is the entire base Aqua is built on, because when he reincarnated, he was just Goro - albeit a Goro thrown into a completely different situation, and a completely different life.

Of course, the longer Goro lives as Aqua, the more Aqua he becomes. He has been developing a new framework of thoughts, complexes and experiences that are more befitting of his situation and based on his current life. This all results in the Aqua we've come to know.

Up to now, Aqua has been simultaneously existing as the man he once was and the young boy he has become. But the man he once was is now feeling at peace knowing that Sarina-chan has gotten a new chance at life, which leaves the young boy he has become with one less reason to cling to a painful past.

But things aren't that easy, as evidenced by the fact that even after being "freed" from his past guilt, Aqua still has his black stars. As Aqua, he has regrets, guilt and issues of his own to overcome.

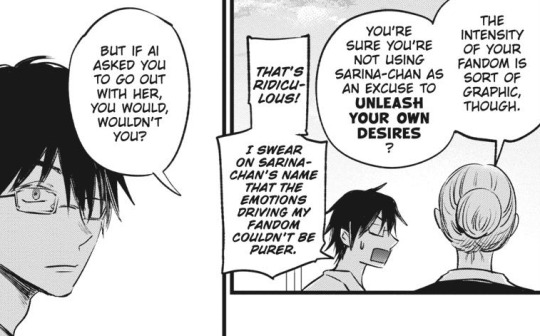

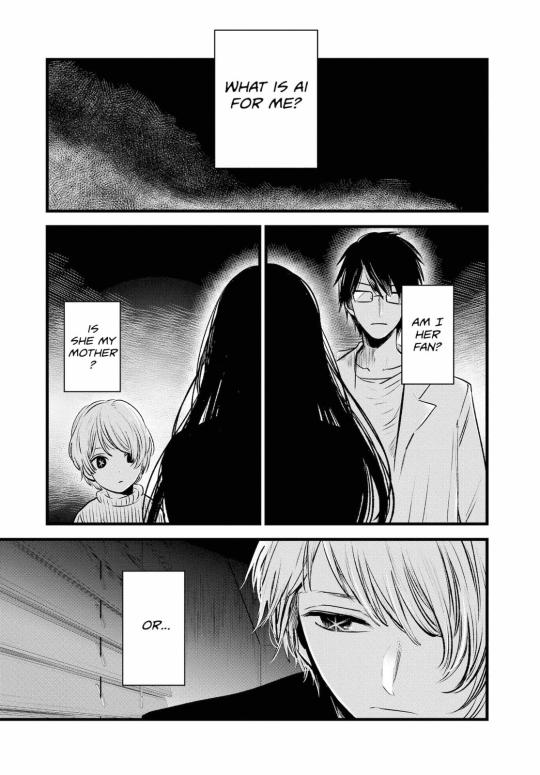

But it isn't just the revenge and the guilt, really. This, for example, is a confusion that has followed Aqua into his new life:

Which takes me to...

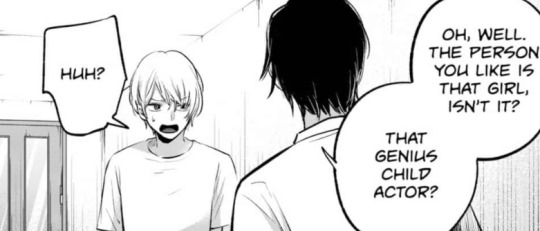

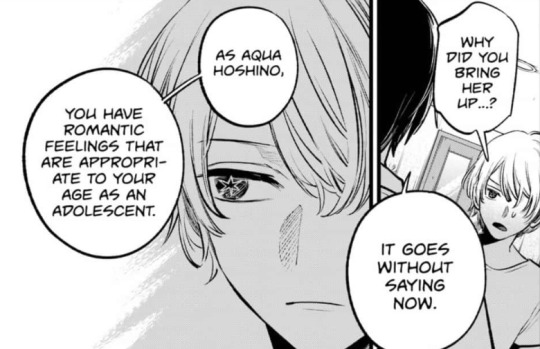

It's so incredibly ironic that it's "Goro" of all people who brings up Kana 😭 I've mentioned before that Kana has a lot of parallels with Ai and Sarina, and I theorized this may be one of the reasons why Aqua seemed so drawn to her from the get-go. And now we have Goro himself, the one who originally admired all of those traits, saying that Aqua likes Kana. It's like clockwork, except the clock may be broken.

The reasons Goro cites are so shallow and superficial, too. Perfectly fitting for an Oshi or a teenage crush, but hard to think of as anything deeper than that (for me, at least).

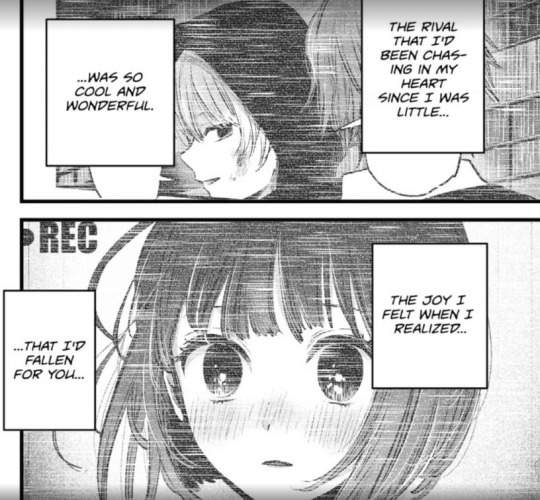

Which is even more ironic, because we end the chapter with Kana declaring herself as "seriously in love" with Aqua, when she herself does nothing but describe him superficially 😭

Kana has been basically living a shoujo manga in her head and Aqua is her chosen Male Lead 😂 It's like that time she thought Aqua was "straight and sincere", or when she thought Akane was a "goody-two-shoes".

Meanwhile, Aqua and Akane:

Poor Kana is out of her depth in this manga, but maybe that's the point. Kana is perfectly normal and that's just what Aqua needs am I right?

Seriously though, that's why I've always said that to me it doesn't really matter if Aqua and Kana end up together, because their writing is just... not it 😭 It's always just one giant trope without any depth or substance. It's no coincidence that these last three chapters are filled with tropes and forced writing. That's the way this ship has always been written in my eyes, and that's why it does nothing for me regardless of whether it's intended to be canon or not 😭

Even this, for example:

Aqua confirming (yet again) that he has been aware of Kana's romantic feelings all along could back up what I said here and here. But at the same time, this could just be part of something as simple and unsubtle as this:

It's like there are two wolves within Aka. One is great at subtlety and organic development, and the other completely sucks at it 😂

But enough about that, I'm sure Aka will give me plenty to complain about next chapter so I'll save it until then lmao

Hmmm where have I seen this before?

Oh, right!

Funny how Akane is magically not brought up this chapter. If we assume Aka is just writing obvious stuff without deeper meaning, then Akane isn't brought up because Aka considers Chapters 97 & 98 as their romantic closure. Or maybe all the theories about Aqua being a scumbag that only dated Akane because Kana wasn't available were right. But considering that would make Aqua trash not worth discussing, I can only hope Aka won't stoop that low lmao

If we give Aka the benefit of the doubt (does he even deserve it at this point tho), then Goro not bringing up Akane can be pretty fitting. Because if Aqua likes Akane, it wouldn't be because she fits the ideals and tastes of the man he once was. It would be because of everything they have been through together as Aqua and Akane.

Case in point, when Aqua thought of Kana and Akane back when he first thought he was free, he did so as fully himself. But I digress! 🤡

Another thing that caught my eye is that Aka deliberately changed the number of chapters in the previous volume just so these Aqua-Kana focused chapters can be in the same volume as the Aqua-Ruby focused ones. Ruby, who mainly loves Aqua because he once was Goro, and Kana, who just loves Aqua. Maybe he's doing it to contrast them (in favor of Kana, duh), or maybe he wants to show they're two sides of the same infatuation coin. One can dream, at least!

Speaking about not nice though, what the fuck is this 😭 I know Akane is trying to push Kana's buttons, but baby girl is switching from I-only-see-him-as-a-son!! I swear!!! to Haha actually! so swiftly that she's going to give herself whiplash. Plus, can't Aka let Akane push Kana's buttons while saying less OOC stuff? Granted, it's not like Kana knows Akane well, so of course she doesn't think it's weird for Akane to say that she wants to be with a boy on Christmas lmao