#GoogleCloudMarketplace

Explore tagged Tumblr posts

Text

Google Vertex AI API And Arize For Generative AI Success

Arize, Vertex AI API: Assessment procedures to boost AI ROI and generative app development

Vertex AI API providing Gemini 1.5 Pro is a cutting-edge large language model (LLM) with multi-modal features that provides enterprise teams with a potent model that can be integrated across a variety of applications and use cases. The potential to revolutionize business operations is substantial, ranging from boosting data analysis and decision-making to automating intricate procedures and improving consumer relations.

Enterprise AI teams can do the following by using Vertex AI API for Gemini:

Develop more quickly by using sophisticated natural language generation and processing tools to expedite the documentation, debugging, and code development processes.

Improve the experiences of customers: Install advanced chatbots and virtual assistants that can comprehend and reply to consumer inquiries in a variety of ways.

Enhance data analysis: For more thorough and perceptive data analysis, make use of the capacity to process and understand different data formats, such as text, photos, and audio.

Enhance decision-making by utilizing sophisticated reasoning skills to offer data-driven insights and suggestions that aid in strategic decision-making.

Encourage innovation by utilizing Vertex AI’s generative capabilities to investigate novel avenues for research, product development, and creative activities.

While creating generative apps, teams utilizing the Vertex AI API benefit from putting in place a telemetry system, or AI observability and LLM assessment, to verify performance and quicken the iteration cycle. When AI teams use Arize AI in conjunction with their Google AI tools, they can:

As input data changes and new use cases emerge, continuously evaluate and monitor the performance of generative apps to help ensure application stability. This will allow you to promptly address issues both during development and after deployment.

Accelerate development cycles by testing and comparing the outcomes of multiple quick iterations using pre-production app evaluations and procedures.

Put safeguards in place for protection: Make sure outputs fall within acceptable bounds by methodically testing the app’s reactions to a variety of inputs and edge circumstances.

Enhance dynamic data by automatically identifying difficult or unclear cases for additional analysis and fine-tuning, as well as flagging low-performing sessions for review.

From development to deployment, use Arize’s open-source assessment solution consistently. When apps are ready for production, use an enterprise-ready platform.

Answers to typical problems that AI engineering teams face

A common set of issues surfaced while collaborating with hundreds of AI engineering teams to develop and implement generative-powered applications:

Performance regressions can be caused by little adjustments; even slight modifications to the underlying data or prompts might cause anticipated declines. It’s challenging to predict or locate these regressions.

Identifying edge cases, underrepresented scenarios, or high-impact failure modes necessitates the use of sophisticated data mining techniques in order to extract useful subsets of data for testing and development.

A single factually inaccurate or improper response might result in legal problems, a loss of confidence, or financial liabilities. Poor LLM responses can have a significant impact on a corporation.

Engineering teams can address these issues head-on using Arize’s AI observability and assessment platform, laying the groundwork for online production observability throughout the app development stage. Let’s take a closer look at the particular uses and integration tactics for Arize and Vertex AI, as well as how a business AI engineering team may use the two products in tandem to create superior AI.

Use LLM tracing in development to increase visibility

Arize’s LLM tracing features make it easier to design and troubleshoot applications by giving insight into every call in an LLM-powered system. Because orchestration and agentic frameworks can conceal a vast number of distributed system calls that are nearly hard to debug without programmatic tracing, this is particularly important for systems that use them.

Teams can fully comprehend how the Vertex AI API supporting Gemini 1.5 Pro handles input data via all application layers query, retriever, embedding, LLM call, synthesis, etc. using LLM tracing. AI engineers can identify the cause of an issue and how it might spread through the system’s layers by using traces available from the session level down to a specific span, such as retrieving a single document.Image credit to Google Cloud

Additionally, basic telemetry data like token usage and delay in system stages and Vertex AI API calls are exposed using LLM tracing. This makes it possible to locate inefficiencies and bottlenecks for additional application performance optimization. It only takes a few lines of code to instrument Arize tracing on apps; traces are gathered automatically from more than a dozen frameworks, including OpenAI, DSPy, LlamaIndex, and LangChain, or they may be manually configured using the OpenTelemetry Trace API.

Could you play it again and correct it? Vertex AI problems in the prompt + data playground

The outputs of LLM-powered apps can be greatly enhanced by resolving issues and performing fast engineering with your application data. With the help of app development data, developers may optimize prompts used with the Vertex AI API for Gemini in an interactive environment with Arize’s prompt + data playground.

It can be used to import trace data and investigate the effects of altering model parameters, input variables, and prompt templates. With Arize’s workflows, developers can replay instances in the platform directly after receiving a prompt from an app trace of interest. As new use cases are implemented or encountered by the Vertex AI API providing Gemini 1.5 Pro after apps go live, this is a practical way to quickly iterate and test various prompt configurations.Image credit to Google Cloud

Verify performance via the online LLM assessment

With a methodical approach to LLM evaluation, Arize assists developers in validating performance after tracing is put into place. To rate the quality of LLM outputs on particular tasks including hallucination, relevancy, Q&A on retrieved material, code creation, user dissatisfaction, summarization, and many more, the Arize evaluation library consists of a collection of pre-tested evaluation frameworks.

In a process known as Online LLM as a judge, Google customers can automate and scale evaluation processes by using the Vertex AI API serving Gemini models. Using Online LLM as a judge, developers choose Vertex AI API servicing Gemini as the platform’s evaluator and specify the evaluation criteria in a prompt template in Arize. The model scores, or assesses, the system’s outputs according to the specified criteria while the LLM application is operating.Image credit to Google Cloud

Additionally, the assessments produced can be explained using the Vertex AI API that serves Gemini. It can frequently be challenging to comprehend why an LLM reacts in a particular manner; explanations reveal the reasoning and can further increase the precision of assessments that follow.

Using assessments during the active development of AI applications is very beneficial to teams since it provides an early performance standard upon which to base later iterations and fine-tuning.

Assemble dynamic datasets for testing

In order to conduct tests and monitor enhancements to their prompts, LLM, or other components of their application, developers can use Arize’s dynamic dataset curation feature to gather examples of interest, such as high-quality assessments or edge circumstances where the LLM performs poorly.

By combining offline and online data streams with Vertex AI Vector Search, developers can use AI to locate data points that are similar to the ones of interest and curate the samples into a dataset that changes over time as the application runs. As traces are gathered to continuously validate performance, developers can use Arize to automate online processes that find examples of interest. Additional examples can be added by hand or using the Vertex AI API for Gemini-driven annotation and tagging.

Once a dataset is established, it can be used for experimentation. It provides developers with procedures to test new versions of the Vertex AI API serving Gemini against particular use cases or to perform A/B testing against prompt template modifications and prompt variable changes. Finding the best setup to balance model performance and efficiency requires methodical experimentation, especially in production settings where response times are crucial.

Protect your company with the Vertex AI API and Arize, which serve Gemini

Arize and Google AI work together to protect your AI against unfavorable effects on your clients and company. Real-time protection against malevolent attempts like as jailbreaks, context management, compliance, and user experience all depend on LLM guardrails.

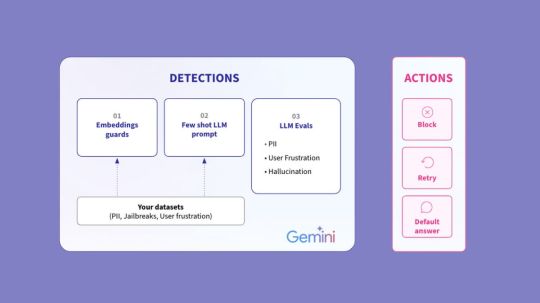

Custom datasets and a refined Vertex AI Gemini model can be used to configure Arize guardrails for the following detections:

Embeddings guards: By analyzing the cosine distance between embeddings, it uses your examples of “bad” messages to protect against similar inputs. This strategy has the advantage of constant iteration during breaks, which helps the guard become increasingly sophisticated over time.

Few-shot LLM prompt: The model determines whether your few-shot instances are “pass” or “fail.” This is particularly useful when defining a guardrail that is entirely customized.

LLM evaluations: Look for triggers such as PII data, user annoyance, hallucinations, etc. using the Vertex AI API offering Gemini. Scaled LLM evaluations serve as the basis for this strategy.

An instant corrective action will be taken to prevent your application from producing an unwanted response if these detections are highlighted in Arize. The remedy can be set by developers to prevent, retry, or default an answer such “I cannot answer your query.”

Utilizing the Vertex AI API, your personal Arize AI Copilot supports Gemini 1.5 Pro

Developers can utilize Arize AI Copilot, which is powered by the Vertex AI API servicing Gemini, to further expedite the AI observability and evaluation process. AI teams’ processes are streamlined by an in-platform helper, which automates activities and analysis to reduce team members’ daily operational effort.

Arize Copilot allows engineers to:

Start AI Search using the Vertex AI API for Gemini; look for particular instances, such “angry responses” or “frustrated user inquiries,” to include in a dataset.

Take prompt action and conduct analysis; set up dashboard monitors or pose inquiries on your models and data.

Automate the process of creating and defining LLM assessments.

Prompt engineering: request that Gemini’s Vertex AI API produce prompt playground iterations for you.

Using Arize and Vertex AI to accelerate AI innovation

The integration of Arize AI with Vertex AI API serving Gemini is a compelling solution for optimizing and protecting generative applications as businesses push the limits of AI. AI teams may expedite development, improve application performance, and contribute to dependability from development to deployment by utilizing Google’s sophisticated LLM capabilities and Arize’s observability and evaluation platform.

Arize AI Copilot’s automated processes, real-time guardrails, and dynamic dataset curation are just a few examples of how these technologies complement one another to spur innovation and produce significant commercial results. Arize and Vertex AI API providing Gemini models offer the essential infrastructure to handle the challenges of contemporary AI engineering as you continue to create and build AI applications, ensuring that your projects stay effective, robust, and significant.

Do you want to further streamline your AI observability? Arize is available on the Google Cloud Marketplace! Deploying Arize and tracking the performance of your production models is now simpler than ever with this connection.

Read more on Govindhtech.com

#VertexAIAPI#largelanguagemodel#VertexAI#OpenAI#LlamaIndex#Geminimodels#VertexAIVectorSearch#GoogleCloudMarketplace#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

1 note

·

View note

Text

We’re excited to announce that OpsMx, industry’s first CD solution focussed on Security for enterprises, is now available in Google Cloud Marketplace. You can leverage your existing credits & subscribe directly from GCP.

0 notes

Text

Cloud Computing

The fastest way to get started on GCP.

GCP Marketplace offers ready-to-go development stacks, solutions, and services to accelerate development. So you spend less time installing and more time developing.

Learn how cloud computing works & its important concepts with cloud computing projects in this cloud computing tutorial.

Let’s get started!

http://bit.ly/GCPmarketplace

0 notes

Text

Why Use The Upgraded Claude 3.5 Sonnet Model On Vertex AI

Introducing Vertex AI’s Upgraded Claude 3.5 Sonnet from Anthropic

In order to offer the most potent AI tools available together with unmatched choice and flexibility, Google Cloud has built its Vertex AI platform in an open manner. For this reason, Vertex AI gives you access to more than 160 models, including third-party, open-source, and first-party models, enabling you to create solutions that are especially suited to your requirements.

It declared in June that Vertex AI Model Garden now includes Anthropic’s Claude 3.5 Sonnet. Google Cloud announcing today that the upgraded Claude 3.5 Sonnet , which includes a new “computer use” capability in public beta on Vertex AI Model Garden, is now generally available for US clients. This implies that you may instruct the model to produce computer actions, including as keystrokes and mouse clicks, using the upgraded Claude 3.5 Sonnet enabling it to communicate with your user interface (UI). The upgraded Claude 3.5 Sonnet model is available to you via Model-as-a-Service (MaaS) solution.

Additionally, in the upcoming weeks, Vertex AI will also offer Claude 3.5 Haiku, Anthropic’s quickest and most affordable model.

Why Use Vertex AI’s Upgraded Claude 3.5 Sonnet Model?

Vertex AI streamlines the process of testing, implementing, and maintaining models such as the upgraded Claude 3.5 Sonnet by acting as a single AI platform.

By fusing Vertex AI’s strength with the intelligence of Claude 3.5 models, you can:

Try things with assurance

Vertex AI offers the enhanced Claude 3.5 Sonnet as a fully managed Model-as-a-Service. Without having to worry about complicated deployment procedures, MaaS allows you to do thorough tests in an intuitive environment and explore Claude 3.5 models with straightforward API requests.

Launch and operate without overhead

Simplify the deployment and scaling process. Claude 3.5 models offer pay-as-you-go or allocated throughput price options, as well as fully managed infrastructure tailored for AI workloads.

Create complex AI agents

Utilize Vertex AI’s tools and the enhanced Claude 3.5 Sonnet’s special features to create agents.

Construct with enterprise-level compliance and security

Make use of Google Cloud‘s integrated privacy, security, and compliance features. Enterprise controls, like the new organization policy for Vertex AI Model Garden, offer the proper access controls to guarantee that only authorized models are accessible.

Build with enterprise-grade security and compliance

It carefully curated library of more than 160 enterprise-ready first-party, open-source, and third-party models in Model Garden has grown with the inclusion of the Claude 3.5 models to Vertex AI. This allows you to choose the best models for your requirements through open and adaptable AI ecosystem.

Customers building with Anthropic’s Claude models on Vertex AI

Global energy provider AES uses Claude on Vertex AI to expedite energy safety assessments, greatly cutting down on the amount of time needed for this crucial yet time-consuming task:

Millions of people use Zapia, a personal AI assistant powered by Claude on Vertex AI, which is developed by BrainLogic AI, a firm creating innovative AI solutions especially for Latin America:

Through its AI-powered chat platform, Poe, Quora, the worldwide knowledge-sharing website, is utilizing Claude’s skills on Vertex AI to enable millions of daily interactions:

It is thrilled to collaborate with Anthropic and keep offering Google Cloud clients cutting-edge innovation backed by an open and accessible AI ecosystem.

How to get started with the upgraded Claude 3.5 Sonnet on Google Cloud

Choose the Claude 3.5 Sonnet v2 model tile by going to Model Garden. The upgraded Claude 3.5 Sonnet is also readily available on the Google Cloud Marketplace, where you can additionally benefit from the possibility of reducing your Google Cloud cost obligations. Today, only consumers in the United States can purchase the enhanced Claude 3.5 Sonnet v2.

Click “Enable,” then adhere to the next steps.

Start constructing by using sample notebook and documentation.

Safety & Trust

Every stage of Anthropic’s AI development is influenced by its dedication to safety. It carried out comprehensive safety assessments across several languages and policy domains when developing Claude 3.5 Haiku. Additionally, improved Claude’s capacity to handle delicate material with caution. Google Cloud in-house testing demonstrates that Claude 3.5 Haiku maintains exacting safety standards while delivering significant capability improvements.

Read more on govindhtech.com

#UpgradedClaude35#VertexAI#SonnetModel#GoogleCloud#ai#Claude35Haiku#GoogleCloudMarketplace#SafetyTrust#Anthropic#Customersbuilding#technology#technews#news#govindhtech

0 notes

Text

Google Cloud Marketplace Private Offer AI Use Case Updates

Improvements to the Google Cloud Marketplace private offer open both corporate and AI use cases.

Google Cloud Marketplace

Enterprise clients want flexibility and choice when it comes to procuring technology for various departments and business units that operate globally. This must also apply to technologies, such as generative AI solutions, that are purchased via the Google Cloud Marketplace and interact with a customer’s Google Cloud environment.

Google Cloud Marketplace optimizes the value of cloud investments by enabling purchases of ISV solutions to deduct from Google Cloud commitments, offers a premium inventory of cutting-edge ISV solutions, and enables flexible options to trial and buy them. Whether via public listings or tailored, negotiated private offers, can assist expedite transactions between consumers, technology providers, and channel partners by moving a large portion of the conventional IT sales process online.

Private offers are a useful tool for partners and consumers to agree on terms and payment plans that meet the unique requirements of a business. It have been pleased to present more private offer improvements today, along with the business applications they enable.

Support for enterprise AI purchasing models

Key fundamental models that may be deployed to Vertex AI as well as generative AI SaaS solutions can be purchased and sold on Google Cloud Marketplace. From producing high-quality code and summarizing lengthy papers to creating content for goods and services, these creative technologies are assisting businesses in delivering cutting-edge business apps.

Through a range of transaction models, such as usage-based discounts, committed use discounts (CUDs), and, most recently, provisioned throughput a fixed-cost subscription service that reserves throughput for supported generative AI models on Vertex AI Google Cloud Marketplace private offers enable customers to transact third-party foundational models and LLMs.

Based on the capacity acquired, google cloud created provisioned throughput to provide partners and consumers the freedom to transact and utilize any model from a partner-specified model family. Customers that are developing real-time generative AI systems, including chatbots and customer representatives, need provisioned throughput because it allows for key workloads that need constant high throughput while controlling overages and keeping predictable pricing. Customers may still benefit from the cost-saving measures that Google Cloud Marketplace procurement offers, such as the option to reduce Google Cloud obligations by investing in ISV solutions, such as generative AI models.

Customize payment plans and offers for several purchases

Each business unit inside an organization now has the option to purchase an ISV’s SaaS solution on Google Cloud Marketplace, with varying subscriptions and pricing plans depending on the requirements of their cost center. Customers may place several orders for the same product thanks to this feature, which is especially helpful for big businesses with many divisions, subsidiaries, or foreign offices.

Additionally, subscription plans for the same technological solution may be tailored for each unit. This capability is currently offered privately for fixed-fee SaaS solutions. Partners may activate numerous orders for their relevant items in Google Cloud Marketplace to make this possible. Watch a video demonstration to see how this works.

Enterprise use cases are already being enabled by customers and ISV partners, who are also seeing the value that this new functionality offers.

For Quantum Metric and its customers, the ability to make numerous orders for the same product in Google Cloud Marketplace has revolutionized the market. Everyone have been able to satisfy the demands of the companies to serve who need distinct subscriptions for the analytics platform for various business units and quick upsells thanks to multiple order support. Because of this, Quantum Metric has been able to grow its income and presence within the joint accounts while offering even better client service throughout the procurement cycle.

“Spoon Guru’s connection with Google Cloud Marketplace has accelerated company development, allowing to provide outstanding value and quickly build the client base. Meeting the various demands of it corporate clients from quick upsells to customized subscriptions for various business units has been made possible by the Nutrition Intelligence product’s flexibility to accommodate numerous orders. By offering more flexibility and help throughout the purchase process, this innovation has improved customer happiness while also speeding up revenue development.

Tackle’s purpose is to simplify things for all of the Cloud GTM clients. Currently always changing the road map to make it easier, more efficient, and more flexible for ISVs to sell via and with Google Cloud Marketplace. It are thus thrilled to be a launch partner for the multiple orders for a single customer feature of Google Cloud Marketplace. Customers of Google Cloud Marketplace benefit from more flexibility as it speeds up their Cloud GTM journey, enables them to meet client demands, and boosts income.

To better provide end users with various payment alternatives, partners may also benefit from streamlined payment schedules for private offers that are ISV-directed and reseller-initiated. Monthly, quarterly, yearly, and custom installment payment schedules including prorated payments are supported by Google Cloud Marketplace Private. The user experience for handling varied payment schedules is made simpler by this improvement.

Long-term contracts and upfront payments

Additionally, by paying for essential technological solutions up to five years in advance, consumers have more control over how they allocate their spending. Private packages allow for multi-year upfront fees and allow users to pay down their Google Cloud commitment on a yearly amortized basis. In addition, companies are implementing contract durations of up to seven years. Customers benefit from more flexible expenditure management because of to these capabilities, while others benefit from better financial control.

Read more on Govindhtech.com

#GoogleCloudMarketplace#PrivateOffer#AI#VertexAI#LLMs#generativeAI#SaaS#cloudcomputing#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

0 notes

Text

Catchpoint IPM Now Available On Google Cloud Marketplace

Catchpoint IPM

In most cases, the internet functions remarkably effectively, but what happens if it doesn’t? Since our business depends on flawless access to our applications and services, we demand it at all times. But frequently enough, this anticipation and actuality diverge.

The internet both the public and private IP networks is not a miraculously durable and unfailing network; rather, it is intricate, extremely brittle, dynamic, and prone to outages and service interruptions. Slowdowns, lost data, and operational difficulties are potential outcomes for the Site Reliability Engineers (SREs) who work tirelessly to keep our digital world operational and reachable.

Announcing Google Cloud Marketplace’s Catchpoint IPM

Taking note of these difficulties, Google Cloud is happy to inform you Catchpoint’s line of Internet Performance Monitoring (IPM) products, which are intended to support maintaining the dependability and performance of your digital environment, can now be found on the Google Cloud Marketplace. Through this cooperation, the Google Cloud community can easily use the powerful capabilities of Catchpoint IPM, which offers proactive monitoring of your whole Internet stack, including all of your Google Cloud services.

Take advantage of IPM for unmatched worldwide visibility

It is probable that your applications are distributed regionally, cloud-based, and focused on APIs and services. These days, IPM is essential if you want to have the necessary visibility into all the aspects of the Internet that affect your company, including your workers, networks, websites, apps, and customers.

Gaining insight into everything that may affect an application is essential. This includes user Wi-Fi connectivity, key internet services and protocols like DNS and BGP, as well as your own IP infrastructure, including point-to-point connections, SD-WAN, and SASE. International companies must comprehend the real-world experiences of their clients and staff members, no matter where they may be, and how ISPs, routing, and other factors affect them. What IPM offers is this visibility.

Catchpoint IPM tracks the whole application-user journey, in contrast to traditional Application Performance Management (APM) solutions that focus on the internal application stack. This covers all service delivery channels inside the Google Cloud infrastructure, including computation, API management, data analytics, cloud storage, machine learning, and networking products. It also includes BGP, DNS, CDNs, and ISPs.

With almost 3,000 vantage points across the globe, the largest independent observability network in the world powers Google’s award-winning technology, which lets users monitor from the critical locations for network experts to identify and address problems before they affect the company.

IPM strategies to attain online resilience

By utilizing Catchpoint’s IPM platform available on the Google Cloud Marketplace, you may enhance your monitoring capabilities with an array of potent tools. This is a little peek at what to expect.

Google Cloud Test Suite: Start Google Cloud Monitoring tests with a few clicks

With the help of Google Cloud’s and Catchpoint’s best practices for quick problem identification and resolution, IT teams can quickly create numerous tests for Google Cloud services thanks to the Test Suite for Google Cloud. It is especially user-friendly for beginners because of its design, which minimizes complexity and time investment for efficient Google Cloud service monitoring.

Pre-configured test templates for important Google Cloud services like BigQuery, Spanner, Cloud Storage, and Compute Engine are included in the Test Suite. Because these templates are so easily adaptable, customers can quickly modify the tests to meet their unique needs. This is especially helpful for enterprises that need to monitor and deploy their cloud services quickly.

The Internet Stack Map is revolutionary in guaranteeing the efficiency of your most important apps

With Internet Stack Map, you can see your digital service’s and its dependent services’ current state in real time. For any or all of your important apps or services, you can set up as many as you’d like. Using artificial intelligence (AI), Internet Stack Map will automatically identify all of the external components and dependencies required for the operation of each one of your websites and applications.

Looking across the Internet through backbone connections and core protocols, down to the origin server and its supporting infrastructure, along with any third-party dependencies, external APIs, and other services across the Internet, you can quickly assess the health of your application or service. It is impossible to achieve this distinct, next-generation picture with any other monitoring or observability provider.

Internet Sonar: Provide a response to the query, “Is it me or something else?”

Point of convergence In order to help you avoid occurrences that could negatively effect your experience or productivity, Internet Sonar intelligently offers clear and reliable internet health information at a glance. Internet Sonar monitors from where it matters by using information from the largest independent active observability network in the world. The outcome is a real-time status report driven by AI that can be viewed through an interactive dashboard widget and map, or it can be accessible by any system using an API.

Collaboration between Catchpoint and Google Cloud front end

To further enhance our performance monitoring offerings, Catchpoint has teamed up with Google Cloud to support their front end infrastructure worldwide. Through this partnership, Google Cloud’s global front end and Catchpoint’s Internet Performance Monitoring (IPM) capabilities are combined to give customers more tools for monitoring online performance globally.

Through this cooperation, users will be able to take advantage of Catchpoint’s experience in identifying and resolving performance issues early on, resulting in optimal uptime and service reliability. In addition, Catchpoint is providing professional assistance and a free trial to help gauge and enhance the performance of services that use Google’s global front end.

Read more on govindhtech.com

#CatchpointIPM#GoogleCloud#Marketplace#cloudbased#WiFiconnectivity#APImanagement#GoogleCloudMarketplace#GoogleCloudservices#artificialintelligence#AI#Catchpoint#technology#technews#news#govindhtech

0 notes