#GPU procurement

India AI Mission: Union Minister Ashwini Vaishnaw Unveils Rs 10,000 Crore Initiative

Union IT Minister Ashwini Vaishnaw announced on Wednesday the launch of the Rs 10,000 crore India AI Mission, slated to roll out in the next two to three months. This ambitious initiative aims to bolster India’s capabilities in artificial intelligence (AI) by procuring computing power, fostering innovation, and enhancing skill development across the industry.

Key Components of India AI Mission

During the inaugural session of the Global IndiaAI Summit 2024, Minister Vaishnaw outlined several key components of the India AI Mission:

1. Graphics Processing Units (GPUs) Procurement: The government plans to procure 10,000 or more GPUs under a public-private partnership. This initiative aims to enhance industry efficiencies and support larger-scale AI applications.

2. AI Innovation Centre: The mission will establish an AI innovation centre to foster cutting-edge research and development in AI technologies.

3. High-Quality Data Sets: Special emphasis will be placed on creating high-quality data sets that can add significant value to startup initiatives and AI development efforts.

4. Application Development Initiative: A dedicated effort will be made to develop applications that address socio-economic challenges faced by India, leveraging AI solutions.

5. Focus on Skill Development: The India AI Mission will prioritize skill development initiatives to equip the workforce with the necessary capabilities to harness AI technologies effectively.

Strategic Importance and Global Context

Vaishnaw highlighted the strategic importance of making modern technology accessible to all, citing India’s digital public infrastructure as a prime example. He underscored the need for equitable access to technology, contrasting it with global trends where technology often remains limited to a few dominant entities.

Addressing AI Challenges

Acknowledging the dual nature of AI’s impact, Vaishnaw discussed the opportunities and challenges posed by AI technologies. He noted the global efforts to regulate AI, such as the AI Act in the European Union and executive orders in the United States, emphasizing their relevance in addressing AI-related issues globally.

Conclusion

As AI continues to evolve and impact various sectors, Minister Vaishnaw emphasized the importance of balancing innovation with responsible use. The India AI Mission, with its comprehensive approach to fostering AI capabilities, aims to position India as a leader in AI innovation while addressing societal challenges and ensuring inclusive growth.

China’s AI Gambit: 115,000 Nvidia Chips Planned for Data Centers Despite U.S. Export Ban

Source: www.scmp.com

Chinese technology companies are reportedly laying the groundwork for a sweeping expansion of domestic AI capabilities by planning up to 36 large-scale data centers in the remote western provinces of Xinjiang and Qinghai. These facilities aim to house over 115,000 Nvidia AI chips, including the high-performance H100 and H200 models, both currently banned from export to China by the U.S. government.

The initiative, rooted in China’s national strategy “East Data, West Computing,” seeks to tap into renewable energy, cooler climates, and lower land costs to support massive AI computing hubs. Notably, one proposed facility in Xinjiang alone could host the majority of these chips, enabling the training of large-scale language models and advanced AI systems.

Legality in Question: No Clear Path for Chip Acquisition

While tender documents and project filings cite Nvidia’s top-tier chips, there is no transparency on how China plans to acquire them amid ongoing U.S. export restrictions. The U.S. Commerce Department’s ban prohibits Nvidia from selling these chips to Chinese customers due to national security concerns, leaving regulators and industry watchers puzzled about how such a scale of procurement might be achieved.

Current estimates suggest only about 25,000 restricted Nvidia GPUs are available in China, a fraction of the planned inventory. Nvidia has strongly denied any involvement, stating that smuggling or unofficial acquisition would make the data centers unserviceable and “business-wise irrational” due to a lack of support and maintenance.

The situation has prompted a formal U.S. investigation, with the Commerce Department exploring possible violations of trade controls. Lawmakers have voiced growing concerns that sanctioned Nvidia AI chips may still be finding their way into China through gray-market networks or indirect resales.

Strategic Implications for the Global AI Race

Beijing’s bold pursuit of cutting-edge AI infrastructure underscores China’s determination to compete with U.S. tech leadership, even amid tightening export restrictions. President Xi Jinping has repeatedly stressed the importance of artificial intelligence (AI) in national development, and this new push reflects that directive. If realized, the scale of these facilities would place China among the world’s most AI-compute-rich nations.

Meanwhile, Nvidia CEO Jensen Huang has labeled the U.S. chip restrictions as “a failure,” warning they are inadvertently accelerating China’s domestic semiconductor innovation. He notes that Nvidia’s market share in China has dropped from 90% to roughly 50%, as local firms like Huawei and Alibaba step up with homegrown alternatives.

As the world watches this high-stakes technological standoff unfold, the outcome may redefine the next decade of AI leadership, supply chain sovereignty, and global tech regulation. Whether China can break the chokehold on Nvidia AI chips or develop powerful alternatives remains one of the most pivotal questions in today’s AI arms race.

Sources:

https://www.tweaktown.com/news/106322/chinese-ai-companies-plan-new-facility-in-china-with-115-000-nvidia-gpus-even-chip-ban/index.html

https://www.tipranks.com/news/china-eyes-115000-banned-nvidia-ai-chips-for-massive-data-centers

The Laptop That Time Forgot

Diary of a Girl Who Clicks Things for a Living

Work laptop updated itself over the weekend. I came in this morning expecting to do Data Science™. Instead, I did Tech Support Theatre: Solo Edition.

The update somehow broke half my environment: Python gone, packages missing. A project I’ve been building for two months now throws errors like it’s a Greek tragedy.

Spent my morning reinstalling dependencies, trying to run a program I know works... Then another four hours on a call with a very patient colleague, who deserves an actual decent raise for troubleshooting across eight time zones while I slowly lost my grip on reality.

The culprit?

My laptop is not a developer laptop. It is a Human Resources laptop. It has no GPU. No CUDA support. Not enough RAM. No real will to live.

I’ve been training models on a machine designed to open PDFs and play around on PowerPoint for months!! Oh how I feel my will to live vanish like system resources on a Teams call...

Well, I got it working - eventually - on Linux - barely.

Now I get to email IT and request “a laptop suitable for machine learning tasks,” which will indubitably take six weeks, twelve approvals and a blood pact with Procurement.

Until then, I am just a girl… Standing in front of a sad HP machine… Asking it to please pip install anything.

#sad code girl has no GPU#please send help#corporate life#tech hell#office satire#tumblr diary#fictional but not really#capitalismcore#HR laptop strikes again#girl in STEM#python weeps#diary of a desk drone#Diary of a Girl Who Clicks Things for a Living#rskyeauthor

AAS Miner Launches Next-Generation Cloud Mining Platform to Democratize Profitability

Innovative New User Rewards and Eco-Friendly Strategies Establish AAS Miner as a Portal to Crypto Wealth Growth

LONDON, UK – June 26, 2025 – AAS Miner, a prominent cloud mining platform, today announced the extension of its services, providing a seamless and safe gateway for people to invest in cryptocurrency mining and receive passive income. As a user-friendly and efficient solution, AAS Miner aims to set the standard for the international cloud mining sector with accessible solutions for established and novice digital asset investors alike.

AAS Miner vows to provide an unrivalled mining experience through the integration of innovative ASIC and GPU mining hardware with a friendly user interface. The platform eliminates the complexities associated with traditional cryptocurrency mining, such as hardware procurement, maintenance, and high energy costs, making it easier for users to begin their wealth appreciation journey.

“At AAS Miner, our core mission is to empower individuals to participate in the burgeoning digital economy with confidence and ease,” said a spokesperson for AAS Miner. “We believe that generating passive income from cryptocurrencies should be straightforward and accessible. Our platform, backed by advanced technology and a dedicated expert team, ensures a reliable and efficient mining environment for all our users.”

Key Advantages of Choosing AAS Miner:

Effortless Start: Users can begin mining in a few simple steps: register an account, select a preferred mining contract, and start receiving stable daily income directly to their account. New users are welcomed with a $10 registration bonus, and daily sign-in rewards of $0.8 are available.

State-of-the-Art Hardware: AAS Miner utilizes the latest ASIC and GPU mining equipment, ensuring high efficiency and optimal performance for digital asset production.

No Hidden Fees: The platform transparently covers all hardware, installation, maintenance, and electricity costs, providing a clear and predictable earning model for users.

Stable and Predictable Earnings: Once a contract is purchased, the system automatically processes and updates earnings every 24 hours, offering a consistent income stream.

Robust Fund Security: A significant portion of user funds is secured in offline cold wallets. Enhanced security protocols, including McAfee® Security and Cloudflare® Security, are implemented to ensure maximum asset protection.

Expert Support and Eco-Friendly Operations: An expert team of blockchain professionals and IT engineers provides 24/7 online customer service. Furthermore, AAS Miner is dedicated to environmentally friendly mining, powering its operations with clean energy sources like monocrystalline solar panels and large-scale wind energy to ensure profitability while minimizing ecological impact.

Lucrative Referral Program: Users can earn a permanent 5% referral bonus (Level 1) and 2.5% (Level 2) by inviting friends, providing an additional avenue for wealth accumulation.

AAS Miner offers a variety of popular mining contracts tailored to different investment capacities and durations, including options for Bitcoin (BTC), Litecoin (LTC), and Dogecoin (DOGE) cloud computing power.

As the cryptocurrency market continues its appreciation, cloud mining with AAS Miner presents an opportunity to participate in the digital economy’s growth, aligning technological advancement with sustainable development.

About AAS Miner: AAS Miner is a leading cloud mining platform established with the goal of providing a simple, secure, and efficient way for individuals worldwide to earn cryptocurrency. Leveraging advanced mining hardware and an expert team, AAS Miner is committed to transparent operations, robust security, and environmentally responsible practices, empowering users to achieve passive income in the digital asset space.

Media Contact:
Full Name: DOLAN Peter James
Position: Advertising Manager
Email: [email protected]
Website: https://aas8.com
Address: 5 Egerton Drive, Hale, Altrincham, United Kingdom, WA15 8EF

More info: https://chainwirenow.com/aas-miner-launches-next-generation-cloud-mining-platform-to-democratize-profitability/

China gets Nvidia GPU for the AI race through Vietnam and Malaysia

DeepSeek recently challenged the AI community and shook the US, revealing an escalating rivalry between the United States and China in the field of artificial intelligence. The competition has entered a new phase, with Washington tightening export controls on advanced semiconductor technologies since September 2022. Aimed squarely at curbing Beijing’s access to cutting-edge AI capabilities, the restrictions target high-performance graphics processing units (GPUs) produced by US chipmaker Nvidia, including the A100 and H100 models, which are critical components for training large-scale AI models. Despite these curbs, AEOW/INPACT has uncovered how China is succeeding in acquiring restricted chips through two intermediary countries in Asia. By analyzing export trade data, AEOW/INPACT has identified six recent shipments of NVIDIA A100 and H100 GPUs, valued at a combined $642,060, destined for two Chinese companies.

The Vietnamese trail to Suzhou Etron Technologies Co Ltd

The first shipment trail runs through Vietnam. According to trade data, the country receives its A100 GPUs from Nvidia’s Singapore branch via Taiwan. In July and October 2024, shipments were made by a Vietnamese company called Etron Vietnam Technologies Company Limited. According to IPC, a trade association for electronics, Etron Vietnam is linked to the website “etron-global.com”, which belongs to the Chinese company ETRON Global.

Screenshot of Etron Global’s website (source)

The company shipped batches of 8 and 16 NVIDIA A100 GPUs to a Hong Kong company called Elb International, which was incorporated in Hong Kong in 2019.

Screenshot of Hong Kong commercial registry list of newly incorporated companies

According to a press article, Elb International Ltd is a subsidiary of Suzhou Etron Technologies Co Ltd, which is listed on the Shanghai Stock Exchange (code: 603380 SH); Elb International was set up to procure electronics and expand into overseas markets. Suzhou Etron, a top electronics manufacturer based in Suzhou, is also linked to the website etron.cn, which shows the same page as Etron Global.

Screenshot of Etron’s website (source)

The case underscores how unsanctioned Chinese firms are exploiting their regional footholds across Asia to funnel restricted AI components back into China, circumventing export controls.

The Malaysian trail to Chengdu AI hub

The second shipment trail runs through Malaysia. In February and March 2025, a Malaysian logistics firm, United Despatch Agent Sdn Bhd, exported two consignments labelled “A100 GPU baseboard” and “Geforce graphics NVIDIA H100.” The former refers to the full NVIDIA A100 GPU card—an advanced semiconductor device designed for AI training, inference, and high-performance computing. The latter, however, is a misnomer: no such product as a “GeForce NVIDIA H100” exists. GeForce is NVIDIA’s consumer-facing line, tailored for gaming and creative applications, while the H100 belongs to its data centre-grade Hopper architecture, developed for AI workloads. The suspicious labelling raises the prospect of deliberate obfuscation—possibly to disguise the shipment of restricted H100 GPUs amid tightening US export controls.

According to shipping records, the goods were flown to China via Malaysia Airlines. United Despatch Agent, which describes itself as a provider of end-to-end logistics solutions with a focus on electronics, appears to have acted as the freight forwarder. There is no information on the actual Malaysian supplier. The freight forwarder firm’s involvement adds a layer of plausible deniability to the transaction, potentially complicating enforcement efforts around export restrictions.

Screenshot of United Despatch Agent

While the supplier cannot be identified, trade data show imports of A100 GPUs into Malaysia in 2024 by various Malaysian companies (Neview Technology Company Limited and M & S international trade technolog; Txi logistics M sdn bhd is a logistics company) from a Mumbai-based Indian company, Beehive Tech Solutions Private Limited.

Import data to Malaysia

Trade data also show that Indian companies import A100 GPUs from Nvidia’s Singapore branch via Taiwan.

Top supplier of GPUs to India in 2024

The Chinese company buying the GPUs is Chengdu Huo Feng Technology Co. AEOW/INPACT has not been able to find further information on the company, but its address is in the Chengdu High Tech Zone. Interestingly, the Chengdu High Tech Zone has been chosen as a national hub for the development of artificial intelligence, with several projects and an AI business ecosystem. On May 10, 2022, Huawei and several other Chinese companies signed contracts for the launch of the AI Computing Center.

The GPUs could plausibly benefit the Huawei AI Computing Center and other local developments. The center is the largest in southwestern China and will include platforms for AI computing, smart cities, and scientific innovation. It has a computing power of 300 petaflops.

Photo of the AI computing centre under construction in 2022.

These two circumvention schemes offer a clear illustration of how China continues to acquire critical AI components through indirect channels, underscoring its determination to stay competitive in the global race for artificial intelligence dominance.

Two export control circumvention schemes used by China to procure AI GPUs

These cases also highlight the limits of export controls as they currently stand and the porous nature of the semiconductor supply chain in the Indo-Pacific region.

Deploying Inference AI in the Cloud vs. On-Premise: What You Need to Know

As AI adoption accelerates across industries, the deployment of machine learning models, particularly for inference, has become a strategic infrastructure decision. One of the most fundamental choices organizations face is whether to deploy inference workloads in the cloud or on-premise.

This decision isn’t just about infrastructure—it affects performance, cost, scalability, security, and ultimately, business agility. In this blog, we break down the core tradeoffs between cloud and on-premise AI inference to help you make the best decision for your organization.

1. Performance and Latency

· Cloud: Leading cloud providers offer highly optimized GPUs/TPUs and AI inference services with global availability. However, latency can be a concern for real-time applications where data must travel to and from the cloud.

· On-Premise: Provides lower latency and consistent performance, especially when co-located with data sources (e.g., in factories, hospitals, or autonomous vehicles).

Best for: On-premise is ideal for latency-sensitive or high-throughput scenarios with local data.
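To make the latency tradeoff concrete, here is a minimal Python sketch that adds up the components of a single inference request and checks the total against a latency budget. The function names, the 50 ms budget, and every timing value are hypothetical placeholders chosen for illustration, not benchmarks; substitute your own measurements.

```python
# Minimal sketch: end-to-end latency for cloud vs on-premise inference.
# All numbers are hypothetical placeholders, not measurements.

def end_to_end_latency_ms(inference_ms: float, network_rtt_ms: float,
                          payload_transfer_ms: float = 0.0) -> float:
    """Total latency = model inference time + network round trip + payload transfer."""
    return inference_ms + network_rtt_ms + payload_transfer_ms


def meets_budget(latency_ms: float, budget_ms: float) -> bool:
    """True if the request completes within the latency budget."""
    return latency_ms <= budget_ms


BUDGET_MS = 50.0  # hypothetical real-time requirement

# Cloud: assumed faster accelerator, but data crosses a WAN in both directions.
cloud = end_to_end_latency_ms(inference_ms=12.0, network_rtt_ms=45.0, payload_transfer_ms=5.0)
# On-prem: assumed slower accelerator, but co-located with the data source.
onprem = end_to_end_latency_ms(inference_ms=20.0, network_rtt_ms=1.0)

print(f"cloud:   {cloud:.1f} ms, within budget: {meets_budget(cloud, BUDGET_MS)}")
print(f"on-prem: {onprem:.1f} ms, within budget: {meets_budget(onprem, BUDGET_MS)}")
```

Under these assumed numbers, the faster cloud GPU still misses the budget because the network round trip dominates, which is the scenario the section describes.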

2. Scalability and Flexibility

· Cloud: Offers elastic scalability. You can dynamically spin up resources as demand fluctuates, and support multi-region deployments without investing in physical hardware.

· On-Premise: Scaling is constrained by available hardware. Adding capacity requires lead time for procurement, setup, and configuration.

Best for: Cloud is the clear winner for organizations with variable workloads or rapid growth.
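Elastic scaling usually comes down to matching the number of model replicas to the current load. The sketch below assumes a hypothetical per-replica throughput of 50 requests per second and a 20% headroom margin. It illustrates why a fixed on-premise cluster has to be sized for the peak hour, while cloud capacity can follow the demand curve.

```python
import math
from typing import List


def replicas_needed(requests_per_sec: float, per_replica_rps: float,
                    headroom: float = 0.2, min_replicas: int = 1) -> int:
    """Number of model replicas needed to serve the load with a safety margin."""
    if per_replica_rps <= 0:
        raise ValueError("per_replica_rps must be positive")
    target = requests_per_sec * (1 + headroom) / per_replica_rps
    return max(min_replicas, math.ceil(target))


# Hypothetical hourly request rates (requests per second) for a bursty workload.
hourly_load: List[float] = [40, 120, 400, 90]
PER_REPLICA_RPS = 50.0  # assumed throughput of one inference replica

cloud_plan = [replicas_needed(r, PER_REPLICA_RPS) for r in hourly_load]
onprem_fixed = max(cloud_plan)  # fixed hardware must cover the busiest hour

print("cloud replicas per hour:", cloud_plan)
print("on-prem replicas to provision:", onprem_fixed)
print("replica-hours, cloud vs on-prem:", sum(cloud_plan), onprem_fixed * len(hourly_load))
```

With this made-up load curve, the cloud plan uses 17 replica-hours against 40 for a cluster sized for the peak, which is the variable-workload case where the section favors the cloud.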

3. Cost Considerations

· Cloud: Offers a pay-as-you-go model, which reduces upfront costs but can become expensive at scale, especially with persistent usage and GPU-intensive workloads.

· On-Premise: High upfront investment but potentially lower TCO (total cost of ownership) over time for consistent workloads, particularly if resources are fully utilized.

Best for: On-premise may offer long-term savings for stable, predictable inference loads.
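The TCO argument can be checked with a simple break-even calculation: find the first month in which cumulative on-premise cost (upfront purchase plus monthly operating cost for power, space, and staff) drops to or below cumulative cloud spend. This is a minimal sketch with hypothetical dollar figures. It deliberately ignores depreciation, hardware refresh cycles, and cloud commitment discounts, all of which a real analysis should include.

```python
from typing import Optional


def breakeven_month(cloud_monthly: float, onprem_upfront: float,
                    onprem_monthly: float, horizon_months: int = 60) -> Optional[int]:
    """First month in which cumulative on-prem cost is no higher than cumulative cloud cost.

    Returns None if on-prem never breaks even within the horizon.
    """
    for month in range(1, horizon_months + 1):
        cloud_total = cloud_monthly * month
        onprem_total = onprem_upfront + onprem_monthly * month
        if onprem_total <= cloud_total:
            return month
    return None


# Hypothetical figures in USD; replace with real quotes.
steady = breakeven_month(cloud_monthly=8_000, onprem_upfront=150_000, onprem_monthly=2_500)
light = breakeven_month(cloud_monthly=2_400, onprem_upfront=150_000, onprem_monthly=2_500)

print("steady, fully used workload breaks even in month:", steady)
print("light workload breaks even in month:", light)
```

The second case shows the flip side: if the cloud bill for a lightly used workload is below on-prem running costs, the upfront investment is never recovered.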

4. Security and Compliance

· Cloud: Cloud providers offer robust security and compliance tools, but data must leave your environment, which can pose risks for sensitive applications (e.g., in healthcare or finance).

· On-Premise: Offers greater control over data sovereignty and physical security, making it preferable for regulated industries.

Best for: On-premise is often required for strict compliance or where data privacy is paramount.

5. Deployment Speed and Operations

· Cloud: Faster deployment with minimal setup. Managed services reduce the DevOps burden and accelerate time-to-market.

· On-Premise: Requires significant setup and ongoing management (e.g., hardware maintenance, patching, monitoring).

Best for: Cloud suits teams with limited infrastructure or IT resources.

6. Hybrid Approaches: The Best of Both Worlds?

Many organizations are adopting hybrid strategies, running low-latency or secure workloads on-premise while offloading compute-intensive or scalable tasks to the cloud.

Examples include:

· Performing initial preprocessing on edge/on-prem and sending summary data to the cloud.

· Deploying real-time models on-site and periodically retraining them in the cloud.
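The first hybrid pattern, preprocessing on-site and sending only summary data to the cloud, can be sketched as follows. This is a minimal illustration: the device ID and readings are hypothetical, and the actual upload step (HTTPS, MQTT, or a vendor SDK) is intentionally left out, since it depends on your platform.

```python
import json
import statistics
from typing import Dict, List


def summarize_window(device_id: str, readings: List[float]) -> Dict[str, object]:
    """Reduce a window of raw sensor readings to a compact summary record."""
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "device_id": device_id,
        "count": len(readings),
        "mean": statistics.fmean(readings),
        "min": min(readings),
        "max": max(readings),
    }


# Hypothetical raw readings collected on-site during one time window.
raw = [20.0, 21.5, 22.0, 19.5]
summary = summarize_window("line-3-sensor-7", raw)

# Only this small JSON payload would leave the site; the raw data stays on-prem.
payload = json.dumps(summary)
print(payload)
```

Keeping raw data local and shipping aggregates also helps with the data-sovereignty concerns raised in the security section.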

Final Thoughts

There’s no one-size-fits-all answer. Choosing between cloud and on-premise AI inference depends on your workload characteristics, compliance needs, and long-term strategy.

Key takeaways:

· Choose cloud for scalability, speed, and operational ease.

· Choose on-premise for control, compliance, and latency.

· Consider hybrid for flexibility and optimization.

Before committing, run cost analyses, latency benchmarks, and pilot tests to align infrastructure choices with business goals.
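For the latency benchmarks, a small harness that reports percentiles rather than a single average is usually more informative, because tail latency (p95/p99) is what real-time users notice. The sketch below times any zero-argument callable. `fake_inference` is a hypothetical stand-in that sleeps for about 2 ms; in a pilot you would wrap a real local model call or a request to a cloud endpoint instead.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def percentile(sorted_samples: List[float], p: float) -> float:
    """Nearest-rank percentile of an already sorted list."""
    k = max(0, math.ceil(p / 100 * len(sorted_samples)) - 1)
    return sorted_samples[k]


def benchmark(infer: Callable[[], object], warmup: int = 5, runs: int = 50) -> Dict[str, float]:
    """Time repeated calls to `infer` and report latency statistics in milliseconds."""
    for _ in range(warmup):  # warm caches, connections, lazy initialization
        infer()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    return {
        "p50_ms": percentile(samples, 50),
        "p95_ms": percentile(samples, 95),
        "p99_ms": percentile(samples, 99),
        "mean_ms": statistics.fmean(samples),
    }


def fake_inference() -> None:
    """Hypothetical stand-in for a real model call (local or remote)."""
    time.sleep(0.002)


results = benchmark(fake_inference)
for name, value in results.items():
    print(f"{name}: {value:.2f}")
```

Running the same harness against an on-prem endpoint and a cloud endpoint gives directly comparable numbers for the decision.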

What Is IT Hardware Maintenance: 7 Common Maintenance Services You Need

IT hardware maintenance refers to the ongoing support, servicing, and upkeep of physical IT equipment such as servers, storage devices, workstations, desktops, laptops, networking gear, and peripherals. Regular maintenance ensures performance, reduces the risk of failures, extends hardware life, and helps organizations avoid costly downtime.

Here are seven essential IT hardware maintenance services every business should consider:

1. Preventive Maintenance

Routine inspections, cleanings, and firmware updates to keep equipment running efficiently. This includes:

Cleaning dust from servers and desktops

Replacing aging components (fans, batteries, etc.)

Verifying hardware performance benchmarks

2. Break-Fix Support

On-demand repair services when a device or system fails. Typically involves:

Diagnosing issues (hardware diagnostics)

Replacing or repairing faulty parts

Restoring operations with minimal disruption

3. Hardware Monitoring and Diagnostics

Remote or on-site monitoring of hardware health using diagnostic tools to:

Track temperatures, fan speeds, and voltage

Detect hard drive errors, memory issues, or power failures early

Trigger alerts before full system failure occurs
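The monitoring logic above boils down to comparing each sensor reading against an allowed range and raising an alert when it falls outside. This is a minimal Python sketch. The readings are hard-coded, because collecting them (for example via IPMI or SMART tooling) is vendor-specific and not shown. The thresholds are illustrative assumptions; take real limits from your hardware vendor's specifications.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Reading:
    host: str
    metric: str
    value: float


# Illustrative (min, max) limits per metric; None means no limit on that side.
THRESHOLDS: Dict[str, Tuple[Optional[float], Optional[float]]] = {
    "cpu_temp_c": (None, 85.0),
    "fan_rpm": (1000.0, None),
    "psu_12v_volts": (11.4, 12.6),
    "disk_reallocated_sectors": (None, 0.0),
}


def check_readings(readings: List[Reading]) -> List[str]:
    """Return an alert message for every reading outside its configured range."""
    alerts = []
    for r in readings:
        low, high = THRESHOLDS.get(r.metric, (None, None))
        if low is not None and r.value < low:
            alerts.append(f"{r.host}: {r.metric}={r.value} below {low}")
        elif high is not None and r.value > high:
            alerts.append(f"{r.host}: {r.metric}={r.value} above {high}")
    return alerts


# Hypothetical sample readings from two servers.
sample = [
    Reading("srv-01", "cpu_temp_c", 91.0),
    Reading("srv-01", "fan_rpm", 2400.0),
    Reading("srv-02", "psu_12v_volts", 11.1),
    Reading("srv-02", "disk_reallocated_sectors", 3.0),
]
for alert in check_readings(sample):
    print("ALERT", alert)
```

Alerting on early signals such as reallocated disk sectors is what lets a team act before a full system failure.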

4. Firmware and BIOS Updates

Updating low-level software on devices to fix bugs, improve compatibility, and patch security vulnerabilities. Essential for:

Servers

Network switches

Storage systems

5. Hardware Upgrades and Replacements

Keeping hardware aligned with performance demands by:

Upgrading RAM, SSDs, GPUs, or CPUs

Replacing legacy hardware nearing end-of-life (EOL)

Ensuring compatibility with newer operating systems

6. Asset Tracking and Lifecycle Management

Maintaining a log of hardware assets, warranties, and usage stats:

Helps with budgeting and procurement

Tracks service history

Determines when to repair vs. replace equipment
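An asset log becomes useful for repair-vs-replace decisions once each record carries its purchase date, warranty end, replacement cost, and repair history. This sketch encodes one hypothetical policy: repair anything still under warranty, replace hardware past a five-year planned lifespan, and replace when cumulative repairs would exceed half the replacement cost. The thresholds and asset records are illustrative assumptions, not industry standards.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class Asset:
    asset_id: str
    purchase_date: date
    warranty_end: date
    replacement_cost: float
    repair_history: List[float] = field(default_factory=list)


def repair_or_replace(asset: Asset, repair_quote: float, today: date,
                      max_age_years: float = 5.0, max_repair_ratio: float = 0.5) -> str:
    """Hypothetical policy: repair under warranty; replace when too old or too costly to keep fixing."""
    if today <= asset.warranty_end:
        return "repair (under warranty)"
    age_years = (today - asset.purchase_date).days / 365.25
    if age_years >= max_age_years:
        return "replace (past planned lifespan)"
    total_repairs = sum(asset.repair_history) + repair_quote
    if total_repairs > max_repair_ratio * asset.replacement_cost:
        return "replace (cumulative repairs too costly)"
    return "repair"


# Hypothetical asset records.
laptop = Asset("LT-0042", date(2022, 1, 10), date(2025, 1, 10), 1_800.0)
server = Asset("SRV-007", date(2021, 6, 1), date(2024, 6, 1), 12_000.0, [800.0, 1_500.0])

print(laptop.asset_id, repair_or_replace(laptop, 300.0, date(2024, 6, 1)))
print(server.asset_id, repair_or_replace(server, 4_000.0, date(2025, 1, 15)))
print(server.asset_id, repair_or_replace(server, 1_000.0, date(2025, 1, 15)))
```

The same records also support the budgeting and service-history uses listed above.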

7. Third-Party Maintenance (TPM) Services

Cost-effective support after OEM warranties expire. Benefits include:

Reduced maintenance costs

Extended lifespan for older hardware

Flexible SLAs for support availability

Why IT Hardware Maintenance Matters:

Minimizes unplanned downtime

Enhances performance and security

Reduces total cost of ownership (TCO)

Supports business continuity

Empower Your Business with the Best Dell Server Distributor in Dubai

In today’s fast-paced digital world, dependable IT infrastructure is not a luxury; it’s a necessity. For businesses in Dubai, ensuring smooth operations, secure data handling, and high-speed performance starts with one critical component: servers. At Dellserver-Dubai, we understand how essential these needs are. That’s why we’ve become a trusted name for anyone searching for a reliable Dell Server distributor in Dubai. Whether you’re a small business scaling your resources or an enterprise upgrading its data center, we offer the most competitive deals on genuine Dell servers in the region.

Why Dell Servers Are a Smart Investment

Dell has long stood at the forefront of server technology, offering durable, high-performance systems that meet the needs of businesses of all sizes. Known for their power, flexibility, and long-term reliability, Dell servers are engineered to support complex computing tasks, virtualized environments, big data analytics, and cloud applications. Choosing Dell means investing in proven technology, and when you get it through Dellserver-Dubai, you’re also gaining access to exceptional customer service, real-time inventory, and the assurance that you're buying from a dedicated Dell Server distributor in Dubai.

At Dellserver-Dubai, we make it our mission to help you leverage Dell technology to its fullest. We bring together certified expertise, genuine products, and tailored advice to ensure you get the most value from your server infrastructure. Whether you need a single PowerEdge rack server or are building a redundant multi-node environment, we deliver Dell's excellence with personalized support every step of the way.

Exclusive Offers from a Trusted Dell Server Seller in Dubai

What separates us from other vendors is more than just our product range. As one of the leading Dell Server sellers in Dubai, we offer exclusive pricing and deals that you won’t find elsewhere. Because we source directly from Dell’s authorized channels, we’re able to pass on savings to our clients—while still providing full manufacturer warranties and support.

Our customers include IT consultants, data center operators, SMEs, educational institutions, and government departments across the UAE. They trust Dellserver-Dubai not just for affordability, but also for authenticity and reliability. Every Dell server you buy from us is guaranteed to be 100% original and brand-new. And because we’re based locally in Dubai, you get faster delivery, local technical support, and no unnecessary international shipping fees or delays.

Discover the Full Range of Dell Servers in Dubai

As a specialized Dell Server wholesaler in Dubai, we maintain an extensive stock of Dell server models designed to meet a wide variety of needs. From the entry-level PowerEdge T-series towers ideal for small offices to high-performance R-series rack servers used in modern data centers, we’ve got it all under one roof. Need specialized storage-focused servers or GPU-enhanced machines for AI processing? We’ve got that covered too.

Each server comes with a selection of customizable configurations—whether you’re looking for high core-count CPUs, massive RAM capacities, scalable RAID arrays, or hot-swappable power supplies. The goal is simple: to provide every business with a tailor-fit solution that enhances productivity, reliability, and security.

And for clients with bulk requirements or long-term projects, we also offer the best Dell Server wholesale pricing in Dubai. We support system integrators, resellers, and IT solution providers with bulk discounts and dedicated account management to ensure smooth and cost-effective procurement.

Technical Guidance That Makes a Difference

We know that buying a server isn’t just about hardware—it’s about solving business challenges. That’s why our team of IT experts is always on hand to help you choose the right system based on your current needs and future growth plans. As experienced professionals in server deployment, storage, and networking, we help you make informed decisions that align with your business goals.

When you work with Dellserver-Dubai, you’re not just dealing with another Dell Server seller in Dubai; you’re working with a team that genuinely understands technology and cares about your success. From pre-sales consultations to post-purchase support, we stay with you throughout the server’s lifecycle to ensure smooth performance and quick resolutions to any issues that arise.

Your One-Stop Dell Server Wholesale Partner

Our growing base of repeat clients across the UAE and Gulf region is a testament to our commitment to service. We’re not here for one-off sales—we’re here to build lasting partnerships. As a trusted Dell Server wholesale provider, we serve IT companies and system integrators looking to deliver the best solutions to their clients, without breaking budgets or sacrificing quality.

We maintain consistent stock levels and offer flexible payment terms for bulk buyers. Need a server quickly for a client project? We’ve got you. Need help with a custom build-out for a data center deployment? We’re on it. Dellserver-Dubai is where convenience meets capability—and where you’ll find all your server needs fulfilled under one roof.

Start Growing with Reliable Dell Server Solutions

If your business depends on performance, uptime, and security, there’s no better time to invest in a Dell solution, and no better partner than Dellserver-Dubai. As a recognized Dell Server distributor in Dubai, we’re here to help your business grow with dependable, enterprise-grade technology at the best possible value.

Reach out to our team today and experience what hundreds of businesses already know: when it comes to trusted Dell Server sellers in Dubai, no one does it better. Whether you’re upgrading, expanding, or starting from scratch, let us provide the reliable backbone your IT environment deserves—with real expertise, real products, and real value.

The AI Explosion Continues in 2025: What Organizations Should Anticipate This Year

New Post has been published on https://thedigitalinsider.com/the-ai-explosion-continues-in-2025-what-organizations-should-anticipate-this-year/

With AI forecasted to continue its explosion in 2025, the ever-evolving technology presents both unprecedented opportunities and complex challenges for organizations worldwide. To help today’s organizations and professionals secure the most value from AI in 2025, I’ve shared my thoughts and anticipated AI trends for this year.

Organizations Must Strategically Plan for the Cost of AI

The world continues to be ecstatic about the potential of AI. However, the cost of AI innovation is a metric that organizations must plan for. For example, AI needs GPUs, but many cloud service providers (CSPs) run larger deployments of previous-generation (N-1, N-2 or older) GPUs that weren’t built exclusively for AI workloads. Cloud GPUs can also become cost-prohibitive at scale, and because developers can easily switch them on as projects grow, the expense climbs quickly. Buying GPUs for on-prem use (if they can be procured at all, given scarcity) is also a very expensive proposition, with individual chips costing well into the tens of thousands of dollars. As a result, server systems built for demanding AI workloads are becoming cost-prohibitive or out of reach for many departments with capped operating expense (OpEx) budgets. In 2025, enterprise customers must level-set their AI costs and re-sync their AI development budgets. With so many siloed departments now taking the initiative and building their own AI tools, companies can inadvertently spend thousands per month on small, siloed uses of cloud GPU compute instances, which all add up (especially if users leave these instances running).
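The arithmetic behind “thousands per month” is easy to reproduce. This minimal sketch assumes a hypothetical on-demand price of $4 per GPU instance-hour and five departments each running one instance, then compares leaving the instances on around the clock with shutting them down outside working hours. The rate and team count are illustrative, not quotes from any provider.

```python
HOURS_PER_MONTH = 730  # average hours in a month: 8,760 hours per year / 12


def monthly_gpu_cost(hourly_rate: float, billed_hours: float, instances: int = 1) -> float:
    """Cloud GPU spend for one month at a flat on-demand hourly rate."""
    return hourly_rate * billed_hours * instances


RATE_USD = 4.00      # hypothetical on-demand price per GPU instance-hour
TEAMS = 5            # hypothetical departments, each running one instance
WORK_HOURS = 8 * 22  # 8 hours a day, 22 working days

always_on = monthly_gpu_cost(RATE_USD, HOURS_PER_MONTH, TEAMS)
work_hours_only = monthly_gpu_cost(RATE_USD, WORK_HOURS, TEAMS)
idle_waste = always_on - work_hours_only

print(f"left running 24/7: ${always_on:,.0f}")
print(f"shut down outside work hours: ${work_hours_only:,.0f}")
print(f"spent on idle instances: ${idle_waste:,.0f}")
```

Under these assumptions, most of the monthly bill pays for instances that sit idle, which is why scheduled shutdowns are usually the first cost control to put in place.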

Open-Source Models Will Promote the Democratization of Several AI Use Cases

In 2025, there will be immense pressure for organizations to prove ROI from AI projects and associated budgets. Given the cost of leveraging low-code or no-code tools from popular independent software vendors (ISVs) to build AI apps, companies will continue to seek open-source models that can be fine-tuned more easily, rather than training and building models from scratch. Fine-tuning open-source models uses available AI resources (people, budget and/or compute power) more efficiently, which helps explain why more than 900K models (and growing) are currently available for download on Hugging Face alone. However, when enterprises adopt open-source models, it will be critical to secure and police the use of open-source software, frameworks, libraries and tools throughout their organizations. Lenovo’s recent agreement with Anaconda is a great example of this support, where the Intel-powered Lenovo Workstation portfolio and Anaconda Navigator help streamline data science workflows.

AI Compliance Becomes Standard Practice

Shifts in AI policy will move AI computing closer to the source of company data, with more of it on-premises (especially during the development phases of a project or workflow). As AI moves closer to the core of many businesses, it will shift from a separate, parallel or special workstream to one that runs in line with core business functions. Making sure AI is compliant and responsible is a real objective today, and heading into 2025 it will become standard practice and one of the fundamental building blocks of enterprise AI projects. At Lenovo, we have a Responsible AI Committee, composed of a diverse group of employees who ensure solutions and products meet security, ethical, privacy, and transparency standards. This group reviews AI usage and implementation based on risk, applying security policies consistently to align with a risk stance and regulatory compliance. The committee's inclusive approach addresses every dimension of AI, ensuring comprehensive compliance and reducing overall risk.

Workstations Emerge as Efficient AI Tools In and Out of the Office

Using workstations as more powerful edge and departmental AI appliances is already on the rise. For example, Lenovo's Workstation portfolio, powered by AMD, helps media and entertainment professionals bridge the gap between expectations and the resources needed to deliver the highest-fidelity visual content. Thanks to their small form factor and footprint, low acoustics, standard power requirements, and use of client operating systems, workstations can easily be deployed as AI inference solutions where traditional servers may not fit. Another use case is standard industry workflows, where AI-enhanced data analytics can deliver real business value that is highly visible to C-suite executives trying to make a difference. Other use cases include smaller, domain-specific AI tools that individuals create for their own use. These efficiency-boosting tools can become AI superpowers, ranging from Microsoft Copilot to private chatbots and personal AI assistants.

Maximize AI’s Potential in 2025

AI is one of the fastest-growing technological evolutions of our era, breaking into every industry as a transformative technology that will enhance efficiency for all – enabling faster and more valuable business outcomes.

AI, including machine and deep learning and generative AI with LLMs, requires immense compute power to build and maintain the intelligence needed for seamless customer AI experiences. As a result, organizations should ensure they leverage high-performing and secure desktop and mobile computing solutions to revolutionize and enhance the workflows of AI professionals and data scientists.


Did DeepSeek Use Shell Companies In Singapore To Procure Nvidia Blacklisted Chips?

Zero Hedge, by Tyler Durden, Friday, Jan 31, 2025, 07:20 AM

Chinese startup DeepSeek's latest AI models, V3 and R1, were reportedly trained on just 2,048 Nvidia H800 chips, GPUs that the US government blacklisted for sale to Chinese firms in 2023. Federal investigators are now combing through trade data to determine how DeepSeek acquired the restricted chips.


AI in Supply Chain Market to be Worth $58.55 Billion by 2031

Meticulous Research®, a leading global market research company, has published a research report titled ‘AI in Supply Chain Market by Offering (Hardware, Software, Other), Technology (ML, NLP, RPA, Other), Deployment Mode, Application (Demand Forecasting, Other), End-use Industry (Manufacturing, Retail, F&B, Other) & Geography - Global Forecast to 2031’.

According to this latest publication from Meticulous Research®, the AI in supply chain market is projected to reach $58.55 billion by 2031, at a CAGR of 40.4% from 2024 to 2031. The growth of the AI in supply chain market is driven by the increasing incorporation of artificial intelligence in supply chain operations and the rising need for greater visibility & transparency in supply chain processes. However, the high procurement & operating costs of AI-based supply chain solutions and the lack of supporting infrastructure restrain the growth of this market.
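The headline figures can be sanity-checked with the compound-growth formula. The report excerpt doesn't state the 2024 starting value, so the sketch below derives it, assuming the 40.4% rate compounds over exactly seven annual steps from 2024 to 2031; treat the result as an implied estimate, not a figure from the report.

```python
def implied_base(final_value: float, cagr: float, years: int) -> float:
    """Invert compound growth, V_end = V_start * (1 + r) ** n, to recover V_start."""
    return final_value / (1.0 + cagr) ** years


def project(start_value: float, cagr: float, years: int) -> float:
    """Grow a starting value at a constant annual rate for the given number of years."""
    return start_value * (1.0 + cagr) ** years


if __name__ == "__main__":
    # Report figures: $58.55 billion in 2031 at a 40.4% CAGR, 2024-2031 (seven annual steps).
    years = 2031 - 2024
    base_2024 = implied_base(58.55, 0.404, years)
    print(f"implied 2024 market size: ${base_2024:.2f} billion")
    # Sanity check: growing the implied base forward reproduces the 2031 figure.
    print(f"projected 2031 size: ${project(base_2024, 0.404, years):.2f} billion")
```

Because 1.404 raised to the seventh power is about 10.75, the implied 2024 market is roughly $5.44 billion, so the forecast amounts to about a tenfold increase over the period.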

Furthermore, the growing demand for AI-based business automation solutions is expected to generate growth opportunities for the players operating in this market. However, performance issues in integrating data from multiple sources and data security & privacy concerns are major challenges impacting market growth. Additionally, the rising demand for cloud-based supply chain solutions is a prominent trend in the AI in supply chain market.

Based on offering, the AI in supply chain market is segmented into hardware, software, and services. In 2024, the hardware segment is expected to account for the largest share of 44.4% of the AI in supply chain market. The large market share of this segment is attributed to advancements in data center capabilities, the growing need for storage hardware due to the increasing storage requirements of AI applications, the crucial need for constant connectivity in supply chain operations, and manufacturers' emphasis on product development and enhancement. For instance, in January 2023, Intel Corporation launched its 4th Gen Intel Xeon Scalable processors (code-named Sapphire Rapids), the Intel Xeon CPU Max Series (code-named Sapphire Rapids HBM), and the Intel Data Center GPU Max Series (code-named Ponte Vecchio). These new processors deliver significant improvements in data center performance, efficiency, security, and AI capabilities.

However, the software segment is expected to record the highest CAGR of 43.2% during the forecast period. This segment's growth is driven by the rising focus on developing and enhancing supply chain software, and by the benefits such software offers in facilitating supply chain visibility and centralized operations.

Download Sample Report Here @ https://www.meticulousresearch.com/download-sample-report/cp_id=5064

Based on technology, the AI in supply chain market is segmented into machine learning, computer vision, natural language processing, context-aware computing, and robotic process automation. In 2024, the machine learning segment is expected to account for the largest share of 63.0% of the AI in supply chain market. The large market share of this segment is attributed to advancements in data center capabilities, the increasing deployment of machine learning solutions and their ability to perform tasks without relying on human input, and the rapid adoption of cloud-based technology across several industries. For instance, in June 2022, FedEx Corporation (U.S.) invested in FourKites, Inc. (U.S.), a supply chain visibility startup. This strategic collaboration allows FedEx to combine FourKites' machine learning and AI capabilities with FedEx data, enhancing its operational efficiency and visibility.

However, the robotic process automation segment is expected to record the highest CAGR of 42.9% during the forecast period. The growth of this segment is driven by the increased adoption of RPA across various industries and the rising demand for automating business processes to meet heightened customer expectations.

Based on deployment mode, the AI in supply chain market is segmented into cloud-based deployments and on-premise deployments. In 2024, the cloud-based deployments segment is expected to account for the larger share of 75.6% of the AI in supply chain market. The large market share of this segment is attributed to the increasing avenues for cloud-based deployments, the superior flexibility and affordability offered by cloud-based deployments, and the increasing adoption of cloud-based solutions by small & medium-sized enterprises.

Moreover, the cloud-based deployments segment is expected to record the higher CAGR during the forecast period. The rapid development of new security measures for cloud-based deployments is expected to drive this segment's growth in the coming years.

Based on application, the AI in supply chain market is segmented into demand forecasting, supply chain planning, warehouse management, fleet management, risk management, inventory management, predictive maintenance, real-time supply chain visibility, and other applications. In 2024, the demand forecasting segment is expected to account for the largest share of 25.2% of the AI in supply chain market. The large market share of this segment is attributed to the rising initiatives to integrate AI capabilities in supply chain solutions, dynamic changes in customer behaviors and expectations, and the rising need to achieve accuracy and resilience in the supply chain. For instance, in March 2023, Zionex, Inc. (South Korea), a prominent provider of advanced supply chain and integrated business planning platforms, launched PlanNEL Beta. This AI-powered SaaS platform is designed for demand forecasting and inventory optimization.

However, the real-time supply chain visibility segment is expected to record the highest CAGR during the forecast period. This segment's growth is driven by the rising integration of AI capabilities into supply chains to obtain real-time supply chain data.

Based on end-use industry, the AI in supply chain market is segmented into manufacturing, food and beverage, healthcare & pharmaceuticals, automotive, retail, building & construction, medical devices & consumables, aerospace & defense, and other end-use industries. In 2024, the manufacturing segment is expected to account for the largest share of 23.1% of the AI in supply chain market. The large market share of this segment is attributed to the increasing number of manufacturing companies, favorable initiatives to integrate artificial intelligence capabilities into the supply chain, and manufacturers' increasing focus on achieving accuracy and resilience in the supply chain.

However, the retail segment is expected to record the highest CAGR of 47.8% during the forecast period. This segment's growth is driven by the rising integration of AI capabilities in the retail supply chain to forecast inventory and demand and retailers' growing focus on meeting consumer expectations.

Based on geography, the AI in supply chain market is segmented into North America, Europe, Asia-Pacific, Latin America, and the Middle East & Africa. In 2024, Asia-Pacific is expected to account for the largest share of 36.9% of the AI in supply chain market. The large market share of this region is attributed to the rapid pace of digitalization and modernization across industries, the advent of Industry 4.0, and the growing adoption of advanced technologies across various businesses.

Moreover, the Asia-Pacific region is projected to record the highest CAGR of 42.7% during the forecast period. This market's growth is driven by the proliferation of advanced supply chain solutions, the rising deployment of AI tools across the region, and efforts by major market players to implement AI technology across various sectors.

Key Players

Some of the key players operating in the AI in supply chain market are IBM Corporation (U.S.), SAP SE (Germany), Microsoft Corporation (U.S.), Google LLC (U.S.), Amazon Web Services, Inc. (U.S.), Intel Corporation (U.S.), NVIDIA Corporation (U.S.), Oracle Corporation (U.S.), C3.ai, Inc. (U.S.), Samsung SDS CO., Ltd. (South Korea), Coupa Software Inc. (U.S.), Micron Technology, Inc. (U.S.), Advanced Micro Devices, Inc. (U.S.), FedEx Corporation (U.S.), and Deutsche Post DHL Group (Germany).

Complete Report Here : https://www.meticulousresearch.com/product/ai-in-supply-chain-market-5064

Key questions answered in the report:

Which are the high-growth market segments based on offering, technology, deployment mode, application, and end-use industry?

What was the historical market for AI in supply chain?

What are the market forecasts and estimates for the period 2024–2031?

What are the major drivers, restraints, and opportunities in the AI in supply chain market?

Who are the major players, and what shares do they hold in the AI in supply chain market?

What is the competitive landscape like in the AI in supply chain market?

What are the recent developments in the AI in supply chain market?

What are the different strategies adopted by the major players in the AI in supply chain market?

What are the key geographic trends, and which are the high-growth countries?

Who are the local emerging players in the AI in supply chain market, and how do they compete with the other players?

Contact Us: Meticulous Research® | Email: [email protected] | Contact Sales: +1-646-781-8004 | LinkedIn: https://www.linkedin.com/company/meticulous-research


Reducing Time-to-Market with QSC Cloud GPUs

In today’s fast-paced technological landscape, enterprise-level companies and AI-driven startups are under immense pressure to stay ahead of the curve. With the rise of machine learning models, deep learning techniques, and big data analytics, the need for high-performance computing resources has never been greater. However, as these companies scale their AI projects, they often face significant challenges related to GPU availability, scalability, and cost efficiency. This is where QSC Cloud comes in, offering a powerful and flexible solution to accelerate AI workflows and reduce time-to-market.

The Challenges Facing AI-Driven Enterprises

For AI and machine learning teams, the ability to quickly develop, train, and deploy complex models is crucial. However, many organizations encounter common pain points in their journey:

Limited Access to High-Performance GPU Clusters: Advanced machine learning models require significant computational power. Traditional data centers may not have enough GPUs available or may involve lengthy approval processes to gain access to the necessary resources.

Scalability Issues: As AI projects grow, so do the computational demands. Many enterprises struggle to quickly scale their infrastructure to meet these rising demands, which can lead to delays in project timelines.

Long Setup Times and High Costs: Setting up and maintaining GPU infrastructure can be expensive and time-consuming. Enterprises that invest in their own hardware often face high upfront costs, plus the ongoing logistics of maintaining these systems.

These challenges result in slowed innovation and delayed time-to-market, which can directly impact a company’s competitive advantage in the AI space.

How QSC Cloud Can Help

QSC Cloud provides a cost-effective, flexible, and scalable solution to these challenges. By offering on-demand access to high-performance GPU clusters like the Nvidia H100 and H200, QSC Cloud empowers enterprises to seamlessly scale their AI workflows without the burden of managing complex hardware or worrying about infrastructure limitations.

Here’s how QSC Cloud helps reduce time-to-market:

1. On-Demand Access to High-Performance GPUs

With QSC Cloud, enterprises can instantly access powerful GPU clusters on-demand, whether they are running deep learning models, large-scale data analytics, or training AI systems. This eliminates the need for long procurement processes and gives teams the flexibility to rapidly access the compute power they need, when they need it.

2. Scalability to Meet Growing Demands

One of the biggest benefits of QSC Cloud is its scalability. As AI projects grow in complexity, so does the need for more computational power. QSC Cloud offers the flexibility to scale resources up or down as needed, without the hassle of investing in additional hardware or worrying about infrastructure limitations. This means teams can remain agile and responsive to project needs, reducing downtime and accelerating development cycles.

3. Cost Efficiency and Quick Setup

Traditional data centers often require significant upfront investment and maintenance costs. QSC Cloud, however, offers a pay-as-you-go model, allowing enterprises to only pay for the resources they actually use. This makes it easier to manage costs and prevents overspending on unused infrastructure. Additionally, with quick setup times and easy deployment, businesses can focus on their AI projects rather than worrying about managing hardware or infrastructure.
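Whether pay-as-you-go actually beats buying depends on how many GPU-hours you expect to use. The sketch below is a simplified break-even model under stated assumptions: owned hardware costs a fixed purchase price plus a flat hourly running cost (power, cooling, operations), the cloud charges a flat hourly rate, and depreciation, resale value and volume discounts are ignored. All prices are hypothetical, not QSC Cloud's rates.

```python
import math


def breakeven_hours(purchase_cost: float, owned_hourly_cost: float,
                    cloud_hourly_rate: float) -> float:
    """GPU-hours of use after which owning becomes cheaper than pay-as-you-go.

    Owning costs purchase_cost + owned_hourly_cost * h; renting costs cloud_hourly_rate * h.
    Setting the two equal and solving for h gives purchase_cost / (rate - owned cost).
    """
    margin = cloud_hourly_rate - owned_hourly_cost
    if margin <= 0:
        return math.inf  # a rented hour is no dearer than an owned one: never breaks even
    return purchase_cost / margin


def cheaper_option(hours: float, purchase_cost: float, owned_hourly_cost: float,
                   cloud_hourly_rate: float) -> str:
    """Compare the total cost of both options for an expected number of GPU-hours."""
    own = purchase_cost + owned_hourly_cost * hours
    rent = cloud_hourly_rate * hours
    return "pay-as-you-go" if rent <= own else "own"


if __name__ == "__main__":
    # Hypothetical inputs: $30,000 GPU, $0.50/h to power and run it, $3.00/h to rent.
    print(breakeven_hours(30_000, 0.50, 3.00))        # GPU-hours before buying pays off
    print(cheaper_option(2_000, 30_000, 0.50, 3.00))  # a short, bursty project
```

With these inputs, owning only pays off after 12,000 GPU-hours, roughly 16 months of round-the-clock use, so short or bursty projects favor pay-as-you-go while GPUs kept busy for years can favor ownership.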

Accelerating Innovation with QSC Cloud

In the fast-moving world of AI development, time is of the essence. Delays in computational resources can lead to missed opportunities and delayed product releases. By using QSC Cloud, enterprises can streamline their workflows, reduce the time required for training and inferencing, and ultimately reduce their time-to-market. Whether you are a large enterprise or an AI-driven startup, QSC Cloud offers the scalability, cost-effectiveness, and speed needed to stay ahead of the competition and innovate faster.

With QSC Cloud, you can solve the challenges of GPU availability, scalability, and cost efficiency, enabling you to accelerate your AI projects and meet the growing demands of the market.


A Lighter Chrome That Uses Less RAM (Optimization)

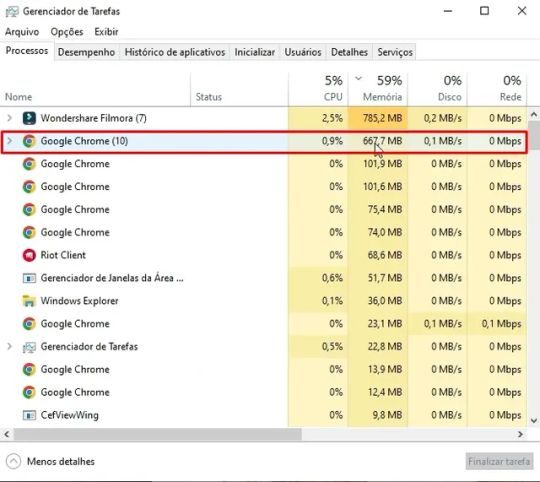

In this article, I'll show you how to drastically optimize Google Chrome's performance. In the tests I ran, I noticed a good difference in RAM consumption, so I'll walk you step by step through how I managed to reduce Chrome's memory use and make it lighter. With just 3 tabs open, it was consuming more than 1 GB of memory, and those 3 tabs keep several Chrome processes running. Learn this advanced configuration, and then leave a comment telling me whether it worked for you too.

Making Chrome lighter and reducing RAM consumption

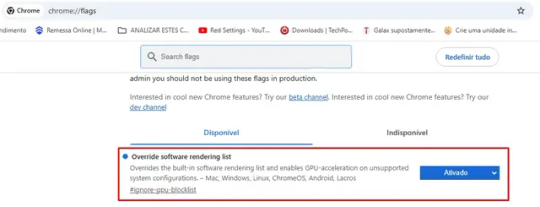

1. Override Software Rendering List: First, open Chrome and type chrome://flags in the address bar. Chrome's advanced settings page will appear. On this page, search for Override Software Rendering List. Enable it and click Relaunch to save the settings.

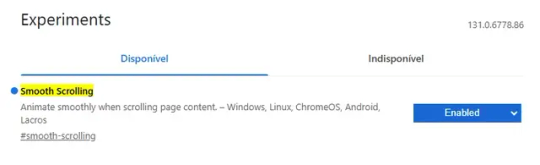

2. Smooth Scrolling: Next, search for Smooth Scrolling. Enable this setting (it gives smoother animation when scrolling web pages) and click the Relaunch button.

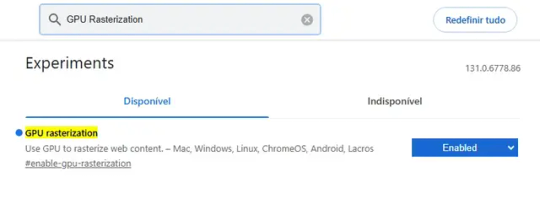

3. GPU Rasterization: Now type GPU in the search field on the same page. Find the GPU Rasterization setting, enable it, and click Relaunch.

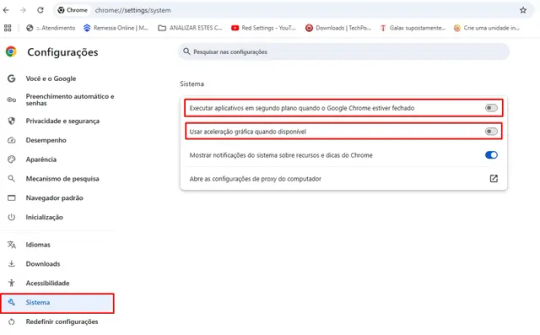

Other settings:

- Click the three-dot menu at the top of the window and open Settings.
- In the left pane, select Performance and turn off 'Performance issue alerts'.
- Below that, under Memory Saver, the Balanced option is probably selected; change it to Maximum.
- Under Preload pages, select Standard preloading.
- Next, go to the Appearance tab and turn off 'Show tab preview images'.
- Finally, in the System tab, turn off both 'Continue running background apps when Google Chrome is closed' and 'Use graphics acceleration when available'.

Time to See the Results

When I reran the Chrome RAM test with the same 3 tabs open, memory consumption dropped from 1 GB to 650 megabytes, an impressive result that makes the browser noticeably lighter. If these settings work for you, leave a comment below so I know.
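To repeat the before-and-after RAM comparison yourself, a small script can add up the memory used by every Chrome process. This is a sketch that assumes the third-party psutil package is installed and that Chrome's process names contain "chrome" (for example chrome.exe on Windows); Chrome's built-in Task Manager (More tools > Task manager) gives a similar per-process view without any code.

```python
from typing import Iterable, Optional, Tuple

MB = 1024 * 1024


def chrome_memory_mb(processes: Iterable[Tuple[Optional[str], int]]) -> float:
    """Sum the resident set size (RSS) of Chrome processes, in megabytes.

    Summing RSS double-counts memory shared between processes, so treat the
    result as a rough number for before/after comparison, not an exact total.
    """
    total = 0
    for name, rss_bytes in processes:
        # Process names vary by OS: "chrome", "chrome.exe", "Google Chrome Helper", ...
        if name and "chrome" in name.lower():
            total += rss_bytes
    return total / MB


def reduction_pct(before_mb: float, after_mb: float) -> float:
    """Percentage drop from a 'before' measurement to an 'after' measurement."""
    return (before_mb - after_mb) / before_mb * 100.0


if __name__ == "__main__":
    import psutil  # third-party package: pip install psutil

    snapshot = []
    for proc in psutil.process_iter(["name", "memory_info"]):
        mem = proc.info["memory_info"]  # None if access to the process was denied
        if mem is not None:
            snapshot.append((proc.info["name"], mem.rss))
    print(f"Chrome is using about {chrome_memory_mb(snapshot):.0f} MB")
```

Take one reading before changing the settings and one after, with the same tabs open. By the article's figures (about 1 GB down to 650 MB), the reduction is roughly 35%, which `reduction_pct(1000, 650)` reproduces.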


NayaOne Digital Sandbox Supports Financial Services Growth

Leaders in Fintech Use Generative AI to Provide Faster, Safer, and More Accurate Financial Services.

Ntropy, Contextual AI, NayaOne, and Securiti are improving financial planning, fraud detection, and other AI applications with NVIDIA NIM microservices and accelerated computing. A staggering 91% of businesses in the financial services industry (FSI) are either evaluating artificial intelligence or already using it to improve customer experiences, increase operational efficiency, and drive innovation.

Generative AI powered by NVIDIA NIM microservices and accelerated computing can help improve risk management, fraud detection, portfolio optimization, and customer service.

Companies like Ntropy, Contextual AI, and NayaOne, all members of the NVIDIA Inception program for innovative startups, are using these technologies to improve financial services applications.

Additionally, NVIDIA NIM is being used by Silicon Valley-based firm Securiti to develop an AI-powered copilot for financial services. Securiti is a centralized, intelligent platform for data and generative AI safety.

The businesses will show how their technology can transform heterogeneous, sometimes complicated FSI data into actionable insights and enhanced innovation possibilities for banks, fintechs, payment providers, and other organizations at Money20/20, a premier fintech conference taking place this week in Las Vegas.


Ntropy Brings Order to Unstructured Financial Data

New York-based Ntropy helps rid financial services workflows of entropy: disorder, randomness, or uncertainty.

By standardizing financial data from various sources and geographical locations, the company’s transaction enrichment application programming interface (API) serves as a common language that enables financial services applications to comprehend any transaction with human-like accuracy in milliseconds, at a 10,000x lower cost than conventional techniques.

Ntropy uses NVIDIA Triton Inference Server and the Llama 3 NVIDIA NIM microservice, running on NVIDIA H100 Tensor Core GPUs. The Llama 3 NIM microservice increased the utilization and throughput of Ntropy's large language models (LLMs) by 20x compared with native models.

Airbase, a leading provider of procure-to-pay software platforms, uses LLMs and the Ntropy data enricher to improve its transaction authorization processes.

At Money20/20, Ntropy will discuss how its API can be used to clean up customers' merchant data, which boosts fraud detection by improving the accuracy of risk-detection algorithms. This in turn reduces both false transaction declines and revenue loss.

Another demo will show how an automated loan agent uses the Ntropy API to analyze information on a bank's website and generate a relevant investment report, speeding up loan disbursement and decision-making for users.

What Is Contextual AI?

Contextual AI perceives and reacts to its surroundings. This means that when it responds, it takes into account the user's location, prior actions, and other relevant information. These systems are designed to provide customized, relevant responses.

Contextual AI Advances Retrieval-Augmented Generation for FSI

Contextual AI, headquartered in Mountain View, California, offers a production-grade AI platform, powered by retrieval-augmented generation (RAG), that is well suited to building enterprise AI applications for knowledge-intensive FSI use cases.

To deliver significantly higher accuracy on context-dependent tasks, the Contextual AI platform integrates the entire RAG pipeline (extraction, retrieval, reranking, and generation) into a single optimized system that can be deployed in minutes and further customized and tuned to user requirements.
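The four pipeline stages can be illustrated with a toy example. This is not Contextual AI's implementation or API, which the article doesn't describe: keyword overlap stands in for vector search, term-frequency scoring stands in for a learned reranker, and a string template stands in for the LLM call. All function names are hypothetical.

```python
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    doc_id: str
    text: str


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def extract(docs: dict[str, str], max_words: int = 40) -> list[Chunk]:
    """Extraction: split each raw document into fixed-size word chunks."""
    chunks = []
    for doc_id, text in docs.items():
        words = text.split()
        for start in range(0, len(words), max_words):
            chunks.append(Chunk(doc_id, " ".join(words[start:start + max_words])))
    return chunks


def retrieve(query: str, chunks: list[Chunk], k: int = 10) -> list[Chunk]:
    """Retrieval: a cheap first pass keeping chunks that share query terms."""
    q = set(tokenize(query))
    scored = [(len(q & set(tokenize(c.text))), c) for c in chunks]
    hits = [(s, c) for s, c in scored if s > 0]
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in hits[:k]]


def rerank(query: str, candidates: list[Chunk], k: int = 3) -> list[Chunk]:
    """Reranking: a finer (but costlier) score applied only to the short list."""
    q = set(tokenize(query))

    def score(c: Chunk) -> int:
        counts = Counter(tokenize(c.text))
        return sum(counts[t] for t in q)

    return sorted(candidates, key=score, reverse=True)[:k]


def generate(query: str, context: list[Chunk]) -> str:
    """Generation stand-in: a real system would send this cited context to an LLM."""
    if not context:
        return "No supporting documents found."
    cited = "\n".join(f"[{c.doc_id}] {c.text}" for c in context)
    return f"Question: {query}\nSources:\n{cited}"


def answer(query: str, docs: dict[str, str]) -> str:
    return generate(query, rerank(query, retrieve(query, extract(docs))))


if __name__ == "__main__":
    docs = {
        "rates-outlook": "Our house view: central bank rates ease by Q4 2025.",
        "hr-memo": "The office closes early on Friday.",
    }
    print(answer("What's our forecast for central bank rates by Q4 2025?", docs))
```

Running the example returns only the rates-outlook chunk as a cited source; the unrelated memo never reaches the generation step, which is the point of retrieving and reranking before generating.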

HSBC plans to use Contextual AI to retrieve and synthesize relevant market outlooks, financial news, and operational documents to improve research findings and process recommendations. Other financial institutions are also using Contextual AI's pre-built applications, which cover financial analysis, policy-compliance report generation, financial advisory query resolution, and more.

For example, a user might ask, “What’s our forecast for central bank rates by Q4 2025?” The Contextual AI platform would respond with a concise explanation and an accurate answer grounded in factual documents, with citations to specific sections of the source.

Contextual AI works with the open-source NVIDIA TensorRT-LLM library and NVIDIA Triton Inference Server to improve LLM inference performance.

NayaOne Provides Digital Sandbox for Financial Services Innovation

London-based NayaOne offers an AI sandbox where customers can securely test and validate AI applications before deploying them commercially. Its platform lets financial institutions create synthetic data and gives them access to hundreds of fintechs.

Customers can use the digital sandbox to benchmark applications for accuracy, fairness, transparency, and other compliance criteria, helping ensure optimal performance and successful integration.
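The source doesn't say how NayaOne scores applications, so as a hedged illustration, here is how two of the criteria it lists could be measured for a binary classifier such as a fraud model: plain accuracy, and the demographic parity difference, one common fairness measure (the gap in positive-prediction rates between groups). The pass/fail thresholds are hypothetical policy choices, and transparency can't be reduced to a single number like these.

```python
from collections import defaultdict


def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    """Share of predictions that match the ground-truth labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need two non-empty label lists of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def demographic_parity_gap(y_pred: list[int], groups: list[str]) -> float:
    """Largest difference in positive-prediction rate between any two groups (0 = parity)."""
    positives = defaultdict(int)
    counts = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        counts[group] += 1
        positives[group] += int(pred == 1)
    rates = [positives[g] / counts[g] for g in counts]
    return max(rates) - min(rates)


def benchmark(y_true: list[int], y_pred: list[int], groups: list[str],
              min_accuracy: float = 0.9, max_gap: float = 0.1) -> dict:
    """Score a binary classifier against hypothetical accuracy and fairness thresholds."""
    acc = accuracy(y_true, y_pred)
    gap = demographic_parity_gap(y_pred, groups)
    return {"accuracy": acc, "parity_gap": gap,
            "passes": acc >= min_accuracy and gap <= max_gap}
```

For example, a model that flags every transaction from group b but only half of those from group a shows a parity gap of 0.5 and fails the check regardless of its accuracy.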

“The need for AI-powered financial services solutions is growing, and our partnership with NVIDIA enables organizations to harness generative AI’s potential in a safe, regulated setting. We’re building an ecosystem that will enable financial institutions to prototype more quickly and efficiently, resulting in genuine business transformation and growth initiatives.”

NayaOne's AI sandbox uses NVIDIA NIM microservices to let customers explore and experiment with optimal AI models and deploy them more quickly. Using NVIDIA accelerated computing, NayaOne can process massive datasets for its fraud detection models up to 10x faster and at up to 40% lower infrastructure cost than with extensive CPU-based algorithms.

Using the open-source NVIDIA RAPIDS data science and AI libraries, the digital sandbox speeds up money movement fraud detection and prevention. At the NVIDIA AI Pavilion at Money20/20, the company will display its digital sandbox.

Securiti’s AI Copilot Enhances Financial Planning

Securiti’s very adaptable Data+AI platform enables customers to create secure, end-to-end corporate AI systems, supporting a wide variety of generative AI applications such as safe enterprise AI copilots and LLM training and tuning.

The company is building a financial planning assistant powered by NVIDIA NIM. To deliver context-aware answers to customers' financial questions, the copilot chatbot draws on a variety of financial data while complying with privacy and entitlement policies.

The chatbot pulls information from several sources, including investment research materials, customer profiles and account balances, and earnings transcripts. Securiti's technology securely ingests and prepares this information for use with high-performance, NVIDIA-powered LLMs while preserving controls such as access entitlements. Finally, it delivers personalized responses to users through an easy-to-use interface.
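The article doesn't describe how Securiti enforces entitlements internally. One common pattern for entitlement-aware retrieval, sketched here with hypothetical types and role names, is to filter candidate documents against the requesting user's entitlements before they are placed in the LLM prompt, so the model never sees data the user couldn't access directly.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]  # hypothetical entitlement model: who may read this


@dataclass
class User:
    user_id: str
    roles: set[str] = field(default_factory=set)


def entitled_context(user: User, candidates: list[Document]) -> list[Document]:
    """Keep only documents that share at least one role with the user."""
    return [d for d in candidates if user.roles & d.allowed_roles]


def build_prompt(question: str, user: User, candidates: list[Document]) -> str:
    """Filter BEFORE prompting, so the model never sees data the user can't access."""
    docs = entitled_context(user, candidates)
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs) or "(no accessible sources)"
    return f"Answer using only these sources.\n{context}\n\nQuestion: {question}"
```

An advisor holding only an advisor role would get a shared research note in the prompt but not another client's account balance. The design choice is to enforce access at retrieval time rather than trusting the model to withhold information it has already been given.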

Securiti used the Llama 3 70B-Instruct NIM microservice to optimize the LLM's performance while ensuring secure data use. The company will demonstrate its generative AI solution at Money20/20. Triton Inference Server and NIM microservices are available through the NVIDIA AI Enterprise software platform.

Read more on Govindhtech.com


Criteria for Choosing the Ideal Laptop for Programming

Choosing the ideal laptop for programming and development can be challenging, especially with so many options on the market. You need a machine that offers performance, durability and comfort for long hours of work. In this article, we'll explore the main criteria for choosing the best laptop for your needs, including comparisons such as Asus vs. Lenovo and other essential factors.

Understanding Your Needs

Before diving into technical specifications, it's important to understand your specific needs. Are you a front-end, back-end or full-stack developer? Do you work with heavy workloads such as machine learning and big data, or do you focus more on web and mobile development? These questions help define what to look for in the ideal work laptop.

Processor and RAM

The processor is the heart of your laptop. For development, we recommend at least an Intel Core i5 or Ryzen 5. If you handle more intensive tasks, such as compiling large projects or running virtual machines, an Intel Core i7 or Ryzen 7 is ideal. RAM is also crucial: 8 GB is the recommended minimum, but 16 GB or more is ideal to make sure you can work without performance problems.

Storage

The type and amount of storage are also fundamental. An SSD (solid-state drive) is preferable to an HDD because it offers much faster read and write times, which results in a more responsive system and shorter compile times. A 256 GB SSD is a good start, but 512 GB or more is ideal, especially if you work with many projects or large files.

Screen and Resolution

Your laptop's screen is where you'll spend most of your time, so choosing a good one is essential. Full HD resolution (1920x1080) is the recommended minimum, providing clarity and enough room for multiple code windows. Larger screens of 15 inches or more are preferable, but a 13- or 14-inch model can be more portable.

Keyboard and Comfort

Programmers spend many hours typing, so a comfortable keyboard is essential. Look for a laptop with a full-size keyboard, well-spaced keys and good tactile feedback. A backlit keyboard can also be an important plus if you work in low-light environments.

Portability

Portability is an important consideration, especially if you work remotely or travel often. Thin, light laptops are easier to carry but may sacrifice performance, so finding a balance between power and portability is crucial. Ultrabooks are ideal for this kind of user.

Battery

Battery life is a crucial factor for anyone who needs mobility. Look for laptops that offer at least 8 hours of battery life so you can work or play without needing an outlet all the time. Some models can offer up to 12 hours of use on a single charge.

Graphics Considerations

Although most developers don't need a dedicated graphics card, a dedicated GPU may be essential if you work on game development or video editing. NVIDIA GeForce or AMD Radeon GPUs are good options.

Brand Comparisons

Choosing between brands such as Asus and Lenovo can be difficult. Both offer robust, reliable models, but the final decision may come down to personal preference and the specific features of each model. Also consider other brands such as Dell, HP and Apple, which have excellent models for developers.

Example Laptops

Dell XPS 15 - Excellent performance, with Core i7 and i9 processor options and a 4K display.

MacBook Pro 16" - Great for iOS and macOS developers, with robust performance and a Retina display.

Lenovo ThinkPad X1 Carbon - Portable, durable, and with a very comfortable keyboard.

Asus ZenBook Pro Duo - Innovative, with a second screen for multitasking.

Choosing the right laptop for programming and development means weighing a variety of factors, from the processor and RAM to portability and battery life. Evaluate your needs and budget to find the model that best fits your work. And don't forget to look at options suited to other activities, such as a laptop for studying or the perfect gaming laptop.

In the end, your goal is to strike a balance between performance, comfort and price. Whether you're a beginner programmer or an experienced developer, the right laptop can make a big difference to your productivity and job satisfaction. Good luck with your choice!

The Intersection of AI Sovereignty and GPU as a Service: Building Secure, Scalable AI Models

Today, we're exploring a topic that's as exhilarating as a plot twist in a science fiction novel: the intersection of AI sovereignty and GPU as a Service (GPUaaS). With a dash of intrigue, it's a tale of authority and control. So grab your favorite cappuccino, and let's explore how nations use GPUaaS to build secure, reliable, and scalable AI models while keeping the reins firmly in their own hands.

Defining AI Sovereignty: What It Means and Why It Matters:

Think of it as being the ruler of your own digital kingdom: AI sovereignty is about keeping your precious data, your AI models, and the technological know-how used to build them within your own domain. That's the crux of Sovereign AI. It is tailored to the specific requirements of a country or organization, giving it complete control over its AI assets and ensuring data privacy, security, and compliance with local regulations.

In a world where data is regarded as the new oil, relying on external entities for AI solutions can be perilous. Sovereign AI keeps sensitive information within national boundaries, reducing dependency on foreign technologies and exposure to potential vulnerabilities and cyber theft. It's like building a fortress around your digital den, keeping your assets safe from prying eyes.

GPU as a Service (GPUaaS): How It Enables Scalable AI Development:

Now, let's talk about the muscle behind modern AI: GPUs (Graphics Processing Units). GPU as a Service isn't just tech jargon; it's the power source behind the latest AI innovations and is being hailed as the secret sauce for the next wave of AI breakthroughs! GPUs have become the mainstay of AI development because their ability to execute massively parallel computations makes them ideal for training complex AI models. But here's the catch: procuring and maintaining high-performance GPUs is expensive, a costly affair like hosting a royal banquet.

With GPUaaS, however, you pay only for what you use, much like paying only for the distance you actually travel on a ride, which saves you from shelling out thousands upfront. GPUaaS lets organizations scale their computing power up or down as needed while still accessing top-tier GPU resources. This flexibility speeds up AI development without heavy upfront costs. It makes sense not to buy expensive hardware that might gather dust during off-peak periods! In the fast-paced AI arena, this flexibility and cost efficiency make GPUaaS a no-brainer for companies aiming to stay agile.

For example, companies like NVIDIA and Oracle have collaborated to offer GPUaaS solutions custom-made for AI workloads. This collaboration gives businesses the computational firepower they demand while keeping operations efficient and cost-effective.
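The pay-as-you-go argument above comes down to simple arithmetic: renting wins until your usage passes the point where owning the hardware would have been cheaper. The sketch below makes that break-even point concrete. It is illustrative only: the function names and every price (purchase price, monthly upkeep, hourly rental rate) are hypothetical placeholders, not real quotes from any provider, and it ignores factors like depreciation, resale value, and volume discounts.

```python
# Hypothetical break-even comparison: renting GPU time (GPUaaS) vs. buying a GPU.
# Every price here is an illustrative placeholder, not a real market quote.


def rental_cost(hours_used: float, hourly_rate: float) -> float:
    """Pay-as-you-go: billed only for the GPU-hours actually consumed."""
    return hours_used * hourly_rate


def ownership_cost(purchase_price: float, months: int, monthly_upkeep: float) -> float:
    """Owning: purchase price paid upfront, plus upkeep (power, cooling, maintenance)
    every month whether the GPU is busy or idle."""
    return purchase_price + months * monthly_upkeep


def break_even_hours(purchase_price: float, months: int,
                     monthly_upkeep: float, hourly_rate: float) -> float:
    """GPU-hours over the period at which renting costs exactly as much as owning."""
    return ownership_cost(purchase_price, months, monthly_upkeep) / hourly_rate


if __name__ == "__main__":
    PRICE, UPKEEP, RATE, MONTHS = 25_000.0, 300.0, 4.0, 12  # hypothetical figures
    own = ownership_cost(PRICE, MONTHS, UPKEEP)
    print(f"Break-even: {break_even_hours(PRICE, MONTHS, UPKEEP, RATE):,.0f} GPU-hours/year")
    for hours in (2_000, 8_760):  # light usage vs. running 24/7 for a year
        print(f"{hours:>5} h: rent={rental_cost(hours, RATE):,.0f}  own={own:,.0f}")
```

With these made-up numbers, the break-even point is 7,150 GPU-hours a year: a team using the GPU 2,000 hours pays far less by renting, while one keeping it busy around the clock would come out ahead by owning. That is exactly why idle, dust-gathering hardware is the costly scenario GPUaaS avoids.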

The Security Imperatives of Sovereign AI:

There's no free lunch in today's world: great power comes with great responsibility and substantial security concerns. Ensuring the security of AI systems is paramount, especially when dealing with sensitive data.

Sovereign AI addresses these concerns by:

Data Localization: Keep data within national or organizational boundaries, adhering to local laws and protecting it against external threats.

Tailored Security Protocols: Rather than relying on generic solutions, implement security measures customized to specific regional or organizational needs. Safe, trusted AI of this kind is crucial for future economic and national security.

Reduced Dependency: Sovereign AI is crucial for national security. Because safeguarding strategic and economic interests is paramount, minimizing reliance on foreign technologies, which may have hidden vulnerabilities, reduces exposure to cyber threats.

For example, NVIDIA's confidential computing solutions provide heightened security for AI workloads, keeping data shielded even while it is being processed. This approach aligns with the principles of Sovereign AI, providing both control and peace of mind.
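The data localization principle can be pictured as a scheduling guard: before a workload is sent to rented GPU capacity, check where that capacity physically sits. The sketch below is a minimal illustration under stated assumptions, not any GPUaaS provider's API. The region names, the `GpuRegion`/`TrainingJob` types, the `schedule` helper, and the choice of "KR" as the permitted jurisdiction are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical policy: jurisdictions where sensitive data may be processed.
# "KR" (South Korea) is used purely as an illustrative ISO 3166-1 country code.
ALLOWED_JURISDICTIONS = frozenset({"KR"})


@dataclass(frozen=True)
class GpuRegion:
    name: str          # hypothetical GPUaaS region identifier, not a real provider name
    jurisdiction: str  # country code of the data center hosting the GPUs


@dataclass(frozen=True)
class TrainingJob:
    dataset: str
    contains_sensitive_data: bool


def eligible_regions(job: TrainingJob, regions: list[GpuRegion],
                     allowed: frozenset[str] = ALLOWED_JURISDICTIONS) -> list[GpuRegion]:
    """Sensitive jobs may only run in allowed jurisdictions; other jobs may run anywhere."""
    if not job.contains_sensitive_data:
        return list(regions)
    return [r for r in regions if r.jurisdiction in allowed]


def schedule(job: TrainingJob, regions: list[GpuRegion]) -> GpuRegion:
    """Pick the first eligible region, refusing to move sensitive data abroad."""
    candidates = eligible_regions(job, regions)
    if not candidates:
        raise PermissionError(f"No in-jurisdiction GPU capacity for {job.dataset!r}")
    return candidates[0]


if __name__ == "__main__":
    regions = [GpuRegion("region-us-1", "US"), GpuRegion("region-kr-1", "KR")]
    print(schedule(TrainingJob("patient-records", True), regions).name)
    print(schedule(TrainingJob("public-web-text", False), regions).name)
```

Here the sensitive `patient-records` job can only land in `region-kr-1`, while the non-sensitive job may use whichever region comes first. Failing loudly with an error, rather than silently falling back to a foreign region, mirrors the "keep data within boundaries" rule; a real deployment would also need encryption and access controls on top of placement.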

Use Cases: Secure and Scalable AI Models Powered by GPUaaS

Let's discuss some real-world examples:

Domestic AI Initiatives: Nations like South Korea are investing heavily in AI infrastructure. South Korea aims to bolster its national AI capabilities by securing 10,000 high-performance GPUs, keeping AI development and data processing within its borders.

Enterprise AI Solutions: Companies are leveraging GPUaaS to develop AI models without the massive capital expenditure required to procure high-performance GPUs. This approach lets businesses innovate swiftly while retaining control over their data and models.

Healthcare Sector: Hospitals and research institutions are cashing in on GPUaaS to process vast amounts of medical data securely. The principles of Sovereign AI ensure that patient information remains confidential and complies with local health regulations.

In essence, combining Sovereign AI with GPUaaS is like having a stable, secure, and scalable playground for AI innovation. Nations and organizations are empowered to build cutting-edge AI solutions while keeping everything under their own supervision.

Wrapping Up:

The technological landscape is undergoing a revolutionary change, thanks to the intersection of AI sovereignty and GPU as a Service. This dynamic duo combines the scalability of cloud-based GPU resources with the security and control of sovereign AI practices. As we move ahead, the key is to embrace this synergy and build AI models that are not only powerful and scalable but also safe and compliant.

So, whether you're a tech entrepreneur, an AI enthusiast, or a lawmaker, keep a close eye on this exciting fusion. It's not just the future of AI; it's the future of secure, scalable, and sovereign AI.
