#Derek Bickerton


Note

hi! what are your favorite nonfiction books then?

OKAY BUCKLE IN

So my all-time favorite book that I am always reading is the Tao Te Ching. I prefer the John H. McDonald rendition, though I recommend comparing translations once you've made it through once or twice.

For general US-ish history, I enjoy Colin Woodard. I mentioned in my last response that he puts history in a nice narrative sort of format that's still thick with interesting facts and primary source quotations. American Nations was the first of his that I read.

Of course, for the comprehensive US history, you should take a crack at Howard Zinn's People's History.

For lighter history and anthropology, if you haven't read Bill Bryson, he's a lot of fun. He's got two books on English linguistics - The Mother Tongue and Made in America.

If we're talking linguistics, I do also have to give a shoutout to my problematic boi Derek Bickerton and his Bastard Tongues, which is his (somewhat controversial) theory on the nature of pidgin and creole languages. He ends on an absolutely insane experiment proposal that he whines will never happen because it's "unethical" and something something academic proto cancel culture IDK. The man is insane but he writes a damn good book, his theory is interesting and has merit, and the topic is fascinating.

And for books in American indigenous history, my first recommendation is An Indigenous Peoples' History of the United States by Roxanne Dunbar-Ortiz. Charles C. Mann's 1491 is intimidatingly thick, but so worth the read and has an incredible amount of information on pre-contact South America. For more modern history, there's a ton of great books on and by the major players in AIM in the 1970s. In the Spirit of Crazy Horse is a good place to start and is recommended by Leonard Peltier, who the book is about.

Oh, and finally, for psychology, a must-read is The Body Keeps the Score.

10 notes


Text

Derek Bickerton? Is that you?

you can't just not talk to babies after they're born to see what little fucked-up language they develop. you can't do that anymore. if you try to not hold a baby after it's born to see what happens they'll say "hey! hold your baby!". and if you wanna just put a bunch of babies alone on an island together, to see what happens, they'll stop you. you can't do any of that anymore. because of woke

11K notes


Text

If children grew up isolated from adults, would they create their own language?

elconfidencial.com


0 notes

Text

I am not making this up: Creolist Derek Bickerton wanted to experiment with children of adults with no common language on a remote island. The NSF said no.

10 notes


Text

Superlinguo linguistics books list - fiction and non-fiction

This post is a collection of all the reviews and write-ups of linguistics fiction and non-fiction that have appeared on Superlinguo over the years. I’ll add to this post whenever I write a new review.

See also, the separate post of links to linguistics books for young people.

Where available, I’ve added affiliate links to Bookshop.org and Amazon. Buying through these links provides financial support to Superlinguo.

Non-fiction

Because Internet, Gretchen McCulloch

I didn’t write a review of this because it’s weird to review a book where you pop up as a minor character in a chapter. I can promise the rest of the book is also excellent. Gretchen is one of the most compelling pop linguistics writers out there. (Bookshop.org affiliate link, Amazon affiliate link)

Language Unlimited: The Science Behind Our Most Creative Power, David Adger

This example-rich ten chapter volume from David Adger focuses on the unique syntactic capacity of human languages. I wish there were more linguistics professors bringing their A-game to a larger audience like this. (Bookshop.org affiliate link, Amazon affiliate link)

How We Talk, N.J. Enfield

If you did linguistics but never got to study Conversation Analysis, or you want a whistlestop tour of some of the most interesting work to come out of the field in the last couple of decades, this book is certainly worth a visit. (Bookshop.org affiliate link, Amazon affiliate link)

Rooted, An Australian History of Bad Language, Amanda Laugesen

The history of swearing is a history of language and power and identity, a perfect way to unpack the myth of what it means to be Australian. (Bookshop.org affiliate link)

Women Talk More than Men… and Other Myths about Language Explained, Abby Kaplan

Each chapter takes a ‘myth’ about language and deconstructs it, with careful and critical attention to research. (Bookshop.org affiliate link, Amazon affiliate link)

The Art of Language Invention, David J. Peterson

This book is designed as an introduction to conlanging, but can just as easily be read as an introduction to linguistic analysis, or a refresher if you’re heading back to university and want to polish up on your linguistics vocab. (Bookshop.org affiliate link, Amazon affiliate link)

Secret Language, Barry J. Blake

Blake does a good job of wending his way through an impressive array of topics, explaining things clearly and providing often entertaining examples, but never dwelling too long on any one topic. (Bookshop.org affiliate link, Amazon affiliate link)

You Are What You Speak, Robert Lane Greene

Overall this book is a great read, suffused with both enthusiasm for the topic and a desire to not fudge things for the sake of a good anecdote. (Amazon affiliate link)

Bastard Tongues, Derek Bickerton

Bastard Tongues is part memoir, part linguistic adventure. Until as recently as the 1970s Creoles were dismissed as simple languages, arising from simple communicative needs. Bickerton very quickly shows that this is not the case. (Bookshop.org affiliate link, Amazon affiliate link)

The Information, James Gleick

The first three chapters focus on language and literacy without a bit or a broadband to be seen. (Bookshop.org affiliate link, Amazon affiliate link)

Netymology, Tom Chatfield

Netymology is a romp through the lexicon of the interwebs, with 100 short and snappy entries all focusing on one or two words, their meaning, etymology, origins and changing usage. (Amazon affiliate link)

A Christmas Cornucopia, Mark Forsyth

If yours is the kind of family that gets a kick out of sitting around guessing the punchlines to the jokes in Christmas crackers, then you’ll get a great deal of entertainment in your post lunch stupor reading and sharing informative gems from this volume. (Bookshop.org affiliate link, Amazon affiliate link)

The Speculative Grammarian Essential Guide to Linguistics

SpecGram takes you a giant leap towards absurdity, often masked by seemingly earnest academic rigour. (Bookshop.org affiliate link, Amazon affiliate link)

Fiction

Some of this is fiction with clear linguistic themes, other times I’ve made a post about reading something like a linguist.

The Darkest Bloom (Shadowscent book 1), Crown of Smoke (Shadowscent book 2), P.M. Freestone

A Young Adult fantasy adventure, with a cracking pace and memorable characters. The book features the first few snippets of Aramteskan, a language I created to be unlike any existing human language, which places smell at the centre of people’s experience. Check out the Shadowscent hashtag on the blog for more news. (Book 1: Bookshop.org affiliate link, Amazon affiliate link, Book 2: Amazon affiliate link)

Native Tongue, Suzette Haden Elgin

There are two separate plots that explore linguistic relativity. The first explores the consequences of humans learning languages of aliens with radically different perceptions of reality. The second, and really the core of the book, is the secret organisation of women who are creating their own language to escape the tyranny of the male-dominated world. (Bookshop.org affiliate link, Amazon affiliate link)

Babel-17, Samuel R. Delany

The pace is good, the universe isn’t too badly dated and even though there’s a neat ending with regards to some of the plot, there are enough unanswered questions for the reader to build their own conclusions. (Bookshop.org affiliate link, Amazon affiliate link)

Embassytown, China Miéville

Even if you’ve not studied language or linguistics, Miéville guides you through the narrative turns with consummate skill that rarely feels too heavy handed. (Bookshop.org affiliate link, Amazon affiliate link)

New Finnish Grammar, Diego Marani

The tale itself is a compelling one, but for a word nerd it’s got another layer of intrigue as the Finnish language is a central focus of the story. (Bookshop.org affiliate link, Amazon affiliate link)

Snow Crash, Neal Stephenson

Stephenson goes right to the heart of some of the biggest debates in linguistics in the 20th century, although Hiro doesn’t seem that convinced by Universalism. (Bookshop.org affiliate link, Amazon affiliate link)

Cat’s Cradle, Kurt Vonnegut

Woven throughout the story are a small number of examples of this ‘dialect’, and while I tried very hard to behave and just enjoy reading the books, I couldn’t help but note them all down. (Bookshop.org affiliate link, Amazon affiliate link)

Short Stories

Repairing the World, John Chu

In a reality that is constantly being invaded by portals to other worlds, linguists are called in to deal with whoever and whatever comes through from the other side.

Polyglossia, Tamara Vardomskaya

Although a work of fiction, it touches on many of the social tensions in the context of endangered languages and efforts to document and revive them.

Story of Your Life, Ted Chiang

It’s one of the best fictional descriptions I’ve come across of the process to document and capture a language you don’t speak. (Short story collection: Bookshop.org affiliate link, Amazon affiliate link)

581 notes


Link

I didn't find out until yesterday.

A cognitive linguist who worked right to the end with enviable vitality. Despite the criticism it drew, he never abandoned interactivism where language acquisition is concerned.

It was an honor to hear him speak.

0 notes

Text

© Written by Pytheas

λόγος δυνάστης μέγας ἐστίν, ὃς σμικροτάτῳ σώματι καὶ ἀφανεστάτῳ θειότατα ἔργα ἀποτελεῖ· Speech is a mighty ruler, which with the smallest and least visible body accomplishes the most divine works. Gorgias, Encomium of Helen. Edited and translated by P. Kalligas

Prologue

The long history of the search for the origins of language has its roots in mythology. The myths of most peoples do not credit humans with inventing language, but speak of a divine language that predates the human one. Occult languages used to communicate with animals or spirits, such as the language of the birds, are also common and are of particular interest.

In Hindu cosmology, the deity of speech, speech personified, is Vāc, the sacred Word of Brahman, the Mother of the Vedas. The Aztec story has only one man, Coxcox, and one woman, Xochiquetzal, surviving a flood on a piece of tree bark. They then reached land and had many children, who were at first born unable to speak but later, after a dove visited them, were endowed with language, though each with a different one, so that they could not understand one another.

A similar flood is described by the Kaska people of North America; however, as in the story of Babel, the people were by then scattered across the whole world. The narrator adds that this explains the different population centres and the many tribes and languages: before the flood there was a single centre, where all people lived in one country and spoke one language. They did not know where the other people lived, and probably considered themselves the only survivors. Much later, when in their wanderings they met peoples from other places, these spoke different languages and neither side could understand the other.

An Iroquois legend tells of the god Taryenyawagon, Lord of the Heavens, who, while leading his people on a journey, urged them to settle in different places, and that is why their languages changed. According to the Salish, a disagreement is responsible for the divergence of languages: two people were arguing about whether the high-pitched hum that accompanies a duck's flight is caused by air passing through its beak or by the flapping of its wings. The chief did not settle the matter, and instead convened a council of leaders from the neighbouring villages. The council bogged down in argument, since no one could agree, and in the end this led to the splitting of the tribe. Over the years, those who moved away began to speak differently, and so other languages came into being.

In the mythology of the Yuki, natives of California, the Creator, accompanied by Coyote, creates language as well as the tribes in their various localities. At night he lays sticks at various points of their camp, which at first light will be transformed into peoples, each with its own customs, way of life and language. The Ticuna people of the Amazon rainforest relate that all peoples were once a single tribe speaking the same language, until one day two hummingbird eggs were eaten (by whom, we are not told). The tribe then split into groups and scattered across the length and breadth of the world.

In ancient Greece, legend has it that for ages humans lived without laws under the rule of Zeus and spoke only one language. In the time of Phoroneus, Hermes (the god of speech) taught them the secrets of other dialects, at the same time sowing discord among them, with the result that they split into nations. Zeus, displeased with this development, then anointed Phoroneus the first king of men. Norse mythology has the faculty of speech given to humans, together with sight and hearing, by Vé, the third son of Borr. Walking by the sea, he and his brothers stopped at two trees, to which they gave human shape. The first brother gave them spirit and life, the second feeling and intelligence, and the third form, speech, hearing and sight.

The Wasania, a Bantu people of East Africa, hand down a legend according to which the inhabitants of the earth originally knew only one language, but during a severe famine a madness seized the people, which made them wander in all directions jabbering unintelligible words, and so the different languages arose. A god who speaks all languages is a central theme in African mythology, two examples being Eshu of the Yoruba people, the trickster messenger of the gods, and Orunmila, the god of divination.

According to a related myth of a people of South Australia, the diversity of languages arose, in a manner of speaking, from cannibalism. On the death of a cunning and malevolent old woman who lived in the remote past, relieved people from all parts gathered to celebrate, and the Raminjerar were the first to begin devouring the corpse. They then began to speak intelligibly. People of other tribes, eating her intestines, began to speak slightly differently from the first, as did those who fed on the remaining parts, and so the many languages appeared. Another Aboriginal group, the Gunwinggu, relate that a deity sent to the children in their sleep the languages she herself spoke, for them to play with.

History contains several accounts of people who tried to discover the origins of language by experiment. One such story is handed down by Herodotus:

The Egyptians, however, before Psammetichus became their king, believed that they were the first of all humans to have come into being. But Psammetichus, when he became king, wished to learn who had come first, and so, ever since his time, the Egyptians consider that the Phrygians came into being before them, and they themselves before all the rest.

Psammetichus, then, however much he searched, could find no way of learning which people had come first, and so he devised this plan: he gives two newborn children of ordinary parents to a shepherd to bring up at his fold, and orders that they be reared in such a way that no one utters a word in front of them; they are to stay by themselves in a lonely hut, and he is to bring the goats to them at the proper time, give them plenty of milk, and see to all their other needs.

Psammetichus did and ordered all this because he wanted to hear which language the children would speak first once they stopped making inarticulate cries. And so it happened. The shepherd had been doing this work for two years when one day he opens the door and goes in, and both children fall at his feet and cry "bekos", stretching out their hands.

The first time he heard it, the shepherd said nothing; but as he kept coming to tend them, the word was heard many times, and then the shepherd sent word to the king, who ordered him to bring the children before him. So Psammetichus heard it for himself, and inquired what this "bekos" was and who said it; and he discovered that this is what the Phrygians call bread.

In this way the Egyptians weighed the matter and conceded that the Phrygians are older than they are. That this is how things happened I heard from the priests of Hephaestus at Memphis. As for the Greeks, among the many other foolish tales they tell is this one: that Psammetichus cut out the tongues of some women and entrusted the children's upbringing to them.

Herodotus, Histories 2.2.1-2.2.5. Mnemosyne: Digital Library of Ancient Greek Literature

King James IV of Scotland is said to have carried out a similar experiment, in which the children involved spoke Hebrew. In similar trials undertaken by two monarchs of the medieval period, Frederick II Hohenstaufen and Akbar the Great, the children did not speak but only clapped their hands and gestured, which suggests that the language faculty is acquired and does not emerge spontaneously.

History of Linguistics

People began to concern themselves with language in various ways from very early on. In the great civilizations of the so-called "Fertile Crescent" (Mesopotamia and Egypt), writing systems were devised (hieroglyphs and ideograms) from which other systems later developed (syllabic and consonantal scripts), one of which the Greeks transformed into an alphabet. The scholars of these ancient civilizations also wrote grammars; the oldest available to us is a Babylonian grammar dating to around 1600 BC. The first theoretical inquiries, however, that is, the first free reflection on language and its relation to the world and to human beings, began, like philosophical reflection in general, in ancient Greece.

The first philosophical text on language is Plato's dialogue Cratylus, also known as On Names. The question that Cratylus and Hermogenes put to Socrates is whether there is a natural relationship between things and their names. At various points in his works Plato dealt with the connection between words and ideas. Aristotle, the most precise and systematic mind among the philosophers of antiquity, took up several linguistic questions in various works:

The biological dimension of language: Historia animalium IV. 9, the distinction between φωνή (voice) and διάλεκτος (articulate speech)

The "psychological" dimension of language: On the Soul, 2.8

The ontological dimension of language: Categories, 1-4

The logical dimension of language: On Interpretation, 1-4

The textual dimension of language: Poetics, ch. 19-22

The textual dimension of language: Rhetoric, 3.1

"And the power of speech is to the soul what drugs are to the nature of bodies," claims Gorgias in the Encomium of Helen, while in On Non-Being, or On Nature, he brings out the inability of speech to convey objective truths, because it uses verbal symbols in place of the things themselves: "Nothing exists; and if it exists, it is incomprehensible to man; and if it is comprehensible, it cannot be communicated or conveyed to one's neighbour." The emphasis on orthoepeia (correct diction) shows how important the correct use of speech was for Protagoras. Hermogenes, in Plato's Cratylus, maintains that the correctness of a name is determined by the agreement by which we assign a word to a thing (conventionalism). Events in the outside world gave rise to the human need for expression, says Empedocles.

The Stoics dealt with language especially systematically, and it held an important place in their philosophical system. They were the first to speak of signs, stressing the distinction between form and content, which they called the signifier (σημαίνον) and the signified (σημαινόμενο) respectively. The idea of the two-sided sign was adopted by the medieval (Scholastic) philosophers; they came into contact with it through Saint Augustine, who had rendered the Stoics' terms with the Latin signans (sign), significans (signifier) and significandum (signified). It was later developed in a slightly different way by Saussure, who was the first to speak of the need to create a discipline of semiology, something that came about from the second decade of the 20th century.

The Stoics laid the foundations of grammar, as they were the first to speak of parts of speech, of the inflection of the noun and the verb, of cases, and so on. Their principles laid the groundwork for the writing of grammars in the Hellenistic period. In the Western world, grammar appears for the first time in the Hellenistic period as Τέχνη Γραμματική (the Art of Grammar). The first complete grammar was composed by Dionysius Thrax at the end of the 2nd century BC. Dionysius Thrax adopted the principles of the Stoics but added parts of speech, classified Greek words by features such as gender, case, number, tense, voice and mood, and in general tried to systematize the elements of the language by identifying its regularities. The second major milestone was the grammar written in the 2nd century AD by Apollonius Dyscolus, who added syntactic analysis.

The Latins devoted much attention to language and to the writing of grammar. Among the Romans who wrote grammars are Varro, Cicero, Quintilianus, Julius Caesar, Aelius Donatus and Priscianus. The Latin grammarians largely followed the model of the Alexandrians, keeping the same parts of speech and the same logic in classifying inflectional paradigms. The structural similarity of the two languages helped the lexical and grammatical categories of Greek come to be regarded as universal. The entire Western grammatical tradition rests on this Greco-Roman heritage.

In the Middle Ages the Scholastic philosophers upheld the universality of the categories. The aim of grammar, on this view, is to identify the universal elements of language (universalia). The so-called Philosophical Grammars of the Middle Ages were composed in exactly this way. The best known are that of Roger Bacon and the somewhat later Port Royal grammar. Modern semioticians, for example, often refer to the Scholastic philosophers; a characteristic example is Umberto Eco's novel The Name of the Rose, which unfolds in a medieval monastery.

The science of linguistics, which began in the 19th century after Europeans became acquainted not only with the language but also with the grammar of Sanskrit, was deeply influenced by it, especially in phonetic/phonological and morphological analysis. In the second half of the 18th century a British judge in India, Sir William Jones, decided to learn Sanskrit so that he could read the ancient Indian texts in the original. To his astonishment he found a great many similarities with the classical languages, Greek and Latin, which of course he knew extremely well, like all educated people of his time. He therefore wrote a now-historic paper, published in England in 1786, which contained the famous observation:

Sanskrit bears a resemblance to Greek and Latin, both in the roots of verbs and in the forms of words, so strong that it cannot possibly be accidental; so strong that no philologist could examine them without believing them to have sprung from the same source, which perhaps no longer exists.

This news caused a stir and tremendous enthusiasm in the scholarly circles of the time. It gave the impetus for an impressive body of research which, by comparing these and later other languages, sought to test the hypothesis Jones had formulated.

Soon the theory of language families was developed, the name Indo-European was established for the proto-language from which these three languages and their descendants derive, and family trees of languages were drawn up. Efforts focused on reconstructing Proto-Indo-European, and the ways in which the daughter languages had changed were identified (on the basis of sound laws and analogy). Toward the end of the century this accumulated knowledge began to be systematized and theorized further within the school of the Neogrammarians (Junggrammatiker). Prominent among them is the important figure of Wilhelm von Humboldt.

Modern linguistics is considered to begin in 1916 with the publication of Ferdinand de Saussure's posthumous work, Cours de linguistique générale. In this work, based on the notes of the Swiss linguist's students, the importance of studying the system of language is emphatically stressed for the first time. Structuralism spread throughout Europe, where schools with slight variations developed (Copenhagen, Prague, Paris, etc.), and to America.

Generative Grammar is the third major milestone in the development of the study of language. It was founded by Noam Chomsky in 1957, with the publication of his book Syntactic Structures, and became established with the fuller version presented in 1965 in Aspects of the Theory of Syntax.

Introduction

Language, more than anything else, is what makes us human: its unique power to represent and share unbounded thoughts is essential to all human societies and has played a central role in the rise of our species, over the last few million years, from a minor and peripheral member of the sub-Saharan African ecological community to the dominant species on the planet. Today there are some 6,000 languages in the world, in such great variety that one may well wonder how a human being could possibly learn and use them. How did these languages arise? Why isn't there just one?

About eight million years ago, among the native inhabitants of certain African forests, there lived ape-like creatures, including the common ancestors of chimpanzees and humans. Visualizing what these creatures probably looked like is fairly easy: we need only conjure up an image of something resembling a modern gorilla, living essentially in trees, walking on all fours, and with vocal communication limited to a system of perhaps twenty or thirty calls, like a chimpanzee's. But what about the appearance and behaviour of our ancestors two million years ago? By that stage they were a species distinct from the ancestors of the chimpanzee, but they were not yet Homo sapiens. How did these creatures live and, more specifically, what kind of language did they have? Picturing these more recent creatures is harder. One feels that they must surely have been more like us and, in particular, that their communication system must have been more sophisticated than that of their ancestors six million years earlier. But how much more advanced? Which characteristics of modern human language did that communication system have, and which did it lack?

There is something mysterious and yet fascinating about these intermediate ancestors of ours. This fascination underlies countless science fiction stories, as well as the undying interest in rumours that such creatures still exist, perhaps in some remote areas of a Himalayan valley. To many non-linguists, therefore, it seems self-evident that the study of the linguistic abilities of those intermediate ancestors (that is, inquiry into the origins and evolution of human language) ought to be at the forefront of linguistics. Yet it is not. As a research topic, the evolution of language is only now beginning to regain its respectability, after a period of disrepute that lasted more than 100 years. The reasons for this neglect are discussed below, together with the evidence brought to light by anthropologists, primatologists and neurobiologists, many of whom have shown a more adventurous zeal in this area than linguists. We will also discuss the now substantial contribution of certain linguists.

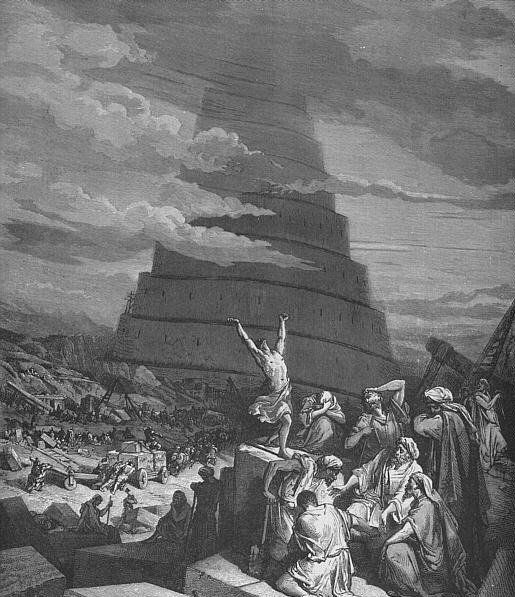

Many religions hand down accounts of the origin of language. According to the Judeo-Christian tradition, God in the Garden of Eden granted Adam dominion over all the animals, and as the first exercise of that dominion Adam proceeds to name them. The fact that there are many languages today and not just one is explained in the story of the Tower of Babel: linguistic diversity is a punishment for human arrogance. For as long as such accounts were generally accepted, the origin of language was no puzzle. But when secular explanations of natural phenomena began to supplement or replace religious ones, it was inevitable that such an explanation would also be sought for the origins of language.

The “confusion of tongues” by Gustave Doré, a woodcut depicting the Tower of Babel from Abrahamic myth (wikipedia)

The view that the origin of language must predate recorded history did not inhibit 18th-century thinkers such as Jean-Jacques Rousseau, Étienne Bonnot de Condillac and Johann Gottfried Herder, who believed that simply by applying one's mind to the living conditions of languageless humans one could reach reliable conclusions about how language must have emerged. Unfortunately, no consensus emerged from those speculations, and in the 19th century they came to look increasingly feeble and conjectural, in contrast to the far-reaching yet convincing results that historical and comparative linguistics could deliver. At its founding in 1866, therefore, the Linguistic Society of Paris chose to emphasize its seriousness as a scholarly body by including in its statutes a ban on the presentation of any paper concerning the origin of language. Most linguists still support this ban, in a sense, believing that any inquiry into the origin of language must inevitably be so speculative as to be worthless.

Jean-Jacques Rousseau (1712–1778) (wikipedia)

Étienne Bonnot de Condillac (1714–1780) (wikipedia)

Johann Gottfried (after 1802, von) Herder (1744–1803) (wikipedia)

Avram Noam Chomsky (1928–) (wikipedia)

Since the 1960s, the theory of grammar has been dominated by the ideas of Noam Chomsky, for whom the central question of linguistics is the nature of the innate biological endowment that enables humans to acquire speech so quickly and effectively in the first years of life. From this standpoint it seems natural to regard the origin of language as a matter of evolutionary biology: how did this innate linguistic endowment evolve in humans, and what are its counterparts (if any) in other primates? But Chomsky has explicitly discouraged interest in the evolution of language, suggesting moreover that language is so different from most other animal characteristics that it may be a product of physical or chemical processes rather than biological ones (Chomsky 1988:167, 1991:50). Paradoxically, when linguists who share Chomsky's views try to explain characteristics of individual languages by reference to the innate linguistic endowment (or Universal Grammar), they are generally reluctant to take their inquiry one step further, to the questions of how and why this innate faculty has acquired the particular characteristics it has. Despite the dissent of some (Newmeyer 1991, Pinker and Bloom 1990, Pinker 1994), Chomsky's influence means that linguists' reluctance to engage with this field is eroding only slowly.

Evidence from Anthropology and Archaeology

Anthropology is concerned not only with human culture but also with humans as organisms in the biological sense, including their evolutionary history [R. Foley (1995) and Mithen (1996)]. Language is both a cultural phenomenon and the most salient distinguishing characteristic of modern Homo sapiens as a species. The question of how and why humans acquired language therefore interests both cultural and biological anthropologists. So how can anthropology shed light on these questions?

The earliest direct evidence of language, in the form of writing, is no more than about 5,000 years old. It is therefore far too recent to shed any light on the origins of spoken language, and we must fall back on indirect evidence. Unfortunately, the available evidence is doubly indirect. The vocal apparatus (tongue, lips and larynx) of early humans would tell us a great deal if we could examine it directly; but, being soft tissue, it does not survive, and we depend for our information on what we can glean from bones, and from skulls in particular. Alongside such finds we have tools and other artefacts, as well as traces of human habitation such as discarded animal bones; but, again, whatever we have is skewed by the fact that stone survives longer than bone and much better than materials such as wood or hide. In view of this, the only relatively firm dates that anthropology can offer are two landmarks: one after which we can be sure that language in its full modern form was present, and one before which it certainly was not. For the long period between them, the anthropological evidence is tantalizing but also frustratingly ambiguous; there are no uncontroversial counterparts in the fossil record for specific stages of linguistic evolution.

We can be reasonably confident that spoken language in its modern form evolved only once. This is not logically necessary. It is conceivable that something with the communicative and cognitive functions of language, and using speech as its medium of expression, could have evolved independently more than once, as happened with the eye in the animal kingdom. If that had happened, however, we would expect to find present-day evidence of it, similar to the evidence that shows, from their structure, that the eyes of octopuses, mammals and insects have no common ancestor. At present there are no such indications. For all their diversity, all existing languages display certain fundamental common properties of grammar, meaning and sound, which is why Chomsky feels justified in claiming that to a visitor from another planet it might appear that there is only one human language. Moreover, a child removed from its parents' speech community at a young age can acquire any language as a native language, regardless of the one its parents speak; no child is born with a biological bias in favour of a particular language or type of language. This means that language of a fully modern kind must have developed before any modern human group became geographically separated from the rest of the human race (separated, that is, until the invention of modern means of transport). The first such clear separation appears to have occurred with the earliest settlement of Australia by Homo sapiens. Archaeological evidence suggests that this took place at least 40,000 and perhaps 60,000 or more years ago. We can therefore take this date as a firm terminus ante quem (the date by which it was certainly present) for the evolution of a form of language that is fully modern in the biological sense.

As for a terminus post quem (the date before which it was certainly absent), it is clear that spoken language with more or less modern articulatory and acoustic characteristics presupposes a vocal tract similar to the present one. But how are we to interpret "more or less" and "similar"? One thing is clear: the acoustic properties of many human speech sounds, vowels in particular, depend on the characteristically human L-shaped vocal tract, with the oral cavity at a right angle to the pharynx and with the larynx relatively low in the neck. This arrangement is distinctive of humans, because in almost all other mammals, and even in human infants during the first few months of life, the larynx sits high enough for the epiglottis to touch the soft palate, forming a self-contained airway from the nose to the lungs that is gently curved rather than L-shaped and entirely separate from the passage leading from the mouth to the stomach. Having these two distinct passages, almost all other mammals, like human babies, are able to breathe while swallowing. The characteristic adult human pharynx, on the other hand, through which both food and air must pass, is a vital factor in shaping the characteristic acoustic structure of speech sounds. When, then, did this L-shaped vocal tract develop?

Lieberman has argued [(1984), Lieberman and Crelin (1971)] that even in the Neanderthals, who did not disappear until only about 35,000 years ago, the larynx sat high enough in the neck to prevent production of the full modern range of vowels, and that this linguistic handicap may have been one of the reasons why the species did not survive. His argument rests, however, on an interpretation of fossil cranial anatomy that has been generally rejected by anthropologists (Trinkaus and Shipman 1993, Aiello and Dean 1990). According to an alternative view, the L-shaped vocal tract is a by-product of the shift to bipedalism, which favoured a reorientation of the head on the spine and hence a shortening of the base of the skull, in the course of which the larynx was pushed lower in the neck (DuBrul 1958, Aiello 1996b). The obvious question is then: when did our ancestors become able to walk on two legs? Anthropologists generally agree that this happened very early. The evidence includes fossil footprints from Laetoli in Tanzania, about 3.5 million years old, and the Australopithecus afarensis skeleton known as Lucy, which dates from over 3 million years ago. So, if bipedalism was the main factor contributing to the lowering of the larynx, the L-shaped vocal tract must also have taken shape relatively early.

The skeleton of Lucy (Australopithecus afarensis)

Footprints from Laetoli, attributed to Australopithecus afarensis


Skull of a three-million-year-old Australopithecus afarensis (Ethiopia)

[image sources: wikipedia, Paleoanthropology, EKPA]

This conflicts with a view widely held among researchers on the origins of language, namely that the lowering of the larynx (with its accompanying increased risk of choking) was a consequence of the development of more sophisticated language, not a precursor of it (in Darwinian terms, an adaptation to it). One can argue, however, that this prevailing view is in a sense a relic of a broader "brain-first" outlook on evolution, which broadly holds that the superior intelligence of humans evolved ahead of the major anatomical changes. That outlook was popular at the time when Piltdown Man was "discovered", with its humanlike skull and apelike jaw, and for as long as it was still regarded as a genuine fossil. Today it has been abandoned because of the evidence for the small skull size of the australopithecines and of early humans.

The Piltdown Man skull under examination, with a portrait of Darwin in the background. Pictured (from left): F. O. Barlow, G. Elliot Smith, Charles Dawson, Arthur Smith Woodward. Front row: A. S. Underwood, Arthur Keith, W. P. Pycraft, and Ray Lankester. Painting by John Cooke, 1915 (wikipedia)

The mention of skulls raises the possibility of drawing conclusions about language from the brains of hominids [the term is used here for creatures belonging to the genera Australopithecus or Homo]. Brain size tells us nothing specific (though we will return to the subject). But what about brain structure? If it could be shown that a region of the modern human brain associated exclusively with language was present in the brains of hominids of a particular date, we could reasonably conclude that those creatures had a capacity for language. But this line of reasoning faces three problems. First, since brain tissue does not fossilize, determining its structure depends on describing and interpreting the faint ridges and folds on the inside of skulls, or rather their copies on casts [endocasts, internal moulds of hollow objects] made from skulls. The region thought to be most closely associated with the comprehension and articulation of speech in modern humans is Broca's area; but identifying a corresponding area in hominids has proved a highly contentious undertaking (Falk 1992). Second, no area of the brain, not even Broca's area, seems to be associated with language and nothing else. Third, Broca's area seems to have little or nothing to do with vocalization in monkeys, so that even if a corresponding area can be shown to exist in a particular hominid, its function there may not have been linguistic. The implications of "brain-language coevolution", as Terrence William Deacon calls it (Deacon 1997), remain frustratingly unclear.

Some researchers have linked language with the evolution of handedness [the unequal distribution of fine motor skills between the two hands], which is far more strongly developed in humans than in other animals (Bradshaw and Rogers 1992, Corballis 1991). In most people the dominant hand is the right one, controlled by the left side of the brain, where the language areas are usually located. The idea that this shared location is more than mere coincidence is attractive. If it is correct, we could draw linguistic conclusions from ingenious tests carried out on fossil stone tools in order to determine whether the people who made them were or were not predominantly right-handed. On the other hand, the link between language and handedness is anything but clear-cut: left-handedness neither presupposes nor entails dominance of the right side of the brain for language (Noble and Davidson 1996). Moreover, even if indications of a clear predominance of right-handers in some group of hominids are taken as strong evidence of a capacity for language, they tell us nothing at all about the nature of that capacity.

Turning from biology to culture, common sense would suggest that if there had been a relatively sudden leap in the sophistication of human linguistic behaviour, it would have left direct traces in the archaeological record, in the form of a corresponding leap in the sophistication of preserved artefacts (tools, ornaments and artworks). Did anything of the kind happen, and when? There is indeed a marked increase in the variety and quality of tools found in Europe and Africa from about 40,000 years ago, followed by the famous cave paintings of Lascaux and elsewhere, from 30,000 years ago. But this date is considerably later than the one estimated for the full development of modern language, since it coincides with, or is even more recent than, the latest plausible date for the settlement of Australia. On the basis of evidence of this kind, some scholars have not hesitated to argue that language developed "late"; on closer examination, however, it usually turns out that what they mean by the term language is the conscious use of symbols rather than what linguists understand by it. In addition, there is scattered but intriguing evidence of cultural behaviour many thousands of years earlier, such as burial pits, incised bones and the use of red ochre pigment for body decoration. What these may imply for language is not clear, but it may be significant that some of the dates involved are not so far removed from a landmark suggested by the genetic evidence discussed below.

Genetic Evidence

Since the late 1970s, molecular genetics has provided access to entirely new techniques for assessing the relationship of humans to one another and to other primates. (The finding that only about 5 million years separate us from the ancestor we share with chimpanzees comes from genetic evidence.) In the 1950s it became known that the information that differentiates one individual genetically from others (apart from a possible identical twin) is carried by the DNA in the chromosomes of every cell in the body. Geneticists can now compare individuals and groups in terms of the proportion of DNA that they share. Moreover, they can do this not only for the DNA in the cell nucleus, which is inherited from both parents, but also for the DNA in the cell's mitochondria (some of the so-called organelles that the cell contains in addition to its nucleus). What matters about mitochondrial DNA is that it is inherited from the mother alone. It follows that the only possible reason for any difference between the mitochondrial DNA of two people is inaccurate inheritance due to mutation; for, without that inaccuracy, both would have exactly the same mitochondrial DNA as their most recent common ancestor in the female line. So, on the assumption that mutations in DNA occur at a constant rate, the extent of the difference between the DNA of two individuals is an index of the number of generations separating them from the most recent woman from whom both are descended through her daughters, her daughters' daughters, and so on.
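The molecular-clock reasoning in the paragraph above can be sketched as a small calculation. This is only an illustrative sketch, not the statistical method used in the genetic studies: the function names, the mutation rate, the number of sites compared and the generation length are all hypothetical values chosen for the example. It assumes, as the text does, a constant mutation rate, and additionally assumes that the two maternal lineages accumulate mutations independently, so that the expected number of differences grows at twice the per-lineage rate.

```python
def generations_since_common_ancestor(differences, sites_compared,
                                      mutations_per_site_per_generation):
    """Estimate generations back to the most recent common maternal ancestor.

    Assumes a constant mutation rate and two independently mutating lineages,
    so expected differences = 2 * rate * sites * generations.
    All inputs here are hypothetical illustration values.
    """
    if sites_compared <= 0 or mutations_per_site_per_generation <= 0:
        raise ValueError("sites and mutation rate must be positive")
    expected_differences_per_generation = (
        2 * sites_compared * mutations_per_site_per_generation
    )
    return differences / expected_differences_per_generation


def years_since_common_ancestor(generations, years_per_generation=25.0):
    """Convert generations to years using an assumed generation length."""
    return generations * years_per_generation


if __name__ == "__main__":
    # Made-up numbers: 40 differing sites out of 1,000 compared,
    # with an assumed rate of 2.5e-6 mutations per site per generation.
    gens = generations_since_common_ancestor(40, 1000, 2.5e-6)
    print(round(gens), "generations,",
          round(years_since_common_ancestor(gens)), "years")
```

The point of the sketch is only the proportionality: more differences between two people's mitochondrial DNA imply a more distant common maternal ancestor, and any date obtained this way is only as good as the assumed rate and generation length.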

This technique was used to locate in space and time the most recent female ancestor from whom all living humans are descended (Cann et al. 1987). With the help of elaborate statistical methods, it is estimated that this woman lived about 200,000 years ago in Africa, and for that reason she was dubbed African Eve (or Mitochondrial Eve). Both the African location and the date agreed closely with the "out of Africa" scenario for early Homo sapiens, which had been proposed independently by some archaeologists, so the two theories supported each other. The nickname Eve is catchy but also unfortunate, because it implies that, apart from Eve's male mate or mates, none of her contemporaries has even one living descendant today. That is a fallacy; what one can claim is that every human alive today who is descended from a female contemporary of Eve must be linked to that contemporary through at least one male ancestor. Nevertheless, the argument of Cann and her colleagues does indicate that there was a population bottleneck in relatively recent human prehistory, such that most of the humans who were alive about 200,000 years ago, scattered over large areas of Africa, Europe and Asia, left no living descendants. Why might that have happened?

Many researchers have yielded to the temptation to suggest that what set Eve's community apart, the characteristic that allowed its descendants to outstrip other humans and discouraged interbreeding with them, must have been its newly acquired linguistic abilities. This is no more than a guess, however. Rebecca L. Cann herself later mentioned one of many alternative possibilities, namely an infectious disease (Cann et al. 1994). But the possible link with the evolution of language was popularized by the Italian geneticist Luigi Luca Cavalli-Sforza (1995) and the American linguist Merritt Ruhlen (1994), whose purported reconstructions of the vocabulary of the first humans, if genuine, would have to date from roughly Eve's time. This view, however, rests on an ambiguity in the term "mother tongue". Even if, for the sake of argument, it were possible to reconstruct the most recent language ancestral to all modern languages, it would be pure coincidence if that ancient language (the mother tongue in the historical linguistic sense) were also the first linguistic variety with fully modern characteristics (the mother tongue in the biological sense). So once again we are faced with evidence which, however tantalizing, does not lead to any firm conclusion.

Luigi Luca Cavalli-Sforza (wikipedia)

Merritt Ruhlen (wikipedia)

Through genetic drift or selection, the female lineage can be traced back to a single female, such as Mitochondrial Eve (wikipedia)

Evidence from Primatology

None of the living primates other than humans can speak. However, three current areas of primate research may shed light on the evolution of language. These concern vocal call systems, cognitive abilities (in particular the understanding of social relationships), and the results of experiments in teaching apes sign language and artificial signalling systems.

Vocal call systems

A few decades ago it was generally accepted that the cries of all animals, including apes and monkeys, exclusively reflected physical or emotional states such as pain, fear, hunger or sexual desire. On this view, the part of the human vocal repertoire that the primate vocal system seemed most to resemble was the part consisting of involuntary sounds, such as cries of pain, laughter or grief. No linguist was willing to contemplate any intermediate evolutionary link. Moreover, the animals' "vocabularies" were thought to lack the essential element of their human counterparts: calls that identify particular things or classes of objects in the outside world. Given this view, animal calls were easily dismissed as irrelevant to human language. However, students of animal behaviour gradually became uneasy with this assumption, and some of them devised a particularly elegant and convincing way of testing it systematically.

In the 1970s and 1980s, Dorothy Cheney and Robert Seyfarth spent years studying the behaviour of vervet monkeys [Chlorocebus pygerythrus, a species of the family Cercopithecidae, the only surviving family of the superfamily Cercopithecoidea] in their natural habitat, the Amboseli National Park reserve in Kenya.

Chlorocebus pygerythrus vervet Photo credit: Anne Zeller Primate Info Net Wisconsin Primate Research Center (WPRC) Library University of Wisconsin-Madison

Amboseli National Park (wikipedia)

These small monkeys utter distinct alarm calls for different kinds of predator, chiefly leopards, snakes and eagles, each of which calls for a different kind of evasive action: they run up trees to escape leopards, scan the ground around them to avoid snakes, and hide in bushes to get away from eagles. Apparent reference of this kind had been observed before, and not only in vervets; but that observation did not shake the general conviction among zoologists and linguists that animal cries were essentially emotional or affective rather than referential. Put simply, a monkey's call for an eagle was to be interpreted as linked not to something in the outside world ("Look, an eagle!") but rather to its own internal state ("I feel eagle-fear" or "I feel the urge to hide in the bushes"). Of course, if one monkey gave the eagle call the others would take action too, but that could simply be because those others had noticed the eagle for themselves and so, one might say, experienced the same emotion.

Cheney and Seyfarth showed this interpretation to be wrong by means of a crucial experiment. They tape-recorded the alarm calls and played them back from hidden loudspeakers while the relevant predators were absent. If the traditional interpretation were correct, one would predict that the animals would not take evasive action in response to the bogus calls. They might look around in search of the corresponding predator but, failing to spot one, would not experience the fear reaction and so would do nothing. What Cheney and Seyfarth found, however, was that the animals reacted to the sounds from the loudspeakers as if they were genuine, taking the appropriate evasive action. The call on its own triggered the action, not the emotion or physical state brought about by the sight of a predator. Alarm calls therefore really do carry referential information about the environment, on which the monkeys can act appropriately, and to that limited extent they resemble the words of a human language.

A second respect in which human language might be said to differ from animal cries is that only human language can be unreliable. If an animal's call is an automatic response to some emotional or physical stimulus, its reliability is in a sense guaranteed. Humans, on the other hand, can tell lies or make mistakes. But Cheney and Seyfarth showed that in this respect too the gap between the monkeys' alarm calls and human language is smaller than was formerly thought. The animals' use of these calls is not entirely innately determined; for example, young monkeys will often give the eagle call even when what they have seen in the sky is not an eagle, and perhaps not even a bird but something like a falling leaf. Adult monkeys, for their part, react differently to the calls of youngsters. Instead of taking immediate evasive action, as they would if they had heard an adult's call, they first check for themselves whether the relevant predator is present and, if it is not, they ignore the call. The young monkeys, moreover, seem to refine their innate repertoire of vocal reactions into accurate warnings by observing when their calls prompt a corresponding response and when they are ignored.

These observations suggest that, for the monkeys, their calls have some content independent of their physical or emotional state. Cheney and Seyfarth also managed to show that, in judging the reliability of a call that it hears, a monkey goes beyond merely recognizing the caller. It is clear that they can distinguish the voices of individuals, because when a young monkey utters a distress cry the adults who hear it turn to look at its mother, as if expecting her to react. Cheney and Seyfarth compared responses to recorded voices of different individuals for a variety of calls. In the absence of any real danger, listeners become habituated to, and consequently ignore, recorded eagle alarm calls in the voice of monkey A, but they will still react to alarms in the voice of monkey B. Yet even when monkey A's voice has become familiar in this way, they will not ignore a recorded call of a different kind from the same monkey (say, one from their vocal repertoire relating to individual or group behaviour). Evidently they can distinguish the matters on which another monkey is a reliable witness from those on which it is not.

Of course, the monkeys' call system has no grammatical organization at all resembling that of human language, and the same is true of the vocal systems of all other primates. Nevertheless, the observations reported above, along with others, tend to show that the differences between primate vocalizations and human language are not as vast as was once supposed, and they therefore weaken the case for denying any evolutionary connection between them.

Cognitive abilities

Long-term studies of primate groups in their natural habitat show that they know a great deal more about themselves, about other members of their species and about their environment than was formerly thought possible. In particular, they can distinguish kin from non-kin, and by remembering past interactions (who did what to whom) they can tell allies from enemies. This is relevant to language inasmuch as a fundamental characteristic of language is its ability to represent grammatically the roles of the participants in a situation. For example, the sentence "John gave Mary a banana" represents an event in which John is the agent, Mary the goal, and the banana the patient or theme in an act of giving. In terms of semiotic analysis, and specifically of semantics, such a set of relationships between the participants in a situation is called a thematic structure or argument structure. The higher primates do not produce sentences, but they clearly have the capacity to represent mentally the thematic structures that underlie the meaning of sentences. To that extent they have evolved towards a stage of cognitive readiness for acquiring a language.

One of the Rubicons that have been claimed to separate humans from other animals is that, while the latter may have procedural knowledge ("knowing how"), only humans have access to propositional knowledge ("knowing that"). If this is true, it is tempting to see propositional knowledge as a prerequisite for language. In trying to assess whether the reasoning is correct, however, one immediately runs the risk of circularity. If propositional knowledge simply means "knowledge of a kind that can only be represented in the form of a sentence", then it is no surprise that it should be restricted to those who "use sentences", that is, to humans; but then to say that it is "absent in animals" amounts to no more than saying that animals "lack language". If, on the other hand, propositional knowledge is defined so as to be logically independent of language, for example in terms of thematic structure, it is by no means so clear that this Rubicon exists.

At least two further considerations support the idea that primates have access to "knowing that". One is the extent to which, in admittedly artificial laboratory conditions, chimpanzees can acquire and display an awareness of abstract concepts such as "same" and "different", and apply them on the basis of a range of criteria such as colour and size. The other is the fact that primates can apparently engage in deception, or display what is called Machiavellian intelligence. In interpreting Machiavellian behaviour it is of course necessary to guard against over-enthusiastically attributing traits of human personality to animals. Even so, this behaviour suggests that primates are able to conceive of situations that do not exist, that is, to think in an abstract, propositional way, and this strengthens the case for seeking precursors of language in other species.

Social relationships among primates are both more complex and less stereotyped than those of other mammals, and it has been suggested that social factors may have outweighed communicative ones in driving the evolution of language. Robin Dunbar and his colleagues, in various studies, have drawn attention to the relationship between group size, brain size and social grooming in various primate species. Grooming of one animal by another (cleaning, preening, delousing, combing and so on) is important for strengthening the cohesion of groups; on the other hand, the time devoted to it increases exponentially as the group grows, thereby limiting the time available for other essential tasks such as gathering food. Dunbar argues that language offered a way out of this dilemma: as a kind of vocal grooming, with the advantage that it allows one individual to groom many others at once. Traces of this original function can be seen in the extent to which, even today, language is used for gossip and for strengthening social relationships, rather than for the more abstract, representational and informative purposes that tend to interest grammatical theorists and philosophers.

Sign language experiments

Apes do not have a vocal tract suited to speech, but their arms and hands are quite capable of forming the signs of the language of the deaf, that is, sign language. In the 1970s great excitement was caused by experiments that credited chimpanzees with the ability to learn American Sign Language, with the implication that language could no longer be regarded as an exclusively human attribute. Linguists in general strongly denied that the sequences of signs produced by chimpanzees such as Washoe and Nim could be regarded as genuine syntactic combinations or compound words, pointing out that they never approached the variety and complexity of the sign language that humans use fluently. The chimpanzees' supporters, for their part, claimed that the kinds of sign combination the animals produced were almost identical to the word combinations produced by human infants at the two-word or telegraphic stage of language learning, so that if what the chimpanzees were doing was not indicative of language, then one could not call infants' telegraphic speech a manifestation of language either. In the present context, the issue is not whether the signing behaviour of chimpanzees and other apes deserves to be called linguistic, but whether that behaviour sheds any light on the evolution of language.

Washoe (c. September 1965 – October 30, 2007) was a chimpanzee who was the first non-human to learn to communicate using American Sign Language (wikipedia)

Nim Chimpsky (November 19, 1973 – March 10, 2000) was a chimpanzee who was the subject of an extended study of animal language acquisition (codenamed 6.001) at Columbia University, led by Herbert S. Terrace; the linguistic analysis was led by the psycholinguist Thomas Bever. (wikipedia)

Thomas G. Bever (born December 9, 1939) is a Regent’s Professor of Psychology, Linguistics, Cognitive Science, and Neuroscience at the University of Arizona. He has been a leading figure in psycholinguistics, focusing on the cognitive and neurological bases of linguistic universals, among other pursuits. (wikipedia)

Kanzi (born October 28, 1980), is a male bonobo who has been featured in several studies on great ape language. According to Sue Savage-Rumbaugh, a primatologist who has studied the bonobo throughout his life, Kanzi has exhibited advanced linguistic aptitude. (wikipedia)

One consequence of the ape language experiments was the revival of an older theory according to which human language may have begun with gestures and only later shifted to the vocal channel (Armstrong et al. 1995). Just as apes can sign without having a human vocal tract, so our australopithecine ancestors could have communicated by signs before their vocal tracts developed and became capable of the modern kind of speech. A major attraction of this proposal has always been that it seems to offer a stopgap solution to the problem of how humans learned to handle the arbitrary relationship between words and meanings. The apparent solution lies in the fact that many signs in American Sign Language and other sign languages are motivated (iconic) rather than arbitrary (symbolic); that is, they resemble or recall in some way their referents in the external world, while many other signs were once much more clearly motivated than they are today. The proportion of iconic signs in sign language vocabularies is far greater than that of iconic (onomatopoeic) words in the vocabularies of spoken languages. These motivated handshapes could have formed a scaffolding, so to speak, that eased the harder task of mastering abstract signs, whether made with the hands or with the voice. But the attraction of this line of argument vanishes as soon as one remembers that the vocabulary of monkey vocal calls is just as symbolic as most words of human language. The calls for eagle, leopard and snake do not resemble in the slightest the sounds made by those animals. Consequently, even if one regards the use of symbolic signs as a communicative Rubicon, it is a Rubicon that has been crossed by a variety of nonhuman species with clearly referential call vocabularies, and was almost certainly crossed by our early ancestors long before the appearance of the hominids.

More relevant to the evolution of language, perhaps, is what can be gleaned from observation of the bonobo (or pygmy chimpanzee) Kanzi (Savage-Rumbaugh et al. 1993, Savage-Rumbaugh and Lewin 1994). Sue Savage-Rumbaugh began training Kanzi's mother both in sign language and in the use of a keyboard of abstract lexigrams, while the infant Kanzi was left to play and to watch undisturbed whatever was going on. The mother turned out to be an unpromising pupil; Kanzi, on the other hand, spontaneously developed a form of communication involving gestures and lexigrams, while also displaying a remarkable ability to understand spoken English. His comprehension was in fact somewhat better than that of the two-year-old daughter of one of Savage-Rumbaugh's colleagues, at least within a deliberately restricted range of syntactic constructions.

Savage-Rumbaugh (wikipedia)

Yerkish lexigram representing Savage-Rumbaugh. Savage-Rumbaugh is a developer of the language (wikipedia)

Lexigram describing Kanzi (wikipedia)

Bonobos Kanzi and Panbanisha with Sue Savage-Rumbaugh (wikipedia)

Savage-Rumbaugh argues that Kanzi shows rule-governed use of signs and lexigrams, and that anyone who wishes may call this system of rules a syntax. It seems excessive, however, to conclude that the difference between Kanzi's syntax and that of human languages lies only in degree of complexity and not in kind. Of the two rules that Kanzi invented, rather than simply copying them from human usage, one (lexigram precedes gesture) clearly has no human counterpart, while the other (one action precedes another, in an order matching the sequence of events, as with hiding and chasing or tickling and biting) can be interpreted as purely semantic or pragmatic rather than syntactic. Moreover, Kanzi's utterances are nearly all too short to permit clear identification of phrases or clauses comparable to those of human language. We might conclude, more conservatively, that Kanzi may indeed have invented some kind of rudimentary syntax, but one that cannot be compared directly with the syntax of human languages. The task for the researcher of language evolution is therefore to account for the differences between what the bonobo does and what humans do.

Neurobiological evidence

To investigate the relationship between language and the brain systematically, one would need to carry out surgical experiments of an ethically unthinkable kind. Our knowledge must therefore be gathered in a relatively haphazard fashion, from the linguistic behavior of people suffering from brain damage caused by an accident or a cerebral hemorrhage. This approach falls short of the ideal because the extent of the damage is of course not subject to any experimental control and can be determined only indirectly, through methods such as magnetic resonance imaging (MRI), which resembles X-ray imaging but is far more detailed, and positron emission tomography (PET), which measures moment-to-moment changes in blood flow. It is also possible, with the patient's consent, to test the effects on language of stimulating areas of the brain during surgical operations aimed at controlling epilepsy (Calvin and Ojemann 1994). It is not surprising that the literature on such studies is somewhat confusing. Nevertheless, it suggests answers (though by no means conclusive ones) to two big questions about the evolution of language. The first concerns the relative priority of the vocal and gestural channels for language. The second concerns the extent to which syntax is an outgrowth of a more general increase in the complexity with which hominids mentally represented the world, including social relationships, and the extent to which it is instead an offshoot of some more specific development, such as more refined toolmaking, more accurate stone-throwing or greater fluency in articulation.

Before considering these big questions, it is worth emphasizing that the relationship between particular functions and particular areas of the brain is not clear-cut and fixed, either in individuals or in the species as a whole. Exercising a finger can enlarge the area of cerebral cortex devoted to controlling it, and in many blind people the cortical areas controlling the fingers are larger than average. This functional plasticity is especially evident in early infancy, so that a young child who suffers extensive damage to the left hemisphere (where control of language is generally located) may nevertheless develop considerable linguistic ability controlled by the right hemisphere. Indeed, without such plasticity and scope for functional overlap, it is hard to see how language could have evolved at all, since its evolution involves assigning new roles to brain areas that originally served other functions.

The area of the brain that seems most clearly implicated in the regulation of grammar is Broca's area, in the frontal lobe of the left hemisphere. Given the scope for overlap of functions, it seems reasonable to predict that if language was originally gestural, Broca's area would lie relatively close to the area through which the brain controls hand movements; but this is not the case. Control of bodily movements is located in the so-called motor strip, just in front of the central sulcus or Rolandic fissure, which separates the frontal lobe from the parietal lobe. Broca's area does indeed lie close to the motor cortex (in the premotor area), but it lies closest to the part that controls not the hands but rather the tongue, jaw and lips. Moreover, essentially the same region as Broca's area seems to be involved in the grammar of sign language, even among people deaf from birth, to the same degree as in the grammar of spoken language (Poizner et al. 1987).

The Brain’s Language Centers-http://www.rhsmpsychology.com/Handouts/brain_and_language.htm

The lobes of the brain (wikipedia)

Conceivably, the area of grammatical control could have migrated, so to speak, as the dominant linguistic channel shifted from gestural to vocal. Since, however, the present location of Broca's area does not prevent it from playing its role in sign language, a hypothetical language area close to the hand area of the premotor cortex could most probably have retained its original control of grammar even when the vocal channel succeeded the gestural one. It therefore seems more likely that the linguistic function exercised by Broca's area has not moved, and that its present location in the brain reflects the fact that human language has always been predominantly vocal.

Damage to Broca's area affects grammar, as well as the articulation of speech, much more than vocabulary. Broca's aphasics can generally produce appropriate nouns, adjectives and verbs for what they are trying to say; what troubles them is the task of organizing these coherently into well-formed sentences with the appropriate grammatical words (such as determiners, particles, auxiliaries and so on). A complementary kind of aphasia, involving fluent grammar but inappropriate or incoherent vocabulary, is associated with damage to another part of the left hemisphere, in a region of the temporal lobe and part of the parietal lobe known as Wernicke's area. In Wernicke's aphasics the grammatical equipment for describing the world remains intact, but access to the concepts for organizing their experience of it is disrupted (to the extent that one can equate concepts with words). Wernicke's aphasia is therefore problematic for any suggestion that conceptual relationships, such as the thematic structures mentioned earlier, were not merely a prerequisite for the evolution of syntax but rather its trigger. On that hypothesis, one would expect lexical and grammatical disruption to be inextricably linked rather than to occur independently. So, in answer to our second question, the characteristics of Wernicke's aphasia suggest that something more specific than a general increase in conceptual complexity was needed for syntax to evolve as it actually did.

Various proposals have been made about this special ingredient. Some scholars have invoked the hierarchical organization of relatively complex behaviors involving tools (e.g. Greenfield 1991). William H. Calvin (1993) has pointed to the neurobiological developments needed to control the muscles in precisely coordinated movement, and has proposed that the relevant neural structures may have been recruited for the rapid and effortless syntactic organization of words in speech. However, such approaches do not take account of the closeness of Broca's area to the part of the motor cortex that specifically controls the mouth. A hypothesis that exploits this proximity will be examined below.

Linguistic evidence

It may seem paradoxical that a section devoted to the linguistic evidence for the origins of language should be left until last. However, as explained in the first part, linguists are relative newcomers to this field. On the basis of their contributions, they can be divided into those who have focused on the relationship between language and protolanguage and a more recent, heterogeneous group concentrating on the evolutionary logic behind particular aspects of modern grammatical organization.

Protolanguage and true language