#Deployment image servicing and management


Text

Imagine finally going on your laptop to work on your project that is rapidly becoming due because u beat the executive dysfunction and finding ALL your files deleted (art, apps, sentimental screenshots etc)

And then while you’re fixing your dad’s dumbass mistake file explorer itself corrupts 😞

#FORTUNATELY with my son Dism (the Deployment Image Servicing and Management tool) I was able to fix it 😌#Which reminds me of when this laptop had its usb and camera functions corrupted too#That was a whole affair that involved me having a separate laptop for a while but the most humiliating thing#was after I came back to this one and had it factory reset my dad said it was from a bad double image#and I’m like 😭😭😭 we could’ve used the DISM… like my beloved head child#Ok real talk the nature of how Dism’s name came about is going to have to come out sooner or later#I was planning on taking it with me to the grave but Dolphin consider this a treat for us becoming so close#It’s extremely embarrassing but it’s also 100% the truth#Dism and Archus have their names taken from a persona YouTube channel I used to watch obsessively…..#like the channel name literally is their two names smooshed together in that order#The funny thing is as I got older I learnt that those names were actually the names of the YouTuber’s OCs themselves 😅#They REALLY were meant to be placeholder names but when the time came I really couldn’t find anything that suited#Dism better than his non-name 😅 it’s too fitting to the story and his character even now. especially now.#And I really love the dichotomy between the warm and loved Archie vs the cast-off broken Archus it’s so good for him too#And as a side-note. My Dism is the superior Dism ✨#just pav things

2 notes


Text

#AI Factory#AI Cost Optimize#Responsible AI#AI Security#AI in Security#AI Integration Services#AI Proof of Concept#AI Pilot Deployment#AI Production Solutions#AI Innovation Services#AI Implementation Strategy#AI Workflow Automation#AI Operational Efficiency#AI Business Growth Solutions#AI Compliance Services#AI Governance Tools#Ethical AI Implementation#AI Risk Management#AI Regulatory Compliance#AI Model Security#AI Data Privacy#AI Threat Detection#AI Vulnerability Assessment#AI proof of concept tools#End-to-end AI use case platform#AI solution architecture platform#AI POC for medical imaging#AI POC for demand forecasting#Generative AI in product design#AI in construction safety monitoring

0 notes

Text

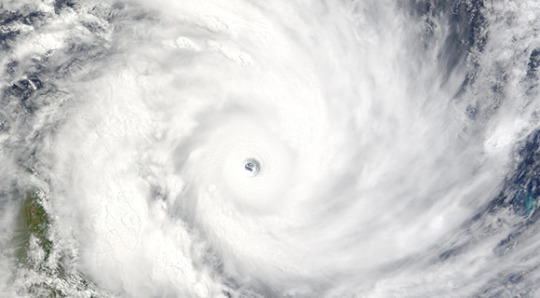

Hurricane Milton Resources, Emergency Contacts, and Recovery Assistance

Hurricane Milton is making landfall in Florida, and residents across the state must prepare for the potential devastation it could bring. With forecasts predicting high winds, torrential rain, and widespread flooding, Hurricane Milton could leave communities struggling to rebuild.

New Image Roofing Atlanta gathered information about Hurricane Milton, the damage and devastation it will likely leave in its path, valuable emergency resources, and what New Image Roofing has invested to assist the urgent upcoming recovery efforts.

New Image Roofing Florida 352-316-6008 is ready to assist residents and businesses with roofing and recovery needs. Below is a breakdown of the potential risks, necessary resources, and emergency contacts to help Floridians navigate this challenging time.

Potential Devastation from Hurricane Milton

Hurricane Milton’s impact on Florida could be catastrophic. Forecasts show a Category 4 storm, and officials urge everyone to prepare for the worst. The potential damage from this hurricane could include:

Winds up to 150 mph – These extreme wind speeds can tear roofs off homes and businesses, uproot trees, and snap power lines. Flying debris could cause significant property damage and put lives at risk.

Torrential rainfall and flooding – Milton is expected to dump up to 20 inches of rain in certain areas, leading to flash flooding in low-lying regions. Coastal areas face the added threat of storm surge, which could inundate homes and infrastructure.

Watch this video to grasp the dangers of storm surge (a storm surge of 15 feet is expected with Hurricane Milton).

youtube

Power outages – Downed power lines will likely cause widespread outages. These outages may last days or weeks, leaving communities without access to essential services.

Tornadoes – Hurricane Milton’s powerful system could spawn tornadoes, particularly in the eastern parts of the state, causing additional destruction.

Watch this video to see Hurricane Milton’s approach to Florida’s west coast.

youtube

New Image Roofing Florida’s Response

New Image Roofing Florida has a strong history of helping communities recover after hurricanes. The company is prepared to assist with Hurricane Milton’s aftermath. As part of their commitment to helping Florida rebuild, New Image Roofing teams will be deployed to the most affected regions as soon as it is safe to begin repairs.

Rapid Deployment – New Image Roofing Florida teams are on standby, ready to travel to hurricane-affected areas to begin emergency repairs. Their teams specialize in patching damaged roofs, installing temporary tarps, and providing long-term roofing solutions.

NEW IMAGE ROOFING FLORIDA 352-316-6008

Residential and Commercial Assistance – New Image Roofing Florida is equipped to handle residential and commercial properties. Their priorities are to rapidly secure buildings, prevent further water damage, and help businesses reopen quickly.

Free Inspections and Estimates – The company offers free roof inspections and damage estimates for all affected Floridians.

Experienced Hurricane Recovery Teams – With years of experience handling the aftermath of powerful storms, New Image Roofing Florida will work efficiently to secure homes, schools, businesses, and critical infrastructure.

Federal and State Resources

In the wake of Hurricane Milton, Floridians will rely on various state and federal agencies to provide essential services. Below is a list of important contacts and resources for emergency assistance, shelters, and recovery support:

Federal Emergency Management Agency (FEMA)

Website: fema.gov

Phone: 1-800-621-FEMA (3362)

Services: FEMA provides disaster relief assistance, including temporary housing, emergency financial aid, and infrastructure repair.

American Red Cross

Website: redcross.org

Phone: 1-800-RED-CROSS (733-2767)

Services: The Red Cross offers shelter, food, and medical support during and after disasters.

Florida Division of Emergency Management (FDEM)

Website: floridadisaster.org

Phone: 850-815-4000

State Assistance Emergency Line: 1-800-342-3557

Florida Relay Service: Dial 711 (TDD/TTY)

Services: FDEM coordinates state-wide emergency response, disaster recovery, and evacuation orders.

New Image Roofing Florida

Website: newimageroofingfl.com

Phone: 352-316-6008

Services: New Image Roofing Florida provides full-service emergency roof inspections, patches damaged roofs, installs temporary tarps, and provides long-term roofing solutions. The company will also coordinate and attend adjuster meetings with your insurance agency.

Florida Power & Light (FPL)

Website: fpl.com

Phone: 1-800-468-8243

Services: FPL provides power outage reporting and updates on restoration timelines.

National Flood Insurance Program (NFIP)

Website: floodsmart.gov

Phone: 1-888-379-9531

Services: NFIP provides information about flood insurance policies and assistance with claims after flood damage.

Florida Department of Transportation (FDOT)

Website: fdot.gov

Phone: 1-850-414-4100

Services: FDOT manages road closures and traffic conditions. They provide real-time updates about safe evacuation routes and road repairs after a storm.

Local Florida County Emergency Services

Each Florida county has emergency management teams coordinating shelters, first responders, and relief efforts. Check your county’s website for specific contact numbers and resources. At-risk counties include:

Charlotte, Citrus, De Soto, Flagler, Glades, Hardee, Hernando, Hillsborough, Manatee, Pasco, Pinellas, Sarasota, Sumter

Visit the website of WUSF (West Central Florida’s NPR station) for valuable local information, emergency shelter details, and guidance.

Website: wusf.org

Hurricane Season Risks and Preparedness

Florida’s hurricane season runs from June 1 to November 30. Hurricane Milton is hitting just as the state braces for more potential storms. The danger doesn’t end when the hurricane passes. After a storm like Milton, communities are left vulnerable to future weather events. The risk of another hurricane striking Florida before the recovery from Milton is complete remains high.

Weakening Infrastructure – After Milton, homes and businesses will be more susceptible to damage from weaker tropical storms or hurricanes. Unrepaired roofs and weakened structures could collapse or fail under minimal pressure.

Flooding Risks – Milton’s heavy rainfall and storm surge will saturate the ground and fill waterways. This will leave communities vulnerable to even small rain events, with the potential for additional flooding.

Power Restoration Delays – With Milton causing widespread outages, the power grid may remain unstable for weeks, making it difficult for residents to recover fully before the next storm hits.

Preparing for Future Storms – Residents must begin making plans now for the rest of hurricane season. Stock up on supplies, make sure your property is secure, and stay informed about future weather developments.

Additional Tips for Hurricane Preparedness

To ensure the safety of yourself and your loved ones, follow these guidelines when preparing for a hurricane:

Evacuate if Ordered – Listen to local officials and immediately evacuate if you are in an evacuation zone. Delaying could put your life at risk.


Secure Your Property – Install hurricane shutters, trim trees, and secure outdoor items. Consider having your roof inspected by New Image Roofing before the storm hits.

Prepare a Disaster Kit – Include essentials like water, food, medications, flashlights, batteries, and important documents.

Stay Informed – Official sources like FEMA, FDEM, and the National Weather Service offer updates and information.

Read more about hurricane preparedness at newimageroofingatlanta.com/hurricane-preparedness-a-comprehensive-guide

Hurricane Milton Resources and Recovery

In this article, you discovered information about hurricane preparedness, potential severe damage to roofs and homes, post-hurricane emergency services and resources, and how to repair your home and roof after the storm.

Your awareness and preparedness for Hurricane Milton (and coming storms) will minimize damages and help you return to normal in the storm’s aftermath.

Lack of proactive measures and delayed action will leave you uninformed, in life-threatening situations, and severely challenged to get your home and roof repaired after a hurricane sweeps through your community.

New Image Roofing Florida – 352-316-6008

Sources:

fema.gov/disaster/current/hurricane-milton

climate.gov/news-features/event-tracker/hurricane-milton-rapidly-intensifies-category-5-hurricane-becoming

nhc.noaa.gov/refresh/graphics_at4+shtml/150217.shtml?cone

New Image Roofing Atlanta

2020 Howell Mill Rd NW Suite 232 Atlanta, GA 30318 (404) 680-0041

To see the original version of this article, visit https://www.newimageroofingatlanta.com/hurricane-milton-resources-emergency-contacts-and-recovery-assistance/

#emergency roof repair#residential roofing#residential roofer atlanta#Hurricane Milton#Hurricane Emergency Resources#Hurricane Milton Emergency#Hurricane#Youtube

34 notes


Text

B-2 Stealth Bomber Demos QUICKSINK Low Cost Maritime Strike Capability During RIMPAC 2024

The U.S. Air Force B-2 Spirit carried out a QUICKSINK demonstration during the second SINKEX (Sinking Exercise) of RIMPAC 2024. This marks the very first time a B-2 Spirit has been publicly reported to test this anti-ship capability.

David Cenciotti


File photo of a B-2 Spirit (Image credit: Howard German / The Aviationist)

RIMPAC 2024, the 29th in the series since 1971, sees the involvement of 29 nations, 40 surface ships, three submarines, 14 national land forces, over 150 aircraft, and 25,000 personnel. During the drills, two long-planned live-fire sinking exercises (SINKEXs) led to the sinking of two decommissioned ships: USS Dubuque (LPD 8), sunk on July 11, 2024; and the USS Tarawa (LHA 1), sunk on July 19. Both were sunk in waters 15,000 feet deep, located over 50 nautical miles off the northern coast of Kauai, Hawaii.

SINKEXs are training exercises in which decommissioned naval vessels are used as targets. These exercises allow participating forces to practice and demonstrate their capabilities in live-fire scenarios, providing a unique and realistic training environment that cannot be replicated through simulations or other training methods.

RIMPAC 2024’s SINKEXs allowed units from Australia, Malaysia, the Netherlands, South Korea, and various U.S. military branches, including the Air Force, Army, and Navy, to enhance their skills and tactics as well as validate targeting and live-firing capabilities against surface ships at sea. They also helped improve the ability of partner nations to plan, communicate, and execute complex maritime operations, including precision and long-range strikes.

LRASM

During the sinking of the ex-Tarawa, a U.S. Navy F/A-18F Super Hornet deployed a Long-Range Anti-Ship Missile (LRASM). This advanced, stealthy cruise missile offers multi-service, multi-platform, and multi-mission capabilities for offensive anti-surface warfare and is currently deployed from U.S. Navy F/A-18 and U.S. Air Force B-1B aircraft.

The AGM-158C LRASM, based on the AGM-158B Joint Air-to-Surface Standoff Missile – Extended Range (JASSM-ER), is the new low-observable anti-ship cruise missile developed by DARPA (Defense Advanced Research Projects Agency) for the U.S. Air Force and U.S. Navy. NAVAIR describes the weapon as a defined near-term solution for the Offensive Anti-Surface Warfare (OASuW) air-launch capability gap that will provide flexible, long-range, advanced, anti-surface capability against high-threat maritime targets.

QUICKSINK

Remarkably, in a collaborative effort with the U.S. Navy, a U.S. Air Force B-2 Spirit stealth bomber also took part in the second SINKEX, demonstrating a low-cost, air-delivered method for neutralizing surface vessels using the QUICKSINK. Funded by the Office of the Under Secretary of Defense for Research and Engineering, the QUICKSINK experiment aims to provide cost-effective solutions to quickly neutralize maritime threats over vast ocean areas, showcasing the flexibility of the joint force.

The Quicksink initiative, in collaboration with the U.S. Navy, is designed to offer innovative solutions for swiftly neutralizing stationary or moving maritime targets at a low cost, showcasing the adaptability of joint military operations for future combat scenarios. “Quicksink is distinctive as it brings new capabilities to both current and future Department of Defense weapon systems, offering combatant commanders and national leaders fresh methods to counter maritime threats,” explained Kirk Herzog, the program manager at the Air Force Research Laboratory (AFRL).

Traditionally, enemy ships are targeted using submarine-launched heavyweight torpedoes, which, while effective, come with high costs and limited deployment capabilities among naval assets. “Heavyweight torpedoes are efficient at sinking large ships but are expensive and deployed by a limited number of naval platforms,” stated Maj. Andrew Swanson, division chief of Advanced Programs at the 85th Test and Evaluation Squadron. “Quicksink provides a cost-effective and agile alternative that could be used by a majority of Air Force combat aircraft, thereby expanding the options available to combatant commanders and warfighters.”

Regarding weapon guidance, the QUICKSINK kit pairs a GBU-31/B Joint Direct Attack Munition’s existing GPS-assisted inertial navigation system (INS) guidance in the tail with a new radar seeker installed on the nose and an IIR (Imaging Infra-Red) camera mounted in a fairing on the side. When released, the bomb uses the standard JDAM kit to glide to the target area and the seeker/camera to lock on to the ship. Once lock-on is achieved, the guidance system directs the bomb to detonate near the hull below the waterline.

Previous QUICKSINK demonstrations in 2021 and 2022 featured F-15E Strike Eagles deploying modified 2,000-pound GBU-31 JDAMs. This marks the very first time a B-2 Spirit has been publicly reported to test this anti-ship capability. Considering a B-2 can carry up to 16 GBU-31 JDAMs, this highlights the significant anti-surface firepower a single stealth bomber can bring to a maritime conflict scenario.


F-15E Strike Eagle at Eglin Air Force Base, Fla. with modified 2,000-pound GBU-31 Joint Direct Attack Munitions as part of the second test in the QUICKSINK Joint Capability Technology Demonstration on April 28, 2022. (U.S. Air Force photo / 1st Lt Lindsey Heflin)

SINKEXs

“Sinking exercises allow us to hone our skills, learn from one another, and gain real-world experience,” stated U.S. Navy Vice Adm. John Wade, the RIMPAC 2024 Combined Task Force Commander, in a public statement. “These drills demonstrate our commitment to maintaining a safe and open Indo-Pacific region.”

Ships used in SINKEXs, known as hulks, are prepared in strict compliance with Environmental Protection Agency (EPA) regulations under a general permit the Navy holds pursuant to the Marine Protection, Research, and Sanctuaries Act. Each SINKEX requires the hulk to sink in water at least 6,000 feet deep and more than 50 nautical miles from land.

In line with EPA guidelines, before a SINKEX, the Navy thoroughly cleans the hulk, removing all materials that could harm the marine environment, including polychlorinated biphenyls (PCBs), petroleum, trash, and other hazardous materials. The cleaning process is documented and reported to the EPA before and after the SINKEX.

Royal Netherlands Navy De Zeven Provinciën-class frigate HNLMS Tromp (F803) fires a Harpoon missile during a long-planned live fire sinking exercise as part of Exercise Rim of the Pacific (RIMPAC) 2024. (Royal Netherlands Navy photo by Cristian Schrik)

SINKEXs are conducted only after the area is surveyed to ensure no people, marine vessels, aircraft, or marine species are present. These exercises comply with the National Environmental Policy Act and are executed following permits and authorizations under the Marine Mammal Protection Act, Endangered Species Act, and Marine Protection, Research, and Sanctuaries Act.

The ex-Dubuque, an Austin-class amphibious transport dock, was commissioned on September 1, 1967, and served in Vietnam, Operation Desert Shield, and other missions before being decommissioned in June 2011. The ex-Tarawa, the lead amphibious assault ship of its class, was commissioned on May 29, 1976, participated in numerous operations including Desert Shield and Iraqi Freedom, and was decommissioned in March 2009.

This year marks the second time a Tarawa-class ship has been used for a SINKEX, following the sinking of the ex-USS Belleau Wood (LHA 3) during RIMPAC 2006.

H/T Ryan Chan for the heads up!

About David Cenciotti

David Cenciotti is a journalist based in Rome, Italy. He is the Founder and Editor of “The Aviationist”, one of the world’s most famous and widely read military aviation blogs. Since 1996, he has written for major worldwide magazines, including Air Forces Monthly, Combat Aircraft, and many others, covering aviation, defense, war, industry, intelligence, crime and cyberwar. He has reported from the U.S., Europe, Australia and Syria, and flown several combat planes with different air forces. He is a former 2nd Lt. of the Italian Air Force, a private pilot and a graduate in Computer Engineering. He has written five books and contributed to many more.

@TheAviationist.com

12 notes


Text

NASA anticipates lunar findings from next-generation retroreflector

Apollo astronauts set up mirror arrays, or "retroreflectors," on the moon to accurately reflect laser light beamed at them from Earth with minimal scattering or diffusion. Retroreflectors are mirrors that reflect the incoming light back in the same incoming direction.

Calculating the time required for the beams to bounce back allowed scientists to precisely measure the moon's shape and distance from Earth, both of which are directly affected by Earth's gravitational pull. More than 50 years later, on the cusp of NASA's crewed Artemis missions to the moon, lunar research still leverages data from those Apollo-era retroreflectors.
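The timing measurement described above comes down to simple arithmetic: light covers the Earth-moon distance twice, so the one-way distance is the speed of light times the round-trip time, divided by two. The sketch below is only an illustration of that relationship. The function names, the 2.56-second round-trip time, and the 1 mm precision figure are assumptions chosen for the example, and real ranging analyses also correct for effects such as atmospheric delay, which are ignored here.

```python
# Speed of light in vacuum in metres per second (exact by SI definition).
C_M_PER_S = 299_792_458


def one_way_distance_m(round_trip_s: float) -> float:
    """Distance to the reflector given the measured round-trip time of a laser pulse."""
    return C_M_PER_S * round_trip_s / 2.0


def timing_resolution_s(range_precision_m: float) -> float:
    """Round-trip timing resolution needed to resolve a given one-way range precision."""
    return 2.0 * range_precision_m / C_M_PER_S


if __name__ == "__main__":
    # Assumed, illustrative round-trip time (not a real measurement).
    distance_km = one_way_distance_m(2.56) / 1000.0
    print(f"one-way distance: {distance_km:.0f} km")

    # Timing resolution needed for an assumed 1 mm range precision.
    print(f"timing resolution: {timing_resolution_s(1e-3):.2e} s")
```

The second function shows why millimeter-level ranging is demanding: resolving 1 mm of distance means resolving the round-trip time to a few picoseconds.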

As NASA prepares for the science and discoveries of the agency's Artemis campaign, state-of-the-art retroreflector technology is expected to significantly expand our knowledge about Earth's sole natural satellite, its geological processes, the properties of the lunar crust and the structure of the lunar interior, and how the Earth-moon system is changing over time. This technology will also allow high-precision tests of Einstein's theory of gravity, or general relativity.

That's the anticipated objective of an innovative science instrument called NGLR (Next Generation Lunar Retroreflector), one of 10 NASA payloads set to fly aboard the next lunar delivery for the agency's CLPS (Commercial Lunar Payload Services) initiative. NGLR-1 will be carried to the surface by Firefly Aerospace's Blue Ghost 1 lunar lander.

Developed by researchers at the University of Maryland in College Park, NGLR-1 will be delivered to the lunar surface, located on the Blue Ghost lander, to reflect very short laser pulses from Earth-based lunar laser ranging observatories, which could greatly improve on Apollo-era results with sub-millimeter-precision range measurements.

If successful, its findings will expand humanity's understanding of the moon's inner structure and support new investigations of astrophysics, cosmology, and lunar physics—including shifts in the moon's liquid core as it orbits Earth, which may cause seismic activity on the lunar surface.

"NASA has more than half a century of experience with retroreflectors, but NGLR-1 promises to deliver findings an order of magnitude more accurate than Apollo-era reflectors," said Dennis Harris, who manages the NGLR payload for the CLPS initiative at NASA's Marshall Space Flight Center in Huntsville, Alabama.

Deployment of the NGLR payload is just the first step, Harris noted. A second NGLR retroreflector, called the Artemis Lunar Laser Retroreflector (ALLR), is currently a candidate payload for flight on NASA's Artemis III mission to the moon and could be set up near the lunar south pole. A third is expected to be manifested on a future CLPS delivery to a non-polar location.

"Once all three retroreflectors are operating, they are expected to deliver unprecedented opportunities to learn more about the moon and its relationship with Earth," Harris said.

Under the CLPS model, NASA is investing in commercial delivery services to the moon to enable industry growth and support long-term lunar exploration. As a primary customer for CLPS deliveries, NASA aims to be one of many customers on future flights. NASA's Marshall Space Flight Center in Huntsville, Alabama, manages the development of seven of the 10 CLPS payloads carried on Firefly's Blue Ghost lunar lander.

IMAGE: Next Generation Lunar Retroreflector, or NGLR-1, is one of 10 payloads set to fly aboard the next delivery for NASA’s CLPS (Commercial Lunar Payload Services) initiative in 2025. NGLR-1, outfitted with a retroreflector, will be delivered to the lunar surface to reflect very short laser pulses from Earth-based lunar laser ranging observatories. Credit: Firefly Aerospace

2 notes


Text

How can Artificial Intelligence both benefit and affect humans?

The evolution of artificial intelligence (AI) brings both significant benefits and notable challenges to society.

My opinion about artificial intelligence is that it can benefit us, but in a certain way it can also affect us.

You might ask why I think so. Mainly it is because several aspects of life are going to change: for some things the help it gives us will be useful, but for other things it is going to screw us up pretty badly.

Now I'm going to tell you some advantages and some disadvantages of AI.

Benefits:

1. Automation and Efficiency: AI automates repetitive tasks, increasing productivity and freeing humans to focus on more complex and creative work. This is evident in manufacturing, customer service, and data analysis.

2. Healthcare Improvements: AI enhances diagnostics, personalizes treatment plans, and aids in drug discovery. For example, AI algorithms can detect diseases like cancer from medical images with high accuracy.

3. Enhanced Decision Making: AI systems analyze large datasets to provide insights and predictions, supporting better decision-making in sectors such as finance, marketing, and logistics.

4. Personalization: AI personalizes user experiences in areas like online shopping, streaming services, and digital advertising, improving customer satisfaction and engagement.

5. Scientific Research: AI accelerates research and development by identifying patterns and making predictions that can lead to new discoveries in fields like genomics, climate science, and physics.

Challenges:

1. Job Displacement: Automation can lead to job loss in sectors where AI can perform tasks traditionally done by humans, leading to economic and social challenges.

2. Bias and Fairness: AI systems can perpetuate and amplify existing biases if they are trained on biased data, leading to unfair outcomes in areas like hiring, law enforcement, and lending.

3. Privacy Concerns: The use of AI in data collection and analysis raises significant privacy issues, as vast amounts of personal information can be gathered and potentially misused.

4. Security Risks: AI can be used maliciously, for instance, in creating deepfakes or automating cyberattacks, posing new security threats that are difficult to combat.

5. Ethical Dilemmas: The deployment of AI in critical areas like autonomous vehicles and military applications raises ethical questions about accountability and the potential for unintended consequences.

Overall, while the evolution of AI offers numerous advantages that can enhance our lives and drive progress, it also requires careful consideration and management of its potential risks and ethical implications. Society must navigate these complexities to ensure AI development benefits humanity as a whole.

2 notes


Text

Future-Ready Enterprises: The Crucial Role of Large Vision Models (LVMs)

New Post has been published on https://thedigitalinsider.com/future-ready-enterprises-the-crucial-role-of-large-vision-models-lvms/


What are Large Vision Models (LVMs)?

Over the last few decades, the field of Artificial Intelligence (AI) has experienced rapid growth, resulting in significant changes to various aspects of human society and business operations. AI has proven to be useful in task automation and process optimization, as well as in promoting creativity and innovation. However, as data complexity and diversity continue to increase, there is a growing need for more advanced AI models that can comprehend and handle these challenges effectively. This is where the emergence of Large Vision Models (LVMs) becomes crucial.

LVMs are a new category of AI models specifically designed for analyzing and interpreting visual information, such as images and videos, on a large scale, with impressive accuracy. Unlike traditional computer vision models that rely on manual feature crafting, LVMs leverage deep learning techniques, utilizing extensive datasets to generate authentic and diverse outputs. An outstanding feature of LVMs is their ability to seamlessly integrate visual information with other modalities, such as natural language and audio, enabling a comprehensive understanding and generation of multimodal outputs.

LVMs are defined by their key attributes and capabilities, including their proficiency in advanced image and video processing tasks related to natural language and visual information. This includes tasks like generating captions, descriptions, stories, code, and more. LVMs also exhibit multimodal learning by effectively processing information from various sources, such as text, images, videos, and audio, resulting in outputs across different modalities.

Additionally, LVMs possess adaptability through transfer learning, meaning they can apply knowledge gained from one domain or task to another, with the capability to adapt to new data or scenarios through minimal fine-tuning. Moreover, their real-time decision-making capabilities empower rapid and adaptive responses, supporting interactive applications in gaming, education, and entertainment.

How Can LVMs Boost Enterprise Performance and Innovation?

Adopting LVMs can provide enterprises with powerful and promising technology to navigate the evolving AI discipline, making them more future-ready and competitive. LVMs have the potential to enhance productivity, efficiency, and innovation across various domains and applications. However, it is important to consider the ethical, security, and integration challenges associated with LVMs, which require responsible and careful management.

Moreover, LVMs enable insightful analytics by extracting and synthesizing information from diverse visual data sources, including images, videos, and text. Their capability to generate realistic outputs, such as captions, descriptions, stories, and code based on visual inputs, empowers enterprises to make informed decisions and optimize strategies. The creative potential of LVMs emerges in their ability to develop new business models and opportunities, particularly those using visual data and multimodal capabilities.

Prominent examples of enterprises adopting LVMs for these advantages include Landing AI, a computer vision cloud platform addressing diverse computer vision challenges, and Snowflake, a cloud data platform facilitating LVM deployment through Snowpark Container Services. Additionally, OpenAI contributes to LVM development with models like GPT-4, CLIP, DALL-E, and OpenAI Codex, capable of handling various tasks involving natural language and visual information.

In the post-pandemic landscape, LVMs offer additional benefits by assisting enterprises in adapting to remote work, online shopping trends, and digital transformation. Whether enabling remote collaboration, enhancing online marketing and sales through personalized recommendations, or contributing to digital health and wellness via telemedicine, LVMs emerge as powerful tools.

Challenges and Considerations for Enterprises in LVM Adoption

While the promises of LVMs are extensive, their adoption is not without challenges and considerations. Ethical implications are significant, covering issues related to bias, transparency, and accountability. Instances of bias in data or outputs can lead to unfair or inaccurate representations, potentially undermining the trust and fairness associated with LVMs. Thus, ensuring transparency in how LVMs operate and the accountability of developers and users for their consequences becomes essential.

Security concerns add another layer of complexity, requiring the protection of sensitive data processed by LVMs and precautions against adversarial attacks. Sensitive information, ranging from health records to financial transactions, demands robust security measures to preserve privacy, integrity, and reliability.

Integration and scalability hurdles pose additional challenges, especially for large enterprises. Ensuring compatibility with existing systems and processes becomes a crucial factor to consider. Enterprises need to explore tools and technologies that facilitate and optimize the integration of LVMs. Container services, cloud platforms, and specialized platforms for computer vision offer solutions to enhance the interoperability, performance, and accessibility of LVMs.

To tackle these challenges, enterprises must adopt best practices and frameworks for responsible LVM use. Prioritizing data quality, establishing governance policies, and complying with relevant regulations are important steps. These measures ensure the validity, consistency, and accountability of LVMs, enhancing their value, performance, and compliance within enterprise settings.

Future Trends and Possibilities for LVMs

With the adoption of digital transformation by enterprises, the domain of LVMs is poised for further evolution. Anticipated advancements in model architectures, training techniques, and application areas will drive LVMs to become more robust, efficient, and versatile. For example, self-supervised learning, which enables LVMs to learn from unlabeled data without human intervention, is expected to gain prominence.

Likewise, transformer models, renowned for their ability to process sequential data using attention mechanisms, are likely to contribute to state-of-the-art outcomes in various tasks. Similarly, zero-shot learning, which allows LVMs to perform tasks they have not been explicitly trained on, is set to expand their capabilities even further.
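To make the zero-shot idea concrete: CLIP-style models embed an image and a set of candidate label prompts in a shared vector space, then pick the label whose embedding lies closest to the image's. The sketch below shows only that final comparison step. The function names and the small vectors are illustrative assumptions standing in for real model embeddings, not output from any actual model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(image_embedding, label_embeddings):
    """Return the label whose text embedding is most similar to the image.

    label_embeddings maps a label prompt to its (hypothetical) text embedding.
    No label-specific training happens here: supporting a new class only
    means adding one more entry to the dictionary.
    """
    return max(
        label_embeddings,
        key=lambda label: cosine_similarity(image_embedding, label_embeddings[label]),
    )


# Toy 3-dimensional vectors; real embeddings have hundreds of dimensions.
labels = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a chest X-ray": [0.0, 0.1, 0.9],
}
image = [0.05, 0.2, 0.95]  # hypothetical embedding of an unseen image
print(zero_shot_classify(image, labels))  # prints "a chest X-ray"
```

Because the candidate labels are just text, the same model can be pointed at a new task by changing the prompts rather than retraining.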

Simultaneously, the scope of LVM application areas is expected to widen, encompassing new industries and domains. Medical imaging, in particular, holds promise as an avenue where LVMs could assist in the diagnosis, monitoring, and treatment of various diseases and conditions, including cancer, COVID-19, and Alzheimer’s.

In the e-commerce sector, LVMs are expected to enhance personalization, optimize pricing strategies, and increase conversion rates by analyzing and generating images and videos of products and customers. The entertainment industry also stands to benefit as LVMs contribute to the creation and distribution of captivating and immersive content across movies, games, and music.

To fully utilize the potential of these future trends, enterprises must focus on acquiring and developing the necessary skills and competencies for the adoption and implementation of LVMs. In addition to technical challenges, successfully integrating LVMs into enterprise workflows requires a clear strategic vision, a robust organizational culture, and a capable team. Key skills and competencies include data literacy, which encompasses the ability to understand, analyze, and communicate data.

The Bottom Line

In conclusion, LVMs are effective tools for enterprises, promising transformative impacts on productivity, efficiency, and innovation. Despite challenges, embracing best practices and advanced technologies can overcome hurdles. LVMs are envisioned not just as tools but as pivotal contributors to the next technological era, requiring a thoughtful approach. A practical adoption of LVMs ensures future readiness, acknowledging their evolving role for responsible integration into business processes.

#Accessibility#ai#Alzheimer's#Analytics#applications#approach#Art#artificial#Artificial Intelligence#attention#audio#automation#Bias#Business#Cancer#Cloud#cloud data#cloud platform#code#codex#Collaboration#Commerce#complexity#compliance#comprehensive#computer#Computer vision#container#content#covid


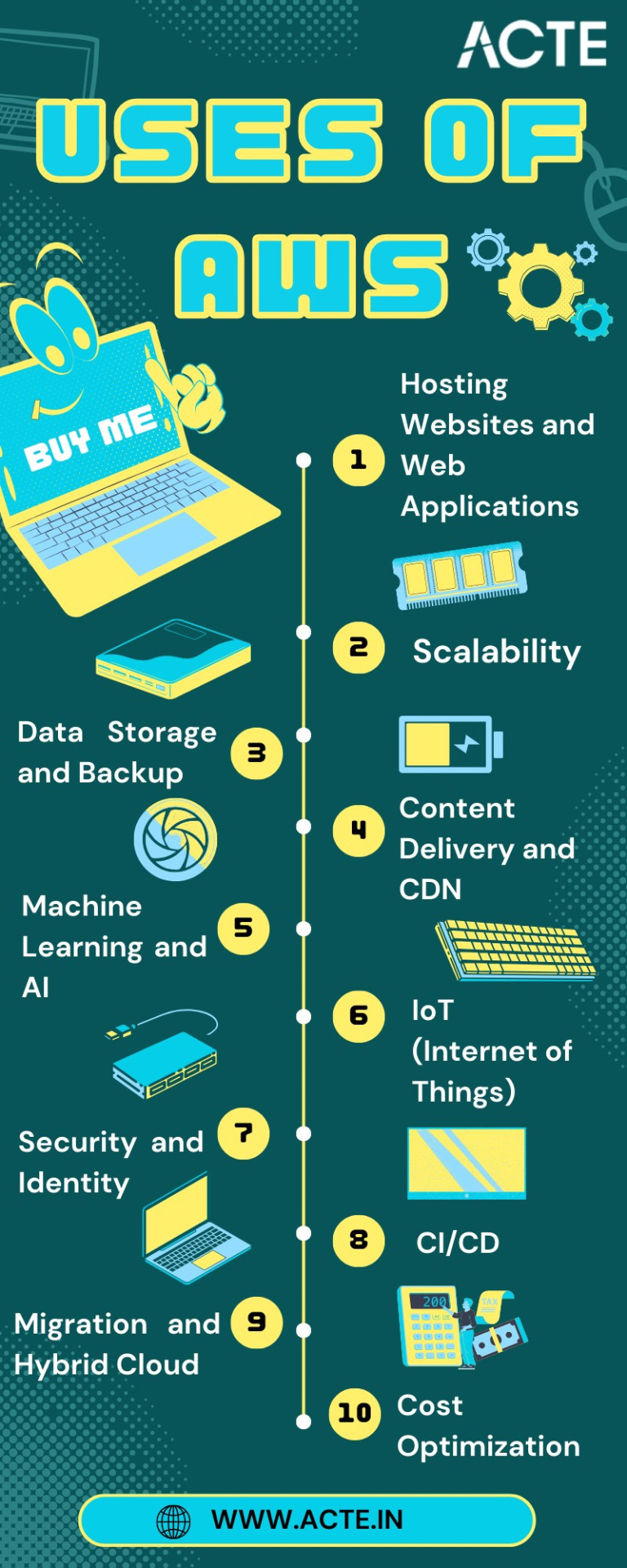

Your Journey Through the AWS Universe: From Amateur to Expert

In the ever-evolving digital landscape, cloud computing has emerged as a transformative force, reshaping the way businesses and individuals harness technology. At the forefront of this revolution stands Amazon Web Services (AWS), a comprehensive cloud platform offered by Amazon. AWS is a dynamic ecosystem that provides an extensive range of services, designed to meet the diverse needs of today's fast-paced world.

This guide is your key to unlocking the boundless potential of AWS. We'll embark on a journey through the AWS universe, exploring its multifaceted applications and gaining insights into why it has become an indispensable tool for organizations worldwide. Whether you're a seasoned IT professional or a newcomer to cloud computing, this comprehensive resource will illuminate the path to mastering AWS and leveraging its capabilities for innovation and growth. Join us as we demystify AWS and discover how it is reshaping the way we work, innovate, and succeed in the digital age.

Navigating the AWS Universe:

Hosting Websites and Web Applications: AWS provides a secure and scalable place for hosting websites and web applications. Services like Amazon EC2 and Amazon S3 empower businesses to deploy and manage their online presence with unwavering reliability and high performance.

Scalability: At the core of AWS lies its remarkable scalability. Organizations can seamlessly adjust their infrastructure according to the ebb and flow of workloads, ensuring optimal resource utilization in today's ever-changing business environment.

Data Storage and Backup: AWS offers a suite of robust data storage solutions, including Amazon S3 for object storage and Amazon EBS for block storage. These services cover a wide range of data types and are designed for high durability and availability.

Databases: AWS presents a panoply of database services such as Amazon RDS, DynamoDB, and Redshift, each tailored to meet specific data management requirements. Whether it's a relational database, a NoSQL database, or data warehousing, AWS offers a solution.

Content Delivery and CDN: Amazon CloudFront, AWS's content delivery network (CDN) service, ushers in global content distribution with minimal latency and blazing data transfer speeds. This ensures an impeccable user experience, irrespective of geographical location.

Machine Learning and AI: AWS boasts a rich repertoire of machine learning and AI services. Amazon SageMaker simplifies the development and deployment of machine learning models, while pre-built AI services cater to natural language processing, image analysis, and more.

Analytics: In the heart of AWS's offerings lies a robust analytics and business intelligence framework. Services like Amazon EMR enable the processing of vast datasets using popular frameworks like Hadoop and Spark, paving the way for data-driven decision-making.

IoT (Internet of Things): AWS IoT services provide the infrastructure for the seamless management and data processing of IoT devices, unlocking possibilities across industries.

Security and Identity: With an unwavering commitment to data security, AWS offers robust security features and identity management through AWS Identity and Access Management (IAM). Users wield precise control over access rights, ensuring data integrity.

DevOps and CI/CD: AWS simplifies DevOps practices with services like AWS CodePipeline and AWS CodeDeploy, automating software deployment pipelines and enhancing collaboration among development and operations teams.

Content Creation and Streaming: AWS Elemental Media Services facilitate the creation, packaging, and efficient global delivery of video content, empowering content creators to reach a global audience seamlessly.

Migration and Hybrid Cloud: For organizations seeking to migrate to the cloud or establish hybrid cloud environments, AWS provides a suite of tools and services to streamline the process, ensuring a smooth transition.

Cost Optimization: AWS's commitment to cost management and optimization is evident through tools like AWS Cost Explorer and AWS Trusted Advisor, which empower users to monitor and control their cloud spending effectively.

In this comprehensive journey through the expansive landscape of Amazon Web Services (AWS), we've embarked on a quest to unlock the power and potential of cloud computing. AWS, standing as a colossus in the realm of cloud platforms, has emerged as a transformative force that transcends traditional boundaries.

As we bring this odyssey to a close, one thing is abundantly clear: AWS is not merely a collection of services and technologies; it's a catalyst for innovation, a cornerstone of scalability, and a conduit for efficiency. It has revolutionized the way businesses operate, empowering them to scale dynamically, innovate relentlessly, and navigate the complexities of the digital era.

In a world where data reigns supreme and agility is a competitive advantage, AWS has become the bedrock upon which countless industries build their success stories. Its versatility, reliability, and ever-expanding suite of services continue to shape the future of technology and business.

Yet, AWS is not a solitary journey; it's a collaborative endeavor. Institutions like ACTE Technologies play an instrumental role in empowering individuals to master AWS. Through comprehensive training and education, learners are not merely equipped with knowledge; they are forged into skilled professionals ready to navigate the AWS universe with confidence.

As we contemplate the future, one thing is certain: AWS is not just a destination; it's an ongoing journey. It's a journey toward greater innovation, deeper insights, and boundless possibilities. AWS has not only transformed the way we work; it's redefining the very essence of what's possible in the digital age. So, whether you're a seasoned cloud expert or a newcomer to the cloud, remember that AWS is not just a tool; it's a gateway to a future where technology knows no bounds, and success knows no limits.


Using Docker for Full Stack Development and Deployment

1. Introduction to Docker

What is Docker? Docker is an open-source platform that automates the deployment, scaling, and management of applications inside containers. A container packages your application and its dependencies, ensuring it runs consistently across different computing environments.

Containers vs Virtual Machines (VMs)

Containers are lightweight and use fewer resources than VMs because they share the host operating system’s kernel, while each VM runs a full guest operating system on virtualized hardware. As a result, containers are generally more efficient and easier to deploy.

Docker containers provide faster startup times, less overhead, and portability across development, staging, and production environments.

Benefits of Docker in Full Stack Development

Portability: Docker ensures that your application runs the same way regardless of the environment (dev, test, or production).

Consistency: Teams can share Dockerfiles so that every developer builds an identical environment.

Scalability: Docker containers can be quickly replicated, allowing your application to scale horizontally without a lot of overhead.

Isolation: Docker containers provide isolated environments for each part of your application, ensuring that dependencies don’t conflict.

2. Setting Up Docker for Full Stack Applications

Installing Docker and Docker Compose

Docker can be installed on Windows, macOS, and Linux. Docker Desktop bundles Docker Compose, which simplifies multi-container management; on Linux servers, Compose is typically installed alongside Docker Engine as a separate package.

Commands:

docker --version to check the installed Docker version.

docker-compose --version to check the Docker Compose version.

Setting Up Project Structure

Organize your project into different directories (e.g., /frontend, /backend, /db).

Each service will have its own Dockerfile and configuration file for Docker Compose.

3. Creating Dockerfiles for Frontend and Backend

Dockerfile for the Frontend:

For a React/Angular app:

Dockerfile

    FROM node:14
    WORKDIR /app
    COPY package*.json ./
    RUN npm install
    COPY . .
    EXPOSE 3000
    CMD ["npm", "start"]

This Dockerfile installs Node.js dependencies, copies the application, exposes the appropriate port, and starts the server.

Dockerfile for the Backend:

For a Python Flask app:

Dockerfile

    FROM python:3.9
    WORKDIR /app
    COPY requirements.txt .
    RUN pip install -r requirements.txt
    COPY . .
    EXPOSE 5000
    CMD ["python", "app.py"]

For a Java Spring Boot app:

Dockerfile

    FROM openjdk:11
    WORKDIR /app
    COPY target/my-app.jar my-app.jar
    EXPOSE 8080
    CMD ["java", "-jar", "my-app.jar"]

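Each backend Dockerfile starts from a language runtime image, copies in the application (installing Python dependencies first for Flask, or copying the prebuilt JAR for Spring Boot), exposes the port the app listens on, and runs the app.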

4. Docker Compose for Multi-Container Applications

What is Docker Compose? Docker Compose is a tool for defining and running multi-container Docker applications. With a docker-compose.yml file, you can configure services, networks, and volumes.

docker-compose.yml Example:

yaml

    version: "3"
    services:
      frontend:
        build:
          context: ./frontend
        ports:
          - "3000:3000"
      backend:
        build:
          context: ./backend
        ports:
          - "5000:5000"
        depends_on:
          - db
      db:
        image: postgres
        environment:
          POSTGRES_USER: user
          POSTGRES_PASSWORD: password
          POSTGRES_DB: mydb

This YAML file defines three services: frontend, backend, and a PostgreSQL database. It also sets up networking and environment variables.
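One caveat worth illustrating: in the short form used above, depends_on only orders container startup; it does not wait for PostgreSQL to be ready to accept connections. A common workaround is for the backend to retry until the database port opens. The following is a minimal sketch of that idea using only the Python standard library. The function name and its defaults are illustrative assumptions, and an open port is only a rough readiness signal.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Poll until a TCP connection to (host, port) succeeds or timeout elapses.

    Returns True if the port accepted a connection, False otherwise.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError if the host cannot be
            # resolved or the port is closed or unreachable.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

In the backend's startup code this might be called as `wait_for_port("db", 5432)` before opening the first database connection, where `db` is the Compose service name and 5432 is PostgreSQL's default port.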

5. Building and Running Docker Containers

Building Docker Images:

Use docker build -t <image_name> <path> to build images.

For example:

bash

    docker build -t frontend ./frontend
    docker build -t backend ./backend

Running Containers:

You can run individual containers using docker run or use Docker Compose to start all services:

bash

docker-compose up

Use docker ps to list running containers, and docker logs <container_id> to check logs.

Stopping and Removing Containers:

Use docker stop <container_id> and docker rm <container_id> to stop and remove containers.

With Docker Compose: docker-compose down to stop and remove all services.

6. Dockerizing Databases

Running Databases in Docker:

You can easily run databases like PostgreSQL, MySQL, or MongoDB as Docker containers.

Example for PostgreSQL in docker-compose.yml:

yaml

    db:
      image: postgres
      environment:
        POSTGRES_USER: user
        POSTGRES_PASSWORD: password
        POSTGRES_DB: mydb

Persistent Storage with Docker Volumes:

Use Docker volumes to persist database data even when containers are stopped or removed:

yaml

    volumes:
      - db_data:/var/lib/postgresql/data

Define the volume at the bottom of the file:

yaml

    volumes:
      db_data:

Connecting Backend to Databases:

Your backend services can access databases via Docker networking. In the backend service, refer to the database by its service name (e.g., db).
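As a sketch of what that looks like from the backend's side, the snippet below assembles a PostgreSQL connection URL whose host is simply the service name `db`. It assumes the same POSTGRES_* variables from the Compose example are also passed to the backend service; `DB_HOST` and `DB_PORT` are hypothetical variable names introduced here for illustration.

```python
import os
from urllib.parse import quote


def build_database_url(env=None):
    """Assemble a PostgreSQL connection URL from environment variables.

    The POSTGRES_* names mirror the Compose example; DB_HOST and DB_PORT
    are hypothetical additions for this sketch.
    """
    env = os.environ if env is None else env
    user = env.get("POSTGRES_USER", "user")
    password = env.get("POSTGRES_PASSWORD", "password")
    database = env.get("POSTGRES_DB", "mydb")
    # "db" is the Compose service name, resolved by Docker's internal DNS.
    host = env.get("DB_HOST", "db")
    port = env.get("DB_PORT", "5432")
    # Percent-encode credentials so characters like "@" do not break the URL.
    user_part = quote(user, safe="")
    password_part = quote(password, safe="")
    return f"postgresql://{user_part}:{password_part}@{host}:{port}/{database}"


print(build_database_url({}))  # prints postgresql://user:password@db:5432/mydb
```

Reading the host from the environment keeps the same image usable outside Compose, for example against a managed database in production.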

7. Continuous Integration and Deployment (CI/CD) with Docker

Setting Up a CI/CD Pipeline:

Use Docker in CI/CD pipelines to ensure consistency across environments.

Example: GitHub Actions or Jenkins pipeline using Docker to build and push images.

Example .github/workflows/docker.yml:

yaml

    name: CI/CD Pipeline
    on: [push]
    jobs:
      build:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout Code
            uses: actions/checkout@v2
          - name: Build Docker Image
            run: docker build -t myapp .
          # Pushing requires a prior registry login and a registry-qualified tag (e.g. <user>/myapp).
          - name: Push Docker Image
            run: docker push myapp

Automating Deployment:

Once images are built and pushed to a Docker registry (e.g., Docker Hub, Amazon ECR), they can be pulled into your production or staging environment.

8. Scaling Applications with Docker

Docker Swarm for Orchestration:

Docker Swarm is a native clustering and orchestration tool for Docker. You can scale your services by specifying the number of replicas.

Example:

bash

docker service scale myapp=5

Kubernetes for Advanced Orchestration:

Kubernetes (K8s) is more complex but offers greater scalability and fault tolerance. It can manage Docker containers at scale.

Load Balancing and Service Discovery:

Use Docker Swarm or Kubernetes to automatically load balance traffic to different container replicas.

9. Best Practices

Optimizing Docker Images:

Use smaller base images (e.g., alpine images) to reduce image size.

Use multi-stage builds to avoid unnecessary dependencies in the final image.

Environment Variables and Secrets Management:

Store sensitive data like API keys or database credentials in Docker secrets or environment variables rather than hardcoding them.
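A minimal sketch of how application code might consume such a value: Docker mounts each secret as a file under /run/secrets/<secret_name> inside the container, so the helper below checks there first and falls back to an environment variable. The function name and the convention of upper-casing the secret name for the fallback are assumptions made for this example.

```python
import os
from pathlib import Path


def read_secret(name, secrets_dir="/run/secrets", env=None):
    """Return a secret value, preferring a mounted secret file over an env var.

    Looks for <secrets_dir>/<name> first, then falls back to the environment
    variable named after the secret in upper case. Raises KeyError if
    neither is present.
    """
    env = os.environ if env is None else env
    secret_file = Path(secrets_dir) / name
    if secret_file.is_file():
        # Secret files often end with a trailing newline; strip it.
        return secret_file.read_text().strip()
    return env[name.upper()]
```

For example, `read_secret("db_password")` would read /run/secrets/db_password when the secret is mounted, and otherwise use the DB_PASSWORD environment variable.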

Logging and Monitoring:

Use tools like Docker’s built-in logging drivers, or integrate with ELK stack (Elasticsearch, Logstash, Kibana) for advanced logging.

For monitoring, tools like Prometheus and Grafana can be used to track Docker container metrics.

10. Conclusion

Why Use Docker in Full Stack Development? Docker simplifies the management of complex full-stack applications by ensuring consistent environments across all stages of development. Containers also start faster and carry less overhead than virtual machines, and they can be replicated to scale services horizontally.

Recommendations:

Integrate Docker with your CI/CD pipelines to automate builds and deployments.

Consider Docker for microservices architectures, where each service can be scaled and managed independently.

WEBSITE: https://www.ficusoft.in/full-stack-developer-course-in-chennai/


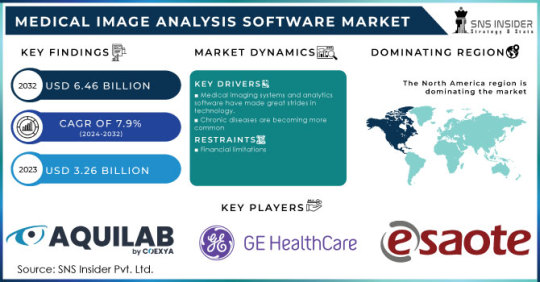

Medical Image Analysis Software Market Business Landscape Changes and Market Growth Patterns

The global medical image analysis software market is projected to experience substantial growth in the coming years, driven by technological advancements, the growing demand for improved diagnostics, and an increased focus on precision medicine. The market is forecasted to expand due to the rapid integration of artificial intelligence (AI) and machine learning (ML) technologies in medical imaging, which are enhancing diagnostic accuracy and improving patient outcomes.

Market Overview

Medical image analysis software refers to applications that process and analyze medical images (such as X-rays, MRIs, and CT scans) to assist healthcare professionals in diagnosing diseases, monitoring treatment progress, and conducting medical research. These tools play a critical role in clinical decision-making, ensuring better accuracy and reducing human error. The market for these software solutions is expanding as healthcare professionals seek innovative ways to manage and interpret vast volumes of imaging data.

Get Free Sample Report @ https://www.snsinsider.com/sample-request/2198

Market Segmentation

The medical image analysis software market is segmented based on the following categories:

By Type

Standalone Software

Integrated Software

By Application

Oncology

Neurology

Cardiology

Orthopedics

Others

By Deployment

Cloud-based

On-premise

By End-User

Hospitals

Diagnostic Centers

Research Institutions

Others

Regional Analysis

The global medical image analysis software market spans across key regions, including:

North America: Dominating the market, driven by advanced healthcare infrastructure, high adoption of AI technologies, and an aging population requiring diagnostic imaging.

Europe: Following North America, with significant contributions from countries like Germany, the UK, and France. Increased research investments in medical imaging technologies and growing awareness among healthcare providers contribute to regional growth.

Asia-Pacific: Witnessing rapid adoption of healthcare technologies, particularly in countries like Japan, China, and India. The rising prevalence of chronic diseases and demand for efficient healthcare services are key drivers.

Latin America & Middle East & Africa: Although these regions are in the nascent stages of adopting medical image analysis software, rising healthcare investments and a growing demand for diagnostic tools are likely to foster market growth.

KEY PLAYERS:

Some of the major key players are Agfa-Gevaert Group, ESAOTE SPA, Aquilab SAS, GE Healthcare, Koninklijke Philips N.V., MIM Software, Inc., Spacelabs Healthcare, Merge Healthcare, Inc., Siemens Healthineers, Toshiba Medical Systems Corporation, and other players.

Key Points:

Increasing prevalence of chronic diseases and aging population are propelling market demand.

AI-driven medical imaging solutions are improving the efficiency and accuracy of diagnoses.

Cloud-based deployment models offer cost-effective solutions, contributing to market growth.

Significant investments in healthcare infrastructure and research are boosting the market, particularly in developed economies.

Rising awareness and adoption of medical image analysis tools in emerging markets.

Future Scope

The future of the medical image analysis software market looks promising, with substantial innovations on the horizon. Advancements in AI and machine learning technologies will continue to drive the evolution of imaging solutions, leading to more precise and faster diagnostics. Furthermore, the integration of 3D imaging, augmented reality, and enhanced visualization tools will transform the medical imaging landscape. Additionally, cloud-based solutions will democratize access to these advanced technologies, enabling smaller healthcare facilities in emerging economies to benefit from cutting-edge imaging tools. As the global healthcare sector moves towards precision medicine and personalized treatment plans, medical image analysis software will play an integral role in improving patient care and treatment outcomes.

Conclusion

In conclusion, the medical image analysis software market is set to grow substantially in the near future, driven by advancements in technology and increased demand for accurate, efficient diagnostic tools. As healthcare providers strive for better patient care and improved outcomes, the market will see a greater focus on AI-powered solutions, cloud-based platforms, and the integration of new imaging modalities. The increasing availability of these tools will empower healthcare providers globally, enabling them to offer more personalized and effective treatment strategies.

Contact Us: Jagney Dave - Vice President of Client Engagement Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

Other Related Reports:

Medical Coding Market

Virtual Clinical Trials Market

Digital Clinical Trials Market

Speech Therapy Market

#Medical Image Analysis Software Market Share#Medical Image Analysis Software Market#Medical Image Analysis Software Market Trends#Medical Image Analysis Software Market Size


Exploring Web Design Firms: The Backbone of Modern Business Success

In today’s digital world, a website serves as the face of a business. Whether you're a small startup or a multinational corporation, having an engaging, functional, and user-friendly website is a necessity. This is where web design firms come into play—professional agencies or teams specializing in creating and maintaining websites that help businesses stand out, drive traffic, and convert visitors into customers.

But what exactly makes a web design firm essential for your business? Let's dive into the world of web design firms and explore how they can play a crucial role in shaping your digital presence.

What is a Web Design Firm?

A web design firm is a company that specializes in designing, developing, and maintaining websites for individuals, businesses, and organizations. These firms typically employ a mix of creative designers, skilled developers, and experienced project managers who work together to create websites that are visually appealing, functional, and optimized for user experience.

Web design firms cater to a range of needs—from crafting simple brochure websites to developing complex e-commerce platforms and content management systems (CMS). Their expertise goes beyond the visual aspects of a website and includes understanding user behavior, ensuring functionality across devices, and even optimizing the website for search engines.

Why Choose a Web Design Firm?

Professional Expertise Web design involves much more than choosing a theme and adding some text and images. It requires expertise in graphic design, programming languages, UX/UI principles, SEO, and analytics. Hiring a web design firm ensures you’re working with experienced professionals who understand the latest trends, best practices, and technologies to create a website that delivers results.

Customized Solutions One of the key advantages of working with a web design firm is the level of customization they offer. Instead of relying on pre-built templates, they create websites tailored to your business's specific needs, audience, and goals. Whether you need a creative portfolio, a dynamic e-commerce site, or a robust corporate platform, a web design firm will deliver a solution that's uniquely yours.

Time and Resource Efficiency Building a website in-house can be time-consuming, especially if you lack the necessary expertise or resources. A web design firm allows you to focus on running your business while they handle all the technical and creative aspects of building your online presence. From design and development to deployment and maintenance, they take care of it all.

User-Centric Approach Web design firms prioritize the user experience (UX) and user interface (UI) design. They understand that a website must be intuitive, easy to navigate, and visually engaging to keep visitors engaged. With a focus on accessibility and mobile responsiveness, they ensure your website provides a seamless experience across all devices and screen sizes.

Search Engine Optimization (SEO) A beautifully designed website is useless if it doesn't show up in search engines. A good web design firm knows the importance of SEO and ensures that your website is optimized for better visibility. From strategic content placement to fast loading speeds and mobile-friendliness, they take SEO into account during the design and development process, helping you rank higher on search engine results pages (SERPs).

Post-Launch Support Launching a website isn’t the end of the journey. Ongoing maintenance, updates, and troubleshooting are essential to keep your website running smoothly. Web design firms often provide post-launch support, ensuring your site remains up-to-date and functional over time.

Types of Web Design Services Offered by Firms

Web design firms offer a wide variety of services based on the needs of their clients. Some of the key services include:

Custom Website Design: Tailor-made design solutions that match your brand identity.

Responsive Web Design: Websites that automatically adjust to different screen sizes (desktops, tablets, smartphones).

E-commerce Web Design: Creating online stores with secure payment systems, user-friendly navigation, and product management tools.

Content Management Systems (CMS): Developing websites with platforms like WordPress, Joomla, or Drupal, allowing businesses to easily update their content.

SEO and Digital Marketing: Improving your site's visibility and helping you reach your target audience.

Branding and Graphic Design: Developing a strong visual identity with logo design, color schemes, and other branding elements.

Website Redesign: Updating and improving the design and functionality of an existing website.

Mobile App Development: In some cases, web design firms also offer mobile app design and development, expanding the digital footprint of your business.

How to Choose the Right Web Design Firm

Choosing the right web design firm can be a daunting task. Here are some factors to consider when making your decision:

Portfolio: A strong portfolio is one of the best ways to evaluate the capabilities of a web design firm. Look for diversity in their previous projects, as well as work that aligns with your vision and goals.

Experience and Expertise: Check how long the firm has been in the industry and the level of expertise of their team. The more experienced the firm, the better equipped they are to handle challenges that may arise.

Client Testimonials: Customer reviews and case studies can provide insight into the firm’s work ethic, communication, and ability to meet deadlines.

Communication and Collaboration: A good web design firm should be easy to work with and understand your vision. Ensure that they have a clear communication process in place.

Budget and Timelines: Be clear about your budget and timeline expectations from the start. Look for a firm that offers transparency and works within your budget while still delivering high-quality work.

Conclusion

In today’s digital-first world, a professional, well-designed website is essential for any business looking to succeed. Web design firms provide the expertise, creativity, and technical skills necessary to build and maintain an effective online presence. Whether you're launching a new site or revamping an existing one, partnering with a web design firm can make all the difference in achieving your business goals and creating an unforgettable user experience.

By choosing the right web design firm, you’re not just building a website—you’re crafting the digital face of your brand that will attract and engage customers for years to come.

#web design firms#website designer#website design company#website design firms#website creation company#web page design company#website designer near me#web page designers near me#website builders near me#website design near me


Azure AI Engineer Training | Azure AI Engineer Online

How Azure Blob Storage Integrates with AI and Machine Learning Models

Introduction

Azure Blob Storage is a scalable, secure, and cost-effective cloud storage solution offered by Microsoft Azure. It is widely used for storing unstructured data such as images, videos, documents, and logs. Its seamless integration with AI and machine learning (ML) models makes it a powerful tool for businesses and developers aiming to build intelligent applications. This article explores how Azure Blob Storage integrates with AI and ML models to enable efficient data management, processing, and analytics.

Why Use Azure Blob Storage for AI and ML?

Machine learning models require vast amounts of data for training and inference. Azure Blob Storage provides:

Scalability: Handles large datasets efficiently without performance degradation.

Security: Built-in security features, including role-based access control (RBAC) and encryption.

Cost-effectiveness: Offers different storage tiers (hot, cool, and archive) to optimize costs.

Integration Capabilities: Works seamlessly with Azure AI services, ML tools, and data pipelines.
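The storage tiers listed above trade access cost against storage cost, so a natural pattern is to pick a tier based on how recently a dataset was used. The sketch below expresses one such rule in plain Python. The function name and the 30- and 180-day thresholds are placeholder assumptions for illustration, not Azure defaults; note also that archived blobs must be rehydrated before they can be read, so data in active use for training should not be archived.

```python
def choose_access_tier(days_since_last_access, cool_after=30, archive_after=180):
    """Pick a Blob Storage access tier from how recently the data was used.

    The 30- and 180-day thresholds are placeholder assumptions for this
    sketch; tune them to your own access patterns and pricing.
    """
    if days_since_last_access < 0:
        raise ValueError("days_since_last_access must be non-negative")
    if days_since_last_access >= archive_after:
        return "Archive"
    if days_since_last_access >= cool_after:
        return "Cool"
    return "Hot"


for days in (3, 45, 400):
    print(days, choose_access_tier(days))
```

In practice a rule like this is usually configured declaratively through a storage account's lifecycle management policy rather than implemented in application code.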

Integration of Azure Blob Storage with AI and ML

1. Data Storage and Management

Azure Blob Storage serves as a central repository for AI and ML datasets. It supports various file formats such as CSV, JSON, Parquet, and image files, which are crucial for training deep learning models. The ability to store raw and processed data makes it a vital component in AI workflows.

2. Data Ingestion and Preprocessing

AI models require clean and structured data. Azure provides various tools to automate data ingestion and preprocessing:

Azure Data Factory: Allows scheduled and automated data movement from different sources into Azure Blob Storage.

Azure Databricks: Helps preprocess large datasets before feeding them into ML models.

Azure Functions: Facilitates event-driven data transformation before storage.

3. Training Machine Learning Models

Once the data is stored in Azure Blob Storage, it can be accessed by ML frameworks for training:

Azure Machine Learning (Azure ML): Directly integrates with Blob Storage to access training data.

PyTorch and TensorFlow: Can fetch and preprocess data stored in Azure Blob Storage.

Azure Kubernetes Service (AKS): Supports distributed ML training on GPU-enabled clusters.
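As a rough illustration of how a training script might pull data from Blob Storage, the sketch below reads every CSV blob under a prefix and yields its rows. It is written against the interface of `ContainerClient` from the `azure-storage-blob` package (`list_blobs` and `download_blob(...).readall()`), but takes the client as a parameter so the parsing logic can be exercised without an Azure account. The function name, container name, and prefix are assumptions made for this example.

```python
import csv
import io


def load_csv_rows(container_client, prefix=""):
    """Yield dict rows from every .csv blob under the given prefix.

    container_client is expected to behave like
    azure.storage.blob.ContainerClient: list_blobs(name_starts_with=...)
    yields objects with a .name attribute, and
    download_blob(name).readall() returns the blob's bytes.
    """
    for blob in container_client.list_blobs(name_starts_with=prefix):
        if not blob.name.endswith(".csv"):
            continue  # skip non-CSV files such as READMEs or images
        data = container_client.download_blob(blob.name).readall()
        reader = csv.DictReader(io.StringIO(data.decode("utf-8")))
        for row in reader:
            yield row


# Usage against a real account (requires `pip install azure-storage-blob`;
# the connection string and container name are placeholders):
#
#   from azure.storage.blob import BlobServiceClient
#   service = BlobServiceClient.from_connection_string("<connection-string>")
#   container = service.get_container_client("training-data")
#   rows = list(load_csv_rows(container, prefix="datasets/"))
```

For large datasets, streaming blobs in chunks or mounting the container as a data store in Azure ML is likely a better fit than loading whole files into memory as this sketch does.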

4. Model Deployment and Inference

Azure Blob Storage enables efficient model deployment and inference by storing trained models and inference data:

Azure ML Endpoints: Deploy trained models for real-time or batch inference.

Azure Functions & Logic Apps: Automate model inference by triggering workflows when new data is uploaded.

Azure Cognitive Services: Uses data from Blob Storage for AI-driven applications like vision recognition and natural language processing (NLP).
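The "new data triggers inference" pattern can be sketched without any Azure-specific wiring. Everything here is an assumption for illustration: the JSON payload shape, the handler name, and the `predict` callable standing in for a deployed model. A real Azure Functions or Logic Apps trigger would call something like this with the uploaded blob's name and contents.

```python
import json
from typing import Callable, Sequence


def handle_new_blob(blob_name: str, payload: bytes,
                    predict: Callable[[Sequence[float]], float]) -> dict:
    """Score a newly uploaded JSON blob shaped like {"features": [...]}."""
    record = json.loads(payload.decode("utf-8"))
    score = predict(record["features"])
    return {"blob": blob_name, "score": score}


if __name__ == "__main__":
    # sum() stands in for a real model's predict function.
    print(handle_new_blob("incoming/a.json", b'{"features": [1, 2, 3]}', sum))
```

Keeping the handler free of SDK imports makes it easy to unit test before attaching it to a trigger.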

5. Real-time Analytics and Monitoring

AI models require continuous monitoring and improvement. Azure Blob Storage works with:

Azure Synapse Analytics: For large-scale data analytics and AI model insights.

Power BI: To visualize trends and model performance metrics.

Azure Monitor and Log Analytics: Tracks model predictions and identifies anomalies.
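As a toy illustration of flagging anomalies in logged predictions, the sketch below marks scores that sit far from the mean. The z-score rule and the threshold of 3 are assumptions chosen for simplicity; this is not how Azure Monitor or Log Analytics detects anomalies internally.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def flag_anomalies(scores: Sequence[float], threshold: float = 3.0) -> List[int]:
    """Return indexes of scores more than `threshold` std devs from the mean."""
    mu = mean(scores)
    sigma = pstdev(scores)
    if sigma == 0:
        return []  # all scores identical, nothing stands out
    return [i for i, s in enumerate(scores) if abs(s - mu) / sigma > threshold]
```

Prediction logs exported to Blob Storage could be checked this way in a scheduled job, with the flagged indexes feeding a dashboard or alert.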

Use Cases of Azure Blob Storage in AI and ML

Image Recognition: Stores millions of labeled images for training computer vision models.

Speech Processing: Stores audio datasets for training speech-to-text AI models.

Healthcare AI: Stores medical imaging data for AI-powered diagnostics.

Financial Fraud Detection: Stores historical transaction data for training anomaly detection models.

Conclusion

Azure Blob Storage plays a critical role in AI and ML workflows by providing scalable, secure, and cost-efficient data storage. Its integration with Azure AI services, ML frameworks, and analytics tools enables businesses to build and deploy intelligent applications efficiently. By using Azure Blob Storage, organizations can streamline data handling and improve AI-driven decision-making.

For More Information about Azure AI Engineer Certification Contact Call/WhatsApp: +91-7032290546

Visit: https://www.visualpath.in/azure-ai-online-training.html

#Ai 102 Certification#Azure AI Engineer Certification#Azure AI-102 Training in Hyderabad#Azure AI Engineer Training#Azure AI Engineer Online Training#Microsoft Azure AI Engineer Training#Microsoft Azure AI Online Training#Azure AI-102 Course in Hyderabad#Azure AI Engineer Training in Ameerpet#Azure AI Engineer Online Training in Bangalore#Azure AI Engineer Training in Chennai#Azure AI Engineer Course in Bangalore

0 notes

Text

Saab delivers the first serial-produced Gripen E fighter to Sweden's Defence Materiel Administration

By Fernando Valduga, 10/20/2023 - 09:08am, in Military, Saab

On Friday, October 6, an important milestone was reached when Saab delivered the first serially produced Gripen E aircraft to FMV (the Swedish Defence Materiel Administration), which will now operate the aircraft before delivering it to the Swedish Armed Forces.

Previously, two JAS 39 Gripen E aircraft were delivered to FMV for use in flight test operations, but under Saab's operating license.

"I am very happy and pleased that we have reached this important milestone towards the introduction of the fighter. It is an important milestone and more deliveries will take place soon," says Lars Tossman, head of Saab's aeronautical business area.

Lars Helmrich has followed the development of the Gripen system for almost 30 years, first as a fighter pilot and then as commander of the Skaraborg Wing (F 7). As the current head of FMV's aviation and space equipment business area, he is impressed with the aircraft that are now being delivered.

"The delivery means that FMV has now received all parts of the weapon system to operate the Gripen E independently," said Mattias Fridh, Head of Delivery Management for the Gripen Program. "Its technicians have received training on the Gripen E and have initial capabilities for flight line operations and maintenance. The support and training systems have already been delivered, and parts of the support systems delivered in 2022 were updated in August to match the new configuration."

So far, three aircraft have been delivered to the Swedish state, all used in testing operations. From 2025, the plan is for FMV to deliver the JAS 39E to the Swedish Air Force. However, Air Force personnel, both pilots and others, have been involved in development activities since 2012. It is an important part of the Swedish model to ensure that the user receives what is really needed.

"This is a very important step for deployment in the Swedish Armed Forces in 2025 at F 7 Såtenäs, and FMV has now applied for its own flight test authorization from the Swedish Military Aviation Safety Inspectorate. This is the culmination of intensive work in both development and production, where many employees have done a fantastic job."

In addition to Sweden and Brazil, which have already placed orders for the JAS 39 E/F, several countries have shown interest in the system. Today, Gripen is operated by Hungary, the Czech Republic and Thailand through agreements with the Swedish government and FMV. Brazil and South Africa have contracts directly with Saab.

Tags: Military Aviation, Flygvapnet - Swedish Air Force, FMV, Gripen E, JAS39 Gripen, saab

Fernando Valduga

Aviation photographer and pilot since 1992, has participated in several events and air operations, such as Cruzex, AirVenture, Dayton Airshow and FIDAE. He has work published in specialized aviation magazines in Brazil and abroad. Uses Canon equipment during his photographic work in the world of aviation.

Cavok Brazil - Digital Tchê Web Creation

11 notes

·

View notes

Text

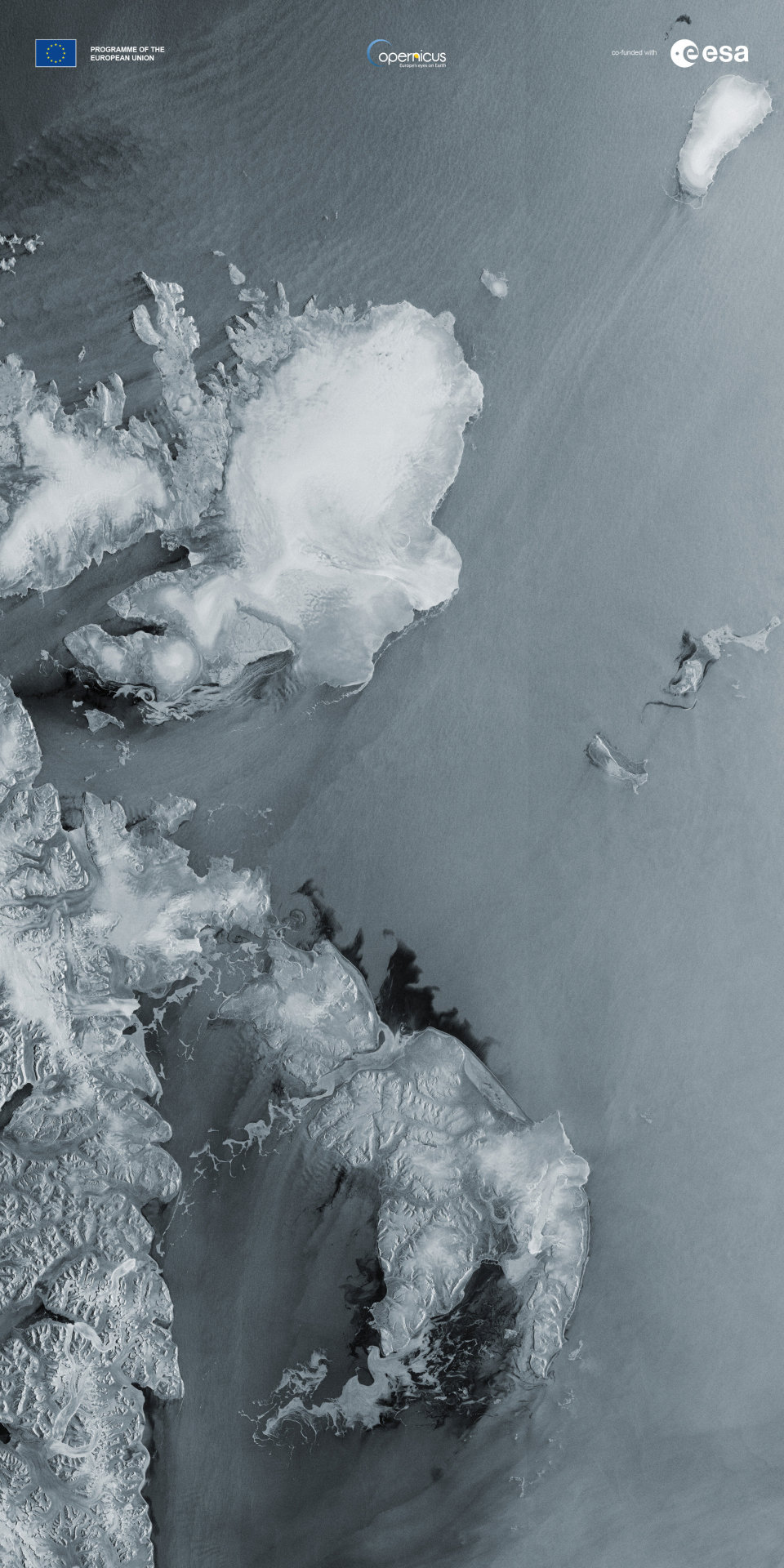

Sentinel-1C captures first radar images of Earth

Less than a week after its launch, the Copernicus Sentinel-1C satellite has delivered its first radar images of Earth—offering a glimpse into its capabilities for environmental monitoring. These initial images feature regions of interest, including Svalbard in Norway, the Netherlands, and Brussels, Belgium.

Launched on 5 December from Europe's Spaceport in French Guiana aboard a Vega-C rocket, Sentinel-1C is equipped with a state-of-the-art C-band synthetic aperture radar (SAR) instrument. This cutting-edge technology allows the satellite to deliver high-resolution imagery day and night, in all weather conditions, supporting critical applications such as environmental management, disaster response and climate change research.

Now, the new satellite has delivered its initial set of radar images over Europe, flawlessly processed by the Sentinel-1 Ground Segment. These images showcase an exceptional level of data quality for initial imagery, highlighting the outstanding efforts of the entire Sentinel-1 team over the past years.

The first image (see figure above), captured just 56 hours and 23 minutes after liftoff, features Svalbard, a remote Norwegian archipelago in the Arctic Ocean.

This image demonstrates Sentinel-1C's ability to monitor ice coverage and environmental changes in harsh and isolated regions. These capabilities are essential for understanding the effects of climate change on polar ecosystems and for enabling safer navigation in Arctic waters.

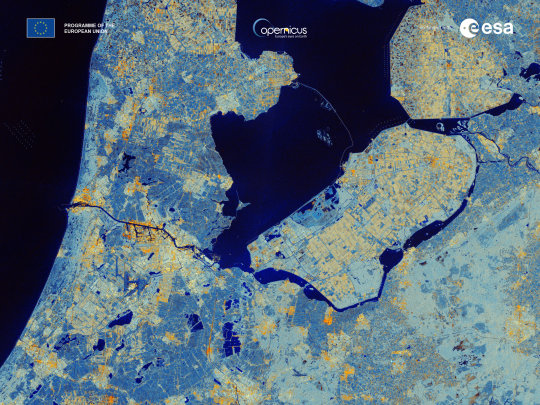

Moving to mainland Europe, the second image (above) showcases part of the Netherlands, including Amsterdam and the region of Flevoland, renowned for its extensive farmland and advanced water management systems.

Sentinel-1C's advanced radar captures intricate details of this region, providing invaluable data for monitoring soil moisture and assessing crop health. These insights are essential for enhancing agricultural productivity and ensuring sustainable resource management in one of Europe's key farming areas.

This Sentinel-1C image of the Netherlands echoes the very first SAR image acquired by the legacy European Remote-Sensing (ERS) mission in 1991, which captured the Flevoland polder and the IJsselmeer and marked the first European radar image ever taken from space.

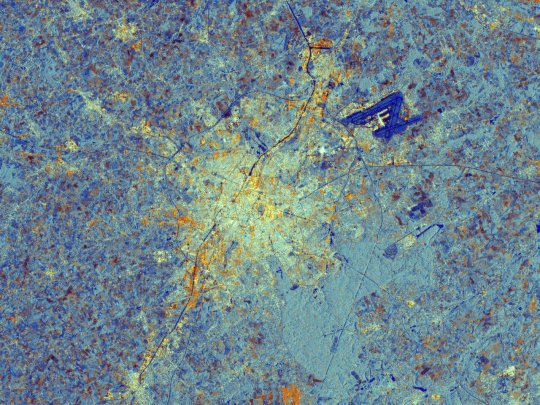

Finally, the third image (above) highlights Brussels, Belgium, where Sentinel-1C's radar technology vividly depicts the dense urban landscape in bright white and yellow tones, contrasting with the surrounding vegetation. Waterways and low-reflective areas, such as airport runways, appear in darker hues.

Interestingly, Brussels holds historical significance for the Sentinel program, as it was the subject of the first radar image captured by Sentinel-1A in April 2014.

The European Commission oversees Copernicus, coordinating diverse services aimed at environmental protection and enhancing daily life, while ESA, responsible for the Sentinel satellite family, ensures a steady flow of high-quality data to support these services.

ESA's Director of Earth Observation Programs, Simonetta Cheli, commented, "These images highlight Sentinel-1C's remarkable capabilities. Although it's early days, the data already demonstrate how this mission will enhance Copernicus services for the benefit of Europe and beyond."

Since its launch, Sentinel-1C has undergone a series of complex deployment procedures, including the activation of its 12-meter-long radar antenna and solar arrays.

While the satellite is still in its commissioning phase, these early images underscore its potential to deliver actionable insights across a range of environmental and scientific applications.

Reflecting on the Sentinel-1C launch, Ramon Torres, ESA's Project Manager for the Sentinel-1 mission, said, "Sentinel-1C is now poised to continue the critical work of its predecessors, unveiling secrets of our planet, from monitoring the movements of ships on vast oceans to capturing the dazzling reflections of sea ice in polar regions and the subtle shifts of Earth's surface. These first images embody a moment of renewal for the Sentinel-1 mission."

Sentinel-1 data contributes to numerous Copernicus services and applications, including Arctic sea-ice monitoring, iceberg tracking, routine sea-ice mapping and glacier-velocity measurements. It also plays a vital role in marine surveillance, such as oil-spill detection, ship tracking for maritime security and monitoring illegal fishing activities.

Additionally, it is widely used for observing ground deformation caused by subsidence, earthquakes and volcanic activity, as well as for mapping forests, water and soil resources. The mission is crucial in supporting humanitarian aid and responding to crises worldwide.

All Sentinel-1 data are freely available via the Copernicus Data Space Ecosystem, providing instant access to a wide range of data from both the Copernicus Sentinel missions and the Copernicus Contributing Missions.

TOP IMAGE: The first image features Svalbard, a remote Norwegian archipelago in the Arctic Ocean. Credit: contains modified Copernicus Sentinel data (2024), processed by ESA

CENTRE IMAGE: This image showcases part of the Netherlands, including Amsterdam and the region of Flevoland. Credit: contains modified Copernicus Sentinel data (2024), processed by ESA

LOWER IMAGE: Brussels, Belgium, captured by Sentinel-1C. Credit: contains modified Copernicus Sentinel data (2024), processed by ESA

2 notes

·

View notes

Text

Cloud Computing in Healthcare: Adoption Trends & Competitive Landscape

The global healthcare cloud computing market size is anticipated to reach USD 45.1 billion by 2030, according to a new report by Grand View Research, Inc. The market is projected to grow at a CAGR of 12.7% from 2024 to 2030. The benefits of data analytics and the rising demand for flexible and scalable data storage among healthcare professionals are expected to drive demand for these services over the forecast period.
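To see what those two figures imply together, a compound annual growth rate can be run backwards to estimate the starting value. The sketch below assumes six annual compounding periods with 2024 as the base year; the report may define its base year differently, so treat the result as a rough check rather than a figure from the report.

```python
def implied_base_value(final_value: float, cagr: float, years: int) -> float:
    """Invert final = base * (1 + cagr) ** years to recover the base value."""
    return final_value / (1 + cagr) ** years


# USD 45.1 billion in 2030 at a 12.7% CAGR over an assumed 6 periods.
base_2024 = implied_base_value(45.1, 0.127, 6)
print(f"Implied 2024 market size: about USD {base_2024:.1f} billion")
```

Under these assumptions the market roughly doubles over the forecast period, since 1.127 raised to the sixth power is a little over 2.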

Healthcare organizations are digitalizing their IT infrastructure and deploying cloud servers to improve their systems. These solutions help organizations reduce infrastructure costs and interoperability issues and aid in complying with regulatory standards. Hence, rising demand from health professionals to curb IT infrastructure costs and limit space usage is anticipated to boost market growth over the forecast period.

An increase in government initiatives to develop and deploy IT systems in this industry is one of the key drivers of this market. Moreover, a growing number of partnerships between private and public players and the presence of many players offering customized solutions are among the factors anticipated to drive demand in the coming years.

Healthcare Cloud Computing Market Report Highlights

Nonclinical information systems dominated the market, accounting for a share of 50.7% in 2023. This can be attributed to the wider penetration of cloud computing services in applications such as fraud management, financial management, and healthcare information exchange.