#DataFormats

Data Engineering User Guide

#sql #database #language #query #schema #ddl #dml #analytics #engineering #distributedcomputing #dataengineering #science #news #technology #data #trends #tech #hadoop #spark #hdfs #bigdata

Even though learning data engineering is a daunting task, you can gain a clear understanding of the field by following a step-by-step approach. In this blog post, we will go over each step you can follow as a tutorial to understand data engineering and related topics. Concepts on Data: In this section, we will learn about data and its quality before…

E-Discovery Success: Best Practices for Electronic Data Discovery Explained

Introduction to Electronic Data Discovery (E-Discovery)

Electronic data discovery, commonly known as e-discovery, involves identifying and retrieving electronically stored information (ESI) pertinent to legal cases and investigations. The process is essential in the digital age, when vast amounts of electronic data are created and stored daily. Efficiently managing that data and finding what is relevant, which can be like finding a needle in a haystack, is crucial for legal proceedings, corporate lawsuits, and regulatory investigations.

Understanding Electronic Data Discovery

E-discovery entails locating, gathering, and producing ESI in response to judicial proceedings or investigations. ESI comes in many electronic formats, such as voicemails, documents, databases, audio and video files, social media, and web pages. In litigation, e-discovery is governed by established procedures and the applicable rules of civil procedure.

Example of E-Discovery in Action

An example of e-discovery is locating and gathering ESI relevant to a legal case. This can include emails, documents, databases, chat messages, social media posts, and web pages. Legal professionals utilize e-discovery software and tools to efficiently search, process, and review this electronic material. For instance, in a class-action lawsuit, e-discovery technologies can expedite the review process, allowing the legal team to classify and prioritize millions of documents swiftly using predictive coding.

Best Practices in E-Discovery

Establish Clear Processes and Workflows: Define and communicate clear roles and responsibilities within your e-discovery operations, including protocols for collecting, preserving, processing, reviewing, and producing data.

Stay Informed on Legal Requirements: Keep up to date with the laws, regulations, and rules governing e-discovery in your jurisdiction, including any updates or amendments, to ensure compliance.

Implement Strong Information Governance: Manage ESI effectively throughout its lifecycle by implementing robust information governance policies, including proper classification, retention, and disposal of data.

Leverage Technology and Tools: Utilize e-discovery software and technology solutions to streamline and automate various stages of the process, enhancing efficiency and accuracy.

Conduct Early Case Assessments: Perform early case assessments to understand the case and the ESI involved, allowing for a focused and cost-effective e-discovery strategy.

Maintain Quality Control: Implement quality control measures throughout the e-discovery process to ensure accuracy, consistency, and defensibility. Regularly validate search terms and use sampling techniques to verify data integrity.
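The sampling advice in the last point can be illustrated with a small script. This is a minimal sketch, not the method of any particular e-discovery platform: it assumes you already have the IDs of the documents that did not hit your search terms (sometimes called the null set), draws a reproducible simple random sample from them for manual review, and computes the share a reviewer marked as relevant. The function names, sample size, seed, and document IDs are all hypothetical.

```python
import random


def draw_qc_sample(doc_ids, sample_size, seed):
    """Draw a reproducible simple random sample of document IDs for manual QC review."""
    ids = sorted(doc_ids)        # sort first so the sample doesn't depend on input order
    rng = random.Random(seed)    # fixed seed, so the same sample can be re-drawn and documented
    return rng.sample(ids, min(sample_size, len(ids)))


def estimate_rate(sample, is_relevant):
    """Fraction of sampled documents that the reviewer marked as relevant."""
    if not sample:
        return 0.0
    return sum(1 for doc_id in sample if is_relevant(doc_id)) / len(sample)


# Usage: 10,000 documents that did not hit the search terms (hypothetical IDs).
null_set = [f"DOC-{n:05d}" for n in range(1, 10_001)]
sample = draw_qc_sample(null_set, sample_size=400, seed=2024)

# Stand-in for the reviewer's decisions on the sampled documents.
marked_relevant = {"DOC-00042", "DOC-07313"}
rate = estimate_rate(sample, lambda doc_id: doc_id in marked_relevant)
```

A noticeably non-zero rate suggests the search terms are missing relevant material and should be revisited; how large a sample is appropriate depends on the confidence level the matter requires.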

Challenges in E-Discovery

Volume and Complexity of Data: Managing the overwhelming amount of ESI, including emails, documents, and social media posts, can be challenging.

Data Preservation and Collection: Ensuring the integrity of preserved and collected data is crucial, especially when dealing with diverse data sources and multiple stakeholders.

Data Privacy and Security: Protecting sensitive information during the e-discovery process is vital, requiring compliance with data privacy regulations and robust security measures.

International and Cross-Border E-Discovery: Conducting e-discovery across different jurisdictions involves navigating varying laws, regulations, languages, and cultural differences, complicating the process.

Keeping Up with Technology and Tools: Staying current with evolving e-discovery technologies and tools can be challenging; this includes selecting the right software and handling diverse data formats.

Cost and Resource Management: E-discovery can be expensive, especially with large volumes of data and complex legal matters. Effective budget management, resource allocation, and cost control are essential.
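For the "Data Preservation and Collection" point, one widely used way to show that collected data has not changed is to record a cryptographic hash of each item at collection time and re-check it later. Below is a minimal sketch using SHA-256 from Python's standard library; the manifest format and function names are hypothetical, and real e-discovery tools keep this information in their own chain-of-custody records.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(paths):
    """Record a hash for every collected file at collection time."""
    return {str(p): sha256_of_file(p) for p in paths}


def verify_manifest(manifest):
    """Return the paths whose current hash no longer matches the recorded one."""
    return [p for p, expected in manifest.items() if sha256_of_file(p) != expected]


# Usage: hash a (hypothetical) collected item, then detect a later modification.
with tempfile.TemporaryDirectory() as tmp:
    doc = Path(tmp) / "email_001.eml"
    doc.write_bytes(b"abc")
    manifest = build_manifest([doc])
    recorded_hash = manifest[str(doc)]
    unchanged = verify_manifest(manifest)   # nothing altered yet
    doc.write_bytes(b"abd")                 # simulate an alteration
    changed = verify_manifest(manifest)
```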

Conclusion

In today's digital era, electronic data discovery is a dynamic, evolving process with both benefits and challenges. By implementing best practices, staying current with regulatory requirements, leveraging technology, and fostering collaboration, organizations can navigate these challenges effectively. Doing so helps ensure that relevant evidence is identified, enabling informed decisions and successful legal outcomes.

(YouTube video)

Code Like A Pro With ChatGPT: Create JSON, SQL, XML, RegEx, Shell, & YAML Commands!

#youtube #FreeTool #Programming #Shell #RegEx #SoftwareDevelopment #CodeSnippet #TechTips #ProgrammingTutorial #OpenAI #NaturalLanguageProcessing #MachineLearning #ArtificialIntelligence #ProductivityHacks #Automation #DataFormats #ProgrammingTips #CodeGeneration #ShellCommands #RegularExpressions #SQL #YAML #XML #JSON #ChatGPT

Outsourcing Data Formatting Services in India

To run its business well, an organization must manage its information resources effectively. Comprehensive data formatting services help you build a well-organized database of all your company's crucial information and ensure a high-quality outcome. Such services also remove redundant or worthless material to keep your files in order. With a variety of technology tools, current approaches, and quality assurance teams, providers can deliver high-quality data formatting services at an affordable price, and you can get all of this by outsourcing to Data Entry Expert.

To know more - https://www.dataentryexpert.com/data-processing/data-formatting-services.php

#dataformatting #dataformattingservices #dataformattingservice #dataformattingcompany #affordabledataformatting #bestdataformattingservices #outsourcingdataformattingservices #dataformattingservicesinindia #topdataformattingservices

#SpreadsheetSoftware #DataAnalysis #DataVisualization #FinancialModeling #DataManipulation #FormulasAndFunctions #DataEntry #DataFormatting #MicrosoftOffice #BusinessTools #Budgeting #ChartsAndGraphs #ExcelFormulas #Automation #Collaboration

Transforming Data into Actionable Insights with Domo

In today's data-driven world, organizations face the challenge of managing vast amounts of data from various sources and deriving meaningful insights from it. Domo, a powerful cloud-based platform, has emerged as a game-changer in the realm of business intelligence and data analytics. In this blog post, we will explore the capabilities of Domo and how it enables businesses to harness the full potential of their data.

What is Domo?

Domo is a cloud-based business intelligence and data analytics platform that empowers organizations to easily connect, prepare, visualize, and analyze their data in real-time. It offers a comprehensive suite of tools and features designed to streamline data operations and facilitate data-driven decision-making.

Key Features and Benefits:

Data Integration: Domo enables seamless integration with a wide range of data sources, including databases, spreadsheets, cloud services, and more. It simplifies the process of consolidating data from disparate sources, allowing users to gain a holistic view of their organization's data.

Data Preparation: With Domo, data preparation becomes a breeze. It offers intuitive data transformation capabilities, such as data cleansing, aggregation, and enrichment, without the need for complex coding. Users can easily manipulate and shape their data to suit their analysis requirements.

Data Visualization: Domo provides powerful visualization tools that allow users to create interactive dashboards, reports, and charts. It offers a rich library of visualization options and customization features, enabling users to present their data in a visually appealing and easily understandable manner.

Collaboration and Sharing: Domo fosters collaboration within organizations by providing a centralized platform for data sharing and collaboration. Users can share reports, dashboards, and insights with team members, fostering a data-driven culture and enabling timely decision-making across departments.

AI-Powered Insights: Domo leverages artificial intelligence and machine learning algorithms to uncover hidden patterns, trends, and anomalies in data. It provides automated insights and alerts, empowering users to proactively identify opportunities and mitigate risks.

Use Cases:

Sales and Marketing Analytics: Domo helps businesses analyze sales data, track marketing campaigns, and measure ROI. It provides real-time visibility into key sales metrics, customer segmentation, and campaign performance, enabling organizations to optimize their sales and marketing strategies.

Operations and Supply Chain Management: Domo enables organizations to gain actionable insights into their operations and supply chain. It helps identify bottlenecks, monitor inventory levels, track production metrics, and streamline processes for improved efficiency and cost savings.

Financial Analysis: Domo facilitates financial reporting and analysis by integrating data from various financial systems. It allows CFOs and finance teams to monitor key financial metrics, track budget vs. actuals, and perform advanced financial modeling to drive strategic decision-making.

Human Resources Analytics: Domo can be leveraged to analyze HR data, including employee performance, retention, and engagement. It provides HR professionals with valuable insights for talent management, workforce planning, and improving overall employee satisfaction.

Success Stories: Several organizations have witnessed significant benefits from adopting Domo. For example, a global retail chain utilized Domo to consolidate and analyze data from multiple stores, resulting in improved inventory management and optimized product placement. A technology startup leveraged Domo to analyze customer behavior and enhance its product offerings, leading to increased customer satisfaction and higher revenue.

Domo offers a powerful and user-friendly platform for organizations to unlock the full potential of their data. By providing seamless data integration, robust analytics capabilities, and collaboration features, Domo empowers businesses to make data-driven decisions and gain a competitive edge in today's fast-paced business landscape. Whether it's sales, marketing, operations, finance, or HR, Domo can revolutionize the way organizations leverage data to drive growth and innovation.

#DataCleaning #DataNormalization #DataIntegration #DataWrangling #DataReshaping #DataAggregation #DataPivoting #DataJoining #DataSplitting #DataFormatting #DataMapping #DataConversion #DataFiltering #DataSampling #DataImputation #DataScaling #DataEncoding #DataDeduplication #DataRestructuring #DataReformatting

Data Formats: 3D, Audio, Image

https://paulbourke.net/dataformats/

💡 Fun fact: Excel 2019 provides a range of formatting options to easily organize and enhance your data. With the auto format shortcut, you can effortlessly apply pre-designed styles to save time and make your spreadsheets visually appealing.

#bpa #bpaeducators

#ExcelTips #DataFormatting #TimeSaver

I will do accurate data entry and copy-paste work for you

Dear Friend,

Are you looking for someone to help you with data entry work? I am here to provide you with accurate and efficient data entry services that will help you save time and increase productivity. I have extensive experience in data entry, and I am committed to delivering high-quality work within the agreed timeframe.

Services I Offer:

Data entry from any source to Excel, Google Sheets, or any other database

Data organization and management to help you keep track of information.

Contacts and Email Research for Marketing and Business Purposes

Other custom data entry services as per your requirements.

Data scraping and mining from websites and PDFs.

Microsoft Excel Data Cleaning

PDF to Excel/CSV Conversion

Offline/Online Data Entry Job

Company Email Research

Social Media Research

Email Marketing Lists

Web Research Jobs

Virtual Assistance

Copy Paste Tasks

Excel data entry

Data Collection

Data Mining

Why hire me?

24/7 Online

Group Working

Unlimited Revisions

Guaranteed Data Quality

Efficient Turnaround Time

On-time delivery

Please message me before ordering the gig to get a better deal and avoid any confusion. Don't hesitate to contact me.

#virtualassistant #Validemail #datamining #webresearch #exceldataentry #dataentry #typingwork #jobcopypaste #Worktypinglogo #designconvertpdftowordbackground #removalcopywritingcopypaste job #emaillisting #All Categories #Validemail #CopyPaste #DataEntry #WebResearch #DataFormatting Cleaning #VirtualAssistant #VirtualAdministrationAssistant #ConvertFiles #DataProcessing #DataMining Scraping

Safeguarding Data Privacy: The Vital Role of Computer Security

How do you safeguard data privacy? #analytics #engineering #distributedcomputing #dataengineering #science #news #technology #data #trends #tech #hadoop #spark #hdfs #bigdata #communication #privacy #security #pii #internet

In today’s data-driven digital age, data plays a crucial role in our personal and professional lives. Everything we do generates data, both public and private. Data sets are generated by our networked devices, smart cars, payment systems, smart homes, shopping habits, and transportation systems. These data are used to gather…

Flatfile: The standard for data onboarding.

eDataMine offers outsourced data processing services at an affordable cost, including image processing, word processing, resume processing, and more, tailored to your requirements. Request a quote from eDataMine and claim your free trial.

#data processing services #dataprocessing #outsourcing #outsourcedataprocessing #image processing services #wordprocessing #resumeprocessing #formsprocessing #data cleansing services #dataformatting

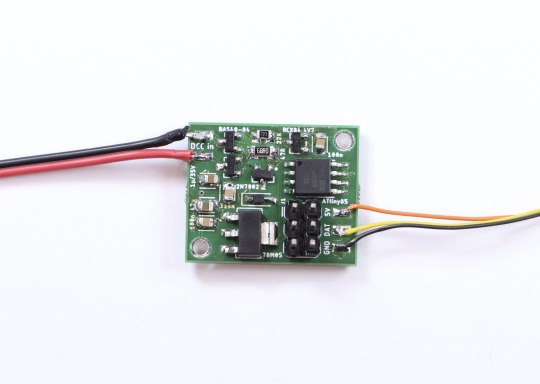

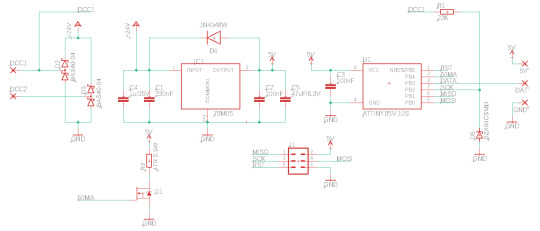

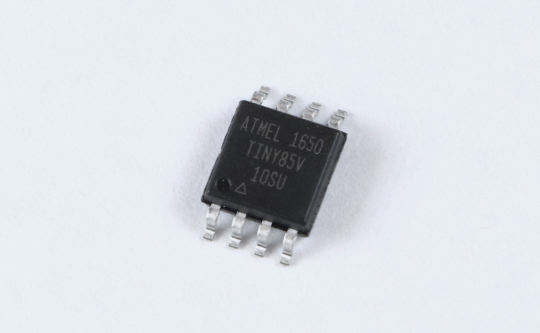

Over the past few weeks, I’ve built a model railroad signal for garden railways with a custom DCC decoder. Here’s what it looks like:

(YouTube video)

I’ve heard from a number of people that they thought this was interesting, but they didn’t really understand what I was doing. So today I’ll try to explain the core of it all, this circuit board:

And here’s the associated circuit diagram:

I’ll try to explain why this is the way it is and what it does.

(This whole explanation is aimed at people who have never done anything with electronics before. If that’s not you, then this may be a bit boring. Also, I didn’t come up with any of the parts of this. Most of this is based on things I learned by reading OpenDCC and Mikrocontroller.net. I’m sure I still made a lot of mistakes, though, and they’re definitely all mine and not the fault of anyone on these sites.)

The goal

First, let’s talk about requirements. My goal was to build an American “searchlight” signal. Such a signal has between one and three lamps. Thanks to a clever electromechanical design that moves different color filters around, each lamp can show up to three different colors, chosen from a total of four.

Replicating this system for a model railroad is not practical, so I need something else. Placing multiple colored LEDs next to each other wouldn’t work; they’re too big, and I want it all to look like one light source. There are LEDs that contain red, green, and blue in one housing, but those quickly require a lot of wires, all of which have to fit in the mast. The solution is this:

This is an “addressable” LED, better known as the “NeoPixel”, the name used by a large American online store. There are many variations from different manufacturers. The key thing is that each LED has a tiny control circuit built right in. It has four connections: plus five volts, minus, data in, and data out. If you have more than one, you can connect the data out of the first directly to the data in of the second, and so on. Connect the plus and minus lines as well, and you can control an almost unlimited number of LEDs with just three wires.

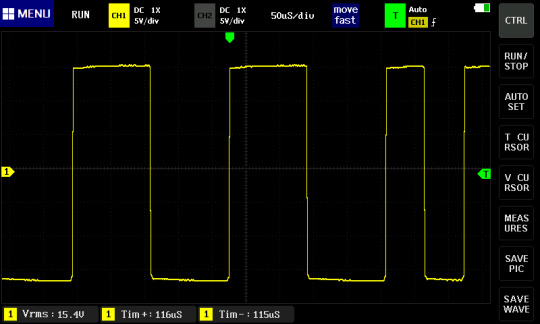
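Daisy-chaining works because each LED keeps the first chunk of data that reaches it and passes everything after that on through its data-out pin. Assuming WS2812-type LEDs that each take three bytes (one per color channel), that behaviour can be modelled in a few lines. This is a simulation for illustration only, not LED or microcontroller firmware, and the function name is made up.

```python
def simulate_chain(stream, num_leds, bytes_per_led=3):
    """Model how a daisy-chained strip shares one data stream.

    Each LED keeps the first `bytes_per_led` bytes that reach it and forwards
    the rest on its data-out pin to the next LED.
    """
    received = []
    remaining = bytes(stream)
    for _ in range(num_leds):
        own, remaining = remaining[:bytes_per_led], remaining[bytes_per_led:]
        if len(own) < bytes_per_led:
            break  # data ran out: this LED and the ones after it get no new color
        received.append(tuple(own))
    return received


# Usage: three LEDs, three bytes each. Bytes are kept in the order they were
# sent; on WS2812-type LEDs that order is green, red, blue.
stream = bytes([10, 20, 30, 40, 50, 60, 70, 80, 90])
per_led = simulate_chain(stream, num_leds=3)
```

Because every LED strips off its own share, the controller never needs to address LEDs individually; it just sends the colors in chain order.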

The data line uses a special protocol that you need to generate. Basically, you switch it from 0 to 5 volts and back again and again at a fixed rate; how long it stays at 5 volts (“high”) in each cycle determines whether you’re sending a 0 or a 1. These bits form bytes, which tell each LED which color value to display.
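To make the bit encoding concrete, here is a sketch of how one LED’s color turns into pulses on the data line. It assumes WS2812B-type LEDs, which expect 24 bits per LED in green, red, blue order, most significant bit first, and uses approximate timings from that family’s datasheet (about 0.4 µs high for a 0, 0.8 µs high for a 1, about 1.25 µs per bit). Other LED types and revisions differ, so check the datasheet for your part. On the ATtiny85 this has to happen with precise timing in the firmware; Python here only shows the encoding, and the function names are hypothetical.

```python
# Approximate timings in microseconds for WS2812B-type LEDs (assumed; check your datasheet).
T0H_US = 0.40         # high time that encodes a 0 bit
T1H_US = 0.80         # high time that encodes a 1 bit
BIT_PERIOD_US = 1.25  # high time + low time for one bit


def color_to_bits(red, green, blue):
    """Return the 24 bits for one LED: green, red, blue, each most significant bit first."""
    bits = []
    for value in (green, red, blue):
        if not 0 <= value <= 255:
            raise ValueError("each color channel must be 0-255")
        for shift in range(7, -1, -1):
            bits.append((value >> shift) & 1)
    return bits


def bits_to_pulses(bits):
    """Turn bits into (high_us, low_us) pairs for the data line."""
    pulses = []
    for bit in bits:
        high = T1H_US if bit else T0H_US
        pulses.append((high, round(BIT_PERIOD_US - high, 2)))
    return pulses


# Usage: full red for one LED.
bits = color_to_bits(255, 0, 0)
pulses = bits_to_pulses(bits)
```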

Because of this data format, you definitely need an electronic circuit to control the signal, and the first requirements for it are:

Provide five volts DC power

Generate the data for the LEDs in the correct format

The Input

There are a lot of options for designing the input side of things. In my case, I’m assuming the signal is electrically connected to the rails of a digitally controlled model railroad. With Digital Command Control (DCC), the voltage at the rails has a roughly constant magnitude of about 15 to 25 volts, higher for larger scales. Its polarity, however, flips constantly: first plus is on the left rail, then it moves to the right rail (and minus vice versa), and then back. It’s like AC in normal wall outlets, but with very abrupt changes instead of a smooth sine wave.

This voltage has two tasks. It supplies the locomotives with power, but it also transmits information. If one of these change-and-back sequences is long, it transmits a “0”; if it’s short, it transmits a “1”. These bits together form the bytes that make up messages such as “locomotive three, run at speed step 64” or “switch 10, change to direction left”.
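Telling long from short comes down to measuring the time between polarity flips. Below is a minimal sketch of that classification; the timing windows are based on my understanding of the decoder acceptance ranges in NMRA standard S-9.1 (roughly 52 to 64 µs for each half of a “1”, 90 to 10,000 µs for each half of a “0”), so verify them against the current standard. A real ATtiny85 decoder would measure these times with a timer and a pin-change interrupt; the Python and its function names only illustrate the logic.

```python
# Half-bit duration windows in microseconds, assumed from NMRA S-9.1 decoder limits.
ONE_MIN_US, ONE_MAX_US = 52, 64
ZERO_MIN_US, ZERO_MAX_US = 90, 10_000


def classify_half_bit(duration_us):
    """Return 1 or 0 for a time between two polarity changes, or None for noise."""
    if ONE_MIN_US <= duration_us <= ONE_MAX_US:
        return 1
    if ZERO_MIN_US <= duration_us <= ZERO_MAX_US:
        return 0
    return None


def half_bits_to_bits(durations_us):
    """Pair consecutive half-bits (one per polarity) into bits.

    A bit is accepted only if both halves fall in the same window; otherwise the
    reader slides forward by one half-bit to resynchronise.
    """
    bits = []
    i = 0
    while i + 1 < len(durations_us):
        first = classify_half_bit(durations_us[i])
        second = classify_half_bit(durations_us[i + 1])
        if first is not None and first == second:
            bits.append(first)
            i += 2
        else:
            i += 1
    return bits


# Usage: measured times between polarity flips.
bits = half_bits_to_bits([58, 58, 100, 100, 58, 58])
```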

This decoder uses both features. The digital voltage provides both the data and the power. For a locomotive, that is required since the only conductors you have are the rails. This is a stationary decoder, so I could have designed it so that it only uses digital commands, and gets the power from an external power supply. However, I wanted to use the least amount of cables, so I’m using the simple version.

With that, the requirements are fixed. The circuit has to:

Turn the digital power (15-25 Volts, AC-ish) into 5 Volts DC

Read and understand the digital data signal (decode it, hence the name “decoder”) and calculate the colors for the LEDs.
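Once the half-bits are turned into bits, the decoder has to find packets in them. Here is a minimal sketch of the packet framing as I understand it from the NMRA DCC communications standard (S-9.2): a preamble of at least ten “1” bits, a “0” packet start bit, data bytes separated by “0” bits, a final “1” packet end bit, and a last error-detection byte that is the XOR of all the others. The function names are hypothetical, and working out what the bytes mean (locomotive versus accessory addressing) is left out.

```python
def parse_packet(bits, min_preamble=10):
    """Return the bytes of the first valid DCC packet in a list of bits, or None.

    Framing: at least min_preamble 1-bits, a 0 start bit, then 8 data bits per
    byte, each byte followed by 0 (another byte follows) or 1 (packet end bit).
    """
    ones = 0
    for start, bit in enumerate(bits):
        if bit == 1:
            ones += 1
            continue
        if ones < min_preamble:
            ones = 0                      # a 0 without a full preamble: keep searching
            continue
        data = []
        pos = start + 1                   # first data bit after the packet start bit
        while pos + 9 <= len(bits):
            byte = 0
            for b in bits[pos:pos + 8]:   # most significant bit first
                byte = (byte << 1) | b
            data.append(byte)
            end_marker = bits[pos + 8]
            pos += 9
            if end_marker == 1:
                return data if checksum_ok(data) else None
        return None                       # stream ended before the packet end bit
    return None


def checksum_ok(data):
    """The last byte is the XOR of all others, so XOR over every byte must be 0."""
    if len(data) < 3:                     # shortest packet: address, instruction, error byte
        return False
    result = 0
    for byte in data:
        result ^= byte
    return result == 0


def build_packet_bits(data, preamble=14):
    """Hypothetical test helper: frame bytes the way a command station would."""
    bits = [1] * preamble
    for byte in data:
        bits.append(0)                    # start bit before the first byte, separator before the rest
        bits.extend((byte >> shift) & 1 for shift in range(7, -1, -1))
    bits.append(1)                        # packet end bit
    return bits


# Usage: address 3, one instruction byte, and the matching error byte.
packet = parse_packet(build_packet_bits([3, 0x76, 3 ^ 0x76]))
```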

Computation

This calculation is the real key here. The digital signal has a completely different format from what the LEDs expect. It’s slower, and it also carries a completely different meaning. At best it transmits “set switch or signal 10 to state 0”. Which color values are associated with that, let alone any blending to make it look nice, are things the signal has to decide for itself. There is no way to build a simple, dumb adapter here; I need a complete computer.
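How a commanded state becomes colors is entirely up to the decoder’s own program. Here is a minimal sketch, assuming a hypothetical table that maps state numbers to RGB values and a simple linear fade between them; the actual colors, states, and blending used in this signal are not described in the post. A linear ramp in RGB values may not look perfectly even, since LED brightness isn’t perceived linearly.

```python
# Hypothetical table: RGB color for each commanded state (not from the original post).
STATE_COLORS = {
    0: (255, 0, 0),     # e.g. red
    1: (0, 255, 0),     # e.g. green
    2: (255, 160, 0),   # e.g. yellow
}


def blend(start, end, step, total_steps):
    """Linearly interpolate between two RGB colors; step goes from 0 to total_steps."""
    t = step / total_steps
    return tuple(round(a + (b - a) * t) for a, b in zip(start, end))


def fade_sequence(old_state, new_state, total_steps=4):
    """Colors to send to the LED, one per update, when the state changes."""
    start = STATE_COLORS[old_state]
    end = STATE_COLORS[new_state]
    return [blend(start, end, s, total_steps) for s in range(total_steps + 1)]


# Usage: a command like "set signal 10 to state 1" arrives while showing state 0.
frames = fade_sequence(0, 1)
```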

Luckily, you can get those cheaply, and really tiny.

The ATtiny85 costs about 1€ depending on how many you order, and it’s smaller than a one-cent coin (in basically any currency, I think), but from a technical point of view it is essentially a full computer. It has all the important parts, anyway: a CPU that can run at up to 20 MHz (depending on the version), half a kilobyte of RAM, and eight kilobytes of internal storage for the program. Running multiple programs is a bit of a challenge. If you know Arduinos, the ATtiny85 is related to the ATmega328p in the Arduino Uno and Nano: far less powerful, but cheaper and significantly smaller.

What it lacks are all the surroundings like keyboard and screen for input and output. The chip is designed for applications where this isn’t needed, or at least only minimal things. The software that you write can assign each pin (okay, five out of eight) freely for different tasks: The pin can work as an input, telling the software whether there’s a low or high level of voltage at it (meaning 0 or 5 Volts), or it can work as an output and write high or low values, meaning setting the pin explicitly to 0 or 5 Volts.

There are other options for the pins as well; among other things, they can read analog voltages and, to some extent, generate them. But for this task I only need simple digital high/low inputs and outputs.

These types of chips, known as microcontrollers, exist in thousands of variations from different manufacturers, with very different performance characteristics. They are the key part of basically everything that’s digitally controlled these days: washing machines, everything that plugs into a computer (including every single Apple Lightning cable), TVs, TV remotes, an amazing number of parts in cars, and so on. The ATtiny85 is, as the name implies, very much at the low end of the scale (though there are smaller ones), and even here it is a bit out of date. But it is very easy to program and very forgiving of mistakes, which makes it great for hobby projects.

To run, this chip needs around 3-5 Volts DC (some versions like the one here can also run on a bit less) and exactly one capacitor. I’m already generating 5 Volts DC for the LEDs anyway, so this chip will get them as well. That means for all the calculation, only two pieces of hardware are required.

There is some more associated hardware, though, for getting the program (which I’ve written myself) on the chip. For that you need a programmer, a device that you can buy for some money, or make yourself astonishingly easily from an Arduino. It needs to be connected with six wires to the chip. The standard for this is with a six-pin plug, which I’ve thus included here as well. There are standard six-wire cables for this.

You could connect the cables differently, for example with some sort of spring-loaded contacts on some programming circuit board you’d have to build for that, or in the worst case, just temporarily solder the cables in there. But the plug version is both simple and convenient, with the only downside that it makes the circuit a bit more pointy.

(Due to the Tumblr image limit, the next part will have to be in a reblog)

A BioWare Community Manager made an announcement on the Mass Effect modding community Discord about a resource for MELE modders. Having spoken to some modders, BioWare have put together a helpful document and some other files for modding toolset developers to refer to when building modding tools [source].

MELE_ModdingSupport

This project contains files to assist the Mass Effect: Legendary Edition modding community. Contained within are SQL scripts for creating plot databases and various data format descriptions in the form of header files.

Plot databases

Inside the PlotDatabases folder you will find scripts for re-creating the plot database for each title.

Data Formats

Inside the DataFormats folder you will find header files that describe the layout of data formats useful for modding. Note that structures are laid out in the order in which data is serialized and they are not meant to indicate how the data is organized in memory. [github link]
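The note about serialization order matters when writing a reader: you consume fields one after another from the byte stream instead of overlaying a C struct that the compiler may pad. Below is a minimal sketch in Python with a made-up three-field record and an assumed little-endian encoding; neither the record nor the byte order is taken from the MELE header files.

```python
import struct
from dataclasses import dataclass


@dataclass
class ExampleRecord:
    """Hypothetical record; not a structure from the MELE header files."""
    record_id: int
    flags: int
    weight: float


# "<" = little-endian with no padding, so fields are read back-to-back in the
# order they were serialized: int32, uint8, float32 (9 bytes in total).
RECORD_FORMAT = "<iBf"
RECORD_SIZE = struct.calcsize(RECORD_FORMAT)


def read_records(blob, count, offset=0):
    """Read `count` records sequentially; return them and the offset after the last one."""
    records = []
    for _ in range(count):
        record_id, flags, weight = struct.unpack_from(RECORD_FORMAT, blob, offset)
        records.append(ExampleRecord(record_id, flags, weight))
        offset += RECORD_SIZE
    return records, offset


# Usage: two records written back-to-back.
blob = struct.pack(RECORD_FORMAT, 7, 1, 0.5) + struct.pack(RECORD_FORMAT, 8, 0, 2.0)
records, end = read_records(blob, 2)
```

A C compiler would typically insert padding after the one-byte field so that the float is aligned, which is why a serialized layout like this cannot simply be copied byte-for-byte into an in-memory struct.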

Web Research Services: Increase Your Company's Profits and Reduce In-House or Administration Costs up to 65%

#Hirinfotech #webresearch #internetresearch #datamanagement #datamining #dataformatting #dataprocessing #marketresearch #productresearch #socialmediaresearch #ecommerceproductresearch #documentresearch #eventresearch #leadresearch #websearch #VA #dataentry
