#Create-Sql-Database-Online

Database-Management-System, Create-Sql-Database-Online, Online-Sql-Generator, Sql-Code-Generator, Sql-Data-Generator

Creating an SQL database has become increasingly accessible thanks to modern tools and platforms. Whether you’re a beginner or an experienced developer, the ability to create an SQL database online allows for faster setup and management, often with minimal installation or configuration required. In this guide, we’ll walk you through the step-by-step process of creating an SQL database online and introduce how using an online SQL generator can simplify this task.

Step 1: Choose the Right Online SQL Platform

The first step in creating an SQL database online is choosing a platform that best suits your needs. Several platforms offer cloud-based database creation and management, such as:

Amazon RDS (Relational Database Service)

Google Cloud SQL

Microsoft Azure SQL Database

Heroku Postgres

MySQL Database Service (MDS)

These platforms allow you to create, manage, and scale SQL databases without requiring local infrastructure.

Step 2: Set Up an Account on the Platform

Once you’ve chosen your platform, the next step is to sign up for an account. Most of these services offer free trials or free tiers, which can be useful for testing purposes.

Create an account on the chosen platform by providing necessary details.

Verify your email address to activate your account.

Once activated, you can proceed to the dashboard where you’ll create your database.

Step 3: Create a New SQL Database

With access to the platform, the next step is to create an SQL database online. Each platform’s interface will vary slightly, but here’s a general guide to the process:

Navigate to the database management section of the platform.

Click on the "Create Database" option or similar action button.

Name your database — choose a meaningful name for easy identification.

Select the database type (e.g., MySQL, PostgreSQL, SQL Server) based on your project’s needs.

Configure settings such as database size, storage, and region (if applicable).

Click Create or Submit to generate your SQL database.

Step 4: Use an Online SQL Generator to Create Tables and Queries

Once your database is created, you’ll need to define its structure. This is where an online SQL generator becomes helpful. These tools automatically generate SQL queries for creating tables, inserting data, and more, saving you time and reducing the chance of errors.

Some popular online tools for writing and testing SQL include:

SQLFiddle

DB Fiddle

Instant SQL Formatter

How to Use an Online SQL Generator:

Select the SQL type (e.g., MySQL, PostgreSQL) in the generator tool.

Input the structure for the tables, including column names, data types, and constraints.

The tool will generate SQL queries based on your input.

Copy the generated SQL code and run it in your SQL platform to create tables or insert data into your database.

For example, if you need to create a table for users, you might input the following details:

Table Name: Users

Columns: id (INT, PRIMARY KEY), name (VARCHAR), email (VARCHAR), created_at (TIMESTAMP)

The online SQL generator will output something like this:

CREATE TABLE Users (
    id INT PRIMARY KEY,
    name VARCHAR(100),
    email VARCHAR(100),
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);

You can then execute this query on your platform to create the table.
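Before running the statement on a hosted platform, it can help to try it locally. The following is a minimal sketch using Python's built-in sqlite3 module; the in-memory SQLite database is only a stand-in for whichever engine your platform provides, and the sample row is hypothetical.

```python
import sqlite3

# In-memory SQLite stands in for the hosted database (an assumption for this sketch).
conn = sqlite3.connect(":memory:")

conn.execute(
    """
    CREATE TABLE Users (
        id INT PRIMARY KEY,
        name VARCHAR(100),
        email VARCHAR(100),
        created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
    )
    """
)

# Hypothetical sample row; created_at is filled in by the DEFAULT clause.
conn.execute(
    "INSERT INTO Users (id, name, email) VALUES (?, ?, ?)",
    (1, "Ada", "ada@example.com"),
)
conn.commit()

row = conn.execute("SELECT id, name, email, created_at FROM Users").fetchone()
print(row)
```

Type names and default expressions differ slightly between engines, so treat a local run as a syntax check rather than a guarantee that the hosted database will behave identically.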

Step 5: Connect to Your SQL Database

After creating your database and defining its structure, you’ll need to connect your application to the database. Most online SQL platforms provide you with connection details, including:

Hostname

Port number

Username and password

Database name

Using these credentials, you can connect to your database from any SQL client (such as MySQL Workbench or pgAdmin) or programmatically through your application’s backend.
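Many clients and drivers accept these details as a single connection URL. The sketch below shows one way to assemble such a URL in Python; the helper name build_connection_url, the "postgresql" scheme, and every value passed in are placeholders, and the exact URL format your driver expects may differ.

```python
from urllib.parse import quote


def build_connection_url(scheme, username, password, hostname, port, database):
    """Assemble a connection URL from the details a platform typically provides."""
    # Percent-encode credentials so characters such as '@' or '/' do not break the URL.
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"{scheme}://{user}:{secret}@{hostname}:{port}/{database}"


# All values below are placeholders, not real credentials.
url = build_connection_url(
    scheme="postgresql",
    username="app_user",
    password="p@ss/word",
    hostname="db.example.com",
    port=5432,
    database="appdb",
)
print(url)
```

In practice the password would usually come from an environment variable or a secrets manager rather than being written into source code.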

Step 6: Test and Manage Your Database

Now that your database is up and running, it’s important to test and manage it. You can start by:

Running sample queries from your SQL client or the platform’s query console to ensure everything is set up correctly.

Inserting sample data to validate the structure and ensure the database handles data as expected.

Using the platform’s dashboard to monitor the database’s performance, including storage usage, connection times, and more.
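The first two checks above can be scripted. This is a rough sketch that again uses an in-memory SQLite database as a local stand-in for the hosted one; the sample rows are made up, and the two checks shown (row count and duplicate-key rejection) are only examples of what you might validate.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # local stand-in for the hosted database
conn.execute(
    "CREATE TABLE Users (id INT PRIMARY KEY, name VARCHAR(100), email VARCHAR(100))"
)

# Insert hypothetical sample rows.
sample_users = [(1, "Ada", "ada@example.com"), (2, "Grace", "grace@example.com")]
conn.executemany("INSERT INTO Users (id, name, email) VALUES (?, ?, ?)", sample_users)

# Check 1: the row count matches what was inserted.
count = conn.execute("SELECT COUNT(*) FROM Users").fetchone()[0]

# Check 2: the primary key rejects a duplicate id.
try:
    conn.execute(
        "INSERT INTO Users (id, name, email) VALUES (1, 'Dup', 'dup@example.com')"
    )
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True

print(count, duplicate_rejected)
```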

Conclusion

Creating an SQL database online is a straightforward process thanks to the variety of platforms and tools available today. By selecting the right platform, leveraging an online SQL generator for writing queries, and carefully managing your database, you can ensure your project is set up for success. Whether you’re working on a small project or developing an enterprise-level solution, creating your database online allows you to scale and adapt easily without the need for complex local setups. Now that you know how to create an SQL database online, it’s time to put it into action and start building your project’s data infrastructure!

#Database-Management-System#Create-Sql-Database-Online#Online-Sql-Generator#Sql-Code-Generator#Sql-Data-Generator

Database design and management course and Assignment help

Contact me through : [email protected]

I will provide advice and assistance in your database and system design course. I will handle everything, including:

Normalization

Database design (ERD, use case, and concept diagrams, etc.)

Database development (SQL and SQLite)

Database manipulation

Documentation

#database assignment#assignment help#SQL#sqlserver#Microsoft SQL server#INSERT#UPDATE#DELETE#CREATE#college student#online tutoring#online learning#assignmentwriting#Access projects#Database and access Final exams

Want to build an online spreadsheet database without coding? An online database is a web-based application that stores and organizes information. Trunao presents a step-by-step guide to setting up an online database management system with no coding. Read the easy steps!

#convert excel to database#create sql database from excel#online database#online database management system#online database without coding#spreadsheet to online database#spreadsheets and databases

The flood of text messages started arriving early this year. They carried a similar thrust: The United States Postal Service is trying to deliver a parcel but needs more details, including your credit card number. All the messages pointed to websites where the information could be entered.

Like thousands of others, security researcher Grant Smith got a USPS package message. Many of his friends had received similar texts. A couple of days earlier, he says, his wife called him and said she’d inadvertently entered her credit card details. With little going on after the holidays, Smith began a mission: Hunt down the scammers.

Over the course of a few weeks, Smith tracked down the Chinese-language group behind the mass-smishing campaign, hacked into their systems, collected evidence of their activities, and started a months-long process of gathering victim data and handing it to USPS investigators and a US bank, allowing people’s cards to be protected from fraudulent activity.

In total, people entered 438,669 unique credit cards into 1,133 domains used by the scammers, says Smith, a red team engineer and the founder of offensive cybersecurity firm Phantom Security. Many people entered multiple cards each, he says. More than 50,000 email addresses were logged, including hundreds of university email addresses and 20 military or government email domains. The victims were spread across the United States—California, the state with the most, had 141,000 entries—with more than 1.2 million pieces of information being entered in total.

“This shows the mass scale of the problem,” says Smith, who is presenting his findings at the Defcon security conference this weekend and previously published some details of the work. But the scale of the scamming is likely to be much larger, Smith says, as he didn't manage to track down all of the fraudulent USPS websites, and the group behind the efforts have been linked to similar scams in at least half a dozen other countries.

Gone Phishing

Chasing down the group didn’t take long. Smith started investigating the smishing text message he received by looking into the dodgy domain and intercepting traffic from the website. A path traversal vulnerability, coupled with a SQL injection, he says, allowed him to grab files from the website’s server and read data from the database being used.

“I thought there was just one standard site that they all were using,” Smith says. Diving into the data from that initial website, he found the name of a Chinese-language Telegram account and channel, which appeared to be selling a smishing kit scammers could use to easily create the fake websites.

Details of the Telegram username were previously published by cybersecurity company Resecurity, which calls the scammers the “Smishing Triad.” The company had previously found a separate SQL injection in the group’s smishing kits and provided Smith with a copy of the tool. (The Smishing Triad had fixed the previous flaw and started encrypting data, Smith says.)

“I started reverse engineering it, figured out how everything was being encrypted, how I could decrypt it, and figured out a more efficient way of grabbing the data,” Smith says. From there, he says, he was able to break administrator passwords on the websites—many had not been changed from the default “admin” username and “123456” password—and began pulling victim data from the network of smishing websites in a faster, automated way.

Smith trawled Reddit and other online sources to find people reporting the scam and the URLs being used, which he subsequently published. Some of the websites running the Smishing Triad’s tools were collecting thousands of people’s personal information per day, Smith says. Among other details, the websites would request people’s names, addresses, payment card numbers and security codes, phone numbers, dates of birth, and bank websites. This level of information can allow a scammer to make purchases online with the credit cards. Smith says his wife quickly canceled her card, but noticed that the scammers still tried to use it, for instance, with Uber. The researcher says he would collect data from a website and return to it a few hours later, only to find hundreds of new records.

The researcher provided the details to a bank that had contacted him after seeing his initial blog posts. Smith declined to name the bank. He also reported the incidents to the FBI and later provided information to the United States Postal Inspection Service (USPIS).

Michael Martel, a national public information officer at USPIS, says the information provided by Smith is being used as part of an ongoing USPIS investigation and that the agency cannot comment on specific details. “USPIS is already actively pursuing this type of information to protect the American people, identify victims, and serve justice to the malicious actors behind it all,” Martel says, pointing to advice on spotting and reporting USPS package delivery scams.

Initially, Smith says, he was wary about going public with his research, as this kind of “hacking back” falls into a “gray area”: It may be breaking the Computer Fraud and Abuse Act, a sweeping US computer-crimes law, but he’s doing it against foreign-based criminals. Something he is definitely not the first, or last, to do.

Multiple Prongs

The Smishing Triad is prolific. In addition to using postal services as lures for their scams, the Chinese-speaking group has targeted online banking, ecommerce, and payment systems in the US, Europe, India, Pakistan, and the United Arab Emirates, according to Shawn Loveland, the chief operating officer of Resecurity, which has consistently tracked the group.

The Smishing Triad sends between 50,000 and 100,000 messages daily, according to Resecurity’s research. Its scam messages are sent using SMS or Apple’s iMessage, the latter being encrypted. Loveland says the Triad is made up of two distinct groups—a small team led by one Chinese hacker that creates, sells, and maintains the smishing kit, and a second group of people who buy the scamming tool. (A backdoor in the kit allows the creator to access details of administrators using the kit, Smith says in a blog post.)

“It’s very mature,” Loveland says of the operation. The group sells the scamming kit on Telegram for a $200-per-month subscription, and this can be customized to show the organization the scammers are trying to impersonate. “The main actor is Chinese communicating in the Chinese language,” Loveland says. “They do not appear to be hacking Chinese language websites or users.” (In communications with the main contact on Telegram, the individual claimed to Smith that they were a computer science student.)

The relatively low monthly subscription cost for the smishing kit means it’s highly likely, with the number of credit card details scammers are collecting, that those using it are making significant profits. Loveland says using text messages that immediately send people a notification is a more direct and more successful way of phishing, compared to sending emails with malicious links included.

As a result, smishing has been on the rise in recent years. But there are some tell-tale signs: If you receive a message from a number or email you don't recognize, if it contains a link to click on, or if it wants you to do something urgently, you should be suspicious.

The Great Data Cleanup: A Database Design Adventure

As a budding database engineer, I found myself in a situation that was both daunting and hilarious. Our company's application was running slower than a turtle in peanut butter, and no one could figure out why. That is, until I decided to take a closer look at the database design.

It all began when my boss, a stern woman with a penchant for dramatic entrances, stormed into my cubicle. "Listen up, rookie," she barked (despite the fact that I was quite experienced by this point). "The marketing team is in an uproar over the app's performance. Think you can sort this mess out?"

Challenge accepted! I cracked my knuckles, took a deep breath, and dove headfirst into the database, ready to untangle the digital spaghetti.

The schema was a sight to behold—if you were a fan of chaos, that is. Tables were crammed with redundant data, and the relationships between them made as much sense as a platypus in a tuxedo.

"Okay," I told myself, "time to unleash the power of database normalization."

First, I identified the main entities—clients, transactions, products, and so forth. Then, I dissected each entity into its basic components, ruthlessly eliminating any unnecessary duplication.

For example, the original "clients" table was a hot mess. It had fields for the client's name, address, phone number, and email, but it also inexplicably included fields for the account manager's name and contact information. Data redundancy alert!

So, I created a new "account_managers" table to store all that information, and linked the clients back to their account managers using a foreign key. Boom! Normalized.

Next, I tackled the transactions table. It was a jumble of product details, shipping info, and payment data. I split it into three distinct tables—one for the transaction header, one for the line items, and one for the shipping and payment details.

"This is starting to look promising," I thought, giving myself an imaginary high-five.

After several more rounds of table splitting and relationship building, the database was looking sleek, streamlined, and ready for action. I couldn't wait to see the results.

Sure enough, the next day, when the marketing team tested the app, it was like night and day. The pages loaded in a flash, and the users were practically singing my praises (okay, maybe not singing, but definitely less cranky).

My boss, who was not one for effusive praise, gave me a rare smile and said, "Good job, rookie. I knew you had it in you."

From that day forward, I became the go-to person for all things database-related. And you know what? I actually enjoyed the challenge. It's like solving a complex puzzle, but with a lot more coffee and SQL.

So, if you ever find yourself dealing with a sluggish app and a tangled database, don't panic. Grab a strong cup of coffee, roll up your sleeves, and dive into the normalization process. Trust me, your users (and your boss) will be eternally grateful.

Step-by-Step Guide to Database Normalization

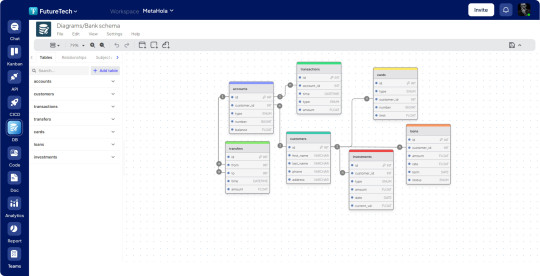

Here's the step-by-step process I used to normalize the database and resolve the performance issues. I used an online database design tool to visualize this design. Here's what I did:

Original Clients Table:

ClientID int

ClientName varchar

ClientAddress varchar

ClientPhone varchar

ClientEmail varchar

AccountManagerName varchar

AccountManagerPhone varchar

Step 1: Separate the Account Managers information into a new table:

AccountManagers Table:

AccountManagerID int

AccountManagerName varchar

AccountManagerPhone varchar

Updated Clients Table:

ClientID int

ClientName varchar

ClientAddress varchar

ClientPhone varchar

ClientEmail varchar

AccountManagerID int
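Step 1 can be sketched as a small migration. The example below is an illustration only: it uses Python's sqlite3 module with an in-memory database, the ClientsOld name and the sample rows are made up, and the client contact columns are left out to keep it short.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Original, denormalized table (contact columns trimmed for brevity).
    CREATE TABLE ClientsOld (
        ClientID INTEGER PRIMARY KEY,
        ClientName TEXT,
        AccountManagerName TEXT,
        AccountManagerPhone TEXT
    );
    INSERT INTO ClientsOld VALUES
        (1, 'Acme', 'Dana', '555-0100'),
        (2, 'Globex', 'Dana', '555-0100'),
        (3, 'Initech', 'Lee', '555-0101');

    CREATE TABLE AccountManagers (
        AccountManagerID INTEGER PRIMARY KEY,
        AccountManagerName TEXT,
        AccountManagerPhone TEXT
    );
    CREATE TABLE Clients (
        ClientID INTEGER PRIMARY KEY,
        ClientName TEXT,
        AccountManagerID INTEGER REFERENCES AccountManagers(AccountManagerID)
    );

    -- One row per distinct manager.
    INSERT INTO AccountManagers (AccountManagerName, AccountManagerPhone)
    SELECT DISTINCT AccountManagerName, AccountManagerPhone FROM ClientsOld;

    -- Clients keep only a key pointing at their manager.
    INSERT INTO Clients (ClientID, ClientName, AccountManagerID)
    SELECT c.ClientID, c.ClientName, m.AccountManagerID
    FROM ClientsOld AS c
    JOIN AccountManagers AS m
      ON m.AccountManagerName = c.AccountManagerName
     AND m.AccountManagerPhone = c.AccountManagerPhone;
    """
)

manager_count = conn.execute("SELECT COUNT(*) FROM AccountManagers").fetchone()[0]
client_count = conn.execute("SELECT COUNT(*) FROM Clients").fetchone()[0]
print(manager_count, client_count)
```

Three clients end up sharing two manager rows, so a manager's phone number now lives in exactly one place.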

Step 2: Separate the Transactions information into a new table:

Transactions Table:

TransactionID int

ClientID int

TransactionDate date

ShippingAddress varchar

ShippingPhone varchar

PaymentMethod varchar

PaymentDetails varchar

Step 3: Separate the Transaction Line Items into a new table:

TransactionLineItems Table:

LineItemID int

TransactionID int

ProductID int

Quantity int

UnitPrice decimal

Step 4: Create a separate table for Products:

Products Table:

ProductID int

ProductName varchar

ProductDescription varchar

UnitPrice decimal

After these normalization steps, the database structure was much cleaner and more efficient. Here's how the relationships between the tables would look:

AccountManagers --< Clients

Clients --< Transactions

Transactions --< TransactionLineItems

Products --< TransactionLineItems

By separating the data into these normalized tables, we eliminated data redundancy, improved data integrity, and made the database more scalable. The application's performance should now be significantly faster, as the database can efficiently retrieve and process the data it needs.
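To make the table layout concrete, here is a hedged sketch of part of the schema with foreign keys, plus a join that totals one transaction. It runs on an in-memory SQLite database through Python's sqlite3 module; the column types are simplified, the shipping and payment columns are omitted, and all rows are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(
    """
    CREATE TABLE Clients (ClientID INTEGER PRIMARY KEY, ClientName TEXT);
    CREATE TABLE Products (ProductID INTEGER PRIMARY KEY, ProductName TEXT, UnitPrice REAL);
    CREATE TABLE Transactions (
        TransactionID INTEGER PRIMARY KEY,
        ClientID INTEGER NOT NULL REFERENCES Clients(ClientID),
        TransactionDate TEXT
    );
    CREATE TABLE TransactionLineItems (
        LineItemID INTEGER PRIMARY KEY,
        TransactionID INTEGER NOT NULL REFERENCES Transactions(TransactionID),
        ProductID INTEGER NOT NULL REFERENCES Products(ProductID),
        Quantity INTEGER,
        UnitPrice REAL
    );
    INSERT INTO Clients VALUES (1, 'Acme');
    INSERT INTO Products VALUES (10, 'Widget', 2.5), (11, 'Gadget', 4.0);
    INSERT INTO Transactions VALUES (100, 1, '2024-01-15');
    INSERT INTO TransactionLineItems VALUES (1, 100, 10, 4, 2.5), (2, 100, 11, 1, 4.0);
    """
)

# Total each transaction by joining the header, the client, and the line items.
query = """
    SELECT c.ClientName, t.TransactionID, SUM(li.Quantity * li.UnitPrice) AS Total
    FROM Transactions AS t
    JOIN Clients AS c ON c.ClientID = t.ClientID
    JOIN TransactionLineItems AS li ON li.TransactionID = t.TransactionID
    GROUP BY c.ClientName, t.TransactionID
"""
rows = conn.execute(query).fetchall()
print(rows)
```

Normalized tables need joins like this at read time, so indexes on the foreign key columns are usually worth considering alongside the schema change.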

Conclusion

After a whirlwind week of wrestling with spreadsheets and SQL queries, the database normalization project was complete. I leaned back, took a deep breath, and admired my work.

The previously chaotic mess of data had been transformed into a sleek, efficient database structure. Redundant information was a thing of the past, and the performance was snappy.

I couldn't wait to show my boss the results. As I walked into her office, she looked up with a hopeful glint in her eye.

"Well, rookie," she began, "any progress on that database issue?"

I grinned. "Absolutely. Let me show you."

I pulled up the new database schema on her screen, walking her through each step of the normalization process. Her eyes widened with every explanation.

"Incredible! I never realized database design could be so... detailed," she exclaimed.

When I finished, she leaned back, a satisfied smile spreading across her face.

"Fantastic job, rookie. I knew you were the right person for this." She paused, then added, "I think this calls for a celebratory lunch. My treat. What do you say?"

I didn't need to be asked twice. As we headed out, a wave of pride and accomplishment washed over me. It had been hard work, but the payoff was worth it. Not only had I solved a critical issue for the business, but I'd also cemented my reputation as the go-to database guru.

From that day on, whenever performance issues or data management challenges cropped up, my boss would come knocking. And you know what? I didn't mind one bit. It was the perfect opportunity to flex my normalization muscles and keep that database running smoothly.

So, if you ever find yourself in a similar situation—a sluggish app, a tangled database, and a boss breathing down your neck—remember: normalization is your ally. Embrace the challenge, dive into the data, and watch your application transform into a lean, mean, performance-boosting machine.

And don't forget to ask your boss out for lunch. You've earned it!

How to Transition from Biotechnology to Bioinformatics: A Step-by-Step Guide

Biotechnology and bioinformatics are closely linked fields, but shifting from a wet lab environment to a computational approach requires strategic planning. Whether you are a student or a professional looking to make the transition, this guide will provide a step-by-step roadmap to help you navigate the shift from biotechnology to bioinformatics.

Why Transition from Biotechnology to Bioinformatics?

Bioinformatics is revolutionizing life sciences by integrating biological data with computational tools to uncover insights in genomics, proteomics, and drug discovery. The field offers diverse career opportunities in research, pharmaceuticals, healthcare, and AI-driven biological data analysis.

If you are skilled in laboratory techniques but wish to expand your expertise into data-driven biological research, bioinformatics is a rewarding career choice.

Step-by-Step Guide to Transition from Biotechnology to Bioinformatics

Step 1: Understand the Basics of Bioinformatics

Before making the switch, it’s crucial to gain a foundational understanding of bioinformatics. Here are key areas to explore:

Biological Databases – Learn about major databases like GenBank, UniProt, and Ensembl.

Genomics and Proteomics – Understand how computational methods analyze genes and proteins.

Sequence Analysis – Familiarize yourself with tools like BLAST, Clustal Omega, and FASTA.

🔹 Recommended Resources:

Online courses on Coursera, edX, or Khan Academy

Books like Bioinformatics for Dummies or Understanding Bioinformatics

Websites like NCBI, EMBL-EBI, and Expasy

Step 2: Develop Computational and Programming Skills

Bioinformatics heavily relies on coding and data analysis. You should start learning:

Python – Widely used in bioinformatics for data manipulation and analysis.

R – Great for statistical computing and visualization in genomics.

Linux/Unix – Basic command-line skills are essential for working with large datasets.

SQL – Useful for querying biological databases.

🔹 Recommended Online Courses:

Python for Bioinformatics (Udemy, DataCamp)

R for Genomics (HarvardX)

Linux Command Line Basics (Codecademy)

Step 3: Learn Bioinformatics Tools and Software

To become proficient in bioinformatics, you should practice using industry-standard tools:

Bioconductor – R-based tool for genomic data analysis.

Biopython – A powerful Python library for handling biological data.

GROMACS – Molecular dynamics simulation tool.

Rosetta – Protein modeling software.

🔹 How to Learn?

Join open-source projects on GitHub

Take part in hackathons or bioinformatics challenges on Kaggle

Explore free platforms like Galaxy Project for hands-on experience

Step 4: Work on Bioinformatics Projects

Practical experience is key. Start working on small projects such as:

✅ Analyzing gene sequences from NCBI databases

✅ Predicting protein structures using AlphaFold

✅ Visualizing genomic variations using R and Python

You can find datasets on:

NCBI GEO

1000 Genomes Project

TCGA (The Cancer Genome Atlas)

Create a GitHub portfolio to showcase your bioinformatics projects, as employers value practical work over theoretical knowledge.
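A first project along these lines can be very small. The sketch below parses FASTA-formatted text and computes GC content in plain Python; the function names and the two example records are made up for illustration and are not real NCBI data. Libraries such as Biopython offer more robust parsers for real work.

```python
def parse_fasta(text):
    """Return a dict mapping each record's header (without '>') to its sequence."""
    records = {}
    header = None
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            header = line[1:]
            records[header] = ""
        elif header is not None:
            # Sequence lines may be wrapped, so append to the current record.
            records[header] += line.upper()
    return records


def gc_content(sequence):
    """Fraction of bases that are G or C (0.0 for an empty sequence)."""
    if not sequence:
        return 0.0
    return (sequence.count("G") + sequence.count("C")) / len(sequence)


# Made-up example records, not real NCBI data.
example = """>seq1 hypothetical example
ATGCGC
GGAT
>seq2 hypothetical example
ATATAT
"""

records = parse_fasta(example)
for name, seq in records.items():
    print(name, len(seq), round(gc_content(seq), 2))
```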

Step 5: Gain Hands-on Experience with Internships

Many organizations and research institutes offer bioinformatics internships. Check opportunities at:

NCBI, EMBL-EBI, NIH (government research institutes)

Biotech and pharma companies (Roche, Pfizer, Illumina)

Academic research labs (Look for university-funded projects)

💡 Pro Tip: Join online bioinformatics communities like Biostars, Reddit r/bioinformatics, and SEQanswers to network and find opportunities.

Step 6: Earn a Certification or Higher Education

If you want to strengthen your credentials, consider:

🎓 Bioinformatics Certifications:

Coursera – Genomic Data Science (Johns Hopkins University)

edX – Bioinformatics MicroMasters (UMGC)

EMBO – Bioinformatics training courses

🎓 Master’s in Bioinformatics (optional but beneficial)

Top universities include Harvard, Stanford, ETH Zurich, University of Toronto

Step 7: Apply for Bioinformatics Jobs

Once you have gained enough skills and experience, start applying for bioinformatics roles such as:

Bioinformatics Analyst

Computational Biologist

Genomics Data Scientist

Machine Learning Scientist (Biotech)

💡 Where to Find Jobs?

LinkedIn, Indeed, Glassdoor

Biotech job boards (BioSpace, Science Careers)

Company career pages (Illumina, Thermo Fisher)

Final Thoughts

Transitioning from biotechnology to bioinformatics requires effort, but with the right skills and dedication, it is entirely achievable. Start with fundamental knowledge, build computational skills, and work on projects to gain practical experience.

Are you ready to make the switch? 🚀 Start today by exploring free online courses and practicing with real-world datasets!

#bioinformatics#biopractify#biotechcareers#biotechnology#biotech#aiinbiotech#machinelearning#bioinformaticstools#datascience#genomics#Biotechnology

SQL GitHub Repositories

I’ve recently been looking up more SQL resources and found some repositories on GitHub that are helpful with learning SQL, so I thought I’d share some here!

Guides:

s-shemee SQL 101: A beginner’s guide to SQL database programming! It offers tutorials, exercises, and resources to help practice SQL

nightFuryman SQL in 30 Days: The fundamentals of SQL with information on how to set up a SQL database from scratch as well as basic SQL commands

Projects:

iweld SQL Dictionary Challenge: A SQL project inspired by a comment on this reddit thread https://www.reddit.com/r/SQL/comments/g4ct1l/what_are_some_good_resources_to_practice_sql/. This project consists of creating a single file with a column of randomly selected words from the dictionary. For this column, you can answer the various questions listed in the repository through SQL queries, or develop your own questions to answer as well.

DevMountain SQL 1 Afternoon: A SQL project where you practice inserting and querying data using SQL. This project consists of creating various tables and querying data through this online tool created by DevMountain, found at this link https://postgres.devmountain.com/.

DevMountain SQL 2 Afternoon: The second part of DevMountain’s SQL project. This project involves intermediate queries such as “practice joins, nested queries, updating rows, group by, distinct, and foreign key”.
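To give a feel for the single-column word table used in the dictionary challenge, here is a small sketch in Python with sqlite3. The table name, the hand-picked word list, and the two questions are my own assumptions for illustration; they are not taken from the repository.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE words (word TEXT NOT NULL)")

# A tiny hand-picked word list stands in for the randomly selected dictionary words.
sample_words = ["apple", "arch", "banana", "bird", "bright", "cat"]
conn.executemany("INSERT INTO words (word) VALUES (?)", [(w,) for w in sample_words])

# Example question: how many words start with each letter?
by_letter = conn.execute(
    """
    SELECT substr(word, 1, 1) AS first_letter, COUNT(*) AS n
    FROM words
    GROUP BY first_letter
    ORDER BY n DESC, first_letter
    """
).fetchall()

# Example question: which word is the longest (ties broken alphabetically)?
longest = conn.execute(
    "SELECT word FROM words ORDER BY length(word) DESC, word LIMIT 1"
).fetchone()[0]

print(by_letter, longest)
```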

Top 10 In-Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve on their own without needing step-by-step instructions. They’re being used in many areas, like chatbots, spotting fraud, and predicting trends.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers

These professionals create and manage cloud systems that allow businesses to store data and run apps without needing physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers help make software faster and more reliable by connecting development and operations teams. They streamline the process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Learn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. Earning a DevOps certification is a plus and can enhance your expertise in the field.

7. Blockchain Developers

Blockchain developers build secure, transparent, and tamper-resistant systems. Blockchain is not just for cryptocurrencies; it’s also used in tracking supply chains, managing healthcare records, and even in voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotic process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.

#Top 10 Tech Jobs in 2025#In- Demand Tech Jobs#High paying Tech Jobs#artificial intelligence#datascience#cybersecurity


java full stack

A Java Full Stack Developer is proficient in both front-end and back-end development, using Java for server-side (backend) programming. Here's a comprehensive guide to becoming a Java Full Stack Developer:

1. Core Java

Fundamentals: Object-Oriented Programming, Data Types, Variables, Arrays, Operators, Control Statements.

Advanced Topics: Exception Handling, Collections Framework, Streams, Lambda Expressions, Multithreading.

2. Front-End Development

HTML: Structure of web pages, Semantic HTML.

CSS: Styling, Flexbox, Grid, Responsive Design.

JavaScript: ES6+, DOM Manipulation, Fetch API, Event Handling.

Frameworks/Libraries:

React: Components, State, Props, Hooks, Context API, Router.

Angular: Modules, Components, Services, Directives, Dependency Injection.

Vue.js: Directives, Components, Vue Router, Vuex for state management.

3. Back-End Development

Java Frameworks:

Spring: Core, Boot, MVC, Data JPA, Security, Rest.

Hibernate: ORM (Object-Relational Mapping) framework.

Building REST APIs: Using Spring Boot to build scalable and maintainable REST APIs.

4. Database Management

SQL Databases: MySQL, PostgreSQL (CRUD operations, Joins, Indexing).

NoSQL Databases: MongoDB (CRUD operations, Aggregation).

5. Version Control/Git

Basic Git commands: clone, pull, push, commit, branch, merge.

Platforms: GitHub, GitLab, Bitbucket.

6. Build Tools

Maven: Dependency management, Project building.

Gradle: Advanced build tool with Groovy-based DSL.

7. Testing

Unit Testing: JUnit, Mockito.

Integration Testing: Using Spring Test.

8. DevOps (Optional but beneficial)

Containerization: Docker (Creating, managing containers).

CI/CD: Jenkins, GitHub Actions.

Cloud Services: AWS, Azure (Basics of deployment).

9. Soft Skills

Problem-Solving: Algorithms and Data Structures.

Communication: Working in teams, Agile/Scrum methodologies.

Project Management: Basic understanding of managing projects and tasks.

Learning Path

Start with Core Java: Master the basics before moving to advanced concepts.

Learn Front-End Basics: HTML, CSS, JavaScript.

Move to Frameworks: Choose one front-end framework (React/Angular/Vue.js).

Back-End Development: Dive into Spring and Hibernate.

Database Knowledge: Learn both SQL and NoSQL databases.

Version Control: Get comfortable with Git.

Testing and DevOps: Understand the basics of testing and deployment.

Resources

Books:

Effective Java by Joshua Bloch.

Java: The Complete Reference by Herbert Schildt.

Head First Java by Kathy Sierra & Bert Bates.

Online Courses:

Coursera, Udemy, Pluralsight (Java, Spring, React/Angular/Vue.js).

FreeCodeCamp, Codecademy (HTML, CSS, JavaScript).

Documentation:

Official documentation for Java, Spring, React, Angular, and Vue.js.

Community and Practice

GitHub: Explore open-source projects.

Stack Overflow: Participate in discussions and problem-solving.

Coding Challenges: LeetCode, HackerRank, CodeWars for practice.

By mastering these areas, you'll be well-equipped to handle the diverse responsibilities of a Java Full Stack Developer.

visit https://www.izeoninnovative.com/izeon/


The 2 types of databases for your business

Do you need a full-featured, free application builder to digitize workflows for you and your team? Collaborate with unlimited users and creators at zero upfront cost. Get a free online database now, and we will provide your business with all the basic tools to design, develop, and deploy simple database-driven applications and services right out of the box.

Here is the definition of a database according to the dictionary:

A structured set of files grouping information that shares certain characteristics; also, the software used to build and manage these files.

The data contained in most common databases is usually modeled in rows and columns in a series of tables to make data processing efficient.

Thus, the data can be easily accessed, managed, modified, updated, monitored, and organized. Most relational databases use Structured Query Language (SQL) to write and query data.
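As a minimal sketch of the row-and-column model described above, the following uses Python's built-in `sqlite3` module with an in-memory database. The table name `contacts`, its columns, and the sample rows are hypothetical and chosen only for illustration.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database holding one illustrative table."""
    conn = sqlite3.connect(":memory:")
    # Each column is a named attribute; each inserted tuple becomes a row.
    conn.execute(
        "CREATE TABLE contacts (id INTEGER PRIMARY KEY, name TEXT, email TEXT)"
    )
    conn.executemany(
        "INSERT INTO contacts (name, email) VALUES (?, ?)",
        [("Ada", "ada@example.com"), ("Grace", "grace@example.com")],
    )
    conn.commit()
    return conn


def find_email(conn, name):
    """Query one column of the row whose name matches, or None if absent."""
    row = conn.execute(
        "SELECT email FROM contacts WHERE name = ?", (name,)
    ).fetchone()
    return row[0] if row else None
```

Calling `find_email(build_demo_db(), "Ada")` looks up a single row by name; the same SQL statements would work, with minor dialect differences, on most relational databases.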

Compared to traditional coding, OceanBase's free online database platform allows you to create database-driven applications in a very short period of time. Build searchable databases, interactive reports, dynamic charts, responsive web forms, and so on, all without writing any code. Just point, click, and publish. It's that simple!

Traditional software development requires skilled IT personnel, lengthy requirements gathering, and manual coding. Databases and applications built with code are also difficult to learn, deploy, and maintain, making them time, cost, and resource intensive.

On the other hand, no-code database builders enable business professionals to participate in rapid iterative development, even if they have no technical experience.

With OceanBase's simple database builder, you can use off-the-shelf application templates and point-and-click, drag-and-drop tools to build powerful cloud applications and databases 20 times faster than traditional software development.

OceanBase provides the best free database with an intuitive code-free platform for building data-driven applications that are easy to modify and extend. Get results faster without writing code or managing the server.

The 2 types of databases for your business

There are two different types. Here they are:

Databases for functional data

The purpose of this kind of database is to store the data that makes a process work. For example, the MySQL database behind a website.

In a later part, we will recommend the best tools for your business.

Customer databases

The purpose of these databases is to store the data of your prospects and customers. For example, a contact may leave you their email address, phone number, or name.

This type of database is highly sought after by businesses because it serves several purposes:

Store contacts.

Assign a tag or a list per contact.

Perform remarketing or retargeting.


Manage databases efficiently with OneTab’s database management system. Create SQL databases online, generate SQL code effortlessly with our SQL code and data generators, and streamline your workflow with an online SQL generator.

#Database-Management-System#Create-Sql-Database-Online#Online-Sql-Generator#Sql-Code-Generator#Sql-Data-Generator


How to Integrate PowerApps with Azure AI Search Services

In today’s digital world, businesses require efficient and intelligent search functionalities within their applications. Microsoft PowerApps, a low-code development platform, allows users to build powerful applications, while Azure AI Search Services enhances data retrieval with AI-driven search capabilities. By integrating PowerApps with Azure AI Search, organizations can optimize their applications for better search performance, user experience, and data accessibility.

This article provides a step-by-step guide on how to integrate PowerApps with Azure AI Search Services to create an intelligent and responsive search solution.

Prerequisites

Before starting, ensure you have the following:

A Microsoft Azure account and subscription

An Azure AI Search service instance

A PowerApps environment set up

A data source (SQL Database, Cosmos DB, or Blob Storage) indexed in Azure AI Search.

Step 1: Set Up Azure AI Search

Create an Azure AI Search Service

Sign in to the Azure Portal and search for “Azure AI Search.”

Click Create and configure settings such as subscription, resource group, and pricing tier.

Choose a service name and location, then click Review + Create to deploy the service.

Create and Populate an Index

In your Azure AI Search service, navigate to Indexes and click Add Index.

Define the necessary fields, including ID, Title, Description, and other relevant attributes.

Navigate to Data Sources and select the source you want to index (SQL, Blob Storage, etc.).

Set up an Indexer to populate the index automatically and keep it updated.

Once the index is created and populated, you can query it using REST API endpoints.

Step 2: Create a Custom Connector in PowerApps

To connect PowerApps with Azure AI Search, a custom connector is required to communicate with the search API.

Set Up a Custom Connector

Open PowerApps and navigate to Custom Connectors.

Click New Custom Connector and select Create from Blank.

Provide a connector name and continue to the configuration page.

Configure API Connection

Enter the Base URL of your Azure AI Search service.

Select API Key Authentication and enter an Azure AI Search key found in the Azure portal under the Keys section. The Admin Key works, but a query key is sufficient for read-only search and exposes less if it leaks.

Define API Actions

Click Add Action and configure it as follows:

Verb: GET

Endpoint URL: /indexes/{index-name}/docs?api-version=2023-07-01-Preview&search={search-text}

Define request parameters such as index-name and search-text.

Save and test the connection to ensure it retrieves data from Azure AI Search.
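To make the GET action above concrete, here is a minimal sketch that assembles the same request outside PowerApps, using only the Python standard library. The service name, index name, and key passed in are placeholders, and the helper name `build_search_request` is hypothetical; the API version string is the one given in the endpoint above. The sketch only builds the URL and headers and does not send anything.

```python
from urllib.parse import quote, urlencode

# Version string copied from the endpoint URL in the action definition above.
API_VERSION = "2023-07-01-Preview"


def build_search_request(service_name, index_name, search_text, api_key):
    """Return (url, headers) for a simple search query against one index.

    All four arguments are placeholders supplied by the caller.
    """
    base_url = f"https://{service_name}.search.windows.net"
    path = f"/indexes/{quote(index_name, safe='')}/docs"
    # urlencode escapes the search text so spaces and symbols are URL-safe.
    query = urlencode({"api-version": API_VERSION, "search": search_text})
    headers = {"api-key": api_key, "Accept": "application/json"}
    return f"{base_url}{path}?{query}", headers
```

The returned pair could then be passed to `urllib.request.Request(url, headers=headers)` to test the endpoint before wiring it into the custom connector.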

Step 3: Integrate PowerApps with Azure AI Search

Add the Custom Connector to PowerApps

Open your PowerApps Studio and create a Canvas App.

Navigate to Data and add the newly created Custom Connector.

Implement Search Functionality

Insert a Text Input field where users can enter search queries.

Add a Button labeled "Search."

Insert a Gallery Control to display search results.

Step 4: Test and Deploy

After setting up the integration, test the app by entering search queries and verifying that results are retrieved from Azure AI Search. If necessary, refine the search logic and adjust index configurations.

Once satisfied with the functionality, publish and share the PowerApps application with users.

Benefits of PowerApps Azure AI Search Integration

Enhanced Search Performance: AI-driven search provides fast and accurate results.

Scalability: Supports large datasets with minimal performance degradation.

Customization: Allows tailored search functionalities for different business needs.

Improved User Experience: Enables intelligent and context-aware search results.

Conclusion

Integrating PowerApps with Azure AI Search Services is a powerful way to enhance application functionality with AI-driven search capabilities. This step-by-step guide provides the necessary steps to set up and configure both platforms, allowing you to create efficient and intelligent search applications.

By leveraging the power of Azure AI Search, PowerApps users can significantly improve data accessibility and user experience, making applications more intuitive and efficient. Start integrating today to unlock the full potential of your applications!

Visualpath is the Leading and Best Institute for learning in Hyderabad. We provide PowerApps and Power Automate Training. You will get the best course at an affordable cost.

Call on – +91-7032290546

Visit: https://www.visualpath.in/online-powerapps-training.html

#PowerApps Training#Power Automate Training#PowerApps Training in Hyderabad#PowerApps Online Training#Power Apps Power Automate Training#PowerApps and Power Automate Training#Microsoft PowerApps Training Courses#PowerApps Online Training Course#PowerApps Training in Chennai#PowerApps Training in Bangalore#PowerApps Training in India#PowerApps Course In Ameerpet


Best Snowflake Online Course Hyderabad | Snowflake Course

Snowflake Training Explained: What to Expect

Snowflake has gained immense popularity as a cloud-based data platform. Businesses and professionals are eager to master it for better data management, analytics, and performance. If you're considering Snowflake training, you might be wondering what to expect. This article provides a comprehensive overview of the learning process, key topics covered, and benefits of training.

Snowflake is a cloud-native data warehouse that supports structured and semi-structured data. It runs on major cloud providers like AWS, Azure, and Google Cloud. Learning Snowflake can enhance your skills in data engineering, analytics, and business intelligence.

Who Should Enrol in Snowflake Training?

Snowflake training is ideal for various professionals. Whether you are a beginner or an experienced data expert, the training can be tailored to your needs. Here are some of the key roles that benefit from Snowflake training:

Data Engineers – Learn how to build and manage data pipelines.

Data Analysts – Gain insights using SQL and analytics tools.

Database Administrators – Optimize database performance and security.

Cloud Architects – Understand Snowflake's architecture and integration.

Business Intelligence Professionals – Enhance reporting and decision-making.

Even if you have basic SQL knowledge, you can start learning Snowflake. Many courses cater to beginners, covering fundamental concepts before diving into advanced topics.

Key Topics Covered in Snowflake Training

A well-structured Snowflake online course covers a range of topics. Here are the core areas you can expect:

1. Introduction to Snowflake Architecture

Understanding Snowflake’s multi-cluster shared data architecture is crucial. Training explains how Snowflake separates compute, storage, and services, offering scalability and performance benefits.

2. Working with Databases, Schemas, and Tables

You’ll learn how to create and manage databases, schemas, and tables. The training covers structured and semi-structured data handling, including JSON, Parquet, and Avro file formats.

3. Writing and Optimizing SQL Queries

SQL is the backbone of Snowflake. The course covers SQL queries, joins, aggregations, and analytical functions. You’ll also learn query optimization techniques to improve efficiency.

4. Snowflake Data Sharing and Security

Snowflake allows secure data sharing across different organizations. The training explains role-based access control (RBAC), encryption, and compliance best practices.

5. Performance Tuning and Cost Optimization

Understanding how to optimize performance and manage costs is essential. You’ll learn about virtual warehouses, caching, and auto-scaling to enhance efficiency.

6. Integration with BI and ETL Tools

Snowflake integrates with BI tools like Tableau and Power BI, and with ETL and orchestration tools such as Matillion and Apache Airflow. Training explores these integrations to streamline workflows.

7. Data Loading and Unloading Techniques

You'll learn how to load data into Snowflake using COPY commands, Snowpipe, and bulk load methods. The training also covers exporting data in different formats.

Learning Modes: Online vs. Instructor-Led Training

When choosing a Snowflake training program, you have two main options: online self-paced courses or instructor-led training. Each has its pros and cons.

Online Self-Paced Training

Flexible learning schedule.

Affordable compared to instructor-led courses.

Access to recorded sessions and study materials.

Suitable for individuals who prefer self-learning.

Instructor-Led Training

Live interaction with experienced trainers.

Opportunity to ask questions and clarify doubts.

Hands-on projects and real-time exercises.

Structured curriculum with guided learning.

Your choice depends on your learning style, budget, and time availability. Some platforms offer a combination of both for a balanced experience.

Benefits of Snowflake Training

Investing in Snowflake training offers numerous advantages. Here’s why it’s worth considering:

1. High Demand for Snowflake Professionals

Organizations worldwide are adopting Snowflake, creating a demand for skilled professionals. Gaining Snowflake expertise can open new career opportunities.

2. Better Data Management Skills

Snowflake training equips you with best practices for handling large-scale data. You’ll learn how to manage structured and semi-structured data efficiently.

3. Improved Job Prospects and Salary Growth

Certified Snowflake professionals often receive higher salaries. Many organizations prefer candidates with Snowflake expertise over traditional database management skills.

4. Real-World Project Experience

Most training programs include hands-on projects that simulate real-world scenarios. This experience helps in applying theoretical knowledge to practical use cases.

5. Enhanced Business Intelligence Capabilities

With Snowflake training, you can improve data analytics and reporting skills. This enables businesses to make data-driven decisions more effectively.

How to Choose the Right Snowflake Training Program

With various training providers available, choosing the right one is crucial. Here are some factors to consider:

Accreditation and Reviews – Look for well-reviewed courses from reputable providers.

Hands-on Labs and Projects – Practical exercises help reinforce learning.

Certification Preparation – If you're aiming for Snowflake certification, choose a course that aligns with exam objectives.

Support and Community – A strong community and mentor support can enhance learning.

Conclusion

Snowflake training is an excellent investment for data professionals. Whether you're a beginner or an experienced database expert, learning Snowflake can boost your career prospects. The training covers essential concepts such as architecture, SQL queries, performance tuning, security, and integrations.

Choosing the right training format—self-paced or instructor-led—depends on your learning preferences. With growing demand for Snowflake professionals, gaining expertise in this platform can lead to better job opportunities and higher salaries. If you’re considering a career in data engineering, analytics, or cloud computing, Snowflake training is a smart choice.

Start your Snowflake learning journey today with Visualpath and take your data skills to the next level!

Visualpath is the Leading and Best Institute for learning in Hyderabad. We provide Snowflake Online Training. You will get the best course at an affordable cost.

For more Details Contact +91 7032290546

Visit: https://www.visualpath.in/snowflake-training.html

#Snowflake Training#Snowflake Online Training#Snowflake Training in Hyderabad#Snowflake Training in Ameerpet#Snowflake Course#Snowflake Training Institute in Hyderabad#Snowflake Online Course Hyderabad#Snowflake Online Training Course#Snowflake Training in Chennai#Snowflake Training in Bangalore#Snowflake Training in India#Snowflake Course in Ameerpet


Why Django is Ideal for Scalable Web Applications

Now that almost everything is online, building web applications that scale along with your business is a must. Whether you are starting with a few users or expecting millions in the future, scalability is the ingredient that keeps your application running well. This is where Django shows its strengths. It is a Python-based web framework that combines simplicity with strong features and plenty of room for growth, which is why it attracts developers' attention. Let’s look at the reasons Django is a strong choice for building scalable web applications.

1. A Strong Foundation for Growth:

Django's modular approach makes it scalable from the ground up. A project is divided into components that can be developed and scaled independently, and parts of it can be deployed separately. For example:

1. Middleware handles processing between the request and the response.

2. Apps let you encapsulate features in self-contained units that live in specific areas of your project and can be extended independently.

3. The integrated ORM handles communication between your application and the database, keeping data access efficient even as your data grows.

This gives your application a strong backbone, ready for the demanding scenarios it may encounter.
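The middleware idea from the list above can be sketched without installing Django. The factory shape below (a function that receives `get_response` and returns a callable taking a request) mirrors Django's function-based middleware; the request and response here are plain dictionaries standing in for Django's objects, and the names `tagging_middleware` and `fake_view` are hypothetical.

```python
def tagging_middleware(get_response):
    """Wrap a view so code can run before and after it handles a request."""

    def middleware(request):
        # Runs before the view: annotate the incoming request.
        request["seen_by_middleware"] = True
        response = get_response(request)
        # Runs after the view: annotate the outgoing response.
        response["X-Handled-By"] = "tagging_middleware"
        return response

    return middleware


def fake_view(request):
    """Stand-in for a view; reports whether the middleware ran first."""
    return {
        "status": 200,
        "body": "ok",
        "saw_flag": request.get("seen_by_middleware", False),
    }
```

Wrapping the view with `tagging_middleware(fake_view)` yields a handler that touches every request and response in one place, which is why cross-cutting concerns such as logging or authentication are usually placed in middleware.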

2. Ease of Horizontal Scaling:

Django supports horizontal scaling through its database integration and caching with Memcached. Horizontal scaling means adding more servers to share the load, and Django's framework is ready for these distributed setups.

Django also works behind load balancers, which spread traffic evenly across servers, reducing downtime and improving the user experience. With this capability, businesses can grow without completely rewriting their infrastructure.
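As an illustration of what "spreading traffic evenly" means, here is a minimal round-robin sketch. In practice this job is done by a separate load balancer in front of the application servers, not by Django itself; the server names and the helper `round_robin_assign` are hypothetical.

```python
from collections import Counter
from itertools import cycle


def round_robin_assign(requests, servers):
    """Pair each request with the next server in a repeating rotation."""
    pool = cycle(servers)
    return [(request, next(pool)) for request in requests]


def load_per_server(assignments):
    """Count how many requests each server received."""
    return Counter(server for _, server in assignments)
```

With six requests and three servers, `load_per_server(round_robin_assign(range(6), ["app-1", "app-2", "app-3"]))` gives each server two requests. Adding a fourth server name to the list is all it takes to widen the rotation, which is the essence of horizontal scaling.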

3. Modern Asynchronous Capabilities:

Django is moving with the times. It supports asynchronous programming via ASGI (Asynchronous Server Gateway Interface): ASGI support arrived in version 3.0, and version 3.1 added asynchronous views. Combined with packages such as Django Channels, this lets Django support real-time features such as WebSockets, live notifications, and chat applications. Django is equipped to handle thousands of simultaneous connections if your application requires it.
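The benefit of asynchronous handling can be sketched with Python's standard `asyncio` module, with no Django required. Each simulated connection spends its time waiting (as a network client would), so many of them can be in flight at once. The function names and the 0.05-second delay are assumptions made for illustration.

```python
import asyncio
import time


async def handle_connection(conn_id, delay=0.05):
    """Simulate one connection that mostly waits on I/O."""
    await asyncio.sleep(delay)  # stands in for waiting on a client or database
    return f"conn-{conn_id} done"


async def serve_many(count):
    """Handle all connections concurrently on a single event loop."""
    return await asyncio.gather(*(handle_connection(i) for i in range(count)))


def run_demo(count=100):
    """Return the results and the wall-clock time taken to serve them."""
    start = time.perf_counter()
    results = asyncio.run(serve_many(count))
    return results, time.perf_counter() - start
```

Because the waits overlap, serving 100 connections takes far less than 100 times the single-connection delay. An ASGI server applies the same principle to real requests.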

4. Optimized Database Management:

Django’s ORM not only makes database interactions easier but also enables developers to:

Use database sharding to spread data across several databases.

Use replication to achieve high availability across multiple nodes or replicas.

Use indexing and caching to improve query performance.

With these capabilities, applications can scale smoothly even under heavy database usage. Django officially supports several SQL databases, and third-party packages add NoSQL backends, which widens the choice for different business needs.
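One common way to decide which database holds a given record is hash-based routing, sketched below. This is an illustrative technique rather than something Django does automatically; the shard aliases and the helper `shard_for` are hypothetical.

```python
import zlib

# Hypothetical database aliases, one per shard.
SHARDS = ["shard_0", "shard_1", "shard_2"]


def shard_for(user_id, shards=SHARDS):
    """Map a user ID to one shard, always the same one for the same ID."""
    key = str(user_id).encode("utf-8")
    # crc32 is stable across runs, unlike Python's built-in hash() for strings.
    return shards[zlib.crc32(key) % len(shards)]
```

In a Django project, logic like this would typically live in a database router, whose `db_for_read` and `db_for_write` methods return the alias of the database to use.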

5. Built-in Security:

When scaling an app, you manage a larger user base and more sensitive data. Security is a key area that Django takes care of, with built-in protection against common attacks such as SQL injection, cross-site scripting (XSS), and cross-site request forgery (CSRF).

Its robust user authentication system helps prevent exposure of sensitive information, letting you focus on growth rather than worrying about potential vulnerabilities.
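To show what protection against SQL injection means in practice, the sketch below contrasts string-built SQL with a parameterized query, using Python's built-in `sqlite3` module instead of Django. Django's ORM builds parameterized queries for you; the table, rows, and function names here are hypothetical.

```python
import sqlite3


def make_users_db():
    """Create an in-memory table with two illustrative users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def unsafe_lookup(conn, name):
    """Vulnerable: user input is pasted directly into the SQL text."""
    return conn.execute(
        f"SELECT secret FROM users WHERE name = '{name}'"
    ).fetchall()


def safe_lookup(conn, name):
    """Safe: the driver passes the value separately from the SQL text."""
    return conn.execute(
        "SELECT secret FROM users WHERE name = ?", (name,)
    ).fetchall()
```

Given the input `x' OR '1'='1`, `unsafe_lookup` returns every user's secret because the input rewrites the WHERE clause, while `safe_lookup` treats it as an ordinary name and returns nothing.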

6. An Ecosystem That Grows With You:

Django’s large collection of reusable packages is one of its strongest points. If you need to implement a feature, whether an API, user authentication, or something else, there is probably already a package for it. This not only saves you time but also frees you to work on the features that differentiate your application.

In addition, Django’s active community is always there to offer support, share news about changes and updates, and introduce innovative tooling that solves new challenges.

7. Excellent Documentation and Learning Curve:

One of Django’s strongest selling points has always been its comprehensive documentation. New users get up to speed easily, and seasoned developers can build solid, highly scalable applications in a timely manner. A gentler learning curve shortens development time and enables quicker project delivery.

This allows teams to keep up with growing business needs, because developers can adapt quickly when project requirements change.

8. Flexibility for Diverse Applications:

Django is flexible enough to be used for many kinds of applications. It is a great framework if you need to deliver a large site where people browse, add content, communicate, or buy things. Businesses that want to expand their operations are drawn to it because it can handle high traffic, large amounts of data, and intricate workflows.

The framework can adapt as your business pivots and changes, without imposing technical constraints.

9. Ready for the Cloud:

The cloud is where the future is heading, and Django is ready for it. It fits naturally with cloud platforms like AWS, Google Cloud, and Azure. With compatibility for tools like Docker and Kubernetes, deploying and scaling your application is straightforward. This makes it easy to adapt to changing demands and traffic spikes.

Conclusion:

Thanks to its modular approach, its security, its asynchronous capabilities, and its rich ecosystem, Django is one of the best frameworks for building highly scalable web applications. It maintains performance and user experience while staying ready for growing demands, making it a reliable solution for businesses that want future-ready digital products.

For organisations and developers, especially at RapidBrains, picking Django is a pragmatic decision when building web applications that must be scalable, safe, performant, and flexible to change. Django developers hired from RapidBrains will gladly take on your project. Let’s kickstart your Django journey right now and see what web development has to offer you!


Power BI Training: Key Benefits and Learning Objectives

Introduction

In today's data-driven world, organizations rely on business intelligence tools to analyze data and make informed decisions. Microsoft Power BI is one of the most powerful and widely used business analytics tools, enabling users to transform raw data into interactive reports and dashboards.

For those looking to enhance their skills, Power BI Online Training & Placement programs offer comprehensive education and job placement assistance, making it easier to master this tool and advance your career.

Power BI training is essential for professionals looking to enhance their data analytics skills and improve business decision-making. This blog explores the key benefits and learning objectives of Power BI training, helping you understand why mastering this tool can be a game-changer for your career.

Key Benefits of Power BI Training

1. High Demand for Power BI Skills

With businesses increasingly relying on data analytics, the demand for Power BI professionals is growing. Organizations seek experts who can visualize data effectively, making Power BI training a valuable investment for career growth.

2. Enhanced Data Analysis and Visualization

Power BI enables users to create interactive dashboards and reports, making data easier to understand. Training helps professionals leverage advanced visualization techniques to present insights in a clear and impactful manner.

3. Seamless Integration with Other Tools

Power BI integrates effortlessly with Microsoft tools like Excel, Azure, and SQL Server, as well as third-party applications like Google Analytics and Salesforce. Training teaches users how to connect, analyze, and visualize data from multiple sources.

4. Real-Time Data Monitoring and Insights

With Power BI, businesses can track key performance indicators (KPIs) in real time. Learning how to implement automated data refresh and live dashboards ensures accurate and up-to-date decision-making.

5. No Coding Knowledge Required

Unlike other data analytics tools that require extensive coding knowledge, Power BI offers a user-friendly, drag-and-drop interface. Training helps non-technical professionals build reports and dashboards without needing to write complex code.

6. Cost-Effective Business Intelligence Solution

Power BI is more affordable than many other BI tools, making it a preferred choice for small and large businesses. Training ensures that users can fully utilize Power BI’s capabilities, maximizing its cost-effectiveness.

7. Improved Collaboration and Report Sharing

Power BI allows teams to collaborate efficiently by sharing reports and dashboards securely across an organization. Training covers Power BI Service, which enables cloud-based sharing and real-time updates.

8. Career Advancement Opportunities

Professionals with Power BI skills can explore careers in data analytics, business intelligence, and reporting. Many organizations prioritize candidates with Power BI certification, making training an important step toward career success.

Key Learning Objectives of Power BI Training

1. Understanding Power BI Fundamentals

Introduction to Power BI and its components (Power BI Desktop, Service, and Mobile)

Overview of business intelligence and data visualization concepts

2. Data Importing and Transformation

Connecting Power BI to various data sources like Excel, SQL Server, and cloud-based databases

Cleaning and transforming raw data using Power Query

3. Data Modeling and DAX (Data Analysis Expressions)

Creating relationships between tables for better data analysis

Writing DAX formulas for custom calculations, aggregations, and time-based analysis

4. Creating Interactive Dashboards and Reports

Designing professional dashboards using charts, graphs, and KPI visuals

Implementing slicers, filters, and drill-through functionalities for better user interaction

5. Publishing and Sharing Reports

Uploading reports to Power BI Service for cloud access

Setting up data refresh schedules and sharing insights with teams

6. Implementing Advanced Features

Using Row-Level Security (RLS) to restrict access to specific data

Integrating Power BI with Azure, Excel, and other Microsoft tools

Exploring AI-driven analytics for predictive insights
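The idea behind Row-Level Security can be sketched in a few lines of Python: each user is mapped to the rows they are allowed to see, and everything else is filtered out before the report is rendered. In Power BI this is configured through roles and filter expressions rather than application code, so the mapping and function below are purely illustrative assumptions.

```python
# Hypothetical mapping of users to the regions they are allowed to see.
USER_REGIONS = {
    "alice@example.com": {"North"},
    "bob@example.com": {"North", "South"},
}

ROWS = [
    {"region": "North", "sales": 1200},
    {"region": "South", "sales": 800},
    {"region": "West", "sales": 500},
]


def visible_rows(user, rows):
    """Return only the rows whose region the given user may see."""
    allowed = USER_REGIONS.get(user, set())  # unknown users see nothing
    return [row for row in rows if row["region"] in allowed]


print(visible_rows("alice@example.com", ROWS))
print(visible_rows("bob@example.com", ROWS))
```

Defaulting unknown users to an empty set keeps the sketch on the safe side: access has to be granted explicitly.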

7. Preparing for Power BI Certification

Understanding Microsoft’s Power BI Data Analyst Associate (PL-300) certification

Practicing with real-world case studies and hands-on exercises

Conclusion

Power BI training equips professionals with the skills needed to analyze and visualize data efficiently. Whether you are a data analyst, business professional, or IT specialist, mastering Power BI can enhance your career prospects and help organizations make data-driven decisions.

By investing in Power BI training, you gain valuable expertise in data modeling, reporting, and business intelligence, making you a sought-after professional in today’s competitive job market.


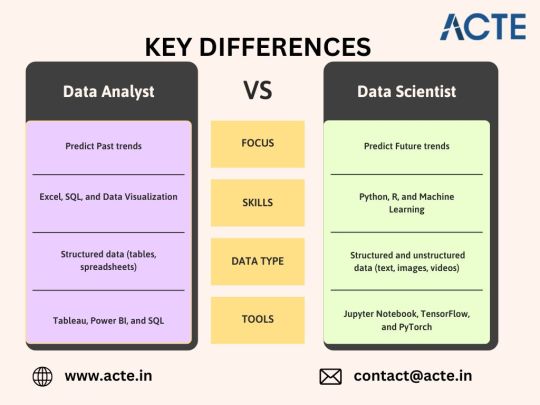

The Essential Skills Required to Become a Data Analyst or a Data Scientist

In today’s data-driven world, businesses require professionals who can extract valuable insights from vast amounts of data. Two of the most sought-after roles in this field are Data Analysts and Data Scientists. While their job functions differ, both require a strong set of technical and analytical skills. If you want to advance your career through Data Analytics Training in Bangalore, take a systematic approach and enroll in a course that suits your interests and broadens your learning path. Let’s explore the essential skills needed for each role and how they contribute to success in the data industry.

Essential Skills for a Data Analyst

A Data Analyst focuses on interpreting historical data to identify trends, generate reports, and support business decisions. The role requires strong analytical thinking and proficiency in various tools and techniques. For those looking to excel in data analytics, a Data Analytics Online Course is highly recommended. Look for classes that align with your preferred programming language and learning approach.

Key Skills for a Data Analyst

Data Manipulation & Querying: Proficiency in SQL to extract and manipulate data from databases.

Data Visualization: Ability to use tools like Tableau, Power BI, or Excel to create charts and dashboards.

Statistical Analysis: Understanding of basic statistics to interpret and present data accurately.

Excel Proficiency: Strong command of Excel functions, pivot tables, and formulas for data analysis.

Business Acumen: Ability to align data insights with business goals and decision-making processes.

Communication Skills: Presenting findings clearly to stakeholders through reports and visualizations.
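Two of the skills above, SQL querying and basic statistics, can be tried out with nothing more than Python’s standard library. The sketch below uses an in-memory SQLite database; the `orders` table and its values are invented for illustration.

```python
import sqlite3
import statistics

# Build a small in-memory database with a hypothetical orders table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [
        ("North", 120.0),
        ("North", 80.0),
        ("South", 200.0),
        ("South", 100.0),
        ("South", 150.0),
    ],
)

# Data manipulation and querying: order count and total sales per region.
summary = conn.execute(
    "SELECT region, COUNT(*), SUM(amount) "
    "FROM orders GROUP BY region ORDER BY region"
).fetchall()

# Statistical analysis: mean and median order amount.
amounts = [amount for (amount,) in conn.execute("SELECT amount FROM orders")]
mean_amount = statistics.mean(amounts)
median_amount = statistics.median(amounts)
conn.close()

print(summary)
print(mean_amount, median_amount)
```

Comparing the mean with the median is a quick way to check whether a few large orders are skewing the average.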

Essential Skills for a Data Scientist

A Data Scientist goes beyond analyzing past trends and focuses on building predictive models and solving complex problems using advanced techniques.

Key Skills for a Data Scientist

Programming Skills: Proficiency in Python or R for data manipulation, modeling, and automation.

Machine Learning & AI: Knowledge of algorithms, libraries (TensorFlow, Scikit-Learn), and deep learning techniques.

Big Data Technologies: Experience with platforms like Hadoop, Spark, and cloud services for handling large datasets.

Data Wrangling: Cleaning, structuring, and preprocessing data for analysis.

Mathematics & Statistics: Strong foundation in probability, linear algebra, and statistical modeling.

Model Deployment & Optimization: Understanding how to deploy machine learning models into production systems.
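As a small taste of predictive modeling, the sketch below fits a straight line to training data with ordinary least squares and checks it against a held-out point. It uses plain Python rather than a library such as Scikit-Learn, and the numbers (advertising spend versus sales) are made up.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Predict y for a new x using the fitted line."""
    return slope * x + intercept


# Hypothetical training data: advertising spend (x) and sales (y).
train_x = [1, 2, 3, 4]
train_y = [3, 5, 7, 9]

slope, intercept = fit_line(train_x, train_y)

# Evaluate on a held-out observation the model has not seen.
test_x, test_y = 5, 11.5
prediction = predict(test_x, slope, intercept)
error = abs(prediction - test_y)

print(slope, intercept, prediction, error)
```

Holding back data for evaluation is the key habit here: a model is judged by how well it predicts observations it was not trained on.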

Comparing Skills: Data Analyst vs. Data Scientist

Focus Area: Data Analysts interpret past data, while Data Scientists build predictive models.

Tools Used: Data Analysts use SQL, Excel, and visualization tools, whereas Data Scientists rely on Python, R, and machine learning frameworks.

Technical Complexity: Data Analysts focus on descriptive statistics, while Data Scientists work with AI and advanced algorithms.

Which Path is Right for You?

If you enjoy working with structured data, creating reports, and helping businesses understand past trends, a Data Analyst role is a great fit.

If you prefer coding, solving complex problems, and leveraging AI for predictive modeling, a Data Scientist career may be the right choice.

Final Thoughts

Both Data Analysts and Data Scientists play crucial roles in leveraging data for business success. Learning the right skills for each role can help you build a strong foundation in the field of data. Whether you start as a Data Analyst or aim for a Data Scientist role, continuous learning and hands-on experience will drive your success in this rapidly growing industry.
