#CCPA (California Consumer Privacy Act)

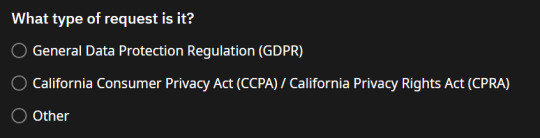

trying to request the data from my reddit profile so i can delete it. does anyone know which option to select? i am unfamiliar with these and don't currently have the time to research them

#finn says shit#reddit#gdpr#general data protection regulation#california consumer privacy act#ccpa#california privacy rights act#cpra#if it matters i'm not from california#and selecting other doesn't make you write anything

Understanding CPRA: A Guide for Beginners

The California Privacy Rights Act (CPRA) is a data privacy law that was passed in California in November 2020 as an extension and expansion of the California Consumer Privacy Act (CCPA). The CPRA introduces new privacy rights for consumers and additional obligations for businesses. Most of its provisions took effect on January 1, 2023. Why Do We Need CPRA? The CPRA came about to address some…

#Automated Decision Making#California Consumer Privacy Act#California Privacy Protection Agency#California Privacy Rights Act#CCPA#Compliance#Confidentiality#Consent#Consent Management#Consumer Rights#CPPA#CPRA#Data Breach#Data Deletion#Data Practices#data privacy#Data Processing#data protection#Data Rights#Data Security#Digital Age#Enforcement#Information Protection#Non-compliance#personal data#Privacy Notice#Privacy Policy#Right to Correct#Right to Delete#Sensitive Personal Information

Trusting Data in the Digital Age: Building a Better Future

23 May 2023

In today's interconnected world, data has become a powerful resource that fuels technological advancements and drives decision-making processes across various industries. However, the increasing reliance on data also raises concerns about its trustworthiness and the potential consequences of its misuse. Building trust in data is crucial to ensure the integrity, reliability, and ethical use of information. This article explores the concept of trust in data, its significance, challenges, and strategies to establish a foundation of trust for a data-driven future.

The Importance of Trust in Data:

Trust is the cornerstone of any successful relationship, and the relationship between humans and data is no exception. Trust in data is vital for several reasons:

Informed Decision Making: Trust in data enables individuals, organizations, and governments to make well-informed decisions with confidence. Whether it's assessing market trends, evaluating performance metrics, or formulating policies, reliable data forms the basis for sound judgments.

Transparency and Accountability: Trustworthy data promotes transparency, allowing stakeholders to understand the origins, quality, and limitations of the information they rely upon. It also holds organizations accountable for their actions, as data-driven insights can be scrutinized for bias, manipulation, or misrepresentation.

Technological Advancements: Trustworthy data is the fuel that powers artificial intelligence, machine learning, and other emerging technologies. Without reliable data, the algorithms and models developed to improve efficiency, automate processes, and drive innovation may yield flawed results and detrimental outcomes.

Challenges to Trust in Data:

Building trust in data is not without its challenges. Several factors contribute to skepticism and hinder the establishment of trust:

Data Quality and Accuracy: Data can be riddled with errors, inconsistencies, or biases, compromising its reliability. Incomplete or outdated datasets can further erode trust in their validity and relevance.

Data Privacy and Security: Data breaches, unauthorized access, and misuse of personal information have heightened concerns around data privacy and security. Individuals and organizations are increasingly wary of sharing their data due to potential risks of exploitation or compromise.

Ethical Considerations: Data collection, analysis, and utilization raise ethical questions regarding consent, fairness, and the potential for discrimination. Failure to address these concerns undermines trust in data-driven initiatives.

Building Trust in Data:

Establishing trust in data is a collective responsibility that requires proactive measures from various stakeholders. Here are some strategies to foster trust in data:

Data Governance and Standards: Developing robust governance frameworks and industry-wide standards for data collection, storage, and usage helps ensure transparency, consistency, and accountability.

Data Quality Assurance: Implementing rigorous data validation, verification, and cleansing processes helps maintain data integrity and accuracy. Regular audits and checks can help identify and rectify any errors or biases.

Data Privacy and Security Measures: Prioritizing data privacy and security is crucial to earning the trust of individuals and organizations. Implementing strong data protection measures, complying with relevant regulations (such as GDPR), and being transparent about data handling practices can build confidence in data usage.

Ethical Data Practices: Adhering to ethical guidelines, such as obtaining informed consent, anonymizing data, and minimizing bias, demonstrates a commitment to responsible data practices and helps build trust among stakeholders.

Collaboration and Openness: Encouraging collaboration, knowledge-sharing, and open data initiatives fosters a culture of trust and transparency. Embracing external audits and inviting scrutiny can help identify areas of improvement and build confidence in data processes.
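As a rough illustration of the data quality assurance strategy above, the following Python sketch checks a batch of records for missing values, out-of-range numbers, and duplicate keys. It is only a minimal sketch: `ValidationReport`, `validate_records`, and the field names in the usage note are hypothetical names chosen for this example, not part of any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class ValidationReport:
    """Collects the problems found while checking a batch of records."""
    missing_fields: list = field(default_factory=list)  # (row index, field name)
    out_of_range: list = field(default_factory=list)    # (row index, field name)
    duplicates: list = field(default_factory=list)      # row indexes of repeated keys

    @property
    def is_clean(self) -> bool:
        return not (self.missing_fields or self.out_of_range or self.duplicates)


def validate_records(records, required, ranges, key):
    """Check each record for missing values, range violations, and duplicate keys.

    records:  list of dicts (hypothetical input format)
    required: field names that must be present and non-empty
    ranges:   mapping of field name -> (low, high) inclusive bounds
    key:      field name that should be unique across the batch
    """
    report = ValidationReport()
    seen_keys = set()
    for index, record in enumerate(records):
        for name in required:
            if record.get(name) in (None, ""):
                report.missing_fields.append((index, name))
        for name, (low, high) in ranges.items():
            value = record.get(name)
            # Only numeric values are range-checked; other types are skipped.
            if isinstance(value, (int, float)) and not low <= value <= high:
                report.out_of_range.append((index, name))
        record_key = record.get(key)
        if record_key is not None:
            if record_key in seen_keys:
                report.duplicates.append(index)
            seen_keys.add(record_key)
    return report
```

A report like this could feed the regular audits mentioned above: a batch is accepted only when `report.is_clean` is true, and otherwise the listed rows are sent back for correction.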

Conclusion:

In the age of data-driven decision-making, trust in data is paramount. Establishing trust requires addressing challenges related to data quality, privacy, security, and ethical considerations. By implementing robust governance frameworks, ensuring data accuracy, prioritizing privacy and security, adhering to ethical guidelines, and fostering collaboration, stakeholders can build a solid foundation of trust in data. Trustworthy data not only enables informed decision-making but also paves the way for responsible technological advancements that benefit society as a whole.

Make more confident business decisions with data you can trust.

Schedule a demo today!

#DataGovernance#DataManagement#DataPrivacy#DataProtection#DataCompliance#DataSecurity#DataQuality#DataGovernanceFramework#DataGovernancePolicy#GDPR (General Data Protection Regulation)#CCPA (California Consumer Privacy Act)#DataGovernanceBestPractices#DataStewardship#DataGovernanceStrategy#DataGovernanceCouncil#DataGovernanceManagement#DataGovernanceProcesses#DataGovernanceTools#DataGovernanceTraining

Recent Developments in Data Privacy and Their Implications for Business

Data privacy is a hot topic in today’s digital world. Here are nine recent developments that changed the data privacy landscape and what they mean for businesses and consumers. 1. The EU General Data Protection Regulation (GDPR) came into force in May 2018, creating a unified data protection framework across the EU and giving individuals more control over their personal data. The EU General…

#BCR#binding corporate rules#California Consumer Privacy Act#CCPA#CDPSA#China Information Protection Law#CJEU#Court of Justice of the European Union#data privacy#Digital Markets Act#DMA#DSA#EU Digital Services Act#EU General Data Protection Regulation#GDPR#Global Privacy Control#GPC#India Personal Data Protection Bill#PDPB#PIPL#Schrems II#Senator Kirsten Gillibrand#UK Data Protection Act#US Consumer Data Privacy and Security Act

The Future of Digital Marketing: Trends You Can't Afford to Miss in 2024

Digital marketing is evolving at a rapid pace, and staying ahead of the curve is essential for businesses that want to remain competitive. As we step into 2024, several key trends are reshaping the landscape of digital marketing. These trends reflect changes in technology, consumer behavior, and market dynamics. Let’s dive into the most significant digital marketing trends that you can’t afford to miss in 2024.

1. Artificial Intelligence (AI) and Automation

Artificial Intelligence (AI) has been steadily gaining traction in digital marketing, and 2024 is set to see even greater adoption of AI-driven tools. AI is already being used for predictive analytics, personalized content delivery, and chatbots that enhance customer experience. In 2024, AI-powered platforms will play an even bigger role in creating tailored marketing campaigns, optimizing ad spend, and predicting consumer behavior with incredible accuracy.

Automation, alongside AI, will continue to streamline marketing processes. Automated email marketing, social media scheduling, and content curation are now standard, but advancements in AI will make automation even smarter. Marketers can expect to automate more complex tasks, such as customer segmentation and dynamic ad creation, allowing businesses to focus on creative strategy rather than repetitive tasks.

2. Voice Search and Conversational Marketing

Voice search is transforming the way consumers find information. With the rise of smart speakers like Amazon Echo and Google Home, more people are using voice search to interact with brands. By 2024, voice search optimization will be crucial for businesses that want to maintain their visibility online.

Conversational marketing, which focuses on real-time, one-on-one connections between marketers and consumers, will also grow in importance. AI-driven chatbots and messaging platforms will become even more sophisticated, making it easier to engage with customers at any point in their buying journey. Personalization through conversational AI will help businesses deliver the right message at the right time, increasing customer engagement and satisfaction.

3. The Rise of Short-Form Video Content

Short-form video content, popularized by platforms like TikTok, Instagram Reels, and YouTube Shorts, is expected to dominate digital marketing in 2024. Consumers prefer quick, digestible content, and businesses must adapt by creating more short videos that are engaging and to the point. Brands will need to focus on storytelling, creativity, and humor to capture the attention of their target audience in mere seconds.

Additionally, live streaming will continue to grow, offering businesses the opportunity to connect with their audience in real time. Whether it's product launches, Q&A sessions, or live events, live streaming offers an interactive experience that fosters brand loyalty and trust.

4. Personalization at Scale

Consumers today expect personalized experiences, and in 2024, the ability to offer personalized content at scale will be a key differentiator for brands. With the help of AI and data analytics, marketers can deliver highly tailored content that resonates with individual consumers. This includes personalized email campaigns, product recommendations, and even website experiences that adapt to user behavior in real time.

Personalization will not just be about addressing the consumer by name. Instead, it will focus on delivering the right content, products, and messages based on consumers’ preferences, past behavior, and even their current mood. Brands that succeed in personalizing their marketing efforts at scale will see higher engagement and customer retention.

5. Privacy and Data Protection

In 2024, data privacy will continue to be a critical concern for both consumers and marketers. With regulations like the GDPR (General Data Protection Regulation) and CCPA (California Consumer Privacy Act) setting strict rules on data usage, businesses must prioritize transparency and ethical data handling.

Marketers will need to focus on first-party data collection methods, as reliance on third-party cookies fades due to privacy concerns. Building trust with consumers by being clear about how data is used will be essential. This shift will challenge marketers to get creative with how they gather and utilize customer information in a way that balances personalization with privacy.

Conclusion

As we look toward 2024, digital marketing is set to become more advanced, automated, and personalized than ever before. Businesses that embrace AI, short-form video content, voice search, and data privacy will be well-positioned to succeed in the ever-changing digital landscape. Staying ahead of these trends will be crucial for maintaining a competitive edge and delivering meaningful, personalized experiences to customers in the digital age.

Survey Programming Trends: Adapting to an Ever-Changing Field

In the realm of survey programming, the pace of technological advancement and shifting methodologies is a constant. As organizations and researchers strive to gather actionable insights from diverse populations, staying abreast of the latest trends and adapting to emerging technologies becomes essential. This article delves into the current trends in survey programming and explores how professionals can navigate these changes to enhance their data collection processes and outcomes.

1. Increased Use of Artificial Intelligence and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) have made significant inroads into survey programming, transforming the way surveys are designed, administered, and analyzed.

2. Integration of Mobile and Multichannel Surveys

With the majority of people accessing the internet via smartphones and tablets, optimizing surveys for mobile devices is no longer optional—it's a necessity. Moreover, integrating various channels, such as email, SMS, social media, and web-based platforms, ensures that surveys reach a broader audience and accommodate different user preferences.

3. Emphasis on User Experience and Accessibility

Survey programming is increasingly focusing on user experience (UX) and accessibility to ensure that surveys are engaging and inclusive. This includes designing intuitive interfaces, minimizing survey fatigue, and accommodating respondents with disabilities.

4. Enhanced Data Security and Privacy Measures

With growing concerns about data privacy and security, survey programmers are placing greater emphasis on protecting respondent information. Compliance with regulations such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) is crucial.

5. Leveraging Real-Time Analytics and Dashboarding

Real-time analytics and dashboarding are revolutionizing how survey data is analyzed and presented. Instead of waiting for post-survey data processing, organizations can now access live data streams and interactive dashboards that provide immediate insights.

6. Incorporation of Gamification and Interactive Elements

To boost engagement and response rates, survey designers are increasingly incorporating gamification and interactive elements. Techniques such as quizzes, polls, and interactive scenarios make surveys more engaging and enjoyable for respondents.

7. Adoption of Advanced Survey Methodologies

Survey methodologies are evolving to include more sophisticated approaches, such as conjoint analysis, discrete choice modeling, and experience sampling methods.

8. Focus on Inclusivity and Cultural Sensitivity

As global surveys become more common, there is a growing emphasis on inclusivity and cultural sensitivity. This involves designing surveys that account for diverse cultural contexts, languages, and social norms.

9. Increased Use of Data Integration and Cross-Platform Analytics

Integrating survey data with other sources, such as CRM systems, social media analytics, and transaction records, provides a more comprehensive view of respondents.

10. Growing Importance of Ethical Considerations

Ethical considerations are becoming more prominent in survey programming. This includes ensuring informed consent, minimizing respondent burden, and being transparent about how data will be used.

Conclusion

Survey programming is an ever-evolving field, driven by technological advancements and shifting methodological trends. By staying informed about the latest developments and adapting to new tools and techniques, survey professionals can enhance the effectiveness of their data collection efforts. Embracing AI and ML, optimizing for mobile and multichannel experiences, focusing on user experience and accessibility, and maintaining high standards of data security and ethical practices are key to navigating the complexities of modern survey programming.

To know more read our latest blog: Navigating Trends: The Ever-Evolving in Survey Programming

Also read: survey programming services company

Rob Lilleness Shares The Impact of Technology on Privacy and Personal Data

In a rapidly evolving digital landscape, the issue of privacy and personal data has become a paramount concern for individuals, corporations, and governments alike. The advent of technology has ushered in countless benefits, but it has also raised questions about how our personal data is collected, stored, and used. Rob Lilleness, an expert in the field, shares insights into the profound impact of technology on privacy and personal data.

The Digital Footprint: How Technology Shapes Our Online Identities

In today's interconnected world, our online presence, often called a "digital footprint," is constantly expanding. Everything from the websites we visit to the products we buy leaves a trail of data. Rob Lilleness emphasizes that understanding this digital footprint is critical for individuals and organizations.

Data Collection and Its Consequences

The extensive collection of personal information by tech giants, social media platforms, and other online services has led to concerns about how this information is used. Rob Lilleness delves into data collection practices, from cookies and tracking pixels to social media interactions, shedding light on how personal information is collected, often without users' explicit consent.

The Threats to Privacy

Rob Lilleness discusses the threats posed to personal privacy in the digital age. From cyberattacks and data breaches to the selling of personal information to third parties, the vulnerabilities are numerous. He highlights the need for robust cybersecurity measures and legislation to protect personal data.

Privacy Legislation and User Rights

The impact of technology on privacy has spurred legislative action in many parts of the world. The General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) are examples of such efforts. Lilleness explores how these regulations aim to protect individuals' rights and offers readers a better understanding of the tools at their disposal to assert control over their personal data.

Balancing Convenience and Privacy

Rob Lilleness recognizes that technology has brought enormous convenience to our lives, from personalized recommendations to instant communication. However, it also demands that we strike a balance between enjoying these conveniences and protecting our personal data. He discusses the importance of informed consent and the responsibility of tech companies in this regard.

The Role of Ethics in Technology

Lastly, Rob Lilleness emphasizes the importance of ethical considerations in technology development. He explores the concept of "privacy by design," which encourages tech companies to prioritize user privacy from the very inception of their products and services.

In conclusion, the impact of technology on privacy and personal data is a complex and multifaceted issue. Rob Lilleness sheds light on the various aspects of this challenge, from data collection and threats to legislative action and ethical considerations. As technology keeps advancing, understanding its consequences for privacy becomes increasingly vital for individuals and society as a whole.

CCPA Notice | Understand CCPA Notice Requirements - Speedy Loan Advance

Stay informed about your privacy rights under the California Consumer Privacy Act (CCPA) with Speedy Loan Advance. Our CCPA Notice explains how we collect, use, and protect your personal information while ensuring compliance with CCPA notice requirements. Learn about your rights to access, delete, or opt out of the sale of your data. We prioritize transparency and data security, giving you control over your personal information. Read our detailed CCPA Notice to understand how we safeguard your privacy and comply with California’s data protection laws. Stay protected—review our policy today!

How Does the Presence of The ISO 27001 Certification Help with Compliance?

Holding the ISO 27001 certification can strengthen a company's competitive advantage by showing that its internal data is protected against external manipulation. Companies in highly competitive markets are especially exposed to complications from data breaches, cyber threats, and phishing. The ISO 27001 offers a proactive and practical set of clauses for identifying potential risks and managing them in good time.

When it comes to compliance management, many companies fall short and face government intervention followed by heavy penalties. More than 50% of small companies fail to maintain the privacy of their customer and employee details. Consequently, their brand loses market reputation and they pay avoidable penalties. The following blog discusses how this certification helps with regulatory obligations.

1. Aligns with Legal and Regulatory Requirements - Many industries have strict data security and privacy laws. The ISO 27001 helps businesses comply with regulations such as:

GDPR (General Data Protection Regulation) – Ensures secure handling of personal data.

HIPAA (Health Insurance Portability and Accountability Act) – Supports healthcare organizations in securing patient data.

CCPA (California Consumer Privacy Act) – Supports data protection measures for businesses that handle California residents' personal information.

SOX (Sarbanes-Oxley Act) – Helps financial firms manage IT security risks.

NIST Cybersecurity Framework – Provides controls that align with U.S. government security recommendations.

By implementing the ISO 27001, organisations can support their compliance with these laws and frameworks and reduce the risk of legal penalties.

2. Strengthens Risk Management and Security Controls - The ISO 27001 mandates a risk-based approach to information security. It helps to identify, assess, and mitigate risks associated with cyber threats, data breaches, and unauthorized access, and it ensures continuous monitoring and improvement of security controls. This helps organisations proactively address risks rather than reacting to incidents.

3. Ensures Data Protection and Confidentiality - It establishes encryption, access control, and data classification policies. It prevents unauthorized access to sensitive business, customer, and employee data. The certification enhances business continuity by securing critical information assets. These security practices align with various data protection laws and industry standards.

4. Facilitates Third-Party Compliance and Supply Chain Security - Many organisations require vendors, partners, and suppliers to follow strict security protocols. The ISO 27001 certification proves that an organisation meets high-security standards. It simplifies compliance with supplier risk management frameworks in industries like finance, healthcare, and government contracting. This makes it easier to win contracts and build trust with stakeholders.

5. Reduces the Risk of Data Breaches and Fines - Non-compliance with security regulations often results in hefty fines and reputation damage. The ISO 27001 lowers breach risks by enforcing strict security policies, audits, and employee training. It helps organizations respond effectively to security incidents, reducing legal liability.

6. Supports Compliance with Other ISO Standards

If a company is already certified to other ISO standards, integrating the ISO 27001 simplifies compliance by providing:

A unified management system.

Consistent risk assessment and documentation practices.

A structured approach to security, quality, and regulatory compliance.

7. Demonstrates Commitment to Security and Compliance - The ISO 27001 certification is internationally recognized and shows that a company takes security seriously. It builds customer confidence by showing that data is handled securely, and it helps meet compliance requirements for government tenders, financial services, and cloud-based businesses.

To learn more about the ISO 27001 certification and its benefits, get help from certified and reliable ISO consultancy houses.

Also Read: What Is the Difference Between the ISO 14001 and the ISO 45001!

How Is User Data Handled by Been Verified?

In the digital age, online services that provide access to public records and background information have become increasingly popular. Been Verified is one such platform that offers users the ability to search for information on individuals, including contact details, criminal records, and more. However, with growing concerns about data privacy, it's essential to understand how user data is handled by Been Verified.

Understanding Been Verified

Been Verified is a people search engine that aggregates data from various public sources. Users can access background reports, phone number lookups, email address lookups, and other services through its subscription-based model. The platform aims to provide easy and transparent access to public records, but this also raises questions about how personal data is collected, used, and protected.

Data Collection by Been Verified

When using Been Verified, the platform collects several types of data, including:

Personal Information: This includes your name, email address, phone number, and billing details when you create an account.

Search Data: Been Verified tracks search queries made on the platform to improve its services and provide relevant results.

Device Information: The platform may collect details about your device, browser, IP address, and operating system.

Cookies and Tracking Technologies: Like many websites, Been Verified uses cookies to personalize the user experience and analyze site traffic.

How Been Verified Uses Collected Data

The data collected by Been Verified serves various purposes, including:

Providing Services: User data is primarily used to deliver search results, generate reports, and offer customer support.

Improving the Platform: Aggregated and anonymized data is analyzed to enhance search accuracy and user experience.

Communication: Been Verified may contact users for account updates, service notifications, or marketing purposes.

Compliance with Legal Obligations: The platform may share data with law enforcement when legally required.

Data Security Measures

Been Verified takes data security seriously and employs various measures to protect user information:

Encryption: Data transmitted between your device and Been Verified’s servers is encrypted using Secure Sockets Layer (SSL) technology.

Access Controls: Strict access controls are in place to ensure that only authorized personnel have access to sensitive data.

Regular Audits: The platform conducts regular security audits and assessments to detect and mitigate vulnerabilities.

Data Minimization: Been Verified limits the data it collects and retains, ensuring that unnecessary information is not stored.

User Control Over Data

Been Verified provides users with several options to control their personal data:

Opting Out: Individuals can request to have their information removed from the Been Verified database through the company’s opt-out page.

Access and Update: Users can access and update their account information at any time.

Data Deletion: Upon request, Been Verified will delete personal data in accordance with applicable laws.
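To make the deletion option above more concrete, here is a minimal Python sketch of how a service might process a deletion request. It is purely illustrative and does not describe how Been Verified actually works: `InMemoryUserStore`, `DeletionResult`, and `handle_deletion_request` are hypothetical names, and a real system would also have to cover backups, downstream processors, and identity verification.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class DeletionResult:
    """Outcome of a single deletion request (hypothetical structure)."""
    user_id: str
    deleted: bool
    processed_at: datetime


class InMemoryUserStore:
    """Hypothetical stand-in for wherever personal data is actually kept."""

    def __init__(self):
        self._profiles = {}
        self._audit_log = []

    def add(self, user_id, profile):
        self._profiles[user_id] = profile

    def get(self, user_id):
        return self._profiles.get(user_id)

    def handle_deletion_request(self, user_id):
        """Erase the stored profile and log only the fact that a request was handled."""
        deleted = self._profiles.pop(user_id, None) is not None
        result = DeletionResult(user_id, deleted, datetime.now(timezone.utc))
        # The audit entry keeps the identifier and timestamp, not the profile itself.
        self._audit_log.append(result)
        return result

    def audit_entries(self):
        return list(self._audit_log)
```

Keeping a small audit record is a design choice in this sketch: it lets the operator show that a request was honored without retaining the deleted profile.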

Compliance with Data Protection Regulations

Been Verified complies with relevant data protection laws, including:

California Consumer Privacy Act (CCPA): California residents have the right to know what personal information is collected and request its deletion.

General Data Protection Regulation (GDPR): European users are granted additional rights concerning their personal data, including the right to access, rectify, and erase their information.

Fair Credit Reporting Act (FCRA): While Been Verified is not a consumer reporting agency, it adheres to relevant legal requirements concerning the use of data for permissible purposes.

Transparency and Accountability

To maintain transparency, Been Verified provides a clear and detailed privacy policy outlining how data is collected, used, and protected. Users are encouraged to review this policy to stay informed about their rights and the company’s practices.

Final Thoughts

While using services like Been Verified can provide valuable insights, it’s crucial to be aware of how your data is handled. By understanding the platform’s data collection practices, security measures, and compliance with privacy laws, users can make informed decisions about their personal information. If you have concerns about your data, taking advantage of the opt-out options and exercising your privacy rights can further protect your information.

For more information, visit the official Been Verified website and review their privacy policy.

RChilli Strengthens User Trust with CCPA Certification

As businesses and technology continue to evolve, the importance of data privacy has reached an all-time high. RChilli, a global leader in AI-driven HR technology solutions, has recently achieved full compliance with the California Consumer Privacy Act (CCPA). This accomplishment strengthens the company’s commitment to user data privacy and showcases its ongoing dedication to innovation and security in the…

How Web3 Marketing Agencies Are Navigating the Legal Landscape

The rapid growth of Web3 technologies such as blockchain, decentralized finance (DeFi), non-fungible tokens (NFTs), and the metaverse has led to new opportunities for businesses and marketers. As companies look to leverage these innovative platforms, Web3 marketing agencies have emerged as key players in guiding brands through the complexities of this new digital landscape. However, alongside the excitement and opportunities come significant legal challenges, which Web3 marketing agencies must navigate to ensure compliance, protect their clients, and build sustainable marketing campaigns.

In this article, we’ll explore how Web3 marketing agencies are addressing legal issues, including data privacy, intellectual property, advertising regulations, and contract law while ensuring they stay ahead of the curve in an evolving regulatory environment.

Understanding the Legal Challenges Facing Web3 Marketing Agencies

The decentralized nature of Web3 introduces unique legal complexities for marketing agencies. While established rules and regulations govern traditional marketing, Web3 introduces new variables that agencies must contend with. Here are some of the primary legal challenges they face:

Data Privacy and Consumer Protection: In Web3, user data is often decentralized, and consumers have greater control over their personal information. However, this also raises concerns about how data is collected, stored, and used, especially when it comes to compliance with data protection regulations like the European Union’s General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA). Web3 marketing agencies must ensure they handle user data in a transparent manner. Since Web3 platforms often operate without a central authority, it becomes more challenging for agencies to ensure that third-party platforms comply with privacy laws. To mitigate risk, agencies must work with their clients to educate them on best practices for user consent, data storage, and protection, ensuring compliance with global privacy standards.

Intellectual Property and NFT Ownership: As Web3 marketing agencies incorporate NFTs into their campaigns, they must grapple with intellectual property (IP) laws in new and complex ways. NFTs are unique digital assets that can represent anything from artwork to virtual real estate. However, the legal rights associated with NFTs, such as copyright ownership or licensing agreements, can be ambiguous. A key issue for Web3 marketing agencies is ensuring that their clients have clear rights to use the digital assets they are promoting or selling as NFTs. Agencies must carefully vet the ownership and licensing rights of any digital assets involved in NFT-based campaigns to prevent potential legal disputes. Moreover, since NFTs can often be resold or transferred across platforms, agencies must ensure that IP rights are clearly stated and legally binding.

Advertising and Marketing Regulations: Traditional advertising laws and regulations (such as truth in advertising, consumer protection, and financial disclosures) apply in the Web3 space as well, but there is a significant lack of clear guidelines specific to decentralized technologies. This creates challenges for Web3 marketing agencies when running campaigns on decentralized platforms or promoting blockchain-based products like tokens or NFTs. Web3 marketing agencies need to stay updated on evolving regulations from bodies like the U.S. Federal Trade Commission (FTC), the U.K.’s Advertising Standards Authority (ASA), or the European Union’s consumer protection laws. Agencies must ensure that any claims made in Web3 campaigns are truthful, substantiated, and compliant with existing advertising rules. This becomes even more critical when promoting investment opportunities or financial products, as regulatory scrutiny is growing in the realm of crypto marketing.

Decentralized Finance (DeFi) and Securities Laws: Decentralized finance platforms allow individuals to access financial services without intermediaries, but these platforms are not free from regulation. In particular, the U.S. Securities and Exchange Commission (SEC) and other financial regulators worldwide are increasingly focused on whether tokens or digital assets issued through DeFi platforms are classified as securities. For Web3 marketing agencies promoting DeFi projects or tokens, it is essential to understand securities laws and ensure that the promotion of such assets complies with existing financial regulations. Failure to do so can result in fines, legal action, or the shutdown of campaigns. Agencies may need to work closely with legal teams to evaluate whether the assets being promoted qualify as securities and, if so, follow proper disclosure and compliance protocols.

Smart Contract and Liability Issues: Smart contracts, which are self-executing contracts with terms directly written into code, are integral to many Web3 projects. While they offer a level of automation and transparency, smart contracts also come with legal implications. Issues such as contract disputes, liabilities, and errors in the contract code can lead to legal challenges. Web3 marketing agencies must ensure that their clients fully understand the legal implications of using smart contracts in their marketing efforts. Additionally, they should work with developers and legal experts to ensure that smart contracts are written correctly and can withstand legal scrutiny.

How Web3 Marketing Agencies Are Addressing Legal Challenges

To navigate these legal challenges, Web3 marketing agencies are adopting several strategies:

Collaboration with Legal Experts: Many Web3 marketing agencies are partnering with legal experts who specialize in blockchain and cryptocurrency law. These professionals help agencies understand complex legal frameworks, ensure compliance with regulations, and mitigate legal risks associated with decentralized technologies.

Implementing Clear and Transparent Terms: Given the uncertainties surrounding data ownership and intellectual property rights in Web3, agencies are ensuring that clear terms of service and user agreements support all campaigns. These agreements help define the rights and responsibilities of consumers and ensure that agencies are protected legally.

Staying Ahead of Regulatory Changes: Since the legal landscape for Web3 is constantly evolving, agencies are dedicating resources to staying updated on the latest regulatory developments. Many Web3 marketing agencies are part of industry groups or forums that provide insights into new regulations and best practices for compliance.

Educating Clients on Legal Risks: Web3 marketing agencies are working closely with their clients to help them understand the potential legal risks involved in marketing within the Web3 space. By offering legal advice and working proactively to identify issues, agencies can help clients mitigate legal risks and stay compliant with regulatory requirements.

Conclusion

As the Web3 space continues to evolve, so too will the legal landscape. Web3 marketing agencies are at the forefront of navigating the complexities of decentralized platforms, privacy regulations, and intellectual property laws. By staying informed, collaborating with legal experts, and implementing transparent practices, these agencies are not only ensuring compliance but also setting the stage for long-term success in a rapidly changing industry. For brands seeking to capitalize on the Web3 revolution, working with a knowledgeable Web3 marketing agency is essential to mitigate legal risks and build a sustainable, compliant marketing strategy.


Cloud Advertising Industry Report: AI, Data Analytics & Programmatic Trends

The global cloud advertising market size is anticipated to reach USD 14.31 billion by 2030, according to a new report by Grand View Research, Inc. The market is expected to grow at a CAGR of 18.1% from 2023 to 2030. Growth over the forecast period is expected to be driven by the increasing focus on data privacy and compliance regulations, which has bolstered the demand for cloud advertising.

Advertisers are increasingly concerned about adhering to privacy regulations, such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). Cloud advertising platforms offer advanced data protection and compliance features, enabling advertisers to ensure the security and privacy of user data while delivering personalized ads. This driver has gained prominence as data privacy concerns have become more prevalent among consumers and regulatory bodies.

Cloud Advertising Market Report Highlights

By service, the platform as a service (PaaS) segment accounted for the largest revenue share of 53.2% in 2022 and is anticipated to maintain its dominance over the forecast period. The demand for innovation and enhanced customer experiences fuels the adoption of PaaS-based cloud advertising. Advertisers constantly seek novel ways to engage with their target audience and deliver personalized, interactive, and immersive advertising experiences. PaaS platforms provide the necessary infrastructure and tools to experiment with new ad formats, technologies, and channels, facilitating innovation and creativity in advertising campaigns. The software as a service (SaaS) segment is expected to witness the highest growth rate of 21.6% during the forecast period due to a shift towards self-service and automated marketing solutions

In terms of channel, the in-app segment held the largest revenue share of 33.6% in 2022. Mobile gaming has grown exponentially in recent years, with a large user base engaging in various gaming apps. Cloud advertising allows advertisers to tap into this lucrative market by integrating relevant and non-intrusive ads within gaming experiences. Advertisers can leverage cloud platforms to reach highly engaged users, offering targeted promotions, rewards, or in-app purchases, thus effectively monetizing the gaming environment. The social media segment is expected to witness the highest CAGR of 20.5% during the forecast period. Cloud advertising enables advertisers to quickly adapt their social media campaigns, adjust ad creatives, and modify targeting parameters to keep up with rapidly changing trends and user preferences. This flexibility is valuable in social media marketing, where trends and conversations can evolve rapidly

In terms of deployment, the hybrid cloud segment dominated the market and captured the highest revenue share of 43.3% in 2022. Data privacy and compliance considerations are critical for cloud advertising in hybrid cloud setups. Advertisers must navigate regulatory requirements and protect consumer data while delivering personalized ads. The private cloud segment is anticipated to witness the highest growth rate of 20.4% during the forecast period owing to the growth of cloud computing in private cloud environments

Based on enterprise size, the large enterprise segment captured the largest revenue share of 56.8% in 2022 and is expected to continue its dominance over the forecast period. Cloud advertising enables large enterprises to manage and streamline their advertising operations effectively. These enterprises often have multiple departments, regions, and brands, making centralized advertising management crucial for consistency and efficiency. The small and medium-sized enterprise segment is anticipated to grow at the highest CAGR of 19.7% during the forecast period. Cloud advertising offers robust tracking and measurement capabilities, allowing SMEs to monitor the performance of their campaigns in real time

Based on application, the campaign management segment captured the largest revenue share of 28.9% in 2022 and is expected to continue its dominance over the forecast period. Cloud platforms provide the computational power and infrastructure needed to harness the potential of AI and ML, enabling advertisers to optimize their campaigns and deliver tailored messages to their audience. The real-time engagement segment is anticipated to grow at the highest CAGR of 22.6% during the forecast period. Growing demand for personalized and contextualized experiences has fueled the need for real-time engagement in advertising

Based on the end-use industry, the BFSI segment had a major revenue share of 14.8% in 2022. The BFSI sector has witnessed a rapid digital transformation, with financial institutions increasingly embracing cloud technology to enhance their operations and customer experiences. Cloud advertising gives these institutions the scalability and agility to deliver targeted and personalized ads to their customers. The manufacturing industry is expected to grow at a CAGR of 19.9% during the forecast period. The demand for patient engagement and education has fueled the adoption of cloud advertising in the healthcare segment. Cloud-based advertising solutions provide opportunities for healthcare organizations to deliver relevant and informative content to patients, empowering them to make informed decisions about their health

North America held the highest market share of 33.1% in 2022 and is anticipated to grow over the forecast period owing to the rise of big data and analytics. With the increasing number of internet users, mobile device usage, and online platforms' popularity, advertisers recognize the need to optimize their digital advertising strategies. Cloud advertising allows advertisers to efficiently deliver their campaigns across multiple channels and devices, ensuring maximum reach and engagement with the North American audience

Asia Pacific is expected to grow at the highest CAGR of 22.5% during the forecast period. The increasing popularity of social media and online platforms has created vast opportunities for cloud advertising in the Asia Pacific. Platforms like Facebook, Instagram, WeChat, LINE, and KakaoTalk have massive regional user bases, offering advertisers extensive reach and engagement potential


Cloud Advertising Market Segmentation

Grand View Research has segmented the cloud advertising market based on service, channel, deployment, enterprise size, application, end-use industry, and region:

Cloud Advertising Service Outlook (Revenue, USD Million, 2018 - 2030)

Infrastructure As A Service (IaaS)

Software As A Service (SaaS)

Platform As A Service (PaaS)

Cloud Advertising Channel Outlook (Revenue, USD Million, 2018 - 2030)

Email Marketing

In-App

Social Media Marketing

Company Website

Others

Cloud Advertising Deployment Outlook (Revenue, USD Million, 2018 - 2030)

Public

Private

Hybrid

Cloud Advertising Enterprise Size Outlook (Revenue, USD Million, 2018 - 2030)

Large Size Enterprises

Small and Medium Sized Enterprises (SMEs)

Cloud Advertising Application Outlook (Revenue, USD Million, 2018 - 2030)

Campaign Management

Customer Management

Experience Management

Analytics and Insights

Real-Time Engagement

Cloud Advertising End-use Industry Outlook (Revenue, USD Million, 2018 - 2030)

IT and Telecommunications

BFSI

Healthcare

Manufacturing

Retail and Consumer Goods

Automotive

Media and Entertainment

Travel and Hospitality

Others

Cloud Advertising Regional Outlook (Revenue, USD Million, 2018 - 2030)

North America: US, Canada

Europe: UK, Germany, France, Italy, Spain, Netherlands

Asia Pacific: China, India, Japan, Australia, Singapore

Latin America: Brazil, Mexico, Chile, Argentina

Middle East & Africa: UAE, Saudi Arabia, South Africa

Key Players in the Cloud Advertising Market

Google LLC

Oracle

IBM Corporation

Amazon Web Services, Inc.

Adobe

Salesforce, Inc.

Wipro

The Nielsen Company (US), LLC.

Viant Technology LLC

Cavai

Kubient

Imagine Communications

Order a free sample PDF of the Cloud Advertising Market Intelligence Study, published by Grand View Research.


California Attorney General Rob Bonta today issued a consumer alert to customers of 23andMe, a genetic testing and information company. The California-based company has publicly reported that it is in financial distress and stated in securities filings that there is substantial doubt about its ability to continue as a going concern. Due to the trove of sensitive consumer data 23andMe has amassed, Attorney General Bonta reminds Californians of their right to direct the deletion of their genetic data under the Genetic Information Privacy Act (GIPA) and California Consumer Privacy Act (CCPA). Californians who want to invoke these rights can do so by going to 23andMe's website.

“California has robust privacy laws that allow consumers to take control and request that a company delete their genetic data,” said Attorney General Bonta. “Given 23andMe’s reported financial distress, I remind Californians to consider invoking their rights and directing 23andMe to delete their data and destroy any samples of genetic material held by the company.”

To Delete Genetic Data from 23andMe:

Consumers can delete their account and personal information by taking the following steps:

1. Log into your 23andMe account on their website.

2. Go to the “Settings” section of your profile.

3. Scroll to a section labeled “23andMe Data” at the bottom of the page.

4. Click “View” next to “23andMe Data.”

5. Download your data: If you want a copy of your genetic data for personal storage, choose the option to download it to your device before proceeding.

6. Scroll to the “Delete Data” section.

7. Click “Permanently Delete Data.”

8. Confirm your request: You’ll receive an email from 23andMe; follow the link in the email to confirm your deletion request.

To Destroy Your 23andMe Test Sample:

If you previously opted to have your saliva sample and DNA stored by 23andMe, but want to change that preference, you can do so from your account settings page, under “Preferences.”

To Revoke Permission for Your Genetic Data to be Used for Research:

If you previously consented to 23andMe and third-party researchers using your genetic data and sample for research, you may withdraw that consent from the account settings page, under “Research and Product Consents.”

Under GIPA, California consumers can delete their account and genetic data and have their biological sample destroyed. In addition, GIPA permits California consumers to revoke consent that they provided a genetic testing company to collect, use, and disclose genetic data and to store biological samples after the initial testing has been completed. The CCPA also vests California consumers with the right to delete personal information, which includes genetic data, from businesses that collect personal information from the consumer.

#who could have ever foreseen any issues with giving all this sensitive data to a company#23andme#psa#california


Certificate Authority Market Landscape: Opportunities and Competitive Insights 2032

The Certificate Authority market was valued at USD 167.7 million in 2023 and is expected to reach USD 442.2 million by 2032, growing at a CAGR of 11.4% over the forecast period of 2024-2032.
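The growth figures quoted above can be sanity-checked with the standard compound annual growth rate formula. The following is a minimal sketch, not part of the report: the function names are illustrative, and it assumes 2023 is the base year, so the 2024-2032 forecast period spans nine compounding periods.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.114 means 11.4%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the post: USD 167.7 million (2023) and USD 442.2 million (2032).
# Assumption: 2023 is the base year, giving nine compounding periods to 2032.
implied_rate = cagr(167.7, 442.2, 9)
projected_2032 = project(167.7, 0.114, 9)

print(f"Implied CAGR: {implied_rate:.1%}")
print(f"Projected 2032 value at 11.4%: USD {projected_2032:.1f} million")
```

Under that assumption the implied rate rounds to the quoted 11.4%, and compounding 11.4% for nine years lands within about USD 1 million of the quoted 2032 figure, so the three numbers are consistent with one another.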

The Certificate Authority (CA) market is witnessing rapid expansion, driven by the increasing demand for secure digital transactions, data protection, and regulatory compliance. With businesses shifting towards digital platforms, the need for SSL/TLS certificates, code signing, and digital signatures has surged. Organizations across industries are prioritizing cybersecurity to mitigate risks associated with online threats and data breaches.

The Certificate Authority market continues to grow as enterprises, governments, and individual users seek to enhance online security. Digital transformation initiatives, cloud adoption, and stringent cybersecurity regulations are fueling the demand for reliable CA services. The expansion of e-commerce, online banking, and IoT ecosystems has further accelerated the need for strong authentication and encryption solutions provided by certificate authorities.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3558

Key Market Players:

DigiCert (DigiCert SSL Certificates, Certificate Lifecycle Manager)

GlobalSign (GlobalSign SSL Certificates, CloudSSL)

Entrust (Entrust SSL/TLS Certificates, PKI as a Service)

Comodo CA (Positive SSL, Comodo Certificate Manager)

Symantec (Symantec SSL Certificates, Endpoint Protection)

GoDaddy (GoDaddy SSL Certificates, Managed WordPress Hosting)

Sectigo (Sectigo SSL Certificates, Certificate Manager)

Let’s Encrypt (Let’s Encrypt SSL Certificates, ACME Client)

Trustwave (Trustwave SSL Certificates, Managed Security Services)

Amazon Web Services (AWS) (AWS Certificate Manager, AWS CloudFront)

Market Trends Driving Growth

1. Rising Adoption of SSL/TLS Certificates

As cyber threats become more sophisticated, organizations are increasingly implementing SSL/TLS certificates to encrypt communications and ensure website authenticity. The adoption of HTTPS protocols is now a standard requirement, with search engines and regulatory bodies pushing for secure connections.

2. Growth in Digital Signatures and E-Signature Solutions

The increasing adoption of digital transactions has driven demand for e-signature solutions backed by certificate authorities. Businesses and governments are implementing digital signature solutions to ensure document authenticity, prevent fraud, and comply with regulatory standards.

3. Expansion of IoT Security

With the rapid proliferation of Internet of Things (IoT) devices, security concerns have escalated. Certificate authorities are playing a crucial role in securing IoT ecosystems by providing device authentication, encrypted communications, and data integrity protection.

4. Regulatory Compliance and Data Protection Laws

Stringent data protection regulations such as GDPR (General Data Protection Regulation), CCPA (California Consumer Privacy Act), and eIDAS (Electronic Identification, Authentication, and Trust Services) are mandating secure digital identities and encrypted communications. Organizations are investing in CA solutions to ensure compliance with these regulations.

5. Cloud-Based and Managed PKI Solutions

The shift towards cloud computing has increased the demand for Public Key Infrastructure (PKI) as a Service and managed CA solutions. Enterprises are leveraging cloud-based certificate management services to streamline security operations, reduce costs, and ensure scalability.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3558

Market Segmentation:

By Component: Certificate Type (SSL Certificates, Code Signing Certificates, Secure Email Certificates, Authentication Certificates), Services

By Certificate Validation Type: Domain Validation, Organization Validation, Extended Validation

By Enterprise Size: SMEs, Large Enterprises

By Vertical: BFSI, Retail and E-commerce, Government and Defence, Healthcare, IT and Telecom, Travel and Hospitality, Education

Market Analysis and Current Landscape

Key factors contributing to this growth include:

Rising cyber threats and phishing attacks: Organizations are prioritizing encryption and authentication to protect sensitive data.

Increase in remote work and digital transactions: Businesses require secure communication channels and authentication mechanisms for remote employees.

Demand for Zero Trust security frameworks: Organizations are integrating PKI-based authentication to enhance access control and data security.

Advancements in quantum-safe cryptography: Research and development in quantum-safe encryption are driving innovation in the CA market.

Despite strong market growth, challenges such as certificate lifecycle management complexities, lack of awareness, and evolving cyber threats persist. However, advancements in automation and AI-driven certificate management solutions are addressing these issues.
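Certificate lifecycle management largely comes down to knowing when each certificate expires and renewing it in time. As a rough illustration of the kind of check an automated tool might run, here is a minimal sketch using Python's standard `ssl` module. The helper names and the 30-day threshold are assumptions made for this example, and the timestamp string is a sample value in the format that `SSLSocket.getpeercert()` reports for `notAfter`, not data from a real certificate.

```python
import ssl
import time
from typing import Optional

SECONDS_PER_DAY = 86400


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left before a certificate's notAfter timestamp.

    `not_after` uses the text format found in the dict returned by
    SSLSocket.getpeercert(), for example "Jan  5 09:34:43 2018 GMT".
    """
    expires_at = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires_at - current) / SECONDS_PER_DAY


def needs_renewal(not_after: str, threshold_days: int = 30,
                  now: Optional[float] = None) -> bool:
    """True when the certificate expires within `threshold_days` (hypothetical policy)."""
    return days_until_expiry(not_after, now) <= threshold_days


# Sample notAfter value and a fixed "current time" ten days earlier,
# so the example behaves the same whenever it is run.
sample_not_after = "Jan  5 09:34:43 2018 GMT"
fixed_now = ssl.cert_time_to_seconds(sample_not_after) - 10 * SECONDS_PER_DAY

print(days_until_expiry(sample_not_after, now=fixed_now))
print(needs_renewal(sample_not_after, now=fixed_now))
```

In practice the `notAfter` value would come from a live connection or a certificate inventory, and a scheduler would run the check across every certificate an organization holds; the sketch only shows the date arithmetic at the core of that process.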

Regional Analysis: Market Expansion Across Key Geographies

North America: Leading the CA market due to stringent cybersecurity regulations, high adoption of digital transformation, and a strong presence of technology firms.

Europe: Witnessing significant growth due to compliance with GDPR, eIDAS, and other cybersecurity mandates driving the demand for trusted digital identities.

Asia-Pacific: Experiencing rapid market expansion, fueled by increasing internet penetration, government-led cybersecurity initiatives, and growing e-commerce sectors.

Latin America and Middle East & Africa: Emerging markets with growing digital adoption and rising awareness of cybersecurity threats are contributing to CA market growth.

Key Factors Influencing Market Growth

Cybersecurity Awareness & Rising Threat Landscape – The growing number of cyberattacks, ransomware incidents, and phishing scams are compelling businesses to adopt strong encryption measures.

Integration of AI & Automation in Certificate Management – AI-driven security solutions are simplifying certificate lifecycle management, reducing the risks of expired or compromised certificates.

Government & Industry-Specific Regulations – Compliance requirements across financial services, healthcare, and government institutions are driving widespread CA adoption.

Adoption of Multi-Factor Authentication (MFA) – Certificate-based authentication methods, including client certificates and smart cards, are gaining traction in identity verification solutions.

Emergence of Blockchain-Based Digital Identities – The use of blockchain for decentralized identity verification is an emerging trend, with some CAs exploring blockchain-based trust models.

Future Prospects: The Road Ahead for Certificate Authorities

The Certificate Authority market is expected to continue its upward trajectory, with advancements in encryption technologies and identity management solutions. Future developments include:

Quantum-Resistant Cryptography: As quantum computing advances, CAs will invest in post-quantum cryptography solutions to ensure future-proof security.

Automated Certificate Management Platforms: AI-powered tools will enhance the efficiency of certificate issuance, renewal, and monitoring to prevent security lapses.

Expanding Role of CAs in IoT & 5G Security: With IoT devices and 5G networks becoming more prevalent, CAs will play a critical role in securing connected ecosystems.

Growth of Decentralized Trust Models: Emerging technologies such as blockchain and Self-Sovereign Identity (SSI) will reshape the digital identity landscape.

Stronger Collaboration Between Public & Private Sectors: Governments and enterprises will work together to establish more robust cybersecurity frameworks and trust standards.

Access Complete Report: https://www.snsinsider.com/reports/certificate-authority-market-3558

Conclusion

The Certificate Authority market is at the forefront of the digital security revolution, providing essential authentication, encryption, and identity verification solutions. As cyber threats evolve and regulatory requirements tighten, the demand for CA services will continue to rise. Organizations that embrace automation, AI-driven security, and emerging cryptographic technologies will be well-positioned to thrive in this rapidly expanding market.

With increasing investments in cybersecurity infrastructure and the growing reliance on digital trust, certificate authorities will play a pivotal role in shaping the future of secure online communications and transactions worldwide.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

#Certificate Authority market#Certificate Authority market Analysis#Certificate Authority market Scope#Certificate Authority market Growth#Certificate Authority market Trends


California Is Flooded with Software Development Companies

California has long been a global leader in technology and innovation, with Silicon Valley at the heart of the world’s most powerful tech ecosystem. Software development companies in California are known for their cutting-edge solutions, creativity, and fast-paced development cycles. But how exactly do these companies work, and what makes them so successful? Let’s dive into the key aspects of how software development companies operate in the Golden State.

California, and particularly Silicon Valley, is often referred to as the epicenter of technological innovation. The state is home to many of the world’s leading tech giants, including Apple, Google, and Facebook, as well as countless startups. This innovative environment is fueled by the presence of top-tier universities, venture capital, and a large network of highly skilled professionals.

Software development companies in California thrive in this ecosystem because they are constantly encouraged to push boundaries, embrace new technologies, and deliver products that revolutionize industries. This drive for innovation is reflected in the way companies approach development, whether through agile methodologies, continuous integration, or incorporating cutting-edge technologies such as artificial intelligence and machine learning into their products.

One of the defining characteristics of software development companies in California is the widespread adoption of agile development practices. Agile emphasizes iterative development, flexibility, and close collaboration between teams, which allows for faster development cycles, continuous feedback, and rapid adjustments based on market or user needs.

These agile teams often work in collaborative, open-office environments, which promote communication and the free flow of ideas. Many companies use platforms like Jira, Slack, and Trello to streamline project management, task delegation, and communication, ensuring everyone is on the same page.

California is a magnet for tech talent, attracting skilled professionals from all over the world. Software development companies in the state often have access to a highly qualified talent pool, making it easier to hire software engineers, data scientists, UI/UX designers, and other essential roles.

The hiring process for software companies in California is rigorous, often involving multiple rounds of interviews, coding challenges, and technical assessments. Many companies also look for cultural fit — ensuring that the candidate aligns with the company’s values, work ethic, and vision.

Moreover, software development companies in California tend to offer attractive compensation packages, including high salaries, stock options, and other perks like flexible work hours, remote work options, and wellness programs. This competitive compensation structure reflects the high cost of living in the state and the need to retain top talent.

Companies in California are typically tasked with developing software that can scale to handle millions of users, especially those based in Silicon Valley, where startups quickly grow into large corporations. As a result, software developers focus on building robust, scalable architectures that can accommodate sudden spikes in traffic and large user bases.

Security is another major concern. Given the prevalence of cybersecurity threats, software development companies in California take great care in implementing secure coding practices and conducting rigorous testing to ensure their software is protected from potential breaches. Many companies follow industry standards and regulations, such as the General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA), to ensure compliance with data protection laws.

The startup culture is alive and well in California, particularly in tech hubs like Silicon Valley, Los Angeles, and San Diego. Many software development companies in the state start as small startups, often founded by entrepreneurs with a unique idea or a desire to solve a particular problem.

California’s thriving venture capital (VC) scene plays a huge role in the success of these companies. Investors are constantly on the lookout for the next big thing, and startups can secure funding to scale their businesses quickly. This financial backing allows companies to hire top talent, invest in research and development, and launch new products that disrupt the market.

The emphasis on growth and innovation leads to a dynamic and fast-paced work environment. Employees in California’s software development companies are encouraged to think outside the box and take risks, often resulting in breakthrough technologies and products that shape the future of the industry.

California is one of the most diverse states in the U.S., and this is reflected in its tech industry. Software development companies in the state are increasingly prioritizing diversity and inclusion in their hiring practices and company culture. A diverse workforce brings varied perspectives and ideas, fostering creativity and innovation.

In response to growing awareness about the importance of diversity, many tech companies in California have implemented programs and initiatives aimed at supporting underrepresented groups, whether through mentorship programs, diversity hiring targets, or community outreach.

While California has historically been known for its in-person, office-driven culture, the landscape is changing. The COVID-19 pandemic accelerated the adoption of remote work, and many software development companies in California have embraced flexible work arrangements. Employees now have the option to work from home or remotely, often with the flexibility to set their own hours.

This shift has allowed companies to tap into talent from outside of California, broadening their hiring pool and making it easier for them to recruit top talent from around the world. Remote work has also led to improved work-life balance for many employees, making California’s tech industry an even more attractive place to work.

Software development companies in California place a significant emphasis on user experience (UX). They understand that creating an intuitive, easy-to-use interface can make or break a product. As a result, California-based software companies often have dedicated UX teams who work closely with developers, designers, and product managers to ensure that the final product delivers a seamless experience for users.

With the rapid advancement of mobile technology, companies are increasingly focusing on mobile-first design, ensuring that their software is optimized for smartphones and tablets, which are now the primary devices for many users.

Closing Insights

Software development companies in California operate in a dynamic, innovative, and competitive environment. From agile development practices to a focus on scalability, security, and user experience, these companies are constantly pushing the envelope to create groundbreaking technologies. The state’s thriving tech ecosystem, abundant talent pool, and venture capital resources make California a hub for software development and technological innovation.

For software companies looking to stay ahead of the curve, the California model of innovation, collaboration, and growth offers invaluable lessons that are shaping the future of the industry worldwide.
