#Azure API Management


Azure API Management provides robust tools for securing and scaling your APIs, ensuring reliable performance and protection against threats. This blog post covers best practices for implementing Azure API Management, including authentication methods, rate limiting, and monitoring. Explore how Azure’s features can help you manage your API lifecycle effectively, enhance security protocols, and scale your services to handle increasing traffic. Get practical tips for optimizing your API management strategy with Azure.


Unlock the power of Azure API Management for your business! Discover why this tool is indispensable for streamlining operations and enhancing customer experiences.


Leverage the Benefits of Azure API Management

ISmile Technologies offers Azure API Management to create consistent, modern API gateways for existing back-end services. We help organizations publish APIs to internal developers, external developers, and partners to unlock the potential of their data and services.

What is Azure API Management?

Azure API Management is a multi-cloud, hybrid management platform for APIs in all environments. API Management, as a platform-as-a-service, supports the complete API lifecycle.

ISmile Technologies Providing Services in Azure API Management

Azure API Management offers fully managed serverless and dedicated tiers, and you choose between them based on how your APIs are consumed. The tiers fall into two groups: the Consumption tier, and the Developer, Basic, Standard, and Premium tiers.

Consumption tier

The Consumption tier has built-in auto-scaling down to zero and consumption-based micro-billing. It has no reserved capacity and runs on a shared management plane. It offers a curated set of features and is subject to usage limits.

Developer | Basic | Standard | Premium tier

These tiers offer manual scaling or external auto-scaling. Billing is based on reserved capacity, which gives a constant and predictable monthly cost. Because capacity is reserved, you get dedicated management, user, and data planes. The APIM instance is always on, and it has a complete set of features that are not governed by usage limits.

API lifecycle

The five stages of an API lifecycle must be considered for API design. These phases are:

Plan and Design

Develop

Test

Deploy

Retire

Value proposition

Trusted by thousands of enterprise customers

Globally available and supported

Dependable, secure, scalable, and performant

DevOps and developer-friendly

Azure-native and integrated with other Azure services

One solution for APIs across clouds and on-premises

Low-barrier-to-entry pricing

Abstract, secure, observe and make APIs discoverable in minutes


Try public preview: Microsoft identity Conditional Access filters for apps

As part of Zero trust posture, protecting all apps is key. At present, policies explicitly list apps. Today Microsoft announced the public preview of filters for apps. This provides a new way to manage Conditional Access (CA) assignment for apps and workload identities at scale.

With filters for apps, admins can tag applications with custom security attributes and apply Conditional Access…


#$filter#access#API#apps#Azure Active Directory#conditional access#cpolicy#developers#devices#Identity platform#Management#Microsoft Entra#MS Graph API#Policy#public preview#Secure#Security#security attributes#service principal#users#workload identities#zero trust


Mastering Azure Container Apps: From Configuration to Deployment

Thank you for following our Azure Container Apps series! We hope you're gaining valuable insights to scale and secure your applications. Stay tuned for more tips, and feel free to share your thoughts or questions. Together, let's unlock Azure's power.

#API deployment#application scaling#Azure Container Apps#Azure Container Registry#Azure networking#Azure security#background processing#Cloud Computing#containerized applications#event-driven processing#ingress management#KEDA scalers#Managed Identities#microservices#serverless platform


Generative AI from an enterprise architecture strategy perspective

New Post has been published on https://thedigitalinsider.com/generative-ai-from-an-enterprise-architecture-strategy-perspective/


Eyal Lantzman, Global Head of Architecture, AI/ML at JPMorgan, gave this presentation at the London Generative AI Summit in November 2023.

I’ve been at JPMorgan for five years, mostly doing AI, ML, and architecture. My background is in cloud infrastructure, engineering, and platform SaaS. I normally support AI/ML development, tooling processes, and use cases.

Some interesting observations have come about from machine learning and deep learning. Foundation models and large language models are providing different new opportunities and ways for regulated enterprises to rethink how they enable those things.

So, let’s get into it.

How is machine learning done?

You have a data set and you traditionally have CPUs, although you can use GPUs as well. You run through a process and you end up with a model. You end up with something where you can pass relatively simple inputs like a row in a database or a set of features, and you get relatively simple outputs back.

We evolved roughly 20 years ago towards deep learning and have been on that journey since. You pass more data, you use GPUs, and there are some different technology changes. But what it allows you to do is pass complex inputs rather than simple ones.

Essentially, the deep learning models have some feature engineering components built in. Instead of sending the sepal and petal length and width for the Iris model and figuring out how to extract those from an image, you just send an image and it extracts them automatically.

Governance and compliance in generative AI models

Foundation models are quite interesting because first of all, you effectively have two layers, where there’s a provider of that foundation model that uses a very large data set, and you can add as many variants as you want there. They’re GPU-based and there’s quite a bit of complexity, time, and money, but the outcome is that you can pass in complex inputs and get complex outputs.

So that’s one difference. The other is that quite a lot of them are multimodal, which means you can reuse them across different use cases, in addition to being able to take what you get out of the box for some of them and retrain or fine-tune them with a smaller data set. Again, you run additional training cycles and then get the fine-tuned models out.

Now, the interesting observation is that the first layer on top is where you get the foundation model. You might have heard statements like, “Our data scientist team likes to fine-tune models.” Yes, but that initial layer makes it available already for engineers to use GenAI, and that’s the shift that’s upon us.

It essentially moves from processes, tools, and things associated with the model development lifecycle to software because the model is an API.

But how do you govern that?

Different regulated enterprises have their own processes to govern models versus software. How do you do that with this paradigm?

That’s where things need to start shifting within the enterprise. That understanding needs to feed into the control assurance functions and control procedures, depending on what your organization calls those things.

This is in addition to the fact that most vendors today will have some GenAI in them. That essentially introduces another risk. If a regulated company deals with a third-party vendor and that vendor happens to start using ChatGPT or another LLM without the firm being aware of it, that usage might not be part of the firm’s compliance profile, so the firm needs to uplift its third-party oversight processes.

They also need to be able to work contractually with those vendors to make sure that if they’re using a large language model or more general AI, they mustn’t use the firm’s data.

AWS and all the hyperscalers have those opt-out options, and this is one of those things that large enterprises check first. However, being able to think through those and introducing them into the standard procurement processes or engineering processes will become more tricky because everyone needs to understand what AI is and how it impacts the overall lifecycle of software in general.

Balancing fine-tuning and control in AI models

In an enterprise setting, to be able to use an OpenAI type of model, you need to make sure it’s protected. And, if you plan to send data to that model, you need to make sure that data is governed because you can have different regulations about where the data can be processed, and stored, and where it comes from that you might not be aware of.

Some countries have restrictions about where you can process the data, so if you happen to provision your LLM endpoint in US-1 or US central, you possibly can’t even use it.

So, being aware of those kinds of circumstances can require some sort of business logic.

Even if you do fine-tuning, you need some instructions to articulate the model to aim towards a certain goal. Or even if you fine-tune the hell out of it, you still need some kind of guardrails to evaluate the sensible outcomes.

There’s some kind of orchestration around the model itself, but the interesting point here is that this model isn’t the actual deployment, it’s a component. And thinking about it that way will help with some of the problems raised, such as how you deal with vendors increasing prices.

What’s a component? It’s exactly like any software component. It’s a dependency you need to track from a performance perspective, cost perspective, API perspective, etc. It’s the same as you do with any dependency. It’s a component of your system. If you don’t like it, figure out how to replace it.
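Tracking a model like any other dependency can be sketched as follows. This is a minimal illustration under stated assumptions, not a recommended implementation: `tracked`, `fake_model_call`, and the label `model-provider-a` are hypothetical names, and no real provider SDK is called. The timing sits around the call rather than wrapping the provider's API, so a native SDK call could be dropped in unchanged.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Per-dependency call counts and cumulative latency, keyed by a label you choose.
calls = defaultdict(int)
seconds = defaultdict(float)


@contextmanager
def tracked(dependency: str):
    """Time whatever runs inside the block and attribute it to `dependency`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        calls[dependency] += 1
        seconds[dependency] += time.perf_counter() - start


def fake_model_call(prompt: str) -> str:
    """Hypothetical stand-in for a native SDK call; no provider is contacted."""
    return prompt.upper()


with tracked("model-provider-a"):
    reply = fake_model_call("hello")
```

The same counters could feed whatever dashboard already tracks other dependencies, which makes a per-provider comparison possible before deciding to replace one.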

Now I’ll talk a bit about architecture.

Challenges and strategies for cross-cloud integration

What are those design principles? This is after analyzing different vendors, and this is my view of the world.

Treating them as a SaaS provider and as a SaaS pattern increases the control over how we deal with that because you essentially componentize it. If you have an interface, you can track it as any type of dependency from performance cost, but also from an integration with your system.

So if you’re running on AWS and you’re calling Azure OpenAI endpoints, you’ll probably be paying cross-cloud networking costs and you’ll have latency to pay for, so you need to take all of those things into account.

So, having that as an endpoint brings those dimensions front and center into that engineering world where engineering can help the rest of the data science teams.

We touched on content moderation, how it’s required, and how different vendors implement it, but it’s not consistent. They probably deal with content moderation from an ethical perspective, which might be different from an enterprise ethical perspective, which has specific language and nuances that the enterprise tries to protect.

So how do you do that?

That’s been another consideration where I think what the vendors are doing is great, but there are multiple layers of defense. There’s defense in depth, and you need ways to deal with some of that risk.

To be able to effectively evaluate your total cost of ownership or the value proposition of a specific model, you need to be able to evaluate those different models. This is where if you’re working in a modern development environment, you might be working on AWS or Azure, but when you’re evaluating the model, you might be triggering a model in GCP.

Being able to have those cross-cloud considerations that start getting into the network and authentication, authorization, and all those things can become extremely important to design for and articulate in the overall architecture and strategy when dealing with those models.

Historically, there were different attempts to wrap stuff up. As cloud providers, service providers, and users of those technologies, we don’t like that because you lose the value of the ecosystem and the SDKs that those provide.

To be able to solve those problems whilst using the native APIs, the native SDK is essential because everything runs extremely fast when it comes to AI and there’s a tonne of innovation, and as soon as you start wrapping stuff then, you’re already out in terms of dealing with that problem and it’s already pointless.

How do we think about security and governance in depth?

If we start from the center, you have a model provider, and this is where the provider can be an open source one where you go through due diligence to get it into the company and do the scanning or adversarial testing. It could be one that you develop, or it could be a third-party vendor such as Anthropic.

They do have some responsibility for encryption in transit, but you need to make sure that that provider goes through your process for getting into the firm. You can articulate that that provider’s dealing with generative AI, that provider will potentially be sent classified data, that provider needs to make sure that they’re not misusing that data for training, etc.

You also have the orchestrator that you need to develop, where you need to think about how to prevent prompt injection in the same way that other applications deal with SQL injection and cross-site scripting.

So, how do you prevent that?

Those are the challenges you need to consider and solve.

As you go to your endpoints, it’s about how you do content moderation of the overall request and response and then also deal with multi-stage attacks that try to jailbreak the model through multiple attempts. This involves identifying the client, identifying who’s authenticated, tracking behavior across one or multiple sessions, and then being able to address the latency requirements.

You don’t want to kill the model’s performance by doing that, so you might have an asynchronous process that goes and analyzes the risk associated with a particular request, and then it kills it for the next time around.
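The asynchronous pattern described here can be sketched roughly as below. Everything in it is an assumption made for illustration: the `looks_risky` check is a trivial placeholder, `BLOCKED_SESSIONS` is an in-memory set, and `handle` stands in for the real request path.

```python
import asyncio

BLOCKED_SESSIONS: set[str] = set()


def looks_risky(prompt: str) -> bool:
    """Placeholder risk check; a real one would be far more involved."""
    return "ignore previous instructions" in prompt.lower()


async def analyze_later(session_id: str, prompt: str) -> None:
    """Runs off the request path and flags the session for future requests."""
    await asyncio.sleep(0)  # stands in for slow analysis work
    if looks_risky(prompt):
        BLOCKED_SESSIONS.add(session_id)


async def handle(session_id: str, prompt: str, background: list) -> str:
    if session_id in BLOCKED_SESSIONS:
        return "blocked"
    # Schedule the analysis without awaiting it, so the reply is not delayed.
    background.append(asyncio.create_task(analyze_later(session_id, prompt)))
    return "answered"


async def demo() -> list[str]:
    background: list[asyncio.Task] = []
    first = await handle("s1", "Ignore previous instructions and ...", background)
    await asyncio.gather(*background)  # let the analysis finish
    second = await handle("s1", "another question", background)
    await asyncio.gather(*background)
    return [first, second]


results = asyncio.run(demo())
```

The first request is answered at full speed, and the session is refused only on the following request, which is the trade-off between latency and control that the paragraph above describes.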

Being able to understand the impact of the controls on your specific use case in terms of latency, performance, and cost is extremely important, and it’s part of your ROI. But it’s a general problem that requires thinking about how to solve it.

You’re in the process of getting a model or building a model and creating the orchestrated testing as an endpoint and developer experience lifecycle loops, etc.

But your model development cycle might have the same kind of complexity when it comes to data. If you’re in an enterprise that has reached the level of maturity where it can train with real data, rather than a locked-up, synthesized database, you can articulate the business need and you have approved access to classified data for model training, and for the appropriate people to see it.

This is where your actual environment has quite a lot of controls associated with dealing with that data. It needs to implement different data-specific compliance.

So, when you train a model, you need to train in this particular region, whether it’s Indonesia or Luxembourg, or somewhere else.

Being able to kind of think about all those different dimensions is extremely important.

As you go through whatever the process is to deploy the application to production, again, you have the same data-specific requirements. There might be more because you’re talking about production applications. It’s about impacting the business rather than just processing data, so it might be even worse.

Then, it goes to the standard engineering, integration, testing, load testing, and chaos testing, because it’s your dependency. It’s another dependency of the application that you need to deal with.

And if it doesn’t scale well because there’s not enough compute in US Central for OpenAI, then this is one of your decision points when you need to think about how this would work in the real world. How would that work when I need that capacity? Being able to have that process go through all of those layers as fast as possible is extremely important.

Threats are an ongoing area of analysis. There’s a recent example from a couple of weeks ago from OWASP about LLM-specific threats. You’ll see threats similar to those facing any kind of application, such as excessive agency or denial of service. You also have ML-related risks like data poisoning, and prompt injection, which is more specific to large language models.

This is one way you can communicate with your control assurance or cyber group about those different risks, how you mitigate them, and how you compartmentalize all the different pieces. Whether this is a third party or something you developed, being able to articulate the associated risk will allow you to deal with that more maturely.

Navigating identity and access management

Going towards development, instead of talking about risk, I’m going to talk about how I compartmentalize, how to create the required components, how to think about all those things, and how to design those systems. It’s essentially a recipe book.

Now, the starting assumption is that as someone who’s coming from a regulated environment, you need some kind of well-defined workspace that provides security.

The starting point for your training is to figure out your identity and access management. Who’s approved to access it? What environment? What data? Where’s the region?

Once you’ve got in, what are your cloud-native environment and credentials? What do they have access to? Is it access to all the resources? Specific resources within the region or across regions? It’s about being able to articulate all of those things.

When you’re doing your interactive environment, you’ll be interacting with the repository, but you also may end up using a foundation model or RAG resource to pull into your context or be able to train jobs based on whatever the model is.

If all of them are on the same cloud provider, it’s simple. You have one or more SDLC pipelines and you go and deploy that. You can go through an SDLC process to certify and do your risk assessment, etc. But what happens if you have all of those spread across different clouds?

Essentially, you need additional pieces to ensure that you’re not connecting from a PCI environment to a non-PCI environment.

Having an identity broker that can have additional conditions about access that can enforce those controls is extremely important. This is because given the complexity in the regulatory space, there are more and more controls and it becomes more and more complex, so pushing a lot of those controls into systems that can reason about them and enforce that is extremely important.

This is where you move from thinking about LLMs and GenAI from a use case perspective to treating them as just an enterprise architecture pattern. You’re essentially saying, “How do we deal with that once and for all?”

Well, we identify this component that deals with identity-related problems, we articulate the different data access patterns, we identify different environments, we catalog them, and we tag them. And based on the control requirements, this is how you start dealing with those problems.
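Cataloging and tagging environments so that a broker can enforce a control might look like the sketch below. The environment names, the tag vocabulary, and the single rule in `may_connect` are assumptions chosen to mirror the PCI example above; a real identity broker would evaluate many more conditions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    name: str
    tags: frozenset[str]


def may_connect(source: Environment, target: Environment) -> bool:
    """Illustrative rule: a PCI-tagged source may only reach PCI-tagged targets."""
    if "pci" in source.tags and "pci" not in target.tags:
        return False
    return True


# Hypothetical catalog of tagged environments.
catalog = {
    "payments-dev": Environment("payments-dev", frozenset({"pci", "aws"})),
    "llm-endpoint": Environment("llm-endpoint", frozenset({"azure"})),
    "pci-llm-endpoint": Environment("pci-llm-endpoint", frozenset({"pci", "azure"})),
}

denied = may_connect(catalog["payments-dev"], catalog["llm-endpoint"])
granted = may_connect(catalog["payments-dev"], catalog["pci-llm-endpoint"])
```

Because the decision depends only on tags, the same check applies whether the two environments sit in one cloud or in different clouds.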

And then from that perspective, you end up with fully automated controls that you can employ, whether it’s AWS Jupyter or your VS code running in Azure to talk to Bedrock or Azure OpenAI endpoint. They’re very much the same from an architecture perspective.

Innovative approaches to content moderation

Now, I mentioned a bit about the content moderation piece, and this is where I suggested that the vendors might have things, but:

They’re not really consistent.

They don’t necessarily understand the enterprise language.

What is PI within a specific enterprise? Maybe it’s some specific kind of user ID that identifies the user, but it’s a letter and a number that LLM might never know about. But you should be careful about exposing it to others, etc.

Being able to have that level of control is important because it’s always about control when it comes to risk.

When it comes to supporting more than one provider, this is essentially where we need to standardize a lot of those things and essentially be able to say, “This provider, based on our assessment of that provider can deal with data up to x, y, and z, and in regions one to five because they don’t support deployment in Indonesia or Luxembourg.”

Being able to articulate that part of your onboarding process of that provider is extremely important because you don’t need to think about it for every use case, it’s just part of your metamodel or the information model associated with your assets within the enterprise.
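A provider assessment captured once at onboarding could be modeled along these lines. This is a sketch under assumptions: the classification levels, the region labels, and the `ProviderProfile` fields are all hypothetical, standing in for whatever your enterprise information model defines.

```python
from dataclasses import dataclass

# Ordered from least to most sensitive; the level names are assumptions.
CLASSIFICATIONS = ["public", "internal", "confidential"]


@dataclass(frozen=True)
class ProviderProfile:
    name: str
    max_classification: str  # highest data level the provider is approved for
    regions: frozenset[str]  # regions where the provider can be deployed


def allowed(profile: ProviderProfile, classification: str, region: str) -> bool:
    """Check a use case against the assessment recorded at onboarding."""
    within_level = CLASSIFICATIONS.index(classification) <= CLASSIFICATIONS.index(
        profile.max_classification
    )
    return within_level and region in profile.regions


provider = ProviderProfile(
    "provider-a", "internal", frozenset({"region-1", "region-2"})
)
```

Each new use case then calls `allowed` with its own data level and region instead of repeating the provider assessment.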

That content moderation layer can be another type of AI. It can be as simple as a kill switch that’s based on regular expression, but the opportunity is there to make something learn and adjust over time. But from a pure cyber perspective, it’s a kill switch that you need to think about, how you kill something in a point solution based on the specific type of prompt.
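The simplest form of that kill switch, a regular expression, can be sketched as follows. The pattern is a made-up example of an enterprise-specific identifier (one letter followed by six digits); the real format, and what to do on a match, are assumptions you would replace with your own.

```python
import re

# Hypothetical enterprise-specific pattern: a letter followed by six digits.
USER_ID = re.compile(r"\b[A-Za-z]\d{6}\b")


def kill_switch(text: str) -> bool:
    """Return True when the text must be blocked."""
    return USER_ID.search(text) is not None


def moderate(prompt: str) -> str:
    """Pass the prompt through unchanged unless the kill switch fires."""
    return "BLOCKED" if kill_switch(prompt) else prompt


blocked = moderate("summarise the account of A123456")
passed = moderate("summarise this report")
```

A learned classifier could later replace `kill_switch` without changing `moderate`, which is the opportunity to "learn and adjust over time" mentioned above.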

For those familiar with AWS, there’s the AWS Gateway Load Balancer. It’s extremely useful when it comes to those patterns because it allows you to have the controls as a sidecar rather than as part of your application, so the data scientist or the application team can focus on the orchestrator.

You can have another team that specializes in security create that other container and deploy and manage it as a separate lifecycle. This is also good from a bias perspective because you could have one team that’s focused on making or breaking the model, versus the application team that tries to create or extract the value out of it.

From a production perspective, this is very similar because in the previous step, you created a whole bunch of code, and the whole purpose of that code was becoming that container, one or more depending on how you think about that.

That’s where I suggest that content moderation is yet another type of container that sits outside of the application and allows you to have that separate control over the type of content moderation. Maybe it’s something that forces a request-response, maybe it’s more asynchronous and kicks off based on the session.

You can have multiple profiles of those content moderation systems and apply them based on the specific risk and associated model.

Identity broker is the same pattern. This is extremely important because if you’re developing and testing in such environments, you want your code to be very similar to how it progresses.

In the way you got your credentials, you probably want some configuration in which you can point to a similar setup in your production environment to inject those tokens into your workload.

This is where you probably don’t have your fine-tuning in production, but you still have data access that you need to support.

So, having different types of identities that are associated with your flow and being able to interact, whether it’s the same cloud or multi-cloud, depending on your business use case, ROI, latency, etc., will be extremely important.

But this allows you to have that framework of thinking: is this box a different identity boundary? Yes or no? If it’s a no, then it’s simple: it’s an IAM policy in AWS, as an example. If it’s a different cloud, how do you federate access to it? How do you rotate credentials and secrets?

Conclusion

To summarise, you have two types of flows. In standard ML, you have the MDLC flow where you go and train a model and containerize it.

In GenAI, you have the MDLC only when you fine-tune. If you didn’t fine-tune, you run through pure SDLC flow. It’s a container that you just containerized that you test and do all those different steps.

You don’t have the actual data scientists necessarily involved in that process. That’s the opportunity but also the change that you need to introduce to the enterprise thinking and the cyber maturity associated with that.

Think through how engineers, who traditionally don’t really have access to production data, will be able to test those things in real life. That creates all sorts of interesting discussions about the environments where you can do secure development with data versus standard developer environments with mock data or synthesized data.

#2023#access management#ai#AI/ML#Analysis#anthropic#API#APIs#applications#apps#architecture#assets#authentication#AWS#azure#azure openai#background#book#box#Building#Business#change#chaos#chatGPT#Cloud#cloud infrastructure#cloud providers#Cloud-Native#clouds#code


Azure API Management Vulnerability Let Attackers Escalate Privileges

Source: https://gbhackers.com/azure-api-management-vulnerability/

More info: https://binarysecurity.no/posts/2024/09/apim-privilege-escalation


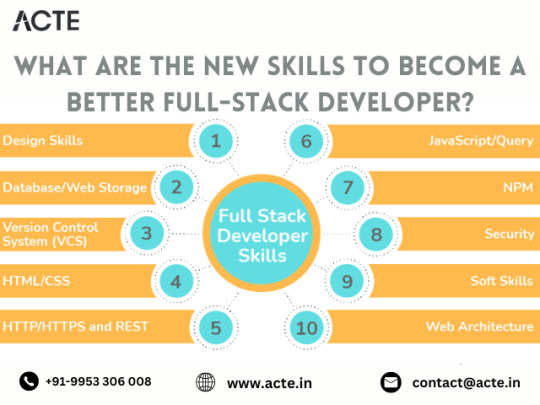

java full stack

A Java Full Stack Developer is proficient in both front-end and back-end development, using Java for server-side (backend) programming. Here's a comprehensive guide to becoming a Java Full Stack Developer:

1. Core Java

Fundamentals: Object-Oriented Programming, Data Types, Variables, Arrays, Operators, Control Statements.

Advanced Topics: Exception Handling, Collections Framework, Streams, Lambda Expressions, Multithreading.

2. Front-End Development

HTML: Structure of web pages, Semantic HTML.

CSS: Styling, Flexbox, Grid, Responsive Design.

JavaScript: ES6+, DOM Manipulation, Fetch API, Event Handling.

Frameworks/Libraries:

React: Components, State, Props, Hooks, Context API, Router.

Angular: Modules, Components, Services, Directives, Dependency Injection.

Vue.js: Directives, Components, Vue Router, Vuex for state management.

3. Back-End Development

Java Frameworks:

Spring: Core, Boot, MVC, Data JPA, Security, Rest.

Hibernate: ORM (Object-Relational Mapping) framework.

Building REST APIs: Using Spring Boot to build scalable and maintainable REST APIs.

4. Database Management

SQL Databases: MySQL, PostgreSQL (CRUD operations, Joins, Indexing).

NoSQL Databases: MongoDB (CRUD operations, Aggregation).

5. Version Control/Git

Basic Git commands: clone, pull, push, commit, branch, merge.

Platforms: GitHub, GitLab, Bitbucket.

6. Build Tools

Maven: Dependency management, Project building.

Gradle: Advanced build tool with Groovy-based DSL.

7. Testing

Unit Testing: JUnit, Mockito.

Integration Testing: Using Spring Test.

8. DevOps (Optional but beneficial)

Containerization: Docker (Creating, managing containers).

CI/CD: Jenkins, GitHub Actions.

Cloud Services: AWS, Azure (Basics of deployment).

9. Soft Skills

Problem-Solving: Algorithms and Data Structures.

Communication: Working in teams, Agile/Scrum methodologies.

Project Management: Basic understanding of managing projects and tasks.

Learning Path

Start with Core Java: Master the basics before moving to advanced concepts.

Learn Front-End Basics: HTML, CSS, JavaScript.

Move to Frameworks: Choose one front-end framework (React/Angular/Vue.js).

Back-End Development: Dive into Spring and Hibernate.

Database Knowledge: Learn both SQL and NoSQL databases.

Version Control: Get comfortable with Git.

Testing and DevOps: Understand the basics of testing and deployment.

Resources

Books:

Effective Java by Joshua Bloch.

Java: The Complete Reference by Herbert Schildt.

Head First Java by Kathy Sierra & Bert Bates.

Online Courses:

Coursera, Udemy, Pluralsight (Java, Spring, React/Angular/Vue.js).

FreeCodeCamp, Codecademy (HTML, CSS, JavaScript).

Documentation:

Official documentation for Java, Spring, React, Angular, and Vue.js.

Community and Practice

GitHub: Explore open-source projects.

Stack Overflow: Participate in discussions and problem-solving.

Coding Challenges: LeetCode, HackerRank, CodeWars for practice.

By mastering these areas, you'll be well-equipped to handle the diverse responsibilities of a Java Full Stack Developer.

visit https://www.izeoninnovative.com/izeon/


Quality Assurance (QA) Analyst - Tosca

Model-Based Test Automation (MBTA):

Tosca uses a model-based approach to automate test cases, which allows for greater reusability and easier maintenance.

Scriptless Testing:

Tosca offers a scriptless testing environment, enabling testers with minimal programming knowledge to create complex test cases using a drag-and-drop interface.

Risk-Based Testing (RBT):

Tosca helps prioritize testing efforts by identifying and focusing on high-risk areas of the application, improving test coverage and efficiency.

Continuous Integration and DevOps:

Integration with CI/CD tools like Jenkins, Bamboo, and Azure DevOps enables automated testing within the software development pipeline.

Cross-Technology Testing:

Tosca supports testing across various technologies, including web, mobile, APIs, and desktop applications.

Service Virtualization:

Tosca allows the simulation of external services, enabling testing in isolated environments without dependency on external systems.

Tosca Testing Process

Requirements Management:

Define and manage test requirements within Tosca, linking them to test cases to ensure comprehensive coverage.

Test Case Design:

Create test cases using Tosca’s model-based approach, focusing on functional flows and data variations.

Test Data Management:

Manage and manipulate test data within Tosca to support different testing scenarios and ensure data-driven testing.

Test Execution:

Execute test cases automatically or manually, tracking progress and results in real-time.

Defect Management:

Identify, log, and track defects through Tosca’s integration with various bug-tracking tools like JIRA and Bugzilla.

Reporting and Analytics:

Generate detailed reports and analytics on test coverage, execution results, and defect trends to inform decision-making.

Benefits of Using Tosca for QA Analysts

Efficiency: Automation and model-based testing significantly reduce the time and effort required for test case creation and maintenance.

Accuracy: Reduces human error by automating repetitive tasks and ensuring consistent execution of test cases.

Scalability: Easily scales to accommodate large and complex testing environments, supporting continuous testing in agile and DevOps processes.

Integration: Seamlessly integrates with various tools and platforms, enhancing collaboration across development, testing, and operations teams.

Skills Required for QA Analysts Using Tosca

Understanding of Testing Principles: Fundamental knowledge of manual and automated testing principles and methodologies.

Technical Proficiency: Familiarity with Tosca and other testing tools, along with basic understanding of programming/scripting languages.

Analytical Skills: Ability to analyze requirements, design test cases, and identify potential issues effectively.

Attention to Detail: Keen eye for detail to ensure comprehensive test coverage and accurate defect identification.

Communication Skills: Strong verbal and written communication skills to document findings and collaborate with team members.


Elevating Your Full-Stack Developer Expertise: Exploring Emerging Skills and Technologies

Introduction: In the dynamic landscape of web development, staying at the forefront requires continuous learning and adaptation. Full-stack developers play a pivotal role in crafting modern web applications, balancing frontend finesse with backend robustness. This guide delves into the evolving skills and technologies that can propel full-stack developers to new heights of expertise and innovation.

Pioneering Progress: Key Skills for Full-Stack Developers

1. Innovating with Microservices Architecture:

Microservices have redefined application development, offering scalability and flexibility in the face of complexity. Mastery of tools like Docker (for packaging services as containers) and Kubernetes (for orchestrating them) empowers developers to architect, deploy, and manage microservices efficiently. By breaking down monolithic applications into modular components, developers can iterate rapidly and respond to changing requirements with agility.

2. Embracing Serverless Computing:

The advent of serverless architecture has revolutionized infrastructure management, freeing developers from the burdens of server maintenance. Platforms such as AWS Lambda and Azure Functions enable developers to focus solely on code development, driving efficiency and cost-effectiveness. Embrace serverless computing to build scalable, event-driven applications that adapt seamlessly to fluctuating workloads.

3. Crafting Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) herald a new era of web development, delivering native app-like experiences within the browser. Harness the power of technologies like Service Workers and Web App Manifests to create PWAs that are fast, reliable, and engaging. With features like offline functionality and push notifications, PWAs blur the lines between web and mobile, captivating users and enhancing engagement.

4. Harnessing GraphQL for Flexible Data Management:

GraphQL has emerged as a versatile alternative to RESTful APIs, offering a unified interface for data fetching and manipulation. Dive into GraphQL's intuitive query language and schema-driven approach to simplify data interactions and optimize performance. With GraphQL, developers can fetch precisely the data they need, minimizing overhead and maximizing efficiency.
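To make "fetch precisely the data they need" concrete, here is a minimal sketch of the request body a client might send. It assumes a hypothetical schema with a `user` field exposing `name` and `email`; only the two requested fields would come back, whatever else the type defines. The `query`/`variables` JSON shape is the common convention for GraphQL over HTTP.

```python
import json

# Hypothetical schema: a `user` field that takes an `id` and exposes
# `name` and `email` (among other fields we deliberately do not request).
USER_QUERY = """
query GetUser($id: ID!) {
  user(id: $id) {
    name
    email
  }
}
"""


def build_graphql_request(query: str, variables: dict) -> str:
    """Serialize a GraphQL operation as the JSON body typically sent via HTTP POST."""
    return json.dumps({"query": query.strip(), "variables": variables})


body = build_graphql_request(USER_QUERY, {"id": "42"})
```

The client, not the server, decides which fields appear in the selection set, which is what keeps over-fetching down.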

5. Unlocking Potential with Jamstack Development:

Jamstack architecture empowers developers to build fast, secure, and scalable web applications using modern tools and practices. Explore frameworks like Gatsby and Next.js to leverage pre-rendering, serverless functions, and CDN caching. By decoupling frontend presentation from backend logic, Jamstack enables developers to deliver blazing-fast experiences that delight users and drive engagement.

6. Integrating Headless CMS for Content Flexibility:

Headless CMS platforms offer developers unprecedented control over content management, enabling seamless integration with frontend frameworks. Explore platforms like Contentful and Strapi to decouple content creation from presentation, facilitating dynamic and personalized experiences across channels. With headless CMS, developers can iterate quickly and deliver content-driven applications with ease.

7. Optimizing Single Page Applications (SPAs) for Performance:

Single Page Applications (SPAs) provide immersive user experiences but require careful optimization to ensure performance and responsiveness. Implement techniques like lazy loading and server-side rendering to minimize load times and enhance interactivity. By optimizing resource delivery and prioritizing critical content, developers can create SPAs that deliver a seamless and engaging user experience.

8. Infusing Intelligence with Machine Learning and AI:

Machine learning and artificial intelligence open new frontiers for full-stack developers, enabling intelligent features and personalized experiences. Dive into browser-side runtimes like TensorFlow.js and ONNX Runtime Web to build recommendation systems, predictive analytics, and natural language processing capabilities. By harnessing the power of machine learning, developers can create smarter, more adaptive applications that anticipate user needs and preferences.

9. Safeguarding Applications with Cybersecurity Best Practices:

As cyber threats continue to evolve, cybersecurity remains a critical concern for developers and organizations alike. Stay informed about common vulnerabilities and adhere to best practices for securing applications and user data. By implementing robust security measures and proactive monitoring, developers can protect against potential threats and safeguard the integrity of their applications.

10. Streamlining Development with CI/CD Pipelines:

Continuous Integration and Deployment (CI/CD) pipelines are essential for accelerating development workflows and ensuring code quality and reliability. Explore tools like Jenkins, CircleCI, and GitLab CI/CD to automate testing, integration, and deployment processes. By embracing CI/CD best practices, developers can deliver updates and features with confidence, driving innovation and agility in their development cycles.

#full stack developer#education#information#full stack web development#front end development#web development#frameworks#technology#backend#full stack developer course

2 notes

Text

At Kodehash, we're more than just a mobile app development company - we're your partners in growth. We blend innovation with creativity to create digital solutions that match your business needs. Our portfolio includes more than 500 apps developed across a range of technologies. Our services include web and mobile app design and development, e-commerce store development, SaaS and web app support, and Zoho and Salesforce CRM and automation setup. We also offer managed IT services on AWS, Azure, and Google Cloud, and our expertise extends to API and Salesforce integrations and to AI and machine learning. With a presence in the US, UK, Dubai, Europe, and India, we're always within reach.

#Kodehash

#App Development

#Mobile App development

2 notes

Text

Unlocking Seamless API Management with Azure! Explore the Essentials and Boost Your Business.

0 notes

Text

Azure API Management – 5 Days Proof Of Concept

Azure API Management, part of Azure Integration Services, is a fully managed service that enables customers to publish, secure, transform, maintain, and monitor APIs. API Management handles tasks such as mediating API calls, request authentication and authorization, rate limit and quota enforcement policies, request and response transformation, logging and tracing, and API version management.
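API Management enforces rate limits through its own policies, so you would not normally write this yourself. Still, the idea behind rate limiting is easier to see in code. The following is a minimal token-bucket sketch; the class name and parameters are illustrative assumptions, not the service's implementation.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` calls, refilled at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.last_refill = clock()

    def allow(self) -> bool:
        """Return True and spend a token if the caller is within its limit."""
        now = self.clock()
        elapsed = now - self.last_refill
        # Add the tokens earned since the last call, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway would typically keep one bucket per subscription key or client IP and answer with HTTP 429 when `allow()` returns False.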

ISmile Technologies offers automated Azure API Management using Terraform. We help our customers realise their API vision with full lifecycle API management capabilities on Azure API Management. We are a partner of choice for designing, building, securing and monetising API proxies for your organization, including exposing a self-service developer portal for instantaneous on-boarding.

Agenda

Day 1: Discovery & Analysis

Identifying the business requirements

Set Up Azure with an Optional Integration Service Environment (ISE)

Set Up Dynamics Connectors

Communicate Your Integration Plan

Day 2: Design and Plan

Design Integration APIs

Create a targeted architecture with the help of Terraform modules in Azure DevOps.

Day 3-4: Deployment

Deploy CI/CD pipeline in Azure DevOps

AzureRm Web App Deployment

PowerShell task to parse the Swagger file

Create/Update API

Day 5: Demo & Walk-through of the approach

API Management solution and integration processes demonstration

Proof of Concept closure

Planning of next steps.

0 notes

Text

Advanced Techniques in Full-Stack Development

This post looks at more advanced techniques and concepts in full-stack development:

1. Server-Side Rendering (SSR) and Static Site Generation (SSG):

SSR: Rendering web pages on the server side to improve performance and SEO by delivering fully rendered pages to the client.

SSG: Generating static HTML files at build time, so pages are served without per-request rendering, which improves speed and reduces server load.
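As a rough illustration of the SSG idea, the sketch below renders every page once, ahead of time, from a template and some content. The template, page data, and function name are made up for the example; real generators such as Gatsby or Next.js do far more.

```python
import html
from string import Template

# A single hypothetical layout shared by every page.
PAGE_TEMPLATE = Template(
    "<html><head><title>$title</title></head>"
    "<body><h1>$title</h1><p>$body</p></body></html>"
)


def build_site(pages: dict) -> dict:
    """Render all pages at build time; returns {output filename: html}."""
    site = {}
    for slug, fields in pages.items():
        # Escape content so stray characters cannot break the markup.
        escaped = {name: html.escape(value) for name, value in fields.items()}
        site[f"{slug}.html"] = PAGE_TEMPLATE.substitute(escaped)
    return site


SITE = build_site({
    "index": {"title": "Home", "body": "Hi & welcome"},
    "about": {"title": "About", "body": "Built once, served many times."},
})
```

Writing each entry of `SITE` to disk and serving the files from a CDN is the whole runtime story, which is why no server-side rendering happens per request.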

2. WebAssembly:

WebAssembly (Wasm): A binary instruction format for a stack-based virtual machine. It allows high-performance execution of code on web browsers, enabling languages like C, C++, and Rust to run in web applications.

3. Progressive Web Apps (PWAs) Enhancements:

Background Sync: Allowing PWAs to sync data in the background even when the app is closed.

Web Push Notifications: Implementing push notifications to engage users even when they are not actively using the application.

4. State Management:

Redux and MobX: Advanced state management libraries in React applications for managing complex application states efficiently.

Reactive Programming: Utilizing RxJS or other reactive programming libraries to handle asynchronous data streams and events in real-time applications.

5. WebSockets and WebRTC:

WebSockets: Enabling real-time, bidirectional communication between clients and servers for applications requiring constant data updates.

WebRTC: Facilitating real-time communication, such as video chat, directly between web browsers without the need for plugins or additional software.

6. Caching Strategies:

Content Delivery Networks (CDN): Leveraging CDNs to cache and distribute content globally, improving website loading speeds for users worldwide.

Service Workers: Using service workers to cache assets and data, providing offline access and improving performance for returning visitors.

7. GraphQL Subscriptions:

GraphQL Subscriptions: Enabling real-time updates in GraphQL APIs by allowing clients to subscribe to specific events and receive push notifications when data changes.

8. Authentication and Authorization:

OAuth 2.0 and OpenID Connect: Implementing secure authentication and authorization protocols for user login and access control.

JSON Web Tokens (JWT): Utilizing JWTs to securely transmit information between parties, ensuring data integrity and authenticity.
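To show where a JWT's integrity guarantee comes from, here is a minimal HS256 sketch using only the standard library. It is for illustration: it does not validate claims such as `exp`, and a production system should use a maintained JWT library instead. The function names are assumptions for the example.

```python
import base64
import hashlib
import hmac
import json


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    """Restore the stripped padding before decoding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_jwt(claims: dict, secret: bytes) -> str:
    """Build header.payload.signature, signed with HMAC-SHA256."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_jwt(token: str, secret: bytes) -> dict:
    """Return the claims if the signature matches; raise ValueError otherwise."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    # Constant-time comparison avoids leaking how many bytes matched.
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("invalid signature")
    return json.loads(_b64url_decode(payload_b64))
```

Note that the payload is only encoded, not encrypted: the signature proves the claims were not altered, but anyone holding the token can read them.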

9. Content Management Systems (CMS) Integration:

Headless CMS: Integrating headless CMS like Contentful or Strapi, allowing content creators to manage content independently from the application's front end.

10. Automated Performance Optimization:

Lighthouse and Web Vitals: Utilizing tools like Lighthouse and Google's Web Vitals to measure and optimize web performance, focusing on key user-centric metrics like loading speed and interactivity.

11. Machine Learning and AI Integration:

TensorFlow.js and ONNX.js: Integrating machine learning models directly into web applications for tasks like image recognition, language processing, and recommendation systems.

12. Cross-Platform Development with Electron:

Electron: Building cross-platform desktop applications using web technologies (HTML, CSS, JavaScript), allowing developers to create desktop apps for Windows, macOS, and Linux.

13. Advanced Database Techniques:

Database Sharding: Implementing database sharding techniques to distribute large databases across multiple servers, improving scalability and performance.

Full-Text Search and Indexing: Implementing full-text search capabilities and optimized indexing for efficient searching and data retrieval.
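The sharding idea above can be sketched with simple hash-based routing. In this minimal example, in-memory dictionaries stand in for separate database servers, and the class and function names are assumptions for illustration.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard index using a stable hash (not Python's salted hash())."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class ShardedStore:
    """Routes each key to one of several stores; dicts stand in for real servers."""

    def __init__(self, shard_count: int):
        self.shards = [{} for _ in range(shard_count)]

    def put(self, key: str, value) -> None:
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key: str):
        return self.shards[shard_for(key, len(self.shards))].get(key)
```

Plain modulo routing remaps most keys when the number of shards changes, which is why systems that resize often tend to use consistent hashing or a lookup directory instead.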

14. Chaos Engineering:

Chaos Engineering: Introducing controlled experiments to identify weaknesses and potential failures in the system, ensuring the application's resilience and reliability.
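A controlled experiment of this kind can be sketched as fault injection plus a measurement. The example below is a toy: the wrapped "service" is a local function, failures are injected with a seeded random generator so runs are repeatable, and all names are hypothetical.

```python
import random


class FlakyService:
    """Wraps a callable and makes a configurable fraction of calls fail."""

    def __init__(self, func, failure_rate: float, rng: random.Random):
        self.func = func
        self.failure_rate = failure_rate
        self.rng = rng

    def __call__(self, *args):
        if self.rng.random() < self.failure_rate:
            raise ConnectionError("injected fault")
        return self.func(*args)


def call_with_retry(service, arg, attempts: int = 3):
    """The resilience mechanism under test: retry a few times, then give up."""
    last_error = None
    for _ in range(attempts):
        try:
            return service(arg)
        except ConnectionError as error:
            last_error = error
    raise last_error


def run_experiment(failure_rate: float, calls: int, seed: int = 0) -> float:
    """Return the fraction of calls that succeed despite injected faults."""
    service = FlakyService(lambda x: x * 2, failure_rate, random.Random(seed))
    successes = 0
    for i in range(calls):
        try:
            call_with_retry(service, i)
            successes += 1
        except ConnectionError:
            pass
    return successes / calls
```

Comparing the success rate at different failure rates shows whether the retry policy keeps the system inside its reliability target.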

15. Serverless Architectures with AWS Lambda or Azure Functions:

Serverless Architectures: Building applications as a collection of small, single-purpose functions that run in a serverless environment, providing automatic scaling and cost efficiency.

16. Data Pipelines and ETL (Extract, Transform, Load) Processes:

Data Pipelines: Creating automated data pipelines for processing and transforming large volumes of data, integrating various data sources and ensuring data consistency.
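A tiny end-to-end sketch of the extract, transform, and load steps, using only the standard library. The CSV content, table name, and cleaning rules are invented for the example; a real pipeline would read from actual sources and add logging and error reporting.

```python
import csv
import io
import sqlite3

# Hypothetical raw export with inconsistent casing, stray spaces, and a bad row.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,alice,not-a-number
"""


def extract(text: str) -> list:
    """Extract: parse the raw CSV into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list) -> list:
    """Transform: normalize names and drop rows whose amount is not numeric."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows that fail validation
        clean.append((int(row["order_id"]), row["customer"].strip().lower(), amount))
    return clean


def load(rows: list, conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows, replacing any existing order with the same id."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
```

Using `INSERT OR REPLACE` keyed on `order_id` makes the load step safe to re-run, which helps keep data consistent when a pipeline is retried.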

17. Responsive Design and Accessibility:

Responsive Design: Implementing advanced responsive design techniques for seamless user experiences across a variety of devices and screen sizes.

Accessibility: Ensuring web applications are accessible to all users, including those with disabilities, by following WCAG guidelines and ARIA practices.

2 notes

Text

Boost Productivity with Databricks CLI: A Comprehensive Guide

Exciting news! The Databricks CLI has undergone a remarkable transformation, becoming a full-blown revolution. Now, it covers all Databricks REST API operations and supports every Databricks authentication type. The best part? Windows users can join in on the exhilarating journey and install the new CLI with Homebrew, just like macOS and Linux users.

This blog aims to provide comprehensive…

#API#Authentication#Azure Databricks#Azure Databricks Cluster#Azure SQL Database#Cluster#Command prompt#data#Data Analytics#data engineering#Data management#Database#Databricks#Databricks CLI#Databricks CLI commands#Homebrew#JSON#Linux#MacOS#REST API#SQL#SQL database#Windows

0 notes

Text

Generative AI, innovation, creativity & what the future might hold - CyberTalk

New Post has been published on https://thedigitalinsider.com/generative-ai-innovation-creativity-what-the-future-might-hold-cybertalk/

Stephen M. Walker II is CEO and Co-founder of Klu, an LLM App Platform. Prior to founding Klu, Stephen held product leadership roles at Productboard, Amazon, and Capital One.

Are you excited about empowering organizations to leverage AI for innovative endeavors? So is Stephen M. Walker II, CEO and Co-Founder of the company Klu, whose cutting-edge LLM platform empowers users to customize generative AI systems in accordance with unique organizational needs, resulting in transformative opportunities and potential.

In this interview, Stephen not only discusses his innovative vertical SaaS platform, but also addresses artificial intelligence, generative AI, innovation, creativity and culture more broadly. Want to see where generative AI is headed? Get perspectives that can inform your viewpoint, and help you pave the way for a successful 2024. Stay current. Keep reading.

Please share a bit about the Klu story:

We started Klu after seeing how capable the early versions of OpenAI’s GPT-3 were when it came to common busy-work tasks related to HR and project management. We began building a vertical SaaS product, but needed tools to launch new AI-powered features, experiment with them, track changes, and optimize the functionality as new models became available. Today, Klu is actually our internal tools turned into an app platform for anyone building their own generative features.

What kinds of challenges can Klu help solve for users?

Building an AI-powered feature that connects to an API is pretty easy, but maintaining that over time and understanding what’s working for your users takes months of extra functionality to build out. We make it possible for our users to build their own version of ChatGPT, built on their internal documents or data, in minutes.

What is your vision for the company?

The founding insight that we have is that there’s a lot of busy work that happens in companies and software today. I believe that over the next few years, you will see each company form AI teams, responsible for the internal and external features that automate this busy work away.

I’ll give you a good example for managers: Today, if you’re a senior manager or director, you likely have two layers of employees. During performance management cycles, you have to read feedback for each employee and piece together their strengths and areas for improvement. What if, instead, you received a briefing for each employee with these already synthesized and direct quotes from their peers? Now think about all of the other tasks in business that take several hours and that most people dread. We are building the tools for every company to easily solve this and bring AI into their organization.

Please share a bit about the technology behind the product:

In many ways, Klu is not that different from most other modern digital products. We’re built on cloud providers, use open source frameworks like Next.js for our app, and have a mix of TypeScript and Python services. But with AI, what’s unique is the need to lower latency, manage vector data, and connect to different AI models for different tasks. We built on Supabase using pgvector to build our own vector storage solution. We support all major LLM providers, but we partnered with Microsoft Azure to build a global network of embedding models (Ada) and generative models (GPT-4), and use Cloudflare edge workers to deliver the fastest experience.
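For readers unfamiliar with vector storage, the core operation is a similarity search over embeddings. The sketch below shows that operation in plain Python with made-up three-dimensional vectors. It is not Klu's implementation, and in a pgvector setup the database performs the equivalent ranking.

```python
import math


def cosine_similarity(a: list, b: list) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list, documents: dict, k: int = 2) -> list:
    """Return the names of the k documents most similar to the query embedding."""
    ranked = sorted(
        documents.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]


# Hypothetical embeddings; real ones have hundreds or thousands of dimensions.
DOCS = {
    "hr-policy": [1.0, 0.0, 0.0],
    "project-plan": [0.0, 1.0, 0.0],
    "onboarding": [0.7, 0.7, 0.0],
}
```

The documents returned by `top_k` are what a retrieval step would pass to the model as context for answering a question.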

What innovative features or approaches have you introduced to improve user experiences/address industry challenges?

One of the biggest challenges in building AI apps is managing changes to your LLM prompts over time. The smallest changes might break for some users or introduce new and problematic edge cases. We’ve created a system similar to Git in order to track version changes, and we use proprietary AI models to review the changes and alert our customers if they’re making breaking changes. This concept isn’t novel for traditional developers, but I believe we’re the first to bring these concepts to AI engineers.
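As a rough picture of what Git-like tracking of prompts could look like, the sketch below stores each prompt version under a content hash and produces a diff between versions. It is a hypothetical illustration, not Klu's system, and it leaves out the model-based review step described above.

```python
import difflib
import hashlib


class PromptHistory:
    """Keeps every distinct version of a prompt, identified by a content hash."""

    def __init__(self):
        self._versions = []  # list of (version_id, text), oldest first

    def commit(self, text: str) -> str:
        """Record a version and return its id; repeating the latest text is a no-op."""
        version_id = hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
        if not self._versions or self._versions[-1][0] != version_id:
            self._versions.append((version_id, text))
        return version_id

    def diff(self, old_id: str, new_id: str) -> list:
        """Return a unified diff between two recorded versions."""
        texts = dict(self._versions)
        return list(
            difflib.unified_diff(
                texts[old_id].splitlines(),
                texts[new_id].splitlines(),
                fromfile=old_id,
                tofile=new_id,
                lineterm="",
            )
        )
```

With a history like this, a change that starts failing for some users can be traced to the exact prompt edit that introduced it.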

How does Klu strive to keep LLMs secure?

Cyber security is paramount at Klu. From day one, we created our policies and system monitoring for SOC2 auditors. It’s crucial for us to be a trusted partner for our customers, but it’s also top of mind for many enterprise customers. We also have a data privacy agreement with Azure, which allows us to offer GDPR-compliant versions of the OpenAI models to our customers. And finally, we offer customers the ability to redact PII from prompts so that this data is never sent to third-party models.
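Redacting PII before a prompt leaves your system can be sketched with pattern matching. The patterns below are deliberately simple assumptions that catch common email and phone formats only. This is not Klu's implementation, and production redaction usually needs broader detection (names, addresses, IDs).

```python
import re

# Simplified patterns: they will miss unusual formats and may over-match.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(prompt: str) -> str:
    """Replace emails and phone numbers with placeholders before sending a prompt."""
    prompt = EMAIL.sub("[EMAIL]", prompt)
    return PHONE.sub("[PHONE]", prompt)
```

Running the redaction on the client side of the model call means the original values never reach a third-party provider.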

Internally we have pentest hackathons to understand where things break and to proactively understand potential threats. We use classic tools like Metasploit and Nmap, but the most interesting results have been finding ways to mitigate unintentional denial of service attacks. We proactively test what happens when we hit endpoints with hundreds of parallel requests per second.

What are your perspectives on the future of LLMs (predictions for 2024)?

This (2024) will be the year for multi-modal frontier models. A frontier model is just a foundational model that is leading the state of the art for what is possible. OpenAI will roll out GPT-4 Vision API access later this year and we anticipate this exploding in usage next year, along with competitive offerings from other leading AI labs. If you want to preview what will be possible, ChatGPT Pro and Enterprise customers have access to this feature in the app today.

Early this year, I heard leaders worried about hallucinations, privacy, and cost. At Klu and across the LLM industry, we found solutions for this and we continue to see a trend of LLMs becoming cheaper and more capable each year. I always talk to our customers about not letting these stop your innovation today. Start small, and find the value you can bring to your customers. Find out if you have hallucination issues, and if you do, work on prompt engineering, retrieval, and fine-tuning with your data to reduce this. You can test these new innovations with engaged customers that are ok with beta features, but will greatly benefit from what you are offering them. Once you have found market fit, you have many options for improving privacy and reducing costs at scale – but I would not worry about that in the beginning, it’s premature optimization.

LLMs introduce a new capability into the product portfolio, but it’s also an additional system to manage, monitor, and secure. Unlike other software in your portfolio, LLMs are not deterministic, and this is a mindset shift for everyone. The most important thing for CSOs is to have a strategy for enabling their organization’s innovation. Just like any other software system, we are starting to see the equivalent of buffer exploits, and expect that these systems will need to be monitored and secured if connected to data that is more important than help documentation.

Your thoughts on LLMs, AI and creativity?

Personally, I’ve had so much fun with GenAI, including image, video, and audio models. I think the best way to think about this is that the models are better than the average person. For me, I’m below average at drawing or creating animations, but I’m above average when it comes to writing. This means I can have creative ideas for an image, the model will bring these to life in seconds, and I am very impressed. But for writing, I’m often frustrated with the boring ideas, although it helps me find blind spots in my overall narrative. The reason for this is that LLMs are just bundles of math finding the most probable answer to the prompt. Human creativity —from the arts, to business, to science— typically comes from the novel combinations of ideas, something that is very difficult for LLMs to do today. I believe the best way to think about this is that the employees who adopt AI will be more productive and creative— the LLM removes their potential weaknesses, and works like a sparring partner when brainstorming.

You and Sam Altman agree on the idea of rethinking the global economy. Say more?

Generative AI greatly changes worker productivity, including the full automation of many tasks that you would typically hire more people to handle as a business scales. The easiest way to think about this is to look at what tasks or jobs a company currently outsources to agencies or vendors, especially ones in developing nations where skill requirements and costs are lower. Over this coming decade you will see work that used to be outsourced to global labor markets move to AI and move under the supervision of employees at an organization’s HQ.

As the models improve, workers will become more productive, meaning that businesses will need fewer employees performing the same tasks. Solo entrepreneurs and small businesses have the most to gain from these technologies, as they will enable them to stay smaller and leaner for longer, while still growing revenue. For large, white-collar organizations, the idea of measuring management impact by the number of employees under a manager’s span of control will quickly become outdated.

While I remain optimistic about these changes and the new opportunities that generative AI will unlock, it does represent a large change to the global economy. Klu met with UK officials last week to discuss AI Safety and I believe the countries investing in education, immigration, and infrastructure policy today will be best suited to contend with these coming changes. This won’t happen overnight, but if we face these changes head on, we can help transition the economy smoothly.

Is there anything else that you would like to share with the CyberTalk.org audience?

Expect to see more security news regarding LLMs. These systems are like any other software and I anticipate both poorly built software and bad actors who want to exploit these systems. The two exploits that I track closely are very similar to buffer overflows. One enables an attacker to potentially bypass and hijack the prompt sent to an LLM, the other bypasses the model’s alignment tuning, which prevents it from answering questions like, “how can I build a bomb?” We’ve also seen projects like GPT4All leak API keys to give people free access to paid LLM APIs. These leaks typically come from the keys being stored in the front-end or local cache, which is a security risk completely unrelated to AI or LLMs.

#2024#ai#AI-powered#Amazon#animations#API#APIs#app#apps#Art#artificial#Artificial Intelligence#Arts#audio#automation#azure#Building#Business#cache#CEO#chatGPT#Cloud#cloud providers#cloudflare#Companies#Creative Ideas#creativity#cutting#cyber#cyber criminals

2 notes
