#AWS DynamoDB

Text

AWS DynamoDB vs GCP BigTable| AntStack

Data is a precious resource in today’s fast-paced world, and it’s increasingly stored in the cloud for its benefits of accessibility, scalability, and, most importantly, security. As data volumes grow, individuals and businesses can easily expand their cloud storage without investing in new hardware or infrastructure. In the modern context, the answer to data storage often boils down to the cloud, but the choice between cloud services like AWS DynamoDB and GCP BigTable remains crucial.

0 notes

Text

Amazon DynamoDB: A Complete Guide To NoSQL Databases

Amazon DynamoDB is a fully managed, serverless NoSQL database that delivers single-digit millisecond performance at any scale.

What is Amazon DynamoDB?

DynamoDB is a serverless NoSQL database service for building modern applications at any scale. As a serverless database, it scales to zero, has no cold starts, and requires no version upgrades, maintenance windows, patching, or downtime for maintenance. You pay only for what you use. DynamoDB offers a broad set of security controls and compliance standards. For globally distributed applications, DynamoDB global tables provide a multi-Region, multi-active database with a 99.999% availability SLA and increased resilience. Point-in-time recovery, automated backups, and other features support DynamoDB’s reliability. With DynamoDB Streams, you can build serverless, event-driven applications.
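As a rough illustration of the event-driven pattern mentioned above, the sketch below shows a Lambda-style handler that walks the records of a DynamoDB Streams event. The fields it reads (`Records`, `eventName`, `dynamodb.Keys`) follow the stream record format; the function names and the summary it returns are hypothetical choices made for this example.

```python
def summarize_stream_event(event):
    """Count stream records by event type and collect the keys of inserted items."""
    counts = {"INSERT": 0, "MODIFY": 0, "REMOVE": 0}
    inserted_keys = []
    for record in event.get("Records", []):
        event_name = record.get("eventName")
        if event_name in counts:
            counts[event_name] += 1
        if event_name == "INSERT":
            # Keys arrive in DynamoDB's typed JSON, e.g. {"id": {"S": "123"}}.
            inserted_keys.append(record["dynamodb"]["Keys"])
    return {"counts": counts, "inserted_keys": inserted_keys}


def lambda_handler(event, context):
    # Hypothetical entry point: a real handler would act on each change
    # (send a notification, update a cache, and so on) instead of summarizing.
    return summarize_stream_event(event)
```

A real application would replace the summary with whatever side effect each change should trigger.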

Use cases

Create software programs

Build internet-scale applications that support caches and user-content metadata, which require high concurrency and connections to serve millions of users and millions of requests per second.

Establish media metadata repositories

Reduce latency with multi-Region replication between AWS Regions and scale throughput and concurrency for media and entertainment workloads including interactive content and real-time video streaming.

Provide flawless shopping experiences

Use design patterns for implementing shopping carts, workflow engines, inventory tracking, and customer profiles. Amazon DynamoDB supports high-traffic, extreme-scale events and can process millions of queries per second.

Large-scale gaming systems

Concentrate on driving innovation with no operational overhead. Build your gaming platform with player data, session history, and leaderboards for millions of concurrent users.

Amazon DynamoDB features

Serverless

With Amazon DynamoDB, you don’t have to provision servers or patch, administer, install, maintain, or run any software. DynamoDB performs maintenance with no downtime. There are no maintenance windows and no major, minor, or patch versions.

With DynamoDB’s on-demand capacity mode, you pay only for what you use, with pay-as-you-go pricing for read and write requests. With on-demand, DynamoDB quickly scales your tables up or down to accommodate capacity and maintains performance with no management. It also scales down to zero when there is no traffic to your table, so you don’t pay for throughput, and there are no cold starts.
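To make the on-demand mode concrete, here is a minimal sketch of the arguments one might pass to Boto3’s `create_table` for a table billed per request. The table name, key name, and the helper function are hypothetical; the relevant part is `BillingMode="PAY_PER_REQUEST"`, which is used in place of a `ProvisionedThroughput` block.

```python
def on_demand_table_params(table_name, partition_key):
    """Build create_table arguments for a table billed per request."""
    return {
        "TableName": table_name,
        "KeySchema": [{"AttributeName": partition_key, "KeyType": "HASH"}],
        "AttributeDefinitions": [
            {"AttributeName": partition_key, "AttributeType": "S"}
        ],
        # PAY_PER_REQUEST selects on-demand mode, so no
        # ProvisionedThroughput block is supplied.
        "BillingMode": "PAY_PER_REQUEST",
    }


params = on_demand_table_params("MyOnDemandTable", "id")

# With Boto3 installed and credentials configured, the call would be:
#   import boto3
#   boto3.resource("dynamodb").create_table(**params)
```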

Amazon DynamoDB NoSQL

NoSQL

As a NoSQL database, DynamoDB is purpose-built for better performance, scalability, manageability, and flexibility than relational databases offer. It supports both key-value and document data models to cover a wide variety of use cases.

Unlike relational databases, DynamoDB does not offer a JOIN operator. AWS recommends denormalizing your data model to cut down on database round trips and the processing power required to answer queries. As a NoSQL database, DynamoDB provides strong read consistency and ACID transactions for enterprise-grade applications.
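A small sketch may help show what denormalizing means in practice. Instead of storing customers, orders, and order lines separately and joining them at query time, the item below carries everything one read needs. The attribute names (`pk`, `sk`, `customer_name`, `lines`) and the key format are assumptions chosen for this example, not a prescribed schema.

```python
def build_order_item(order, customer, lines):
    """Fold customer and line-item data into a single order item."""
    return {
        "pk": f"CUSTOMER#{customer['id']}",
        "sk": f"ORDER#{order['id']}",
        "order_date": order["date"],
        # Copied from the customer record so a read needs no second lookup.
        "customer_name": customer["name"],
        # Line items are embedded as a list instead of living in another table.
        "lines": [{"sku": line["sku"], "qty": line["qty"]} for line in lines],
    }


item = build_order_item(
    {"id": "1001", "date": "2024-06-01"},
    {"id": "42", "name": "Ada"},
    [{"sku": "A-1", "qty": 2}, {"sku": "B-7", "qty": 1}],
)
```

The trade-off is duplication: if the customer’s name changes, every order item that copied it has to be updated as well.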

Fully managed

DynamoDB is a fully managed database service that lets you focus on creating value for your customers. It handles hardware provisioning, security, backups, monitoring, high availability, setup, configuration, and more. As a result, a DynamoDB table is ready for production workloads as soon as it is created. Amazon DynamoDB continuously improves its functionality, security, performance, availability, and reliability without requiring upgrades or downtime.

Single-digit millisecond performance at any scale

DynamoDB was purpose-built to improve on the performance and scalability of relational databases and to deliver single-digit millisecond performance at any scale. To achieve this, DynamoDB is optimized for high-performance workloads and offers APIs that encourage efficient database usage. It leaves out features that are inefficient and perform poorly at scale, such as JOIN operations. Whether you have 100 or 100 million users, DynamoDB consistently provides single-digit millisecond performance for your application.

What is a DynamoDB Database?

Few people outside of Amazon are aware of the precise nature of this database. Although the cloud-native database architecture is private and closed-source, there is a development version called DynamoDB Local that is utilized on developer laptops and is written in Java.

When you set up DynamoDB on Amazon Web Services, you don’t provision particular machines or allot fixed disk sizes. Instead, you size the database by provisioned capacity: the number of transactions and kilobytes of traffic that you want to accommodate per second. Users specify this service level in read capacity units (RCUs) and write capacity units (WCUs).
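The capacity units above can be estimated with simple arithmetic. The sketch below assumes the standard unit sizes: one RCU covers one strongly consistent read per second of an item up to 4 KB (an eventually consistent read costs half), and one WCU covers one write per second of an item up to 1 KB. The function names are hypothetical, and transactional requests, which cost more, are not modeled.

```python
import math

READ_UNIT_BYTES = 4 * 1024  # one RCU covers a read of up to 4 KB
WRITE_UNIT_BYTES = 1024     # one WCU covers a write of up to 1 KB


def read_capacity_units(item_bytes, reads_per_second, strongly_consistent=True):
    """Estimate the RCUs needed for a steady read workload."""
    units_per_read = math.ceil(item_bytes / READ_UNIT_BYTES)
    total = units_per_read * reads_per_second
    if not strongly_consistent:
        # Eventually consistent reads cost half as much.
        total = total / 2
    return math.ceil(total)


def write_capacity_units(item_bytes, writes_per_second):
    """Estimate the WCUs needed for a steady write workload."""
    return math.ceil(item_bytes / WRITE_UNIT_BYTES) * writes_per_second
```

For example, 100 strongly consistent reads per second of 6,000-byte items round up to two 4 KB units per read, so the estimate is 200 RCUs, or 100 RCUs with eventual consistency.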

Users often don’t call the Amazon DynamoDB API directly. Rather, their application incorporates an AWS SDK, which manages the back-end interactions with the server.

DynamoDB data modeling requires denormalization. For engineers accustomed to working with both SQL and NoSQL databases, rethinking their data model is a difficult but manageable step.

Read more on govindhtech.com

#AmazonDynamoDB#CompleteGuide#Database#DynamoDB#DynamoDBDatabase#sql#data#AmazonWebServices#Singledigit#Fullymanaged#aws#gamingsystems#technology#technews#news#govindhtech

0 notes

Text

Managing ColdFusion Data with AWS DynamoDB: NoSQL Database Integration

#Managing ColdFusion Data with AWS DynamoDB: NoSQL Database Integration#Managing ColdFusion Data with AWS DynamoDB#Managing ColdFusion Data NoSQL Database Integration#ColdFusion Data with AWS DynamoDB NoSQL Database Integration#ColdFusion Data with AWS DynamoDB Database Integration

0 notes

Text

Boto3 and DynamoDB: Integrating AWS’s NoSQL Service with Python

0 notes

Text

youtube

Master AWS DynamoDB : 10 essential interview questions with answers on AWS DynamoDB!

1 note

Text

The DynamoDB Book https://inchighal.com/product/the-dynamodb-book/

#DynamoDB #NoSQL #Database #AWS #CloudComputing #WebDevelopment #Serverless #DataManagement #TechTalk #Programming #BackEnd #AmazonDynamoDB #DeveloperLife

0 notes

Text

Which tools & frameworks are best suited for developing AWS cloud computing applications?: "Developing AWS cloud applications: the best tools & frameworks from MHM Digitale Lösungen UG"

#AWS #CloudComputing #AWSLambda #AWSEC2 #ServerlessComputing #AmazonEC2ContainerService #AWSElasticBeanstalk #AmazonS3 #AmazonRedshift #AmazonDynamoDB

In today’s digital world, cloud computing is a key topic, especially for up-and-coming companies. AWS (Amazon Web Services) is the world’s leading cloud computing provider and offers a broad range of tools and frameworks that help developers work faster and more efficiently. This article discusses the best tools and frameworks for the…

#Amazon DynamoDB.#Amazon EC2 Container Service#Amazon Redshift#Amazon S3#Amazon Web Services#AWS EC2#AWS Elastic Beanstalk#AWS Lambda#Cloud Computing#Serverless Computing

0 notes

Link

This article will cover how to store data as files using Amazon S3.

0 notes

Text

What is AWS Aurora?

When information is gathered, stored and recalled in computing, it is done so via databases. A database is simply an organized collection of data that can be recalled as needed. A database typically does minimal processing outside organizing data, but some databases parse data at a low level. These types of systems are used to collect information like customer billing details or product inventory levels, but they are also used in apps and other software settings where information needs to be stored and recalled to use features of an app or piece of software.

Amazon Web Services (AWS) provides access to cloud-based database engines, and you can turn to AWS for different types of database setups that cater to different needs. If you’re looking for a relational database engine that can integrate with MySQL, AWS Aurora is a good option.

Relational Database Systems

AWS Aurora is part of the Amazon Relational Database Service (RDS). This means that databases that use AWS Aurora are simple to set up and manage in the cloud. Aurora restore backup systems can also be used to protect sensitive data against threats like theft, deletion, or corruption. Amazon already provides plenty of security oversight when it comes to database protection, but you can also implement Aurora restore backup services in addition to those offered directly through AWS.

Other Features of AWS Aurora

AWS Aurora also provides access to features like one-way replication and push-button migration. Additionally, you can gain more power from using AWS Aurora through DB clusters. These arrangements harness the power of multiple units working in concert with one another to serve data at scale for larger operations.

Within different regions, AWS Aurora can be configured to include various availability zones. This offers more options to maintain data integrity while also offering speed and enhanced connectivity options. The whole point of having a cluster is to allow data to be served faster to users in different parts of the country or the world, and AWS Aurora can do this when configured according to region and availability zone.

Read a similar article about AWS data protection here at this page.

#amazon web services ec2 backup platform#disaster recovery in aws#aurora restore backup#recovery platform for dynamodb

0 notes

Text

DYNAMODB – SCALABLE NOSQL DB ON THE CLOUD

What Type of DB is DynamoDB

DynamoDB is a highly scalable, fault-tolerant, and durable NoSQL database service that you can take advantage of without worrying about managing complex infrastructure. This cloud-based database service boasts incredibly high performance and low latencies, and it's been adopted by some of the largest internet companies as a primary database storage option.

0 notes

Text

Tech Advice 1:

If you're a student or a fresher in computer science, sign up for AWS free tier (free for 1 year) and learn about the different services - Lambda, DynamoDB, EC2, RDS, S3, Cognito, IAM.

You can buy any course on Udemy - Node.js, Python, C++, Java, PHP or any language/framework you're interested in that is supported by AWS.

Be it MEAN/MERN, Python, Java or any stack you use, it's a useful skill.

(I have experience, but I was never given access to this, so I didn't get to learn as much as I wanted to, and it's causing me trouble with getting a job.)

You can also go for GCP/Azure if that looks good to you.

5 notes

Text

Introducing the AWS Audit Manager Common Control Library

What is AWS Audit Manager?

AWS Audit Manager is a service offered by Amazon Web Services (AWS) that simplifies managing risk and compliance with regulations and industry standards for your AWS usage. It does this by automating the process of collecting evidence to see if your security controls are working effectively.

Audit your AWS usage frequently to streamline the risk and compliance assessment process.

Audit Manager AWS

How it functions

Map your compliance requirements to AWS usage data using prebuilt and bespoke frameworks and automated evidence collection with AWS Audit Manager.

Image credit to AWS

Use cases

Shift evidence collection from manual to automated

Automated evidence collecting eliminates the need for manual evidence gathering, evaluation, and management.

Audit continuously to evaluate compliance

Gather data automatically, keep an eye on your compliance position, and adjust your controls proactively to lower risk.

Implement internal risk evaluations

Create your own custom modifications to an existing framework, then initiate an assessment to gather data automatically.

You may continuously audit your AWS consumption as part of your risk and compliance assessment by mapping your compliance criteria to AWS usage data with AWS Audit Manager. A common control library that offers common controls with predefined and pre-mapped AWS data sources is being introduced by Audit Manager today.

The rigorous mapping and assessments carried out by AWS certified auditors, which confirm that the right data sources are identified for evidence collection, form the foundation of the common control library. By using the common control library, Governance, Risk, and Compliance (GRC) teams can map corporate controls into AWS Audit Manager more quickly and reduce their reliance on information technology (IT) teams.

It is simpler to comprehend your audit preparedness across several frameworks at once when you use the common control library to view the compliance requirements for multiple frameworks (like PCI or HIPAA) associated with the same common control in one location. This eliminates the need for you to execute several compliance standard requirements one at a time and then repeatedly analyse the data that results for various compliance regimes.

Furthermore, as AWS Audit Manager updates or adds new data sources, such as extra AWS CloudTrail events, AWS API calls, AWS Config rules, or maps additional compliance frameworks to common controls, you instantly inherit improvements when you use controls from this library. This makes it easier to gain from new compliance frameworks that AWS Audit Manager adds to its library and reduces the labour that GRC and IT teams must perform to maintain and update evidence sources on a regular basis.

Let’s look at an example to understand how this functions in real life

Employing the common control library of AWS Audit Manager

An airline will frequently set up a policy requiring all customer payments, including those for in-flight food and internet access, to be made using a credit card. The airline creates an enterprise control for IT operations stating that “customer transactions data is always available” in order to carry out this policy. How can businesses keep an eye on whether their AWS applications comply with this new requirement?

Acting as the airline’s compliance officer, Danilo Poccia opens the AWS Audit Manager console and selects Control library from the navigation bar. The control library now includes the new Common category. Every common control corresponds to a set of core controls that gather evidence from AWS managed data sources and make it easier to demonstrate compliance with overlapping standards and regulations. He searches the common control library for the word “availability” and sees that the airline’s expected requirements map to the High availability architecture common control in the library.

He expands the High availability architecture common control to reveal the underlying core controls. There, he sees that this control doesn’t fully satisfy the needs of the business because Amazon DynamoDB isn’t on this list. Even though DynamoDB is a fully managed database, DynamoDB is heavily utilized in the airline’s application architecture, so they want their DynamoDB tables to remain available as their workload changes. If they set a fixed throughput for a DynamoDB table, this might not be the case.

He searches the common control library once more, this time for “redundancy”. He expands the Fault tolerance and redundancy common control to see how it maps to core controls. There he finds the core control for enabling Auto Scaling for Amazon DynamoDB tables. This core control makes sense for the airline’s architecture, but the entire common control is not required.
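For readers wondering what enabling Auto Scaling for a DynamoDB table involves, the following is a minimal sketch of the two requests typically sent to Application Auto Scaling: one registers the table’s read capacity as a scalable target, the other attaches a target-tracking policy. The table name, capacity limits, policy name, and the 70% target are assumptions for illustration, and only read capacity is shown.

```python
def dynamodb_read_autoscaling_params(table_name, min_units, max_units,
                                     target_utilization=70.0):
    """Build arguments for register_scalable_target and put_scaling_policy."""
    resource_id = f"table/{table_name}"
    dimension = "dynamodb:table:ReadCapacityUnits"
    target = {
        "ServiceNamespace": "dynamodb",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_units,
        "MaxCapacity": max_units,
    }
    policy = {
        "PolicyName": f"{table_name}-read-target-tracking",  # hypothetical name
        "ServiceNamespace": "dynamodb",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Keep consumed/provisioned read capacity near this percentage.
            "TargetValue": target_utilization,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "DynamoDBReadCapacityUtilization"
            },
        },
    }
    return target, policy


target, policy = dynamodb_read_autoscaling_params("Bookings", 5, 500)

# With Boto3 installed and credentials configured, these would be sent as:
#   import boto3
#   client = boto3.client("application-autoscaling")
#   client.register_scalable_target(**target)
#   client.put_scaling_policy(**policy)
```

Write capacity would need a second pair of requests using the `dynamodb:table:WriteCapacityUnits` dimension.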

Furthermore, the High availability architecture common control contains two core controls that verify that Multi-AZ replication is enabled on Amazon Relational Database Service (RDS), and both rely on an AWS Config rule. Since the airline does not use AWS Config, this rule does not apply to this particular use case. One of these two core controls also uses a CloudTrail event, but that event does not cover all scenarios.

As the compliance officer, he would like to gather evidence of the actual resource configuration. To get this evidence, he has a quick conversation with an IT partner and uses a customer-managed source to create a custom control. He chooses the api-rds_describedbinstances API call and sets a weekly collection frequency to minimize expenses.
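To give a feel for the evidence such an API call yields, here is a hedged sketch that inspects a `DescribeDBInstances`-style response and lists the instances without Multi-AZ enabled. The sample response and the helper name are made up for illustration; Audit Manager collects and stores this evidence itself, so the function only mimics the check a reviewer might perform on it.

```python
def single_az_instances(describe_response):
    """Return identifiers of DB instances that do not have Multi-AZ enabled."""
    return [
        db["DBInstanceIdentifier"]
        for db in describe_response.get("DBInstances", [])
        if not db.get("MultiAZ", False)
    ]


# Hypothetical, trimmed-down response; real responses carry many more fields.
sample_response = {
    "DBInstances": [
        {"DBInstanceIdentifier": "payments-db", "MultiAZ": True},
        {"DBInstanceIdentifier": "reporting-db", "MultiAZ": False},
    ]
}

non_compliant = single_az_instances(sample_response)
```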

The compliance team can handle the implementation of the custom control with little assistance from the IT team. Instead of just choosing the core control linked to DynamoDB, the compliance team can apply the full second common control (fault tolerance and redundancy) if they need to lessen their dependency on IT. The reduction of time and effort for both the IT and compliance teams, as well as the acceleration of velocity, often outweigh the benefits of optimising the controls already in place, even though it could be more than they require given their design.

He now creates a custom framework with these controls by selecting Framework library in the navigation bar. Next, he creates an assessment with the custom framework by selecting Assessments from the navigation pane. AWS Audit Manager begins gathering evidence about the chosen AWS accounts and their AWS usage as soon as he creates the assessment.

By following these steps, a compliance team can report accurately on the enterprise control “customer transactions data is always available”, with an implementation that matches their system design and their current AWS services.

AWS Audit Manager Pricing

The common control library is available today in all AWS Regions where AWS Audit Manager is offered. The use of the common control library is free of charge. See AWS Audit Manager pricing for further details.

Read more on Govindhtech.com

#AWS#AWSAuditManager#AWSdatasources#AWSservices#DynamoDB#news#technews#technology#technologynews#technologytrends

0 notes

Text

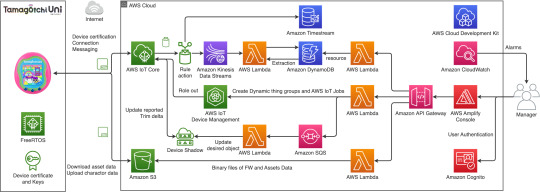

Tamagotchi Uni Uses AWS, Amazon Web Services

The Tamagotchi Uni is the first Tamagotchi to ever connect to Wi-Fi, which enables it to receive over-the-air updates, programming changes, and more. How exactly is this all being done by Bandai Japan? Well, Bandai has built the Tamagotchi Uni on the Amazon Web Services (AWS) platform.

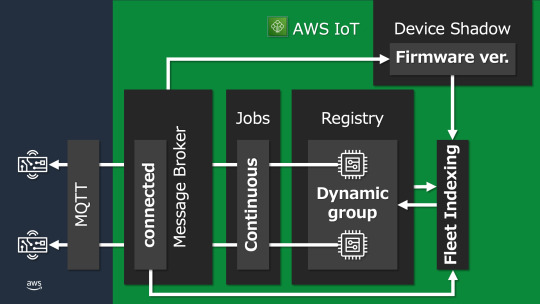

The details of this are actually outlined in a recent article on the Amazon Web Services blog. The blog post provides a detailed view of how Tamagotchi Uni uses AWS to achieve secure and reliable connectivity and quickly deliver new content updates without leaving customers waiting. It details that Bandai Co., Ltd., the company responsible for product development and sales, adopted AWS IoT to realize the concept of globally interconnected Tamagotchi, enabling users to interact with each other.

Bandai partnered with their cloud development partner, Phoenisys, Inc., to connect and manage millions of Tamagotchi devices. One of the critical features is over-the-air software updates, which use the jobs feature of AWS IoT Device Management to distribute the latest firmware across all Tamagotchi devices without causing any delays to customers.

To make Tamagotchi Uni IoT-enabled, Bandai established three key goals: implementing secure connections, scaling the load-balancing resources to accommodate over 1 million connections worldwide, and optimizing operational costs. The article even features the AWS architecture for the Tamagotchi Uni, which is interesting.

AWS IoT Core is used to manage the state of each Tamagotchi Uni device, which helps retrieve distributed items and content. AWS IoT Device Management is used to index the extensive Tamagotchi Uni fleet and create dynamic groups based on the state of each device, facilitating efficient over-the-air (OTA) updates. FreeRTOS is used to minimize the amount of resources and code required to implement device-to-cloud communication for efficient system development. AWS Lambda is used to process tasks, deliver new announcements, and register assets. Amazon DynamoDB is used as a fully managed, serverless, key-value NoSQL database that runs high-performance applications at any scale. Amazon Simple Storage Service (Amazon S3) is used for object storage. Each of these data stores is used to manage the various resources within Tamagotchi Uni. Lastly, Amazon Timestream is used to accumulate historical data on users’ actions, like downloading items and additional content.

The article also details how Bandai handles large-scale firmware updates to Tamagotchi Uni devices. Executing them at a rate of 1,000 units per hour would have resulted in a delay for some devices, so the team designed job delivery as a continuous job that automatically updates devices under certain conditions. It uses fleet indexing to run a query that finds which devices meet the criteria for the update to be pushed out.
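A quick back-of-the-envelope calculation shows why a fixed rate of 1,000 units per hour leaves some devices waiting. The sketch assumes a fleet of exactly 1,000,000 devices (rounded from the “over 1 million connections” goal above) and a constant rate; both figures are simplifications, and the function name is hypothetical.

```python
def rollout_duration_hours(fleet_size, devices_per_hour):
    """Hours needed to reach every device at a constant update rate."""
    return fleet_size / devices_per_hour


hours = rollout_duration_hours(1_000_000, 1_000)  # assumed fleet size and rate
days = hours / 24

print(f"{hours:.0f} hours, or about {days:.1f} days")
```

Under these assumptions the last device would wait roughly 1,000 hours, a little under 42 days, for a single firmware version.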

Lastly, the article details how Bandai conducted large-scale system performance testing to emulate what it would be like after the device was released. They verified the smooth operation and performance of updates through their testing.

Be sure to check out the full article here on the Amazon AWS blog.

#tamapalace#tamagotchi#tmgc#tamagotchiuni#tamagotchi uni#uni#tamatag#virtualpet#bandai#amazonaws#amazon aws#aws#amazonwebservices#amazon web services#blog

14 notes

Text

Boto3 and DynamoDB: Integrating AWS’s NoSQL Service with Python

Introduction

Amazon Web Services (AWS) offers DynamoDB as a versatile NoSQL database service, while Boto3 serves as the Python-based AWS SDK. This article covers using Boto3 to interact with DynamoDB, including basic operations, recommended practices, and typical scenarios, complemented by Python code snippets.

Overview of Boto3 and DynamoDB

Boto3 is the AWS Software Development Kit (SDK) for Python. It lets developers use AWS services such as Amazon S3 and DynamoDB from Python code.

DynamoDB, on the other hand, is a key-value and document database that delivers single-digit millisecond performance at any scale. As a fully managed database, it offers multi-Region, multi-master replication and durable storage.

Setting Up Boto3 for DynamoDB

Before you start, you need to install Boto3 and set up your AWS credentials:

1. Install Boto3:

    pip install boto3

2. Configure AWS Credentials: Set up your AWS credentials in `~/.aws/credentials`:

    [default]
    aws_access_key_id = YOUR_ACCESS_KEY
    aws_secret_access_key = YOUR_SECRET_KEY

Basic Operations with DynamoDB

1. Creating a DynamoDB Table:

    import boto3

    # Uses the credentials and default region from your AWS configuration.
    dynamodb = boto3.resource('dynamodb')

    table = dynamodb.create_table(
        TableName='MyTable',
        KeySchema=[
            {'AttributeName': 'id', 'KeyType': 'HASH'}  # partition key
        ],
        AttributeDefinitions=[
            {'AttributeName': 'id', 'AttributeType': 'S'}  # S = string
        ],
        ProvisionedThroughput={'ReadCapacityUnits': 1, 'WriteCapacityUnits': 1}
    )

    # Block until the table has been created.
    table.wait_until_exists()

2. Inserting Data into a Table:

    table.put_item(
        Item={
            'id': '123',
            'title': 'My First Item',
            'info': {'plot': 'Something interesting', 'rating': 5}
        }
    )

3. Reading Data from a Table:

    response = table.get_item(
        Key={'id': '123'}
    )
    # 'Item' is missing from the response when no item matches the key.
    item = response.get('Item')
    print(item)

4. Updating Data in a Table:

    table.update_item(
        Key={'id': '123'},
        UpdateExpression='SET info.rating = :val1',
        ExpressionAttributeValues={':val1': 6}
    )

5. Deleting Data from a Table:

    table.delete_item(
        Key={'id': '123'}
    )

6. Deleting a Table:

    table.delete()

Best Practices for Using Boto3 with DynamoDB

1. Manage Resources Efficiently: Always close the connection or use a context manager to handle resources.

2. Error Handling: Implement try-except blocks to handle potential API errors.

3. Secure Your AWS Credentials: Never hard-code credentials. Use IAM roles or environment variables.
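As one possible shape for the error-handling advice above, the sketch below wraps an arbitrary call in a retry loop with exponential backoff and jitter. The helper and its parameters are hypothetical. With Boto3, the `is_retryable` check would typically test for `botocore.exceptions.ClientError` and inspect `exc.response['Error']['Code']` (for example `ProvisionedThroughputExceededException`). Boto3 already retries some errors on its own, so treat this as an illustration of the pattern rather than a required addition.

```python
import random
import time


def call_with_backoff(operation, is_retryable, max_attempts=5,
                      base_delay=0.1, sleep=time.sleep):
    """Call operation(), retrying retryable failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception as exc:
            # Give up on the last attempt or when the error is not retryable.
            if attempt == max_attempts or not is_retryable(exc):
                raise
            # Full jitter: wait a random time up to base_delay * 2^(attempt - 1).
            sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))


# Hypothetical usage with a Boto3 Table resource named `table`:
#   from botocore.exceptions import ClientError
#   def throttled(exc):
#       return (isinstance(exc, ClientError) and
#               exc.response['Error']['Code'] == 'ProvisionedThroughputExceededException')
#   response = call_with_backoff(lambda: table.get_item(Key={'id': '123'}), throttled)
```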

Advanced Operations

– Batch Operations: Boto3 allows batch operations for reading and writing data.

– Query and Scan: Utilize DynamoDB’s `query` and `scan` methods for complex data retrieval.
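To illustrate the batch side of these operations: a single `BatchWriteItem` request accepts at most 25 put or delete requests, so larger lists have to be split. The sketch below shows that splitting in plain Python; the helper name and sample items are hypothetical. In practice Boto3’s `table.batch_writer()` context manager takes care of the chunking and of resending unprocessed items, as noted in the comments.

```python
def chunked(items, size=25):
    """Yield successive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


# Sixty hypothetical items end up in three batches.
items = [{"id": str(n), "title": f"Item {n}"} for n in range(60)]
batch_sizes = [len(batch) for batch in chunked(items)]

# With a Boto3 Table resource named `table`, the usual approach is simply:
#   with table.batch_writer() as batch:
#       for item in items:
#           batch.put_item(Item=item)
```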

Use Cases for Boto3 and DynamoDB

– Web Applications: Store and retrieve user data for web applications.

– IoT Applications: Capture and store IoT device data.

– Data Analysis: Store large datasets and retrieve them for analysis.

Monitoring and Optimization

Use Amazon CloudWatch to monitor the performance of your DynamoDB tables and adjust provisioned throughput settings as necessary.

Conclusion

Boto3 provides a powerful and easy way to interact with AWS DynamoDB using Python. By understanding these basic operations and best practices, developers can effectively integrate DynamoDB into their applications.

0 notes

Text

Exploring the Power of Amazon Web Services: Top AWS Services You Need to Know

In the ever-evolving realm of cloud computing, Amazon Web Services (AWS) has established itself as an undeniable force to be reckoned with. AWS's vast and diverse array of services has positioned it as a dominant player, catering to the evolving needs of businesses, startups, and individuals worldwide. Its popularity transcends boundaries, making it the preferred choice for a myriad of use cases, from startups launching their first web applications to established enterprises managing complex networks of services. This blog embarks on an exploratory journey into the boundless world of AWS, delving deep into some of its most sought-after and pivotal services.

As the digital landscape continues to expand, understanding these AWS services and their significance is pivotal, whether you're a seasoned cloud expert or someone taking the first steps in your cloud computing journey. Join us as we delve into the intricate web of AWS's top services and discover how they can shape the future of your cloud computing endeavors. From cloud novices to seasoned professionals, the AWS ecosystem holds the keys to innovation and transformation.

Amazon EC2 (Elastic Compute Cloud): The Foundation of Scalability

At the core of AWS's capabilities is Amazon EC2, the Elastic Compute Cloud. EC2 provides resizable compute capacity in the cloud, allowing you to run virtual servers, commonly referred to as instances. These instances serve as the foundation for a multitude of AWS solutions, offering the scalability and flexibility required to meet diverse application and workload demands. Whether you're a startup launching your first web application or an enterprise managing a complex network of services, EC2 ensures that you have the computational resources you need, precisely when you need them.

Amazon S3 (Simple Storage Service): Secure, Scalable, and Cost-Effective Data Storage

When it comes to storing and retrieving data, Amazon S3, the Simple Storage Service, stands as an indispensable tool in the AWS arsenal. S3 offers a scalable and highly durable object storage service that is designed for data security and cost-effectiveness. This service is the choice of businesses and individuals for storing a wide range of data, including media files, backups, and data archives. Its flexibility and reliability make it a prime choice for safeguarding your digital assets and ensuring they are readily accessible.

Amazon RDS (Relational Database Service): Streamlined Database Management

Database management can be a complex task, but AWS simplifies it with Amazon RDS, the Relational Database Service. RDS automates many common database management tasks, including patching, backups, and scaling. It supports multiple database engines, including popular options like MySQL, PostgreSQL, and SQL Server. This service allows you to focus on your application while AWS handles the underlying database infrastructure. Whether you're building a content management system, an e-commerce platform, or a mobile app, RDS streamlines your database operations.

AWS Lambda: The Era of Serverless Computing

Serverless computing has transformed the way applications are built and deployed, and AWS Lambda is at the forefront of this revolution. Lambda is a serverless compute service that enables you to run code without the need for server provisioning or management. It's the perfect solution for building serverless applications, microservices, and automating tasks. The unique pricing model ensures that you pay only for the compute time your code actually uses. This service empowers developers to focus on coding, knowing that AWS will handle the operational complexities behind the scenes.

Amazon DynamoDB: Low Latency, High Scalability NoSQL Database

Amazon DynamoDB is a managed NoSQL database service that stands out for its low latency and exceptional scalability. It's a popular choice for applications with variable workloads, such as gaming platforms, IoT solutions, and real-time data processing systems. DynamoDB automatically scales to meet the demands of your applications, ensuring consistent, single-digit millisecond latency at any scale. Whether you're managing user profiles, session data, or real-time analytics, DynamoDB is designed to meet your performance needs.

Amazon VPC (Virtual Private Cloud): Tailored Networking for Security and Control

Security and control over your cloud resources are paramount, and Amazon VPC (Virtual Private Cloud) empowers you to create isolated networks within the AWS cloud. This isolation enhances security and control, allowing you to define your network topology, configure routing, and manage access. VPC is the go-to solution for businesses and individuals who require a network environment that mirrors the security and control of traditional on-premises data centers.

Amazon SNS (Simple Notification Service): Seamless Communication Across Channels

Effective communication is a cornerstone of modern applications, and Amazon SNS (Simple Notification Service) is designed to facilitate seamless communication across various channels. This fully managed messaging service enables you to send notifications to a distributed set of recipients, whether through email, SMS, or mobile devices. SNS is an essential component of applications that require real-time updates and notifications to keep users informed and engaged.

Amazon SQS (Simple Queue Service): Decoupling for Scalable Applications

Decoupling components of a cloud application is crucial for scalability, and Amazon SQS (Simple Queue Service) is a fully managed message queuing service designed for this purpose. It ensures reliable and scalable communication between different parts of your application, helping you create systems that can handle varying workloads efficiently. SQS is a valuable tool for building robust, distributed applications that can adapt to changes in demand.

In the rapidly evolving landscape of cloud computing, Amazon Web Services (AWS) stands as a colossus, offering a diverse array of services that address the ever-evolving needs of businesses, startups, and individuals alike. AWS's popularity transcends industry boundaries, making it the go-to choice for a wide range of use cases, from startups launching their inaugural web applications to established enterprises managing intricate networks of services.

To unlock the full potential of these AWS services, gaining comprehensive knowledge and hands-on experience is key. ACTE Technologies, a renowned training provider, offers specialized AWS training programs designed to provide practical skills and in-depth understanding. These programs equip you with the tools needed to navigate and excel in the dynamic world of cloud computing.

With AWS services at your disposal, the possibilities are endless, and innovation knows no bounds. Join the ever-growing community of cloud professionals and enthusiasts, and empower yourself to shape the future of the digital landscape. ACTE Technologies is your trusted guide on this journey, providing the knowledge and support needed to thrive in the world of AWS and cloud computing.

8 notes
