#API-Driven Architecture


Discover how composable analytics enables rapid, real-time insights through a modular approach that connects and analyzes diverse data streams.

#Composable Analytics#Traditional Analytics Models#API-Driven Architecture#Application Programming Interfaces#Actionable Insights#Real-Time Visualization


How Does Composable Analytics Enable Real-Time Insights?

Learn how composable analytics helps you extract real-time insights by assembling reusable data components to meet dynamic business needs.

As the demand for data-driven decision-making accelerates, organizations increasingly seek ways to harness insights from their data faster than ever. Composable analytics, a modern approach to data analysis, stands out as a transformative solution by enabling real-time insights tailored to diverse and changing business needs. This method offers flexibility and efficiency that traditional analytics models often lack, allowing businesses to react swiftly and strategically to market changes, operational needs, and customer behavior. Let’s explore how composable analytics empowers organizations to generate real-time insights by breaking down complex data processes into manageable, reusable components.

Understanding Composable Analytics: A Modular Approach to Data

Composable analytics is rooted in the principle of modularity, where data processes are divided into individual components or “modules” that can be independently developed, deployed, and reused. Unlike monolithic analytics systems, composable analytics enables organizations to create customized data flows by combining different analytical modules. Each module is dedicated to a specific function, such as data ingestion, transformation, or visualization.

This modularity allows businesses to build flexible, scalable analytics solutions that adapt to unique needs. Teams can select, integrate, and recombine the most relevant tools, algorithms, and data sources without starting from scratch. It also enables quicker troubleshooting, as individual components can be refined or replaced as needed, contributing to a more agile approach to real-time data processing.
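The modular idea above can be sketched in a few lines of Python. This is only an illustration under assumed names: the module functions (`ingest`, `to_cents`, `summarize`) and the record layout are hypothetical and do not come from any particular composable analytics product.

```python
from functools import reduce
from typing import Callable

# A "module" here is simply a function from a list of records to a list of records.
Module = Callable[[list[dict]], list[dict]]


def compose(*modules: Module) -> Module:
    """Chain modules left to right into a single data flow."""
    def flow(records: list[dict]) -> list[dict]:
        return reduce(lambda acc, module: module(acc), modules, records)
    return flow


def ingest(records: list[dict]) -> list[dict]:
    # Hypothetical ingestion module: drop records that have no amount.
    return [r for r in records if r.get("amount") is not None]


def to_cents(records: list[dict]) -> list[dict]:
    # Hypothetical transformation module: convert amounts to integer cents.
    return [{**r, "amount": round(r["amount"] * 100)} for r in records]


def summarize(records: list[dict]) -> list[dict]:
    # Hypothetical reporting module: reduce the records to one summary row.
    return [{"count": len(records), "total": sum(r["amount"] for r in records)}]


pipeline = compose(ingest, to_cents, summarize)
result = pipeline([{"amount": 1.5}, {"amount": None}, {"amount": 2.25}])
```

Because each module has the same shape, one can be replaced or reordered without touching the others, for example `compose(ingest, summarize)` to skip the conversion step.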

The Role of API-Driven Architecture in Composable Analytics

A core aspect of composable analytics is its API-driven architecture, which allows different software components to communicate seamlessly. Through APIs (Application Programming Interfaces), organizations can connect disparate systems, data sources, and analytical tools, creating a unified analytics ecosystem.

For real-time insights, API-driven architecture is crucial. It enables rapid data flow between sources, allowing data to be ingested, processed, and delivered with minimal latency. By leveraging APIs, businesses can achieve continuous data integration, enabling faster access to fresh, actionable insights. This architecture also facilitates the integration of machine learning models, automation scripts, and third-party data sources, significantly broadening the scope and relevance of real-time analytics.
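One way to picture the API-driven idea is as a shared contract that every data source honors, so the analytics code depends on the contract rather than on any one system. The sketch below is an in-process stand-in for what would normally be HTTP APIs; the `Source` interface, the two source classes, and their data are all hypothetical.

```python
from typing import Protocol


class Source(Protocol):
    """The contract every data source is assumed to expose."""

    def fetch(self) -> list[dict]: ...


class CrmSource:
    # Hypothetical source; a real one might call a CRM's web API here.
    def fetch(self) -> list[dict]:
        return [{"customer": "a", "spend": 120}]


class WebSource:
    # Hypothetical source; a real one might read web analytics events.
    def fetch(self) -> list[dict]:
        return [{"customer": "b", "spend": 80}]


def total_spend(sources: list[Source]) -> int:
    """Combine rows from any number of sources that honor the contract."""
    return sum(row["spend"] for source in sources for row in source.fetch())


combined = total_spend([CrmSource(), WebSource()])
```

Adding a third system then means writing one more class with a `fetch` method; `total_spend` does not change.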

Real-Time Data Processing and Stream Analytics

For real-time insights to be actionable, they must be timely and contextually relevant. Composable analytics empowers real-time data processing through stream analytics, where data is processed as it arrives rather than waiting for batch updates. In industries like finance, healthcare, and retail, where real-time decision-making is critical, stream analytics is invaluable.

Through composable analytics, businesses can deploy stream processing engines such as Apache Kafka, Flink, or Spark Streaming as components in their architecture. These engines handle high-velocity data from various sources, analyzing it on the fly. With stream analytics, composable analytics makes it possible to detect patterns, identify anomalies, and generate alerts in near real-time, helping organizations respond promptly to dynamic scenarios.
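To make "analyzing it on the fly" concrete, here is a minimal sketch of anomaly detection over a stream, written in plain Python rather than against Kafka, Flink, or Spark. The rolling-window rule, the `window` and `threshold` parameters, and the sample readings are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(stream, window=5, threshold=3.0):
    """Yield (index, value) for readings far from the recent rolling mean.

    Each value is checked as it arrives, against only the last `window`
    readings, so nothing waits for a batch to complete.
    """
    recent = deque(maxlen=window)
    for index, value in enumerate(stream):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield index, value
        recent.append(value)


readings = [10, 11, 10, 12, 11, 10, 50, 11, 10]
alerts = list(detect_anomalies(readings))
```

In a real deployment the `stream` argument would be an iterator over messages from a stream processing engine, and the alert would be pushed to a downstream component instead of collected in a list.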

Data Orchestration and Workflow Automation

In composable analytics, data orchestration is the coordination of various analytics components to create efficient workflows. Workflow automation is essential for real-time analytics, ensuring that data flows smoothly from ingestion through transformation to visualization, without manual intervention.

By automating these workflows, composable analytics eliminates delays often caused by repetitive manual processes. Orchestration tools such as Apache Airflow or Prefect enable seamless movement of data between components, allowing organizations to set up data pipelines that automatically adapt to new data inputs and analysis needs. This automation further accelerates time-to-insight, providing teams with up-to-date information whenever they need it.
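The core of orchestration is running each step only after the steps it depends on. The sketch below shows that idea with Python's standard `graphlib` module; it is not how Airflow or Prefect are used, and the task names and lambdas are hypothetical placeholders.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run each task after the tasks it depends on.

    `dependencies` maps a task name to the names of its upstream tasks.
    Returns the execution order and every task's result.
    """
    order = []
    results = {}
    for name in TopologicalSorter(dependencies).static_order():
        upstream = [results[dep] for dep in dependencies.get(name, ())]
        results[name] = tasks[name](*upstream)
        order.append(name)
    return order, results


# Hypothetical three-step workflow: ingest, then transform, then visualize.
tasks = {
    "ingest": lambda: [3, 1, 2],
    "transform": lambda rows: sorted(rows),
    "visualize": lambda rows: " ".join(str(r) for r in rows),
}
dependencies = {"transform": ["ingest"], "visualize": ["transform"]}
order, results = run_pipeline(tasks, dependencies)
```

Production orchestrators add scheduling, retries, and monitoring on top of this dependency ordering.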

Adaptive Machine Learning Models

Machine learning (ML) is essential to extracting real-time insights from composable analytics. Adaptive ML models, which can be trained and re-trained on live data, enable dynamic insights that evolve with changing conditions. In composable analytics, adaptive ML models are treated as modular components that can be integrated with other analytics tools, making it possible to deploy models that continually learn from new data.

For example, an e-commerce company might use an adaptive ML model to recommend products based on real-time browsing behavior, thereby optimizing the customer experience. By continually updating these models with fresh data, composable analytics ensures that insights are not only real-time but also relevant and personalized.
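As a rough illustration of a model that keeps learning from new data, the sketch below uses a simple co-view counter in place of a trained ML model: every browsing event updates the counts immediately, and recommendations shift as behavior shifts. The class name, the update rule, and the product names are assumptions made for the example.

```python
from collections import Counter, defaultdict


class OnlineRecommender:
    """Recommend products that are often viewed right after a given product."""

    def __init__(self):
        self.co_views = defaultdict(Counter)
        self.last_seen = {}

    def observe(self, user, product):
        # Update counts incrementally from a single live browsing event.
        previous = self.last_seen.get(user)
        if previous is not None and previous != product:
            self.co_views[previous][product] += 1
            self.co_views[product][previous] += 1
        self.last_seen[user] = product

    def recommend(self, product, k=1):
        return [p for p, _ in self.co_views[product].most_common(k)]


model = OnlineRecommender()
for user, product in [("u1", "tent"), ("u1", "stove"), ("u2", "tent"),
                      ("u2", "stove"), ("u3", "tent"), ("u3", "lamp")]:
    model.observe(user, product)
before = model.recommend("tent")

# Fresh events arrive and the recommendation adapts without a retraining job.
for user, product in [("u4", "tent"), ("u4", "lamp"), ("u5", "tent"), ("u5", "lamp")]:
    model.observe(user, product)
after = model.recommend("tent")
```

A production system would more likely use an incrementally trained model, but the pattern of observing an event, updating state, and serving the updated recommendation is the same.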

Enhanced Data Visualization for Real-Time Decision-Making

Visualization is the final step in the analytics process where data is transformed into actionable insights. Composable analytics provides flexibility in visualization by allowing businesses to select and integrate visualization tools best suited to their needs, whether it’s real-time dashboards, interactive charts, or custom reports.

Through real-time visualization, composable analytics enables decision-makers to quickly interpret and act on insights. Tools such as Tableau, Power BI, and custom dashboards can be configured to refresh automatically, ensuring that stakeholders have access to the most recent data. This real-time visibility into performance metrics, trends, and anomalies enables faster, data-backed decisions across all levels of the organization.

Conclusion

Composable analytics is reshaping how organizations approach data-driven decision-making by enabling real-time insights through a flexible, modular framework. From API-driven architectures and stream processing to adaptive machine learning and automated workflows, composable analytics provides businesses with the tools to turn raw data into actionable insights in record time. As industries continue to prioritize agility and responsiveness, adopting composable analytics offers a strategic advantage, empowering companies to make informed decisions with confidence and speed.

Frequently Asked Questions (FAQs)

1. What is Composable Analytics, and how does it differ from traditional analytics models?

Composable Analytics refers to a modular, flexible approach that allows organizations to assemble analytics components dynamically. Unlike traditional analytics models, which are rigid and monolithic, Composable Analytics enables the integration of different data sources and tools in real time through APIs (Application Programming Interfaces). This adaptability accelerates data processing and allows for immediate customization of insights, providing a more dynamic, real-time analysis experience tailored to specific business needs.

2. How does API-driven architecture contribute to real-time insights in Composable Analytics?

An API-driven architecture is crucial for enabling the modular nature of Composable Analytics. APIs facilitate seamless communication between various analytics components, ensuring that data flows quickly and efficiently between systems. This enables real-time visualization and the delivery of actionable insights by breaking down data silos and improving the agility of analytics processes. With API-driven architecture, businesses can continuously refine their analytics setup to respond instantly to changing data without major infrastructural overhauls.

3. How does Composable Analytics enable real-time decision-making?

Composable Analytics empowers organizations with real-time insights by allowing on-demand access to diverse data sources and analytical models. Its flexible design integrates various analytics components, from machine learning algorithms to data visualization tools, to generate insights in real time. This means decision-makers can act on the most current data, rather than relying on static reports. The ability to assemble and reassemble these analytics components ensures that organizations can rapidly respond to market changes or operational shifts.

4. How do traditional analytics models limit real-time insights?

Traditional analytics models often rely on static, pre-defined processes that require manual intervention to adapt. This limits their ability to deliver real-time insights because data integration and processing typically occur in batch mode, which introduces latency. Unlike Composable Analytics, traditional models lack the modularity and API-driven architecture required for continuous real-time data flow. As a result, insights are often delayed and less actionable, preventing organizations from responding promptly to real-time events.

5. How does Composable Analytics enhance actionable insights compared to traditional approaches?

Composable Analytics provides more actionable insights by leveraging its modularity and real-time data processing capabilities. In traditional analytics models, insights are often retrospective, requiring manual interpretation of static reports. In contrast, Composable Analytics delivers dynamic, contextually relevant insights that decision-makers can act on immediately. The API-driven architecture ensures seamless integration of diverse data sources and real-time visualization, making it easier for businesses to identify trends and take informed actions promptly.

6. How do real-time visualization capabilities enhance the effectiveness of Composable Analytics?

Real-time visualization is a key feature of Composable Analytics, offering immediate representation of data trends and anomalies as they unfold. This capability is critical for businesses that need to monitor operational metrics or market behavior on the fly. Through API-driven connections, data from multiple sources is instantly processed and displayed in a visually accessible format. This enables faster comprehension and facilitates quicker decision-making, as stakeholders can see changes in real time and adjust strategies accordingly.

7. How does the flexibility of Composable Analytics reduce time to insight?

The modularity of Composable Analytics significantly reduces the time to insight by allowing organizations to assemble analytics components on demand. This flexibility means that rather than waiting for static reports or complex reconfigurations in traditional analytics models, businesses can quickly recompose their analytics environment using APIs. The result is faster processing, real-time data integration, and more immediate access to actionable insights, which are essential for timely decision-making in fast-paced industries.

8. How can organizations integrate machine learning into Composable Analytics for enhanced real-time insights?

Composable Analytics makes it easier to integrate machine learning models into the analytics pipeline through its API-driven architecture. Organizations can seamlessly connect machine learning algorithms with real-time data sources, enabling continuous model training and refinement based on the latest data. This integration supports predictive analytics and offers deeper, more accurate insights in real time. By combining machine learning with real-time visualization, businesses can leverage both historical patterns and live data for smarter, faster decision-making.

Original Source: https://bit.ly/4fGZxgy


What is Retrieval Augmented Generation?

New Post has been published on https://thedigitalinsider.com/what-is-retrieval-augmented-generation/


Large Language Models (LLMs) have advanced the field of natural language processing (NLP), yet a gap in contextual understanding persists. LLMs can sometimes produce inaccurate or unreliable responses, a phenomenon known as “hallucinations.”

For instance, the rate of hallucinations in ChatGPT has been estimated at roughly 15% to 20%.

Retrieval Augmented Generation (RAG) is an Artificial Intelligence (AI) framework designed to address this context gap by improving an LLM’s output. RAG draws on external knowledge through retrieval, enhancing an LLM’s ability to generate precise, accurate, and contextually rich responses.

Let’s explore the significance of RAG within AI systems, unraveling its potential to revolutionize language understanding and generation.

What is Retrieval Augmented Generation (RAG)?

As a hybrid framework, RAG combines the strengths of generative and retrieval models. This combination taps into third-party knowledge sources to support internal representations and to generate more precise and reliable answers.

The architecture of RAG is distinctive, blending sequence-to-sequence (seq2seq) models with Dense Passage Retrieval (DPR) components. This fusion empowers the model to generate contextually relevant responses grounded in accurate information.

RAG establishes transparency with a robust mechanism for fact-checking and validation to ensure reliability and accuracy.

How Does Retrieval Augmented Generation Work?

In 2020, Meta introduced the RAG framework to extend LLMs beyond their training data. Like an open-book exam, RAG enables LLMs to leverage specialized knowledge for more precise responses by accessing real-world information in response to questions, rather than relying solely on memorized facts.

Figure: Original RAG model by Meta.

This innovative technique departs from a data-driven approach, incorporating knowledge-driven components, enhancing language models’ accuracy, precision, and contextual understanding.

RAG operates in three steps, each of which enhances the capabilities of the language model:

Figure: Core components of RAG.

Retrieval: Retrieval models find information connected to the user’s prompt to enhance the language model’s response. This involves matching the user’s input with relevant documents, ensuring access to accurate and current information. Techniques like Dense Passage Retrieval (DPR) and cosine similarity rank candidate documents and narrow the results down to the most relevant ones.

Augmentation: Following retrieval, the RAG model combines the user query with the retrieved data, using prompt engineering techniques such as key phrase extraction. This step passes the information and context to the LLM so that it has what it needs to generate an accurate output.

Generation: In this phase, the augmented input is decoded by a suitable model, such as a sequence-to-sequence model, to produce the final response. The generation step aims to make the output coherent, accurate, and tailored to the user’s prompt.
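The three steps above can be sketched end to end in a few lines of Python. This is a toy under stated assumptions, not the original RAG implementation: the "embedding" is a bag-of-words count instead of a dense encoder such as DPR, the documents and prompt wording are invented, and the `llm` argument is a stub standing in for a real language model call.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: term counts (a stand-in for a dense encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Step 1: rank documents by similarity to the query, keep the top k."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def augment(query, passages):
    """Step 2: combine the retrieved passages and the query into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


def generate(prompt, llm):
    """Step 3: hand the augmented prompt to a language model."""
    return llm(prompt)


# Hypothetical knowledge base and question.
documents = [
    "The warranty on the X100 blender lasts two years.",
    "Our office is closed on public holidays.",
]
query = "How long is the X100 warranty?"
passages = retrieve(query, documents)
prompt = augment(query, passages)
answer = generate(prompt, llm=lambda p: f"[stub reply to a {len(p)}-character prompt]")
```

Swapping `embed` for a learned encoder and the stub for a real model changes the quality of each step, but not the retrieve, augment, generate structure.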

What are the Benefits of RAG?

RAG addresses critical challenges in NLP, such as mitigating inaccuracies, reducing reliance on static datasets, and enhancing contextual understanding for more refined and accurate language generation.

RAG’s innovative framework enhances the precision and reliability of generated content, improving the efficiency and adaptability of AI systems.

1. Reduced LLM Hallucinations

By integrating external knowledge sources during prompt generation, RAG ensures that responses are firmly grounded in accurate and contextually relevant information. Responses can also feature citations or references, empowering users to independently verify information. This approach significantly enhances the AI-generated content’s reliability and diminishes hallucinations.

2. Up-to-date & Accurate Responses

RAG mitigates the time cutoff of training data or erroneous content by continuously retrieving real-time information. Developers can seamlessly integrate the latest research, statistics, or news directly into generative models. Moreover, it connects LLMs to live social media feeds, news sites, and dynamic information sources. This feature makes RAG an invaluable tool for applications demanding real-time and precise information.

3. Cost-efficiency

Chatbot development often involves utilizing foundation models that are API-accessible LLMs with broad training. Yet, retraining these FMs for domain-specific data incurs high computational and financial costs. RAG optimizes resource utilization and selectively fetches information as needed, reducing unnecessary computations and enhancing overall efficiency. This improves the economic viability of implementing RAG and contributes to the sustainability of AI systems.

4. Synthesized Information

RAG creates comprehensive and relevant responses by seamlessly blending retrieved knowledge with generative capabilities. This synthesis of diverse information sources enhances the depth of the model’s understanding, offering more accurate outputs.

5. Ease of Training

RAG’s user-friendly nature is manifested in its ease of training. Developers can fine-tune the model effortlessly, adapting it to specific domains or applications. This simplicity in training facilitates the seamless integration of RAG into various AI systems, making it a versatile and accessible solution for advancing language understanding and generation.

RAG’s ability to solve LLM hallucinations and data freshness problems makes it a crucial tool for businesses looking to enhance the accuracy and reliability of their AI systems.

Use Cases of RAG

RAG’s adaptability offers transformative solutions with real-world impact, from knowledge engines to enhancing search capabilities.

1. Knowledge Engine

RAG can transform traditional language models into comprehensive knowledge engines for up-to-date and authentic content creation. It is especially valuable in scenarios where the latest information is required, such as in educational platforms, research environments, or information-intensive industries.

2. Search Augmentation

Integrating LLMs with search engines enriches search results with LLM-generated replies, which improves the accuracy of responses to informational queries. This enhances the user experience and streamlines workflows, making it easier for users to access the information they need for their tasks.

3. Text Summarization

RAG can generate concise and informative summaries of large volumes of text. By retrieving relevant data from third-party sources, it also helps produce precise and thorough summaries, saving users time and effort.

4. Question & Answer Chatbots

Integrating LLMs into chatbots transforms follow-up processes by enabling the automatic extraction of precise information from company documents and knowledge bases. This elevates the efficiency of chatbots in resolving customer queries accurately and promptly.

Future Prospects and Innovations in RAG

With an increasing focus on personalized responses, real-time information synthesis, and reduced dependency on constant retraining, RAG promises revolutionary developments in language models to facilitate dynamic and contextually aware AI interactions.

As RAG matures, its seamless integration into diverse applications with heightened accuracy offers users a refined and reliable interaction experience.

Visit Unite.ai for better insights into AI innovations and technology.


Explore the best developer-friendly API platforms designed to streamline integration, foster innovation, and accelerate development for seamless user experiences.

Developer Friendly Api Platform

#Developer Friendly Api Platform#Consumer Driven Banking#Competitive Market Advantage Through Data#Banking Data Aggregation Services#Advanced Security Architecture#Adr Open Banking#Accredited Data Recipient


Key steps to Building Microservices with Node.js

New Post has been published on https://www.codesolutionstuff.com/key-steps-to-building-microservices-with-node-js/


Microservices architecture is a popular method for building complex, scalable applications. It involves breaking down a large application into smaller, independently deployable services that communicate with each other through APIs. Node.js is a popular choice for building microservices

#API#Architecture#BeginnersGuide#database#Deployment#Event-driven#Maintenance#Microservices#Network Traffic#Node.js#Non-blocking I/O#Programming Language#Scalability#Technology Stack#webdevelopment


The Future of Web Development: Trends, Techniques, and Tools

Web development is a dynamic field that is continually evolving to meet the demands of an increasingly digital world. With businesses relying more on online presence and user experience becoming a priority, web developers must stay abreast of the latest trends, technologies, and best practices. In this blog, we’ll delve into the current landscape of web development, explore emerging trends and tools, and discuss best practices to ensure successful web projects.

Understanding Web Development

Web development involves the creation and maintenance of websites and web applications. It encompasses a variety of tasks, including front-end development (what users see and interact with) and back-end development (the server-side that powers the application). A successful web project requires a blend of design, programming, and usability skills, with a focus on delivering a seamless user experience.

Key Trends in Web Development

Progressive Web Apps (PWAs): PWAs are web applications that provide a native app-like experience within the browser. They offer benefits like offline access, push notifications, and fast loading times. By leveraging modern web capabilities, PWAs enhance user engagement and can lead to higher conversion rates.

Single Page Applications (SPAs): SPAs load a single HTML page and dynamically update content as users interact with the app. This approach reduces page load times and provides a smoother experience. Frameworks like React, Angular, and Vue.js have made developing SPAs easier, allowing developers to create responsive and efficient applications.

Responsive Web Design: With the increasing use of mobile devices, responsive design has become essential. Websites must adapt to various screen sizes and orientations to ensure a consistent user experience. CSS frameworks like Bootstrap and Foundation help developers create fluid, responsive layouts quickly.

Voice Search Optimization: As voice-activated devices like Amazon Alexa and Google Home gain popularity, optimizing websites for voice search is crucial. This involves focusing on natural language processing and long-tail keywords, as users tend to speak in full sentences rather than typing short phrases.

Artificial Intelligence (AI) and Machine Learning: AI is transforming web development by enabling personalized user experiences and smarter applications. Chatbots, for instance, can provide instant customer support, while AI-driven analytics tools help developers understand user behavior and optimize websites accordingly.

Emerging Technologies in Web Development

JAMstack Architecture: JAMstack (JavaScript, APIs, Markup) is a modern web development architecture that decouples the front end from the back end. This approach enhances performance, security, and scalability by serving static content and fetching dynamic content through APIs.

WebAssembly (Wasm): WebAssembly allows developers to run high-performance code on the web. It opens the door for languages like C, C++, and Rust to be used for web applications, enabling complex computations and graphics rendering that were previously difficult to achieve in a browser.

Serverless Computing: Serverless architecture allows developers to build and run applications without managing server infrastructure. Platforms like AWS Lambda and Azure Functions enable developers to focus on writing code while the cloud provider handles scaling and maintenance, resulting in more efficient workflows.

Static Site Generators (SSGs): SSGs like Gatsby and Next.js allow developers to build fast and secure static websites. By pre-rendering pages at build time, SSGs improve performance and enhance SEO, making them ideal for blogs, portfolios, and documentation sites.

API-First Development: This approach prioritizes building APIs before developing the front end. API-first development ensures that various components of an application can communicate effectively and allows for easier integration with third-party services.
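The static site generator item above describes pre-rendering pages at build time. The sketch below shows that idea in miniature with Python's standard library; it is not how Gatsby or Next.js work internally, and the page template, post data, and output file naming are all hypothetical.

```python
from html import escape
from string import Template

# Hypothetical page layout shared by every post.
PAGE = Template(
    "<html><head><title>$title</title></head>"
    "<body><h1>$title</h1><p>$body</p></body></html>"
)


def build_site(posts):
    """Pre-render every post to an HTML string, keyed by output file name.

    All rendering happens once, at build time, so a web server only has
    to return ready-made files.
    """
    return {
        f"{slug}.html": PAGE.substitute(
            title=escape(post["title"]), body=escape(post["body"])
        )
        for slug, post in posts.items()
    }


site = build_site({"hello": {"title": "Hello & welcome", "body": "First post"}})
```

A real build step would write each entry of `site` to disk and upload the files to a static host or CDN.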

Best Practices for Successful Web Development

Focus on User Experience (UX): Prioritizing user experience is essential for any web project. Conduct user research to understand your audience's needs, create wireframes, and test prototypes to ensure your design is intuitive and engaging.

Emphasize Accessibility: Making your website accessible to all users, including those with disabilities, is a fundamental aspect of web development. Adhere to the Web Content Accessibility Guidelines (WCAG) by using semantic HTML, providing alt text for images, and ensuring keyboard navigation is possible.

Optimize Performance: Website performance significantly impacts user satisfaction and SEO. Optimize images, minify CSS and JavaScript, and leverage browser caching to ensure fast loading times. Tools like Google PageSpeed Insights can help identify areas for improvement.

Implement Security Best Practices: Security is paramount in web development. Use HTTPS to encrypt data, implement secure authentication methods, and validate user input to protect against vulnerabilities. Regularly update dependencies to guard against known exploits.

Stay Current with Technology: The web development landscape is constantly changing. Stay informed about the latest trends, tools, and technologies by participating in online courses, attending webinars, and engaging with the developer community. Continuous learning is crucial to maintaining relevance in this field.
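The security advice above about validating user input can be illustrated with a short sketch. It combines an allowlist check with a parameterized SQL query, using Python's built-in `sqlite3` module; the table layout, the username rule, and the `find_user` helper are assumptions made for the example.

```python
import re
import sqlite3

# Assumed rule: usernames are 3 to 20 letters, digits, or underscores.
USERNAME = re.compile(r"[A-Za-z0-9_]{3,20}")


def find_user(conn, username):
    """Look up a user, rejecting malformed input before it reaches the database."""
    if not USERNAME.fullmatch(username):
        raise ValueError("invalid username")
    # The ? placeholder keeps the value separate from the SQL text,
    # so user input is never interpreted as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchone()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (name) VALUES (?)", ("alice",))
user = find_user(conn, "alice")
```

Calling `find_user(conn, "alice'; DROP TABLE users; --")` raises `ValueError` instead of running a query, and even without the allowlist the placeholder would treat the whole string as a plain value.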

Essential Tools for Web Development

Version Control Systems: Git is an essential tool for managing code changes and collaboration among developers. Platforms like GitHub and GitLab facilitate version control and provide features for issue tracking and code reviews.

Development Frameworks: Frameworks like React, Angular, and Vue.js streamline the development process by providing pre-built components and structures. For back-end development, frameworks like Express.js and Django can speed up the creation of server-side applications.

Content Management Systems (CMS): CMS platforms like WordPress, Joomla, and Drupal enable developers to create and manage websites easily. They offer flexibility and scalability, making it simple to update content without requiring extensive coding knowledge.

Design Tools: Tools like Figma, Sketch, and Adobe XD help designers create user interfaces and prototypes. These tools facilitate collaboration between designers and developers, ensuring that the final product aligns with the initial vision.

Analytics and Monitoring Tools: Google Analytics, Hotjar, and other analytics tools provide insights into user behavior, allowing developers to assess the effectiveness of their websites. Monitoring tools can alert developers to issues such as downtime or performance degradation.

Conclusion

Web development is a rapidly evolving field that requires a blend of creativity, technical skills, and a user-centric approach. By understanding the latest trends and technologies, adhering to best practices, and leveraging essential tools, developers can create engaging and effective web experiences. As we look to the future, those who embrace innovation and prioritize user experience will be best positioned for success in the competitive world of web development. Whether you are a seasoned developer or just starting, staying informed and adaptable is key to thriving in this dynamic landscape.

More details: https://fabvancesolutions.com/

#fabvancesolutions#digitalagency#digitalmarketingservices#graphic design#startup#ecommerce#branding#marketing#digitalstrategy#googleimagesmarketing


The Evolution of Web Development: A Journey Through the Years

Web development is the work involved in developing a website for the Internet (World Wide Web) or an intranet.

Origin/ Web 1.0:

Tim Berners-Lee created the World Wide Web in 1989 at CERN. The primary goal in the development of the Web was to fulfill the automated information-sharing needs of academics affiliated with institutions and various global organizations. Consequently, HTML was developed in 1993.

Web 2.0:

Web 2.0 introduced increased user engagement and communication. It evolved from the static, read-only nature of Web 1.0 and became an integrated network for engagement and communication. It is often referred to as a user-focused, read-write online network.

Web 3.0:

Web 3.0, considered the third and current version of the web, was introduced in 2014. Web 3.0 aims to turn the web into a sizable, organized database, providing more functionality than traditional search engines.

This evolution transformed static websites into dynamic and responsive platforms, setting the stage for the complex and feature-rich web applications we have today.

Static HTML Pages (1990s)

Introduction of CSS (late 1990s)[13]

JavaScript and Dynamic HTML (1990s - early 2000s)[14][15]

AJAX (1998)[16]

Rise of Content management systems (CMS) (mid-2000s)

Mobile web (late 2000s - 2010s)

Single-page applications (SPAs) and front-end frameworks (2010s)

Server-side JavaScript (2010s)

Microservices and API-driven development (2010s - present)

Progressive web apps (PWAs) (2010s - present)

JAMstack Architecture (2010s - present)

WebAssembly (Wasm) (2010s - present)

Serverless computing (2010s - present)

AI and Machine Learning Integration (2010s - present)


Harnessing the Power of Data Engineering for Modern Enterprises

In the contemporary business landscape, data has emerged as the lifeblood of organizations, fueling innovation, strategic decision-making, and operational efficiency. As businesses generate and collect vast amounts of data, the need for robust data engineering services has become more critical than ever. SG Analytics offers comprehensive data engineering solutions designed to transform raw data into actionable insights, driving business growth and success.

The Importance of Data Engineering

Data engineering is the foundational process that involves designing, building, and managing the infrastructure required to collect, store, and analyze data. It is the backbone of any data-driven enterprise, ensuring that data is clean, accurate, and accessible for analysis. In a world where businesses are inundated with data from various sources, data engineering plays a pivotal role in creating a streamlined and efficient data pipeline.

SG Analytics’ data engineering services are tailored to meet the unique needs of businesses across industries. By leveraging advanced technologies and methodologies, SG Analytics helps organizations build scalable data architectures that support real-time analytics and decision-making. Whether it’s cloud-based data warehouses, data lakes, or data integration platforms, SG Analytics provides end-to-end solutions that enable businesses to harness the full potential of their data.

Building a Robust Data Infrastructure

At the core of SG Analytics’ data engineering services is the ability to build robust data infrastructure that can handle the complexities of modern data environments. This includes the design and implementation of data pipelines that facilitate the smooth flow of data from source to destination. By automating data ingestion, transformation, and loading processes, SG Analytics ensures that data is readily available for analysis, reducing the time to insight.
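
As a rough illustration of the ingestion, transformation, and loading steps described above, here is a minimal sketch in Python. It is not SG Analytics' tooling; the sample CSV, the `orders` table, and every function name are assumptions made up for this example, and it uses only the standard library.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file, API, or database.
RAW_CSV = """order_id,customer,amount
1001, alice ,19.99
1002,BOB,5.00
1003,carol,not_a_number
"""


def ingest(raw_text):
    """Read CSV text into a list of dictionaries, one per row."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Normalize customer names and parse amounts, skipping rows that fail to parse."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would log this row or route it to an error table
        clean.append((int(row["order_id"]), row["customer"].strip().lower(), amount))
    return clean


def load(records, conn):
    """Write the transformed records into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(raw_text, conn):
    """Run ingest -> transform -> load and return (row count, total amount)."""
    load(transform(ingest(raw_text)), conn)
    return conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone()
```

Calling `run_pipeline(RAW_CSV, sqlite3.connect(":memory:"))` loads the two parseable rows and drops the malformed one. Keeping the three stages as separate functions makes each one easy to test and to swap out, for example replacing SQLite with a cloud data warehouse.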

One of the key challenges businesses face is dealing with the diverse formats and structures of data. SG Analytics excels in data integration, bringing together data from various sources such as databases, APIs, and third-party platforms. This unified approach to data management ensures that businesses have a single source of truth, enabling them to make informed decisions based on accurate and consistent data.

Leveraging Cloud Technologies for Scalability

As businesses grow, so does the volume of data they generate. Traditional on-premise data storage solutions often struggle to keep up with this exponential growth, leading to performance bottlenecks and increased costs. SG Analytics addresses this challenge by leveraging cloud technologies to build scalable data architectures.

Cloud-based data engineering solutions offer several advantages, including scalability, flexibility, and cost-efficiency. SG Analytics helps businesses migrate their data to the cloud, enabling them to scale their data infrastructure in line with their needs. Whether it’s setting up cloud data warehouses or implementing data lakes, SG Analytics ensures that businesses can store and process large volumes of data without compromising on performance.

Ensuring Data Quality and Governance

Inaccurate or incomplete data can lead to poor decision-making and costly mistakes. That’s why data quality and governance are critical components of SG Analytics’ data engineering services. By implementing data validation, cleansing, and enrichment processes, SG Analytics ensures that businesses have access to high-quality data that drives reliable insights.
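
To make validation and cleansing more concrete, the following Python sketch shows one simple way such checks could look. The record fields (`customer_id`, `email`, `age`), the rules, and the function names are all hypothetical and chosen only for illustration; real rule sets depend on the data and the business.

```python
from dataclasses import dataclass, field


@dataclass
class ValidationReport:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (record, reason) pairs


def cleanse(record):
    """Trim whitespace on string fields and lowercase the email address."""
    out = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
    if isinstance(out.get("email"), str):
        out["email"] = out["email"].lower()
    return out


def validate(record):
    """Return a reason string if the record is invalid, otherwise None."""
    if not record.get("customer_id"):
        return "missing customer_id"
    if "@" not in (record.get("email") or ""):
        return "malformed email"
    age = record.get("age")
    if age is not None and not (0 <= age <= 130):
        return "age out of range"
    return None


def run_quality_checks(records):
    """Cleanse, validate, and de-duplicate records, keeping a reason for each rejection."""
    report = ValidationReport()
    seen_ids = set()
    for raw in records:
        rec = cleanse(raw)
        reason = validate(rec)
        if reason is None and rec["customer_id"] in seen_ids:
            reason = "duplicate customer_id"
        if reason:
            report.rejected.append((rec, reason))
        else:
            seen_ids.add(rec["customer_id"])
            report.valid.append(rec)
    return report
```

Recording a reason for every rejected record, rather than silently dropping it, is what makes the results auditable later.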

Data governance is equally important, as it defines the policies and procedures for managing data throughout its lifecycle. SG Analytics helps businesses establish robust data governance frameworks that ensure compliance with regulatory requirements and industry standards. This includes data lineage tracking, access controls, and audit trails, all of which contribute to the security and integrity of data.

Enhancing Data Analytics with Natural Language Processing Services

In today’s data-driven world, businesses are increasingly turning to advanced analytics techniques to extract deeper insights from their data. One such technique is natural language processing (NLP), a branch of artificial intelligence that enables computers to understand, interpret, and generate human language.

SG Analytics offers cutting-edge natural language processing services as part of its data engineering portfolio. By integrating NLP into data pipelines, SG Analytics helps businesses analyze unstructured data, such as text, social media posts, and customer reviews, to uncover hidden patterns and trends. This capability is particularly valuable in industries like healthcare, finance, and retail, where understanding customer sentiment and behavior is crucial for success.

NLP services can be used to automate various tasks, such as sentiment analysis, topic modeling, and entity recognition. For example, a retail business can use NLP to analyze customer feedback and identify common complaints, allowing them to address issues proactively. Similarly, a financial institution can use NLP to analyze market trends and predict future movements, enabling them to make informed investment decisions.

By incorporating NLP into their data engineering services, SG Analytics empowers businesses to go beyond traditional data analysis and unlock the full potential of their data. Whether it’s extracting insights from vast amounts of text data or automating complex tasks, NLP services provide businesses with a competitive edge in the market.

Driving Business Success with Data Engineering

The ultimate goal of data engineering is to drive business success by enabling organizations to make data-driven decisions. SG Analytics’ data engineering services provide businesses with the tools and capabilities they need to achieve this goal. By building robust data infrastructure, ensuring data quality and governance, and leveraging advanced analytics techniques like NLP, SG Analytics helps businesses stay ahead of the competition.

In a rapidly evolving business landscape, the ability to harness the power of data is a key differentiator. With SG Analytics’ data engineering services, businesses can unlock new opportunities, optimize their operations, and achieve sustainable growth. Whether you’re a small startup or a large enterprise, SG Analytics has the expertise and experience to help you navigate the complexities of data engineering and achieve your business objectives.

5 notes

Text

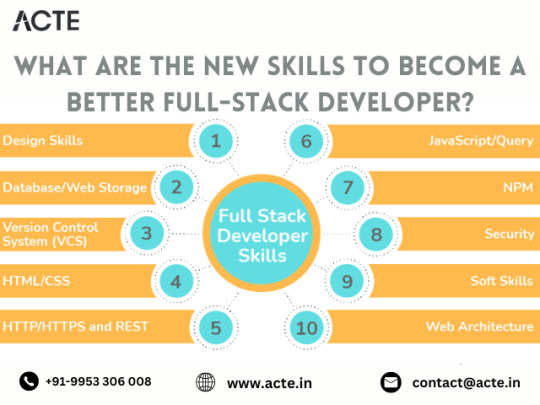

Elevating Your Full-Stack Developer Expertise: Exploring Emerging Skills and Technologies

Introduction: In the dynamic landscape of web development, staying at the forefront requires continuous learning and adaptation. Full-stack developers play a pivotal role in crafting modern web applications, balancing frontend finesse with backend robustness. This guide delves into the evolving skills and technologies that can propel full-stack developers to new heights of expertise and innovation.

Pioneering Progress: Key Skills for Full-Stack Developers

1. Innovating with Microservices Architecture:

Microservices have redefined application development, offering scalability and flexibility in the face of complexity. Mastery of container tools like Docker and orchestration platforms like Kubernetes empowers developers to architect, deploy, and manage microservices efficiently. By breaking down monolithic applications into modular components, developers can iterate rapidly and respond to changing requirements with agility.

2. Embracing Serverless Computing:

The advent of serverless architecture has revolutionized infrastructure management, freeing developers from the burdens of server maintenance. Platforms such as AWS Lambda and Azure Functions enable developers to focus solely on code development, driving efficiency and cost-effectiveness. Embrace serverless computing to build scalable, event-driven applications that adapt seamlessly to fluctuating workloads.

3. Crafting Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) herald a new era of web development, delivering native app-like experiences within the browser. Harness the power of technologies like Service Workers and Web App Manifests to create PWAs that are fast, reliable, and engaging. With features like offline functionality and push notifications, PWAs blur the lines between web and mobile, captivating users and enhancing engagement.

4. Harnessing GraphQL for Flexible Data Management:

GraphQL has emerged as a versatile alternative to RESTful APIs, offering a unified interface for data fetching and manipulation. Dive into GraphQL's intuitive query language and schema-driven approach to simplify data interactions and optimize performance. With GraphQL, developers can fetch precisely the data they need, minimizing overhead and maximizing efficiency.

5. Unlocking Potential with Jamstack Development:

Jamstack architecture empowers developers to build fast, secure, and scalable web applications using modern tools and practices. Explore frameworks like Gatsby and Next.js to leverage pre-rendering, serverless functions, and CDN caching. By decoupling frontend presentation from backend logic, Jamstack enables developers to deliver blazing-fast experiences that delight users and drive engagement.

6. Integrating Headless CMS for Content Flexibility:

Headless CMS platforms offer developers unprecedented control over content management, enabling seamless integration with frontend frameworks. Explore platforms like Contentful and Strapi to decouple content creation from presentation, facilitating dynamic and personalized experiences across channels. With headless CMS, developers can iterate quickly and deliver content-driven applications with ease.

7. Optimizing Single Page Applications (SPAs) for Performance:

Single Page Applications (SPAs) provide immersive user experiences but require careful optimization to ensure performance and responsiveness. Implement techniques like lazy loading and server-side rendering to minimize load times and enhance interactivity. By optimizing resource delivery and prioritizing critical content, developers can create SPAs that deliver a seamless and engaging user experience.

8. Infusing Intelligence with Machine Learning and AI:

Machine learning and artificial intelligence open new frontiers for full-stack developers, enabling intelligent features and personalized experiences. Dive into frameworks like TensorFlow.js and PyTorch to build recommendation systems, predictive analytics, and natural language processing capabilities. By harnessing the power of machine learning, developers can create smarter, more adaptive applications that anticipate user needs and preferences.

9. Safeguarding Applications with Cybersecurity Best Practices:

As cyber threats continue to evolve, cybersecurity remains a critical concern for developers and organizations alike. Stay informed about common vulnerabilities and adhere to best practices for securing applications and user data. By implementing robust security measures and proactive monitoring, developers can protect against potential threats and safeguard the integrity of their applications.

10. Streamlining Development with CI/CD Pipelines:

Continuous Integration and Deployment (CI/CD) pipelines are essential for accelerating development workflows and ensuring code quality and reliability. Explore tools like Jenkins, CircleCI, and GitLab CI/CD to automate testing, integration, and deployment processes. By embracing CI/CD best practices, developers can deliver updates and features with confidence, driving innovation and agility in their development cycles.
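
To illustrate the point from item 4 about fetching precisely the data you need, here is a small Python sketch of field selection. It is not a GraphQL implementation and uses no GraphQL library; the `USERS` data, the `select` helper, and the dictionary-based selection format are assumptions invented for this example to show the idea of a client naming the fields it wants.

```python
# Hypothetical in-memory data standing in for a backend service.
USERS = {
    1: {
        "id": 1,
        "name": "Ada",
        "email": "ada@example.com",
        "posts": [
            {"id": 10, "title": "Hello", "body": "First post text"},
            {"id": 11, "title": "Again", "body": "Second post text"},
        ],
    }
}


def select(data, selection):
    """Return only the requested fields.

    `selection` maps field names to True (take the value as-is) or to a
    nested dict (descend into a nested object or a list of objects).
    """
    if isinstance(data, list):
        return [select(item, selection) for item in data]
    result = {}
    for field_name, sub in selection.items():
        value = data[field_name]
        result[field_name] = select(value, sub) if isinstance(sub, dict) else value
    return result


# The client asks for the user's name and post titles, and nothing else.
wanted = {"name": True, "posts": {"title": True}}
response = select(USERS[1], wanted)
```

The response contains only `name` and the post titles, so the email address and post bodies never leave the server. A real GraphQL server adds a typed schema, validation, and resolvers on top of this basic idea.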

#full stack developer#education#information#full stack web development#front end development#web development#frameworks#technology#backend#full stack developer course

2 notes

Text

Learn how composable analytics helps you extract real-time insights by assembling reusable data components to meet dynamic business needs.

#Composable Analytics#Traditional Analytics Models#API-Driven Architecture#Application Programming Interfaces#Actionable Insights#Real-Time Visualization

0 notes

Text

Drasi by Microsoft: A New Approach to Tracking Rapid Data Changes

New Post has been published on https://thedigitalinsider.com/drasi-by-microsoft-a-new-approach-to-tracking-rapid-data-changes/

Imagine managing a financial portfolio where every millisecond counts. A split-second delay could mean a missed profit or a sudden loss. Today, businesses in every sector rely on real-time insights. Finance, healthcare, retail, and cybersecurity all need to react instantly to changes, whether it is an alert, a patient update, or a shift in inventory. But traditional data processing cannot keep up. These systems often respond late, costing time and opportunities.

That is where Drasi by Microsoft comes in. Designed to track and react to data changes as they happen, Drasi operates continuously. Unlike batch-processing systems, it does not wait for intervals to process information. Drasi gives businesses the real-time responsiveness they need to stay ahead of competitors.

Understanding Drasi

Drasi is an advanced event-driven architecture powered by Artificial Intelligence (AI) and designed to handle real-time data changes. Traditional data systems often rely on batch processing, where data is collected and analyzed at set intervals. This approach can cause delays, which can be costly for industries that depend on quick responses. Drasi changes the game by using AI to track data continuously and react instantly. This enables organizations to make decisions as events happen instead of waiting for the next processing cycle.

A core feature of Drasi is its AI-driven continuous query processing. Unlike traditional queries that run on a schedule, continuous queries operate non-stop, allowing Drasi to monitor data flows in real time. This means even the smallest data change is captured immediately, giving companies a valuable advantage in responding quickly. Drasi’s machine learning capabilities help it integrate smoothly with various data sources, including IoT devices, databases, social media, and cloud services. This broad compatibility provides a complete view of data, helping companies identify patterns, detect anomalies, and automate responses effectively.

Another key aspect of Drasi’s design is its intelligent reaction mechanism. Instead of simply alerting users to a data change, Drasi can immediately trigger pre-set responses and even use machine learning to improve these actions over time. For example, in finance, if Drasi detects an unusual market event, it can automatically send alerts, notify the right teams, or even make trades. This AI-powered, real-time functionality gives Drasi a clear advantage in industries where quick, adaptive responses make a difference.

By combining continuous AI-powered queries with rapid response capabilities, Drasi enables companies to act on data changes the moment they happen. This approach boosts efficiency, cuts down on delays, and reveals the full potential of real-time insights. With AI and machine learning built in, Drasi’s architecture offers businesses a powerful advantage in today’s fast-paced, data-driven world.

Why Drasi Matters for Real-Time Data

As data generation continues to grow rapidly, companies are under increasing pressure to process and respond to information as it becomes available. Traditional systems often face issues, such as latency, scalability, and integration, which limit their usefulness in real-time settings. This is especially critical in high-stakes sectors like finance, healthcare, and cybersecurity, where even brief delays can result in losses. Drasi addresses these challenges with an architecture designed to handle large amounts of data while maintaining speed, reliability, and adaptability.

In financial trading, for example, investment firms and banks depend on real-time data to make quick decisions. A split-second delay in processing stock prices can mean the difference between a profitable trade and a missed chance. Traditional systems that process data in intervals simply cannot keep up with the pace of modern markets. Drasi’s real-time processing capability allows financial institutions to respond instantly to market shifts, optimizing trading strategies.

Similarly, in a connected smart home, IoT sensors track everything from security to energy use. A traditional system may only check for updates every few minutes, potentially leaving the home vulnerable if an emergency occurs during that interval. Drasi enables constant monitoring and immediate responses, such as locking doors at the first sign of unusual activity, thereby enhancing security and efficiency.

Retail and e-commerce also benefit significantly from Drasi’s capabilities. E-commerce platforms rely on understanding customer behavior in real time. For instance, if a customer adds an item to their cart but doesn’t complete the purchase, Drasi can immediately detect this and trigger a personalized prompt, like a discount code, to encourage the sale. This ability to react to customer actions as they happen can lead to more sales and create a more engaging shopping experience. In each of these cases, Drasi fills a gap that traditional systems leave open, empowering businesses to act on live data in ways previously out of reach.

Drasi’s Real-Time Data Processing Architecture

Drasi’s design is centred around an advanced, modular architecture, prioritizing scalability, speed, and real-time operation. Mainly, it depends on continuous data ingestion, persistent monitoring, and automated response mechanisms to ensure immediate action on data changes.

When new data enters Drasi’s system, it follows a streamlined operational workflow. First, it ingests data from various sources, including IoT devices, APIs, cloud databases, and social media feeds. This flexibility enables Drasi to collect data from virtually any source, making it highly adaptable to different environments.

Once data is ingested, Drasi’s continuous queries immediately monitor the data for changes, filtering and analyzing it as soon as it arrives. These queries run perpetually, scanning for specific conditions or anomalies based on predefined parameters. Next, Drasi’s reaction system takes over, allowing for automatic responses to these changes. For instance, if Drasi detects a significant increase in website traffic due to a promotional campaign, it can automatically adjust server resources to accommodate the spike, preventing potential downtime.

Drasi’s operational workflow involves several key steps. Data is ingested from connected sources, ensuring real-time compatibility with devices and databases. Continuous queries then scan for predefined changes, eliminating delays associated with batch processing. Advanced algorithms process incoming data to provide meaningful insights immediately. Based on these data insights, Drasi can trigger predefined responses, such as notifications, alerts, or direct actions. Finally, Drasi’s real-time analytics transform data into actionable insights, empowering decision-makers to act immediately.
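
The ingest, continuous query, and reaction steps described above can be sketched in a few lines of Python. This is not Drasi's API or query language. It is only a toy model of the general pattern, and the `Change` and `ContinuousQuery` classes, the freezer example, and the threshold are all assumptions invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Change:
    """One change event arriving from a source (hypothetical shape)."""
    key: str
    value: float


class ContinuousQuery:
    """Keeps the set of keys that match a predicate and reports when that set changes."""

    def __init__(self, predicate: Callable[[float], bool]):
        self.predicate = predicate
        self.matching: Dict[str, float] = {}
        self.reactions: List[Callable[[str, str, float], None]] = []

    def on_result_change(self, reaction):
        """Register a reaction called as reaction(event, key, value)."""
        self.reactions.append(reaction)

    def apply(self, change: Change):
        """Evaluate one incoming change immediately instead of waiting for a batch."""
        was_matching = change.key in self.matching
        now_matching = self.predicate(change.value)
        if now_matching:
            self.matching[change.key] = change.value
        else:
            self.matching.pop(change.key, None)
        if now_matching and not was_matching:
            event = "added"
        elif was_matching and not now_matching:
            event = "removed"
        else:
            return  # membership in the result set did not change; nothing to react to
        for reaction in self.reactions:
            reaction(event, change.key, change.value)


# Example: react when a freezer's temperature rises above -15 degrees.
alerts = []
too_warm = ContinuousQuery(lambda temperature: temperature > -15.0)
too_warm.on_result_change(lambda event, key, value: alerts.append((event, key, value)))

for change in [Change("freezer-1", -18.0), Change("freezer-1", -12.0),
               Change("freezer-1", -10.0), Change("freezer-1", -20.0)]:
    too_warm.apply(change)
```

After the loop, `alerts` holds one "added" event from when the freezer crossed the threshold and one "removed" event from when it recovered. The reading of -10.0 triggers nothing because the freezer was already in the result set. Reacting to changes in the query result, rather than to every raw reading, is what keeps downstream alerts from becoming noisy.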

By offering this streamlined process, Drasi ensures that data is not only tracked but also acted upon instantly, enhancing a company’s ability to adapt to real-time conditions.

Benefits and Use Cases of Drasi

Drasi offers benefits far beyond typical data processing capabilities and provides real-time responsiveness essential for businesses that need instant data insights. One key advantage is its enhanced efficiency and performance. By processing data as it arrives, Drasi removes delays common in batch processing, leading to faster decision-making, improved productivity, and reduced downtime. For example, a logistics company can use Drasi to monitor delivery statuses and reroute vehicles in real time, optimizing operations to reduce delivery times and increase customer satisfaction.

Real-time insights are another benefit. In industries like finance, healthcare, and retail, where information changes quickly, having live data is invaluable. Drasi’s ability to provide immediate insights enables organizations to make informed decisions on the spot. For example, a hospital using Drasi can monitor patient vitals in real time, supplying doctors with important updates that could make a difference in patient outcomes.

Furthermore, Drasi integrates with existing infrastructure and enables businesses to employ its capabilities without investing in costly system overhauls. A smart city project, for example, could use Drasi to integrate traffic data from multiple sources, providing real-time monitoring and management of traffic flows to reduce congestion effectively.

As an open-source tool, Drasi is also cost-effective, offering flexibility without locking businesses into expensive proprietary systems. Companies can customize and expand Drasi’s functionalities to suit their needs, making it an affordable solution for improving data management without a significant financial commitment.

The Bottom Line

In conclusion, Drasi redefines real-time data management, offering businesses an advantage in today’s fast-paced world. Its AI-driven, event-based architecture enables continuous monitoring, instant insights, and automatic responses, which are invaluable across industries.

By integrating with existing infrastructure and providing cost-effective, customizable solutions, Drasi empowers companies to make immediate, data-driven decisions that keep them competitive and adaptive. In an environment where every second matters, Drasi proves to be a powerful tool for real-time data processing.

Visit the Drasi website for information about getting started, concepts, how-to explainers, and more.

#ai#AI-powered#AI-powered data processing#alerts#Algorithms#Analytics#anomalies#APIs#approach#architecture#artificial#Artificial Intelligence#banks#Behavior#change#Cloud#cloud services#code#Commerce#Companies#continuous#continuous monitoring#cybersecurity#data#data ingestion#Data Management#data processing#data-driven#data-driven decisions#databases

0 notes

Text

The Role of Microservices In Modern Software Architecture

Are you ready to dive into the exciting world of microservices and discover how they are revolutionizing modern software architecture? In today’s rapidly evolving digital landscape, businesses are constantly seeking ways to build more scalable, flexible, and resilient applications. Enter microservices – a groundbreaking approach that allows developers to break down monolithic systems into smaller, independent components. Join us as we unravel the role of microservices in shaping the future of software design and explore their immense potential for transforming your organization’s technology stack. Buckle up for an enlightening journey through the intricacies of this game-changing architectural style!

Introduction To Microservices And Software Architecture

In today’s rapidly evolving technological landscape, software architecture has become a crucial aspect for businesses looking to stay competitive. As companies strive for faster delivery of high-quality software, the traditional monolithic architecture has proved to be limiting and inefficient. This is where microservices come into play.

Microservices are an architectural approach that involves breaking down large, complex applications into smaller, independent services that can communicate with each other through APIs. These services are self-contained and can be deployed and updated independently without affecting the entire application.

Software architecture, on the other hand, refers to the overall design of a software system, including its components, the relationships between them, and their interactions. It provides a blueprint for building scalable, maintainable, and robust applications.

So how do microservices fit into the world of software architecture? Let’s delve deeper into this topic by understanding the fundamentals of both microservices and software architecture.

As mentioned earlier, microservices are small independent services that work together to form a larger application. Each service performs a specific business function and runs as an autonomous process. These services can be developed in different programming languages or frameworks based on what best suits their purpose.

The concept of microservices originated from Service-Oriented Architecture (SOA). However, unlike SOA which tends to have larger services with complex interconnections, microservices follow the principle of single responsibility – meaning each service should only perform one task or function.

Evolution Of Software Architecture: From Monolithic To Microservices

Software architecture has evolved significantly over the years, from traditional monolithic architectures to more modern and agile microservices architectures. This evolution has been driven by the need for more flexible, scalable, and efficient software systems. In this section, we will explore the journey of software architecture from monolithic to microservices and how it has transformed the way modern software is built.

Monolithic Architecture:

In a monolithic architecture, all components of an application are tightly coupled together into a single codebase. This means that any changes made to one part of the code can potentially impact other parts of the application. Monolithic applications are usually large and complex, making them difficult to maintain and scale.

One of the main drawbacks of monolithic architecture is its lack of flexibility. The entire application needs to be redeployed whenever a change or update is made, which can result in downtime and disruption for users. This makes it challenging for businesses to respond quickly to changing market needs.

The Rise of Microservices:

To overcome these limitations, software architects started exploring new ways of building applications that were more flexible and scalable. Microservices emerged as a solution to these challenges in software development.

Microservices architecture decomposes an application into smaller independent services that communicate with each other through well-defined APIs. Each service is responsible for a specific business function or feature and can be developed, deployed, and scaled independently without affecting other services.
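
A minimal Python sketch can show what a well-defined API between services means in practice. Both services run in one process here to keep the example short; in a real deployment they would run separately and talk over HTTP, gRPC, or a message broker. The service names, the `reserve` method, and the response shapes are assumptions made up for this illustration.

```python
from typing import Protocol


class InventoryAPI(Protocol):
    """The contract the order service depends on; it says nothing about how stock is stored."""

    def reserve(self, sku: str, quantity: int) -> bool: ...


class InventoryService:
    """Owns the stock data. Other services never read or write `_stock` directly."""

    def __init__(self, stock):
        self._stock = dict(stock)

    def reserve(self, sku, quantity):
        if self._stock.get(sku, 0) < quantity:
            return False
        self._stock[sku] -= quantity
        return True


class OrderService:
    """Owns the order data and reaches inventory only through the InventoryAPI contract."""

    def __init__(self, inventory: InventoryAPI):
        self._inventory = inventory
        self._orders = []

    def place_order(self, sku, quantity):
        if not self._inventory.reserve(sku, quantity):
            return {"status": "rejected", "reason": "insufficient stock"}
        self._orders.append((sku, quantity))
        return {"status": "accepted", "order_number": len(self._orders)}


inventory = InventoryService({"widget": 3})
orders = OrderService(inventory)
first = orders.place_order("widget", 2)   # enough stock: accepted
second = orders.place_order("widget", 2)  # only one left: rejected
```

Because `OrderService` depends only on the `reserve` contract, the inventory side can change its storage, language, or deployment without the order side noticing, which is the independence the paragraph above describes.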

Advantages Of Using Microservices In Modern Software Development

Microservices have gained immense popularity in recent years, and for good reason. They offer numerous advantages over traditional monolithic software development approaches, making them a highly sought-after approach in modern software architecture.

1. Scalability: One of the key advantages of using microservices is their ability to scale independently. In a monolithic system, any changes or updates made to one component can potentially affect the entire application, making it difficult to scale specific functionalities as needed. However, with microservices, each service is developed and deployed independently, allowing for easier scalability and flexibility.

2. Improved Fault Isolation: In a monolithic architecture, a single error or bug can bring down the entire system. This makes troubleshooting and debugging a time-consuming and challenging process. With microservices, each service operates independently from others, which means that if one service fails or experiences issues, it will not impact the functioning of other services. This enables developers to quickly identify and resolve issues without affecting the overall system.

3. Faster Development: Microservices promote faster development cycles because they allow developers to work on different services concurrently without disrupting each other’s work. Moreover, since services are smaller than monoliths, they are easier to understand and maintain, which reduces development time.

4. Technology Diversity: Monolithic systems often rely on a single technology stack for all components of the application. This can be limiting when new technologies emerge or when certain functionalities require specialized tools or languages that may not be compatible with the existing stack. In contrast, microservices allow for a diverse range of technologies to be used for different services, providing more flexibility and adaptability.

5. Easy Deployment: Microservices are designed to be deployed independently, which means that updates or changes to one service can be rolled out without affecting the entire system. This makes deployments faster and less risky compared to monolithic architectures, where any changes require the entire application to be redeployed.

6. Better Fault Tolerance: In a monolithic architecture, a single point of failure can bring down the entire system. With microservices, failures are isolated to individual services, which means that even if one service fails, the rest of the system can continue functioning. This improves overall fault tolerance in the application.

7. Improved Team Productivity: Microservices promote a modular approach to software development, allowing teams to work on specific services without needing to understand every aspect of the application. This leads to improved productivity as developers can focus on their areas of expertise and make independent decisions about their service without worrying about how it will affect other parts of the system.

Challenges And Limitations Of Microservices

As with any technology or approach, there are both challenges and limitations to implementing microservices in modern software architecture. While the benefits of this architectural style are numerous, it is important to be aware of these potential obstacles in order to effectively navigate them.

1. Complexity: One of the main challenges of microservices is their inherent complexity. When a system is broken down into smaller, independent services, it becomes more difficult to manage and understand as a whole. This can lead to increased overhead and maintenance costs, as well as potential performance issues if not properly designed and implemented.

2. Distributed Systems Management: Microservices by nature are distributed systems, meaning that each service may be running on different servers or even in different geographical locations. This introduces new challenges for managing and monitoring the system as a whole. It also adds an extra layer of complexity when troubleshooting issues that span multiple services.

3. Communication Between Services: In order for microservices to function effectively, they must be able to communicate with one another seamlessly. This requires robust communication protocols and mechanisms such as APIs or messaging systems. However, setting up and maintaining these connections can be time-consuming and error-prone.

4. Data Consistency: In a traditional monolithic architecture, data consistency is relatively straightforward since all components access the same database instance. In contrast, microservices often have their own databases, which can lead to data consistency issues if not carefully managed through proper synchronization techniques.
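
Challenges 3 and 4 often meet in practice: services that own separate data stores stay in sync by exchanging messages. The Python sketch below shows one common approach, eventual consistency with an idempotent consumer. The standard-library `queue.Queue` stands in for a real message broker, and the service names, event fields, and `drain` helper are assumptions invented for this example.

```python
import queue


class PaymentService:
    """Owns payment data and publishes an event whenever a payment is recorded."""

    def __init__(self, bus):
        self._bus = bus
        self._payments = {}  # this service's own "database"

    def record_payment(self, order_id, amount):
        self._payments[order_id] = amount
        self._bus.put({"event_id": f"pay-{order_id}", "order_id": order_id, "amount": amount})


class ShippingService:
    """Owns shipping data and updates it only in response to events."""

    def __init__(self):
        self.ready_to_ship = set()
        self._seen_events = set()

    def handle(self, event):
        if event["event_id"] in self._seen_events:
            return  # duplicate delivery; ignoring it keeps the handler idempotent
        self._seen_events.add(event["event_id"])
        self.ready_to_ship.add(event["order_id"])


def drain(bus, consumer):
    """Deliver every queued event to the consumer and return how many were delivered."""
    delivered = 0
    while True:
        try:
            event = bus.get_nowait()
        except queue.Empty:
            return delivered
        consumer.handle(event)
        delivered += 1


bus = queue.Queue()
payments = PaymentService(bus)
shipping = ShippingService()

payments.record_payment("A1", 40.0)
before = set(shipping.ready_to_ship)  # still empty: the two stores briefly disagree
payments.record_payment("A1", 40.0)   # the same event is published twice
delivered = drain(bus, shipping)
```

Between publishing and draining, the two services disagree about order A1, which is the consistency gap described in item 4. Once the events are delivered they converge, and the duplicate is ignored because the handler tracks event IDs.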

Best Practices For Implementing Microservices In Your Project

Implementing microservices in your project can bring a multitude of benefits, such as increased scalability, flexibility and faster development cycles. However, it is also important to ensure that the implementation is done correctly in order to fully reap these benefits. In this section, we will discuss some best practices for implementing microservices in your project.

1. Define clear boundaries and responsibilities: One of the key principles of microservices architecture is the idea of breaking down a larger application into smaller independent services. It is crucial to clearly define the boundaries and responsibilities of each service to avoid overlap or duplication of functionality. This can be achieved by using techniques like domain-driven design or event storming to identify distinct business domains and their respective services.

2. Choose appropriate communication protocols: Microservices communicate with each other through APIs, so it is important to carefully consider which protocols to use for these interactions. RESTful APIs are popular due to their simplicity and compatibility with different programming languages. Alternatively, for asynchronous communication between services, you may choose a messaging protocol such as AMQP or an event-streaming platform such as Apache Kafka.

3. Ensure fault tolerance: In a distributed system such as a microservices architecture, failures are inevitable. Therefore, it is important to design for fault tolerance by implementing strategies such as circuit breakers and retries. Retries help a service recover from brief, transient errors, while circuit breakers stop calls to a dependency that keeps failing, which helps prevent cascading failures and improves overall system resilience.
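
The fault tolerance strategies mentioned above can be sketched in a few lines of Python. This is a simplified illustration, not a production implementation: the CircuitBreaker class, the retry helper, the thresholds, and the failing flaky_inventory_lookup function are all hypothetical names chosen for this example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and calls are rejected immediately."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: fall through and allow one trial call.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # Success: close the circuit and forget past failures.
        self.failures = 0
        self.opened_at = None
        return result


def retry(func, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call func, retrying with exponential backoff; re-raise the last error."""
    for attempt in range(attempts):
        try:
            return func()
        except CircuitOpenError:
            raise  # do not keep calling a dependency the breaker has cut off
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))


def flaky_inventory_lookup():
    # Stand-in for a remote call to a service that is currently down.
    raise ConnectionError("inventory service unavailable")


breaker = CircuitBreaker(failure_threshold=2, reset_timeout=60.0)
outcomes = []
for _ in range(3):
    try:
        breaker.call(flaky_inventory_lookup)
    except CircuitOpenError:
        outcomes.append("rejected")
    except ConnectionError:
        outcomes.append("failed")
```

After two consecutive failures the breaker opens, so the third call is rejected without ever reaching the failing service. In practice, teams usually rely on an established resilience library or a service mesh for this rather than hand-rolled code.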

Real-Life Examples Of Successful Implementation Of Microservices

Microservices have gained immense popularity in recent years due to their ability to improve the scalability, flexibility, and agility of software systems. Many organizations across various industries have successfully implemented microservices architecture in their applications, resulting in significant benefits. In this section, we will explore real-life examples of successful implementation of microservices and how they have revolutionized modern software architecture.

1. Netflix: Netflix is a leading streaming service that has disrupted the entertainment industry with its vast collection of movies and TV shows. The company’s ability to grow is often attributed in part to its adoption of microservices architecture. Initially, Netflix had a monolithic application that was becoming difficult to scale and maintain as the user base grew rapidly. To overcome these challenges, the company broke its application down into smaller independent services following the microservices approach.

Each service at Netflix has a specific function such as search, recommendations, or video playback. These services can be developed independently, enabling faster deployment and updates without affecting other parts of the system. This also allows for easier scaling based on demand by adding more instances of the required services. With microservices, Netflix has improved its uptime and performance while keeping costs low.

The Future Of Microservices In Software Architecture

The concept of microservices has been gaining traction in the world of software architecture in recent years. This approach to building applications involves breaking down a monolithic system into smaller, independent services that communicate with each other through well-defined APIs. The benefits of this architecture include increased flexibility, scalability, and resilience.

But what does the future hold for microservices? In this section, we will explore some potential developments and trends that could shape the future of microservices in software architecture.

1. Rise of Serverless Architecture

As organizations continue to move towards cloud-based solutions, serverless architecture is becoming increasingly popular. Servers still exist in this model, but the cloud provider provisions and manages them, so developers no longer handle infrastructure themselves. Instead, they deploy their code directly to a cloud platform such as Amazon Web Services (AWS) or Microsoft Azure, typically through a function service such as AWS Lambda or Azure Functions.

Microservices are a natural fit for serverless architecture as they already follow a distributed model. With serverless, each microservice can be deployed independently, making it easier to scale individual components without affecting the entire system. As serverless continues to grow in popularity, we can expect to see more widespread adoption of microservices.
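
As a rough illustration of what a single serverless microservice might look like, the Python sketch below is modeled on the (event, context) handler shape used by AWS Lambda's Python runtime with an HTTP proxy-style event. The in-memory catalog, the "id" query parameter, and the response fields are assumptions made for this example, not part of any real service.

```python
import json

# Hypothetical data owned by this one small service.
CATALOG = {"1": "Intro to Microservices", "2": "Serverless Basics"}


def handler(event, context):
    """Look up a single catalog item by the 'id' query parameter."""
    # The parameters may be missing or None, so fall back to an empty dict.
    params = event.get("queryStringParameters") or {}
    item_id = params.get("id")
    if item_id not in CATALOG:
        return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
    return {
        "statusCode": 200,
        "body": json.dumps({"id": item_id, "title": CATALOG[item_id]}),
    }


# Local invocation with a hand-built event; no cloud account is needed.
response = handler({"queryStringParameters": {"id": "2"}}, None)
```

Because the function holds no state between calls and depends only on its input event, the platform can run as many copies as demand requires, which is what allows each service to scale on its own.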

2. Increased Adoption of Containerization

Containerization technology such as Docker has revolutionized how applications are deployed and managed. Containers provide an isolated environment for each service, making it easier to package and deploy them anywhere without worrying about compatibility issues.

Conclusion

As we have seen throughout this article, microservices offer clear benefits in scalability, flexibility, and efficiency in modern software architecture. However, it is important to consider carefully whether microservices are right for your specific project.

First and foremost, it is crucial to understand the complexity that comes with implementing a microservices architecture. While it offers many advantages, it also introduces new challenges such as increased communication overhead and the need for specialized tools and processes. Therefore, if your project does not require a high level of scalability or if you do not have a team with sufficient expertise to manage these complexities, using a monolithic architecture may be more suitable.

#website landing page design#magento development#best web development company in united states#asp.net web and application development#web designing company#web development company#logo design company#web development#web design#digital marketing company in usa
