#7 layers of osi model


Text


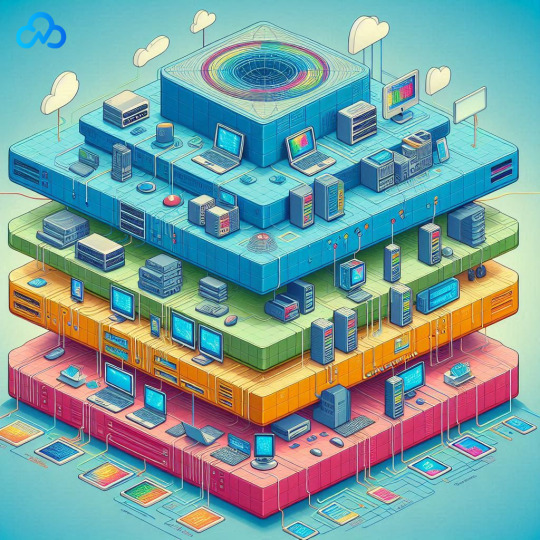

What is the OSI Model? In the vast world of computer networking, the seven-layer OSI model is a blueprint for understanding how the different parts of a network work together. OSI stands for Open Systems Interconnection. The model, published by ISO (International Organization for Standardization) in 1984, serves as a guide to how computers share data.

The 7 Layers of OSI: The model consists of seven layers, each performing specific network tasks, which gives a structured way to approach networking.

1. Physical Layer

2. Data Link Layer

3. Network Layer

4. Transport Layer

5. Session Layer

6. Presentation Layer

7. Application Layer

Together, these seven layers provide a structured approach to understanding and implementing network protocols. Let’s explore each layer to understand the basics:

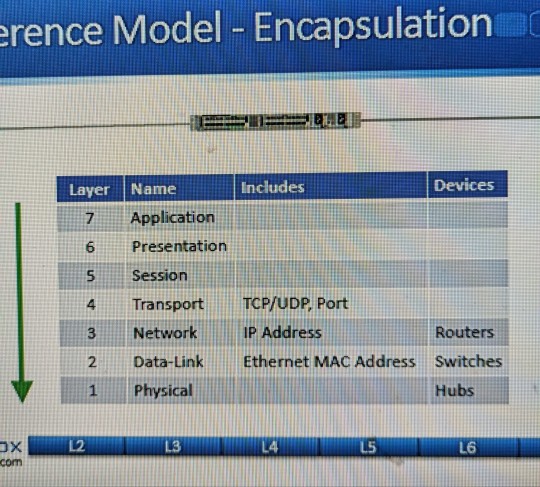

1. Physical Layer: The Physical Layer, at the bottom of the OSI model, handles the actual sending and receiving of raw binary data over physical connections. It deals with cables, connectors, and signal modulation, forming the essential infrastructure of any network.

Key features: • Responsible for the physical transmission of data over media such as cables, using devices such as hubs and repeaters.

• Ensures synchronization of data bits and sets the speed of data transmission.

• Converts data into signals for transmission and defines the physical layout (topology) of network devices.
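To make "converts data into signals" concrete, here is a minimal sketch of Manchester encoding, one line code used on some wired links. It assumes the IEEE 802.3 convention (a 1 is a low-to-high transition, a 0 is high-to-low); the function names are made up for this illustration.

```python
def byte_to_bits(value):
    """Return the 8 bits of a byte, most significant bit first."""
    return [(value >> shift) & 1 for shift in range(7, -1, -1)]


def manchester_encode(bits):
    """Map each bit to two half-bit signal levels (assumed IEEE 802.3 convention)."""
    signal = []
    for bit in bits:
        # 1 -> low then high, 0 -> high then low
        signal.extend((0, 1) if bit else (1, 0))
    return signal


def manchester_decode(signal):
    """Recover bits from pairs of signal levels; reject pairs with no transition."""
    bits = []
    for first, second in zip(signal[0::2], signal[1::2]):
        if (first, second) == (0, 1):
            bits.append(1)
        elif (first, second) == (1, 0):
            bits.append(0)
        else:
            raise ValueError("invalid Manchester pair: no mid-bit transition")
    return bits
```

Because every bit contains a transition, the receiver can recover the sender's clock from the signal itself, which is one way the bit synchronization mentioned above can be achieved.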

2. Data Link Layer: Moving up, the Data Link Layer focuses on creating dependable links between directly connected devices. It manages addressing schemes, error detection, and flow control mechanisms. Ethernet and MAC (Media Access Control) addresses play a crucial role, ensuring effective communication within the same network.

Key features: • Manages the transmission of data frames across the network.

• Detects damaged or lost frames and, in protocols that support it, initiates retransmission.

• Divides data into smaller units called frames and updates frame headers with sender and receiver MAC addresses.
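The framing and error-detection ideas above can be sketched as follows. This is a simplified Ethernet-style frame, not a complete implementation: it skips the preamble and minimum-payload padding, and it assumes `zlib.crc32` appended in little-endian order as the frame check sequence. The MAC addresses in the usage note are placeholders.

```python
import struct
import zlib


def mac_to_bytes(mac):
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def build_frame(dst_mac, src_mac, ethertype, payload):
    """Prefix the payload with destination MAC, source MAC, and EtherType,
    then append a CRC-32 checksum so the receiver can detect corruption."""
    header = mac_to_bytes(dst_mac) + mac_to_bytes(src_mac) + struct.pack("!H", ethertype)
    body = header + payload
    return body + struct.pack("<I", zlib.crc32(body))


def frame_is_intact(frame):
    """Recompute the checksum over everything except the last 4 bytes."""
    body, checksum = frame[:-4], frame[-4:]
    return struct.pack("<I", zlib.crc32(body)) == checksum
```

For example, `build_frame("aa:bb:cc:dd:ee:ff", "11:22:33:44:55:66", 0x0800, b"hello")` yields a 23-byte frame (14-byte header, 5-byte payload, 4-byte checksum), and flipping any single bit makes `frame_is_intact` return `False`.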

3. Network Layer: The Network Layer introduces the concept of logical addressing, often in the form of IP addresses. Its primary role is to route data packets between different networks. Routers, operating at this layer, make decisions based on logical addresses to ensure packets reach their intended destination across interconnected networks.

Key features: • Uses logical addressing to route packets between different networks.

• Introduces IP addresses, allowing communication across diverse networks.

• Utilizes routing protocols to determine the best paths for data transmission.

• Employs ICMP for error reporting and diagnostics, improving network reliability.
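A router's forwarding decision can be sketched as a longest-prefix match over a routing table. The table below is invented for illustration; the prefixes and next-hop names are assumptions, not taken from any real network.

```python
import ipaddress

# Hypothetical routing table: destination prefix -> next hop
ROUTES = {
    "10.0.0.0/8": "router-a",
    "10.1.0.0/16": "router-b",
    "0.0.0.0/0": "default-gateway",
}


def next_hop(destination, routes=ROUTES):
    """Return the next hop for the most specific prefix containing the address."""
    address = ipaddress.ip_address(destination)
    matches = [
        ipaddress.ip_network(prefix)
        for prefix in routes
        if address in ipaddress.ip_network(prefix)
    ]
    if not matches:
        return None  # no route to destination
    best = max(matches, key=lambda network: network.prefixlen)
    return routes[str(best)]
```

Here `next_hop("10.1.2.3")` selects `router-b` because `/16` is more specific than `/8`, while an address outside `10.0.0.0/8` falls through to the default route.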

4. Transport Layer: Responsible for end-to-end communication, the Transport Layer manages data flow between devices. TCP provides reliable, ordered delivery, while UDP trades those guarantees for lower overhead, so applications choose between them according to their requirements.

Key features: • Ensures seamless communication from end to end, managing flow control and error correction.

• Segments data for efficient transmission across the network.

• Implements protocols like TCP for reliable, connection-oriented communication.

• Provides UDP for faster, connectionless communication suitable for real-time applications.
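Segmentation and in-order reassembly, as TCP performs them, can be illustrated with a toy model. This sketch only shows the role of sequence offsets; it assumes no loss and leaves out acknowledgements, retransmission, and flow control. The function names are hypothetical.

```python
def segment(data, max_segment_size):
    """Split a byte string into (offset, chunk) pairs, like numbered segments."""
    return [
        (offset, data[offset:offset + max_segment_size])
        for offset in range(0, len(data), max_segment_size)
    ]


def reassemble(segments):
    """Sort segments by their offset and join the chunks back together."""
    return b"".join(chunk for _, chunk in sorted(segments))
```

Even if the network delivers the segments in a different order, sorting by offset restores the original byte stream. UDP provides no such numbering, which is why applications that use it handle ordering themselves or tolerate its absence.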

5. Session Layer: The Session Layer facilitates communication sessions between applications on different devices. It manages session setup, maintenance, and termination to ensure secure and reliable communication between applications.

Key features: • Manages the establishment, maintenance, and termination of sessions between applications.

• Creates synchronization points in data exchange and facilitates the reestablishment of disrupted sessions.

6. Presentation Layer: Focusing on data translation and encryption, the Presentation Layer ensures that information is sent and received in a format that applications can understand. It deals with data compression, encryption, and format conversions, ensuring seamless communication between diverse systems.

Key features: • Translates data formats for compatibility between different systems.

• Handles encryption, compression, and formatting to optimize data exchange.

• Standardizes data representation, ensuring smooth communication.
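As a rough illustration of presentation-layer work, the sketch below serializes a message to a standard format (JSON encoded as UTF-8) and compresses it before it goes on the wire. The choice of JSON and zlib is an assumption made for the example, and encryption is left out to keep it short.

```python
import json
import zlib


def encode_message(message):
    """Serialize a dict to UTF-8 JSON, then compress it for transmission."""
    text = json.dumps(message, sort_keys=True, ensure_ascii=False)
    return zlib.compress(text.encode("utf-8"))


def decode_message(wire_bytes):
    """Reverse the steps: decompress, decode UTF-8, parse JSON."""
    return json.loads(zlib.decompress(wire_bytes).decode("utf-8"))
```

Both ends only need to agree on the representation, so systems with different internal data formats can still exchange the same message.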

7. Application Layer: At the top of the OSI model is the Application Layer, acting as the interface between the user and the network. It hosts network-aware applications and offers services like email, file transfer, and remote login. Protocols like HTTP, SMTP, and FTP operate here, enabling diverse applications to communicate over the network.

Key features: • Represents the user interface and provides network services.

• Acts as the entry point for application-specific communication.

• Facilitates various network services (e.g., HTTP, SMTP, FTP) and supports diverse applications and user interaction.

Features of OSI Model: The OSI model serves as a powerful tool for understanding, designing, and troubleshooting computer networks. Its features contribute to a comprehensive view of network communication.

• Holistic View of Communication: One of the standout features is its ability to provide a holistic view of communication over a network. The seven-layered structure helps professionals understand the process from raw data transmission to user interaction.

• Hardware and Software Collaboration: The model explains how hardware and software work together in a network. The OSI model demonstrates the collaboration of different elements, from the Physical Layer to the Application Layer.

• Adaptability to Emerging Technologies: As technology evolves, the OSI model remains relevant. Its design allows professionals to easily incorporate new technologies into existing network systems.

• Efficient Troubleshooting: Troubleshooting is simplified through the OSI model’s layered approach. By isolating functions within each layer, professionals can identify and resolve issues more efficiently, ensuring the reliability and optimal performance of networked systems.

Conclusion The 7 layers of the OSI model are useful for designing and troubleshooting networks. Each layer has a specific job, making data transmission efficient and reliable. Additionally, the Subnetting Process is crucial for optimizing network performance and managing IP addresses efficiently. The OSI model, along with subnetting, continues to be a valuable guide for networking professionals as technology advances.
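Since the conclusion mentions subnetting, here is a small sketch of splitting one IPv4 network into equal subnets with Python's standard `ipaddress` module. The `192.168.1.0/24` block is only an example, and the usable-host count assumes ordinary IPv4 subnets where the network and broadcast addresses are reserved.

```python
import ipaddress


def split_network(cidr, new_prefix):
    """Return (subnet, usable_host_count) pairs for each subnet of the new prefix length."""
    network = ipaddress.ip_network(cidr)
    return [
        (str(subnet), subnet.num_addresses - 2)  # minus network and broadcast addresses
        for subnet in network.subnets(new_prefix=new_prefix)
    ]
```

Splitting a `/24` into `/26` subnets gives four blocks of 64 addresses, each with 62 usable host addresses.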

0 notes

Text

7 Layer of the OSI Model

As we all know, a computer network needs laptops, LANs, routers, switches, the Internet, and routing protocols, but how are all these components interconnected? We often hear that a switch works at Layer 2 and a router at Layer 3, but what are these layers? In this blog, we will discuss and explain them. Computer Network: 7 Layer of the OSI Model What is the OSI…

#7 layer model#7 layers of osi model#define osi model#explain osi model#layers of osi model#model#open systems interconnection model#osi model#osi model 3d animation#osi model animation#osi model explained#osi model in computer network#osi model in computer networks#osi model in hindi#osi model in networking#osi model layers#osi model lecture#osi reference model#tcp/ip model#the osi model#what is osi model

0 notes

Text

The Next Web Needs To Be A Forest

Been mourning the '90s and '00's internet for a while. Been hating the enshittified, platform-capitalistic internet dystopia.

Been saying for a while that the next internet must get away from centralized control, and be founded on distribution and federation.

There is no single monolithic "TCP/IP server farm" run by one company with one mentally-diseased white man at the helm. This is why internet traffic can get more or less anywhere. But there ARE monolithic social media sites (Twitter/X, FB). And there is monolithic identity management ("log in to BuyJunk with your Google account"). Even Discord where anyone can make their own "server" is hosted and runs on Discord-proprietary software and hardware.

The next internet -- if it's to be any good and not just further enshittification -- is going to be less like the hub-and-spoke system of airports, and more like a forest where trees and clusters of trees interconnect with each other organically.

Group chats, where some members of the group chat are members of multiple group chats.

But the only way that internet is going to happen is if people -- not corporations -- make it. There's no profit in a distributed internet. It's going to have to happen for the same reason that people throw parties, or stage demonstrations, or just get together regularly to go climb rocks.

It's going to have to happen out of love.

And that means that the gap between WANTING to build this new net and BEING ABLE to build this new net needs to get a lot smaller.

Keep an eye out for technologies, organizations, and education that narrow the gap. Help them.

And beware legislation and corporations that want to put barriers in the way. Fight them.

#mine#internet#internet culture#yes i know that network traffic works this way already don't @ me#I'm talking about the application layer not the network or transport layer#OSI 7-layer model still stuck in my head I guess#ref

2 notes


Text

Understanding the OSI Model: A Layer-by-Layer Guide

Explore each layer of the OSI Model in detail. Understand how they work together to ensure seamless network communication.

#OSI Model#Networking Basics#7 Layers of OSI#Network Layers#Data Communication#OSI Explained#Networking Guide#IT Fundamentals#OSI Simplified#Layered Network Model

0 notes

Text

Dante's Inferno but it's the 7 layers of the OSI model

29 notes


Note

Hi, I’ve been interested in mesh nets since I learned about them so I was really excited to learn you were working on this project.

How does piermesh relate to the OSI model? Which layers is this seeking to implement and which, if any, would be untouched?

Great question!

First for people who don't know the OSI model here's a rundown:

In the OSI model, we're implementing layers 1-5 as well as some of 6. We're mostly following the model by nature of this being similar to the current internet, though not very strictly. We're also keeping it very simple: communication is done via msgpack-serialized data, so it's easy to pass the data between layers, systems, and languages.

4 notes


Text

[https://open.spotify.com/episode/7p883Qd9pJismqTjaVa8qH ]

Jonah and Wednesday are joined by their guest Kerry as they delve into The part of the deep web that we aren't supposed to see.

Hear from our hosts again after winter break!

If you have a small horror or web fiction project you want in the spotlight, email us! Send your name, pronouns and project to [email protected].

Music Credits: https://patriciataxxon.bandcamp.com/

The Story: https://www.reddit.com/r/nosleep/comments/78td1x/the_part_of_the_deep_web_that_we_arent_supposed/

Our Tumblr: https://creepypastabookclub.tumblr.com/

Our Twitter: https://twitter.com/CreepypastaBC

Featuring Hosts:

Jonah (he/they) (https://withswords.tumblr.com/)

Wednesday (they/them) (https://wormsday.tumblr.com/)

Guest host:

Kerry (they/them)

Works Cited:

7 layers of OSI; https://www.networkworld.com/article/3239677/the-osi-model-explained-and-how-to-easily-remember-its-7-layers.html

Closed Shell System; https://www.reddit.com/r/AskReddit/comments/21q99r/whats_a_closed_shell_system/

Deep-Sea Audio from the Marnina Trenches; https://www.youtube.com/watch?v=pabfhDQ0fgY

Deep Web Iceberg; https://www.reddit.com/r/explainlikeimfive/comments/1pzmv3/eli5_how_much_of_the_iceberg_deep_web_diagram_is/

Fury of the Demon; https://www.imdb.com/title/tt4161438/

I work on the fifteenth floor, and something just knocked on my window.; https://www.reddit.com/r/nosleep/comments/77h76n/i_work_on_the_fifteenth_floor_and_something_just/

Kitten that leaked the no-flight list; https://maia.crimew.gay/

Project PARAGON; https://scp-wiki.wikidot.com/project-paragon-hub

Tsar Bomba; https://en.wikipedia.org/wiki/Tsar_Bomba

Unknown Armies; http://www.modernfables.net/alan/unknown_armies/Unknown_Armies_2nd_Edition%20-%20Copy.pdf

What is a cult and why do people join them?; https://www.teenvogue.com/story/what-is-cult

Questions? Comments? Email us at: [email protected]

#creepypasta book club#deep web#creepypasta#podcast#the part of the deep web you aren't suppose to see

6 notes


Text

Security in Project (2/9+)

2\\ Network Flows

Refers to the flow of data and information through a computer network and to the security systems built to protect that flow.

2.1\\ Flows Matrix

The source is the computer that initiates the connection, while the destination is the one listening on the socket (data can of course also be transferred from the destination to the source).

Outbound flows must go through a proxy server.

Inbound flows must pass through a reverse proxy.

2.2\\ Administration interface (nominative accounts, encryption, IP restriction or Two-Factor Authentication)

This topic gets deep once it is combined with the OSI layers.

In the 7-layer security model, 2FA (Two-Factor Authentication) can be implemented at several different security layers, depending on the security design and the network infrastructure in use. Here are some examples of implementing 2FA at different layers of the 7-layer security model:

Physical Layer: 2FA can be implemented by installing fingerprint recognition or smart card systems for access to the data center or server room.

Data Link Layer: 2FA can be implemented using MAC filtering and port security on network switches or routers.

Network Layer: 2FA can be implemented using a VPN (Virtual Private Network) with digital certificates or tokens for remote network connections.

Transport Layer: 2FA can be implemented using security protocols such as SSL/TLS on HTTPS connections, which protect data traffic between client and server.

Session Layer: 2FA can be implemented using security protocols such as SSH on network connections to ensure that the identity of the connecting user is genuine.

Presentation Layer: 2FA can be implemented using security protocols such as S/MIME for email or PGP for files sent over the network.

Application Layer: 2FA can be implemented using applications that require two-step verification to log in, such as online banking or project management applications.

I'm very open to feedback on this explanation. As far as I know, this is at the practical stage; conceptually it may not be settled yet, or I simply haven't found suitable literature...
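For the application-layer case, a common second factor is a time-based one-time password. The sketch below follows the HOTP (RFC 4226) and TOTP (RFC 6238) algorithms with HMAC-SHA1 and 6 digits. It is an illustration rather than a hardened implementation: real deployments also handle clock drift, rate limiting, and secret storage, and the helper names are made up here.

```python
import hashlib
import hmac
import struct


def hotp(secret, counter, digits=6):
    """HMAC-based one-time password: HMAC-SHA1 over an 8-byte counter,
    followed by dynamic truncation to a short decimal code."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret, unix_time, step=30, digits=6):
    """Time-based variant: the counter is the number of elapsed time steps."""
    return hotp(secret, int(unix_time) // step, digits)


def verify_second_factor(secret, submitted_code, unix_time):
    """Compare the submitted code with the expected one in constant time."""
    return hmac.compare_digest(totp(secret, unix_time), submitted_code)
```

A login flow would check the password first and then call something like `verify_second_factor` with the code from the user's authenticator app and the current time.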

3 notes


Text

It‘s a Layer 8 Problem.

In the OSI model, which is used to describe communication between two devices, there are 7 layers. The 8th layer is the person using the device.

34K notes


Text

The Future of Load Balancing: Trends and Innovations in Application Load Balancer

The future of load balancing is rapidly evolving with advancements in technology, particularly in the realm of application load balancers (ALBs). As businesses increasingly shift to cloud-native architectures and microservices, ALBs are becoming more crucial in ensuring efficient traffic distribution across applications. Innovations such as AI-powered load balancing, real-time traffic analytics, and integration with containerized environments like Kubernetes are enhancing the scalability and performance of ALBs. Additionally, the rise of edge computing is pushing ALBs closer to end-users, reducing latency and improving overall user experience. As security concerns grow, ALBs are also incorporating advanced threat detection and DDoS protection features. These trends promise a more reliable, efficient, and secure approach to managing application traffic in the future.

What is an Application Load Balancer (ALB) and How Does It Work?

An Application Load Balancer (ALB) is a cloud-based service designed to distribute incoming network traffic across multiple servers, ensuring optimal performance and high availability of applications. ALBs operate at the application layer (Layer 7) of the OSI model, which means they can make intelligent routing decisions based on the content of the request, such as URL, host headers, or HTTP methods. This differs from traditional load balancers that operate at the transport layer (Layer 4) and see only connections, not request content. ALBs offer more sophisticated features for modern web applications, making them essential for scalable, highly available cloud environments.

Key Features of Application Load Balancer (ALB)

ALBs provide several features that make them highly suitable for distributed applications:

Content-Based Routing: ALBs can route traffic based on the URL path, host headers, or HTTP methods, enabling fine-grained control over the distribution of requests.

SSL Termination: ALBs can offload SSL termination, decrypting HTTPS requests before forwarding them to backend servers, thus improving performance.

Auto Scaling Integration: ALBs integrate seamlessly with auto-scaling groups, ensuring that traffic is evenly distributed across new and existing resources.

WebSocket Support: ALBs support WebSocket connections, making them ideal for applications requiring real-time, two-way communication.
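Content-based routing can be pictured as an ordered list of rules that is checked against each request. The sketch below is a generic illustration, not any cloud provider's API; the `Rule` fields, the first-match-wins order, and the target group names are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    """A hypothetical routing rule; a condition left as None matches anything."""
    target_group: str
    host: Optional[str] = None
    path_prefix: Optional[str] = None
    method: Optional[str] = None


def choose_target(rules, default, host, path, method="GET"):
    """Return the target group of the first rule whose conditions all match."""
    for rule in rules:
        if rule.host is not None and rule.host != host:
            continue
        if rule.path_prefix is not None and not path.startswith(rule.path_prefix):
            continue
        if rule.method is not None and rule.method != method:
            continue
        return rule.target_group
    return default


# Example rule set with invented names
RULES = [
    Rule("api-servers", path_prefix="/api/"),
    Rule("admin-servers", host="admin.example.com"),
    Rule("upload-servers", path_prefix="/files", method="POST"),
]
```

With these rules, a request for `/api/users` goes to `api-servers` regardless of host, and anything that matches no rule falls back to the default group.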

Advantages of Using an Application Load Balancer in Cloud Environments

Application Load Balancers offer several benefits, particularly in cloud environments:

Improved Availability and Fault Tolerance: ALBs distribute traffic to healthy instances, ensuring high availability even if some backend servers fail.

Better Performance and Latency Optimization: By routing traffic based on specific parameters, ALBs can reduce response times and ensure efficient resource utilization.

Scalability: With ALBs, applications can scale horizontally by adding or removing instances without affecting performance, making them ideal for elastic cloud environments like AWS and Azure.

Security Enhancements: ALBs provide SSL termination, reducing the load on backend servers and offering better security for user data during transmission.

Application Load Balancer vs. Classic Load Balancer: Which One Should You Choose?

When choosing a load balancer for your application, understanding the difference between an Application Load Balancer (ALB) and a Classic Load Balancer (CLB) is crucial:

Layer 7 vs. Layer 4: ALBs operate at Layer 7 (application layer), while CLBs are typically used for Layer 4 (transport layer) balancing, although AWS's Classic Load Balancer also accepts HTTP/HTTPS listeners. ALBs offer more sophisticated routing capabilities, whereas CLBs are simpler and more suitable for plain TCP-based applications.

Routing Based on Content: ALBs can route traffic based on URLs, headers, or query parameters, whereas CLBs distribute connections or requests across registered instances without content-based rules.

Support for Modern Web Apps: ALBs are designed to support modern web application architectures, including microservices and containerized apps, while CLBs are more suited for monolithic architectures.

SSL Offloading: ALBs offer SSL offloading and can serve multiple certificates on one listener through SNI, whereas AWS's Classic Load Balancer can also terminate SSL but does not support SNI.

How to Set Up an Application Load Balancer on AWS?

Setting up an ALB on AWS involves several steps:

Step 1: Create a Load Balancer: Begin by creating an Application Load Balancer in the AWS Management Console. Choose a name, select the VPC, and configure listeners (typically HTTP/HTTPS).

Step 2: Configure Target Groups: Define target groups that represent the backend services or instances that will handle the requests. Configure health checks to ensure traffic is only sent to healthy targets.

Step 3: Define Routing Rules: Configure routing rules based on URL paths, hostnames, or HTTP methods. You can create multiple rules to direct traffic to different target groups based on incoming request details.

Step 4: Configure Security: Enable SSL certificates for secure communication and set up access control policies for your load balancer to protect against unwanted traffic.

Step 5: Test and Monitor: Once the ALB is set up, monitor its performance via AWS CloudWatch to ensure it is handling traffic as expected.

Common Use Cases for Application Load Balancer

Application Load Balancers are suitable for various use cases, including:

Microservices Architectures: ALBs are well-suited for routing traffic to different microservices based on specific API routes or URLs.

Web Applications: ALBs can efficiently handle HTTP/HTTPS traffic for websites, ensuring high availability and minimal latency.

Containerized Applications: In environments like AWS ECS or Kubernetes, ALBs can distribute traffic to containerized instances, allowing seamless scaling.

Real-Time Applications: ALBs are ideal for applications that rely on WebSockets or require low-latency responses, such as online gaming or live chat systems.

Troubleshooting Common Issues with Application Load Balancer

While ALBs offer powerful functionality, users may encounter issues that need troubleshooting:

Health Check Failures: Ensure that the health check settings (like path and response code) are correct. Misconfigured health checks can result in unhealthy targets.

SSL/TLS Configuration Issues: If SSL termination isn’t set up properly, users might experience errors or failed connections. Ensure SSL certificates are valid and correctly configured.

Routing Misconfigurations: Ensure that routing rules are properly defined, as incorrect routing can lead to traffic being sent to the wrong target.

Scaling Issues: If targets are not scaling properly, review auto-scaling group configurations to ensure they align with the ALB's scaling behavior.
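When debugging health check failures, it helps to remember that a target's state usually changes only after several consecutive results. The sketch below models that behaviour in a generic way; the threshold values, the expected status code, and the assumption that a target starts out healthy are illustrative choices, not the defaults of any particular load balancer.

```python
class HealthTracker:
    """Track one target's health from a stream of health check status codes."""

    def __init__(self, unhealthy_threshold=2, healthy_threshold=3, expected_status=200):
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        self.expected_status = expected_status
        self.healthy = True  # assumption: targets start out healthy
        self._successes = 0
        self._failures = 0

    def record(self, status_code):
        """Record one check result and return the target's current health."""
        if status_code == self.expected_status:
            self._successes += 1
            self._failures = 0
            if self._successes >= self.healthy_threshold:
                self.healthy = True
        else:
            self._failures += 1
            self._successes = 0
            if self._failures >= self.unhealthy_threshold:
                self.healthy = False
        return self.healthy
```

A health check path that returns a redirect or an error page instead of the expected code will trip the unhealthy threshold even though the application itself is running, which is a frequent cause of "unhealthy target" reports.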

Conclusion

Application Load Balancers are critical in optimizing the performance, availability, and scalability of modern web applications. By providing intelligent routing based on content, ALBs enable efficient handling of complex application architectures, such as microservices and containerized environments. Their ability to handle high volumes of traffic with low latency, integrate seamlessly with auto-scaling solutions, and enhance security makes them an invaluable tool for businesses looking to scale their operations efficiently. When setting up an ALB, it is essential to understand its key features, use cases, and best practices to maximize its potential. Whether deployed on AWS, Azure, or other cloud platforms, an Application Load Balancer ensures your applications remain responsive and available to users worldwide.

0 notes

Text

Understanding Cloud Load Balancing: A Key To Efficient Resource Management

Cloud load balancing is integral to modern cloud computing strategies, offering a sophisticated method to manage resource allocation effectively. At its core, cloud load balancing involves distributing incoming network traffic across multiple servers or instances to ensure optimal resource utilization. This distribution is crucial for maintaining system performance and avoiding overloading any single resource. By dynamically adjusting the distribution of traffic based on current load conditions, cloud load balancers help prevent performance degradation and ensure that applications remain responsive and reliable. This approach not only enhances user experience but also contributes to the efficient management of cloud resources, reducing operational costs and improving overall system resilience. Understanding these mechanisms is essential for organizations aiming to leverage cloud environments effectively and ensure their applications can handle varying loads with ease.

How Cloud Load Balancing Enhances Application Performance And Reliability?

Cloud load balancing plays a pivotal role in enhancing both application performance and reliability. By distributing incoming traffic across multiple servers or instances, load balancers prevent any single server from becoming a bottleneck, which could otherwise lead to degraded performance and slower response times. This distribution ensures that applications remain responsive even under high traffic conditions or during peak usage times. Additionally, load balancers contribute to reliability by providing redundancy; if one server fails or becomes unavailable, the load balancer can redirect traffic to healthy servers, minimizing downtime and ensuring continuous availability. This proactive approach to managing server load and traffic distribution not only boosts performance but also fortifies the overall reliability of applications, making cloud load balancing a critical component for maintaining optimal service levels.

The Basics Of Cloud Load Balancing: What You Need To Know?

To fully grasp cloud load balancing, it's important to understand its basic principles and mechanisms. Cloud load balancing involves the use of specialized algorithms to distribute incoming network requests or traffic across a pool of servers or resources. These algorithms can be based on various factors such as server load, request type, or session persistence requirements. Load balancers can operate at different layers of the OSI model, including Layer 4 (transport layer) and Layer 7 (application layer), depending on the complexity of the traffic management needed. By intelligently routing traffic, cloud load balancers ensure that each server handles a proportionate amount of requests, optimizing resource utilization and enhancing application performance. Understanding these fundamental aspects helps organizations effectively implement load balancing strategies and leverage its benefits for improved system efficiency and scalability.

Why Cloud Load Balancing Is Essential For Modern Cloud Architectures?

Cloud load balancing is indispensable for modern cloud architectures due to its role in managing and optimizing resource distribution. In cloud environments, where applications are often distributed across multiple servers or data centers, maintaining optimal performance and availability requires sophisticated traffic management strategies. Cloud load balancing ensures that traffic is evenly distributed across available resources, preventing any single resource from becoming a point of failure. This is crucial for scaling applications to handle varying loads, improving response times, and ensuring high availability. Additionally, cloud load balancers facilitate seamless integration with auto-scaling mechanisms, which automatically adjust the number of active instances based on current demand. This dynamic scaling capability, coupled with effective load balancing, supports the scalability and resilience required for modern cloud-based applications, making load balancing a key component of cloud architecture.

Optimizing Your Cloud Infrastructure With Cloud Load Balancing

Optimizing cloud infrastructure involves leveraging cloud load balancing to achieve efficient resource utilization and improved system performance. By implementing cloud load balancers, organizations can ensure that traffic is distributed evenly across their cloud resources, preventing any single instance from becoming overwhelmed. This distribution not only enhances application performance but also reduces the risk of server failures and downtime. Load balancing algorithms can be configured to take into account various factors such as server health, traffic patterns, and request types, allowing for more precise and effective resource management. Furthermore, integrating load balancers with auto-scaling features enables dynamic adjustment of resources based on real-time demand, further optimizing infrastructure performance and cost-efficiency. Overall, cloud load balancing is a critical tool for achieving a well-optimized, resilient, and cost-effective cloud infrastructure.

Key Benefits Of Implementing Cloud Load Balancing In Your Business

Implementing cloud load balancing in a business offers numerous benefits that contribute to improved performance, reliability, and cost-efficiency. One of the primary advantages is enhanced application performance, as load balancers distribute traffic across multiple servers, preventing any single server from becoming overloaded and ensuring responsive user experiences. Additionally, cloud load balancing enhances reliability by providing redundancy and failover capabilities; if one server fails, traffic is redirected to healthy servers, minimizing downtime and ensuring continuous service availability. Another key benefit is scalability; load balancers facilitate seamless scaling of applications by distributing traffic among newly added servers or instances. This scalability, combined with efficient resource utilization, helps reduce operational costs and improves overall system resilience. Overall, cloud load balancing delivers substantial benefits that support the growth and stability of business operations.

Cloud Load Balancing Explained: Ensuring High Availability And Scalability

Cloud load balancing is essential for ensuring high availability and scalability of cloud-based applications. By distributing incoming traffic across multiple servers or instances, load balancers prevent any single resource from becoming a bottleneck, which helps maintain application performance and availability. This distribution is particularly important in handling varying loads and ensuring that applications remain responsive during peak usage times. Additionally, cloud load balancers facilitate scalability by enabling seamless addition or removal of resources based on current demand. This dynamic scaling capability ensures that applications can handle increased traffic without performance degradation, while also optimizing resource utilization. The ability to maintain high availability and scalability is crucial for delivering reliable and responsive services in cloud environments, making load balancing a fundamental aspect of cloud infrastructure.

Exploring Cloud Load Balancing Techniques For Better Resource Distribution

Exploring various cloud load balancing techniques reveals how they contribute to more effective resource distribution and improved system performance. Common techniques include round-robin, least connections, and IP hash. Round-robin distribution cycles traffic through a list of servers in order, providing a simple yet effective way to balance load. Least connections routes traffic to the server with the fewest active connections, which helps ensure that each server handles a balanced load based on current demand. IP hash assigns traffic based on the client’s IP address, which can help maintain session persistence and improve performance for specific users. Advanced techniques involve combining these methods or using application-specific algorithms to optimize load distribution further. By employing the right load balancing techniques, organizations can achieve better resource utilization, enhanced application performance, and a more resilient cloud infrastructure.
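The three techniques named above can be sketched in a few lines each. These are simplified, single-process illustrations with made-up class and function names; real load balancers also account for server health, weights, and concurrency.

```python
import hashlib
import itertools


class RoundRobin:
    """Cycle through the servers in order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Send each new connection to the server with the fewest active ones."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1


def ip_hash(client_ip, servers):
    """Map a client IP to the same server every time by hashing the address."""
    digest = hashlib.sha256(client_ip.encode("ascii")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

Note that with plain modulo hashing, adding or removing a server remaps most clients; consistent hashing is a common refinement when session persistence matters.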

Conclusion

Cloud load balancing is a critical technology for managing and optimizing resource distribution in cloud environments. Its role in enhancing application performance, reliability, and scalability cannot be overstated. By intelligently distributing traffic across multiple servers, cloud load balancing prevents bottlenecks, reduces downtime, and ensures that applications remain responsive under varying loads. Understanding its basic principles, benefits, and techniques allows organizations to leverage cloud load balancing effectively, leading to improved system efficiency and cost-effectiveness. As cloud computing continues to evolve, mastering cloud load balancing will remain essential for maintaining high availability, optimizing infrastructure, and supporting the growth of modern applications. Implementing robust load balancing strategies will be key to achieving a resilient and scalable cloud architecture that meets the demands of today’s digital landscape.

0 notes

Text

Explore the Seven Layers of Connectivity in the OSI Model

Unlock the mysteries of the OSI Model! Beginner-friendly guide to understand the layers and functions. Dive into networking fundamentals effortlessly.

#osi model#network communication#osi model in practice#layered model#data link#network layer#osi layers#internet protocol#data transmission#transport layer#session layer#presentation layer#application layer

4 notes


Text

Why are SOCKS5 proxies faster than HTTP proxies?

When choosing a proxy type, SOCKS5 proxies are often considered faster than HTTP proxies because of major differences in how they work and which features they implement. Let's explore why a SOCKS5 proxy is usually faster than an HTTP proxy.

1. Protocol differences

SOCKS5 proxy: a general-purpose proxy protocol that works at layer 5 (session layer) of the OSI model. Its main role is to pass the client's traffic to the target server without any intervention or modification. A SOCKS5 proxy supports both TCP and UDP and can handle almost all types of network traffic, such as web browsing, P2P, and online gaming. Since it does not need to understand the specifics of the transmission, it reduces the processing time of the traffic, thus increasing the speed.

HTTP proxies: work at layer 7 (application layer) of the OSI model and are mainly used for web browsing requests and responses. An HTTP proxy needs to parse HTTP request and response headers, and it may also process, filter, or cache them. This extra work makes an HTTP proxy slower than SOCKS5 at transferring data.

2. Lightweight on data processing

The SOCKS5 proxy is very lightweight in data handling, requiring little to no inspection or processing of the transmitted content. As a result, data streams can be forwarded as fast as possible as they pass through the SOCKS5 proxy. HTTP proxies, on the other hand, must parse the headers in the HTTP protocol and even cache or filter the data, which results in additional processing time and thus reduced speed.
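To make "lightweight" concrete, here is a minimal sketch of the two small binary messages a SOCKS5 client sends before data starts flowing, following the message layout in RFC 1928. The function names are illustrative, and the sketch assumes no authentication and a domain-name target; it only builds the bytes and does not open a connection.

```python
import struct


def socks5_greeting():
    """Client greeting: VER=5, NMETHODS=1, METHOD=0x00 (no authentication)."""
    return bytes([0x05, 0x01, 0x00])


def socks5_connect_request(host, port):
    """CONNECT request for a domain-name target.

    Layout: VER=5, CMD=1 (CONNECT), RSV=0, ATYP=3 (domain name),
    one length byte, the name itself, then the port as 2 bytes big-endian.
    """
    name = host.encode("ascii")
    if len(name) > 255:
        raise ValueError("domain name too long for a SOCKS5 request")
    header = bytes([0x05, 0x01, 0x00, 0x03, len(name)])
    return header + name + struct.pack("!H", port)


request = socks5_connect_request("example.com", 443)
```

After the proxy accepts this request it simply relays bytes in both directions, whereas an HTTP proxy has to parse text headers on each request.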

3. Flexible Protocol Support

The SOCKS5 proxy supports both TCP and UDP. UDP support in particular gives it an advantage with real-time applications such as video streaming and online gaming. In contrast, an HTTP proxy only supports TCP, so a SOCKS5 proxy is more flexible and faster in some application scenarios.

4. No Content Caching and Filtering

HTTP proxies usually have caching mechanisms or content filtering features. These features can accelerate web access in some cases, but for real-time traffic or content that is not suitable for caching they slow things down instead. The SOCKS5 proxy, on the other hand, does not have these additional processing features and is more focused on forwarding data streams quickly, thus reducing waiting time.

The SOCKS5 proxy is faster than the HTTP proxy mainly because it works at a lower level and data processing is simpler and more straightforward, without the need to parse application layer protocols or perform complex caching and filtering. For application scenarios that require fast and flexible data transfer, such as gaming, video, P2P transfer, etc., SOCKS5 proxy is often a better choice.

In addition, if you need to use efficient and stable SOCKS5 proxy service, 711Proxy provides quality proxy solutions to help you improve your network experience in different scenarios.

0 notes

Text

Comprehensive Guide to Application Load Balancer

An application load balancer is a crucial component in modern cloud-based infrastructures. It distributes incoming network traffic across multiple servers, ensuring no single server becomes overwhelmed. By efficiently managing the load, an application load balancer improves application availability and reliability. It operates at the application layer (Layer 7) of the OSI model, which allows it to make routing decisions based on content, such as URL paths or HTTP headers.
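Content-based routing at Layer 7 can be pictured as an ordered list of rules checked against each request. The sketch below is a simplified illustration, not any vendor's API: the rule format, target group names, and hostnames are all assumptions made for the example.

```python
# Each rule is (predicate, target group); the first match wins.
# Group names and hostnames are hypothetical.
RULES = [
    (lambda req: req["path"].startswith("/api/"), "api-servers"),
    (lambda req: req["headers"].get("Host") == "img.example.com", "image-servers"),
]


def choose_target_group(request, rules=RULES, default="web-servers"):
    """Return the target group for a request based on its path and headers."""
    for matches, group in rules:
        if matches(request):
            return group
    return default
```

A request such as `{"path": "/api/orders", "headers": {"Host": "shop.example.com"}}` would go to `api-servers`, while anything that matches no rule falls through to the default group.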

Benefits of Using an Application Load Balancer

The use of an application load balancer offers numerous benefits for managing web traffic. It enhances the scalability of applications by distributing traffic evenly across multiple servers, preventing any single server from becoming a bottleneck. This load distribution not only improves performance but also ensures high availability and fault tolerance. In the event of a server failure, the application load balancer automatically redirects traffic to healthy servers, minimizing downtime and service disruptions.

Application Load Balancers Improve Scalability

Application load balancers play a critical role in enhancing the scalability of web applications. As user demand fluctuates, an application load balancer distributes incoming traffic across multiple servers or instances. This horizontal scaling approach allows businesses to add or remove servers based on current traffic needs without affecting application performance.

Application Load Balancer vs. Network Load Balancer

Understanding the difference between an application load balancer and a network load balancer is crucial for selecting the right load balancing solution. While both types of load balancers distribute traffic to multiple servers, they operate at different layers of the OSI model. An application load balancer functions at Layer 7, allowing it to make routing decisions based on application-level data such as HTTP headers and URL paths. A network load balancer, by contrast, functions at Layer 4 (the transport layer) and routes on IP addresses and TCP/UDP ports without inspecting the content of the request.

Configuring Health Checks with Application Load Balancer

Configuring health checks is an essential aspect of managing an application load balancer. Health checks monitor the status of backend servers to ensure they are functioning correctly. An application load balancer uses these health checks to determine which servers are healthy and capable of handling traffic. If a server fails a health check, the load balancer redirects traffic to other healthy servers, maintaining application availability.
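The health-check logic described above can be sketched as a small state machine per backend. This is a minimal illustration under assumed settings: the threshold names and values are hypothetical, and the probe result is passed in as a boolean rather than obtained from a real HTTP request.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    healthy: bool = True
    failures: int = 0   # consecutive failed probes
    successes: int = 0  # consecutive successful probes


def record_probe(backend, ok, unhealthy_after=2, healthy_after=2):
    """Update a backend's state from one probe result.

    A backend is marked unhealthy after `unhealthy_after` consecutive
    failures and healthy again after `healthy_after` consecutive successes.
    """
    if ok:
        backend.failures = 0
        backend.successes += 1
        if not backend.healthy and backend.successes >= healthy_after:
            backend.healthy = True
    else:
        backend.successes = 0
        backend.failures += 1
        if backend.healthy and backend.failures >= unhealthy_after:
            backend.healthy = False


def healthy_backends(backends):
    """Return only the backends that should currently receive traffic."""
    return [b for b in backends if b.healthy]
```

Requiring several consecutive results before changing state avoids removing a server because of a single slow or dropped probe.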

Securing Your Application with Application Load Balancer

Security is a critical consideration when using an application load balancer. It can enhance the security of web applications by implementing features such as SSL/TLS termination and integration with Web Application Firewalls (WAFs). SSL/TLS termination allows the load balancer to handle encryption and decryption tasks, reducing the load on backend servers and improving overall performance. Additionally, integrating a WAF with the application load balancer helps protect against common web threats such as SQL injection and cross-site scripting.

Monitoring and Analyzing Traffic with Application Load Balancer

Monitoring and analyzing traffic is a key function of an application load balancer. It provides insights into traffic patterns, server performance, and user behavior, which are essential for optimizing application performance and troubleshooting issues. With built-in monitoring tools, an application load balancer can track metrics such as request rates, latency, and error rates. Analyzing this data helps identify potential bottlenecks, assess server load, and make informed decisions about scaling and resource allocation.
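As a rough illustration of how the metrics mentioned above relate to raw request data, the sketch below summarizes one time window. The input format (a list of latency and status code pairs), the choice to count only 5xx responses as errors, and the nearest-rank percentile method are all assumptions for the example.

```python
import math


def summarize(requests, window_seconds):
    """Summarize one window of traffic.

    `requests` is a list of (latency_ms, status_code) tuples.
    Returns request rate (per second), error rate (share of 5xx
    responses), and the 95th percentile latency (nearest-rank method).
    """
    if not requests:
        return {"request_rate": 0.0, "error_rate": 0.0, "p95_latency_ms": None}
    latencies = sorted(latency for latency, _ in requests)
    errors = sum(1 for _, status in requests if status >= 500)
    rank = math.ceil(0.95 * len(latencies))
    return {
        "request_rate": len(requests) / window_seconds,
        "error_rate": errors / len(requests),
        "p95_latency_ms": latencies[rank - 1],
    }
```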

Implementing Sticky Sessions with Application Load Balancer

Sticky sessions, or session persistence, are a feature that can be configured with an application load balancer to maintain session consistency for users. When sticky sessions are enabled, the load balancer ensures that requests from a particular user are consistently routed to the same backend server. This is crucial for applications that require session data to be stored locally on the server, such as online shopping carts or user-specific dashboards.
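One common way to implement sticky sessions is a cookie that names the chosen backend. The sketch below shows the idea in simplified form; the cookie name `lb_server` and the function are hypothetical, and cookies are modeled as a plain dictionary rather than real HTTP headers.

```python
import random


def route_with_stickiness(cookies, servers, pick=random.choice):
    """Return (server, cookies) for a request.

    If the request carries a cookie naming a server that is still in
    the pool, reuse it. Otherwise pick a server and pin it in the cookie.
    """
    pinned = cookies.get("lb_server")  # hypothetical cookie name
    if pinned in servers:
        return pinned, cookies
    chosen = pick(servers)
    return chosen, {**cookies, "lb_server": chosen}
```

If the pinned server drops out of the pool, the user is reassigned and any session data stored only on the old server is lost, which is why some applications keep session state in a shared store instead.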

Cost Considerations for Application Load Balancers

When planning to use an application load balancer, understanding the cost implications is important for budget management. Costs associated with an application load balancer typically include charges for data processing, traffic management, and the number of requests handled. Additionally, there may be costs related to additional features such as SSL certificates and advanced monitoring tools. Evaluating these costs in relation to the benefits provided by the load balancer helps organizations make informed decisions about their load balancing strategy.

Future Trends in Application Load Balancing

The field of application load balancing is continually evolving, with new trends and technologies emerging to enhance performance and functionality. Future trends may include the integration of artificial intelligence and machine learning for dynamic traffic management and optimization. These technologies can provide predictive analytics and automated adjustments to improve load balancing efficiency.

Conclusion

Understanding and implementing the application load balancer is essential for optimizing web traffic management and ensuring high availability and performance of applications. From improving scalability and security to configuring health checks and analyzing traffic, an application load balancer plays a pivotal role in modern infrastructure. By staying updated with future trends and managing costs effectively, businesses can harness the full potential of application load balancing, providing a seamless and reliable user experience.

0 notes

Text

Because I would want to know

7 dwarfs: Happy, Doc, Grumpy, Dopey, Bashful, Sleepy, and Sneezy

taxonomical classification: Kingdom, Phylum, Class, Order, Family, Genus, Species

Continents: Africa, Asia, Europe, Australia, Oceania, America, Antarctica (may vary)

7 deadly sins: Lust, Wrath, Sloth, Gluttony, Envy, Pride, Greed

7 layer dip: refried beans, sour cream, guacamole, salsa, cheese, green onions, tomatoes or olives

OSI model: physical, data link, network, transport, session, presentation, application layers

7 wonders of the ancient world: Great Pyramid of Giza, Colossus of Rhodes, Hanging Gardens of Babylon, Pharos of Alexandria, Mausoleum of Halicarnassus, Zeus Statue of Olympia, Artemis Temple of Ephesus.

My favorite gag is mixing up the distinction between oft confused terms. Like, oh no, it's quite simple: stalactites have hit the earth's surface but stalagmites are found in space. Meteorites can be distinguished by their round snouts and asteroids by their sharper snouts. Oh, and remember: crocodiles hang from the ceiling. It's alligators that point up from the ground.

27K notes

