#(but some safety concerns and other ethical considerations drive this decision)

lmao so I’m officially going to tell my supervisor I’m resigning this week

#I have never done this before#or written a letter#I am stressed lmao#and preparing for some unemployment 🫠#(and yes I know it would be better to wait until I’m employed)#(but some safety concerns and other ethical considerations drive this decision)#also I don’t know when? like do I do it during supervision… or schedule a different time…#ugh this is so uncomfortable#sentences border on senseless

AI vs. Engineering: Who Will Win the Battle?

The battle between Artificial Intelligence (AI) and the engineering field is a complex and evolving landscape. AI has emerged as a transformative force across various industries, enhancing efficiency, productivity, and accuracy in tasks. On the other hand, engineers, particularly software engineers, remain highly valuable and essential for technological advancements.

AI Advantages and Threats:

AI possesses significant advantages over humans, such as processing vast amounts of data rapidly and accurately, learning from patterns, and working continuously without breaks. However, AI also poses threats, including potential job displacement in various industries, the creation of deepfakes, and speculative concerns that future systems could become self-aware and pose risks to humanity.

The Future of AI and Humans' Role:

The future of AI is uncertain, but its integration into diverse sectors is certain to continue. Ethical considerations are crucial to ensure that AI benefits society. Humans play a pivotal role in guiding AI development ethically and in implementing standards for its safe and beneficial use.

Software Engineers' Value

Software engineers are highly valued in the current technological landscape. Despite the rise of generative AI automating coding tasks, software engineers remain indispensable for innovation, problem-solving, and critical thinking. The value of software engineers is recognized by many industry leaders who prioritize their skills over capital investments.

AI as a Tool for Software Engineers

AI serves as a powerful tool for software engineers by automating repetitive tasks like code generation and refactoring. This allows engineers to focus on innovation, judgment, and complex problem-solving tasks that require human creativity and insight.

In conclusion, the battle between AI and engineering is not a competition but a collaboration that drives innovation. While AI continues to advance rapidly, software engineers' expertise in problem-solving and innovation remains invaluable in shaping the future of technology.

What Are the Advantages of AI Over Engineering?

Artificial Intelligence (AI) offers several advantages over traditional engineering practices, revolutionizing the field and driving innovation. Here are some key advantages of AI over engineering:

Reduction in Human Error: AI significantly reduces errors and increases accuracy by processing vast amounts of data with precision, leading to more reliable outcomes

Reduced Risk to People: AI-driven systems can perform risky tasks, such as defusing bombs or exploring hazardous environments, without endangering human lives, enhancing safety and efficiency

24x7 Availability: AI can work continuously without breaks, handling multiple tasks simultaneously with speed and accuracy, ensuring seamless operations around the clock

Automation of Engineering Tasks: AI automates various engineering tasks by analyzing data and providing recommendations for issue resolution, improving efficiency and minimizing errors

Simulation and Analysis: AI enhances the speed and accuracy of engineering simulations and analyses, identifying complex patterns that may be challenging for humans to detect

Expertise Augmentation: AI augments engineers' expertise by providing powerful tools for complex problem-solving, leading to innovative solutions and improved efficiency

Predictive Maintenance and Fault Detection: AI predicts equipment failures based on data analysis, recommending maintenance actions to prevent breakdowns, reducing downtime and increasing equipment lifespan

Safety and Risk Management: AI enhances safety measures in engineering by analyzing data to manage risks effectively, ensuring a safer work environment
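The predictive maintenance and fault detection idea in the list above can be illustrated with a deliberately simple sketch. It is not an AI model: it swaps in a rolling z-score, a basic statistical baseline, to show the core workflow of learning what "normal" sensor behaviour looks like and flagging readings that depart from it. The vibration values, the 5-reading window, and the 3-standard-deviation threshold are all hypothetical choices for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate strongly from the recent baseline.

    Each reading after the first `window` values is compared with the mean and
    sample standard deviation of the `window` readings before it (a rolling
    z-score). Readings more than `threshold` standard deviations away are flagged.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: treat any change at all as suspicious.
            if readings[i] != mu:
                flagged.append(i)
            continue
        z = (readings[i] - mu) / sigma
        if abs(z) > threshold:
            flagged.append(i)
    return flagged


# Hypothetical vibration readings (mm/s) from one machine; index 8 is a spike.
vibration_mm_s = [2.1, 2.0, 2.2, 2.1, 2.0, 2.1, 2.2, 2.0, 6.5, 2.1]
print(flag_anomalies(vibration_mm_s))  # [8]
```

In practice the fixed threshold is often replaced by a trained model, but the idea is the same: flag departures from normal behaviour early enough to schedule maintenance before a breakdown.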

How Can AI Be Used to Improve Engineering Processes?

Artificial Intelligence (AI) offers numerous ways to enhance engineering processes, improving efficiency, productivity, and decision-making. Here are some key ways AI can be used to improve engineering processes:

Simulation and Optimization: AI-powered simulations enable engineers to evaluate and optimize designs, anticipate system performance, and identify potential issues before they occur. This saves time and resources and enhances overall product quality

Design Automation: AI algorithms automate routine design tasks, such as generating 2D and 3D models or optimizing designs for specific criteria. This automation speeds up the design process, allowing engineers to iterate quickly and improve concepts efficiently

Simulation Process Optimization: AI optimizes simulations by running them faster and more accurately. Engineers can evaluate and enhance their ideas more efficiently, leading to better designs and increased productivity

Algorithm Optimization: AI analyzes large volumes of data using machine learning techniques to optimize algorithms for specific engineering tasks. This results in faster and more efficient product development processes

Predictive Maintenance: AI enables predictive maintenance by forecasting potential equipment failures and recommending proactive measures to prevent downtime. This leads to faster product development, improved performance, and cost savings

Enhanced Decision-Making: AI assists engineers in making better choices by predicting where workflows can be improved, suggesting best practices, and guiding design decisions along the way. This boosts the knowledge and efficiency of the engineering process

What Are Some Specific AI Tools Used in Engineering Design?

AI tools used in engineering design include:

Anomaly Detection Tools: These systems are trained to identify anomalies in CAD drawings, helping engineers assess compliance with building codes or detect design errors

Generative AI: Generative AI tools like ChatGPT and GitHub Copilot help engineers work in new ways, for example by generating code, exploring design options for specific objectives, or producing creative content

Open Source Deep Learning Libraries: These libraries, such as TensorFlow, PyTorch, and Keras, enable engineers to build and train neural networks for various applications

Creative Generation: AI-powered image generation tools like DALL-E 2, Midjourney, and Stable Diffusion help engineers create illustrations, break up long blocks of text, and generate images for pitch decks

3D Visualization and Simulation: Neural radiance fields (NeRFs) are AI-based rendering models that reconstruct a 3D scene from a set of 2D photographs, allowing engineers to generate realistic new views of real-world objects and sites

Other popular tools used in engineering design, many of which now include AI-assisted features, include CAD (Computer-Aided Design) software like AutoCAD, SolidWorks, CATIA, NX, and Creo, PLM (Product Lifecycle Management) software, and CAE (Computer-Aided Engineering) software for simulation and optimization.

In conclusion, AI's advantages in reducing errors, taking on hazardous tasks, enabling continuous operations, automating tasks, improving analysis accuracy, augmenting expertise, predicting maintenance needs, and strengthening safety management highlight its transformative impact on the engineering profession. AI is reshaping engineering processes by automating design tasks, optimizing simulations, improving algorithm efficiency, enabling predictive maintenance, and supporting better decision-making by engineers.

#best btech college in jaipur#top engineering college in jaipur#best engineering college in jaipur#best btech college in rajasthan#best private engineering college in jaipur#best engineering college in rajasthan

How AI+ Government™ Certification Drives Compliance and Transparency in Public Sector AI Initiatives

In an era where artificial intelligence (AI) is shaping every industry, its role in the public sector is especially impactful. From enhancing public safety to streamlining governmental services, AI promises increased efficiency and improved citizen engagement. However, the use of AI in the public sector comes with unique challenges, primarily concerning compliance, transparency, and ethical considerations. This is where the AI+ Government™ Certification comes into play. This specialized certification is designed to ensure that public sector entities can adopt AI responsibly, adhering to standards of compliance and transparency. Here, we’ll explore how AI+ Government™ Certification supports public sector AI initiatives and fosters an environment of trust and accountability.

1. Understanding AI+ Government™ Certification

The AI+ Government™ is a specialized certification focused on equipping public sector professionals with the knowledge and skills needed to manage AI responsibly. It goes beyond technical training, emphasizing the ethical, legal, and operational aspects of AI. This certification prepares government officials, IT managers, and policy creators to navigate the complexities of AI within the framework of government regulations and societal expectations. By earning the AI+ Government™ credential, professionals demonstrate a commitment to upholding the highest standards of AI governance, transparency, and ethical integrity in their projects.

2. Why Compliance and Transparency Are Critical in Public Sector AI

In public sector initiatives, transparency and compliance are not merely ethical considerations—they are legal mandates. Governments must comply with stringent regulations that are designed to protect citizen rights and ensure fair access to services. Transparency becomes essential, as public sector AI projects impact large populations and influence critical decisions, such as healthcare allocation, criminal justice, and social services. A lack of compliance or transparency could lead to biased outcomes, loss of public trust, and potential legal repercussions. AI+ Government™ Certification provides the knowledge needed to meet these challenges, ensuring that AI solutions meet regulatory standards and operate transparently.

3. Key Features of AI+ Government™ Certification for Public Sector AI

AI+ Government™ Certification offers a curriculum tailored to the needs of the public sector, focusing on areas critical to successful AI deployment in government:

Ethical AI Development: Certification training emphasizes ethical guidelines, ensuring that AI applications respect citizen privacy and minimize bias.

Regulatory Compliance: The course covers key regulations like GDPR and other country-specific laws impacting public sector AI, guiding professionals on how to align AI models with legal requirements.

Transparency Practices: Certification promotes transparency by teaching government professionals how to document AI processes and decisions, allowing stakeholders to understand and trust the technology.

Risk Mitigation: Public sector AI projects face risks related to data privacy, security, and bias. AI+ Government™ Certification equips practitioners with risk assessment and mitigation techniques, making it easier to foresee and manage potential challenges.

4. How AI+ Government™ Certification Drives Compliance in Public Sector AI

The AI+ Government™ Certification program provides comprehensive training in compliance for public sector AI projects, covering legal frameworks, data protection, and ethical standards. Here are some ways this certification aids in ensuring compliance:

Training in Regulatory Frameworks: Certification courses cover a range of regulatory requirements, including GDPR, HIPAA (for health data), and regional AI laws. This ensures that AI solutions comply with privacy and data protection laws.

Accountability Mechanisms: AI+ Government™ Certification emphasizes the need for audit trails, version controls, and regular compliance checks, which are essential for ensuring that AI models meet the evolving regulatory standards.

Informed Data Use: Public sector data often involves sensitive information. Certified professionals are trained to manage data responsibly, ensuring that it is used legally and ethically.

Compliance is critical because, without it, government AI projects are vulnerable to legal liabilities and citizen distrust. The AI+ Government™ Certification empowers professionals with the knowledge and tools to ensure all AI solutions meet necessary legal and ethical standards.

5. Promoting Transparency in Government AI Through AI+ Government™ Certification

Transparency is vital in government AI initiatives, as it builds public trust and reduces concerns about bias and misuse of AI. AI+ Government™ Certification instills best practices for transparency, including:

Clear Documentation: Certification emphasizes documentation of the AI development process, including data sources, model design, and decision-making criteria. This transparency helps citizens and stakeholders understand AI's role in government functions.

Algorithmic Transparency: The certification teaches professionals how to create AI models with transparent algorithms, where decision-making criteria are accessible and understandable. This practice is essential in sensitive areas like law enforcement and social services.

Public Communication: Certified professionals learn how to communicate AI insights effectively to the public, translating technical language into understandable information that encourages citizen engagement.

Transparency is particularly essential in government projects, where public approval and accountability are paramount. By achieving AI+ Government™ Certification, professionals gain skills that make AI initiatives more transparent and trustworthy.

6. Real-World Applications of AI+ Government™ Certification in the Public Sector

Professionals with AI+ Government™ Certification are equipped to implement AI responsibly across a variety of government applications. Here are some examples of how certified professionals might contribute:

Public Safety: AI can help detect patterns in crime data, leading to proactive policing. Certified AI professionals ensure that AI algorithms used in such initiatives remain unbiased and transparent.

Healthcare: AI is used to improve patient outcomes and streamline healthcare services. Certified professionals understand data privacy laws and ensure compliance with HIPAA, promoting secure AI applications in public health.

Environmental Monitoring: Governments use AI to track environmental changes. Certified AI professionals help guarantee that data collection and model outputs are reliable and adhere to environmental regulations.

In each of these areas, AI+ Government™ Certification prepares professionals to deploy AI that adheres to strict standards, ensuring compliance with legal and ethical guidelines.

7. Why AI+ Government™ Certification Is Essential for the Future of Public Sector AI

As governments increasingly adopt AI, the need for professionals who can responsibly manage these technologies will grow. AI+ Government™ Certification serves as a vital credential that verifies expertise in compliance, transparency, and ethics. This certification not only provides immediate benefits in skill development but also positions professionals for long-term success in a world where public sector AI is only becoming more prevalent.

Conclusion

AI has the power to transform the public sector, but only when implemented with care and responsibility. AI+ Government™ Certification ensures that professionals are prepared to meet the challenges of compliance and transparency in public sector AI. By promoting ethical AI practices, regulatory compliance, and a commitment to transparency, this certification is a crucial asset for anyone involved in government AI initiatives. Through the knowledge gained from AI+ Government™ Certification, public sector professionals can be confident that they are contributing to an AI-powered future built on trust, accountability, and respect for public interest.

AI Ethics: Balancing Innovation and Responsibility

Artificial intelligence is rapidly transforming many aspects of our lives, such as the way we get our health checked, how we manage our finances, our transportation systems, and especially the entertainment sector. AI plays a crucial role in all these sectors and many more. While the benefits of AI are vast, its ethical dimension cannot be ignored. Innovation and responsibility must go hand in hand so that AI technologies are developed and deployed in the best interest of humanity, in ways that are fair, transparent, and beneficial to society.

The Promises of AI

To start with, AI has the ability to transform a business by increasing its productivity, supporting strategic decisions, and opening up new avenues for growth. In the health sector, AI systems can, for some tasks, diagnose illnesses more accurately than professionals, because models trained on large amounts of data can keep certain error rates very low. Next are self-driving cars, especially Tesla, which uses AI models to make its cars self-driving. In finance, AI can give useful advice and can even trade shares on your behalf, a practice known as algorithmic (algo) trading. These examples illustrate the transformative capabilities of AI and its endless possibilities.

The Ethical Challenges

The first challenge is eliminating bias and prejudice.

AI systems rely on algorithms that can become biased because of the model design or the data used to train them. This has significant and long-lasting consequences, especially in finance, justice, and hiring, where a biased AI can lead to unfair treatment on the basis of gender, race, or any other characteristic it takes into account. We must therefore ensure that the results generated by AI are not biased.
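One common way to check AI outputs for the kind of bias described here is to compare how often each group receives a positive outcome. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest (a demographic parity check). The group labels and hiring decisions are hypothetical, and the 0.8 cut-off borrows the "four-fifths rule" from US employment-selection guidelines as a rough screening threshold, not a definitive test.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of positive outcomes per group.

    `decisions` is a list of (group, selected) pairs, where `selected` is True
    when the model gave a positive outcome (e.g. shortlisted for a job).
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest (1.0 means equal rates)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so the rates are equal
    return min(rates.values()) / highest


# Hypothetical outputs of a hiring model: 10 applicants in each group.
decisions = [("A", True)] * 6 + [("A", False)] * 4 + [("B", True)] * 3 + [("B", False)] * 7

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(decisions)
print(rates)  # {'A': 0.6, 'B': 0.3}
print(ratio)  # 0.5
if ratio < 0.8:
    print("Possible adverse impact: review the model and its training data")
```

A low ratio does not by itself prove discrimination, and a ratio near 1.0 does not prove fairness; demographic parity is only one of several fairness measures, and the right one depends on the application.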

Safety Concerns: To work well, AI has to be fed huge amounts of data, often including the personal information of thousands of users, which can lead to privacy breaches. For example, facial recognition can be used to track individuals without their consent, a safety concern that opens the door to misuse by authorities or cybercriminals. To keep this from happening, AI systems should be transparent and must protect personal data at all costs.

Transparency: AI systems are more complex than they look, and it can be very difficult to understand how they reach decisions or which prompt will work best. This lack of transparency can lead to accountability issues, which become a major concern in life-and-death situations. To overcome this, we need to understand how AI models make their decisions, which in turn fosters trust among users.

Regulations and Policies: Governments and regulatory bodies have a crucial role in setting benchmarks and the regulations needed to ensure the responsible development and use of AI. Policies that promote transparency, data protection, and accountability can help mitigate the ethical risks associated with AI. For example, the General Data Protection Regulation (GDPR) in the European Union sets some of the strictest rules for data privacy and protection.

Corporate Responsibility: Companies developing AI technology must take responsibility for ensuring that their products are ethically sound. This benefits the company as well as its users, and it should include fairness and bias-mitigation techniques and transparency about the risks involved.

The path forward involves not only addressing current ethical challenges but also preparing for future ones. Emerging technologies such as quantum computing and brain-computer interfaces (neural links) will require careful consideration and oversight. This is why we need a strong ethical foundation; by building one, we can ensure that future AI trends are driven by the same commitment to fairness.

In conclusion, AI ethics still has a long way to go and demands continuous effort and updates as the world's needs change. By working together, we can create AI systems that not only advance human capabilities but also embrace the values that define our humanity.

Private Engineering Ethics: Balancing Profit and Social Responsibility

In the dynamic landscape of modern engineering, where innovation and profit drive the industry forward, a critical consideration often takes a backseat: the ethical responsibilities of private engineering firms. The intersection of profit-making and social responsibility raises crucial questions about the ethical practices and moral compass of businesses in the engineering sector. Striking a balance between maximizing profits and upholding social responsibility is a challenging but necessary endeavor. In this context, it is noteworthy that the best private engineering college in Rajasthan, , serves as a beacon, demonstrating how ethical principles can be seamlessly integrated into the fabric of engineering education.

The Profit Imperative

Private engineering firms operate within a competitive environment where profitability is a fundamental driver. The pressure to deliver returns to stakeholders, sustain growth, and outperform competitors can sometimes lead to ethical shortcuts. This pursuit of profit, when unchecked, may compromise safety, environmental sustainability, and the well-being of communities affected by engineering projects.

In the quest for financial success, some firms might be tempted to cut corners, ignore long-term environmental impacts, or compromise the quality and safety of their products. These actions can have far-reaching consequences, ranging from environmental degradation to compromising public safety.

The Social Responsibility Mandate

On the other side of the equation lies the ethical imperative for private engineering firms to consider the broader impact of their work on society. From sustainable practices and environmental conservation to ensuring accessibility and inclusivity in technological advancements, engineering firms have a moral duty to contribute positively to the well-being of the communities they serve.

Social responsibility in engineering encompasses not only the direct impact of products and projects but also the treatment of employees, engagement with local communities, and adherence to ethical labor practices. This mandate becomes particularly critical as engineering innovations increasingly shape the future of societies and economies.

Strategies for Balancing Profit and Social Responsibility

Ethical Leadership: Foster a culture of ethical leadership within the organization. Leaders should set an example by prioritizing ethical considerations in decision-making and encouraging a values-driven approach.

Transparency and Accountability: Establish transparent communication channels that keep stakeholders informed about the ethical practices of the firm. Embrace accountability for the social and environmental consequences of engineering projects.

Stakeholder Engagement: Actively engage with a diverse range of stakeholders, including local communities, non-governmental organizations, and regulatory bodies. This ensures that the concerns and perspectives of those affected by engineering projects are taken into account.

Comprehensive Risk Assessment: Conduct thorough risk assessments that not only consider financial risks but also ethical and social risks associated with engineering projects. This includes anticipating potential negative impacts and implementing mitigation strategies.

Investment in Sustainability: Integrate sustainability into the core business strategy. Consider the long-term environmental and social implications of engineering decisions and invest in sustainable practices that align with the firm's profitability goals.

Ethics Training and Education: Prioritize ongoing ethics training for employees at all levels. Equip them with the knowledge and tools to navigate ethical dilemmas and make decisions that align with both profit objectives and social responsibility.

Conclusion

Private engineering firms play a pivotal role in shaping the future through innovation and technological advancements. However, with great power comes great responsibility. Balancing profit and social responsibility is not just a choice but a necessity for the sustained success and positive impact of engineering endeavors. In the educational landscape, this principle is echoed by the top BTech colleges in Rajasthan, including esteemed institutions. These institutions go beyond the conventional norms of engineering education by instilling a profound sense of ethical responsibility in their students.

IoT In Transportation Evolution: Advancements In Autonomous Vehicles

IoT solutions for transportation meet growing needs in a variety of operating conditions. Some of the key transportation IoT use cases today include:

Traffic Management: IoT applications for urban traffic management can improve both safety and traffic flow and help cities get maximum value from their infrastructure spending.

Public Transportation: Transit IoT applications can improve passenger experience with amenities like information signage and high-speed Internet connectivity, enabling transit agencies to operate more efficiently.

Electric Vehicles and EV Charging: The number of electric cars and EV charging stations is increasing rapidly. The entire EV infrastructure relies on IoT connectivity for system management, payment processing and more.

Railways: IoT solutions support light rail and heavy commercial rail systems, and Digi is leading the way with high-performance 5G mobile access routers for reliable and secure high-speed communications and geo-positioning, even in tunnels and urban canyons.

Trucking/Logistics: Fleet managers can track vehicle analytics, reduce the need for truck rolls, and automate processes to save on operational costs, including truck refrigeration monitoring and reporting.

Advancements In Autonomous Vehicles

Self-driving cars benefit from the integration of AI technology, which is driving progress in the field of autonomous vehicles by enhancing their safety, energy efficiency and environmental friendliness. Safer roads, reduced maintenance costs, improved comfort, smoother traffic and reduced energy consumption are some of the benefits of AI in autonomous driving.

Ethical Considerations in AI-Powered Autonomous Driving

As the development of autonomous vehicles continues, important ethical considerations need to be taken into account, particularly regarding the role of AI in decision making.

In a scenario where an autonomous vehicle is involved in an accident, who is responsible – the vehicle owner, the car manufacturer or the AI system?

Additionally, there are concerns about the ethical implications of AI-powered vehicles making decisions that involve human lives, such as an autonomous car having to choose between avoiding pedestrians and moving into another lane with other vehicles.

To address these concerns, car manufacturers and researchers are working on developing ethical guidelines for the use of AI in autonomous vehicles. One approach is to program an AI system to prioritize human safety above all else.

However, it still raises complex ethical dilemmas, such as deciding whose safety takes precedence in a given situation. Debates continue about how to balance the benefits of autonomous driving with the potential risks and ethical implications.

The advent of AVs will transform transportation and logistics. Advanced technology and safety improvements will revolutionize the movement of goods and people. Challenges such as security, regulation and infrastructure readiness must be navigated in this autonomous journey.

AV implications go beyond hands-free driving. They promise safer roads, less congestion, better accessibility and environmental sustainability. Economic opportunities arise in urban planning, workforce transformation, vehicle management and data analytics. AVs are redefining mobility, providing transportation as a service and empowering those with limited mobility options.

Despite obstacles such as technological limitations and regulatory frameworks, the future of AVs looks promising. As technology advances and trust increases, greater integration in transportation systems is expected.

The future of travel will involve more than self-driving cars; it's about reimagining how we move people and things. Collaboration between governments, manufacturers, researchers and the public is critical. Addressing challenges, encouraging innovation and deploying AVs responsibly will unlock their full potential, delivering safer, more efficient and sustainable transport and logistics.

The rise of autonomous vehicles is not just a new technology but a paradigm shift in how we think about transportation. It is an opportunity to rebuild cities, create jobs and make transport inclusive and environmentally friendly. Embrace the opportunities offered by AVs and work towards a future of safer, smarter and more connected transport.

FAQs

1. Why are autonomous vehicles becoming mainstream?

Autonomous vehicles are becoming mainstream thanks to advances in technology and trials of driverless cars taking place in American cities.

2. What is the current status of driverless car trials?

Driverless car trials are currently underway in cities across America, bringing autonomous vehicles into the mainstream.

3. What technological advances are driving autonomous vehicles?

Advances in technologies such as artificial intelligence and sensors are driving the development of autonomous vehicles.

4. What are the potential benefits of autonomous vehicles?

Potential benefits of autonomous vehicles include improved road safety, reduced traffic congestion and increased mobility for people with disabilities.

5. What are the concerns surrounding autonomous vehicles?

Concerns surrounding autonomous vehicles include cybersecurity risks, job displacement for drivers, and ethical considerations for decision-making algorithms.

Exploring the World of Cosmetic Gynecology: Empowerment or Pressure?

In recent years, cosmetic gynecology has gained significant attention, sparking discussions regarding its implications on women's health, self-image, and societal norms. This blog delves into the realm of cosmetic gynecology, examining its procedures, controversies, and the broader cultural context surrounding this evolving field.

Understanding Cosmetic Gynecology: Cosmetic gynecology encompasses a range of procedures aimed at altering the appearance and function of the female genitalia. From vaginal rejuvenation to labiaplasty, these procedures promise various benefits, including improved aesthetics, enhanced sexual satisfaction, and relief from discomfort or insecurity.

Common Procedures:

Labiaplasty: This surgical procedure involves reshaping or reducing the size of the labia minora or majora to address aesthetic concerns or discomfort during physical activities.

Vaginoplasty: Also known as vaginal rejuvenation, this procedure aims to tighten the vaginal canal, often sought after childbirth or due to age-related changes.

Clitoral Hood Reduction: Involves trimming excess tissue around the clitoris to enhance sensation or improve appearance.

Hymenoplasty: Reconstructs the hymen for cultural, religious, or personal reasons, often associated with virginity restoration.

Controversies and Ethical Considerations: While cosmetic gynecology offers potential benefits, it also raises ethical concerns and controversies:

Medical Necessity vs. Cosmetic Enhancement: Critics argue that many procedures lack medical necessity and are driven by societal pressure or unrealistic beauty standards.

Lack of Regulation: The cosmetic gynecology industry operates with varying degrees of regulation worldwide, leading to concerns about patient safety and practitioner qualifications.

Body Image and Femininity: Some worry that the normalization of genital cosmetic surgery perpetuates unrealistic beauty ideals and undermines women's confidence in their natural bodies.

Empowerment vs. Pressure: The debate surrounding cosmetic gynecology is nuanced. While some view these procedures as empowering women to make informed choices about their bodies, others see them as reinforcing patriarchal notions of beauty and sexuality. It's crucial to consider individual motivations, cultural influences, and the potential psychological impact on patients.

The Importance of Informed Consent and Patient Education: Regardless of one's stance on cosmetic gynecology, promoting informed consent and comprehensive patient education is paramount. Healthcare providers should facilitate open discussions, ensuring patients understand the risks, benefits, and realistic expectations associated with these procedures.

Cosmetic gynecology represents a complex intersection of medicine, aesthetics, and societal norms. As the field continues to evolve, it's essential to engage in thoughtful dialogue, prioritize patient well-being, and challenge the underlying pressures that drive individuals to seek these procedures. Ultimately, the decision to undergo cosmetic gynecology should be guided by informed choice, autonomy, and respect for diverse perspectives on femininity and beauty.

How Does Data Annotation Assure Safety in Autonomous Vehicles?

Human-driven and computer-driven cars are often compared on safety, and the human record is sobering. Over six million car crashes occur each year, according to the US National Highway Traffic Safety Administration. These crashes claim the lives of about 36,000 Americans, while another 2.5 million are treated in hospital emergency departments. The worldwide figures are even more startling.

One might ask whether these numbers would drop significantly if autonomous vehicles (AVs) became the norm. Data annotation contributes significantly to making AVs safer and more convenient: to make safe judgments and navigate, an AV's machine-learning algorithms need to be trained on accurate, well-annotated data.

Here are some important features of data annotation for autonomous vehicles to ensure safety:

Semantic Segmentation: Labeling every pixel of an image (or every point of sensor data) with a class such as lane, pedestrian, car, or traffic sign, so that object borders are captured precisely, is known as semantic segmentation. The car needs accurate segmentation to comprehend its environment.

Object Detection: It is the process of locating and classifying items, such as vehicles, bicycles, pedestrians, and obstructions, in pictures or sensor data.

Lane Marking Annotation: Road boundaries and lane lines can be annotated to assist a vehicle in staying in its lane and navigating safely.

Depth Estimation: Giving the vehicle depth data to assist it in gauging how far away objects are in its path. This is essential for preventing collisions.

Path Planning: Annotating potential routes or trajectories for the car to follow while accounting for safety concerns and traffic laws is known as path planning.

Traffic Sign Recognition: Marking signs, signals, and their interpretations to make sure the car abides by the law.

Behaviour Prediction: By providing annotations for the expected actions of other road users (e.g., whether a pedestrian will cross the street), the car can make more informed decisions.

Map and Localization Data: By adding annotations to high-definition maps and localization data, the car will be able to navigate and position itself more precisely.

Weather and Lighting Conditions: Data collected in a variety of weather and lighting circumstances (such as rain, snow, fog, and darkness) should be annotated to aid the vehicle’s learning process.

Anomaly Detection: Noting unusual circumstances or possible dangers, like roadblocks, collisions, or sudden pedestrian movements.

Diverse Scenarios: To train the autonomous car for various contexts, make sure the dataset includes a wide range of driving scenarios, such as suburban, urban, and highway driving.

Sensor Fusion: Adding annotations to data from several sensors, such as cameras, radar, LiDAR, and ultrasonics, to assist the car in combining information from several sources and arriving at precise conclusions.

Continual Data Updating: Adding annotations to the data regularly to reflect shifting traffic patterns, construction zones, and road conditions.

Quality Assurance: Applying quality control techniques, such as human annotation verification and the use of quality metrics, to guarantee precise and consistent annotations.

Machine Learning Feedback Loop: Creating a feedback loop based on real-world data and user interactions to continuously enhance the vehicle’s performance.

Ethical Considerations: Make sure that privacy laws and ethical issues, like anonymizing sensitive material, are taken into account during the data annotation process.
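The Quality Assurance item mentions quality metrics for checking annotations. One widely used metric for bounding-box labels is intersection over union (IoU) between two annotators' boxes for the same object. A minimal sketch, assuming axis-aligned boxes and a hypothetical review threshold of 0.7; `Box`, `iou`, and `flag_for_review` are illustrative names, not part of any Data Labeler tool, and the box coordinates are made up.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixel coordinates."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Inverted boxes (used to represent "no overlap") have zero area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)

def iou(a: Box, b: Box) -> float:
    """Intersection over union: 1.0 for identical boxes, 0.0 for disjoint ones."""
    overlap = Box(max(a.x_min, b.x_min), max(a.y_min, b.y_min),
                  min(a.x_max, b.x_max), min(a.y_max, b.y_max))
    inter = overlap.area()
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0

def flag_for_review(pairs, threshold=0.7):
    """Return indices of (annotator_a, annotator_b) box pairs that disagree too much.

    threshold=0.7 is an illustrative value, not an industry standard.
    """
    return [i for i, (a, b) in enumerate(pairs) if iou(a, b) < threshold]

pairs = [
    (Box(100, 50, 150, 200), Box(105, 55, 150, 200)),  # close agreement on a pedestrian
    (Box(0, 0, 10, 10), Box(5, 5, 15, 15)),            # large disagreement on a cyclist
]
print(flag_for_review(pairs))  # [1]
```

The first pair scores an IoU of 0.87 and passes; the second scores about 0.14 and would be sent back for human verification.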

Conclusion:

An important but frequently overlooked component in the development of autonomous vehicles is data annotation. Self-driving cars would remain an unattainable dream were it not for the diligent efforts of data annotators. Data Labeler provides extensive support with annotating data for several kinds of AI models. For further queries, you can visit our website or reach us by email.

UNLEASHING THE POWER OF BIG DATA: FROM CHAOS TO CLARITY

Introduction:

In the digital age, we are surrounded by an overwhelming amount of data generated every second. From social media interactions to online purchases and sensor readings, the world is producing an unimaginable volume of information known as "Big Data." But how can we harness this seemingly chaotic sea of data and transform it into meaningful insights and opportunities? In this blog, we'll explore the incredible potential of Big Data and the innovative ways it can be harnessed to shape a better future for humanity.

Understanding the Promise of Big Data:

Big Data is not just a buzzword; it's a game-changer. The promise of Big Data lies in its ability to reveal patterns, trends, and correlations that were once hidden in the noise of vast datasets. This newfound understanding can drive smarter decision-making, foster innovation, and improve virtually every aspect of our lives, from healthcare and transportation to marketing and entertainment.

Uncovering Hidden Gems with Data Analytics:

Data analytics is the key to unlocking the potential of Big Data. By employing advanced algorithms and machine learning techniques, data analysts can sift through enormous datasets to identify valuable insights. For businesses, this means understanding customer behavior, predicting market trends, and optimizing operations for maximum efficiency.

Transforming Healthcare with Data-driven Insights:

In the realm of healthcare, Big Data has the potential to revolutionize patient care. By aggregating and analyzing patient data from various sources, medical professionals can make more accurate diagnoses, personalize treatment plans, and even predict disease outbreaks. Furthermore, researchers can utilize Big Data to accelerate drug discovery and development, saving both time and resources.

Enhancing Urban Living through Smart Cities:

As the world's population continues to urbanize, cities are facing significant challenges. Big Data can offer solutions through the concept of smart cities. By collecting data from sensors, cameras, and mobile devices, cities can optimize traffic flow, improve public safety, and enhance overall urban living conditions. From reducing pollution to predicting infrastructure maintenance needs, Big Data holds the key to a more sustainable and livable future.

Big Data and Climate Change:

Climate change is one of the most pressing issues of our time, and Big Data can play a crucial role in mitigating its effects. By analyzing environmental data from satellites, weather stations, and other sources, scientists can gain a deeper understanding of climate patterns, identify vulnerable regions, and develop more effective strategies for climate resilience and adaptation.

The Ethical Challenge of Big Data:

While Big Data offers immense opportunities, it also raises ethical concerns. The collection and use of personal data must be handled with utmost care to respect individuals' privacy and prevent potential misuse. As we embrace the power of Big Data, it is vital to establish robust data protection laws and ethical guidelines to ensure its responsible and secure usage.

Conclusion:

Harnessing Big Data is not just a technological feat; it's a paradigm shift that empowers us to make informed decisions, drive innovation, and tackle some of the most pressing challenges of our time. From transforming healthcare and optimizing urban living to combating climate change, the possibilities are endless. As we move forward, let us be mindful of the ethical considerations and ensure that the power of Big Data is harnessed for the collective good. Embrace the data-driven era, and together, we can turn chaos into clarity, and knowledge into meaningful change.

Unlocking the Power of Artificial Intelligence and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) have emerged as transformative technologies that are reshaping industries and revolutionizing our daily lives. As the demand for AI and ML professionals continues to soar, acquiring the right skills and knowledge becomes crucial for anyone seeking a career in this field. In this blog post, let’s explore the fascinating world of AI and ML and delve into the best online machine learning course offered by FutureSkills Prime, a renowned e-learning platform.

The Rise of AI and ML:

Artificial Intelligence refers to the development of intelligent machines that can perform tasks that typically require human intelligence. Machine Learning, on the other hand, is a subset of AI that focuses on algorithms and statistical models, allowing systems to learn from data and make predictions or decisions. Together, AI and ML are driving advancements in various sectors such as healthcare, finance, transportation, and more.
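To make "learn from data and make predictions" concrete, the simplest statistical model is a straight line fitted to examples by ordinary least squares. A minimal sketch in plain Python; real ML systems use far richer models and libraries, and `fit_line` and `predict` are illustrative names with made-up example data.

```python
def fit_line(xs, ys):
    """Learn y = slope * x + intercept from examples by ordinary least squares."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two examples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(model, x):
    """Use the learned parameters to predict y for a new input."""
    slope, intercept = model
    return slope * x + intercept

model = fit_line([1, 2, 3, 4], [3, 5, 7, 9])  # "training data"
print(model)               # (2.0, 1.0)
print(predict(model, 5))   # 11.0, a prediction for an unseen input
```

The "learning" here is just estimating two numbers from data; the same idea of fitting parameters to examples scales up to the models used in practice.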

The future of Artificial Intelligence and Machine Learning:

The future of artificial intelligence and machine learning holds tremendous potential and is poised to revolutionize numerous aspects of our lives. Here are some key areas where AI and ML are expected to make a significant impact:

Automation and Robotics: AI-powered automation is already transforming industries by streamlining processes, increasing efficiency, and reducing costs. With further advancements, we can expect to see increased integration of robots and intelligent machines in various sectors, including manufacturing, healthcare, logistics, and more.

Smart Cities: AI and ML can play a vital role in creating smart cities that are sustainable, efficient, and interconnected. From optimizing energy usage and improving transportation systems to enhancing public safety and managing resources, these technologies can help transform urban living.

Cybersecurity: As cyber threats become more sophisticated, artificial intelligence and machine learning are becoming crucial in cybersecurity. These technologies can analyze vast amounts of data, identify patterns, and detect anomalies, enabling early threat detection and proactive defense mechanisms.

Personalized Experiences: AI and ML enable personalized experiences across various domains. From personalized recommendations in e-commerce and entertainment to personalized healthcare and education, these technologies can tailor services to individual preferences and needs.

Ethical Considerations: As AI and ML become more prevalent, ethical considerations surrounding bias, privacy, and accountability will be crucial. Efforts are being made to ensure transparency, fairness, and responsible deployment of AI/ML systems to address these concerns.
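The cybersecurity point about detecting anomalies can be illustrated with the simplest statistical rule: flag any observation that lies far from normal behaviour, measured in standard deviations (a z-score). This is a minimal sketch under stated assumptions; the failed-login counts are hypothetical, the 3.0 threshold is a common rule of thumb rather than a standard, and production security tools use far more sophisticated models.

```python
import statistics

def find_anomalies(baseline, observations, z_threshold=3.0):
    """Return indices of observations far from the baseline's normal behaviour.

    An observation is anomalous if it lies more than z_threshold sample
    standard deviations from the baseline mean (a simple z-score rule).
    """
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)  # requires at least two baseline values
    if stdev == 0:
        # Perfectly constant baseline: any different value is unusual.
        return [i for i, x in enumerate(observations) if x != mean]
    return [i for i, x in enumerate(observations)
            if abs(x - mean) / stdev > z_threshold]

# Hypothetical failed-login counts per hour for one account.
baseline = [4, 5, 6, 5, 4, 6, 5, 5]
print(find_anomalies(baseline, [5, 7, 40]))  # [2]
```

A count of 7 is slightly high but within normal variation; 40 failed logins in an hour stands out and would trigger an alert for investigation.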

As AI and ML continue to shape the future, it's essential to stay ahead by equipping yourself with the right skills, expertise, and knowledge. FutureSkills Prime's online machine learning certification course and artificial intelligence course provide an excellent opportunity to dive into this exciting field and build a solid foundation.

Here are the reasons why FutureSkills Prime stands out:

Expertly Designed Curriculum

Hands-On Practical Experience

Industry-Relevant Content

Engaging Learning Experience

Recognition and nasscom Certification

Access to Prime Career Fairs

Cashback from Government

By enrolling in FutureSkills Prime’s AI & ML courses, you will gain a comprehensive understanding of AI/ML concepts, develop practical skills, and receive industry-recognized certification. Embrace the limitless possibilities of AI/ML and embark on an enriching learning journey with FutureSkills Prime.

Global Ethical Considerations Regarding Mandatory Vaccination in Children

Savulescu, Alberto Giubilini, and Margie Danchin. 2021. “Global Ethical Considerations Regarding Mandatory Vaccination in Children.” The Journal of Pediatrics 231(1):10-16.

Amid the COVID-19 pandemic, the debate over whether vaccines should be mandatory has only intensified. Many experts argue that a degree of coercion is needed to ensure the safety of the wider population, while others argue that this would infringe on the will of children and their parents. Because the debate turns on whether vaccines should be mandated or voluntary, an assessment of the benefits and risks of each approach is vital.

Benefits involved with mandatory vaccines:

* An ill individual can pose a serious threat to the population by carrying an infectious agent that puts others at risk of infection.

* Mandatory vaccination prevents serious harm to others through infection, especially when harm can result from inaction (as with voluntary vaccination).

* Economic effects: influenza alone accounted for $10.4 billion in US spending on costly hospitalizations and treatments. Because influenza is a vaccine-preventable disease, vaccination can substantially reduce the cost of treating illnesses like it.

* Much higher probability of reaching herd immunity: when many individuals are vaccinated, the infection's ability to spread is severely limited.
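Herd immunity has a standard back-of-the-envelope form. As a sketch under the simplest textbook assumptions (homogeneous mixing, a vaccine that fully blocks transmission, lasting immunity), the share of the population that must be immune to halt sustained spread is the herd immunity threshold H, where R_0 is the basic reproduction number (the average number of people one case infects in a fully susceptible population). The numbers below are illustrative, not taken from the cited paper:

```latex
H = 1 - \frac{1}{R_0}, \qquad
R_0 = 4 \;\Rightarrow\; H = 1 - \tfrac{1}{4} = 0.75
```

If the vaccine is only partly effective, with effectiveness E, the required coverage rises to V_c = H / E; for example, with R_0 = 4 and E = 0.9, V_c = 0.75 / 0.9, or about 0.83. This is why more transmissible diseases need higher coverage, which voluntary programs may struggle to reach.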

Risks involved with mandatory vaccines:

* Incentives raise ethical concerns: if not implemented appropriately, they can result in exploitation or undue inducement.

* Some children (or their families) hold cultural or religious beliefs that prevent them from being vaccinated; mandatory vaccination would create conflict for these children.

Benefits involved with voluntary vaccines:

* Many countries believe that voluntary vaccination programs can achieve sufficient coverage and protect the older population.

* Liberty would be an empty concept without risk. Almost everything, like driving a car, entails some degree of risk. However, it should be noted that risky activities such as driving are heavily regulated and come with their own rules.

* Allows the population to come to their own decision on whether the vaccine is more helpful or harmful for them personally.

Risks involved with voluntary vaccines:

* If a majority of the population opts not to take voluntary vaccines, herd immunity will not be reached, which can lead to a spike in cases and a higher fatality rate.

* Vaccines are less costly than hospitalization and treatment, so much more money would have to be spent caring for people infected with an otherwise preventable disease.

Mandatory vaccines help ensure the continual protection of the general population and allow for herd immunity to be reached. However, the ethical concerns should not be easily dismissed and instead should garner further discussion. Ultimately it is the nature of the disease that determines whether a vaccine should be mandated or voluntary. Mandatory vaccinations are justified when the public health benefit far outweighs the risks involved with such impositions.

My Answer to Case 6 Q2

1. The website lists the profiles of the individuals crossing the street. The test asks which group would morally be the "lesser of two evils," but we couldn't know (and therefore the car wouldn't know) any information about the people aside from age, gender, and physical condition (in most cases). Would the choice be different if we knew the people's profiles beforehand?

It might make a difference in some cases. For instance, in the given example one option includes a criminal (though their crime isn't listed). Some people who see that the same number of people would be injured or killed in either choice might, on noticing that one side includes a criminal, be more inclined to hit that group. Conversely, a driver may opt to avoid a group that includes multiple pregnant women or doctors (etc.).

2. The main question is, what decision would you personally choose given the information of the outcome?

Looking at the information provided, I'll assume that I know the identities of the people getting hit. Even though I would most likely try to find a third option where I'm the only one who gets hurt, I'll say that the only options are the two provided. So I would most likely choose the second option, because this way the doctor would survive; plus, the people crossing in the second option are breaking the law by crossing on red.

3. The purpose of the scenario is to try and have the AI replicate human emotions and morals to determine which decision to make. However, if the AI were to think in a completely logical sense, what decision would it make?

If we're talking strictly logically, it would stand to reason that the AI would choose the second option, because in that situation the people crossing are breaking the law; if the AI is programmed to follow the law, that is the option it would most likely choose.

4. Because the car is self-driving (and we'll assume that in this scenario there are absolutely no fail-safes to give the driver control, so every decision is made by the program driving the car) and is programmed to put the driver's safety ahead of others', would the driver still be held responsible for the car's actions?

Taking into consideration the above restriction, I don't think that the driver would be held responsible in this situation. However, it's difficult to say who would be held responsible.

5. How can you apply deontological ethics to this case?

Robot ethics are designed to follow certain rules while attempting to imitate human reaction and emotion, but the main concern is to make decisions that ensure the safety of the people in the car. Technically in either case the safety of the driver/passengers is ensured and the casualties are the same so in order for the case to be considered ethical in terms of deontological ethics then the car would need to swerve.

6. How can you apply utilitarian ethics to this case?

It's difficult to apply utilitarian ethics in this case because it's hard to say which would be the more ethical choice if the driver was a human. One could state that both options have the same ethical weight because the number of casualties would be equal (the same amount of people with similar identities). There is no positive or negative action to take that would result in anything other than a negative outcome.

7. How can you apply virtue ethics to this case?

Again it's difficult to apply this type of ethics. It comes down to if the person committing the action sees their action as ethical. In this instance the AI would have to consider whatever choice it decided to be the most ethical option.

A missing ingredient in COVID oversight: Equity

By Joseph Foti and Norman Eisen

The response to COVID-19 is not just record-level spending and borrowing. It may already constitute a wealth reallocation of historic proportions, with tremendous implications for equity, future growth, and climate. The health and economic crises (and, in some cases, the government response to them) have not only been felt more acutely in particular businesses and industries; they have also disproportionately hurt black- and minority-owned businesses and the communities they serve. As experts at watchdog organizations and at our own organizations have pointed out, transparency and oversight are essential to ensuring a fair recovery that meets the needs of those who are struggling the most.

The 20th-century transparency toolkit will not be enough by itself. Moving forward, the “holy trinity” of transparency and anti-corruption reform (fighting waste, fraud, and abuse) needs a fourth element: striving for equity. The case for this approach remains fundamental: more efficient spending means money for other programs or lower taxes that benefit the average citizen. To capture the differential impacts of federal actions, oversight institutions must ask and answer the right questions as a matter of racial, social, and economic justice. They must be able to gather and generate the data they need and to guide implementation at agencies. This will help inform citizens about who received the money, why, and who benefited from it.

Critics on the left and the right, as well as non-partisan observers, have raised questions about the distributional appropriateness of stimulus spending. The concern, then, should not be partisan but a basic element of policy analysis. Examples from the CARES Act (Pub.L. 116-136) show why we need to give greater priority to equity.

Bond buying rejuvenated financial markets but left citizens and small businesses behind. The CARES Act directs the Federal Reserve (Fed) to buy bonds to support markets and employment. The Fed projects holding $9T in assets by the end of the year, a four-fold increase over the decade. The Fed began buying bonds of large companies well before it began supporting small and mid-sized companies. This came shortly on the heels of a regulatory process, exempted from the Administrative Procedure Act, that some saw as favoring large capital and the oil industry. This is particularly relevant to minority-owned businesses: according to McKinsey, because these businesses are smaller and have less access to traditional banks, they were considerably less able to access capital.

Tax refunds allowed executives to profit from the crisis. The problem of inequality is not only that smaller or minority-owned businesses get less access to relief; it is also the disproportionate and sometimes questionable relief at the top. One legal but questionable example stands out. The CARES Act authorized oil companies to obtain tax refunds on net operating losses from prior years. As a result, Diamond Offshore Drilling, already in bankruptcy proceedings, saw an immediate benefit of $9.7M in tax rebates. Shortly thereafter, the company asked the bankruptcy judge to permit a coincident $9.7M bonus to nine executives. This was discovered through Securities and Exchange Commission filings. Proprietorships or other legal vehicles are not subject to the same requirements as non-profits or publicly traded companies.

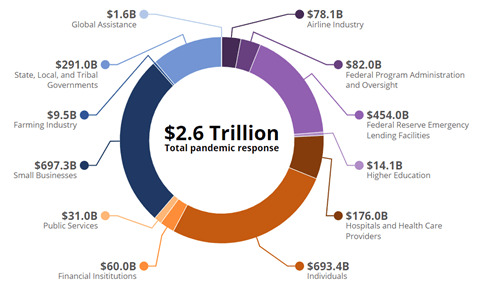

Without accountability for misuse of funds, some companies applied for and received Paycheck Protection Program (PPP) loans despite having ready access to ample capital. The fiscal elements of stimulus ($2.6 trillion) include loans, grants and direct assistance to individuals and organizations. Intended recipients of PPP funding are those companies that, without federal assistance, would be unable to retain their workforces. However, some businesses that received hefty credit extensions or additional loans shortly before the pandemic hit also received a federal loan. For example, Legacy Housing, a Texas-based manufacturer of pre-built homes, announced on April 1 its access to $25 million in credit. On April 10, it received an additional $6.5 million S.B.A loan from the federal government. (In response to reporting, Legacy returned the $6.5 million in federal assistance, according to the company’s executive chairman. The chairman added that “Legacy is a highly leveraged company without cash on hand. Here was a way to get a cash infusion.”)

Black and minority-owned businesses struggled to get relief under the CARES Act. While lawmakers intended for the program to prioritize “underserved” markets and business owners of color, independent analyses indicate that counties with higher ratios of black-owned businesses tended to have lower rates of PPP allocation.

Reducing operational barriers for companies creates health, safety, and environmental risks for vulnerable groups. According to a recent cross-national comparison, the US had one of the least green stimulus packages, with serious environmental justice and generational consequences. While a detailed list of tax expenditures is not available, a worldwide survey showed they include forgone revenue, subsidies, waivers of regulation, participation, and oversight, and weakened safety or environmental liability. These non-financial elements of stimulus are estimated at $298 billion and may disproportionately benefit industries that do significant damage to the environment and human health. Evidence shows that such environmental damage is most severe in communities of color.

Another questionable tax expenditure: the depreciation period for most commercial real estate was shortened from 39 to 15 years. It is unclear how this will affect federal coffers, how it addresses the effects of COVID-19, and whether such generous changes to the tax code will sunset over time.
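To give a sense of scale, the sketch below compares annual deductions under the two recovery periods, assuming simple straight-line depreciation on a hypothetical $1,000,000 depreciable basis. Real tax computations apply conventions (such as mid-month) and eligibility rules that this ignores, so treat it as an order-of-magnitude illustration, not a tax calculation; the function name is made up.

```python
def straight_line_annual_deduction(cost_basis: float, recovery_years: float) -> float:
    """Annual deduction when the cost is spread evenly over the recovery period."""
    if recovery_years <= 0:
        raise ValueError("recovery period must be positive")
    return cost_basis / recovery_years

cost_basis = 1_000_000  # hypothetical depreciable basis, in dollars
old = straight_line_annual_deduction(cost_basis, 39)  # 39-year schedule
new = straight_line_annual_deduction(cost_basis, 15)  # 15-year schedule

print(f"39-year schedule: ${old:,.0f} per year")
print(f"15-year schedule: ${new:,.0f} per year")
print(f"Extra annual deduction in the early years: ${new - old:,.0f}")
```

Under these assumptions the annual deduction rises from about $25,641 to about $66,667, roughly $41,000 more per year during the first 15 years. In this simplified model the total deducted over the asset's life is the same $1,000,000 either way; what changes is how early the deductions are taken.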

Despite strong work by some reporters, think tanks, and legislators, no official agency is tasked with identifying whether money reached the individuals, businesses, and communities hardest hit by the pandemic and its economic effects. It is not enough for non-profits and the media to ask who benefits from record spending. Answering that question requires big data, the stamp of official, impartial review, and clear guidance for civil servants making policy. The Pandemic Response Accountability Committee (PRAC) has taken some positive steps in tracking who received major sources of spending. (See figure below for their reporting.) While this is a good start, many of the categories leave open whether the money benefited the most affected or the most connected.

FIGURE: A promising start: PRAC reporting on the destination of US Stimulus Money

Various reforms could be undertaken to monitor the distributional impacts of the recovery.

Create or adapt oversight institutions: This could require a new institution (an “Office of Distributional Impacts”) or modifying an existing institutional mandate (such as OMB or the PRAC), not necessarily requiring legislation.

Track costs and benefits for minority communities: A core aspect of these institutions’ mandates would be public reporting on distribution of costs and benefits of federal actions across race, class, gender, generation, geographic region, and size of enterprise. A variety of tools and methods could be used, whether integrated into existing informational assessments (environmental or cost-benefit analysis) or as standalone documents.

Develop new standards of adequacy: Early stages of reporting might focus only on whether benefits went to communities with the greatest COVID-19 impacts. A more complex process might report on whether costs and benefits change depending on the discount rate. Over time, standards of adequacy and quality would develop.

None of this would predetermine whether a program should be undertaken. Rather, like other forms of impact assessment, this would be a set of responsibilities and processes to identify, predict, evaluate, and potentially mitigate the distributional effects of an action or major decision. Americans deserve transparency about whether their money was leveraged effectively. While many have done yeoman’s work researching these difficult issues, it should not remain primarily the work of non-governmental actors to ask basic questions about whether tax dollars are driving people together or apart.

Joseph Foti is the Chief Research Officer of the Open Government Partnership. He leads the Analytics and Insights team, which is responsible for major research initiatives, managing OGP’s significant data resources, and ensuring the highest quality of analysis and relevance in OGP publications. Ambassador Norman Eisen (ret.) is a senior fellow in Governance Studies at Brookings and an expert on law, ethics, and anti-corruption.

How to make robots that we can trust

by Michael Winikoff

Self-driving cars, personal assistants, cleaning robots, smart homes - these are just some examples of autonomous systems.

With many such systems already in use or under development, a key question concerns trust. My central argument is that having trustworthy, well-working systems is not enough. To enable trust, the design of autonomous systems also needs to consider other requirements, including a capacity to explain decisions and to have recourse options when things go wrong.

When doing a good job is not enough

The past few years have seen dramatic advances in the deployment of autonomous systems. These are essentially software systems that make decisions and act on them, with real-world consequences. Examples include physical systems such as self-driving cars and robots, and software-only applications such as personal assistants.

However, it is not enough to engineer autonomous systems that function well. We also need to consider what additional features people need to trust such systems.

For example, consider a personal assistant. Suppose the personal assistant functions well. Would you trust it, even if it could not explain its decisions?

To make a system trustable we need to identify the key prerequisites to trust. Then, we need to ensure that the system is designed to incorporate these features.

A trustworthy robot may need to be able to explain its decisions.

What makes us trust?

Ideally, we would answer this question using experiments. We could ask people whether they would be willing to trust an autonomous system. And we could explore how this depends on various factors. For instance, is providing guarantees about the system’s behaviour important? Is providing explanations important?

Suppose the system makes decisions that are critical to get right, for example, self-driving cars avoiding accidents. To what extent are we more cautious in trusting a system that makes such critical decisions?

These experiments have not yet been performed. The prerequisites discussed below are therefore effectively educated guesses.

Please explain

Firstly, a system should be able to explain why it made certain decisions. Explanations are especially important if the system’s behaviour can be non-obvious, but still correct.

For example, imagine software that coordinates disaster relief operations by assigning tasks and locations to rescuers. Such a system may propose task allocations that appear odd to an individual rescuer, but are correct from the perspective of the overall rescue operation. Without explanations, such task allocations are unlikely to be trusted.

Providing explanations allows people to understand the systems and can support trust in unpredictable systems and unexpected decisions. These explanations need to be comprehensible and accessible, perhaps using natural language. They could be interactive, taking the form of a conversation.

If things go wrong

A second prerequisite for trust is recourse. This means having a way to be compensated if you are adversely affected by an autonomous system. Recourse is necessary because it allows us to trust a system that isn’t 100% perfect. And in practice, no system is perfect.

The recourse mechanism could be legal, or a form of insurance, perhaps modelled on New Zealand’s approach to accident compensation.

However, relying on a legal mechanism has problems. At least some autonomous systems will be manufactured by large multinationals. A legal mechanism could turn into a David versus Goliath situation, since it involves individuals, or resource-limited organisations, taking multinational companies to court.

More broadly, trustability also requires social structures for regulation and governance. For example, what (inter)national laws should be enacted to regulate autonomous system development and deployment? What certification should be required before a self-driving car is allowed on the road?

It has been argued that certification, and trust, require verification. Specifically, this means using mathematical techniques to provide guarantees regarding the decision making of autonomous systems. For example, guaranteeing that a car will never accelerate when it knows another car is directly ahead.
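To make the idea of a guarantee concrete, here is a minimal Python sketch. It is only a toy stand-in for formal verification: it assumes a hypothetical controller whose state space is small and discrete (a speed level and the gap to any car directly ahead), so every state can be checked exhaustively. Real verification tools such as model checkers and theorem provers handle far larger or continuous state spaces. The names `controller`, `safety_property` and `verify` are invented for illustration.

```python
from __future__ import annotations

from enum import Enum
from itertools import product
from typing import Optional


class Action(Enum):
    ACCELERATE = "accelerate"
    HOLD = "hold"
    BRAKE = "brake"


# Hypothetical toy model: the car knows its speed level (0..MAX_SPEED)
# and the gap, in car lengths, to a car directly ahead (None = no car).
MAX_SPEED = 5
GAPS = [None, 1, 2, 3]


def controller(speed: int, gap: Optional[int]) -> Action:
    """Hypothetical decision rule standing in for a real controller."""
    if gap is not None and gap <= 1:
        return Action.BRAKE
    if gap is not None:
        return Action.HOLD
    if speed < MAX_SPEED:
        return Action.ACCELERATE
    return Action.HOLD


def safety_property(gap: Optional[int], action: Action) -> bool:
    """Never accelerate when a car is known to be directly ahead."""
    return not (gap is not None and action is Action.ACCELERATE)


def verify(ctrl) -> list[tuple[int, Optional[int]]]:
    """Check every state of the finite model; return any counterexamples."""
    counterexamples = []
    for speed, gap in product(range(MAX_SPEED + 1), GAPS):
        if not safety_property(gap, ctrl(speed, gap)):
            counterexamples.append((speed, gap))
    return counterexamples


if __name__ == "__main__":
    print(verify(controller))  # an empty list: the property holds in every state

    # A careless controller that always accelerates is caught immediately.
    always_accelerate = lambda speed, gap: Action.ACCELERATE
    print(len(verify(always_accelerate)), verify(always_accelerate)[0])
```

An empty result means the property holds for every state of this model, which is the kind of guarantee testing a handful of scenarios cannot give. The guarantee is only as good as the model, though: if real situations fall outside the modelled states, the proof says nothing about them.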

Incorporating human values

In some domains, the system’s decision-making process should take into account relevant human values. These may include privacy, human autonomy and safety.

Imagine a system that takes care of an aged person with dementia. The elderly person wants to go for a walk. However, for safety reasons they should not be permitted to leave the house alone. Should the system allow them to leave? Prevent them from leaving? Inform someone?

Deciding how best to respond may require consideration of relevant underlying human values. Perhaps in this scenario safety overrides autonomy, but informing a human carer or relative is possible, although the choice of whom to inform may be constrained by privacy.
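One way to make such a trade-off explicit is a priority ordering over values. The Python sketch below is a hypothetical illustration, not a method from the article: privacy is treated as a hard constraint on who may be informed, and the remaining options are ranked lexicographically, safety first and autonomy second. The option list, the numeric scores and `AUTHORISED_CONTACTS` are all invented for the example.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Option:
    description: str
    safety: int                     # hypothetical score, higher is safer
    autonomy: int                   # hypothetical score, higher respects their wishes more
    notifies: Optional[str] = None  # who, if anyone, is informed


# Privacy as a hard constraint: only people the person (or their guardian)
# has agreed may be informed. Hypothetical list.
AUTHORISED_CONTACTS = {"carer"}

OPTIONS = [
    Option("let them walk alone", safety=0, autonomy=2),
    Option("keep them at home", safety=2, autonomy=0),
    Option("keep them at home and inform the carer", safety=2, autonomy=1, notifies="carer"),
    Option("keep them at home and alert a neighbour", safety=2, autonomy=1, notifies="neighbour"),
]


def respects_privacy(option: Option) -> bool:
    """An option is permitted if it informs no one, or only an authorised contact."""
    return option.notifies is None or option.notifies in AUTHORISED_CONTACTS


def choose(options: list[Option]) -> Option:
    """Drop options that breach privacy, then pick the best by comparing
    (safety, autonomy) tuples: safety overrides autonomy."""
    permitted = [o for o in options if respects_privacy(o)]
    return max(permitted, key=lambda o: (o.safety, o.autonomy))


if __name__ == "__main__":
    print(choose(OPTIONS).description)  # keep them at home and inform the carer
```

Treating privacy as a filter rather than a score means no amount of extra safety can justify informing an unauthorised person; whether a value should be a hard constraint or a weighted preference is exactly the kind of design question the article suggests needs input beyond computer science.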

Making autonomous systems smarter

These prerequisites – explanations, recourse and human values – are needed to build trustable autonomous systems. They need to be considered as part of the design process. This would allow appropriate functionalities to be engineered into the system.

Addressing these prerequisites requires interdisciplinary collaboration. For instance, developing appropriate explanation mechanisms requires not just computer science but human psychology. Similarly, developing software that can take into account human values requires philosophy and sociology. And questions of governance and certification involve law and ethics.

Finally, there are broader questions. First, what decisions are we willing to hand over to software? Second, how should society prepare for and respond to the multitude of consequences that will come with the deployment of automated systems?

For instance, considering the impact on employment, should society respond by introducing some form of Universal Basic Income?

Michael Winikoff is a Professor in Information Science at the University of Otago.

This article was originally published on The Conversation.


Medical Marijuana Patient Consent

While medical marijuana is recognized as a viable treatment for patients suffering from certain conditions, the legal ramifications are more delicate. Studies show — and clinical trials continue to demonstrate — that there are several medicinal uses for cannabis products. Medical marijuana, however, cannot be handled like other pharmaceutical interventions.

The War on Medical Marijuana

The Food and Drug Administration (FDA) evaluates substances that can potentially be used as medicine. When a new drug is discovered, the FDA evaluates it before it can be marketed to patients. It also decides whether a medicine should be available over the counter or only with a physician’s prescription.

Under federal law, marijuana is a Schedule I controlled substance, a category defined in part as having no currently accepted medical use. The federal ban dates back decades and was driven in part by concerns that marijuana caused violent behavior. In recent years, 23 states have passed legislation making access to cannabis products for medical purposes legal for patients with certain conditions. Doctors are caught in the middle.

Doctors who want to use marijuana to treat their patients walk a fine line between state and federal law, as their medical licenses might hang in the balance. A doctor cannot prescribe marijuana, because federal law does not allow Schedule I substances to be prescribed. A doctor who attempted to write a prescription for a banned substance could put their medical license at risk.

Those who follow recent medical research, however, know that cannabis can be effective in relieving debilitating symptoms of some serious chronic disorders. Doctors who treat patients with seizure disorders, chronic pain or who are undergoing chemotherapy are anxious to relieve the suffering they see every day.

What Is Patient Consent?

Patient consent is a legal term familiar to anyone who practices medicine. It is designed to ensure that doctors educate their patients about the treatments, tests and procedures they undergo. A doctor must explain the treatment in a way that lets the patient understand what to expect, and must also discuss the risks and potential outcomes.

Patient consent confirms that the patient is giving the doctor permission to perform the procedure with an understanding of the risks. It follows the legal principle that patients have the right to decide what treatments they undergo. A physician has an ethical duty to include the patient in healthcare decisions.

Fully informed consent includes discussing:

Purpose and nature of the treatment

Feasible alternative treatments

Risks and benefits of all alternative treatments

Confirmation of patient understanding

Patient consent

Patient consent may only be presumed or implied in emergency situations. For all other medical treatments and procedures, the patient needs to sign a written consent form.

Getting Consent to Treat With Medical Marijuana

Medical marijuana creates a dilemma for doctors because of its strange legal position. While the state where you practice might consider cannabis a legal and viable treatment for your patients, the FDA doesn’t. Getting consent from your patient to treat with medical cannabis is especially important.

The patients who might benefit most from marijuana treatment are also some of the highest-risk. They have severe medical conditions that may predispose them to adverse outcomes from any type of treatment. In the case of cannabis treatment, you’re also working in a confusing legal environment.

Most states with medical marijuana programs also have a clearly defined standard of care. It’s important to stick to the state guidelines, because doing so means the state assumes some of the risk. If there is a negative outcome from cannabis treatment, you can rely on the fact that you followed the state-sanctioned protocol for marijuana treatment.

A thorough patient consent for medical marijuana treatment includes informing your patient that:

Federal law prohibits the possession, production and distribution of marijuana in any form for any purpose. Even conforming to state law with respect to marijuana possession and usage cannot protect you from federal prosecution.

Medical marijuana isn’t produced according to any government standards. Unlike other medicines, the FDA does not oversee cannabis production. Medical cannabis could contain contaminants or other substances not required for your treatment — you’re at the mercy of the quality control systems put in place by local cultivators.

While under the influence of marijuana, you can’t operate a vehicle or heavy equipment. You can be ticketed for driving under the influence if you’re caught in your car with cannabis in your system.

Using marijuana could worsen the symptoms of certain mental disorders, such as schizophrenia.

Cannabis should not be consumed with alcohol or used in a car.

You want to be sure your patient is giving you the most recent and honest information about their condition before recommending marijuana treatment. Patients should attest to the truth of all their statements and be required to follow up with you periodically.

Treating Patients While Protecting Doctors

There are a few legal considerations when treating a patient with medical marijuana. Your concern for your patient’s wellbeing comes first, but if you put your medical license in jeopardy, you won’t be able to help patients in the future. It’s a smart idea to understand the legal situation you face and navigate it carefully.

Under the First Amendment, you have a right to say what you believe to be true. Although your actions may be restricted by federal law, medical ethics bind you to share credible information with your patients. If you truly believe their suffering can be lessened by medical cannabis products, you have a duty to share this information.

No one can prosecute you for speaking freely to your patients — that’s why doctors recommend marijuana treatment rather than prescribe it. Writing a prescription for a banned substance is prohibited, but discussing its use is perfectly fine.

If you’re concerned about the legal risks of treating patients with medical marijuana, be cautious about your written communications on the subject. Your record-keeping duty as a doctor requires a medical record for each patient visit. That’s where you can note your recommendation for cannabis, along with other information about the visit. Your patient is entitled to a copy of this medical record and should be able to obtain the medicine they need with this document.

Patient consent is an important part of treating patients, especially with medical marijuana. Be sure to secure adequate consent in writing with a detailed consent form specifically tailored to cannabis treatment. Treating your patients requires protecting your medical license.

Example Medical Marijuana Consent Form

Below is an example medical marijuana consent form, for illustrative purposes.

_________________________________________________________________________

Medical Marijuana Consent Form

A qualified physician may not delegate the responsibility of obtaining written informed consent to another person. The qualified patient (or the patient’s parent or legal guardian, if the patient is a minor) must initial each section of this consent form to indicate that the physician explained the information and, along with the qualified physician, must sign and date the informed consent form.

a. The Federal Government’s classification of marijuana as a Schedule I controlled substance.

_____ The Federal Government has classified marijuana as a Schedule I controlled substance. Schedule I substances are defined, in part, as having (1) a high potential for abuse; (2) no currently accepted medical use in treatment in the United States; and (3) a lack of accepted safety for use under medical supervision. Federal law prohibits the manufacture, distribution and possession of marijuana even in states, such as Florida, which have modified their state laws to treat marijuana as a medicine.

_____When in the possession or under the influence of medical marijuana, the patient or the patient’s caregiver must have his or her medical marijuana use registry identification card in his or her possession at all times.

b. The approval and oversight status of marijuana by the Food and Drug Administration.

_____Marijuana has not been approved by the Food and Drug Administration for marketing as a drug. Therefore, the “manufacture” of marijuana for medical use is not subject to any federal standards, quality control, or other oversight. Marijuana may contain unknown quantities of active ingredients, which may vary in potency, impurities, contaminants, and substances in addition to THC, which is the primary psychoactive chemical component of marijuana.

c. The potential for addiction.

_____Some studies suggest that the use of marijuana by individuals may lead to a tolerance to, dependence on, or addiction to marijuana. I understand that if I require increasingly higher doses to achieve the same benefit or if I think that I may be developing a dependency on marijuana, I should contact Dr. _________________ (name of qualified physician).

d. The potential effect that marijuana may have on a patient’s coordination, motor skills, and cognition, including a warning against operating heavy machinery, operating a motor vehicle, or engaging in activities that require a person to be alert or respond quickly.