Keto BHB

What is Xoth Keto BHB?

THE RIGHT CHOICE?

A recent study published in the journal Diabetes, Obesity and Metabolism reportedly found that Xoth Keto BHB supported burning fat for energy instead of carbohydrates, greatly increasing weight loss and energy. Furthermore, TV shows have recently named Xoth Keto BHB the “Holy Grail” of weight loss for good reason: IT WORKS.

It is important to note that the 100% BHB (beta-hydroxybutyrate) formula used in the study was the real deal, and that Xoth Keto BHB exceeds the study product's potency using proprietary methods.

Bottom Line: It Works and it’s Better for your Health!

No More Stored Fat: With the massive load of carbohydrates in today's foods, our bodies are conditioned to burn carbs for energy instead of fat, because carbs are an easier energy source for the body to use.

Fat, The New Energy: Ketosis is the state in which your body burns fat for energy instead of carbs. Ketosis is extremely hard to reach on your own and takes weeks to accomplish. Xoth Keto BHB helps your body achieve ketosis fast and burn fat for energy instead of carbs!

More Health Benefits: Xoth Keto BHB works almost instantly to help support ketosis by burning fat for energy. Fat is the body's ideal source of energy, and when you are in ketosis you experience energy and mental clarity like never before, along with very rapid weight loss.

Free Keto Cookbook

Free Physical Cookbook: Hurry Up, Only a Few Left!

Get Your FREE Physical Copy of The Essential Keto Snacks Cookbook Today!

Claim Here To Get a Free Book

Pokemon Go | Gameplay | Augmented Reality Gaming | Pokemon Collection | Battle System | Development | Pokemon Go Plus

Pokemon Go

Pokemon Go is an augmented reality (AR) mobile game developed by Niantic in collaboration with Nintendo and The Pokemon Company for iOS and Android devices.

Pokémon Collection, Gyms and Raids

The game is played on mobile devices and uses the device's location (GPS) to let players locate, capture, train, and battle virtual creatures called Pokémon, which appear as if they were in the player's real-world location.
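Location-based games need some way to decide whether a creature is close enough to the player's GPS position. The sketch below is a hypothetical illustration, not Niantic's actual logic: it computes the great-circle (haversine) distance between two latitude/longitude points and treats anything within an assumed 40-metre radius as reachable. The function names, coordinates, and radius are all made up for the example.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def is_in_range(player, spawn, radius_m=40):
    """Hypothetical rule: a creature is reachable if it lies within radius_m of the player."""
    return haversine_m(*player, *spawn) <= radius_m


player = (40.7580, -73.9855)  # example GPS fix
nearby = (40.7582, -73.9853)  # roughly 28 m away
far = (40.7484, -73.9857)     # roughly 1 km away
print(is_in_range(player, nearby), is_in_range(player, far))  # True False
```

The haversine formula is used because GPS gives angles on a sphere, so a plain Euclidean difference of latitudes and longitudes would misjudge distances away from the equator.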

The game is free-to-play. It uses a freemium business model combined with local advertising and supports in-app purchases of additional in-game items. The game launched with around 150 species of Pokémon, a number that has since grown to around 700. It was one of the most used mobile apps of 2016 and had been downloaded 500 million times worldwide by the end of that year.

Pokemon Go is a location-based game built on augmented reality (AR) technology. It has been credited with helping local businesses grow and promoting physical activity, but it has also attracted controversy for contributing to accidents and creating public nuisances. Various governments expressed concerns about the game's security, and some countries regulated its use. By May 2018 the game had over 147 million monthly active users, by early 2019 it had passed one billion downloads, and by 2020 it had grossed more than $6 billion in revenue.

Graphic Design | Graphic Design Courses | Career Opportunities for Graphic Designers

What Is Graphic Design?

Graphic design is defined as “the art and practice of planning and projecting ideas and experiences with visual and textual content.” In other terms, graphic design communicates certain ideas or messages in a visual way. These visuals can be as simple as a business logo or as complex as page layouts on a website.

“Graphic design takes graphical and typographical elements and implements them into various types of media,” says designer Alexandros Clufetos, when asked to elaborate on the definition of graphic design. “It helps the creator connect with the consumer. It conveys the message of the project, event, campaign or product.”

Graphic design can be used by companies to promote and sell products through advertising, by websites to convey complicated information in a digestible way through infographics, or by businesses to develop an identity through branding, among other things.

“Every day, we take a great many of the subtly creative things around us for granted. However, hidden in every magazine corner, exit sign or textbook lies a set of design ideas that influence our perceptions,” says Jacob Smith, founder of illustration studio ProductViz.

“It’s also important to consider that although many graphic design projects have commercial purposes, such as advertisements and logos, graphic design is also used in other settings, and graphic design work is often created purely as a means of artistic expression.”

Video Editing | Faster Video Editor | Free Video Editing Software

Video editing is the process of manipulating and rearranging video shots to create a new work. Editing is usually considered one part of the post-production process; other post-production tasks include titling, color correction, sound mixing, and so on.

Many people use the term editing to describe all their post-production work, especially in non-professional situations. Whether or not you choose to be picky about terminology is up to you. In this tutorial we are reasonably liberal with our terminology, and we use the word editing to mean any of the following:

Rearranging, adding, and/or removing sections of video clips and/or audio clips.

Applying color correction, filters, and other enhancements.

Creating transitions between clips.
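Transitions are easy to picture in code. The sketch below is a simplified cross-dissolve, assuming each frame is just a list of grayscale pixel values; real editors such as Camtasia work on full-colour frames and offer many other transition types. The helper name `crossfade` is hypothetical. Across the overlapping frames, each output pixel is a weighted average `(1 - t) * a + t * b`, with `t` rising from near 0 to near 1.

```python
def crossfade(clip_a, clip_b, overlap):
    """Join two clips with a linear cross-dissolve over `overlap` frames.

    Each clip is a list of frames; each frame is a list of grayscale pixel
    values (0-255). The last `overlap` frames of clip_a are blended with the
    first `overlap` frames of clip_b.
    """
    overlap = max(0, min(overlap, len(clip_a), len(clip_b)))
    if overlap == 0:
        return clip_a + clip_b  # a straight cut, no transition
    head = clip_a[:-overlap]
    tail = clip_b[overlap:]
    blended = []
    for i in range(overlap):
        t = (i + 1) / (overlap + 1)  # weight of clip_b ramps up across the overlap
        frame_a = clip_a[len(clip_a) - overlap + i]
        frame_b = clip_b[i]
        blended.append([round((1 - t) * pa + t * pb) for pa, pb in zip(frame_a, frame_b)])
    return head + blended + tail


black = [[0, 0]] * 4      # four 2-pixel black frames
white = [[255, 255]] * 4  # four 2-pixel white frames
result = crossfade(black, white, overlap=3)
print(result)  # [[0, 0], [64, 64], [128, 128], [191, 191], [255, 255]]
```

Note that the overlap shortens the combined clip: two 4-frame clips with a 3-frame dissolve produce 5 frames, which is why editors need spare footage ("handles") at clip boundaries for transitions.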

How to become a faster video editor

As we go through, I know we've already got a few questions about this, and we don't need to address it right this second: people are looking for how we speed up this process so they can get better quality, but faster, without spending the whole day doing it?

Definitely. I think the first thing is to be familiar with the software you're going to use.

Some people are really good at using menu items, like the ones up here at the top of Camtasia. All the editing tools are here. However, most of them, if not all of them, have an associated keyboard shortcut. Those are extremely helpful.

If you know your keyboard shortcuts (and most of them across the Windows platform are basically the same for copy, paste, and cut), that is really helpful. So keyboard shortcuts are number one. Two is knowing the rest of your software so you're not digging for something. That shortens the process.

Make a couple of good videos, and make some that are practice ones. Make something on a topic you're already really familiar with. Do you know how to open a new email account? Do you shop in a particular online store? Make those videos so that the first time isn't your first time. You're allowing yourself to learn through that process. Third, use some type of template library if possible.

Machine Learning | Artificial Intelligence | Types of Machine Learning | Deep Learning | Machine Learning Algorithms

Machine Learning

Machine learning is the study of computer algorithms that improve automatically through experience and the use of data. It is a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so.
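To make "learning from training data" concrete, here is a minimal sketch that fits a straight line to a handful of sample points using ordinary least squares and then predicts a value it was never shown. The data set (hours studied versus exam score) and the function names are hypothetical; real projects would usually use a library, but the idea is the same: the model's parameters come from the data, not from hand-written rules.

```python
def fit_line(xs, ys):
    """Learn (w, b) for y = w*x + b by minimising squared error on the training data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def predict(model, x):
    """Apply the learned parameters to a new, unseen input."""
    w, b = model
    return w * x + b


# Hypothetical training data: hours studied -> exam score
train_x = [1, 2, 3, 4, 5]
train_y = [52, 57, 61, 68, 72]

model = fit_line(train_x, train_y)
print(round(predict(model, 6), 1))  # 77.3
```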

Machine learning algorithms are used in a wide variety of applications, such as medicine, email filtering, speech recognition, and computer vision, where it is difficult to develop conventional algorithms to perform the required tasks.

A subset of machine learning is closely related to computational statistics, which focuses on making predictions using computers, but not all machine learning is statistical learning. Some implementations of machine learning use data and neural networks in a way that mimics the workings of a biological brain. In its application to business problems, machine learning is also referred to as predictive analytics.
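The simplest unit of such a network is a single artificial neuron, or perceptron, which is only loosely inspired by biological neurons. The sketch below is a textbook illustration rather than a production neural network: it learns the logical AND function from four labelled examples by nudging its weights whenever its answer is wrong. The names `train_perceptron` and `AND_DATA` are hypothetical.

```python
def step(z):
    """Threshold activation: the neuron 'fires' (1) only if its input is positive."""
    return 1 if z > 0 else 0


def train_perceptron(samples, epochs=10, lr=1):
    """Classic perceptron learning rule on two binary inputs."""
    w = [0, 0]
    b = 0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            error = target - step(w[0] * x1 + w[1] * x2 + b)
            # Nudge the weights toward the correct answer when the neuron is wrong
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND_DATA)
predictions = [step(w[0] * x1 + w[1] * x2 + b) for (x1, x2), _ in AND_DATA]
print(predictions)  # [0, 0, 0, 1]
```

Deep learning stacks many such units in layers and replaces the hard threshold with smooth activations so the weights can be trained by gradient descent.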

What is Artificial Intelligence (AI)?

Artificial intelligence is the science of making machines that think and act like humans. This may sound easy, but no existing computer begins to match the complexities of human intelligence. Computers excel at following rules and executing tasks, but sometimes an action that is relatively simple for a person can be tremendously complex for a computer.

For example, carrying a tray of drinks through a crowded bar and serving them to the correct customers is something servers do every day, yet it is a complex exercise in decision making, based on a high volume of data being transmitted between neurons in the human brain.

Computers are not there yet, but machine learning and deep learning are steps toward a key element of this goal: analyzing large volumes of data and making decisions based on it with as little human intervention as possible.

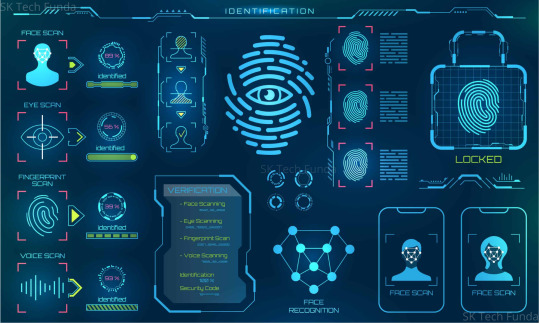

Biometrics in 2021 | Types of Biometrics | Biometric Data Security | Ways to Protect Biometric Identity

Biometrics in 2021

Biometrics are rising as an advanced layer in many personal and enterprise security systems. Because they rely on the unique identifiers of your biology and behavior, this may seem foolproof. However, biometric identity has made many people cautious about its use as standalone authentication.

Modern cybersecurity is focused on reducing the risks of this powerful security solution: traditional passwords have long been a point of weakness for security systems. Biometrics aims to answer this problem by linking proof of identity to our bodies and patterns of behavior.

In this article, we'll explore the basics of how cybersecurity uses biometrics. To help break things down, this review answers some common biometrics questions:

What does biometrics mean?

What are the types of biometrics? (examples of biometric identifiers)

Why biometrics?

Who invented biometrics? (history of biometrics)

What is biometrics used for? (use cases in 7 significant domains)

Is biometrics accurate and reliable in 2021?

Why is biometrics controversial?

And much more

What is Biometrics?

For a quick biometrics definition: biometrics are biological measurements, or physical characteristics, that can be used to identify individuals. For example, fingerprint mapping, facial recognition, and retina scans are all forms of biometric technology, but these are just the most recognized options.

Researchers claim that the shape of an ear, the way someone sits and walks, unique body odors, the veins in one's hands, and even facial contortions are other unique identifiers. These traits further define biometrics.
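Whatever the trait, most biometric systems end up comparing a stored template with a freshly captured sample and accepting the match if the two are similar enough. The sketch below assumes each sample has already been reduced to a short numeric feature vector (the vectors here are invented; real systems extract them with specialised algorithms) and uses cosine similarity with a hypothetical 0.95 threshold.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two feature vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(enrolled_template, probe, threshold=0.95):
    """Accept the probe as the enrolled person if it is similar enough."""
    return cosine_similarity(enrolled_template, probe) >= threshold


enrolled = [0.12, 0.80, 0.35, 0.44]     # stored at enrolment (invented values)
same_person = [0.10, 0.82, 0.33, 0.47]  # new scan of the same person
impostor = [0.70, 0.10, 0.60, 0.20]     # scan of someone else
print(verify(enrolled, same_person), verify(enrolled, impostor))  # True False
```

The threshold is the key design choice: raising it lowers the chance of accepting an impostor but raises the chance of rejecting the genuine user, which is one reason biometric accuracy remains an open question.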

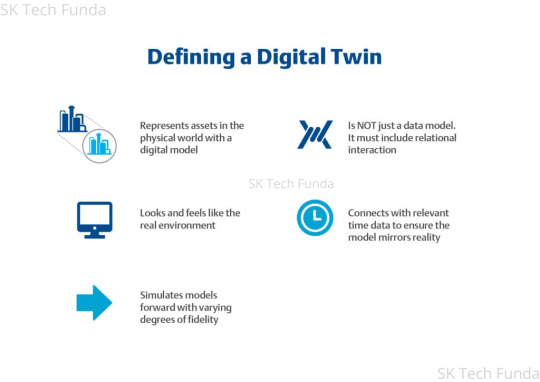

Digital Twin Technology: The Drive towards New Technology

Digital twins are virtual replicas of physical devices that data scientists and IT professionals can use to run simulations before actual devices are built and deployed. They are also changing how technologies such as IoT, AI, and analytics are optimized. Digital twin technology has moved beyond manufacturing and into the merging worlds of the Internet of Things, artificial intelligence, and data analytics.

As more complex things become connected and able to produce data, having a digital equivalent gives data scientists and other IT professionals the ability to optimize deployments for peak efficiency and to create what-if scenarios.

A digital twin is a digital representation of a physical object or system. The technology behind digital twins has expanded to include large items such as buildings, factories, and even cities, and some have said that people and processes can have digital twins, expanding the concept even further. The idea first arose at NASA: full-scale mockups of early space capsules, used on the ground to mirror and diagnose problems in orbit, eventually gave way to fully digital simulations.

However, the term really took off after Gartner named digital twins one of its top 10 strategic technology trends for 2017, saying that within three to five years, "billions of things will be represented by digital twins, a dynamic software model of a physical thing or system." A year later, Gartner again named digital twins a top trend, saying that with an estimated 21 billion connected sensors and endpoints by 2020, digital twins will exist for billions of things in the near future.

Essentially, a digital twin is a computer program that takes real-world data about a physical object or system as inputs and produces as outputs predictions or simulations of how that physical object or system will be affected by those inputs.

How does a Digital Twin work?

A digital twin begins its life being built by specialists, often experts in data science or applied mathematics. These developers research the physics that underlie the physical object or system being mimicked and use that data to develop a mathematical model that simulates the real-world original in digital space.

The twin is constructed so that it can receive input from sensors gathering data from a real-world counterpart. This allows the twin to simulate the physical object in real time, in the process offering insights into performance and potential problems. The twin could also be designed based on a prototype of its physical counterpart, in which case the twin can provide feedback as the product is refined; a twin could even serve as a prototype itself before any physical version is built.
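As a rough illustration of that loop (a physics model plus live sensor input), here is a toy twin of an object cooling toward room temperature. It assumes Newton's law of cooling, `T(t) = T_amb + (T_last - T_amb) * exp(-k * t)`, with an invented cooling constant; `sync()` stands in for receiving a sensor reading and `predict()` for running the simulation forward. The class and its parameters are hypothetical.

```python
import math


class ThermalTwin:
    """Toy digital twin of an object cooling toward ambient temperature.

    Assumed physics model (Newton's law of cooling):
        T(t) = T_amb + (T_last - T_amb) * exp(-k * t)
    """

    def __init__(self, ambient_c, k_per_min):
        self.ambient_c = ambient_c  # surrounding temperature, deg C
        self.k = k_per_min          # cooling constant, 1/min (assumed)
        self.temp_c = None          # last state received from the real object

    def sync(self, sensor_reading_c):
        """Mirror the physical object by storing its latest sensor reading."""
        self.temp_c = sensor_reading_c

    def predict(self, minutes_ahead):
        """Simulate the temperature `minutes_ahead` minutes after the last sync."""
        if self.temp_c is None:
            raise RuntimeError("twin has not received any sensor data yet")
        decay = math.exp(-self.k * minutes_ahead)
        return self.ambient_c + (self.temp_c - self.ambient_c) * decay


twin = ThermalTwin(ambient_c=20.0, k_per_min=0.1)
twin.sync(80.0)                    # live reading from the physical object
print(round(twin.predict(10), 1))  # 42.1
```

Each new `sync()` call re-anchors the simulation to reality, which keeps the twin's predictions from drifting away from the physical object over time.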

The process is outlined in some detail in this post from Eniram, a company that creates digital twins of the huge container ships that carry much of the world's commerce, an extremely complex kind of digital twin application. However, a digital twin can be as complex or as simple as you like, and the amount of data you use to build and update it will determine how precisely you are simulating a physical object. For example, this tutorial outlines how to build a simple digital twin of a car, taking only a few data variables to compute mileage.
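In the same spirit as the simple car example above, the sketch below keeps a twin of a vehicle using just two inputs per trip, distance driven and fuel used, to track fuel economy and estimate remaining range. It is not the tutorial's code; the class, fields, and figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VehicleTwin:
    """Hypothetical minimal car twin fed by two inputs per trip."""
    fuel_level_l: float        # fuel currently in the tank, litres
    total_km: float = 0.0      # distance recorded so far
    total_fuel_l: float = 0.0  # fuel burned so far

    def record_trip(self, km: float, fuel_l: float) -> None:
        """Update the twin from one trip's odometer and fuel readings."""
        self.total_km += km
        self.total_fuel_l += fuel_l
        self.fuel_level_l -= fuel_l

    def km_per_litre(self) -> float:
        return self.total_km / self.total_fuel_l if self.total_fuel_l else 0.0

    def estimated_range_km(self) -> float:
        """Predict how far the remaining fuel will go at the observed economy."""
        return self.fuel_level_l * self.km_per_litre()


car = VehicleTwin(fuel_level_l=40.0)
car.record_trip(km=120.0, fuel_l=8.0)
car.record_trip(km=60.0, fuel_l=4.0)
print(car.km_per_litre(), car.estimated_range_km())  # 15.0 420.0
```

With so few inputs the twin is coarse: it cannot tell highway driving from city driving, which is exactly the trade-off between data volume and simulation precision described above.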

A digital twin includes three principal components:

Past Data: Historical performance data of individual machines, overall processes, and specific systems.

Present Data: Real-time data from equipment sensors, outputs from manufacturing stages and systems, and outputs from systems throughout the distribution chain. It can also include outputs from systems in other business units, including customer service and purchasing.

Future Data: AI as well as input from engineers.
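The three kinds of data can be mapped onto a tiny class: stored history (past), newly ingested readings (present), and an extrapolated forecast (future). In this hypothetical sketch the machine reports vibration levels, the "future" part is a simple least-squares trend line rather than a real AI model, and the 7.0 mm/s failure limit is invented.

```python
class MachineTwin:
    """Toy twin of one machine built from past, present and future data."""

    def __init__(self, history):
        # Past data: historical (hour, vibration in mm/s) readings
        self.history = list(history)

    def ingest(self, hour, vibration):
        # Present data: append the latest real-time sensor reading
        self.history.append((hour, vibration))

    def hours_until(self, limit):
        """Future data: hours from the latest reading until the fitted trend hits `limit`.

        Returns None if there is too little data or no upward trend.
        """
        n = len(self.history)
        if n < 2:
            return None
        mean_h = sum(h for h, _ in self.history) / n
        mean_v = sum(v for _, v in self.history) / n
        var = sum((h - mean_h) ** 2 for h, _ in self.history)
        if var == 0:
            return None
        cov = sum((h - mean_h) * (v - mean_v) for h, v in self.history)
        slope = cov / var
        if slope <= 0:
            return None  # not trending toward the limit
        intercept = mean_v - slope * mean_h
        latest_hour = self.history[-1][0]
        return (limit - intercept) / slope - latest_hour


machine = MachineTwin([(0, 2.0), (10, 2.5), (20, 3.0)])
machine.ingest(30, 3.5)
print(round(machine.hours_until(limit=7.0), 1))  # 70.0
```

A forecast like this is what turns monitoring into predictive maintenance: the twin can flag a machine for service before the projected failure point rather than after it breaks.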

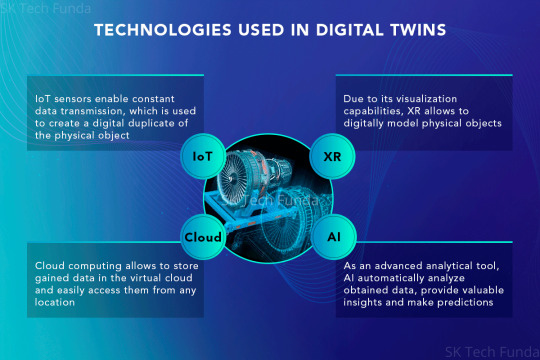

Digital Twin and IoT:

Let's see how digital twins and IoT work together. Indeed, the explosion of IoT sensors is part of what makes digital twins possible. And as IoT devices are refined, digital twin scenarios can include smaller and less complex objects, giving additional benefits to companies.

A digital twin can be used to predict different outcomes based on variable data. This is similar to the run-the-simulation scenario often seen in science-fiction films, where a possible scenario is tested within the digital environment. With additional software and data analytics, digital twins can often optimize an IoT deployment for maximum efficiency, as well as help designers figure out where things should go or how they will operate before they are physically deployed.

The more closely a digital twin can replicate the physical object, the more likely it is that efficiencies and other benefits can be found. For example, in manufacturing, the more thoroughly instrumented the devices are, the more accurately digital twins can simulate how the devices have performed over time, which can help in predicting future performance and possible failure.

Digital Twin and Cloud:

Digital twinning had already gained momentum thanks to enabling technologies like cloud computing, artificial intelligence, and the Internet of Things, whose improved power and sophistication have made digital twins more appealing and more feasible over the course of the last decade. As with so many other technologies, however, the COVID-19 pandemic created new, urgent needs that acted as an accelerant on a fire, increasing both the speed and scale of adoption, so much so that within the span of only a year, digital twins have become a critical tool in a multitude of industries.

Digital Twin and AI:

A digital twin is not a new term, but paired with advances in AI, it is increasingly important in transforming industrial operations and creating additional business value. The digital twin, which creates an evolving profile of an asset or process in a plant, captures insights on performance across the plant life cycle (process design, operations, and maintenance).

It is also made increasingly intelligent by AI agents, to the point where it can prescribe actions in the physical world, so that companies can reconfigure alternative processes in real time, with reality-driven decisions, to mitigate issues like plant downtime or bottlenecks.

Providing an advanced view into areas such as planning and scheduling, demand models, distribution models, and control and optimization, and improving the efficiency of those operations through prescriptive action, leads to increased business profitability (perhaps the main benefit that comes to mind for many when considering implementing digital twins).

Benefits of Digital Twin:

Digital twins offer a real-time look at what's happening with physical assets, which can significantly ease maintenance burdens. Chevron is implementing digital twin technology for its oil fields and refineries and expects to save millions of dollars in maintenance costs. And Siemens, as part of its pitch, says that using digital twins to model and prototype products that haven't been manufactured yet can reduce product defects and shorten time to market.

Keep in mind, though, that Gartner cautions that digital twins aren't always called for and can unnecessarily increase complexity. A digital twin could be technological overkill for a particular business problem. There are also concerns about cost, security, privacy, and integration.

Applications for Digital Twin:

Using predictive maintenance to maintain equipment, production lines, and facilities.

Improving understanding of products by monitoring them in real time as they are used by actual customers or end users.

Manufacturing process improvement.

Improving product traceability processes.

Testing, validating, and refining assumptions.

Increasing the level of integration between disconnected systems.

Remote troubleshooting of equipment, regardless of geographic location.

Conclusion

Digital twin technology is continually advancing. This leads to new jobs and industries, such as coding and artificial intelligence. The technology gives creators training in AI, IT, design, and many STEM fields. All of this is beneficial because it is estimated that AI will replace 40% of jobs in the future.

Frequently Asked Questions

What is the use of Digital Twin?

Ans - The digital twin enables the continuous collection and distribution of data throughout the physical asset's entire lifecycle to maximize business outcomes and optimize operations and investment (for both the customer and ABB) through data processing (e.g., simulation and advanced analytics).

What technology is used in Digital Twin?

Ans - A digital twin uses data from connected sensors to tell the story of an asset throughout its life cycle, from testing to use in the real world. With IoT data, we can measure specific indicators of asset health and performance, such as temperature and humidity.

What are the types of Digital Twin?

Ans - There are three types of digital twin: Performance, Product, and Production.

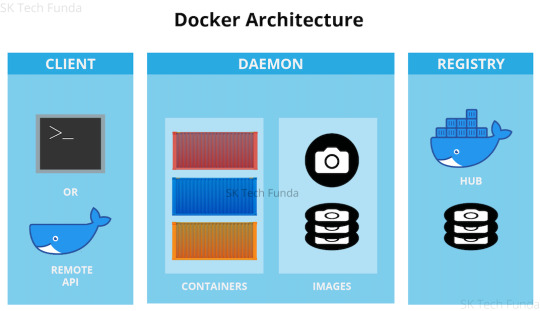

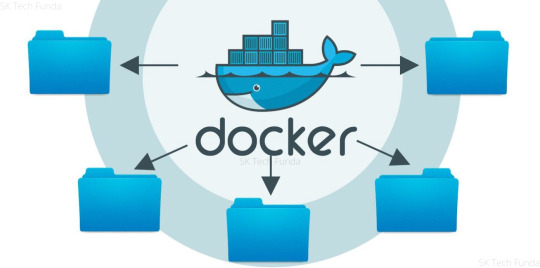

Docker Hub: The Choice for Better Business Outcome

Docker Store and Docker Cloud are now part of Docker Hub, which provides a single experience for finding, storing, and sharing container images. This means that Docker Certified and Verified Publisher images are now available for discovery and download on Docker Hub. Docker Hub also introduces a new user experience.

Thousands of organizations and millions of individual users use Docker Hub, Cloud, and Store for their container needs. This Docker Hub update is designed to bring together the features that users of each product know and love most, while addressing Docker Hub requests around repository and team management.

Docker removes repetitive, mundane configuration tasks and is used throughout the development lifecycle for fast, easy, and portable application development, on desktop and cloud. Docker's comprehensive end-to-end platform includes UIs, CLIs, APIs, and security that are engineered to work together across the entire application delivery lifecycle.

Docker Business:

With this announcement, Docker introduced its new subscription tiers, including Docker Business, its offering specifically for organizations that need to scale their use of Docker while maintaining security and compliance with added enterprise-grade management and control. Previously, the minimum number of seats required for a Docker Business subscription was 50, which limited access to larger organizations.

However, Docker has been hearing from customers who want the additional features and benefits that come with Docker Business but don't currently meet the seat minimum. Some customers are ready to make the move but want to avoid lengthy sales and purchase order (PO) processes. Others may be interested in "trying out" Docker Business at a smaller scale before committing to a larger rollout.

So today we've made it simpler and more accessible than ever for our customers to make the move to Docker Business.

Advanced Image Management:

Let's look at the latest feature for Docker Pro and Team users: the new Advanced Image Management dashboard available on Docker Hub. The new dashboard gives developers a new level of access to all the content you have stored in Docker Hub, providing more fine-grained control over removing old content and exploring old versions of pushed images.

Historically, Docker Hub has given visibility into the latest version of a tag that a user has pushed, but what has been very hard to see, or even understand, is what happened to the old things you pushed. When you push an image to Docker Hub, you are pushing a manifest, a list of all the layers of your image, along with the layers themselves.

So when you update an existing tag, only the new layers are pushed, along with the new manifest that references these layers. This manifest is given a tag that you specify when you push, such as bengotch/simplewhale:latest. Remember, though, that this does not mean all of the old manifests pointing to the previous layers that made up your images are removed from Hub.

This means you can have many old versions of images that your systems may still be pulling by hash rather than by tag, and you may be unaware of which old versions are still in use. On top of this, until now the only way to remove these old versions was to delete the entire repo and start again!

We hope you are excited about this first step in giving you greater insight into your content on Docker Hub. If you want to start exploring your content, all users can see how many inactive images they have, and Pro and Team users can see which tags these used to be associated with and what their hashes are, and can start removing them today.
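To see why old manifests linger, it helps to model a registry as content-addressable storage: layers and manifests are stored under the SHA-256 digest of their contents, and a tag is just a movable pointer to one manifest digest. The toy registry below illustrates that idea only. It is not Docker Hub's API, and its manifest is a simplified JSON list rather than the real manifest format; the class and method names are hypothetical.

```python
import hashlib
import json


def sha256_digest(data: bytes) -> str:
    """Content address: identical bytes always get the same digest."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


class ToyRegistry:
    def __init__(self):
        self.blobs = {}      # layer digest -> layer bytes
        self.manifests = {}  # manifest digest -> list of layer digests
        self.tags = {}       # tag -> manifest digest

    def push(self, tag, layers):
        """Upload only layers the registry lacks, store a manifest, point the tag at it."""
        uploaded = 0
        layer_digests = []
        for layer in layers:
            d = sha256_digest(layer)
            if d not in self.blobs:
                self.blobs[d] = layer
                uploaded += 1
            layer_digests.append(d)
        manifest_digest = sha256_digest(json.dumps({"layers": layer_digests}).encode())
        self.manifests[manifest_digest] = layer_digests
        self.tags[tag] = manifest_digest  # the tag moves; older manifests are kept
        return manifest_digest, uploaded

    def pull_by_digest(self, manifest_digest):
        return [self.blobs[d] for d in self.manifests[manifest_digest]]

    def untagged_manifests(self):
        """The 'inactive images': manifests no tag points to any more."""
        tagged = set(self.tags.values())
        return [m for m in self.manifests if m not in tagged]


reg = ToyRegistry()
v1, n1 = reg.push("simplewhale:latest", [b"base-os", b"app-v1"])
v2, n2 = reg.push("simplewhale:latest", [b"base-os", b"app-v2"])
print(n1, n2)                            # 2 1
print(reg.untagged_manifests() == [v1])  # True
print(reg.pull_by_digest(v1))            # [b'base-os', b'app-v1']
```

The second push uploads only the changed layer and moves the tag, but the first manifest stays in place, untagged yet still pullable by digest, which is exactly the situation the new dashboard makes visible.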

Docker Hub Autobuild:

As many of you know, it has been a difficult period for companies offering free cloud compute. Unfortunately, Docker's Autobuild service has been targeted by the same bad actors, so today we are disappointed to announce that we will be discontinuing Autobuilds on the free tier starting June 18, 2021.

Over the last few months, we have seen massive growth in the number of bad actors taking advantage of this service with the goal of abusing it for crypto mining. For the past 7 years, we have been proud to offer our Autobuild service to all our users as the easiest way to set up CI for containerized projects. As well as increasing the cost of running the service, this type of abuse occasionally impacts performance for paying Autobuild customers and leads to many sleepless nights for our team.

Get a head start on your coding by using Docker images to efficiently develop your own unique applications on Windows and Mac. Create your multi-container application using Docker Compose. Integrate with your favorite tools throughout your development pipeline: Docker works with all the development tools you use, including VS Code, CircleCI, and GitHub. Package applications as portable container images to run consistently in any environment, from on-premises Kubernetes to AWS ECS, Azure ACI, Google GKE, and more.

New Self-Serve Payment Option:

If you are ready to make the move to Docker Business, you can now purchase a minimum of 5 seats by credit card by signing in to or creating a Docker account. Once purchased, you have instant access to all the management and security features exclusive to Docker Business customers, such as Image Access Management for restricting user access to specific images (e.g., Docker Official Images and Verified Publisher Images), a centralized management console for complete visibility across all of your Docker environments, SAML single sign-on for seamless onboarding and offboarding (coming soon), and a whole lot more! Of course, your developer teams can continue to collaborate and keep the productivity of Docker Desktop, our trusted multi-platform developer tool.

You can also easily upgrade from your current subscription to Docker Business. Simply sign in to your Docker Hub account and update your billing plan there. Your current subscription will be credited for the remaining term, and the balance will be applied to the cost of your new Docker Business subscription. A new subscription period will also be set.

Benefits:

Every organization is on a quest to deliver better business outcomes, and top performers are using software innovation to make it happen. Inevitably, while managing many competing priorities, software leaders will face a build-versus-buy decision at some point. When you consider several factors, including the cost of time, opportunity cost, time to value, the cost of security risks, and when DIY with OSS makes sense, the data shows that most organizations will be better off buying commercial software than building their own alternative solutions. Offloading undifferentiated work reduces distractions and enables developers to focus on delivering value to customers.

Keep it simple:

Docker eliminates complexity for developers and helps them achieve greater productivity. We are continuing to invest in creating magically simple experiences for developers while also delivering the scale and security organizations rely on. Docker offers subscriptions for developers and teams of every size, including our newest subscription: Docker Business.

Move Fast:

Install from a single package to get up and running in minutes. Code and test locally while ensuring consistency between development and production.

Collaborate:

Use certified and community-provided images in your project. Push to a cloud-based application registry and collaborate with team members.

Conclusion

Docker is a containerization platform that packages your application and all its dependencies together in the form of containers, to ensure that your application works seamlessly in any environment, be it development, test, or production. Docker containers wrap a piece of software in a complete filesystem that contains everything needed to run: code, runtime, system tools, system libraries, and so on. They can wrap basically anything that can be installed on a server. This ensures that the software will always run the same, regardless of its environment.

Frequently Asked Questions

1. What are Docker Images?

Ans - Docker images are the source of Docker containers. In other words, Docker images are used to create containers. When a user runs a Docker image, an instance of a container is created. These Docker images can be deployed to any Docker environment.

2. What is Docker Hub?

Ans - Docker images create Docker containers, and there needs to be a registry where those Docker images live. That registry is Docker Hub. Users can pull images from Docker Hub and use them to create customized images and containers. Currently, Docker Hub is the world's largest public repository of container images.

3. What is a Dockerfile?

Ans - Docker can build images automatically by reading the instructions from a file called a Dockerfile. A Dockerfile is a text document that contains all the commands a user could call on the command line to assemble an image. Using docker build, users can create an automated build that executes several command-line instructions in succession.

4. What is Docker Machine?

Ans - Docker Machine is a tool that lets you install Docker Engine on virtual hosts. These hosts can then be managed using docker-machine commands. Docker Machine also lets you provision Docker Swarm clusters.


MUMBAI: India thrashed New Zealand, winning the second Test by a record-breaking 372-run margin to clinch the two-Test series 1-0, as comeback man Jayant Yadav cleaned up the visitors' lower order on the fourth morning here on Monday.


Quantum Computing: The Next Generation High Performance Computing

Quantum computing may be the biggest revolution in computing since computing itself. Our world is made of quantum information, but we perceive the world in classical information. That is, a whole lot is happening at small scales that is not accessible to our ordinary senses. As humans we evolved to handle classical information, not quantum information: our brains are wired to think about sabertooth cats, not Schrödinger's cats.

We can encode our classical information easily enough with zeros and ones, but what about accessing the extra information that makes up our universe? Can we use the quantum nature of reality to process information? Of course; otherwise we would have to end this post right here, and that would be unsatisfying to us all. Let's discover the power of quantum computing and then get you started writing some of your own quantum code.

Quantum computers perform calculations based on the probability of an object's state before it is measured, instead of just 1s or 0s, which means they have the potential to process exponentially more data than classical computers. A single state, such as on or off, up or down, 1 or 0, is called a bit.

Quantum computing harnesses the phenomena of quantum mechanics to deliver a huge leap forward in computation for solving certain problems. IBM designs quantum computers to solve complex problems that today's most powerful supercomputers cannot solve, and never will.

Google announced that a quantum computer in its lab was 100 million times faster than a classical computer on a specific test problem. Quantum computers could make it possible to handle the enormous amount of data we are producing in the age of big data. To stay stable, quantum computers must be kept extremely cold.

Quantum computing is a class of technology that works by using the principles of quantum mechanics (the physics of sub-atomic particles), including quantum entanglement and quantum superposition. Related quantum technologies promise more precise medical imaging through quantum sensing, as well as far more powerful computing.

What is a Quantum Computer?

Quantum computers are machines that use the properties of quantum physics to store data and perform calculations. This can be extremely advantageous for certain tasks, where they could vastly outperform even our best supercomputers.

Classical computers, which include smartphones and laptops, encode information in binary "bits" that can be either 0s or 1s. In a quantum computer, the basic unit of memory is a quantum bit, or qubit.

Qubits are made using physical systems, such as the spin of an electron or the orientation of a photon. These systems can be in many different arrangements all at once, a property known as quantum superposition. Qubits can also be inextricably linked together using a phenomenon called quantum entanglement. The result is that a series of qubits can represent different things simultaneously.
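Superposition and entanglement are easiest to see in a tiny state-vector simulation. The sketch below is plain Python (no quantum SDK is assumed); it stores the four amplitudes of a two-qubit register, applies a Hadamard gate and then a CNOT gate, and ends in the entangled Bell state (|00> + |11>)/√2. The helper function names are hypothetical, chosen only for illustration.

```python
import math
import random

# Two-qubit state vector, amplitudes ordered |00>, |01>, |10>, |11>
# (the left bit is qubit 0, the right bit is qubit 1).


def hadamard_on_qubit0(state):
    """Apply a Hadamard gate to qubit 0, mixing |0x> and |1x> for each x."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot_qubit0_controls_qubit1(state):
    """Flip qubit 1 when qubit 0 is 1, i.e. swap the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state):
    """Born rule: the probability of each basis state is |amplitude|**2."""
    return [abs(a) ** 2 for a in state]


def measure(state, rng):
    """Sample one measurement outcome as a two-character bit string."""
    return rng.choices(["00", "01", "10", "11"], weights=probabilities(state))[0]


state = [1.0, 0.0, 0.0, 0.0]                 # start in |00>
state = hadamard_on_qubit0(state)            # (|00> + |10>)/sqrt(2): superposition
state = cnot_qubit0_controls_qubit1(state)   # (|00> + |11>)/sqrt(2): entangled
print([round(p, 3) for p in probabilities(state)])  # [0.5, 0.0, 0.0, 0.5]

rng = random.Random(0)
outcomes = [measure(state, rng) for _ in range(1000)]
print(sorted(set(outcomes)))                 # ['00', '11']: the qubits always agree
```

Measured on its own, each qubit gives 0 or 1 with equal odds, yet the pair never disagrees; that correlation is what the paragraph above calls entanglement.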

For example, eight bits are enough for a classical computer to represent any number between 0 and 255. But eight qubits are enough for a quantum computer to represent every number between 0 and 255 at the same time. A few hundred entangled qubits would be enough to represent more numbers than there are atoms in the universe.
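The 8-bit versus 8-qubit comparison comes down to counting: describing an n-qubit register takes 2**n amplitudes. This plain-Python sketch (the function name is hypothetical, and 10**80 is the commonly quoted order-of-magnitude estimate for atoms in the observable universe, not a precise figure) builds the equal superposition over all 256 values and checks the "few hundred qubits" claim.

```python
import math


def uniform_superposition(n_qubits):
    """Amplitudes after a Hadamard gate on each of n qubits starting from |00...0>:
    all 2**n basis states get the same amplitude, 1/sqrt(2**n)."""
    dim = 2 ** n_qubits
    return [1 / math.sqrt(dim)] * dim


classical_register = 0b10110101        # 8 classical bits hold exactly one value
print(classical_register)              # 181

register_state = uniform_superposition(8)
print(len(register_state))             # 256: one amplitude for every value 0 to 255
print(register_state[0])               # 0.0625, i.e. 1/16
print(math.isclose(sum(a * a for a in register_state), 1.0))  # True

ATOMS_IN_UNIVERSE = 10 ** 80           # commonly quoted order-of-magnitude estimate
print(2 ** 300 > ATOMS_IN_UNIVERSE)    # True: 300 qubits already need more amplitudes
```

One caveat worth keeping in mind: measuring such a register still returns just one of the 256 values at random, which is why quantum algorithms rely on interference to boost the probability of useful answers rather than simply reading every value out.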

This is where quantum computers get their edge over classical ones. In situations where there is a huge number of possible combinations, quantum computers can consider them simultaneously. Examples include trying to find the prime factors of a very large number or the best route between two places.

However, there may also be plenty of situations where classical computers will still outperform quantum ones. So the computers of the future may be a combination of both types.

For now, quantum computers are highly sensitive: heat, electromagnetic fields, and collisions with air molecules can cause a qubit to lose its quantum properties. This process, known as quantum decoherence, causes the system to crash, and it happens more quickly the more particles are involved.
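Why decoherence strikes faster as more particles are involved can be illustrated with a deliberately simplified model: if each qubit loses phase coherence independently with a time constant T2 (pure dephasing only), the coherence of an n-qubit GHZ state (|00...0> + |11...1>)/√2 decays as exp(-n·t/T2). The T2 value below is hypothetical, and real hardware has further error sources this toy model ignores.

```python
import math


def ghz_coherence(t_us, t2_us, n_qubits):
    """Remaining coherence (1.0 = fully coherent) of an n-qubit GHZ state after
    t microseconds, assuming independent pure dephasing on every qubit."""
    return math.exp(-n_qubits * t_us / t2_us)


T2_US = 100.0  # hypothetical single-qubit coherence time, in microseconds
for n in (1, 10, 50):
    print(f"{n:>2} qubit(s): {ghz_coherence(10.0, T2_US, n):.4f}")
#  1 qubit(s): 0.9048
# 10 qubit(s): 0.3679
# 50 qubit(s): 0.0067
```

After the same 10 microseconds, a single qubit is still about 90% coherent while the 50-qubit state has essentially lost its quantum behaviour.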

Quantum computers need to protect qubits from external interference, either by physically isolating them, keeping them cool, or zapping them with carefully controlled pulses of energy. Additional qubits are needed to correct the errors that creep into the system.
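The idea of spending extra qubits on error correction is easiest to see in its classical ancestor, the three-bit repetition code: store each bit three times and take a majority vote. This plain-Python sketch covers only that classical code. Quantum codes such as the three-qubit bit-flip code follow the same redundancy idea, but they cannot simply copy a qubit (the no-cloning theorem) and instead measure parity checks without reading the data itself. The physical error probability p is hypothetical.

```python
import random


def encode(bit):
    """Repeat the logical bit on three physical bits."""
    return [bit, bit, bit]


def noisy_channel(bits, p, rng):
    """Flip each physical bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


def decode(bits):
    """Majority vote: corrects any single flipped bit."""
    return 1 if sum(bits) >= 2 else 0


def logical_error_rate(p):
    """Decoding fails only when two or three of the three bits flip."""
    return 3 * p**2 * (1 - p) + p**3


p = 0.01  # hypothetical physical error probability
print(decode([1, 0, 1]))               # 1: the single flip is corrected
print(f"{logical_error_rate(p):.6f}")  # 0.000298, versus 0.01 with no redundancy

rng = random.Random(42)
trials = 100_000
failures = sum(decode(noisy_channel(encode(0), p, rng)) != 0 for _ in range(trials))
print(failures / trials)               # simulated rate, close to the formula above
```

Tripling the hardware cuts the error rate by roughly a factor of 30 here, which is the trade-off behind "additional qubits".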

However, quantum computers can deliver more than highly accurate solutions. They can also deliver a variety of solutions, any of which meet the goals of your optimization. You can get more solutions that are more accurate, using all of the data you have paid so much to collect and store. Classical computers, on the other hand, struggle to give precise, high-quality answers to optimization requests. If they don't fail completely, they often offer only a single feasible answer, and it may or may not be accurate.

Quantum computers will use this extra information to achieve more computational power. This will be transformative for applications in pharma, green new materials, logistics, finance, big data, and more. For example, quantum computing will be better at calculating the energy of molecules, because this is fundamentally a quantum problem. So if you can imagine an industry that deals with molecules, you can imagine an application of quantum computing.

People often want to know whether quantum computers will be faster. While they will be able to do some calculations faster, it is not because they are doing the same thing with more cycles. Instead, quantum computers exploit a fundamentally different way of processing information. To understand that fundamental difference, we will walk through an example that helps show the power of quantum computation.

Why Quantum Computing?

Research in quantum computing aims to find a way of speeding up the execution of long chains of computational instructions. This way of execution would take advantage of a commonly observed quantum-mechanical phenomenon that seems counterintuitive when written down.

Once this fundamental goal of quantum computing is achieved, and everything physicists are theoretically confident of actually works in reality, it would certainly revolutionize computing. Mathematical problems that take years of calculation on today's supercomputers, and some that cannot be solved at all right now, could get a near-immediate solution.

Models of climate change, models of the immune system's ability to destroy cancer cells, estimates of the likelihood of Earth-like planets in the observable universe, and all the other challenging problems we face today might suddenly yield results within an hour or so of program execution.

Admittedly, these results might not be the complete solution; instead, they could provide a probability table that points to the likely solutions. But remember that even these probabilities are out of reach for the best-performing supercomputers available to us.

Quantum computers process data using qubits (like the binary bits of classical computing, except that they can hold more than one state at a time). More importantly, quantum computers can simulate our real world: they represent data and the relationships between data in a high-dimensional state space rather than as a flat list of values. That means they will be able to crunch much larger volumes of data, even as they model all the relationships between data as they exist, or could exist, depending on different events in alternative possible realities.

For optimization, quantum computers map your data into a multi-dimensional, real-world simulation that represents the individual data elements and all of their relationships. They then simulate the likely scenarios that would result from possible changes in that data. These scenarios mirror the outcomes you would experience in reality.

Conclusion

Quantum computers could revolutionize computation by solving certain types of classically intractable problems. While no quantum computer built to date is sophisticated enough to perform calculations that a classical computer could not also perform given enough time, significant progress has been made in this field.

Frequently Asked Questions

1. What is the biggest problem with Quantum Computing?

Ans - Current quantum computers typically suppress decoherence by isolating the qubits from their environment as much as possible. The trouble is that as the number of qubits multiplies, this isolation becomes extremely difficult to maintain: decoherence is bound to happen, and errors creep in.

2. What is Quantum Coherence?

Ans - Quantum coherence refers to the ability of a quantum state to maintain its entanglement and superposition in the face of interactions and the effects of thermalization.

3. What is Quantum Power?

Ans - Quantum Power is a developer, investor, and operator of energy technology and power infrastructure assets, with nine investments in eight countries in sub-Saharan Africa. Quantum Power invests across the capital structure in projects and companies, from early development to operational stage.


Digital Transformation (DX) Drive | Communicating Machines

Digital Transformation (DX) Drive

Digital transformation (DX) is a strategy for enabling business innovation predicated on incorporating digital technologies into your operational processes, products, solutions, and customer interactions. The strategy focuses on leveraging the opportunities of new technologies and their impact on the business, with an emphasis on the creation and monetization of digital assets.

Digital transformation involves building a digital ecosystem in which there is coherence and seamless integration between customers, partners, employees, suppliers, and external entities, providing greater overall value to the whole.

Digital transformation can be either linear or exponential. Companies embarking on linear digital transformation look at how to modernize and improve current operations. In many cases, this is where organizations begin examining the opportunities to transform digitally. Fundamentally changing how an organization operates, however, is part of exponential digital transformation.

This requires a fundamental shift in thinking, with the entire organization holistically embracing change through digital technology for all processes and interactions, both internal and external.

For example, a retailer that modernizes its distribution system through digital technology is undergoing linear transformation. A retailer that builds an e-commerce website to fundamentally change how it reaches and sells to customers is exhibiting exponential digital transformation.

Digital technologies such as artificial intelligence, big data, and machine learning are increasingly important to the manufacturing industry. Sandvik has partnered with IBM on several key digital projects.

At Sandvik, work has begun to incorporate Industry 4.0 into its production processes. The Sandvik brand Dormer Pramet, a global cutting-tool manufacturer, is working with IBM, one of the world's leading data analytics companies, on several key projects.

"These include using big data to map the value chain across every department of our production unit in Sumperk, in the Czech Republic, and deploying software to detect defects in tools during the early stages of manufacturing," says Radim Bullawa, Industry Engineering Manager at Dormer Pramet.

You can see examples of DX adoption everywhere. GE built an entirely new division through the creation of Predix, a tool that helps GE customers build the software that powers Internet-connected industrial hardware such as turbines, trains, and factory robots.

LEGO has achieved a 20% CAGR since 2009 on the strength of its digital business. Audi has increased year-over-year sales through the creation of Audi City, which lets customers seamlessly configure their Audi virtually, at life size, on “power walls” in select showrooms.

Union Pacific's PS Technology is a for-profit technology subsidiary that takes technology Union Pacific builds internally, adapts it for external use, and sells it in the marketplace.

Perhaps the best example of an established organization undertaking digital transformation is Netflix's evolution from DVD rentals to subscription-based online entertainment streaming, including original content creation.

In every industry, digital transformation has become a corporate priority. From manufacturing to biotechnology, leading companies harness data to stay competitive. To address these data challenges, gain a competitive advantage, and thrive in the digital transformation era, NetApp data fabric solutions enable your digital transformation journey.


Cloud Migration: The Way of Moving to Digital Transformation

Do you know about cloud migration? Let's look at the details. Cloud migration is the process of moving data, business elements, and applications to a cloud computing environment. There are various types of cloud migration an enterprise can perform. The most basic model is the transfer of applications and data from a local data center to the public cloud.

One common model is the transfer of data and applications from a local, on-premises data center to the public cloud. However, a cloud migration could also involve moving data and applications from one cloud platform or provider to another, a model known as cloud-to-cloud migration. A third type is reverse cloud migration, also called cloud repatriation or cloud exit, where data or applications are moved off the cloud and back to a local data center.

The benefits of this approach:

Simplicity of Deployment - with RiverMeadow's platform you can get up and running and migrate from anywhere to anywhere in just 15 minutes, without deploying virtual infrastructure to the source data center.

Simplicity and Speed of Access - no source hypervisor access is required, which prevents delays caused by permission issues that result in Access Denied errors (source hypervisor access is impossible in most MSP/CSP situations).

Scalability - cloud computing can scale to support larger workloads and more users much more easily than on-premises infrastructure. In traditional IT environments, companies had to purchase and set up physical servers, software licenses, storage, and network equipment to scale up business services.

Cost - because cloud providers take over maintenance and upgrades, companies migrating to the cloud can spend significantly less on IT operations. They can devote more resources to innovation, developing new products or improving existing ones.

Performance - moving to the cloud can improve performance and the end-user experience. Applications and websites hosted in the cloud can easily scale to serve more users or higher throughput, and can run in geographic regions close to end users to reduce network latency.

Digital Experience - users can access cloud services and data from anywhere, whether they are employees or customers. This contributes to digital transformation, enables an improved customer experience, and gives employees modern, flexible tools.
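The Scalability benefit above is usually put into practice as an autoscaling rule that sizes capacity from a load metric. One documented example is the Kubernetes Horizontal Pod Autoscaler, which computes desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The plain-Python sketch below applies that formula with hypothetical CPU targets and replica limits; it is an illustration, not any cloud provider's API.

```python
import math


def desired_replicas(current, current_metric, target_metric,
                     min_replicas=1, max_replicas=20):
    """Proportional scaling rule (the formula documented for the Kubernetes HPA),
    clamped to hypothetical minimum and maximum replica counts."""
    wanted = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical target: keep average CPU utilization near 60%.
print(desired_replicas(4, 90, 60))    # 6: scale out under load
print(desired_replicas(6, 20, 60))    # 2: scale back in when load drops
print(desired_replicas(10, 300, 60))  # 20: capped by max_replicas
```

The real autoscaler also applies a tolerance and stabilization windows to avoid flapping; those refinements are left out of this sketch.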

Cloud Migration Challenges:

Lack of Strategy:

Many organizations start migrating to the cloud without devoting enough time and attention to their strategy. Successful cloud adoption and implementation requires rigorous end-to-end cloud migration planning. Each application and dataset may have different requirements and considerations, and may require a different approach to cloud migration. The organization should have a clear business case for every workload it migrates to the cloud.

Cost Management:

When migrating to the cloud, many organizations have not set clear KPIs for what they expect to spend or save after the migration. This makes it hard to tell whether the migration was successful from a financial point of view. In addition, cloud environments are dynamic, and costs can change rapidly as new services are adopted and application usage grows.
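Setting clear KPIs can start as simply as comparing monthly cloud spend against the pre-migration baseline and an agreed budget. The sketch below uses hypothetical figures for the baseline, the budget, and three months of spend; in practice these numbers would come from your provider's billing data.

```python
def savings_vs_baseline(baseline_monthly, cloud_monthly):
    """Fraction saved relative to the pre-migration baseline (negative means overspend)."""
    return (baseline_monthly - cloud_monthly) / baseline_monthly


def months_over_budget(monthly_spend, budget):
    """Months whose cloud spend exceeded the agreed KPI target."""
    return [month for month, spend in monthly_spend.items() if spend > budget]


BASELINE = 50_000  # hypothetical pre-migration monthly IT cost, in dollars
BUDGET = 45_000    # hypothetical post-migration monthly spend target
spend = {"Jan": 41_000, "Feb": 43_500, "Mar": 47_200}  # hypothetical actuals

for month, cost in spend.items():
    print(month, f"{savings_vs_baseline(BASELINE, cost):.1%} saved")
# Jan 18.0% saved / Feb 13.0% saved / Mar 5.6% saved
print("Over budget:", months_over_budget(spend, BUDGET))  # Over budget: ['Mar']
```

March still saves money against the old data center, but it breaks the budget KPI, which is exactly the kind of drift the paragraph above warns about.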

Vendor Lock-In:

Vendor lock-in is a common problem for adopters of cloud technology. Cloud providers offer a huge variety of services, but many of them cannot be extended to other cloud platforms. Moving workloads from one cloud to another is a lengthy and costly process. Many organizations start using cloud services and later find it difficult to switch providers if the current provider no longer suits their needs.

Information Security and Compliance:

One of the major obstacles to cloud migration is data security and compliance. Cloud services use a shared responsibility model, where the provider takes responsibility for securing the infrastructure and the customer is responsible for securing data and workloads. So while the cloud provider may offer robust security measures, it is your organization's responsibility to configure them correctly and ensure that all services and applications have the appropriate security controls. The migration process itself presents security risks. Moving large volumes of potentially sensitive data, and configuring access controls for applications across different environments, creates significant exposure.

Cloud Migration Strategy:

When justifying cloud migration, the first question every CEO faces is the risks and benefits of moving. A great deal has been said about the added value, speed, and competitiveness gained with cloud technology. However, some questions need to be addressed first: infrastructure compatibility, staff cloud training, public cloud visibility, data security and encryption, managing encryption keys in the cloud, storing your customer data, and so on.

Once you have weighed the pros and cons of cloud migration, it's time to move on to a cloud migration strategy. Let's find out what exactly it is, how to set one up, and what personnel you need on your side.

What is a Cloud Migration Strategy?

Let's look at cloud migration strategy. A cloud migration strategy is a high-level document that describes the purpose, business outcomes, and key stakeholders of the cloud within an organization. It contains the decision points of the cloud migration process, including applications, IT systems, and infrastructure migration. The document also incorporates the key takeaways on technology pathways and decisions from the cloud adoption and cloud migration plans. However, it is distinct from those plans and plays a vital role in the entire migration process.

A cloud migration strategy defines the roles and decisions about the cloud in your organization. It identifies the key services consumed from the cloud provider and determines the amount of code to be written and refactored.

A cloud migration strategy serves as a guide for cloud service adoption, addressing technology silos, non-standard configurations, unoptimized costs, and exposure to risks from poorly configured environments.

A cloud migration strategy document is the project's bible, shared among all stakeholders, tech staff, and other key decision-makers. It serves as the go-to resource for aligning with the current state of the company's technology and for projecting the state of the cloud for years to come. It is a living document that reflects the digitalized company's growth factors.

That said, without a cloud migration strategy, the team is left with little coherent direction for cloud service adoption in your organization. This can lead to a number of challenges.

A 4-Step Cloud Migration Process:

Cloud Migration Planning:

Let's go through the cloud migration process one step at a time. One of the first things to consider before moving data to the cloud is the use case that the public cloud will serve. Will it be used for disaster recovery? DevOps? Hosting enterprise workloads by moving completely to the cloud? Or will a hybrid approach work best for your organization?

In this stage, assess your current environment and determine the factors that will govern the migration, such as critical application data, legacy data, and application interoperability.

It is also important to determine your reliance on data: do you have data that needs to be resynced regularly, data compliance requirements to meet, or non-critical data that could be migrated during the first few passes of the migration?

Migration Business Case:

Work with cloud providers to understand the options for cost savings, given your proposed cloud deployment. Cloud providers offer several pricing models and give deep discounts in exchange for long-term commitments to cloud resources (reserved instances) or a commitment to a certain level of cloud spend (savings plans). These discounts should be factored into your business plan to understand the true long-term cost of your cloud migration.
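Whether a commitment discount actually saves money depends on how much of the committed capacity you use, because reserved capacity is billed every hour of the term. The sketch below compares the two models under hypothetical hourly rates; real discounts vary by provider, term length, region, and payment option.

```python
HOURS_PER_YEAR = 8760


def annual_cost_on_demand(rate_per_hour, instances, utilization):
    """On-demand: you pay only for the hours the instances actually run."""
    return rate_per_hour * HOURS_PER_YEAR * instances * utilization


def annual_cost_reserved(effective_rate_per_hour, instances):
    """Reserved/committed: you pay for every hour of the term, used or not."""
    return effective_rate_per_hour * HOURS_PER_YEAR * instances


def break_even_utilization(on_demand_rate, reserved_rate):
    """Utilization above which the commitment becomes the cheaper option."""
    return reserved_rate / on_demand_rate


ON_DEMAND = 0.10  # hypothetical $/hour
RESERVED = 0.062  # hypothetical effective $/hour after a commitment discount

for util in (1.0, 0.5):
    od = annual_cost_on_demand(ON_DEMAND, 3, util)
    ri = annual_cost_reserved(RESERVED, 3)
    print(f"utilization {util:.0%}: on-demand ${od:,.2f} vs reserved ${ri:,.2f}")
print(f"break-even utilization: {break_even_utilization(ON_DEMAND, RESERVED):.0%}")
```

With these numbers the commitment wins for always-on servers ($1,629.36 versus $2,628.00 a year) but loses for servers that run only half the time, since the break-even point is 62% utilization.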

Cloud Data Migration Execution:

Once your environment has been assessed and a plan has been mapped out, it's time to execute your migration. The main challenge here is carrying out the migration with minimal disruption to normal operations, at the lowest cost, and over the shortest period of time. If your data becomes inaccessible to users during a migration, you risk disrupting your business operations. The same is true as you continue to sync and update your systems after the initial migration takes place. Every workload element migrated individually should be proven to work in the new environment before another element is moved.
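The rule that each element must be proven in the new environment before the next one moves can be written as a small control loop. In this sketch, migrate and verify are hypothetical callables standing in for your real tooling (copying data, running smoke tests, and so on), and the component names are made up.

```python
from typing import Callable, Iterable, List


def migrate_incrementally(components: Iterable[str],
                          migrate: Callable[[str], None],
                          verify: Callable[[str], bool]) -> List[str]:
    """Move workload components one at a time. Each must pass verification in
    the new environment before the next one is touched; on failure, stop."""
    migrated: List[str] = []
    for name in components:
        migrate(name)
        if not verify(name):
            raise RuntimeError(f"{name} failed verification; migrated so far: {migrated}")
        migrated.append(name)
    return migrated


# Hypothetical stand-ins for real migration and verification tooling.
moved: List[str] = []


def fake_migrate(name: str) -> None:
    moved.append(name)


def fake_verify(name: str) -> bool:
    return name != "billing"  # pretend the billing service fails its checks


print(migrate_incrementally(["static-site", "auth"], fake_migrate, fake_verify))
# ['static-site', 'auth']
try:
    migrate_incrementally(["reports", "billing", "search"], fake_migrate, fake_verify)
except RuntimeError as err:
    print(err)  # billing failed verification; migrated so far: ['reports']
```

The "search" component is never touched once "billing" fails. A production version would also roll back the failed component; that step is omitted here.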

Ongoing Upkeep:

Once the data has been migrated to the cloud, it's important to ensure that it is optimized, secure, and easily retrievable going forward. It also helps to monitor critical infrastructure for real-time changes and to anticipate workload contention. Apart from real-time monitoring, you should also assess the security of the data at rest to ensure that operating in your new environment meets regulatory compliance laws such as HIPAA and GDPR. Another consideration to keep in mind is meeting ongoing performance and availability benchmarks, so that your RPO and RTO objectives are still met should they change.
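RPO (recovery point objective) is the maximum acceptable data loss, measured as time since the last restorable copy; RTO (recovery time objective) is the maximum acceptable downtime during recovery. A minimal ongoing check might compare both against measured values, as in this sketch with hypothetical timestamps and targets.

```python
from datetime import datetime, timedelta, timezone


def rpo_met(last_recovery_point, rpo, now):
    """RPO: the newest restorable copy must be no older than the allowed data loss."""
    return now - last_recovery_point <= rpo


def rto_met(measured_recovery_time, rto):
    """RTO: a recovery drill must finish within the allowed downtime."""
    return measured_recovery_time <= rto


now = datetime(2022, 3, 1, 12, 0, tzinfo=timezone.utc)
last_snapshot = datetime(2022, 3, 1, 10, 30, tzinfo=timezone.utc)  # hypothetical

print(rpo_met(last_snapshot, timedelta(hours=1), now))     # False: 90 minutes of data at risk
print(rpo_met(last_snapshot, timedelta(hours=4), now))     # True
print(rto_met(timedelta(minutes=45), timedelta(hours=1)))  # True
```

Running checks like these on a schedule turns the RPO and RTO goals into alerts instead of numbers in a document.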

Conclusion

A cloud migration strategy is a document that defines the 'why', 'who', 'what', and 'how' of the cloud migration. It serves as the guide for a successful legacy system modernization and for scaling your business.

If your organization has the leaders to fill the roles in the cloud migration process, the next logical step is to bring your cloud expert on board. A dedicated cloud team will help with the initial assessment of your applications and will identify the challenges of cloud adoption in your organization.


5 Future Cloud Computing Trends


Consumer and enterprise adoption of cloud storage has exploded recently: research and advisory firm Gartner estimates that worldwide end-user spending on public cloud services will close out the year up 23% from 2020, totaling $332 billion. Much of the growth has been attributed to the pandemic-driven shift to remote work, and the cloud industry is constantly finding new ways to keep up with demand, alongside growing adoption of existing methodologies. The following are five trends that are set to shake up cloud computing in 2022.

We are likely to see a shift in focus from deploying cloud platforms and tools to improve specific functions (like the shift to Zoom meetings) toward more holistic strategies centered on organization-wide cloud migration.

As more organizations embrace global supply chains, hybrid and remote workplaces, and data-driven business models, cloud computing is bound to become more popular. What's more, the emergence of new deployment models and capabilities gives companies of different sizes and industries more choices in how they use the cloud and benefit from this investment.

According to some IT experts, cloud computing will lead the way among technologies for solving critical business challenges for years to come. The cloud spending of large enterprises, projected to grow at a CAGR of 16% from 2016 to 2026, demonstrates this.

Furthermore, many organizations now use cloud services to achieve several business objectives. One report claims that over 80% of organizations keep their workloads in the cloud, and an increasing number are moving toward the public cloud. As a result, the public cloud market is expected to grow from $175 billion in 2018 to $331 billion in 2022.
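Growth figures like these are easier to compare as a compound annual growth rate, CAGR = (end / start) ** (1 / years) - 1. The sketch below applies that standard formula to the $175 billion (2018) and $331 billion (2022) public cloud figures quoted above, and shows what the 16% CAGR over 2016 to 2026 implies in total growth; the function names are illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def growth_multiple(rate, years):
    """How many times larger a value becomes after compounding at `rate` for `years`."""
    return (1 + rate) ** years


public_cloud_rate = cagr(175, 331, 2022 - 2018)     # figures in $ billions
print(f"{public_cloud_rate:.1%}")                   # 17.3%
print(f"{growth_multiple(0.16, 2026 - 2016):.2f}")  # 4.41
```

So the public cloud forecast implies roughly 17% growth per year, and a 16% CAGR sustained for a decade means spending ends up about 4.4 times its starting level.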

The growth of cloud computing is certain to continue in 2022 and beyond, so now is a good time to start watching some of the major cloud computing trends of 2022.

For a company like Amazon, it is good to see that sustainability is high on the agenda. However, one could argue there is more to it than that, with a recent forecast claiming that the effects of climate change will cost companies about $1.6 trillion annually by 2025.


Playstation | Playstation 5 | Playstation 4 | Playstation 3 | Playstation 2 | Playstation Direct | Playstation Store | Playstation Network | Playstation Plus

Playstation

Playstation is a video game console brand developed by Sony. The first Playstation was launched in Japan in 1994 and was later released worldwide. In Japanese, Playstation is written プレイステーション.

PS is short for Playstation. The Playstation brand comprises home video game consoles, two handhelds, a media center, and a smartphone, as well as an online service and multiple magazines.

The original console in the series was the first console of any type to ship over 100 million units, doing so in under a decade. Its successor, the Playstation 2, was launched in 2000.

The Playstation 2 is the best-selling home console to date, with 155 million units sold by the end of 2012. Sony's next console, the Playstation 3, was launched in 2006 and had sold 87.4 million units by March 2017. The Playstation 4 was launched in 2013; it sold one million units within a day, becoming the fastest-selling console in history. The Playstation 5 was released in 2020 and is the latest console in the series.

The first Playstation offered a remarkable improvement in graphics over the cartridge-based consoles of the early 1990s.

Games were distributed on CD-ROM, which held far more data than cartridges and made a richer visual environment possible.

The first handheld game console in the series, the Playstation Portable (PSP), sold 80 million units worldwide by November 2013. Its successor, the Playstation Vita, was released in Japan in December 2011.

Playstation5

The Playstation 5, or PS5, is a home video game console. Sony announced it in 2019 as the successor to the Playstation 4, and it was released on 12 November 2020 in Australia, Japan, New Zealand, North America, and South Korea, followed by a worldwide release one week later.

The Playstation 5 belongs to the ninth generation of video game consoles, alongside Microsoft's Xbox Series X and Series S, which launched the same month. The base model includes an optical disc drive compatible with Ultra HD Blu-ray discs.

The PS5's main hardware features include a solid-state drive customized for high-speed data streaming, which significantly improves storage performance.

Playstation5 Console

The PS5 console unlocks new gaming possibilities you never anticipated. Experience lightning-fast loading with an ultra-high-speed SSD, deeper immersion with support for haptic feedback, adaptive triggers, and 3D Audio, and an all-new generation of incredible Playstation games.

Features of Playstation5 (PS5)

PS5 is capable of 4K/120fps gameplay and also supports 8K/60.

PS5 gives you faster loading times thanks to its new SSD (solid-state drive).

The PS5's Tempest 3D AudioTech is like a lighter version of Dolby Atmos.

The PS5 runs cool and quiet nearly all the time.

Special about Playstation5

The PS5 is really a leap forward for console gaming, offering gorgeous 4K performance, impressive fast load times, and a truly game-changing controller that makes playing games more immersive and tactile than ever. It plays nearly all Playstation4 games, and, in many cases, allows them to run and load better than ever before.

Playstation5 Price and availability

In India, the Playstation 5 is priced at Rs 49,990.

The Playstation 5 is available in India on online shopping sites such as Flipkart and Amazon.

Game overview of Playstation5

Horizon Forbidden West

Horizon Forbidden West is a Playstation exclusive available on Playstation 5, published by Sony Interactive Entertainment. It is an epic RPG (role-playing game) adventure.

The key features of Horizon Forbidden West are:

Brave an expansive open world

Discover distant lands, rich culture, new enemies, and striking characters.

A majestic frontier

Explore the sea forests, towering mountains, and sunken cities of a far-future America.

New dangers

Engage in strategic battles against enormous machines and human enemies, using weapons, gear, and traps crafted from salvaged parts.

Unravel startling mysteries

Uncover the secrets behind Earth's impending collapse and unlock a hidden chapter of the ancient past, one that will change Aloy forever.

As this overview shows, the Playstation 5 performs well and is a worthy successor in Sony Interactive Entertainment's console line.



Serverless Computing: Introducing a Rising Technology

The traditional way for an organization to build its IT infrastructure was to buy hardware and software resources separately. That approach has become obsolete. With the arrival of cloud computing, there are now many ways to virtualize IT systems and to access the applications you need over the Internet.

Cloud computing is extremely popular today. With many competing cloud service providers on the market, there are plenty of questions about which one to choose. Before diving deep into serverless computing, let's refresh a few cloud concepts.

Serverless computing is a cloud computing execution model in which the cloud provider allocates and manages virtual machines on demand to serve requests. Customers are charged based on an abstract measure of the resources needed to fulfill each request, rather than per virtual machine per hour. Despite the name, it does not involve running code without servers. The name comes from the fact that the owner of the system does not have to buy, rent, or provision the servers or virtual machines that the back-end code runs on.

Why Serverless Computing?

Serverless computing can be more cost-effective than renting or buying a fixed number of servers. Fixed servers generally involve significant periods of underuse or idle time.

In addition, a serverless architecture means that developers and operators do not have to spend time setting up and tuning autoscaling policies or systems. The cloud provider is responsible for scaling capacity to match demand.

Cloud-native architecture inherently scales down as well as up, so these systems are described as elastic rather than merely scalable. With function-as-a-service, the units of code exposed to the outside world are simple event-driven functions. The programmer no longer has to think about multithreading or handle HTTP requests explicitly, which simplifies back-end development.
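The sketch below illustrates this division of labor. A developer-written function takes one event and returns one result. A stand-in for the platform receives events and runs them concurrently. `simulated_platform` and `handle_upload` are hypothetical names. A thread pool is only a local approximation of how a provider fans events out across instances; it is not any provider's actual mechanism.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Event = Dict[str, str]


def handle_upload(event: Event) -> str:
    # Developer-owned code (hypothetical): a plain function of one event.
    # It contains no threading, sockets, or HTTP parsing.
    return f"processed {event['object_key']}"


def simulated_platform(handler: Callable[[Event], str],
                       events: List[Event],
                       max_instances: int = 4) -> List[str]:
    # Platform-owned code (simulated): deliver each event to the handler,
    # running up to max_instances invocations at the same time.
    with ThreadPoolExecutor(max_workers=max_instances) as pool:
        # pool.map returns results in the same order as the input events.
        return list(pool.map(handler, events))


if __name__ == "__main__":
    uploads = [{"object_key": f"photos/img{i}.jpg"} for i in range(5)]
    for line in simulated_platform(handle_upload, uploads):
        print(line)
```

The concurrency lives entirely in `simulated_platform`, so `handle_upload` stays a simple function that is easy to test on its own.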

Benefits of the Serverless Model:

Dynamic scalability:

One key characteristic of serverless is automatic scaling: computing capacity grows and shrinks with the workload. For example, when a serverless computing environment runs in the public cloud, infrastructure scaling becomes flexible and autonomous. This makes serverless computing attractive for unpredictable workloads. With a serverless design, the scalability of cloud-based applications is not limited by the underlying infrastructure.
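Little's law gives a rough estimate of this scale-up and scale-down behavior. The number of function instances running at once is roughly the request rate multiplied by the average time each request takes. The sketch below is a back-of-the-envelope model built on that assumption. `required_instances` and the traffic numbers are hypothetical, and real platforms add their own concurrency limits and cold-start effects.

```python
def required_instances(requests_per_second: int, avg_duration_ms: int) -> int:
    """Estimate concurrently running function instances via Little's law.

    concurrency = arrival rate x time each request spends being served.
    Integer milliseconds keep the ceiling division exact.
    """
    if requests_per_second < 0 or avg_duration_ms < 0:
        raise ValueError("inputs must be non-negative")
    # Ceiling of (rps * ms / 1000) using integer arithmetic only.
    return (requests_per_second * avg_duration_ms + 999) // 1000


# Hypothetical traffic profile: idle at night, a midday spike, steady evening load.
hourly_rps = {"02:00": 0, "09:00": 40, "12:00": 400, "18:00": 120}

if __name__ == "__main__":
    for hour, rps in hourly_rps.items():
        print(hour, rps, "req/s ->", required_instances(rps, avg_duration_ms=200), "instances")
```

At zero traffic the estimate drops to zero instances. This "scale to zero" is what separates an elastic serverless setup from a fixed server fleet.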

Cost-friendly hosted solutions:

Cloud costs can add up quickly. Serverless computing uses resources more economically than dedicated infrastructure. With this model, you avoid a large capital expenditure (CapEx) on in-house infrastructure, which can accumulate quickly. Instead, cloud-native serverless computing gives you an agile way to consume resources only when you need them.
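The sketch below makes the "pay only when you use it" idea concrete. It compares a fixed pair of always-on servers with per-request billing, meaning a request fee plus a charge per GB-second of compute, which is the general shape many function platforms use. All prices, the 730-hour month, and the function names are hypothetical assumptions. Real pricing, free tiers, and data-transfer charges vary by provider.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # assumption: approximate average hours in a month


@dataclass
class PayPerUsePricing:
    # Hypothetical round-number prices for illustration only,
    # not any provider's real rates.
    per_million_requests: float = 0.25
    per_gb_second: float = 0.00002


def pay_per_use_cost(requests: int, avg_duration_s: float, memory_gb: float,
                     pricing: PayPerUsePricing) -> float:
    """Monthly cost when billed per request plus per GB-second of compute."""
    gb_seconds = requests * avg_duration_s * memory_gb
    return requests / 1_000_000 * pricing.per_million_requests + gb_seconds * pricing.per_gb_second


def always_on_cost(servers: int, hourly_rate: float) -> float:
    """Monthly cost of servers that are paid for whether or not they are busy."""
    return servers * hourly_rate * HOURS_PER_MONTH


def break_even_requests(avg_duration_s: float, memory_gb: float,
                        pricing: PayPerUsePricing, fixed_monthly_cost: float) -> float:
    """Monthly request volume at which pay-per-use costs as much as the fixed servers."""
    cost_per_request = (pricing.per_million_requests / 1_000_000
                        + avg_duration_s * memory_gb * pricing.per_gb_second)
    return fixed_monthly_cost / cost_per_request


if __name__ == "__main__":
    pricing = PayPerUsePricing()
    fixed = always_on_cost(servers=2, hourly_rate=0.10)
    serverless = pay_per_use_cost(2_000_000, avg_duration_s=0.1, memory_gb=0.5, pricing=pricing)
    print(f"always-on: ${fixed:.2f}/month, pay-per-use: ${serverless:.2f}/month")
    print(f"break-even at about {break_even_requests(0.1, 0.5, pricing, fixed):,.0f} requests/month")
```

With these made-up numbers, 2 million 100 ms requests a month cost far less on pay-per-use. Above roughly 117 million requests a month, the always-on servers become cheaper. That is why serverless tends to suit spiky or low-to-moderate traffic best.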

Increased agility:

Serverless computing can make a developer's life simpler. These solutions are designed to ease development headaches, automate core processes, and even shorten release cycles. Serverless computing relieves developers of the tedious work of maintaining servers, which leaves more time for writing code and building innovative services. Besides reducing development time, it provides elastic scalability and greater agility, because developers do not have to manage the underlying infrastructure.

Faster time to market:

Serverless computing greatly improves your ability to respond to market demands. Applications and services can be built and deployed faster and turned into marketable solutions. You won't have to wait for expensive infrastructure or get held up by IT processes that take forever. Staff are no longer constantly distracted by updating and maintaining infrastructure; instead, they can focus on innovating and responding to customer needs.

Top Serverless Computing Tools:

AWS Lambda

Azure Functions

Google Cloud Functions

AWS Lambda:

AWS Lambda is a serverless compute service that runs your code in response to events and automatically manages the underlying compute resources for you. These events may include changes in state or an update, such as a user placing an item in a shopping cart on an e-commerce website.

You can use AWS Lambda to extend other AWS services with custom logic, or to create your own backend services that operate at AWS scale, performance, and security. AWS Lambda can automatically run code in response to multiple events, such as HTTP requests via Amazon API Gateway, modifications to objects in Amazon Simple Storage Service (Amazon S3) buckets, table updates in Amazon DynamoDB, and state transitions in AWS Step Functions.

Lambda runs your code on high-availability compute infrastructure and performs all the administration of your compute resources. This includes server and operating system maintenance, capacity provisioning and automatic scaling, code and security patch deployment, and code monitoring and logging. All you need to do is supply the code.
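In the shopping-cart example, a Lambda function written in Python is just a handler that receives the event and returns a result. The sketch assumes the function sits behind Amazon API Gateway with proxy integration. In that setup, the request body arrives as a JSON string in `event["body"]`, and the response must carry `statusCode`, `headers`, and `body`. The handler name `lambda_handler`, the `item_id`/`quantity` fields, and the omitted storage step are hypothetical.

```python
import json


def _response(status: int, payload: dict) -> dict:
    # API Gateway proxy integrations expect this shape; body must be a string.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event, context):
    """Handle a hypothetical 'add item to cart' request."""
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})
    if not isinstance(payload, dict):
        return _response(400, {"error": "body must be a JSON object"})

    item_id = payload.get("item_id")
    quantity = payload.get("quantity", 1)
    # Reject missing ids and non-integer or non-positive quantities (bools excluded).
    if not item_id or type(quantity) is not int or quantity < 1:
        return _response(400, {"error": "item_id and a positive integer quantity are required"})

    # A real function would persist the cart update here (for example to DynamoDB);
    # that step is omitted to keep the sketch self-contained.
    return _response(200, {"added": item_id, "quantity": quantity})


if __name__ == "__main__":
    # Local smoke test: Lambda passes a context object, but this handler ignores it.
    sample_event = {"body": json.dumps({"item_id": "sku-123", "quantity": 2})}
    print(lambda_handler(sample_event, None))
```

Because the handler is a plain function, you can call it locally with a hand-built event before deploying it. Lambda takes care of running many copies in parallel when traffic grows.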

Key Features:

Extend other AWS services with custom logic

Build custom backend services

Bring your own code

Completely automated administration

Built-in fault tolerance

Package and deploy functions as container images

Automatic scaling

Connect to relational databases

Fine-grained control over performance

Connect to shared file systems

Run code in response to Amazon CloudFront requests

Orchestrate multiple functions

Integrated security model

Trust and integrity controls

Only pay for what you use

Flexible resource model

Integrate Lambda with your favorite operational tools.

Azure Functions:

Azure Functions is a serverless compute service that runs our code on demand, without our having to host it on a server or manage infrastructure. Azure Functions can be triggered by a variety of events. It lets us write code in several languages, such as C#, F#, Node.js, Java, or PHP.

Azure Functions provide the following features:

Flexible development:

We can write our code directly in the portal, or set up continuous integration and deploy our code through Azure DevOps, GitHub, or any other supported development tool.

Integrated security:

We can protect HTTP-triggered functions with OAuth providers such as Azure Active Directory, Microsoft account, or other third-party accounts, including Google, Facebook, and Twitter.

Choice of development language:

It lets us write a function in the language of our choice, including C#, F#, Java, PHP, JavaScript, and others. The full list of supported languages is available in the Azure Functions documentation.

Bring our own dependencies:

Azure Functions lets us download code dependencies from NuGet and npm, so we can use any library our code needs that is available on either NuGet or npm.

Pay-per-use pricing model:

Azure offers a flexible consumption plan to suit our requirements. Also, if we already use App Service for our other applications, we can run our functions on the same plan, so there is no extra cost to pay.

Open Source:

The Functions runtime is open source.

Google Cloud Functions:

Google Cloud serverless computing makes server scalability effectively unlimited: there is no load management to handle. Code or functions run on request or in response to an event, and when requests arrive in parallel, a separate function instance can run for each one. Google Cloud offers a broad range of serverless computing services.

GCP serverless computing is flexible about what you deploy on it: the customer can ship code either as source code or as a container. Full-stack serverless applications can be built with Google Cloud's storage, databases, AI, and many other services.

GCP serverless computing lets the customer use any language they are comfortable with, and it also supports their frameworks and libraries. The customer can bring a function, source code, or a container, whichever they prefer.

Features of Google Cloud Functions:

No need to manage servers

Fully managed security

Pay only for what you use

Flexible environment for developers

Easy-to-understand interface

Various options for full-stack serverless

Build an end-to-end serverless application

Deployment is quite fast.

Conclusion

Serverless cloud computing isn't only about agility and cost optimization. It's about continuously building, innovating, and staying ahead. It lets providers offer backend services on an as-used basis, charged according to the computation consumed. Google Cloud Platform also unlocks an entirely new world of virtual possibilities.

Frequently Asked Questions:

What is serverless computing?

Ans - Serverless computing is an easily scalable, cost-effective, cloud-based model. It lets enterprises adopt cloud services and focus their time and resources on writing, deploying, and optimizing code, without the burden of provisioning or managing server instances.

What is serverless computing good for?

Ans - Serverless computing offers various advantages over traditional cloud-based or server-centric infrastructure. For many developers, serverless architectures offer greater scalability, more flexibility, and faster time to release, all at a reduced cost.

Where is serverless computing used?

Ans - The most common use cases are Internet of Things (IoT) applications and mobile backends that need event-driven processing, Carvalho says. Other use cases are batch processing or stream processing for real-time responses to events.


Artificial Intelligence | Types of Artificial Intelligence | Artificial Intelligence Applications

Artificial intelligence uses computers and machines to mimic the problem-solving and decision-making capabilities of the human mind.

Many definitions of artificial intelligence (AI) have surfaced over the last few decades. John McCarthy offers the following one in a 2004 paper: "It is the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to methods that are biologically observable."

However, decades before this definition, Alan Turing's seminal work "Computing Machinery and Intelligence," published in 1950, marked the start of the conversation about artificial intelligence. In this paper, Turing, often referred to as the "father of computer science," asks the question "Can machines think?" From there, he proposes a test, now famously known as the "Turing Test," in which a human interrogator tries to tell apart a computer's text responses from a human's.

This test has faced much scrutiny since its publication. Even so, it remains an important part of the history of AI and an ongoing concept in philosophy, because it draws on ideas about linguistics.

Stuart Russell and Peter Norvig later published Artificial Intelligence: A Modern Approach, which became one of the leading textbooks in the study of AI. In it, they explore four possible goals or definitions of AI. These distinguish computer systems by whether they aim at human-like or rational behavior, and by whether they focus on thinking or on acting:

Human approach

Systems that think like humans

Systems that act like humans

Ideal approach

Systems that think rationally

Systems that act rationally
