
In today’s world, data is considered one of the most valuable assets any business can have. However, to truly unlock the power of data, it’s not enough to simply collect it—organizations need to ensure that the data they are working with is accurate, consistent, and reliable. That’s where Data Quality Observability comes in.

Data Quality Observability is the ability to monitor, understand, and proactively manage the state of data across an entire ecosystem. With the growing complexity of data pipelines and the increasing reliance on data-driven decisions, organizations can no longer afford to ignore the health of their data. Data quality observability helps businesses identify issues before they impact operations, making it a critical part of any data strategy.

#datagaps#data quality#data#dataops#dataops suite#data quality observability#QA tester#Data Analysts#BI Experts


How Databricks Unity Catalog and Datagaps Automate Governance and Validation

Data quality is the backbone of accurate analytics, regulatory compliance, and efficient business operations. As organizations scale their data ecosystems, maintaining high data integrity becomes more challenging.

The seamless integration between Databricks Unity Catalog and Datagaps DataOps Suite provides a powerful framework for automated governance and validation, ensuring that data remains accurate, complete, and compliant at all times.

In our previous discussion, we highlighted how Datagaps enhances metadata management, lineage tracking, and automation within Unity Catalog. This article takes the next step by diving into data quality assurance, a crucial component of enterprise-wide data governance.

By leveraging Datagaps Data Quality Monitor, organizations can implement automated validation strategies, reduce manual effort, and integrate real-time data quality scores into Unity Catalog for proactive governance. Let’s explore how these technologies work together to ensure high-quality, reliable data that drives better decision-making and compliance.

The Growing Need for Automated Data Quality Assurance

Modern enterprises manage vast amounts of structured and unstructured data across multiple platforms. Ensuring data accuracy, completeness, and consistency is no longer just a best practice – it’s a necessity for regulatory compliance and business intelligence.

Databricks Unity Catalog provides a centralized governance framework for managing metadata, access controls, and data lineage across an organization. By integrating with Datagaps Data Quality Monitor, enterprises can automate data validation, reduce errors, and gain deeper insights into data health and integrity.

6 Key Data Quality Dimensions

Effective data quality management revolves around six fundamental dimensions:

Accuracy – Ensuring data reflects real-world values without discrepancies.

Completeness – Verifying that all required fields and records are present.

Consistency – Maintaining uniformity across multiple data sources and systems.

Timeliness – Ensuring data is up-to-date and available when needed.

Uniqueness – Eliminating duplicate records and redundant data entries.

Validity – Enforcing compliance with defined formats, business rules, and constraints.

By addressing these dimensions, organizations can improve the trustworthiness of their data assets, enhance AI/ML outcomes, and comply with industry regulations.
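To make the dimensions concrete, here is a minimal Python sketch that scores four of them (completeness, uniqueness, validity, and timeliness) on a tiny in-memory dataset. The records, field names, email pattern, and 30-day freshness window are all illustrative assumptions, not part of Unity Catalog or Datagaps.

```python
import re
from datetime import date

# Hypothetical sample records; field names are illustrative only.
records = [
    {"id": 1, "email": "ann@example.com", "updated": date(2024, 5, 1)},
    {"id": 2, "email": None, "updated": date(2024, 5, 2)},
    {"id": 2, "email": "bob@example", "updated": date(2023, 1, 15)},
    {"id": 4, "email": "cy@example.com", "updated": date(2024, 5, 3)},
]

# Assumed format rule for the validity check.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def completeness(rows, field):
    """Share of rows where the field is present and not None."""
    return sum(r.get(field) is not None for r in rows) / len(rows)

def uniqueness(rows, field):
    """Share of distinct values among all rows for the field."""
    return len({r[field] for r in rows}) / len(rows)

def validity(rows, field, pattern):
    """Share of non-null values that match the expected format."""
    values = [r[field] for r in rows if r.get(field) is not None]
    if not values:
        return 1.0
    return sum(bool(pattern.match(v)) for v in values) / len(values)

def timeliness(rows, field, as_of, max_age_days):
    """Share of rows updated within max_age_days of the as_of date."""
    return sum((as_of - r[field]).days <= max_age_days for r in rows) / len(rows)

scores = {
    "completeness(email)": completeness(records, "email"),
    "uniqueness(id)": uniqueness(records, "id"),
    "validity(email)": validity(records, "email", EMAIL_PATTERN),
    "timeliness(updated)": timeliness(records, "updated", date(2024, 5, 4), 30),
}
for name, value in scores.items():
    print(f"{name}: {value:.2f}")
```

Accuracy and consistency are left out because they need a trusted reference or a second system to compare against, which a single dataset cannot provide.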

Automating Data Quality Validation with White-Box and Black-Box Testing

Ensuring data integrity at scale requires a systematic approach to validation. Two widely used methodologies are:

1. White-Box Testing

Examines internal data transformations, lineage, and business rules.

Ensures that every step in the ETL (Extract, Transform, Load) process adheres to defined standards.

Provides deeper insights into data processing logic to catch issues at the source.

2. Black-Box Testing

Focuses on output validation by comparing actual results against expected benchmarks.

Useful for detecting anomalies, missing records, and schema mismatches.

Works well for regulatory compliance and end-to-end data pipeline testing.

A hybrid approach combining both techniques ensures robust validation and proactive anomaly detection.
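The difference between the two methodologies can be sketched in a few lines of Python. The transformation rule, the row layout, and the function names below are hypothetical and only illustrate the idea: the white-box check exercises the rule itself, while the black-box check compares the output with an expected benchmark.

```python
def to_net_amount(gross, tax_rate):
    """Hypothetical business rule: strip tax from a gross amount."""
    return round(gross / (1 + tax_rate), 2)

def transform_orders(source_rows):
    """Hypothetical ETL step that applies the rule to every source row."""
    return [
        {"order_id": r["order_id"], "net": to_net_amount(r["gross"], r["tax_rate"])}
        for r in source_rows
    ]

source = [
    {"order_id": "A1", "gross": 120.0, "tax_rate": 0.20},
    {"order_id": "A2", "gross": 53.5, "tax_rate": 0.07},
]

def white_box_check():
    """White-box: test the transformation logic against the business rule."""
    return to_net_amount(120.0, 0.20) == 100.0 and to_net_amount(53.5, 0.07) == 50.0

def black_box_check(expected):
    """Black-box: compare pipeline output with a benchmark, ignoring internals."""
    return transform_orders(source) == expected

expected_output = [
    {"order_id": "A1", "net": 100.0},
    {"order_id": "A2", "net": 50.0},
]
print("white-box passed:", white_box_check())
print("black-box passed:", black_box_check(expected_output))
```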

How Unity Catalog and Datagaps Data Quality Monitor Work Together

1. Unified Governance and Automated Validation

Databricks Unity Catalog centralizes metadata management, access control, and lineage tracking.

Datagaps Data Quality Monitor extends these capabilities with automated quality checks, reducing manual efforts.

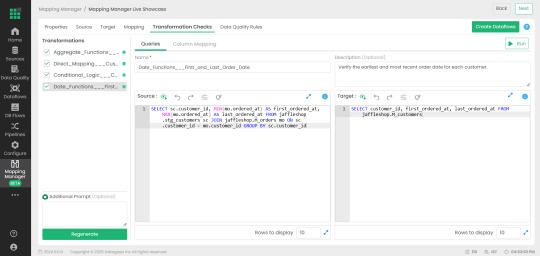

2. Mapping Manager Utility: Simplifying Test Case Automation

One of the standout features of Datagaps Data Quality Monitor is the Mapping Manager Utility, which:

Extracts mapping configurations from Databricks Unity Catalog.

Automatically generates white-box and black-box test cases.

Reduces the need for manual intervention, increasing efficiency and scalability.

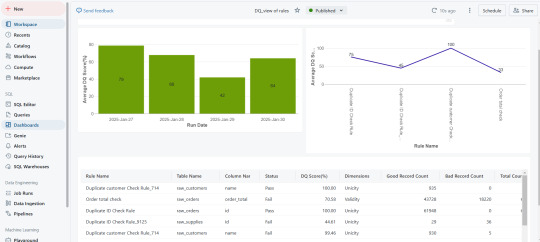

3. Real-Time Data Quality Scores for Proactive Governance

After test execution, a data quality score is generated.

These scores are seamlessly integrated into Databricks Unity Catalog, allowing real-time monitoring.

Organizations can visualize data quality insights through dashboards and take corrective actions before issues impact business operations.
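The article does not say how the score is calculated. As one plausible illustration only, the sketch below treats the score as the weighted share of passed checks; the class, function, and check names are assumptions and do not reflect the actual Datagaps formula.

```python
from dataclasses import dataclass

@dataclass
class CheckResult:
    """Outcome of one executed data quality check (hypothetical structure)."""
    name: str
    passed: bool
    weight: float = 1.0

def quality_score(results):
    """Weighted percentage of passed checks, rounded to one decimal place."""
    total = sum(r.weight for r in results)
    if total == 0:
        return 0.0
    passed = sum(r.weight for r in results if r.passed)
    return round(100 * passed / total, 1)

results = [
    CheckResult("row_count_matches", True),
    CheckResult("no_null_customer_id", True, weight=2.0),
    CheckResult("amount_within_range", False),
]
score = quality_score(results)
print(f"data quality score: {score}")
```

A score like this could then be stored alongside the table's metadata so that dashboards can track it over time.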

Key Use Cases

ETL and Data Pipeline Validation – Ensuring data transformations adhere to defined business rules.

Regulatory Compliance and Audit Readiness – Mitigating risks associated with inaccurate reporting.

Enterprise Data Lakehouse Governance – Enhancing consistency across distributed datasets.

AI/ML Data Preprocessing – Ensuring clean, high-quality data for better model performance.

Automated Data Quality Checks – Reducing manual data validation efforts for faster, more reliable insights.

Scalability for Large Datasets – Efficiently managing high-volume, high-velocity enterprise data.

Faster QA Cycles – Automating test case execution for rapid turnaround.

Lower Operational Resources – Reducing human intervention, saving time and resources.

The Business Impact: Why This Integration Matters

Enhanced Automation – Eliminates manual quality checks and increases efficiency.

Real-Time Monitoring – Provides instant visibility into data quality metrics.

Stronger Compliance – Supports industry standards and regulations effortlessly.

Scalability – Designed for large-scale, complex data ecosystems.

Cost Efficiency – Reduces operational overhead and improves ROI on data management initiatives.

Ensuring data quality at scale requires a combination of automated governance, real-time monitoring, and seamless integration. The connection between Databricks Unity Catalog and Datagaps Data Quality Monitor provides a comprehensive solution to achieve this goal.

With automated test case generation, continuous data validation, and integrated governance, organizations can ensure their data is always accurate, complete, and compliant—laying the foundation for data-driven decision-making and regulatory confidence.


#dataquality#Databricks#cloud data testing#DataOps#Datagaps#Catalog#Unity Catalog#Datagaps BI Validator


Automating Tableau Reports Validation: The Easy Path to Trusted Insights

Automating Tableau Reports Validation is essential to ensure data accuracy, consistency, and reliability across multiple scenarios. Manual validation can be time-consuming and prone to human error, especially when dealing with complex dashboards and large datasets. By leveraging automation, organizations can streamline the validation process, quickly detect discrepancies, and enhance overall data integrity.

In this article, we explore why Tableau report validation should be automated and how it is done.

Importance of Automating Tableau Reports Validation

Automating Tableau report validation provides several benefits, ensuring accuracy, efficiency, and reliability in BI reporting.

Automating report validation reduces time and effort, allowing analysts to focus on insights rather than troubleshooting errors.

Automation prevents data discrepancies and ensures that all reports pull consistent data.

Many organizations deal with high volumes of reports and dashboards, which makes validating each report manually impractical. Automating report validation becomes critical to maintaining efficiency.

Organizations update their Tableau dashboards frequently, sometimes daily. Automated validation directly compares the previous and current data to detect changes or discrepancies, ensuring that metrics remain consistent after each data refresh.

BI Validator simplifies BI testing by providing a platform for automated BI report testing. It enables seamless regression, stress, and performance testing, making the process faster and more reliable.

Comparing Tableau reports with database data ensures that records from the source are reflected accurately in the report visuals.

This validation process extracts data from Tableau report visuals and compares it with SQL Server, Oracle, Snowflake, or other databases. Datagaps DataOps Suite BI Validator streamlines this by pulling report data, applying transformations, and verifying consistency through automated row-by-row and aggregate comparisons (e.g., counts, sums, averages).

The discrepancies detected are typically missing, duplicate, or mismatched records.

Automation ensures these issues are caught early, reducing manual effort and improving trust in reporting.
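The row-by-row and aggregate comparisons described above can be sketched in plain Python. This is not how BI Validator is implemented; the rows are hypothetical stand-ins for data already extracted from a report visual and from the source database.

```python
from collections import Counter

def compare_rows(report_rows, db_rows, key, measure):
    """Row-by-row check: find missing, duplicate, and mismatched records."""
    report_counts = Counter(r[key] for r in report_rows)
    report_by_key = {r[key]: r for r in report_rows}
    db_by_key = {r[key]: r for r in db_rows}
    return {
        # In the database but absent from the report.
        "missing": sorted(k for k in db_by_key if k not in report_by_key),
        # Appearing more than once in the report.
        "duplicate": sorted(k for k, n in report_counts.items() if n > 1),
        # Present in both, but with different measure values.
        "mismatched": sorted(
            k for k in report_by_key
            if k in db_by_key and report_by_key[k][measure] != db_by_key[k][measure]
        ),
    }

def summarize(rows, measure):
    """Aggregate check: count and sum of the measure column."""
    values = [r[measure] for r in rows]
    return {"count": len(values), "sum": sum(values)}

# Hypothetical extracted data.
db_rows = [
    {"region": "East", "sales": 100},
    {"region": "West", "sales": 250},
    {"region": "North", "sales": 75},
]
report_rows = [
    {"region": "East", "sales": 100},
    {"region": "West", "sales": 240},
    {"region": "West", "sales": 240},
]

issues = compare_rows(report_rows, db_rows, key="region", measure="sales")
print(issues)
print(summarize(report_rows, "sales"), summarize(db_rows, "sales"))
```

Note that the row counts match here even though the data does not, which is why aggregate checks alone are not enough.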

Tableau Regression

In the DataOps Suite, regression testing is done through the Tableau Regression component, which compares the benchmarked version of a Tableau report with its live version.

This Tableau regression component can be very useful for automating the testing of Tableau reports or Dashboards during in-place upgrades or changes.
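The baseline-versus-live idea can be illustrated with Python's standard `difflib` module. This sketch covers only a text comparison of exported report data; the sample lines are hypothetical, and it does not attempt the appearance comparison a dedicated tool performs.

```python
import difflib

def text_differences(baseline_lines, live_lines):
    """Return only the added and removed lines between two report exports."""
    diff = difflib.unified_diff(
        baseline_lines, live_lines,
        fromfile="baseline", tofile="live", lineterm="",
    )
    return [
        line for line in diff
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    ]

# Hypothetical exports of the same worksheet before and after a change.
baseline = ["Region,Sales", "East,100", "West,250"]
live = ["Region,Sales", "East,100", "West,260"]

changes = text_differences(baseline, live)
print(changes if changes else "no regression detected")
```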


Tableau Upgrade

The Tableau Upgrade component in BI Validator supports automated report testing by comparing the same report, or different reports, across the same or different Tableau sources.

The comparison works the same way as regression testing: differences between the reports are pointed out in terms of both text and appearance.

Generate BI DataFlows is a convenient feature of the Datagaps DataOps Suite that generates multiple dataflows at once for Business Intelligence components such as Tableau.

The Generate BI DataFlows feature is beneficial in migration scenarios because it enables efficient data comparison between the original and migrated platforms and supports validation types such as BI Source, Regression, and Upgrade. By generating multiple dataflows based on selected reports, users can quickly detect discrepancies or inconsistencies that arise during migration, preserving data integrity and accuracy. When dealing with a large volume of reports, the feature also speeds up validation and minimizes manual effort.

As seen in the image, the wizard starts with the dataflow details. Users provide the connection details, such as the engine, validation type, Source-Data Source, and Target-Data Source.

Note: The BI Source and Regression validation types do not prompt for a Target-Data Source.

Let’s take a closer look at the steps involved in “Generate BI Dataflows”:

Reports

The Reports section prompts users to select pages from the required reports in the validation process. For Data Compare validation and Upgrade Validation, both source and target pages will be required. For other cases, only the source page will be needed.

Here is a sample screenshot of the extraction of source and target pages from the source and target reports, respectively.

Visual Mapping and Column Mapping (only in Data Compare Validation)

The "Visual Mapping" section allows users to load and compare source and target pages and then establish connections between corresponding tables.

It consists of three sections namely Source Page, Target Page, and Mapping.

In the source page and target page, respective Tableau worksheets are loaded and on selecting the worksheets option, users can preview the data.

After loading the source and target pages, in the mapping section, the dataset columns of source and target will be automatically mapped for each mapping.

After Visual Mapping, the "Column Mapping" section displays the columns of the source dataset and target dataset that were selected for the data comparison. It provides a count of the number of dataset columns that are mapped and unmapped in the "Mapped" and "Unmapped" tabs respectively.

Filters (for the rest of the validation types)

The filters section enables users to apply the filters and parameters on the reports to help in validating them. These filters can either be applied and selected directly through reports or they can be parameterized as well.

The Options section varies depending on the validation type selected. It is the penultimate stage of generating the flows, where users choose the advanced options and comparison options that shape the results.

Here’s a sample screenshot of the Options section before generating the dataflows.

This screenshot shows the report-to-report comparison options to be selected.

The Generate section generates multiple dataflows of the selected validation type, depending on the number of Tableau workbooks selected.

The above screenshot indicates that four dataflows will be generated on clicking the Generate BI Dataflows button. All of these dataflows use the same validation type (Tableau Regression Validation in this case).

Stress Test Plan

To automate stress testing and performance testing of Tableau reports, Datagaps DataOps Suite BI Validator includes a component called Stress Test Plan. It simulates a number of users actively accessing the reports to analyze how Tableau reports and dashboards perform under heavy load. The results can be used to pinpoint performance issues and to optimize data models and queries so that the Tableau environment can handle heavy usage patterns. Stress Test Plan can test multiple views from multiple workbooks at once, which adds flexibility and automation when checking Tableau reports for performance bottlenecks.

For more information on Stress Test Plan, check out “Tableau Performance Testing”.

Integration with CI/CD tools and Pipelines

In addition to these features, DataOps Suite includes built-in pipelines, in which a set of Tableau BI dataflows can be run automatically in a defined order, either in sequence or in parallel.

The application also has a built-in scheduler, with which users can schedule pipeline runs involving these BI dataflows well in advance. Jobs can be scheduled to run once or repeatedly.
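As a rough illustration of running a set of dataflows in sequence or in parallel, here is a small Python sketch built on the standard library. The dataflow names and the stand-in callables are hypothetical; a real pipeline would trigger the tool's own dataflows rather than local functions.

```python
from concurrent.futures import ThreadPoolExecutor

def run_pipeline(dataflows, parallel=False, max_workers=4):
    """Run (name, callable) pairs in order, or concurrently when parallel=True.

    Returns a dict mapping each dataflow name to its result.
    """
    if parallel:
        with ThreadPoolExecutor(max_workers=max_workers) as pool:
            futures = {name: pool.submit(flow) for name, flow in dataflows}
            return {name: future.result() for name, future in futures.items()}
    return {name: flow() for name, flow in dataflows}

# Stand-in dataflows; each just reports a status string.
dataflows = [
    ("sales_regression", lambda: "passed"),
    ("inventory_regression", lambda: "passed"),
    ("finance_data_compare", lambda: "failed"),
]

sequential_results = run_pipeline(dataflows)
parallel_results = run_pipeline(dataflows, parallel=True)
print(sequential_results)
```

In a CI/CD job, a non-zero exit code when any result is "failed" would be a natural way to stop a deployment.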

Achieve seamless, automated Tableau report validation with the advanced capabilities of Datagaps DataOps Suite BI Validator.


#Tableau#Tableau Reporting#Data Validation#DataOps Suite#Datagaps#Automating Tableau Reports Validation


#datagaps#bi testing#application testing#ETL testing#data testing#bi validator#etl validator#dataquality


The Ultimate Tableau Dashboard Testing Checklist

Ensuring the quality of a Tableau dashboard goes beyond building it. It requires thorough testing to validate reliability and usability. This Tableau dashboard testing checklist focuses on essential aspects: verifying data accuracy, evaluating functionality, security testing to protect sensitive data, stress testing for performance under load, and visual testing to maintain clarity and design standards.

Aspects Involved in Testing the Tableau Dashboard

Testing Data Sources:

Ensure the dashboard is connected to the correct data sources and that credentials are set up properly.

Checking Data Accuracy:

1. Check whether the source data is reflected in the dashboard. This involves cross-checking the data on the dashboard with the data from the sources.

2. Verify that the calculated fields, aggregates, and measures are as expected.

Functionality Testing:

1. Report or dashboard design check.

2. Test filters and parameters to confirm that they work as expected, do not display incorrect data, and that dynamic changes are applied and reflected in the visuals.

3. Drilldown reports checking.

4. Ease of navigation, interactivity, and responsiveness in terms of usability.

Security Testing:

1. Check report access security and Row Level Security permissions.

2. Integration of Single Sign On (SSO) security.

3. Multi-factor authentication.
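A Row Level Security check boils down to confirming that each user sees only the rows they are entitled to. The sketch below shows that idea in Python with a hypothetical permission map and hypothetical rows; it does not call Tableau and only models the comparison a tester would make.

```python
# Hypothetical entitlements: which regions each user may see.
permissions = {
    "alice": {"East", "West"},
    "bob": {"North"},
}

def rls_violations(rows_seen, user, permissions):
    """Return rows shown to the user that fall outside their entitlements."""
    allowed = permissions.get(user, set())
    return [row for row in rows_seen if row["region"] not in allowed]

# Rows the dashboard returned when viewed as bob (illustrative data).
rows_seen_by_bob = [
    {"region": "North", "sales": 75},
    {"region": "East", "sales": 100},
]

violations = rls_violations(rows_seen_by_bob, "bob", permissions)
print(violations if violations else "row-level security holds")
```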

Regression Testing:

Any change to the BI Dashboard/Model can impact the existing reports. It is important to perform regression testing so that after updates or modifications, the data/visuals shown in the dashboard remain the same before and after the changes.


Stress Testing:

Test the load time, run time, and filter application time, and simulate report access and user behavior.

Visual Testing:

Check alignments, layouts, and the consistency of text, visuals, and images to ensure all elements are properly aligned.

How Does DataOps Suite BI Validator Enable Testing of Tableau Dashboards?

BI Validator is a no-code testing tool to automate the regression, stress, and functional testing of Tableau reports and dashboards.

Checking Data Accuracy:

DataOps Suite allows users to compare the data behind a report's visuals with the source databases. After connecting to Tableau and selecting a report, the datasets underlying the report's visuals are accessible through the suite. Each visual has its own dataset, which can be compared to the source database used to build the Tableau report.

This is possible with the help of the Data Compare component in the suite, which can compare the aggregated data from the databases and the datasets of the visuals. The differences are captured, thus allowing users to check the data accuracy between the reports and databases.

Functionality Testing and Visual Testing:

Once the connection is established, the users can access the reports and the exact workspace to work with. The specific workbook/report is accessible. The report will be loaded without any changes, and the visuals and filters of the report can be accessed from the BI Validator itself, thus verifying the responsiveness of the visuals and filters and verifying whether the dynamic changes are reflected.

BI Validator comes with the Tableau Upgrade component to compare two reports, which may be the same report or different reports, from the same or different data sources.

A common use case is the comparison of views and worksheets across multiple environments. Comparison can be done in terms of both text and appearance, and differences are captured and pointed out wherever the two reports do not match. BI Validator can also point out differences in the filters of both reports when “Capture filters after applying” is enabled.


Security Testing:

BI Validator connects to Tableau through direct trust authentication, default authentication, or a personal access token. Users must provide their Tableau credentials, secret key, and secret ID (for a direct trust connection) and the necessary web login commands (for a default or personal access token connection). This keeps authentication secure, so that only users with valid credentials can access the reports to be validated.

BI Validator restricts users from downloading test results for BI reports, to prevent sensitive information from being downloaded.

The DataOps Suite also provides user-based access through roles and containers, so that reports are not accessible to everyone. Only users with defined roles and permissions can access reports in BI Validator.

Regression Testing:

BI Validator supports regression testing of the reports through the Tableau Regression component, which automates the testing of Tableau reports during any in-place upgrades and workbook deployments. This testing happens by comparing a benchmarked/baseline version of the dashboard/report with the live version. The filters can be changed accordingly if needed before/after the benchmarking. These filter changes can be bookmarked as the latest checkpoint before running the test.

Similar to upgrades, regression test runs can validate the differences in terms of appearance or text. Also, differences in the filters can be pointed out on enabling “capture filters after applying.”

Stress Testing:

BI Validator comes with a stress test plan to simulate concurrent users accessing reports to evaluate how reports and dashboards perform under heavy load. The plan typically involves running multiple users through different types of interactions, such as viewing reports, applying filters, refreshing data, and interacting with custom visuals.

The stress test plan allows the users to select the pages/dashboards from required workspaces to perform stress testing. These pages can be either from the same or different report.

Users can run the stress test plan with specific run options, such as the number of parallel users, time spent on each page, total simulation run time, number of seconds to reach parallel users, and refresh time.

The runs report metrics such as Average Open Time, Max Open Time, Average Filter Apply Time, and SLA Failures.
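To show how metrics like these come out of a simulated load, here is a minimal Python sketch. It replaces the real report request with a stand-in function that simply sleeps, so the timings are artificial; the function names, the SLA threshold, and the user count are assumptions for illustration.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

def open_report(user_id):
    """Stand-in for opening a report: sleeps briefly and returns elapsed seconds."""
    delay = random.Random(user_id).uniform(0.01, 0.05)
    start = time.perf_counter()
    time.sleep(delay)
    return time.perf_counter() - start

def run_stress_test(parallel_users, sla_seconds, action=open_report):
    """Run the action once per simulated user, all in parallel, and summarize."""
    with ThreadPoolExecutor(max_workers=parallel_users) as pool:
        timings = list(pool.map(action, range(parallel_users)))
    return {
        "average_open_time": mean(timings),
        "max_open_time": max(timings),
        "sla_failures": sum(t > sla_seconds for t in timings),
    }

metrics = run_stress_test(parallel_users=10, sla_seconds=0.03)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

Swapping `open_report` for a function that issues a real request would turn the same harness into a basic load probe, although it would still lack ramp-up time and per-page dwell time.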

#datagaps#Tableau#Tableau Testing#Tableau dashboard#Tableau Testing Checklist#BI Validator#BI#Youtube


Accelerate Power BI Reports with Performance Optimization and Stress Testing

Power BI reports can slow down significantly when too many users access them at the same time, especially on a Monday morning when demand is highest. In this video, we dive into a common BI challenge and introduce a solution to keep your reports running smoothly under heavy load: stress testing with Datagaps.

Timestamps:

0:06 – "The reports are too slow; please fix them" – Monday morning BI issues.

0:09 – BI developers’ challenge with performance demands.

0:17 – Why reports load slowly: latency, volume, and user traffic.

0:32 – The Monday problem: high user volume impacts performance.

0:40 – Solution overview: Stress testing with Datagaps.

0:45 – Setting up the Power BI connection for testing.

1:07 – Selecting reports and defining test options.

1:13 – Configuring user simulation, navigation speed, SLAs.

1:26 – Testing with shared or unique credentials.

1:36 – Tracking response times for filters and parameters.

2:01 – Viewing metrics: load times, exporting results.

2:12 – Insights on filter response metrics.

2:23 – Solving BI challenges with Datagaps stress testing.

Learn how to optimize Power BI dashboards by simulating high-user environments and analyzing performance metrics. Stay ahead of peak usage times and keep your team happy with faster, more responsive reports!


The Importance of Data Quality in AI Projects: Key Practices for Success

Data quality is the backbone of any successful AI project. High-quality data ensures that AI models are accurate, reliable, and unbiased, which is crucial for making informed decisions and achieving desired outcomes. On the flip side, poor data quality can lead to incorrect predictions, flawed insights, and costly mistakes. In fact, Gartner estimates that poor data quality costs organizations an average of $15 million annually, primarily due to inefficiencies and missed opportunities. The stakes are even higher in AI, where inaccurate data can result in significant financial losses and reputational damage.

A McKinsey report underscores that continuous data health monitoring and a data-centric approach are essential for unlocking AI’s full potential. This highlights the necessity of ongoing data quality management. Maintaining high data quality is not just a best practice—it's a critical requirement for the success and sustainability of AI projects.

Understanding Data Quality in AI

Data quality refers to how accurate, complete, reliable, and relevant a dataset is for its intended use. In AI, high-quality data directly impacts the performance and accuracy of models.

Common Data Quality Issues in AI Projects

AI projects often face issues such as data inconsistency, incomplete datasets, and data bias. For instance, Zillow's home-buying algorithm failed due to outdated and inconsistent data, leading to overpayments and significant financial losses. This case illustrates the critical need for up-to-date and accurate data in AI models to avoid costly errors.

Similarly, a mining company developing a predictive model for its mill processes faced challenges due to data being analyzed only once before storage. This lack of continuous monitoring resulted in unreliable predictions. By implementing real-time data health monitoring, the company improved its data quality and prediction accuracy.

Best Practices for Ensuring Data Quality in AI

Implement Data Governance Frameworks A robust data governance framework establishes policies, procedures, and standards for data management, ensuring consistency and accountability. Key components include data stewardship, quality metrics, and lifecycle management. According to IDC, organizations with strong data governance frameworks see a 20% improvement in data quality.

Data Profiling and Cleansing Data profiling examines data to understand its structure and quality, while data cleansing corrects inaccuracies. Effective profiling and cleansing can significantly enhance data quality. For instance, a financial institution reduced data errors by 30% through these practices.

Continuous Data Monitoring and Validation Regularly checking and validating data ensures it remains accurate and reliable. Advanced tools like data observability platforms can automate this process, offering real-time insights and early detection of issues. Continuous monitoring helps prevent costly downstream effects.

Data Integration and ETL Best Practices Standardizing data formats and validating data during the ETL (Extract, Transform, Load) process are crucial. Proper ETL practices can prevent data loss and corruption, leading to a 25% increase in data accuracy, as reported by TDWI.

Utilizing AI and Machine Learning for Data Quality Management AI and ML technologies can automate the detection and correction of data anomalies, enhancing data quality management. AI-powered tools can identify patterns and trends, enabling proactive quality management. By 2025, AI-driven data quality solutions are expected to become a standard in the industry.

Data Quality Metrics and KPIs Measuring data quality through metrics such as accuracy, completeness, consistency, and timeliness is essential. Setting and monitoring these metrics helps evaluate the effectiveness of data quality initiatives, guided by industry benchmarks from DAMA International.
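Data profiling, the second practice above, can be illustrated with a short Python sketch that summarizes each column's null count, distinct values, and observed types. The rows are hypothetical, and real profiling tools report far more (ranges, patterns, distributions).

```python
def profile(rows):
    """Summarize nulls, distinct values, and value types for every column."""
    columns = sorted({key for row in rows for key in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        report[col] = {
            "nulls": len(values) - len(present),
            "distinct": len(set(present)),
            # More than one type in a column is a common cleansing target.
            "types": sorted({type(v).__name__ for v in present}),
        }
    return report

# Hypothetical rows with a null and a mistyped amount.
rows = [
    {"id": 1, "amount": 10.5},
    {"id": 2, "amount": None},
    {"id": 3, "amount": "n/a"},
]

summary = profile(rows)
for column, stats in summary.items():
    print(column, stats)
```

Here the mixed `float` and `str` types in `amount` flag a value that cleansing would need to correct before the data feeds a model.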

Ensuring high data quality is crucial for the success of AI projects. By implementing robust governance frameworks, profiling and cleansing data, continuously monitoring quality, following ETL best practices, leveraging AI technologies, and setting quality metrics, organizations can overcome data challenges and achieve superior AI outcomes.

Referred by Datagaps

#DataOpsSuite

Request a demo today

Demo: https://www.datagaps.com/request-a-demo/#utm_source=youtube&utm_medium=yt_video&utm_campaign=yt_request_demo&utm_id=yt_request_demo


Master Power BI Slicers: Ensuring Data Accuracy and Performance

1. Understanding Power BI Slicers

Definition and functionality of Power BI slicers.

Importance in user interaction and data segmentation.

2. Importance of Functional Testing

Ensuring data accuracy and integrity through slicer functionality.

Optimizing performance by mitigating potential bottlenecks.

Enhancing user experience with seamless interaction capabilities.

Driving informed decisions with actionable business insights.

3. Challenges in Testing Power BI Slicers

Performance issues with large datasets.

Ensuring consistent data accuracy and integrity.

Managing complexities of slicer configurations.

4. Solution: Datagaps BI Validator

Automating testing processes for consistent and accurate results.

Simplifying testing of complex slicer configurations.

Benefits of automated testing for reliability and efficiency.

5. Conclusion

Importance of maintaining slicer accuracy and performance.

Role of Datagaps BI Validator in ensuring reliable Power BI reports.

Driving better business outcomes through enhanced data integrity and usability.
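One simple functional test for a slicer is to confirm that each selection returns only matching rows and that the per-selection totals add up to the unfiltered total. The Python sketch below models a slicer as a plain filter over hypothetical rows; it does not interact with Power BI and only shows the kind of assertion an automated test would make.

```python
def apply_slicer(rows, field, selected):
    """Model a slicer as a filter that keeps rows whose field is selected."""
    return [row for row in rows if row[field] in selected]

def slices_add_up(rows, field, measure):
    """Check that totals for each slicer value sum to the unfiltered total."""
    total = sum(row[measure] for row in rows)
    per_value = sum(
        sum(row[measure] for row in apply_slicer(rows, field, {value}))
        for value in {row[field] for row in rows}
    )
    return total == per_value

# Hypothetical report data.
rows = [
    {"category": "Bikes", "sales": 400},
    {"category": "Helmets", "sales": 150},
    {"category": "Bikes", "sales": 250},
]

bikes_only = apply_slicer(rows, "category", {"Bikes"})
print(all(row["category"] == "Bikes" for row in bikes_only))
print(slices_add_up(rows, "category", "sales"))
```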

Key Takeaways:

Automate slicer testing for reliability and efficiency.

Resolve performance bottlenecks in Power BI reports.

Simplify testing of intricate slicer configurations with automated solutions.

Call to Action: Explore how Datagaps BI Validator can streamline your Power BI slicer testing. Ensure your reports deliver accurate insights and optimal performance with our automated testing solutions. Contact us today to learn more.


#DataOpsSuiteBIValidator

Automate Tableau Regression Testing with Datagaps BI Validator

Introduction

In today's data-driven world, the accuracy and reliability of business intelligence (BI) reports are paramount. As Tableau gains prominence for visualizing data, ensuring the integrity of these reports through effective regression testing becomes increasingly critical.

Challenges of Tableau Regression Testing

Tableau users often grapple with several challenges during regression testing. Issues such as handling complex data volumes, managing frequent updates, ensuring visualization accuracy, and maintaining consistent performance metrics pose significant hurdles.

Datagaps BI Validator: Your Solution

Enter Datagaps BI Validator—a robust tool tailored to streamline and enhance Tableau regression testing processes. From data comparison and UI consistency checks to comprehensive validation across multiple environments, BI Validator empowers organizations to maintain data accuracy effortlessly.

Key Features and Benefits

Automated Comparison: Automatically compare Tableau worksheet data and UI elements to detect discrepancies.

Detailed Reporting: Generate comprehensive reports with visual indicators to pinpoint changes between versions.

Integration and Automation: Seamlessly integrate with CI/CD pipelines for continuous testing and validation.

Comprehensive Validation: Validate data integrity, calculations, and visualizations across different Tableau environments.

Proactive Issue Detection: Receive alerts and notifications to promptly address discrepancies, ensuring data quality.

Expertise and Support: Leverage Datagaps' expertise in BI validation for unmatched accuracy and reliability.

Conclusion

Datagaps BI Validator empowers organizations to conquer Tableau regression testing challenges efficiently. By automating processes and providing actionable insights, BI Validator ensures that Tableau reports are always accurate, reliable, and up-to-date. Take charge of your data integrity with Datagaps BI Validator today.

Referred by Datagaps

#DataOpsSuiteBIValidator

Request a demo today

Demo: https://www.datagaps.com/request-a-demo/#utm_source=youtube&utm_medium=yt_video&utm_campaign=yt_request_demo&utm_id=yt_request_demo

#BI #PowerBI #BIValidator #Tableau #Datagaps


Transform Your Power BI Reports with Datagaps BI Validator

Are you ready to take your Power BI reports to the next level? Say goodbye to manual testing and embrace the power of automation with Datagaps BI Validator. Our detailed product explainer video shows you how to automate and optimize your Power BI report testing processes, ensuring top-notch performance and data quality.

Key Benefits of Power BI Testing Automation with BI Validator:

Automated Testing: Eliminate time-consuming manual testing processes.

Enhanced Accuracy: Ensure your Power BI reports are reliable and error-free.

Efficiency: Optimize report performance and resource allocation.

Comprehensive Validation: Seamlessly cover functional, regression, performance, and stress testing.

User-Friendly: No-code interface makes it easy for anyone to use.

Why Choose Datagaps BI Validator?

Integration Capabilities: Easily integrate with various data sources to streamline your workflows.

Real-Time Insights: Gain valuable insights quickly, enhancing decision-making processes.

Cost-Effective: Reduce operational costs by automating repetitive tasks.

Watch our video to learn how Datagaps BI Validator can revolutionize your Power BI report testing. Ensure accuracy, efficiency, and top-notch performance in your data analytics with our innovative automation tool. Unlock the potential of your Power BI reports today!

Youtube link: https://www.youtube.com/watch?v=LUGwPpy1T5k



The blog "Power-BI Precision in Healthcare Data Quality with Datagaps BI Validator" explores how Power BI, together with Datagaps BI Validator, addresses the critical need for high data quality in the healthcare industry, particularly in the healthcare insurance sector. It highlights the importance of data accuracy and integrity in healthcare, where the stakes include financial outcomes, patient safety, and treatment effectiveness. It also sheds light on the substantial costs of poor data management and emphasizes the need for reliable data in informed decision-making and efficient healthcare service provision.

The blog details how Power BI aids healthcare insurers by offering robust data integration, visualization, and analysis tools. It elaborates on specific applications such as claims analysis, customer insights, operational efficiency, risk management, regulatory compliance, and strategic planning. Datagaps BI Validator is introduced as a critical solution that enhances Power BI’s capabilities by ensuring data accuracy, consistency, and reliability, addressing key challenges like data privacy and security, complex data integration, and scalability.

Additionally, the blog identifies specific hurdles in using Power BI for healthcare insurance, including data privacy concerns, the complexity of data integration, and the need for real-time data analysis. It further discusses the broader problem of poor data collection practices in healthcare and outlines the risks of low data quality, such as misdiagnoses, billing errors, and regulatory non-compliance.

In conclusion, Datagaps BI Validator is presented as an invaluable tool for overcoming these challenges. It supports healthcare insurance companies by automating BI report validation, enhancing data security, improving user adoption, and ensuring regulatory compliance. The blog emphasizes the validator's role in facilitating accurate and reliable data analytics, ultimately contributing to more effective insurance fraud detection and predictive analytics in healthcare.



Leading The Way in ETL Testing: Proven Strategies with ETL Validator

In data management, maintaining the accuracy and reliability of information is paramount for informed decision-making. ETL (Extract, Transform, Load) testing plays a pivotal role in safeguarding data integrity throughout its lifecycle. Datagaps' ETL Validator emerges as a game-changer in this domain, offering notable efficiency and cost-saving benefits. For instance, a leading French personal care company saw significant reductions in migration testing time and overall Total Cost of Ownership (TCO) after adopting it.

This blog delves into the core practices of ETL testing, delineating its importance in ensuring data fidelity from extraction to loading. While ETL focuses on data processing, ETL testing verifies this data's accuracy and completeness. It encompasses numerous techniques such as data completeness, correctness, performance, metadata, anomaly testing, and validation, each playing a crucial role in guaranteeing data reliability.
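Two of the techniques named above, completeness and correctness testing, can be sketched in a few lines. This is a minimal illustration over in-memory rows, not ETL Validator's method; the transformation rule `to_target`, the column names, and the sample data are all assumptions made up for the example.

```python
def check_completeness(source_rows, target_rows, key):
    """Return source keys that never arrived in the target."""
    target_keys = {row[key] for row in target_rows}
    return [row[key] for row in source_rows if row[key] not in target_keys]


def check_correctness(source_rows, target_rows, key, transform):
    """Return keys whose loaded row differs from the transformed source row."""
    target_by_key = {row[key]: row for row in target_rows}
    mismatches = []
    for row in source_rows:
        loaded = target_by_key.get(row[key])
        if loaded is not None and loaded != transform(row):
            mismatches.append(row[key])
    return mismatches


def to_target(row):
    """Hypothetical transformation rule: trim names, convert cents to dollars."""
    return {
        "id": row["id"],
        "name": row["name"].strip(),
        "amount": row["amount_cents"] / 100,
    }


source = [
    {"id": 1, "name": " Ada ", "amount_cents": 1250},
    {"id": 2, "name": "Grace", "amount_cents": 300},
    {"id": 3, "name": "Linus", "amount_cents": 990},
]
target = [
    {"id": 1, "name": "Ada", "amount": 12.5},
    {"id": 2, "name": "Grace", "amount": 30.0},  # deliberately wrong: should be 3.0
]

missing_ids = check_completeness(source, target, "id")
wrong_ids = check_correctness(source, target, "id", to_target)
print("missing:", missing_ids, "incorrect:", wrong_ids)
```

Keeping the two checks separate means a row that never loaded is reported once, as a completeness failure, rather than also showing up as a correctness failure.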

The ETL testing process comprises phases like test planning, design, execution, and closure, all aimed at meticulously assessing data integrity and system performance. A comprehensive ETL testing checklist ensures thorough validation, covering data transformation, integrity, volume verification, error logging, and validation.

The business impact of effective ETL testing cannot be overstated, as it mitigates risks, boosts productivity, and ensures data-driven decisions are based on clean, reliable data. Datagaps' ETL Validator emerges as a key player in this landscape, offering automated data validation, comprehensive test coverage, pre-built test cases, metadata comparison, performance testing, seamless integration with CI/CD pipelines, enhanced reporting, and regulatory compliance.

In conclusion, ETL testing serves as a linchpin in a successful data management strategy, enabling organizations to harness the full potential of their data assets. By embracing advanced ETL testing tools and methodologies, enterprises can enhance operational efficiency, mitigate risks, and confidently drive business growth.


The Era of Data-Driven Decision Making with Data Reconciliation

Unveil the future of data management with Datagaps' DataOps Suite! 🚀 Dive into our latest blog to explore the transformative power of data reconciliation in today's data-driven world. Discover how automation and advanced tools are reshaping data integrity and consistency for strategic decision-making. 🔍💡

HERE IS THE BLOG

#DataReconciliation#DataIntegrity#DataOps#DataTestingAutomation#PowerBI#ETL#DataDrivenDecisionMaking


"Mastering ETL Validation: Ensuring Data Integrity in Your Processes"

Introduction: In the dynamic landscape of data management, Extract, Transform, Load (ETL) processes play a pivotal role in ensuring that data moves seamlessly from source to destination. However, the reliability of these processes heavily depends on effective validation mechanisms. This blog explores the importance of ETL validation and strategies to master this critical aspect of data integration.

The Essence of ETL Validation

Understanding ETL: A brief overview of ETL processes and their significance in data management.

The Need for Validation: Discussing the challenges and risks associated with inaccurate or incomplete data during ETL.

Key Components of ETL Validation

Data Quality Checks:

Exploring techniques for assessing the quality of extracted data. Importance of addressing anomalies, inconsistencies, and missing values.

Schema Validations:

Ensuring data adheres to predefined structures and formats. Highlighting the role of schema validations in maintaining data integrity.

Business Rule Validations:

Defining and enforcing business rules to align data with organizational requirements. Case studies illustrating the impact of adhering to or neglecting business rules.
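To make the difference between schema validation and business rule validation concrete, here is a small sketch. The schema, the rules, and the record fields (`order_id`, `customer`, `total`) are hypothetical and chosen only for illustration; a real pipeline would take them from its own data contracts.

```python
# Hypothetical schema: field name -> required Python type.
EXPECTED_SCHEMA = {"order_id": int, "customer": str, "total": float}

# Hypothetical business rules: description -> predicate over a record.
BUSINESS_RULES = {
    "total must be non-negative": lambda r: r["total"] >= 0,
    "customer must not be blank": lambda r: r["customer"].strip() != "",
}


def validate_schema(record, schema):
    """Check that a record has exactly the expected fields with the expected types."""
    errors = []
    for field, expected_type in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            actual = type(record[field]).__name__
            errors.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    for field in record:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return errors


def validate_rules(record, rules):
    """Return the descriptions of every business rule the record violates."""
    return [name for name, rule in rules.items() if not rule(record)]


good = {"order_id": 1, "customer": "Acme", "total": 19.99}
bad_schema = {"order_id": "2", "customer": "Acme"}
bad_rules = {"order_id": 3, "customer": "  ", "total": -5.0}

schema_errors = validate_schema(bad_schema, EXPECTED_SCHEMA)
rule_errors = validate_rules(bad_rules, BUSINESS_RULES)
print(schema_errors)
print(rule_errors)
```

Running the schema check first is a deliberate ordering: business rules can then assume every field exists and has the right type.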

Strategies for Effective ETL Validation

Automated Testing:

Emphasizing the role of automated testing in enhancing the efficiency and accuracy of ETL validation. Overview of popular testing frameworks and tools.

Continuous Monitoring:

Discussing the importance of ongoing monitoring to identify issues in real-time. Implementing alerts and notifications for proactive issue resolution.

Documentation Best Practices:

Stressing the significance of comprehensive documentation for ETL processes and validation rules. A guide on maintaining clear documentation for future reference and team collaboration.
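The monitoring-and-alerting idea can be sketched as a threshold check run on each incoming batch. The metric (null rate per column), the thresholds, and the function names below are assumptions chosen for illustration; a production setup would send the messages to whatever notification channel the team already uses.

```python
def null_rate(rows, column):
    """Fraction of rows where the column is missing or None."""
    if not rows:
        return 0.0
    nulls = sum(1 for row in rows if row.get(column) is None)
    return nulls / len(rows)


def evaluate_batch(rows, thresholds):
    """Return one alert message per column whose null rate exceeds its limit."""
    alerts = []
    for column, limit in thresholds.items():
        rate = null_rate(rows, column)
        if rate > limit:
            alerts.append(f"ALERT {column}: null rate {rate:.0%} exceeds {limit:.0%}")
    return alerts


# Hypothetical batch and per-column limits.
batch = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 3, "email": None},
    {"id": 4, "email": "d@example.com"},
]
thresholds = {"id": 0.0, "email": 0.25}

alerts = evaluate_batch(batch, thresholds)
for message in alerts:
    print(message)
```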

Real-world Challenges and Solutions

Addressing common challenges faced during ETL validation, such as data volume, data variety, and evolving business rules. Strategies and best practices to overcome these challenges based on industry experiences.

Conclusion: Mastering ETL validation is pivotal for organizations aiming to harness the power of accurate and reliable data. By implementing robust validation strategies and staying abreast of industry best practices, businesses can ensure the seamless flow of quality data through their ETL processes, ultimately contributing to informed decision-making and sustained success.


Automated ETL Testing

The Rise of Automated ETL Testing:

Traditionally, ETL testing has been a manual and resource-intensive process. However, with the increasing demands for agility, speed, and accuracy, automated ETL testing has emerged as a strategic solution. Automated testing involves the use of specialized tools and scripts to execute tests, validate results, and identify potential issues in the ETL process.
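A scripted ETL test of the kind described above might look like the following sketch. It uses Python's built-in `sqlite3` module with an in-memory database so it runs anywhere; the table names, the toy `run_etl` step, and the three checks are hypothetical and stand in for a real pipeline and its test suite.

```python
import sqlite3


def run_etl(conn):
    """Toy ETL step: upper-case country codes and convert cents to dollars."""
    conn.execute(
        "INSERT INTO target_orders (id, country, amount) "
        "SELECT id, UPPER(country), amount_cents / 100.0 FROM source_orders"
    )


def row_count(conn, table):
    """Count rows in a table (table name comes from this script, not user input)."""
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]


def run_checks(conn):
    """Execute the automated checks and return a name -> passed mapping."""
    results = {}
    results["row_counts_match"] = (
        row_count(conn, "source_orders") == row_count(conn, "target_orders")
    )
    src_total = conn.execute("SELECT SUM(amount_cents) FROM source_orders").fetchone()[0]
    tgt_total = conn.execute("SELECT SUM(amount) FROM target_orders").fetchone()[0]
    results["amount_totals_match"] = abs(src_total / 100.0 - tgt_total) < 1e-9
    bad = conn.execute(
        "SELECT COUNT(*) FROM target_orders WHERE country <> UPPER(country)"
    ).fetchone()[0]
    results["countries_upper_cased"] = bad == 0
    return results


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE source_orders (id INTEGER, country TEXT, amount_cents INTEGER)")
conn.execute("CREATE TABLE target_orders (id INTEGER, country TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO source_orders VALUES (?, ?, ?)",
    [(1, "us", 1250), (2, "fr", 300), (3, "De", 990)],
)

run_etl(conn)
results = run_checks(conn)
print(results)
```

Because the checks return named results instead of raising on the first failure, a single run reports every broken expectation, which is usually what a scheduled test job needs.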

Challenges in Automated ETL Testing:

Tool Selection: Choosing the right automation tool is crucial. Consider factors such as compatibility with ETL platforms, ease of use, and the ability to support a variety of test scenarios.

Script Maintenance: As ETL processes evolve, test scripts must be updated accordingly. Maintenance can become challenging without proper version control and documentation.

Data Quality: Automated testing is only as effective as the quality of the test data. Ensuring realistic and representative test data is crucial for meaningful results.

Complex Transformations: Some ETL processes involve intricate business rules and complex transformations. Creating accurate and maintainable automated tests for such scenarios requires careful consideration.

Conclusion:

Automated ETL testing is a transformative approach that empowers organizations to enhance the reliability and efficiency of their data pipelines. By adopting best practices, addressing challenges proactively, and leveraging the right tools, businesses can streamline their ETL testing processes, ensuring that data remains a trustworthy asset in the era of data-driven decision-making.
