We Need Actually Open AI Now More than Ever (Or: Why Leopold Aschenbrenner is Dangerously Wrong)

Based on recent meetings, it would appear that the national security establishment may share Leopold Aschenbrenner's view that the US needs to get to ASI first to help protect the world from Chinese hegemony. I believe firmly in protecting individual freedom and democracy. Building a secretive Manhattan Project-style ASI is, however, not the way to accomplish this. Instead we now need an Actually Open™ AI more than ever. We need ASIs (plural) to be developed in the open. With said development governed in the open. And with the research, data, and systems accessible to all humankind.

The safest number of ASIs is 0. The least safe number is 1. Our odds get better the more there are. I realize this runs counter to a lot of writing on the topic, but I believe it to be correct and will attempt to explain concisely why.

I admire the integrity of some of the people who advocate for stopping all development that could result in ASI and are morally compelled to do so as a matter of principle (similar to committed pacifists). This would, however, require magically getting past the pervasive incentive systems of capitalism and nationalism in one tall leap. Put differently, I have resigned myself to zero ASIs being out of reach for humanity.

Comparisons to our past ability to ban CFCs under the Montreal Protocol provide false hope. Those gases had limited economic upside (there are substitutes) and an obvious, massive downside (exposing everyone to terrifyingly higher levels of UV radiation). The climate crisis already shows how hard the task becomes when the threat seems just a bit more vague and further in the future. With ASI, however, we are dealing with the exact inverse: unlimited perceived upside and "dubious" risk. I am putting "dubious" in quotes because I very much believe in existential AI risk, but it has proven difficult to make this case to all but a small group of people.

To get a sense of just how big the perceived economic upside of ASI is, one need look no further than the billions being poured into OpenAI, Anthropic, and a few others. We are entering the bubble to end all bubbles because the prize at the end appears infinite. Scaling at inference time is utterly uneconomical at the moment based on energy cost alone. Don't get me wrong: it's amazing that it works, but it is not anywhere close to being paid for by current applications. Yet it is getting funded, to the tune of many billions. It's ASI or bust.

Now consider the national security argument. Aschenbrenner uses the analogy of the nuclear bomb race to support his view that the US must get there first, with some margin, to avoid a period of great instability and protect the world from a Chinese takeover. ASI will result in decisive military advantage, the argument goes. It's a bit akin to Earth's spaceships encountering far superior alien technology in The Three-Body Problem or, for those more inclined towards history (as Aschenbrenner apparently is), the trouncing of Iraqi forces in Operation Desert Storm.

But the analogy to nuclear weapons, or to other examples of military superiority, is deeply flawed for two reasons. First, weapons can only destroy, whereas ASI also has the potential to build. Second, ASI has failure modes that are completely unlike those of non-autonomous weapons systems. Let me illustrate how these differences matter using an example that Aschenbrenner likes to conjure up: ASI-designed swarms of billions of tiny drones. What in the world makes us think we could actually control this technology? Relying on the same ASI that designed the swarm to stop it is a bad idea for obvious reasons (the fox in charge of the hen house). And so our best hope is to have other ASIs around that build defenses or hack into the first ASI to disable it. Importantly, it turns out that it doesn't matter whether the other ASIs are aligned with humans in some meaningful way, as long as they successfully foil the first one.

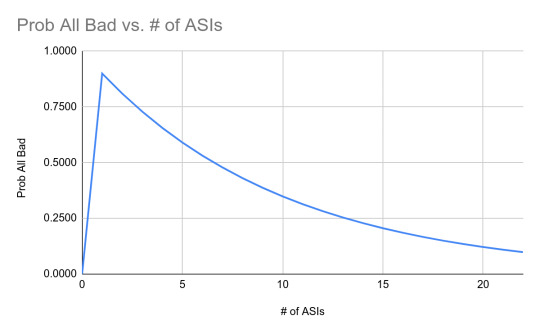

Why go all the way to advocating a truly open effort? Why not just build a couple of Manhattan Projects, say a US one and a European one? Whether this would make a big difference depends a lot on one's belief about the likelihood of an ASI being helpful in a given situation. Take the swarm example again. If you think that another ASI would be 90% likely to stop the swarm, then you might take comfort in small numbers. If, on the other hand, you think it is only 10% likely and you want a 90% probability of at least one succeeding, you need 22 (!) ASIs. The chart above graphs the probability of all ASIs being bad (not helpful) against the number of ASIs under these assumptions.
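The 22 figure follows from a short calculation, under the simplifying assumption (implicit in the numbers above, and an assumption for illustration only) that each ASI's chance of stopping the swarm is independent of the others. The probability that all n fail is (1 − p)^n, so we want the smallest n with 1 − (1 − p)^n ≥ 0.9. A minimal Python sketch; the function names are hypothetical:

```python
def p_all_unhelpful(p_help: float, n: int) -> float:
    """Probability that none of n ASIs helps, ASSUMING each one
    independently succeeds with probability p_help."""
    return (1.0 - p_help) ** n


def min_asis_needed(p_help: float, target: float) -> int:
    """Smallest n such that P(at least one of n ASIs helps) >= target."""
    if not 0.0 < p_help <= 1.0:
        raise ValueError("p_help must be in (0, 1]")
    if not 0.0 <= target < 1.0:
        raise ValueError("target must be in [0, 1)")
    n = 1
    # Add ASIs one at a time until the chance that all of them fail
    # is at most 1 - target.
    while 1.0 - p_all_unhelpful(p_help, n) < target:
        n += 1
    return n


if __name__ == "__main__":
    for p in (0.9, 0.1):
        print(f"p_help={p}: {min_asis_needed(p, 0.9)} ASI(s) for a 90% chance")
    # A few points on the curve for p_help = 0.1 (probability all fail).
    for n in (1, 2, 5, 10, 22):
        print(n, round(p_all_unhelpful(0.1, n), 3))
```

With p = 0.1, 21 ASIs still leave roughly an 11% chance that none helps (0.9^21 ≈ 0.109), while 22 bring it just under 10% (0.9^22 ≈ 0.098). If the ASIs' failures were correlated rather than independent, more of them would be needed for the same odds.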

And so here we have the core argument for why one ASI is the most dangerous of all the scenarios. Which is of course exactly the scenario that Aschenbrenner wants to steer us towards by enclosing the world's knowledge and turning the search for ASI into a Manhattan Project. Aschenbrenner is not just wrong, he is dangerously wrong.

People have raised two counterarguments to the approach of building many ASIs, including open ones.

First, there is the question of risk along the way. What if there are many open models and they allow biohackers to create superweapons in their garages? That's absolutely a valid risk, and I have written before about a key way of mitigating it. But here again, unless you believe the number of such models could be held to zero, more models also mean more ways of early detection, more ways of looking for a counteragent or cure, etc. And because we already know today what some of the biggest biorisk vectors are, we can engage in ex-ante defensive development. Somewhat by analogy to what happened during COVID: would you rather rely on a single player or have multiple shots on goal? It is highly illustrative here to compare China's disastrous approach to the US's Operation Warp Speed.

Second, there is the view that battling ASIs will simply mean a hellscape for humanity, a Mothra vs. Godzilla battle. Of course there is no way to rule that out, but multiple ASIs ramping up around the same time would dramatically reduce the resources any one of them can command. And the set of outcomes also includes ones where they simply frustrate each other's attempts at domination in ways that are highly entertaining to them but turn out to be harmless for the rest of the world.

Zero ASIs are unachievable. One ASI is extremely dangerous. We must let many ASIs bloom. And the best way to do so is to let everyone contribute, fork, etc. As a parting thought: ASIs that come out of open collaboration between humans and machines would at least be exposed to a positive model for the future in their origin, whereas an ASI covertly hatched for world domination, even in the name of good, might be more inclined to view that as its own manifest destiny.

I am planning to elaborate the arguments sketched here. So please fire away with suggestions and criticisms as well as links to others making compelling arguments for or against Aschenbrenner's one ASI to rule them all.

Moderation in Social Networks

First, Pavel Durov, the co-founder and CEO of Telegram, was arrested in France, in part due to a failure to comply with moderation requests by the French government. Now we have Brazil banning X/Twitter from the country entirely, also claiming a failure to moderate.

How much moderation should there be on social networks? What are the mechanisms for moderation? Who should be liable for what?

The dialog on answering these questions about moderation is broken because the most powerful actors are motivated primarily by their own interests.

Politicians and governments want to gain back control of the narrative. As Martin Gurri analyzed so well in Revolt of the Public, they resent their loss of the ability to shape public opinion. Like many elites they feel that they know what's right and treat the people as a stupid “basket of deplorables.”

Platform owners want to control the user experience to maximize profits. They want to be protected from liability and fail to acknowledge the extraordinary impact of features such as trending topics, recommended accounts, and timeline/feed selection on people's lives and on societies.

The dialog is also made hard by a lack of imagination that keeps us trapped in incremental changes. Too many people seem to believe that what we have today is more or less the best we will get. That has us bogged down in a trench war of incremental proposals. Big and bold proposals are quickly dismissed as unrealistic.

Finally, the dialog is complicated by deep confusion around freedom of speech. This arises from ignoring, possibly willfully, the reasons for and implications of freedom of speech for individuals and societies.

In keeping with my preference for a first principles approach I am going to start with the philosophical underpinnings of freedom of speech and then propose and evaluate concrete regulatory ideas based on those.

We can approach freedom of speech as a fundamental human right. I am human, I have a voice, therefore I have a right to speak.

We can also approach freedom of speech as an instrument for progress. Incumbents in power, whether companies, governments, or religions, don’t like change. Censoring speech keeps new ideas down. The result of suppressed speech is stasis, which ultimately results in decline because there are always problems that need to be solved (such as being in a low energy trap).

But both approaches also imply some limits to free speech.

You cannot use your right to speech to take away the human rights of someone else, for example by calling for their murder.

Society must avoid chaos, such as runaway criminality, massive riots, or in the extreme civil war. Chaos also impedes progress because it destroys the physical, social, and intellectual means of progress (from eroding trust to damaging physical infrastructure).

With these underpinnings we are looking for policies on moderation in social networks that honor a fundamental right but recognize its limitations and help keep society on a path of progress between stasis and chaos. My own proposals for how to accomplish this are bold because I don’t believe that incremental changes will be sufficient. The following applies to open social networks such as X/Twitter. A semi-closed social network such as Telegram where most of the activity takes place in invite-only groups poses additional challenges (I plan to write about this in a follow-up post).

First, banning human network participants entirely should be hard for a network operator and even for government. This follows from the fundamental human rights perspective. It is the modern version of ostracism, but unlike banishing someone from a single city it potentially excludes them from a global discourse. Banning a human user should either require a court order or be the result of a “Community Notes” type system (obviously to make this possible we need some kind of “proof of humanity” system which we will need in any case for lots of other things, such as online government services, and a “proof of citizenship” could be a good start on this – if properly implemented this will support pseudonymous accounts).

Second, networks must provide extensive tools for facilitating moderation by participants. This includes providing full API access to allow third-party clients, supporting account identity and post authorship assertions through digital signatures to minimize impersonation, and implementing at least one "Community Notes"-like system for attaching information to content. All of this is to enable as much decentralized avoidance of chaos as possible, starting with maintaining a high level of trust in the source and quality of content.

Third, clients must not display content if that content has been found to violate a law either through a “Community Notes” process or by a court. This should also allow for injunctive relief if that has been ordered by a court. Clients must, however, display a placeholder where that content would have been, with a link to the reason (ideally the decision) on the basis of which it was removed. This will show the extent to which court-ordered content removal is taking place.

What about liability? Social networks and third-party clients that meet the above criteria should not be liable for the content of posts. Neither government nor participants should be able to sue a compliant operator over content.

Social networks should, however, be liable for their owned and operated recommender algorithms, such as trending topics, recommended accounts, algorithmic feeds, etc. Until recently social networks were successfully claiming in court that their algorithms are covered by Section 230, which I believe was an overly broad reading of the law. It is interesting to see that a court just decided that TikTok is liable for suggestions surfaced by its algorithm to a young girl that resulted in her death. I have an idea around viewpoint diversity that should provide a safe harbor and will write about that in a separate post (related to my ideas around an "opposing view" reader and also some of the ways in which Community Notes works).

Getting the question of moderation on social networks right is of utmost importance to preserving progress while avoiding chaos. For those who have been following the development of new decentralized social networks, such as Farcaster and Nostr, some of the ideas above will look familiar. The US should be a global leader here given our long history of extensive freedom of speech.

Changing My Approach to Meetings to Take Back Control of My Attention

I let meetings take over my life. For reasons that I might get into some other time I said yes to way too many meetings. My schedule became ever more packed, often running back-to-back for entire days. It became harder and harder to find time to read, think, and write. I also had virtually no time to deal with emergencies when those came up. My attention was no longer mine to direct. It had been hijacked by meetings.

Having had a break from meetings on a six-week voyage across the Atlantic, I will radically change my approach to meetings going forward. Of course I will still participate in board meetings and other group meetings. But I will dramatically cut down on one-on-one meetings both in terms of number and duration. Outside of a crisis situation I will no longer schedule standing meetings. And I will restrict the meetings to specific times, blocking out large chunks of time on my calendar for reading, thinking, and writing.

Broadly my meetings fall into three categories: information/decision, emotion, idea generation.

A large fraction of information/decision meetings is sadly a waste of time. They lack a clear objective and often amount to a recitation of somewhat random bits of information. Going forward I will ask for a lot more preparation in long-form writing. Sometimes the act of writing will obviate the need for such a meeting entirely as the decision will become clear. Often writing will significantly reduce the meeting time by focusing on the real substance. Also the only truly important such meetings are those where a type 1 decision needs to be made: something that cannot easily be reversed.

A category of meeting that can matter greatly is when a lot of emotion is in play that cannot be easily expressed or processed in writing. I am happy to take such meetings because I know how lonely being a founder/CEO can be. Ultimately though these meetings rarely make a long term difference beyond providing an immediate outlet for frustration or receiving some consolation. The reason is that it’s hard and maybe impossible for founders/CEOs to be vulnerable with an investor, which is what would be required to really process an emotionally challenging situation. This is why having a coach or therapist is so incredibly important and I encourage every founder/CEO to have one.

Finally, a great reason for a meeting is generative riffing. This works best in person and when both sides are well prepared. It is a lot like musicians improvising: it only produces great music if they know how to play their instruments and how to give and take. I will set aside significant time for these kinds of meetings because they are often the source of something truly new (it also happens to be what we do a lot at USV internally).

I am excited to regain more control over my attention. I will post an update later this year on how this new approach is working out.

Lessons from a Voyage

I have just returned from an exciting voyage: crossing the Atlantic on a sailboat. You can find updates from along the way on the J44 Frolic website, Twitter/X and Instagram accounts. In this post I am gathering up my lessons learned. Some of these are just about sailing, in particular passage making, and some are broadly applicable life lessons.

Offshore sailing is intense. I always had great respect for offshore sailors, and in particular solo sailors, and this voyage has only deepened that. To be successful requires lots of different skills: navigation, sail trim, anchoring/docking, mechanical, plumbing, electrical (AC and DC), fishing, cooking. Not everyone on board needs all of these, but it is good to have all of them covered by the crew, ideally with redundancy. If you want to follow exciting single-handed racing, I highly recommend the upcoming Vendée Globe race. For each of these skills, experience makes a huge difference. Going into this adventure there was a lot that I understood theoretically but had never done (I had zero offshore experience). So I was thrilled to do this with my friend HL DeVore, who has extensive offshore experience, including two prior Atlantic crossings.

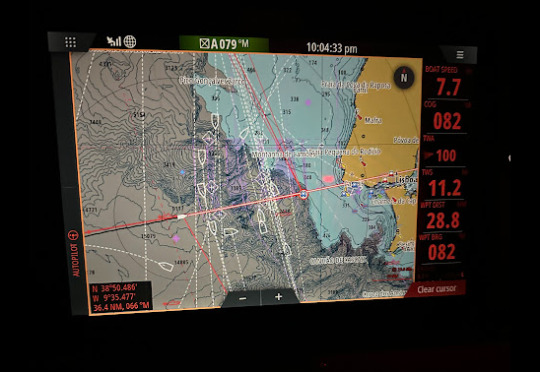

Technology has made passage making much easier. The voyage also increased my respect for what it took to cross the oceans in the past with minimal technology. We had access, via Starlink, to up-to-date weather information. We had redundant chart systems, both on board and on our phones, so we knew at all times where we were and how quickly we were making progress. We had a fantastic autopilot that was able to steer accurately even in fairly confused sea states. We were able to make fresh water on demand with an advanced filtration and desalination system. We had fresh food in a fridge and frozen food, including ice cream (!), in a freezer. We had an electric kettle for fast water heating. An ice maker to add ice cubes to our water. A hot pot for making rice and other dishes. You don't have to go very far back to get to a time when none of this was available. And yet people crossed the oceans routinely.

Clear and non-defensive communication is essential. Everything on a boat has precise terms to identify what it is and what action is supposed to be taken. It is essential to learn this language to be able to communicate quickly and precisely. There are some areas where different sailors will use different terms, and so it's good to converge on terminology for the boat. Beyond precision, communication also has to be non-defensive. Everybody on board can speak up at any moment if they see or hear something that others should know. And when someone speaks to you, you always acknowledge that you have heard and understood by saying "copy." For example, you might say "small craft 5 boat lengths out at 2 o'clock" and the helm will acknowledge with "copy" even if they have already seen it. So much miscommunication can be avoided simply by acknowledging what has been said.

Alertness is crucial. Always be on the lookout for things that look or sound strange. A line hanging slack that is normally tight. Or a clanging noise that wasn't there before. All of this requires a high degree of alertness and also intimate knowledge of the normal state of the boat. For example water flowing by the boat often makes a gurgling sound when you are below deck and close to the sides. But at one point I heard a gurgling sound coming from an unusual location. We investigated and it turned out that a hose had come loose and we were spilling fresh water.

Know your boat inside out. Some people may be tempted to buy a new boat for an adventure like this. But every boat handles differently. And new boats often have small issues that will only be detected while out at sea. We bought a used J44, a boat that my partner in this adventure has been sailing for over a decade. We then completely overhauled the boat and took it on several shakedown cruises and races, with the ultimate pre-passage shakedown being the Newport Bermuda race. You also want to know the details of systems performance. For example, the fuel consumption of a diesel engine varies non-linearly with RPM: on Frolic, fuel consumption goes up by 33 percent from 1800 to 2000 RPM.
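A quick check on why the 33 percent figure signals non-linearity: the RPM increase itself is only about 11 percent, so a linear relationship would predict roughly an 11 percent rise in fuel use. Purely as an illustrative assumption (not something measured on Frolic), if fuel consumption F followed a power law in RPM, the two numbers quoted would imply an exponent of roughly 2.7:

```latex
\frac{2000}{1800} \approx 1.111 \;\Rightarrow\; \text{RPM up } {\approx}\,11\%,
\qquad
F \propto \text{RPM}^{k} \;\Rightarrow\;
k = \frac{\ln 1.33}{\ln(2000/1800)} \approx \frac{0.285}{0.105} \approx 2.7
```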

Life is supported by systems. Spaceships are called ships for a reason: both are life support systems for humans. The ocean is not quite as uninhabitable for humans as space, but it is definitely not a place where we can live without technology. Our water maker was a great example of that. In its absence we would have needed to bring a large quantity of fresh water along and hope to catch rainwater (we would likely have had to ration water, which risks dehydration). Systems need redundancy in case they break and you can't fix them. We always had some water in jugs to tide us over, but we also had a mid-sized desalination system and even some handheld ones.

Observation matters. It is always important to complement what electronic systems spit out with what we can observe directly. Modern weather forecasts are amazingly accurate. But local weather can still differ significantly and sometimes forecasts will be wrong. It really helps to understand the weather at different scales, from the very large (the entire North Atlantic), to the region you are in (e.g. the Azores), down to the specific location. Another example of a system that helps tremendously but needs to be complemented with observation is the Automatic Identification System (AIS). It uses VHF to communicate the identities of boats, along with their location, heading, and speed. This makes it much easier to identify and avoid potential collisions. When we were approaching Portugal we had to cross through shipping lanes and it would have been much more challenging without AIS. But we did encounter two cargo ships that were not properly broadcasting. One was highly intermittent (and would only show up on our chart plotter occasionally), the other one not at all. We encountered the second one at night and had to fall back to visual observation and hailing the other vessel.

Slow is fast. Take the time to talk things through, such as a spinnaker takedown. This means making sure everyone understands their role and the sequence of tasks. Prepare everything that can be prepared, such as getting any lines properly coiled that need to run out or having sail ties at hand. Move deliberately around the boat. Something going wrong is not only dangerous but will usually take a lot more time to fix than getting it right in the first place. This is even true when something has already gone wrong. We did great overall but did wind up with one accidental jibe. The instinct might be to rush and fix that immediately, but it is much better to take the time to make sure everyone is OK and the boat is OK, and then make a plan to jibe back in a controlled fashion.

Preparation requires anticipation. We were incredibly well prepared. We had all the tools and parts on board we needed. We had all the medications on board to treat everyone on the crew for a variety of thankfully small issues. We had enough fuel to get through some of the light or no wind stretches. All of this was the case because we anticipated what the problems might be that we could encounter. You can't get stuff onto your boat once you are in the ocean. Our planned path via the Azores is notorious for people running out of fuel or food or both because of the extent of the Azores high.

Safety is not negotiable. Our default was to wear harnesses when on deck and to clip in. The only time we would not do that was during the daytime in an extremely calm sea state while motoring. All it takes is one wrong step for someone to go overboard. And at night or with six-foot waves it is not only hard to see someone, especially when the boat is flying along at 10 knots, but also tricky and dangerous to get back to them and get them back on board. Another key rule is "one hand for yourself and one hand for the boat." This is where the expression "single-handed" sailing comes from. I violated this rule once by trying to bring two bags below at the same time (coming back to the boat at the dock). I missed the last step down the companionway, twisted around, and fell backwards into the galley, hitting my head on the stove. I was lucky and came away with some scrapes and a bump on the back of my head, but it could easily have ended badly.

Good food makes everyone happy. We were fortunate to have my son Peter Wenger on board as a chef. He had pre-cooked some meals which we carried in our freezer. But he also made many wonderful things fresh, such as an incredible mahi-mahi ceviche from a fresh catch. And of course my personal favorite: French toast for breakfast.

Great on-shore support really helps. There is some fear associated with being far out on the ocean where help can be a long way away. And so it is wonderful to have strong on-shore support. Psychology really matters for well-being and stress. When I fell down, it was great to do a call with the med team and get a concussion assessment. We relied on Katie and Jessica from Regatta Rescue for medical support. They were terrific and had also put together an incredible med kit for us to bring along. We also got daily macro weather updates from Ken McKinley at Locusweather. Finally, it was fantastic to have emotional support from family, in particular from Susan "Gigi" Danziger, who also helped with logistics, such as getting to Newport, and joined us in Bermuda, for cruising in the Azores, and in Lisbon upon arrival.

All in all this passage was an incredible adventure, and I feel fortunate to have been able to experience it. I hope this will not be my last one and am already thinking about what bringing the boat back across the Atlantic might look like. In the meantime I am keeping my fingers crossed for a safe passage of Frolic through the Strait of Gibraltar into the Mediterranean.

Trump is Unfit to be President

Donald Trump is unfit to be the president of the United States of America. He is unfit to hold any public office. It boggles the mind that a number of investors and entrepreneurs have come out in support of Trump despite his obviously disqualifying character, which has been revealed time and again through words and actions. Sometimes there are hard choices to make when people are immensely talented but also deeply flawed. This is not one of those situations.

Many years ago while on vacation we met some contractors from Atlanta. I casually said something along the lines of “oh there must have been a lot of work with Trump building casinos.” They replied to the effect of “we wouldn’t be here on vacation if we had worked for Trump – he’s bankrupted some of our best friends.” I asked them to elaborate and they explained that Trump would make small upfront payments to contractors telling them they would get paid fully upon work completion and then simply stiff them when the work was done. I thought to myself that surely someone couldn’t get away with that repeatedly but would get sued instead. But that is exactly what Trump had done again and again and it has been well documented.

Stiffing contractors is emblematic of a man who all his life has gotten away with being a bad person, knowing that he was able to do so because of his wealth and the power that comes along with it. He has even bragged about it, such as in the infamous "grab them by the pussy" tape where he repeatedly says "you can do anything." Or when he said "I could stand in the middle of Fifth Avenue and shoot somebody and I wouldn't lose any voters."

Trump is a man who believes deeply that he is above everyone else and above the law. That this entitles him to do as he pleases. That might makes right. No surprise that such a man would do a 180 on issues if he believes it serves his purpose. And no surprise that he will not commit to accepting the results of the upcoming election, having tried all sorts of shenanigans to stay in power following his defeat in the prior one.

All of this is widely known. These aren’t alleged actions or rumored words. There are receipts all around and they would cover many pages of writing. How anyone can get past that and still believe that the issues they care about are worth supporting him is beyond me, even more so given that Trump has no record of keeping any commitments.

Trump is unfit for any public office. And Trump most certainly is unfit to be the President of the United States.

Everything Wrong with the Democrats

The US political system is in turmoil because the Republicans have been hijacked by Trump and the Democrats have become hypocritical technocrats. I have written a fair bit about Trump and will write more, but today's post is reserved for "everything" I find wrong with the Democrats. There is more, and more detail to each of these criticisms, so consider this an overview:

Democrats have embraced an intrusive nanny state that patronizes citizens. I blame books like Nudge and the theories behind it. Government should be about creating the conditions for progress, not about micromanaging citizens' lives.

Democrats have supported the surveillance state and forever wars coming out of 9/11. The recent extension of the Patriot Act is an utter disgrace. Abroad, Democrats have supported poorly thought through interventions (I am not for splendid isolation, just for not succumbing to the neocon hawks).

Democrats are largely anti-innovation (sometimes inadvertently, sometimes on purpose). I am a firm believer in the need for agencies such as the SEC, the FDA, and the EPA, but they require tight mandates or they will stifle innovation. We urgently need to streamline how these agencies operate. Instead, Democrats have been championing overly complex regulation. Side note: the IRA and CHIPS Act will stimulate innovation to a degree, but they are a crude overcorrection.

Democrats are beholden to big banking. The financial crisis was an opportunity to restructure the financial sector and push back against financialization. Instead, we have wound up with even bigger banks and more powerful asset managers. The Democrats' rejection of crypto/web3 as a counterweight to concentration in the financial sector is a failure of imagination.

Democrats don't seem to understand how to regulate digital technology. They worry rightly about the market power of big corporations such as Google, Facebook and Amazon, but then go back to an industrial age toolbox, instead of coming up with digitally native regulation.

Democrats are wrong on immigration. Yes, we absolutely need immigration (I am an immigrant, and we get the job done). We should make legal immigration easy and illegal immigration hard. And back to the idea of conditions for progress: at a time when many people feel they are falling behind, public support for immigration will diminish. Also, no country will be able to sustain the pressure of growing immigration waves as the climate crisis unfolds.

Democrats have pursued a failed strategy on diversity and gender. Making a large section of the population feel bad will not get people to change their beliefs; it will just make them resent you and the people you are trying to help (I will write a blog post at some point about another approach that I have come across recently that seems much more promising). Thankfully this seems to be somewhat on the way out.

So yes. I can easily see a type of Republican candidate that I would vote for in a presidential election. This would be someone of strong character who has a real plan to tackle these problems by building a broad coalition. Alas, Trump is not that.

Going on a Voyage

When I was growing up in Germany, my parents and I would spend two weeks every summer at the Chiemsee to sail. We stayed at Gästehaus Grünäugel in Gstadt (no, that's not the resort in Switzerland). I learned how to sail from my Dad, who was self-taught. Thanks, Dad!

It was a ton of fun to be out on the water and I also built a deep association between sailing and vacation. What a great way to take one's mind off things! I wound up not sailing during college and for quite a few years after that. But then on a family vacation in the BVI I took a Hobie Cat off the beach and was immediately hooked again.

Susan and I were living in Scarsdale at the time, which is only 20 minutes from Larchmont and the Long Island Sound. We also discovered that friends of ours belonged to the Larchmont Yacht Club. We took the plunge and bought a J100 (admittedly a J100 was the only boat we test sailed and I may or may not have loved it so much that I charged ahead and bought one).

We have spent many wonderful moments on Galatrona since. Sailing on the Sound with Manhattan in the background is delightful. We have also brought her out to Montauk a few times, where there is always wind. With every year of sailing I also remembered how as a child I had devoured the Horatio Hornblower series of books. The adventure of being at sea and braving storms seemed ever so exciting. I started following various ocean races online (the New York Vendée is on right now). And out of all this came an idea: what about an Atlantic crossing?

For a while this seemed like a bucket list item that I would probably postpone forever. But then one day our son Peter brought up that he would love to do this together with me. We mentioned it to my friend HL, who has been my sailing mentor for many years. And then all of a sudden a plan came together. We would buy a boat, race Newport Bermuda and then go on from there across the Atlantic. And that's exactly what we are now doing.

A few words about the boat. Frolic is a J44. It is hull #4, built in 1989. Pretty much everything else on the boat has been rebuilt over the last year starting with a brand new mast. J44s are cruiser-racers that perform extremely well under a wide range of conditions from light to heavy air. We have been trialing the boat by participating in a couple of early season races, including the EDLU and Block Island.

Now the big voyage is coming up! We are starting with the Newport Bermuda race on June 21st. This will be a shakedown for the boat and, maybe as importantly, for the crew, in particular for Peter and me. While we have done a lot of sailing, it has all been in the Long Island Sound and near Montauk. So there is some chance that once we get to Bermuda we will simply not want to carry on.

For right now though, I couldn't be more excited to be going on a voyage. You can follow our adventure on Twitter, Instagram and our blog. Let me know what you want to hear about and I will try to post on that.

I Miss Writing Here

It's been a long time since I have written here. And I miss it. There has been a confluence of factors that have made it difficult.

First, there has been simply finding the time. I have been crazy busy at work with many portfolio companies going through some kind of crisis as part of the adjustments in the financing environment over the last couple of years. I have also been working through some personal issues that have provided an opportunity for growth but have been time-intensive (more on that some day, maybe). I have also been investing in staying fit by working out consistently, with strength training on Mondays, Wednesdays and Fridays and cardio on Tuesdays, Thursdays, and Saturdays. And finally I have been preparing for a transatlantic sail (more on that shortly).

Second, the world is continuing to descend back into tribalism. And it has been exhausting trying to maintain a high rung approach to topics amid an onslaught of low rung bullshit. Whether it is Israel-Gaza, the Climate Crisis or Artificial Intelligence, the online dialog is dominated by the loudest voices. Words have been rendered devoid of meaning and reduced to pledges of allegiance to a tribe. I start reading what people are saying and often wind up feeling isolated and exhausted. I don't belong to any of the tribes nor would I want to. But the effort required to maintain internally consistent and intellectually honest positions in such an environment is daunting. And it often seems futile.

But I don't give up easily. And I have started to miss writing here so much that I will figure out how to resume it. I am writing as much for myself as for anyone who may feel similarly distraught at the state of discourse.

Complex Regulation is Bad Regulation: We Need Simple Enduser Rights

Readers of this blog and/or my book know that I am pro regulation as a way of getting the best out of technological progress. One topic I have covered repeatedly over the years is the need to get past the app store lock-in. The European Digital Markets Act was supposed to accomplish this, but Apple gave it the middle finger by figuring out a way to comply with the letter of the law while going against its spirit.

We have gone down a path for many years now where regulation has become ever more complex. One argument would be that this is simply a reflection of the complexity of the world we live in. "A complex world requires complex laws" sounds reasonable. And yet it is fundamentally mistaken.

When faced with increasing complexity we need regulation that firmly ensconces basic principles. And we need to build a system of law that can effectively apply these principles. Otherwise all we are doing is making a complex world more complex. Complexity has of course been in the interest of large corporations which employ armies of lawyers to exploit it (and often help create and maintain complexity through lobbying). Tax codes around the world are a great example of this process.

So what are the principles I believe need to become law in order for us to have more "informational freedom"?

A right to API access

A right to install software

A right to third party support and repair

In return manufacturers of hardware and providers of software can void warranty and refuse support when these rights are exercised. In other words: endusers proceed at their own risk.

Why not give corporations the freedom to offer products any which way they want to? After all nobody is forced to buy an iPhone and they could buy an Android instead. This is a perfectly fine argument for highly competitive markets. For example, it would not make sense to require restaurants to sell you just the ingredients instead of the finished meal (you can go and buy ingredients from a store separately any time and cook yourself). But Apple has massive market power as can easily be seen by its extraordinary profitability.

So yes regulation is needed. Simple clear rights for endusers, who can delegate these rights to third parties they trust. We deserve more freedom over our devices and over the software we interact with. Too much control in the hands of a few large corporations is bad for innovation and ultimately bad for democracy.

The World After Capital: All Editions Go, Including Audio

My book, The World After Capital, has been available online and as hardcover for a couple of years now. I have had frequent requests for other editions and I am happy to report that they are now all available!

One of the biggest requests was for an audio version. So this past summer I recorded one which is now available on Audible.

Yes, of course I could have had AI do this, but I really wanted to read it myself. Both because I think there is more of a connection to me for listeners and also because I wanted to see how the book has held up. I am happy to report that I felt that it had become more relevant in the intervening years.

There is also a Kindle edition, as well as a paperback one (for those of you who like to travel light and/or crack the spine). If you have already read the book, please leave a review on Amazon; this helps with discoverability and I welcome the feedback.

In keeping with the spirit of the book, you can still read The World After Capital directly on the web and even download an ePub version for free (registration required).

If you are looking for holiday gift ideas: it's not too late to give The World After Capital as a present to friends and family ;)

PS Translations into other languages are coming!

AI Safety Between Scylla and Charybdis and an Unpopular Way Forward

I am unabashedly a technology optimist. For me, however, that means making choices for how we will get the best out of technology for the good of humanity, while limiting its negative effects. With technology becoming ever more powerful there is a huge premium on getting this right as the downsides now include existential risk.

Let me state upfront that I am super excited about progress in AI and what it can eventually do for humanity if we get this right. We could be building the capacity to turn Earth into a kind of garden of Eden, where we get out of the current low energy trap and live in a World After Capital.

At the same time there are serious ways of getting this wrong, which led me to write a few posts about AI risks earlier this year. Since then the AI safety debate has become more heated with a fair bit of low-rung tribalism thrown into the mix. To get a glimpse of this one merely needs to look at the wide range of reactions to the White House Executive Order on Safe, Secure and Trustworthy Development and Use of Artificial Intelligence. This post is my attempt to point out what I consider to be serious flaws in the thinking of two major camps on AI safety and to mention an unpopular way forward.

First, let’s talk about the “AI safety is for wimps” camp, which comes in two forms. One is the happy-go-lucky view represented by Marc Andreessen’s “Techno-Optimist Manifesto” and also his preceding Tweet thread. This view dismisses critics who dare to ask social or safety questions as luddites and shills.

So what’s the problem with this view? Dismissing AI risks doesn’t actually make them go away. And it is extremely clear that at this moment in time we are not really set up to deal with the problems. On the structural risk side we are already at super extended levels of income and wealth inequality. And the recent AI advances have already been shown to further accelerate this discrepancy.

On the existential risk side, there is recent work by Kevin Esvelt et al. showing how LLMs can broaden access to pandemic agents, and by Jeffrey Ladish et al. demonstrating how cheap it is to remove safety training from an open source model with published weights. This type of research clearly points out that as open source models become rapidly more powerful they can be leveraged for very bad things and that it continues to be super easy to strip away the safeguards that people claim can be built into open source models.

This is a real problem. And people like myself, who have strongly favored permissionless innovation, would do well to acknowledge it and figure out how to deal with it. I have a proposal for how to do that below.

But there is one intellectually consistent way to continue full steam ahead that is worth mentioning. Marc Andreessen cites Nick Land as an inspiration for his views. Land in Meltdown wrote the memorable line “Nothing human makes it out of the near-future”. Embracing AI as a path to a post-human future is the view embraced by the e/acc movement. Here AI risks aren’t so much dismissed as simply accepted as the cost of progress. My misgiving with this view is that I love humanity and believe we should do our utmost to preserve it (my next book which I have started to work on will have a lot more to say about this).

Second, let’s consider the “We need AI safety regulation now” camp, which again has two subtypes. One is “let regulated companies carry on” and the other is “stop everything now.” Again both of these have deep problems.

The idea that we can simply let companies carry on with some relatively mild regulation suffers from three major deficiencies. First, this has the risk of leading us down the path toward highly concentrated market power and we have seen the problems of this in tech again and again (it has been a long-standing topic on my blog). For AI, market power will be particularly pernicious because this technology will eventually power everything around us and so handing control to a few corporations is a bad idea. Second, the incentives of for-profit companies aren’t easily aligned with safety (and yes, I include OpenAI here even though it has in theory capped investor returns but also keeps raising money at ever higher valuations, so what’s the point?).

But there is an even deeper third deficiency of this approach and it is best illustrated by the second subtype which essentially wants to stop all progress. At its most extreme this is a Ted Kaczynski anti technology vision. The problem with this of course is that it requires equipping governments with extraordinary power to prevent open source / broadly accessible technology from being developed. And this is an incredible unacknowledged implication of much of the current pro-regulation camp.

Let me just give a couple of examples. It has long been argued that code is speech and hence protected by First Amendment rights. We can of course go back and revisit what protections should be applicable to “code as speech,” but the proponents of the “let regulated companies go ahead with closed source AI” don’t seem to acknowledge that they are effectively asking governments to suppress what can be published as open source (otherwise, why bother at all?). Over time government would have to regulate technology development ever harder to sustain this type of regulated approach. Faster chips? Government says who can buy them. New algorithms? Government says who can access them. And so on. Sure, we have done this in some areas before, such as nuclear bomb research, but these were narrow fields, whereas AI is a general purpose technology that affects all of computation.

So this is the conundrum. Dismissing AI safety (Scylla) only makes sense if you go full on post humanist because the risks are real. Calling for AI safety through oversight (Charybdis) doesn’t acknowledge that way too much government power is required to sustain this approach.

Is there an alternative option? Yes but it is highly unpopular and also hard to get to from here. In fact I believe we can only get there if we make lots of other changes, which together could take us from the Industrial Age to what I call the Knowledge Age. For more on that you can read my book The World After Capital.

For several years now I have argued that technological progress and privacy are incompatible. The reason for this is entropy, which means that our ability to destroy will always grow faster than our ability to (re)build. I gave a talk about it at the Stacks conference in Berlin in 2018 (funny side note: I spoke right after Edward Snowden gave a full throated argument for privacy) and you can read a fuller version of the argument in my book.

The only solution other than draconian government is to embrace a post privacy world. A world in which it can easily be discovered that you are building a super dangerous bioweapon in your basement before you have succeeded in releasing it. In this kind of world we can have technological progress but also safeguard humanity – in part by using aligned super intelligences to detect what is happening. And yes, I believe it is possible to create versions of AGI that have deep inner alignment with humanity that cannot easily be removed. Extremely hard yes, but possible (more on this in upcoming posts on an initiative in this direction).

Now you might argue that a post privacy world also requires extraordinary state power but that's not really the case. I grew up in a small community where if you didn't come out of your house for a day, the neighbors would check in to make sure you were OK. Observability does not require state power per se. Much of this can happen simply if more information is default public. And so regulation ought to aim at increased disclosure.

We are of course a long way away from a world where most information about us could be default public. It will require massive changes from where we are today to better protect people from the consequences of disclosure. And those changes would eventually have to happen everywhere that people can freely have access to powerful technology (with other places opting for draconian government control instead).

Given that the transition which I propose is hard and will take time, what do I believe we should do in the short run? I believe that a great starting point would be disclosure requirements covering training inputs, cost of training runs, and powered by (i.e. if you launch say a therapy service that uses AI you need to disclose which models). That along with mandatory API access could start to put some checks on market power. As for open source models I believe a temporary voluntary moratorium on massively larger more capable models is vastly preferable to any government ban. This has a chance of success because there are relatively few organizations in the world that have the resources to train the next generation of potentially open source models.

Most of all though we need to have a more intellectually honest conversation about risks and how to mitigate them without introducing even bigger problems. We cannot keep suggesting that these are simple questions and that people must pick a side and get on with it.

Weaponization of Bothsidesism

One tried and true tactic for suppressing opinions is to slap them with a disparaging label. This is currently happening in the Israel/Gaza conflict with the allegation of bothsidesism, which goes as follows: you have to pick a side, anything else is bothsidesism. Of course nobody likes to be accused of bothsidesism, which is clearly bad. But this is a completely wrong application of the concept. Some may be repeating this allegation unthinkingly, but others are using it as an intentional tactic.

Bothsidesism, aka false balance, is when you give equal airtime to obvious minority opinions on a well-established issue. The climate is a great example, where the fundamental physics, the models, and the observed data all point to a crisis. Giving equal airtime to people claiming there is nothing to see is irresponsible. To be clear, it would be equally dangerous to suppress any contravening views entirely. Science is all about falsifiability.

Now in a conflict, there are inherently two sides. That doesn't at all imply that you have to pick one of them. In plenty of conflicts both sides are wrong. Consider the case of the state prosecuting a dealer who sold tainted drugs that resulted in an overdose. The dealer is partially responsible because they should have known what they were selling. The state is also partially responsible because it should decriminalize drugs or regulate them in a way that makes safety possible for addicts. I do not need to pick a side between the dealer and the state.

I firmly believe that in the Israel/Gaza conflict both sides are wrong. To be more precise, the leaders on both sides are wrong and their people are suffering as a result. I do not have to pick a side and neither do you. Don't let yourself be pressured into picking a side via a rhetorical trick.

Israel/Gaza

I have not personally commented in public on the Israel/Gaza conflict until now (USV signed on to a statement). The suffering has been heartbreaking and the conflict is far from over. Beyond the carnage on the ground, the dialog online and in the street has been dominated by shouting. That makes it hard to want to speak up individually.

My own hesitation was driven by unacknowledged emotions: Guilt that I had not spoken out about the suffering of ordinary Palestinians in the past, despite having visited the West Bank. Fear that support for one side or the other would be construed as agreeing with all its past and current policies. And finally, shame that my thoughts on the matter appeared to me as muddled, inconsistent and possibly deeply wrong. I am grateful to everyone who engaged with me in personal conversations and critiqued some of what I was writing to wrestle down my thoughts over the last few weeks, especially my Jewish and Muslim friends, for whom this required additional emotional labor in an already difficult time.

Why speak out at all? Because the position I have arrived at represents a path that will be unpopular with some on both sides of this conflict. If people with views like mine don’t speak, then the dialog will be dominated by those with extremely one-sided views contributing to further polarization. So this is my attempt to help grow the space for discussion. If you don’t care about my opinion on this conflict, you don’t have to read it.

The following represents my current thinking on a possible path forward. As always that means it is subject to change despite being intentionally strongly worded.

Hamas is a terrorist organization. I am basing this assessment not only on the most recent attack against Israel but also on its history of violent suppression of Palestinian opposition. Hamas must be dismantled.

Israel’s current military operation has already resulted in excessive civilian casualties and must be replaced with a strategy that minimizes further Palestinian civilian casualties, even if that entails increased risk to Israeli troops (there is at least one proposal for how to do this being floated now). If there were a ceasefire-based approach to dismantling Hamas that would be even better and we should all figure out how that might work.

Immediate massive humanitarian relief is needed in southern Gaza. This must be explicitly temporary. The permanent displacement of Palestinians is not acceptable.

Israel must commit to clear territorial lines for both Gaza and the West Bank and stop its expansionist approach to the latter. This will require relocating some settlements to establish sensible borders. Governments need clear borders to operate with credibility, which applies also to any Palestinian government (and yes I would love to see humanity eventually transcend the concept of borders but that will take a lot of time).

A Marshall Plan-level commitment to a full reconstruction of Gaza must be made now. All nations should be called upon to join this effort. Reconstruction and constitution of a government should be supervised by a coalition that must include moderate Islamic countries. If none can be convinced to join such an effort, that would be good to know now for anyone genuinely wanting to achieve durable peace in the region.

I believe that an approach along these lines could end the current conflict and create the preconditions for lasting peace. Importantly it does not preclude democratically elected governments from eventually choosing to merge into a single state.

All of this may sound overly ambitious and unachievable. It certainly will be if we don’t try and instead choose more muddling through. It will require strong leadership and moral clarity here in the US. That is a tall order on which we have a long way to go. But here are two important starting points.

We must not tolerate antisemitism. As a German from Nürnberg I know all too well the dark places to which antisemitism has led time and time again. The threat of extinction for Jews is not hypothetical but historical. And it breaks my heart that my Jewish friends are removing mezuzahs from their doors. There is one important confusion we should get past if we genuinely want to make progress in the region. Israel is a democracy and deserves to be treated as such. Criticizing Israeli government policies isn’t antisemitic, just like criticizing the Biden administration isn’t anti-Christian, or criticizing the Modi government isn’t anti-Hindu. And yes, I believe that many of Israel’s historic policies towards Gaza and the West Bank were both cruel and ineffective. Some will argue that Israel is an ethnocracy and/or a colonizer. One can discuss potential implications of this for policy. But if what people really mean is that Israel should cease to exist then they should come out and say that and own it. I strongly disagree.

We must not tolerate Islamophobia. We also have to protect citizens who want to practice Islam. We must not treat them as potential terrorists or as terrorist supporters on the basis of their religion. How can we ask people to call out Hamas as a terrorist organization when we readily accept mass casualties among Muslims (not just in the region but also in other places, such as the Iraq war) while also not pushing back on people depicting Islam as an inherently hateful religion? And for those loudly claiming the Second Amendment, how about also supporting the First, including for Muslims? I have heard from several Muslim friends that they frequently feel treated as subhuman. And that too breaks my heart.

This post will likely upset some people on both sides of the conflict. There is nothing of substance that can be said that will make everyone happy. I am sure I am wrong about some things and there may be better approaches. If you have read something that you found particularly insightful, please point me to it. I am always open to learning and plan to engage with anyone who wants to have a good faith conversation aimed at achieving peace in the region.

We Need New Forms of Living Together

I was at a conference earlier this year where one of the topics was the fear of a population implosion. Some people are concerned that with birth rates declining in many parts of the world we might suddenly find ourselves without enough humans. Elon Musk has on several occasions declared population collapse the biggest risk to humanity (ahead of the climate crisis). I have two issues with this line of thinking. First, global population is still growing and some of those expressing concern are veiling a deep racism where they believe that areas with higher birth rates are inferior. Second, a combination of ongoing technological progress together with getting past peak population would be a fantastic outcome.

Still there are people who would like to have children but are not in fact having them. While some of this is driven by concern about where the world is headed, a lot of it is a function of the economics of having children. It's expensive to do so not just in dollar terms but also in time commitment. At the conference one person advanced the suggestion that the answer is we must bring back the extended family as a widely embraced structure. Grandparents, the argument goes, could help raise children and as an extra benefit this could help address the loneliness crisis for older people.

This idea of a return to the extended family neatly fits into a larger pattern of trying to solve our current problems by going back to an imagined better past. The current tradwife movement is another example of this. I say "imagined better past" because the narratives conveniently omit much of the actual reality of that past. My writing here on Continuations and in The World After Capital is aimed at a different idea: what can we learn from the past so that we can create a better future?

People living together has clear benefits. It allows for more efficient sharing of resources. And it provides company which is something humans thrive on. The question then becomes what forms can this take? Thankfully there is now a lot of new exploration happening. Friends of mine in Germany bought an abandoned village and have formed a new community there. The Supernuclear Substack documents a variety of new coliving groups, such as Radish in Oakland. Here is a post on how that has made it easier to have babies.

So much of our views of what constitutes a good way of living together is culturally determined. But it goes deeper than that because over time culture is reflected in the built environment which is quite difficult to change. Suburban single family homes are a great example of that, as are high-rise buildings in the city without common spaces. The currently high vacancy rates in office buildings may provide an opportunity to build some of these out in ways that are conducive to experimenting with new forms of coliving.

If you are working on an initiative to convert offices into dedicated space for coliving (or are simply aware of one), I would love to hear more about it.

Low Rung Tech Tribalism

Silicon Valley's tribal boosterism has been bad for tech and bad for the world.

I recently criticized Reddit for clamping down on third party clients. I pointed out that having raised a lot of money at a high valuation required the company to become more extractive in an attempt to produce a return for investors. Twitter had gone down the exact same path years earlier with bad results, where undermining the third party ecosystem ultimately resulted in lower growth and engagement for the network. This prompted an outburst from Paul Graham, who called it a "diss" and added that he "expected better from [me] in both the moral and intellectual departments."

Comments like the one by Paul are a perfect example of a low rung tribal approach to tech. In "What's Our Problem" Tim Urban introduces the concept of a vertical axis of debate which distinguishes between high rung (intellectual) and low rung (tribal) approaches. This axis is as important, if not more important, than the horizontal left versus right axis in politics or the entrepreneurship/markets versus government/regulation axis in tech. Progress ultimately depends on actually seeking the right answers and only the high rung approach does that.

Low rung tech boosterism again and again shows how tribal it is. There is a pervasive attitude of "you are either with us or you are against us." Criticism is called a "diss" and followed by a barely veiled insult. Paul has a long history of such low rung boosterism. This was true for criticism of other iconic companies such as Uber and Airbnb also. For example, at one point Paul tweeted that "Uber is so obviously a good thing that you can measure how corrupt cities are by how hard they try to suppress it."

Now it is obviously true that some cities opposed Uber because of corruption / regulatory capture by the local taxi industry. At the same time there were and are valid reasons to regulate ride hailing apps, including congestion and safety. A statement such as Paul's doesn't invite a discussion; instead it serves to suppress any criticism of Uber. After all, who wants to be seen as corrupt or being allied with corruption against something "obviously good"? Tellingly, Paul never replied to anyone who suggested that his statement was too extreme.

The net effect of this low rung tech tribalism is a sense that tech elites are insular and believe themselves to be above criticism, with no need to engage in debate. The latest example of this is Marc Andreessen's absolutist dismissal of any criticism or questions about the impacts of Artificial Intelligence on society. My tweet thread suggesting that Marc's arguments were overly broad and arrogant promptly earned me a block.

In this context I find myself frequently returning to Martin Gurri's excellent "Revolt of the Public." A key point that Gurri makes is that elites have done much to undermine their own credibility, a point also made in the earlier "Revolt of the Elites" by Christopher Lasch. When elites, who are obviously benefiting from a system, dismiss any criticism of that system as invalid or "Communist," they are abdicating their responsibility.

The cost of low rung tech boosterism isn't just a decline in public trust. It has also encouraged some founders' belief that they can be completely oblivious to the needs of their employees or their communities. If your investors and industry leaders tell you that you are doing great, no matter what, then clearly your employees or communities must be wrong and should be ignored. This has been directly harmful to the potential of these platforms, which in turn is bad for the world at large, given how heavily it is influenced by what happens on these platforms.

If you want to rise to the moral obligations of leadership, then you need to find the intellectual capacity to engage with criticism. That is the high rung path to progress. It turns out to be a particularly hard path for people who are extremely financially successful as they often allow themselves to be surrounded by sycophants both IRL and online.

PS A valid criticism of my original tweet about Reddit was that I shouldn't have mentioned anything from a pitch meeting. And I agree with that.

Artificial Intelligence Existential Risk Dilemmas

A few weeks back I wrote a series of blog posts about the risks from progress in Artificial Intelligence (AI). I specifically argued that we are facing not just structural risks, such as algorithmic bias, but also existential ones. There are three dilemmas in pushing the existential risk point at this moment.

First, there is the potential for a "boy who cried wolf" effect. If we push hard right now and (hopefully) nothing terrible happens, then existential risk from artificial intelligence will be all the more readily dismissed for years to come. This of course has been the fate of the climate community going back to the 1980s. With most of the heat to date from global warming having been absorbed by the oceans, it has felt like nothing much is happening, which has made it easier to disregard subsequent attempts to warn of the ongoing climate crisis.

Second, the discussion of existential risk is seen by some as a distraction from focusing on structural risks, such as algorithmic bias and increasing inequality. Existential risk should be the high-order bit, since we need to survive in order to have the opportunity to take care of structural risk. But if you believe that existential risk doesn't exist at all or can be ignored, then you will see any mention of it as a potentially intentional distraction from the issues you care about. This unfortunately has the effect that some AI experts who should be natural allies on existential risk wind up vigorously dismissing that threat.

Third, there is a legitimate concern that some of the leading companies, such as OpenAI, may be attempting to use existential risk in a classic "pulling up the ladder" move. How better to protect your perceived commercial advantage than to get governments to slow down potential competitors through regulation? This is of course a well-rehearsed strategy in tech. For example, Facebook famously didn't object to much of the privacy regulation because they realized that compliance would be much harder and more costly for smaller companies.

What is one to do in light of these dilemmas? We cannot simply be silent about existential risk. It is far too important for that. Being cognizant of the dilemmas should, however, inform our approach. We need to be measured, so that we can be steadfast, more like a marathon runner than a sprinter. This requires proactively acknowledging other risks and being mindful of anti-competitive moves. In this context I believe it is good to have some people, such as Eliezer Yudkowsky, take a vocally uncompromising position, because that helps stretch the Overton window to where it needs to be for addressing existential AI risk to be seen as sensible.

Power and Progress (Book Review)

A couple of weeks ago I participated in Creative Destruction Lab's (CDL) "Super Session" event in Toronto. It was an amazing convocation of CDL alumni from around the world, as well as new companies and mentors. The event kicked off with a two-hour summary and critique of the new book "Power and Progress" by Daron Acemoglu and Simon Johnson. There were eleven of us charged with summarizing and commenting on one chapter each, with Daron replying after every 3-4 speakers. This was the idea of Ajay Agrawal, who started CDL and is a professor of strategic management at the University of Toronto's Rotman School of Management. I was thrilled to see a book given a two-hour intensive treatment like this at a conference, as I believe books are one of humanity's signature accomplishments.

Power and Progress is an important book but also deeply problematic. As it turns out, the discussion format provided a good opportunity both for people to agree with the authors and to voice criticism.

Let me start with why the book is important. Acemoglu is a leading economist, and so it is a crucial step for that discipline to have the book explicitly acknowledge that the distribution of gains from technological innovation depends on the distribution of power in societies. It is ironic to see Marc Andreessen dismissing concerns about Artificial Intelligence (AI) by harping on about the "lump of labor" fallacy at just the time when economists are soundly distancing themselves from that overly facile position (see my reply thread here). Power and Progress is full of historic examples of when productivity innovations resulted in gains for a few elites while impoverishing the broader population. And we are not talking about a few years here but about many generations. The most memorable example of this is how agricultural innovation wound up resulting in richer churches building ever bigger cathedrals while the peasants were suffering more than before. It is worth reading the book for these examples alone.

As it turns out, I was tasked with summarizing Chapter 3, which discusses why some ideas find more popularity in society than others. The chapter makes some good points, such as persuasion being much more common in modern societies than outright coercion. The success of persuasion makes it harder to criticize the status quo because it feels as if people are voluntarily participating in it. The chapter also gives several examples of how, as individuals and societies, we tend to over-index on ideas coming from people who already have status and power, thus resulting in a self-reinforcing loop. There is, however, a curious absence of any mention of media, either mainstream or social (for this I strongly recommend Martin Gurri's "Revolt of the Public"). But the biggest oversight in the chapter is that the authors themselves are in positions of power and status, and thus their ideas will carry a lot of weight. This should have been explicitly acknowledged.

And that's exactly why the book is also problematic. The authors follow an incisive diagnosis with a whimper of a recommendation chapter. It feels almost tacked on, somewhat akin to the last chapter of Gurri's book, which similarly excels at analysis and falls dramatically short on solutions. What's particularly off is that "Power and Progress" embraces marginal changes, such as shifts in taxation, while dismissing more systematic changes, such as universal basic income (UBI). The book is over 500 pages long and there are exactly 2 pages on UBI, which dismiss it using arguments that are contradicted by lots of evidence from numerous trials in the US and around the world.

When I pressed this point, Acemoglu responded that they were just looking to open the discussion on what could be done to distribute the benefits more broadly. But the dismissal of more systematic change doesn't read at all like the beginning of a discussion but rather like the end of it. Ultimately, while moving the ball forward a lot relative to prior economic thinking on technology, the book may wind up playing an unfortunate role in keeping us trapped in incrementalism, precisely because Acemoglu is so well respected and his opinion thus carries a lot of weight.

In Chapter 3 the authors write how one can easily be in "... a vision trap. Once a vision becomes dominant, its shackles are difficult to throw off." They don't seem to recognize that they might be stuck in just such a vision trap themselves, where they cannot imagine a society in which people are much more profoundly free than today. This is all the more ironic in that they explicitly acknowledge that hunter gatherers had much more freedom than humanity has enjoyed in either the agrarian age or the industrial age. Why should our vision for AI not be a return to more freedom? Why keep people's attention trapped in the job loop?

The authors call for more democracy as a way of "avoiding the tyranny of narrow visions." I too am a big believer in more democracy. I just wish that the authors had taken a much more open approach to which ideas we should be considering as part of that.
