he/him. I like science. Formerly known as turing-complete-mammal. This is a side blog, and I follow from @turing-complete-eukaryote-2

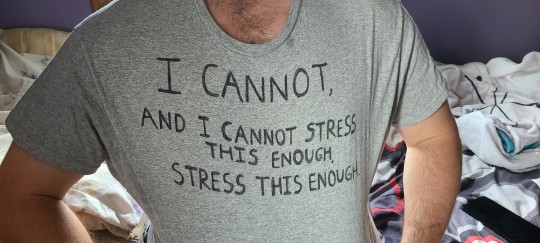

figured you'd all appreciate my friend's shirt

11K notes

I downloaded this high resolution picture of a grain of basmati rice from wikimedia commons (for personal reasons) and because it has inbuilt date data and stuff it disappeared deep into my downloads folder never to be seen again. I feel like I've dropped it down the back of my couch

2K notes

People are always saying this or that society has/had a completely sui generis understanding of gender and sexuality that doesn't map onto modern Western categories in the least, and when you look inside.jpg it's just

Older men in positions of power fucking younger men and boys

Lesbians who consider themselves sort of male

Desexed men (eunuchs, monks)

Transgender women

414 notes

of course you have blue curtains and subtext

12K notes

the speed of the early muslim conquests is so bonkers... and from such a poor region. I honestly kind of think they were hacking

16 notes

oh you're ethereally beautiful? i don't care. it should have been me

21 notes

Whenever I see the phrase 'read Marx' I think of what's probably the most belabored, groan-inducing pun in a joke I've ever heard: so there's this nudist colony for communists, and two of them are sitting on the porch. One says to the other, "I say, comrade, have you read Marx?" The second says "Yes, must be these wicker chairs."

618 notes

girl are you a psychology study because you're one of a kind and i don't think anybody could replicate you

380 notes

It's true that statistics are imperfect; there are a lot of well-known reasons this can be the case. I've been reading pretty intensively about the Chinese economy lately, and the ways that a large bureaucratic organization can produce misleading statistics are a pretty salient part of any analysis there! But even the most bearish on China don't estimate its official stats are off by an order of magnitude...

Which is not to say that no stats ever are off by an order of magnitude! But if even the people who are most skeptical of the CCP are saying "the official stats are probably better than what I could guesstimate just by looking", that tells you something! These are people who think the official numbers are outward-facing propaganda (which I'm sure they are at least a little bit) rather than the result of intra-bureaucratic incentive misalignment (which I believe they are mostly). And yet people are still willing to take the propaganda within an order of magnitude.

Why? Because for however much statistics may be flawed, personal experience, on quantitative issues like this, tells you literally nothing. It is nigh-informationless. Here's an exercise: go to a city which you don't know the population of, and guess the population just by your personal observation. Uh, can you do that? Probably not. If you've been to a lot of cities that you do know the population of, maybe you can, because you've fit an internal model of what cities of different population levels are like based on already knowing those stats and correlating them with observation. That's cheating; you're implicitly trusting stats. Walk into some new domain you know nothing about, and guess a number based on your individual experience. Go to a forest and guess how many squirrels live there, without looking at any data or performing any systematized data collection yourself. Just spend some time in the forest and then guess the number. Can you do this accurately?

So "your statistics are imperfect, that's why you should trust my personal judgement" really does not work as an argument.

187 notes

Vision: A mathematical wiki which tracks a dependency graph for each theorem. You can click on the theorem and it builds a path from foundations to that theorem for you as a big long page you can scroll through to learn it, and it automatically inserts little suggestions of examples to look at along the way.
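
A rough sketch of the path-building part, assuming the wiki stores, for each theorem, the list of results it directly depends on. The `DEPENDS_ON` table and the `learning_path` function are made-up names for illustration, and the toy entries are not a real formalization. The idea is just: collect everything the target transitively needs, then topologically sort that subgraph so foundations come first.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical toy data: each result maps to the results it directly depends on.
DEPENDS_ON = {
    "axioms": [],
    "group definition": ["axioms"],
    "subgroup": ["group definition"],
    "coset": ["subgroup"],
    "Lagrange's theorem": ["coset", "subgroup"],
    "ring definition": ["group definition"],  # not needed for Lagrange
}


def learning_path(target, depends_on):
    """Return the prerequisites of `target`, foundations first, ending with `target`."""
    # Collect the target plus everything it transitively depends on.
    needed = set()
    stack = [target]
    while stack:
        node = stack.pop()
        if node not in needed:
            needed.add(node)
            stack.extend(depends_on[node])
    # Sort only that subgraph so every item appears after its dependencies.
    subgraph = {node: depends_on[node] for node in needed}
    return list(TopologicalSorter(subgraph).static_order())


path = learning_path("Lagrange's theorem", DEPENDS_ON)
for step in path:
    print(step)
```

Anything the target doesn't need (here, "ring definition") is left out of the page. The example suggestions along the way would be a separate lookup per step, which this sketch doesn't attempt.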

400 notes

Shoutout to that one time Wonder Woman accidentally did 9/11

Also shoutout to the writers for predicting 9/11???

10 notes

ruse-based international order

36 notes

I've said this before, but "the US is diverse while China is homogeneous" is a mainstream-ish talking point that you see sometimes, and if you have an academic background anywhere adjacent to mine (linguistics) it will probably strike you as pretty ridiculous. The US has a lot of largely-culturally-assimilated recent immigrant diversity, while China has dozens of centuries-old minority communities of various sizes which often retain not just their own language and culture, but indeed in some cases traditional subsistence methods, kinship systems, and so on, not commensurate with mainstream Chinese society. The diversity in China is considerably deeper. Whereas in the US you have white, black, Asian people etc. all speaking the same language and practicing essentially the same culture (with local variations), subsisting in the same ways, practicing the same marriage norms, etc. etc. It's objectively much more homogeneous. The outliers here are indigenous communities, which have a real and significant cultural diversity, but make up a very small proportion of the US population.

1K notes

"Would you rather get what you want, or be happy?"

Unironically I would rather get what I want. If I end up happy that's great, but what I want is to get what I want.

697 notes
