Can software quality be assured by "testers"?

You'd have to live under a rock not to notice the constant flurries of anti-QA rhetoric here on LinkedIn and elsewhere in the blogosphere. Have no fear, though. In this article, I address some of the misconceptions behind this anti-QA stance, which usually revolves around the statement that "testers" cannot assure quality because, in the context of software development, they are not in a position to change the code and therefore have no power to change the quality directly.

I want to say right off the bat that this is 100% true! "Testers," as defined by the folks who seem to hold this stance, cannot assure quality. Does this surprise you? It shouldn't. Here is why.

Testers only provide information

If testers, per the definition used by Bolton and others, only "evaluate a product to provide information" (this is an overly simple and paraphrased version I use for brevity but you can find their definition readily online), then of course they cannot change the quality of the software! Duh!

And here lies the crux of the matter: testers don't assure quality, but Quality Assurance Analysts and Engineers, via a Quality Assurance process, do. Now, I don't think that Bolton is confusing Quality Assurance with testing, but I think that he is adding to the confusion by not making this very clear, which results in practitioners being confused about what they really should do.

When Bolton and others tell folks to "get out of the Quality Assurance business" it could be because they would like them to get into the single purpose testing business instead.

Quality Assurance drives the Quality

In a mature organization, QA is responsible for working with executive management, understanding the Quality Objectives of the organization, crafting a process that will ensure that these quality objectives are being met, and then reporting on said quality for the purpose of continuous improvement.

Quality Assurance and Testing are not the same thing

"A picture says a thousand words." One tool that I like to use to illustrate who is responsible for what, without using a lot of words, is a RACI chart. To understand the nuanced difference between testing and QA, and how they are not the same thing but do work together, let's examine the example below in the context of Software Quality:

As you can see, creating a RACI chart to outline the responsibilities, in terms of quality, across the various roles in the project team (such as Testing (testers), Development, Quality Assurance (QA), Scrum Masters, and Users) helps to clarify who is responsible for what. This ensures that all aspects of quality are adequately covered, thereby assuring quality.

In order to assure quality, though, you first have to define what quality is. What are the Quality Standards for the project? This is the first line in the RACI. Notice that QA is Responsible and Accountable for setting the Quality Standards, while "testers" are Consulted (this is in line with "testers only provide information," no?).

Another difference between QA and testing is that writing the test cases and actually performing the testing are in the realm of "testers," who are both Accountable and Responsible for these activities. QA plays a Supporting role.

Yet another difference is that testers are Accountable for reporting bugs, risks, etc., but share the Responsibility with QA. This is clearly in line with the "testers only provide information" mantra.
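For readers who prefer data to pictures, the three rows described above can be sketched as a small lookup table. This is only a minimal sketch in Python under my own assumptions: the activity labels, the `raci` dictionary, and the helpers `roles_with` and `one_accountable_per_activity` are illustrative names, and only the QA and tester assignments stated in the text are included (the other roles in the chart are left out).

```python
# Sketch of the RACI rows described in the text (QA and testers only).
# R = Responsible, A = Accountable, C = Consulted, S = Supporting.
# Activity labels and helper names are hypothetical, chosen for illustration.
raci = {
    "Set quality standards": {"QA": "RA", "Testers": "C"},
    "Write test cases and perform testing": {"Testers": "RA", "QA": "S"},
    "Report bugs and risks": {"Testers": "RA", "QA": "R"},
}


def roles_with(activity, letter):
    """Return the roles holding the given letter for an activity, sorted by name."""
    return sorted(
        role for role, letters in raci[activity].items() if letter in letters
    )


def one_accountable_per_activity():
    """Check that every activity has exactly one Accountable role."""
    return all(len(roles_with(activity, "A")) == 1 for activity in raci)


for activity in raci:
    print(activity, "-> accountable:", roles_with(activity, "A"))
```

Keeping the chart as data makes it easy to ask simple questions of it, such as whether any activity has been left without a single Accountable role.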

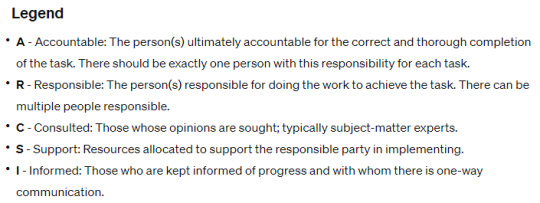

Here is the Legend I used for the RACI for completeness:

Summary

As you can see from the above, it is clear (well, at least to me) that "testers" cannot assure quality. It is also clear to me that Quality Assurance is Accountable and Responsible both for owning the Quality Standards for the project and for setting up the process and following up with the other stakeholders to ensure that the quality standards are being met, resulting in a Quality product (as defined by the project team).

What are your thoughts on this topic? Do you still think that "testers" can assure quality? Are you ready to get out of the "tester" business and join Quality Assurance, a profession that actually has a body of knowledge and common practices that are widely known? Leave your thoughts in the comments!

AIDT - AI Driven Testing

Today, I'm coining a new term: "AI Driven Testing" (AIDT).

This story is totally fictional, and any resemblance to real-life characters is 100% coincidental. It is meant to illustrate at a high level what AI Driven Testing means in the context of product software development.

In the beginning, there was Jimmy

Once, there was a software tester named Jimmy. Jimmy had been in the industry for over three decades and was known for his meticulous and thorough testing methods. He had a traditional approach to software testing, relying heavily on manual testing techniques and resisting the integration of new technologies.

As the tech world evolved, Jimmy's company began to embrace cutting-edge technologies, including AI, to enhance its software testing processes as well as the services it offered. The company brought in a team of new, technically savvy testers who were well-versed in leveraging AI for automated testing, data analysis, and even predictive bug tracking. These new testers were enthusiastic about using AI to increase efficiency and accuracy.

Skepticism sets in

Initially, Jimmy was skeptical about the use of AI in testing. He believed that his years of experience and manual testing skills were irreplaceable and that AI could never match the intuition and depth of understanding that a human tester brings, which is true, to an extent. However, as time went on, it became increasingly clear that AI was transforming the testing landscape. The AI-driven testing methods were faster and more efficient in identifying complex bugs and patterns that were sometimes missed in non-AI-assisted, human-driven testing.

AI shows promise

The company's management started noticing the significant improvements in testing efficiency and accuracy brought about by AI. They began encouraging all testers, including Jimmy, to upskill and adapt to these new technologies. Training sessions were organized, and resources were provided to help the existing staff integrate these new tools into their workflow.

Despite these opportunities, Jimmy remained resistant to change. He felt overwhelmed and out of place amidst the rapidly evolving tech landscape. The new AI tools seemed complex and intimidating, and Jimmy was hesitant to step out of his comfort zone.

As the company continued to progress, the gap between Jimmy's traditional methods and the new AI-driven testing approaches widened. Projects requiring advanced testing techniques were increasingly assigned to the AI-savvy testers, and Jimmy was frequently sidelined. The efficiency and accuracy brought by AI in software testing were undeniable, and it became clear that not adapting to these changes was not an option in the competitive tech industry.

Skepticism turns into denial

Eventually, Jimmy's reluctance to embrace new technologies led to his replacement by testers who were more adaptable and proficient with AI-driven testing. This marked a significant shift in the company's approach to software testing, highlighting the importance of staying current with technological advancements.

From denial to elimination - Conclusion

Jimmy's story serves as a reminder that in the fast-paced world of technology, adaptability and continuous learning are crucial. It underscores the transformative impact of AI in various fields, including software testing, and the necessity for professionals to evolve alongside technological advancements to remain relevant and effective in their roles.

Moral of the story

Don't be a Jimmy!

Consistency in Quality Practices

What does it take to achieve consistency in software product quality across a large and geographically dispersed engineering organization while at the same time maintaining divisional autonomy so that you don’t stifle but rather foster innovation? It requires striking a delicate balance between centralized quality standards and decentralized execution.

Before we start, though, we have to think about why we would want consistency in the first place, right? In my case, I would like to align our distributed engineering force around a common definition of quality that is aligned with our company vision and goals. We have many vertical engineering teams whose focus is to develop software that is aligned with their specific divisions and business units, all serving different markets.

In this article, I'll briefly describe one way of accomplishing this.

A structured approach

Agree on Clear, Unified Quality Standards

The first thing we have to do is establish clear, unified Quality Standards. You may think this may turn some folks off, and you are right in thinking that. Software development is more art than science and, as such, should not be constrained. But understand that I am talking about Quality Standards influencing development, not driving it. A standard in the context of the company is how your company views quality, what it means to it, and how it aligns with your company values.

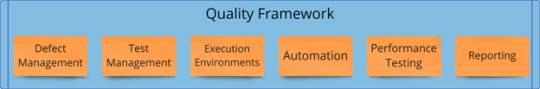

This can be easily accomplished by developing a high-level framework (let's call it a Quality Framework) that defines what quality means across the organization. In the realm of Quality Assurance and Quality Engineering, this includes guidelines for Issue Management, Test Management, Test Environments, Reporting, Performance, and Automation.

Make all of these available via a "Good Practices Repository." This will be a central repository where all the good practices relative to the areas listed are documented, tracked, and easily accessible.

Decentralized Autonomy with Centralized Guidance

Divisional vertical teams are allowed to innovate and operate independently but within the framework of the established quality standards. They can adapt processes to fit their specific needs, provided they meet the overarching quality goals embodied in the standards.

Utilize the concept of a horizontal support team for Quality Engineering as a center of excellence (CoE) that provides guidance, good practices, and support to the vertical teams. The CoE helps to bridge the gaps between divisions and ensure consistency in quality.

Guiding the vertical teams

By establishing an assessment model that is based on the quality standards we've already defined, we arm the vertical teams with the tools needed to self-assess against the "gold standard" and identify what, if anything, they need to do to align with the given quality standard. In the graphic above I'm using Issue Management as an example. This allows them to visualize where they are now relative to the standard, as well as what they need to do in order to be aligned. These current/future state items can then be incorporated into the goals of individual contributors, managers, and executives so they become actionable items that have a good chance of being worked on, therefore getting us closer to achieving and maintaining alignment with the agreed upon Quality Gold Standards.
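To make the self-assessment idea a little more concrete, here is a minimal sketch in Python of how a team might compare its current state against a standard for one area. Everything in it is an assumption for illustration: the `GOLD_STANDARD` dictionary, the practice names listed under Issue Management, and the `self_assess` function are hypothetical and are not taken from any real framework.

```python
# Hypothetical gold standard for one area of the Quality Framework.
# The practice names are invented for illustration only.
GOLD_STANDARD = {
    "Issue Management": [
        "issues tracked in a single system",
        "severity and priority defined",
        "root cause recorded on closure",
    ],
}


def self_assess(area, current_practices):
    """Compare a team's current practices with the standard for one area."""
    required = GOLD_STANDARD[area]
    met = [p for p in required if p in current_practices]
    gaps = [p for p in required if p not in current_practices]
    return {"area": area, "met": met, "gaps": gaps, "aligned": not gaps}


# A team that currently follows only the first practice.
result = self_assess("Issue Management", {"issues tracked in a single system"})
print(result["gaps"])
```

The `gaps` list is the current-to-future-state work that could then be folded into individual, manager, and executive goals.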

Management commitment

There is good news and bad news in this category. The good news is that this is a relatively straightforward and noninvasive way to get alignment on consistency. The bad news is that none of this will probably be possible unless it starts with buy-in at the top: leaders must be vocal supporters and encourage teams to incorporate the alignment work into their yearly goals. They must also establish clear metrics and corresponding accountability, empower team members to take ownership of the quality and success of their work, and invest in ongoing training and mentorship to develop skills that promote a quality-centric culture. This is the work of an empowered CoE.

Conclusion

The key to achieving consistency in product quality across a large and diverse engineering organization without stifling innovation lies in establishing a balance between centralized quality standards and decentralized execution. By fostering a culture of quality, continuous improvement, and collaboration, your organization can maintain high standards of product quality while still encouraging innovation and autonomy within divisions.

What are your thoughts on this approach? Please share your feedback in the comments.

To be or not to be, a "tester"

That is the question.

I have observed, for the past 10 years or so, that there is a movement out there on the “Information Superhighway” to somehow try to win back a perceived (wrongly, IMO) loss or lack of reputation for folks who focus their careers on #qualityassurance, #qualityengineering, #qualityanalyst or any other supporting and ancillary disciplines, which they seem to roll up into a single-purpose-sounding title called “tester”.

I ask, does this problem really exist? I fear it is manufactured by some folks who may stand to gain a lot (from a business / financial perspective) from folks in our craft who buy into this perceived problem and, more importantly, from folks who may be thinking about going into our field, so that they can then be “properly trained” on how to be what is, in their opinion, a “good tester”.

As I see it, the problem is rooted in language and how we humans perceive / interpret such language. The problem is in the term “tester”.

What if folks were to stop advertising themselves as a “tester” of any type (manual, automated, etc.) altogether and instead advertise themselves as a Product Development person who happens to be skilled at testing, among the other skills needed for product development?

Testing is a tool that folks use when they assume the role of information gatherer; it’s a hat, if you will, that someone wears while in pursuit of information with the aim of improving the product by identifying, mitigating and even eliminating risks (to the product, to the business, to the customers). IMO, advertising yourself as a “tester” not only makes you sound single purpose (i.e. a person who just tests) but also feeds into the stereotype that all we do is test and therefore are somehow expendable.

I’m interested in folks' opinions on this topic. Do you call yourself a “tester”? If so, why? If not, why not?

Developers can't test - a bedtime story

Automated tests written after the fact or not by developers are tech debt. BDD as an end-to-end testing framework is a supercharged tech debt creator.

Now that I have your attention, allow me to blow your mind a little bit more. The best developers I know are also the best at testing their software. Not only that, but they are the best at imagining how their product will be used (and abused) by their customers. They also acknowledge that they can only accomplish this when they build the product by themselves. By the way, I have only met about half a dozen of these unicorns in my career to date. Why do you think that is? I’ll go into it in this article.

The Lone Ranger

The best developers prefer to work alone. I've asked my friend Oscar (a real developer and unicorn) about the reason for this, and the answer is quite simple: "Because developers are taught, either by their organizational policies or by product test teams, that they shouldn't have to test because we have specialists that take care of it, and they know this is just not true! So it's the path of least resistance to just ignore the status quo and focus on producing high quality, tested software (by themselves) for others to test." At least this is the case for Oscar. I'm picking on him because I've worked with him at a few companies and have seen first-hand what he can do alone: build products of high quality the first time, in record time, and on time (as promised). One example is a point-of-sale application, based on a multi-agent system, that is dynamically configured depending on the context it is deployed in (this was in the early 2000s, when AI wasn't a mainstream fad). This was a personal project for him, as he is also an entrepreneur (another trait of good developers), and I helped him test it.

The first delivery I saw of this product was 99% complete and bug-free. Why was that? Read on.

Batman and Robin

The best developers value QA specialists' involvement from the beginning and incorporate their feedback starting at ideation. When Oscar came up with the idea for his product, he immediately reached out to me because he knew that, as an entrepreneur myself, I had owned and operated retail stores in the past, so I was the perfect partner for this new endeavor.

I later found out, though, that the product wasn't really a point-of-sale system but a way to prove that the multi-agent system engine he had built the year before, to incorporate into a hobby product that teaches a player how to win at blackjack (which I also helped him with), could in fact be used in multiple products and for multiple purposes.

Just like superheroes team up and combine their superpowers in fantasy series, developers and quality specialists (the good ones) team up in product development. Hence, a product was delivered with only 2 bugs that were edge cases we both missed during design.

Superman

It so happens that the best developers and quality specialists are outcasts from development communities, forcing them into a life of solitude. Not in the sense that they are alone, but just like Superman was forced to live his life as a common person for fear of persecution, they must hide their true talents from a team that may feel threatened by this unknown power that they possess. Little do they know that they, too, can have this power if they only open up to different perspectives.

Transformers - More than meets the eye!

So how do we fix this? I would take a multi-faceted approach.

The first thing we have to do is acknowledge there is a problem. The problem is this false perception that developers shouldn't test because they can't. This is just not true. Developers don't test because we don't teach those who don't know they have to do this AND don't trust those who want to do it.

Second, assess your teams and figure out who on the team is of the Batman and Robin type and who isn't. Pair those that are with those that aren't.

Third, create a safe space where the newly formed superteams can come and share their challenges, solutions, and triumphs. Focus your efforts on those who are trying to get there by praising them to elevate their strengths.

This doesn't have to be a huge transformation. What we are trying to accomplish here is a two-pronged shift in mindset. The developers think they shouldn't test, and the test specialists think that developers can't test. These two completely false mindsets need to be reversed.

Conclusion

Once you are able to overcome the "developers can't test and test specialists are the only ones who can" problem (which I will go out on a limb here and assume is present in a lot of organizations), you'll be able not only to deliver high-quality software the first time but also to do it faster. If you find yourself struggling with continuous testing and continuous delivery to production and cannot pinpoint why that is, do a quick sniff test around your organization and see if it is not rooted in, or at least related to, what I just described here.

What are your thoughts on this topic?

About Manual vs Automated

In my opinion, focusing energy on nonexistent dichotomies takes away from the real work and focus of system verification and validation: system design, test strategy, risk identification and reduction, test techniques to incorporate based on system design and strategy, controlled and uncontrolled experiments to both verify and validate the product, etc.

In other words (basic triage here), if the important work is to understand testing and all that it encompasses (as mentioned above), then focusing on manual vs automation is like focusing, in an ER situation, on placing band-aids on scratches when a person is bleeding and the bleeding is synchronized with the heartbeat. Focusing on the distinction first is a bit backwards (not to mention controversial; see my last sentence).

From my perspective, once a person learns system design (the building blocks of a system), testing techniques (what to do in each situation), testing strategy (your overall approach), etc., then, by design and as part of the learning, they will have already realized (intuitively) the distinction this false dichotomy is trying to make. It is a really hard distinction to make because manual and automated testing are intertwined, which is why it's controversial to folks who understand this already.

About “Flaky Tests”

A test is an experiment through which we aim to learn information. In automation (that is, when you are in the process of getting a computer to execute all or part of your experiment), all tests begin as a “flaky test”. This is not only expected, it is the testing portion of automation, which includes more than just automating steps. During this portion we are looking for unknowns and how they impact our test (experiment). It’s an iterative process. It is also an investigation to reveal the unknowns, or “flakiness”, in everything about the experiment I’m automating: the environment, the variables, the constants, the content, etc. So I don’t call a test “flaky” just because it fails for an unknown reason; all automated tests start out flaky. I call a test flaky while it’s in the investigation portion and we are working to reveal all of the unknowns mentioned above. To summarize: is a test strategy really flaky if it takes "flakiness" into account as I have outlined above?
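One way to picture the investigation portion is as a loop that reruns the same experiment under each suspected unknown and records what happened. The sketch below is a simplified illustration in Python, not a real test harness: `investigate`, `sample_test`, the latency values, and the 100 ms timeout are all hypothetical stand-ins for whatever environment variables you are actually probing.

```python
from collections import Counter


def investigate(test, conditions, repeats=3):
    """Run a test several times under each condition and count the outcomes."""
    outcomes = Counter()
    for condition in conditions:
        for _ in range(repeats):
            outcomes[(condition, test(condition))] += 1
    return outcomes


def sample_test(latency_ms, timeout_ms=100):
    """Hypothetical test that passes only when the response beats the timeout."""
    return latency_ms < timeout_ms


# Vary one suspected unknown (simulated response latency in milliseconds).
results = investigate(sample_test, [10, 20, 500])
failing_conditions = sorted({cond for (cond, passed) in results if not passed})
print(failing_conditions)  # prints [500]
```

Once a condition like this is revealed, it stops being an unknown: you can control it, account for it in the test, or report it as a finding.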

Test Cases - Some Benefits Derived

On numerous occasions I've been involved in discussions about test cases with other folks involved in a software development life cycle where the generally accepted sentiment is that when all test cases are "executed" we are done testing, or that testing has not been done unless test cases have been executed. Unfortunately for our field, I've often found myself alone in trying to show that just because you executed 100 test cases does not necessarily mean that you did any testing at all. You could have, but this depends on the person executing the test cases and whether they understand test cases and how to use them in testing.

I gave the presentation below at a former employer that had a huge catalog of test cases with detailed steps and was still struggling with product quality. They were hesitant, however, to accept that they weren't really testing when purely executing the test cases, mainly because they feared that all the effort that went into the creation and maintenance of the test cases would be wasted. In the session I explained to them how they could actually use these test cases to get the maximum return on their investment in the creation of these artifacts. Test cases, since they are designed by subject matter experts, contain all the information that most real-world users of your product will need to perform the very same functions; and since they contain detailed steps, they are suitable for many applications.

Your thoughts and comments are welcome.

Test Cases - Benefits Derived from Freddy Vega

Quote

The only part of software development that may not be predictable is when the programmer is actually coding.

FV

Purpose, Mission and Vision keep testers self focused on things that matter

Purpose, Mission and Vision may sound like pointy-haired mumbo jumbo to you, but what if it isn't? In fact, I believe it applies to testing and, specifically, to Context Aware Testing. To be context aware means to adapt according to the location, the collection of nearby people and accessible resources, as well as to changes to such things over time. To be a context aware tester means that you have the capabilities to examine the computing and human environments and react to changes to the environments that may affect the product under test.

To help guide the journey, the Context Aware Tester always lays out her "Test Pact" from the outset. The pact includes the Purpose (the why), Mission (the how) and Vision (the what) for her testing.

Let's review an example. As a contractor, you bid for and win an assignment to test Widget A (an address book application for Windows). During a meeting with the stakeholders you find out that this application is for internal use only and that their main concern is stability: they don't want the app to crash and cause loss of contact information. From this bit of information we can begin to define our Test Pact:

You start with your purpose: to make sure the contacts application is stable by testing it using real-world scenarios.

We then lay out what it is that we anticipate when we're done: our vision. This is our definition of done. In this case we anticipate application stability.

Next we state the mission: how are we going to accomplish our goal of verifying the stability of the application? Through load testing, stress testing, and volume testing.
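If it helps to see the pact written down, the three statements above fit into a tiny record. This is just a sketch in Python under my own assumptions: the `TestPact` class, its field names, and the `covers` helper are hypothetical, and the values are taken from the Widget A example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TestPact:
    """Hypothetical record of a Test Pact: the why, the what, and the how."""

    purpose: str              # the why
    vision: str               # the what (our definition of done)
    mission: Tuple[str, ...]  # the how (techniques we plan to use)

    def covers(self, technique: str) -> bool:
        """Return True if the technique is part of the stated mission."""
        return technique.lower() in self.mission


pact = TestPact(
    purpose="Make sure the contacts application is stable "
            "by testing it using real-world scenarios",
    vision="Application stability",
    mission=("load testing", "stress testing", "volume testing"),
)

print(pact.covers("Stress testing"))
print(pact.covers("usability testing"))
```

A check like `covers` is one simple way to notice when a new request falls outside what the pact says you set out to do.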

By defining your purpose, mission and vision before starting your testing project (no matter how small), you will have given yourself a road map as well as a set of constraints to wrap around your testing effort to help keep you focused on the things that matter most (i.e. what's important). Once you start working, this is also a great way to gauge whether what you are being asked to do now (an interruption) interferes with or contradicts any of the Test Pacts you are currently working on. In a nutshell, Test Pacts encapsulate the definition of testing for a specific context in the form of purpose, vision and mission. This implies that for a context-aware tester, the definition of testing is not only dependent on context, but also possibly different each time. To a context aware tester, purpose (why) is her guide, while the mission (how) is what drives her towards the vision (what). This keeps us closely aligned with not only the technical aspects but also the vision of the stakeholders, as captured in the Test Pacts.

Context-Aware Testing

What is Context? "The word "context" stems from a study of human "text"; and the idea of "situated cognition," that context changes the interpretation of text" ***

What does it mean to be a Context Aware Tester? A Context Aware tester knows (see above) that context changes the interpretation of what "testing" and "tests" mean. In addition:

A context-aware tester knows that each situation will most likely require a custom approach.

Likewise, a context-aware tester rejects the notion that a specific approach is the only approach to all problems.

A context-aware tester does not reject any practice, technique, or method (not even another approach) when it comes to the who, what, when, where, and why of testing.

In his 1994 paper at the Workshop on Mobile Computing Systems and Applications (WMCSA), Bill Schilit introduces the concept of context-aware computing and describes it as follows:

“Such context-aware software adapts according to the location of use, the collection of nearby people, hosts, and accessible devices, as well as to changes to such things over time. A system with these capabilities can examine the computing environment and react to changes to the environment.”

-- Schilit et al 1994

Just as Schilit describes "context-aware software" as adapting "according to the location of use, the collection of nearby people, hosts, and accessible devices, as well as to changes to such things over time," so does a Context Aware tester, who in doing so has the capabilities to examine the computing and human environment and react to changes to the environment that may affect the product under test. Oh, and no, being a Context Aware tester does not mean you are now a member of a school. Context Aware is not a school. It is an approach to help solve hard, and easy, testing problems.

*** http://en.wikipedia.org/wiki/Context_awareness#Qualities_of_context

Quote

To avoid criticism, do nothing, say nothing, be nothing.
