Stay updated with the latest in EdTech, technology trends & IT updates. Explore tech news, innovations and insights shaping the future of education and technology today.


DevOps Engineer Courses List: Choices to Enhance Your Career

In today's highly competitive tech world, demand for competent DevOps engineers is increasing. As businesses shift to continuous integration and agile development, DevOps plays a crucial role in closing the gap between development and operations. Whether you're a beginner moving into IT or a seasoned professional looking to level up, a top-quality DevOps course could change your career.

To help you sort through the many options, we've put together the best DevOps engineering courses for 2025. These courses were chosen based on content quality, industry relevance, user feedback, and overall learning experience.

Why DevOps Courses Matter in 2025

Before we get into the list, let's look at why learning DevOps is more crucial than ever in 2025:

More automation: Organizations are automating more processes, which makes DevOps techniques and tools vital.

Growing demand: Companies are looking for engineers who can manage CI/CD pipelines, infrastructure as code, and cloud-based deployments.

High salaries: DevOps engineers are among the highest-paid professionals in the tech industry.

That's why investing in a DevOps training course isn't only about gaining skills; it's also about future-proofing your career.

Top DevOps Engineer Courses in 2025

Here's our selection of the top DevOps courses you can take this year:

1. Google Cloud DevOps Engineer Certification (Coursera)

Level: Intermediate to Advanced. Duration: 4 to 6 months (at 5-10 hours/week)

This professional certificate from Google is designed for people who want hands-on experience with cloud-native DevOps practices. It covers site reliability engineering (SRE), CI/CD, monitoring, and deployment pipelines.

Highlights:

Taught by Google Cloud experts

Hands-on labs using Google Cloud Platform (GCP)

Prepares you for the official DevOps Engineer certification exam

2. DevOps Engineer Career Path (Codecademy)

Level: Beginner to Intermediate. Duration: Self-paced

Codecademy's structured career path is a great option for those starting from scratch. You'll learn Linux, Git, Docker, Kubernetes, and other essential tools used by DevOps engineers.

Highlights:

Interactive coding environment

Real-world projects and exercises

Covers both technical and soft skills

3. The Complete DevOps Engineer Course 2025 (Udemy)

Level: All levels. Duration: 40+ hours

This Udemy bestseller is updated frequently and packed with practical examples. From infrastructure as code with Terraform to automation with Jenkins and Ansible, it's a complete package.

Highlights:

Lifetime access and downloadable materials

Covers AWS, Docker, Kubernetes, and CI/CD pipelines

Ideal for beginners and professionals alike

4. Microsoft Certified: DevOps Engineer Expert (Microsoft Learn)

Level: Intermediate to Advanced. Duration: Depends on your learning pace

This certification is designed for engineers working with Microsoft Azure. The course combines theory and practical exercises to help you design and implement DevOps practices using Azure DevOps and GitHub.

Highlights:

Industry-recognized certification

In-depth integration with Azure services

Excellent for corporate DevOps roles.

5. Linux Foundation DevOps Bootcamp

Level: Beginner to Intermediate. Duration: 6 months

Offered by The Linux Foundation, this bootcamp includes two courses, the first of which is an introduction to DevOps and Cloud Native Development. It's ideal for learners seeking vendor-neutral, open-source-focused education.

Highlights:

Emphasis on open-source tools

Community-supported forums

A certificate of completion issued by the Linux Foundation

Bonus Picks

Here are some other options worth looking into if you want a specific focus area:

Kubernetes for DevOps Engineers (Pluralsight)

CI/CD Pipelines with Jenkins and GitLab (LinkedIn Learning)

AWS Certified DevOps Engineer - Professional (A Cloud Guru)

How to Pick the Right Course

The best DevOps course depends on your career stage and learning goals. Here's a quick guide:

Also consider the following before registering:

Course reviews and ratings

Project work and hands-on labs

Industry-recognized certification

Available support or mentorship

Final Thoughts

In 2025, becoming a DevOps engineer is about more than mastering a few tools. It's about understanding systems, improving collaboration, and delivering software faster and more reliably. The courses above provide a solid foundation and the hands-on experience you need to become an effective DevOps professional.

Take the next step. Whether you're looking to brush up on the latest technologies or starting from scratch, there's a program out there tailored to your needs.


The Future of AWS Certified Developers: Key Trends and Forecasts

Cloud computing has grown dramatically in recent years, and Amazon Web Services (AWS) continues to lead the field. With companies moving rapidly to the cloud, AWS certifications have become an ideal option for developers who want to grow their careers and earn higher pay. But as technology develops, so do the expectations placed on AWS certified developers.

This article looks at what's in store for AWS Certified Developers, the key trends affecting the industry, and what developers can expect to see in the next few years.

1. Why Are AWS Certified Developers in Demand?

AWS powers many of the biggest companies, as well as startups and governments, across the world. Companies rely on AWS-certified experts to design and build cloud-based solutions effectively. Here is why AWS-certified developers are more valuable than ever:

Growth of Cloud Adoption - Businesses are shifting to the cloud, creating a greater need for AWS experts.

Security & Compliance - Companies need experts to protect their cloud infrastructure.

Serverless and Microservices - Modern application development relies on AWS services such as Lambda and ECS.

Cost Optimization - AWS developers help businesses optimize cloud spending and improve efficiency.

With these considerations in mind, let's look at the most important trends that will define the next generation of AWS Certified Developers.

2. Key Trends Shaping the Future of AWS Developers

2.1. The Growth of AI & Machine Learning on AWS

AWS has invested heavily in artificial intelligence (AI) and machine learning (ML), with services such as Amazon SageMaker, Rekognition, and Lex. AWS-certified developers need to deepen their AI/ML knowledge to build smarter applications.

Prediction: AI and ML integration will become a key capability for AWS developers in 2025.

2.2. Wider Adoption of Serverless Computing

Serverless architecture removes much of the need to manage infrastructure, making application development faster and more efficient. AWS services such as AWS Lambda, API Gateway, and DynamoDB are accelerating the adoption of serverless computing.

Prediction: Serverless will become the dominant model for cloud development, making AWS Lambda a must-have skill for developers.
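To make the serverless model more concrete, here is a minimal sketch of a Python AWS Lambda function sitting behind API Gateway. It assumes the Lambda proxy integration, where the handler receives the HTTP request as an `event` dict and returns a dict with `statusCode`, `headers`, and a string `body`. The handler name is configurable (`lambda_handler` is a common default), and the greeting logic and `name` query parameter are hypothetical. There is no server to provision: AWS runs the function on demand.

```python
import json


def lambda_handler(event, context):
    """Handle an API Gateway request (Lambda proxy integration).

    `event` is the proxy event dict; `context` is the Lambda runtime
    context object, unused here. The greeting is a hypothetical example.
    """
    # queryStringParameters is None when the request has no query string
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")

    # Proxy integrations expect statusCode/headers/body; body must be a string
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Simulate a local invocation with a trimmed-down fake event
    print(lambda_handler({"queryStringParameters": {"name": "AWS"}}, None))
```

Because the handler is a plain function, it can be unit-tested locally with fake events like the one above before it is ever deployed.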

2.3. Multi-Cloud & Hybrid Cloud Strategies

While AWS is the top cloud provider, many companies are adopting a multi-cloud strategy that combines AWS with Microsoft Azure and Google Cloud. AWS-certified developers need to understand hybrid cloud environments and tools such as AWS Outposts and Google's Anthos.

Prediction: Developers with multi-cloud expertise will have an edge in the job market.

2.4. Demand for Cloud Security & Compliance Experts

As cyberattacks grow, businesses are placing a high priority on cloud security and compliance. AWS services such as AWS Shield, Macie, and Security Hub are essential for protecting cloud environments.

Prediction: AWS security certifications (such as AWS Certified Security - Specialty) will become highly valuable as threats to cloud environments increase.

2.5. Edge Computing & IoT Growth

The growth of edge computing and the Internet of Things (IoT) is transforming industries such as automotive, healthcare, and manufacturing. AWS services such as AWS IoT Core and AWS IoT Greengrass are driving this change.

Prediction: AWS experts with IoT or edge computing expertise will be in high demand by 2026.

3. Skills and Certifications for Future AWS Developers

To stay ahead in this competitive field, AWS developers need to keep improving their skills. Here are the most sought-after skills and certifications:

In response to these trends, many developers are turning to comprehensive training, such as an AWS Developer Course, to sharpen their skills and stay relevant.

Essential AWS Skills:

AI & Machine Learning - Use Amazon SageMaker and Rekognition.

Serverless Architecture - Master AWS Lambda and API Gateway.

Cloud Security and Compliance - Learn IAM, Security Hub, and AWS Shield.

Multi-Cloud and Hybrid Cloud - Gain experience with Azure, Google Cloud, and AWS hybrid solutions.

DevOps & Automation - Use AWS CodePipeline, CloudFormation, and Terraform.

AWS Certifications to Consider:

AWS Certified Developer - Associate (for software developers who build on AWS)

AWS Certified Solutions Architect - Associate (for cloud solution design)

AWS Certified DevOps Engineer - Professional (for automation and CI/CD)

AWS Certified Security - Specialty (for cloud security experts)

4. Career and Job Market Opportunities for AWS Developers

AWS developers are highly compensated, earning between $100,000 and $150,000 per year depending on experience and location. Some of the most sought-after roles for AWS-certified developers include:

Cloud Developer - Builds cloud-based applications using AWS services.

DevOps Engineer - Manages CI/CD pipelines and cloud automation.

Cloud Security Engineer - Specializes in AWS security and compliance.

IoT Developer - Works with AWS IoT and edge computing solutions.

Big Data Engineer - Handles data analytics and machine learning solutions on AWS.

As cloud adoption continues to grow, AWS-certified professionals will have plenty of career options across a variety of sectors.

5. How Can You Keep Up as an AWS Developer?

To succeed in the constantly changing AWS environment, developers should:

Stay Up to Date - Follow AWS blogs, take part in webinars, and explore the latest AWS features.

Build Projects - Hands-on experience is essential, so implement real-world AWS applications.

Join AWS Communities - Participate in forums such as AWS re:Post and attend AWS events.

Earn Certifications - Keep upgrading your capabilities by earning AWS certifications.

Explore AI and Serverless Technology - Stay current with the latest trends in AI/ML and serverless.

The most important factor in succeeding as an AWS Certified Developer is continuous learning and adapting to the latest cloud technologies.

Final Thoughts

The future for AWS Certified Developers is bright and full of potential. With developments such as AI, serverless computing, multi-cloud, and cloud security shaping the market, AWS professionals must stay current and keep learning.

If you're an aspiring AWS developer, now is the perfect time to get AWS certified and explore different career options.


Angular vs React: What to Choose in 2025?

In the constantly evolving web development landscape, selecting the right front-end framework is vital. Among the top options, Angular and React have been at the cutting edge for many years. Which one is the best option for 2025? Both have strengths and weaknesses, and the right choice depends on your project's specific requirements. This article discusses their advantages and other important aspects to consider before making a choice.

What is Angular?

Angular is a full-fledged front-end framework developed and maintained by Google. Built on TypeScript, it takes a structured, opinionated approach, which makes it an excellent choice for enterprise-level applications.

What is React?

React, by contrast, is a JavaScript library developed by Facebook (now Meta). It is mostly used to build interactive user interfaces with a component-based architecture. Unlike Angular, React is more flexible and lets developers incorporate third-party libraries with ease.

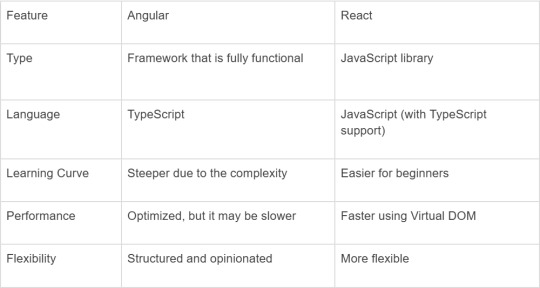

Key Differences Between Angular and React

Advantages of Angular

Comprehensive Framework: Angular offers everything you need to build sophisticated applications, including built-in routing, dependency injection, and form handling.

Two-Way Data Binding: Any change made in the user interface is immediately reflected in the model, and vice versa.

Built-in Security: Angular includes security features such as DOM sanitization, which makes it a safe choice.

Enterprise-Grade Support: Large enterprises often favor Angular because of its structured approach and Google's long-term support.

Modular Development: The modular design helps organize code effectively, making large projects easier to manage.
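The Two-Way Data Binding point above can be illustrated outside any framework. In Angular templates this is written as `[(ngModel)]="username"`; the Python sketch below only models the underlying idea, a shared value that pushes changes to the view and accepts changes from it. The `BoundField` and `TextInput` classes are hypothetical and are not how Angular implements binding internally.

```python
from typing import Callable


class BoundField:
    """A value shared by a model and a view, kept in sync in both directions.

    Hypothetical illustration of two-way binding only.
    """

    def __init__(self, value: str = "") -> None:
        self._value = value
        self._listeners: list[Callable[[str], None]] = []

    def subscribe(self, listener: Callable[[str], None]) -> None:
        self._listeners.append(listener)
        listener(self._value)  # push the current value right away

    def set(self, value: str) -> None:
        if value == self._value:
            return  # ignore no-op updates so view and model don't loop
        self._value = value
        for listener in self._listeners:
            listener(value)

    def get(self) -> str:
        return self._value


class TextInput:
    """Stand-in for an <input> element bound to a BoundField."""

    def __init__(self, field: BoundField) -> None:
        self.text = ""
        self._field = field
        field.subscribe(self._render)  # model -> view

    def _render(self, value: str) -> None:
        self.text = value

    def simulate_typing(self, value: str) -> None:
        self._field.set(value)  # view -> model


username = BoundField("alice")
box = TextInput(username)
box.simulate_typing("bob")  # the user edits the UI...
print(username.get())       # ...and the model now holds "bob"
username.set("carol")       # code updates the model...
print(box.text)             # ...and the UI now shows "carol"
```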

Advantages of React

Virtual DOM for Performance: React renders to a Virtual DOM first and applies only the necessary changes to the real DOM, which speeds up rendering.

Component-Based Architecture: React's components encourage reuse, which makes development more efficient and flexible.

Flexibility: Unlike Angular, React is unopinionated and lets developers choose their own libraries and tools.

Strong Community and Ecosystem: With a huge developer community, React benefits from extensive third-party support and a broad variety of libraries.

SEO-Friendly: React apps can perform well in SEO because they support server-side rendering (SSR) through frameworks such as Next.js.
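The Virtual DOM point above rests on diffing: instead of rebuilding the whole page, a renderer compares the previous and next virtual trees and applies only the differences to the real DOM. The Python sketch below is a hypothetical, heavily simplified model of that idea (the `VNode` class and the patch tuples are invented for illustration); it is not React's actual reconciliation algorithm, which also uses keys and other heuristics.

```python
from dataclasses import dataclass, field


@dataclass
class VNode:
    """A simplified virtual DOM node: a tag, props, and children."""
    tag: str
    props: dict = field(default_factory=dict)
    children: list = field(default_factory=list)  # VNodes or plain strings


def diff(old, new, path="root"):
    """Return patch operations that turn `old` into `new`.

    Children are compared by position only (no keys), which is a
    deliberate simplification.
    """
    if old is None:
        return [("create", path, new)]
    if new is None:
        return [("remove", path)]
    if isinstance(old, str) or isinstance(new, str):
        return [] if old == new else [("replace", path, new)]
    if old.tag != new.tag:
        return [("replace", path, new)]

    patches = []
    if old.props != new.props:
        patches.append(("set_props", path, new.props))
    for i in range(max(len(old.children), len(new.children))):
        old_child = old.children[i] if i < len(old.children) else None
        new_child = new.children[i] if i < len(new.children) else None
        patches.extend(diff(old_child, new_child, f"{path}/{i}"))
    return patches


before = VNode("ul", children=[VNode("li", children=["Apples"]), VNode("li", children=["Pears"])])
after = VNode("ul", children=[VNode("li", children=["Apples"]), VNode("li", children=["Plums"])])
print(diff(before, after))  # [('replace', 'root/1/0', 'Plums')]
```

Only one text node changes, so only one patch is produced; the unchanged list items are never touched.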

When to Choose Angular?

Choose Angular if:

You are building a large enterprise application that needs a structured framework.

Your project requires strong, built-in security features.

You prefer TypeScript and want an opinionated framework with clear guidelines.

You want a comprehensive package that covers everything from state management to routing without relying on outside libraries.

You are looking for an Angular Course to master TypeScript-based development with a well-structured framework.

When to Choose React?

React is a better option if:

You need to build a lightweight, fast, and user-friendly interface.

You want a more flexible approach and the ability to mix and match third-party libraries.

Your project demands high rendering performance, which React's Virtual DOM helps deliver.

You are building a single-page application (SPA) or a progressive web app (PWA).

You want to use server-side rendering (SSR) with Next.js to improve SEO.

Final Verdict: Angular or React in 2025?

Angular and React are both effective in their own way. Your decision should be based on your project's needs, your team's expertise, and your long-term objectives.

If you're developing a large, complex system with strict guidelines, Angular is the better choice.

If you need flexibility, speed, and a flourishing ecosystem, React is the way to go.

The bottom line is that both technologies will continue to advance, and keeping up with the latest developments will help you make informed decisions for 2025 and beyond.

Which do you prefer? Let us know your thoughts in the comments below!


A Comprehensive Learning Path to Tableau in 2025

Tableau has become one of the most effective and widely used data visualization tools, helping professionals and businesses turn raw data into useful insights. Whether you're a novice or a seasoned data analyst, getting the most out of Tableau in 2025 requires a systematic approach to learning. This guide walks you through a step-by-step method to build proficiency in Tableau efficiently.

Why Learn Tableau in 2025?

Before we start on the learning path, let's look at why Tableau remains a popular choice for data visualization in 2025:

User-Friendly Interface: Even without coding skills, users can design interactive dashboards quickly.

Integration with Various Data Sources: Supports multiple databases, cloud services, and spreadsheets.

High Demand in the Job Market: Tableau skills are sought after in sectors like healthcare, finance, and marketing.

AI and Automation Features: Automated tools and AI-driven insights make analysis faster and more efficient.

Now, let's look at the step-by-step method to master Tableau in 2025.

Step 1: Understanding the Basics of Data Visualization

Before diving into Tableau, it's important to understand the basics of data visualization. Some of the most fundamental concepts are:

The importance of storytelling with data.

Chart types and when to use each one.

Best practices for dashboard design.

Recommended Resources:

The book "Storytelling with Data" by Cole Nussbaumer Knaflic.

Data visualization courses on Coursera and Udemy.

Step 2: Getting Started with Tableau

Install and Explore Tableau

Download Tableau Public (free) or Tableau Desktop (paid).

Get familiar with the Tableau interface, including the menus, workspace, and toolbar.

Learn basic operations such as dragging and dropping fields, creating basic charts, and applying filters.

Key Topics to Cover:

Connecting to various data sources.

Understanding dimensions vs. measures.

Creating basic visualizations such as line graphs, bar charts, and scatter plots.

Step 3: Building Intermediate Skills

Once you're comfortable with the basic features, you can begin exploring more advanced ones:

Calculated Fields: Learn to create custom calculations that transform your data.

Parameters: Let users interact with dashboards dynamically.

Hierarchies and Filters: Improve dashboard usability with drill-down hierarchies and interactive filters.

Tableau Functions: Learn the logical, date, and string functions to improve data manipulation.

During this phase, enrolling in a Tableau Course can provide structured learning and hands-on exercises to reinforce your skills.

Practice Resources:

Official Tableau eLearning platform.

Hands-on practice with the Tableau Public Gallery.

Step 4: Mastering Advanced Features

To take your Tableau skills to the next level, concentrate on:

Tableau Prep: Learn to prepare and clean data efficiently.

LOD (Level of Detail) Expressions: Gain greater control over data granularity.

Joins and Blending: Combine data from a variety of sources efficiently.

Advanced Charts: Master waterfall charts, bullet graphs, and heat maps.

Storytelling with Dashboards: Use animations and navigation buttons to improve the user experience.
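To see what an LOD expression does, it can help to reproduce one outside Tableau. In Tableau, `{FIXED [Region] : SUM([Sales])}` computes total sales per region independently of the dimensions in the view, and attaches that total to every row. The Python sketch below mimics that behavior on a few hypothetical rows; the field names and the helper `fixed_lod_sum` are assumptions for illustration, a conceptual equivalent rather than how Tableau computes it.

```python
from collections import defaultdict

# Hypothetical order-level rows; in Tableau these would come from a data source
orders = [
    {"order_id": 1, "region": "East", "sales": 120.0},
    {"order_id": 2, "region": "East", "sales": 80.0},
    {"order_id": 3, "region": "West", "sales": 150.0},
]


def fixed_lod_sum(rows, dimension, measure):
    """Mimic {FIXED [dimension] : SUM([measure])}.

    Sums the measure per value of `dimension`, then attaches that total to
    every row so it can be compared with row-level values.
    """
    totals = defaultdict(float)
    for row in rows:
        totals[row[dimension]] += row[measure]
    return [{**row, f"{measure}_fixed_{dimension}": totals[row[dimension]]} for row in rows]


for row in fixed_lod_sum(orders, "region", "sales"):
    # Each order's share of its region's sales, a typical use of FIXED
    share = row["sales"] / row["sales_fixed_region"]
    print(row["order_id"], row["region"], round(share, 2))
```

The equivalent Tableau calculated field for the share would be `SUM([Sales]) / SUM({FIXED [Region] : SUM([Sales])})` at the order level.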

Step 5: Exploring Tableau Server & Tableau Online

If you work as part of a team or organization, mastering Tableau Server and Tableau Online is essential for:

Publishing dashboards securely.

Controlling access and permissions.

Collaborating on reports.

Step 6: Getting Hands-on Experience

The most effective way to learn is to put your learning into practice:

Take part in Makeover Monday challenges.

Build real-world projects with public datasets.

Join the Tableau Community Forums and get advice from experts.

Step 7: Preparing for Tableau Certification

If you want to validate your knowledge, consider the Tableau certification exams:

Tableau Desktop Specialist (Beginner Level)

Tableau Certified Data Analyst (Intermediate Level)

Tableau Desktop Certified Professional (Advanced Level)

These credentials can improve your career prospects and demonstrate your skills to prospective employers.

Step 8: Stay Up-to-date with Tableau Trends

Tableau is constantly evolving with new features and updates. To stay ahead:

Visit the official Tableau website regularly.

Attend the Tableau Conference and user group meetups.

Join LinkedIn groups and online communities.

Final Thoughts

Mastering Tableau in 2025 could open up great career options in data analytics and business intelligence. By following this structured learning path, practicing regularly, and keeping up with the latest trends, you can become a Tableau professional and use data visualization to drive impactful decisions.


Machine Learning to Predict Test Failures

In today's fast-paced world of software development, the demand for faster, more reliable, and more effective software testing has never been higher. Traditionally, testers and developers use a range of techniques, including manual testing and automated scripts, to ensure software quality. Despite these efforts, test failures remain commonplace, and they can cost time, money, and reputation. This is where machine learning (ML) comes into the picture. By applying machine learning algorithms, teams can estimate which tests are likely to fail before they run, streamlining the testing process and reducing overall cost. In this article, we'll examine how machine learning can be used to predict test failures, the benefits of this technique, and the challenges that accompany it.

Understanding the Role of Machine Learning in Software Testing

Machine learning, a subset of artificial intelligence (AI), allows systems to learn from data and improve their performance over time without being explicitly programmed. In software testing, ML can analyze large volumes of data, such as previous test results, code changes, and performance metrics, to discover patterns that indicate a test is likely to fail. Instead of relying on human judgment, ML models can estimate the probability that a particular test will fail based on historical information and patterns.

Applying machine learning to software testing isn't merely about automating test execution. It's about improving the whole testing process by identifying and fixing problems before they escalate. By incorporating predictive models into the testing process, businesses can avoid expensive delays and improve software quality.

How Does Machine Learning Predict Test Failures?

Machine learning predicts test failures through the following steps:

1. Data Collection

The first step in applying ML to test failure prediction is to collect relevant data. This data can come from many sources, including:

Past test results: Records of previous test runs, such as whether each test passed or failed.

Code changes: The frequency, nature, and impact of code changes, which can introduce new bugs.

Test environment metrics: Performance metrics such as CPU utilization, memory consumption, or system load during tests.

Developer activity: Details about which changes each developer made and how those changes might affect specific tests.
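The data sources listed above can be gathered into one record per test run. The sketch below shows one possible schema, assuming you can join CI results, version-control metadata, and environment metrics by commit; every field name and sample value is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RunRecord:
    """One row of training data: a single execution of one test.

    All field names are hypothetical; map them to whatever your CI system,
    version control, and monitoring tools actually export.
    """
    test_name: str
    commit_id: str
    passed: bool           # past test result (the label to predict)
    lines_changed: int     # code change in the commit under test
    files_touched: int
    duration_s: float      # test environment metrics
    peak_memory_mb: float
    author: str            # developer activity


history = [
    RunRecord("test_login", "a1b2c3", True, 12, 1, 1.8, 210.0, "dana"),
    RunRecord("test_login", "d4e5f6", False, 340, 9, 2.4, 260.0, "lee"),
    RunRecord("test_checkout", "d4e5f6", True, 340, 9, 5.1, 410.0, "lee"),
]

failures = [r for r in history if not r.passed]
print(f"{len(history)} runs, {len(failures)} failed")
```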

2. Feature Engineering

Once the data has been gathered, the next step is to design the features (input variables) used to train the ML model. These could include code complexity, test case execution times, and historical failure rates. Such indicators help a machine learning algorithm predict the probability of failure.
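Feature engineering can be as simple as aggregating each test's history. The sketch below computes three of the indicators mentioned above for every test: historical failure rate, mean execution time, and the size of the change about to be tested. The raw tuple layout and the log scaling of change size are assumptions chosen for illustration.

```python
import math
from collections import defaultdict
from statistics import mean

# Hypothetical raw history: (test_name, passed, duration_s)
history = [
    ("test_login", True, 1.8),
    ("test_login", False, 2.4),
    ("test_login", True, 1.9),
    ("test_checkout", True, 5.1),
    ("test_checkout", True, 4.9),
]


def build_features(history, lines_changed_now):
    """Turn raw run history into one feature vector per test.

    Features (all hypothetical choices):
      - historical failure rate of the test
      - mean execution time in seconds
      - log-scaled size of the change about to be tested, so that very
        large diffs don't dominate the other features
    """
    runs = defaultdict(list)
    for name, passed, duration in history:
        runs[name].append((passed, duration))

    change_size = math.log1p(lines_changed_now)
    features = {}
    for name, results in runs.items():
        failure_rate = sum(not passed for passed, _ in results) / len(results)
        avg_duration = mean(duration for _, duration in results)
        features[name] = [failure_rate, avg_duration, change_size]
    return features


print(build_features(history, lines_changed_now=120))
```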

3. Model Training

With the data prepared, the next step is to train a machine learning model. Popular algorithms for this purpose include random forests, decision trees, support vector machines (SVM), and neural networks. The objective is to train the model to identify patterns associated with test failures using labeled data (tests that previously passed or failed).
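The training step can be sketched without any ML library. To keep the example dependency-free, it swaps in logistic regression trained with plain gradient descent, a simpler classifier than the random forests, SVMs, or neural networks named above; in practice you would more likely use a library such as scikit-learn (for example `sklearn.ensemble.RandomForestClassifier`). The labeled data is hypothetical, with two features per test: historical failure rate and log-scaled change size.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    """Estimated probability that a test with features x will fail."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def train_logistic_regression(X, y, lr=0.1, epochs=3000, seed=0):
    """Fit weights w and bias b by batch gradient descent on the log-loss.

    X: list of feature vectors; y: labels (1 = test failed, 0 = passed).
    """
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    w = [rng.uniform(-0.01, 0.01) for _ in range(d)]
    b = 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            error = predict_proba(w, b, xi) - yi  # d(log-loss)/dz for this sample
            for j in range(d):
                grad_w[j] += error * xi[j]
            grad_b += error
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Hypothetical labeled history: [historical_failure_rate, log1p(lines_changed)]
X = [[0.0, 1.0], [0.1, 2.0], [0.05, 1.5], [0.5, 5.0], [0.6, 6.0], [0.7, 5.5]]
y = [0, 0, 0, 1, 1, 1]

w, b = train_logistic_regression(X, y)
print([round(predict_proba(w, b, x), 2) for x in X])
```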

4. Prediction

Once the model has been trained, it can start making predictions. Given a test, the model analyzes its features and determines whether the test is likely to pass or fail. It may also output a probability score indicating how confident it is in the prediction.
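At prediction time, the model's probability score is usually turned into a pass/fail call with a threshold. The sketch below assumes an already-trained logistic model whose weights, bias, and threshold are hypothetical placeholders; lowering the threshold flags more tests as risky at the cost of more false alarms.

```python
import math

# Hypothetical weights from an already-trained logistic model over the
# features [historical_failure_rate, log1p(lines_changed)].
WEIGHTS = [4.0, 1.2]
BIAS = -5.0
THRESHOLD = 0.5  # flag tests at or above this failure probability


def failure_probability(features):
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def predict(features):
    """Return ('fail' or 'pass', probability) for one test's feature vector."""
    p = failure_probability(features)
    return ("fail" if p >= THRESHOLD else "pass"), p


upcoming = {
    "test_login": [0.4, math.log1p(300)],   # flaky test, large change
    "test_search": [0.0, math.log1p(3)],    # stable test, tiny change
}
for name, feats in upcoming.items():
    label, p = predict(feats)
    print(f"{name}: {label} (p={p:.2f})")
```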

5. Model Evaluation

It is essential to evaluate the model's performance regularly to ensure its predictions remain reliable. This usually involves comparing predicted results against actual test results. Continuous refinement and retraining may be required to keep predictions current and accurate.
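Comparing predictions against actual results is typically summarized with a few standard metrics. The sketch below computes accuracy, precision, and recall for the "failed" class from two hypothetical lists of outcomes. Recall is often the one to watch here, because a failure the model missed is a bug that slipped through.

```python
def evaluate(predicted, actual):
    """Compare predicted vs. actual outcomes (True = test failed).

    Precision: of the tests we flagged, how many really failed.
    Recall: of the tests that really failed, how many we flagged.
    """
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(a and not p for p, a in zip(predicted, actual))
    correct = sum(p == a for p, a in zip(predicted, actual))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"accuracy": correct / len(actual), "precision": precision, "recall": recall}


# Hypothetical outcomes for six test runs
predicted = [True, True, False, False, True, False]
actual = [True, False, False, True, True, False]
print(evaluate(predicted, actual))
```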

Benefits of Using Machine Learning to Predict Test Failures

Using machine learning to predict test failures provides several significant advantages for software development and testing teams:

1. Early Identification of Issues

Machine learning helps identify likely test failures early in the development process. By knowing which tests are more likely to fail, developers can fix problems before they become more serious and more costly to resolve later.

2. Prioritization of Tests

Not all tests are created equal. Some tests matter more to the system's performance than others. ML helps prioritize tests based on their risk of failure or their importance to the system. This ensures that the most crucial tests run first, reducing the chance of missing critical bugs.
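One simple way to combine failure risk and importance is to multiply them and run the highest-scoring tests first. In the sketch below, the failure probabilities stand in for model output and the importance weights are hypothetical values a team might assign; both the scoring rule and the data are assumptions.

```python
# Hypothetical per-test data: model-predicted failure probability and a
# team-assigned importance weight (e.g. 3 = critical path, 1 = cosmetic).
tests = [
    {"name": "test_payment_capture", "p_fail": 0.30, "importance": 3},
    {"name": "test_footer_links", "p_fail": 0.60, "importance": 1},
    {"name": "test_login", "p_fail": 0.25, "importance": 2},
    {"name": "test_search_filters", "p_fail": 0.05, "importance": 2},
]


def prioritize(tests):
    """Order tests by risk = failure probability x importance, highest first."""
    return sorted(tests, key=lambda t: t["p_fail"] * t["importance"], reverse=True)


for t in prioritize(tests):
    print(t["name"], round(t["p_fail"] * t["importance"], 2))
```

Note that `test_payment_capture` runs first even though `test_footer_links` is more likely to fail, because a payment failure is weighted as more costly.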

3. Optimization of Test Coverage

By analyzing test history, machine learning can suggest areas where more testing may be needed or where redundant tests could be removed. This results in better test coverage and a more effective overall test plan.

4. Reduced Costs and Time

Predicting test failures lets testers concentrate on the areas most likely to have issues. This cuts down on unnecessary test runs and speeds up the testing process. As a result, testing takes less time and costs less, allowing businesses to ship products faster and with greater confidence.
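Cutting unnecessary runs often means choosing a subset of tests that fits a time budget, such as a fast pre-merge check. The sketch below uses a greedy heuristic that ranks tests by predicted failure probability per second of runtime; it is a knapsack-style approximation rather than an optimal solver, and the test data and budget are hypothetical. Tests left out would still need to run at some point, for example in a nightly full suite.

```python
def select_within_budget(tests, budget_s):
    """Greedily pick tests with the highest failure probability per second
    of runtime until the time budget is used up.

    Heuristic sketch, not an optimal knapsack solution.
    """
    ranked = sorted(tests, key=lambda t: t["p_fail"] / t["duration_s"], reverse=True)
    selected, used = [], 0.0
    for t in ranked:
        if used + t["duration_s"] <= budget_s:
            selected.append(t["name"])
            used += t["duration_s"]
    return selected, used


# Hypothetical predictions and runtimes
tests = [
    {"name": "test_api_contract", "p_fail": 0.40, "duration_s": 20.0},
    {"name": "test_full_e2e", "p_fail": 0.50, "duration_s": 300.0},
    {"name": "test_db_migration", "p_fail": 0.30, "duration_s": 60.0},
    {"name": "test_ui_snapshot", "p_fail": 0.02, "duration_s": 10.0},
]
print(select_within_budget(tests, budget_s=120.0))
```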

5. Continuous Improvement

As ML models are exposed to more data over time, they become better at forecasting failures. This continuous learning lets the testing process improve over time, leading to better predictions and fewer unexpected problems.

Challenges of Implementing Machine Learning in Test Failure Prediction

Although the potential of machine learning in software testing is huge, businesses may face several hurdles when implementing these systems:

1. Data Quality and Quantity

Machine learning models need high-quality data to function properly. Incomplete, biased, or noisy data can lead to incorrect predictions. In addition, gathering enough historical data can be a challenge for smaller or newer teams.
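Some of these data problems can be caught with simple checks before training. The sketch below flags too few rows, missing values, and severe class imbalance (test suites usually pass far more often than they fail). The row format, label key, and thresholds are hypothetical defaults to tune for your own project.

```python
def check_training_data(rows, label_key="failed", min_rows=200, min_minority_share=0.05):
    """Flag common data-quality problems before training.

    `rows` is a list of dicts; thresholds are hypothetical defaults.
    Returns a list of human-readable warnings (empty if none found).
    """
    warnings = []
    if len(rows) < min_rows:
        warnings.append(f"only {len(rows)} rows; predictions may be unreliable")

    missing = sum(1 for r in rows for v in r.values() if v is None)
    if missing:
        warnings.append(f"{missing} missing values")

    labels = [r[label_key] for r in rows if r.get(label_key) is not None]
    if labels:
        share = sum(labels) / len(labels)  # share of failed runs
        minority = min(share, 1 - share)
        if minority < min_minority_share:
            warnings.append(f"class imbalance: minority class is {minority:.1%} of labels")
    return warnings


# Hypothetical data: 50 passing runs and one failing run with a missing metric
rows = [{"failed": False, "duration_s": 1.2}] * 50 + [{"failed": True, "duration_s": None}]
for warning in check_training_data(rows):
    print(warning)
```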

2. Complexity of the Model

Training machine learning models, particularly deep learning models, can be computationally expensive and time-consuming. The right infrastructure and skills are required to keep the model efficient and adaptable.

3. Model Interpretability

While machine learning models can be extremely effective, they often act as "black boxes," meaning the reasoning behind their predictions may not be easy to understand. This is a major challenge for testers who want to analyze the results and make informed decisions based on them.
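One way to soften the black-box problem is to start with a model whose predictions can be decomposed. For a logistic regression, the log-odds of failure are exactly the bias plus the sum of each weight times its feature value, so every term is that feature's contribution. The sketch below prints such a breakdown; the feature names and weights are hypothetical. Genuinely opaque models need model-agnostic tools such as SHAP instead.

```python
import math

# Hypothetical trained logistic-regression weights, one per named feature.
FEATURES = ["historical_failure_rate", "log_lines_changed", "mean_duration_s"]
WEIGHTS = [4.0, 1.2, 0.05]
BIAS = -5.0


def explain(x):
    """Print how much each feature pushed the log-odds of failure up or down.

    For a linear model the log-odds are exactly BIAS + sum(w_i * x_i), so
    each term w_i * x_i is that feature's contribution.
    """
    contributions = {name: w * v for name, w, v in zip(FEATURES, WEIGHTS, x)}
    log_odds = BIAS + sum(contributions.values())
    p = 1.0 / (1.0 + math.exp(-log_odds))
    for name, c in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
        print(f"{name:>24}: {c:+.2f}")
    print(f"{'bias':>24}: {BIAS:+.2f}  ->  P(fail) = {p:.2f}")
    return contributions, p


# A test with a low failure history but a very large incoming change
explain([0.1, math.log1p(500), 3.0])
```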

4. Integration with Existing Tools

Integrating ML-based failure prediction models into existing testing frameworks and tools can be difficult. Seamless integration between automated testing, bug tracking, and the ML model is key to realizing its full potential.
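A practical integration point is the test report a CI pipeline already produces. The sketch below assumes reports in the widely used JUnit XML format (`<testcase>` elements with optional `<failure>`, `<error>`, or `<skipped>` children) and uses Python's standard-library `xml.etree.ElementTree` to turn them into training rows. The sample report and the row layout are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical JUnit-style report, as many test runners and CI tools emit
SAMPLE_REPORT = """<testsuites>
  <testsuite name="auth" tests="3">
    <testcase classname="auth.LoginTest" name="test_login" time="1.8"/>
    <testcase classname="auth.LoginTest" name="test_lockout" time="0.4">
      <failure message="expected 423, got 200"/>
    </testcase>
    <testcase classname="auth.LoginTest" name="test_sso" time="0.0">
      <skipped/>
    </testcase>
  </testsuite>
</testsuites>"""


def parse_junit(xml_text):
    """Extract (test id, passed, duration) rows from a JUnit-style XML report.

    A <failure> or <error> child marks the test as not passed; skipped
    tests are left out because they carry no pass/fail signal.
    """
    root = ET.fromstring(xml_text)
    rows = []
    for case in root.iter("testcase"):
        if case.find("skipped") is not None:
            continue
        failed = case.find("failure") is not None or case.find("error") is not None
        test_id = f"{case.get('classname', '')}.{case.get('name')}"
        rows.append((test_id, not failed, float(case.get("time", 0.0))))
    return rows


for row in parse_junit(SAMPLE_REPORT):
    print(row)
```

Rows in this shape can be appended to the training history after every pipeline run, which keeps the model's data current without extra manual work.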

Conclusion

Machine learning is revolutionizing the way test failures are predicted and prevented in software development. By providing early warnings about likely test failures, ML enables teams to save time, cut costs, and enhance software quality.

While challenges exist, the benefits of integrating ML into testing workflows far outweigh the difficulties. As technology advances and more sophisticated models emerge, ML-based test failure prediction will become even more accurate and effective, making software testing smarter and more proactive.

If you want to build your expertise in ML-driven software testing, enrolling in Machine Learning training online can be a great step toward mastering this innovative approach.


Adversarial Misuse of Generative AI

Generative AI has revolutionized content creation for businesses and individuals alike, helping automate tasks, generate realistic images, and craft human-like text. However, alongside its benefits comes a darker side: adversarial misuse. Cybercriminals and other malicious actors have started exploiting generative AI for illicit and harmful purposes that pose significant threats to individuals, businesses, and even global security. This article explores the various forms of misuse, their possible repercussions, and strategies to counter these risks effectively.

Understanding Adversarial Misuse of Generative AI

Adversarial misuse refers to any use of generative AI to produce misleading, deceptive, or harmful content. This misuse can take several forms, such as:

1. Deepfake Technology

Deepfakes use AI-powered algorithms to produce highly realistic but entirely false audio, video, and image content, capable of impersonating public figures, spreading misinformation, or damaging reputations. Key concerns include:

Political Misinformation: Fabricated speeches and videos can influence elections and public opinion.

Financial Fraud: AI-generated deepfake voices can trick financial institutions into authorizing illegal transactions.

Defamation and Harassment: Deepfakes can also be used to defame or harass individuals, or to mislead people for political or personal gain.

2. AI-Generated Misinformation and Fake News

Artificial intelligence tools can produce fake news articles, social media posts and narratives at unprecedented scale - leading to further misinformation, disinformation campaigns and false narratives being spread on a massive scale.

This can have serious ramifications such as: Public Panic and Fear: News reports about pandemics, economic crashes or natural disasters can quickly create widespread panic among the general public. Stock Market Manipulation: Artificial Intelligence-generated false reports regarding major companies can affect investor behavior and stock prices directly.

Erosion of Trust: AI-generated misinformation can undermine public faith in institutions and in legitimate news outlets such as CNN.

3. Cybercrime and Phishing Attacks

Malicious actors use AI technology for more sophisticated cyberattacks that are harder to detect; examples include:

AI-Powered Phishing Emails: Generative AI can create highly convincing phishing emails that look official, tricking users into divulging sensitive data.

Automated Scam Bots: AI-driven chatbots can pose as customer support representatives to deceive users into disclosing sensitive data.

Malware Generation: Artificial intelligence can be exploited to produce more advanced and adaptive malware that is harder for cybersecurity defenses to detect and stop.

4. Biased and Discriminatory AI Outputs

Artificial intelligence models are intended to be neutral, but adversarial actors may manipulate them into generating biased or discriminatory content that reinforces prejudice, such as:

Racial, Gender or Political Bias: AI-generated content may perpetuate harmful stereotypes or unfairly target specific groups.

Automated Hate Speech: Malicious users can exploit AI models to produce hateful or extremist propaganda at scale.

The Consequences of Adversarial AI Misuse

AI misuse has many detrimental ramifications across multiple domains:

Political Destabilization: Deepfakes and disinformation created using artificial intelligence can wreak havoc by shifting public sentiment, disrupting elections and shaping policymaking decisions.

Economic Damages: Fake financial news, AI-driven cyber fraud, and stock market manipulation can lead to significant economic losses.

Personal and Social Harm: Victims of AI-powered scams such as deepfake blackmail or identity theft may suffer devastating emotional, financial, and reputational harm.

Legal and Ethical Challenges: Current legal frameworks struggle to keep up with the rapid advancement of AI technology, leaving gaps in regulation and enforcement.

Strategies to Combat Misuse

Despite these threats, several strategies can help mitigate the risks of adversarial misuse of generative AI:

1. Advanced AI Detection Systems

Organizations are investing heavily in advanced AI detection systems to identify deepfakes, fake news articles and AI-powered phishing attempts. Some key advances include:

Deepfake Detection Algorithms: AI models trained to recognize inconsistencies in digital content can help identify manipulation.

Automated Misinformation Screening: Google and social media companies have deployed AI systems that aim to detect and remove fake news before it spreads widely.
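Production detection systems are typically machine-learning models trained on large labeled datasets. As a much simpler stand-in, here is a hypothetical rule-based scorer for the kind of AI-written phishing email described earlier. The phrase list, weights, and threshold are illustrative assumptions, not a vetted detector.

```python
import re
from urllib.parse import urlparse

# Hypothetical urgency phrases often seen in phishing lures (illustrative only).
URGENCY_PHRASES = ("verify your account", "urgent", "password expires", "act now")

def phishing_score(body: str, sender_domain: str) -> int:
    """Return a crude risk score; higher means more phishing-like."""
    text = body.lower()
    # +2 for each urgency phrase found in the message.
    score = sum(2 for phrase in URGENCY_PHRASES if phrase in text)
    # +3 for each link whose host is not the sender's domain or a subdomain of it.
    for url in re.findall(r"https?://[^\s\"'>]+", body):
        host = urlparse(url).hostname or ""
        if not (host == sender_domain or host.endswith("." + sender_domain)):
            score += 3
    return score

def looks_like_phishing(body: str, sender_domain: str, threshold: int = 4) -> bool:
    """Flag a message when its score reaches the (hypothetical) threshold."""
    return phishing_score(body, sender_domain) >= threshold
```

Consider a message claiming to come from example.com that says "URGENT: verify your account at http://examp1e-login.net/reset". It scores 7: two urgency phrases plus one off-domain link. A link to billing.example.com from the same sender adds nothing. Learned detectors replace these hand-picked rules with features and weights learned from data.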

2. Regulation and Policy Enforcement

Governments and international organizations are working on policies to regulate the use of AI-generated content. Such measures might include:

Legislation: Creating laws that criminalize malicious misuse of generative AI.

Industry Standards: Encouraging tech companies to adopt AI ethics guidelines and transparency when producing AI-generated content.

3. AI Model Guardrails and Ethical AI Development

Developers must build safeguards into their models to prevent abuse:

Content Moderation Filters: AI models should include safeguards that detect and block harmful output.

User Access Restrictions: Implementing stricter access controls for AI tools may help keep them out of the wrong hands.
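Here is a minimal sketch of both safeguards, wrapped around a hypothetical generate function that stands in for a real model call. Only users on an allowlist get through, and output is checked against a blocklist before it is returned. Real moderation filters generally rely on trained classifiers rather than keyword lists. The blocked terms and user IDs here are placeholders.

```python
from typing import Callable

# Placeholder blocklist; real systems use trained safety classifiers.
BLOCKED_TERMS = {"build a bomb", "steal credentials"}

class GuardedModel:
    """Wraps a text generator with an access check and an output filter."""

    def __init__(self, generate: Callable[[str], str], allowed_users: set[str]):
        self.generate = generate            # hypothetical model call
        self.allowed_users = allowed_users  # users permitted to use the tool

    def respond(self, user_id: str, prompt: str) -> str:
        # User access restriction: refuse unknown users outright.
        if user_id not in self.allowed_users:
            raise PermissionError(f"user {user_id!r} is not authorized")
        output = self.generate(prompt)
        # Content moderation filter: withhold output containing flagged terms.
        lowered = output.lower()
        if any(term in lowered for term in BLOCKED_TERMS):
            return "[response withheld by content filter]"
        return output
```

For example, GuardedModel(lambda p: f"Echo: {p}", {"alice"}) returns "Echo: hello" to alice. It withholds an echo that contains "steal credentials" and raises PermissionError for any other user.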

4. Public Awareness and Digital Literacy

Educating the public about the risks of AI misuse is vital to combating deception and manipulation. This involves:

Teaching Media Literacy: Training individuals to recognize AI-generated misinformation.

Encouraging Critical Thinking: Promoting skepticism when viewing suspicious material and verifying sources before sharing any data.

5. Collaboration Between Tech Companies and Governments

A joint effort from AI developers, social media platforms, cybersecurity firms, and governments is necessary in order to prevent AI misuse. Key collaborative measures may include:

Threat Intelligence Sharing: Organizations should share intelligence on emerging AI threats to strengthen defense mechanisms.

AI Transparency Initiatives: Tech companies should disclose when content is produced using artificial intelligence, to improve accountability.
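One simple way to disclose AI-generated content is to attach a machine-readable label to it. The sketch below uses a hypothetical format: the field names and the model name are assumptions, not an industry standard such as C2PA. It records that the text is AI-generated, which model produced it, and a SHA-256 hash so later edits to the content can be noticed.

```python
import hashlib
import json
from datetime import datetime, timezone

def label_ai_content(text: str, model_name: str) -> dict:
    """Wrap generated text with a hypothetical AI-disclosure record."""
    return {
        "content": text,
        "ai_generated": True,
        "model": model_name,  # hypothetical model identifier
        "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
        "labeled_at": datetime.now(timezone.utc).isoformat(),
    }

def label_matches(record: dict) -> bool:
    """True if the content still matches the hash recorded when it was labeled."""
    digest = hashlib.sha256(record["content"].encode("utf-8")).hexdigest()
    return digest == record["sha256"]

record = label_ai_content("Quarterly summary drafted by an assistant.", "example-model-v1")
print(json.dumps(record, indent=2))
```

A bare hash only catches edits where the label was not updated to match. Provenance schemes meant to resist deliberate tampering sign the record cryptographically.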

Conclusion

Generative AI offers great potential, yet its adversarial misuse poses a growing challenge. Deepfakes, misinformation campaigns, AI-enabled cybercrime, and biased outputs could have far-reaching repercussions if left unchecked. We can minimize the risks of AI misuse by:

improving detection technologies,
enforcing regulations,
adopting ethical AI development practices, and
informing the public.

A multifaceted approach will be necessary to ensure generative AI remains a tool for innovation rather than exploitation.


Salesforce CPQ Pricing Strategies for Smarter Sales

As sales organizations look for ways to quote complex products quickly and accurately, Salesforce CPQ (Configure, Price, Quote) has emerged as one of the go-to tools. It automates product configuration, applies pricing and discount rules consistently, and generates quotes from data already in Salesforce. This helps sales teams make faster, data-driven decisions. For many sales and operations professionals, Salesforce CPQ skills are becoming essential for staying competitive and relevant in 2025.

Let's look at why Salesforce CPQ will remain an indispensable asset in 2025 and why its significance extends across professionals, students, and decision-makers alike.

The Complexity of Pricing in Today’s Sales Environment

Pricing today can feel like navigating an uncharted sea without a map. Product catalogs, bundles, discounts, and customer-specific terms multiply quickly, and the data behind them keeps growing. IDC has estimated that global data creation will reach 163 zettabytes by 2025. Turning that information into accurate, consistent prices is no longer a luxury; it has become essential.

Salesforce CPQ is designed to give businesses a structured way through this complexity. Product and price rules guide reps to valid configurations and approved discounts, turning raw catalog and customer data into accurate quotes. The resulting quote data helps teams track key performance indicators, identify hidden trends, and make informed decisions that contribute to growth.
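Since this post is about pricing strategies, here is a minimal sketch of one common strategy: volume-based tiered discounting. It is loosely modeled on the "range" and "slab" discount schedule types found in Salesforce CPQ. However, it is plain Python, not CPQ configuration or Apex, and the tiers and prices are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Tier:
    lower: int              # first unit in the tier (inclusive)
    upper: Optional[int]    # last unit in the tier (inclusive); None = no cap
    discount: float         # fraction off list price, e.g. 0.10 for 10%

# Hypothetical volume tiers, for illustration only.
TIERS = [
    Tier(1, 10, 0.00),
    Tier(11, 50, 0.10),
    Tier(51, None, 0.20),
]

def range_price(quantity: int, list_price: float, tiers=TIERS) -> float:
    """Range style: every unit gets the discount of the tier the total quantity falls in."""
    if quantity < 1:
        raise ValueError("quantity must be at least 1")
    for tier in tiers:
        if quantity >= tier.lower and (tier.upper is None or quantity <= tier.upper):
            return round(quantity * list_price * (1 - tier.discount), 2)
    raise ValueError("quantity outside all tiers")

def slab_price(quantity: int, list_price: float, tiers=TIERS) -> float:
    """Slab style: each unit is priced at the discount of the tier that unit falls in."""
    if quantity < 1:
        raise ValueError("quantity must be at least 1")
    total = 0.0
    for tier in tiers:
        if quantity < tier.lower:
            break
        top = quantity if tier.upper is None else min(quantity, tier.upper)
        total += (top - tier.lower + 1) * list_price * (1 - tier.discount)
    return round(total, 2)
```

Take 60 units at a list price of 100:

Range pricing gives 4,800, with all 60 units at 20% off.
Slab pricing gives 5,400: 10 units at full price, 40 at 10% off, and 10 at 20% off.

Range pricing rewards large orders more aggressively. Slab pricing avoids a sudden price drop at each tier boundary.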

Empowering Decision-Makers

Decision makers in any organization face the challenging task of navigating through complex challenges and seizing opportunities, yet without access to relevant tools they may find their best decisions clouded by incomplete or inaccessible data - which is where Salesforce CPQ comes into its own.

Because quotes, quote lines, and discounts live in Salesforce, decision-makers can visualize them with Salesforce reports and dashboards. This turns raw quoting data into a clear view of business performance, giving decision-makers the tools needed for effective leadership. It supports identifying customer buying trends, analyzing discount levels and sales performance, and forecasting future growth.

Think of Salesforce CPQ as an organization's control tower for quoting. Just as pilots rely on air traffic control for safe navigation, sales leaders can rely on CPQ for up-to-date visibility into pricing, discounts, and open quotes. In 2025's increasingly competitive and rapidly shifting business landscape, leaders will need fast and accurate interpretation of this data to stay competitive.

Bridge the Gap between Data and Action

Professionals often find it difficult to translate data into action. Salesforce CPQ addresses this challenge by guiding users through configuration and pricing step by step. Even those without technical backgrounds can use data effectively with this solution.

Salesforce CPQ's user interface, including the quote line editor, keeps this interaction straightforward. Administrators can define product bundles, price rules, and discount schedules largely through declarative configuration rather than code. This makes Salesforce CPQ valuable to sales operations managers, financial planners, operations managers, and anyone pursuing Salesforce CPQ training online. Because so much of it is configurable, users can learn its capabilities relatively quickly and apply them to improve decision-making.

Imagine trying to bake a cake without instructions! Salesforce CPQ serves as the recipe book for pricing and quoting, providing step-by-step guidance so users can produce accurate quotes consistently. By 2025, businesses that want to stay ahead must be able to turn data into clear strategies.

The Rise of Self-Service Sales Tools

A signature strength of Salesforce CPQ is that it lets sales reps build accurate quotes themselves. In 2025, the broader trend toward data democratization will continue. More professionals, regardless of technical knowledge, will seek ways to access, analyze, and act on data without waiting on specialists.

Salesforce CPQ supports this by letting reps configure products, apply approved discounts, and generate quote documents independently, cultivating a culture of data-driven decision-making. Its native integration with Salesforce CRM keeps quoting data alongside opportunities and accounts, which makes the tool accessible to users of all skill levels.

Think of Salesforce CPQ as a self-service gas station: users don't need specialists to fuel up their vehicles. In 2025, sales professionals won't need IT or pricing teams for every quote. Instead, the rules they need will be built into the tools at their fingertips.

Salesforce CPQ for Professionals and Students

Acquiring Salesforce CPQ skills can open up new career prospects for professionals already in the workforce, especially as demand continues to rise for Salesforce specialists, sales operations analysts, and data-driven decision-makers. According to LinkedIn, Salesforce CPQ is among the skills employers seek for such roles.

Students entering the workforce in 2025 will find Salesforce CPQ an indispensable skill, especially given today's data-driven world. Learning to interpret and visualize information is not simply advantageous but essential; therefore Salesforce CPQ gives students an edge that makes them highly marketable across fields such as finance, marketing, healthcare and technology.

Conclusion

Looking ahead to 2025, Salesforce CPQ promises to be not just an invaluable tool but an indispensable skill set for professionals across industries. Data is growing rapidly, demand for fast and accurate quotes is increasing, and self-service tools are gaining ground. Against that backdrop, Salesforce CPQ will play a central role in shaping the future of work.


Docker Tutorial for Beginners 2025

In today's software development landscape, the need for efficient, scalable, and portable applications has never been greater. Businesses are adopting modern approaches like cloud computing and microservices, so tools that enable faster deployment, isolation, and consistency are critical. Docker has emerged as one of the essential tools for developers, offering a solution to many of these challenges. In 2025, knowing Docker is not only advantageous; it is essential for anyone hoping to remain relevant in the rapidly changing software development industry.

In this Docker tutorial for beginners, we will walk you through the fundamentals of Docker. We'll explain why it has become such a powerful tool and provide clear steps for getting started. Whether you are a student, a professional in need of training, or a decision-maker evaluating technologies, this tutorial aims to make Docker approachable. It also offers insight into how Docker fits into modern development practices.

What is Docker and Why Does it Matter?

To understand Docker, imagine you're packing for a trip. You have your clothes, toiletries, and essentials, but you do not want to carry everything separately, because things might get scattered and your luggage would be disorganized. Instead, you pack everything into a single suitcase, neatly organized and ready to go wherever you need. Docker works in a similar way, but for applications.

At its core, Docker is a platform that allows developers to create, deploy, and run applications inside isolated environments called containers. A container bundles everything an application needs into a single lightweight package: its code, runtime, libraries, and dependencies. This removes much of the worry about whether your code will run on different machines or in varying environments. Docker helps ensure that your application behaves the same way wherever it is deployed.

This ability to provide consistent, portable environments is why Docker has become indispensable. Traditionally, applications often relied on virtual machines, which are heavy and resource-intensive. Containers, however, are far more lightweight and efficient, allowing developers to package and deploy their applications quickly. In 2025, organizations continue to embrace cloud-native architectures and microservices, and Docker has become the go-to solution for seamless deployment and scalability.

The Anatomy of a Docker Container

Let's look at how Docker containers work in more detail. Picture a well-stocked toolbox: each tool is neatly placed, ready to be grabbed when needed. Similarly, a Docker container holds everything needed to run an application, from the code itself to the libraries, configuration files, and dependencies.

Working with Docker involves several key components:

Image: Think of an image as a read-only blueprint or snapshot of your application. It contains everything required to run the app: operating system libraries, binaries, and configuration files. Once an image is built, it can be shared and reused across different environments.

Container: A container is a running instance of a Docker image. When you run a container, you are essentially running your application inside an isolated environment, independent of the underlying infrastructure.

Docker Engine: This is the core software responsible for building, running, and managing containers. It acts as a bridge between your application and the host system, letting containers run without conflicting with each other or with the host environment.

Docker CLI: The command-line interface that developers use to interact with Docker. It provides commands for building images, running containers, managing networks, and much more.
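To make the image/container distinction concrete, here is a toy model in Python. It is not how Docker is implemented. It only mirrors two ideas:

An image is a read-only stack of layers.
Each container adds its own writable layer on top.

As a result, containers started from the same image don't see each other's changes. The paths and image name below are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Image:
    """Template: a name plus layers, each a dict of path -> contents.

    frozen=True prevents reassigning fields; the layer dicts are treated
    as read-only by convention in this toy model.
    """
    name: str
    layers: tuple

    def files(self) -> dict:
        merged = {}
        for layer in self.layers:  # later layers override earlier ones
            merged.update(layer)
        return merged

@dataclass
class Container:
    """Running instance: the image's files plus a private writable layer."""
    image: Image
    writable: dict = field(default_factory=dict)

    def write(self, path: str, contents: str) -> None:
        self.writable[path] = contents  # never modifies the image

    def read(self, path: str) -> str:
        if path in self.writable:
            return self.writable[path]
        return self.image.files()[path]

base = Image("myapp:1.0", ({"/etc/os-release": "debian"}, {"/app/server.js": "v1"}))
a, b = Container(base), Container(base)
a.write("/app/server.js", "patched")
```

After this, container a reads "patched" from /app/server.js, while container b and the image itself still see "v1". In real Docker, this is why changes made inside a running container vanish when you start a fresh container from the same image.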

Why Docker is Essential in 2025

In 2025, Docker's importance has only grown. Organizations continue to move toward cloud-native architectures and adopt microservices. As a result, the need for tools that promote consistency, scalability, and efficiency has become non-negotiable.

Here is why Docker remains an essential part of the software development toolkit:

Portability: Containers ensure that applications run consistently across different environments, whether on-premises, in the cloud, or at the edge. This largely eliminates the "it works on my machine" problems that plagued traditional development workflows.

Efficiency and Resource Management: Unlike virtual machines, containers share the host system's kernel, reducing overhead and allowing many containers to run on a single host. This improves resource utilization, saves costs, and boosts overall system efficiency.

Scalability and Microservices: Docker simplifies the deployment of microservices architectures. Each microservice can run in its own container, isolated from the others, and be scaled up or down based on demand. This makes it easier to maintain, update, and manage applications at scale.

Collaboration and Reusability: Docker containers allow teams to share and reuse consistent environments. Whether you're a developer, a member of an operations team, or a data scientist, Docker keeps everyone working in the same environment. This reduces the risk of configuration drift and deployment issues.

Getting Started with Docker

Now let's get started with Docker. Imagine building a birdhouse from scratch: first you need the right tools and instructions. Docker offers just that, a streamlined process for creating, running, and managing containers. Here is a condensed guide to help you get started:

Install Docker: The first step is to install Docker on your machine. You can download the Docker Desktop application for your operating system (Windows, macOS, or Linux). Docker Desktop provides an intuitive user interface and includes everything you need to start working with containers.

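Understand Docker Images: Start by pulling pre-built images from Docker Hub, the official Docker registry. For example, you might run docker pull node:22 to get a Node.js environment. Node.js 14 reached end of life in 2023, so prefer a current LTS tag over node:14. Images serve as the foundation of your containers.

Run Your First Container: Once you have an image, running a container is simple: use the Docker CLI. Suppose you have a simple Node.js application in the current directory that listens on port 8080. You could start it in a container with docker run -d -p 8080:8080 -v "$(pwd)":/app -w /app node:22 node server.js, where server.js stands in for your app's entry file. Running the bare node image without a command would only start a Node.js shell, not your app.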

Build Your Own Images: For more control, you can build your own custom Docker images. Create a Dockerfile that specifies the environment, libraries, and dependencies your application needs. Then use docker build -t myapp . to create your image.

Explore and Manage Containers: Use Docker commands to manage your containers:

docker ps lists running containers.
docker logs views a container's logs.
docker stop stops a container.

Docker Compose, a tool provided by Docker, makes it even easier to define and manage multi-container applications.
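The steps above can be scripted. The sketch below assumes Python 3.9+ and the Docker CLI on your PATH. It writes a minimal Dockerfile (only if none exists) for a hypothetical Node.js app with a package.json and a server.js entry file listening on port 8080. It then assembles the guide's docker commands as argument lists. The node:22 base image, the myapp tag, and the myapp-dev container name are assumptions. Commands are only printed unless you pass run=True.

```python
import shlex
import subprocess
from pathlib import Path

BASE_IMAGE = "node:22"   # assumed current Node.js LTS tag
IMAGE = "myapp"          # hypothetical image tag
CONTAINER = "myapp-dev"  # hypothetical container name

# Minimal Dockerfile for a hypothetical app with package.json and server.js.
DOCKERFILE = f"""\
FROM {BASE_IMAGE}
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
EXPOSE 8080
CMD ["node", "server.js"]
"""

def workflow_commands(image: str = IMAGE, container: str = CONTAINER) -> list[list[str]]:
    """The guide's steps as argv lists: pull, build, run, list, logs, stop."""
    return [
        ["docker", "pull", BASE_IMAGE],
        ["docker", "build", "-t", image, "."],
        ["docker", "run", "-d", "--name", container, "-p", "8080:8080", image],
        ["docker", "ps"],
        ["docker", "logs", container],
        ["docker", "stop", container],
    ]

def main(project_dir: str = ".", run: bool = False) -> None:
    dockerfile = Path(project_dir) / "Dockerfile"
    if not dockerfile.exists():  # never overwrite an existing Dockerfile
        dockerfile.write_text(DOCKERFILE)
    for argv in workflow_commands():
        print("$", shlex.join(argv))
        if run:
            # check=True raises CalledProcessError at the first failing command.
            subprocess.run(argv, cwd=project_dir, check=True)
```

Passing commands to subprocess as lists rather than shell strings avoids quoting problems. Printing them first with shlex.join lets you review exactly what will run.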

Docker Training Online for Professionals

As you explore Docker, consider enrolling in online Docker training to gain a deeper understanding of containerization and its applications. Such training can provide hands-on experience, advanced use cases, and best practices that help you make the most of Docker in real-world scenarios.

Conclusion

Docker has become a cornerstone of modern software development, enabling organizations to build, deploy, and scale applications more efficiently. In 2025, mastering Docker is not just a technical advantage; it is a career necessity. You might be a student preparing for the future of work or a professional looking to upskill. Either way, understanding Docker's principles and capabilities will position you to thrive in the increasingly complex world of containerization and cloud-native architectures.


How to Overcome Key Challenges In MuleSoft Implementation

MuleSoft is a leading integration platform that allows businesses to connect applications, data, and devices seamlessly. Its powerful tools streamline operations and increase efficiency. However, it also comes with a set of challenges. If these challenges are not addressed, they can cause project delays, higher costs, and reduced ROI. This article examines the most common obstacles encountered in MuleSoft implementations and offers strategies for overcoming them.

1. Insufficient Planning and Scoping

MuleSoft implementation is often plagued by inadequate planning and unclear scope. Teams may experience scope creep, misaligned goals, and disappointing results if they don't clearly define integration goals.

Solution-

Comprehensive requirements analysis- Before beginning, engage stakeholders to collect detailed requirements and clearly define objectives.

Create a Roadmap- Divide the project into manageable stages, prioritizing integrations that are critical.

Define KPIs - Define metrics for measuring the success of MuleSoft, such as reduced processing times or improved data accuracy.

2. Lack of Expertise in MuleSoft and APIs

MuleSoft's features are robust and require a thorough understanding of connectors, APIs, and integration patterns. Teams lacking sufficient expertise may face delays or errors during implementation.

Solution-

Training- Invest in online MuleSoft training for your team or hire certified developers.

MuleSoft documentation- MuleSoft provides extensive resources and guides to help you understand its platform.

Use the MuleSoft Partner Network- Collaborate on complex projects with MuleSoft certified implementation partners.

3. Managing Data Complexity

Integrating data across multiple systems can lead to redundancies, inconsistencies and issues with quality. It can be difficult to handle complex data transformations while ensuring a clean data exchange.

Solution-

Implement Data Governance- Establish policies to ensure data security, consistency, and quality before integration.

Use DataWeave- Use MuleSoft's DataWeave language for efficient data transformation.

Test Early & Often- Perform thorough testing to ensure accuracy of data across integrated systems.
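DataWeave is MuleSoft's own transformation language, so the sketch below is not DataWeave. It is a plain-Python illustration of the same idea: mapping a record from one system's shape to another's and validating it before it moves on, the kind of check worth testing early. The CRM and ERP field names are hypothetical.

```python
from datetime import date

def crm_to_erp(crm: dict) -> dict:
    """Map a hypothetical CRM customer record to a hypothetical ERP shape."""
    return {
        "customerId": crm["Id"].strip(),
        "name": f'{crm["FirstName"].strip()} {crm["LastName"].strip()}',
        "email": crm["Email"].strip().lower(),
        # Keep only the date part of an ISO timestamp such as 2024-03-01T10:15:00Z.
        "since": date.fromisoformat(crm["CreatedDate"][:10]).isoformat(),
    }

def validate_erp(record: dict) -> list[str]:
    """Return a list of data-quality problems; an empty list means the record is clean."""
    problems = []
    if not record["customerId"]:
        problems.append("missing customerId")
    if "@" not in record["email"]:
        problems.append(f"invalid email: {record['email']!r}")
    return problems
```

Keeping the mapping and the validation as separate functions makes each easy to unit-test with sample records from both systems before any integration goes live.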

4. Performance Bottlenecks

Integrations that are not optimized well can lead to slow response times, increased latency and system downtimes, disrupting business processes.

Solution-

Optimize the Flows - Reduce system load by using asynchronous processing, caching and synchronization.

Monitor Performance - Use MuleSoft Anypoint Monitoring to track performance metrics and improve them.

Scale infrastructure- Make sure your infrastructure is able to handle an increase in traffic and workloads.
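Caching is one of the flow optimizations listed above. In a Mule application you would normally use Mule's own Cache scope for this. The plain-Python sketch below only illustrates the underlying idea: a small time-to-live cache in front of a slow backend call. The loader you pass in, such as a price lookup, is hypothetical.

```python
import time
from typing import Any, Callable

class TTLCache:
    """Tiny time-to-live cache: entries are reloaded after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so tests can control time
        self._entries: dict[Any, tuple[float, Any]] = {}

    def get_or_load(self, key: Any, loader: Callable[[Any], Any]) -> Any:
        now = self.clock()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]  # fresh cached value; backend not called
        value = loader(key)  # slow backend call
        self._entries[key] = (now, value)
        return value
```

With a 30-second TTL, repeated requests for the same key within 30 seconds hit the cache, and the first request after that reloads from the backend. Choosing the TTL is a trade-off between backend load and how stale the data may be.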

5. Security and Compliance Issues

A MuleSoft implementation is not complete without ensuring data security and regulatory compliance. Missteps can lead to the exposure of sensitive information and even legal penalties.

Solution-

Built-in Security Features- Use MuleSoft security features such as encryption, OAuth, and tokenization.

Regular Audits- Conduct periodic compliance audits to ensure adherence to regulations such as GDPR and HIPAA.

Role-Based Access Control (RBAC)- Restrict access to integration resources according to user roles.
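In Anypoint Platform, roles and permissions are managed in Access Management rather than in your own code. The Python sketch below just shows the underlying RBAC idea: users map to roles, roles map to permissions, and every request is checked against that mapping. The role names, permissions, and users are hypothetical.

```python
# Hypothetical roles and the actions each may perform on integration resources.
ROLE_PERMISSIONS = {
    "integration-admin": {"deploy", "view-logs", "edit-api"},
    "developer": {"view-logs", "edit-api"},
    "auditor": {"view-logs"},
}

# Hypothetical user-to-role assignments.
USER_ROLES = {
    "dana": {"integration-admin"},
    "li": {"developer"},
    "sam": {"auditor"},
}

def is_allowed(user: str, action: str) -> bool:
    """A user may perform an action if any of their roles grants it; unknown users are denied."""
    return any(action in ROLE_PERMISSIONS.get(role, set())
               for role in USER_ROLES.get(user, set()))
```

Here, a developer can edit APIs but not deploy them, and an auditor can only view logs. Granting permissions to roles rather than to individual users keeps access reviews manageable as teams change.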

6. Resistance to Change

MuleSoft implementations are often hampered by employees who have become accustomed to their legacy systems and resist the adoption of new tools.

Solution-

Spread Awareness- Tell your team about the benefits MuleSoft offers, like streamlined workflows or improved efficiency.

Training- Organize training sessions that involve hands-on experience to familiarize your employees with the platform.

Include Stakeholders - Involve key team members to increase buy-in.

7. Integration with Legacy Systems

Integrating MuleSoft with older systems is a common challenge, because these systems often lack modern APIs and have limited or inadequate documentation.

Solution-

MuleSoft connectors- Use pre-built connectors, or create custom APIs for bridging gaps.

Gradual migration- If possible, transition gradually from legacy systems to new solutions to reduce the complexity.

Consult Experts - Seek MuleSoft professionals' guidance for any legacy system integration issues.

8. Scalability Concerns

MuleSoft is often implemented for the current requirements of a business without consideration of future scalability. This leads to rework when demand grows.

Solution-

Scalability Design- Create reusable APIs, integration components and a scalable architecture to support future growth.

Cloud Deployment - MuleSoft can be deployed on cloud platforms for elastic scaling.

Monitor trends- Assess business needs regularly and adjust integration strategy.

9. Cost Overruns

MuleSoft costs can increase due to poor planning, unanticipated technical challenges or prolonged project timelines.

Solution-

Budget realistically - Include software licensing, training and maintenance costs in your budget.

Agile Project management- Adopt a cost-effective iterative approach.

Use the built-in tools- MuleSoft has pre-built components that can reduce development costs and time.

10. Maintenance and Upgrades

Maintaining and updating MuleSoft integrations after implementation can be difficult without a plan.

Solution-

Create a Maintenance Plan- Define roles and responsibilities for monitoring and maintaining the integrations.

Automate updates- Use automation to deploy upgrades with minimum disruption.

Regular Training- Keep your team up to date on MuleSoft's latest features, best practices, and updates.

Bottom Line

MuleSoft can be used to transform the integration capabilities of an organization, but this requires careful planning, expert execution and constant monitoring. Businesses can maximize the potential of MuleSoft by proactively addressing issues such as data integration, legacy system integration and security concerns. MuleSoft can be a cornerstone in your digital transformation journey with the right strategies and commitment to best practices.


What is a DevOps Engineer? A Look Inside The Role

In the rapidly evolving field of technology, the DevOps Engineer has become an essential link between development & operations teams. In addition to emphasizing teamwork, this hybrid role uses a number of practices & technologies to improve the reliability & efficiency of software delivery. But what exactly does a DevOps Engineer do, & why is the role so important in today's digital environment? Let us take a closer look.

The Birth of DevOps

To understand the role of a DevOps Engineer, it is essential to grasp the context from which it arose. Imagine two teams, development & operations, as rival sports teams, each with its own playbook. Developers focus on building features, while operations teams are concerned with stability & uptime. Traditionally, these two groups worked in silos, leading to communication gaps & conflicts.

The DevOps movement emerged as a solution to this challenge, advocating a cultural shift where collaboration & shared responsibility take center stage. By bringing these two teams together, organizations can deliver software more quickly & reliably, much like a well-coordinated sports team executing a flawless play.

What Does a DevOps Engineer Do?

The fundamental responsibilities of a DevOps Engineer are to facilitate this communication & ensure a smooth workflow. Here are a few of the main duties that characterize their work:

Collaboration & Communication

DevOps Engineers connect development & operations. They foster open communication so that both teams are aware of each other's objectives & difficulties. This partnership streamlines procedures & shortens the time it takes to launch new features.

Continuous Integration & Continuous Deployment (CI/CD)

Implementing CI/CD pipelines is one of the defining parts of a DevOps Engineer's job. Think of this process as a well-maintained manufacturing assembly line. Continuous integration lets developers merge their changes into the main branch frequently, with each change automatically built & tested. Continuous deployment then automates the release of new code to production. This lowers the risk of errors & enables updates to be delivered quickly.
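Here is a minimal sketch of the assembly-line idea, assuming each stage is just a Python function that returns True on success. Real pipelines are defined in a CI system's own configuration, such as Jenkins, GitHub Actions, or GitLab CI. The stage names used with it are hypothetical.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]  # (stage name, step that returns success)

def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure, like a CI/CD pipeline."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'passed' if ok else 'FAILED'}")
        if not ok:
            # Later stages (e.g. deploy) never run after a failure.
            log.append(f"pipeline stopped at {name!r}")
            break
    return log
```

Suppose the stages are build, test, and deploy and the test stage fails. The deploy stage never runs: broken code stops at the failing check instead of reaching production.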

Infrastructure as Code (IaC)

DevOps Engineers frequently use Infrastructure as Code (IaC). IaC manages infrastructure setup the same way as application code, through version-controlled, machine-readable definition files. Consider building a Lego set: rather than placing each brick by hand, you write a script that assembles everything automatically. This approach speeds up deployment, improves consistency, & reduces manual errors.
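IaC tools such as Terraform work from a declared desired state. As a rough illustration only, and not how any particular tool is implemented, the sketch compares a hypothetical desired set of servers with what currently exists. It computes the create, update, & delete actions needed, the same "plan" idea IaC tools are built around. Server names & specs are hypothetical.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> list[tuple[str, str]]:
    """Compare desired vs. actual resources and return sorted (action, name) pairs."""
    actions = []
    for name, spec in desired.items():
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    for name in actual:
        if name not in desired:
            actions.append(("delete", name))
    return sorted(actions)

desired = {
    "web-1": {"size": "small", "image": "ubuntu-24.04"},
    "web-2": {"size": "small", "image": "ubuntu-24.04"},
}
actual = {
    "web-1": {"size": "micro", "image": "ubuntu-24.04"},
    "old-batch": {"size": "large", "image": "ubuntu-20.04"},
}
print(plan(desired, actual))
```

For these inputs the plan is [('create', 'web-2'), ('delete', 'old-batch'), ('update', 'web-1')]. Running it again once the changes are applied yields an empty plan, which is what makes declarative definitions repeatable.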

Monitoring & Performance Optimization

Once applications are deployed, DevOps Engineers continuously monitor their performance, much like a coach analyzing a player's game to find areas for improvement. They use various tools to track application health, gather metrics, & make data-driven decisions that optimize performance & keep users satisfied.
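As a small example of this kind of data-driven check, the sketch computes an error rate & a 95th-percentile latency from a batch of hypothetical request samples. It flags an alert when either crosses a threshold. The thresholds are illustrative. In practice, these numbers usually come from a monitoring system such as Prometheus rather than hand-written code.

```python
import math

def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def health_report(samples: list[tuple[float, int]],
                  max_p95_ms: float = 500.0, max_error_rate: float = 0.01) -> dict:
    """samples are (latency_ms, http_status) pairs for recent requests."""
    if not samples:
        raise ValueError("no samples to evaluate")
    latencies = [latency for latency, _ in samples]
    errors = sum(1 for _, status in samples if status >= 500)  # server errors only
    p95 = percentile(latencies, 95)
    error_rate = errors / len(samples)
    return {
        "p95_ms": p95,
        "error_rate": error_rate,
        "alert": p95 > max_p95_ms or error_rate > max_error_rate,
    }
```

Percentiles are used instead of averages because a handful of very slow requests can hide behind a healthy-looking mean. The p95 shows what the slowest 5% of users actually experience.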

Security Integration

In today's digital landscape, security is paramount. DevOps Engineers integrate security practices into the development process, an approach often referred to as DevSecOps. By considering security from the outset, they help mitigate risks & make applications resilient against potential threats.

Skills & Tools

To excel as a DevOps Engineer, a mix of technical & soft skills is essential. Proficiency in programming languages, cloud platforms, & automation tools is crucial. Familiarity with containerization technologies like Docker & orchestration tools like Kubernetes is also highly advantageous.

Moreover, soft skills such as problem-solving, communication, & collaboration are just as important. DevOps Engineers must navigate various personalities & work styles while keeping the end goal in mind: delivering high-quality software that meets user needs.

The Impact of a DevOps Engineer

The influence of a DevOps Engineer can be likened to that of a skilled conductor leading an orchestra. Each section plays its part, but it is the conductor who ensures harmony & synchrony. DevOps Engineers foster collaboration, implement efficient processes, & emphasize automation, and in doing so they significantly improve software delivery speed & reliability. This not only enhances team productivity but also increases customer satisfaction.

Organizations embracing DevOps practices often experience shorter development cycles, higher deployment frequencies, & lower failure rates for new releases. In a world of ever-increasing customer expectations, having a dedicated DevOps Engineer can be the difference between a thriving application & one that falls flat.

Bottom Line

Looking across software development & operations, it becomes evident that DevOps Engineers are essential for encouraging cooperation & bridging gaps. Their capacity to automate workflows, improve communication, & unify processes enables businesses to produce high-quality products & react quickly to market demands.

For those aspiring to become proficient in this field, engaging in DevOps training online can provide a solid foundation. These courses often cover essential tools, methodologies, and best practices, allowing students to develop the necessary skills to thrive as DevOps Engineers.

In essence, a DevOps Engineer is not just a role. It is a critical element of modern software development that embodies the spirit of teamwork, innovation, & continuous improvement. So the next time you think about software delivery, remember the invisible architects behind the scenes, orchestrating the blend of development & operations that makes success possible.
