#what is data visualization in python


What Is Data Visualization? Explained by Analytics Training Hub, the Best Online Institute

All About Data Visualization:

Data visualization tools have made interpreting and studying large datasets extremely convenient, and they have largely ended the worry of how to manipulate and handle data. Earlier methods of analyzing data without such tools were a laborious and mind-boggling job. Since the arrival of tools capable of handling data and presenting it visually, it has become much easier to work with data and gain insights through engaging graphics that support decision-making.

Data visualization answers an age-old human need to uncover hidden truths. A classic example is Galileo: once he had access to a telescope, he observed the Sun for 40 days and each day drew a picture of what he saw on its surface. Analyzing these 40 pictures afterwards made clear the phenomenon of "sunspots", which continues to puzzle astronomers to this day.

The secret of a successful infographic is that it tells a complete and precise story about the data it represents.

From Galileo's sunspot drawings to the most recent data visualization tools, humanity has come a long way. Today, data visualization is most often associated with business intelligence and business analytics.

In this context, we would like to highlight two well-known data visualization tools that have had a deep impact on their users, helping them turn complex, unorganized data into simple, informative visualizations. These two powerful, interactive tools are Power BI and Tableau.

Data Visualization using Power BI

Microsoft Power BI was released by Microsoft in 2013 and was originally built on the MS Excel platform. The idea behind it was to let small businesses create data visualizations, gain meaningful insights, and develop ideas to grow their business. Power BI data is also encrypted and only becomes visible to others when it is deliberately published, so it cannot be edited by outsiders; and because Power BI is a paid service with enterprise access, competitors cannot threaten the data model or the integrity of a visualization.

What is the Power BI?

Power BI is a cloud-based business intelligence service that helps you import data, clean and transform it, and build dynamic visualizations from the processed data.

Power BI combines Excel's Power Query and Power Pivot data model with DAX (Data Analysis Expressions), charting, and visualization in a single tool. Below are the pros and cons of using Power BI as a data visualization tool.

Advantages of Power BI:

Detailed custom data visualization

This is the tool's most distinctive feature: it combines the user's own ideas with a wide range of visualization options, so each user can build custom visualizations that differ from anyone else's. The tool encourages the flow of ideas and makes visualization a fun task.

Integrated with different data software

Power BI can import data from many sources, e.g. MS Excel, MySQL, XML, JSON, and cloud-based services, which makes visualization easy and productive. It was initially designed to work only with data from MS Excel, but over the years it has been extended to extract, clean, and transform data from other sources as well.

Integrated with MS Excel

The tool lets users extract raw data from different sources and, after cleaning, filtering, and slicing it in a Power BI report or dashboard, export it to Excel. This integration lets users keep a simpler, more manageable tabular version of the data in Excel behind a Power BI visualization that is still a work in progress.

Timely Updates

Power BI receives monthly updates, and users are notified about each one. There is also a Microsoft Power BI community forum where users can suggest improvements; the ideas with the best ratings have a good chance of being picked for a future update.

Power BI Embedded

This feature lets application developers embed Power BI reports and features into their own applications, so they can include Power BI visuals or reports in emails or websites and maximize their reach to readers.

Collaborative visualizations

The most talked-about advantage of Power BI is its ability to create interactive, powerful business intelligence visualizations. These give users and readers a fresh perspective on which decisions or strategies will help the business achieve better sales, profits, market share, and so on. Interactive reports and dashboards provide a descriptive, detailed representation of the data and the truths hidden in it.


Disadvantages of using Power BI:

Complex Relationships

Power BI works extremely well with single-directional relationships between datasets in a data model. But if the datasets need more than one interlink to build a visualization or dashboard, Power BI may not be the right platform. So when creating relationships in Power BI, users need to be careful about which datasets they integrate into a visualization.

Jammed UI

Many users have reported on the Power BI forum that the user interface feels crowded, with so many functions on screen that it gets in the way of work and breaks the momentum built up while creating a data visualization.

Not a master of large data

Power BI is not designed for very large datasets; it works well with data within the 10 GB limit. Beyond that, Power BI is not the most recommended visualization tool. This makes Power BI a good fit for smaller companies and businesses, which also explains its lower cost.

Data Visualization using Tableau

Tableau was the brainchild of three American developers, Chris Stolte, Pat Hanrahan, and Christian Chabot. Their idea was to build a platform that could work interactively with heavy datasets and combine them into visually compelling data visualizations that give management intuitive insights for decision-making. Using business intelligence to visualize data had long been a goal that fascinated many, and with the introduction of Tableau, interpreting data and drawing meaningful insights became easier than ever before.

What is Tableau?

Tableau is interactive software for exploring and working with multiple datasets to create an array of visually appealing infographics. Its visualizations and business intelligence features help a company's management gain meaningful insights into current and future market trends, and interactive capabilities such as 'Ask Data' let users query the data from the different sources imported into Tableau.

Industries such as healthcare, insurance, manufacturing, education, marketing, wholesale, and many other business domains make widespread use of the insightful data visualizations Tableau produces.

Advantages of using Tableau:

Exceptional Visualizations

Its most important, and most distinctive, advantage is its extraordinary capability for storytelling with data using business intelligence, which has earned Tableau recognition from Gartner, the international IT research and advisory firm, as a leader among business intelligence and analytics platforms.

Ease of Use

Compared with other business intelligence tools on the market, Tableau is considered the most accessible and user-friendly. Tableau professionals have been quoted saying that "to master Tableau, one does not need to be from the technical field", which in layman's terms means that anyone, even without coding or programming knowledge, can learn and master Tableau to create visually compelling visualizations.

Master of Large Datasets

Tableau can extract data from large datasets with no practical limit on the amount extracted, which is a dream come true for many data analysts. Its fluency with extremely large datasets is a sight to behold: Tableau handles them efficiently and can work with data from several different sources at the same time.


Disadvantages of using Tableau:

High Cost

This is probably the most significant disadvantage of Tableau: small and mid-sized businesses may struggle to afford the software. On top of that, proper deployment, integration, maintenance, and staff training add to the cost.

Tableau is basically designed for larger enterprises, for which it is economical in the long run.

Meager post-sale support

A major issue many users face is post-sale support from the Tableau sales team. Once a user has bought any of the software's services, support after the transaction is poor, whether for a renewal or for buying a new service or license. The sales team is also rigid about offering deals that would best suit the user's needs.

Asking Queries

Earlier versions of Tableau had a very poor query editor that could not be used to ask definitive questions of the data. Tableau 2019.1 introduced a feature called 'Ask Data' that lets users raise queries, but not against a compiled visualization: questions are asked of the data source as a whole, not of the visualizations already built in Tableau. Each query to the data source produces its own visualization, and the results of multiple queries cannot be combined into one common visualization.

Conclusion

Both Power BI and Tableau are game changers in their fields. Each comes with its own set of pros and cons, and both have redefined the way we do data visualization. Rather than declaring a winner, this article briefly describes the features of Power BI and Tableau. Power BI is ideal for small to mid-sized businesses whose data does not exceed the 10 GB limit, while Tableau works wonders for multinational firms with a global reach.

Power BI lets its users integrate a query within a visualization, while Tableau lets users query the data collected from the data source. Both tools can produce data visualizations suited to a business's needs. The bottom line: if you are a small or mid-sized business planning to make it big, Power BI is the tool to move forward with, and it is cost-effective for the long-term goals of SMEs (small and medium enterprises). Tableau, on the other hand, works best for companies with a larger budget, and it proves cost-effective in the long term thanks to its ability to handle massive amounts of data with ease and build rich dashboards.


#data visualization tools#what is data visualization in python#what is data visualization in power bi#what is data visualization in data science#what is data visualization in machine learning#importance of data visualization


What is Pandas in Python?

Introduction

What is Pandas? What does the name “Pandas” stand for? Why use Pandas?

Getting Started with Pandas

Installing Pandas

Creating your first DataFrame

Exploring and manipulating data in DataFrames

Key Features of Pandas

Data Structures

Data Manipulation and Analysis

Visualization

Conclusion

Summary of key benefits

Why Pandas is essential for Python data science

FAQs

What is…


What kind of bubble is AI?

My latest column for Locus Magazine is "What Kind of Bubble is AI?" All economic bubbles are hugely destructive, but some of them leave behind wreckage that can be salvaged for useful purposes, while others leave nothing behind but ashes:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Think about some 21st century bubbles. The dotcom bubble was a terrible tragedy, one that drained the coffers of pension funds and other institutional investors and wiped out retail investors who were gulled by Super Bowl ads. But there was a lot left behind after the dotcoms were wiped out: cheap servers, office furniture and space, but far more importantly, a generation of young people who'd been trained as web makers, leaving nontechnical degree programs to learn HTML, Perl and Python. This created a whole cohort of technologists from non-technical backgrounds, a first in technological history. Many of these people became the vanguard of a more inclusive and humane tech development movement, and they were able to make interesting and useful services and products in an environment where raw materials – compute, bandwidth, space and talent – were available at firesale prices.

Contrast this with the crypto bubble. It, too, destroyed the fortunes of institutional and individual investors through fraud and Super Bowl ads. It, too, lured in nontechnical people to learn esoteric disciplines at investor expense. But apart from a smattering of Rust programmers, the main residue of crypto is bad digital art and worse Austrian economics.

Or think of Worldcom vs Enron. Both bubbles were built on pure fraud, but Enron's fraud left nothing behind but a string of suspicious deaths. By contrast, Worldcom's fraud was a Big Store con that required laying a ton of fiber that is still in the ground to this day, and is being bought and used at pennies on the dollar.

AI is definitely a bubble. As I write in the column, if you fly into SFO and rent a car and drive north to San Francisco or south to Silicon Valley, every single billboard is advertising an "AI" startup, many of which are not even using anything that can be remotely characterized as AI. That's amazing, considering what a meaningless buzzword AI already is.

So which kind of bubble is AI? When it pops, will something useful be left behind, or will it go away altogether? To be sure, there's a legion of technologists who are learning TensorFlow and PyTorch. These nominally open source tools are bound, respectively, to Google's and Facebook's AI environments:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

But if those environments go away, those programming skills become a lot less useful. Live, large-scale Big Tech AI projects are shockingly expensive to run. Some of their costs are fixed – collecting, labeling and processing training data – but the running costs for each query are prodigious. There's a massive primary energy bill for the servers, a nearly as large energy bill for the chillers, and a titanic wage bill for the specialized technical staff involved.

Once investor subsidies dry up, will the real-world, non-hyperbolic applications for AI be enough to cover these running costs? AI applications can be plotted on a 2X2 grid whose axes are "value" (how much customers will pay for them) and "risk tolerance" (how perfect the product needs to be).

Charging teenaged D&D players $10/month for an image generator that creates epic illustrations of their characters fighting monsters is low value and very risk tolerant (teenagers aren't overly worried about six-fingered swordspeople with three pupils in each eye). Charging scammy spamfarms $500/month for a text generator that spits out dull, search-algorithm-pleasing narratives to appear over recipes is likewise low-value and highly risk tolerant (your customer doesn't care if the text is nonsense). Charging visually impaired people $100/month for an app that plays a text-to-speech description of anything they point their cameras at is low-value and moderately risk tolerant ("that's your blue shirt" when it's green is not a big deal, while "the street is safe to cross" when it's not is a much bigger one).

Morgan Stanley doesn't talk about the trillions the AI industry will be worth some day because of these applications. These are just spinoffs from the main event, a collection of extremely high-value applications. Think of self-driving cars or radiology bots that analyze chest X-rays and characterize masses as cancerous or noncancerous.

These are high value – but only if they are also risk-tolerant. The pitch for self-driving cars is "fire most drivers and replace them with 'humans in the loop' who intervene at critical junctures." That's the risk-tolerant version of self-driving cars, and it's a failure. More than $100b has been incinerated chasing self-driving cars, and cars are nowhere near driving themselves:

https://pluralistic.net/2022/10/09/herbies-revenge/#100-billion-here-100-billion-there-pretty-soon-youre-talking-real-money

Quite the reverse, in fact. Cruise was just forced to quit the field after one of their cars maimed a woman – a pedestrian who had not opted into being part of a high-risk AI experiment – and dragged her body 20 feet through the streets of San Francisco. Afterwards, it emerged that Cruise had replaced the single low-waged driver who would normally be paid to operate a taxi with 1.5 high-waged skilled technicians who remotely oversaw each of its vehicles:

https://www.nytimes.com/2023/11/03/technology/cruise-general-motors-self-driving-cars.html

The self-driving pitch isn't that your car will correct your own human errors (like an alarm that sounds when you activate your turn signal while someone is in your blind-spot). Self-driving isn't about using automation to augment human skill – it's about replacing humans. There's no business case for spending hundreds of billions on better safety systems for cars (there's a human case for it, though!). The only way the price-tag justifies itself is if paid drivers can be fired and replaced with software that costs less than their wages.

What about radiologists? Radiologists certainly make mistakes from time to time, and if there's a computer vision system that makes different mistakes than the sort that humans make, they could be a cheap way of generating second opinions that trigger re-examination by a human radiologist. But no AI investor thinks their return will come from selling hospitals that reduce the number of X-rays each radiologist processes every day, as a second-opinion-generating system would. Rather, the value of AI radiologists comes from firing most of your human radiologists and replacing them with software whose judgments are cursorily double-checked by a human whose "automation blindness" will turn them into an OK-button-mashing automaton:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

The profit-generating pitch for high-value AI applications lies in creating "reverse centaurs": humans who serve as appendages for automation that operates at a speed and scale that is unrelated to the capacity or needs of the worker:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

But unless these high-value applications are intrinsically risk-tolerant, they are poor candidates for automation. Cruise was able to nonconsensually enlist the population of San Francisco in an experimental murderbot development program thanks to the vast sums of money sloshing around the industry. Some of this money funds the inevitabilist narrative that self-driving cars are coming, it's only a matter of when, not if, and so SF had better get in the autonomous vehicle or get run over by the forces of history.

Once the bubble pops (all bubbles pop), AI applications will have to rise or fall on their actual merits, not their promise. The odds are stacked against the long-term survival of high-value, risk-intolerant AI applications.

The problem for AI is that while there are a lot of risk-tolerant applications, they're almost all low-value; while nearly all the high-value applications are risk-intolerant. Once AI has to be profitable – once investors withdraw their subsidies from money-losing ventures – the risk-tolerant applications need to be sufficient to run those tremendously expensive servers in those brutally expensive data-centers tended by exceptionally expensive technical workers.

If they aren't, then the business case for running those servers goes away, and so do the servers – and so do all those risk-tolerant, low-value applications. It doesn't matter if helping blind people make sense of their surroundings is socially beneficial. It doesn't matter if teenaged gamers love their epic character art. It doesn't even matter how horny scammers are for generating AI nonsense SEO websites:

https://twitter.com/jakezward/status/1728032634037567509

These applications are all riding on the coattails of the big AI models that are being built and operated at a loss in the hope of eventually becoming profitable. If they remain unprofitable long enough, the private sector will no longer pay to operate them.

Now, there are smaller models, models that stand alone and run on commodity hardware. These would persist even after the AI bubble bursts, because most of their costs are setup costs that have already been borne by the well-funded companies who created them. These models are limited, of course, though the communities that have formed around them have pushed those limits in surprising ways, far beyond their original manufacturers' beliefs about their capacity. These communities will continue to push those limits for as long as they find the models useful.

These standalone, "toy" models are derived from the big models, though. When the AI bubble bursts and the private sector no longer subsidizes mass-scale model creation, it will cease to spin out more sophisticated models that run on commodity hardware (it's possible that Federated learning and other techniques for spreading out the work of making large-scale models will fill the gap).

So what kind of bubble is the AI bubble? What will we salvage from its wreckage? Perhaps the communities who've invested in becoming experts in PyTorch and TensorFlow will wrestle them away from their corporate masters and make them generally useful. Certainly, a lot of people will have gained skills in applying statistical techniques.

But there will also be a lot of unsalvageable wreckage. As big AI models get integrated into the processes of the productive economy, AI becomes a source of systemic risk. The only thing worse than having an automated process that is rendered dangerous or erratic based on AI integration is to have that process fail entirely because the AI suddenly disappeared, a collapse that is too precipitous for former AI customers to engineer a soft landing for their systems.

This is a blind spot in our policymakers' debates about AI. The smart policymakers are asking questions about fairness, algorithmic bias, and fraud. The foolish policymakers are ensnared in fantasies about "AI safety," AKA "Will the chatbot become a superintelligence that turns the whole human race into paperclips?"

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

But no one is asking, "What will we do if" – when – "the AI bubble pops and most of this stuff disappears overnight?"

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/12/19/bubblenomics/#pop

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

--

tom_bullock (modified)

https://www.flickr.com/photos/tombullock/25173469495/

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/


The FreeCodeCamp Study Challenge!

I literally just completed this challenge, and I thought: why not share it here for other people to take part in if they want to!

FreeCodeCamp is an open-source platform that offers various coding courses and certifications for web developers. The goal of this challenge is to choose one of the available courses on the FreeCodeCamp platform, complete the course, and earn the certificate at the end.

The challenge is self-paced, so the duration is entirely up to you. It's there to motivate people to start coding and/or continue their coding studies, especially people in the Codeblr community!

FreeCodeCamp offers the following courses:

(NEW) Responsive Web Design Certification (I've done this one)

JavaScript Algorithms and Data Structures Certification (I am going to do this one next)

Front End Libraries Certification

Data Visualization Certification

APIs and Microservices Certification

Quality Assurance Certification

Scientific Computing with Python Certification

Data Analysis with Python Certification

Information Security Certification

Machine Learning with Python Certification

Each course is broken down into multiple sections, and completing all the sections in a course will earn you a certification for that course.

To start the FreeCodeCamp Challenge, follow the steps below:

Choose a course on the FreeCodeCamp platform that you would like to complete.

Complete the course and earn the certificate.

Post about your progress every day that you study using the #freecodecampchallenge hashtag. You can post about what you have done towards the challenge, what you have learned, and any challenges you faced and how you overcame them.

The FreeCodeCamp Challenge is an excellent opportunity to improve your coding skills and earn a valuable certification!!!! You can even add it to your resume/CV! I completed this challenge, and you can see me posting about it.

Remember to post about your progress using the #freecodecampchallenge hashtag to track your progress and connect with other participants. And you don't have to study on consecutive days, so you can take days off whenever you feel like it!

Good luck!

#freecodecampchallenge#freecodecamp#studyblr challenge#study challenge#codeblr#learn to code#progblr#studyblr#cs studyblr#cs academia#computer science#online learning#coding#programming#compsci#studying#webdev#frontend development#html css#html#css#comp sci#100 days of code#coding study#coding bootcamp


Share Your Anecdotes: Multicore Pessimisation

I took a look at the specs of new 7000 series Threadripper CPUs, and I really don't have any excuse to buy one, even if I had the money to spare. I thought long and hard about different workloads, but nothing came to mind.

Back in university, we had courses about map/reduce clusters, and I experimented with parallel interpreters for Prolog, and distributed computing systems. What I learned is that the potential performance gains from better data structures and algorithms trump the performance gains from fancy hardware, and that there is more to be gained from using the GPU or from re-writing the performance-critical sections in C and making sure your data structures take up less memory than from multi-threaded code. Of course, all this is especially important when you are working in pure Python, because of the GIL.

The performance penalty of parallelisation hits even harder when you try to distribute your computation between different computers over the network, and the overhead of serialisation, communication, and scheduling work can easily exceed the gains of parallel computation, especially for small to medium workloads. If you benchmark your Hadoop cluster on a toy problem, you may well find that it's faster to solve your toy problem on one desktop PC than a whole cluster, because it's a toy problem, and the gains only kick in when your data set is too big to fit on a single computer.

The new Threadripper got me thinking: Has this happened to somebody with just a multicore CPU? Is there software that performs better with 2 cores than with just one, and better with 4 cores than with 2, but substantially worse with 64? It could happen! Deadlocks, livelocks, weird inter-process communication issues where you have one process per core and every one of the 64 processes communicates with the other 63 via pipes? There could be software that has a badly optimised main thread, or a badly optimised work unit scheduler, and the limiting factor is single-thread performance of that scheduler that needs to distribute and integrate work units for 64 threads, to the point where the worker threads are mostly idling and only one core is at 100%.
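A back-of-the-envelope way to see how this could happen is an Amdahl-style cost model with an extra coordination term. This is a toy model, not a benchmark: it assumes a job with a serial part, a perfectly parallel part split across n workers, and a per-worker cost paid by a single scheduler thread. The function names and constants are made up for illustration.

```python
def runtime(n_workers: int, serial: float, parallel: float, per_worker_cost: float) -> float:
    """Toy model: serial part + parallel part split n ways + coordination cost that grows with n."""
    return serial + parallel / n_workers + per_worker_cost * n_workers


def best_worker_count(max_workers: int, serial: float, parallel: float, per_worker_cost: float) -> int:
    """Return the worker count in 1..max_workers with the lowest modelled runtime."""
    return min(
        range(1, max_workers + 1),
        key=lambda n: runtime(n, serial, parallel, per_worker_cost),
    )


if __name__ == "__main__":
    # Hypothetical constants in seconds: 1 s of serial work, 60 s of parallelisable work,
    # and 0.15 s of scheduler overhead per worker thread.
    s, p, c = 1.0, 60.0, 0.15
    for n in (1, 2, 4, 8, 16, 32, 64):
        print(f"{n:>2} workers: {runtime(n, s, p, c):6.2f} s")
    print("best worker count:", best_worker_count(64, s, p, c))
```

With these made-up constants the modelled optimum is 20 workers, and 64 workers come out slower than 8. The minimum of s + p/n + c·n sits near n = √(p/c), so the cheaper the per-worker coordination, the more cores pay off before the scheduler becomes the bottleneck.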

I am not trying to blame any programmer if this happens. Most likely such software was developed back when quad-core CPUs were a new thing, or even back when there were multi-CPU-socket mainboards, and the developer never imagined that one day there would be Threadrippers on the consumer market. Programs from back then, built for Windows XP, could still run on Windows 10 or 11.

In spite of all this, I suspect that this kind of problem is quite rare in practice. It requires software that spawns one thread or one process per core but is deoptimised for more cores, maybe written under the assumption that users have two to six CPU cores; a user who can afford a Threadripper and needs a Threadripper; and a workload where the problem is noticeable. You wouldn't get a Threadripper in the first place if it made your workflows slower, so that hypothetical user probably has one main workload that really benefits from the many cores, and another that doesn't.

So, has this happened to you? Do you have a Threadripper at work? Do you work in bioinformatics or visual effects? Do you encode a lot of video? Do you know a guy who does? Do you own a Threadripper or an Ampere just for the hell of it? Or have you tried to build a Hadoop/Beowulf/OpenMP cluster, only to have your code run slower?

I would love to hear from you.


AO3 analysis [May 2022, English]

Back in May I scraped a bunch of Detective Conan metadata off of AO3 and did some data analysis!

I used English-language works only. There were a total of 5,945 works included.

Important caveat: This analysis does not include fics that are locked to logged-in AO3 users only. For example, Curiosity Made the Cat is very popular, but wasn't included in these numbers.

Another caveat: This doesn't include the 50 or so fics that were Magic-Kaito-only, at the time of scraping.

The following graphics all have image descriptions. Please hover or tap to see them.

Works over time

First, total number of DetCo works on AO3, over time!

Big acceleration around 2016. I'll let more informed minds speculate as to why.

Characters

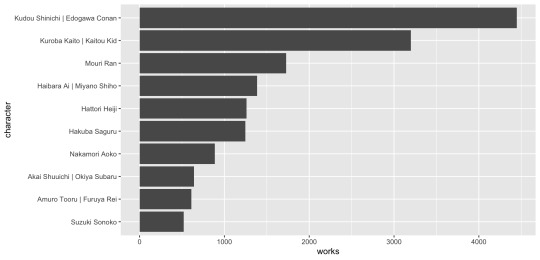

Next, let's look at some per-character statistics. Here is the number of AO3 works each of the top 10 characters appears in.

As is probably appropriate for the main character, Shinichi/Conan is in 75% of fics. Kaito, who isn't even part of the regular cast, comes in at #2 with 3,000 works.

And here is the number of ships each of them appears in.

Shinichi has probably been shipped with the entire cast at this point, including himself.

Creators

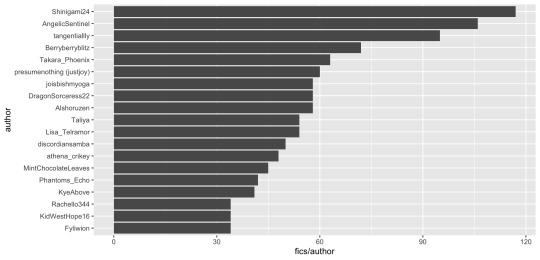

Some authors write a lot. The most prolific by number of Detective Conan works posted to AO3 are:

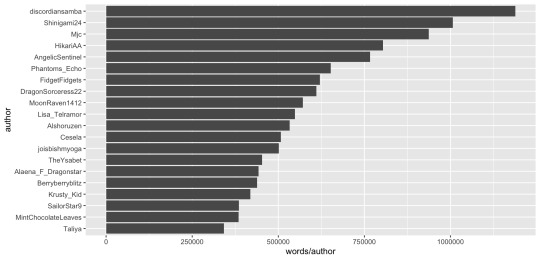

And by total number of words posted across all Detective Conan works on AO3:

Some authors write for a large variety of relationships, too.

Kudos per hit

Want to know what brings in kudos (and probably Kudous)?

Note: The following graphs are density estimates made with the default parameters of R's ggplot2.

Caveat: Works with very high kudos-to-hits ratios but low hit counts, where all of the kudos came from guests, were excluded, as their statistics are probably the result of bot activity.

The following graphs are all kernel density estimates, essentially smoothed versions of a histogram, scaled so the area under the curve equals 1. If you don't know what that means, right equals more kudos per hit, and left equals fewer.
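As a rough illustration of what a kernel density estimate is, here is a stdlib-only Python sketch. It is not the author's code (the graphs were made in R with ggplot2). It places one Gaussian bump on each data point, picks the bump width with a rule modeled on R's default `bw.nrd0` bandwidth (as I understand that rule, with a simplified fallback), and checks that the area under the curve comes out at about 1. The kudos/hits ratios are made up.

```python
import math
import statistics


def nrd0_bandwidth(xs):
    """Rule-of-thumb bandwidth modeled on R's bw.nrd0 (Silverman's rule):
    0.9 * min(sd, IQR / 1.34) * n ** (-1/5)."""
    n = len(xs)
    sd = statistics.stdev(xs)
    # method="inclusive" should correspond to R's default (type 7) quantiles
    q1, _, q3 = statistics.quantiles(xs, n=4, method="inclusive")
    spread = min(sd, (q3 - q1) / 1.34)
    if spread == 0:  # simplified fallback for degenerate data
        spread = sd or 1.0
    return 0.9 * spread * n ** (-0.2)


def gaussian_kde(xs, bandwidth=None):
    """Return a function that estimates the probability density behind xs."""
    h = bandwidth if bandwidth is not None else nrd0_bandwidth(xs)
    scale = 1.0 / (len(xs) * h * math.sqrt(2 * math.pi))

    def density(x):
        # Sum of one Gaussian bump per data point, each of width h
        return scale * sum(math.exp(-0.5 * ((x - xi) / h) ** 2) for xi in xs)

    return density


def area_under(f, lo, hi, steps=2000):
    """Trapezoid-rule integral of f over [lo, hi]."""
    width = (hi - lo) / steps
    total = 0.5 * (f(lo) + f(hi))
    total += sum(f(lo + i * width) for i in range(1, steps))
    return total * width


# Made-up kudos/hits ratios, for illustration only
ratios = [0.02, 0.03, 0.035, 0.04, 0.05, 0.06, 0.08, 0.10]
h = nrd0_bandwidth(ratios)
density = gaussian_kde(ratios, h)
print(f"bandwidth: {h:.4f}")
area = area_under(density, min(ratios) - 5 * h, max(ratios) + 5 * h)
print(f"area under curve: {area:.4f}")
```

Reading the curve works as described above: a peak further to the right means more works with a high kudos-per-hit ratio.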

First, how rating affects the kudos-to-hits ratio.

The more family-friendly a work is, the more kudos per hit it's likely to see.

Here are kudos over hits, broken down by the top four Detective Conan ships on AO3.

Caveat: Only single-ship works were included.

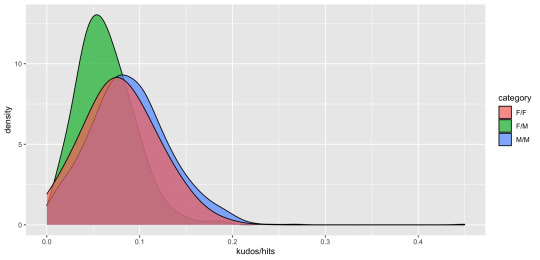

And here is a similar visualization, broken down by M/M, F/F, or F/M.

Caveat: Only single-category works were included.

Additional tags

And for fun, a word cloud of the 100 most common tags in the "additional tags" field.

FLUFF!

Keep in mind that the largest tag, "Fluff," represents only 793 fics out of roughly 6,000. For other reference points, "Angst" represents 487 fics and "Aged-up Characters" represents 62.

(If you saw a different version of this graphic on Discord, with Angst as the largest tag, I fixed a bug. Fluff is biggest :D)
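Counting tags for a word cloud like this one can be sketched with `collections.Counter`. This is not the author's code (the graphics were made in R); the tag lists below are hypothetical, and each tag is counted at most once per work, so the result reads as a number of fics, like the 793 figure above.

```python
from collections import Counter


def tag_counts(works):
    """Count how many works use each tag (a tag counts once per work)."""
    counts = Counter()
    for tags in works:
        counts.update(set(tags))  # set() so a repeated tag isn't double-counted
    return counts


# Hypothetical "additional tags" for four works, for illustration only
works = [
    ["Fluff", "Angst", "Fluff"],
    ["Fluff", "Aged-up Characters"],
    ["Angst", "Hurt/Comfort"],
    ["Fluff"],
]

counts = tag_counts(works)
top = counts.most_common(100)  # the 100 most common tags would feed the cloud
for tag, n in top:
    print(f"{tag}: {n} of {len(works)} works ({n / len(works):.0%})")

# For scale, using the numbers from the post: 793 of 5,945 works
fluff_share = 793 / 5945
print(f"Fluff share: {fluff_share:.1%}")
```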

Other notes

FFN version is here <3.

I used a lightly modified version of Radiolarian's AO3 Scraper to pull data off of AO3, Python to canonicalize tags and do some CSV reformatting, and R to generate the graphs.
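The post doesn't show its tag-canonicalization code, so the following is only a guess at the general shape of such a step: a hypothetical alias table maps variant spellings to one canonical tag, and the `csv` module rewrites a comma-separated tags column as one row per work and tag. The column names `work_id` and `tags` are assumptions, not the scraper's actual output format.

```python
import csv
import io

# Hypothetical alias table: variant spellings -> one canonical tag
ALIASES = {
    "fluff": "Fluff",
    "fluffy": "Fluff",
    "angst": "Angst",
    "aged up": "Aged-up Characters",
    "aged-up characters": "Aged-up Characters",
}


def canonicalize(tag: str) -> str:
    """Map a raw tag to its canonical form; unknown tags pass through trimmed."""
    cleaned = " ".join(tag.split())  # trim and collapse internal whitespace
    return ALIASES.get(cleaned.lower(), cleaned)


def reformat_csv(raw_csv: str) -> str:
    """Rewrite a CSV whose 'tags' column is comma-separated into one row
    per (work_id, canonical tag) pair, dropping duplicates within a work."""
    reader = csv.DictReader(io.StringIO(raw_csv))
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["work_id", "tag"])
    for row in reader:
        seen = set()
        for raw_tag in row["tags"].split(","):
            if not raw_tag.strip():
                continue  # skip empty entries such as trailing commas
            tag = canonicalize(raw_tag)
            if tag not in seen:
                seen.add(tag)
                writer.writerow([row["work_id"], tag])
    return out.getvalue()


raw = 'work_id,tags\n1,"Fluff, fluffy ,Angst"\n2,"aged up, Hurt/Comfort"\n'
print(reformat_csv(raw))
```

A long-format table like this (one row per work and tag) is easy to load in R for counting and plotting.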

Please ask me if you want to see the data or code.

An earlier version of this post mistakenly stated that Shinichi is in 5,000 fics. He was in just under 4,500, in May.

An earlier version of this post mistakenly stated that the rate of new fics was 50 fics per day in 2016. It was 50 fics per month.

An earlier version used “Curiosity Killed the Cat” as an example of a locked fic; the actual name of the fic is “Curiosity Made the Cat.”

#circ talks#circ computes#detective conan#dcmk#data analysis#reposting because tumblr didn't let the previous one into the tags#T_T

200 notes


Note

Favorite programming language?

honestly? kind of a basic answer but i default to python whenever i need to make something in my free time, it's just so much more hassle-free than other languages in the ways that help me skip the small obstacles at the start and along the way that ADHD would not let me get past otherwise

for something cooler and more specialized: Pure Data, a visual programming language for audio/music/synthesizer type stuff, i was making a synth from scratch in that years back and even planned on running it off a Raspberry Pi or something with a specific midi controller as its interface (i might still have the control layout plans somewhere)

what i do NOT have is the code itself, like 2 weeks after starting the project the hard drive it was on died

though i have literally just looked into finally attempting data recovery on that drive earlier this week, so maybe i can revive it after all this time?

anyway yeah i've done audio/DSP stuff in other languages more recently than that but not in languages as cool or unique as that one

6 notes


Text

"Master Data Analytics at Softcrayons | Enroll in Noida Today!"

Unlock your future with a Data Analytics Course in Noida at SoftCrayons. Are you ready to take your career to the upper echelons of your profession? Enroll now at the Data Analytics Training Institute in Noida at SoftCrayons and turn your potential into excellence. The course is designed to balance theoretical and practical knowledge for today's data-driven world.

Why SoftCrayons?

Full Curriculum: The program spans everything from a basic, introductory understanding of Python programming to advanced machine learning techniques. You will perform statistical and predictive analyses and present them through data visualization, so you gain in-depth knowledge of every part of data analytics.

Hands-on Experience: Get your hands dirty with industry projects and work on live projects alongside industry experts. This lets you practice what you have learned in the course and prepares you for challenging data problems at work.

Expert Instructors: Learn from industry professionals who bring their extensive knowledge and experience into the classroom. Instructors work one-on-one with you throughout your learning experience to help you succeed.

After the course, you will be certified, so you can prove your skills and knowledge and make yourself competitive and valuable in any organization.

SoftCrayons is centrally located in Noida, providing easily accessible learning in a bright environment.

Key Features Highlights

Data Analytics: Master data analytics skills across our various modules.

Python Programming: Learn Python, one of the most in-demand languages in data science.

Machine Learning: Learn machine learning algorithms through hands-on practice.

Data Visualization: Learn to convert large quantities of data into compelling visual stories.

Big Data: Learn how to handle the complexity of big data.

Real-time Industry Projects: Build your practical skills on the live projects you will be given.

Certification: Earn a certification that is well regarded in the market.

Enroll now, and don't miss this great chance to upgrade your career with a Data Analytics training course in Noida from SoftCrayons and become part of the data revolution. Our curriculum is designed to help you acquire the skills that lead to success in Data Analytics.

Visit our site to learn more about the course and enroll today. Take the first step towards a career in Data Analytics!

#data analytics#data analysis course in noida#Data Analaytics Training In Noida#Data Analaytics Training Institute In Noida

2 notes


Text

What are the skills needed for a data scientist job?

It’s one of those careers that’s been getting a lot of buzz lately, and for good reason. But what exactly do you need to become a data scientist? Let’s break it down.

Technical Skills

First off, let's talk about the technical skills. These are the nuts and bolts of what you'll be doing every day.

Programming Skills: At the top of the list is programming. You’ll need to be proficient in languages like Python and R. These are the go-to tools for data manipulation, analysis, and visualization. If you’re comfortable writing scripts and solving problems with code, you’re on the right track.

Statistical Knowledge: Next up, you’ve got to have a solid grasp of statistics. This isn’t just about knowing the theory; it’s about applying statistical techniques to real-world data. You’ll need to understand concepts like regression, hypothesis testing, and probability.

Machine Learning: Machine learning is another biggie. You should know how to build and deploy machine learning models. This includes everything from simple linear regressions to complex neural networks. Familiarity with libraries like scikit-learn, TensorFlow, and PyTorch will be a huge plus.

Data Wrangling: Data isn’t always clean and tidy when you get it. Often, it’s messy and requires a lot of preprocessing. Skills in data wrangling, which means cleaning and organizing data, are essential. Tools like pandas in Python can help a lot here.

Data Visualization: Being able to visualize data is key. It’s not enough to just analyze data; you need to present it in a way that makes sense to others. Tools like Matplotlib, Seaborn, and Tableau can help you create clear and compelling visuals.

Analytical Skills

Now, let’s talk about the analytical skills. These are just as important as the technical skills, if not more so.

Problem-Solving: At its core, data science is about solving problems. You need to be curious and have a knack for figuring out why something isn’t working and how to fix it. This means thinking critically and logically.

Domain Knowledge: Understanding the industry you’re working in is crucial. Whether it’s healthcare, finance, marketing, or any other field, knowing the specifics of the industry will help you make better decisions and provide more valuable insights.

Communication Skills: You might be working with complex data, but if you can’t explain your findings to others, it’s all for nothing. Being able to communicate clearly and effectively with both technical and non-technical stakeholders is a must.

Soft Skills

Don’t underestimate the importance of soft skills. These might not be as obvious, but they’re just as critical.

Collaboration: Data scientists often work in teams, so being able to collaborate with others is essential. This means being open to feedback, sharing your ideas, and working well with colleagues from different backgrounds.

Time Management: You’ll likely be juggling multiple projects at once, so good time management skills are crucial. Knowing how to prioritize tasks and manage your time effectively can make a big difference.

Adaptability: The field of data science is always evolving. New tools, techniques, and technologies are constantly emerging. Being adaptable and willing to learn new things is key to staying current and relevant in the field.

Conclusion

So, there you have it. Becoming a data scientist requires a mix of technical prowess, analytical thinking, and soft skills. It’s a challenging but incredibly rewarding career path. If you’re passionate about data and love solving problems, it might just be the perfect fit for you.

Good luck to all of you aspiring data scientists out there!

#artificial intelligence#career#education#coding#jobs#programming#success#python#data science#data scientist#data security

2 notes


Text

redheadintech

After checking my messages, I concluded that most of them are about AI and what key skills are required to pursue a career in AI. So here are some essential skills that are necessary:

✨Mathematics: AI involves a lot of mathematical concepts, such as linear algebra, calculus, probability theory, statistics. These concepts are used in developing AI algorithms and models.

✨Programming: Knowledge of programming languages (such as Python, R, C++) is crucial for learning AI. These programming languages are used for developing AI applications and implementing algorithms.

✨Data Analysis: AI relies heavily on data, so it is essential to have skills in data analysis, data cleaning, and data visualization. Understanding data structures and algorithms is also helpful.

✨Machine Learning: A strong understanding of machine learning concepts is essential for AI. This includes supervised and unsupervised learning, deep learning, and neural networks.

✨Critical Thinking: AI requires a lot of problem-solving skills and critical thinking. You need to be able to analyze data, identify patterns, and develop algorithms that can make predictions or decisions based on that data.

✨Patience: AI requires a lot of patience. The chances that your first training run will reach a very good accuracy are low. You constantly have to find new ways to improve the training process, and it takes time.
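As one illustration of where the calculus shows up (and why training takes many small steps), here is a minimal gradient-descent sketch in plain Python. It fits a line y = w*x + b to made-up points by repeatedly stepping against the derivatives of the mean squared error; the learning rate and step count are arbitrary choices that happen to work for this toy data.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives of MSE = (1/n) * sum((w*x + b - y)^2)
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Step against the gradient to reduce the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Made-up points that lie exactly on y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = fit_line(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

If the learning rate is set too high, the updates overshoot and the values diverge instead of settling, which is one small example of the trial and error the post describes.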

✨Hope this helps and hope you all are having a great day!


#redhead #redhair #ginger #gingerhair #freckledfaces #freckles #sunkissedskin #sunkissed #selfie #selfietime #greeneyes #paleskin #redhairdontcare #hairgoals #hairstyle #makeup #artificialintelligence #ai

18 notes


Text

Code is beautiful

Ever since I was young, coding felt like an impossible dream—one that involved mysterious languages like Python and C++. I bought books about these languages, but I never had the courage to dive deep into them. Perhaps I lacked the motivation.

Today, at 34, I now feel a renewed energy when I consider how useful coding skills can be. It's a language that speaks to machines. Instructions put in logical order, translated into binary code, tell the machine where to switch on and off various transistors. At least, that's my very primitive understanding of how it all works.

The idea of this blog is to share my journey with you—a very chaotic journey of finding what I truly want to do with my career. I studied graphic design and switched to media design. After almost a decade working for two companies, I always thought of myself as a creative designer, aka "the artist" 😄. Be it print, videos, UX, UI, or mood boards, the visual domain always felt to me like a very strong connection to my soul.

We, as humans, probably need to be part of a "tribe," and my tribe was always design. Today, at this stage of my life, I've made a very unexpected decision: I will learn how to code. I will put all my heart and energy into learning how to do it with intention and intensity. And I will share my journey here. Is it a good idea? Not sure. Can I write in a way that will be interesting for you to read? Not sure either. But hey, here I am, doing my thing. And on the journey to learning how to code, I will be sharing some "golden nuggets" I discover along the way.

Why is the title of this article "Code is Beautiful"? After working for almost a decade in the design field, I always struggled to "see" or "feel" the impact of my work. Of course, there are analytics, trackers, and all these fancy statistical tools that, in theory, could indicate how your design impacts the users. But for me, after seeing my work published online, I never felt like, "Wow, good job, you made something great." The feeling was always neutral. And today, design is almost a copy/paste; you simply follow the trend. With code, it's different. Every line is important; every instruction, variable, and piece of data is part of a bigger picture. And it's useful. Very useful. It impacts society. It helps people. It saves lives. That was always the element I struggled with in design: the tangible. This is why I find code beautiful, not because of its visual beauty, but because of its crucial role in our civilization and the potential impact it has.

This, of course, is my own opinion. Other people might find design more important. And maybe one day I will change my mind, but today that's the way I see it.

See you in the next article 😉

3 notes


Text

Can someone that knows how to code Python tell me what this code means?

I've been trying to use Visual Novel Maker, but when I'm testing the game and try to load a save, I get this error and the whole thing crashes:

Uncaught TypeError: Cannot read properties of undefined (reading 'id')

File: ENGINE.js@1:155081

Stack trace:

TypeError: Cannot read properties of undefined (reading 'id')

at t.makeMutable (file:///C:/Users/jaymp/Ravens%20Rise/data/ENGINE.js:1:155081)

at t.blt (file:///C:/Users/jaymp/Ravens%20Rise/data/ENGINE.js:1:161972)

at Component_ImageMap.onDataBundleRestore (Component_ImageMap_117.js:24:12)

at Function.ObjectCodec.onRestore (file:///C:/Users/jaymp/Ravens%20Rise/data/ENGINE.js:1:12529)

at Function.ObjectCodec.onRestore (file:///C:/Users/jaymp/Ravens%20Rise/data/ENGINE.js:1:12373)

at Function.ObjectCodec.onRestore (file:///C:/Users/jaymp/Ravens%20Rise/data/ENGINE.js:1:12148)

at Function.ObjectCodec.onRestore (file:///C:/Users/jaymp/Ravens%20Rise/data/ENGINE.js:1:12148)

at Function.ObjectCodec.onRestore (file:///C:/Users/jaymp/Ravens%20Rise/data/ENGINE.js:1:12373)

at Component_GameSceneBehavior.restoreScene (Component_GameSceneBehavior_42.js:715:22)

at Component_GameSceneBehavior.prepareVisual (Component_GameSceneBehavior_42.js:776:10)

Idk what any of this means, it may as well be written in runes. I even tried remaking the game in a fresh file and it STILL crashes in the exact same way. I just can't make a game that has saving and loading, which severely limits the use of this $60 software.

#visual novel maker#coding#programming#python#I literally bought this software bc it said you didn't need to know how to code to use it :/

2 notes


Text

Exploring Python: Features and Where It's Used

Python is a versatile programming language that has gained significant popularity in recent times. It's known for its ease of use, readability, and adaptability, making it an excellent choice for both newcomers and experienced programmers. In this article, we'll delve into the specifics of what Python is and explore its various applications.

What is Python?

Python is a high-level, general-purpose, interpreted programming language. Created by Guido van Rossum and first released in 1991, Python is designed to prioritize code readability and simplicity, with a clean and minimal syntax. Indentation is part of that syntax: code blocks are delimited by indentation rather than braces, which makes code easier to write and to read.

Key Traits of Python:

Simplicity and Readability: Python code is structured in a way that's easy to read and understand. This reduces the time and effort required for both creating and maintaining software.

Python code example: print("Hello, World!")

Versatility: Python is applicable across various domains, from web development and scientific computing to data analysis, artificial intelligence, and more.

Python code example: import numpy as np

Extensive Standard Library: Python offers an extensive collection of pre-built libraries and modules. These resources provide developers with ready-made tools and functions to tackle complex tasks efficiently.

Python code example: import json

Compatibility Across Platforms: Python is available on multiple operating systems, including Windows, macOS, and Linux. This allows programmers to create and run code seamlessly across different platforms.

Strong Community Support: Python boasts an active community of developers who contribute to its growth and provide support through online forums, documentation, and open-source contributions. This community support makes Python an excellent choice for developers seeking assistance or collaboration.
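To illustrate the standard-library point with modules that actually ship with Python (unlike NumPy and Matplotlib, which are third-party installs), here is a small sketch that parses JSON, tallies values, and computes summary statistics using only `json`, `collections`, and `statistics`. The data is made up.

```python
import json
from collections import Counter
from statistics import mean, median

# Made-up JSON payload, e.g. what an API or a log file might return
payload = """
[
  {"language": "Python", "minutes": 90},
  {"language": "TypeScript", "minutes": 45},
  {"language": "Python", "minutes": 30},
  {"language": "Go", "minutes": 60}
]
"""

records = json.loads(payload)                          # json: text -> lists/dicts
by_language = Counter(r["language"] for r in records)  # collections: tally values
minutes = [r["minutes"] for r in records]

print(by_language.most_common(1))      # most frequent language and its count
print(mean(minutes), median(minutes))  # statistics: summary numbers
```

No `pip install` is needed for any of this, which is what "batteries included" usually refers to.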

Where is Python Utilized?

Due to its versatility, Python is utilized in various domains and industries. Some key areas where Python is widely applied include:

Web Development: Python is highly suitable for web development tasks. It offers powerful frameworks like Django and Flask, simplifying the process of building robust web applications. The simplicity and readability of Python code enable developers to create clean and maintainable web applications efficiently.

Data Science and Machine Learning: Python has become the go-to language for data scientists and machine learning practitioners. Its extensive libraries such as NumPy, pandas, and SciPy, along with specialized libraries like TensorFlow and PyTorch, facilitate a seamless workflow for data analysis, modeling, and implementing machine learning algorithms.

Scientific Computing: Python is extensively used in scientific computing and research due to its rich scientific libraries and tools. Libraries like SciPy, Matplotlib, and NumPy enable efficient handling of scientific data, visualization, and numerical computations, making Python indispensable for scientists and researchers.

Automation and Scripting: Python's simplicity and versatility make it a preferred language for automating repetitive tasks and writing scripts. Its comprehensive standard library empowers developers to automate various processes within the operating system, network operations, and file manipulation, making it popular among system administrators and DevOps professionals.

Game Development: Python's ease of use and availability of libraries like Pygame make it an excellent choice for game development. Developers can create interactive and engaging games efficiently, and the language's simplicity allows for quick prototyping and development cycles.

Internet of Things (IoT): Lean Python implementations such as CircuitPython run on microcontrollers, which makes Python suitable for developing applications for the Internet of Things. CircuitPython lets developers work with sensors, create interactive hardware projects, and connect devices to the internet.
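As a small example of the automation-and-scripting use case, here is a sketch that uses only the standard library (`pathlib`, `tempfile`, `collections`) to sort files into subfolders by extension. It works in a throwaway temporary directory with made-up file names, so it is safe to run as-is; point it at a real folder only after checking what it will move.

```python
import tempfile
from collections import Counter
from pathlib import Path


def sort_by_extension(folder: Path) -> Counter:
    """Move each file in `folder` into a subfolder named after its extension."""
    moved = Counter()
    for path in list(folder.iterdir()):  # list() so moving doesn't disturb iteration
        if not path.is_file():
            continue
        ext = path.suffix.lstrip(".").lower() or "no_extension"
        target_dir = folder / ext
        target_dir.mkdir(exist_ok=True)
        path.rename(target_dir / path.name)
        moved[ext] += 1
    return moved


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name in ["report.csv", "data.CSV", "notes.txt", "README"]:
        (root / name).write_text("example")
    counts = sort_by_extension(root)
    print(dict(counts))
    print(sorted(p.relative_to(root).as_posix()
                 for p in root.rglob("*") if p.is_file()))
```

The same pattern (list files, decide, move or rename) covers many everyday chores such as cleaning a downloads folder or renaming exported reports.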

Python's versatility and simplicity have made it one of the most widely used programming languages across diverse domains. Its clean syntax, extensive libraries, and cross-platform compatibility make it a powerful tool for developers. Whether for web development, data science, automation, or game development, Python proves to be an excellent choice for programmers seeking efficiency and user-friendliness. If you're considering learning a programming language or expanding your skills, Python is undoubtedly worth exploring.

8 notes


Text

Python: What is it all about?

Python is a high-level, interpreted programming language.

A programming language is a formal language that is used to create instructions that can be executed by a computer. Programming languages are used to develop a wide range of software applications, from simple scripts to complex operating systems.

It is widely used for a variety of applications.

What is Python?

Python is a dynamic, object-oriented programming language.

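In a dynamically typed language like Python, types belong to values rather than to variables, and they are checked while the program runs, so the same variable can hold an integer at one point and a string later. This is in contrast to static typing, in which the type of each variable is fixed before the program runs, either declared explicitly or inferred by the compiler.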
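A short illustration of what dynamic typing means in practice: the same name can be rebound to values of different types, and a type mismatch is only reported when the offending line actually runs.

```python
value = 42
print(type(value).__name__)   # int
value = "forty-two"           # same name, now bound to a str
print(type(value).__name__)   # str


def add_one(x):
    return x + 1              # no declared type; checked only when called


print(add_one(41))            # works: int + int
try:
    add_one("41")             # fails at run time: str + int
except TypeError as err:
    print("TypeError:", err)
```

A static type checker such as mypy can flag the second call before the program runs, but the interpreter itself only notices when that call executes.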

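It is usually described as an interpreted language: the reference implementation, CPython, first compiles source code to bytecode and then executes that bytecode in an interpreter, rather than compiling it ahead of time into native machine code the way C or C++ compilers do. Because there is no separate build step, it is easy to develop and test Python programs quickly and efficiently.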
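The compile-to-bytecode step can be made visible with the built-in `compile()` function and the standard library's `dis` module. This sketch compiles a tiny function and lists the bytecode instructions CPython generated for it; the exact opcode names vary between Python versions, so treat the printed listing as version-specific.

```python
import dis

source = "def area(w, h):\n    return w * h\n"

# Step 1: compile source text into a code object (bytecode); nothing runs yet
module_code = compile(source, "<example>", "exec")

# Step 2: execute the module-level bytecode, which defines the function
namespace = {}
exec(module_code, namespace)
area = namespace["area"]

# Step 3: inspect the bytecode the interpreter executes when area() is called
for instruction in dis.get_instructions(area):
    print(instruction.opname, instruction.argrepr)

print(area(3, 4))
```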

Python is also a general-purpose language, meaning that it can be used for a wide range of tasks. It comes with a comprehensive standard library that provides modules for common tasks such as file handling, networking, and data manipulation. This makes it easy to get started with Python and to develop complex applications without having to write a lot of code from scratch.

Origin of Python

Python was created by Guido van Rossum in the late 1980s as a successor to the ABC programming language. ABC was a simple, interpreted language that was designed for teaching programming concepts. However, van Rossum felt that ABC was too limited, and he wanted to create a more powerful and versatile language.

Python was influenced by a number of other programming languages, including C, Modula-3, and Lisp. Van Rossum wanted to create a language that was simple and easy to learn, but also powerful enough to be used for a variety of applications. He also wanted to create a language that was portable across different platforms.

Python was first released in 1991, and it quickly gained popularity as a teaching language and for scripting tasks. In the late 1990s and early 2000s, Python began to be used for more complex applications, such as web development and data science. Today, Python is one of the most popular programming languages in the world, and it is used for a wide variety of applications.

The name "Python" is a reference to the British comedy group Monty Python. Van Rossum was a fan of the group, and he thought that the name "Python" was appropriate for his new language because it was "short, unique, and slightly mysterious."

How is Python Used?

Python is used in a wide variety of applications, including:

Web development: Python is a popular choice for web development, thanks to its simplicity and the availability of powerful frameworks such as Django, Flask, and FastAPI.

Data science: Python is widely used for data science and machine learning, thanks to its extensive data analysis and visualization libraries such as NumPy, pandas, Dask, Matplotlib, Plotly, seaborn, and Altair.

Machine learning: Python is a popular choice for machine learning, thanks to its support for a wide range of machine learning algorithms and libraries such as scikit-learn, TensorFlow, Keras, Hugging Face Transformers, and PyTorch.

Scripting: Python is often used for automating repetitive tasks or creating custom tools.

Advantages

Python offers a number of advantages over other programming languages, including:

Simplicity: Python is a relatively simple language to learn and use, making it a good choice for beginners.

Readability: Python code is known for its readability, making it easy to understand and maintain.

Extensibility: Python is highly extensible, thanks to its large community of developers and the availability of numerous libraries and frameworks.

Portability: Python is a cross-platform language, meaning that Python programs can often run on a variety of operating systems without modification.

Drawbacks

Despite all of its advantages, Python also has several drawbacks.

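Speed: CPython, the standard implementation, interprets bytecode rather than running native machine code, so CPU-bound Python code is usually slower than equivalent code in compiled languages such as C++ or in JIT-compiled languages such as Java. This can be a disadvantage for applications that require high performance.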

Memory usage: Python programs can use a lot of memory, especially when working with large datasets. This can be a disadvantage for applications that need to run on devices with limited memory.

Lack of type checking: Python is a dynamically typed language, which means that it does not check the types of variables at compile time. This can lead to errors that are difficult to find and debug.

Global interpreter lock (GIL): In CPython, the GIL is a lock that allows only one thread at a time to execute Python bytecode. This can be a disadvantage for CPU-bound applications that need to use multiple cores or processors.

5 notes


Text

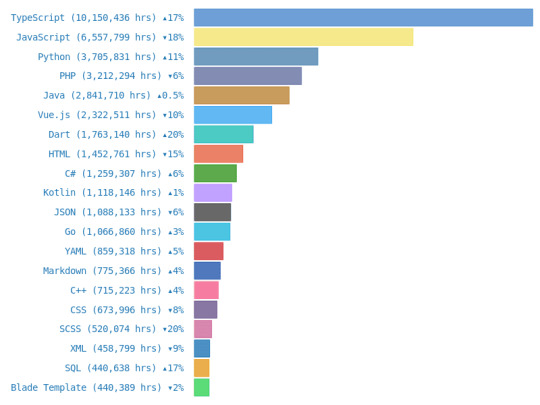

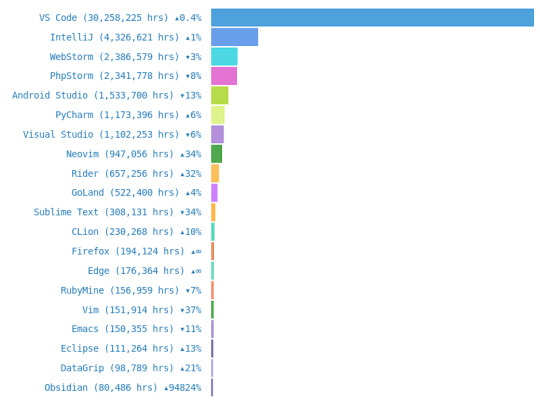

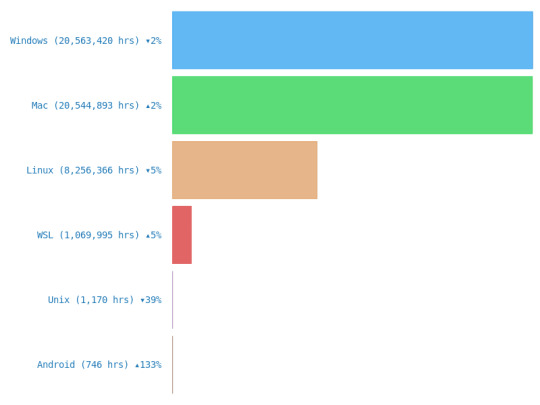

Programming stats for 2023

Always interesting to see what topped the charts in programming in 2023~! 🥰🙌🏾 So, I got this email this morning from WakaTime (an extension for most IDEs and code editors that tracks your coding activity), and they gathered some information for 2023!

Remember these stats are according to WakaTime's data from more than 500k developers who spent a combined 51 million hours programming using their extension/plugin!


Top Languages

TypeScript

JavaScript

Python

PHP

Java

Vue.js

Dart

HTML

C#

Kotlin

Top Editors

VS Code

IntelliJ

WebStorm

PhpStorm

Android Studio

PyCharm

Visual Studio

Neovim

Rider

GoLand

Top Operating Systems Used

Windows

Mac

Linux

WSL

Unix

Android

#codeblr#coding#progblr#programming#studyblr#studying#computer science#tech#statistics#coding advice#coding tips

24 notes


Text

AvatoAI Review: Unleashing the Power of AI in One Dashboard

Here's what Avato AI can do for you

Data Analysis:

Analyze CSV, Excel, or JSON files using Python and libraries like pandas or matplotlib.

Clean data, calculate statistical information and visualize data through charts or plots.

Document Processing:

Extract and manipulate text from text files or PDFs.

Perform tasks such as searching for specific strings, replacing content, and converting text to different formats.

Image Processing:

Upload image files for manipulation using libraries like OpenCV.

Perform operations like converting images to grayscale, resizing, and detecting shapes or

Machine Learning:

Utilize Python's machine learning libraries for predictions, clustering, natural language processing, and image recognition by uploading

Versatile & Broad Use Cases:

An incredibly diverse range of applications. From creating inspirational art to modeling scientific scenarios, to designing novel game elements, and more.

User-Friendly API Interface:

Access and control the power of this advanced AI technology through a user-friendly API.

Even if you're not a machine learning expert, using the API is easy and quick.

Customizable Outputs:

Lets you create custom visual content by inputting a simple text prompt.

The AI will generate an image based on your provided description, enhancing the creativity and efficiency of your work.

Stable Diffusion API:

Enrich Your Image Generation to Unprecedented Heights.

Stable Diffusion API provides a fine balance of quality and speed for the diffusion process, ensuring faster and more reliable results.

Multi-Lingual Support:

Generate captivating visuals based on prompts in multiple languages.

Set the panorama parameter to 'yes' and watch as our API stitches together images to create breathtaking wide-angle views.

Variation for Creative Freedom:

Embrace creative diversity with the Variation parameter. Introduce controlled randomness to your generated images, allowing for a spectrum of unique outputs.

Efficient Image Analysis:

Save time and resources with automated image analysis. The feature allows the AI to sift through bulk volumes of images and sort out vital details or tags that are valuable to your context.

Advanced Recognition:

The Vision API integration recognizes prominent elements in images - objects, faces, text, and even emotions or actions.

Interactive "Image within Chat" Feature:

Say goodbye to going back and forth between screens and focus only on productive tasks.

Here's what you can do with it:

Visualize Data:

Create colorful, informative, and accessible graphs and charts from your data right within the chat.

Interpret complex data with visual aids, making data analysis a breeze!

Manipulate Images:

Want to demonstrate the raw power of image manipulation? Upload an image, and watch as our AI performs transformations, like resizing, filtering, rotating, and much more, live in the chat.

Generate Visual Content:

Creating and viewing visual content has never been easier. Generate images, simple or complex, right within your conversation

Preview Data Transformation:

If you're working with image data, you can demonstrate live how certain transformations or operations will change your images.

This can be particularly useful for fields like data augmentation in machine learning or image editing in digital graphics.

Effortless Communication:

Say goodbye to static text as our innovative technology crafts natural-sounding voices. Choose from a variety of male and female voice types to tailor the auditory experience, adding a dynamic layer to your content and making communication more effortless and enjoyable.

Enhanced Accessibility:

Break barriers and reach a wider audience. Our Text-to-Speech feature enhances accessibility by converting written content into audio, ensuring inclusivity and understanding for all users.

Customization Options:

Tailor the audio output to suit your brand or project needs.

From tone and pitch to language preferences, our Text-to-Speech feature offers customizable options for the truest personalized experience.


#digital marketing#Avato AI Review#Avato AI#AvatoAI#ChatGPT#Bing AI#AI Video Creation#Make Money Online#Affiliate Marketing

2 notes
