#unless i need to make corrections or something similar for accuracy/accountability


Note

Just read your post about Kyle. Do people really not see your comparison? You're saying that George Floyd was determined guilty enough to deserve to die over accusations of a counterfeit 20$ bill, and Kyle is found not guilty in spite of traveling to the area, arming himself, and seeking conflict that resulted in him using his weapon that killed 2 people.

yep! precisely anon. (also slight TW for rest of post.)

it's funny how the metric changes based upon who agrees with them and who doesn't.

now, I didn't word it as well as I should've, because I could see how people could think I said George Floyd got no justice.

but the point was that the right says George's death was fine and excusable bc he was a criminal committing a crime -- despite this being an accusation at the time.

(which is so ironic considering their "innocent until proven guilty" chant.)

then, they find "excuses" such as drug use and other things that "rationalize" why police suffocated him for 10min until he passed.

they painted George as a criminal over something as simple as saying he had a fake $20 bill and drugs in his system, and his rightful punishment was death.

but Kyle can go out of his way to insert himself in the situation, aim to cause conflict, arm himself with weapons, and even intended on shooting people before even arriving in the area.

(idk how you can predict you need to shoot someone out of self-defense, bc he's on record for already intending to use his gun to shoot/kill protestors days before interacting w anyone.)

the right doesn't see their actual aggressors as aggressors.

hence "any crime is alright if you're white."

that's why the cops had so much support from the right when the George Floyd murder trial started.

yes, they were held accountable at the end, but it was fought for. justice almost wasn't given.

and the right doesn't agree with the cops being found guilty, as they say they've done nothing wrong, just like Kyle.

the right says Kyle also deserves pity and sparing, and he is just a child.

but then black kids like Trayvon Martin are not spared or given pity. they said this 17yr old child deserved to die bc he was "violent" and a "thug." Trayvon wasn't even armed, and was still shot and killed by a man with the same agenda as Kyle.

I had someone in the notes bring up Breonna Taylor's boyfriend, Kenneth Walker, saying his self-defense claim should be negated since I personally negate Kyle's.

which is a completely incomparable situation, considering Breonna and Kenneth were shot at by police while sleeping and without any announcement that they were police. additionally, their suspect was already in custody, and they were at the wrong address to boot.

all Kenneth knew is that guns were being fired into his apartment, and his girlfriend was shot and murdered in her sleep.

it's all about painting the VICTIMS as the guilty party, and that's EXACTLY what they did with Kyle's case.

the point is: George Floyd, Joseph Rosenbaum, and Anthony Huber lost their lives unnecessarily, and THEY are painted guilty when they are simply reacting to what people like Kyle do to them.

the right says that murders such as these are always self-defense.

but really, people like George Floyd die trying to defend themselves from people like Kyle.

the only thing Kyle is defending is the ability to use this system to his advantage, and harm those that he thinks deserve to die, simply bc they don't side with or look like him.

I'm just very disappointed with the world and the way that the justice system is literally Black and White.

#luffy posts#hope thats enough clarification#blm#black lives matter#last post about this#unless i need to make corrections or something similar for accuracy/accountability

7 notes


Photo

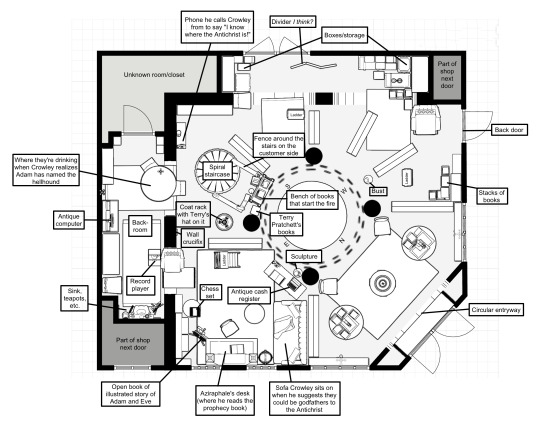

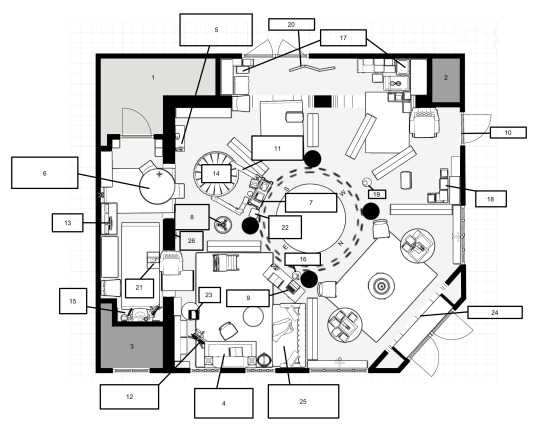

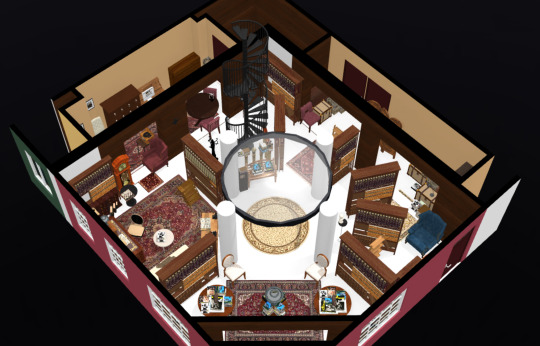

I created a 3D model and floor plan of Aziraphale’s bookshop in Good Omens!

I really wanted one for reference and it seemed like many others did too, so I put together my best approximation of where everything is. Beneath the color version, you’ll see I’ve included two simplified, labeled versions of the plan. The verbal labels are so you know what the object is. The numerical labels are there to make it easy to find more information about the object. I’ve put a numbered index below the cut that features the relevant reference images I used for each object and some more information about why I put it where I did/why it’s relevant/etc. I want to be very clear that I did not add anything to this from my own imagination; every single item and feature represents something I actually saw in the shop.

If you have any questions or want more information about this, PLEASE do not hesitate to ask! I put so much time into figuring it out and I would be more than happy to be a resource for anyone who needs it. Also, if you notice any errors, let me know and I’ll update the post. I hope this is helpful!

Update: Here’s a link to an interactive view of the shop! It takes a moment to load. You can click the “3D” tab in the top right to view it in first person and walk around inside. Double click a spot on the floor to move there and pan around by clicking and dragging. The oval symbol next to the person walking gives you a birds-eye view.

Update 2: Here’s a higher quality rendering of the first person perspective! Update 3: I made an alternate first person render here complete with a ceiling, light fixtures, and ambient lighting from outside. This one is optimized for making it seem more like you’re actually there, whereas the previous one is for maximum visibility. This render also has some minor accuracy improvements, which are detailed under the cut in the relevant sections. (The first interactive link with the birds-eye view updates automatically.) Update 4: In case you’re interested in Aziraphale’s books specifically, I’ve made a catalogue of those here.

1. Unknown closet

Images

There is a door behind Gabriel when he talks to Aziraphale in the backroom. So where does it lead? Well. The wall we can see behind Aziraphale when he encounters Shadwell in the shop (see #17: boxes/storage) doesn’t have a door in it. It’s also facing the wrong direction and it’s in the middle of the southwest wall — we know this because Aziraphale can see Shadwell in the entrance from there. So the wall behind him at that moment is definitely not the wall of the backroom. We’re left with this door and unaccounted-for corner. The only thing that makes sense to me then is that there’s a closet there between the two spaces. My personal theory is that this closet is “the back” that Aziraphale refers to keeping the Châteauneuf-du-Pape in since I didn’t see any other obvious alcohol storage space in the shop. Update: @n0nb1narydemon has suggested this could be a bathroom for guests or because culturally it’s a room you can use to extricate yourself from situations, which is another possibility! They also asked where I think the doors behind object #20 lead, and I thought it would be good to add here that they might lead to the shop next door or to this unknown room. It’s possible the room actually extends further into the next shop and encompasses the part of the wall where the doors are, but I didn’t have concrete evidence to support that idea so I didn’t include it in the floor plan. Update: I was wrong about the Châteauneuf-du-Pape! In the DVD bookshop tour we learn that the cabinet in the top left corner of the backroom is where Aziraphale keeps his alcohol, including that particular wine. I added a reference photo of Neil pointing it out. Thanks to @fuckyeahgoodomens for bringing the existence of this tour to my attention — ya girl got the special edition blu-ray even though I don’t have a blu-ray player yet so I hadn’t actually seen it. 
Also, there is a chair right next to this cabinet against the wall which I missed in my initial rendering of the shop but have since added.

2. Part of shop next door (top right)

Images

This was very tricky to figure out because you can see from the exterior of the shop that there is no wall past the back door, but from the interior there is clearly more space there. BUT in a behind the scenes photo of David during the fire scene, you can see on this back wall that there’s actually a nook with two large entryways, similar to the one that makes up the backroom. From the exterior you can see that the area next to the back door is taken up by the window of the next shop, so I concluded that this little square of space was not part of the bookshop’s interior, but the nook did extend further back than where the shop appears to end from the outside. I had to make one bookshelf more nubby than the others to make this work, but after a LOT of trial and error I decided one nubby bookshelf was the only thing that could explain the apparent architecture of the space. Any floor design that accounted for a bookshelf of the same length as the others just did not make sense on a fundamental level.

3. Part of shop next door (bottom left)

Images

From the exterior of the shop you can see that this window belongs to the adjacent store, as the wall is a different color. Within the bookshop you can also see when Gabriel and Sandalphon enter the backroom, there’s no window behind them; there’s a sink. So it’s definitely not Aziraphale’s window. The wall of the backroom is also further into the shop’s interior than the wall Aziraphale’s desk sits against, so there’s a corner of space inside that’s unaccounted for. At first I assumed it was plumbing from the sink that had been sealed off or something, but when I realized that’s where the window was on the outside, I figured the space is probably part of the next shop over.

4. Aziraphale’s desk

Images

This is where Aziraphale sits in the shop like 90% of the time. It’s on the Eastern side of the shop because Aziraphale was the guardian of the Eastern gate in Eden and because production designer Michael Ralph is a goddamn genius (source). Shout out to @posted-omens for this fascinating post analyzing the chariot sculpture on his desk. Update: Fun fact, the ladder behind his desk is actually called a library chair, supposedly designed by Benjamin Franklin. It functions as a ladder but you can also fold it into a chair! Neil mentions this in the DVD extra bookshop tour. I added screen caps of it to the reference photos above since I don’t have a specific section for the ladders!

5. Phone Aziraphale calls Crowley from

Images

I will be honest with you: I think there’s something a little fucked up about this corner. It is my nemesis. I tried so many things to make it work and I just could not get it exactly right, but what you see in the floor plan is my best guess as to what’s going on. The conundrum is that the spot where Aziraphale stands when he’s on the phone with Crowley is definitely closer to the fence around the staircase than it is in my layout. But the table he’s in front of is also clearly against the outside wall of the backroom, and the stairs being where I’ve put them here is the only thing that made sense based on the reference photos. So there’s some weird spacing issue where there’s a little too much room between the fence around the stairs and this phone. If I were to move the walls to close that gap then there would be way too much space in the backroom and way too little space on the southwest side of the shop, so I think the walls are correct as they are. So ¯\_(ツ)_/¯. What I can say for certain is that the phone is there and it’s on a table next to a lamp, and the table is definitely against the wall of the backroom and behind the staircase. The distance between these things doesn’t hold up perfectly, but their arrangement does. On another note, this is one of two phones in the shop. The other is on the table next to the cash register (see #9) which Aziraphale picks up when Crowley calls to say they need to talk about Armageddon. I believe this is the same one he uses to call Adam’s house in episode two, only he moves it from the table by the register to the top of a pile of books (which I’m pretty sure were stacked on the circular table between his desk and the sofa). Update: OKAY SO it turns out in the behind-the-scenes bookshop tour on the DVD we get two more teeny tiny glimpses of this corner! I added them to the reference photo album above. 
It appears I was right about the lamp, phone, and bookshelf being where they are, except that the bookshelf and table are touching. There’s also a ladder propped against the shelf. I’d say it’s possible there are actually two bookshelves here; based on the parallax in the DVD tour, the one next to the phone didn’t appear to be against the wall, but we know there is a bookshelf against that wall because we see it in the show. (P.S. There’s also another chair against that wall which I didn’t see because Aziraphale was standing in front of it, so I added that too.) This leads me to believe there’s one against the wall and another one further from it next to the table. But that’s just my speculation, so I won’t change the actual floor plan unless I find more evidence.

6. Where they’re drinking when Crowley realizes Adam has named the hellhound

Images

When Aziraphale sits down at this table, the background is of the same space he refers to as the “backroom” when Gabriel and Sandalphon show up. He’s across the table from Crowley, behind whom you can see a bookshelf, the staircase, and the coat rack. The table is half in the backroom half out, since the room has two large entryways in its wall. Update: I realized the wall behind this table actually dips back further! It is a weirdly-shaped wall! But in the DVD special tour of the bookshop Neil walks past it and there’s clearly an area that recesses even further, so I’ve modified that in the interactive floor plan :)

7. Bench of books that start the fire

Images

When Shadwell leaves the book shop and slams the door, one of the candles knocks over and rolls into a pile of books and other papers (including the Sound of Music lmao). You can see it’s the same bench the customer is standing in front of when he gives Gabriel a weird look after he yells about pornography. (I love this customer so much because they gave me a super HD shot of this particular area.) The poles of the fence around the bench, the staircase behind it, and the smaller shelves beside it holding Terry Pratchett’s books make it clear that the bench is in that spot in the shop and that it’s the place the fire starts.

8. Coat rack with Terry’s hat on it

Images

Aziraphale hangs his coat here right before Crowley calls him to say they need to talk about Armageddon. Out of focus in the frame you can see the lion sculpture that sits on the fence surrounding the stairs (see #11) and a bookshelf. The camera pans past the shelf and we see him walk past his desk to pick up the phone by the cash register, which puts that shelf right next to his sitting area. We can also see the coat rack in the background when Crowley realizes Adam has named the hellhound. The coat rack has Terry Pratchett’s hat and scarf on it in his honor (source).

9. Antique cash register

Images

You can see this register in the background when Crowley is on the couch and when Aziraphale invites Gabriel and Sandalphon into the backroom. I know it’s an antique cash register because it’s photographed and referenced directly on page 79 of the Good Omens TV Companion. It’s a typewriter in my floor plan because the website I used (floorplanner.com), who knows why, did not have a 3D model of a cash register from the early 1900s.

10. Back door

Images

Thank you so much to @fuckyeahgoodomens for this post where they figured all this out!! Wonderful work! You can see this door from the exterior of the shop and its existence is referenced in the Good Omens script book on page 94. It’s also in the background of a behind the scenes shot of Aziraphale pulling away the carpet so he can contact heaven. Behind him in that shot you can see the bust (which moves around a lot - see #19) and a grandfather clock, and in the show from one of the aerial shots you can see that the carpet is pulled west, further confirming the door’s location.

11. Fence around the stairs

Images

I have concluded that this is a fence to keep customers from going up to the second floor. It looks to be made of golden pillars with wooden shelving atop them. The fence crosses beneath the staircase on one side and the other side ends about where the stair’s railing does. You can see this fence behind Crowley when he realizes Adam has named the hellhound, behind Aziraphale when he calls Crowley to tell him he knows where the antichrist is, and next to the customer who gives Gabriel a look after he yells “PORNOGRAPHY!” It’s also visible in one of the aerial shots of the shop. Update: In the DVD extra bookshop tour I noticed the lion sculpture on this fence is not just a lion, but a lion with a woman holding its mane. I think it might also be a lamp? In one of the reference photos, the one that looks down from the second floor, it appears there’s a light in the woman’s other hand. I’d be interested to see if we can track down what this particular sculpture is and what it might mean. Update: @cantdewwrite has suggested here that the light/sculpture could be a replica of one of the bronze statues in the Victoria Memorial, which does look quite similar. I’m fairly certain Aziraphale’s sculpture is of a woman, which would make it the figure in the memorial representing peace.

12. Open book of illustrated story of Adam and Eve

Images

Shout out to @amuseoffyre for this post where she figured out what this was! Update: I’ve determined that this book is The Gospel in the Old Testament: A series of pictures by Harold Copping. The painting is, naturally, by Harold Copping. It’s called “Adam and Eve after the fall.” Unfortunately this book is out of print and I haven’t been able to track down an ebook or scan of it, so I can’t confirm the text just yet. But based on its premise, I think it’s safe to assume that it’s telling the story of Adam and Eve directly. Aziraphale has a second copy of this book visible on the shelf next to the sofa.

13. Antique computer

Images

This is the computer Aziraphale does his extremely scrupulous taxes with, as confirmed in this ask that @neil-gaiman answered from @prismatic-bell! It’s an Amstrad, according to the bookshop tour in the DVD extras.

14. Spiral staircase

Images

These stairs are in many shots of the shop so it was pretty obvious where they were.

15. Sink, teapots, etc.

Images

You can see this wall right before Gabriel walks into the backroom and behind Aziraphale when he’s drinking with Crowley at the end of episode one. It appears he has two hand towels, a ceramic angel soap dish (aw), some teapots, and a decorated box above it, among other things. On the floor beside the sink is what I believe to be a broom handle, though it could be a mop? Next to that is a bronze statue of an angel atop a small table piled with books. On the other side of the sink is an open book on a stand — it has a fabric bookmark in it with a crucifix at the end, so I’m assuming it’s a bible. Light reading while you make your tea I guess. Update: Thank you so much to @brightwanderer for pointing out in this post that he has four extra angel wing mugs above the sink as well! I couldn’t figure out what they were! Update: Neil said in this ask that you can see an oven by the sink when Gabriel and Sandalphon walk in. Which you can! It’s real small and there’s a little pot on top of it. I’ve added a screencap of it to the images album for this area. Update: I’m donating my heart and soul to @ack-emma for suggesting in the replies to this ask that the central object above the sink is a samovar!! I had never heard of this so I had absolutely no idea what it was, but I think they hit the nail on the head. Y’all Aziraphale really likes tea.

16. Sculpture

Images

Thank you @ineffable-endearments, @behold-my-squeees, @srebrnafh, @aethelflaedladyofmercia for contributing to this post about the statue and its potential symbolism! Update: @doctorscienceknowsfandom has added some analysis to the post above suggesting that this is a sculpture of Paris, the figure from Greek mythology. I’m inclined to agree! Update: BINGO! @tifaria has found Aziraphale’s exact statue (confirmed Paris!) in this post. Brilliant work!! This community continues to blow me away. Further discussion about the sculpture’s meaning in the context of the show here — be sure to check the notes for further commentary.

17. Boxes/storage

Images

These boxes and piles of books can be seen behind Aziraphale when he encounters Shadwell in the shop and behind Crowley while he’s rambling drunkenly about why they should stop Armageddon in episode one. They’re in a nook that goes further back than where the shop appears to end from its exterior (see #2 for more info on that!).

18. Stacks of books

Images

You can see this stack in one of the aerial shots of Shadwell in the shop. I didn’t include most stacks of books in the floor plan because they’re literally everywhere and I had to manually set how high each book would be from the floor, so putting them in piles got tedious very quickly. But I did include a few notable ones, and this is one of those imo because there’s not much else in that area as far as I can tell.

19. Bust

Images

This little guy moves around quite a bit, unlike most things in the shop. In some photos/scenes it’s where I put it on the floor plan, but in others it’s closer to the northwestern wall and in this 360 video of the shop it’s right between two of the columns. I chose to put it where I did because it’s there in the scene where Crowley is drunkenly rambling about Armageddon, whereas the other locations I’ve seen it in were from behind the scenes shots and stuff. I’m not sure who the bust is of! It appears to have a little ribbon with a medal around its neck though. Update: More speculation about the bust here, courtesy of @aethelflaedladyofmercia! Update: @fuckyeahgoodomens has confirmed in this post that the thing around the bust’s neck is the medal given to Aziraphale by Gabriel in this deleted scene!

20. Divider I think?

Images

Please for the love of god if you know what this thing is, tell me. My best guess is it’s a room divider because what else looks like that?? But I don’t know why you would put a room divider there. And it still doesn’t look exactly like a divider either. But the decorative element at the top and apparent gap between the metal frame and the red bit leads me to believe it’s not furniture or a box. This mystery object is my second nemesis after the weird corner (#5). Update: @brightwanderer has suggested that it might be an embroidered/tapestry draft screen, which I think makes more sense! Update: In the DVD extra bookshop tour I found a very brief image of this item over Neil’s shoulder which I added to the reference photos above. I think by some miracle I was right and it is a divider. It could be a draft screen but at the very least it is shaped like a divider with at least three sections. Wahoo!

21. Record player

Images

This is the phonograph that’s playing Franz Schubert’s String Quintet in C major (thank you again to @fuckyeahgoodomens for that info) when we first see Aziraphale in the shop. It also plays Queen’s You’re My Best Friend when Crowley runs into the fire.

22. Terry Pratchett’s books

Images

Another one of the many little Terry easter eggs in the show is this set of his books! @devoursjohnlock made a post highlighting some other specific books you can find in the shop.

23. Chess set

Images

I saw a post once pointing out this chess set and the implication that Aziraphale and Crowley must play together sometimes, which I thought was a really nice detail to put into the set. But I can’t find the post to credit it! I will update this with a link if I do. Update: Pretty sure this is the post I saw. Thank you to @losyanya for mentioning it :)

24. Circular entryway

Images

This is one of many circle motifs that production designer Michael Ralph incorporated into the shop. It’s gorgeous. I think there’s actually more room between the archway and the door than I’ve included in this floor plan; Shadwell takes a few steps through it when he runs out of the shop. But I think the fix is just the door being further out from the entryway rather than the entryway being further in. I didn’t want to fuck with the walls to improve this particular area because when I realized the spacing was wrong, I was almost done and would’ve had to manually move each object in the shop over a few inches. Made more sense to leave the caveat in a footnote. Update: In the DVD extra bookshop tour you get a brief glimpse of something on the inside wall of the entryway. I think it’s a wall sconce or something along those lines. There’s one on either side. I added them to the reference album above! I also figured out how to extend the walls to accommodate some more space there without having to move everything else, so I did that. Update: Here’s a link to some meta discussion about the cupid sculpture in front of this entryway!

25. Sofa Crowley sits on when he suggests they could be godfathers

Images

You can see that the sofa is next to Aziraphale’s desk and the cash register, and also that there’s a bookshelf behind it. From the entrance to the shop you can see two bookshelves on either side of the central circle, so it was pretty clear that the couch was on the other side of one of those shelves.

26. Wall crucifix

Images

I find it very interesting that Aziraphale has this considering Jesus isn’t a big part of angelic lore or heaven’s general priorities in the show. It would make more sense to me that he has it because it’s another memento of his time with Crowley, sort of like the illustrated story of Adam and Eve by his desk (#12). Also, fun fact, the opposite side of this wall segment is where he put up all his maps and notes about the whereabouts of the Antichrist in episode three.

#good omens#aziraphale's bookshop#good omens reference#floor plan#ref#i hope people see this i posted it at 2am because i was too excited about it to wait until daylight hours#OKAY SO PEOPLE SAW IT THANKS GUYS#check out the tag:#bookshop questions#for follow-up Qs!

11K notes


Text

I am against the "Americanization" of fandoms.

What this applies to

Holding non American characters (and sometimes even fans) to an American moral standard. This includes

Refusing to take into account that, first things first, America is NOT the target audience, so certain tropes that would or would not pass in the west are different in Japan.

Like seriously, quite a few of the jokes are just not going to pass or hit, because they require background information that is not universal.

Assuming all American experience is standard. (This could mean watering down just how much pressure is placed on Japanese youth irl by saying that sort of thing is universal (while it is, to a degree, Japanese suicide rates are pretty fucking high because of how fast paced and work heavy some of their loads tend to be), and it's really annoying and rude when someone is trying to speak out about how heavy and harsh the standards placed on them to succeed are, just for some American whose mom occasionally yells at them to do their homework to drop by and say "it's like that everywhere")

Demonizing (or wubbifying) a character using American morals, including and up to harassing fans over their interpretations or gatekeeping whether or not a character "should" get development (while you shouldn't do that fucking period, it's rude and annoying- this is specifically for the people who use American standards without acknowledging the cultural gap between them and, you know, the fucking target audience) ((Like seriously, saying "It's different in Japan" is not the end all be all excusing someone's actions, but sometimes the author didn't immediately think that maybe (insert vaguely universal thing) was that bad or that heavy of a topic before they put it into their media. If you don't want to see things like that? Pick a different series and stop harassing the fans))

Getting mad at or making fun of Japan's attempts to satirize their own culture. (A good example is Ace Attorney! To most of us, it's just a funny laugh can you imagine if courts were actually like that- guess what? Japan's are! (Not that America's are actually that much better, they just look good on paper))

Making America/American issues the center of your fan spaces

(Usually without sharing or bringing light to the issues that other countries are going through)

Your

Experiences

Are

Not

Universal!

Seriously, very few things across America, even, are universal. Texas thinks the hundreds are nothing while Minnesota's like "oh it's only thirty degrees below zero"- so for fucks sake, stop assuming that all other countries work in ways similar to America.

It's good and important to share American issues with your American followers, but guess what? America isn't the only country out there, and it's certainly not the only one going through bullshit. Don't pull shit like "why's no one reblogging this?" or "why should I care about what's happening in (X country)?"

Don't assume everyone lives in America.

Stop assuming everyone lives in America.

America is not and has never been the target audience for anime, and it's certainly not the only country outside of Japan that enjoys it.

Like I said above, sometimes Japan attempts to satirize its own culture. We can't tell what is and isn't meant as satire, because it's not our culture.

Social media activism can be tiring and maybe you don't have the energy to focus on things that are out of your control, but, if someone tells you about the shit they're going through, don't bring American politics up.

For the neurodivergent crowd out there thinking, "But why?" it's because a lot of social media, especially, is very heavily Americanized- sometimes to the point where people assume that everyone is American. Not to mention, it's disheartening. I'm sorry to say, but you're not actually relating to the conversation, you're often diverting the focus away from the topic at hand. Even if you mean well, America is heavily pedestaled and talked about frequently, and people from other countries are tired of America taking precedence over their own issues.

Don't divert non-American issues into American ones. Seriously. It's not your place. Please just support the original issue or move on.

Racist Bullshit

This especially goes for islanders and South Asian characters, as well as poc characters (because, yes, Japan DOES have black people)

Making "funny" racist headcanons. Not fucking cool.

Changing the canon interpretation of an explicit character of color in order to fit racist stereotypes.

Whitewashing or color draining characters. Different artistic skill sets can be hard, yes, but are you seriously going to look at someone and say "I don't feel like accurately portraying you or people that look like you, because it's difficult for me"? If someone tries to correct you on your cultural depiction of a character and/or their life style, don't be an ass. (If possible, it would be nice for those that do the corrections to be polite as well, but it does get really frustrating).

Seriously, no offense guys, but, if you want to pursue art, you're going to need to learn to depict different body types, skin colors, and/or ethnic features.

On that note, purposefully, willingly, or consistently inaccurately portraying people or characters of color (especially if someone in the fandom has "called you out" or specifically told you that what you're doing comes across as racist and you continue to do it). If you need help or suck at looking things up, there are references for you! Ask your followers if they have tutorials on poc (issue that you're having), whether it be bodily portrayal, facial proportions, or coloring and shading. Art is so much more fun when you can depict a wider variety, and guess what? Before you drew the same skinny, basic, white character over and over, you couldn't even draw that!

Attempting or claiming to DEPICT CULTURAL ACCURACY within a work or meta, while being completely fucking wrong. ESPECIALLY and specifically if someone calls you out, and you refuse to fix, correct, or change anything.

*little side note that the discussion revolving around art is a very multilayered conversation, and it has quite a few technical potholes, which I'll bring up again farther into this post.

Fucking history

Stop demonizing or for absolute fucks sake woobifying Japanese history because UwU Japan ♡0♡ or bringing up shit like "you know they sided with Nazis, right?" It's good to recognize poor past decisions, but literally it's not your country, keep your nose out of it. And? A lot of decisions made by countries were not made by their general peoples. Even those that were often involved heavy propaganda that made them think what they were doing was right.

Seriously, it's not your country, not your history. Unless you have some sort of higher education (but honestly even then a lot of those contain heavy bias), just don't butt in.

^^^ this also goes to all countries that are NOT Japan (specifically when people from non American countries talk about their history while in fandoms and someone wants to Amerisplain to them why "well, actually-"). When we said, "question your sources," we didn't mean "question the people who know better than you, while blindly accepting the (more than likely biased) education you were given in the past."

What this does NOT include:

Fanfiction

FANfiction

FanFICTION

FANFICTION.

Seriously, fanfiction is literally UNPAID WORK from RANDOM FANS, a lot of whom are or started as kids. ((No, I'm not trying to excuse racist depictions of people just because they're free, please see above where I talk about learning to grow a skill and how it's possible to be bad and get good, on top of the fact that some inaccuracies are not just willful ignorance))

"Looking it up" doesn't work

"Looking it up" almost never works

Please, for fucks sake, you know that most all online search engines are heavily biased, right? Not to mention, not everything is universal across the entirety of Japan. You want to look up how the school system works in Hokkaido? Well it's different from the ones in Osaka!

Most fanfiction is meant to be an idealized version of the world. Homophobia, transphobia, misogyny, ableism, and racism are very prevalent and heavy topics that some fan authors would prefer to avoid. (Keep in mind, this is also used by some people in those minorities, often because they already have to think about how relevant those kinds of things are to them every day).

A lot of shit that happens in writing is purely because it's an ideal setting. I've seen a few arguments recently about how fan authors portray Japanese schools wrong- listen, I can't tell you how many random school systems I have pulled from my ass purely because (I need them to interact at these points, in these ways). Sometimes the only compliment I can think of is 'I like your shirt' or sometimes I need character A to realize that character B likes the same thing as they do, so I might ignore the fact that most all Japanese schools require uniforms, so that I can put my character in a shirt that will get someone else's attention.

Sometimes it's difficult to find information on different types of systems, and sometimes when you DO know those things, they directly rule out a plot point that needs to happen (like back on the topic of schools (from what I've seen/heard/read- which guess what? Despite being from multiple sources, might still be inaccurate!) Japanese schools don't have mandatory elective classes (outside of like gym and most of them usually learn English or another language- I've seen stuff about art classes? But the information across the board varies.), but, if I need my character to walk in and see someone completely in their element, I'm probably not going to try and gun for accuracy or make up a million and two reasons as to why this (non elective) person would possibly need something from (elective teacher) after school of all things.)

Some experiences ARE universal- or at least overlap American and Japanese norms! Like friends going to fast food places after school doesn't /sound Japanese/ or whatever, but it's not like a horrible inaccuracy to say that your characters ate at McDonald's because they were hungry. Especially when you consider that the Japanese idolization of American "culture" is also a thing.

Also I saw someone complaining about how, in December, a lot of (usually westerners) write Christmas fics! Well, not only are quite a few of those often gift fics, with it being the season of giving and all, but Japanese people do celebrate Christmas! Not as "the birth of Christ," but rather as a popularized holiday about gift giving (also psst: America isn't the only place that celebrates Christmas)

But, on that note, sometimes things like Holidays are "willfully ignorant" of what actually happens (I've made this point several times, but (also this does by no means excuse actual racism)), because, again: plot convenience! Hey what IF they celebrated Halloween by Trick or Treating? What if Easter was a thing and they got to watch their kids or younger siblings crawl around on the ground looking for tiny plastic eggs?

Fanfiction authors can put in hours of work for one or two thousand words, let alone ten thousand words, fifty thousand words, a hundred thousand words. And all of these are free. There is absolutely no (legal) way to make money off of their fanworks, but they spent hours, days, weeks, months, sometimes even years, writing. It is so unnecessary to EXPECT or REQUIRE them to spend even more hours looking up shit that, no offense, almost no one is going to notice. No one is going to care that all of my combini prices are accurate or that I wrote a fic with a Japanese map of a train station that I had to backwards search three times to find an English version that I could read.

Not everyone has the attention span or ability to spend hours of research before writing a single word. Neurodivergent people are literally a thing yall. Instead of producing the perfectly pretty accurate version of Japan that people want to happen, what ACTUALLY happens is that the writer reads and reads and reads and either never finds the information they need or they lose the motivation to write.

^^^ (This does NOT apply to indigenous or native peoples, like Pacific Islanders or tribes that exist in real life. Please make sure that you portray tribal minorities accurately. If you can't find the information you need (assuming that the content of the series is not specifically about a tribe), please just make one up (and for fucks sake, recognize that a lot of what you've been taught about tribal practices, such as shit like human sacrifices or godly worship, is actually just propaganda.)

Not to mention, it often puts a wall in front of readers who would then need to pull up their OWN information (that may or may not be biased) just in order to interact with the fic ((okay, this one has a little bit of arguability when it comes to things like measurements and currency, because Americans don't know what a meter is and no one else knows what a foot is- either way, one of yall is going to have to look up measurements if they want to get a better understanding of the fic)). However, a lot of Americans who do write using 'feet, Fahrenheit, dollars,' also write for their American followers or friends (which really could go both ways).

On a less easily arguable side, most fic readers aren't going to open up a new tab just to search everything that the author has written (re the whole deep topics, not everyone wants to read about those sorts of things, either). Not only are you making it more difficult on the writer, but you're also making it more difficult for the reader who's now wondering why you decided to add in Grandma's Katsudon recipe, and whether or not the details you have added are accurate.

Some series, themselves, ignore Japanese norms! Piercings, hair dye, and incorrectly wearing one's uniform are frowned upon in Japanese schools, sometimes up to inflicting punishment on those students because of it. However, some anime characters still have naturally or dyed blond hair, and some of them still have piercings or wear their uniforms wrong. Some series aren't set specifically in Japan, but rather in a vague based-off-real-life Japan that's just slightly different (like Haikyuu and all of its different prefectures). Sometimes they're based on real places, but real places that have gone through major changes (like the Hero Academia series with its quirks and shit).

Fandom is not a full time job. Please stop treating it like it is one. Most people in fandoms have to engage in other things like school or work that most definitely take precedence over frantically Googling the cultural implications of dyeing your hair pink in Japan.

Art is also meant to be a creative freedom and is almost always a hobby, so there are a few cracks that tend to spark debate. Like I said, it is still a hobby, something that's meant to be fun (on this note!)

If trying new things and expanding your portfolio is genuinely making you upset, it's okay to take a break from it. You're not going to get it right on the first try and please, please to everyone out there critiquing artists' works, please take this into account before you post things.

I'm sorry to say, but, while it gets frustrating to see the same things done wrong over and over again, some people are genuinely trying. If it matters enough for you to point out, please offer solutions or resources that would possibly help the artist do better (honestly this could be said about a lot of online activism). I get that they should "want" to do better (and maybe they don't and your annoyance towards them is completely justified- again, as I said, if this becomes a repeated offense and they don't listen to or care about the people trying to help them, yeah you can be a bitch if it helps you feel better- just please don't assume that everyone is willfully ignorant of how hurtful/upsetting/annoying a certain way of portraying things is), but also WANTING to do better and ACTUALLY doing better are two different things.

Maybe they didn't realize what they were doing was inaccurate. Maybe they didn't have the right tutorials. Maybe they tried to look it up, but that failed them. Either way, to some- especially neurodivergent artists- just being told that their work is bad or racist or awful isn't going to make them want to search for better resources in order to be more accurate, it's just going to make them give up.

Also! In fic and in writing, no one is going to get it right on the first try. Especially at the stage where we creators ARE merely in fan spaces is a great time to "fuck around and find out", before we bring our willfully or accidentally racist shit into monetized media. Absolutely hold your fan creators to higher standards, but literally fan work has so little actual impact on popular media (and this goes for just about every debate about fan spaces), and constructive criticism as well as routine practice can mean worlds for representation in future media. NOT allowing for mistakes in micro spaces like fandoms is how you get genuinely harmful or just... bad... portrayals of minorities in popularized media that DOES have an impact on the greater public. OR you get a bunch of creators who are too afraid to walk out of their own little bubbles, because what if they get it wrong and everyone turns against them. It's better to just "stick with what they know" (hobbies are something that you are meant to get better at, even if that is a slow road- for all of my writers and artists out there, it does take time, but you will get it. To everyone else, please do speak up about things that are wrong, but don't make it all about what's wrong and please don't be rude. It's frustrating on both ends, so, if you can, please try not to escalate the situation more.)

Anyways, I'm tired of everyone holding fictional characters to American Puritanical standards, but I'm also tired of seeing every "stop Americanizing fandom" somehow loop into fanfiction and how all authors who don't make their fics as accurate as possible are actually just racist and perpetuating or enabling America's take over of the world or some shit.

Fan interpretation of published media is different than fan creation of non-monetized media. Americans dominating or monopolizing spaces meant for all fans (especially in a fandom that was never meant for them to begin with) is annoying and can be harmful sometimes. Americans writing out their own personal experience using random fictional characters (more often than not) isn't.

#just google it#better represent real life#if you tell a fic reader to ngl you're being pretty ableist and don't really have a good idea of how search engines work#also when people DO try to make culturally accurate fics often times at least one or two people will pop in and say 'actually that's wrong'#not to mention sometimes they might not even be right to begin with...#and okay once or twice it is what it is#but seriously if this keeps happening over and over most people are just going to stop writing or caring#fanfiction#fanfiction is literally free#fanfiction is free labor#adding layers upon layers of research and knowledge needed- on top of how difficult it can be to portray human emotion#it's not going to it's just going to make once starry eyed writers loss their ability to enjoy their work#and guess what#some ACTUALLY racist (or homophobic or transphobic or misogynistic) writer is going to swoop in not giving two fucks#and they're going to go on and get their work published because they don't care about accuracy


Note

So I’ve been thinking about doing PAC readings here on tumblr to help strengthen my intuition and just get better at reading but I’m nervous lol. You’re the PAC queen to me so I was wondering what are some pros and cons that you may have about doing general readings? — loved the newest reading as well, I picked pile 3 & 5🥺🥺

I'm happy you liked the reading!! 😊 Regarding your question, I'm going to compare it with free personal readings. Comparison below is only applicable for free online readings:

1) Energy and time

🧡 General pick-a-card: Doing a PAC reading is more exhausting than a personal reading. PAC reading requires you to tune into more energies and consider more situations; which can be confusing. You also need to do at least 2 piles. But if there are too few to choose from, the risk is that there might not be much variation, which can make it hard for many people to relate to the reading. Imo, 3-4 piles in one reading are ideal in balancing your needs with your audience's needs. It might still be exhausting if we are not used to it.

💜 Personal reading: Logically, it’s less exhausting than PAC unless you are dealing with heavy energy coming from one person. This can be done quicker than PAC reading, your intuition can just go with the strongest interpretation instead of weighing whether or not you should include different scenarios- simply because there is only one querent to focus on. The querent can (should tbh) give you a more specific context behind their question which will help you narrow down the interpretation, thus conserving your energy.

...

2) Accuracy

🧡 General pick-a-card: General readings are bound to be less accurate than personal readings, and the message might be harder to nail down because many interpretations are possible since we are talking about many different people/situations. So you might not know when you do something right and when you do something wrong; because different people might find different parts not/resonating. So who’s right/wrong? There's no way to know.

💜 Personal reading: You can be more sure when you do something right AND when you do something wrong, because you are dealing with one person/situation and you can give much more weight to the querent’s words when they correct you. Certainty allows you to fix or fine-tune your method based on the feedback you get. It leads to practical actions, which lead to better results and improvement.

...

3) Accountability

🧡 General pick-a-card: It's easier to hide behind general readings, readers can simply claim their mistakes or inaccuracy as "it's a general reading it's not meant to completely fit you" AND just leave it at that. Which doesn’t really help the reader if their goal is to improve their tarot reading skills.

Personally, I do acknowledge that general reading won’t be able to fit someone to a T (I mean, sometimes it can but don’t bet on it). But at the same time as someone who does PAC reading- I want mine to resonate with many people. So I do try to figure out what went wrong or how I could interpret something better- whenever I get negative feedback. But this is just my personal goal, and I am balancing it with the possibility that something might not be my mistake at all (eg. it could be due to the querent’s misunderstanding, their refusal to accept truth, the fact that it’s a general reading, etc).

I won’t immediately take it as my mistake, but I won’t immediately reject the possibility either.

💜 Personal reading: It's harder to hide behind personal readings. If you are not accurate, you are not accurate. There's no running away from it. You have to look at the issue, and think critically whether the 'inaccuracy' is coming from your querent (their biases, their refusal to accept truth, their lack of self-awareness, etc) or if it's because of your shortcomings (eg. exhaustion, lack of concentration, being superficial with interpretations, ignoring intuitive pulls, etc). Even if it’s coming from your querent, you can still try to figure out how you can handle similar situations better in the future.

...

4) Emotional reward

🧡 General pick-a-card: Doing PAC reading is more emotionally rewarding than free personal reading, simply because it reaches a larger audience, which increases your chances of getting feedback: more interaction, more often. It also gets you exposure. It's just a matter of probability. More people = more likely.

PAC is a better investment of time and energy if your goal is to gain exposure (it’s not easy, but it’s better than personal reading). It doesn’t hurt that you can still get feedback from people.

💜 Personal reading: When you do free personal reading, you are depending on one person to give you feedback, which they might not give, and other people might not care about the personal reading since it’s not for them thus they might not respond to it. This can be demotivating, it’s like talking to the void. But when the querent does come back and give you detailed feedback, it’s like receiving gold. It’s very emotionally rewarding because it gives you a clearer view of your skills, especially when you are told what you did right (because of the certainty I mentioned before).

Personal reading is a better investment if your goal is to hone your skills more, but like I said this is highly dependent on whether or not you get feedback. It’s hard to improve when you don’t get any feedback at all.

...

Conclusion:

Any tarot reading can help improve intuition, whether it’s general or personal, because what matters most in improving intuition and skills is practice and feedback; if you can get more practice and feedback from personal reading, then personal reading may be better for you, and vice versa.

If you choose to do PAC readings:

At first it might be hard to get interaction or feedback, unless you have a decent following already. Just stick to it, hopefully you’ll gain traction over time.

You can also approach other tarot readers to see if they are willing to give you some feedback on your general readings, but accept it if they don’t want to; people have reasons why they do/don’t do something.

It's better to have more piles and shorter messages, than fewer piles but longer messages (if you can’t do many piles with long messages). Give people more to choose, like I said 3-4 piles. But this is just my opinion, you can do a survey and see what people prefer.

Remember to use relevant tags for your post; check what tags people use for their PAC.

Good luck! 🔮🎴

#tarotblr#tarot tips#tarot community#it's a great idea to start with PAC in my opinion#despite it not having the 'certainty' that personal readings can give you#theegeminibabie


Text

Trust Your Data: How to Efficiently Filter Spam, Bots, & Other Junk Traffic in Google Analytics

Posted by Carlosesal

There is no doubt that Google Analytics is one of the most important tools you could use to understand your users' behavior and measure the performance of your site. There's a reason it's used by millions across the world.

But despite being such an essential part of the decision-making process for many businesses and blogs, I often find sites (of all sizes) that do little or no data filtering after installing the tracking code, which is a huge mistake.

Think of a Google Analytics property without filtered data as one of those styrofoam cakes with edible parts. It may seem genuine from the top, and it may even feel right when you cut a slice, but as you go deeper and deeper you find that much of it is artificial.

If you're one of those that haven’t properly configured their Google Analytics and you only pay attention to the summary reports, you probably won't notice that there's all sorts of bogus information mixed in with your real user data.

And as a consequence, you won't realize that your efforts are being wasted on analyzing data that doesn't represent the actual performance of your site.

To make sure you're getting only the real ingredients and prevent you from eating that slice of styrofoam, I'll show you how to use the tools that GA provides to eliminate all the artificial excess that inflates your reports and corrupts your data.

Common Google Analytics threats

As most of the people I've worked with know, I’ve always been obsessed with the accuracy of data, mainly because as a marketer/analyst there's nothing worse than realizing that you’ve made a wrong decision because your data wasn’t accurate. That’s why I’m continually exploring new ways of improving it.

As a result of that research, I wrote my first Moz post about the importance of filtering in Analytics, specifically about ghost spam, which was a significant problem at that time and still is (although to a lesser extent).

While the methods described there are still quite useful, I’ve since been researching solutions for other types of Google Analytics spam and a few other threats that might not be as annoying, but that are equally or even more harmful to your Analytics.

Let’s review, one by one.

Ghosts, crawlers, and other types of spam

The GA team has done a pretty good job handling ghost spam. The amount of it has been dramatically reduced over the last year, compared to the outbreak in 2015/2017.

However, the millions of current users and the thousands of new, unaware users that join every day, plus the majority's curiosity to discover why someone is linking to their site, make Google Analytics too attractive a target for the spammers to just leave it alone.

The same logic can be applied to any widely used tool: no matter what security measures it has, there will always be people trying to abuse its reach for their own interest. Thus, it's wise to add an extra security layer.

Take, for example, the most popular CMS: WordPress. Despite having some built-in security measures, if you don't take additional steps to protect it (like setting a strong username and password or installing a security plugin), you run the risk of being hacked.

The same happens to Google Analytics, but instead of plugins, you use filters to protect it.

In which reports can you look for spam?

Spam traffic will usually show as a Referral, but it can appear in any part of your reports, even in unexpected places like a language or page title.

Sometimes spammers will try to fool you by using misleading URLs that are very similar to known websites, or they may try to get your attention by using unusual characters and emojis in the source name.

Regardless of the type of spam, there are 3 things you should always do when you think you've found some in your reports:

Never visit the suspicious URL. Most of the time they'll try to sell you something or promote their service, but some spammers might have some malicious scripts on their site.

This goes without saying, but never install scripts from unknown sites; if for some reason you did, remove them immediately and scan your site for malware.

Filter out the spam in your Google Analytics to keep your data clean (more on that below).

If you're not sure whether an entry on your report is real, try searching for the URL in quotes (“example.com”). Your browser won’t open the site, but instead will show you the search results; if it is spam, you'll usually see posts or forums complaining about it.

If you still can’t find information about that particular entry, give me a shout — I might have some knowledge for you.

Bot traffic

A bot is a piece of software that runs automated scripts over the Internet for different purposes.

There are all kinds of bots. Some have good intentions, like the bots used to check copyrighted content or the ones that index your site for search engines, and others not so much, like the ones scraping your content to clone it.

2016 bot traffic report. Source: Incapsula

In either case, this type of traffic is not useful for your reporting and might be even more damaging than spam both because of the amount and because it's harder to identify (and therefore to filter it out).

It's worth mentioning that bots can be blocked from your server to stop them from accessing your site completely, but this usually involves editing sensitive files, which requires a high level of technical knowledge, and as I said before, there are good bots too.

So, unless you're receiving a direct attack that's skewing your resources, I recommend you just filter them in Google Analytics.

In which reports can you look for bot traffic?

Bots will usually show as Direct traffic in Google Analytics, so you'll need to look for patterns in other dimensions to be able to filter it out. For example, large companies that use bots to navigate the Internet will usually have a unique service provider.

I’ll go into more detail on this below.

Internal traffic

Most users get worried and anxious about spam, which is normal — nobody likes weird URLs showing up in their reports. However, spam isn't the biggest threat to your Google Analytics.

You are!

The traffic generated by people (and bots) working on the site is often overlooked despite the huge negative impact it has. The main reason it's so damaging is that in contrast to spam, internal traffic is difficult to identify once it hits your Analytics, and it can easily get mixed in with your real user data.

There are different types of internal traffic and different ways of dealing with it.

Direct internal traffic

Testers, developers, marketing team, support, outsourcing... the list goes on. Any member of the team that visits the company website or blog for any purpose could be contributing.

In which reports can you look for direct internal traffic?

Unless your company uses a private ISP domain, this traffic is tough to identify once it hits you, and will usually show as Direct in Google Analytics.

Third-party sites/tools

This type of internal traffic includes traffic generated directly by you or your team when using tools to work on the site; for example, management tools like Trello or Asana.

It also includes traffic coming from bots doing automatic work for you; for example, services used to monitor the performance of your site, like Pingdom or GTmetrix.

Some types of tools you should consider:

Project management

Social media management

Performance/uptime monitoring services

SEO tools

In which reports can you look for internal third-party tools traffic?

This traffic will usually show as Referral in Google Analytics.

Development/staging environments

Some websites use a test environment to make changes before applying them to the main site. Normally, these staging environments have the same tracking code as the production site, so if you don’t filter it out, all the testing will be recorded in Google Analytics.

In which reports can you look for development/staging environments?

This traffic will usually show as Direct in Google Analytics, but you can find it under its own hostname (more on this later).

Web archive sites and cache services

Archive sites like the Wayback Machine offer historical views of websites. The reason you can see those visits on your Analytics — even if they are not hosted on your site — is that the tracking code was installed on your site when the Wayback Machine bot copied your content to its archive.

One thing is for certain: when someone goes to check how your site looked in 2015, they don't have any intention of buying anything from your site — they're simply doing it out of curiosity, so this traffic is not useful.

In which reports can you look for traffic from web archive sites and cache services?

You can also identify this traffic on the hostname report.

A basic understanding of filters

The solutions described below use Google Analytics filters, so to avoid problems and confusion, you'll need a basic understanding of how they work and should check some prerequisites first.

Things to consider before using filters:

1. Create an unfiltered view.

Before you do anything, it's highly recommended to make an unfiltered view; it will help you track the efficacy of your filters. Plus, it works as a backup in case something goes wrong.

2. Make sure you have the correct permissions.

You will need edit permissions at the account level to create filters; edit permissions at view or property level won’t work.

3. Filters don’t work retroactively.

In GA, aggregated historical data can’t be deleted, at least not permanently. That's why the sooner you apply the filters to your data, the better.

4. The changes made by filters are permanent!

If your filter is not correctly configured because you didn’t enter the correct expression (missing relevant entries, a typo, an extra space, etc.), you run the risk of losing valuable data FOREVER; there is no way of recovering filtered data.

But don’t worry — if you follow the recommendations below, you shouldn’t have a problem.

5. Wait for it.

Most of the time you can see the effect of the filter within minutes or even seconds after applying it; however, officially it can take up to twenty-four hours, so be patient.

Types of filters

There are two main types of filters: predefined and custom.

Predefined filters are very limited, so I rarely use them. I prefer to use the custom ones because they allow regular expressions, which makes them a lot more flexible.

Within the custom filters, there are five types: exclude, include, lowercase/uppercase, search and replace, and advanced.

Here we will use the first two: exclude and include. We'll save the rest for another occasion.

Essentials of regular expressions

If you already know how to work with regular expressions, you can jump to the next section.

REGEX (short for regular expressions) are text strings prepared to match patterns with the use of some special characters. These characters help match multiple entries in a single filter.

Don’t worry if you don’t know anything about them. We will use only the basics, and for some filters, you will just have to COPY-PASTE the expressions I pre-built.

REGEX special characters

There are many special characters in REGEX, but for basic GA expressions we can focus on three:

^ The caret: used to indicate the beginning of a pattern,

$ The dollar sign: used to indicate the end of a pattern,

| The pipe or bar: means "OR," and it is used to indicate that you are starting a new pattern.

When using the pipe character, you should never ever:

Put it at the beginning of the expression,

Put it at the end of the expression,

Put 2 or more together.

Any of those will mess up your filter and probably your Analytics.
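To see why a misplaced pipe is so dangerous, here is a minimal sketch in Python. It assumes Python's re module behaves like GA's engine for these basic patterns, and the function name pipe_problems is my own invention, not something GA provides. A pipe at the start, at the end, or doubled creates an empty alternative, and an empty alternative matches every entry.

```python
import re

def pipe_problems(pattern):
    """Return a list of pipe mistakes found in a filter pattern.

    Simplistic on purpose: it does not account for escaped pipes (\\|).
    """
    problems = []
    if pattern.startswith("|"):
        problems.append("starts with a pipe")
    if pattern.endswith("|"):
        problems.append("ends with a pipe")
    if "||" in pattern:
        problems.append("contains two pipes in a row")
    return problems

# Hypothetical exclude pattern with a trailing pipe.
broken = "spam1|spam2|"
fixed = "spam1|spam2"

print(pipe_problems(broken))  # ['ends with a pipe']

# The empty alternative after the last pipe matches any text at all,
# so an exclude filter built from it would drop real visitors too.
print(bool(re.search(broken, "a-real-visitor.example")))  # True
print(bool(re.search(fixed, "a-real-visitor.example")))   # False
```

Running a check like this before pasting an expression into a filter is cheap insurance, given that filtered data can't be recovered.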

A simple example of REGEX usage

Let's say I go to a restaurant that has an automatic machine that makes fruit salad, and to choose the fruit, you have to use regular expressions.

This super machine has the following fruits to choose from: strawberry, orange, blueberry, apple, pineapple, and watermelon.

To make a salad with my favorite fruits (strawberry, blueberry, apple, and watermelon), I have to create a REGEX that matches all of them. Easy! Since the pipe character “|” means OR, I could do this:

REGEX 1: strawberry|blueberry|apple|watermelon

The problem with that expression is that REGEX also considers partial matches, and since pineapple also contains “apple,” it would be selected as well... and I don’t like pineapple!

To avoid that, I can use the other two special characters I mentioned before to make an exact match for apple: the caret “^” (begins here) and the dollar sign “$” (ends here). It will look like this:

REGEX 2: strawberry|blueberry|^apple$|watermelon

The expression will select precisely the fruits I want.

But let’s say for demonstration's sake that the fewer characters you use, the cheaper the salad will be. To optimize the expression, I can use the ability for partial matches in REGEX.

Since strawberry and blueberry both contain "berry," and no other fruit in the list does, I can rewrite my expression like this:

Optimized REGEX: berry|^apple$|watermelon

That’s it — now I can get my fruit salad with the right ingredients, and at a lower price.
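If you prefer to check the three fruit salad expressions yourself, the sketch below runs them in Python. It assumes Python's re.search is a fair stand-in for the salad machine (and for GA), since it also accepts partial matches; the helper name pick is hypothetical.

```python
import re

fruits = ["strawberry", "orange", "blueberry", "apple", "pineapple", "watermelon"]

def pick(pattern):
    """Return the fruits that the pattern matches anywhere in the name."""
    return [fruit for fruit in fruits if re.search(pattern, fruit)]

regex_1 = "strawberry|blueberry|apple|watermelon"
regex_2 = "strawberry|blueberry|^apple$|watermelon"
optimized = "berry|^apple$|watermelon"

# REGEX 1 also picks pineapple, because "apple" matches inside it.
print(pick(regex_1))
# ['strawberry', 'blueberry', 'apple', 'pineapple', 'watermelon']

# Anchoring apple with ^ and $ leaves pineapple out.
print(pick(regex_2))
# ['strawberry', 'blueberry', 'apple', 'watermelon']

# The shorter expression selects exactly the same fruits.
print(pick(optimized) == pick(regex_2))  # True
```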

3 ways of testing your filter expression

As I mentioned before, filter changes are permanent, so you have to make sure your filters and REGEX are correct. There are 3 ways of testing them:

Right from the filter window; just click on “Verify this filter,” quick and easy. However, it's not the most accurate since it only takes a small sample of data.

Using an online REGEX tester; very accurate and colorful, you can also learn a lot from these, since they show you exactly the matching parts and give you a brief explanation of why.

Using an in-table temporary filter in GA; you can test your filter against all your historical data. This is the most precise way of making sure you don’t miss anything.

If you're doing a simple filter or you have plenty of experience, you can use the built-in filter verification. However, if you want to be 100% sure that your REGEX is ok, I recommend you build the expression on the online tester and then recheck it using an in-table filter.

Quick REGEX challenge

Here's a small exercise to get you started. Go to this premade example with the optimized expression from the fruit salad case and test the first 2 REGEX I made. You'll see live how the expressions impact the list.

Now make your own expression to pay as little as possible for the salad.

Remember:

We only want strawberry, blueberry, apple, and watermelon;

The fewer characters you use, the less you pay;

You can do small partial matches, as long as they don’t include the forbidden fruits.

Tip: You can do it with as few as 6 characters.

Now that you know the basics of REGEX, we can continue with the filters below. But I encourage you to put “learn more about REGEX” on your to-do list — they can be incredibly useful not only for GA, but for many tools that allow them.

How to create filters to stop spam, bots, and internal traffic in Google Analytics

Back to our main event: the filters!

Where to start: To avoid being repetitive when describing the filters below, here are the standard steps you need to follow to create them:

Go to the admin section in your Google Analytics (the gear icon at the bottom left corner),

Under the View column (master view), click the button “Filters” (don’t click on “All filters” in the Account column),

Click the red button “+Add Filter”. If you don’t see it, or you can only apply/remove already created filters, then you don’t have edit permissions at the account level; ask your admin to create the filters or give you the permissions.

Then follow the specific configuration for each of the filters below.

The filter window is your best partner for improving the quality of your Analytics data, so it will be a good idea to get familiar with it.

Valid hostname filter (ghost spam, dev environments)

Prevents traffic from:

Ghost spam

Development hostnames

Scraping sites

Cache and archive sites

This filter may be the single most effective solution against spam. In contrast with other commonly shared solutions, the hostname filter is preventative, and it rarely needs to be updated.

Ghost spam earns its name because it never really visits your site. It’s sent directly to the Google Analytics servers using a feature called Measurement Protocol, a tool that under normal circumstances allows tracking from devices you wouldn’t imagine could be tracked, like coffee machines or refrigerators.

Real users pass through your server, then the data is sent to GA; hence it leaves valid information. Ghost spam is sent directly to GA servers, without knowing your site URL; therefore all data left is fake. Source: carloseo.com

The spammer abuses this feature to simulate visits to your site, most likely using automated scripts to send traffic to randomly generated tracking codes (UA-0000000-1).

Since these hits are random, the spammers don't know who they're hitting; for that reason, ghost spam will always leave a fake or (not set) hostname. Following that logic, a filter that only includes valid hostnames will leave all ghost spam out.

Where to find your hostnames

Now here comes the “tricky” part. To create this filter, you will need to make a list of your valid hostnames.

A list of what!?

Essentially, a hostname is any place where your GA tracking code is present. You can get this information from the hostname report:

Go to Audience > Select Network > At the top of the table change the primary dimension to Hostname.

If your Analytics is active, you should see at least one: your domain name. If you see more, scan through them and make a list of all the ones that are valid for you.

Types of hostname you can find

The good ones:

Type: Example

Your domain and subdomains: yourdomain.com

Tools connected to your Analytics: YouTube, MailChimp

Payment gateways: Shopify, booking systems

Translation services: Google Translate

Mobile speed-up services: Google weblight

The bad ones (by bad, I mean not useful for your reports):

Type: Example/Description

Staging/development environments: staging.yourdomain.com

Internet archive sites: web.archive.org

Scraping sites that don’t bother to trim the content: The URL of the scraper

Spam: Most of the time they will show their URL, but sometimes they may use the name of a known website to try to fool you. If you see a URL that you don’t recognize, just think, “do I manage it?” If the answer is no, then it isn't your hostname.

(not set) hostname: It usually comes from spam. On rare occasions it's related to tracking code issues.

Below is an example of my hostname report, taken from the unfiltered view, of course; the master view is squeaky clean.

Now with the list of your good hostnames, make a regular expression. If you only have your domain, then that is your expression; if you have more, create an expression with all of them as we did in the fruit salad example:

Hostname REGEX (example) yourdomain.com|hostname2|hostname3|hostname4

Important! You cannot create more than one “Include hostname filter”; if you do, you will exclude all data. So try to fit all your hostnames into one expression (you have 255 characters).

The “valid hostname filter” configuration:

Filter Name: Include valid hostnames

Filter Type: Custom > Include

Filter Field: Hostname

Filter Pattern: [hostname REGEX you created]
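As a rough sketch, the hostname expression can also be assembled and checked with a few lines of Python before you paste it into the filter. The function names and the sample hostnames are hypothetical, and escaping the dots is my own addition (an unescaped dot matches any character, so it is slightly looser than it looks). The 255-character limit is the one mentioned above.

```python
import re

MAX_PATTERN_LENGTH = 255  # limit for the filter pattern, as noted in the article

def build_hostname_pattern(hostnames):
    """Join valid hostnames into one include expression."""
    # Escape dots so "." only matches a literal dot; skip empty entries
    # so the expression never contains an empty alternative.
    escaped = [h.replace(".", r"\.") for h in hostnames if h]
    if not escaped:
        raise ValueError("at least one hostname is required")
    pattern = "|".join(escaped)
    if len(pattern) > MAX_PATTERN_LENGTH:
        raise ValueError("pattern is longer than 255 characters")
    return pattern

# Hypothetical list taken from the hostname report.
pattern = build_hostname_pattern(["yourdomain.com", "hostname2", "hostname3"])
print(pattern)  # yourdomain\.com|hostname2|hostname3

def is_kept(hostname):
    """True if the include filter would keep a hit with this hostname."""
    return re.search(pattern, hostname) is not None

print(is_kept("www.yourdomain.com"))           # True, subdomains match too
print(is_kept("(not set)"))                    # False
print(is_kept("yourdomain-com.spam.example"))  # False thanks to the escaped dot
```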

Campaign source filter (Crawler spam, internal sources)

Prevents traffic from:

Crawler spam

Internal third-party tools (Trello, Asana, Pingdom)

Important note: Even if these hits are shown as a referral, the field you should use in the filter is “Campaign source” — the field “Referral” won’t work.

Filter for crawler spam

The second most common type of spam is crawler spam. These hits also pretend to be valid visits by leaving a fake source URL, but in contrast with ghost spam, they do access your site. Therefore, they leave a correct hostname.

You will need to create an expression the same way as the hostname filter, but this time, you will put together the source/URLs of the spammy traffic. The difference is that you can create multiple exclude filters.

Crawler REGEX (example) spam1|spam2|spam3|spam4

Crawler REGEX (pre-built) As I promised, here are the latest pre-built crawler expressions that you just need to copy/paste.

The “crawler spam filter” configuration:

Filter Name: Exclude crawler spam 1

Filter Type: Custom > Exclude

Filter Field: Campaign source

Filter Pattern: [crawler REGEX]
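Since you can create several exclude filters, a long list of spammy sources can be split across them. Below is a minimal sketch of that idea in Python; the function name is hypothetical, the source names are placeholders, and I'm assuming the same 255-character limit applies to each exclude pattern.

```python
def split_into_patterns(sources, max_length=255):
    """Pack sources into as few pipe-separated patterns as possible,
    keeping each pattern within max_length characters."""
    patterns = []
    current = ""
    for source in sources:
        if not source:
            continue  # an empty entry would create a double pipe
        if len(source) > max_length:
            raise ValueError("a single source is longer than the limit")
        candidate = source if not current else current + "|" + source
        if len(candidate) <= max_length:
            current = candidate
        else:
            patterns.append(current)  # this filter is full, start a new one
            current = source
    if current:
        patterns.append(current)
    return patterns

# Placeholder sources: spam1, spam2, ... spam60
sources = ["spam" + str(i) for i in range(1, 61)]
patterns = split_into_patterns(sources)

for number, pattern in enumerate(patterns, start=1):
    print("Exclude crawler spam", number, "->", len(pattern), "characters")
```

Each resulting pattern goes into its own filter (“Exclude crawler spam 1,” “Exclude crawler spam 2,” and so on), and none of them starts or ends with a pipe.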

Filter for internal third-party tools

Although you can combine your crawler spam filter with internal third-party tools, I like to have them separated, to keep them organized and more accessible for updates.

The “internal tools filter” configuration:

Filter Name: Exclude internal tool sources

Filter Type: Custom > Exclude

Filter Field: Campaign source

Filter Pattern: [tool source REGEX]

Internal Tools REGEX (example) trello|asana|redmine

If one of the tools you use internally also sends you traffic from real visitors, don’t filter it. Instead, use the “Exclude Internal URL Query” below.

For example, I use Trello, but since I share analytics guides on my site, some people link them from their Trello accounts.

Filters for language spam and other types of spam

The previous two filters will stop most of the spam; however, some spammers use different methods to bypass the previous solutions.

For example, they try to confuse you by showing one of your valid hostnames combined with a well-known source like Apple, Google, or Moz. Even my site has been a target (not saying that everyone knows my site; it just looks like the spammers don’t agree with my guides).

However, even if the source and host look fine, the spammer injects their message in another part of your reports, such as the keyword, the page title, or even the language.

In those cases, you will have to take the dimension/report where you find the spam and choose that name in the filter. It's important to consider that the name of the report doesn't always match the name in the filter field:

Report name: Filter field

Language: Language settings

Referral: Campaign source

Organic Keyword: Search term

Service Provider: ISP Organization

Network Domain: ISP Domain

Here are a couple of examples.

The “language spam/bot filter” configuration:

Filter Name: Exclude language spam

Filter Type: Custom > Exclude

Filter Field: Language settings

Filter Pattern: [Language REGEX]

Language Spam REGEX (Prebuilt) \s[^\s]*\s|.{15,}|\.|,|^c$

The expression above excludes fake languages that don't meet the required format. For example, take these weird messages appearing instead of regular languages like en-us or es-es:

Examples of language spam
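To get a feel for what the pre-built language expression catches, here is a small sketch that runs it against a handful of values in Python. The sample strings are made up for illustration, and I'm assuming Python's re module treats this expression the same way GA does.

```python
import re

# The pre-built language spam expression from above.
LANGUAGE_SPAM = re.compile(r"\s[^\s]*\s|.{15,}|\.|,|^c$")

# Hypothetical values for the language dimension.
samples = [
    "en-us",                            # regular language code
    "es-es",                            # regular language code
    "zh-cn",                            # regular language code
    "c",                                # matched by ^c$
    "free gift here",                   # two spaces around a word
    "secret.example You are invited!",  # contains a dot and is 15+ characters
    "vote, now",                        # contains a comma
]

flagged = [value for value in samples if LANGUAGE_SPAM.search(value)]
kept = [value for value in samples if not LANGUAGE_SPAM.search(value)]

print(kept)     # ['en-us', 'es-es', 'zh-cn']
print(flagged)  # everything else
```

Each alternative targets one symptom: spaces around a word, unusually long values, dots, commas, or the lone value "c".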

The organic/keyword spam filter configuration:

Filter Name: Exclude organic spam

Filter Type: Custom > Exclude

Filter Field: Search term

Filter Pattern: [keyword REGEX]

Filters for direct bot traffic

Bot traffic is a little trickier to filter because it doesn't leave a source like spam, but it can still be filtered with a bit of patience.

The first thing you should do is enable bot filtering. In my opinion, it should be enabled by default.

Go to the Admin section of your Analytics and click on View Settings. You will find the option “Exclude all hits from known bots and spiders” below the currency selector:

It would be wonderful if this took care of every bot; that would be a dream come true. However, there's a catch: the key here is the word “known.” This option only takes care of known bots included in the “IAB known bots and spiders list.” That's a good start, but far from enough.

There are a lot of “unknown” bots out there that are not included in that list, so you'll have to play detective and search for patterns of direct bot traffic through different reports until you find something that can be safely filtered without risking your real user data.

To start your bot trail search, click on the Segment box at the top of any report, and select the “Direct traffic” segment.

Then navigate through different reports to see if you find anything suspicious.

Some reports to start with:

Service provider

Browser version

Network domain

Screen resolution

Flash version

Country/City

Signs of bot traffic

Although bots are hard to detect, there are some signals you can follow:

An unnatural increase of direct traffic