#transformers powl

Text

Poll: Round 1d #1

*Reminder that "break up" is being used loosely here, and not all relationships may be romantic in nature.

Propaganda under cut:

Powl and Mesothulas (Tarantulas):

Prowl is a high-ranking Autobot. Mesothulas is a mad scientist Prowl isolates and contracts to make stuff for the Autobots. Among the things Mesothulas builds for Prowl are a kid for the two of them, a machine that determines guilt that is used to wild success on innocent people who just feel guilty, a bomb that is dropped on a Neutral city that Prowl blames on the Decepticons, and a pocket-dimension prison called the Noisemaze that erodes your sense of self and makes you go insane. After bombing the Neutral city and having the survivors sign up with the Autobots, Prowl decides that he can’t do this anymore. Prowl asks Impactor to destroy everything he and Mesothulas have built; Impactor shoves Mesothulas into the Noisemaze and destroys everything, except neither he nor Prowl can bring themselves to kill the kid, so Prowl gives him to a better role model to look after him. (he took the kid in the divorce x] )

After a while inside the Noisemaze, Mesothulas remembers himself enough to press a button and teleport out. He becomes Tarantulas and rebuilds himself into a spider furry with a tarantula alt mode. He’s still completely obsessed with Prowl. (eventually he kidnaps Prowl to try to get him to come back, which leads into the present-day bits of Sins of the Wreckers.)

Jason McConnell and Peter Simmonds:

They are secretly boyfriends and well-known to be roommates at a Catholic boarding school. In their graduating year, Peter wants to come out as gay to his (Peter's) mother, and has requested several times that Jason be there for moral support. Jason does NOT want to be outed by association, however, so they break up with each other via ballad/rock duet (the song "Ever After").

#transformers#transformers powl#transformers mesothulas#transformers trantulas#jason mcconnell#peter simmonds#bare a pop opera#poll tournament#tournament poll#poll

10 notes

Text

i hope they make earthspark prowl incredibly mean and awful and they talk about him being a cop and he's a big hater of the kids and the emphasis they have on emotional bonds. i also hope he gets a lot of screentime and eventually gets forced into their extended family

#pls pls spls prowl in s2 im begging#got so many powl thoughts in my brain#god it is just one fictional robot after another in there taking turns day by day#tfe#transformers earthspark#prowl#tf prowl#my art

3K notes

Text

day 30: cold

#transformers#prowl#chip chase#maccadam#g1 prowl#if you want it to be#i draw one powl in all locations. an ultimate pal soldered together from#all the best parts of every prowl#the big horns… the sweet rack… the little cat toes#the cute ribs of that earthspark one

104 notes

Text

I love this asshole and I want him to get therapy and also get slapped 💜

thinking about Prowler thinking about how nobody figured out he was being controlled thinking about how everyone sees him as the bad guy even if they’re on the same side thinking about how even STARSCREAM becomes an important figure with (some) allies yet Prowl goes feral because of everything thinking about how the only people to support Prowl were the ones he was forced to mind-meld with thinking about all his relationships ending with others hating him thinking about

#i can fix him#powl my favourite worst guy my sopping wet loser#prowl#prowler#transformers idw#tf idw

712 notes

Text

powl is very fascinating, and there's an interesting point of discussion about where the line between "correct and justified in-universe" and "unfairly punished out-of-universe" starts. while i would never ever even think about saying prowl was correct in basically anything he did, there is a lot to say about hypocrisy in barber's narrative and its treatment of characters like prowl, who challenge OP in a way the narrative cannot easily refute on the basis of argument and so dispose of on the basis of morality (ie: prowl is not kind, so his argument is worth less). however, you can also argue that this is intentional in-universe, to demonstrate the oppressiveness of OP's autobots and the double standards of his ideology (though looking at it strictly through this lens discards the implications of particular characters' treatment, namely jazz, and how that may have been affected by out-of-universe racial biases, or how it reads with those biases in mind even if unintentional). basically what i mean is that idw1 phase 2 robots in disguise/the transformers/optimus prime is both the best, most interesting transformers story ever written and a barely glued together mess that tripped over every theme it tried to handle, in such a way that it coincidentally became a highly effective deconstruction of the liberal status quo it sought to defend.

24 notes

Text

*scrolling through tumblr half asleep after a hectic day.*

Me *sees my favourite transformer on my feed*: [Gasp] "powl"

*proceeds to giggle for a few minutes at the way I said that*

0 notes

Photo

my friends made me simp for Prowl so this was my response. He is looking at u

93 notes

Text

9 notes

Text

Google gobbling DeepMind’s health app might be the trust shock we need

DeepMind’s health app being gobbled by parent Google is both unsurprising and deeply shocking.

First thoughts should not be allowed to gloss over what is really a gut punch.

It’s unsurprising because the AI galaxy brains at DeepMind always looked like unlikely candidates for the quotidian, margins-focused business of selling and scaling software as a service. The app in question, a clinical task management and alerts app called Streams, does not involve any AI.

The algorithm it uses was developed by the UK’s own National Health Service, a branch of which DeepMind partnered with to co-develop Streams.

In a blog post announcing the hand-off yesterday, “scaling” was the precise word the DeepMind founders chose to explain passing their baby to Google. And if you want to scale apps, Google does have the well-oiled machinery to do it.

At the same time Google has just hired Dr. David Feinberg, from US health service organization Geisinger, to a new leadership role which CNBC reports as being intended to tie together multiple, fragmented health initiatives and coordinate its moves into the $3 trillion healthcare sector.

The company’s stated mission of ‘organizing the world’s information and making it universally accessible and useful’ is now seemingly being applied to its own rather messy corporate structure — to try to capitalize on growing opportunities for selling software to clinicians.

That health tech opportunities are growing is clear.

In the UK, where Streams and DeepMind Health operate, the minister for health, Matt Hancock, a recent transplant to the portfolio from the digital brief, brought his love of apps with him — and almost immediately made technology one of his stated priorities for the NHS.

Last month he fleshed his thinking out further, publishing a future of healthcare policy document containing a vision for transforming how the NHS operates — to plug in what he called “healthtech” apps and services, to support tech-enabled “preventative, predictive and personalised care”.

Which really is a clarion call to software makers to clap fresh eyes on the sector.

In the UK the legwork that DeepMind has done on the ‘apps for clinicians’ front — finding a willing NHS Trust to partner with; getting access to patient data, with the Royal Free passing over the medical records of some 1.6 million people as Streams was being developed in the autumn of 2015; inking a bunch more Streams deals with other NHS Trusts — is now being folded right back into Google.

And this is where things get shocking.

Trust demolition

Shocking because DeepMind handing the app to Google — and therefore all the patient data that sits behind it — goes against explicit reassurances made by DeepMind’s founders that there was a firewall sitting between its health experiments and its ad tech parent, Google.

“In this work, we know that we’re held to the highest level of scrutiny,” wrote DeepMind co-founder Mustafa Suleyman in a blog post in July 2016 as controversy swirled over the scope and terms of the patient data-sharing arrangement it had inked with the Royal Free. “DeepMind operates autonomously from Google, and we’ve been clear from the outset that at no stage will patient data ever be linked or associated with Google accounts, products or services.”

As law and technology academic Julia Powles (who co-wrote a research paper on DeepMind’s health foray with Hal Hodson, the New Scientist journalist who obtained and published the original, now defunct, patient data-sharing agreement) noted via Twitter: “This isn’t transparency, it’s trust demolition.”

This is TOTALLY unacceptable. DeepMind repeatedly, unconditionally promised to *never* connect people's intimate, identifiable health data to Google. Now it's announced…exactly that. This isn't transparency, it's trust demolition https://t.co/EWM7lxKSET (grabs: Powles & Hodson) pic.twitter.com/3BLQvH3dg1

— Julia Powles (@juliapowles) November 13, 2018

Turns out DeepMind’s patient data firewall was nothing more than a verbal assurance — and two years later those words have been steamrollered by corporate reconfiguration, as Google and Alphabet elbow DeepMind’s team aside and prepare to latch onto a burgeoning new market opportunity.

Any fresh assurances that people’s sensitive medical records will never be used for ad targeting will now have to come direct from Google. And they’ll just be words too. So put that in your patient trust pipe and smoke it.

The Streams app data is also — to be clear — personal data that the individuals concerned never consented to being passed to DeepMind. Let alone to Google.

Patients weren’t asked for their consent nor even consulted by the Royal Free when it quietly inked a partnership with DeepMind three years ago. It was only months later that the initiative was even made public, although the full scope and terms only emerged thanks to investigative journalism.

Transparency was lacking from the start.

This is why, after a lengthy investigation, the UK’s data protection watchdog ruled last year that the Trust had breached UK law — saying people would not have reasonably expected their information to be used in such a way.

Nor should they. If you ended up in hospital with a broken leg you’d expect the hospital to have your data. But wouldn’t you be rather shocked to learn — shortly afterwards or indeed years and years later — that your medical records are now sitting on a Google server because Alphabet’s corporate leaders want to scale a fat healthtech profit?

In the same 2016 blog post, entitled “DeepMind Health: our commitment to the NHS”, Suleyman made a point of noting how it had asked “a group of respected public figures to act as Independent Reviewers, to examine our work and publish their findings”, further emphasizing: “We want to earn public trust for this work, and we don’t take that for granted.”

Fine words indeed. And the panel of independent reviewers that DeepMind assembled to act as an informal watchdog in patients’ and consumers’ interests did indeed contain well-respected public figures, chaired by former Liberal Democrat MP Julian Huppert.

The panel was provided with a budget by DeepMind to carry out investigations of the reviewers’ choosing. It went on to produce two annual reports — flagging a number of issues of concern, including, most recently, warning that Google might be able to exert monopoly power as a result of the fact Streams is being contractually bundled with streaming and data access infrastructure.

The reviewers also worried whether DeepMind Health would be able to insulate itself from Alphabet’s influence and commercial priorities — urging DeepMind Health to “look at ways of entrenching its separation from Alphabet and DeepMind more robustly, so that it can have enduring force to the commitments it makes”.

It turns out that was a very prescient concern since Alphabet/Google has now essentially dissolved the bits of DeepMind that were sticking in its way.

Including — it seems — the entire external reviewer structure…

"We encourage DeepMind Health to look at ways of entrenching its separation from Alphabet and DeepMind more robustly, so that it can have enduring force to the commitments it makes." !

— Eerke Boiten (@EerkeBoiten) November 13, 2018

A DeepMind spokesperson told us that the panel’s governance structure was created for DeepMind Health “as a UK entity”, adding: “Now Streams is going to be part of a global effort this is unlikely to be the right structure in the future.”

It turns out — yet again — that tech industry DIY ‘guardrails’ and self-styled accountability are about as reliable as verbal assurances. Which is to say, not at all.

This is also both deeply unsurprising and horribly shocking. The shock is really that big tech keeps getting away with this.

None of the self-generated ‘trust and accountability’ structures that tech giants are now routinely popping up with entrepreneurial speed — to act as public curios and talking shops to draw questions away from what they’re actually doing as people’s data gets sucked up for commercial gain — can in fact be trusted.

They are a shiny distraction from due process. Or to put it more succinctly: It’s PR.

There is no accountability if rules are self-styled and therefore cannot be enforced because they can just get overwritten and goalposts moved at corporate will.

Nor can there be trust in any commercial arrangement unless it has adequately bounded — and legal — terms.

This stuff isn’t rocket science nor even medical science. So it’s quite the pantomime dance that DeepMind and Google have been merrily leading everyone on.

It’s almost as if they were trying to cause a massive distraction — by sicking up faux discussions of trust, fairness and privacy — to waste good people’s time while they got on with the lucrative business of mining everyone’s data.

Google gobbling DeepMind’s health app might be the trust shock we need was originally posted by ViralAutobots News - Feed

0 notes

Text

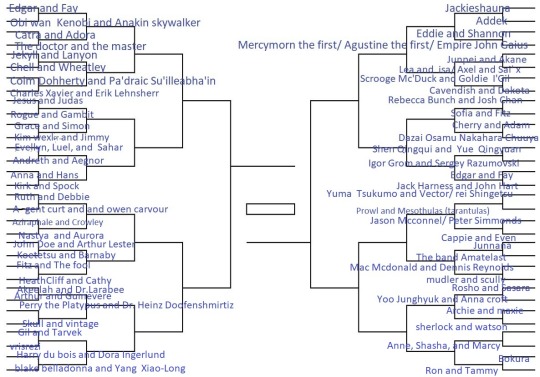

Bracket!

It's done! 64 competitors are in this.

It's so small that I will put the matchups under the cut, but know that the first 8 polls, Round 1a, will release on Sunday, August 20th, 3pm EST, and will last 1 week.

If anyone has ANY specific photos they want me to use for anyone here, please send them to me. (Also, if you see anyone on this list you like, feel free to send in more propaganda for them, because I may put it in their poll and some people here don't have any lol)

Round 1a

Edgar and Fay from Dolls of New Albion vs Obi-Wan Kenobi and Anakin Skywalker from Star Wars

Catra and Adora from She-Ra vs the Doctor and the Master from Doctor Who

Jekyll and Lanyon from The Glass Scientists vs Chell and Wheatley from Portal

Colm Doherty and Pádraic Súilleabháin from The Banshees of Inisherin vs Charles Xavier and Erik Lehnsherr from X-Men

Jesus and Judas from Jesus Christ Superstar vs Rogue and Gambit from X-Men

Grace and Simon from Infinity Train vs Kim Wexler and Jimmy from Better Call Saul

Evellyn, Luel, and Sahar from the Luna Story picross/coloring book apps vs Andreth and Aegnor from The Silmarillion!

Anna and Hans from Frozen vs Kirk and Spock from Star Trek

Round 1b

Ruth and Debbie from GLOW vs Agent Curt and Owen Carvour from Spies Are Forever

Aziraphale & Crowley from Good Omens vs Nastya and Aurora from The Mechanisms

John Doe and Arthur Lester from Malevolent vs Kotetsu and Barnaby from Tiger & Bunny

Fitz and the Fool from Realm of the Elderlings vs Heathcliff and Cathy from Wuthering Heights

Akeelah and Dr. Larabee from Akeelah and the Bee vs Arthur and Guinevere from Arthuriana

Perry the Platypus and Dr. Heinz Doofenshmirtz from Phineas and Ferb vs Skull and Vintage from Splatoon

Gil and Tarvek from Girl Genius vs Vrisrezi from Homestuck

Harry Du Bois and Dora Ingerlund from Disco Elysium vs Blake Belladonna and Yang Xiao-Long from RWBY

Round 1c

Jackieshannua from Yellowjackets vs Addek from Grey's Anatomy

Eddie and Shannon from 9-1-1 vs Mercymorn the First/Augustine the First/Emperor John Gaius from The Locked Tomb series

Junpei and Akane from Zero Escape vs Lea & Isa / Axel & Saïx from Kingdom Hearts

Scrooge McDuck and Goldie O'Gilt from DuckTales (2017) vs Cavendish and Dakota from Milo Murphy's Law

Rebecca Bunch and Josh Chan from Crazy Ex-Girlfriend vs Sophie and Fitz from Keeper of the Lost Cities

Cherry and Adam from SK8 the Infinity vs Dazai Osamu and Nakahara Chuuya from Bungo Stray Dogs

Shen Qingqiu & Yue Qingyuan from Scum Villain’s Self-Saving System vs Igor Grom and Sergey Razumovsky from Major Grom: Plague Doctor

Jack Harkness and John Hart from Torchwood vs Yuma Tsukumo and Vector/Rei Shingetsu from Yu-Gi-Oh! Zexal

Round 1d

Powl and Mesothulas (Tarantulas) from Transformers vs Jason McConnell and Peter Simmonds from Bare: A Pop Opera

Cappie and Evan from Greek (2007) vs Junnana from Revue Starlight

The band Amatelast from Show By Rock! vs Mac McDonald and Dennis Reynolds from It's Always Sunny in Philadelphia

Mulder and Scully from The X-Files vs Rosho and Sasara from Hypnosis Microphone!

Yoo Junghyuk and Anna Croft from Omniscient Reader vs Archie and Maxie from Pokémon

Sherlock and Watson from Blackeyed Theatre's Valley of Fear vs Anna, Sasha, and Marcy from Amphibia

The two boys from Bokura vs Ron and Tammy from Parks and Recreation

33 notes

·

View notes

Text

Google gobbling DeepMind’s health app might be the trust shock we need

DeepMind’s health app being gobbled by parent Google is both unsurprising and deeply shocking.

First thoughts should not be allowed to gloss over what is really a gut punch.

It’s unsurprising because the AI galaxy brains at DeepMind always looked like unlikely candidates for the quotidian, margins-focused business of selling and scaling software as a service. The app in question, a clinical task management and alerts app called Streams, does not involve any AI.

The algorithm it uses was developed by the UK’s own National Health Service, a branch of which DeepMind partnered with to co-develop Streams.

In a blog post announcing the hand-off yesterday, “scaling” was the precise word the DeepMind founders chose to explain passing their baby to Google . And if you want to scale apps Google does have the well oiled machinery to do it.

At the same time Google has just hired Dr. David Feinberg, from US health service organization Geisinger, to a new leadership role which CNBC reports as being intended to tie together multiple, fragmented health initiatives and coordinate its moves into the $3TR healthcare sector.

The company’s stated mission of ‘organizing the world’s information and making it universally accessible and useful’ is now seemingly being applied to its own rather messy corporate structure — to try to capitalize on growing opportunities for selling software to clinicians.

That health tech opportunities are growing is clear.

In the UK, where Streams and DeepMind Health operates, the minister for health, Matt Hancock, a recent transplant to the portfolio from the digital brief, brought his love of apps with him — and almost immediately made technology one of his stated priorities for the NHS.

Last month he fleshed his thinking out further, publishing a future of healthcare policy document containing a vision for transforming how the NHS operates — to plug in what he called “healthtech” apps and services, to support tech-enabled “preventative, predictive and personalised care”.

Which really is a clarion call to software makers to clap fresh eyes on the sector.

In the UK the legwork that DeepMind has done on the ‘apps for clinicians’ front — finding a willing NHS Trust to partner with; getting access to patient data, with the Royal Free passing over the medical records of some 1.6 million people as Streams was being developed in the autumn of 2015; inking a bunch more Streams deals with other NHS Trusts — is now being folded right back into Google.

And this is where things get shocking.

Trust demolition

Shocking because DeepMind handing the app to Google — and therefore all the patient data that sits behind it — goes against explicit reassurances made by DeepMind’s founders that there was a firewall sitting between its health experiments and its ad tech parent, Google.

“In this work, we know that we’re held to the highest level of scrutiny,” wrote DeepMind co-founder Mustafa Suleyman in a blog post in July 2016 as controversy swirled over the scope and terms of the patient data-sharing arrangement it had inked with the Royal Free. “DeepMind operates autonomously from Google, and we’ve been clear from the outset that at no stage will patient data ever be linked or associated with Google accounts, products or services.”

As law and technology academic Julia Powles, who co-wrote a research paper on DeepMind’s health foray with the New Scientist journalist, Hal Hodson, who obtained and published the original (now defunct) patient data-sharing agreement, noted via Twitter: “This isn’t transparency, it’s trust demolition.”

This is TOTALLY unacceptable. DeepMind repeatedly, unconditionally promised to *never* connect people's intimate, identifiable health data to Google. Now it's announced…exactly that. This isn't transparency, it's trust demolition https://t.co/EWM7lxKSET (grabs: Powles & Hodson) pic.twitter.com/3BLQvH3dg1

— Julia Powles (@juliapowles) November 13, 2018

Turns out DeepMind’s patient data firewall was nothing more than a verbal assurance — and two years later those words have been steamrollered by corporate reconfiguration, as Google and Alphabet elbow DeepMind’s team aside and prepare to latch onto a burgeoning new market opportunity.

Any fresh assurances that people’s sensitive medical records will never be used for ad targeting will now have to come direct from Google. And they’ll just be words too. So put that in your patient trust pipe and smoke it.

The Streams app data is also — to be clear — personal data that the individuals concerned never consented to being passed to DeepMind. Let alone to Google.

Patients weren’t asked for their consent nor even consulted by the Royal Free when it quietly inked a partnership with DeepMind three years ago. It was only months later that the initiative was even made public, although the full scope and terms only emerged thanks to investigative journalism.

Transparency was lacking from the start.

This is why, after a lengthy investigation, the UK’s data protection watchdog ruled last year that the Trust had breached UK law — saying people would not have reasonably expected their information to be used in such a way.

Nor should they. If you ended up in hospital with a broken leg you’d expect the hospital to have your data. But wouldn’t you be rather shocked to learn — shortly afterwards or indeed years and years later — that your medical records are now sitting on a Google server because Alphabet’s corporate leaders want to scale a fat healthtech profit?

In the same 2016 blog post, entitled “DeepMind Health: our commitment to the NHS”, Suleyman made a point of noting that DeepMind had asked “a group of respected public figures to act as Independent Reviewers, to examine our work and publish their findings”, further emphasizing: “We want to earn public trust for this work, and we don’t take that for granted.”

Fine words indeed. And the panel of independent reviewers that DeepMind assembled to act as an informal watchdog in patients’ and consumers’ interests did contain well-respected public figures, and was chaired by former Liberal Democrat MP Julian Huppert.

The panel was given a budget by DeepMind to carry out investigations of the reviewers’ choosing. It went on to produce two annual reports flagging a number of concerns, most recently warning that Google might be able to exert monopoly power because Streams is being contractually bundled with streaming and data access infrastructure.

The reviewers also worried whether DeepMind Health would be able to insulate itself from Alphabet’s influence and commercial priorities — urging DeepMind Health to “look at ways of entrenching its separation from Alphabet and DeepMind more robustly, so that it can have enduring force to the commitments it makes”.

That turned out to be a very prescient concern, since Alphabet/Google has now essentially dissolved the parts of DeepMind that were standing in its way.

Including — it seems — the entire external reviewer structure…

"We encourage DeepMind Health to look at ways of entrenching its separation from Alphabet and DeepMind more robustly, so that it can have enduring force to the commitments it makes." !

— Eerke Boiten (@EerkeBoiten) November 13, 2018

A DeepMind spokesperson told us that the panel’s governance structure was created for DeepMind Health “as a UK entity”, adding: “Now Streams is going to be part of a global effort this is unlikely to be the right structure in the future.”

It turns out — yet again — that tech industry DIY ‘guardrails’ and self-styled accountability are about as reliable as verbal assurances. Which is to say, not at all.

This is also both deeply unsurprising and horribly shocking. The real shock is that big tech keeps getting away with this.

None of the self-generated ‘trust and accountability’ structures that tech giants now routinely spin up with entrepreneurial speed can in fact be trusted. They act as public curios and talking shops, drawing questions away from what the companies are actually doing as people’s data gets sucked up for commercial gain.

They are a shiny distraction from due process. Or to put it more succinctly: It’s PR.

There is no accountability when rules are self-styled: they cannot be enforced, because they can simply be overwritten, and the goalposts moved, at corporate will.

Nor can there be trust in any commercial arrangement unless it has adequately bounded — and legal — terms.

This stuff isn’t rocket science, or even medical science. So it’s quite the pantomime dance that DeepMind and Google have been merrily leading everyone on.

It’s almost as if they were trying to cause a massive distraction — by sicking up faux discussions of trust, fairness and privacy — to waste good people’s time while they got on with the lucrative business of mining everyone’s data.

via TechCrunch