#schölkopf

Photo

#Repost - @gerdycoool65 by @elbadipalace Palace El Badi #marrakech #marrakesh #marrakech🇲🇦 #marrakesch #palace #palast #elbadipalace #türe #door #schölkopf #schoelkopf #historisch #antik #alt #altbauliebe #charme #marokko #maroc #canon #canon_photos #vacation #mustsee #schön #holzarbeiten #kunst (at El Badi Palace) https://www.instagram.com/p/Cp-_wLdouPu/?igshid=NGJjMDIxMWI=

#repost#marrakech#marrakesh#marrakech🇲🇦#marrakesch#palace#palast#elbadipalace#türe#door#schölkopf#schoelkopf#historisch#antik#alt#altbauliebe#charme#marokko#maroc#canon#canon_photos#vacation#mustsee#schön#holzarbeiten#kunst

Text

Interesting Papers for Week 6, 2019

Correlation of Synaptic Inputs in the Visual Cortex of Awake, Behaving Mice. Arroyo, S., Bennett, C., & Hestrin, S. (2018). Neuron, 99(6), 1289–1301.e2.

Automatic Differentiation in Machine Learning: a Survey. Baydin, A. G., Pearlmutter, B. A., Radul, A. A., & Siskind, J. M. (2018). Journal of Machine Learning Research, 18(153), 1–43.

Recurrent cortical circuits implement concentration-invariant odor coding. Bolding, K. A., & Franks, K. M. (2018). Science, 361(6407), eaat6904.

Hippocampal CB1 Receptors Control Incidental Associations. Busquets-Garcia, A., Oliveira da Cruz, J. F., Terral, G., Pagano Zottola, A. C., Soria-Gómez, E., Contini, A., … Marsicano, G. (2018). Neuron, 99(6), 1247–1259.e7.

Conditioning sharpens the spatial representation of rewarded stimuli in mouse primary visual cortex. Goltstein, P. M., Meijer, G. T., & Pennartz, C. M. (2018). eLife, 7, e37683.

Recognition Dynamics in the Brain under the Free Energy Principle. Kim, C. S. (2018). Neural Computation, 30(10), 2616–2659.

Elimination of the error signal in the superior colliculus impairs saccade motor learning. Kojima, Y., & Soetedjo, R. (2018). Proceedings of the National Academy of Sciences, 115(38), E8987–E8995.

Neural Entrainment Determines the Words We Hear. Kösem, A., Bosker, H. R., Takashima, A., Meyer, A., Jensen, O., & Hagoort, P. (2018). Current Biology, 28(18), 2867–2875.e3.

Traits of criticality in membrane potential fluctuations of pyramidal neurons in the CA1 region of rat hippocampus. Kosmidis, E. K., Contoyiannis, Y. F., Papatheodoropoulos, C., & Diakonos, F. K. (2018). European Journal of Neuroscience, 48(6), 2343–2353.

Big-Loop Recurrence within the Hippocampal System Supports Integration of Information across Episodes. Koster, R., Chadwick, M. J., Chen, Y., Berron, D., Banino, A., Düzel, E., … Kumaran, D. (2018). Neuron, 99(6), 1342–1354.e6.

Multi-dimensional Coding by Basolateral Amygdala Neurons. Kyriazi, P., Headley, D. B., & Pare, D. (2018). Neuron, 99(6), 1315–1328.e5.

Gain control explains the effect of distraction in human perceptual, cognitive, and economic decision making. Li, V., Michael, E., Balaguer, J., Castañón, S. H., & Summerfield, C. (2018). Proceedings of the National Academy of Sciences, 115(38), E8825–E8834.

Generative Predictive Codes by Multiplexed Hippocampal Neuronal Tuplets. Liu, K., Sibille, J., & Dragoi, G. (2018). Neuron, 99(6), 1329–1341.e6.

Sensing with tools extends somatosensory processing beyond the body. Miller, L. E., Montroni, L., Koun, E., Salemme, R., Hayward, V., & Farnè, A. (2018). Nature, 561(7722), 239–242.

Monitoring and Updating of Action Selection for Goal-Directed Behavior through the Striatal Direct and Indirect Pathways. Nonomura, S., Nishizawa, K., Sakai, Y., Kawaguchi, Y., Kato, S., Uchigashima, M., … Kimura, M. (2018). Neuron, 99(6), 1302–1314.e5.

Invariant Models for Causal Transfer Learning. Rojas-Carulla, M., Schölkopf, B., Turner, R., & Peters, J. (2018). Journal of Machine Learning Research, 19(36), 1–34.

Functional properties of stellate cells in medial entorhinal cortex layer II. Rowland, D. C., Obenhaus, H. A., Skytøen, E. R., Zhang, Q., Kentros, C. G., Moser, E. I., & Moser, M.-B. (2018). eLife, 7, e36664.

A cortical filter that learns to suppress the acoustic consequences of movement. Schneider, D. M., Sundararajan, J., & Mooney, R. (2018). Nature, 561(7723), 391–395.

Making Better Use of the Crowd: How Crowdsourcing Can Advance Machine Learning Research. Vaughan, J. W. (2018). Journal of Machine Learning Research, 18(193), 1–46.

Computing with Spikes: The Advantage of Fine-Grained Timing. Verzi, S. J., Rothganger, F., Parekh, O. D., Quach, T.-T., Miner, N. E., Vineyard, C. M., … Aimone, J. B. (2018). Neural Computation, 30(10), 2660–2690.

#science#Neuroscience#computational neuroscience#Brain science#research#scientific publications#neurobiology#cognition#cognitive science#psychophysics#machine learning

Text

DIFFERENT TYPES OF CORONAS AND MACHINE LEARNING

CLASSIFICATION OF DIFFERENT TYPES OF CORONAS USING PARAMETRIZATION OF IMAGES AND MACHINE LEARNING

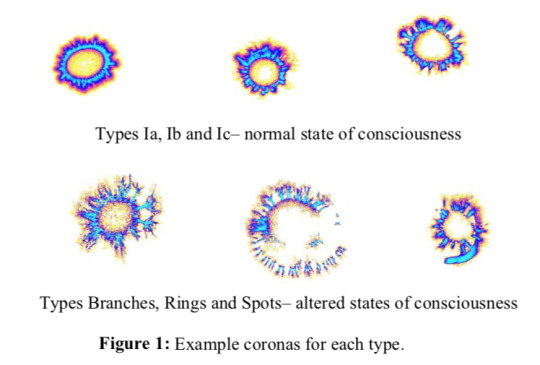

Igor Kononenko, Matjaz Bevk, Sasa Sadikov, Luka Sajn
University of Ljubljana, Faculty of Computer and Information Science, Ljubljana, Slovenia

Abstract

We describe the development of computer classifiers for various types of coronas. In particular, we were interested in developing an algorithm for detecting the coronas of people in altered states of consciousness (a two-class problem). Such coronas are known to have rings (double coronas), a special branch-like structure of streamers and/or curious spots. Besides detecting altered states of consciousness, we were also interested in classifying various types of coronas (a six-class problem). We used several approaches to the parametrization of images: a statistical approach, principal component analysis, association rules, and the GDV software approach extended with several additional parameters. For the development of the classifiers we used various machine learning algorithms: decision tree learning, the naïve Bayesian classifier, the k-nearest neighbors classifier, support vector machines, neural networks, and a kernel density classifier. We compared the results of the computer algorithms with the accuracy of human experts (about 77% for the two-class problem and about 60% for the six-class problem). The results show that computer algorithms can achieve the same or even better accuracy than human experts (the best results were up to 85% for the two-class problem and up to 65% for the six-class problem).

1. Introduction

Recently developed technology by Dr. Korotkov (1998) of the Technical University in St. Petersburg, based on the Kirlian effect, for recording the human bio-electromagnetic field (aura) using the Gas Discharge Visualization (GDV) technique provides potentially useful information about the biophysical and/or psychical state of the recorded person.

In order to make unbiased decisions about the state of a person, we want to develop a computer algorithm that extracts information from the recorded coronas of fingertips and describes, classifies and makes decisions about the person's state. The aim of our study is to differentiate 6 types of coronas.

Three types in the normal state of consciousness, Ia, Ib and Ic (pictures were recorded with a single GDV camera in Ljubljana, all with the same parameter settings; classification into the 3 types was done manually):
Ia: harmonious energy state (120 coronas)
Ib: non-homogeneous but still energetically full (93 coronas)
Ic: energetically poor (76 coronas)

Three types in altered states of consciousness (pictures obtained from Dr. Korotkov, recorded by different GDV cameras with different parameter settings; the pictures were not normalized, i.e. they were of variable size):
Rings: double coronas (90 coronas, including 7 pictures of double coronas we recorded in Ljubljana)
Branches: long streamers branching in various directions (74 coronas)
Spots: unusual spots (51 coronas)

Our aim is to differentiate the normal from the altered state of consciousness (2 classes) and to differentiate among all 6 types of coronas (6 classes). Figure 1 provides example coronas for each type.
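
The reported accuracies are easier to interpret against a trivial baseline. The following sketch is not part of the paper: it computes the accuracy of always guessing the most frequent class, using the class sizes listed above and assuming those counts make up the full data set used in the experiments.

```python
# Class sizes as listed in the introduction (assumed to be the full data set).
normal = {"Ia": 120, "Ib": 93, "Ic": 76}
altered = {"Rings": 90, "Branches": 74, "Spots": 51}


def majority_baseline(counts):
    """Accuracy obtained by always predicting the most frequent class."""
    return max(counts.values()) / sum(counts.values())


# Two-class task: normal vs. altered state of consciousness.
two_class = {"normal": sum(normal.values()), "altered": sum(altered.values())}
# Six-class task: all corona types kept separate.
six_class = {**normal, **altered}

print(two_class)                               # {'normal': 289, 'altered': 215}
print(round(majority_baseline(two_class), 3))  # 0.573
print(round(majority_baseline(six_class), 3))  # 0.238
```

Under that assumption, always answering "normal" would be right about 57% of the time in the two-class task, and always answering "Ia" about 24% of the time in the six-class task, which puts the expert figures (about 77% and 60%) and the best classifier figures (up to 85% and 65%) in context.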

Figure 1: Example GDV coronas for each type. Types Ia, Ib and Ic: normal state of consciousness; types Branches, Rings and Spots: altered states of consciousness.

2. The methodology

We first had to preprocess all the pictures so that they were all of equal size (320 × 240). We then described the pictures with various sets of numerical parameters (attributes) using five different parametrization algorithms (described in more detail in the next section): a) IP (Image Processor, 22 attributes), b) PCA (principal component analysis), c) association rules, d) GDV Assistant with some basic GDV parameters, e) GDV Assistant with additional parameters. We therefore had 5 different learning sets available for the two-class problem: altered (one of Rings, Spots and Branches) versus non-altered (one of Ia, Ib, Ic) state of consciousness. Some of the sets were also used for the six-class problem (differentiating among all six types of coronas).

We tried to solve some of the above classification tasks using various machine learning algorithms as implemented in the Weka system (Witten and Frank, 2000): Quinlan's (1993) C4.5 algorithm for generating decision trees; the k-nearest neighbor classifier (Aha and Kibler, 1991); a simple kernel density classifier; the naïve Bayesian classifier using estimator classes, where numeric estimator precision values are chosen based on analysis of the training data (for this reason the classifier is not an updateable classifier, which in typical usage is initialized with zero training instances; see John and Langley, 1995); SMO, which implements John C. Platt's sequential minimal optimization algorithm for training a support vector classifier using polynomial kernels, transforms the output of the SVM into probabilities by applying a standard sigmoid function that is not fitted to the data, globally replaces all missing values and transforms nominal attributes into binary ones (see Platt, 1998; Keerthi et al., 2001); and neural networks, namely a standard multilayer feedforward neural network trained with error backpropagation (Rumelhart et al., 1986). The SMO algorithm can be used only for two-class problems, while the other algorithms can be used for both two-class and six-class problems.

Full text PDF: 2004-Kononenko-altered-coronas

References

Aha, D. and Kibler, D. (1991). Instance-based learning algorithms. Machine Learning, 6, pp. 37-66.

Agrawal, R., Imielinski, T. and Swami, A. (1993). Mining association rules between sets of items in large databases. In P. Buneman and S. Jajodia (eds.), Proceedings of the 1993 ACM SIGMOD International Conference on Management of Data, pp. 207-216, Washington, D.C.

Agrawal, R. and Srikant, R. (1994). Fast algorithms for mining association rules. In J. B. Bocca, M. Jarke and C. Zaniolo (eds.), Proceedings of the 20th International Conference on Very Large Data Bases (VLDB), pp. 487-499. Morgan Kaufmann.

Bevk, M. (2003). Texture Analysis with Machine Learning. M.Sc. Thesis, University of Ljubljana, Faculty of Computer and Information Science, Ljubljana, Slovenia (in Slovene).

Bevk, M. and Kononenko, I. (2002). A statistical approach to texture description: A preliminary study. In ICML-2002 Workshop on Machine Learning in Computer Vision, pp. 39-48, Sydney, Australia.

Haralick, R., Shanmugam, K. and Dinstein, I. (1973). Textural features for image classification. IEEE Transactions on Systems, Man and Cybernetics, pp. 610-621.

John, G. H. and Langley, P. (1995). Estimating continuous distributions in Bayesian classifiers. Proceedings of the Eleventh Conference on Uncertainty in Artificial Intelligence, pp. 338-345. Morgan Kaufmann, San Mateo.

Julesz, B., Gilbert, E. N., Shepp, L. A. and Frisch, H. L. (1973). Inability of humans to discriminate between visual textures that agree in second-order statistics. Perception, 2, pp. 391-405.

Keerthi, S. S., Shevade, S. K., Bhattacharyya, C. and Murthy, K. R. K. (2001). Improvements to Platt's SMO algorithm for SVM classifier design. Neural Computation, 13(3), pp. 637-649.

Korotkov, K. (1998). Aura and Consciousness. St. Petersburg, Russia: State Editing & Publishing Unit “Kultura”.

Korotkov, K. and Korotkin, D. (2001). Concentration dependence of gas discharge around drops of inorganic electrolytes. Journal of Applied Physics, 89, pp. 4732-4736.

Platt, J. (1998). Fast training of support vector machines using sequential minimal optimization. In B. Schölkopf, C. Burges and A. Smola (eds.), Advances in Kernel Methods: Support Vector Learning. MIT Press.

Press, W. H., Flannery, B. P., Teukolsky, S. A. and Vetterling, W. T. (1992). Numerical Recipes: The Art of Scientific Computing, 2nd edition. Cambridge University Press, Cambridge (UK) and New York.

Quinlan, J. R. (1993). C4.5: Programs for Machine Learning. Morgan Kaufmann.

Rumelhart, D. E., Hinton, G. E. and Williams, R. J. (1986). Learning internal representations by error propagation. In D. E. Rumelhart and J. L. McClelland (eds.), Parallel Distributed Processing, Vol. 1: Foundations. Cambridge: MIT Press.

Rushing, J. A., Ranganath, H. S., Hinke, T. H. and Graves, S. J. (2001). Using association rules as texture features. IEEE Transactions on Pattern Analysis and Machine Intelligence, pp. 845-858.

Sadikov, A. (2002). Computer visualization, parametrization and analysis of images of electrical gas discharge (in Slovene). M.Sc. Thesis, University of Ljubljana.

Sadikov, A., Kononenko, I. and Weibel, F. (2003). Analyzing coronas of fruits and leaves. This volume.

Sirovich, L. and Kirby, M. (1987). A low-dimensional procedure for the characterisation of human faces. Journal of the Optical Society of America, pp. 519-524.

Turk, M. and Pentland, A. (1991). Eigenfaces for recognition. Journal of Cognitive Neuroscience, pp. 71-86.

Witten, I. H. and Frank, E. (2000). Data Mining: Practical Machine Learning Tools and Techniques with Java Implementations. Morgan Kaufmann.

#coronadischarge#DIFFERENTTYPESOFCORONAS#Electrophotonicimaging#GDV#GDVGraph#GDVImage#GDVSoftware#GDV-gram#Korotkov'simages#MACHINELEARNING

Text

Understanding Machine Learning: From Theory to Algorithms - eBook

Check out https://duranbooks.net/shop/understanding-machine-learning-from-theory-to-algorithms-ebook/

Machine learning is one of the fastest growing areas of computer science, with far-reaching applications. The aim of this digital textbook Understanding Machine Learning: From Theory to Algorithms (PDF) is to introduce machine learning, and the algorithmic paradigms it offers, in a principled way. The ebook provides a theoretical account of the fundamentals underlying machine learning and the mathematical derivations that transform these principles into practical algorithms. Following a presentation of the basics, the ebook covers a wide array of central topics unaddressed by previous textbooks. These include a discussion of the computational complexity of learning and the concepts of stability and convexity; important algorithmic paradigms including neural networks, stochastic gradient descent, and structured output learning; and emerging theoretical concepts such as the PAC-Bayes approach and compression-based bounds. Designed for beginning graduates or advanced undergraduates, the textbook makes the fundamentals and algorithms of machine learning accessible to students and non-expert readers in computer science, mathematics, statistics, and engineering.

Reviews

“This elegant ebook covers both rigorous theory and practical methods of machine learning. This makes it a rather unique resource, ideal for all those who want to understand how to find structure in data.” – Professor Bernhard Schölkopf, Max Planck Institute for Intelligent Systems

“This textbook gives a clear and broadly accessible view of the most important ideas in the area of full information decision problems. Written by 2 key contributors to the theoretical foundations in this area, it covers the range from algorithms to theoretical foundations, at a level appropriate for an advanced undergraduate course.” – Dr. Peter L. Bartlett, University of California, Berkeley

“This is a timely textbook on the mathematical foundations of machine learning, providing a treatment that is both broad and deep, not only rigorous but also with insight and intuition. It presents a wide range of classic, fundamental algorithmic and analysis techniques as well as cutting-edge research directions. This is a great ebook for anyone interested in the computational and mathematical underpinnings of this important and fascinating field.” – Avrim Blum, Carnegie Mellon University

Text

I recently became interested in the work of Mehdi S. M. Sajjadi, Bernhard Schölkopf and Michael Hirsch, presented at ICCV 2017 (International Conference on Computer Vision). It is a deep neural network trained to improve the resolution of images of animals, beyond what can be achieved with advanced graphics filters. What for? Think of all the video and still cameras installed in nature reserves and used to manage them. And ultimately, the method can be adopted for other images too; all it takes is training the network on a sufficiently large sample of photos.

In short, the principle is simple: take high-resolution photos, reduce their resolution by a factor of 4 (SD), then ask the DNN to rebuild an HD image from the SD one… and correct the weights using the HD original. The implementation, however, is more complex. Their work is explained here.
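
The training principle described above (downscale an HD photo by 4, reconstruct it, compare with the HD original) can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' code: downscaling uses a simple box filter (not necessarily the kernel used in the paper), `upscale_nearest` is a hypothetical stand-in for the trained network, and the loss is plain mean-squared error, whereas EnhanceNet was also trained with perceptual, texture and adversarial losses that this sketch does not implement.

```python
import numpy as np


def downscale(hr: np.ndarray, factor: int = 4) -> np.ndarray:
    """Average each factor x factor block (box filter; an assumption)."""
    h, w = hr.shape[0] // factor, hr.shape[1] // factor
    # Crop to a multiple of factor, then split rows and columns into blocks.
    blocks = hr[: h * factor, : w * factor].reshape(h, factor, w, factor, *hr.shape[2:])
    return blocks.mean(axis=(1, 3))


def make_training_pair(hr: np.ndarray, factor: int = 4):
    """Return (SD input, HD target); the HD image is cropped to a multiple of factor."""
    h = hr.shape[0] // factor * factor
    w = hr.shape[1] // factor * factor
    target = hr[:h, :w]
    return downscale(target, factor), target


def upscale_nearest(lr: np.ndarray, factor: int = 4) -> np.ndarray:
    """Hypothetical stand-in for the DNN: nearest-neighbour upsampling."""
    return np.repeat(np.repeat(lr, factor, axis=0), factor, axis=1)


def mse(pred: np.ndarray, target: np.ndarray) -> float:
    """Pixel-wise loss whose gradient would drive the weight correction."""
    return float(np.mean((pred - target) ** 2))


rng = np.random.default_rng(0)
photo = rng.random((130, 98, 3))  # fake RGB "HD photo"
lr, hr = make_training_pair(photo)
print(lr.shape, hr.shape)  # (32, 24, 3) (128, 96, 3)
print(mse(upscale_nearest(lr), hr))
```

In a real training loop the stand-in upsampler would be replaced by the network, and the loss value would be backpropagated to update its weights.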

The implementation uses TensorFlow for the DNN part and SciPy for the computations and filters. The source code is available, not for training the network, but for the pre-trained model, which can already do quite a lot.

Here are my own tests, run on photos taken from Google Images:

Source image

Resulting image

Original image

Source image

Resulting image

Original image

The source image is the one I feed to the network to obtain the resulting image. Next to it, I show the original for comparison.

I even tried a tardigrade, since I imagine one was probably not used for the tests:

Source image

Resulting image

Original image

That is quite impressive, considering that the training base is only 3,339 KB!

The architecture of the DNN is summarized here:

You can download various sets, as well as the source code that uses the pre-trained model, on this page. It is not limited to animals: there are also faces, flowers, etc.

EnhanceNet: Single Image Super-Resolution

#Animaux#Bernhard Schölkopf#DNN#EnhanceNet#IA#ICCV 2017#Images#Mehdi S. M. Sajjadi#Michael Hirsch#TensorFlow

Link

Researchers have used adversarial neural networks to create a system capable of turning pixelated images into high-resolution ones. The system essentially consists of one network that invents the missing details and a second network that verifies the plausibility of those inventions.

But while this system could turn the blurry image of a suspect’s face into a photorealistic one, it could not be used for convictions: what it shows is not a real face revealed, but a fictional face imagined. Sometimes the truth is blurrier than fiction.
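
The two-network setup described in this post matches, in generic form, the standard generative adversarial network (GAN) objective. The post does not state the researchers' exact loss, so the following is an illustrative formulation under that assumption, with a low-resolution image $y$ as the generator's input in place of random noise: the generator $G$ invents the missing details, and the discriminator $D$ scores how plausible an image looks as a real high-resolution photo $x$.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\mathrm{HR}}}\big[\log D(x)\big] + \mathbb{E}_{y \sim p_{\mathrm{LR}}}\big[\log\big(1 - D(G(y))\big)\big]
$$

$D$ is trained to push this value up (telling real photos from invented ones), while $G$ is trained to push it down (making its inventions indistinguishable from real ones). Nothing in this term forces the invented details to match the true scene, which is why super-resolution systems typically add further loss terms tying the output to the input, and why the result cannot count as evidence.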

Text

Isabelle Guyon, Bernhard Schölkopf and Vladimir Vapnik win the BBVA Frontiers Award in ICT #HIV #ResearchMatters #BioTech #Virology https://t.co/0HW0qFQfNN via @scienmag

— HIV & AIDS Updates U=U (@HIVAIDSupdates) February 25, 2020

from Twitter https://twitter.com/HIVAIDSupdates February 25, 2020 at 09:00AM

Link

Isabelle Guyon, Bernhard Schölkopf and Vladimir Vapnik win the BBVA Frontiers Award in ICT - EurekAlert https://ift.tt/3a5gMGz

Text

Tweeted

Isabelle Guyon, Bernhard Schölkopf and Vladimir Vapnik, BBVA Foundation «Frontiers of Knowledge» Award https://t.co/2GPH2fJdc5

— AGS&B (@agsb_bilbao) February 21, 2020

Quote

Bernhard Schölkopf’s work has included a significant contribution to the development of support-vector machines and of a more general class of algorithms based on similar mathematical principles. These computational procedures, called kernel methods, make it possible to classify objects. Even the first algorithms were able to recognize handwritten digits almost as well as people, and better than any other computer program. In doing so, they use a mathematically transparent process. Schölkopf’s work has made it possible to develop support-vector machines and kernel methods further for applications in many areas. Today they are used, for example, in medical image processing, semiconductor manufacturing and search engines.

Körber Prize 2019 for Bernhard Schölkopf | Max-Planck-Gesellschaft
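
The quote describes kernel methods only in words. As a hedged illustration of the underlying idea (a kernel perceptron, not an SVM and not Schölkopf's own code), the sketch below touches the data only through a kernel function k(x, x'), here an assumed Gaussian (RBF) kernel with `gamma=1.0`. That is enough to separate XOR-style data, which no straight line in the input space can split. A support-vector machine uses the same kernel trick but chooses the weights by maximizing the margin, for example with Platt's SMO algorithm.

```python
import numpy as np


def rbf_kernel(a, b, gamma=1.0):
    """Gaussian (RBF) kernel: similarity decays with squared distance."""
    return np.exp(-gamma * np.sum((a - b) ** 2))


class KernelPerceptron:
    """Dual-form perceptron: f(x) = sum_j alpha_j * y_j * k(x_j, x)."""

    def __init__(self, kernel=rbf_kernel, max_epochs=100):
        self.kernel = kernel
        self.max_epochs = max_epochs

    def fit(self, X, y):
        self.X = np.asarray(X, dtype=float)
        self.y = np.asarray(y, dtype=float)  # labels in {-1, +1}
        n = len(self.X)
        self.alpha = np.zeros(n)  # mistake counts per training point
        # Precompute the Gram matrix K[i, j] = k(x_i, x_j).
        K = np.array([[self.kernel(xi, xj) for xj in self.X] for xi in self.X])
        for _ in range(self.max_epochs):
            mistakes = 0
            for i in range(n):
                f = np.sum(self.alpha * self.y * K[:, i])
                if self.y[i] * f <= 0:  # misclassified (or on the boundary)
                    self.alpha[i] += 1
                    mistakes += 1
            if mistakes == 0:  # every training point classified correctly
                break
        return self

    def decision_function(self, x):
        x = np.asarray(x, dtype=float)
        return sum(a * yi * self.kernel(xi, x)
                   for a, yi, xi in zip(self.alpha, self.y, self.X))

    def predict(self, X):
        return np.array([1 if self.decision_function(x) > 0 else -1 for x in X])


X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y = [-1, 1, 1, -1]  # XOR labels: not linearly separable in the plane
clf = KernelPerceptron().fit(X, y)
print(clf.predict(X))  # [-1  1  1 -1]
```

Swapping `rbf_kernel` for another positive-definite kernel changes the shape of the decision boundary without touching the learning loop, which is the practical appeal of kernel methods.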

Photo

#Repost - @gerdycoool65 by @elbadipalace Palace El Badi #elbadipalace #marrakech #marrakesh #marrakesch #marokko #marocco #maroc #northafrica #canon #canonphotography #canonfotografie #canon_photos #canonglobal #canondeutschland #schölkopf #schoelkopf #museum #holiday #vacation #trip #art (at El Badi Palace) https://www.instagram.com/p/Cp-7yqqIu6Q/?igshid=NGJjMDIxMWI=

#repost#elbadipalace#marrakech#marrakesh#marrakesch#marokko#marocco#maroc#northafrica#canon#canonphotography#canonfotografie#canon_photos#canonglobal#canondeutschland#schölkopf#schoelkopf#museum#holiday#vacation#trip#art

Text

Körber Prize 2019 Goes to Bernhard Schölkopf

The 2019 Körber European Science Prize, endowed with one million euros, is to be awarded to the German physicist, mathematician and computer scientist Bernhard Schölkopf. He has developed mathematical methods that have made a significant contribution to helping artificial intelligence (AI) reach its most recent heights. Schölkopf achieved worldwide renown with support-vector machines (SVMs).…

Text

End of February Reading List

Yang, Tianbao and Li, Yu-Feng and Mahdavi, Mehrdad and Jin, Rong and Zhou, Zhi-Hua, "Nyström Method vs Random Fourier Features: A Theoretical and Empirical Comparison", Advances in Neural Information Processing Systems, 2012

Bach, Francis, "On the Equivalence between Kernel Quadrature Rules and Random Feature Expansions", 2015

Eldredge, Nathaniel, "Analysis and Probability on Infinite-Dimensional Spaces", 2016

Bach, Francis, "Breaking the curse of dimensionality in regression", JMLR, 2017

Roy, D. and Teh, Y. W., "The Mondrian Process", Advances in Neural Information Processing Systems, 2009

Rudi, Alessandro and Camoriano, Raffaello and Rosasco, Lorenzo, "Less is More: Nyström Computational Regularization", 2015

Schölkopf, Bernhard and Smola, A J and Müller, K R, "Kernel Principal Component Analysis", Advances in Kernel Methods: Support Vector Learning, 1999

Rosasco, Lorenzo, "On Learning with Integral Operators", Journal of Machine Learning Research, 2010

Avron, Haim and Clarkson, Kenneth L. and Woodruff, David P., "Faster Kernel Ridge Regression Using Sketching and Preconditioning", 2016

Gonen, Alon and Shalev-Shwartz, Shai, "Faster SGD Using Sketched Conditioning", 2015

Text

Amazon to open visually focused AI research hub in Germany

Ecommerce giant Amazon has announced a new research center in Germany focused on developing AI to improve the customer experience — especially in visual systems.

Amazon said research conducted at the hub will also aim to benefit users of Amazon Web Services and its voice driven AI assistant tech, Alexa.

The center will be based in Tübingen, near the Max Planck Institute for Intelligent Systems’ campus, and will be staffed with more than 100 machine learning engineers.

The new 100+ “highly qualified” jobs will be created over the next five years, it said today. The site is Amazon’s fourth Research Center in Germany — after Berlin, Dresden and Aachen.

For the Tübingen hub, the company is collaborating with the Max Planck Society on an earlier regional research collaboration that kicked off in December 2016 and is also focused on AI, as well as on bolstering a local startup ecosystem.

Robotics, machine learning and machine vision are key areas of focus for the so-called ‘Cyber Valley’ initiative. Existing partner companies in that effort include BMW, Bosch, Daimler, IAV, Porsche and ZF Friedrichshafen — and now Amazon.

As with other research partners, Amazon will be contributing €1.25 million to set up research groups in the Stuttgart and Tübingen regions, the Society said today.

“We appreciate Amazon’s commitment in the Cyber Valley and to research on artificial intelligence,” said Max Planck president Martin Stratmann in a statement. “We gain another strong cooperation partner who will further increase the international significance of research in the area of machine learning and computer vision in the Stuttgart and Tübingen region.”

“With our Amazon Research center in Tübingen, we will become part of one of the largest research initiatives in Europe in the area of artificial intelligence. This underlines our commitment to create high-skilled jobs in breakthrough technologies,” added Ralf Herbrich, director of machine learning at Amazon and MD of the Amazon Development Center Germany, in another supporting statement.

Earlier this month TechCrunch broke the news that Amazon had acquired 3D body model startup, Body Labs, whose scientific advisor and co-founder — Dr Michael J Black — is a director at the Max Planck Institute for Intelligent Systems’ Department of Perceptive Systems.

The Institute generally describes its goal being “to understand the principles of perception, learning and action in autonomous systems that successfully interact with complex environments and to use this understanding to design future systems”.

Amazon said today that Dr Black will support the new research hub as an Amazon Scholar, along with another Max Planck director, Dr Bernhard Schölkopf, who is based in the Department of Empirical Inference.

Both will also continue to manage their respective departments at the Institute, it added.

“Schölkopf is a leading expert in machine learning in Europe and co-inventor of computer-aided photography. He has also developed pioneering technologies through which computer causality can be learned. With causality, AI systems predict customer behavior in response to automated decisions, such as the order of the search results, to optimize the search experience,” said Amazon. “Black is a leading expert in the field of machine vision and co-founder of the Body Labs company, which markets AI body procedures for capturing human body movements and shapes from 3D images for use in various industries.”

As we suggested at the time, Amazon’s purchase of the 3D body model startup looks primarily like a talent-based acquihire — to bring Black’s visual systems’ expertise into the fold.

Although the Max Planck Institute also manages and licenses thousands of patents — so smoother access, via Black’s connections, to key technologies for licensing purposes may also be part of its thinking as it spends a few euros to forge closer ties with the German research network.

Investing in business critical research and the next generation of AI researchers is also clearly on the slate here for Amazon: As part of the collaboration it says it will be providing the Society with research awards worth €420,000 per year.

A spokesperson confirmed this funding will be provided for five years, although it’s not clear exactly how many PhD candidates and Post-Doc research students will get funded from out of Amazon’s pot of money each year.

The Society said it will use the funding to finance the research activities of doctoral and postdoctoral students at the Max Planck Institute for Intelligent Systems.

“The support from Amazon and the other Cyber Valley partners enables us to further improve the training of highly qualified junior researchers in the field of artificial intelligence,” said Schölkopf in a statement. “This will help to ensure that we continue to provide both science and industry with creative minds to consolidate our pioneering position in intelligent systems.”

Computer vision has become a hugely important AI research area over the past decade — yielding powerful visual systems that can, for example, quickly and accurately detect and recognize objects, individual faces and body postures, which in turn can be used to feed and enhance the utility and intelligence of AI assistant systems.

And while CV research has already been fairly widely applied commercially by tech giants, there are plenty of challenges remaining, and academics continue to work on enhancing and expanding the power of visual AI systems, with tech giants like Amazon in close pursuit of any gains.

The basic rule of thumb is: The bigger the platforms, the bigger the potential rewards if smarter visual systems can shave operating costs and user friction from products and services at scale.

The Tübingen R&D hub is Amazon’s first German center focused on visual AI research. Though it’s just the latest extension of already extensive Amazon R&D efforts on this front (a quick LinkedIn job search currently lists ~470 Amazon jobs involving computer vision in various locations worldwide).

Amazon’s Berlin research hub started as a customer service center but since 2013 has also included dev work for the cloud business of Amazon Web Services (including hypervisors, operating systems, management tools and self-learning technologies).

While its Dresden hub houses the kernel and OS team that works on the core of EC2, the actual virtual compute instance definitions and Amazon Linux, the operating system for its cloud.

In Aachen its R&D hub houses engineers working on Alexa and architecting cloud AWS services.

Read more: http://ift.tt/2gD1K4N

Link

• Amazon plans to create over 100 high-skilled jobs in machine learning at a new Amazon Research Center adjacent to Tuebingen’s Max Planck Society campus within the next five years with renowned Max Planck scientists Bernhard Schölkopf and Michael J. via Pocket

Photo

mothers, beware Schölkopf (MLSS, Tübingen, 2017)