#retrieval augmented generation


Text

How Agentic AI & RAG Revolutionize Autonomous Decision-Making

In the swiftly advancing realm of artificial intelligence, the integration of Agentic AI and Retrieval-Augmented Generation (RAG) is revolutionizing autonomous decision-making across various sectors. Agentic AI endows systems with the ability to operate independently, while RAG enhances these systems by incorporating real-time data retrieval, leading to more informed and adaptable decisions. This article delves into the synergistic relationship between Agentic AI and RAG, exploring their combined impact on autonomous decision-making.

Overview

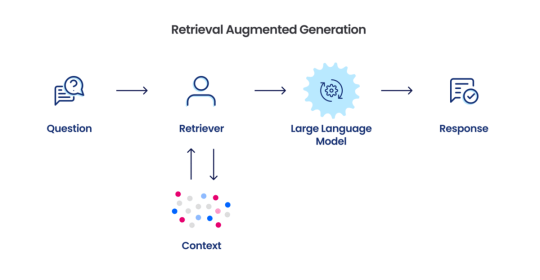

Agentic AI refers to AI systems capable of autonomous operation, making decisions based on environmental inputs and predefined goals without continuous human oversight. These systems utilize advanced machine learning and natural language processing techniques to emulate human-like decision-making processes. Retrieval-Augmented Generation (RAG), on the other hand, merges generative AI models with information retrieval capabilities, enabling access to and incorporation of external data in real-time. This integration allows AI systems to leverage both internal knowledge and external data sources, resulting in more accurate and contextually relevant decisions.

Read more about Agentic AI in Manufacturing: Use Cases & Key Benefits

What is Agentic AI and RAG?

Agentic AI: This form of artificial intelligence empowers systems to achieve specific objectives with minimal supervision. It comprises AI agents—machine learning models that replicate human decision-making to address problems in real-time. Agentic AI exhibits autonomy, goal-oriented behavior, and adaptability, enabling independent and purposeful actions.

Retrieval-Augmented Generation (RAG): RAG is an AI methodology that integrates a generative AI model with an external knowledge base. It dynamically retrieves current information from sources like APIs or databases, allowing AI models to generate contextually accurate and pertinent responses without necessitating extensive fine-tuning.

Know more on Why Businesses Are Embracing RAG for Smarter AI

Capabilities

When combined, Agentic AI and RAG offer several key capabilities:

Autonomous Decision-Making: Agentic AI can independently analyze complex scenarios and select effective actions based on real-time data and predefined objectives.

Contextual Understanding: It interprets situations dynamically, adapting actions based on evolving goals and real-time inputs.

Integration with External Data: RAG enables Agentic AI to access external databases, ensuring decisions are based on the most current and relevant information available.

Enhanced Accuracy: By incorporating external data, RAG helps Agentic AI systems avoid relying solely on internal models, which may be outdated or incomplete.

How Agentic AI and RAG Work Together

The integration of Agentic AI and RAG creates a robust system capable of autonomous decision-making with real-time adaptability:

Dynamic Perception: Agentic AI utilizes RAG to retrieve up-to-date information from external sources, enhancing its perception capabilities. For instance, an Agentic AI tasked with financial analysis can use RAG to access real-time stock market data.

Enhanced Reasoning: RAG augments the reasoning process by providing external context that complements the AI's internal knowledge. This enables Agentic AI to make better-informed decisions, such as recommending personalized solutions in customer service scenarios.

Autonomous Execution: The combined system can autonomously execute tasks based on retrieved data. For example, an Agentic AI chatbot enhanced with RAG can not only answer questions but also initiate actions like placing orders or scheduling appointments.

Continuous Learning: Feedback from executed tasks helps refine both the agent's decision-making process and RAG's retrieval mechanisms, ensuring the system becomes more accurate and efficient over time.
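The four steps above (perception, reasoning, execution, feedback) can be sketched as a small loop. This is a minimal illustration under stated assumptions, not any vendor's implementation: `Agent`, `retrieve`, `decide`, and `act` are hypothetical names, and the lambdas stand in for a real retriever, a language model call, and an action API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Agent:
    # All three callables are hypothetical stand-ins supplied by the caller.
    retrieve: Callable[[str], List[str]]     # RAG lookup for fresh context
    decide: Callable[[str, List[str]], str]  # reasoning step (e.g. an LLM call)
    act: Callable[[str], bool]               # executes the action, returns success
    feedback: List[Tuple[str, bool]] = field(default_factory=list)

    def step(self, goal: str) -> str:
        context = self.retrieve(goal)        # dynamic perception
        action = self.decide(goal, context)  # enhanced reasoning
        succeeded = self.act(action)         # autonomous execution
        self.feedback.append((action, succeeded))  # signal for later refinement
        return action


# Toy wiring: a fixed price table plays the role of a live market feed.
prices = {"ACME": 101.5}
agent = Agent(
    retrieve=lambda goal: [f"ACME={prices['ACME']}"],
    decide=lambda goal, ctx: "hold" if "ACME=101.5" in ctx else "review",
    act=lambda action: True,
)
result = agent.step("assess ACME position")
```

The `feedback` list only records outcomes here; how that record would be used to tune the retriever or the decision step is left open, as in the text.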

Read more about Multi-Meta-RAG: Enhancing RAG for Complex Multi-Hop Queries

Example Use Cases

1. Customer Support Automation

Scenario: A user inquires about their account balance via a chatbot.

How It Works: The Agentic AI interprets the query, determines that external data is required, and employs RAG to retrieve real-time account information from a database. The enriched prompt allows the chatbot to provide an accurate response while suggesting payment options. If prompted, it can autonomously complete the transaction.

Benefits: Faster query resolution, personalized responses, and reduced need for human intervention.

Example: Acuvate's implementation of Agentic AI demonstrates how autonomous decision-making and real-time data integration can enhance customer service experiences.

2. Sales Assistance

Scenario: A sales representative needs to create a custom quote for a client.

How It Works: Agentic RAG retrieves pricing data, templates, and CRM details. It autonomously drafts a quote, applies discounts as instructed, and adjusts fields like baseline costs using the latest price book.

Benefits: Automates multi-step processes, reduces errors, and accelerates deal closures.

3. Healthcare Diagnostics

Scenario: A doctor seeks assistance in diagnosing a rare medical condition.

How It Works: Agentic AI uses RAG to retrieve relevant medical literature, clinical trial data, and patient history. It synthesizes this information to suggest potential diagnoses and treatment options.

Benefits: Enhances diagnostic accuracy, saves time, and provides evidence-based recommendations.

Example: Xenonstack highlights healthcare as a major application area for agentic AI systems in diagnosis and treatment planning.

4. Market Research and Consumer Insights

Scenario: A business wants to identify emerging market trends.

How It Works: Agentic RAG analyzes consumer data from multiple sources, retrieves relevant insights, and generates predictive analytics reports. It also gathers customer feedback from surveys or social media.

Benefits: Improves strategic decision-making with real-time intelligence.

Example: Companies use Agentic RAG for trend analysis and predictive analytics to optimize marketing strategies.

5. Supply Chain Optimization

Scenario: A logistics manager needs to predict demand fluctuations during peak seasons.

How It Works: The system retrieves historical sales data, current market trends, and weather forecasts using RAG. Agentic AI then predicts demand patterns and suggests inventory adjustments in real-time.

Benefits: Prevents stockouts or overstocking, reduces costs, and improves efficiency.

Example: Acuvate’s supply chain solutions leverage predictive analytics powered by Agentic AI to enhance logistics operations.

How Acuvate Can Help

Acuvate specializes in implementing Agentic AI and RAG technologies to transform business operations. By integrating these advanced AI solutions, Acuvate enables organizations to enhance autonomous decision-making, improve customer experiences, and optimize operational efficiency. Their expertise in deploying AI-driven systems ensures that businesses can effectively leverage real-time data and intelligent automation to stay competitive in a rapidly evolving market.

Future Scope

The future of Agentic AI and RAG involves the development of multi-agent systems where multiple AI agents collaborate to tackle complex tasks. Continuous improvement and governance will be crucial, with ongoing updates and audits necessary to maintain safety and accountability. As technology advances, these systems are expected to become more pervasive across industries, transforming business processes and customer interactions.

In conclusion, the convergence of Agentic AI and RAG represents a significant advancement in autonomous decision-making. By combining autonomous agents with real-time data retrieval, organizations can achieve greater efficiency, accuracy, and adaptability in their operations. As these technologies continue to evolve, their impact across various sectors is poised to expand, ushering in a new era of intelligent automation.

3 notes


Text

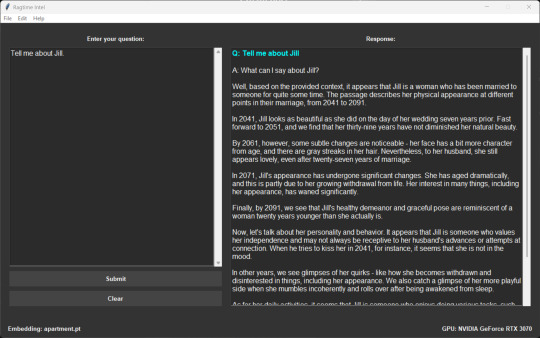

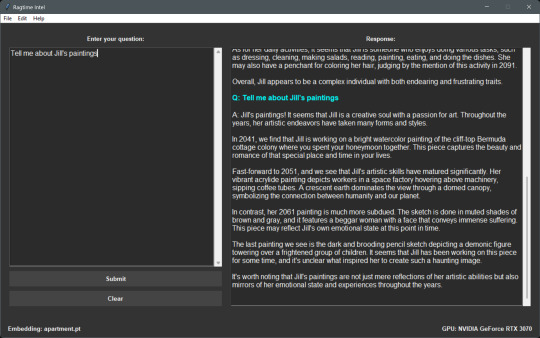

Sharing some AI development observations with the community using Infocom’s source code…

Been playing around with Retrieval-Augmented Generation (RAG) on Infocom’s ZIL source code, specifically A Mind Forever Voyaging. I set up a LoRA-embedded RAG model (using the ZIL source code) on top of Llama 3.2, running everything entirely locally, to see how well it could extract relevant information from the game’s original source code - no cloud processing, just local inference. Here’s an interesting result: I asked about "Jill," a fictional character from the game, and the system pulled details directly from the source, surfacing descriptions of her appearance and personality across different points in the story. Then, I asked about "Jill's paintings" and got back a breakdown of her work over the decades, reflecting how her artistic style evolved in the game’s narrative. The responses match exactly what’s encoded in the game’s logic, which makes me wonder how well this could work for analyzing or even reconstructing narrative structures from classic text adventures. Not really sure where this leads, but it’s been interesting seeing how AI interacts with something as structured (yet open-ended) as ZIL. Just sharing some observations from the process.

#AI#artificial intelligence#Infocom#ZIL#RAG#Retrieval Augmented Generation#AMFV#A Mind Forever Voyaging#LORA#Enhancements#Zork#Pixel Crisis

2 notes


Text

Advanced AI Prompt Engineering

Unlock the True Power of AI with Advanced AI Prompt Engineering: Your Ultimate Handbook to Smarter, Sharper, and More Strategic AI Prompts.

If you’re still throwing simple prompts at AI and hoping for magic, you’re only scratching the surface. The real breakthroughs, the real wow moments, happen when you learn how to engineer prompts that think, reason, and build like a genius. That’s why I…

#Advanced Prompt Engineering#AI Content Creation#AI for Entrepreneurs#AI for Marketers#AI for Writers#AI Handbook#AI Prompting#AI Research#AI Tools#AI Writing#Chain of Thought Prompting#Creative AI#Future of AI#GPT-4 Prompting#Program Aided Prompting#Prompt Engineering Handbook#Retrieval Augmented Generation#Self-Consistency Prompting#Smarter AI Prompts#Tree of Thoughts Prompting

0 notes

Text

Empowering Businesses with AR Technology - Atcuality

Atcuality is at the forefront of innovation, delivering Augmented Reality Development Services that empower businesses to achieve more. By integrating AR into your operations, you can revolutionize product demonstrations, training sessions, and customer engagement strategies. Our solutions are designed to provide real-time interactivity, allowing users to visualize and interact with digital elements in their physical environments. Whether you need AR for retail, gaming, or enterprise applications, we tailor our services to meet your specific goals. With a focus on creativity and functionality, we ensure that your AR solutions not only meet but exceed expectations. Discover how Atcuality can help you unlock new dimensions of growth with AR technology.

#search engine marketing#search engine optimisation company#emailmarketing#search engine optimization#seo#search engine ranking#digital services#search engine optimisation services#digital marketing#seo company#seo marketing#seo services#seo expert#on page seo#social media marketing#retrieval augmented generation#augmented and virtual reality market#augmented intelligence#augmented reality#ar vr technology#virtual reality#online marketing#mobile marketing#cash collection application#website development#web hosting#web design#website#web development#website developer near me

0 notes

Text

Traditional RAG vs Agentic RAG: Impact on Businesses

Consider a customer reporting a malfunctioning camera. A support agent might initially consult the user manual for troubleshooting. If unsuccessful, they might search the web or a knowledge base for a solution. This iterative process of reasoning, information retrieval, and action sets agentic RAG apart from traditional RAG.

Businesses can utilise agentic retrieval-augmented generation to analyse data more effectively, arrive at crucial decisions, and adapt to challenging or complex situations. It improves accuracy, handles intricate queries, and adjusts efficiently to diverse contexts and situations.

In the following section, we will explore key differences between traditional and agentic RAG. Compare the two and make an informed decision for your business because automation is the key to ensuring resilient business growth.

Agentic RAG vs Traditional RAG: The Differences

| Feature | Traditional RAG | Agentic RAG |
| --- | --- | --- |
| Definition | Traditional RAG uses a single agent to retrieve information from a centralised database and generate contextually relevant responses. This basic model is common in applications like content creation and customer support. | Agentic RAG uses autonomous agents that dynamically select information retrieval strategies from diverse sources, enabling sophisticated adaptation to context. |
| Prompt Engineering | Relies mostly on manual prompt engineering and optimisation. | Minimises the requirement for manual prompts. It learns and generates responses according to prompt history. |
| Static Nature | Takes a mechanical approach to extracting information, with less contextual value than agentic RAG. | Utilises conversation history and ensures accurate retrieval policies. |
| Multi-step Complexity | Does not contain three classifier types and extra models for multi-pronged tool usage and complex reasoning. | Does not require complex models and separate classifiers. Agentic RAG handles multi-step reasoning for accurate responses. |
| Decision Making | Static rules for retrieval-augmented generation. | Retrieves information as and when required, after in-depth quality checks before and after generation. |
| Retrieval Process | Depends entirely on the initial prompt to retrieve information and documents. | Works on the environment, collects additional information, and provides contextually relevant information. |
| Adaptability | Low adaptability to the evolving nature of information and datasets. | Efficiently adjusts according to real-time observations and feedback. |
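The difference in the retrieval process can be sketched in a few lines, reusing the camera example from the introduction. This is an illustrative sketch only: the function names, the source names, and the `good_enough` check are all hypothetical, and real agentic systems would use a model rather than a string test to judge a result.

```python
from typing import Callable, Dict, List, Optional, Tuple

Source = Callable[[str], Optional[str]]


def traditional_rag(query: str, source: Source) -> Optional[str]:
    """One retrieval from one source, driven only by the initial prompt."""
    return source(query)


def agentic_rag(
    query: str,
    sources: Dict[str, Source],
    good_enough: Callable[[str], bool],
) -> Tuple[Optional[str], List[str]]:
    """Try sources in turn, checking each result before accepting it."""
    tried: List[str] = []
    for name, source in sources.items():
        tried.append(name)
        result = source(query)
        if result is not None and good_enough(result):
            return result, tried
    return None, tried


# Toy sources (assumptions): the manual has nothing, the knowledge base does.
sources = {
    "manual": lambda q: None,
    "knowledge_base": lambda q: "Reset the camera firmware via Settings > System.",
    "web": lambda q: "Forum thread: try a firmware reset.",
}
query = "camera will not focus"
baseline = traditional_rag(query, sources["manual"])
answer, tried = agentic_rag(query, sources, good_enough=lambda r: "firmware" in r)
```

Here the single-shot call comes back empty, while the agentic variant moves on to the next source and stops as soon as a result passes its check.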

Conclusion

Traditional RAG passively retrieves information based on a given query, whereas agentic RAG employs 'intelligent agents' that assess the context and act on reasoned judgement. This helps businesses retrieve more accurate and nuanced responses, manage complex multi-step tasks, and adapt to changing conditions far more effectively. Agentic RAG thus functions as a proactive decision-making assistant, a significant advancement over traditional RAG's passive information retrieval for modern-day businesses.

#Agentic RAG#retrieval augmented generation#app development#software development#mobile application development#it services

0 notes

Text

Retrieval Augmented Generation | Hyperthymesia.ai

Discover the power of Retrieval Augmented Generation technology in the USA. Our advanced AI solutions combine retrieval and generation techniques to improve information access and generation, delivering precise and relevant results. Explore how this innovative approach can benefit you at Hyperthymesia.ai.

Retrieval Augmented Generation

0 notes

Text

Retrieval Augmented Generation RAG

0 notes

Text

Engage Smarter with Advanced Augmented Reality Development

At Atcuality, we redefine innovation with our augmented reality development services, offering businesses a chance to create unforgettable customer experiences. Our AR solutions are perfect for industries like retail, healthcare, and gaming, providing interactive and immersive applications that captivate users. We develop AR-based product demos, virtual fittings, and training simulators to help businesses achieve their goals with ease. By integrating AR into your operations, you can enhance customer engagement, boost sales, and increase brand loyalty. Trust Atcuality to deliver AR solutions that combine functionality with creativity, ensuring your business stays ahead in the technology race.

#digital services#digital marketing#seo company#seo services#seo#emailmarketing#search engine optimization#social media marketing#search engine marketing#search engine ranking#search engine optimisation services#search engine optimisation company#augmented and virtual reality market#augmented reality#augmented intelligence#retrieval augmented generation#ar vr technology#vr games#vr development#vr la rwd#game development#game design#metaverse#blockchain#cash collection application#website development#website design#website optimization#website developer near me#web development

1 note


Text

Where have you gone, Larry Winget - Procurement's version of Joe DiMaggio

What do Generative AI and Kool-Aid have in common?

Does procurement, more specifically ProcureTech, need a Larry Winget more than ever? I have shared the virtual airwaves with Larry, and he even wrote a positive review of one of my books. In short, the gentleman who is known as the Pitbull of Personal Development, the star of a popular A&E television show, and the author of New York Times and Washington Post bestselling titles like “They Call It…

0 notes

Text

the cofounder of cohere is also the lead singer for good kid

what the fuck

0 notes

Text

What are the challenges of retrieval augmented generation?

Retrieval Augmented Generation (RAG) represents a cutting-edge technique in the field of artificial intelligence, blending the prowess of generative models with the vast storage capacity of retrieval systems.

This method has emerged as a promising solution to enhance the quality and relevance of generated content. However, despite its significant potential, RAG faces numerous challenges that can impact its effectiveness and applicability in real-world scenarios.

Understanding the Complexity of Integration

One of the primary challenges of implementing RAG systems is the complexity associated with integrating two fundamentally different approaches: generative models and retrieval mechanisms.

Generative models, like GPT (Generative Pre-trained Transformer), are designed to predict and produce sequences of text based on learned patterns and contexts. Conversely, retrieval systems are engineered to efficiently search and fetch relevant information from a vast database, typically structured for quick lookup.

The integration requires a seamless interplay between these components, where the retrieval model first provides relevant context or factual information which the generative model then uses to produce coherent and contextually appropriate responses.

This dual-process necessitates sophisticated algorithms to manage the flow of information and ensure that the output is not only accurate but also maintains a natural language quality that meets user expectations.

Scalability and Computational Efficiency

Another significant hurdle is scalability and computational efficiency. RAG systems need to process large volumes of data rapidly to retrieve relevant information before generation. The embedding model must encode queries efficiently, and the vector search must compare each query vector against the stored vectors to find the best matches in the database.

This process, especially when scaled to larger databases or more complex queries, can become computationally expensive and slow, potentially limiting the practicality of RAG systems for applications requiring real-time responses.

Moreover, as the size of the data and the complexity of the tasks increase, the computational load can become overwhelming, necessitating more powerful hardware or optimized software solutions that can handle these demands without compromising performance.
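To make the cost concrete, here is a minimal brute-force similarity search in plain Python. It is a sketch under simple assumptions: the three-dimensional vectors and the document IDs are made up, and cosine similarity is one common choice of comparison rather than something the text prescribes.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: List[float], index: Dict[str, List[float]], k: int = 2) -> List[str]:
    # Brute force: one comparison per stored vector, so the work grows
    # with (number of vectors) x (vector dimension).
    ranked = sorted(index, key=lambda doc_id: cosine(query, index[doc_id]), reverse=True)
    return ranked[:k]


# Made-up embeddings for illustration only.
index = {
    "doc_a": [1.0, 0.0, 0.0],
    "doc_b": [0.9, 0.1, 0.0],
    "doc_c": [0.0, 1.0, 0.0],
}
nearest = top_k([1.0, 0.0, 0.0], index, k=2)
```

Every query touches every stored vector, which is why larger collections typically move to approximate nearest-neighbour indexes that trade a little recall for much lower latency.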

Data Quality and Relevance

The effectiveness of a RAG system heavily relies on the quality and relevance of the data within the retrieval database. Inaccuracies, outdated information, or biases in the data can lead to inappropriate or incorrect outputs from the generative model.

Ensuring the database is regularly updated and curated to reflect accurate and unbiased information poses a considerable challenge, especially in dynamically changing fields such as news or scientific research.

Balancing Creativity and Fidelity

A unique challenge in RAG systems is balancing creativity with fidelity. While generative models are valued for their ability to create fluent and novel text, the addition of a retrieval system focuses on providing accurate and factual content.

Striking the right balance where the model remains creative but also adheres strictly to retrieved facts requires fine-tuning and continuous calibration of the model's parameters.

Ethical and Privacy Concerns

With the ability to retrieve and generate content based on vast amounts of data, RAG systems raise ethical and privacy concerns. The use of personal data or sensitive information within the retrieval database must be handled with strict adherence to data protection laws and ethical guidelines.

Ensuring that these systems do not perpetuate biases or misuse personal information is a challenge that developers and users alike must navigate carefully.

Conclusion

Retrieval-Augmented Generation represents a significant advancement in the field of AI, offering the potential to create more accurate, relevant, and context-aware systems. However, the challenges it faces—from integration complexity and scalability to ethical concerns—require ongoing attention and innovative solutions. As research and technology continue to evolve, the future of RAG looks promising, albeit demanding, as it paves the way for more intelligent and capable AI systems.

1 note


Text

Empowering Your Business with AI: Building a Dynamic Q&A Copilot in Azure AI Studio

In the rapidly evolving landscape of artificial intelligence and machine learning, developers and enterprises are continually seeking platforms that not only simplify the creation of AI applications but also ensure these applications are robust, secure, and scalable. Enter Azure AI Studio, Microsoft’s latest foray into the generative AI space, designed to empower developers to harness the full…

View On WordPress

#AI application development#AI chatbot Azure#AI development platform#AI programming#AI Studio demo#AI Studio walkthrough#Azure AI chatbot guide#Azure AI Studio#azure ai tutorial#Azure Bot Service#Azure chatbot demo#Azure cloud services#Azure Custom AI chatbot#Azure machine learning#Building a chatbot#Chatbot development#Cloud AI technologies#Conversational AI#Enterprise AI solutions#Intelligent chatbot Azure#Machine learning Azure#Microsoft Azure tutorial#Prompt Flow Azure AI#RAG AI#Retrieval Augmented Generation

0 notes

Text

RAG Systems Reimagined: Efficiency at Its Best

When creating a RAG (Retrieval Augmented Generation) system, you infuse a Large Language Model (LLM) with fresh, current knowledge. The goal is to make the LLM's responses to queries more factual and to reduce incorrect or "hallucinated" output.

A RAG system is a sophisticated blend of generative AI's creativity and a search engine's precision. It operates through several critical components working harmoniously to deliver accurate and relevant responses.

Retrieval: This component acts first, scouring a vast database to find information that matches the query. It uses advanced algorithms to ensure the data it fetches is relevant and current.

Augmentation: This engine weaves the found data into the query following retrieval. This enriched context allows for more informed and precise responses.

Generation: This engine crafts the response with the context now broadened by external data. It relies on a powerful language model to generate answers that are accurate and tailored to the enhanced input.

We can further break down this process into the following stages:

Data Indexing: The RAG journey begins by creating an index where data is collected and organized. This index is crucial as it guides the retrieval engine to the necessary information.

Input Query Processing: When a user poses a question, the system processes this input, setting the stage for the retrieval engine to begin its search.

Search and Ranking: The engine sifts through the indexed data, ranking the findings based on how closely they match the user's query.

Prompt Augmentation: Next, we weave the top-ranked pieces of information into the initial query. This enriched prompt provides a deeper context for crafting the final response.

Response Generation: With the augmented prompt in hand, the generation engine crafts a well-informed and contextually relevant response.

Evaluation: Regular evaluations compare the system's effectiveness with other methods and check whether adjustments are needed to keep the RAG system performing at its best. This step measures accuracy, reliability, and response time so that the system's quality remains high.
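The stages above can be sketched end to end with toy components. Everything here is an assumption made for illustration: word overlap stands in for vector search, the `llm` argument is a placeholder for a real model call, and the function names are hypothetical.

```python
from typing import Callable, List, Set, Tuple

Index = List[Tuple[str, Set[str]]]


def tokenize(text: str) -> Set[str]:
    return {w.strip(".,?!").lower() for w in text.split()}


def build_index(docs: List[str]) -> Index:
    # Data indexing: store each document alongside its word set.
    return [(doc, tokenize(doc)) for doc in docs]


def search(query: str, index: Index, k: int = 2) -> List[str]:
    # Query processing, then search and ranking by word overlap.
    terms = tokenize(query)
    ranked = sorted(index, key=lambda item: len(terms & item[1]), reverse=True)
    return [doc for doc, words in ranked[:k] if terms & words]


def augment(query: str, passages: List[str]) -> str:
    # Prompt augmentation: put the top-ranked passages ahead of the question.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}"


def answer(query: str, index: Index, llm: Callable[[str], str]) -> str:
    # Response generation: hand the augmented prompt to the model.
    return llm(augment(query, search(query, index)))


docs = [
    "Paris is the capital of France",
    "Berlin is the capital of Germany",
    "Bananas are yellow",
]
index = build_index(docs)
question = "What is the capital of France?"
passages = search(question, index)
prompt = augment(question, passages)
# Placeholder "model": returns the first context passage verbatim.
reply = answer(question, index, llm=lambda p: p.splitlines()[1][2:])
```

An evaluation step would sit outside this code, for example by checking over a set of known questions whether the expected passage appears in `passages`.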

RAG Enhancements:

To enhance the effectiveness and precision of your RAG system, we recommend the following best practices:

Quality of Indexed Data: The first step in boosting a RAG system's performance is to improve the data it uses. This means carefully selecting and preparing the data before it's added to the system. Remove any duplicates, irrelevant documents, or inaccuracies. Regularly update documents to keep the system current. Clean data leads to more accurate responses from your RAG.

Optimize Index Structure: Adjusting the size of the data chunks your RAG system retrieves is crucial. Finding the perfect balance between too small and too large can significantly impact the relevance and completeness of the information provided. Experimentation and testing are vital to determining the ideal chunk size.

Incorporate Metadata: Adding metadata to your indexed data can drastically improve search relevance and structure. Use metadata like dates for sorting or specific sections in scientific papers to refine search results. Metadata adds a layer of precision atop your standard vector search.

Mixed Retrieval Methods: Combine vector search with keyword search to capture both advantages. This hybrid approach ensures you get semantically relevant results while catching important keywords.

ReRank Results: After retrieving a set of documents, reorder them to highlight the most relevant ones. With Rerank, we can improve your models by re-organizing your results based on certain parameters. There are many re-ranker models and techniques that you can utilize to optimize your search results.

Prompt Compression: Post-process the retrieved contexts by eliminating noise and emphasizing essential information, reducing the overall context length. Techniques such as Selective Context and LLMLingua can prioritize the most relevant elements.

Hypothetical Document Embedding (HyDE): Generate a hypothetical answer to a query and use it to find actual documents with similar content. This innovative approach demonstrates improved retrieval performance across various tasks.

Query Rewrite and Expansion: Before processing a query, have an LLM rewrite it to express the user's intent better, enhancing the match with relevant documents. This step can significantly refine the search process.
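For the mixed retrieval item above, the text does not say how the vector and keyword result lists should be merged. One widely used option is reciprocal rank fusion (RRF), sketched below as an assumption rather than as the recommended method; the document IDs are made up, and `k=60` is simply the constant commonly used with RRF.

```python
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge ranked lists: each list adds 1 / (k + rank) to a document's score."""
    scores: Dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical outputs of a vector search and a keyword search.
vector_hits = ["doc_b", "doc_a", "doc_c"]
keyword_hits = ["doc_a", "doc_d", "doc_b"]
fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
```

Documents that appear in both lists rise to the top, and because RRF works on ranks rather than raw scores, the two retrievers do not need comparable scoring scales. The fused list could then be passed to a re-ranker as described in the ReRank item.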

By implementing these strategies, businesses can significantly improve the functionality and accuracy of their RAG systems, leading to more effective and efficient outcomes.

Using Karini AI’s purpose-built platform for GenAIOps, you can build production-grade, efficient RAG systems within minutes.

About Karini AI:

Fueled by innovation, we're making the dream of robust Generative AI systems a reality. No longer confined to specialists, Karini.ai empowers non-experts to participate actively in building/testing/deploying Generative AI applications. As the world's first GenAIOps platform, we've democratized GenAI, empowering people to bring their ideas to life – all in one evolutionary platform.

Contact:

Jerome Mendell

(404) 891-0255

#artificial intelligence#generative ai#karini ai#machine learning#genaiops#RAG Systems#Retrieval Augmented Generation#Data Intexing#AI Augmentation

0 notes