#refactor that logic away

Note

I was really excited about this project, and got immensely disappointed with the release of the UI. The fact it's almost a carbon copy of FlightRising's interface is just so... sad. It basically drains Pawborough of its own identity. I really-really hope you guys will consider changing it, because the original concept is lovely. Here's a few things I'd like to comment on:

1. FR's interface is dated. Currently, they're refactoring the site, and i wouldn't be surprised if their next goal after the refactor would be a UI redesign. And you guys, instead of trying to modernise it and make it your own, are copying a UI from *a decade ago*.

2. Why are you keeping the narrow column format of the site? Most people have a wide-screen monitor nowadays, and it looks bizarre to have such a compressed website. Please consider automatically adapting the site's layout to a user's screen width, sorta like Wolvden/Lioden does. IIRC, they have two columns of content (main stuff in the center & a column with user's data on the left), which stack into one column when you're using a mobile device (aka a narrow screen). But since you're planning to make a mobile version anyway, you should consider making the desktop version of the site wide and, well, desktop-friendly, and then figure out the mobile version as it's own thing.

3. Does the user box in the top right of the site really have to be literally identical to FR, with the same data placed in the same way, and also with the energy bar literally having the same exact segments on it? Especially since you have removed the flight banner, making that user block much less balanced looking. Instead, I think you guys can consider making it much more horizontal and narrow. Assuming the two variables on the right (book and turnip icons) are pet/familiar count and cat count, I feel like they can be dropped completely and moved to the user page. I don't think they need to be displayed at all times on all site's pages.

Image link

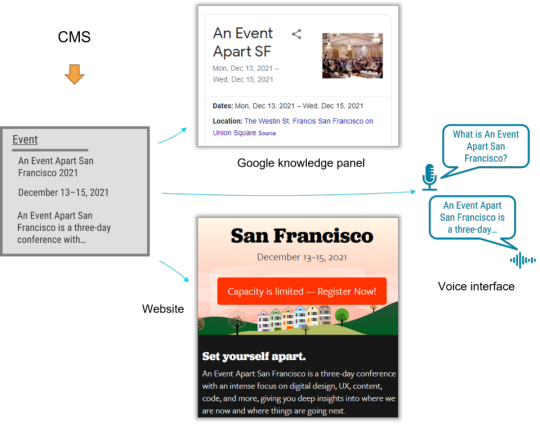

Alternatively, you can also use Lioden/Wolvden's approach and make a separate column, and then put all user information and bookmarks in that side column. As a compromise between FR & LD, you can place that user column on the right. Here's a screenshot from google for reference:

Image link

I'd also like to point out the bookmarks box in the column with user data. It's virtually unlimited and you can name your own bookmarks. Introducing this would allow you to largely collapse the left column with site navigation, because you don't use most of those links every day. As a FR player, I would only keep ~13 links in my always-visible bookmarks instead of the 29 available in the left navigation bar. It would be especially nice if I could add emojies into my bookmark names like you can on LD/WD, so it's easier to see what i need right away.

As a mini-addition, please work on the navigation column on the left. It's horrible on FR, and it will be horrible on PB if you introduce it. There's gotta be better ways to navigate the site.

4. I hope you reconsider some of the artstyle choices regarding backgrounds and decorations. What got me excited about PB was the absolutely fantastic character art from NPCs to the breed artworks. You seriously couldn't have picked a better artist for this over Amelia B. It has so much personality and immediately elevated PB in my eyes over Lorwolf, which had absolutely soulless art and looked almost traced from photos. But then I was absolutely heartbroken to see you have went with this odd, textured, sketchy style for the backdrops. Why? I'm assuming it's something like anime-style logic, where characters are cell-shaded and flat and backgrounds are painterly, but to pull that off you need a simply spectacular background artist and... i'm sorry, but your artists just don't pull it off. There's color going outside of lines on the opal waterfall background, some "hairy" scratchy lines that don't follow the shape of the object and dang it just looks flat in terms of values. Its not a terrible thing to make the cat pop, but the fact it's literally the opposite of the cats' style just makes it look odd. The obsidian sentry artwork is probably my least favorite out of everything shown by far. the head anatomy is all over the place and the shading is blurry and muddy where it looks like it should have sharp edges. It just seems like you hired a professional to do the breed artwork, and an actual newbie to do some of the items/backgrounds. Addittionally, i noticed that you're planning to introduce player-made familiars/decors, so that would introduce even more style variation into the equation. Please, please consider making the style same-ish for all site assets. It's not bad if the background assets stay low-contast and a bit flat, but at least give them the same linework as the cats in terms of weight, texture, and quality. Perhaps you can use colored lineart in decorations and backgrounds to make it more muted in comparison to the cats. And I see I'm not the only one asking for this, so please don't fall for the sunk cost fallacy and rework the assets you already have, instead of "dooming" your sites for decades of having blurry, sketchy backgrounds.

Anyway, all of this is coming from a good place, I promise this wasn't written in bad faith. Im extremely excited and passionate about this project and I really-really hope it succeeds. But please, not only listen to user feedback, but also make changes before it's too late.

Thank you for the feedback. To address a few things I believe were lost in translation that we hadn't made clear, and to address this feedback:

The narrow width of the container actually is a placeholder! We do wish to switch to a wider version, and the demo utilizes an early test build. We do already utilize flexbox, which will adjust to the player's screen size, but it's our intention to make the container limit bigger. On top of that, we are hoping to modernize much of the old interface style while keeping a nostalgic charm, including things such as integration of flexbox as an example. Thank you for pointing this out, as this was something that wasn't made clear, and that we did not think to mention.

The userbox is our design nadir right now, we understand, and we are looking to redesign it immediately. Our idea at this time is to disperse the pixel components among it into the ribbon, place where a graphic was intended to go into the navigation, and leave only the icon and energy bar at the top. Actually, we have design mockups with much similar placement to your mockup! Though we were considering putting money in the ribbon too so as to distance ourselves as much as possible. We will consider the feedback of leaving the components strictly on a user's page, so we will fiddle with this. As well, we will fiddle with information potentially in a column. Thank you for the effort you put in!

Regarding the user navigation, we could potentially make it collapsable, as well as introduce bookmarks for those who prefer it. However, to avoid the inaccessibility of dropdowns, the default state would have to remain uncollapsed. Making the navigation collapsable has actually been discussed previously as a good idea. As of now we are looking into increasing the margin of the list and importing imagery into it for better readability, thinking similar to Marapets and early Neopets, but different. What we enjoy about the navigation is a consistent knowledge and potential memorization of everything on a site that collapsed information does not provide, but perhaps we can design something that will allow for the best of both worlds. We are taking in this feedback to heart. Thank you for sharing what you as a user prefer from your game experience!

We understand your distaste for some of the Kickstarter assets, and should there be a demand we can reconsider the rendering for some of them, such as decor. We do, however, have several artists on the team that can do backgrounds and decor, and we shall work to better instruct style discrepancy. We apologize for the distaste in the assets, and we will improve with both stylistic direction and artistic synergy. The intention for our backgrounds is to bring a storybook feeling, and there is going to be an amount of subjectivity to the judgement of this choice. We apologize that it is not to your taste. Regardless, we appreciate your feedback, will note it for future adaptation of our stylistic direction, and once again apologize for your distaste. To clarify, "user-made" decor and companions will simply be user instruction on the design, while our art and design team is still controlling the final piece.

Thank you for feedback on the user experience, and we promise we have not been writing off these points entirely, but rather wanting to express that we understand and, as stated, want to incorporate this feedback while remaining true to our philosophy. We apologize that our communication was lackluster and will be keeping this as our statement on the matter before updating with our new designs. We will do better to communicate our intentions. We do want to hear what a user will most value from an interface experience! Once again, thank you for taking the time and effort to share this information with us, and thank you for the patience as we learn!

24 notes

·

View notes

Text

tired

made lots of progress on my point and click adventure game! by lots I mean the characters can now react to if the player has a specific item they care about lol.

am feeling somewhat conflicted about sharing more specific details about it. Part of the reason it exists is to be a surprise for a family member. I'm keeping more detailed logs on a private tumblr for now

am also tired. my interest is very much in learning godot to develop this game, and I feel like it's the most consistent forward momentum I've had with a project. Learning coding now, after some on-and-off flirtations with coding since about 2016, it feels like I'm... actually doing things. I'm struggling, and learning every step of the way, but I'm not floundering and giving up. I get really excited when I'm finally able to piece together a big new piece of functionality!

It's like gamedev in and of itself is like a logic puzzle game. Inheritance, composition, I look at the code I write and think about how can I make this prettier, or how can I make this more readable and understandable?

I think recently trying to really understand my ADHD, and how my brain works, is a key factor here. Before when i was trying to learn Unity in high school, I wasn't aware of what my ADHD was, y'know, actually doing for my attention and motivation, so the interest would come to make something, and then it would fly away the moment i didn't understand a concept.

Now though, I think being aware of my lack of consistent executive functioning means I'm constantly trying to accommodate myself in code. I'm not always 100% successful (I know for a fact today I wrote the same code twice in two places where it should definitely be just one function in a shared parent class...), but learning about these organizational maxims for object oriented programming is rewarding.

I really like the organization of code, the process of refactoring, and the mission to make it really clean and accessible and readable for myself, so i don't get lost. It really is like sitting down with a nice crossword puzzle, or picross, it just gets my brain engaged just right.

I think tomorrow I've got some refactoring work to do. I look forward to that.

Other stuff

my screenwriting isn't happening at the moment, partially because I'm obviously focused on prototyping my game, which is much more immediately rewarding and novel. But I'm chipping away at ideas and outlines. I'm actually toying with turning one of those ideas into a point and click game, or at least a visual novel. I think the budget and time to do so would be much more attainable than doing some of those ideas as a feature length movie. But I don't know really.

It's absurd how much I'd like to be a film writer-director with my ADHD. There's just so much executive functioning required at every step. It's incredibly logistical, with so many moving parts constantly juggling and adapting and stumbling forward. I need to do research on how directors with ADHD are able to live with that as their job.

I don't have anything else to say, I'm just waiting to be tired enough to fall asleep. good night

8 notes

·

View notes

Text

Price: [price_with_discount]

(as of [price_update_date] - Details)

[ad_1]

“Eric Evans has written a fantastic book on how you can make the design of your software match your mental model of the problem domain you are addressing. “His book is very compatible with XP. It is not about drawing pictures of a domain; it is about how you think of it, the language you use to talk about it, and how you organize your software to reflect your improving understanding of it. Eric thinks that learning about your problem domain is as likely to happen at the end of your project as at the beginning, and so refactoring is a big part of his technique. “The book is a fun read. Eric has lots of interesting stories, and he has a way with words. I see this book as essential reading for software developers―it is a future classic.” ―Ralph Johnson, author of Design Patterns “If you don’t think you are getting value from your investment in object-oriented programming, this book will tell you what you’ve forgotten to do. “Eric Evans convincingly argues for the importance of domain modeling as the central focus of development and provides a solid framework and set of techniques for accomplishing it. This is timeless wisdom, and will hold up long after the methodologies du jour have gone out of fashion.” ―Dave Collins, author of Designing Object-Oriented User Interfaces “Eric weaves real-world experience modeling―and building―business applications into a practical, useful book. Written from the perspective of a trusted practitioner, Eric’s descriptions of ubiquitous language, the benefits of sharing models with users, object life-cycle management, logical and physical application structuring, and the process and results of deep refactoring are major contributions to our field.” ―Luke Hohmann, author of Beyond Software Architecture “This book belongs on the shelf of every thoughtful software developer.” ―Kent Beck “What Eric has managed to capture is a part of the design process that experienced object designers have always used, but that we have been singularly unsuccessful as a group in conveying to the rest of the industry. We've given away bits and pieces of this knowledge...but we've never organized and systematized the principles of building domain logic. This book is important.” ―Kyle Brown, author of Enterprise Java™ Programming with IBM® WebSphere® The software development community widely acknowledges that domain modeling is central to software design. Through domain models, software developers are able to express rich functionality and translate it into a software implementation that truly serves the needs of its users. But despite its obvious importance, there are few practical resources that explain how to incorporate effective domain modeling into the software development process. Domain-Driven Design fills that need. This is not a book about specific technologies. It offers readers a systematic approach to domain-driven design, presenting an extensive set of design best practices, experience-based techniques, and fundamental principles that facilitate the development of software projects facing complex domains. Intertwining design and development practice, this book incorporates numerous examples based on actual projects to illustrate the application of domain-driven design to real-world software development. Readers learn how to use a domain model to make a complex development effort more focused and dynamic. A core of best practices and standard patterns provides a common language for the development team. A shift in emphasis―refactoring not just the code but the model underlying the code―in combination with the frequent iterations of Agile development leads to deeper insight into domains and enhanced communication between domain expert and programmer. Domain-Driven Design then builds on this foundation, and addresses modeling and design for complex systems and larger organizations.Specific topics covered include: Getting

all team members to speak the same language Connecting model and implementation more deeply Sharpening key distinctions in a model Managing the lifecycle of a domain object Writing domain code that is safe to combine in elaborate ways Making complex code obvious and predictable Formulating a domain vision statement Distilling the core of a complex domain Digging out implicit concepts needed in the model Applying analysis patterns Relating design patterns to the model Maintaining model integrity in a large system Dealing with coexisting models on the same project Organizing systems with large-scale structures Recognizing and responding to modeling breakthroughs With this book in hand, object-oriented developers, system analysts, and designers will have the guidance they need to organize and focus their work, create rich and useful domain models, and leverage those models into quality, long-lasting software implementations.

Publisher : Addison-Wesley; 1st edition (4 September 2003)

Language : English

Hardcover : 560 pages

ISBN-10 : 0321125215

ISBN-13 : 978-0321125217

Item Weight : 1 kg 240 g

Dimensions : 18.8 x 3.56 x 24.26 cm

Country of Origin : India

[ad_2]

0 notes

Text

seriously though healing causing F.C.G pain sure uh. Sure throws some complications into the works huh. At least for me. Mentally.

#im gonna need fearne to up her healing spell count reaaaal quick#critical role#cr spoilers#c3e32#fcg#cr liveblogging#like. hm. HM.#can we remove that particular subroutine somehow#just comment out that bit of code#refactor that logic away#pls

97 notes

·

View notes

Text

☄️ Pufferfish, please scale the site!

We created Team Pufferfish about a year ago with a specific goal: to avert the MySQL apocalypse! The MySQL apocalypse would occur when so many students would work on quizzes simultaneously that even the largest MySQL database AWS has on offer would not be able to cope with the load, bringing the site to a halt.

A little over a year ago, we forecasted our growth and load-tested MySQL to find out how much wiggle room we had. In the worst case (because we dislike apocalypses), or in the best case (because we like growing), we would have about a year’s time. This meant we needed to get going!

Looking back on our work now, the most important lesson we learned was the importance of timely and precise feedback at every step of the way. At times we built short-lived tooling and process to support a particular step forward. This made us so much faster in the long run.

🏔 Climbing the Legacy Code Mountain

Clear from the start, Team Pufferfish would need to make some pretty fundamental changes to the Quiz Engine, the component responsible for most of the MySQL load. Somehow the Quiz Engine would need to significantly reduce its load on MySQL.

Most of NoRedInk runs on a Rails monolith, including the Quiz Engine. The Quiz Engine is big! It’s got lots of features! It supports our teachers & students to do lots of great work together! Yay!

But the Quiz Engine has some problems, too. A mix of complexity and performance-sensitivity has made engineers afraid to touch it. Previous attempts at big structural change in the Quiz Engine failed and had to be rolled back. If Pufferfish was going make significant structural changes, we would need to ensure our ability to be productive in the Quiz Engine codebase. Thinking we could just do it without a new approach would be foolhardy.

⚡ The Vengeful God of Tests

We have mixed feelings about our test suite. It’s nice that it covers a lot of code. Less nice is that we don’t really know what each test is intended to check. These tests have evolved into complex bits of code by themselves with a lot of supporting logic, and in many cases, tight coupling to the implementation. Diving deep into some of these tests has uncovered tests no longer covering any production logic at all. The test suite is large and we didn’t have time to dive deep into each test, but we were also reluctant to delete test cases without being sure they weren’t adding value.

Our relationship with the Quiz Engine test suite was and still is a bit like one might have with an angry Greek god. We’re continuously investing effort to keep it happy (i.e. green), but we don’t always understand what we’re doing or why. Please don’t spoil our harvest and protect us from (production) fires, oh mighty RSpec!

The ultimate goal wasn’t to change Quiz Engine functionality, but rather to reduce its load on MySQL. This is the perfect scenario for tests to help us! The test suite we want is:

fast

comprehensive, and

not dependent on implementation

includes performance testing

Unfortunately, that’s not the hand we were given:

The suite takes about 30 minutes to run in CI and even longer locally.

Our QA team finds bugs that sneaked past CI in PRs with Quiz Engine changes relatively frequently.

Many tests ensure that specific queries are performed in a specific order. Considering we might replace MySQL wholesale, these tests provide little value.

And because a lot of Quiz Engine code is extremely performance-sensitive, there’s an increased risk of performance regressions only surfacing with real production load.

Fighting with our tests meant that even small changes would take hours to verify in tests, and then, because of unforeseen regressions not covered by the tests, take multiple attempts to fix, resulting in multiple-day roll-outs for small changes.

Our clock is ticking! We needed to iterate faster than that if we were going to avert the apocalypse.

🐶 I have no idea what I’m doing 🧪

Reading complicated legacy Rails code often raises questions that take surprising amounts of effort to answer.

Is this method dead code? If not, who is calling this?

Are we ever entering this conditional? When?

Is this function talking to the database?

Is this function intentionally talking to the database?

Is this function only reading from the database or also writing to it?

It isn’t even clear what code was running. There are a few features of Ruby (and Rails) which optimize for writing code over reading it. We did our best to unwrap this type of code:

Rails provides devs the ability to wrap functionality in hooks. before_ and after_ hooks let devs write setup and tear-down code once, then forget it. However, the existence of these hooks means calling a method might also evaluate code defined in a different file, and you won’t know about it unless you explicitly look for it. Hard to read!

Complicating things further is Ruby’s dynamic dispatch based on subclassing and polymorphic associations. Which load_students am I calling? The one for Quiz or the one for Practice? They each implement the Assignment interface but have pretty different behavior! And: they each have their own set of hooks🤦. Maybe it’s something completely different!

And then there’s ActiveRecord. ActiveRecord makes it easy to write queries — a little too easy. It doesn’t make it easy to know where queries are happening. It’s ergonomic that we can tell ActiveRecord what we need, and let it figure how to fetch the data. It’s less nice when you’re trying to find out where in the code your queries are happening and the answer to that question is, “absolutely anywhere”. We want to know exactly what queries are happening on these code paths. ActiveRecord doesn’t help.

🧵 A rich history

A final factor that makes working in Quiz Engine code daunting is the sheer size of the beast. The Quiz Engine has grown organically over many years, so there’s a lot of functionality to be aware of.

Because the Quiz Engine itself has been hard to change for a while, APIs defined between bits of Quiz Engine code often haven’t evolved to match our latest understanding. This means understanding the Quiz Engine code requires not just understanding what it does today, but also how we thought about it in the past, and what (partial) attempts were made to change it. This increases the sum of Quiz Engine knowledge even further.

For example, we might try to refactor a bit of code, leading to tests failing. But is this conditional branch ever reached in production? 🤷

Enough complaining. What did we do about it?

We knew this was going to be a huge project, and huge projects, in the best case, are shipped late, and in the average case don’t ever ship. The only way we were going to have confidence that our work would ever see the light of day was by doing the riskiest, hardest, scariest stuff first. That way, if one approach wasn’t going to work, we would find out about it sooner and could try something new before we’d over-invested in a direction.

So: where is the risk? What’s the scariest problem we have to solve? History dictates: The more we change the legacy system, the more likely we’re going to cause regressions.

So our first task: cut away the part of the Quiz Engine that performs database queries and port this logic to a separate service. Henceforth when Rails needs to read or change Quiz Engine data, it will talk to the new service instead of going to the database directly.

Once the legacy-code risk has been minimized, we would be able to focus on the (still challenging) task of changing where we store Quiz Engine data from single-database MySQL to something horizontally scalable.

⛏️ Phase 1: Extracting queries from Rails

🔪 Finding out where to cut

Before extracting Quiz Engine MySQL queries from our Rails service, we first needed to know where those queries were being made. As we discussed above this wasn’t obvious from reading the code.

To find the MySQL queries themself, we built some tooling: we monkey-patched ActiveRecord to warn whenever an unknown read or write was made against one of the tables containing Quiz Engine data. We ran our monkey-patched code first in CI and later in production, letting the warnings tell us where those queries were happening. Using this information we decorated our code by marking all the reads and writes. Once code was decorated, it would no longer emit warnings. As soon as all the writes & reads were decorated, we changed our monkey-patch to not just warn but fail when making a query against one of those tables, to ensure we wouldn’t accidentally introduce new queries touching Quiz Engine data.

🚛 Offloading logic: Our first approach

Now we knew where to cut, we decided our place of greatest risk was moving a single MySQL query out of our rails app. If we could move a single query, we could move all of them. There was one rub: if we did move all queries to our new app, we would add a lot of network latency. because of the number of round trips needed for a single request. Now we have a constraint: Move a single query into a new service, but with very little latency.

How did we reduce latency?

Get rid of network latency by getting rid of the network — we hosted the service in the same hardware as our Rails app.

Get rid of protocol latency by using a dead-simple protocol: socket communication.

We ended up building a socket server in Haskell that took data requests from Rails, and transformed them into a series of MySQL queries, which rails would use to fetch the data itself.

🛸 Leaving the Mothership: Fewer Round Trips

Although co-locating our service with rails got us off the ground, it required significant duct tape. We had invested a lot of work building nice deployment systems for HTTP services and we didn’t want to re-invent that tooling for socket-based side-car apps. The thing that was preventing the migration was having too many round-trip requests to the Rails app. How could we reduce the number of round trips?

As we moved MySQL query generation to our new service, we started to see this pattern in our routes:

MySQL Read some data ┐ Ruby Do some processing │ candidate 1 for MySQL Read some more data ┘ extraction Ruby More processing MySQL Write some data ┐ Ruby Processing again! │ candidate 2 for MySQL Write more data ┘ extraction

To reduce latency, we’d have to bundle reads and writes: In addition to porting reads & writes to the new service, we’d have to port the ruby logic between reads and writes, which would be a lot of work.

What if instead, we could change the order of operations and make it look like this?

MySQL Read some data ┐ candidate 1 for MySQL Read some more data ┘ extraction Ruby Do some processing Ruby More processing Ruby Processing again! MySQL Write some data ┐ candidate 2 for MySQL Write more data ┘ extraction

Then we’d be able to extract batches of queries to Haskell and leave the logic behind in Rails.

One concern we had with changing the order of operations like this was the possibility of a request handler first writing some data to the database, then reading it back again later. Changing the order of read and write queries would result in such code failing. However, since we now had a complete and accurate picture of all the queries the Rails code was making, we knew (luckily!) we didn’t need to worry about this.

Another concern was the risk of a large refactor like this resulting in regressions causing long feedback cycles and breaking the Quiz Engine. To avoid this we tried to keep our refactors as dumb as possible: Specifically: we mostly did a lot of inlining. We would start with something like this

class QuizzesControllller 9000 :super_saiyan else load_sub_syan_fun_type # TODO: inline me end end end end

These are refactors with a relatively small chance of changing behavior or causing regressions.

Once the query was at the top level of the code it became clear when we needed data, and that understanding allowed us to push those queries to happen first.

e.g. from above, we could easily push the previously obscured QuizForFun query to the beginning:

class QuizzesControllller 9000 :super_saiyan else load_sub_syan_fun_type # TODO: inline me end end end

You might expect our bout of inlining to introduce a ton of duplication in our code, but in practice, it surfaced a lot of dead code and made it clearer what the functions we left behind were doing. That wasn’t what we set out to do, but still, nice!

👛 Phase 2: Changing the Quiz Engine datastore

At this point all interactions with the Quiz Engine datastore were going through this new Quiz Engine service. Excellent! This means for the second part of this project, the part where we were actually going to avert the MySQL apocalypse, we wouldn’t need to worry about our legacy Rails code.

To facilitate easy refactoring, we built this new service in Haskell. The effect was immediately noticeable. Like an embargo had been lifted, from this point forward we saw a constant trickle of small productive refactors get mixed in the work we were doing, slowly reshaping types to reflect our latest understanding. Changes we wouldn’t have made on the Rails side unless we’d have set aside months of dedicated time. Haskell is a great tool to use to manage complexity!

The centerpiece of this phase was the architectural change we were planning to make: switching from MySQL to a horizontally scalable storage solution. But honestly, figuring the architecture details here wasn’t the most interesting or challenging portion of the work, so we’re just putting that aside for now. Maybe we’ll return to it in a future blog post (sneak peek: we ended up using Redis and Kafka). Like in step 1, the biggest question we had to solve was “how are we going to make it safe to move forward quickly?”

One challenge was that we had left most of our test suite behind in Rails in phase one, so we were not doing too well on that front. We added Haskell test coverage of course, including many golden result tests which are worth a post on their own. Together with our QA team we also invested in our Cypress integration test suite which runs tests from the browser, thus integration-testing the combination of our Rails and Haskell code.

Our most useful tool in making safe changes in this phase however was our production traffic. We started building up what was effectively a parallel Haskell service talking to Redis next to the existing one talking to MySQL. Both received production load from the start, but until the very end of the project only the MySQL code paths’ response values were used. When the Redis code path didn’t match the MySQL, we’d log a bug. Using these bug reports, we slowly massaged the Redis code path to return identical data to MySQL.

Because we weren’t relying on the output of the Redis code path in production, we could deploy changes to it many times a day, without fear of breaking the site for students or teachers. These deploys provided frequent and fast feedback. Deploying frequently was made possible by the Haskell Quiz Engine code living in its own service, which meant deploys contained only changes by our team, without work from other teams with a different risk profile.

🥁 So, did it work?

It’s been about a month since we’ve switched entirely to the new architecture and it’s been humming along happily. By the time we did the official switch-over to the new datastore it had been running at full-load (but with bugs) for a couple of months already. Still, we were standing ready with buckets of water in case we overlooked something. Our anxiety was in vain: the roll-out was a non-event.

Architecture, plans, goals, were all important to making this a success. Still, we think the thing most crucial to our success was continuously improving our feedback loops. Fast feedback (lots of deploys), accurate feedback (knowing all the MySQL queries Rails is making), detailed feedback (lots of context in error reports), high signal/noise ratio (removing errors we were not planning to act on), lots of coverage (many students doing quizzes). Getting this feedback required us to constantly tweak and create tooling and new processes. But even if these processes were sometimes short-lived, they've never been an overhead, allowing us to move so much faster.

3 notes

·

View notes

Text

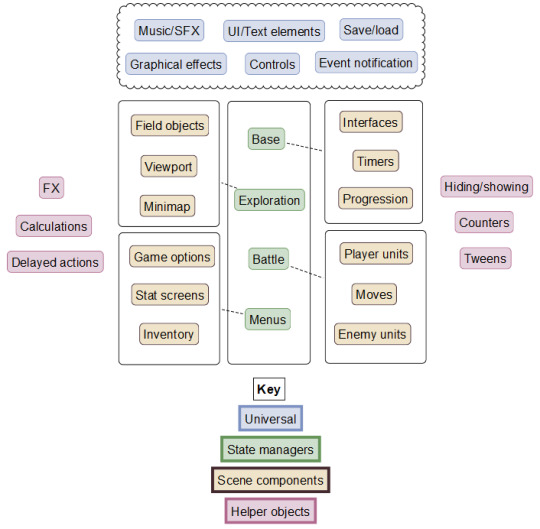

Project code anatomy

It’s been ages since I’ve done one of these discussion-focused posts, and I really only do them when I have something (relatively) interesting I’ve been thinking about! Well, that time has come, and today I’d like to talk about trade-offs between code quality and speed of development.

Limitless time? I wish! While I would love to keep this post short and say - hey, you know your project? Make every single bit of code watertight, modular, extensible, and well-documented! Unfortunately, we live in the real world where time is a very real and very limited resource. Budgeting your time (and therefore efforts) is a whole other blog which I’d love to write about at some point!

Classifying code

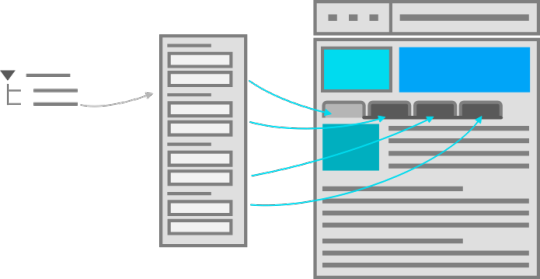

Anyway, the main point is, due to these constraints, some code will be better written and more bug-free than others! How can you determine where best to focus your efforts to ensure your project doesn’t fall over in a smoking heap when you realise key code has been incorrectly implemented? Well, the first step is to determine what your project looks like. I’ve done up a quick image of what I see in my mind’s eye:

I classify my code into one of 4 main groups. Here’s where the anatomy part of the blog title comes into play!

Universal code which is called/applicable throughout the entire codebase (the blood)

State managers which affect the main game flow for the player (the brain and spine)

Scene components which are manipulated/used throughout certain game states (The limbs)

Miscellanous, single-purpose helper objects/code (the hair, eyes, nails, fingers, toes, and importantly, belly button)

With this sort of analogy, one can perhaps start to see what parts of code are more important than others. It might hurt if you stub your toe, but that’s something that can be recovered from. So, here are my rules for how much effort I focus into each area.

Universal code

Starting with universal code, I feel this should be the most flexible type of code, as it will be used in a multitude of situations. The addition of easy to use and remember arguments to functions that can affect behaviour of these objects is essential - things like start and stop delays, depth, colour, speed, whether or not to follow an object, start/end animations, alignment, and so on.

This code should be lightweight, well-documented, and modular in order to add functionality to it as needed. I might spend about 25% of my development time here, because this code is fairly simple, although needs to be well-programmed. I would also argue this code should also be robust if you are working in a team, as other members may not know the quirks associated with the various functions, but working solo, I feel it is permissable to not program edge cases if they are documented and you keep these in mind when using them.

Of course, any universal code that deals directly with the player (e.g. textbox inputs, draggable windows, menus) MUST be 100% watertight, as there is no telling what the user will do, nor what their system specifications will be. All edge cases need to be accounted for because the player doesn’t know the quirks of your code, nor should they be expected to!

State managers

These are the main controllers of everything that happens within a general game phase, and control is passed from one manager to the next in a highly controlled and structured manner. This is core code - the game is highly dependent on the framework that you will be setting up here, and as such should be thoroughly developed, tested, and organised. As the state managers will be calling the bulk of the game’s code, it is imperative they are structured in a sensible and modular manner.

An example would be the overall battle manager object in my game. This determines the flow of the battle - things like what menu you’re choosing from, what the enemy is doing, whether attacks should be executed, recruiting, fleeing, and so on. About 40% of bugs in my code will stem from incorrect logic within these managers. Thus, a good portion of my time is spent here writing code that manages other code!

Scene components

Scene components are objects that are very active but in a limited context, and are otherwise ignored/removed when not needed. For example, field objects, which are interactive features found during exploration need to do two things - check if the player has interacted with them, and if so, execute the thing that they’re supposed to do (a ore vein will give out materials, a healing machine will ask for a fee, a trap will blow up).

As a result, scene components get enough attention that they do their jobs, but are not as rigorously programmed as the state managers unless they are complex objects (like players and enemies). These objects are often in a state of limbo during development - they are functional but often incomplete, as they are all interdependent within a particular state. I’ll probably spend about 30% of my development time here.

Thus there is usually a lot of placeholder code that I tend to go back and refactor at some point. It’s kind of like a road where you alternate between building it and driving down it one meter at a time, and then once you’re done you go over the whole thing with a road roller.

Miscellaneous

These are ultra-specialised bits of code/objects that do one thing and do it well. More often than not I use these when an object is getting too complex, and I need to separate unrelated bits of code from one another. I’ll admit it, most of this code is programmed hastily and poorly. Once I check that it does what I want it to do without any bugs, it’s left alone and I move on to other things. I tend not to revise or refactor this code at all, and I try to mitigate the large amount of single-use scripts and objects by having a nice folder structure so the code can be tucked away and I don’t have to wade through it looking for other bits of code. I estimate a very modest 5% of development time is spent on these functions.

Summing up

So, there you go, this is the unspoken thought processes that are going on while I program. I’ve never actually formally written any of this out until today, so my apologies if it doesn’t totally make sense or match your own experiences!

Oh, and while I’ve broken my code down in this way, it’s not like I say, ok, today I’ll work on all the state managers. Game development is a much more organic process than that, and generally I write whatever I need at the moment, which will be code from all 4 categories that are all related to each other in whatever I’m developing at the moment.

Nevertheless when I’m writing/developing, I keep in mind what sort of code this is going to be, and where it fits into the big picture of the project. This way I try my best to monitor and manage my time. The last thing I want is for the the game to be stuck in a permanent state of development, undergoing endless changes and iterations! In fact, it’s quite the opposite - I want to finish the game so you guys can all play and (hopefully) enjoy it!!

#game#videogame#devblog#gamedev#scifi#spacegame#dungeon crawler#rpg#entropy#gamemaker#programming#pixel art#robot#indiedev

66 notes

·

View notes

Text

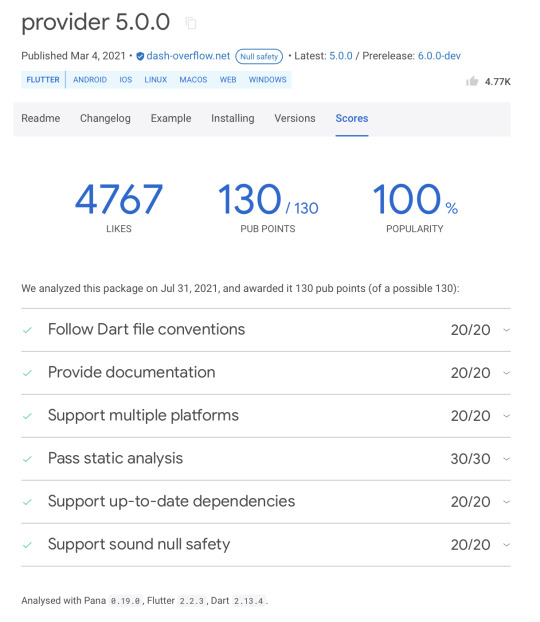

Comparative Study on Flutter State Management

Background

I am going to build a new flutter app. The app is aimed to be quite big. I'm going to need a state management tools. So, I think it’s a good idea to spent some time considering the options. First of all, i do have a preference on flutter’s state management. Itu could affect my final verdict. But, I want to make a data based decision on it. So, let’s start..

Current State of the Art

Flutter official website has a listing of all current available state management options. As on 1 Aug 2021, the following list are those that listed on the website.

I marked GetIt since it’s actually not a state management by it’s own. It’s a dependency injection library, but the community behind it develop a set of tools to make it a state management (get_it_mixin (45,130,81%) and get_it_hooks (6,100,33%)). There’s also two additional lib that not mentioned on the official page (Stacked and flutter_hooks). Those two are relatively new compared to others (since pretty much everything about flutter is new) but has high popularity.

What is Pub Point

Pub point is a curation point given by flutter package manager (pub.dev). Basically this point indicate how far a given library adhere to dart/flutter best practices.

Provider Package Meta Scores

Selection Criteria

I concluded several criteria that need to be fulfilled by a good state management library.

Well Known (Popular)

There's should be a lot of people using it.

Mature

Has rich ecosystem, which mean, resources about the library should be easily available. Resources are, but not limited to, documentation, best practices and common issue/problem solutions.

Rigid

Allow for engineers to write consistent code, so an engineer can come and easily maintain other's codebase.

Easy to Use

Easy in inter-component communication. In a complex app, a component tend to need to talk to other component. So it's very useful if the state manager give an easy way to do it.

Easy to test. A component that implement the state management need to have a good separation of concern.

Easy to learn. Has leaner learning curve.

Well Maintained:

High test coverage rate and actively developed.

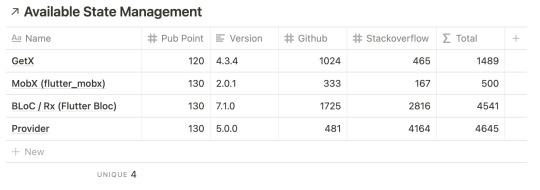

First Filter: Popularity

This first filter can give us a quick glance over the easiness of usage and the availability of resources. Since both are our criteria in choosing, the first filter is a no brainer to use. Furthermore when it’s not popular, there’s a high chance that new engineers need more time to learn it.

Luckily, we have a definitive data to rank our list. Pub.dev give us popularity score and number of likes. So, let’s drop those that has less than 90% popularity and has less than 100 likes.

As you can see, we drop 6 package from the list. We also drop setState and InheritedWidget from the list, since it’s the default implementation of state management in flutter. It’s very simple but easily increase complexity in building a bigger app. Most of the other packages try to fix the problem and build on top of it.

Now we have 9 left to go.

Second Filter: Maturity

The second filter is a bit hard to implement. After all, parameter to define “maturity” is kinda vague. But let’s make our own threshold of matureness to use as filter.

Pub point should be higher than 100

Version number should be on major version at least “1.0.0”

Github’s Closed Issue should be more than 100

Stackoverflow questions should be more than 100

Total resource (Github + Stackoverflow) should be more than 500

The current list doesn’t have 2 parameter defined above, so we need to find it out.

So let’s see which state management fulfill our threshold.

As you can see, “flutter_redux” is dropped. It’s not satisfied the criteria of “major version”. Not on major version can be inferred as, the creator of the package marked it is as not stable. There could be potentially breaking API changes in near future or an implementation change. When it happens we got no option but to refactor our code base, which lead to unnecessary work load.

But, it’s actually seems unfair. Since flutter_redux is only a set of tool on top redux . The base package is actually satisfy our threshold so far. It’s on v5.0.0, has pub point ≥ 100, has likes ≥ 100 and has popularity ≥ 90%.

So, if we use the base package it should be safe. But, let’s go a little deeper. The base package is a Dart package, so it means this lib can be used outside flutter (which is a plus). Redux package also claims it’s a rich ecosystem, in which it has several child packages:

As i inspect each of those packages, i found none of them are stables. In fact, none of them are even popular. Which i can assume it’s pretty hard to find “best practices” around it. Redux might be super popular on Javascript community. We could easily find help about redux for web development issue, but i don’t think it stays true for flutter’s issue (you can see the total resource count, it barely pass 500, it’s 517).

Redux package promises big things, but as a saying goes “a chain is as strong as its weakest link”. It’s hard for me to let this package claim “maturity”.

Fun fact: On JS community, specifically React community, redux is also losing popularity due to easier or simpler API from React.Context or Mobx.

But, Just in case, let’s keep Redux in mind, let’s say it’s a seed selection. Since we might go away with only using the base package. Also, it’s might be significantly excel on another filter. So, currently we have 4+1 options left.

Third Filter: Rigid

Our code should be very consistent across all code base. Again, this is very vague. What is the parameters to say a code is consistent, and how consistent we want it to be. Honestly, i can’t find a measurable metric for it. The consistency of a code is all based on a person valuation. In my opinion every and each public available solutions should be custom tailored to our needs. So to make a codebase consistent we should define our own conventions and stick on it during code reviews.

So, sadly on this filter none of the options are dropped. It stays 4+1 options.

Fourth Filter: Easy to Use

We had already define, when is a state management can be called as easy to use in the previous section. Those criteria are:

Each components can talk to each other easily.

Each components should be easy to test. It can be achieved when it separates business logic from views. Also separate big business logic to smaller ones.

We spent little time in learning it.

Since the fourth filter is span across multiple complex criteria, I think to objectively measure it, we need to use a ranking system. A winner on a criteria will get 2 point, second place will get 1, and the rest get 0 point. So, Let’s start visiting those criteria one by one.

Inter Component Communication

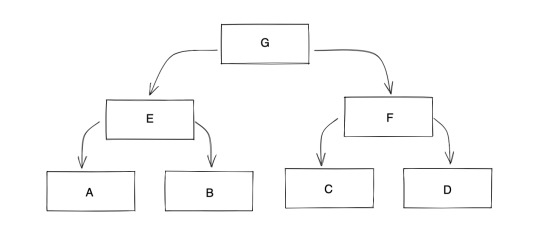

Let’s say we have component tree like the following diagram,

In basic composition pattern, when component A needs something from component D it needs to follow a chain of command through E→G→F→D

This approach is easily get very complex when we scale up the system, like a tree with 10 layers deep. So, to solve this problem, state management’s tools introduce a separate class that hold an object which exposed to all components.

Basically, all state management listed above allows this to happen. The differences located on how big is the “root state” allowed and how to reduce unnecessary “render”.

Provider and BLoC is very similar, their pattern best practices only allow state to be stored as high as they needed. In example, on previous graph, states that used by A and B is stored in E but states that used by A and D is stored in root (G). This ensure the render only happen on those component that needed it. But, the problem arise when D suddenly need state that stored in E. We will need a refactor to move it to G.

Redux and Mobx is very similar, it allows all state to be stored in a single state Store that located at root. Each state in store is implemented as an observable and only listened by component that needs it. By doing it that way, it can reduce the unnecessary render occurred. But, this approach easily bloated the root state since it stores everything. You can implement a sub store, like a store located in E to be used by A and B, but then they will lose their advantages over Provider and BLoC. So, sub store is basically discouraged, you can see both redux and mobx has no implementation for MultiStore component like MultiProvider in provider and MultiBlocProvider in BLoC.

A bloated root state is bad due to, not only the file become very big very fast but also the store hogs a lot of memory even when the state is not actively used. Also, as far as i read, i can’t find any solution to remove states that being unused in either Redux and Mobx. It’s something that won’t happen in Provider, since when a branch is disposes it will remove all state included. So, basically choosing either Provider or Redux is down to personal preferences. Wether you prefer simplicity in Redux or a bit more complex but has better memory allocation in Provider.

Meanwhile, Getx has different implementation altogether. It tries to combine provider style and redux style. It has a singleton object to store all active states, but that singleton is managed by a dependency injector. That dependency injector will create and store a state when it’s needed and remove it when it’s not needed anymore. Theres a writer comment in flutter_redux readme, it says

Singletons can be problematic for testing, and Flutter doesn’t have a great Dependency Injection library (such as Dagger2) just yet, so I’d prefer to avoid those. … Therefore, redux & redux_flutter was born for more complex stories like this one.

I can infer, if there is a great dependency injection, the creator of flutter redux won’t create it. So, for the first criteria in easiness of usage, i think, won by Getx (+2 point).

There is a state management that also build on top dependency injection GetIt. But, it got removed in the first round due to very low popularity. Personally, i think it got potential.

Business logic separation

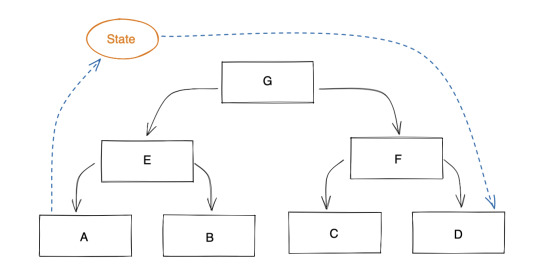

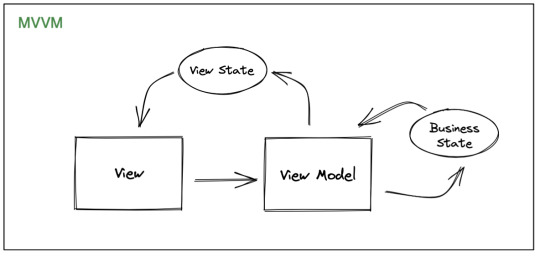

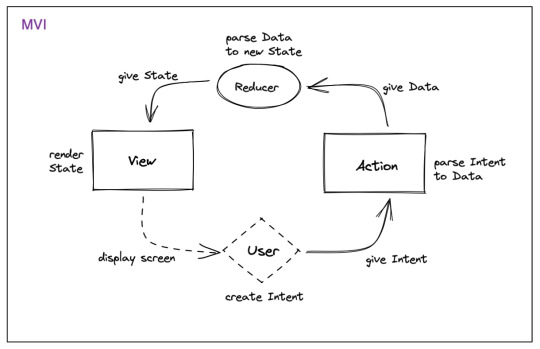

Just like in the previous criteria, all state management also has their own level of separation. They differ in their way in defining unidirectional data flow. You can try to map each of them based on similarity to a more common design pattern like MVVM or MVI.

Provider, Mobx and Getx are similar to MVVM. BLoC and Redux are similar to MVI.

In this criteria, i think there’s no winner since it boils down to preference again.

Easy to learn

Finally, the easiest criteria in easiness of usage, easy to learn. I think there’s only one parameter for it. To be easy to learn, it have to introduced least new things. Both, MVVM and MVI is already pretty common but the latter is a bit new. MVI’s style packages like redux and bloc, introduce new concepts like an action and reducer. Even though Mobx also has actions but it already simplified by using code generator so it looks like any other view model.

So, for this criteria, i think the winner are those with MVVM’s style (+2 Point), Provider, Mobx and Getx. Actually, google themself also promote Provider (Google I/O 2019) over BLoC (Google I/O 2018) because of the simplicity, you can watch the show here.

The fourth filter result

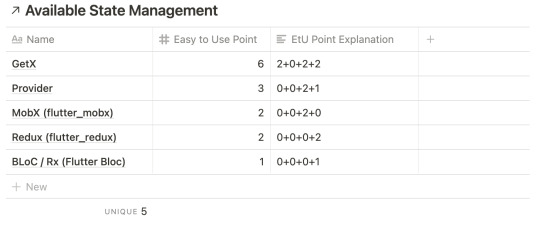

We have inspect all criteria in the fourth filter. The result are as the following:

Getx won twice (4 point),

Provider and Mobx won once (2 point) and

BLoC and Redux never won (0 point).

I guess it’s very clear that we will drop BLoC and Redux. But, i think we need to add one more important criteria.

Which has bigger ecosystem

Big ecosystem means that a given package has many default tools baked or integrated in. A big ecosystem can help us to reduce the time needed to mix and match tools. We don’t need to reinvent the wheel and focused on delivering products. So, let’s see which one of them has the biggest ecosystem. The answer is Getx, but also unsurprisingly Redux. Getx shipped with Dependency Injection, Automated Logging, Http Client, Route Management, and more. The same thing with Redux, as mentioned before, Redux has multiple sub packages, even though none of it is popular. The second place goes to provider and BLoC since it gives us more option in implementation compared to one on the last place. Finally, on the last place Mobx, it offers only state management and gives no additional tools.

So, these are the final verdict

Suddenly, Redux has comeback to the race.

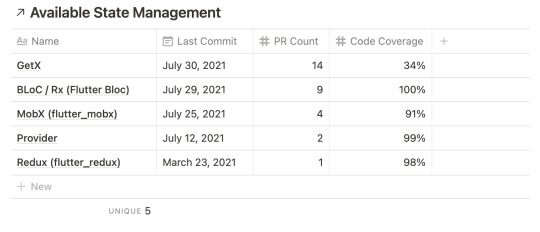

Fifth Filter: Well maintained

No matter how good a package currently is, we can’t use something that got no further maintenance. We need a well maintained package. So, as always let’s define the criteria of well maintained.

Code Coverage

Last time a commit merged to master

Open Pull Request count

Just like previous filter, we will implement ranking system. A winner on a criteria will get 2 point, second place will get 1, and the rest get 0 point.

So, with above data, here are the verdicts

Getx 4 point (2+2+0)

BLoC 4 point (1+1+2)

MobX 0 point (0+0+0)

Provider 1 point (0+0+1)

Redux 0 point (0+0+0)

Lets add with the previous filter point,

Getx 10 point (4+6)

BLoC 5 point (4+1)

MobX 2 point (0+2)

Provider 4 point (1+3)

Redux 2 point (0+2)

By now we can see that the winner state management, that allowed to claim the best possible right now, is Getx. But, it’s a bit concerning when I look at the code coverage, it’s the lowest by far from the others. It makes me wonder, what happen to Getx. So i tried to see the result more closely.

After seeing the image above, i can see that the problem is the get_connect module has 0 coverage also several other modules has low coverage. But, let’s pick the coverage of the core modules like, get_core (100%), get_instance(77%), get_state_manager(51%,33%). The coverage get over 50%, not too bad.

Basically, this result means we need to cancel a winner status from Getx. It’s the win on big ecosystem criteria. So, lets subtract 2 point from the end result (10-2). It got 8 points left, it still won the race. We can safely say it has more pros than cons.

Conclusions

The final result, current best state management is Getx 🎉🎉🎉. Sure, it is not perfect and could be beaten by other state management in the future, but currently it’s the best.

So, my decision is "I should use GetX"

1 note

·

View note

Text

[my own game] #20 – rotating spikes obstacles

Challenge: How can I create a mace-like obstacle that rotates either clockwise or counter-clockwise, deals damage and knocks back the player on collision for my 2D elemental platformer?

Our 2D elemental platformer will feature different element-based obstacles that deal damage to the player when in contact and hinder their progress while progressing through a level. I decided to create a rotating spikes obstacle which represents the Earth element as the first element-based obstacle because I had a general idea of how I want it to behave and how to implement it.

Methodology

For this challenge I got inspired by the rotating spikes contraptions in Ori and the Blind Forest. In order to get familiar with it, I played the part of the game that features them in order to see how the mechanic feels like. While implementing the mechanic in a Unity prototype, I brainstormed on how to achieve the desired behaviour. Furthermore, I did some playtesting in order to ensure that the mechanic works in a similar way to the one in Ori and the Blind Forest. In the meantime, I looked at some forums for problems that others have encountered in order to incorporate what they have learned. I also checked the Unity documentation to see how certain methods and classes work. I validated the game design and game technology aspects of the challenge results in separate peer review sessions with two teachers.

DOT framework methods used:

Resources

Game concepts:

Dangers (Ori and the Blind Forest) - Ori and the Blind Forest Fandom Wiki

Unity documentation:

Transform.Rotate

Rigidbody.AddForce

UnityEvent<T0>

Unity answers:

What exactly is RelativeVelocity?

[2D] Can't AddForce to x but y works fine.

Using AddForce for horizontal movement doesn't quite work the way I want it to (2D)

Products

The rotating spikes obstacle prefab is composed of 3 GameObjects: a circular base, a rectangular body and square spikes. The body and the spikes are put under the circle object in order to achieve the desired rotation. The rotation logic is put in a script which is attached to the parent object, whereas the knock back damage script is attached only to the spikes object which also has a 2D box collider component since this is the part of the object that the player can collide with.

The desired way of rotation - make the body and the spikes rotate around the base

If all 3 objects are put under one GameObject, the rotation would look like this which is unwanted behaviour

When in contact, the obstacle deals damage to the player

Configuring the rotation speed to be similar to the speed of the rotating spikes in Ori and the Blind Forest

Desired behaviour of the knockback - push the character away in a direction that depends on the corner that hit the character

As far as I managed to observe in Ori and the Blind Forest, a hit by an outside corner would push the player away from the obstacle, whereas a hit by an inside corner would drag the player inside the rotating obstacle where the player can stay without being hit.

For playtesting purposes, I turned off the damage dealing functionality.

The player can be knocked back by the obstacle

After some playtests, I discovered I had to tweak the knockback power because it pushed the player too far away in the air or on the ground.

Push the player away from the obstacle when hit by an outside corner

Drag the player inside the rotating obstacle when hit by an inside corner

Adding multiple rotating spikes obstacles with clockwise and counter-clockwise rotation directions

Game technology validation:

I did 3 iterations on the implementation of this mechanic and each was committed to our GitLab repository.

Iteration 1: Initial working implementation of the knockback functionality

In order to implement the knockback mechanic, I had to change the velocity of the player GameObject with Rigidbody.AddForce when it collides with the obstacle. I had some troubles doing that because I set the player velocity in the Update() method for the player movement which resets the velocity on every frame and omits the changes that AddForce did as mentioned in [2D] Can't AddForce to x but y works fine. and in Using AddForce for horizontal movement doesn't quite work the way I want it to (2D). In order to fix this, I decided to “pause” the movement for 1-2 seconds, apply the force and reset it back. This workaround solved my issue and also provided a logical explanation for the gameplay - the player gets hammered for 1-2 seconds after being hit by the spikes which totally makes sense.

This version of the code can be found on the following links:

PlayerMovement.cs - attached to the player GameObject; defines the knockback functionality

ElementalObstacle.cs - attached to the obstacle GameObject; triggers the knockback functionality

Iteration 2: Make the knockback functionality be triggered from the player instead from the obstacle

After I achieved the desired results, I showed my progress to Jack. He said that my knockback workaround is a good and not problematic approach since it is okay to do one thing in the Update method and then pause to do something else for a certain period of time. Furthermore, he gave me some nice advice about the current code structure - it is better to trigger the knockback functionality in the player rather than triggering it in the obstacle because if I want to create other obstacles with the same behaviour, it would be easier to handle the knockback in the player since the script will be already attached to the player instead of attaching the script with the knockback functionality to each obstacle GameObject.

This version of the code can be found on the following links:

PlayerMovement.cs - attached to the player GameObject; defines the knockback functionality

PlayerHealth.cs - attached to the player GameObject; triggers the knockback functionality

KnockBackDamage.cs - attached to the obstacle GameObject; empty script, only used to identify whether the obstacle GameObject has it

Iteration 3: Refactor Unity Events

Jack also advised me that it would be cleaner and more flexible to use Unity Events to handle the knockback functionality so I refactored my code like so.

This version of the code can be found on the following links:

Player.cs - attached to the player GameObject; defines the knockback Unity Event

PlayerMovement.cs - attached to the player GameObject; listens for the knockback Unity Event and defines the knockback functionality

PlayerHealth.cs - attached to the player GameObject; invokes the knockback Unity Event which triggers the knockback functionality

Game design validation:

My duo project teammate and I showed our current progress to Mina - our game design semester coach, who was satisfied with our progress so far. She told us to think about ways of informing the player what each thing in the game does so that they know what to expect while playing the game. This also affects the rotating spikes obstacle - how to inform the player that this obstacle would deal them damage?

The current version of the code and other resources can be found on our GitLab Repository.

Next

The next steps are to add spikes on the current square part of the obstacle in order to indicate to the player that this part of the obstacle is harmful and implement more obstacles.

#my own game#devlog#library#benchmark creation#community research#literature study#lab#usability testing#showroom#peer review#workshop#brainstorm#prototyping

1 note

·

View note

Text

Once upon a time…

I learned the skill of storytelling when studying theatre improv in 2006. I learned the power of its application in a multitude of contexts over the next nine years.

In the world of software development and new product creation we have a lot of good ideas, and we want to tell people, so we talk a lot. We explain, we pitch, we sell, we defend, we justify. This descriptive, detailed style of communication engages our logical brain, and (we hope) the logical brains of those we are talking to. But connections are not made through logic, they are made through emotion. In order to inspire and engage we need to connect on an emotional, heartfelt level with our colleagues, our customers, our audience.

Storytelling allows us to do this. Moving away from dry, technical, accurate renditions of our ideas, we can move into metaphor and dreamscapes, stirring up passion in our listeners, making them laugh, frown, question and challenge—taking them on a journey.

I’ve taught storytelling for setting vision, refactoring and eliminating waste, improving collaboration, discovering shared purpose, giving legal counsel, and various other contexts. Over the past year I’ve been facilitating storytelling sessions specifically to help people improve their presentation skills. It’s an area of continual discovery for me, and I’m seeking more opportunities to apply this learning, through offering public workshops.

The first of these workshops will be held at Agile Cymru, on 6th July. Details and registration at AgileCymru.uk.

Source: Once upon a time...

2 notes

·

View notes

Text

Why to upgrade to Angular 2

Introduction of Angular 2

Angular 2 is one of the most popular platforms which are a successor to Google Angular 1 framework. With its help, Angular JS developers can build complex applications in browsers and beyond. Angular 2 is not only the next or advanced version of Angular 1, it is fully redesigned and rewritten. Thus, the architecture of Angular 2 is completely different from Angular 1. This tutorial looks at the various aspects of Angular 2 framework which includes the basics of the framework, the setup of Angular and how to work with the various aspects of the framework. Unlike its predecessor, Angular 2 is a TypeScript-based, web application development platform that makes the switch from MVC (model-view-controller) to a components-based approach to web development.

Benefits of Angular 2

Mobile Support: Though the Ionic framework has always worked well with Angular, the platform offers better mobile support with the version 2. The 1.x version compromised heavily on user experience and application performance in general. With its built-in mobile-orientation, Angular 2.0 is more geared for cross-platform mobile application development.

Faster and Modern Browsers: Faster and modern browsers are demanded by developers today. Developers want Angular 2 stress more on browsers like IE10/11, Chrome, Firefox, Opera & Safari on the desktop and Chrome on Android, Windows Phone 8+, iOS6 & Firefox mobile. Developers believe that this would allow AngularJS codebase to be short and compact and AngularJS would support the latest and greatest features without worrying about backward compatibility and polyfills. This would simplify the AngularJS app development process.

High Performance: Angular2 uses superset of JavaScript which is highly optimized which makes the app and web to load faster. Angular2 loads quickly with component router. It helps in automatic code splitting so user only load code required to vendor the view. Many modules are removed from angular’s core, resulting in better performance means you will be able to pick and choose the part you need.

Changing World of Web: The web has changed noticeably and no doubt it will continue changing in the future as well. The current version of AngularJS cannot work with the new web components like custom elements, HTML imports; shadow DOM etc. which allow developers to create fully encapsulated custom elements. Developers anticipate with all hopes that Angular 2 must fully support all web components.

Component Based Development: A component is an independent software unit that can be composed with the other components to create a software system. Component based web development is pretty much future of web development. Angular2 is focused on component base development. Angularjs require entire stack to be written using angular but angular2 emphasis separation of components /allow segmentation within the app to be written independently. Developers can concentrate on business logic only. These things are not just features but the requirement of any thick-client web framework.

Why to upgrade to Angular 2 ?

Angular 2 is entirely component-based and even the final application is a component of the platform. Components and directives have replaced controllers and scopes. Even the specification for directives has been simplified and will probably further improve. They are the communication channels for components and run in the browser with elements and events. Angular 2 components have their own injector so you no longer have to work with a single injector for the entire application. With an improved dependency injection model, there are more opportunities for component or object-based work.

Optimized for Mobile- Angular 2 has been carefully optimized for boasting improved memory efficiency, enhanced mobile performance, and fewer CPU cycles. It’s as clear of an indication as any that Angular 2 is going to serve as a mobile-first framework in order to encourage the mobile app development process. This version also supports sophisticated touch and gesture events across modern tablet and mobile devices.

Typescript Support- Angular 2 uses Typescript and variety of concepts common in back-end. That is why it is more back-end developer-friendly. It's worth noting that dependency injection container makes use of metadata generated by Typescript. Another important facet is IDE integration is that it makes easier to scale large projects through refactoring your whole code base at the same time. If you are interested in Typescript, the docs are a great place to begin with. Moreover, Typescript usage improves developer experience thanks to good support from text editors and IDE's. With libraries like React already using Typescript, web/mobile app developers can implement the library in their Angular 2 project seamlessly.

Modular Development- Angular 1 created a fair share of headaches when it came to loading modules or deciding between Require.js and Web Pack. Fortunately, these decisions are removed entirely from Angular 2 as the new release shies away from ineffective modules to make room for performance improvements. Angular 2 also integrates System.js, a universal dynamic modular loader, which provides an environment for loading ES6, Common, and AMD modules.

$scope Out, Components in- Angular 2 gets rid of controllers and $scope. You may wonder how you’re going to stitch your homepage together! Well, don’t worry too much − Angular 2 introduces Components as an easier way to build complex web apps and pages. Angular 2 utilizes directives (DOMs) and components (templates). In simple terms, you can build individual component classes that act as isolated parts of your pages. Components then are highly functional and customizable directives that can be configured to build and specify classes, selectors, and views for companion templates. Angular 2 components make it possible to write code that won’t interfere with other pieces of code within the component itself.

Native Mobile Development- The best part about Angular 2 is “it’s more framework-oriented”. This means the code you write for mobile/tablet devices will need to be converted using a framework like Ionic or Native Script. One single skillset and code base can be used to scale and build large architectures of code and with the integration of a framework (like, you guessed it, NativeScript or Ionic); you get a plethora of room to be flexible with the way your native applications function.

Code Syntax Changes- One more notable feature of Angular 2 is that it adds more than a few bells and whistles to the syntax usage. This comprises (but is not limited to) improving data-binding with properties inputs, changing the way routing works, changing an appearance of directives syntax, and, finally, improving the way local variables that are being used widely. One more notable feature of Angular 2 is that it adds more than a few bells and whistles to the syntax usage. This comprises improving data-binding with properties inputs, changing the way routing works, changing an appearance of directives syntax, and, finally, improving the way local variables that are being used widely.

Comparison between Angular 1 and Angular 2

Angular 1

In order to create service use provider, factory, service, constant and value

In order to automatically detection changed use $scope, $watch, $scope, $apply, $timeout.

Syntax event for example ng-click

Syntax properties for example ng-hid, ng-checked

It use Filter

Angular 2

In order to create service use only class

In order to automatically detection changed use Zone.js.

Syntax event for example (click) or (dbl-click)

Syntax properties for example [class: hidden] [checked]

It use pipe

How to migrate Angular 1 to Angular 2

It is a very simple and easy task to upgrade Angular 1 to Angular 2, but this has to be done only if the applications demand it. In this article, I will suggest a number of ways which could be taken into consideration in order to migrate existing applications from Angular 1.x to 2. Therefore, depending on the organizational need, the appropriate migration approach should be used.

Upgrading to Angular 2 is quite an easy step to take, but one that should be made carefully. There are two major ways to feel the taste of Angular 2 in your project. Which you use depends on whatever requirements your project has. The angular team have provided two paths to this:

ngForward

ngForward is not a real upgrade framework for Angular 2 but instead we can use it to create Angular 1 apps that look like Angular 2.

If you still feel uncomfortable upgrading your existing application to Angular 2, you can fall to ngForward to feel the taste and sweetness of the good tidings Angular 2 brings but still remain in your comfort zone.

You can either re-write your angular app gradually to look as if it was written in Angular 2 or add features in an Angular 2 manner leaving the existing project untouched. Another benefit that comes with this is that it prepares you and your team for the future even when you choose to hold onto the past for a little bit longer. I will guide you through a basic setup to use ngForward but in order to be on track, have a look at the Quick Start for Angular 2.

If you took time to review the Quick Start as I suggested, you won't be lost with the configuration. SystemJS is used to load the Angular application after it has been bootstrapped as we will soon see. Finally in our app.ts, we can code like its Angular 2.

ngUpgrade

Writing an Angular 1.x app that looks like Angular 2 is not good enough. We need the real stuff. The challenge then becomes that with a large existing Angular 1.x project, it becomes really difficult to re-write our entire app to Angular 2, and even using ngForward would not be ideal. This is where ngUpgrade comes to our aid. ngUpgrade is the real stuff.

Unlike ngForward, ngUpgrade was covered clearly in the Angular 2 docs. If you fall in the category of developers that will take this path, then spare few minutes and digest this.

We'll also be writing more articles on upgrading to Angular 2 and we'll focus more on ngUpgrade in a future article.

6 notes

·

View notes

Text

2018 May Update

Wow! End of May already?

For the past two months, we've been focusing on really finalizing the levels down to their very last details - and in a linear order starting from when Gale first wakes up. It's easy to fall into the trap of thinking you're further ahead than you really are. I thought of the levels as existing in a disconnected state - and by simply connecting them together - Viola! Done! Finished!

Not so.

In finally stitching the levels together, I've discovered there were still many in-between areas I hadn't yet accounted for.

(Upon leaving the first town, the player is free to travel anywhere they can reach)

(One example secret unmarked area accessible from the world map houses a Perro. There's a side quest to recruit Perros for your vacant coop back home)

And of the levels themselves, they're all receiving heavy rounds of polish. Here are some select areas from a build dating back last year vs. today's version:

(Forest trees and background received a major overhaul)

(since the game's art style doesn't rely on dark pixel outlines, every level requires a unique lighting configuration to ensure good visibility)

We're also not shying away from heavy refactoring and cuts to ensure smoother and more logical level flow.

(The dark Tomb section of Anuri Temple is now blocked off until the player obtains the Bombs)

Not even bosses are sacrosanct. The first Toad Boss has been a difficult point for me. He's challenging and his weak point is hard to telegraph. I've yet to see anyone discover his true vulnerability on their own in a blind playthrough. Unlike the demo presented long ago, the player will not have bombs this early on. And so, I've decided to push the Toad Boss deeper into the dungeon as a secret boss encounter. In his place, a special variant of the Slargummy (big slimes) now occupies the first boss role.

NEW BOSS BATTLE MUSIC

On the topic of bosses, I've requested of Will a new boss battle score to breathe some freshness to the boss fights. And Will has delivered! (Listen to it here)

If you're a fan of the old boss battle song, rest assured that's in the game too as a hidden track.

(Faster Neutral attacks. This should display more smoothly at 60fps)

COMBAT