#power bi to fabric migration

PreludeSys helps businesses navigate complex transitions like moving from Power BI Premium to Microsoft Fabric. Our tailored Microsoft Fabric services ensure a seamless migration process, providing your organization with the full benefits of this powerful data platform. Whether you need assistance with workspace reassignment, license management, or understanding how to maximize the capabilities of Microsoft Fabric, we’ve got you covered.

To learn more, visit https://preludesys.com/power-bi-premium-to-fabric-transition/

#transition power bi to fabric#power bi to fabric migration#power bi premium to microsoft fabric migration#microsoft fabric services

Accelerating Digital Transformation with Acuvate’s MVP Solutions

A Minimum Viable Product (MVP) is a basic version of a product designed to test its concept with early adopters, gather feedback, and validate market demand before full-scale development. Implementing an MVP is vital for startups: statistics indicate that 90% of startups fail due to a lack of understanding of how to use an MVP. An MVP helps mitigate risk, shorten time to market, and save costs by focusing on essential features and testing the product idea before fully committing to its development.

Verifying Product Concepts: Validates product ideas and confirms market demand before full development.

Gathering User Feedback: Collects insights from real users to improve future iterations.

Establishing Product-Market Fit: Determines if the product resonates with the target market.

Faster Time-to-Market: Enables quicker product launch with fewer features.

Risk Mitigation: Limits risk by testing the product with real users before large investments.

Prioritizing Features: Provides insights that help prioritize valuable features for future development.

Here are Acuvate’s tailored MVP models for diverse business needs:

Data HealthCheck MVP (Minimum Viable Product)

Many organizations face challenges with fragmented data, outdated governance, and inefficient pipelines, leading to delays and missed opportunities. Acuvate’s expert assessment offers:

Detailed analysis of your current data architecture and interfaces.

A clear, actionable roadmap for a future-state ecosystem.

A comprehensive end-to-end data strategy for collection, manipulation, storage, and visualization.

Advanced data governance with contextualized insights.

Identification of AI/ML/MV/Gen-AI integration opportunities and cloud cost optimization.

Tailored MVP proposals for immediate impact.

Quick wins and a solid foundation for long-term success with Acuvate’s Data HealthCheck.

Microsoft Fabric Deployment MVP

Is your organization facing challenges with data silos and slow decision-making? Don’t let outdated infrastructure hinder your digital progress.

Acuvate’s Microsoft Fabric Deployment MVP offers rapid transformation with:

Expert implementation of Microsoft Fabric Data and AI Platform, tailored to your scale and security needs using our AcuWeave data migration tool.

Full Microsoft Fabric setup, including Azure sizing, datacenter configuration, and security.

Smooth data migration from existing databases (MS Synapse, SQL Server, Oracle) to Fabric OneLake via AcuWeave.

Strong data governance (based on Microsoft Purview) with role-based access and robust security.

Two custom Power BI dashboards to turn your data into actionable insights.

Tableau to Power BI Migration MVP

Are rising Tableau costs and limited integration holding back your business intelligence? Don’t let legacy tools limit your data potential.

With Acuvate’s Tableau to Power BI Migration MVP, you’ll get:

Smooth migration of up to three Tableau dashboards to Power BI, preserving key business insights using our AcuWeave tool.

Full Microsoft Fabric setup with optimized Azure configuration and datacenter placement for maximum performance.

Optional data migration to Fabric OneLake for seamless, unified data management.

Digital Twin Implementation MVP

Acuvate’s Digital Twin service, integrating AcuPrism and KDI Kognitwin, creates a unified, real-time digital representation of your facility for smarter decisions and operational excellence. Here’s what we offer:

Implement KDI Kognitwin SaaS Integrated Digital Twin MVP.

Overcome disconnected systems, outdated workflows, and siloed data with tailored integration.

Set up AcuPrism (Databricks or MS Fabric) in your preferred cloud environment.

Seamlessly integrate SAP ERP and Aveva PI data sources.

Establish strong data governance frameworks.

Incorporate 3D laser-scanned models of your facility into KDI Kognitwin (assuming you provide the scan).

Enable real-time data exchange and visibility by linking AcuPrism and KDI Kognitwin.

Visualize SAP ERP and Aveva PI data in an interactive digital twin environment.

MVP for Oil & Gas Production Optimization

Acuvate’s MVP offering integrates AcuPrism and AI-driven dashboards to optimize production in the Oil & Gas industry by improving visibility and streamlining operations. Key features include:

Deploy AcuPrism Enterprise Data Platform on Databricks or MS Fabric in your preferred cloud (Azure, AWS, GCP).

Integrate two key data sources for real-time or preloaded insights.

Apply Acuvate’s proven data governance framework.

Create two AI-powered MS Power BI dashboards focused on production optimization.

Manufacturing OEE Optimization MVP

Acuvate’s OEE Optimization MVP leverages AcuPrism and AI-powered dashboards to boost manufacturing efficiency, reduce downtime, and optimize asset performance. Key features include:

Deploy AcuPrism on Databricks or MS Fabric in your chosen cloud (Azure, AWS, GCP).

Integrate and analyze two key data sources (real-time or preloaded).

Implement data governance to ensure accuracy.

Gain actionable insights through two AI-driven MS Power BI dashboards for OEE monitoring.

Achieve Transformative Results with Acuvate’s MVP Solutions for Business Optimization

Acuvate’s MVP solutions provide businesses with rapid, scalable prototypes that test key concepts, reduce risks, and deliver quick results. By leveraging AI, data governance, and cloud platforms, we help optimize operations and streamline digital transformation. Our approach ensures you gain valuable insights and set the foundation for long-term success.

Conclusion

Scaling your MVP into a fully deployed solution is easy with Acuvate’s expertise and customer-focused approach. We help you optimize data governance, integrate AI, and enhance operational efficiencies, turning your digital transformation vision into reality.

Accelerate Growth with Acuvate’s Ready-to-Deploy MVPs

Get in Touch with Acuvate Today!

Are you ready to transform your MVP into a powerful, scalable solution? Contact Acuvate to discover how we can support your journey from MVP to full-scale implementation. Let’s work together to drive innovation, optimize performance, and accelerate your success.

#MVP#MinimumViableProduct#BusinessOptimization#DigitalTransformation#AI#CloudSolutions#DataGovernance#MicrosoftFabric#DataStrategy#PowerBI#DigitalTwin#AIIntegration#DataMigration#StartupGrowth#TechSolutions#ManufacturingOptimization#OilAndGasTech#BusinessIntelligence#AgileDevelopment#TechInnovation

Microsoft Fabric data warehouse

What Is Microsoft Fabric and Why Should You Care?

A unified Software as a Service (SaaS) offering that provides an end-to-end analytics platform

Brings a full set of tools together; Microsoft Fabric OneLake supports seamless integration, enabling collaboration on this unified data analytics platform

Scalable Analytics

Accessibility from anywhere with an internet connection

Streamlines collaboration among data professionals

Empowers a low-code/no-code approach

Components of Microsoft Fabric

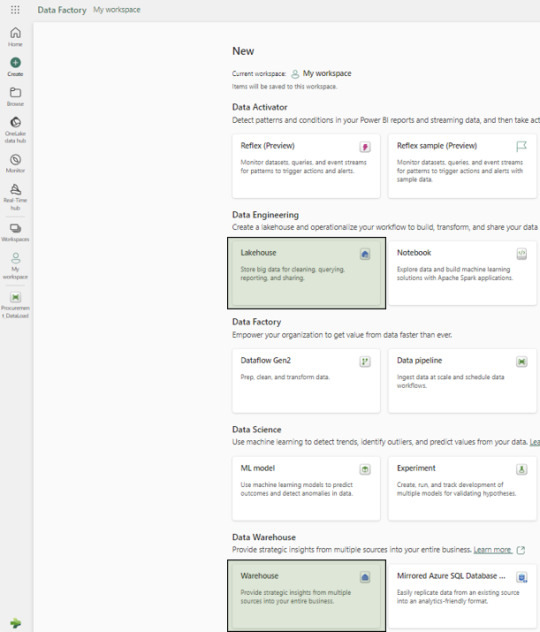

Fabric provides comprehensive data analytics solutions, encompassing services for data movement and transformation, analysis and actions, and deriving insights and patterns through machine learning. Although Microsoft Fabric includes several components, this article focuses on three primary experiences: Data Factory, Data Warehouse, and Power BI.

Lakehouse vs. Warehouse: Which Data Storage Solution is Right for You?

In simple terms, the underlying storage format in both Lakehouses and Warehouses is the Delta format, which stores data as Parquet files together with a transaction log.

Usage and Format Support

A Lakehouse combines the capabilities of a data lake and a data warehouse, supporting unstructured, semi-structured, and structured formats. In contrast, a Warehouse supports only structured formats.

When your organization needs to process big data characterized by high volume, velocity, and variety, and you need to load and transform data with Spark engines via notebooks, a Lakehouse is recommended. A Lakehouse can process both structured tables and unstructured or semi-structured files, offering managed and external table options. Microsoft Fabric OneLake serves as the foundational layer for storing structured and unstructured data. Notebooks can be used for both READ and WRITE operations in a Lakehouse. However, you cannot connect to a Lakehouse directly with an SQL client; you must go through its SQL endpoint.

On the other hand, a Warehouse excels at processing and storing structured data, using stored procedures, tables, and views. Processing data in a Warehouse requires only T-SQL knowledge. It functions much like a typical RDBMS database but has a different internal storage architecture: each table’s data is stored in the Delta format within OneLake. Users can access Warehouse data directly with any SQL client or the built-in graphical SQL editor, performing READ and WRITE operations with T-SQL and its elements, such as stored procedures and views. Notebooks can also connect to the Warehouse, but only for READ operations.

An SQL endpoint is an interface that lets other programs communicate with a database or storage system using SQL. Through this endpoint, you can send queries to retrieve information, such as searching for specific data, or to make changes to it. It is a bit like using a search engine to find things on the internet, but for the data stored in Fabric. In Fabric, SQL endpoints are commonly used to retrieve data, query it, and make changes to it.

Choosing Between Lakehouse and Warehouse

The decision to use a Lakehouse or Warehouse depends on several factors:

Migrating from a Traditional Data Warehouse: If your organization does not have big data processing requirements, a Warehouse is suitable.

Migrating from a Mixed Big Data and Traditional RDBMS System: If your existing solution includes both a big data platform and traditional RDBMS systems with structured data, using both a Lakehouse and a Warehouse is ideal. Perform big data operations with notebooks connected to the Lakehouse and RDBMS operations with T-SQL connected to the Warehouse.

Note: In both scenarios, once the data resides in either a Lakehouse or a Warehouse, Power BI can connect to both using SQL endpoints.

A Glimpse into the Data Factory Experience in Microsoft Fabric

In the Data Factory experience, we focus primarily on two items: Data Pipelines and Dataflows.

Data Pipelines

Used to orchestrate different activities for extracting, loading, and transforming data.

Ideal for building reusable code that can be utilized across other modules.

Enables activity-level monitoring.

What can Data Pipelines be compared to?

Data Pipelines are similar to, but not the same as:

Informatica -> Workflows

ODI -> Packages

Dataflows

Utilized when a GUI tool with Power Query UI experience is required for building Extract, Transform, and Load (ETL) logic.

Employed when individual selection of source and destination components is necessary, along with the inclusion of various transformation logic for each table.

What can Dataflows be compared to?

Dataflows are similar to, but not the same as:

Informatica -> Mappings

ODI -> Mappings / Interfaces

Are You Ready to Migrate Your Data Warehouse to Microsoft Fabric?

Here is our solution for implementing the Medallion Architecture with Fabric Data Warehouse:

Creation of New Workspace

We recommend creating separate workspaces for Semantic Models, Reports, and Data Pipelines as a best practice.

Creation of Warehouse and Lakehouse

Follow the on-screen instructions to set up a new Lakehouse and a Warehouse.

Configuration Setups

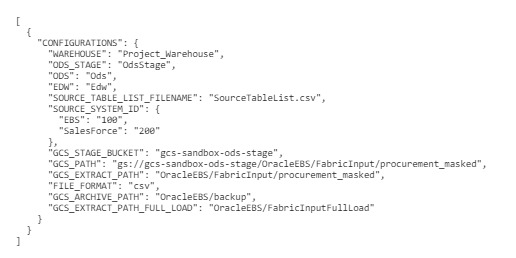

Create a configurations.json file containing parameters for data pipeline activities:

Source schema, buckets, and path

Destination warehouse name

Names of the warehouse layers (bronze, silver, and gold): OdsStage, Ods, and Edw

List of source tables/files in a specific format

Source system IDs for different sources

Below is a screenshot of the configuration file (config_variables.json):

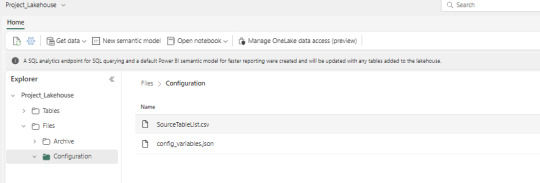

File Placement

Place the configurations.json and SourceTableList.csv files in the Fabric Lakehouse.

SourceTableList contains columns such as SourceSystem, SourceDatasetId, TableName, PrimaryKey, UpdateKey, CDCColumnName, SoftDeleteColumn, ArchiveDate, and ArchiveKey.
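To make the Lookup-plus-ForEach pattern concrete, here is a minimal Python sketch of one way to combine the two files into a parameter set per source table. It is not Fabric code. The JSON keys (`source_schema`, `destination_warehouse`, `layers`), the sample rows and the function `build_table_parameters` are all hypothetical. Only the column names come from the SourceTableList description above.

```python
import csv
import io
import json

# Hypothetical configurations.json content. The key names are illustrative
# assumptions; the real file's schema is only shown in the screenshot.
CONFIG_JSON = """
{
  "source_schema": "erp",
  "destination_warehouse": "SalesWarehouse",
  "layers": {"bronze": "OdsStage", "silver": "Ods", "gold": "Edw"}
}
"""

# Hypothetical SourceTableList.csv rows, using the column names from the article.
SOURCE_TABLE_LIST_CSV = """SourceSystem,SourceDatasetId,TableName,PrimaryKey,UpdateKey,CDCColumnName,SoftDeleteColumn,ArchiveDate,ArchiveKey
ERP,1,Customers,CustomerId,CustomerId,LastModified,IsDeleted,,
ERP,2,Orders,OrderId,OrderId,LastModified,IsDeleted,,
"""


def build_table_parameters(config_text: str, table_list_text: str):
    """Combine the global config with each SourceTableList row.

    Returns one parameter dict per source table, roughly what a Lookup
    activity (reading both files) would hand to a ForEach activity.
    """
    config = json.loads(config_text)
    layers = config["layers"]
    rows = csv.DictReader(io.StringIO(table_list_text.strip()))
    params = []
    for row in rows:
        table = row["TableName"]
        params.append({
            "warehouse": config["destination_warehouse"],
            "source_table": f'{config["source_schema"]}.{table}',
            "stage_table": f'{layers["bronze"]}.{table}',   # Bronze: OdsStage
            "target_table": f'{layers["silver"]}.{table}',  # Silver: Ods
            "primary_key": row["PrimaryKey"],
            "cdc_column": row["CDCColumnName"],
        })
    return params


for p in build_table_parameters(CONFIG_JSON, SOURCE_TABLE_LIST_CSV):
    print(p["source_table"], "->", p["stage_table"], "->", p["target_table"])
```

Keeping per-table details in the CSV and global settings in the JSON means a new table can be onboarded by adding a row, without touching the pipeline.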

Data Pipeline Creation

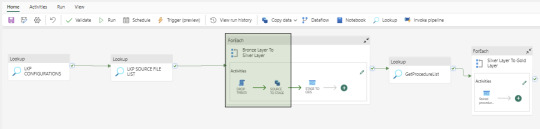

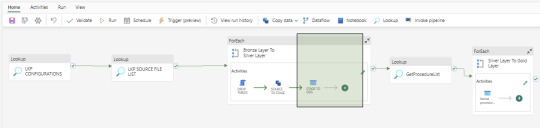

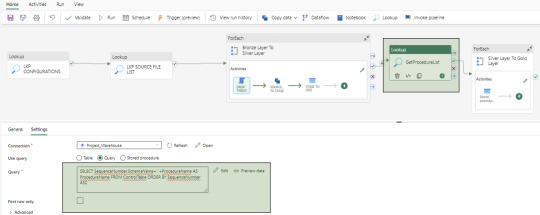

Create a data pipeline to orchestrate the various activities for data extraction, loading, and transformation. The screenshots above show the Data Pipeline and its activities: Lookup, ForEach, Script, Copy Data, and Stored Procedure.

Bronze Layer Loading

Develop a dynamic activity to load data into the Bronze Layer (OdsStage schema in Warehouse). This layer truncates and reloads data each time.

We utilize two activities in this layer: Script Activity and Copy Data Activity. Both activities receive parameterized inputs from the Configuration file and SourceTableList file. The Script activity drops the staging table, and the Copy Data activity creates and loads data into the OdsStage table. These activities are reusable across modules and feature powerful capabilities for fast data loading.
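The truncate-and-reload behaviour of the Bronze layer can be illustrated with a small Python sketch. SQLite stands in for the Fabric Warehouse, so this illustrates the pattern rather than the pipeline itself. SQLite has no `OdsStage` schema, so the hypothetical table is named `OdsStage_Customers`. The helper `reload_stage_table` is also hypothetical. Its drop step mirrors the Script activity, and its create-and-load steps mirror the Copy Data activity.

```python
import sqlite3


def reload_stage_table(conn, table, columns, rows):
    """Drop, recreate, and bulk-load a staging table (truncate-and-reload).

    DROP mirrors the Script activity; CREATE + INSERT mirror the Copy Data
    activity creating and loading the OdsStage table.
    """
    col_defs = ", ".join(f'"{c}"' for c in columns)
    conn.execute(f'DROP TABLE IF EXISTS "{table}"')
    conn.execute(f'CREATE TABLE "{table}" ({col_defs})')
    placeholders = ", ".join("?" for _ in columns)
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
reload_stage_table(conn, "OdsStage_Customers", ["CustomerId", "Name"],
                   [(1, "Contoso"), (2, "Fabrikam")])
# A second run replaces the previous contents instead of appending to them.
reload_stage_table(conn, "OdsStage_Customers", ["CustomerId", "Name"],
                   [(3, "Northwind")])
print(conn.execute('SELECT COUNT(*) FROM "OdsStage_Customers"').fetchone()[0])
```

Running the loader twice leaves only the second batch in the table. That property is what makes the Bronze layer safe to rerun.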

Silver Layer Loading

Establish a dynamic activity to UPSERT data into the Silver layer (Ods schema in the Warehouse) using a Stored Procedure activity. The procedure takes parameterized inputs from the Configuration file and SourceTableList file and handles both UPDATE and INSERT operations, so it is reusable across tables. At the time of writing, Fabric Warehouse does not support MERGE statements, although this feature may be added in the future.
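Without MERGE, the UPSERT has to be split into an UPDATE followed by an INSERT. The sketch below shows that two-step pattern in Python, with SQLite standing in for the Warehouse. The table names and the `upsert_without_merge` helper are hypothetical. The sketch assumes the stage table holds at most one row per primary key. A T-SQL stored procedure in Fabric would follow the same logic, but it is not reproduced here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Ods_Customers (CustomerId INTEGER PRIMARY KEY, Name TEXT);
    CREATE TABLE OdsStage_Customers (CustomerId INTEGER, Name TEXT);
    INSERT INTO Ods_Customers VALUES (1, 'Contoso'), (2, 'Fabrikam');
    INSERT INTO OdsStage_Customers VALUES (2, 'Fabrikam Ltd'), (3, 'Northwind');
""")


def upsert_without_merge(conn):
    """Two-step UPSERT: update existing keys, then insert new keys."""
    # Step 1: UPDATE target rows whose primary key also exists in the stage table.
    conn.execute("""
        UPDATE Ods_Customers
        SET Name = (SELECT s.Name FROM OdsStage_Customers s
                    WHERE s.CustomerId = Ods_Customers.CustomerId)
        WHERE CustomerId IN (SELECT CustomerId FROM OdsStage_Customers)
    """)
    # Step 2: INSERT stage rows whose primary key is not yet in the target.
    conn.execute("""
        INSERT INTO Ods_Customers (CustomerId, Name)
        SELECT s.CustomerId, s.Name FROM OdsStage_Customers s
        WHERE NOT EXISTS (SELECT 1 FROM Ods_Customers t
                          WHERE t.CustomerId = s.CustomerId)
    """)
    conn.commit()


upsert_without_merge(conn)
print(conn.execute("SELECT * FROM Ods_Customers ORDER BY CustomerId").fetchall())
# [(1, 'Contoso'), (2, 'Fabrikam Ltd'), (3, 'Northwind')]
```

Running the UPDATE before the INSERT means newly inserted rows are not needlessly updated in the same pass.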

Control Table Creation

Create a control table in the Warehouse with columns for Sequence Numbers and Procedure Names to manage dependencies between Dimension, Fact, and Aggregate tables. Then fetch these values using a Lookup activity.
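One way to picture how the control table drives the Gold-layer load is to group procedures by sequence number and run the groups in ascending order. The Python sketch below makes a hypothetical assumption: procedures that share a sequence number do not depend on each other. The procedure names and the `execution_batches` helper are illustrative only.

```python
from itertools import groupby

# Hypothetical control table rows: (SequenceNumber, ProcedureName).
CONTROL_TABLE = [
    (2, "Edw.usp_Load_FactSales"),
    (1, "Edw.usp_Load_DimCustomer"),
    (3, "Edw.usp_Load_AggSalesMonthly"),
    (1, "Edw.usp_Load_DimProduct"),
]


def execution_batches(control_rows):
    """Group procedure names by sequence number, lowest sequence first.

    Each batch must finish before the next one starts, so dimensions load
    before facts, and facts before aggregates.
    """
    ordered = sorted(control_rows)
    return [(seq, [name for _, name in group])
            for seq, group in groupby(ordered, key=lambda row: row[0])]


for seq, batch in execution_batches(CONTROL_TABLE):
    print(seq, batch)
```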

Gold Layer Loading

To load data into the Gold layer (Edw schema in the Warehouse), we develop individual stored procedures to UPSERT (UPDATE and INSERT) data for each dimension, fact, and aggregate table. While a Dataflow can also be used for this task, we prefer stored procedures because they handle complex business logic better.

Dashboards and Reporting

Fabric includes the Power BI application, which can connect to the SQL endpoints of both the Lakehouse and Warehouse. These SQL endpoints allow for the creation of semantic models, which are then used to develop reports and applications. In our use case, the semantic models are built from the Gold layer (Edw schema in Warehouse) tables.

Upcoming Topics Preview

In upcoming articles, we will cover topics such as notebooks, dataflows, lakehouses, security, and other related subjects.

Conclusion

Microsoft Fabric Data Warehouse is a powerful, user-friendly data platform, offering an extensive array of tools for data ingestion, storage, transformation, and analysis. Whether you’re a novice or a seasoned data analyst, Fabric helps you optimize your workflow and harness the full potential of your data.

We specialize in aiding organizations in meticulously planning and flawlessly executing data projects, ensuring utmost precision and efficiency.

Curious to hear more about this article?

Contact us at [email protected] or book time with me to organize a 100% free, no-obligation call.

Follow us on LinkedIn for more interesting updates!

DataPlatr Inc. specializes in data engineering & analytics with pre-built data models for Enterprise Applications like SAP, Oracle EBS, Workday, Salesforce to empower businesses to unlock the full potential of their data. Our pre-built enterprise data engineering models are designed to expedite the development of data pipelines, data transformation, and integration, saving you time and resources.

Our team of experienced data engineers, scientists, and analysts uses cutting-edge data infrastructure to turn data into valuable insights and helps enterprise clients optimize their Sales, Marketing, Operations, Financials, Supply Chain, Human Capital, and Customer Experience.

Microsoft Power BI users warned over pace of Fabric migration

http://securitytc.com/T79q1K

OneLake: Simplifying Your Data Lake with Microsoft Fabric

Microsoft OneLake or Azure OneLake

We will first examine the barriers that keep people from finding, accessing, and using data to innovate and improve decision-making. We will then demonstrate the potential of OneLake, Microsoft Fabric’s single, SaaS, multi-cloud data lake, which is designed to connect to any data across the organization and make it available to all users in a well-organized, user-friendly hub. With the OneLake data hub, your data teams can manage your data, endorse high-quality data to encourage its use, and control access. Users can easily find, explore, and use the data items they have access to, whether in data tools like Fabric or in apps such as Teams and Excel.

OneLake

What is Microsoft OneLake?

The data lake is the foundation on which all Fabric services are built. In Microsoft Fabric, this lake is called OneLake. It is built into the Fabric service and provides a single, accessible location to store all organizational data used across the Fabric experiences.

OneLake is built on ADLS Gen2. It provides tenant-wide data storage and a unified SaaS experience for both professional and citizen developers. As a SaaS offering, OneLake simplifies the user experience by removing the need for users to understand infrastructure concepts such as regions, resource groups, Azure Resource Manager, RBAC (Role-Based Access Control), or redundancy. Users do not even need an Azure account to use it.

Today, individual developers provision and configure their own isolated storage accounts, creating the pervasive, disorganized data silos common in many organizations. OneLake solves this problem by offering all developers a single, unified storage solution where policy and security settings are enforced consistently and centrally, and where data sharing and discovery are simple.

Beyond making your data available to the people who need it, you must give them strong analytics tools that can scale with the demands of the business. This is where Microsoft Fabric really excels. Data teams can use Fabric as a single product with a unified architecture and experience that offers all the features analysts need to extract insights from data and deliver them to business users.

With the necessary tools in place, data scientists, data engineers, data analysts, business users, and data stewards can all feel at home in the analytics process. Because Fabric is delivered as a Software as a Service (SaaS) platform, customers can sign up in seconds and start getting substantial business value within minutes. Everything is integrated and optimized out of the box.

With your data centralized and your data teams equipped to find insights faster than before, the next stage is getting those insights into the hands of everyone in your business. The webinar shows how you can integrate reports and insights from Power BI into third-party applications such as Salesforce and SAP, as well as Microsoft products such as Dynamics 365, Microsoft 365, and Power Platform.

Azure Fabric

Microsoft Fabric is an all-in-one analytics solution for businesses that covers data movement, data science, real-time analytics, and business intelligence. It provides data lake, data engineering, and data integration services in one place. With Fabric, you don’t have to combine separate services from multiple vendors. Instead, you get a fully integrated, comprehensive, user-friendly solution that streamlines your analytics requirements.

Microsoft Fabric is an implementation of data mesh architecture that helps businesses and people to transform massive and complicated data stores into useful workloads and analytics.

Promoting adoption and fostering a data culture takes more than deploying technology features. Technology can help an organization maximize its impact, but building a positive data culture requires considering people, processes, and technology together.

The right tools have always sparked change, from the development of steam power to the introduction of smartphones, which put the world’s information at people’s fingertips. Now we are beginning to see the possibilities of the next major change: the AI era. Along with other leaders, we are seeing its influence on individuals, whole teams, and every industry. It is one of the most exciting transformations of this generation, spanning everything from voice analytics and content creation to corporate chat for improved knowledge mining, and data analysis for deeper insights and broader data accessibility.

Consider PricewaterhouseCoopers (PwC), a longtime technology innovator and a leader in the professional services industry. PwC is using generative AI to gather, process, and evaluate data more quickly, both to better serve its staff and to give clients better audit experiences.

Use Fabric to connect your data

PwC is not alone. Businesses are increasingly using AI to transform their data cultures and achieve better business results. Building this culture has traditionally required the following essential components:

Logically arranging your data into a mesh to facilitate users’ ability to find, use, and improve the best accessible data.

Building a smooth analytics engine that can satisfy the company’s need for real-time insight discovery.

Incorporating such insights into the daily apps that your employees use to enable data-driven decision-making.

While these stages remain essential, you can now use generative AI to speed up the process of creating a data-rich culture by increasing your data teams’ productivity and increasing everyone’s access to analytics tools. Microsoft can assist you at every stage of this process, as she explains in her webinar, Infusing AI into your Data Culture: A Guide for Data Leaders.

Read more on Govindhtech.com

#fabric#microsoftfabric#azure#onelake#govindhtech#microsoft365#microsoftazure#news#technews#technology#technologytrends#technologynews

【 🌌 MYTHOPOEIA V. 】

TLDR: A lore/world-building headcanon that focuses on the chronology (in this case, Epochs, or definitive eras) of the universe of Nocturne’s canon. It also includes some vague information regarding the mythology of divinity and important figures.

An era is defined by the most significant factor of its time. While planets and countries may have their own eras, defined by the reigning monarch or a particular age of change, the Bright Star System, as a whole, follows the timeline of Epochs, which denote significance of a grander scale. As of Nocturne’s position in the chronology, we are in the Sixth Epoch, which would be known by her people’s descendants as the Age of Anarchy. In-universe scholars will argue about the true beginning of the Sixth Epoch, as they argued about the Fifth before it, and the Fourth, and so-on; it is the Epoch’s nature to be debated, discussed, analysed and re-interpreted to fit whatever narrative is best to be served. Epochs are not limited by a particular stretch of time—there is no mandatory “limit” of days, months, years or centuries that permit a new Epoch being determined. Rather, it is determined by a time of significant change that alters how the denizens of Bright Star understand or adapt to their environment. For example, while the Genesis Migration was a significant cross-system event, it did not, on its own, cause enough of a cosmic upset to earn an Epoch-level importance to begin an era. Rather, it was but a mere instrument in the grander scheme of the Age of Champions, the Fifth Epoch.

This headcanon exists to give a context towards the chronology and a greater understanding of the world Nocturne is a mere part of. There will be references towards the in-universe mythology and other significant events that took place far beyond our hero’s birth, but there will be no in-depth description of those events, as I want to keep everything that could reveal too much—or is unnecessary in understanding Nocturne—under wraps. However, hopefully there will be enough information to provide a better grounding of the world Nocturne lives in, particularly if you are interested in combining universes or developing deeper threads with her character.

Despite the fact Nocturne exists in the Sixth Epoch, truthfully there are Seven; the first of all Epochs is known by scholars as the “Zero Epoch”, a time before time, a space before space, where the original Primordial first willed itself into existence. Here is where Essences, the foundation of all life, magic and matter in the Essential Universe, first came into being. It would not be until the First Epoch that actual physical space began to take form, as the Ancients—Gods comparable to the Titans of Greco-Roman mythology, who were more a physical embodiment of the things they ruled over and interpreted to be “carriers” of the Primordial’s divine will where it could not directly enact on its wishes—came into being. These Ancients are also comparable to the incomprehensible deities of the Cthulhu mythos, with titanic, unbearable bodies and minds so alien to us that they evade description or empathy. They are more like machinations of cosmic law, unkillable and undestroyable, for on their shoulders rests the entire Universe.

The Second Epoch is when the Divines, Gods who created “bi-essences” that combined the Primordial Essences into Lesser Essences, came into being as “children” of the Ancients that possessed a sentience closer to the realm of comprehension. They are capable of whimsy, of want, of ire and of fondness. Here, they would be most comparable to the deities of most pantheons, with relationships both within the circle of the Divines and with their creations, the Kinetics: pseudo-mortals who co-existed with the Divines and were taught their magic in return for being subordinate to them.

The Third Epoch is the first Epoch marked by a war of tremendous proportion, one that resulted in the death of Divines and a weakening of magic that is still felt to this day. Here, the Divine Nolu, the God of Secrets and Mystery, provoked the Kinetics into rebelling against their deities by telling them forbidden secrets of mortality, encouraging them to upheave the heavens and take their power by storm rather than tolerate watered-down lessons that kept them under their benevolent Gods’ thumbs. Nolu would abandon the Kinetics during this war, leading to slaughter on both sides, only to return at the last moment to ensure the death of all Divines, aside from themselves. The Third Epoch was solely this war, though its duration is unknown, and the true extent of the damage, along with knowledge of what the world was like before the Divine War, has been lost, perhaps for eternity. All that is known is that it was likely a time of Edenic bliss, where magic flowed like wine and mortals were cared for by Divines. After the trauma of the War, the Fourth Epoch was birthed: the Age of Ruin, the Age of Loss, the Age of Abandonment.

Kinetics, now scorned by the Ancients whose children they had revolted against and punished by the Primordial who hosted them, suffered the punishment of agelessness. They were removed from the life-death cycle that promised reincarnation and forgiveness of the soul, forcing them to live an eternity of repentance and grief as they watched the world they knew rot into a mere husk of its former self. Magic weakened with nobody there to teach them, and without Divines to create Kinetics with such innate skill, they were condemned to physically reproduce until there were only Mortals.

Mortals lacked the intimate tutelage that gave Kinetics their mastery over the Primordial Essences, or the Divine Essences, and so their powers weakened too. Magical knowledge was not lost completely, but it would take lifetimes to achieve a level that most Kinetics had earned in adolescence. Over time, the era of bliss and magic that had once been an undeniable reality would fade to myth across the Cosmos, with the division of the New Way (the belief that all of this was purely mythology) and the Old Way (the belief that all of this was fact) separating mortals across the Universe, severing some from their magical heritage entirely to make way for man-made scientific advancement devoid of spiritual attunement.

The Spider Star System was a System that followed the New Way, forcing the less-magically repressed mortals—known as Undanes—into hiding lest they be rejected or destroyed for their absurdities. This System would also become the grounds for one of the greatest calamities recorded, with the Genesis Collapse marking a potentially unrepairable wound in the very fabric of reality whose effects are still present today, giving way to the Paroxysms that blight the Bright Star System in the Sixth Epoch. The Bright Star System followed the Old Way, however, and magic is still understood and studied with varying levels of skill and mastery across the System. It was the Genesis Migration that introduced the Genesse people, Undane and Mundane alike, to the cohabitation of magic-repressed and magic-expressive people, though not without duress. It was this discovery for the Mundanes that contributed to the genesis of the Ametsuchi, forged out of hardship, exile and sacrifice brought on by a primal rejection of this magical nature.

The Genesis Collapse was the locus of the Fifth Epoch, the Age of Champions, when it became apparent that mortalkind could reach Divinity should the Primordial bestow upon them the capability. The nature of Champions is debated among scholars; some argue that Champions, of which there is one certainty and one other heavily contested, are the Divines reborn, returned from their celestial graves, while others argue that the Champions are entirely new in spirit, as it would be disrespectful to the Divines to ignore the devastation they had suffered at the hands of men. Unfortunately, the effects of the loss of the Divines are still felt to this day, as the sole Champion of the people, Genevieve (the sacred figure of the Holy Order), is absent. Whether she perished after the Genesis Collapse, went to another System, or was killed by the Goliath in some unseen battle of tremendous proportion is completely unknown. Mortals can only emulate what they think she would have done, such as the Divine Right of Kings applied to the Boucher imperial line on Neo, or the Holy Order’s fight against Paroxysms.

The Fifth Epoch is potentially the shortest of all Epochs, having spanned only several generations, perhaps not even a millennium.

The Sixth Epoch, then, is the playground for the plot of this blog and its attached extended canon. It is the Age of Anarchy, the Age of Monsters, of all things Eldritch. It is uncertain when the Sixth Epoch began: some argue it was with the formation of Spider’s Eye as it tried to awaken the Spider-God Goliath, the destroyer of Genesis and the foe of the Champion Genevieve, or with its first use of Chaos manipulation and Paroxysm invocation as a weapon in the assassination of the Green-King Eoin of Namana. It may even have been incited by the Ametsuchi Massacre, which was tied to the actions of Spider’s Eye and the High King Kazumi Ametsuchi and resulted in Chaotic manipulations and mutilations of all remaining Ametsuchi. The onset of the Sixth Epoch may be debated, but its end is entirely unknown: some fear that it may never end, others fear that it is the end, but the hopeful pray for a better, kinder Seventh Epoch, just on the horizon of what may be the most horrifying Epoch to exist in.


PowerApps and Power BI – Finally together!

We have already discussed PowerApps and its advantages in previous posts. In case you missed them, click here.

Before moving ahead, let us discuss what Power BI is, its advantages, and how well it works with PowerApps. The announcement that PowerApps and Power BI can now be used together has been great news for PowerApps developers and end users alike.

What is Power BI?

Offered by Microsoft, Power BI is a business analytics service. It lets you visualize your data and share insights across your company or organization. You can embed Power BI in your mobile apps as well as your websites.

It aims to provide business intelligence and interactive visualization capabilities through an interface simple enough for end users to create their own reports and dashboards.

Features of Power BI

Prebuilt reports and dashboards for popular SaaS solutions.

Dashboards that give real-time updates.

Secure, live connections to your data, both in the cloud and on premises.

Integration with familiar Microsoft products, relying on Azure for scale and availability.

Integration with existing IT systems, support for hybrid configurations, and fast deployment.

Collaboration with your team: share published dashboards and reports inside and outside your organization.

PowerApps and Power BI

PowerApps empowers everyone to build and use business apps that connect to their data, while Power BI lets everyone gain deep insights from their data and make better business decisions. What a great combination they make! Hire a PowerApps developer for your idea today!

This announcement benefits both PowerApps developers and end users: the PowerApps custom visual for Power BI is now available in preview, which lets you use the two products together far more effectively.

Using the PowerApps custom visual, you can pass context-aware data to a PowerApps app, which updates in real time as you make changes to your report. This means users can gain business insights within Power BI and take action right away from Power BI dashboards and reports. There is no need to shuffle between tabs or copy and paste whatever you need, be it an invoice number or an invoice amount.

How do you migrate data from Power BI into PowerApps?

Follow these steps:

1. Add the PowerApps custom visual to your report.

2. Choose the fields from Power BI that you want to pass to the app.

3. Next, either create a new app or choose an existing one. You can only choose from apps registered to your email address.

4. If you click Create new, PowerApps opens the app designer in a new browser tab.

5. Once you sign in to the designer, you will find an item named “PowerBIIntegration” that holds all the fields you added in Power BI in step 2.

And there you go! It’s done.

Note: If you change the visual’s data fields, edit the app from within Power BI using the ellipsis (…) menu and then the Edit option. If you fail to do so, your PowerApp can crash!

An alternative way to migrate your data – Power BI Tiles

An alternative way to merge PowerApps and Power BI is to embed a specific Power BI tile in your app.

Just follow these steps:

Add the Power BI tile control to your app.

Select the tile you want to show: in the Options panel, go to Data and set the Workspace, Dashboard, and Tile properties.

Once the properties are changed, the Power BI visual will appear on the design surface.

As simple as that!

Note: To make the Power BI content accessible to users, the dashboard the tile comes from must be shared with them in Power BI. This ensures that Power BI sharing permissions are respected when Power BI content is used in an app.

The PowerApp can then be used by everyone who has the sharing link.
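The tile control normally lets you pick the Workspace, Dashboard, and Tile from drop-down lists. If you ever need the identifiers behind those choices, for example to check which tile an app points at, the Power BI REST API can list a dashboard's tiles. The Python sketch below assumes the “Dashboards - Get Tiles In Group” endpoint and an access token you have already acquired through Microsoft Entra ID; token acquisition and error handling are left out, and any tile titles you search for are your own.

```python
import json
import urllib.request
from typing import Optional

# Base URL of the Power BI REST API ("myorg" = the signed-in user's tenant).
API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def tiles_url(workspace_id: str, dashboard_id: str) -> str:
    """Build the 'Get Tiles In Group' URL for one dashboard in one workspace."""
    return f"{API_ROOT}/groups/{workspace_id}/dashboards/{dashboard_id}/tiles"


def fetch_tiles(workspace_id: str, dashboard_id: str, access_token: str) -> dict:
    """Call the API. access_token is assumed to be an already-acquired bearer token."""
    request = urllib.request.Request(
        tiles_url(workspace_id, dashboard_id),
        headers={"Authorization": f"Bearer {access_token}"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def find_tile_id(tiles_response: dict, title: str) -> Optional[str]:
    """Return the id of the first tile whose title matches exactly, or None."""
    for tile in tiles_response.get("value", []):
        if tile.get("title") == title:
            return tile.get("id")
    return None
```

For example, `find_tile_id(fetch_tiles(workspace_id, dashboard_id, token), "Monthly Sales")` would return the id of a tile titled “Monthly Sales” (a hypothetical title), or None if no tile carries it. Titles are not guaranteed to be unique, so the first match wins.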

To conclude

If you are interested in developing a PowerApps app with Power BI and are looking to hire a PowerApps developer, we are here to help. We have an experienced team of PowerApps developers and clients all over the globe.

Also, let us know in the comments how useful this article was. We also welcome any questions about PowerApps and Power BI.

Until next time!

Originally Published by Concetto Labs > PowerApps and Power BI – Finally together!


Cloud-Based Warehouse Management - Is It Right For You?

As systems increase their data throughput and the price of processing capacity continues to fall, customers are finding they can reliably run software from the cloud.

With cloud delivery now available in many architectural options (private, hybrid, or public), the infrastructure to support a stable and powerful platform exists today in many data centers.

From the WMS perspective, the cloud has allowed Tier 1 and Tier 2 organizations to deploy complex supply chain functionality internationally without maintaining a permanent and expensive on-premises footprint. Benefits can also exist for smaller, more geographically dispersed, and growing Tier 3 organizations that want a hosted, subscription-based pricing model.

Lowering the Cost of Deployment

The most commonly cited advantage of almost any cloud-based deployment is that implementations are faster than a traditional on-premises installation. Setup and configuration aside (which must happen with any WMS), both the IT workforce and the computing infrastructure are provided by the solution vendor in a hosted model and managed on an ongoing basis, offering a faster return on investment.

Upfront installation expenses may be deferred, because costs such as licensing are spread over the term of the contract and become an operating expense instead of a capital expense. Additionally, lower capital expenditure can provide cash-flow benefits to a business and reduce investment risk when evaluating a warehouse management solution.

A Focused Skill Set and Dedicated Monitoring

Backups and disaster recovery are also included in a fully managed, hosted service, which eliminates the dependence on company IT resources to support a WMS server environment and local backup routines. Running a warehouse management system day to day demands a distinctive skill set (knowledge of a specific ERP interface, data tables, critical transactions, changes in product SKU data, etc.), which otherwise has to be maintained by the customer at extra cost or time.

With the WMS in the cloud, the customer can rely on the solution provider's Support Desk, which has dedicated visibility into and expertise with the application 24/7. The provider can proactively monitor the server environment, perform seamless backups and updates, and tune databases, increasing the overall reliability of operations and reducing the cycle time for problem resolution.

This acts as a form of insurance for the company and provides a level of risk reduction against exposures that businesses sometimes have not accounted for.

Easier Access and Information Sharing

Unlike spreadsheets or desktop software, a cloud-based solution does not tie a person to a desk in the warehouse office. Web-based inventory management gives your employees anywhere, anytime access to real-time data, whether they are traveling with a mobile device or receiving alerts at other warehouse locations.

Cloud-based inventory management systems that can "federate" modules with downstream partners enable the management of consignment stock, the ordering of parts direct to store, or the pre-printing of ASNs and other barcode labels before shipping to main store or distribution locations.

Cloud-based solutions also offer the benefit of routine software upgrades and maintenance cycles, available 24/7. Major version upgrades and new modules can be fully tested in emulated production environments, with far greater access to varied test environments that may use different processors, systems, storage, and network fabric. All of these activities can be managed in a way that does not disrupt day-to-day business operations.

With access to best-of-breed integration tools, a cloud-based setup is well suited to provide standard integration infrastructure and support for EDI mapping and bidirectional communication between trading partners. At the same time, small companies can take advantage of economies of scale in the licensing costs associated with databases, especially when moving from entry-level database editions to larger databases that support growing data requirements.

Other traditional costs may be avoided as well. Costs such as managing firewalls and providing VPN infrastructure for platform access (along with installing VPN clients on laptops) are now handled by modern web tools that offer secure single sign-on functionality.

Not Always a Fit

Cloud-based solutions are not a fit for every business, although the arguments against taking advantage of these economies of scale and subscription-based pricing are becoming less defensible as cloud WMS adoption grows year over year.

For example, if a business has a strong IT department, longstanding WMS expertise, and already hosts other in-house software, the costs of an additional platform may already be spread out and not considered an area of concern.

Most remote solutions, when correctly installed using a professional site survey as a guide, offer more than sufficient coverage and bandwidth in the warehouse. Even so, the data pipe that supports RF scanning in the warehouse needs to be monitored to prevent dropped connections and slow responses.
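Monitoring the RF link can be as simple as timing each scan's round trip and counting scans that never get a response. Here is a minimal Python sketch, assuming you already log those timings; the 500 ms 95th-percentile limit and 1% drop-rate ceiling are hypothetical thresholds, not vendor guidance.

```python
import math
from typing import Dict, List


def nearest_rank_percentile(samples: List[float], pct: int) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def rf_link_report(latencies_ms: List[float], dropped: int,
                   p95_limit_ms: float = 500.0,
                   max_drop_rate: float = 0.01) -> Dict[str, object]:
    """Summarize scan round trips; the default thresholds are hypothetical, tune per site."""
    p95 = nearest_rank_percentile(latencies_ms, 95)
    drop_rate = dropped / (len(latencies_ms) + dropped)
    return {
        "p95_ms": p95,
        "drop_rate": drop_rate,
        "healthy": p95 <= p95_limit_ms and drop_rate <= max_drop_rate,
    }
```

The 95th percentile is used rather than the average because it surfaces the occasional slow scan that operators actually notice, and the nearest-rank method always returns a latency that was really observed.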

Meeting the Criteria

Do you need anytime, anywhere access to your inventory positions?

Are you a growing company that will spread over a broad geographic area?

Is your business seasonal, or does it go through periods of low use in which you might benefit from a flexible monthly pricing model?

Do you have limited IT capabilities as a company, and would you rather not invest in additional computing resources to support your warehouse management environment?

Do you expect your warehousing needs might sometimes be temporary, requiring migration from one logical warehouse or physical location to another?

Is the company already committed to cloud-based ERP, and does it prefer financing in the form of operating expenses?

Do you have a diverse set of user groups that need to see the same set of inventory data across multiple locations?

Do you want to view dashboard-style metrics and KPIs on different form factors, such as smartphones and tablets?

Are you running an operation that could excel without radio-frequency scanners and wireless infrastructure, i.e. paper-based?

Do you have other vendors or suppliers that could use your WMS to organize purchase orders, print carton labels, and create ASNs to improve supply chain visibility and standardization?

Do you have data security requirements beyond what you can afford or support locally on premises?

Is it important that your technology vendor be 100 percent accountable for maintenance, upgrades, and 24-hour support?

Do you have ongoing or varied training requirements for warehouse staff that could be simplified by online delivery across multiple locations? (Certification, quality, and safety assurance requirements may be embedded within warehouse management procedures to meet safety and insurance policies.)
If you answered "yes" to any of the above, you may well be a good candidate for a cloud-based warehouse management strategy and should start by building a business case that compares the costs of on-premises equipment, upfront licensing, and IT resources with the spread-out monthly costs of a cloud-based WMS solution.
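The business case described above comes down to comparing cumulative spend over time. Here is a minimal Python sketch, assuming a deliberately simple model of one upfront cost plus a flat monthly cost for each option; all figures are hypothetical, and a real comparison would add hardware refresh cycles, upgrade projects, and the time value of money.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CostModel:
    upfront: float  # one-time cost at month 0 (hardware, licenses, implementation)
    monthly: float  # recurring cost per month (IT staff and maintenance, or subscription)

    def cumulative(self, month: int) -> float:
        """Total spend from month 0 through the given month."""
        return self.upfront + self.monthly * month


def crossover_month(on_prem: CostModel, cloud: CostModel,
                    horizon_months: int = 120) -> Optional[int]:
    """First month in which cumulative cloud spend reaches or exceeds on-premises spend.

    Returns None if the cloud option stays cheaper for the whole horizon.
    """
    for month in range(horizon_months + 1):
        if cloud.cumulative(month) >= on_prem.cumulative(month):
            return month
    return None
```

With hypothetical figures of $120,000 upfront plus $2,000 a month on premises versus $20,000 upfront plus $5,000 a month in the cloud, `crossover_month(CostModel(120_000, 2_000), CostModel(20_000, 5_000))` returns 34: the cloud option is cheaper through month 33, and after that its cumulative cost overtakes the on-premises option.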


The Sage Enterprise Management Partner Summit, the largest worldwide gathering of Sage Business Cloud Enterprise Management Partners, will also feature a keynote by John Barrows, professional sales trainer and consultant. Delegates will also have the opportunity to hear from other keynote speakers, including Blair Crump (President Sage), Ron McMurtrie (CMO Sage), Jennifer Warawa (EVP Partners Accountants and Alliances Sage), Nick Goode (EVP Product Marketing Sage), Mark Fairbrother (EVP Product Engineering Enterprise Management Suite Sage), Robert Sinfield (VP Product Enterprise Management Suite Sage) and Fabrice Alonso (Product Management Director Enterprise Management Sage). Be among the first to see the V12 updates in action on the keynote stage. These conversations are sure to spark ideas and leave you with an a-ha moment.

The Sage Enterprise Management Partner Summit combines inspiration, insights, technology, and networking for a fantastic experience that powers future business success. The Sage Sessions serve the same objectives: they are an opportunity for Partners to discover room to grow their business and gain insights from the showcased products.

Delegates can also visit the Sage Genius Bar and the Sage Meeting Center, where attendees can discover how best to use Sage technology to improve product performance and accelerate their business.

What is Greytrix bringing to Sage Enterprise Management Partner Summit as a Gold Sponsor this year?

Greytrix has been a leading Sage Gold Development Partner for over two decades, providing a broad range of Sage Enterprise Management services and solutions across the Sage Partner ecosystem. Recognized for its 5-star rated GUMU™ app on Salesforce AppExchange for Sage ERP – Salesforce and Sage Enterprise – Sage CRM systems, Greytrix combines unmatched experience and specialized skills across industries and verticals, driving innovation to improve the way businesses operate. Wondering what’s new with Greytrix this year? Greytrix is bringing GreyPortal for Sage Enterprise Management, which automates personalizing and delivering business information to customers through self-service web portals and mobile applications, keeping your business in “always-on” mode.

Greytrix Sage Enterprise Management Products & Services

GreyPortal- a B2B self-service customer portal

Integrations: Salesforce | Sage CRM

Add-Ons: Catch Weight | Letter of Credit | India Localization | eCommerce (Magento) integration

Integration Services: Payment Gateway | POS | WMS | BI | Shipping System | EDI

Greytrix Sage Enterprise Management Professional Services

Implementation & Configuration

Bespoke Customizations

Technical Support

Migration

Integration

Onsite Resource Augmentation

Offshore Development Center

Greytrix Sage Enterprise Management Development Skillset

4GL Programming

Java Bridge Integration

Version Upgrades

SOAP Webservices

Crystal and BI Reporting

ADC Programming

Designing Dashboards

SEI Installation & Upgrades

Designing Workflows

Kumar Siddhartha, CEO – Greytrix, along with techno-functional experts, will be presenting a session: “Take a customer centric approach and win deals with an integrated CRM (Salesforce.com) & Customer Portal for Sage Enterprise Management”. Apart from exhibiting, we also look forward to meeting our Sage Partners and the Sage Team at the event and to sharing insights on how business roadblocks can be eliminated through optimal use of Greytrix products and services, and why the GUMU™ utility is the best fit for your specific business environment. Meet us at the Greytrix booth and experience the power of our services and solutions!

To schedule your meeting with our Executive and Techno-functional team at Sage Enterprise Management Partner Summit, write to us at [email protected].

Let’s Connect and Grow together. See you soon at the event!

#sage enterprise management partner summit#Sage Enterprise Management Partner Summit Dubai#Sage Enterprise Management Products#Sage Enterprise Management Professional Services#sage event#Sage Partner Summit#Sage X3#sage x3 addon#Salesforce and Sage EM Integration#Salesforce and Sage X3 Inetgration#warehouse management system#WMS#Drive to Thrive#Gold Sponsor#Greytrix Event#Letter of Credit#Catch Weight


HOLD: Exploring the Contemporary Vessel

When we think about what it is to hold, the obvious reference to physical matter soon gives way to other contents: to hold meaning, memory, tradition or association. To hold your attention, to hold your gaze. The vessel, in its ubiquity and historical significance, serves as a powerful device for expressing concepts and provoking thought. This survey exhibition observes ways in which contemporary makers approach the vessel, particularly through the language of materiality. Looking at themes of making, narrative, value and use, HOLD considers the contemporary vessel’s potential to enrich and expand on the concept of containment.

Making

The conduct of thought goes along with, and continually answers to, the fluxes and flows of the materials with which we work. These materials think in us, as we think through them. (Ingold 2013, 6)

Social anthropologist Tim Ingold speaks of a theory of making he calls “the art of inquiry”, at odds with the hylomorphic model of the maker imposing a preconceived form onto inert matter. In these works, we see a clear embodiment of that idea: an expression formed through a dialogue with material.

The work of Peter Bauhuis exemplifies a meticulous approach to making matched with a trust and relinquishment of control. In his bi-metal casting process, Bauhuis pours one molten metal on top of the other, allowing the two to interact and form a tonal gradation directly affected by the intrinsic qualities of the alloys. The science of metallurgy becomes choreography, a dance between control and chance.

The process of making is laid bare in Sally Marsland’s work. Within this group, vessels are formed using a poured casting technique Marsland has refined in the making of her jewellery pieces. Surrounding these works are vessels that occur as byproducts of her process: mixing pots, drip sheets and tools covered in excess material, revealing the temperament and viscosity of the fast-setting resin. The variety of hues, textures and volumes achieved in these works speaks of Marsland’s harmony with a material as responsive as it is unruly.

The malleable and receptive nature of clay is a distinctive aesthetic feature in the work of Danish artist Christina Schou Christensen. With its undulating fleshy forms, her Soft Fold Pink vessel displays a haptic connection to material, which is both sensually raw and refined. Through her hand-building process, Christensen allows the material’s plasticity to dictate how each fold lands, droops and protrudes. Rather than a designed outcome, the final form acts as an artefact of the making experience.

Narrative

In understanding these vessels as objects with their own history and agency, we can unlock their narratives, cultural contexts and the relationship to their makers.

A sense of place and experience is imbued in Marian Hosking’s Cape Conran vessels. Comprising fabricated forms and cast elements taken directly from the environment, these vessels tell a story of the gatherer, retaining memories through collected souvenirs. Much like a seashell exposing its inner architecture or a native leaf revealing its venation, these vessels privilege the eye of the close and curious observer.

Similarly close attention is paid by Lindy McSwan in her Fragment vessels, which focus on the charred surfaces of native forests devastated by bushfire. McSwan emulates the changing states of burning charcoal through a finely honed enamelling technique. The assemblage of these vessels expresses a passing of time and a way of contemplating the overwhelming tragedy of bushfire.

Tradition and family history play a significant role in Robin Bold’s Series 3 - 1,3,7 vessels. Part of a larger work of 19 pieces, Series 3 uses the concept of family silver to explore wider narratives of migration and family lineage. The nickel, steel and kauri used in Bold’s work directly correspond to materials prominent in her own family history of trans-Tasman migrants and seafarers. This family portrait speaks of a deeply personal relationship to material and the artist's identity as a maker.

Value

The idea of material hierarchy and value is ingrained in our cultural consciousness; from Greek Mythology’s Ages of Man, to the recurrent custom of wedding anniversary gifts. Craft tradition certainly heeds these conventions, giving contemporary makers the ability to challenge lines of thought and uncover new ways of expressing value.

Renowned for his subversion of silversmithing traditions, David Clarke uses his Baroque Beauties to question material value and ideas of form. Clarke purchased 5 candlesticks from eBay and cast each in pewter, with their packaging materials intact. The cheap and ephemeral packing is absorbed into the vessel’s permanent form, its function as a protective layer reasserted as decorative embellishment.

In an ode to the beauty of the everyday object, David Bielander fabricates and patinas sterling silver to create his Paper Bag (Sugar) vessel. The familiar and disposable form is afforded a new value, not just in its material reimagining, but in the care and attention evident in its meticulous replication. Bielander’s ability to capture the beauty of the humble paper bag, submissively battered and textured through use, proves this vessel to be so much more than just a clever juxtaposition.

The value of paper is also reconsidered by Barbara Schrobenhauser’s Time is on my side - Blue like the future vessel. Schrobenhauser creates her vessels from pulped paper mixed with various pigments and materials, pressed into specially made moulds. The deceptively lightweight vessels have a colouring and texture akin to a heavy stone, their size suggesting a ritualistic purpose. Schrobenhauser takes advantage of our material associations and assumptions to channel thoughts of time, memory and perception.

Use

The ongoing discourse surrounding function, and the craft object’s tie to it, is no less present in these contemporary works. In her essay “The Maker’s Eye”, British ceramicist Alison Britton (1982, 442) contemplates the distinction between the “prose” and “poetic” object, and the potential for the vessel to oscillate between the two. In acknowledging that the vessel will always be answerable to a question of function, Britton highlights an opportunity to redefine use.

In her Heavy water (Fukushima butterflies) vessel, Inari Kiuru references the form and function of the Japanese mizusashi water container. An ongoing exploration in her work is the rapid mutation of butterflies following the Fukushima radioactive accident. Utilising concrete and steel, materials inherently tied to function, she reflects on the convergence of the industrial and natural worlds. What Kiuru achieves is a poetic and contemplative object which references and fulfils function in its most utilitarian sense.

The question of function is met with no sense of constraint in Vito Bila’s Vessel Cluster #1. By Bila’s own definition, these are non-functional, although there is form, materiality and construction processes associated with utilitarian objects. Made from copper and stainless steel, the vessels expose oxides, tool markings and welded seams to reveal a dialogue between maker and material. Bila employs the language of traditional silversmithing to create new associations and redefine the craft object’s relationship to function.

The artists in this exhibition represent a myriad of approaches and perceptions with regard to making, narrative, value and use. As an object embedded in human experience, the vessel is profound in its quotidian nature. Contemporary interpretations serve to honour the vessel’s place in our lives, whilst also challenging it. Just as the word ‘hold’ carries so much rich association in its semantic scope, these works embody the vessel’s power as a truly polysemic object.

Natasha Sutila, 2017

Source

Britton, Alison. 1982. “The Maker’s Eye.” In The Craft Reader, edited by Glenn Adamson, 441–444. New York: Berg.

Ingold, Tim. 2013. “Knowing from the Inside.” In Making: Anthropology, Archaeology, Art and Architecture, 1–16. Oxon: Routledge.


Making the Leap: Power BI to Microsoft Fabric Migration – What You Need to Know

If you’re a Power BI enthusiast or an organization relying heavily on Power BI services, you’ve likely heard the buzz about Microsoft Fabric services—the next evolution of Microsoft’s data ecosystem. Fabric isn’t just a new tool; it’s a game-changing platform designed to unify data integration, analytics, and governance in a single, seamless environment.

So, why should you consider migrating from Power BI to Microsoft Fabric? And how can a Power BI implementation partner or a Microsoft Fabric consulting company help ensure a smooth transition? Let’s dive in.

Why Migrate to Microsoft Fabric?

Power BI has been a trusted tool for business intelligence and reporting, but as data ecosystems grow increasingly complex, organizations need more than just dashboards. Enter Microsoft Fabric—a unified data platform that brings together tools for data integration, data governance, and analytics.

1. Unified Data Ecosystem

Fabric combines the power of Power BI, Azure Synapse, and Data Factory into one cohesive platform. This integration eliminates data silos and creates a streamlined, collaborative environment.

2. Scalability for Modern Data Needs

Fabric’s capacity-based architecture lets you scale compute as data volumes grow. Whether you're dealing with IoT data streams or machine learning pipelines, Fabric offers dedicated experiences for each, such as Real-Time Intelligence and Data Science.

3. Enhanced Data Governance and Compliance

Through its integration with Microsoft Purview (formerly Azure Purview), Fabric helps simplify compliance with regulations such as GDPR and HIPAA. As data governance consultants, we’ve seen businesses struggle with fragmented compliance tools; Fabric offers a unified solution.

4. AI and Automation at Scale

Fabric is built with AI at its core. From automating data pipelines to enabling advanced analytics, Fabric empowers businesses to derive insights faster and smarter.

What Does Migration Involve?

Transitioning from Power BI to Microsoft Fabric isn’t a simple "lift and shift." It’s about reimagining how you manage, process, and analyze data. Here’s what you need to know:

1. Assessment of Current Workloads

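Evaluate your current Power BI environment: workspaces, semantic models, reports, and the capacities they run on.

Identify dependencies on Azure integration services and SQL Server sources, and whether SQL Server migration or data engineering services will be needed.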
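Part of that assessment can be scripted. The Power BI REST API's “Groups - Get Groups” call (GET https://api.powerbi.com/v1.0/myorg/groups) returns the workspaces the caller can access, each with fields such as `name`, `isOnDedicatedCapacity`, and `capacityId`. Here is a minimal Python sketch, assuming you have already fetched that JSON response, that splits workspaces into those on dedicated (Premium or Fabric) capacity, grouped by capacity, and those on shared capacity. A tenant-wide inventory would need the admin APIs instead, and the sample names in the test are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def summarize_workspaces(groups_response: Dict) -> Dict[str, object]:
    """Count dedicated-capacity workspaces per capacityId and list shared-capacity ones."""
    by_capacity: Counter = Counter()
    shared: List[str] = []
    for workspace in groups_response.get("value", []):
        if workspace.get("isOnDedicatedCapacity"):
            by_capacity[workspace.get("capacityId", "unknown")] += 1
        else:
            # Fall back to the id if a workspace has no name in the response.
            shared.append(workspace.get("name", workspace.get("id", "?")))
    return {"by_capacity": dict(by_capacity), "shared": sorted(shared)}
```

Workspaces already on a dedicated capacity are the natural first candidates for a Fabric move, while shared-capacity workspaces need a licensing decision first.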

2. Modernizing Data Pipelines

Fabric’s data integration service allows you to modernize and centralize pipelines, ensuring seamless connectivity across multiple data sources.

Partner with a data modernization consulting company like PreludeSys to realign your pipelines with the medallion architecture (bronze, silver, and gold layers) commonly used in Fabric lakehouses.
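To make the medallion idea concrete: raw data lands as-is in a bronze layer, is validated and de-duplicated into a silver layer, and is aggregated into reporting-ready gold tables. The sketch below shows only that layering, in plain Python with hypothetical order records (`order_id`, `region`, `amount`, `order_date`); in a Fabric lakehouse the same steps would typically run in Spark notebooks or Data Factory pipelines over Delta tables.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List


def to_silver(bronze_rows: List[dict]) -> List[dict]:
    """Bronze -> silver: cast types, normalize text, drop malformed rows, de-duplicate on order_id."""
    by_id: Dict[str, dict] = {}
    for row in bronze_rows:
        try:
            order_id = row["order_id"].strip()
            cleaned = {
                "order_id": order_id,
                "region": row["region"].strip().upper(),
                "amount": float(row["amount"]),
                "order_date": date.fromisoformat(row["order_date"]),
            }
        except (KeyError, ValueError, AttributeError, TypeError):
            continue  # a real pipeline would usually quarantine rejects instead of dropping them
        if order_id:
            by_id[order_id] = cleaned  # later duplicates overwrite earlier ones
    return list(by_id.values())


def to_gold(silver_rows: List[dict]) -> Dict[str, float]:
    """Silver -> gold: a reporting-ready aggregate (total amount per region)."""
    totals: Dict[str, float] = defaultdict(float)
    for row in silver_rows:
        totals[row["region"]] += row["amount"]
    return dict(sorted(totals.items()))
```

Keeping each layer a separate step means a bad transformation can be re-run from the untouched bronze data, which is the main reason the pattern is popular.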

3. Upgrading Reporting and Dashboards

Fabric enhances Power BI’s capabilities with real-time dashboards and AI-powered insights.

Our business intelligence consulting services ensure your dashboards leverage Fabric’s advanced features for maximum impact.

4. Ensuring Governance and Security

Fabric’s integration with compliance services such as Microsoft Purview supports robust governance frameworks.

We provide data governance consulting services to help you implement policies that protect data while enabling agility.

How PreludeSys Can Help

Migrating to Microsoft Fabric may feel like a daunting task, but it doesn’t have to be. With a trusted partner like PreludeSys, a Microsoft Fabric consulting company and Power BI implementation partner, you’ll have access to a team of experts who specialize in:

Data Modernization Services: Modernize your data architecture with Fabric’s medallion framework, ensuring scalability and flexibility.

Cloud Migration Services: Move workloads to Azure seamlessly, reducing downtime and ensuring performance.

Data Integration Consulting Services: Consolidate fragmented data into Fabric for a single source of truth.

Power BI Consulting Services: Transform your existing Power BI reports into Fabric-ready, AI-driven dashboards.

Data Governance Services: Implement governance frameworks that align with industry standards and regulations.

Benefits of Power BI to Fabric Migration

The benefits of migrating to Microsoft Fabric extend far beyond enhanced analytics:

1. Faster Decision-Making

Real-time data processing and analytics empower businesses to act on insights instantly.

2. Reduced Costs

By centralizing your data ecosystem, Fabric can reduce the need for multiple separate tools, driving cost efficiencies.

3. Future-Proofing Your Business

Fabric’s integration of AI and low-code tools ensures your data platform evolves with emerging technologies.

A Seamless Migration Journey Awaits

Migrating from Power BI to Microsoft Fabric is not just a technical upgrade—it’s a strategic move to future-proof your data ecosystem. By partnering with PreludeSys, you gain access to the expertise of a data engineering consulting services provider, ensuring a smooth and successful transition.

Are you ready to make the leap to Microsoft Fabric? Let us guide you every step of the way. Contact us today to learn more.

#microsoft fabric services#power bi premium to fabric transition#transition power bi to fabric#convert power bi premium p1 to fabric#microsoft fabric consulting#microsoft fabric consulting company#fabric for power bi users#powre bi premium p1 transition to fabric#power bi consulting services
