#of community-based reporting for content moderation being used to harass people.

Text

so there's a new campaign going round hoping to Make Fandom Less Racist by... pushing for moderation on ao3. no way this could Possibly go wrong :)

#mine#first of all i'm glad ao3 is committed to its stances bc like. 'we need a moderation team that decides which works are Too Racist'#gang what the Fuck are you talking about. who the FUCK do you trust to make that call.#obvs ao3 Isn't gonna do that bc they are An Archive but it's genuinely insane to me to see people Missing The Point So Bad#'ao3 should be able to administratively hide works that don't violate the TOS' first of all No#'and should plan to regularly use this ability to hide works that are Too Harmful by x definition' HELL no.#i do agree that there may be a need for better anti-harassment rules and stuff but like.#'moderate ao3' no. make your own archive. hope this helps <3#also like. jesus christ do you KNOW how many fanworks are on there?#'just hide the racist ones' bestie WHO do u think is gonna read thru the entire corpus of work posted on ao3 so far#in order to root out the ones which are Too Racist.#'well we're gonna make it a community reporting model—' let me stop you right there.#kind of embarrassing to be talking abt racism and specifically harassment in fandom spaces and fail to acknowledge the MASSIVE tendency#of community-based reporting for content moderation being used to harass people.#that's gonna be a pass from me gang.

10 notes

Text

I’d like to post about something important and special to me in the hopes that perhaps someone might see it and derive meaning from it too: the Something Awful Forums. I want to talk about it because I think it’s the best place on the internet.

This is one of the oldest communities on the internet. Once upon a time it was the secondary component of a website mainly dedicated to ridiculing other stuff on the internet. That’s a vein of content that has led to a lot of terrible behavior over the years, and the Something Awful community has certainly had its bad moments. However, within it there has always been a certain strain of, well, I don’t want to say “niceness” per se because at the beginning it was really all about being mean, and we still take any opportunity to lightheartedly razz on each other when it comes up. Instead I would say that there’s always been a strain of accountability. “Don’t touch the poop” is a mantra nearly as old as the site itself, and what it means is "you can use this website as a platform to make fun of things that are silly or dumb, but you absolutely cannot use it as a harassment platform." This hasn’t always been executed perfectly (and in particular was not held to by the site’s original owner, good riddance) but it has guided the SA forums on a journey of evolution that has led to it becoming a less mean-spirited, less volatile, less divisive, less reactionary place while the rest of the internet has become (imo) more of those things. In the current iteration the number one rule at play is DON’T BE A DICK.

Nowadays the front page, which was once the main attraction dedicated to skewering other parts of the web, is gone except as an archive. The forums are now the main attraction, and they are now dedicated to simply discussing things you like. Posts go into threads sorted by topic, in chronological order. There is no algorithm. There are no bots. The rules are based on what will result in the best website for users rather than advertisers, so you don’t have to say unalive or sewer-slide. The site is attended to by an active, engaged moderation staff made of community members who are genuinely interested in making it the best community possible. If you see bigotry or harassment, you don’t have to fire off a report to a vaguely defined group of anonymous reviewers who may or may not do something about it. When a report is acted on, the moderator action automatically goes on a big list alongside the moderator’s name and their reasoning for the punishment. If someone is a nazi, they get fucking banned. If someone is a transphobe, they get fucking banned. If someone is an asshole, they get probated for a few days so they can cool off. The result is a forum full of people who are, generally speaking, chill and nice.

My favorite example of the evolutions of the site’s culture and userbase is that we used to say it was a “dead gay forum” to complain about how it was losing popularity, using gay as a pejorative because that’s edgy. Now? We still call it a dead gay forum, but positively because we love how dead it is (low pop makes for a tighter community) and we love how gay it is (the site has a significant queer population, myself included!)

.. and now, I would like to cordially invite you to make the site a little less dead and a little more gay (or not) by joining us!

One of the ways that the SA Forums maintain quality is a high barrier to entry. Well, not that high but certainly high relative to the big sites. It costs ten dollars to make a forums account, which is required to read and post on the forums. However, to celebrate the site’s 25th anniversary, the management is doing something the forums have not done in a long time: a free weekend! This weekend only, you can register a forums account, read the forums, and post in a limited number of them, absolutely free!

If you are nostalgic for the Internet of the late 90s and early 2000s, or you never got to experience them and are curious what posting in them was like, this is your chance to do so. To celebrate the 25th anniversary the forums now have a new section designed to look and feel like the early days, but without all the casual homophobia and such. Same posting vibes, new posting sensibilities. If you are a new member, it is advised that you take the time to not just read the rules but also read existing threads and get a feel for what the forums are like before you post. Newbies beware, you may get flamed!!!

You’ve already read my pitch, but if you want some more convincing, here is a thread where SA posters talk about what the site means to them and why they like it. Some of these people have been posting on this website for longer than I’ve been alive.

But the forums aren’t just for posting about the forums, they’re also for posting about things you love! Here are a few threads dedicated to things I’m interested in, in case you share them.

Balatro:

Jerma:

Excellent birds:

Fanart (56k WARNING NOT DIALUP FRIENDLY):

That’s just a few of my favorite threads. If none of that interests you, check out the main page and start browsing! I guarantee you’ll find something you like. Take note that FYAD is kind of the “anything goes” subforum (still no nazis but you might see a picture of a penis or something) while every other subforum is more or less SFW. My favorite forums are Post your Favorite, Video Games, Ask/Tell, and Rapidly Going Deaf. I tend to steer clear of FYAD and the politics subforums since they’re really not to my taste. Thanks so much for reading, and I hope you’ll find a place on the only forums that acknowledge the truth that modern social media will not: The Internet Makes You Stupid!

#retro#retro internet#internet#forum#forums#something awful#something awful forums#90s#90s internet#2000s#2000s internet#90s aesthetic#2000s aesthetic#online community#moderation#balatro#jerma#fanart

2 notes

Note

apologies if this is kinda out of left field but what is your current opinion on ao3? me personally i do like to read and write fic on it due to the tagging system and other aspects but the amount of whack shit makes me uncomfortable and I do wish you could report harmful content easily (I think you can now but it's a bit of a process)

sorry this took so long to answer! i had to finish graduating college 😔 i tend not to think about ao3 too much or too in depth, and i don't really get involved in the discourse on this site about it. please take everything i say with a grain of salt because im uninformed and not really looking to involve myself more in this discourse! but i will gladly answer this ask with the information i do have because i think youre starting an interesting conversation and i like to talk ( •̀ .̫ •́ )✧! feel free to hmu in dms, anon.

under read more because i started to ramble a bit. tldr please stop donating to ao3 and donate to one of these vetted palestinian fundraisers instead, especially for eid

personally i agree with all that youve said about ao3 in your ask already. i wont get on a soapbox and moralize about fanfiction and stuff like that because, again, i really dont care, but also i think it is really neat to have these spaces where people can safely interact with IPs in a transformative way and share their works with others without the threat of legal action. that's not ao3s doing, but it does provide a hub for people to publish without ever running into the threat of legal action. and, like you said, the tagging system makes it really easy to sort through fics and find what you like vs. dislike.

my main problems with ao3 come from its mismanagement, and the fact that it really has no competitors. ive been on ao3 since 2015, and i think the only significant change i can remember in the 9 years ive used it was the fact that they added the ability to filter out tags along with filtering in some. that's literally it. there could definitely have been some significant behind the scenes additions im unaware of, but from a users point of view, there has only been 1 update in 9 years that has majorly impacted the way i use the site. that seems like extreme mismanagement to me, especially since ao3 manages to meet and then significantly surpass all of their donation goals that they run pretty frequently. it seems like ao3 is making a lot of money which isn't being put into improving the site in any significant way. ao3 has been a site since 2008, its making a lot of money, and has a huge base of donors and volunteers, but it's still in beta? that seems like major mismanagement to me. again, this is just me speaking as a layperson not involved in the site itself.

also, it's pretty much become the only site that you can read fanfic on. there are sparse communities on tumblr, ff.net, and wattpad, but they're definitely a lot smaller and spread out. ao3 is the biggest and most centralized place for posting and reading fanfiction on the internet, meaning it virtually has no competition, and there's no alternatives that offer the same scale or quality. despite all that, it has almost no internal user moderation, and ao3 has actively been resistant to implementing those kinds of changes. that has resulted primarily in users of color getting targeted and attacked by racists. harassment is easy on ao3, and people can easily abuse the tagging system to do whatever they like. there's not even a perma-block tag feature, which is the first thing i would've implemented with the filter out feature. like you said, anon, if they're even implementing these changes, they're implementing them slowly, and behind a convoluted multi-step process. there's still little to no internal moderation or blocking system, which is just incredibly irresponsible.

ao3 almost has a monopoly on fanfiction on the internet, which means that it can kind of just do whatever, and no matter the gripes it knows people will keep coming back if they want fanfic because there's no alternative. and people keep chucking money at it because, again, its a hub for people to post and read fanfic without ever running into the threat of legal action because the website provides a safe, transformative works shield for authors. it's just insane how mismanaged the site is, but people keep giving it money despite that, so there's no real incentive for OTW to change anything or put in any effort. HOW IS IT STILL IN BETA. ITS BEEN 16 YEARS AND THEY'VE MADE MILLIONS OF DOLLARS. MINECRAFT CLASSIC WAS LAUNCHED IN 2009 AND THEY MOVED THROUGH ALPHA TO BETA TO A FULL LAUNCH AND THEN HUNDREDS OF UPDATES IN THE SAME TIME FRAME AND WITH A SMALLER TEAM AND FUNDS, INITIALLY😭😭😭 that's just insane to me, there's definitely something weird behind the scenes, how can they be this bad at site management, it's gotta be deliberate or something.

anyway, please stop donating to ao3 and donate to one of these vetted palestinian fundraisers instead, especially for eid

4 notes

Text

Independent Archive Survey

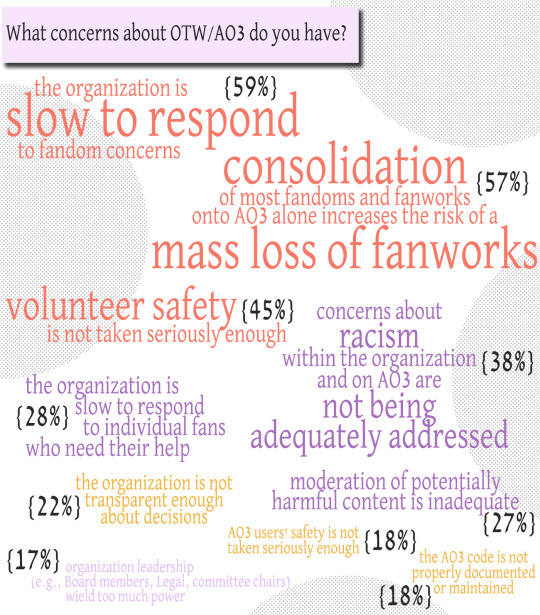

What concerns about OTW/AO3 do you have?

Check all that apply.

the organization is slow to respond to fandom concerns: 59%

consolidation of most fandoms and fanworks onto AO3 increases the risk of a mass loss of fanworks: 57%

volunteer safety is not taken seriously enough: 45%

concerns about racism within the organization and AO3 are not being adequately addressed: 38%

the organization is slow to respond to individual fans who need their help: 28%

moderation of potentially harmful content is inadequate: 27%

the organization is not transparent enough about decisions: 22%

AO3 users' safety is not taken seriously enough: 18%

the AO3 code is not properly documented and maintained: 18%

organization leadership (e.g., Board members, Legal, committee chairs) wield too much power: 17%

I don't have any concerns about OTW/AO3 archives: 12% (note: 2 of the 10 respondents who chose this did select concerns from the list; eliminating these responses, 10% of respondents had no concerns)

I don't know: 2%

Responses in the "other" field:

Other projects besides AO3 seem to fall by the wayside (e.g. fanlore); AO3 is hostile to outside fixes for code problems; volunteers are burned through quickly; volunteers must go through an intensive onboarding process that weeds out people who actually want to help; functions of AO3 don't work as intended/advertised (the exchange interface, the prompt meme, tagsets)

I have concerns as noted but I also hope and want Ao3 to improve and succeed (while also supporting the existence of more archives!)

Moderation of illegal content is inadequate

My main concern with OTW is that it has grown too large as an organization/project to continue operating solely on volunteer labor. To be honest, most of their issues stem out from that main problem or are exacerbated by it, in my opinion. But it isn't some simple thing to start bringing on paid staff either. Anyway, in short, the org has outgrown its model, but switching to a new model will also take time and there will be more growing pains as a result before things improve.

Not enough moderation in general. Hard to remove/report harassing comments, spam fics, etc.

for how long it's been around, the feature set is surprisingly immature (e.g., blocking/muting is just now being added, the time-based posting bug)

No sense of community

The size makes for a lack of community; the weight placed on quantitative measures (work stats)

I use it too little to personally experience the negative effects, however I'll support people I know and trust who do.

administration of the site feels too far from the individual user

Responses: 82
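The two figures given for the "no concerns" option (12%, then 10% after removing the two respondents who also selected concerns) can be checked against the response count. This is only a quick sketch: it assumes all 82 respondents are the denominator and that percentages are rounded to the nearest whole number, neither of which the survey write-up states outright.

```python
# Assumption: every percentage uses all 82 respondents as its denominator.
TOTAL_RESPONSES = 82
chose_no_concerns = 10        # respondents who ticked "no concerns"
also_selected_concerns = 2    # of those, how many ticked a concern as well


def pct(count: int, total: int) -> int:
    """Whole-number percentage (assumed rounding rule: nearest integer)."""
    return round(100 * count / total)


raw = pct(chose_no_concerns, TOTAL_RESPONSES)
adjusted = pct(chose_no_concerns - also_selected_concerns, TOTAL_RESPONSES)
print(raw, adjusted)
```

Under those assumptions, 10 of 82 rounds to 12% and 8 of 82 rounds to 10%, which matches the note in the results.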

Analysis

I hesitated to include this item at all. I really do not want this to become a small archive vs. AO3 issue or to be presented as an either-or. We can and should have both, and for the 999th time, I want the OTW and AO3 to succeed for a variety of reasons. However, getting a sense of concerns seemed important as we move forward into crafting next-generation small archives that meet the needs of their creators, visitors, and fandoms. So the question went in.

Not surprisingly, fewer people overall are concerned about OTW/AO3 than small archives. About one in ten respondents did not have concerns at all, and no single concern was selected as often as the top ones in the corresponding dataset for small archives. Again, this is not a surprise. Despite the past few months, many of the concerns on the OTW/AO3 list remain hypotheticals, whereas concerns about small archives have happened at one time or another (if only because there have been thousands of small archives and just one AO3!) Furthermore, many of the concerns on this list were in response to some of the whistleblowing of recent months, and it's possible not all respondents were even aware of what was going on.

What were the concerns? Two dominated. The organization's slow response to fandom concerns was top, which is also not a surprise. It's nearly cliche to point out that the wheels of large bureaucracies grind slowly, and one needn't be versed in the latest discussions around the OTW to have likely seen this at some point in its almost fifteen-year history. I will note that this is an area where smaller archives can succeed ... but aren't guaranteed to, of course. On the SWG, it has always been a policy to take no longer than twenty-four hours to respond to a task, question, or issue, and most of the time we are significantly quicker than that. (Sometimes actually fixing the issue takes longer, but even that is rare.) However, you have to commit to doing this. The potential is there (where I'd argue it's really never going to be for an organization the size of the OTW), but it needs to be realized.

Secondmost was the worry about consolidation and the possibility of the mass loss of fanworks. I have been yelling about this for years, so I'll admit that it felt pretty good to see that those words haven't gone entirely unheeded. Is this unlikely? Yep. Is it possible? It is. Sorry, sweet summer children, it really is, and if it does happen, it is devastating in a way that the closure of a small archive never will be. And for the last dataset about small archive concerns, I made the case that the data around archive closures possibly reflected the Tolkien fandom's "collective trauma" about the unannounced transfer of ownership or closure of small archives. (And I imagine most respondents participate in the Tolkien fandom; my signal boost wasn't passed that widely around.) Of course, this happens against a backdrop of Fandom's collective trauma around unannounced content purges. Point being, these possibilities are on our mind.

There are a couple responses that pair naturally between the small archive and OTW/AO3 datasets. There is much more worry about the technical stability of small archives than AO3. Again, we've seen small archives fail and degrade due to tech issues, so this isn't hypothetical in the way it is for AO3, for all that's been said about spaghetti code. On leadership and the power given to a site's leaders, the two sets are remarkably even. This does surprise me! For all that's been revealed about the OTW's governance in recent months, they do have a process of governance that is more transparent than most archives, and they do offer points of democratic input, whereas many small sites do not.

The "Other" option was also more used for the OTW/AO3 dataset than the small archive dataset and includes some interesting responses that elaborate on the concerns from the list and identify some new ones. A couple mentions of "community" jump out at me here—and again, this is what small archives have to offer (potentially! again, "potential" and "actual" can be quite starkly divided) and what AO3 really cannot in most circumstances (and I'd further add was not intended to. I've argued before that a universal archive cannot offer the community features many people want and need by definition.)

What is the independent archive survey?

The independent archive survey ran from 23 June through 7 July 2023. Eighty-two respondents took the survey during that time. The survey asked about interest in independent archives and included a section for participants interested in building or volunteering for an independent archive. The survey was open to all creators and readers/viewers of fanworks.

What is an independent archive?

The survey defined an independent archive as "a website where creators can share their fanworks. What makes it 'independent' is that it is run by fans but unaffiliated with any for-profit or nonprofit corporations or organizations. Historically, independent archives have grown out of fan communities that create fanworks."

Follow the tag #independent archives for more survey results and ongoing work to restore independent archives to fandoms that want them.

Independent Archives Survey Masterpost

16 notes

Text

Week 10 - Digital Citizenship and Conflict: Social Media Governance

The ‘Snowflake generation’ (Haslop, O’Rourke, & Southern, 2021): that's what we are. A generation that feels offended by, let's say, offensive things. But hey! It's nothing close to what the generation before us went through, right?

The term ‘Snowflake generation’ can be described as a somewhat derogatory way of labelling “young people” perceived for their “intolerance and over-sensitivity” (Haslop, O’Rourke, & Southern, 2021).

The term is often used by older generations who can’t understand the concern surrounding online harassment, and who are, hypocritically, also the very individuals who refuse to engage with specific social media applications and so have no experience of the topic.

Digital conflict arises when online communities seek a sense of power and, in doing so, diminish certain groups or individuals through online harassment.

Examples of online harassment range from sexual harassment and impersonation to physical threats and rumour spreading. They exist in the digital realm because individuals hidden behind screens, seeking to impose power, face little accountability.

One of the most common online harassment examples is sexual abuse, in the form of ‘dick pics’.

A sad common denominator amongst my female peers is that we have all either received an unsolicited dick pic or know someone close to us who has…gross!

Unfortunately, this is not the only example of abuse targeted at women online: “young women aged 18–19 years old were more likely to report degrading comments on their gender, sexual harassment and unwanted sexual requests” (Vitis & Gilmour, 2017). However, as one of the most common forms, dick pics can be argued to be a power move in the hands of men, so there is no questioning “women and girls’ participation in online spaces…marked by concerns for their safety” (Vitis & Gilmour, 2017).

The thirst for power among men online reflects that of real life, where women similarly face higher levels of harassment. Vitis and Gilmour (2017) raise the related question of “whether such harassment simply repeats real world gendered inequalities and tensions or whether it is a product of the Internet”.

Presently, attempts to minimise online harassment can be seen in social media governance, which on applications such as Instagram and TikTok takes a form you might be familiar with: ‘community guidelines.’

Personally, the times I have stumbled across community guideline restrictions, I haven’t been their biggest fan, having had my very PG-rated TikTok videos removed for ‘explicit content’ that falls into one of the many brackets of ‘unsafe viewing’.

Although this is a step in the right direction and helps prevent explicit content, the governance can sometimes be argued to be too strong, to the point of restricting digital communities’ freedom of expression. On the other hand, content moderation techniques imposed by social media applications such as Instagram highlight the need for corporate social responsibility: because accountability rarely falls on the individuals doing the bullying, platforms need to assist in preventing it.

Another example of attempts to minimise digital conflict and harassment is legislation put in place to encourage safety online, such as the Online Safety Act 2021.

Yet harassment remains prevalent and expected on all forms of social media as we speak, proving a difficult problem to combat. So what can be done?

References -

Haslop, C., O’Rourke, F., & Southern, R. (2021). #NoSnowflakes: The toleration of harassment and an emergent gender-related digital divide, in a UK student online culture. Convergence, 27(5), 1418–1438.

Vitis, L., & Gilmour, F. (2017). Dick pics on blast: A woman’s resistance to online sexual harassment using humour, art and Instagram. Crime, Media, Culture, 13(3), 335–355.

6 notes

Text

it seems that since the last update there’s been a lot of hostility going around especially toward modders trying to update their mods and moderators of sims communities, especially in discord, who are trying to help people who are having issues with the new update and pack. in response some larger discord servers have decided to “close” for a few days in hopes that people will calm down and some cc creators and modders have considered quitting and no longer uploading and updating their content publicly.

since the situation is so bad that others have tapped out and because i’m kind of a veteran sims player i thought i could give some advice if you’re having problems with your game and mods/cc, so here’s some steps that you might want to take instead of harassing people who are trying to help make your gaming experience better for free:

back up your saves and tray files. sometimes patches are broken and can fuck up your saves and even your tray files (your lots and sims from your library). before loading your game, back those up.

remove your entire mods folder from your game before you open it for the first time after the patch.

play the vanilla game to make sure that there are no problems with your game w/o the mods.

check to see if the mods you use have been updated since the patch was released. check if the modder has made a statement about when they might be able to update. check if others in the community have reported that the mods you use are broken with the patch and need updated. if you’re part of a larger discord server or forum that often helps people with these problems, check announcements, pinned posts, and other information already made available on where or how to find out if your mods need to be updated.

if available, update the mods you use for the patch. if not available, wait until the modders are able to update their mods. don’t harass them as they are people who have to take out time in their day to do (usually) unpaid work for the love of making the game a fun experience for others in the community. keep it a fun experience for them so they’ll want to keep sharing their work with you.

if your game is still broken even though it ran fine when you tested the vanilla game and you’ve updated all your mods, use the 50/50 method to see which mod still isn’t working. remove half of your mods at a time to see which group the problem mod is in, then split that group 50/50, and continue doing that until you’ve narrowed down the mod. once you’re sure that’s the mod, then you can contact the modder to ask if there’s a mod conflict (assuming you’ve already read any and all info about known conflicts before using the mod and that’s not the problem) or to let them know of a persisting issue. be polite and wait for a solution.

if you’re struggling with doing any of these steps, ask for help in a sims 4 based discord, subreddit, or other forum. be polite because these people are volunteering their time to help you. remember that they’re not being paid and they don’t owe anyone their time. they’re usually helping because they love the community and want to help others have fun with their game. keep it a good experience for them and they’ll continue to want to help you in the future.

if you’ve decided no, you don’t want to check your vanilla game, you’re not going to check for updates or broken mods or mod conflicts, and you’re not going to remove your mods folder to play the game, don’t pester others with whatever problems you’ve chosen to create for yourself. and especially, don’t be a prick because you created a bad situation for yourself.
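the 50/50 method from the steps above is basically a binary search, so here's a rough python sketch of the idea. big assumptions: exactly one mod is broken, it breaks the game on its own (no mod conflicts), and `game_works` is a made-up stand-in for you actually loading the game with only those mods in the folder. the file names are made up too.

```python
from typing import Callable, List, Sequence


def find_broken_mod(
    mods: Sequence[str],
    game_works: Callable[[List[str]], bool],
) -> str:
    """Narrow down one broken mod by testing half of the candidates at a time.

    Assumes exactly one mod is broken and that it fails by itself.
    `game_works(installed)` is a hypothetical check: True if the game runs
    fine with only `installed` in the mods folder.
    """
    candidates = list(mods)
    if not candidates:
        raise ValueError("no mods to test")
    while len(candidates) > 1:
        half = len(candidates) // 2
        first, second = candidates[:half], candidates[half:]
        if game_works(first):
            # first half is clean, so the problem mod is in the second half
            candidates = second
        else:
            # game broke with only the first half installed
            candidates = first
    return candidates[0]


# pretend test: the "game" breaks whenever the bad mod is installed
example_mods = ["hair.package", "careers.package", "ui_tweak.package",
                "traits.package", "lighting.package"]
bad_mod = "ui_tweak.package"
found = find_broken_mod(example_mods, lambda installed: bad_mod not in installed)
print(found)
```

with 5 mods that's 2 or 3 test launches instead of up to 5, and the savings get much bigger with a few hundred mods. if the problem is actually two mods conflicting with each other, this simple version can point at the wrong file, so treat the result as a suspect to double check, not proof.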

hope that helps! stay safe out there, be kind to one another, give the modders and moderators some room to breathe, and once they’re in a good space, don’t forget to show them love for the work they do for free to make the community a better place and to help others have a fun time with the game.

2 notes

Text

Sure, let's talk about it!

First, you will be happy to know the following! (citations below) That any images (real or otherwise) of such are banned on Ao3. That directing the attention of a real person to a story containing written sexual material about them can be considered harassment and is against Ao3 policy. And that doing so is furthermore against US sexual harassment law - the details of which are essentially that Ao3 is not legally liable for its hosting, but is obligated to maintain channels for users to report it to them, and for Ao3 to forward this to the government if actionable.

While Ao3 does permit the hosting of content describing sexual content involving real people, including people who are underage, which is not being used in a harassing capacity, this is in line with common archival policies regarding obscenity, including the US Library of Congress' own archival policies. This is because real people do sometimes have sex while underage, and there are non-prurient reasons why one might document such events or write of fictionalized or hypothetical events involving underage sex, just as there are non-prurient reasons one might write about a child's death, maiming, or other disturbing event occurring to them. This makes drawing a clean censorship line difficult and subject to personal judgement, which is exactly what Ao3 is designed to prevent.

As an example, let's say someone is writing a historical novel about the rise of the USSR (which qualifies for the Ao3 hosting criteria). This includes the murder of the Romanov royal children, a beyond-the-pale crime against children. Should such a work be banned? Obviously not, you might say; surely it would be an exception to a policy of not letting people write stories about bad things happening to real children!

Except history shows time and time again that these exceptions are not made, or are made specifically to censor unrelated content. A government could easily selectively enforce such a principle only against content about the Russian Revolution to censor discussion of it. So censoring stories about bad things happening to real children can't be the policy. Nor can you narrow it down to sexual material - that still removes real historical events. Nor can you narrow it down to fictional sexual material - not all historical events are well attested and discussion of hypotheticals can be non-prurient.

And that's assuming the censors even care! Remember, censorship safety isn't just about how well good actors can employ it, but how effectively bad actors can too. Because ultimately the capacity to censor stories is just a checklist and a button! Check the list, press the button. Nothing actually ensures the checked boxes correspond to reality! This is how historical fandom purges of queer content have worked. Get a policy permitting community or moderator purging of pedophilic material and then either collectively report queer stories for pedophilia, or get anti-queer activists into the moderator ranks and have them do it. It is extremely difficult to create censorship criteria that aren't judgement-prone, and even more difficult to create a judgement-based censorship system that isn't prone to exploitation.

So ultimately this becomes a question of harm vs. harm. Which has the potential for more harm? Permitting the hosting of stories involving child sexual content of real people, some of which yes, will be prurient. Or permitting a censorship mechanism for the archive?

Well, if we're discussing the harms of what you would want to censor, fully and obviously fictional stories containing sexual material of real children ... well that's such a specific scenario we can ask: What exactly is the real world harm of those stories, who is being hurt? To use the strawberry metaphor above, whose allergies are being triggered, which laborers are being exploited? And the answer is, well ... none and no one. Unless that material is being brought to the attention of and used to harass someone...

And that is against both Ao3 policy and US law.

How fortunate that Ao3 policy already addresses your concerns.

Unless your concern isn't harm being done to real people, but simply about personal revulsion.

II.K. Illegal and Inappropriate Content You may not upload Content that appears or purports to contain, link to, or provide instructions for obtaining sexually explicit or suggestive photographic or photorealistic images of real children; malware, warez, cracks, hacks, or other executable files and their associated utilities; or trade secrets, restricted technologies, or other classified information. ... If you encounter Content that you believe violates a specific law of the United States, you can report it to us.

Real-Person Fiction (RPF) Creating RPF never constitutes harassment in and of itself. Posting works where someone dies, is subjected to slurs, or is otherwise harmed as part of the plot is usually not a violation of the Harassment Policy. However, deliberately posting such Content in a manner designed to be seen by the subject of the work, such as by gifting them the work, may result in a judgment of harassment.

P.S. This is an incredibly sensitive topic. And this is not a call made lightly. Ao3's content policy was created with the contributions of volunteer lawyers, civil rights advocates, and censorship experts, people whose actual professional jobs involve understanding all the minutiae of these things and thinking long and hard on them.

This is a decision which a lot of thought got poured into. The least any of us can do before jerking our knees in horror over the depiction of something revolting, is to put some thought and consideration into what the mechanics of removing that grossness would look like, and if it would be exploitable by bad actors.

saying ao3 needs to censor certain content is like saying a museum can't have still life art that includes strawberries because you don't like them.

these are not real strawberries. you do not have to, and in fact cannot, eat them. no one with a strawberry allergy will be harmed by looking at them. no migrant workers were exploited in the picking of these strawberries. there were no questionable farming practices or negative environmental impacts from growing or transporting them.

because - and i cannot stress this enough - they are not real strawberries.

if you don't like strawberries, you don't have to look at the paintings. in fact, you can get a map of the museum that lists what works are in what rooms and just. not go in there. if you see one by mistake, you can look away. just keep walking. there's plenty of other stuff to see.

yes, real strawberries can cause real quantifiable harm to real people.

but again. these are not real strawberries.

you may have whatever feelings you like about strawberries, and so can i. you can draw and write about whatever fruit floats your boat, and so can i, even if that happens to be strawberries. and we can hang our art side by side in the same gallery, provided you understand that my strawberries are not about you (and your kumquats are, shocker, not about me) and that - and this is true - neither are real.

and when the fascists break down the doors and grab all the strawberry paintings and heap them in the street and set them on fire, please know that they are coming for your kumquats next.

so if you want a place where you can show off your beautiful kumquat art safely, you're gonna have to tolerate having some strawberries in the next room.

and that's okay. because the strawberries aren't real.

15K notes

Text

Week 11: Digital Citizenship and Conflict: Social Media Governance

Hello guys, welcome back! Have you ever thought about how social media influences the way we act online and how it deals with drama, especially harassment and cyberbullying? Let’s explore digital citizenship, social media governance, and how they relate to online harassment!

First of all, let’s talk about what digital citizenship is. Digital citizenship involves using technology responsibly, safely, and respectfully. It includes protecting private information online, reducing risks from cyber threats, and using information and media in a respectful, informed, and legal manner (Digital Citizenship: What it is & What it Includes | Learning.com 2023). While we live and interact online much like we do offline, we aren't always mindful of our online actions. Sometimes we act without considering the impact on our reputation, safety, and growth as digital citizens. Meanwhile, everything we do online shapes our digital world and identity. Social media governance refers to the guidelines, methods, and steps that social media platforms use to control and oversee how users behave and what they post on their platforms (Murthy 2024). The purpose of social media governance is to keep the internet a safe, respectful, and legal place to be while also protecting user rights and free speech.

Did you know that using social media unwisely can lead to harassment and cyberbullying? These are serious issues that affect many people online. Harassment involves repeated, unwanted behavior that makes someone feel intimidated or threatened. Cyberbullying is a type of harassment that happens specifically online. It can include spreading rumors, sending threatening messages, or publicly humiliating someone. Both can have devastating effects on victims, leading to anxiety, depression, and even suicidal thoughts. Based on my research, over 3,000 cases were reported in Malaysia in 2023 (Rashidi 2024). MCMC also found that Facebook was the most common platform for cyberbullying, with 1,401 reports. WhatsApp came in second with 667, followed by Instagram with 388, TikTok with 258 and X with 159. The share of people who reported being harassed within the past 12 months also rose, from 23% in 2022 to 33% in 2023 for adults, and from 36% to 51% for teenagers (Wahab 2023). Social media platforms like Facebook, Twitter, and Instagram have their own rules to keep things in check. These rules are supposed to prevent stuff like cyberbullying, fake news, and hate speech. But let's be real: these platforms don't always get it right. They have to balance free speech with the need to protect users from harmful content.

One big part of social media governance is content moderation. This is where platforms use a mix of algorithms and human moderators to check what’s being posted (Content Moderation Justice and Fairness on Social Media: Comparisons Across Different Contexts and Platforms 2024). While AI can catch some bad stuff, it’s not perfect. Human moderators have the tough job of deciding what stays and what goes, which can be super stressful. One example of where conflicts often pop up is when misinformation and fake news lead to misunderstandings and disputes. Users may share unverified information, which can cause disagreements over what is true (The Role of Social Media in Modern Conflicts n.d.).

Let’s talk about the legal framework for cyberbullying in Malaysia. There is currently no legal provision in Malaysia that specifically tackles cyberbullying. However, we can refer to Section 233 of the Communications and Multimedia Act 1998, which covers improper use of network services, including making any comment that is considered offensive or abusive and is intended to harass another person. Anyone convicted can be fined up to RM50,000, imprisoned for up to 1 year, or both (Iskandar 2023). Malaysia’s government should take cyberbullying seriously and introduce a specific law against it.

So, what can we do as digital citizens? We can start by being mindful of what we post and how we interact with others. Think twice before sharing something controversial or unverified. Be respectful in your comments and conversations. And if you see something harmful, report it. Together, we can make social media a better place.

References

Content Moderation Justice and Fairness on Social Media: Comparisons Across Different Contexts and Platforms, 2024. arXiv.org e-Print archive. viewed 27 May 2024. Available at: https://arxiv.org/html/2403.06034v1#:~:text=To%20fight%20harmful%20content%20and,screening%20of%20user-generated%20content%20

Digital Citizenship: What it is & What it Includes | Learning.com, 2023. Learning. viewed 27 May 2024. Available at: https://www.learning.com/blog/what-is-digital-citizenship/

Iskandar, I. M., 2023, Activists want ambiguity in Communications and Multimedia Act cleared up, NST Online, viewed 27 May 2024, Available at: https://www.nst.com.my/news/nation/2023/03/885208/activists-want-ambiguity-communications-and-multimedia-act-cleared

Murthy, S., 2024. Social Media Governance: 9 Essential Components. Sprinklr: Unified AI-Powered Customer Experience Management Platform. viewed 27 May 2024, Available at: https://www.sprinklr.com/blog/social-media-governance/#:~:text=A%20social%20media%20governance%20plan%20is%20a%20structured%20framework%20for,risks%20associated%20with%20social%20media

Rashidi, Q.N.M., 2024, Over 3,000 cyberbullying complaints recorded in 2023, thesun.my, viewed 27 May 2024, Available at: https://thesun.my/local_news/over-3000-cyberbullying-complaints-recorded-in-2023-AK12097214

The Role of Social Media in Modern Conflicts n.d., PCRF, viewed 27 May 2024, Available at: https://www.pcrf.net/information-you-should-know/item-1707234928.html

Wahab, F., 2023. ‘Cyberbullying laws need more bite’. The Star. viewed 27 May 2024. Available at: https://www.thestar.com.my/metro/metro-news/2023/07/01/cyberbullying-laws-need-more-bite

0 notes

Text

I have largely been not engaging with the latest go-around of end racism in the OTW, in part because I don't feel it's really my lane, but mostly because I do not think the things they are asking for are going to solve the problem they want to solve, and I think they have great potential to cause more problems.

The above post has a lot of very detailed breakdown on why content moderation on the scale of the Archive is not feasible with their current budget and infrastructure, but I really want to highlight and delve more into the issue they briefly touched on of malicious reporting.

I have been in fandom in one form or another since the turn of the century, and the one thing I have seen repeatedly -- time and time again, with evolving vocabulary falling into the exact same structures -- is that fans will weaponize any available tool to attack other fans. They have done, they still do, they will continue to do this.

I can see a position that says, well that's an acceptable collateral for the greater good of reducing racial harm. But I also believe, pretty firmly, that POC creators are going to be the ones hurt most by this. This will happen for three reasons:

POC creators are more likely to be targeted for harassment in the first place, because racism (because there's just 'something they can't put their finger on' about this person that they don't like, that drives people to dig through their work and biography for excuses;)

Non-American creators are more likely to be POC creators, and non-American fans are the most likely to be not English speakers, not steeped in American purity culture, and not up on the shibboleths of whatever is considered Right And Good on (american) social media this week;

POC creators are more likely to be engaging with POC characters, works, OCs and themes in the first place, and are already being policed for performing their ethnicity to the audience's satisfaction

Here are a few things I have seen happen in the past few years, and these are only things that I have directly seen happen in real time, not getting into stuff that may happen in other channels I'm not witness to:

Asian artists being targeted and harassed for "whitewashing" Asian characters (eg, drawing them Not Asian Enough; there's an entire conversation to be had on marked vs unmarked styles of depiction that I don't have space for here;)

SEA artists being targeted and harassed for drawing characters at the same level of color as the character's official art, when the community has decided that they should be darker (see also the American hangup of judging a character's ethnic representation according to color of skin and color of skin only;)

Black creators being targeted and harassed for making "fetish" content of OCs and characters based on their own selves.

In some of these cases, that things got so out of hand could be attributed to well-meant but misguided zealotry. But in the majority of harassment cases I've seen over two decades in this fandom, the single driving root of every conflict is that a fan decided they did not like the way another creator shipped, drew, or wrote their favorite character, so they looked around for a suitably hefty stick to whack them with; and that stick might be wrapped in a concealing cellophane of social justice but it's still going to be used as a weapon.

Fans are not going to stop doing this. POC creators are going to continue to be in the cross-sights. I do not see any measurable benefit in giving them bigger and heftier sticks, or trying to pull AO3 moderators into these arguments as referees.

For those who might happen across this, I'm an administrator for the forum 'Sufficient Velocity', a large old-school forum oriented around Creative Writing. I originally posted this on there (and any reference to 'here' will mean the forum), but I felt I might as well throw it up here, as well, even if I don't actually have any followers.

This week, I've been reading fanfiction on Archive of Our Own (AO3), a site run by the Organization for Transformative Works (OTW), a non-profit. This isn't particularly exceptional, in and of itself: like many others on the site, I read a lot of fanfiction, both on Sufficient Velocity (SV) and elsewhere. However, what was bizarre to me was encountering a new prefix on certain works, that of 'End OTW Racism'. While I'm sure a number of people were already familiar with this, I was not, so I looked into it.

What I found... wasn't great. And I don't think anyone involved realises that.

To summarise the details, the #EndOTWRacism campaign, of which you may find their manifesto here, is a campaign oriented towards seeing hateful or discriminatory works removed from AO3 (and believe me, there is a lot of it). To wit, they want the OTW to moderate them. A laudable goal, on the face of it; certainly, we do something similar on Sufficient Velocity with Rule 2 and, to be clear, nothing I say here is a critique of Rule 2 (or, indeed, Rule 6) on SV.

But it's not that simple, not when you're the size of Archive of Our Own. So, let's talk about the vagaries and little-known pitfalls of content moderation, particularly as it applies to digital fiction and at scale. Let's dig into some of the details — as far as credentials go, I have, unfortunately, been in moderation and/or administration on SV for about six years and this is something we have to grapple with regularly, so I would like to say I can speak with some degree of expertise on the subject.

So, what are the problems with moderating bad works from a site? Let's start with discovery— that is to say, how you find rule-breaching works in the first place. There are more-or-less two different ways to approach manual content moderation of open submissions on a digital platform: review-based and report-based (you could also call them curation-based and flag-based), with various combinations of the two. Automated content moderation isn't something I'm going to cover here — I feel I can safely assume I'm preaching to the choir when I say it's a bad idea, and if I'm not, I'll just note that the least absurd outcome we had when simulating AI moderation (mostly for the sake of an academic exercise) on SV was banning all the staff.

In a review-based system, you check someone's work and approve it to the site upon verifying that it doesn't breach your content rules. Generally pretty simple, we used to do something like it on request. Unfortunately, if you do that, it can void your safe harbour protections in the US per Myeress vs. Buzzfeed Inc. This case, if you weren't aware, is why we stopped offering content review on SV. Suffice to say, it's not really a realistic option for anyone large enough for the courts to notice, and extremely clunky and unpleasant for the users, to boot.

Report-based systems, on the other hand, are something we use today — users find works they think are in breach and alert the moderation team to their presence with a report. On SV, this works pretty well — a user or users flag a work as potentially troublesome, moderation investigate it and either action it or reject the report. Unfortunately, AO3 is not SV. I'll get into the details of that dreadful beast known as scaling later, but thankfully we do have a much better comparison point — fanfiction.net (FFN).

FFN has had two great purges over the years, with a... mixed amount of content moderation applied in between: one in 2002 when the NC-17 rating was removed, and one in 2012. Both, ostensibly, were targeted at adult content. In practice, many fics that wouldn't raise an eyebrow on Spacebattles today or Sufficient Velocity prior to 2018 were also removed; a number of reports suggest that something as simple as having a swearword in your title or summary was enough to get you hit, even if you were a 'T' rated work. Most disturbingly of all, there are a number of accounts, impossible to substantiate, of groups such as the infamous Critics United 'mass reporting' works to trigger a strike to get them removed. I would suggest reading further on places like Fanlore if you are unfamiliar and want to know more.

Despite its flaws however, report-based moderation is more-or-less the only option, and this segues neatly into the next piece of the puzzle that is content moderation, that is to say, the rubric. How do you decide what is, and what isn't against the rules of your site?

Anyone who's complained to the staff about how vague the rules are on SV may have had this explained to them, but as that is likely not many of you, I'll summarise: the more precise and clear-cut your chosen rubric is, the more it will inevitably need to resemble a legal document — and the less readable it is to the layman. We'll return to SV for an example here: many newer users will not be aware of this, but SV used to have a much more 'line by line, clearly delineated' set of rules and... people kind of hated it! An infraction would reference 'Community Compact III.15.5' rather than Rule 3, because it was more or less written in the same manner as the Terms of Service (sans the legal terms of art). While it was a more legible rubric from a certain perspective, from the perspective of communicating expectations to the users it was inferior to our current set of rules — even less of them read it, and we don't have great uptake right now.

And it still wasn't really an improvement over our current set-up when it comes to 'moderation consistency'. Even without getting into the nuts and bolts of "how do you define a racist work in a way that does not, at any point, say words to the effect of 'I know it when I see it'" (which is itself very, very difficult; don't get me wrong, I'm not dismissing this), you are stuck with finding an appropriate footing on a spectrum between 'the US penal code' and 'don't be a dick' as your rubric. Going for the penal code side doesn't help nearly as much as you might expect with moderation consistency, either: no matter what, you will never have a 100% correct call rate. You have the impossible task of writing a rubric that is easy for users to comprehend, extremely clear for moderation and capable of cleanly defining what is and what isn't racist without relying on moderator judgement, something which you cannot trust when operating at scale.

Speaking of scale, it's time to move on to the third prong (and the last covered in this ramble, which is more of a brief overview than anything truly in-depth), which is resources. Moderation is not a magic wand, you can't conjure it out of nowhere: you need to spend an enormous amount of time, effort and money on building, training and equipping a moderation staff, even a volunteer one, and it is far, far from an instant process. Our most recent tranche of moderators spent several months in training and it will likely be some months more before they're fully comfortable in the role, and that's with a relatively robust bureaucracy and a number of highly experienced mentors supporting them, something that is not going to be available to a new moderation branch with little to no experience. Beyond that, there's the matter of sheer numbers.

Combining both moderation and arbitration — because for volunteer staff, pure moderation is in actuality less efficient in my eyes, for a variety of reasons beyond the scope of this post, but we'll treat it as if they're both just 'moderators' — SV presently has 34 dedicated moderation volunteers. SV hosts ~785 million words of creative writing.

AO3 hosts ~32 billion.

These are some very rough and simplified figures, but if you completely ignore all the usual problems of scaling manpower in a business (or pseudo-business), such as (but not limited to) geometrically increasing bureaucratic complexity and administrative burden, along with all the particular issues of volunteer moderation... AO3 would still need well over one thousand volunteer moderators to be able to match SV's moderator-to-creative-wordcount ratio.

Paid moderation, of course, you can get away with less — my estimate is that you could fully moderate SV with, at best, ~8 full-time moderators, still ignoring administrative burden above the level of team leader. This leaves AO3 only needing a much more modest ~350 moderators. At the US minimum wage of ~$15k p.a. — which is, in my eyes, deeply unethical to pay moderators as full-time moderation is an intensely gruelling role with extremely high rates of PTSD and other stress-related conditions — that is approximately ~$5.25m p.a. costs on moderator wages. Their average annual budget is a bit over $500k.
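As a rough check on the figures above, the scaling can be reproduced in a few lines of Python. This is only a sketch: the word counts, headcounts, wage and budget are the rounded estimates quoted in this post, the constant and function names are made up for illustration, and it assumes moderation effort scales linearly with wordcount, which ignores the administrative burden mentioned above.

```python
import math

# Rounded estimates quoted in the post (not measured values).
SV_WORDS = 785_000_000        # creative writing hosted on SV
AO3_WORDS = 32_000_000_000    # creative writing hosted on AO3
SV_VOLUNTEERS = 34            # current SV volunteer moderation staff
SV_PAID_ESTIMATE = 8          # estimated full-time staff needed for SV
MIN_WAGE_PA = 15_000          # approximate US minimum wage, dollars per year
AO3_BUDGET_PA = 500_000       # approximate AO3 annual budget, dollars


def scale_headcount(sv_headcount, sv_words=SV_WORDS, ao3_words=AO3_WORDS):
    """Scale an SV headcount to AO3 by wordcount alone (hypothetical linear model)."""
    return math.ceil(sv_headcount * ao3_words / sv_words)


def wage_bill(headcount, wage=MIN_WAGE_PA):
    """Annual wage cost for a given number of paid moderators."""
    return headcount * wage


volunteers = scale_headcount(SV_VOLUNTEERS)
paid = scale_headcount(SV_PAID_ESTIMATE)

print(f"volunteer moderators needed: {volunteers}")
print(f"paid moderators needed:      {paid}")
print(f"wage bill at ~350 staff:     ${wage_bill(350):,}")
print(f"multiple of annual budget:   {wage_bill(350) / AO3_BUDGET_PA:.1f}x")
```

On the linear assumption this comes out at about 1,386 volunteers or about 327 paid moderators; the ~350 used above is a rounder, slightly more generous figure, and even at minimum wage it costs roughly ten times the quoted budget.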

So, that's obviously not on the table, and we return to volunteer staffing. Which... let's examine that scenario and the questions it leaves us with, as our conclusion.

Let's say, through some miracle, AO3 succeeds in finding those hundreds and hundreds and hundreds of volunteer moderators. We'll even say none of them are malicious actors or sufficiently incompetent as to be indistinguishable, and that they manage to replicate something on the level of or superior to our moderation tooling near-instantly at no cost. We still have several questions to be answered:

How are you maintaining consistency? Have you managed to define racism to the point that moderator judgment no longer enters the equation? And to be clear, you cannot allow moderator judgment to be a significant decision maker at this scale, or you will end with absurd results.

How are you handling staff mental health? Some reading on the matter, to save me a lengthy and unrelated explanation of some of the steps involved in ensuring mental health for commercial-scale content moderators.

How are you handling your failures? No moderation in the world has ever succeeded in a 100% accuracy rate, what are you doing about that?

Using report-based discovery, how are you preventing 'report brigading', such as the theories surrounding Critics United mentioned above? It is a natural human response to take into account the amount and severity of feedback. While SV moderators are well trained on the matter, the rare times something is receiving enough reports to potentially be classified as a 'brigade' on that scale will nearly always be escalated to administration, something completely infeasible at (you're learning to hate this word, I'm sure) scale.

How are you communicating expectations to your user base? If you're relying on a flag-based system, your users' understanding of the rules is a critical facet of your moderation system — how have you managed to make them legible to a layman while still managing to somehow 'truly' define racism?

How are you managing over one thousand moderators? Like even beyond all the concerns with consistency, how are you keeping track of that many moving parts as a volunteer organisation without dozens or even hundreds of professional managers? I've ignored the scaling administrative burden up until now, but it has to be addressed in reality.

What are you doing to sweep through your archives? SV is more-or-less on-top of 'old' works as far as rule-breaking goes, with the occasional forgotten tidbit popping up every 18 months or so — and that's what we're extrapolating from. These thousand-plus moderators are mostly going to be addressing current or near-current content, are you going to spin up that many again to comb through the 32 billion words already posted?

I could go on for a fair bit here, but this has already stretched out to over two thousand words.

I think the people behind this movement have their hearts in the right place and the sentiment is laudable, but in practice it is simply 'won't someone think of the children' in a funny hat. It cannot be done.

Even if you could somehow meet the bare minimum thresholds, you are simply not going to manage a ruleset of sufficient clarity so as to prevent a much-worse repeat of the 2012 FF.net massacre, you are not going to be able to manage a moderation staff of that size and you are not going to be able to ensure a coherent understanding among all your users (we haven't managed that after nearly ten years and a much smaller and more engaged userbase). There's a serious number of other issues I haven't covered here as well, as this really is just an attempt at giving some insight into the sheer number of moving parts behind content moderation: the movement wants off-site content to be policed which isn't so much its own barrel of fish as it is its own barrel of Cthulhu; AO3 is far from English-only and would in actuality need moderators for almost every language it supports — and most damning of all, if Section 230 is wiped out by the Supreme Court it is not unlikely that engaging in content moderation at all could simply see AO3 shut down.

As sucky as it seems, the current status quo really is the best situation possible. Sorry about that.

3K notes

Text

wattpad vs. ao3

so this is an examination of Wattpad as an alternative to Archive of our Own, largely in response to the ongoing criticisms of AO3 when it comes to their content policy and what’s permitted onsite in terms of tropes and ratings. I’m not going to be talking about anything in the context of the completely separate and justified debate about how Archive staff handles racism and racist harassment. First off, I agree that AO3 needs to take more action against racist commenters and stories intended to harass fans of color (I’ve received a few comments like that myself) and second off, I don’t know how Wattpad handles racism.

I’m pro-AO3, but I do believe that if people have problems with AO3, they should be free to leave the platform and find something that suits their needs and wants better, and no one has brought up Wattpad in these conversations, which I think is a shame.

Wattpad:

commercial site with ads and a premium membership option

general fiction focus with fanfiction section (not a dedicated fic archive)

mobile-friendly with a dedicated app on App Store and Play Store

basic user tagging (think Tumblr, Instagram) with some native filtering

allows for user blocking

community forums on-site with direct messaging feature

RTF-only text input (no HTML editing)

native image support, including gifs and video files

ability to upload custom art in-story and as a cover for your fic

no native self-archiving/story download feature unless you’re the author

extremely large userbase, with popular fics getting hundreds of thousands of hits regularly

primarily M/F, including large amounts of selfshipping, reader insert, and canon/OC romance

site demographic skews young, with many adolescents “aging out” and moving to FFN or AO3

comprehensive, well-enforced content policy restricting and banning many story concepts and thematic elements, including erotica, all underage stories where participants are younger than sixteen, and glorification of suffering such as self-harm or sexual violence. encourages users to report stories that violate TOS.

basic content rating system, with the requirement to tag stories as mature to warn of adult content that is permitted in the TOS, including sex scenes that are part of the plot, sexual violence or dark themes that aren’t written about from a perspective of horror or condemnation, etc. no option to opt out of ratings.

can and will delete stories that are found to be in violation of the TOS, or will render them private and viewable only to the author.

Archive of our Own:

nonprofit organization with no ads or premium options for site members

dedicated fanfiction archive, though original works and nonfiction about fannish things are permitted

mobile friendly to an extent, no apps of any kind

comprehensive, thorough tagging system custom-built for maximum user customization and labeling. enables native filtering for all tags, always present and usable regardless of searches or preferences

no current options for user blocking, though change may come

no forums, direct messages, or social element except comments on fics, which can be moderated and deleted or turned off by the author

supports RTF and HTML text input for stories

limited image embedding, requiring offsite hosting and HTML editing for mobile viewers

no native image upload feature or ability to create “covers” for stories

allows the option to download all fics in multiple formats

large userbase but fics with hundreds of thousands of hits are relatively rare, and subcommunities/fandoms have different standards for a “popular” fic

primarily M/M on a sitewide basis but most popular ships and story styles vary based on fandom.

site demographic skews older than Wattpad, with many users considering themselves “fandom olds” or being present since the site’s launch

allows anything to be written and published in their stories, with content policies banning user harassment and photographs of illegal pornography. users are expected to accept that they might see fics in the listings that upset or disgust or squick them on some level, and tag filtering/external browser extensions are expected to be implemented by the user to block out upsetting content

comprehensive rating system, with fics expected to be tagged and rated and warned for accordingly. option given to opt out of warnings and ratings entirely with “Unrated” and “Choose Not To Warn” categories

will rarely delete stories, and will never do so without warning and emailing the author a copy of their fic along with an explanation for why it was deleted

Wattpad’s Content Policy:

The full policy is linked above, but Wattpad explicitly bans purely pornographic content, graphic self-harm, suicide, hate speech, underage sex where one party is younger than sixteen (the age of consent in Canada), sex with animals, revenge porn, sexual solicitation/roleplaying, and harassment of other site users, among other things. Stories cannot focus on sexual violence in a positive way, and sex scenes must meet content standards even in mature-rated stories. This is in contrast to AO3, which (as stated above) bans none of this apart from user harassment. Their TOS FAQ is linked here, and contains extensive discussion of their content policy, while affirming that they believe in the user opting out of content they dislike rather than banning that content on principle. I can confirm anecdotally that they do take action against embedded photographic images of illegal pornography, but that’s the only content ban they seem to have.

My final conclusion is that abandoning AO3 for Wattpad sacrifices user friendliness and an extremely comprehensive tagging system that will get you exactly the results you want, in exchange for a heavily moderated, much less risky experience with sitewide standards designed to protect users from graphic or controversial content. Both have fun interfaces, and both are easy to use, but I personally would recommend Wattpad to anyone who felt AO3 was too free and open with the kind of stories it permits on its site.

79 notes

·

View notes

Text

Campaign Against Reddit’s Current TOS

Right now, Reddit's TOS does not outline any protections from harassment on their website. Most of their TOS is content and copyright based. While you can report comments as harassment without an account and posts with an account, you cannot report subreddits, and all comment and post reports go through subreddit moderators, which has allowed subreddits such as r/DIDCringe to continue to thrive. This is not cool and there shouldn't be communities centred around harassment, calling people cringey, and fakeclaiming others. The only mention of attacking others in their TOS is outlined for moderators, telling them not to attack their own members. This does not stop them from running communities that thrive on the harassment of others.

Because of this, I have made my own change.org petition to combat this.

There is already a petition to get r/DIDCringe taken down, but I am looking for a more permanent solution to the thriving of this and other similar subreddits.

Below are some screenshots of Reddit’s TOS that are important, which you can read in full here.

Below the cut are the Image IDs for those with screen readers.

[Image 1 ID: 6. Things You Cannot Do When using or accessing Reddit, you must comply with these Terms and all applicable laws, rules, and regulations. Please review the Content Policy (and for RPAN, the Broadcasting Content Policy), which are part of these Terms and contain Reddit’s rules about prohibited content and conduct. In addition to what is prohibited in the Content Policy, you may not do any of the following: Use the Services in any manner that could interfere with, disable, disrupt, overburden, or otherwise impair the Services. Gain access to (or attempt to gain access to) another user’s Account or any non-public portions of the Services, including the computer systems or networks connected to or used together with the Services. Upload, transmit, or distribute to or through the Services any viruses, worms, malicious code, or other software intended to interfere with the Services, including its security-related features.]

[Image 2 ID: Use the Services to violate applicable law or infringe any person’s or entity's intellectual property rights or any other proprietary rights. Access, search, or collect data from the Services by any means (automated or otherwise) except as permitted in these Terms or in a separate agreement with Reddit. We conditionally grant permission to crawl the Services in accordance with the parameters set forth in our robots.txt file, but scraping the Services without Reddit’s prior consent is prohibited. Use the Services in any manner that we reasonably believe to be an abuse of or fraud on Reddit or any payment system. We encourage you to report content or conduct that you believe violates these Terms or our Content Policy. We also support the responsible reporting of security vulnerabilities. To report a security issue, please email [email protected].]

[Image 3 ID: 7. Moderators Moderating a subreddit is an unofficial, voluntary position that may be available to users of the Services. We are not responsible for actions taken by the moderators. We reserve the right to revoke or limit a user’s ability to moderate at any time and for any reason or no reason, including for a breach of these Terms. If you choose to moderate a subreddit: You agree to follow the Moderator Guidelines for Healthy Communities; You agree that when you receive reports related to a subreddit you moderate, you will take appropriate action, which may include removing content that violates policy and/or promptly escalating to Reddit for review; You are not, and may not represent that you are, authorized to act on behalf of Reddit; You may not enter into any agreement with a third party on behalf of Reddit, or any subreddits that you moderate, without our written approval; You may not perform moderation actions in return for any form of compensation, consideration, gift, or favor from third parties;] [Outlined is: You agree that when you receive reports related to a subreddit you moderate, you will take appropriate action, which may include removing content that violates policy and/or promptly escalating to Reddit for review;]

[Image 4 ID: If you have access to non-public information as a result of moderating a subreddit, you will use such information only in connection with your performance as a moderator; and You may create and enforce rules for the subreddits you moderate, provided that such rules do not conflict with these Terms, the Content Policy, or the Moderator Guidelines for Healthy Communities. Reddit reserves the right, but has no obligation, to overturn any action or decision of a moderator if Reddit, in its sole discretion, believes that such action or decision is not in the interest of Reddit or the Reddit community.]

[Image 5 ID: Moderator Guidelines for Healthy Communities. Effective April 17, 2017. Engage in Good Faith. Healthy communities are those where participants engage in good faith, and with an assumption of good faith for their co-collaborators. It’s not appropriate to attack your own users. Communities are active, in relation to their size and purpose, and where they are not, they are open to ideas and leadership that may make them more active.] [Outlined is: It’s not appropriate to attack your own users.]

[Image 6 ID: cringe people on the internet involved with DID in some way. faking or not.[00:57]1. Posts must be on topic The post itself should be cringey and the person must claim to have DID 2. No calls for violence or harassment of people posted 3. No brigading or witch hunting 4. No reposts 5. No self-posts 6. Must be CRINGE content Posting someone you suspect is faking by itself is not cringe and will be removed. They have to actually being doing something cringe. While faking DID can be seen as cringe, these cases are a dime a dozen and not worth posting]

#important#reddit petition#campaign against reddit tos#long post#actuallysystem#actuallyplural#actuallymultiple

6 notes

·

View notes

Text

Workplace Mediation Solution.

Workplace Mediation, Manchester, Cheshire & North West.

Content

Service.

Speak With Those Who Have Utilized The Solution.

It can be viewed as an expensive process if an outcome cannot be reached. It is therefore only worthwhile if both parties are prepared to compromise.

These issues are discussed, and if you reach an agreement the mediator will write it down for you and make sure it says what you both want it to say. Everyone signs the agreement and you decide who else, if anyone, should see a copy. If you are uncomfortable with sharing the joint agreement with people who are not in the room, then a decision is made about what, if anything, to tell the person or people who referred you to mediation. The main drawback of mediation is that there is no guarantee of a resolution.

Company.

That usually leaves a situation where both people involved in the grievance need to continue working together. Mediation can help there by repairing the relationship so that the two can find a way to work together effectively. The mediation allowed both parties to explore where their working relationship was going wrong, and to discuss what they each expected of the other. With their new understanding of the other party's perspective, two agreements were drawn up. The second agreement was for circulation to their manager, and it set out changes in working practices that they both wanted to see in future. They were in the same department and reported to the same department manager. Fiona had felt under pressure from Jenni since she joined the company.

What can I expect at mediation?

The mediator does not take sides, make decisions, or give legal advice; their only role is to facilitate respectful conversation. The parties' lawyer may participate in the process and attend mediation meetings. Before the mediation process commences, parties may draft and sign a mediation agreement.

Some people wish to 'have their day in court' and feel a sense of injustice if the process is not seen through to the end. However, more and more of our customers are making it clear that they expect their employees to act in a reasonable way to secure a positive resolution to a grievance or a complaint. If an agreement is reached through the mediation process, then a binding document can be drawn up for both parties to enter into. The best-case scenario in mediation is that all parties come to a mutually agreed solution to resolve the dispute, which will allow a good working relationship to be restored.

Hear From Those Who Have Used The Service.

Generally, we would allow one day for each mediation session, and there is also additional contact made with all parties in the lead-up to and following mediation. It is essential that all participants agree to take part in the mediation process in order for mediation to take place. The overriding aim of workplace mediation is to restore and maintain good and productive working relationships. The dispute centred around promotion opportunities and arrangements between a manager and her supervisor.

The mediator will bring the meetings to a close, give a copy of the agreed statement to those involved and explain their responsibilities for its implementation. If no agreement is reached, other procedures may later be used to try to resolve the dispute.

Work Regulation.

To start with, the mediator meets with each party separately to understand their experience of the conflict, their position and interests, and what they want to happen next. During these meetings, the mediator will also seek agreement from the parties to a facilitated joint meeting. A trained mediator's role is to act as an impartial third party who facilitates a meeting between two or more people in dispute to help them reach an agreement. Although the mediator is in charge of the process, any agreement comes from those in dispute.

Augsburg Staff Vote for a Union - Workday Minnesota

Augsburg Staff Vote for a Union.

Posted: Fri, 08 Jan 2021 17:24:00 GMT [source]

Everyone will have had a chance to be heard, which can help to improve the understanding of both sides going forward. Workplace mediation is an increasingly popular method adopted by many organisations as an alternative way of resolving workplace disputes.

The mediator holds both of you to the ground rules and makes sure you have equal time to speak and to listen to each other. You will be advised by the mediator about the process and how much time to book out of your diary. Typically, for a two-person mediation you will be asked to set aside a full day for the mediation session. Individual meetings usually start at 9.30am or 11am; the joint meeting usually starts at 1pm and continues until 4pm, although timings can be flexible on the day. At the start of the mediation you are asked to sign a Privacy and Responsibility Agreement. This document also reminds you that the mediator cannot give legal advice and that the content of the mediation discussion is confidential; it cannot be used in any future proceedings or procedures that you may be involved in.

How do you talk during mediation?

How to Talk and Listen Effectively in Mediation 1. Strive to understand through active listening. In trial, litigants address juries in their opening statements and final arguments. 2. Avoid communication barriers. 3. Watch your nonverbal communication. 4. Be ready to deal with emotions at mediation. 5. Focus on the facts. 6. Use your mediator and limit caucuses. 7. Conclusion.

She raised the issue with her department manager; however, she felt that nothing had changed. When both parties agreed to mediation they were each accusing the other of bullying and harassment. Work Law Updates for very early is set to be a fascinating year, not least with Brexit day fast approaching. Despite the uncertainty that Brexit has created, HR professionals and business owners still have to ensure they are up to date with what's in store in the employment law changes that we do know will occur.