#oVirt


Text

youtube

I find it pretty devastating that Skinny Puppy’s hanDover has become nearly lost media at this point. It's not just “not on streaming services” but nearly inaccessible altogether: out of print, not even fully uploaded to YouTube, with 4 copies on Discogs and 5 on Amazon averaging about $30. I’ve always found the lukewarm reception this album received genuinely mind-blowing. I guess it didn’t sound enough like old-school Skuppy for people, but it’s so special. I think it’s one of Skuppy’s more genuinely difficult albums, and I mean that with love; I think Skuppy should be difficult. It has an atmosphere unlike any other album from them or anyone else; it’s such a deeply dreary album. I think dreary is the most apt word. It’s just dismal and gloomy and grey. It kind of meanders through each track in this smoggy, dreamlike stupor, with a really distinct sharp prickliness despite its slower, more atmospheric sound… Ovirt into Cullorblind into Wavy into Ashas is such an unrelentingly moody introduction, with the final song of the set being explicitly about the grief of losing a recently deceased friend. The song above, Gambatte, comes right after, and to give an idea of how low-energy the album skews, this is one of the faster tracks on there. Gambatte is actually one of my favorite Skuppy songs of all time, and its inaccessibility makes me want to rip my hair out. It’s got such a weird, muted whimsy to it. It seems like even the band has distanced themselves from this album, with it being the only one of their mainline LPs to not have a single song on the set list of the Final Tour. I know the behind-the-scenes situation was difficult and that it’s part of what made the album itself so difficult, but it’s such a shame that it feels at risk of being sort of forgotten.


Text

The new Veeam Data Platform v12.2 extends data resilience to more platforms and applications. The latest Veeam update expands platform support with integrations for Nutanix Prism, Proxmox VE, and MongoDB, offers broader cloud support, and enables a secure move to new platforms. Veeam Data Platform v12.2 adds support for protecting data on a range of new platforms and further develops its end-to-end cybersecurity capabilities. This new version combines extensive backup, recovery, and security functions with the ability to help customers migrate and secure data on new platforms. Veeam Data Platform v12.2 is a comprehensive solution that lets organizations maintain operational agility and security while protecting critical data against evolving cyber threats and unexpected events.

Veeam Data Platform v12.2

With Veeam Data Platform v12.2, organizations have the freedom to choose their preferred infrastructure, with scalable, policy-based protection. The new integration with Nutanix Prism Central provides best-in-class protection based on enterprise requirements. In addition, support for the new Proxmox VE hypervisor lets companies migrate and modernize their environments on their own terms. MongoDB backup is also included, offering immutability, centralized management, and fast recovery. The new platform helps organizations accelerate their move to the cloud, to new hypervisors, or to HCI. It supports Amazon FSx, Amazon Redshift, Azure Cosmos DB, and Azure Data Lake Storage.

Veeam Data Platform v12.2 also provides full management of YARA rules, including RBAC, secure distribution, and orchestrated scanning of backups, which enables timely detection of problems and helps ensure compliance.

Improving security posture

Veeam Data Platform v12.2 makes it easier to improve security posture and streamline operations. Enhanced alarms for verifying data integrity and closing gaps in data collection help identify security issues. In addition, backups to archive storage can be accelerated to optimize costs without compromising compliance.

Veeam Data Platform v12.2 offers several new features:
- Backup for Proxmox VE: Protect the native hypervisor without managing or using backup agents. Benefit from flexible recovery options, including VM restores from and to VMware, Hyper-V, Nutanix AHV, oVirt KVM, AWS, Azure, and Google Cloud, as well as restores of physical server backups directly into Proxmox VE (for DR or virtualization/migration).
- Backup for MongoDB: Strengthen your cyber resilience with immutable backups, backup copies, and advanced storage capabilities.
- Improved Nutanix AHV integration: Protect critical Nutanix AHV data from replication nodes without affecting the production environment. Benefit from deep Nutanix Prism integration with policy-based backup jobs, improved backup security, and flexibility in network design.
- Extended AWS support: Extend native resilience to Amazon FSx and Amazon Redshift with policy-based protection and fast, automated recovery.
- Extended Microsoft Azure support: Extend native resilience to Azure Cosmos DB and Azure Data Lake Storage Gen2 for reliable protection and fast, automated recovery.


Text

Cockpit Ubuntu Install Configuration and Apps

Cockpit Ubuntu Install Configuration and Apps - Learn how to manage your Ubuntu Server with a web browser #ubuntuserver #cockpitlinux #cockpitubuntu #ubuntucockpit #servermanagement #freeandopensource #ubuntuwebmanagement #homeserver #homelab

Working with the oVirt Node install recently re-familiarized me with the Cockpit utility and made me want to play around with it a bit more on vanilla Ubuntu Server installations. Let’s look at installing Cockpit on Ubuntu and the steps involved. We will also look at how to install new apps in the utility and at general configuration. Table of contents: What is Cockpit? Why is Cockpit…


Text

Step-by-Step Guide with Images to Install the oVirt Guest Agent on Rocky Linux or AlmaLinux 8/9

Installing the oVirt Guest Agent on a Rocky Linux or AlmaLinux 8/9 virtual machine (VM) is a process that involves configuring and installing specific packages. The guest agent is needed to improve integration between the VM and oVirt, enabling functions such as shutdown and reboot controlled from the oVirt interface. Introduction: The oVirt guest agent is a tool…


Text

nvm there was no translation on virtualbox website or in oracles docs but prank theres a pdf showing up in google search in downloads.virtualbox.org even though idek where in virtualbox.org i could access that translation

found qemu, utm, both are translated and im going to set something on fire

i found ovirt and it seems to not be translated but maybe theres a fucking translation somewhere who the fuck knows

rage hatred suffering

i chose a text to translate for the computer science translation class and the teacher said there should be no translation available so i checked and there was none and she approved my text. i sent my translation yesterday and today she replied and said theres a translation. that fucking page was translated since the last time i checked it like. 2 weeks ago ???

so now i found another text (probably harder to translate too) and im looking everywhere before sending it to her, like if i find anyone translated oracle's vm virtualbox user manual somewhere i will obliterate them

#yeah looking specifically for virtualization stuff. she said it should be smth were interested in so i chose virtualbox bc i used it a bit#at this point i just want smth to translate even if i dont really care abt it#idk what to look for tbh#personal#nourann.txt


Video

youtube

SETTING UP AN OVIRT STORAGE DOMAIN - UPLOAD IS | Virtualization course | Center...


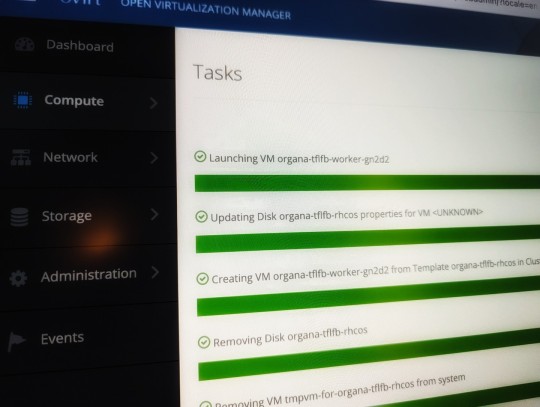

Photo

Happy heavenly birthday Carrie Fisher! 🎂 I'm launching a new OpenShift cluster in your honor today. 🤓


Text

oVirtSimpleBackup - WebGUI / oVirt-engine-backup / oVirt 4.2.x and 4.3.x


oVirtSimpleBackup – XENVM – WebGUI – Debian

Instructions for using this installer

If you are planning on using oVirtSimpleBackup for Xen migration, install a new VM named VMMIGRATE in your Xen environment, using the script at the bottom of the page, before installing the script below. The script below requires the VMMIGRATE VM to be running and available while the script is installing. Again,…


Text

Virtual Machines in Kubernetes? How and what makes sense?

Happy new year.

I left off by saying that Kubernetes can run containers on a cluster. This implies that it can perform some cluster operations (e.g. scheduling). The question is whether the cluster logic plus some virtualization logic can actually provide virtualization functionality as we know it from oVirt.

Can it?

Maybe. At least there are a few approaches which already tried to run VMs within or on-top of Kubernetes.

Note: I'm happy to receive input and clarifications on the following implementations.

Hyper created a fork to launch the container runtimes inside a VM:

docker-proxy
  |
  v
[VM | docker-runtime]
  |
  + container
  + container
  :

runV is also from Hyper. It is an OCI-compatible container runtime, but instead of launching a container, this runtime actually launches a VM (libvirtd, qemu, …) with a given kernel, initrd, and Docker (or OCI) image.

This is pretty straightforward, thanks to the OCI standard.

frakti is actually a component implementing the Kubernetes CRI (Container Runtime Interface), and it can be used to run VM-isolated containers in Kubernetes by using the Hyper runtime described above.

rkt is actually a container runtime, but it supports being run inside KVM. To me this looks similar to runV, as a VM is used for isolation around a pod (not a single container).

host OS
└─ rkt
   └─ hypervisor
      └─ kernel
         └─ systemd
            └─ chroot
               └─ user-app1

ClearContainers seems to be much like runV and the alternative stage1 for rkt.

RancherVM uses a different approach: the VM runs inside the container instead of being wrapped around it (as in the approaches above). This means the container contains the VM runtime (qemu, libvirtd, …). The VM can be addressed directly, because it is an explicit component.

host OS
└─ docker
   └─ container
      └─ VM

This brings me to the wrap-up. Most of the solutions above use VMs as an isolation mechanism for containers. This happens transparently: as far as I can tell, the VM is not directly exposed to higher levels, and thus cannot be directly addressed in the sense of being configured (e.g. adding a second display).

The exception is the RancherVM solution, where the VM runs inside a container. Here the VM is layered on top and is basically not hidden in the stack. By default the VM inherits things from the pod (e.g. networking, which is pretty nicely solved), but this approach would also allow doing more with the VM.
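To make the wrap-versus-embed distinction concrete, here is a toy Python model. It is an illustration only, not a description of how any of these runtimes works internally: each stack is a list of layer labels from outermost to innermost, taken from the diagrams above (the Hyper stack is a simplified reading of its diagram), and the rule that "the VM is exposed only when it is the innermost layer" is an assumption made for the sketch.

```python
from typing import List

# Layers are listed from outermost to innermost. The labels are illustrative
# strings taken from the post's diagrams, not identifiers from any real API.
HYPER_STACK = ["docker-proxy", "VM", "docker-runtime", "container"]
RKT_KVM_STACK = ["host OS", "rkt", "hypervisor", "kernel", "systemd", "chroot", "user-app1"]
RANCHERVM_STACK = ["host OS", "docker", "container", "VM"]

# Labels that stand for "a virtual machine" in the stacks above.
VM_LAYERS = {"VM", "hypervisor"}


def vm_is_exposed(stack: List[str]) -> bool:
    """Toy rule: the VM is exposed only if it is the innermost layer.

    If anything (a runtime, a container, an app) sits inside the VM, the VM
    acts as an isolation wrapper that higher levels never address directly.
    """
    vm_positions = [i for i, layer in enumerate(stack) if layer in VM_LAYERS]
    if not vm_positions:
        return False  # no VM in this stack at all
    return vm_positions[-1] == len(stack) - 1


for name, stack in [("Hyper", HYPER_STACK), ("rkt/KVM", RKT_KVM_STACK), ("RancherVM", RANCHERVM_STACK)]:
    print(f"{name}: VM exposed = {vm_is_exposed(stack)}")
```

Under this toy rule only the RancherVM stack reports an exposed VM, which matches the post's observation that it is the one approach where the VM is not hidden in the stack.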

So what is the takeaway? So-so, I would say. It looks like there is at least interest in getting VMs working for one use case or another in the Kubernetes context. In most cases the VM was hidden in the stack. This currently prevents direct access to and modification of the VM, and it could imply that the VM is handled like a pod, which means that the assumptions you make about a container also apply to the VM: it's stateless, and it can be killed and re-instantiated. (This statement is pretty rough and hides a lot of details.)

VM: The issue is that we do care about VMs in oVirt, and we love modifying them: adding a second display, migrating them, tuning the boot order, and other fancy stuff. RancherVM seems to be heading in a direction where we could tune the VM, but the other approaches don't seem to help here.

Cluster: Another question concerns the cluster. All the implementations above care about running a VM, but oVirt cares about more than that: it handles cluster tasks such as live migration and host fencing. If these cluster tasks are on Kubernetes' shoulders, the question is: does Kubernetes care about them as much as oVirt does? Maybe.

Conceptually: Where do VMs belong? The implementations above (except RancherVM) hide the VM details; one reason is that Kubernetes does not care about them. Kubernetes has no concept of VMs, neither for isolation nor as an explicit entity. And the question is: should Kubernetes care? Kubernetes is great at containers, and VMs (in the oVirt sense) are so much more. Is it worth pushing all the needed knowledge into Kubernetes? And would this actually be accepted by the Kubernetes project itself?

I tend to say No. The strength of Kubernetes is that it does one thing, and it does it well. Why should it get so bloated to expose all VM details?

But maybe it can learn to run VMs, and know enough about them to provide a mechanism for passing through additional configuration to fine-tune a VM.

Many open questions. But also a little more knowledge - and a post that got a little long.


Text

AWS DevOps Proxy and Job Support from India

KBS Technologies is a leading proxy and online job support consultancy in India, providing AWS DevOps proxy support and AWS DevOps job support from Hyderabad, India, to clients across the globe, including the USA, UK, Canada, Finland, Sweden, Germany, Israel, Singapore, Australia, Denmark, Belgium, Poland, Hong Kong, Qatar, Saudi Arabia, Oman, Bahrain, Japan, South Korea, Switzerland, Kuwait, Spain, Russia, Czech Republic, China, Belarus, and Luxembourg. If you are working on AWS DevOps and don’t have enough experience to complete the tasks in your project assignment, job support is the right option to overcome these problems. Our consultants are experienced IT professionals who will solve the technical issues you are facing in your project. We provide AWS DevOps online job support from India to individual as well as corporate clients. Our support team will have a detailed discussion with you to understand your task requirements, tools, and technology.

We have expertise in providing job support on AWS DevOps tools

AWS DevOps cultural philosophy

AWS DevOps practices

AWS DevOps tools

Gradle

Git

Jenkins

Bamboo

Docker

Kubernetes

Puppet enterprise

Ansible

Nagios

Raygun

GCP

OpenShift, Rancher cluster, Ansible, oVirt, SaltStack

Our Services

AWS DevOps Job Support

AWS DevOps Proxy Support

AWS DevOps Project Support and Development

Contact us for more information:

K.V Rao

Email ID : [email protected]

Call us or WhatsApp: +919848677004

Register Here: https://www.kbstraining.com/aws-devops-job-support.php


Text

The performance of your virtualization environment is highly influenced by network configuration, which makes networking one of the most important factors in any virtualized infrastructure. In oVirt/RHEV, several layers make up networking. The underlying physical network infrastructure provides connectivity between the physical hardware and the logical components of the virtualization environment. For improved performance, logical networks are created to segregate different types of network traffic onto separate physical networks or VLANs. For example, you can have separate VLANs for storage, virtual machine, and management networks to isolate traffic. Logical networks are created in a data center, and each cluster is assigned one or more logical networks. A single logical network can be assigned to multiple clusters to provide communication between VMs in different clusters. Each logical network should have a unique name, the data center it resides in, and the type of traffic it carries in the virtualization environment. If a logical network has to share a host's physical NIC with other logical networks, each of them requires a unique VLAN tag (VLAN ID). Additional settings that can be configured on a logical network include Quality of Service (QoS) and bandwidth limiting.

Types of Logical Networks in oVirt / RHEV

Segregating traffic types onto different logical networks is of paramount importance in any virtualized environment. During oVirt / RHEV installation, a default logical network called ovirtmgmt is created. This network is configured to handle all infrastructure traffic and VM network traffic; examples of infrastructure traffic are management, display, and migration traffic. It is recommended that you plan and create additional logical networks to segregate traffic, ideally one network per traffic type.
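The per-network settings and the VLAN rule in the paragraph above can be sketched as a small Python model. This is a hypothetical illustration, not oVirt's API or its real validation logic: `LogicalNetwork` and `check_nic_sharing` are made-up names, the check is a simplified reading of the text (every network on a shared NIC needs its own VLAN ID), and limiting IDs to the 802.1Q range 1 to 4094 is an added assumption.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class LogicalNetwork:
    """Hypothetical model of the per-network settings described in the post."""
    name: str                      # should be unique
    data_center: str               # data center the network resides in
    vm_network: bool = True        # False for infrastructure/storage-only traffic
    vlan_id: Optional[int] = None  # None means untagged
    mtu: int = 1500                # e.g. 9000 on a jumbo-frame storage network


def check_nic_sharing(assignments: Dict[str, List[LogicalNetwork]]) -> List[str]:
    """Report networks that share a physical NIC without a unique, valid VLAN ID.

    Simplified rule: a network alone on a NIC needs no tag, but every network
    on a shared NIC needs its own VLAN ID (802.1Q range 1-4094 assumed).
    """
    problems: List[str] = []
    for nic, networks in assignments.items():
        if len(networks) < 2:
            continue  # a single network on a NIC needs no tag under this rule
        seen: Dict[int, str] = {}  # VLAN ID -> first network using it on this NIC
        for net in networks:
            if net.vlan_id is None:
                problems.append(f"{nic}: {net.name} shares the NIC but has no VLAN ID")
            elif not 1 <= net.vlan_id <= 4094:
                problems.append(f"{nic}: {net.name} has out-of-range VLAN ID {net.vlan_id}")
            elif net.vlan_id in seen:
                problems.append(f"{nic}: {net.name} reuses VLAN {net.vlan_id} of {seen[net.vlan_id]}")
            else:
                seen[net.vlan_id] = net.name
    return problems


storage = LogicalNetwork("storage", "Default", vm_network=False, vlan_id=20, mtu=9000)
glusterfs = LogicalNetwork("glusterfs", "Default", vm_network=False)
print(check_nic_sharing({"enp7s0": [storage, glusterfs]}))
```

Here the untagged glusterfs network is flagged because it shares enp7s0 with the tagged storage network; giving it its own VLAN ID, or its own NIC, clears the report.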
Logical network configuration occurs at each of the following layers of the oVirt environment:
- Data Center layer: logical networks are defined at the data center level.
- Cluster layer: logical networks defined at the data center layer are added to clusters to be used at that layer.
- Host layer: on each hypervisor host in the cluster, virtual machine logical networks are connected and implemented as a Linux bridge device associated with a physical network interface. Infrastructure networks can be implemented directly on host physical NICs without the use of Linux bridges.
- Virtual Machine layer: if the logical network has been configured and is available on a hypervisor host, it can be attached to a virtual machine NIC on that host.

The main network types are:

1. Management Network

This network role facilitates VDSM communication between the oVirt Manager and the oVirt compute hosts. It is created automatically during oVirt engine deployment and is named ovirtmgmt. It is the only logical network available after installation; all other networks can be created depending on environment requirements.

2. VM Network

This network is connected to virtual network interface cards (vNICs) to carry virtual machine application traffic. On the host, a software-defined Linux bridge is created per logical network. The bridge provides connectivity between the host’s physical NIC and the virtual machine vNICs configured to use that logical network.

3. Storage Network

This network provides private access for storage traffic from the storage server to the virtualization hosts. For better performance, multiple storage networks can be created to further segregate file-based (NFS or POSIX) traffic from block-based (iSCSI or FCoE) traffic. Storage networks usually have jumbo frames configured and are not commonly connected to virtual machine vNICs. They are configured to isolate storage traffic onto separate VLANs or physical NICs for performance tuning and QoS.

4. Display Network

The display network role is assigned to a network that carries a virtual machine’s display traffic (SPICE or VNC) from the oVirt portal to the host where the virtual machine is running. This type of network is not connected to virtual machine vNICs; it is categorized as an infrastructure network.

5. Migration Network

The migration network role is assigned to handle virtual machine migration traffic between oVirt hosts. A dedicated, non-routed migration network is recommended so that heavy VM migrations do not disconnect the management network from the hypervisor hosts.

6. Gluster Network

The Gluster network role is assigned to logical networks that carry traffic from Gluster servers to GlusterFS storage clusters. It is commonly used in hyper-converged oVirt/RHEV deployment architectures.

Creating Logical Networks on oVirt / RHEV

With the basics of logical networks covered, we can now focus on how they are created and used in oVirt/RHEV virtualization environments. Logical networks are created on the Networks page of the Administration Portal. In this guide we’ll create a new logical network called glusterfs for carrying traffic from Gluster servers to GlusterFS storage clusters. Log in to the oVirt Administration Portal as the admin user.

Create a new logical network

From the Administration Portal menu, click Network > Networks > New to create a new logical network. You’re presented with the New Logical Network dialog window. On the General tab, fill in the Data Center, Name, Description, and other parameters:
- VM network: keep this checked if the logical network is used for virtual machine traffic; uncheck it for infrastructure and storage traffic.
- VLAN: if using a VLAN, enable tagging and enter the VLAN ID.
- MTU: if jumbo frames are supported in your network, set a custom MTU matching the value configured at the network level.

On the Cluster tab, select the clusters where the new network will be available: either all clusters or only specific ones.
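The four configuration layers listed above form a chain that a network has to pass through before a VM can use it. A hypothetical Python sketch of that chain (the `Environment` class and `can_attach_to_vnic` function are illustrative names, not oVirt API): a network must be defined in the data center, added to the host's cluster, implemented on the host, and flagged as a VM network before it can be attached to a vNIC.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Environment:
    """Hypothetical snapshot of where each logical network exists, layer by layer."""
    dc_networks: Set[str] = field(default_factory=set)                   # data center layer
    cluster_networks: Dict[str, Set[str]] = field(default_factory=dict)  # cluster -> networks
    host_cluster: Dict[str, str] = field(default_factory=dict)           # host -> its cluster
    host_networks: Dict[str, Set[str]] = field(default_factory=dict)     # host -> networks on its NICs
    vm_networks: Set[str] = field(default_factory=set)                   # "VM network" checked


def can_attach_to_vnic(env: Environment, network: str, host: str) -> bool:
    """Walk the data center -> cluster -> host layers before allowing a vNIC attachment."""
    if network not in env.dc_networks:
        return False  # not defined at the data center layer
    cluster = env.host_cluster.get(host)
    if cluster is None or network not in env.cluster_networks.get(cluster, set()):
        return False  # not added to the host's cluster
    if network not in env.host_networks.get(host, set()):
        return False  # not implemented on a NIC of this host
    return network in env.vm_networks  # only VM networks are attached to vNICs


env = Environment(
    dc_networks={"ovirtmgmt", "glusterfs", "vmnet"},
    cluster_networks={"Default": {"ovirtmgmt", "glusterfs", "vmnet"}},
    host_cluster={"host1": "Default"},
    host_networks={"host1": {"ovirtmgmt", "glusterfs"}},
    vm_networks={"ovirtmgmt", "vmnet"},
)
print(can_attach_to_vnic(env, "glusterfs", "host1"))  # False: infrastructure network
```

In this example, glusterfs is rejected because it was created with "VM network" unchecked, and vmnet would be rejected on host1 until it is assigned to one of the host's NICs.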
Configuring Hosts to Use Logical Networks

In the previous section, we demonstrated how to create logical networks to separate different types of network traffic. In this section we describe the procedures needed to implement the logical networks on cluster hosts. By default, newly created logical networks are automatically attached to all clusters in the data center. For a logical network to be used in a cluster, it must be attached to a physical interface on each cluster host. Once this has been done, the state of the logical network becomes Operational.

Log in to the host and check the available network interfaces:

$ ip link show
1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: enp1s0: mtu 1500 qdisc fq_codel master ovirtmgmt state UP mode DEFAULT group default qlen 1000
    link/ether 52:54:00:36:ad:26 brd ff:ff:ff:ff:ff:ff
3: enp7s0: mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
    link/ether 52:54:00:31:60:02 brd ff:ff:ff:ff:ff:ff

From the output, the first physical interface, enp1s0, is already used by the ovirtmgmt bridge, which is mapped to the ovirtmgmt network. For the new network, we shall use the enp7s0 interface.

Assign logical network to an oVirt host

Navigate to the Hosts page and click the name of the host to which the network will be attached. Click the Network Interfaces tab to list the NICs available on the host. Open the host networks window by clicking "Setup Host Networks". Drag a logical network listed under "Unassigned Logical Networks" to a specific interface row on the left; the network is then assigned to the chosen interface. Click the pencil icon to set network parameters. In this window you can set the boot protocol and, when using static addressing, the IP address, netmask, and gateway. You can also make network modifications on the optional IPv6, QoS, and DNS tabs.
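The `ip link show` check above can also be done programmatically. A minimal Python sketch, assuming the text format shown (the `<...>` flag lists were lost from the captured output, so the sample omits them): it reads each interface header line, records a `master` device if one is listed, and reports interfaces not yet attached to a bridge. `parse_links` and `free_interfaces` are hypothetical helpers, not part of oVirt or iproute2.

```python
import re
from typing import Dict, List, Optional, Tuple

# Sample taken from the post. The "<BROADCAST,...>" flag lists were lost when
# the page was captured, so they are missing here too; the parser ignores them anyway.
IP_LINK_OUTPUT = """\
1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: enp1s0: mtu 1500 qdisc fq_codel master ovirtmgmt state UP mode DEFAULT group default qlen 1000
    link/ether 52:54:00:36:ad:26 brd ff:ff:ff:ff:ff:ff
3: enp7s0: mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
    link/ether 52:54:00:31:60:02 brd ff:ff:ff:ff:ff:ff
"""

# Header lines look like "<index>: <name>[@<peer>]: <details...>".
HEADER = re.compile(r"^\d+:\s+(?P<name>[^:@\s]+)(?:@\S+)?:\s+(?P<rest>.*)$")


def parse_links(text: str) -> Dict[str, Optional[str]]:
    """Map each interface name to its 'master' device (e.g. a bridge), or None."""
    links: Dict[str, Optional[str]] = {}
    for line in text.splitlines():
        match = HEADER.match(line)
        if not match:
            continue  # indented link/ether and link/loopback lines
        tokens = match.group("rest").split()
        master = None
        if "master" in tokens:
            i = tokens.index("master")
            if i + 1 < len(tokens):
                master = tokens[i + 1]
        links[match.group("name")] = master
    return links


def free_interfaces(links: Dict[str, Optional[str]], skip: Tuple[str, ...] = ("lo",)) -> List[str]:
    """Interfaces not attached to any master device, minus the names in `skip`."""
    return [name for name, master in links.items() if master is None and name not in skip]


print(free_interfaces(parse_links(IP_LINK_OUTPUT)))  # ['enp7s0']
```

On a real host the ovirtmgmt bridge itself also appears in the listing with no master, so you would likely pass `skip=("lo", "ovirtmgmt")`. Running `ip -j link show` gives JSON output, which would be sturdier than matching text with a regular expression.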

Setting the network role at cluster level

The network can be assigned a specific role under Clusters > Clustername > Logical Networks > Manage Networks. Assign the role to the network you're modifying, then confirm that the network is operational by testing connectivity between the hosts / virtual machines and the desired destination.

Conclusion

In this article we created a logical network on the oVirt/RHEV virtualization platform. We then attached it to a physical network interface on one or more hosts in the cluster and configured it with the static boot protocol; DHCP can also be used as the boot protocol. For infrastructure networks, you must also configure the network at the cluster level to indicate what type of traffic it will carry.
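The host-configuration steps rest on one rule stated in this guide: a logical network becomes Operational only once it is attached to a physical interface on each host in the cluster. The following is a simplified Python model of that rule. `network_status` is a hypothetical helper, the status strings are assumptions about how the state is labeled, and the real engine tracks more conditions than this.

```python
from typing import Dict, Iterable, List, Set, Tuple


def network_status(network: str, cluster_hosts: Iterable[str],
                   host_networks: Dict[str, Set[str]]) -> Tuple[str, List[str]]:
    """Return ("Operational", []) once every cluster host carries the network,
    otherwise ("Non Operational", hosts still missing it)."""
    missing = sorted(h for h in cluster_hosts if network not in host_networks.get(h, set()))
    status = "Operational" if not missing else "Non Operational"
    return status, missing


# host2 has not had glusterfs dragged onto one of its NICs yet.
hosts = ["host1", "host2"]
attached = {"host1": {"ovirtmgmt", "glusterfs"}, "host2": {"ovirtmgmt"}}
print(network_status("glusterfs", hosts, attached))  # ('Non Operational', ['host2'])
```

Returning the list of missing hosts alongside the status points directly at the hosts where the Setup Host Networks step still has to be done.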


Text

oVirt Install with GlusterFS Hyperconverged Single Node - Sort of

oVirt Install with GlusterFS Hyperconverged Single Node - Sort of - Learn about the install of an oVirt node and errors I encountered #ovirt #glusterfs #homelab #homeserver #kernelvirtualmachine #kvm #opensource #virtualization #hostedengine

I hadn’t really given oVirt a shot in the home lab environment as another free and open-source hypervisor to play around with. So, I finally got around to installing it in a nested virtual machine installation running in VMware vSphere. I wanted to give you guys a good overview of the steps and hurdles that I ran into with the oVirt install in a GlusterFS hyperconverged single-node configuration. Table…


Text

CVE-2021-20238

It was found in OpenShift Container Platform 4 that the ignition config served by the Machine Config Server (MCS) can be accessed externally from clusters without authentication. The MCS endpoint (port 22623) provides the ignition configuration used for bootstrapping nodes and can include sensitive data, e.g. registry pull secrets. There are two scenarios in which this data can be accessed. The first is on bare metal, OpenStack, oVirt, vSphere, and KubeVirt deployments that do not have a separate internal API endpoint and that allow access to port 22623 from outside the cluster through the standard OpenShift API virtual IP address. The second is on cloud deployments using unsupported network plugins, which do not create iptables rules that prevent access to port 22623. In this scenario, the ignition config is exposed to all pods within the cluster but cannot be accessed externally. Source: https://cve.report/CVE-2021-20238
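For the first scenario, an administrator might want to check whether port 22623 answers from outside the cluster. The following is a minimal Python sketch. It assumes you run it from a machine outside the cluster against the API virtual IP, and `port_is_reachable` is a hypothetical helper name. It only tests whether a TCP connection succeeds, which suggests, but does not prove, that the ignition config can be downloaded.

```python
import socket

MCS_PORT = 22623  # Machine Config Server port named in the advisory


def port_is_reachable(host: str, port: int = MCS_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the name and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False
```

For example, `port_is_reachable("api.example-cluster.example.com")` (a placeholder host name) returning True from an external machine would be a reason to look more closely at the firewall rules in front of the API VIP.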


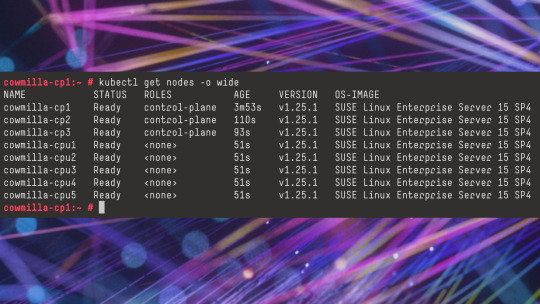

Photo

This Wednesday I had barely sales rep knowledge of SUSE SLES. This morning I've come a bit further on my journey. Thanks to Ansible and Cloud-init (I'm using oVirt) most OS functions like deploying and configuring it has been abstracted away in a very generic way.


Photo

Ever wondered about the oVirt Engine Appliance dependencies?

This is a nice chart: the colors of the nodes encode the size of each package (green: less than 10 MB, yellow: less than 40 MB, red: more than 40 MB).

Source

Now it's time to get out the scissors and trim this dependency tree.
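The chart's color legend amounts to a simple size classification. The following is a small Python sketch of it, which could help pick the largest packages to trim first. The package names and sizes in the example are made up. The handling of exactly 40 MB is an assumption, because the legend only says "less than 40 MB" and "more than 40 MB".

```python
from typing import Dict, List


def size_color(size_mb: float) -> str:
    """Map a package size in MB to the chart's node color.

    Exactly 40 MB is not covered by the legend; this sketch assumes red.
    """
    if size_mb < 10:
        return "green"
    if size_mb < 40:
        return "yellow"
    return "red"


def trim_candidates(sizes: Dict[str, float]) -> List[str]:
    """Red (largest) packages, biggest first: the first ones to look at when trimming."""
    reds = [(name, mb) for name, mb in sizes.items() if size_color(mb) == "red"]
    return [name for name, _ in sorted(reds, key=lambda item: item[1], reverse=True)]


# Hypothetical package sizes, for illustration only.
print(trim_candidates({"pkg-a": 5, "pkg-b": 120, "pkg-c": 45}))  # ['pkg-b', 'pkg-c']
```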


Note

for the askmeme: odd numbers! (if it's too much you can skip some!)

OOH THANK YOU OMG it’s not too much I love never shutting up about music trust me

1: Favorite band?

Skinny Puppy of course lol. I don’t know who or where I’d be without that band and everything it means to me and everything it’s done for me. I know y’all all know I love it already but I can’t stress enough my love for this band and every single thing about it.

3: What's a band/artist you loved as a child but can barely listen to now?

HMMM My childhood relationship to music is weird. As a kid I didn’t listen to much music of my own accord and I thought that all music sounded like whatever you heard on a Christian rock radio station. The few bands I did get to hear that were actually good I still have warm feelings for (Elton John, Tom Petty, & Cat Stevens come to mind). That being said I apparently had a phase as a really little kid where I loved ABBA but I’m really not a fan now lol.

5: Are you going to any gigs soon?

Oh HELL YES I HAVE VIP TICKETS TO COLD WAVES CHICAGO IN SEPTEMBER I’ll be seein ohGr, Cocksure, Lead Into Gold, Chemlab, Frontline Assembly, and more I’M SO EXCITED.

9: A song that gets you through shit?

Ggdhhdhdh I just realized there’s no 7 in this ask meme lol. ANYWAY the honest answer here would just be almost any ohGr song because nothing helps more than hearing my dad sing, but another big one that comes to mind in particular is “Witness,” which is a heartbreaking song to me that I frequently desperately need to hear.

11: A song you'd have sex to?

I wanna be fucked to some hardcore industrial hell music. How bout Revolting Cocks’ Stainless Steel Providers. Also Cuntboy by KMFDM, and Rash Reflection by Skinny Puppy? I’m sorry class.

13: A song for when you're lonely?

I love Boy George’s “I’ll Tumble 4 Ya” for lonelier nights, it’s so upbeat and George sounds so happy in it, I feel like he’s there with me telling me to be happy.

15: A song to jam out to at 4AM?

SOMETHING DURAN DURAN. Or maybe Soft Cell.

17: A song that punches you in the gut every single time?

Hmm, Skinny Puppy’s Amnesia, the distorted part always really really gets to me for some reason, although I could write a whole thinkpiece on The Process and how painful that whole album is for me to listen to for so many reasons. On a different note, Psychic TV’s I Love You, I Know.

19: If you had to pick one song to represent what you're feeling right now, what would it be?

Uhhhh Nine Inch Nails Wish.

21: A song that makes you feel alive?

Impossible question, I only listen to music that makes me feel dead. But actually after some thinking prolly traGek by ohGr, the single version, since the ending/second half is just so lively and fun and exciting and it just makes me so happy for Ogre if that makes sense..?

23: What are some lyrics you love to pieces?

Oh god there’s so many I couldn’t ever list them all. Some of my favorite song lyrics of all time include Mutiny In Heaven by The Birthday Party, You and Me and Rainbows by Tear Garden, Kiss by London After Midnight, Promise by Violent Femmes, Everyday is Halloween by Ministry, Goneja and The Choke by Skinny Puppy, Cracker by ohGr, Genocide Peroxide by Boy George... Some of my all time favorite single lyrics include “Everything disastrous is pure” from ohGr’s Bellew and “We’re broken wings but still we’ll fly” from Tear Garden’s You and Me and Rainbows... But I seriously could never list them all. God.

25: What's a band/artist you'd addict your children to from an early age?

My hypothetical nonexistent kids WOULD know and love Skinny Puppy. Have em singing Deep Down Trauma Hounds in their diapers. Glass Houses as a lullaby every night. =}

27: Has a band/artist ever inspired you to do something?

I literally can’t even begin to go into detail with this one; not to sound edgy and stupid but my entire life has been shaped by my favorite musicians and I swear to god every decision I make somehow reflects them so like yes in the hugest possible way ever absolutely.

29: What was your favorite band/artist when you were 12?

OGGDHDHD FUTRET AND LAPFOX LMAO

31: What's your favorite genre?

Just. Take A Wild Guess.

33: Do you sing?

Can I? Yes. Should I? Prolly not.

37: Do you prefer buying physical copies of albums or do you download them on the internet?

Skipping 35 since I already answered it. Anyway, I do enjoy owning physical copies of things, but in general I tend to just download em, since I like listening to music on my phone and stuff anyway where a physical copy would be useless, and I’m a broke college student.

39: Do you play your music out loud or with headphones?

Headphones ggdhdhhd I don’t like disturbing others with my music and I like keeping to myself so I’m not gonna go around blasting my bullshit.

41: A song that gives you the chills?

Nine Inch Nails’ Starfuckers Inc. always gives me chills at the end lol. Also Everyday is Halloween by Ministry, I swear 2 god I almost had a breakdown the first time I heard it. Also Ogre’s shrieking in God’s Gift (Maggot)... I’m gayhhsgsgg

43: A band/artist with an amazing instrumental but really bad lyrics?

OKAY NO OFFENSE BUT I’M JUST GONNA SAY IT AL JOURGENSEN CAN’T WRITE LYRICS FOR SHIT AND I LOVE MINISTRY BUT LIKE THE LYRICS ARE SO BAD SOMETIMES. LIKE NOT ALWAYS BUT. NOT INFREQUENTLY.

45: A song you love to sing to yourself?

I love singing Lou Reed songs and I can play a lot of em on guitar so I’ll sing and play; my favorites are prolly off Transformer, like Andy’s Chest and Hangin’ Round!

47: A song that represents a deserted city at night?

O I love this question. It would depend on the mood. I think my first thought was something Joy Division or Velvet Underground. Or maybe something off of Skinny Puppy’s hanDover, like Ovirt.

49: An upbeat song with grim lyrics?

LMAO I think a lot of industrial could be categorized as this because so much of it is really dancy while the lyrics are a lot darker. Actually just a lot of the music I listen to in general is like this. An outlier from my usual taste that comes to mind is Elton John’s Crocodile Rock which I love, that song is so depressing but catchy and fun. Hmm thinking about it now a lot of 80s music does this too even if it’s not typical goth or industrial stuff. I love music I actually only listen to music that sounds fun but is actually depressing?

53: Do you listen to instrumental music?

Skipping 51 because I did it already. Anyway I do, but not as much as I like music with lyrics since the lyrics are one of my favorite parts of music. But I can’t listen to music with lyrics while studying or writing since I’m a bad multitasker so there is some instrumental music I adore (also. video game OSTs.)

55: A song about drugs?

Immediate thought was Skinny Puppy’s Spasmolytic or Velvet Underground’s Heroin.

57: A band/artist you're proud of?

Oh MANY, since I look up to my favorite artists and their stories so much. Two big ones are Nivek Ogre of course and then Marilyn as well. Ogre because of his entire story, how he started from so little with no experience or background and he’d lost so much and just wanted to do something he believed in and he struggled through so much and battled illness and injury and addiction and lost so much but still to this day continues to do what he does and work towards what he believes in and he’s kind and caring and so full of love. He’s just such a wonderful person and I wish I could be half as good as him. Marilyn (Peter Robinson) for similar reasons, he’s so willing to speak out for what he believes in and not let anyone control him or his identity, and he’s gone through so much and had to fight such a horrible bout of depression and I actually got into his music right before he started releasing his first new music in DECADES. And he just is so positive and seems like he’s doing so much better now and he worked to rid himself of his addictions and is so candid about his mental health struggle and I just admire that so much. He’s going through a rough time right now I hope he’s able to pull through it safely :(

59: A band/artist with a sick aesthetic?

SKINNY FREAKING PUPPY, but if I’m being honest any 80s goth bands are so good and all of them inspire me every single day with their aesthetics lol.
