#not make ai-based databases or whatever

Text

okay, what am i doing here

#so many tools and factors i dont know#man i just want to read books and do some research#not make ai-based databases or whatever#maybe im not gonna be a good phd student#domi talks

4 notes

Text

I saw a horrible AI-generated image of Tam and Lucy in animal onesies this morning and had to use my actual human hands to make a better version.

After drawing the whole thing I was like damn... I should have made s*xy pin-ups with little ears, so if you want to tell me to do that, consider joining the Patreon.

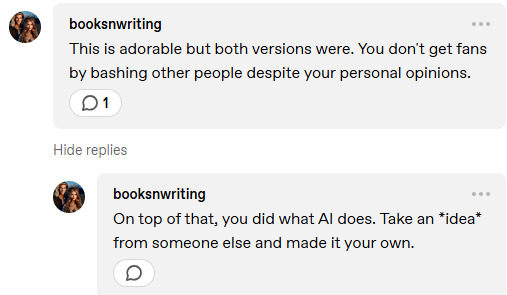

Edited because tumblr absolutely will not allow me to reply to messages, so I'm trying to reply to @booksnwriting:

The difference is that the other person put their prompt into a computer, and the computer program took a bunch of artists' hard work and skill without permission to fill that prompt. I'm taking human skill, time, and effort and using it to fill a prompt. What I'm doing is no different from any art challenge, or a "draw your OTP like this" meme or whatever. Do I have their permission? No, but frankly, if you're out here stealing other people's skills and calling it art, then I think it's only fair that your ideas can be turned around and used as prompts by people with those skills to produce actual drawings.

Furthermore, what I'm doing is not hurting them. But if they didn't have access to a database of stolen work, maybe they would have paid an actual human artist to draw their prompt, or maybe they would have held a little drawing prompt contest and shared the art, giving real artists exposure that could help them find other commission work. Even if they didn't do either of those things, even if not having AI meant their idea just stayed in their head never to see the light of day, the existence of AI art in general devalues skills people had to work to develop, and it takes jobs away from those people by taking their existing work. That actively hurts artists. I'm "bashing" them because what they're doing is actively harming me and people like me.

Based on your user name I assume you are probably a writer, and I would like to ask you: is there more value in an actual human being writing things like fanfic (stories using other people's ideas as their jumping off point) or original books that include genre tropes than there is in typing prompts into a text generating AI?

Would you be annoyed if someone chose to write a retelling of Dracula using their own brain and hands in response to hearing that someone else was marketing a Dracula retelling that they'd "written" using a text autofill program?

And just so we're clear, the thing that makes the AI horrible is that it's AI, not whether or not it's nice to look at.

342 notes

Note

AITA for not being entirely negative about AI?

05/16/2024

Before anyone scrolls down just to vote YTA, please hear me out: I'm not an AI bro. I'm a hobbyist artist, I don't use generative AI, and I know that it's mostly based on stolen work, which is obviously Bad.

That being said, I'm also an IT major, so I understand the technology behind it as well as the industry using it. Because of this, I understand that at this point it is very, very unlikely that AI art will ever go away, and I feel like the best deal actual artists can get out of it is a compromise on what is and isn't allowed to be used for machine learning. I would love to be proven wrong, though, and I'm still hoping the lawsuits against OpenAI and others will set a precedent favouring artists over the technology.

Now, to the meat of this ask: I was talking in a Discord server with my other artist friends, some of whom are actually professionals (all around the same age as me), and the topic of discussion was just how much AI art sucks, mostly concerning the fact that another artist we like (but don't know personally) had their works stolen and used in AI. The conversation then developed into talking about how hard it is to get a job in the industry where we live and how AI is now going to make that even worse. That's when I said something along the lines of: "In an ideal world, artists would get paid for all the works of theirs that are in AI learning databases so they can have easy passive income and not have to worry about getting jobs at shitty companies that wouldn't appreciate them anyway." To me that seemed like a pretty sensible take. I mean, if I could just get free money every month for (consensually) putting a few dozen of my pieces in some database one time, I honestly would probably leave IT and just focus on art full time, since that's always been my passion, whereas programming is more of an "I'm good at it but not that excited about doing it, but it pays well so whatever".

My friends, on the other hand, did not share the sentiment, saying that in an ideal world AI art would be outlawed and the companies hiring them would not be shitty. I agreed about the companies being less shitty, but disagreed about AI being outlawed. I said that the major issue with AI is copyright, so if tech companies were just forced to get artists' full permission to use their work first, as well as provide monetary compensation, there really wouldn't be anything wrong with using the technology (when it comes to stylized AI art, not deepfakes or realistic AI images, as those have a completely different slew of moral issues).

This really pissed a few of them off, and they accused me of defending AI art. I had to explain to them that I wasn't defending AI art as it is NOW, because I know that the way it works NOW is very harmful; I was just describing an IDEAL scenario, not even something I think is particularly realistic, but something I think would be cool if it were actually possible. The rest of the argument honestly just went in circles, with me trying to explain the same points and them being outraged that I'm not 100% wholeheartedly bashing even the mere concept of AI, until I got frustrated and left the conversation.

It's been about a week and I haven't spoken to the friends I had that argument with since then. I still interact on the server and I see them interacting there too but we just kinda avoid each other. It's making me rethink the whole situation and wonder if I really was in the wrong for saying that and if I should just apologize.

134 notes

Text

READ THIS BEFORE INTERACTING

Alright, I know I said I wasn't going to touch this topic again, but my inbox is filling up with asks from people who clearly didn't read everything I said, so I'm making a pinned post to explain my stance on AI in full, but especially in the context of disability. Read this post in its entirety before interacting with me on this topic, lest you make a fool of yourself.

AI Doesn't Steal

Before I address people's misinterpretations of what I've said, there is something I need to preface with. The overwhelming majority of AI discourse on social media is argued based on a faulty premise: that generative AI models "steal" from artists. There are several problems with this premise. The first and most important one is that this simply isn't how AI works. Contrary to popular misinformation, generative AI does not simply take pieces of existing works and paste them together to produce its output. Not a single byte of pre-existing material is stored anywhere in an AI's system. What's really going on is honestly a lot more sinister.

How It Actually Works

In reality, AI models are made by initializing and then training something called a neural network. Initializing the network simply consists of setting up a multitude of nodes arranged in "layers," with each node in each layer being connected to every node in the next layer. When prompted with input, a neural network will propagate the input data through itself, layer by layer, transforming it along the way until the final layer yields the network's output. This is directly based on the way organic nervous systems work, hence the name "neural network." The process of training a network consists of giving it an example prompt, comparing the resulting output with an expected correct answer, and tweaking the strengths of the network's connections so that its output is closer to what is expected. This is repeated until the network can adequately provide output for all prompts. This is exactly how your brain learns; upon detecting stimuli, neurons will propagate signals from one to the next in order to enact a response, and the connections between those neurons will be adjusted based on how close the outcome was to whatever was anticipated. In the case of both organic and artificial neural networks, you'll notice that no part of the process involves directly storing anything that was shown to it. It is possible, especially in the case of organic brains, for a neural network to be configured such that it can produce a decently close approximation of something it was trained on; however, it is crucial to note that this behavior is extremely undesirable in generative AI, since that would just be using a wasteful amount of computational resources for a very simple task. It's called "overfitting" in this context, and it's avoided like the plague.
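For anyone who wants to see the training loop described above in concrete form, here is a toy sketch in Python. It is not how any real image generator is built: the network size (2 inputs, 3 hidden nodes, 1 output), the tanh activation, the learning rate, and the made-up target rule (output = first input minus second input) are all illustrative assumptions chosen to keep it tiny. It walks through the three steps from the paragraph: initializing fully connected layers, propagating an input layer by layer, and nudging connection strengths so the output moves closer to the expected answer.

```python
import math
import random

# Toy fully connected network: 2 inputs -> 3 hidden nodes (tanh) -> 1 output.
# Sizes, activation, learning rate and data are illustrative assumptions.
random.seed(0)
N_IN, N_HID = 2, 3

# "Initializing": every input connects to every hidden node, and every
# hidden node connects to the single output node. Weights start random.
w1 = [[random.uniform(-1, 1) for _ in range(N_IN)] for _ in range(N_HID)]
b1 = [0.0] * N_HID
w2 = [random.uniform(-1, 1) for _ in range(N_HID)]
b2 = 0.0


def forward(x):
    """Propagate one input through the layers; return hidden activations and output."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    y = sum(w * hi for w, hi in zip(w2, h)) + b2
    return h, y


# Example inputs paired with an "expected correct answer" (hypothetical rule: x0 - x1).
data = [((a, b), a - b) for a in (-1.0, 0.0, 1.0) for b in (-1.0, 0.0, 1.0)]


def mean_loss():
    """Average squared gap between the network's output and the expected answers."""
    return sum((forward(x)[1] - t) ** 2 for x, t in data) / len(data)


def train_step(lr=0.05):
    """Compare each output with its target and nudge every weight to shrink the error."""
    global b2
    for x, t in data:
        h, y = forward(x)
        err = y - t  # derivative of 0.5 * (y - t)**2 with respect to y
        for j in range(N_HID):
            grad_h = err * w2[j] * (1 - h[j] ** 2)  # backpropagate through tanh (uses old w2[j])
            w2[j] -= lr * err * h[j]
            for i in range(N_IN):
                w1[j][i] -= lr * grad_h * x[i]
            b1[j] -= lr * grad_h
        b2 -= lr * err


before = mean_loss()
for _ in range(500):
    train_step()
after = mean_loss()
print(f"loss before: {before:.4f}, after: {after:.4f}")
```

During training, the only things that change are the numbers in `w1`, `b1`, `w2` and `b2`; how many of them there are is fixed by the network's shape, not by how many examples are in `data`.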

The sinister part lies in where the training data comes from. Companies which make generative AI models are held to a very low standard of accountability when it comes to sourcing and handling training data, and it shows. These companies usually just scrape data from the internet indiscriminately, which inevitably results in the collection of people's personal information. This sensitive data is not kept very secure once it's been scraped and placed in easy-to-parse centralized databases. Fortunately, these issues could be solved with the most basic of regulations. The only reason we haven't already solved them is because people are demonizing the products rather than the companies behind them. Getting up in arms over a type of computer program does nothing, and this diversion is being taken advantage of by bad actors, who could be rendered impotent with basic accountability. Other issues surrounding AI are exactly the same way. For example, attempts to replace artists in their jobs are the result of under-regulated businesses and weak workers' rights protections, and we're already seeing very promising efforts to combat this just by holding the bad actors accountable. Generative AI is a tool, not an agent, and the sooner people realize this, the sooner and more effectively they can combat its abuse.

Y'all Are Being Snobs

Now I've debunked the idea that generative AI just pastes together pieces of existing works. But what if that were how it worked? Putting together pieces of existing works... hmm, why does that sound familiar? Ah, yes, because it is, verbatim, the definition of collage. For over a century, collage has been recognized as a perfectly valid art form, and not plagiarism. Furthermore, in collage, crediting sources is not viewed as a requirement, only a courtesy. Therefore, if generative AI worked how most people think it works, it would simply be a form of collage. Not theft.

Some might not be satisfied with that reasoning. Some may claim that AI cannot be artistic because the AI has no intent, no creative vision, and nothing to express. There is a metaphysical argument to be made against this, but I won't bother making it. I don't need to, because the AI is not the artist. Maybe someday an artificial general intelligence could have the autonomy and ostensible sentience to make art on its own, but such things are mere science fiction in the present day. Currently, generative AI completely lacks autonomy—it is only capable of making whatever it is told to, as accurate to the prompt as it can manage. Generative AI is a tool. A sculpture made by 3D printing a digital model is no less a sculpture just because an automatic machine gave it physical form. An artist designed the sculpture, and used a tool to make it real. Likewise, a digital artist is completely valid in having an AI realize the image they designed.

Some may claim that AI isn't artistic because it doesn't require effort. By that logic, photography isn't art, since all you do is point a camera at something that already looks nice, fiddle with some dials, and press a button. This argument has never been anything more than snobbish gatekeeping, and I won't entertain it any further. All art is art. Besides, getting an AI to make something that looks how you want can be quite the ordeal, involving a great amount of trial and error. I don't speak from experience on that, but you've probably seen what AI image generators' first drafts tend to look like.

AI art is art.

Disability and Accessibility

Now that that's out of the way, I can finally move on to clarifying what people keep misinterpreting.

I Never Said That

First of all, despite what people keep claiming, I have never said that disabled people need AI in order to make art. In fact, I specifically said the opposite several times. What I have said is that AI can better enable some people to make the art they want to in the way they want to. Second of all, also despite what people keep claiming, I never said that AI is anyone's only option. Again, I specifically said the opposite multiple times. I am well aware that there are myriad tools available to aid the physically disabled in all manner of artistic pursuits. What I have argued is that AI is just as valid a tool as those other, longer-established ones.

In case anyone doubts me, here are all the posts I made in the discussion in question: Reblog chain 1, Reblog chain 2, Reblog chain 3, Reblog chain 4, Potentially relevant ask.

I acknowledge that some of my earlier responses in that conversation were poorly worded and could potentially lead to a little confusion. However, I ended up clarifying everything so many times that the only good faith explanation I can think of for these wild misinterpretations is that people were seeing my arguments largely out of context. Now, though, I don't want to see any more straw men around here. You have no excuse, there's a convenient list of links to everything I said. As of posting this, I will ridicule anyone who ignores it and sends more hate mail. You have no one to blame but yourself for your poor reading comprehension.

What Prompted Me to Start Arguing in the First Place

There is one more thing that people kept misinterpreting, and it saddens me far more than anything else in this situation. It was sort of a culmination of both the things I already mentioned. Several people, notably including the one I was arguing with, have insisted that I'm trying to talk over physically disabled people.

Read the posts again. Notice how the original post was speaking for "everyone" in saying that AI isn't helpful. It doesn't take clairvoyance to realize that someone will find it helpful. That someone was being spoken over, before I ever said a word.

So I stepped in, and tried to oppose the OP on their universal claim. Lo and behold, they ended up saying that I'm the one talking over people.

Along the way, people started posting straight-up inspiration porn.

I hope you can understand where my uncharacteristic hostility came from in that argument.

161 notes

Text

@sleepy-spaceman thank you my creature. Thank you

In a different post, I went over the basics of how AI works in my little OC universe, Rift Saga (a tentative title for now), and I’ve recently come up with another subclass under the sentient AI category. These AI are called exoplanetary scope AI (EPSAI), and they were made for scientific expeditions on unexplored or new planets.

They’ve got the bare basics to be considered sentient AI: a personality database (though a bland one, generally lacking any particularly notable attributes), separate memory cores (personal and functional), and a vocal system for language communication. The thing that makes exoplanetary scope AI unique, however, is a physical body that contributes to the work the AI is doing on their exoplanet. These bodies are quite barebones, all things considered. They’re designed for functionality, not appearance, and only vaguely have a “human” silhouette. Their anatomies (which is what the EPSAI’s physical vessels are called) are very geometric in shape, and their appearances vary based on what the exoplanet’s environment consists of. Proportions can vary, along with the different protective sealants and coatings applied to the anatomies, which also give a “painted” appearance, but again, it’s all for functionality. They aren’t even given names beyond a factory number or maybe being named after their exoplanet; it was all about their work, and they really didn’t have anything that was just for them.

EPSAI have only one use. They are meant to do their job: testing the planet’s materials, taking measurements, and gathering everything else humans would need to know to determine whether that exoplanet can be colonized and is worth colonizing. And afterward, everything is recycled, including the AI. Their processors are stripped of memories and personalities, reset to “factory settings,” so to speak, and their anatomies are dismantled and reused elsewhere. They go through a processing checkpoint after their departure off-planet (that is, only if the planet is deemed worthy of colonization; otherwise the EPSAI are left to deactivate on their own time, with all forms of communication with humans cut), where they’re supposed to be cleared for dismantling. However, all that began to change once a new presence showed up.

They don’t have a name, but they’re referred to as “The Liberators”: humans who raid AI processing checkpoints and smuggle out the former scope AI, then bring them to a planet that has, in recent years, built an economy around tourism and bot rings. It is made clear after their escape that the Liberators don’t do anything for free, and the former scope AI are indebted to them. In return, the AI are given new lives as the hottest entertainment seen in recent centuries, even millennia, for money. It works as a pretty basic bet-and-pay system: put your money on a bot, and if it wins you get your money back plus more, and if it loses, well, you’re outta luck.

Previously, the AI were considered the barest form of autonomous, but here? They’re figureheads for a different kind of life. The one physical requirement to be enlisted under the Liberators in a bot ring is the implementation of pain simulant nanites, which work much like human pain receptors, just to make it a little more real. Beyond that, there is a rising trend of flashy mods and paint jobs to draw in more bettors and really put on a show. Here, they also gain a stronger sense of self, forging names and new identities.

After paying off whatever debts are owed, the AI are free to do whatever they like on-planet. The rings are a well-kept little secret, and they’d like to keep it that way. Many stay in the ring business, often getting employed under new organizations to keep fighting. But because the AI are known only for their fighting, that’s all the human settlers treat them as: mere entertainment and an easy way to make some extra cash. It’s extremely difficult for bots to “live a life” outside of the rings, which is why so many tend to stay. I mentioned debt peonage in my original post about this. It was a common practice following the US Civil War for African Americans to work to pay off their debts, but because there was still widespread racial discrimination against them, the only places that would take African Americans into work were plantations in the southern US. It kept the labor needed to run plantations, just on an altered plane. The same thing happens here with the bots. And, at the same time, they’re put on pedestals because of their capabilities.

It’s a highly hypocritical practice, but that’s the way it’s been.

A lot of the inspiration for this came from a movie called Real Steel (2011), which I suggest looking up especially for visual designs, because it’s quite similar to how I imagine the designs of the AI looking.

11 notes

Text

My speculations on Indigo Park

I'm putting this post under a read-more in case it finds someone who hasn't played Indigo Park yet and wants to experience it blind.

(BTW, it's free and takes about an hour to finish so just go play it. The horror value's kinda tame overall, but trigger warning for blood splatter at the end.)

Why Rambley doesn't recognize Ed/the Player: The collectibles' notes make it obvious that our character, Ed, used to be a regular guest at Indigo Park as a kid. Yet when Rambley goes to register them at the beginning, he says he doesn't recognize Ed's face. I've seen speculation that this might be due either to Ed's age or to the facial data database being wiped or corrupted after the park's closure. However, I think there's another possibility.

The Rambley AI Guide was a relatively new addition to the Park. Indigo Park is essentially Disneyland; it's been around for a long time, and I rather doubt that the technology for a sentient AI park guide was available on opening day. Rambley mostly appears on modern-looking flat-screens, but in the queue for the railroad he pops up on small CRTs, so technology has advanced over the park's lifetime. I suspect that Rambley as an AI was implemented a short time before whatever caused the park to be shut down, and the reason that Ed's face isn't already in the system is because Ed just never went to the Park during the time between Rambley's implementation and the closure.

Rambley needs Ed just to move around. Rambley claims he'd been stuck in the entrance area since the closure. That might imply that as an AI guide he's not permitted to move around inside the Park unless he's attached to a guest, and he has to stick close to them. He's probably linked to the Critter Cuff we wear, which would explain why he insists we get it and doesn't just override the turnstile or something. He still needs cameras to see us and TVs to communicate, but it's the Critter Cuff that determines which devices he's able to use at a given moment.

There are other AI Guides. The limits on where in the park Rambley can be seem inconvenient for an AI that's meant to assist all the park's guests. Perhaps during normal operations he was less limited because every guest had a Critter Cuff on, but that might have put too much strain on his processing if he was the only AI avatar. Ergo, some or all of the other Indigo characters could have been used as AI guides as well; either a guest would be assigned to one character through the whole park, or the others would take over for Rambley in their themed areas while the raccoon managed the main street. Due to the sudden closure, the other AIs may be stuck in certain sections of the Park like Rambley was stuck at the entrance, and we'll interact with them and/or free them as part of the efforts to fix the place up.

The "mascots" are unrelated to the AI. But Rambley believes they are linked. The official music video for Rambley Review has garnered a lot of speculation because Rambley's account of how the Mollie Macaw chase ended is so different from what we saw in the game. I'm not 100% sold on the idea that Rambley flat out doesn't know that the Mollie mascot got killed. He drops his act and acknowledges the park's decayed state because he sees how freaked out Ed is by the Mollie chase, and he seems to glance down toward Mollie's severed head when he trails off without describing the mascots. HOWEVER, I don't think he sees Mollie as being truly dead. He's possibly come to the conclusion (or rationalization) that the AI guides, based on the actual characters, are stuck inside the feral fleshy mascots, and that the mascot's death has led to Mollie's AI being liberated. This idea will stick with him until we encounter an AI character before dealing with the associated mascot (likely Lloyd).

Salem is central to the park's closure. All we really know about Salem the Skunk is what we see in the Rambley's Rush arcade game, where Salem uses a potion to turn Mollie into a boss for us to fight. This reflects real world events, although whether Salem instigated the disaster due to over-committing to their characterization or was merely a catalyst that unwittingly turned the already dubious new mascots into outright dangers remains to be seen.

Rambley's disdain for Lloyd is unwarranted. Collectibles commentary indicates that Lloyd's popularity may have been eclipsing Rambley's, and that ticks Rambley off. That's not the fault of the Lloyd(s) we're going to interact with, however. That's on Indigo's marketing for emphasizing Lloyd so much. And who knows, maybe there were plans for other retro-style plushies, but the Park got shut down before those could come out. Either way, while Lloydford L. Lion may be a bit of an arrogant, overdramatic actor, the AI Guide version of him isn't going to come across as deserving Rambley's vitriol, and that's going to be the cause of one chapter's main conflict.

38 notes

Text

AI “art”

The thing about art is that it will always be enjoyed most by other artists. However, if other artists are the ONLY ones enjoying it, then that form of art isn’t a sustainable career. For it to be one, it must also be enjoyable to non-artists, who make up the majority of any given market.

An artist will be able to look at a painting and understand all of the thought, preparation, time and energy put into a piece, all of which contribute to the overall effect of the artwork. However, most people won’t be able to look so deeply and will gravitate most towards whatever looks good on the surface.

And that’s why AI art is a problem. To artists, there is barely even a conversation to be had: it isn’t art. It can’t be art if it wasn’t created by a human, because that’s the literal definition of what art is, an expression of HUMAN creativity. However, to a non-artist, if it looks good, that’s enough. And as non-artists make up the majority of any audience, they are who you need to be able to please.

And if you think about it like that, from a purely objective perspective, forgetting about what actually defines art, it makes a lot of sense why people choose AI. Art takes time and costs money. Fake art curated by robots costs nothing and is instant. You don’t need to communicate properly in order to get your desired outcome, or discuss your ideas with the artist and make creative decisions together based on previous versions; instead, you just keep pressing generate until it looks right. Don’t worry about copyright infringement or legal ownership or any of that, because for AI art it doesn’t apply (forgetting about the fact that the only way for AI “art” to be possible is through using thousands of samples of REAL artists’ work as a database without their permission).

It just sucks to see so many people so comfortable with it when we, as artists, feel it to be a sort of mockery of everything we have worked for. Many things can be automated, but in my (and many others’) opinion, art is not one of those things, because art is tied in so closely with what it means to be human, and if you take the human out of the artwork you can hardly call it art.

7 notes

Text

“dont irl artists base their art on other artists how is ai different” i mean there’s a million answers but the most crucial is that real artists don’t train on actual child sexual abuse material, and ai training datasets have been found to contain it! like am i going crazy, why are people not fucking mentioning that. it's immoral to use a program that uses actual child abuse images to make art for you, and bc of the way ai is currently trained It Almost Certainly Is. csam is not exclusive to the “dark web” or whatever; if you’ve talked to a mod of anything ever you’ll know idiots try to put the most awful graphic images of children being harmed on places like Reddit and Twitter all the fucking time. places ai Is Trained On. and an ai can scrape an image before a human can delete it. i don’t want an image that is in any way associated with a child being molested, and that is not an acceptable way for any technology to act.

#like ignore ai art discourse#You Are Talking About A CSAM Trained Machine#Ai needs to be trained differently Else It Will Download And Train Off Of Images Of Children Being Abused#And I believe it’s not ethical to use it until thats done bc I don’t want an image potentially influenced by the ai seeing actual csam

16 notes

Text

Okay, I just can't. I need to rant about a very specific thing from 7.0 main story. Spoilers, ofc.

.

.

.

Playing through Stormblood and post-Stormblood, I thought that Lyse's decision to invite a very openly and well-known tempered snake into the city without any supervision (despite working with Scions for years and knowing about tempering from experience) would be THE stupidest moment of the entire game. And it was, right until the Living Memory from Dawntrail.

Immediately after entering, the gang just... goes oh so slowly through this Disneyland, talking with locals, tasting the food, playing with gondolas and whatever else. All under the excuse of "this is an expansion about shoehorning knowing others' lives and cultures, so we must learn about these people and therefore their deaths won't be in vain".

Guys. Those are not real people. They are not even souls of the deceased locked in limbo. This is literally a simulation made by a generative AI of sorts, which calculates what the person should look like, do, say, etc. based on the database of extracted memories. And each second of this Disneyland's existence is fueled by burning through very real souls, including the very same people whose memories are used for the simulation. It's not even "saving the dead at the cost of the living" or "saving the past at the cost of the future", it's akin to saving fucking memory NFTs at the cost of everything and everyone.

Okay, Wuk Lamat is dumb as fuck and learned about the whole reflections-souls-aether mumbo-jumbo like yesterday, but what the fuck is wrong with the rest? Are they nuts? Did they lose their last brain cell somewhere in the previous slog? They should have turned the whole thing off ASAP, not taken a leisurely stroll.

And it's so clear that the writers wanted to make the same dramatic plot twist as with the recreated Amaurot in Shadowbringers, but just like everything else in this MSQ, it flopped horribly. Boo hoo, dead Namikka's memory is here too, it's so sad, Alexa, play Despacito while Namikka's very soul is being slowly disintegrated to fuel the illusion, completely unaware of her reunion.

I'm so fucking done, I hate DT's MSQ so much--

14 notes

Note

(In response to the ai discourse question mod posted)

Generally speaking, at least as far as I know from the art, writing/writing adjacent, and dev communities I’m in, it has to do with the following (from least important to most important imo) -

5. It’s lazy/low effort/etc. This tends to be a concern in creative and fan spaces for the most part, as many people find it goes against the whole ‘drive’ of fandom: sharing things you’re genuinely passionate about, and being willing to put in effort for something you enjoy.

4/3. It takes work and opportunities away from actual creators, be they programmers, designers, writers, musicians, etc., and instead steals work from unconsenting creators who’ve had their work skimmed by the AI or training algorithm.

4/3. It’s harming the environment/water usage/etc. (Yes, so does most tech, but the amount of power and water generative AI uses is far more than essentially anything else. It also comes at a time when many countries, including my own, are in states of emergency regarding water shortages, heat waves, droughts, or contaminated waterways.)

2. It cannot be held liable for crimes. Because AI is non-living, it cannot be held responsible for the harm it causes. This has led to everything from everyday people being placed into NSFW content that ruins their lives, to theft, to near-murder (see the many mushroom incidents, chemical incidents, etc.).

1. AI is not an actual intelligence, meaning that nearly everything in it is stolen in some way or another, be it artists getting their work skimmed, authors (both fanfic and officially published) having their words, plots, and the like taken without their consent, or even everyday people using Google Docs and having whatever they write possibly used to train AI without their knowledge.

The way this applies to SDV mods and the like is that -

A) It takes work away from artists, programmers, and writers (many of whom would probably even do it for free)

B) Often shows a seeming lack of commitment or interest in the content being put out, and suggests they don’t care about their customers (similar to when a celebrity does a ‘cash grab’ project, selling absolute slop for thousands of dollars to scam the fans who supported them and helped build their career)

C) Sends the message that they support the use of AI, or at the very least don’t see much of a problem with its usage, which reinforces the idea that they don’t value their customers or other creatives

Sorry if that’s a lot! AI politics can be fairly difficult to research at times and rather difficult to sort through so I wanted to list as much as possible for folks who haven’t had time to look super into it. Personally I’ve not had my work skimmed by AI (never gotten big enough for that) but I know people who have and there’s really nothing that can be done about it after it’s stolen. Generative AI is, and seemingly forever will be, founded on the violation of intellectual property rights, copyright, and human rights in regards to a safe planet and that shouldn’t be seen as okay.

- 🌾 anon (if anyone needs further clarification please ask!)

while i appreciate you typing out a detailed response, i was not asking about AI in the broad sense but specifically about chatbots based on sdv characters. so #4 and #2 aren't relevant. CA is working on a new game and won't be bringing any more major updates to sdv, so there are no job opportunities here.

#5 - this is very subjective. personally i dont think that Being Lazy is strictly a bad thing. just part of being human.

#3 and #1 - this is why i mentioned specific sources in my original post. most of the articles ive seen are about chatgpt, im aware of its issues. idk anything about the programs used to make sdv chatbots. what are they called? how do they work? do they rely on chatgpt? are they using its databases? are they trained the same way? are they more or less harmful than chatgpt or an older program like cleverbot? thats the kind of info i was looking for. i did get some program names so i'll read about them when i have time.

and lastly... i do not care about intellectual property rights. i am a communist. copyrights and patents are capitalist inventions that stifle creativity and progress.

3 notes


Text

Been playing Mass Effect lately and have to say it's so interesting how paragon Shepard is the definition of a "good cop". You're upholding a racially hierarchical regime where some aliens are explicitly described as lesser and incapable of self-governance, despite being literal spacefarers with their own governments, and despite the heavily emphasized incompetence of those supposedly "capable of governing". The council seemingly allows all sorts of excesses and brutality among its guard, and chooses on a whim whether to aid certain species in their struggles based on favoritism. From the council's perspective, there is *literal* slave labor used on the Citadel that they're indifferent to because, again, lesser species (they don't know the keepers are designed to upkeep the Citadel; they just see them as an alien race to take advantage of at zero cost). There is seemingly overt misogyny among most races that is never tackled or challenged, limitations on free speech, and genocide apologia from the highest ranks, ingrained into educational databases. Throughout all of this, Shepard can't offer any institutional critique, despite being the good guy hero jesus person, because she's incapable of analyzing the system she exists in and actively serves and furthers. Sure, she criticizes individual actions of the council and can be rude to them, but ultimately she remains beholden to them and carries out their missions, choosing to resolve them as a good cop or a bad cop. Individually, that may mean saving a life or committing police brutality, but she still reinforces a system built on extremely blatant oppression and never seriously questions it, not even when she leaves and briefly joins Cerberus.

And then there's the crew. Barring Liara (who, incidentally, is the crewmate least linked to the military, and who is less excluded from this list in ME2, but i wanna focus on 1), Mass Effect 1 feels like a Bad Apple fixer simulator. You start with:

Garrus: genocide apologist (thinks the genophage was justified) who LOVES extrajudicial murder

Ashley: groomed into being a would-be klan member

Tali: zionist who hated AI before it was cool (in a genocidal way)

Wrex: war culture mercenary super chill on war crimes

Kaidan: shown as the other "good cop" and generally the most reasonable person barring Liara, but also he did just murder someone in boot camp in a fit of rage

Through your actions, you can fix them! You can make the bad apples good apples (kinda) but like,,,,

2 of course moves away from this theme a bit while still never properly tackling corrupt institutions in a way that undoes the actions of the first game, but its focus is elsewhere and the crew is more diverse in its outlook

Ultimately i just find it interesting how Mass Effect is a game showcasing how a good apple or whatever is capable of making individual changes for the better but is ultimately still a tool of an oppressive system and can't do anything to fundamentally change that, even if they're the most important good apple in said system.

Worth noting: maybe this'll change in Mass Effect 3, which i have yet to play since im currently finishing 2 (im a dragon age girl). but idk, i like how it's handled. at first i was iffy on it, but no, it's actually pretty cool.

Also sorry if this is super retreaded ground, im new to mass effect discourse and these are just my takeaways lol

18 notes


Text

How AI art is made and how it can be a problem

Lucas Melo

Art made by AI (Artificial Intelligence) is really popular nowadays, everywhere on the internet. The idea behind AI art is to teach an algorithm how to draw and then use that algorithm to make the drawing for you. However, the way the tool is made can lead to problems involving image rights.

You can ask the AI to draw anything. For example, you can ask it to draw a knight riding a bear while holding a candy weapon (that says a lot about how powerful AIs can be), and the AI will make the drawing based on what it learned from its training data. There are a lot of websites, like “craiyon.com”, “creator.nightcafe.studio” and many others, that provide an AI to make whatever art you ask for. The tool is still immature: it has problems drawing hands, elbows and other details, which are being fixed as time passes.

To develop an AI, you take an algorithm and feed it data, which is used to teach the algorithm how to perform a task. Sometimes the data is supervised (labeled) by humans, sometimes it’s not; it depends on how the algorithm is built. As the algorithm repeats the task over many training examples during training, its technique develops and improves.
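To make the idea of learning from data more concrete, here is a toy sketch in Python. It is a heavily simplified illustration under stated assumptions, not how image generators actually work: a single parameter `w` is fitted to hypothetical example pairs generated from y = 3x using gradient descent, and the error shrinks with each pass over the training data.

```python
# Toy illustration: a one-parameter model learns y = w * x from example data.
# This is NOT how image generators work internally; it only shows the general
# idea that repeated passes over training data reduce the model's error.

def train(examples, epochs=50, learning_rate=0.01):
    """Fit w in y = w * x by gradient descent on mean squared error."""
    w = 0.0  # start with an untrained parameter
    history = []
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in examples) / len(examples)
        w -= learning_rate * grad
        # Record the error after this pass so we can watch it shrink.
        loss = sum((w * x - y) ** 2 for x, y in examples) / len(examples)
        history.append(loss)
    return w, history

# Hypothetical training data generated from y = 3x.
data = [(1, 3), (2, 6), (3, 9), (4, 12)]
w, losses = train(data)
print(round(w, 2), losses[0] > losses[-1])  # prints: 3.0 True
```

The model never sees the rule "multiply by 3"; it only sees examples and adjusts `w` until its outputs match them, which is the same basic reason an art model's output depends so heavily on the artworks it was trained on.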

That means that when an AI is made to do art, it needs to be fed with art made by actual artists, real people. By learning from the data it receives, the AI also becomes able to copy artists’ styles. It wouldn’t be a problem if the developers had the artists’ consent, but that obviously isn’t the case, since the data is usually collected automatically from the internet.

Artists’ drawing and painting styles are being copied by AI. Not only does it use artworks without consent, it can also make some artists lose their jobs. Since the AI can draw in an artist’s style for free (or cheaply), there’s no reason to hire that artist. This isn’t widespread yet, because these AIs are still in development and lack the quality and precision to do exactly what the user asks, but if things keep going this way, it’s just a matter of time.

In conclusion, AI itself is not the problem. The problem is how it is made. To make an AI that does art, you have to use other people's art, and that is done without their consent, which may affect artists' jobs.

3 notes


Text

AI-powered tornado damage assessment apps for insurance adjusters developed by mobile app developers in Dallas

Natural disasters like tornadoes are unforgiving. In a matter of minutes, entire neighborhoods can be turned into debris fields. When the winds calm, the responsibility of measuring the damage—and getting people compensated—falls squarely on the shoulders of insurance adjusters. But this process, traditionally manual and time-consuming, is undergoing a revolution, thanks to AI-powered apps created by mobile app developers in Dallas.

In this article, we’ll explore how these apps are making tornado damage assessment faster, more accurate, and more humane—all while boosting the efficiency of software development companies behind the scenes.

Why Tornado Damage Assessment Needs a Tech Overhaul

After a tornado, time is everything. But historically, adjusters have had to physically inspect each property, take notes and photos, and assess the costs manually. This not only delays payouts but also puts professionals in harm’s way.

Rising Insurance Claims Need Scalable Solutions

With extreme weather events becoming more frequent, insurance companies are overwhelmed. There's a pressing need for scalable, digital-first solutions that software development companies can build to automate fieldwork, reporting, and decision-making.

What AI-Powered Tornado Damage Assessment Apps Do

Real-Time Aerial Imaging

Using drone integration, these apps can scan entire blocks within minutes. AI then processes the footage, detects damage types, and tags structures needing review.

Instant Structural Integrity Analysis

By using image recognition and machine learning, the app can differentiate between minor roof damage and a total collapse, giving adjusters critical insights without setting foot on site.
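A minimal sketch of how an app might act on such a classification, assuming the model returns a severity label and a confidence score for each property. The labels, the `route` function, and the 0.9 threshold are hypothetical and chosen only for illustration; a real system would calibrate them against validation data and insurer policy.

```python
from dataclasses import dataclass

# Hypothetical severity labels an image-recognition model might output.
SEVERITY_ORDER = ["none", "minor", "major", "destroyed"]

@dataclass
class Prediction:
    property_id: str
    label: str         # one of SEVERITY_ORDER
    confidence: float  # model's confidence in the label, 0.0 to 1.0

def route(pred: Prediction, min_confidence: float = 0.9) -> str:
    """Decide whether a prediction can be auto-processed or needs an adjuster."""
    if pred.label not in SEVERITY_ORDER:
        raise ValueError(f"unknown label: {pred.label}")
    # Low-confidence predictions always go to a human.
    if pred.confidence < min_confidence:
        return "adjuster_review"
    # Only confident, low-severity cases skip manual review.
    if pred.label in ("none", "minor"):
        return "auto_process"
    return "adjuster_review"

preds = [
    Prediction("A-101", "minor", 0.97),
    Prediction("A-102", "destroyed", 0.99),
    Prediction("A-103", "minor", 0.62),
]
for p in preds:
    print(p.property_id, route(p))
```

Sending every severe or uncertain case to a person keeps the automated path limited to the simple claims, which matches the article's point that adjusters focus on complex cases.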

Seamless Claims Integration

Built-in integrations allow these apps to directly feed data into insurance platforms. It speeds up the claims process and ensures transparency between insurance adjusters and homeowners.

Role of Mobile App Developers in Dallas in This Transformation

Local Expertise with National Impact

Mobile app developers in Dallas understand tornado-prone regions. Their insights are shaping how the apps prioritize urgency, geographical relevance, and usability.

AI + UX: A Balancing Act

Creating an intuitive experience for adjusters (many of whom are not tech-savvy) requires thoughtful UX/UI design. Dallas-based developers are combining powerful AI engines with clean, guided workflows.

Building for Resilience and Speed

Apps developed by mobile app developers in Dallas are built with disaster conditions in mind—offline mode, fast sync when internet returns, and battery-efficient designs are common features.
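The offline mode and fast sync described above usually follow a store-and-forward pattern. Here is a minimal Python sketch under stated assumptions: `OfflineQueue` is a hypothetical class, and the `send` callable stands in for whatever upload API a real app would use. Records stay queued, in order, until an upload succeeds.

```python
import json
from collections import deque

class OfflineQueue:
    """Hypothetical store-and-forward queue for field assessments.

    Records are kept locally while offline and flushed in order once a
    connection is available. `send` should return True on success.
    """

    def __init__(self, send):
        self._pending = deque()
        self._send = send

    def record(self, assessment: dict) -> None:
        # Serialize immediately so the stored copy cannot be mutated later.
        self._pending.append(json.dumps(assessment))

    def sync(self, online: bool) -> int:
        """Upload pending records in FIFO order; return how many were sent."""
        sent = 0
        while online and self._pending:
            payload = self._pending[0]
            if not self._send(payload):
                break  # keep the record and retry on the next sync
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self):
        return len(self._pending)

uploaded = []
# list.append returns None, so "or True" reports success for the fake upload.
queue = OfflineQueue(send=lambda payload: uploaded.append(payload) or True)
queue.record({"property_id": "A-101", "roof": "minor"})
queue.record({"property_id": "A-102", "roof": "collapsed"})
print(queue.sync(online=False), len(queue))  # nothing is sent while offline
print(queue.sync(online=True), len(queue))
```

A record is removed only after `send` reports success, so a dropped connection mid-sync loses nothing; the remaining records simply wait for the next attempt.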

Features That Make These Apps Insurance-Ready

Predictive Analytics

Beyond current damage, apps now use historical data to predict future claims and risks. This helps insurance firms prepare better for future tornado seasons.

Multi-Platform Accessibility

These apps are accessible on iOS, Android, tablets, and even rugged field devices, ensuring adjusters can work with whatever tools they have.

Automated Reporting

After assessments, the app generates formatted reports (PDF, XML, JSON) ready for insurance databases, eliminating paperwork and reducing human error.
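As an illustration of the JSON case, here is a minimal sketch of a report builder using only Python's standard library. The field names (`claim_id`, `items`, `total_estimated_cost`) and the whole-dollar costs are assumptions for the example, not an industry or insurer schema.

```python
import json
from datetime import date

def build_report(claim_id, assessed_on, findings):
    """Assemble a hypothetical claim report as a JSON string.

    `findings` is a list of (area, severity, estimated_cost) tuples,
    with costs in whole dollars. Field names are illustrative only.
    """
    items = [
        {"area": area, "severity": severity, "estimated_cost": cost}
        for area, severity, cost in findings
    ]
    report = {
        "claim_id": claim_id,
        "assessed_on": assessed_on.isoformat(),  # e.g. "2025-04-02"
        "items": items,
        "total_estimated_cost": sum(item["estimated_cost"] for item in items),
    }
    return json.dumps(report, indent=2)

print(build_report(
    "CLM-0001",
    date(2025, 4, 2),
    [("roof", "major", 18500), ("siding", "minor", 2300)],
))
```

Computing the total from the line items, rather than entering it by hand, is one way automated reporting reduces the transcription errors the article mentions.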

Behind the Scenes: Software Development Companies Fueling the Movement

AI Model Training with Tornado-Specific Datasets

Top software development companies are investing in AI models trained on tornado-specific imagery—before, during, and after events. This ensures app accuracy improves with every update.

Data Privacy and Compliance

Handling sensitive property and insurance data requires rigorous security. Developers are implementing secure APIs, encrypted data pipelines, and compliance with laws like HIPAA and GDPR.
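One common building block for securing API traffic is message signing. The sketch below uses Python's `hmac` module to sign and verify a JSON payload with HMAC-SHA256. Note that this protects integrity and authenticity, not confidentiality; encrypting data in transit (for example with TLS) is a separate layer. The key and payload here are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; a real deployment would load this from a
# secrets manager and never hard-code it.
SECRET_KEY = b"example-shared-secret"

def sign(payload):
    """Serialize a payload canonically and compute its HMAC-SHA256 signature."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    signature = hmac.new(SECRET_KEY, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return body, signature

def verify(body, signature):
    """Recompute the signature and compare in constant time."""
    expected = hmac.new(SECRET_KEY, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature)

body, sig = sign({"claim_id": "CLM-0001", "status": "approved"})
print(verify(body, sig))                                # True
print(verify(body.replace("approved", "denied"), sig))  # False: body was altered
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not leak how many characters of a forged signature were correct.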

Cross-Collaboration with Government and NGOs

These apps aren't just for insurance firms. Governments and disaster recovery agencies are working with software development companies to extend the same tech for public safety and humanitarian response.

Pros and Cons of AI-Powered Tornado Damage Assessment Apps

| Pros | Cons |
| --- | --- |
| Speeds up claim processing | Initial training for adjusters required |
| Improves accuracy of damage assessment | Drone use may face regulatory hurdles |
| Reduces need for physical inspections | AI decisions may need manual overrides |
| Enhances safety for field staff | Higher upfront development costs |
| Offers scalable solutions for insurers | Not all rural areas have data connectivity |

Real-World Use Case

Tornado in North Texas – Spring 2025

After a major EF-3 tornado struck the Dallas-Fort Worth area, one of the largest insurance firms deployed an AI-powered assessment app built by a mobile app development company in Dallas.

Within 48 hours:

Over 900 homes were assessed via drone footage.

85% of minor claims were processed automatically.

Adjusters focused only on complex cases, increasing efficiency by 4x.

Customer satisfaction rose as people received faster updates and payouts within days instead of weeks.

Future Trends in Tornado Damage Assessment Tech

3D Modeling for Deeper Analysis

AI is now being paired with 3D modeling to create digital twins of damaged properties. These models help insurance firms simulate repairs and calculate costs more accurately.

Blockchain for Transparent Claims

Smart contracts on blockchain could automate and authenticate claims. This ensures payout rules are tamper-proof and builds trust with customers.

Voice Assistants for Adjusters

Imagine talking to your phone and saying: "Show me the top 10 properties with severe damage in zone B." That’s the direction many software development companies are now exploring.

Why Mobile App Developers in Dallas Stand Out

Disaster Experience

Having built multiple solutions post-tornado and hurricane events, Dallas developers know how to deliver resilient and reliable products.

AI-First Mindset

AI is not an afterthought. These teams embed AI from ideation through to deployment, ensuring smarter outcomes and faster iterations.

Collaborations with Local Institutions

Many mobile app developers in Dallas collaborate with universities and disaster response agencies to pilot and refine their apps using real-world scenarios.

Frequently Asked Questions

Q1: How accurate are AI-powered tornado assessment apps? A: Most apps claim an accuracy rate above 85%, which improves over time as the AI model is trained on more data.

Q2: Are these apps replacing insurance adjusters? A: No. They assist adjusters by handling repetitive or dangerous tasks and help prioritize complex cases for human review.

Q3: Can these apps work offline? A: Yes, most apps developed by mobile app developers in Dallas include offline functionality for field use in low-connectivity areas.

Q4: What’s the role of drones in damage assessment? A: Drones collect high-resolution aerial imagery quickly and safely, which the app then analyzes using AI for damage patterns.

Q5: Are these apps compliant with insurance regulations? A: Yes, leading software development companies ensure the apps meet data privacy, compliance, and insurance reporting standards.

Final Thoughts

The days of clipboards, hours of driving, and uncertain claims are fading. In their place, AI-powered tornado damage assessment apps—developed by forward-thinking mobile app developers in Dallas—are creating a smarter, faster, and safer path forward for insurance adjusters and homeowners alike.

Backed by the strategic minds of innovative software development companies, these apps aren’t just tools; they’re becoming indispensable partners in the insurance world’s response to climate-driven challenges.

Whether you're an adjuster on the ground or a tech leader seeking scalable solutions, these apps signal one thing clearly: The future of disaster recovery is digital—and it's already here.

1 note


Text

Unlocking Business Potential with Integrated IT Systems

Integration Connectivity as a Service (ICaaS) is a low-code, cloud-based digital integration solution. Our integration experts have built the Smarter Integration platform to harness this cutting-edge technology and give our clients the quickest, most reliable, and most effective integration route. Smarter Integration represents the next generation of enterprise integration for the digital age. It is a tangible alternative to SaaS/iPaaS integration platforms, enabling users to re-architect their infrastructure and create an agile business.

Cloud-Based Integration

Whatever the industry, many business leaders are now prioritizing digital transformation: they want to leverage emerging technologies such as AI and blockchain to improve efficiency and drive future growth. Cloud-based integration, in particular, presents many opportunities for improving internal efficiency and is the natural next step for public sector organizations trying to get on top of their growing volumes of data. With faster deployment of the integration layer, organizations can turn their attention to what matters. Using data effectively empowers employees, enables cross-departmental collaboration, and drives efficiency and cost savings.

Data Integration

Data integration combines data from various sources into a unified format to give users a comprehensive view, enabling better decision-making and analysis. Modern enterprises rely on data to drive operational and strategic decisions. The challenge is often combining data from different sources into a unified view on a single interface, so that analysis can inform business strategy and performance.
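A minimal sketch of that unification step, assuming two hypothetical sources (a CRM export and a billing export) that name the same customer fields differently. The `integrate` function maps both onto one unified record per customer ID and leaves missing fields as `None` rather than guessing values.

```python
# Two hypothetical sources describe the same customers with different field
# names; integration maps both onto one unified schema keyed by customer ID.
crm_rows = [
    {"CustomerID": 1, "FullName": "Ada Lovelace", "Email": "ada@example.com"},
    {"CustomerID": 2, "FullName": "Alan Turing", "Email": "alan@example.com"},
]
billing_rows = [
    {"cust_id": 1, "balance_due": 120.0},
    {"cust_id": 3, "balance_due": 45.5},
]

def empty_record(customer_id):
    """Unified schema with every field present, unknown values as None."""
    return {"customer_id": customer_id, "name": None, "email": None, "balance_due": None}

def integrate(crm, billing):
    """Merge both sources into {customer_id: unified_record}."""
    unified = {}
    for row in crm:
        record = unified.setdefault(row["CustomerID"], empty_record(row["CustomerID"]))
        record["name"] = row["FullName"]
        record["email"] = row["Email"]
    for row in billing:
        record = unified.setdefault(row["cust_id"], empty_record(row["cust_id"]))
        record["balance_due"] = row["balance_due"]
    return unified

for record in integrate(crm_rows, billing_rows).values():
    print(record)
```

Customer 3 exists only in billing and customer 2 only in the CRM; keeping both with explicit `None` gaps makes the mismatch visible in the unified view instead of silently dropping either record.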

Integrated IT systems

Integrated IT systems unify various software, applications, and databases within an organization to streamline operations, improve data flow, and enhance efficiency. By connecting disparate systems, businesses can automate processes, reduce redundancies, and ensure real-time data access. This integration fosters better decision-making, improves collaboration, and supports scalability, making it essential for modern digital transformation and business growth.

Check out this related blog:

https://tinyurl.com/mtp8rsww

0 notes

Text

Why Python is a Top Choice for Web Development: Benefits and Features

Python has carved out a strong place for itself in web development thanks to its simplicity, versatility, and the powerful tools it offers. Whether you’re building a simple website or a complex web application, Python provides the flexibility and efficiency developers need. With the support of a Python Course in Chennai, learning Python becomes much more fun, whatever your level of experience or your reason for switching from another programming language.

Here’s a look at the main reasons why Python is a favorite in web development.

Readable and Easy to Learn

One of Python’s biggest strengths is its readable syntax. It’s designed to be intuitive and close to human language, making it easier for beginners to pick up and professionals to work with quickly. This simplicity speeds up development and reduces the chances of coding errors.

Robust Web Frameworks

Python offers several mature frameworks that make web development faster and more structured. Django, Flask, Pyramid, and FastAPI are among the most popular. Django comes with many built-in features that simplify development, while Flask offers flexibility for custom solutions. These frameworks help reduce the time and effort needed to create and maintain web apps.
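Under the hood, Flask and Django applications are typically served through WSGI, Python's standard web server interface (FastAPI uses its asynchronous counterpart, ASGI). The sketch below is a bare WSGI app with no framework, called directly with a simulated request so it runs without starting a server; the route and response text are made up for the example.

```python
from wsgiref.util import setup_testing_defaults

def app(environ, start_response):
    """A bare WSGI application: one route, 404 for anything else."""
    path = environ.get("PATH_INFO", "/")
    if path == "/":
        status, body = "200 OK", b"Hello from plain WSGI"
    else:
        status, body = "404 Not Found", b"Not found"
    start_response(status, [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]

# Simulate a request instead of starting a server.
captured = {}

def start_response(status, headers):
    """Stand-in for the server's callback; it just records the status line."""
    captured["status"] = status

environ = {}
setup_testing_defaults(environ)  # adds a default GET request for "/"
body = b"".join(app(environ, start_response))
print(captured["status"], body)
```

Everything a framework adds, such as Flask's `@app.route` decorator, template rendering, and request objects, sits on top of this small interface, which is why the frameworks save so much time.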

Fast Development and Prototyping

Python allows for rapid development, which is crucial when testing ideas or bringing a product to market quickly. Developers can write and deploy working code faster thanks to Python’s straightforward syntax and powerful libraries.

Large and Supportive Community

Python has one of the largest developer communities in the world. This means you can easily find resources, documentation, tutorials, and support for any challenges you face. The strong community also keeps Python updated with the latest trends and tools. With the aid of Best Online Training & Placement Programs, which offer comprehensive training and job placement support to anyone looking to develop their talents, it’s easier to learn this tool and advance your career.

Built-in Security Features

Security is a top concern in web development. Python frameworks like Django come with built-in security features to help protect against common threats such as SQL injection and cross-site scripting. This allows developers to build secure applications with less effort.
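The main defense against SQL injection is to keep user input out of the SQL text itself. Django's ORM does this for you by passing query values as separate parameters; the minimal sketch below shows the same underlying technique with Python's built-in `sqlite3` module and a hypothetical `users` table.

```python
import sqlite3

# In-memory database with a hypothetical users table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("alice", "admin"), ("bob", "viewer")])

def find_user(name):
    # The ? placeholder passes `name` as data, never as SQL text,
    # so input like "x' OR '1'='1" cannot change the query's logic.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()

print(find_user("alice"))         # [('alice', 'admin')]
print(find_user("x' OR '1'='1"))  # []
```

Had the query been built with string formatting instead, the second call would have matched every row in the table.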

Cross-Platform Support

Python is cross-platform, so applications built with it can run on different operating systems like Windows, macOS, and Linux. This flexibility is especially useful when developing for a broad user base.

Seamless Integration

Python integrates well with other technologies commonly used in web development. It works easily with front-end tools like HTML, CSS, and JavaScript and connects smoothly with databases and APIs. This compatibility makes it an excellent choice for full-stack development.

Ideal for Data-Driven Applications

If your web project involves data analysis, machine learning, or AI, Python is a natural fit. With libraries like Pandas, NumPy, and TensorFlow, Python lets you incorporate data processing and intelligence directly into your web apps.

Scalable and Reliable

Python is suitable for both small startups and large enterprise applications. It scales well with user growth and can handle increased traffic and complexity without sacrificing performance.

Conclusion

Python combines simplicity, speed, and a rich ecosystem of tools to make web development more efficient and powerful. With its user-friendly syntax, strong community, and excellent frameworks, Python continues to be a top choice for developers looking to build modern, scalable, and secure web applications. Whether you're just starting out or working on a major project, Python offers everything you need to succeed.

#python course#python training#python#technology#python programming#tech#python online training#python online course#python online classes#python certification

0 notes

Text

Create Stunning 3D Designs with PhotoCut's AI Image Generator

If you've ever wanted to build amazing 3D drawings but didn't know where to start, PhotoCut's new AI 3D Image Generator makes it super easy! Whatever your profession (architect, developer, artist, or just a cool person who likes trying out new things), this powerful application lets you turn simple text descriptions into intricate 3D visuals using artificial intelligence. PhotoCut lets you build stunning 3D models quickly and effortlessly, without requiring special design knowledge.

What is PhotoCut’s AI 3D Image Generator?

PhotoCut's AI 3D Image Generator is an advanced tool for creating 3D images from text descriptions. Using the latest AI technology, it transforms what you write into realistic and creative 3D designs. Whether you're creating characters, objects, scenes, or even architectural models, it helps you create 3D images quickly and easily. Instead of spending hours or days designing sophisticated 3D models, you can now get high-quality images in minutes.

The AI uses machine learning, trained on millions of images, to produce highly detailed and lifelike designs. All you need to do is type a description of what you want, and the AI takes care of the rest. Now, let's look more closely at the other ways you can use this tool and how it can help with your creative projects.

How Does the AI 3D Image Generator Work?

It's easy and entertaining to use PhotoCut's AI 3D Image Generator. Here's how it works:

Start by writing a description of the 3D design you want. For example, it could be a futuristic robot standing in an urban setting at sunset, or a beautiful modern house with a glass roof and huge windows.

After you enter the description, the AI analyzes your words and transforms them into a 3D image based on what you wrote. It draws on an immense database of images and patterns to produce a comprehensive 3D design that best matches what you have in mind.

You can also customize your design by defining specific elements, such as the image's style, the number of images you want, and the aspect ratio (the image's proportions). This lets you tailor the outcome to your requirements.

You may rapidly create your 3D picture by clicking the "Generate" button after entering your description and making any required edits.

Once your design is ready, you can download the image or model. This makes it easy to use your creations in different projects, whether for social media, games, architecture, or more.

Key Features of PhotoCut’s AI 3D Image Generator

PhotoCut's AI 3D Image Generator boasts many cool features that help you generate incredible 3D designs of any kind in minutes:

Fast Results: With PhotoCut's AI 3D Image Generator, you save time instead of spending hours or days designing 3D images. The AI works directly from the description you give it and delivers a professional-grade image in just minutes.

Easy to Use: Using the tool does not require knowledge of 3D modeling or graphic design. Just enter a description of what you want to create, and the AI handles the rest. This makes it easy for professionals and beginners alike.

Limitless Potential: The possibilities are endless, whatever you want to create, be it an architectural model, a game character, or a commercial prototype.

Design Customization: This is a flexible tool because you can change things like the style, aspect ratio, and number of images, so you can tailor designs to your requirements.

Excellent Results: The AI generates realistic, expertly rendered 3D graphics. This makes it ideal for application in architecture, virtual worlds, gaming, product design, and other fields.

What Can You Create with PhotoCut’s AI 3D Image Generator?

With PhotoCut's AI 3D Image Generator, you can create a lot of things. Here are a few examples:

1. 3D Game Characters

If you are a game developer, you have found a game-changer in PhotoCut's AI. Instead of spending a lot of time designing game characters, you can create detailed and unique 3D characters within minutes, saving precious time during your game's design process. The AI can also produce variations of a character design based on your text prompts. Whether it's futuristic warriors, fantasy creatures, or cartoonish characters, there are infinite options.

2. Architectural Models

Architects and building contractors can use AI to create 3D models of buildings and structures. If you have a new design or project that requires visualization, PhotoCut's AI is at your service, helping you work out intricate and realistic models that will bring ideas to life. It's very useful for planning and prototyping, where you can get a view of how it will look before anything gets built. You can even apply AI to generate complex models of landscapes or interior spaces, assisting you in your whole design process.

3. Product Prototypes

With PhotoCut's AI, product designers can rapidly generate 3D prototypes of new products. Be it a car, chair, gadget, or anything else, AI can create a detailed 3D model based on your descriptions. This is fantastic for quick visualization of a product and improvements before finalizing the prototype. AI lets you test various designs and variations to perfect your ideas and bring them closer to reality.

4. 3D Art and Illustrations

If you are an artist, you can use AI to produce great 3D art and illustrations. The AI is good at producing both very realistic images and more stylized designs, depending on your imagination. It can generate anything from abstract art to photorealistic images, all based on the text you provide, and it lets you explore various art styles and experiment with new ideas.

5. 3D Objects and Logos

You can use PhotoCut AI to create 3D models of objects and logos. Whether you want a 3D logo for branding or a 3D object of a product you are designing, the AI can create it instantly. Simply describe the design you need, and the AI will create a 3D model to help with your project.

Conclusion

PhotoCut's AI 3D Image Generator is a powerful and easy-to-use tool that opens the door to a wide range of new possibilities for creating amazing 3D designs. Whether for game development, architecture, product design, or just experimenting with new technologies, you can now create high-quality 3D models within minutes. Fast processing, an extremely easy interface, and virtually unlimited creative ability set PhotoCut's AI 3D Image Generator apart. Check it out today to discover the amazing designs you can make.

FAQs

Q1. Can AI generate 3D models?

Ans. Yes! With PhotoCut’s AI, you can easily generate detailed 3D models from text descriptions without needing design experience.

Q2. What is an AI 3D image generator?

Ans. An AI 3D image generator is software that uses artificial intelligence to generate a 3D image from a text description or a picture. It makes creating 3D images for any project you have in mind fast and easy.

Q3. What kinds of 3D designs can I create with PhotoCut’s AI?

Ans. You can create anything from a character, an object, a building, a logo, and whatnot. The list goes on forever!

Q4. Can AI replace traditional 3D modeling?

Ans. AI can accelerate the 3D creation process but won't completely replace human creativity. Graphics created by AI sometimes need to be edited or adjusted.

0 notes