#just looked through a Python library for doing some less basic data analysis

Explore tagged Tumblr posts

Text

Man, some programmers will use “conciseness of variable names” to get away with anything. You’ll NEVER guess how the word “analysis” in any name ever gets shortened.

#just looked through a Python library for doing some less basic data analysis#that person had WAY too much fun writing names#programming humor

1 note

·

View note

Text

Exploring D:BH fics (Part 8)

One of the things I’ve always been really interested in is - how do fanon representations of characters differ from canon characterisations, and why? There are many reasons for that interest but I won’t ramble here, since to answer that question I’ll first need to start from the beginning: understanding how D:BH characters are represented in fanon.

This post covers how I extracted descriptions of DBH characters from author-provided AO3 tags. This refines the preliminary analysis I did on Connor and RK900 in RK1700 fics.

View results for: Connor | RK900 | Hank Anderson | Gavin Reed

I’d like to emphasise that quantitative data is good for a bird’s eye view of trends and that is all I really claim to be doing here. This by no means replaces close qualitative readings of text and fandom, but I think it has its worth in validating (or not) that, “Yes, there is some sort of trend going on here and this might be interesting to dig into.” Recap: Data was scraped from AO3 in mid-October 2019. I removed any fics that were non-English, were crossovers and had less than 10 words. A small number of fics were missed out during the scrape - overall 13933 D:BH fics remain for this analysis.

Part 1: Publishing frequency for D:BH with ratings breakdown Part 2: Building a network visualisation of D:BH ships Part 3: Topic modeling D:BH fics (retrieving common themes) Part 4: Average hits/kudos/comment counts/bookmarks received (split by publication month & rating) One-shots only. Part 5: Differences in word use between D:BH fics of different ratings Part 6: Word2Vec on D:BH fics (finding similar words based on word usage patterns) Part 7: Differences in topic usage between D:BH fics of different ratings Part 8: Understanding fanon representations of characters from story tags Part 9: D:BH character prominence in the actual game vs AO3 fics

1. Pulling out the relevant tags. For this analysis, I used the ‘other’ tags since they’re more freeform and allow authors to write unstandardised tags. At the same time, they’re likely to be simpler (in terms of grammar) than sentences within a fic, making it easier to pull out the information automatically. There were about 144,000~ tags to work with.

2. Identifying tags with character names. I already have a list of names thanks to working on topic modeling in Part 3. I ran a regex looking for tags with names. This left me with 33,000+ tags.

From here on the code is really held together by hideous amounts of tape but let’s proceed.

3. Pulling out descriptions of Connor. x 3.1. (word)!(character) descriptions, e.g. soft!Connor Regex for this since this is a clear pattern. I dropped any tags that had other names besides Connor.

The next few description types relied on Stanford’s dependency parser to identify the relations between the words. I then strung together the descriptions based on the relevant dependencies.

x 3.2 (noun) (character) descriptions, e.g. police cop Connor Step 1: Check if there is a noun before ‘Connor’ modifying ‘Connor’ Step 2: If yes, pull out all the words that appear before ‘Connor’

x 3.3 (adjective/past participle verb) (character) descriptions, e.g. sad Connor Step 1: Make sure it’s Connor and not RK900/upgraded Connor Step 2: Retrieve all adjectives/past participle verbs modifying Connor Step 3: Retrieve adverbs that may modify these adjectives/verbs Step 4: String everything together

x 3.4 (character) is/being,etc (adjective) descriptions, e.g. Connor is awkward Step 1: Make sure it’s Connor and not RK900/upgraded Connor Step 2: Look for adjectives modifying Connor Step 3: Look for adverbs, adjectives, past participle verbs, nouns, negations that may further modify these adjectives (e.g. Connor is low on battery) Step 4: String everything together

x 3.5 (character) is/being,etc (noun) descriptions, e.g. Connor is a bamf Step 1: Make sure it’s Connor and not RK900/upgraded Connor Step 2: Look for nouns modifying Connor Step 3: Look for adverbs, adjectives, past participle verbs, nouns, negations that may further modify these nouns (e.g. Connor is an angry boi) Step 4: String everything together

There was a little manual cleaning to do after all of this since these are basically just automated heuristics - but it was definitely preferable to going through 33k+ tags myself.

4. Manually grouping tags. I got 1061 unique descriptions of Connor. There are some really, really common ones like deviant, bottom, adorable. After these top few descriptions, the frequencies dipped very quickly. Many descriptions had a count of 1 or 2.

But my job isn’t complete! Because sometimes ‘rare’ descriptions are really just synonyms/really similar to tags with bigger counts. For example, adorable bun, generally adorable should fall under adorable. Same with smol, tiny, small and little. So I got to work slowly piecing these similar descriptions together manually. I did question my life decisions at this point.

5. Wordcloud creation. I used Python’s WordCloud library for this. I just grabbed a random screencap of Connor from Google and edited it into a mask for the wordcloud. I kept only descriptions that had a count of at least 3.

I removed deviant, machine, human and pre-deviant since I thought those would be better viewed separately. Keeping deviant would also severely imbalance the wordcloud because it has a count of 822. The next most frequent descriptions were far less; human at 344, bottom at 312.

Final notes I didn’t look at “Connor has x” patterns since I felt those are somewhat different from character qualities/states/roles, which were what I was keen on.

I’m pretty sure I haven’t caught all possible ways to describe a character in tags. But I think overall this method is good for getting a rough first look. Not sure how the code will perform on fic text itself though since it’s kinda unwieldy.

I also feel that within fic text, a lot of character qualities are performed and not so bluntly put across, so they may not be retrievable by relying on these very explicit syntactic relations. These tags are really just one sliver of the overall picture. Still, this was a lot of fun!

14/01 NOTES FOR RK900′S RUN: x this boi is a real challenge, run was probably more imperfect than Connor’s x I replaced Upgraded Connor | RK900 in tags to upgradedconnor before doing anything else x upgradedconnor, conan, conrad, niles, nine, nines, richard, rk900 were all taken as RK900 x Much fewer tags to work with (understandable since RK900 is a minor canon character and has much less fics than Connor) x 443 unique descriptions x Top 3, keeping deviant, machine, human and pre-deviant in the pool: deviant (275), human (143), top (142)

17/01 NOTES FOR HANK’S RUN: x I replaced Hank Anderson in tags to hank before doing anything else, meaning that Hank and Hank Anderson were taken to be referencing Hank x Fewer tags than Connor but slightly more than RK900 x 565 unique descriptions x Top 3: good parent (407), parent (296), protective (287). I did not combine parent/good parent since obviously being a parent doesn’t necessarily imply being a good one. I also chose to keep father figure separate from parent categories since the implications are a little different.

19/01 NOTES FOR GAVIN’S RUN: x I replaced Gavin Reed in tags to gavin before doing anything else, so Gavin and Gavin Reed were taken to be referencing Gavin x Slightly fewer tags than Hank, but not as few as RK900 x 648 unique descriptions x Top 3: asshole (249), android (207), trans (197)

10 notes

·

View notes

Text

What I think Biotech freshmen should learn during your first year at IU

So my first year has finally ended. The curriculum for freshman year is pre-determined by the Office of Academics so I did not have a chance to change the schedule. I took about 7 subjects each semester, with a total of 2 semesters. If you do not have to take IE1 and IE2 classes, you can “jump” directly into the main curriculum. So in my first year I took: Calculus 1&2, Physics 1&2, General Biology and Chemistry plus 2 Bio and Chem labs, Organic Chemistry, Academic English 1&2 (Reading, Writing, Speaking, Listening), Critical Thinking, Introduction to Biotechnology, P.E. It’s a relief that I could work through the courses although I was not excelling at STEM subjects in highschool. But college taught me all the amazing skills to study on my own and discover knowledge for my self-growth. Apart from schoolwork, I think any Biotech freshmen should also keep an eye on learning other extra skills of a scientist/professional, which I will list below. Do not worry because a year ago I entered this school while being a completely blank state, having seen so many of my friends succeeded in getting scholarships, leading extracurricular activities,... I felt hopeless sometimes but I believe in grinding one step at a time until I could accomplish the job. So my general experience boils down to being humble and let others teach you the skills, then practice slowly but firmly. You will be able to grow so much faster. And do not compare yourself with others’ success stories because everyone has their strengths and their own clock.

These are the lists of skills I have learnt and will continue to improve in the future. I will be expanding and giving more details about each point. This is in no chronological order:

- Learn to make a positive affirmations/ orienting articles book:

During your whole college career, you will have a lot of moments of self-doubt, for example when your grades are not good, you've failed some classes, your part-time job application got turned down too many times (trust me I am so familiar with such rejections), some experiments got messy and returned no results, you wonder what your future in the field would look like. These are all scenarios that have happened to me in freshman year.

Therefore, I have found a way to cope with self-doubt and boost my confidence, which is to make a collection of positive affirmations and orienting articles. I would form an imagined overview of my own career path reading all these writings and finally came to recording my own path . I use all forms of note-jotting tools to record them. I tend to record 1) Experience snippets from influencing scientists in my field, whom I happen to follow on Facebook 2) Lists of “What college kids need to practice before they graduate” (Dr.Le Tham Duong 's Facebook) 3) Ybox 's Shared tips for career orientation column (Link) 4) Short paragraphs from the books that I have read. The paragraphs often contain insights into what successful people (in Biotech or in Finance) have thought, have planned, and have acted on. For digital copy of the books, I save the snippets into a file called "Clippings" and later export them through the website called clippings.io

- Have an online note-taking tools for jotting down important thinking (recommend Keep or Evernote for quick jot, while Onenote is more suitable for recording lectures thanks to its structure that resembles a binder)

A snippet of my Evernote, where I store career advice:

- Learn to do research properly (what is a journal, what is a citation management software, what is the structure of a paper[abstract, introduction, methods, data analysis, discussion, conclusion], poster, conferences). You can begin to search for academic papers using Google Scholar scholar.google.com. However, there are countless of other websites for published journals that serve different sience fields. You need to dig into Google further to find them.

- Learn to write essays (basic tasks are covered in Writing AE courses including brainstorming, reading, citings, argumentative essay, process essay, preparing thesis statement)

- Join a lab: learn the safety guidelines, learn who is in control of the lab, what researches/projects are being carried there). As for this, you need to make contact with professors from our department. Most professors here are friendly and willing to help if you just come up and ask a question after class. To be eligible to join their labs, - Learn to write a proper email to a professor: to ask for what will be on the next test, whether you can be a volunteer in their lab (usually by cold-email, and the professor will likely ask for your background: your highschool grades, your motivation,...); write email to an employer asking for a temporary position. - Learn to write a meeting minute: a sum-up of your meeting with other team members - Learn to answer basic questions for a volunteer opportunity: what is your strength, what is your past experience, what do you know about us, what is your free-time - Learn to write a CV: using topcv,... or downloading free templates on the Internet and write a motivation letter: What you are currently doing, what problems have you solved for your employers, and what can you do to help your new employers solve their problems.

- Learn to create an attractive presentation by using Canvas and learn to deliver ideas effectively (recommended book Think on your feet [Lib 1]).

I have discovered Canvas only for a year, but its graphics are so attractive and appealing to my taste, so here is my presentation for Finals using Canvas:

- Learn a programming language or a second language: recommend Python or R, and any foreign language that you feel interested in, but your ultimate goal would be to comfortably use that language in academic reading and exchanging ideas through writing and speaking (which is a long journey of 4+ years learning), so choose wisely. - Learn to create an online presence through a blog (Tumblr, Wordpress, Github, StackExchange,...), stalk your favorite experts on Quora and Reddit, make a habit to have a journal article delivered to your inbox every morning (me being Medium, Pocket, Nature); then Instagram or YouTube - Recommended books for incomming freshmen: How to be a straight-A student, Do not eat alone (socializing skills) - Recommended Medium sites: - Recommended Newspapers: The Economist, The Guardian, The New York Times Opinions Columns, Nature’s columns, The Scientific American. - Recommended Youtube Channels: - Recommended Podcasts: listen passively on the bus, but try to paraphrase in your own words what you have understood about their conversation: - Recommended study spots: Den Da Coffeeshop, The Coffeehouse, Library of [...] in District 1, Central Library (Thu Duc District), IU Library.

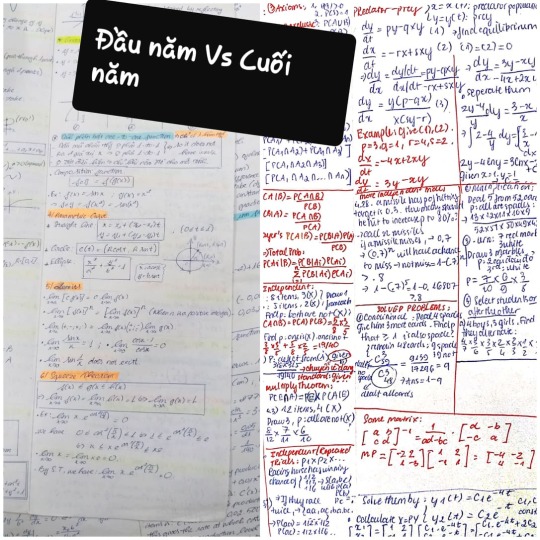

- Learn the Pomodoro technique and Forest app: - Important websites for study resources: libgen, khanacademy, Coursehero (post only a short paragraph to get 1 free upload), scripd organic chemistry tutor, for jobs: ybox.vn - If your laptop is capable (with decent hardware), learn Adobe Tools (Video editing, Photoshop,...). My laptop can only run Linux Mint, so I chose to learn the skill of citation management and research (using less resource). Basically do not become computer-illiterate. - To reduce eye-strain, buy an e-reader to read scientific papers, do not print out all of them. - One exception to IU: you can bring one two-sided A4 paper into certain exams: this is my note for Calculus class:

- Learn to write a grand summary of formulas for Physics 2, meta-sum of all exercise questions - Prepare for IELTS (if you haven't taken IELTS already): you'll need it to pass IE classes, or apply for an exchange program. Ultimately, you need at least 6.0 in IELTS to graduate. I stumbled upon this careful list of tips from a senior student in our BT department who got an 8.0 => Link

Basically, follow Ngoc Bach’s page on Facebook to receive fully-solved exam materials, add ielts-simon.com into the mix, learn 560 academic word list, listen to Ted Talk and podcasts, do tests on ieltsonlinetests.com, do Cambridge IELTS book 9-14 and you’re good to go. - Have your eyes on competitions that spark your interest (innovative competition, writing contests, speaking and debating contests,...)

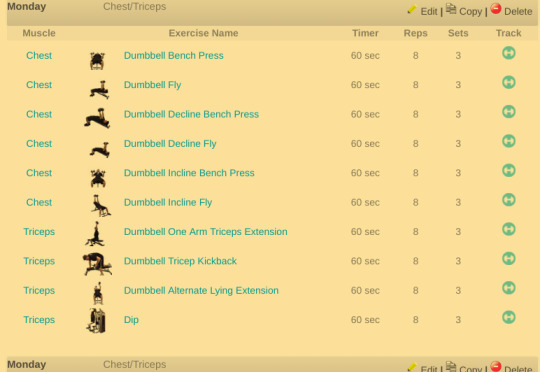

- Develop your fitness routine to protect your sanity when academic coursework overwhelm you and make you gain 15 pounds.

I do home HIIT exercises on Fitness Blender’s Youtube channel, Emi Wong, Chloe Ting home workouts in the beginning.

Later I went to the gym and do split routines with weights, then threw in squats, deadlifts, lunges and HIIT on treadmill. This is how my current routine look like: (I work out only 4 times/week)

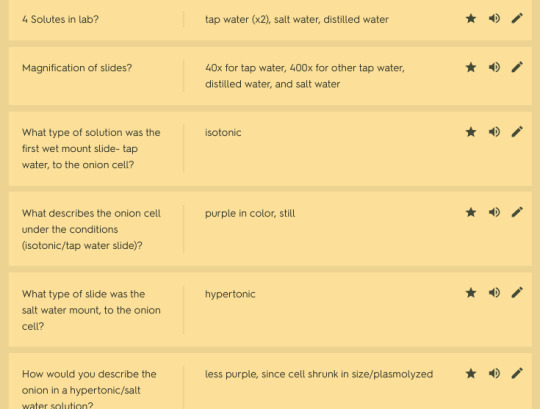

- Learn to use flashcards. (Quizlet has premade flashcards for biology class)

- Learn to manage personal finance: what is budget, expenses, income,... - Learn some google tweaks to pirate stuff. (especially textbook files and solutions files) - Learn to make handwritten A4 notes. I will post my own handwritten notes for Critical Thinking Mid (final is taken), Calculus II Mid and Final. - Learn to create meta sets for formulas and problems. I will post my formula set for Physics II and Problem/Skill set for Organic Chemistry. For Physics II, I learned my hard lesson is that it is better to do past exams than to solve advance textbook problems, so I stuck with past exams posted by TA and learnt by heart all the formulas, SI units. For Organic Chemistry you need a skillset checklist (like in the Wade textbook). Also there are questions from the slides such as the connection of amylopectin,... But they won't challenge you to think much. Only the amount of information to be memorized is deemed challenging here. - Intro to Biotech was quite easy and you could prepare in advance. 3 faculty members (from 3 fields: plant, animal, pharma) will take turn giving you an overview lecture. The exam will ask “Write what you know about those fields and their applications”, openbook-style. So hear me out and search for Overview powerpoints of that field, then write your own essay, print it out and bring it with you into the exam. Your power should be spent on Calculus and Physics, not on memorizing the essay.

7 notes

·

View notes

Photo

Top MNCs in the world are hiring professionals in this domain including Amazon, IBM, LinkedIn, Microsoft, and more. Coding skills, especially the ability to do data analysis in Python, are an additional skill set that will set you apart from your peers in the job market. In this unit, you’ll learn the basics of Python and key Python libraries, including pandas, NumPy, matplotlib, seaborn, and more.

If this degree is your goal, get started toward becoming a student today—programs start the first of each month. The curriculum is taught via video by six different instructors, all of which are experts in the industry. Some courses include quizzes and every course has a Q&A section where you can ask the lecturer questions on the course. It doesn’t matter whether or not you have experience with statistics, computer science, or business. With plenty of hands-on projects, this course by ExcelR Solutions will teach you how to answer business questions with data. ExcelR’s Data Analytics Immersion program can guide you to your dream career, as long as you’re willing to put in the time to study.

Data Analytics Courses

Some people do their best studying in the early mornings, before going in to work; others are most energized when they come home in the evenings. The M.S. Data Analytics degree program is an all-online program that you will complete through independent study with the support of ExcelR faculty. You will be expected to complete at least 8 competency units (ExcelR's equivalent of the credit hour) each 6-month term.

Let’s take a look at what you need to know as an analyst or data scientist – and what you’ll learn when you take our courses. It includes 4 industry-relevant courses followed by a capstone project that requires learners to apply data analysis principles and recommend methods to improve profits of residential rental property companies. This capstone project has been designed with help of Airbnb (Capstone’s official sponsor).

Hence, studying data analytics courses can be an awarding choice. On completion of any PG level data analytics courses, the starting salary offered to a candidate can be anywhere from INR 2-8 LPA. For your capstone project, you’ll select a real-world data set for exploration and apply all of the techniques covered throughout the course to solve a problem.

The demand for professionals and experts who are capable of processing and achieving Big Data solutions is extremely high due to which they are paid high salaries. Due to this increasing demand, professionals with ExcelR Data Analytics certification courses will surely have an upper hand in scoring a lucrative job over others. In addition to small projects designed to reinforce specific concepts, you’ll complete two capstone projects focused on a realistic data analytics scenario that you can show to future employers. Spanning just ten weeks on a part-time basis, the BrainStation course is one of the less time-intensive options on our list—ideal if you’re not quite ready to commit to a lengthy program. This course will teach you all the fundamentals of data analytics, equipping you to apply what you’ve learned to your existing role or to embark on further training.

These courses are designed for working professionals to master this technology without giving up their current profession. The curriculum of these programs will be designed in such a way that it causes minimal disruptions to your personal and professional lives. Once you get familiar with the concepts and the application of the various tools and techniques of Data Science, you will be able to gain expertise. Constant learning and practice is the only way to master Data Science and become a successful data science professional. The eight-month graduate certificate program in Analytics for Business Decision Making prepares students to do data analysis specific to business decision-making. Students learn to take data and turn the information into the next steps for an organization.

A data analytics consultant might use their skills to collect and understand the data. Data analytics consultants employ data sets and models to obtain relevant insight and solve issues. The data analytics online program offers intensive learning experiences that imitate the actual world and develop projects from scratch. Because the profession is vital to business development, most companies require a data analyst, and new graduates also have excellent job opportunities. If you aim to become more competitive, then it is worth earning the Data Analyst certification. Explore your educational opportunities and start creating a solid basis for data analyst skills.

For More Details Contact Us ExcelR- Data Science, Data Analytics, Business Analyst Course Training Andheri Address: 301, Third Floor, Shree Padmini Building, Sanpada, Society, Teli Galli Cross Rd, above Star Health and Allied Insurance, Andheri East, Mumbai, Maharashtra 400069 Phone: 09108238354

Data Analytics Courses

0 notes

Text

Huge Knowledge Careers

They work across a variety of industries—from healthcare and finance to retail and know-how. Data warehouse managers are liable for the storage and analysis of data in services. These professionals use performance and utilization metrics to evaluate information, analyze information load, and monitor job utilization. They could also be responsible for figuring out and mitigating potential dangers to data storage and transfer.

priceless analytical abilities are MapReduce (common wage of $115,907), PIG ($114,474), machine studying ($112,732), Apache Hive ($112,732), and Apache Hadoop ($110,562). This information will present how you can maximize your information analyst salary and why it's so important to invest repeatedly in your personal and professional improvement. Additionally, turning into a concern in your native analytics community is a great way to get extra visibility, which might result in inquiries from head hunters with jobs that could enhance your salary, Wallenberg says. “These experiences can help you earn the next wage as a brand new tech skilled, because you’ll already have a number of the abilities and data needed,” he says. If you assume that a profession in massive information is best for you, there are a number of steps you possibly can take to arrange and position yourself to land one of many sought-after titles above. Perhaps most importantly, you must think about the abilities and experience you’ll have to impress future employers. Data analysts work with giant volumes of information, turning them into insights companies can leverage to make better selections.

They may also enterprise out on their own, creating trading models to foretell the prices of stocks, commodities, trade charges, and so forth. The common salary for an information analyst is $75,253 per year, with an extra bonus of $2,500. Data Analysts do precisely what the job title implies — analyze firm and trade information to search out value and opportunities. Before you are taking the time to learn a brand new skill set, you’ll probably be curious in regards to the income potential of related positions. Knowing how your new expertise shall be rewarded provides you the correct motivation and context for learning.

He is B.Tech from Indian Institute of Technology, Varanasi and MBA from Indian Institute of Management, Lucknow. A graduate of the Wharton School of Business, Leah is a social entrepreneur and strategist working at quick-rising technology firms. Her work focuses on innovative, expertise-driven solutions to local weather change, training, and financial growth. 2017 Robert Half Salary Guide for Accounting and Finance calculates that almost all entry-level financial analysts at giant corporations make $52,seven hundred to $66,000 and up to $50,000 in bonuses and commissions. Data science positions may specify knowledge of languages and packages like Java, Python, predictive modeling algorithms, R, Business Objects, Periscope, ggplot, D3, Hadoop, Couch, MongoDB, and Neo4J. University of Wisconsin, the average information scientist earns $113,000, with salaries usually starting between $50,000 and $95,000.

And, should you discover an ability that you just still have to learn, keep in mind that you can take an reasonably priced,self-paced data analytics course hyderabad that will assist you to be taught every little thing you need to know for a successful career in knowledge science. Plus, many data analytics specialists boast an excessive median salary, even at entry-level positions. Jobs in massive cities like Chicago, San Francisco, and New York tend to pay the most. Generally, companies benchmark salaries in opposition to opponents when recruiting talent. When deciding whether or not or not to relocate, think about state taxes and the cost of living within the locations you might be considering. Some remote positions may pay less, however the flexibility of working from residence in a cheaper metropolis or nation could represent a more engaging offer.

They’re usually required to have prior expertise with database growth, data analysis, and unit testing. Data scientists design and construct new processes for modeling, data mining, and production. In addition to conducting data studies and product experiments, these professionals are tasked with creating prototypes, algorithms, predictive fashions, and customized analysis. These professionals are tasked with designing the structure of complicated information frameworks, in addition to constructing and sustaining these databases. Data architects develop methods for every topic area of the enterprise knowledge model and talk plans, status, and points to their firm’s executives.

Knowing the basics of SQL will give you the boldness to navigate massive databases, and to obtain and work with the info you need for your tasks. You can at all times search out opportunities to proceed learning when you get your first job. Bhasker is a Data Science evangelist and practitioner with a confirmed record of thought leadership and incubating analytics practices for varied organizations. With over sixteen years of expertise within the area of Business Analytics, he is well recognized as an skilled within the trade.

That’s why it’s necessary to keep tabs on business developments and make sure you’re up to date on the newest skills, says John Reed, senior govt director at RHT. Robert Half Technology’s Salary Guide, these professionals earn between $81,750 and $138,000 relying on their expertise, training, and skill set. Download the free guide below to be taught how you can break into the quick-paced and thrilling field of data analytics courses in hyderabad. Data analysts work to improve their own systems to make relaying future insights simpler. The goal is to develop strategies to analyze massive knowledge sets that can be easily reproduced and scaled. These professionals are responsible for monitoring and optimizing database performance to keep away from damaging results caused by constant access and high traffic. Database administrators sometimes have prior experience working on database administration groups.

Information about what you need to know, from the business’s most popular positions to right now’s sought-after knowledge abilities. A majority of these jobs require candidates with both expertise and advanced degrees. difficult to seek out, which means information jobs pay fairly well for these with the proper experience. With seasoned professionals in this industry making around $79,000 per yr, a transportation logistics specialist is an interesting career path for people who're detail-oriented, technical and forward thinkers. An information analytics background is very helpful in this job because transportation logistics specialists have to reliably identify the most efficient paths for products and services to be delivered. They should look at massive amounts of information to assist identify and remove bottlenecks in transit, be it on land, sea or in the air. While digital advertising positions have a wide salary range, advertising analyst salaries are commonly $66,571, and can rise above six figures for senior and management-level positions.

Due to the versatile nature of this data analytics job and the many industries you may find employment in, the wage can differ widely. A quantitative analyst is another extremely sought-after skilled, particularly in financial firms. Quantitative analysts use information analytics to hunt out potential financial investment opportunities or threat management problems. At Dataquest, students are outfitted with specific information and skills for information visualization in Python and R using data science and visualization libraries. Regardless of the profession path you’re wanting into, being able to visualize and talk insights associated to your company’s services and backside line is a priceless skill set that will flip the heads of employers. Having a strong understanding of how to use Python for data analytics will in all probability be required for a lot of roles. Even if it’s not a required ability, knowing and understanding Python will give you a higher hand when showing future employers the value you could deliver to their companies.

information science and analytics job openings are predicted to grow to 2.7 million, representing a $187 billion market opportunity. Whether you’re considering a graduate degree or are already enrolled in a grasp’s program, there are steps you can take now to spice up your income potential, Reed says. To start, acquire palms-on expertise through internships or project-primarily based roles when you’re in class to construct your resumé. Seek out a program with a focus on math, science, databases, programming, modeling, and predictive analytics, he suggests. Experience with databases like Microsoft SQL Server, Oracle, and IBM DB2 are essential abilities for managing information, he says. Mastery of Microsoft SQL Server and Oracle, particularly, may raise your wage by as a lot as eight percent and 6 % respectively, based on RHT ’s 2017 Salary Guide. The skills which might be in demand today aren’t always the ones that will be in demand tomorrow.

For more information

360DigiTMG - Data Analytics, Data Analytics Course Training Hyderabad

Address - 2-56/2/19, 3rd floor,, Vijaya towers, near Meridian school,, Ayyappa Society Rd, Madhapur,, Hyderabad, Telangana 500081

Call us@+91 99899 94319

0 notes

Link

Deep Learning gets more and more traction. It basically focuses on one section of Machine Learning: Artificial Neural Networks. This article explains why Deep Learning is a game changer in analytics, when to use it, and how Visual Analytics allows business analysts to leverage the analytic models built by a (citizen) data scientist. What is Deep Learning and Artificial Neural Networks? Deep Learning is the modern buzzword for artificial neural networks, one of many concepts and algorithms in machine learning to build analytics models. A neural network works similar to what we know from a human brain: You get non-linear interactions as input and transfer them to output. Neural networks leverage continuous learning and increasing knowledge in computational nodes between input and output. A neural network is a supervised algorithm in most cases, which uses historical data sets to learn correlations to predict outputs of future events, e.g. for cross selling or fraud detection. Unsupervised neural networks can be used to find new patterns and anomalies. In some cases, it makes sense to combine supervised and unsupervised algorithms. Neural Networks are used in research for many decades and includes various sophisticated concepts like Recurrent Neural Network (RNN), Convolutional Neural Network (CNN) or Autoencoder. However, today’s powerful and elastic computing infrastructure in combination with other technologies like graphical processing units (GPU) with thousands of cores allows to do much more powerful computations with a much deeper number of layers. Hence the term “Deep Learning”. The following picture from TensorFlow Playground shows an easy-to-use environment which includes various test data sets, configuration options and visualizations to learn and understand deep learning and neural networks: If you want to learn more about the details of Deep Learning and Neural Networks, I recommend the following sources: “The Anatomy of Deep Learning Frameworks”– an article about the basic concepts and components of neural networks TensorFlow Playground to play around with neural networks by yourself hands-on without any coding, also available on Github to build your own customized offline playground “Deep Learning Simplified” video series on Youtube with several short, simple explanations of basic concepts, alternative algorithms and some frameworks like H2O.ai or Tensorflow While Deep Learning is getting more and more traction, it is not the silver bullet for every scenario. When (not) to use Deep Learning? Deep Learning enables many new possibilities which were not possible in “mass production” a few years ago, e.g. image classification, object recognition, speech translation or natural language processing (NLP) in much more sophisticated ways than without Deep Learning. A key benefit is the automated feature engineering, which costs a lot of time and efforts with most other machine learning alternatives. You can also leverage Deep Learning to make better decisions, increase revenue or reduce risk for existing (“already solved”) problems instead of using other machine learning algorithms. Examples include risk calculation, fraud detection, cross selling and predictive maintenance. However, note that Deep Learning has a few important drawbacks: Very expensive, i.e. slow and compute-intensive; training a deep learning model often takes days or weeks, execution also takes more time than most other algorithms. Hard to interpret: lack of understandability of the result of the analytic model; often a key requirement for legal or compliance regularities Tends to overfitting, and therefore needs regularization Deep Learning is ideal for complex problems. It can also outperform other algorithms in moderate problems. Deep Learning should not be used for simple problems. Other algorithms like logistic regression or decision trees can solve these problems easier and faster. Open Source Deep Learning Frameworks Neural networks are mostly adopted using one of various open source implementations. Various mature deep learning frameworks are available for different programming languages. The following picture shows an overview of open source deep learning frameworks and evaluates several characteristics: These frameworks have in common that they are built for data scientists, i.e. personas with experience in programming, statistics, mathematics and machine learning. Note that writing the source code is not a big task. Typically, only a few lines of codes are needed to build an analytic model. This is completely different from other development tasks like building a web application, where you write hundreds or thousands of lines of code. In Deep Learning – and Data Science in general – it is most important to understand the concepts behind the code to build a good analytic model. Some nice open source tools like KNIME or RapidMinerallow visual coding to speed up development and also encourage citizen data scientists (i.e. people with less experience) to learn the concepts and build deep networks. These tools use own deep learning implementations or other open source libraries like H2O.ai or DeepLearning4j as embedded framework under the hood. If you do not want to build your own model or leverage existing pre-trained models for common deep learning tasks, you might also take a look at the offerings from the big cloud providers, e.g. AWS Polly for Text-to-Speech translation, Google Vision API for Image Content Analysis, or Microsoft’s Bot Framework to build chat bots. The tech giants have years of experience with analysing text, speech, pictures and videos and offer their experience in sophisticated analytic models as a cloud service; pay-as-you-go. You can also improve these existing models with your own data, e.g. train and improve a generic picture recognition model with pictures of your specific industry or scenario. Deep Learning in Conjunction with Visual Analytics No matter if you want to use “just” a framework in your favourite programming language or a visual coding tool: You need to be able to make decisions based on the built neural network. This is where visual analytics comes into play. In short, visual analytics allows any persona to make data-driven decisions instead of listening to gut feeling when analysing complex data sets. See “Using Visual Analytics for Better Decisions – An Online Guide” to understand the key benefits in more detail. A business analyst does not understand anything about deep learning, but just leverages the integrated analytic model to answer its business questions. The analytic model is applied under the hood when the business analyst changes some parameters, features or data sets. Though, visual analytics should also be used by the (citizen) data scientist to build the neural network. See “How to Avoid the Anti-Pattern in Analytics: Three Keys for Machine ...” to understand in more details how technical and non-technical people should work together using visual analytics to build neural networks, which help solving business problems. Even some parts of data preparation are best done within visual analytics tooling. From a technical perspective, Deep Learning frameworks (and in a similar way any other Machine Learning frameworks, of course) can be integrated into visual analytics tooling in different ways. The following list includes a TIBCO Spotfire example for each alternative: Embedded Analytics: Implemented directly within the analytics tool (self-implementation or “OEM”); can be used by the business analyst without any knowledge about machine learning (Spotfire: Clustering via some basic, simple configuration of a input and output data plus cluster size) Native Integration: Connectors to directly access external deep learning clusters. (Spotfire: TERR to use R’s machine learning libraries, KNIME connector to directly integrate with external tooling) Framework API: Access via a Wrapper API in different programming languages. For example, you could integrate MXNet via R or TensorFlow via Python into your visual analytics tooling. This option can always be used and is appropriate if no native integration or connector is available. (Spotfire: MXNet’s R interface via Spotfire’s TERR Integration for using any R library) Integrated as Service via an Analytics Server: Connect external deep learning clusters indirectly via a server-side component of the analytics tool; different frameworks can be accessed by the analytics tool in a similar fashion (Spotfire: Statistics Server for external analytics tools like SAS or Matlab) Cloud Service: Access pre-trained models for common deep learning specific tasks like image recognition, voice recognition or text processing. Not appropriate for very specific, individual business problems of an enterprise. (Spotfire: Call public deep learning services like image recognition, speech translation, or Chat Bot from AWS, Azure, IBM, Google via REST service through Spotfire’s TERR / R interface) All options have in common that you need to add configuration of some hyper-parameters, i.e. “high level” parameters like problem type, feature selection or regularization level. Depending on the integration option, this can be very technical and low level, or simplified and less flexible using terms which the business analyst understands. Deep Learning Example: Autoencoder Template for TIBCO Spotfire Let’s take one specific category of neural networks as example: Autoencoders to find anomalies. Autoencoder is an unsupervised neural network used to replicate the input dataset by restricting the number of hidden layers in a neural network. A reconstruction error is generated upon prediction. The higher the reconstruction error, the higher is the possibility of that data point being an anomaly. Use Cases for Autoencoders include fighting financial crime, monitoring equipment sensors, healthcare claims fraud, or detecting manufacturing defects. A generic TIBCO Spotfire template is available in the TIBCO Community for free. You can simply add your data set and leverage the template to find anomalies using Autoencoders – without any complex configuration or even coding. Under the hood, the template uses H2O.ai’s deep learning implementation and its R API. It runs in a local instance on the machine where to run Spotfire. You can also take a look at the R code, but this is not needed to use the template at all and therefore optional. Real World Example: Anomaly Detection for Predictive Maintenance Let’s use the Autoencoder for a real-world example. In telco, you have to analyse the infrastructure continuously to find problems and issues within the network. Best before the failure happens so that you can fix it before the customer even notices the problem. Take a look at the following picture, which shows historical data of a telco network: The orange dots are spikes which occur as first indication of a technical problem in the infrastructure. The red dots show a constant failure where mechanics have to replace parts of the network because it does not work anymore. Autoencoders can be used to detect network issues before they actually happen. TIBCO Spotfire is uses H2O’s autoencoder in the background to find the anomalies. As discussed before, the source code is relative scarce. Here is the snipped of building the analytic model with H2O’s Deep Learning R API and detecting the anomalies (by finding out the reconstruction error of the Autoencoder): This analytic model – built by the data scientist – is integrated into TIBCO Spotfire. The business analyst is able to visually analyse the historical data and the insights of the Autoencoder. This combination allows data scientists and business analysts to work together fluently. It was never easier to implement predictive maintenance and create huge business value by reducing risk and costs. Apply Analytic Models to Real Time Processing with Streaming Analytics This article focuses on building deep learning models with Data Science Frameworks and Visual Analytics. Key for success in projects is to apply the build analytic model to new events in real time to add business value like increasing revenue, reducing cost or reducing risk. “How to Apply Machine Learning to Event Processing” describes in more detail how to apply analytic models to real time processing. Or watch the corresponding video recording leveraging TIBCO StreamBase to apply some H2O models in real time. Finally, I can recommend to learn about various streaming analytics frameworks to apply analytic models. Let’s come back to the Autoencoder use case to realize predictive maintenance in telcos. In TIBCO StreamBase, you can easily apply the built H2O Autoencoder model without any redevelopment via StreamBase’ H2O connector. You just attach the Java code generated by H2O framework, which contains the analytic model and compiles to very performant JVM bytecode: The most important lesson learned: Think about the execution requirements before building the analytic model. What performance do you need regarding latency? How many events do you need to process per minute, second or millisecond? Do you need to distribute the analytic model to a clusters with many nodes? How often do you have to improve and redeploy the analytic model? You need to answer these questions at the beginning of your project to avoid double efforts and redevelopment of analytic models! Another important fact is that analytic models do not always need “real time processing” in terms of very fast and / or frequent model execution. In the above telco example, these spikes and failures might happen in subsequent days or even weeks. Thus, in many use cases, it is fine to apply an analytic model once a day or week instead of just every second to every new event, therefore. Deep Learning + Visual Analytics + Streaming Analytics = Next Generation Big Data Success Stories Deep Learning allows to solve many well understood problems like cross selling, fraud detection or predictive maintenance in a more efficient way. In addition, you can solve additional scenarios, which were not possible to solve before, like accurate and efficient object detection or speech-to-text translation. Visual Analytics is a key component in Deep Learning projects to be successful. It eases the development of deep neural networks by (citizen) data scientists and allows business analysts to leverage these analytic models to find new insights and patterns. Today, (citizen) data scientists use programming languages like R or Python, deep learning frameworks like Theano, TensorFlow, MXNet or H2O’s Deep Water and a visual analytics tool like TIBCO Spotfire to build deep neural networks. The analytic model is embedded into a view for the business analyst to leverage it without knowing the technology details. In the future, visual analytics tools might embed neural network features like they already embed other machine learning features like clustering or logistic regression today. This will allow business analysts to leverage Deep Learning without the help of a data scientist and be appropriate for simpler use cases. However, do not forget that building an analytic model to find insights is just the first part of a project. Deploying it to real time afterwards is as important as second step. Good integration between tooling for finding insights and applying insights to new events can improve time-to-market and model quality in data science projects significantly. The development lifecycle is a continuous closed loop. The analytic model needs to be validated and rebuild in certain sequences.

0 notes

Text

Python Pros and Cons: What are The Benefits and Downsides of the Programming Language

Python is getting more attention than usual this year, becoming one of the most popular programming languages in the world. Is it a good choice for your next project? Let’s see some advantages and disadvantages of Python to help you decide.

Python is Almost 30 Years Old, but it’s Growing Very Fast

Python is a popular, high-level, general purpose, dynamic programming language that has been present on the market for almost 30 years now. It can be easily found almost anywhere today: web and desktop apps, machine learning, network servers and many more. It’s used for small projects, but also by companies like Google, Facebook, Microsoft, Netflix, Dropbox, Mozilla or NASA. Python is the fastest growing programming language according to StackOverflow Trends. Projections of future traffic for major programming languages show that Python should overtake Java in 2018. Indeed.com, a worldwide employment-related search engine for job listings, ranks Python as the third most profitable programming language in the world. This means that more and more programmers are learning this language and using it. Why is Python so popular these days?

Copy of Blog interviews – quotes (1)-2

Python - the most important benefits of using this programming language

Versatile, Easy to Use and Fast to Develop

Python focuses on code readability. The language is versatile, neat, easy to use and learn, readable, and well-structured.

Gregory Reshetniak, Software Architect at Nokia, says: - Myself and others have been using Python for both quick scripting as well as developing enterprise software for Fortune 500 companies. It’s power is flexibility and ease of use in both cases. The learning curve is very mild and the language is feature-rich. Python is dynamically typed, which makes it friendly and faster to develop with, providing REPL as well as notebook-like environments such as Jupyter. The latter is quickly becoming the de facto working environment for data scientists. Due to Python’s flexibility, it’s easy to conduct exploratory data analysis - basically looking for needles in the haystack when you’re not sure what the needle is. Python allows you to take the best of different paradigms of programming. It’s object oriented, but also actively adopts functional programming features.

Open Source with a Vibrant Community

You can download Python for free and writing code in a matter of minutes. Developing with Python is hassle-free. What’s more, the Python programmers community is one of the best in the world - it’s very large and active. Some of the best IT minds in the world are contributing to both the language itself and its support forums.

Has All the Libraries You Can Imagine

You can find a library for basically anything you could imagine: from web development, through game development, to machine learning.

Great for Prototypes - You Can Do More with Less Code

As it was mentioned before, Python is easy to learn and fast to develop with. You can do more with less code, which means you can build prototypes and test out ideas much quicker in Python than in other languages. This means that using Python not only to saves a lot of time, but also reduce your company’s costs.

Limitations or Disadvantages of Python

Experienced programmers always recommend to use the right tools for the project. It’s good to know not only Python’s advantages, but also its disadvantages.

What problems can you face by choosing this programming language?

Speed Limitations

Python is an interpreted language, so you may find that it is slower than some other popular languages. But if speed is not the most important consideration for your project, then Python will serve you just fine.

Problems with Threading

Threading is not really good in Python due to the Global Interpreter Lock (GIL). GIL is simply a mutex that allows only one thread to execute at a time. As a result, multi-threaded CPU-bound programs may be slower than single-threaded ones - says Mateusz Opala, Machine Learning Leader at Netguru. Luckily there’s a solution for this problem. - We need to implement multiprocessing programs instead of multithreaded ones. That's what we often do for data processing.

Not Native to Mobile Environment

Python is not native to mobile environment and it is seen by some programmers as a weak language for mobile computing. Android and iOS don’t support Python as an official programming language. Still, Python can be easily used for mobile purposes, but it requires some additional effort.[Source]-https://www.netguru.com/blog/python-pros-and-cons-what-are-the-benefits-and-downsides-of-the-programming-language

Advanced level Python Certification Course with 100% Job Assistance Guarantee Provided. We Have 3 Sessions Per Week And 90 Hours Certified Basic Python Classes In Thane Training Offered By Asterix Solution

0 notes

Text

Preparing for a Data Science/Machine Learning Bootcamp

If you are reading this article, there is a good chance you are considering taking a Machine Learning(ML) or Data Science(DS) program soon and do not know where to start. Though it has a steep learning curve, I would highly recommend and encourage you to take this step. Machine Learning is fascinating and offers tremendous predictive power. If ML researcher continue with the innovations that are happening today, ML is going to be an integral part of every business domain in the near future.

Many a time, I hear, "Where do I begin?". Watching videos or reading articles is not enough to acquire hands-on experience and people become quickly overwhelmed with many mathematical/statistical concepts and python libraries. When I started my first Machine Learning program, I was in the same boat. I used to Google for every unknown term and add "for dummies" at the end :-). Over time, I realized that my learning process would have been significantly smoother had I spent 2 to 3 months on the prerequisites (7 to 10 hours a week) for these boot camps. My goal in this post is to share my experience and the resources I have consulted to complete these programs.

One question you may have is whether you will be ready to work in the ML domain after program completion. In my opinion, it depends on the number of years of experience that you have. If you are in school, just graduated or have a couple of years of experience, you will likely find an internship or entry-level position in the ML domain. For others with more experience, the best approach will be to implement the projects from your boot camp at your current workplace on your own and then take on new projects in a couple of years. I also highly recommend participating in Kaggle competitions and related discussions. It goes without saying that one needs to stay updated with recent advancements in ML, as the area is continuously evolving. For example, automated feature engineering is growing traction and will significantly simplify a Data Scientist's work in this area.

This list of boot camp prerequisite resources is thorough and hence, long :-). My intention is NOT to overwhelm or discourage you but to prepare you for an ML boot camp. You may already be familiar with some of the areas and can skip those sections. On the other hand, if you are in high school, I would recommend completing high school algebra and calculus before moving forward with these resources.

As you may already know, Machine Learning (or Data Science) is a multidisciplinary study. The study involves an introductory college-level understanding of Statistics, Calculus, Linear Algebra, Object-Oriented Programming(OOP) basics, SQL and Python, and viable domain knowledge. Domain knowledge comes with working in a specific industry and can be improved consciously over time. For the rest, here are the books and online resources I have found useful along with the estimated time it took me to cover each of these areas.

Before I begin with the list, a single piece of advice that most find useful for these boot camps is avoid going down the rabbit hole. First, learn how without fully knowing why. This may be counter-intuitive but it will help you learn all the bits and pieces that work together in Machine Learning. Try to stay within the estimated hours(maybe 25% more) I have suggested. Once you have a good handle on the how, you will be in a better position to deep five into each of the areas that make ML possible.

Machine Learning:

Machine Learning Basics - Principles of Data Science: Sinan Ozdemir does a great job of introducing us to the world of machine learning. It is easy to understand without prior programming or mathematical knowledge. (Estimated time: 5 hours)

Applications of Machine Learning - A-Z by Udemy: This course cost less than $20 and gives an overview of what business problems/challenges are solved with machine learning and how. This keeps you excited and motivated if and when you are wondering why on earth you are suddenly learning second-order partial derivatives or eigenvalues and eigenvectors. Just watching the videos and reading through the solutions will suffice at this point. Your priority code and domain familiarity. (Estimated time: 2-3 hours/week until completion. If you do not understand fully, that is ok at this time).

Reference Book - ORielly: Read this book after you are comfortable with Python and other ML concepts that are mentioned here but not necessary to start a program.

SQL:

SQL Basics - HackerRank: You will not need to write SQL as most ML programs provide you with CSV files to work with. However, knowing SQL will help you to get up to speed with pandas, Python's data manipulation library. Not to mention it is a necessary skill for Data Scientists. HackerRank expects some basic understanding of joins, aggregation function etc. If you are just starting out with SQL, my previous posts on databases may help before you start with HackerRank. (Estimated time: Couple of hours/week until you are comfortable with advanced analytic queries. SQL is very simple, all you need is practice!)

OOP:

OOP Basics - OOP in Python : Though OOP is widespread in machine learning engineering and data engineering domain, Data Scientists need not have deep knowledge of OOP. However, we benefit from knowing the basics of OOP. Besides, ML libraries in Python make heavy use of OOP and being able to understand OOP code and the errors it throws will make you self sufficient and expedite your learning. (Estimated time: 10 hours)

Python:

Python Basics - learnpython: If you are new to programming, start with the basics: data types, data structures, string operations, functions, and classes. (Estimated time: 10 hours)

Intermediate Python - datacamp: If you are already a beginner python programmer, devote a couple of weeks to this. Python is one of the simplest languages and you can continue to pick up more Python as you undergo your ML program. (Estimated hours: 3-5 hours/week until you are comfortable creating a class for your code and instantiating it whenever you need it. For example, creating a data exploration class and call it for every data set for analysis.

Data Manipulation - 10 minutes to Pandas: 10 minutes perhaps is not enough but 10 hours with Pandas will be super helpful in working with data frames: joining, slicing, aggregating, filtering etc. (Estimated time: 2-3 hours/week for a month)

Data Visualization - matplotlob: All of the hard work that goes into preparing data and building models will be of no use unless we share the model output in a way that is visually appealing and interpretable to your audience. Spend a few hours understanding line plots, bar charts, box plots, scatter plots and time-series that is generally used to present the output. Seaborne is another powerful visualization library but you can look into that later. (Estimated time: 5 hours)

Community help - stackoverflow: Python's popularity in the engineering and data science communities makes it easy for anyone to get started. If you have a question on how to do something in Python, you will most probably find an answer on StackOverflow.

Probability & Statistics:

Summary Statistics - statisticshowto: A couple of hours will be sufficient to understand the basic theories: mean, median, range, quartile, interquartile range.

Probability Distributions - analyticsvidya: Understanding data distribution is the most important step before choosing a machine learning algorithm. As you get familiar with the algorithms, you will learn that each one of them makes certain assumptions on the data, and feeding data to a model that does not satisfy the model's assumptions will deliver the wrong results. (Estimated time: 10 hours)

Conditional Probability - Khanacademy : Conditional Probability is the basis of Bayes Theorem, and one must understand Bayes theorem because it provides a rule for moving from a prior probability to a posterior probability. It is even used in parameter optimization techniques. A few hands-on exercises will help develop a concrete understanding. (Estimated Time: 5 hours)

Hypothesis Testing - PennState: Hypothesis Testing is the basis of Confusion Matrix and Confusion Matrix is the basis for most model diagnostics. It is an important concept you will come across very frequently. (Estimated Time: 10 hours)

Simple Linear Regression - Yale & Columbia Business School: The first concept most ML programs will teach you is linear regression and prediction on a data set with a linear relationship. Over time, you will be introduced to models that work with non-linear data but the basic concept of prediction stays the same. (Estimated time: 10 hours)

Reference book - Introductory Statistics: If and when you want a break from the computer screen, this book by Robert Gould and Colleen Ryan explains topics ranging from "What are Data" to "Linear Regression Model".

Calculus:

Basic Derivative Rules - KhanAcademy: In machine learning, we use optimization algorithms to minimize loss functions (different between actual and predicted output). These optimization algorithms (such as gradient descent) uses derivatives to minimize the loss function. At this point, do not try to understand loss function or how the algorithm works. When the time comes, knowing the basic derivative rules will make understanding loss function comparatively easy. Now, if you are 4 years past college, chances are you have a blurred the memory of calculus (unless, of course, math is your superpower). Read the basics to refresh your calculus knowledge and attempt the unit test at the end. (Estimated Time: 10 hours)

Partial derivative - Columbia: In the real world, there is rarely a scenario where there is a function of only one variable. (For example, a seedling grows depending on how sun, water, minerals it gets. Most data sets are multidimensional. Hence the need to know partial derivatives. These two articles are excellent and provide the math behind the Gradient Descent. Rules of calculus - multivariate and Economic Interpretation of multivariate Calculus. (Estimated Time: 10 hours)

Linear Algebra:

Brief refresher - Udacity: Datasets used for Machine learning models are often high dimensional data and represented as a matrix. Many ML concepts are tied to Linear Algebra and it is important to have the basics covered. This may be a refresher course, but at their cores, it is equally useful for those who are just getting to know Linear Algebra. (Estimated Time: 5 hours)

Matrices, eigenvalues, and eigenvectors - Stata: This post has intuitively explained matrices and will help you to visualize them. Continue to the next post on eigenvalues and vectors as well. Many a time, we are dealing with a data set with a large number of variables and many of them are strongly correlated. To reduce dimensionality, we use Principal Component Analysis (PCA), at the core of which is Eigenvalues and Eigenvectors. (Estimated Time: 5 hours)

PCA, eigenvalues, and eigenvectors - StackExchange: This comment/answer does a wonderful job in intuitively explaining PCA and how it relates to eigenvalues and eigenvectors. Read the answer with the highest number of votes (the one with Grandmother, Mother, Spouse, Daughter sequence). Read it multiple times if it does not make sense in the first take. (Estimated Time: 2-3 hours)

Reference Book - Linear Algebra Done Right: For further reading, Sheldon Axler's book is a great reference but completely optional for the ML coursework.

These are the math and programming basics that are needed to get started with Machine Learning. You may not understand everything at this point ( and that is ok) but some degree of familiarity and having an additional resource handy will make the learning process enjoyable. This is an exciting path and I hope sharing my experience with you helps in your next step. If you have further questions, feel free to email me or comment here!

0 notes

Text

1-to-1 Python Programming Courses in Bangladesh

Python is the most wanted programming language for few years according to the word wide developers’ surveys. It has skyrocketed its popularity in machine learning, data science, artificial intelligence, and various scientific work including web application development, desktop application, cross platform business solutions, etc. High salary jobs are available for Python both in Bangladesh and abroad. In freelancing sectors there is a great demand of Python. But there is a lack of training and/or course available for it in Bangladesh. Bangladeshi people started to learn the value of this very valuable programming language after a long time Python took the world by storm. Even after they realized the training institutes are stick with old and dying languages and technologies due to the lack of professional trainer. But, here we are, here we are with more than one decade of solid Python expertise in Python and relevant technologies.

Python Programming Basic to Advance Course in Bangladesh

Python programming has many uses in various technologies. But before you can jump into that you need to learn the language top to bottom first. I have seen a lot of people jumping into the bandwagon of learning machine learning, big data, NLP, etc. before learning the language properly. What is the result? Frustration, failure, lower self esteem! After some days they leave one of the most valuable language forever.

So, without doing the same mistake like others, join my Python Language Learning Course. Instead of calling it Python Basics Course I call it Python Language Learning Course as I not only teach the basics but also teach the intermediate and advanced stuffs along with giving idea of web development, machine learning, natural language processing, big data, and few other stuffs at the end of the course. Go to the course details section for learning more.

Machine Learning Course with Python in Bangladesh

From the technical point of view, we the humans are biological machines. After we are born we have no knowledge by default. We look around, we observe, we touch, we feel, we hear, we start to learn – actually we start to get trained. We grow up as knowledgeable and skilled person. But the level of knowledge and skill depends on the level of training we get all long of our life. But here is a catch, we the human has something built-in in the very depth of us to learn new things every day, every moment of our life.

The God created the humans and the human played god to create new and new machines. These machines have limited capability of doing its tasks. They are as sophisticated as we make them. But, look at the modern computers, robots and other intelligent digital machines. What you see? They are getting more smarter and better every every year, every, month, every, week, every day … actually every moment. Some of the machines are smarter than others. So, the question of human-god (god of machines) is: ‘how to make my creations smarter and better?’ The answer is ‘Machine Learning’. Machine learning is that branch of computer science that help human learn how to teach the machines to become more intelligent.

I am here to teach you how to teach the machines and play the little god. Look at our course section to know more about it.

N.B.: In this text I used ‘God’ to refer to the almighty God who created us. By the small ‘god’ I referred to those humans who create new things. Don’t get it wrong in any way. The God created us as small ‘gods’. We the human can create machines, we can modify biological machines (humans, animals, trees, etc.) to some degree, but we cannot create them – that is a power of the almighty God. So, you have to use the god power in you to create machines and make them smarter and you should try to develop them such that they can improve themselves by learning from the surroundings.

Big Data Course with Python in Bangladesh

In the modern world, data is everything. The more data you have the more educated decision you can make. The use of it spans from business, trade, economics, politics, education, health to our daily life small tasks, our mental health, environment, religion, etc.

Our ancient ancestors used to store data on stones. Doing fast forward in history, in the modern world we store data digitally. Every day zillions of bytes of data are produced. Without processing data there is no value to it. With the proper processing, we can make our data as valuable that it can provide much more value to the world that it will overlap the value of diamonds. Again, with proper processing it can so much dangerous that the whole world will be affected by that. Do you remember Cambridge Analytica and the winning of Trump? That is a game of data. With proper use of it, one businessman from one of the most powerful country became the president and it is obvious that he is influencing the politics of the whole world along with it’s environment (very important as Trump went against various international environmental agreements), economics, trade, business, etc.

The people who are behind the big data processing are the dark ring masters in the modern world (meaning of dark varies – I used it to refer to ‘power’ here). Without wasting your time take your first step to learn big data from today. Look at our course section to know more about this.

Data Mining and Scraping Training in Python in Bangladesh

Data is the gold, diamond and platinum of the modern world. You need to learn to mine the right way. You cannot just go to thousands of websites, copy the data in excel and spend decades to just collect them to analyze and make a decision against your analysis. You need to be faster than anyone else to win the race. To work with data that you don’t own or that is available online and other places in a non-structured format, you need to scrap them, you need to mine them and be the dark lord of data. The more data you have, the better analysis can you run and the best educated decision you can make.

Data mining and data scraping is an art that you cannot master going through different blogs online. You need a pro trainer who started his first big application in Python one decade ago by mining and processing what he could not afford to buy as a little guy back then. Look at our course section to learn more.

Desktop Application Development Training with Python in Bangladesh

Some people say that the modern world is the world of web applications. Well, the are right to some extent and absolutely wrong in others. The modern world is ruled by mobile, web and desktop applications – yes, by all three of them. But, every industry is not ruled by all of them. There are splits in the market. So, where does desktop applications fit? It fits better than anywhere in the corporate world. It fits better than anything in business applications. It fits better than anything when security is a big concern. It fits better than anything when speed is the most important factor. If you are targeting the corporate world, the big businesses you need to develop desktop applications. Also, remember that the browsers by which you use web applications are desktop applications. The IDEs you use for development of mobile apps are desktop applications. The graphics and 3D applications you use (and buy with a big price tag) are all desktop applications. Web or mobile applications can never be as powerful as desktop applications. Again, web applications are hosted on the cloud and could means nothing more that computer that owns someone else. So, when you have very sensitive business data you cannot trust any third party – not even Amazon or Google. Remember the PRISM project of NSA? No business with the knowledge of this will ever host their business data on the cloud. Developing a career in desktop applications are targeting the corporate world you will be able to earn better and secure a better future.

Why Python for desktop applications? There is a lot to talk about this, but I will be very brief here. Python is a cross platform programming language. You can run application developed by it on any modern desktop OS available on the earth. You don’t need to hit the compile button and wait for minutes to hours just after changing one line of code – prototyping phase is much faster in it. You have plenty of libraries and frameworks available in Python to use with your desktop application. It consumes less memory (much more less than Java, C#, etc.) – many business still uses very old PCs with very small amount of RAM (go visit any govt. office and you will see that they are still using XP). It is very fast compared to many languages. With using JIT (e.g. pypy) you can get faster performance compared to JAVA and other modern languages.

Go to our course section to learn more about this course.

Web Application Development Training in Python in Bangladesh

Web application is everywhere. Many mobile and desktop applications are getting deprecated and moving toward the web applications. This is the most lucrative profession for developers, programmers and engineers. The number of jobs in this sector is increasing every day. With a web application you don’t need to develop different app for different platforms. But, the number of web application developer in Python is very low compared to other languages. Again, in Bangladesh the number is very very low. To win you next job or the next project in web development bypassing all those low quality competition, you should learn web development in Python today.

I teach web development with Python using the cutting edge Django full stack web development framework. I prefer Django over Flask and others for it’s better architecture. Yes, I also provide training in Flask, Aiohttp, Tornado, Twisted. Look at the course section for more information.

Network Programming Training in Python in Bangladesh

Python and networking fits very well together. If you want to work on low level networking stuffs, you can choose Python for thousands of pros. It is cross platform – code once, run anywhere. It has a huge list of libraries for any task you need to perform. Again, if you are a networking professional, you can automate the boring tasks with it and live happily. Be smart, be intelligent, be lazy – when others will get tired manually doing stuffs and not getting the desired result in time, you will be done within seconds getting a lot of time to relax.

I start with teaching low level socket programming. After making you master in that, I move forward teaching you HTTP programming in Python, SMTP programming in Python, Web Socket Programming in Python and various other cool stuffs. I will cover Twisted, requests, urlib(s), aiohttp and some other cool libraries and frameworks in Python. Look at the course section for more information.

Matplotlib, Numpy, SciPy, etc. Python Training in Bangladesh

Python excels better than any other language in the scientific applications. I provide training for them. Contact me for knowing the fees and other details.

Custom Python Trainings in Bangladesh

The list above is not a complete list. There are various other stuffs in the world of Python. Various types of professions needs various types of training in Python. In more than one decade of time I have trained many professionals from various walks of life. You might need some training in Python that none around the country provide. Just contact me and secure your slot of the personal professional training.

This is not the End

These are not the end of what I can help you do in Python. Contact me, call me, email me, meet me to tell your story and let me help you. A personal professional trainer with solid expertise in Python will train you to be a Python rock star.

Contact: [email protected]

0 notes

Text

Step by step approach to perform data analysis using Python

Info is everywhere and part and parcel of every business or processes performing a small business or in easier an eternal resource, but almost no people realized their importance before this period of the surge towards data-intensive applications. The biggest reason attributed up to now for the delay of arriving of this data age where data appears to be the new oil has been the deficiency of computation power.

The most frequent and powerful machine learning algorithms were developed way before in late 1990, however, the calculation power was never there to fully augment these algorithms to be used where their power could be felt. A major roadblock in the use of these resources acquired been the non-availability of efficient open source dialects capable of dealing with different algorithms and permitting a single user to delve into the world of data and really funnel the use of data in building amazing applications. In conditions of different languages that are enabling people to make a damage in this data-driven world then, certainly, python has taken the cake considering its usefulness both as an over-all purpose vocabulary and especially for dealing with different facets of data science be it computer vision, Natural language refinement, Predictive modelling, and of-course visualization.