#i see an ai image and all i see is decades of hard work that was stolen like if u ripped the bones out of a living person


Text

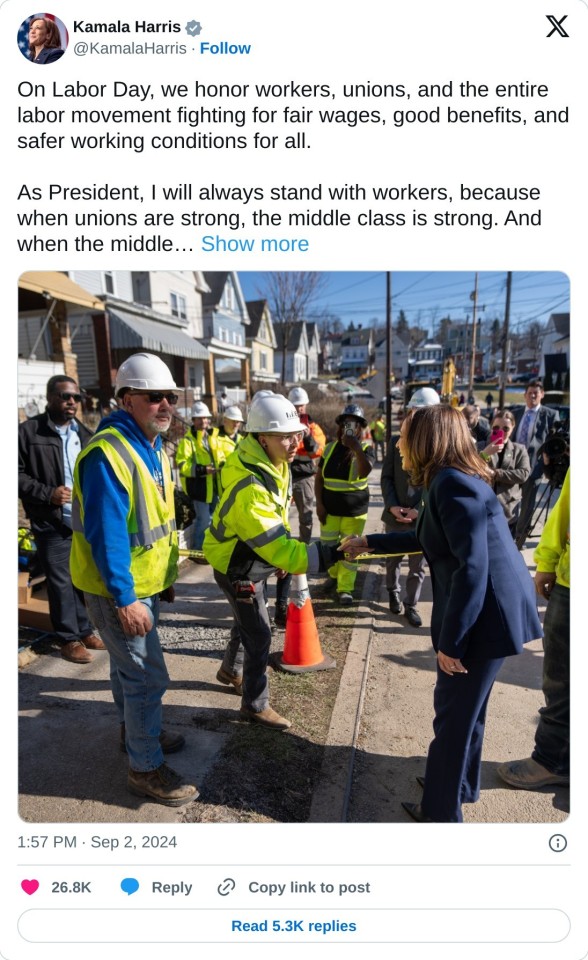

Candidates' Different Views on Labor Day

Their contrasting social media posts, with bonus federal law violation and (I think?) AI generated fake workers from Trump, are illuminating:

Contrast that with Trump and Musk joking about firing striking workers a few weeks ago:

Under Biden, Harris chaired Biden's pro-labor task force assigned to "promote [Biden's] policy for worker power, worker organizing, and collective bargaining." Here is the plan they created with specific proposals.

As I've noted, my intro to Harris was at a 2017 rally for the ACA organized by SEIU Local 721. As VP, she's done a lot of union outreach, like this speech celebrating collective bargaining to honor the Culinary Workers Union in Las Vegas.

---

Meanwhile, Trump's Labor Day email to his followers is a shill for illegal merch:

US Public Law 94-344, the Federal Flag Code: "Out of respect for the US flag, never place anything on the flag, including letters, insignia, or designs of any kind. [...] Use the flag for advertising or promotional purposes."

The Flag Code was a big deal in the 80s, Trump's favorite decade. Congress passed a law with a big fine and/or jail time for knowingly violating it. The Supreme Court rightly struck down those penalties as a violation of free speech, but the code remains.

Trump followed this email with Labor Day posts on his social media platform:

Happy Labor Day to all of our American Workers who represent the Shining Example of Hard Work and Ingenuity. Under Comrade Kamala Harris, all Americans are suffering during this Holiday weekend - High Gas Prices, Transportation Costs are up, and Grocery Prices are through the roof. We can’t keep living under this weak and failed “Leadership.”….

Workers an afterthought. Every time he calls Kamala "Comrade," I remember Russian news broadcasts calling him "Comrade Trump."

….In my First Term, we achieved Major Successes to protect American Workers by negotiating Free and Fair Trade Deals, passing the USMCA (U.S./Mexico/Canada), and giving Businesses and their Workers the tools to thrive. We also invested heavily in Education and Job Training programs for those who wish to expand upon their abilities, and be successful in an Industry that they love. We were an Economic Powerhouse, all because of the American Worker! But Kamala and Biden have undone all of that. When I return to the White House, we will continue upon our Successes by creating an Environment that ensures ALL Workers, and Businesses, have the opportunity to prosper and achieve their American Dream. We will, MAKE AMERICA GREAT AGAIN!

Dismantling Obama's trade deals and putting in his own, which raised tariffs and prices and killed supply chains, making shit up, and taking credit for Biden's job training programs: par for the course. Labor Day? All about ME ME ME.

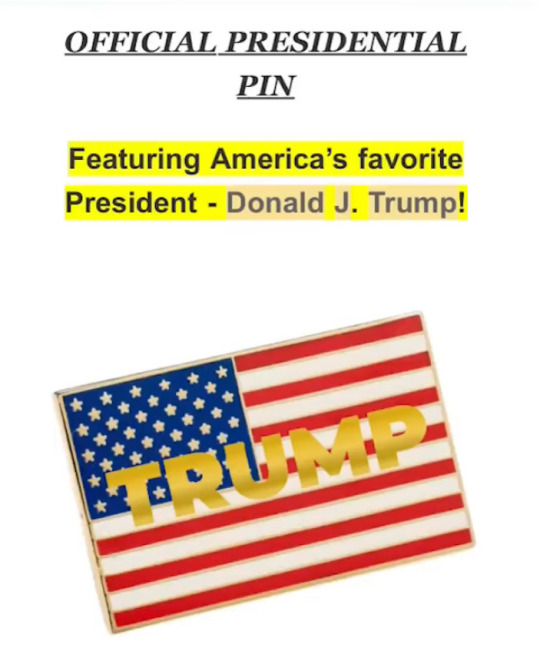

Someone on the Trump campaign realized that he probably needed a picture of himself with workers, since Kamala had posted one shaking hands with them.

However, there's something off about this image posted to his social media site at 5PM:

The suit and tie are so unnaturally smooth, I did a reverse image search to see if it was a real photo. Zero results. What are the chances? Any photo like this should already be on the web. And there's what look to be AI artifacts (e.g., guy on left missing half of vest, fragments of orange stripes).

TL;DR: I don't think he found a group of workers or cosplayers to loom in front of; I think he's embraced the AI generated crowds he falsely accused Harris of using.

10 notes


Text

Years ago, when people still used Boolean search and I was a cub reporter, I worked with photographer Nick Ut at the Associated Press. It felt like being in the presence of one of the Greats, even though he never acted like it. We drank the same office coffee, even as I was barely out of journalism school and he had a Pulitzer Prize that was nearly three decades old. Ut, if you don’t recognize the name, took the photo of “Napalm Girl”: Kim Phuc, whom Ut captured in 1972, at 9 years old, running from a bombing in Vietnam.

Lots of people know that photo. It’s one of the most searing images to come out of the Vietnam War—one that shifted attitudes about the conflict. Ut himself wrote many years later that he knew a single photo could change the world. “I know, because I took one that did.”

Hundreds of photos have come out of the Israel-Hamas war since it began more than seven months ago. Bombed out buildings, mass funerals, damaged hospitals, more injured children. But, as of this week, there’s one that’s garnered more attention than most: “All eyes on Rafah.”

The image features what appears to be an AI-generated landscape in which a series of refugee tents spells out the image’s title phrase. The exact origins of the image are murky, but as of this writing it’s reportedly been shared more than 47 million times on Instagram, with many of those shares coming in the 48 hours after an Israeli strike killed 45 people in a camp for displaced Palestinians, according to the Gaza Health Ministry. The image was also shared widely on TikTok and X, where a pro-Palestine account’s post featuring the image has been viewed nearly 10 million times.

As “All eyes on Rafah” circulated, Shayan Sardarizadeh, a journalist with BBC Verify, posted on X that it “has now become the most viral AI-generated image I’ve ever seen.” Ironic, then, that all those eyes on Rafah aren’t really seeing Rafah at all.

Establishing AI’s role in the act of news-spreading got fraught quickly. Meta, as NBC News pointed out this week, has made efforts to restrict political content on its platforms even as Instagram has become a “crucial outlet for Palestinian journalists.” The result is that actual footage from Rafah may be restricted as “graphic or violent content” while an AI image of tents can spread far and wide. People may want to see what’s happening on the ground in Gaza, but it’s an AI illustration that’s allowed to find its way to their feeds. It’s devastating.

Journalists, meanwhile, sit in the position of having their work fed into large-language models. On Wednesday, Axios reported that Vox Media and The Atlantic had both made deals with OpenAI that would allow the ChatGPT maker to use their content to train its AI models. Writing in The Atlantic itself, Damon Beres called it a “devil’s bargain,” pointing out the copyright and ethical battles AI is currently fighting and noting that the technology has “not exactly felt like a friend to the news industry”—a statement that may one day itself find its way into a chatbot’s memory. Give it a few years and much of the information out there—most of what people “see”—won’t come from witness accounts or result from a human looking at evidence and applying critical thinking. It will be a facsimile of what they reported, presented in a manner deemed appropriate.

Admittedly, this is drastic. As Beres noted, “generative AI could turn out to be fine,” but there is room for concern. On Thursday, WIRED published a massive report looking at how generative AI is being used in elections around the world. It highlighted everything from fake images of Donald Trump with Black voters to deepfake robocalls from President Biden. It’ll get updated throughout the year, and my guess is that it’ll be hard to keep up with all the misinformation that comes from AI generators. One image may have put eyes on Rafah, but it could just as easily put eyes on something false or misleading. AI can learn from humans, but it cannot, like Ut did, save people from the things they do to each other.

16 notes


Text

So this new AI policy from Tumblr is putting me in a very uncomfortable state. I need to jot down some of these thoughts to get my head straight and to solicit some advice from other users.

For context, before this site, I posted my art from 2021-2022 on DeviantArt. Before that, I had posted there as a crummy teen over a decade ago, so it had a sense of familiarity that made me go back to it in my current day as a hobbyist artist. dA's brazen decision to create their own AI generator from user data and have everyone be opt-in by default was something that ran too far counter to my principles. I made the decision to leave the site, and instead began to post on Pixiv and Tumblr. You can find my thoughts from that time here.

I have conflicted feelings over AI images, as I've previously supported an open policy for the reuse of art which wouldn't technically be opposed to AI use, but I'm also sympathetic to the rights of artists and don't want to see them abused. And I have to admit that seeing the deleterious effect on the art space over the last year has hardened me further against the idea of AI images.

My one red line is the importance of attribution to the original artist, which dA's AI engine did not respect at all, so out of solidarity I had to abandon the site. This opt-in policy for AI scraping from Tumblr is to me slightly less offensive than dA's actions, but I still find it a crossing of my line. By my own principles, I am leaning towards having to leave this site as well.

Unfortunately, the choice feels much harder this time. While it felt bad to leave dA and people who watched me there, I was ultimately able to live with the choice (especially after providing links to follow me here and on Pixiv). The problem is that I feel even more beholden to the community here on Tumblr. Of course I'm being a little egotistical here as I don't have a real audience to speak of here, but I often feel real resonation with the people who do stumble over my images.

To be clear, I was happy and humbled by all the people who liked my works on dA, and continue to be so by people who like it on Pixiv. It's just that with Tumblr, even if I have fewer views, I often get messages that make it sound like my images were really appreciated. It's like every topic, even an obscure work, seems to have a fandom here that really appreciates when art of their topic is made. Even my shoddier earlier images like my Apex Legends images or my first image of Snufkin have gotten comments about the poster being really affected by it, and that in turn really affected me.

So I'm really conflicted now on what my course of action should be. My principles tell me that Tumblr's actions regarding AI are not agreeable and I should walk away. But the community here is making me have a real hard time pulling the trigger this time. BUT BUT, another voice is making me question if it's just my selfishness making me not want to abandon the positive attention here, and I'm betraying my solidarity to other artists who are being trampled by Tumblr's policy by choosing that attention instead.

I also have the challenge of figuring out where to go from here. Am I to just stick to Pixiv? (They do allow AI images, but as far as I know they don't promote the scraping of non-AI art without consent from its artists, which is why it doesn't cross my red line. If someone knows differently about Pixiv, DO let me know.) I have no idea what other art sites I could migrate to at this point. I still find the internet at large an intimidating place to interact in, so I feel completely clueless on what to do.

I don't know, this entire blog post might be an exercise in ego-stroking. I know I'm not entitled to an audience for my art, nor advice on this conundrum. Certainly, there are professional artists way more affected than myself by the AI situation, and anyone reading this should consider their situation far before mine. If this blog post is inconsiderate, I'll accept that I'm out of my league and hold my tongue. But if anyone resonates with this post and could provide any advice, please know that you would be appreciated.

Hi, Tumblr. It’s Tumblr. We’re working on some things that we want to share with you.

AI companies are acquiring content across the internet for a variety of purposes in all sorts of ways. There are currently very few regulations giving individuals control over how their content is used by AI platforms. Proposed regulations around the world, like the European Union’s AI Act, would give individuals more control over whether and how their content is utilized by this emerging technology. We support this right regardless of geographic location, so we’re releasing a toggle to opt out of sharing content from your public blogs with third parties, including AI platforms that use this content for model training. We’re also working with partners to ensure you have as much control as possible regarding what content is used.

Here are the important details:

We already discourage AI crawlers from gathering content from Tumblr and will continue to do so, save for those with which we partner.

We want to represent all of you on Tumblr and ensure that protections are in place for how your content is used. We are committed to making sure our partners respect those decisions.

To opt out of sharing your public blogs’ content with third parties, visit each of your public blogs’ blog settings via the web interface and toggle on the “Prevent third-party sharing” option.

For instructions on how to opt out using the latest version of the app, please visit this Help Center doc.

Please note: If you’ve already chosen to discourage search crawling of your blog in your settings, we’ve automatically enabled the “Prevent third-party sharing” option.

If you have concerns, please read through the Help Center doc linked above and contact us via Support if you still have questions.

95K notes


Text

The End is Near..

“That’s just the nature of the business, and it’s just hard to compete with AI nowadays.” – Alex Wei

I wanted to start off the journal from that quote from a youtuber called Alex Wei who is a freelancer writer. In short, he lost his job to AI.

For years I’ve been cracking jokes about AI taking over in the digital space regarding art, sound & moving image, cracking jokes about cyborgs and robots. It feels like we are really now stepping towards advancing the use of AI features and what it is capable of doing.

As of today, pretty sure everyone has seen adverts of different AI programs that are suppose to better your work and lifestyle. Making content in a blink of an eye. No need to collaborate with anyone or receive feed back. You are in the seat of creativity in using a short cut, a short cut that is constantly being developed and improved into perfection as the days are endless towards a tech future. Where you, the user, 'any user' can subscribe to an AI program to multi task anything and everything for a monthly fee.

Why hire skilled people to do this when AI is advertised to do it for you for a cheaper rate and fast results.

Which brings me to this point, for years I’ve been doing vectors as a hobby, never done commissions in years. Refused to them due to the amount of stress I got from it in the beginning, so as a casual hobbyist doing fan vector related pictures. It’s been fun, it really has. It kills time, it’s something I like to do and share on social media. Whether people engage in it or not, I care less of it as time moves forward.

I usually upload and share comments and bounce (log off). I hate social media and what it has become now. I feel like there are no actual users online now and it feels more like todays social media is a platform for fake account holders who pretend to be someone using AI, or AI generated bots making countless endless accounts. So there aren’t any engagements in anything. You’re practically a ghost buried upon / surrounded by endless accounts which we are not sure if the accounts are verified or not.

It’s gotten to the point, where I’m seeing way too much AI generated art on Deviant art and other social media sites. The users are slapping their titles as artist when all they do is input data in a box and out comes a picture without any efforts put on it.

Now Its AI vs AI, people complaining about their AI art stolen from some other AI users. AI at some point is going to replicate your art style, and you won’t be able to compete with it. Not only it will replicate it, it will make it better by somebody else pretending to be somebody else.

Like Alex mention, you can’t compete with it.

It’s not just digital art, its also sounds too and moving image. I can't comment on sound and moving images as that isn't my field or interest, however I have seen the outcomes produced from apps and they are impressive.

Any digital mark on the web will be a reason for an AI app to be created to replace the old methods.

Because of this, I just don’t feel like continuing the hobby I do with vector arts. Not sure when I will retire from it as I’ve been doing this hobby for over a decade now, maybe more. And I was fully aware of the change of it when AI was introduced a few years ago & predicting the outcome would be like this. Pretty sure many others also saw this coming a long time ago. I’m dead certain, with AI grammar – this journal would have been cut in half and straight to the point.

I haven’t written a journal on this site for years, I think now is a good time to write one and maybe say goodbye to the old fashion pen & mouse and CS3 I’ve been using all this time and will continue to do so until I feel it’s time to unplug the old tech.

Or..

Maybe when I do stop vectoring, I’ll join the AI trend and start using that on my old vectors to see what the outcome is?

Right now.. I’m feeling it. I’m 100% feeling the exit / retirement from this AI digital era.

There’s no one, there’s no real engagement, no real community. No real people, which is natural. People move onto other hobbies or activities.

It’s just AI apps recycled, repeated & advertised to help you, - AI endless outcomes. AI fake profilers.. AI digital era of bots communicating with one another bot which sounds like sci fi horror movie.

We’ll see in due time. This was something I had to write here, maybe for the last time.

“Nothing lasts forever”

Best wishes to anyone reading this. (15/01/2025)

(also, if this journal has bad grammar and spelling errors, GOOD!!! At least you reading this know it was written by a real person)

From a casual pen hobbyists, Khuan Tru.

0 notes

Text

A-fucking-I images - BBTxx entry #1

Y'all are gonna hate me for this but it's been pissing me off to the point I can't think straight. Puh, more than usual, anyway.

*Inhales*...

...I get it. AI images, or "art" as some people call them, are a menace. They're not good at all, not for artists overall. Not even the environment, if what I've read is true. Which, wow, that's got nothing to do with me; I didn't fucking make the damn things, not my fault if I use social media.

But for little selfish creatives, ones like me who just fucking need a little fucking bone, AI-generated images are a priceless tool.

Look at this...

This is the evolution of my cover for my first completed novel, Inhuman, from 2017 to 2024.

...As you can see, it's...not good. Not good at all. Before this, I had never made a cover before. I'd done titles and logos for a good decade before but the theme and feel for the HUSHS series and Inhuman was just...so hard to figure out even for a logo.

For the longest time, the second to last cover was the "final" cover for this story. I had completely given up trying to find something or make something using free graphics or even some non-free graphics and stock pictures and vectors, you name it.

Then in 2020, I finally succumbed to using AI, first through Artbreeder before settling nicely with Bing AI.

Seeing my characters for the first time, seeing them given faces...I could have cried. I first made a cover for Inhuman, as it is my most recognizable webnovel, and...I'm speechless. This fucking cover is exactly what I'd wanted for it. With that final image for the cover came the brand fucking-new amazing title that I made for it. It's so different from everything else. I love it. It came together so...naturally. Because I had been given a tool to unlock whatever bit of genuine creativity exists in my fucking noggin.

But.

Do you fucking think I can afford to get the artists I want to make a highly detailed cover that of course wouldn't look like that, but what the fuck man? You think I can afford anything past $50 let alone for 30+ goddamn stories?!

I want and refuse to get anything other than high-quality images for my work, even if it's just a fucking stupid webnovel no one reads. Covers are no different. If I had money, I'd pay these amazing, envy-worthy artists a hundred times over just to see my characters and worlds in their goddamn amazing art styles.

Alas.

I'm poor. Really fucking poor. I'm mentally fucked up to the point that I can't make money through writing commissions as people have legitimately asked me to do in the past. I have a very real inability to do fuck shit.

If you are a good artist...I'm not saying people aren't assholes and try to wring you for nothin'. But I cannot imagine having a creative skill with which I can actually make money. Something honed with blood and sweat and tears that you can use to not only make something amazing with, something people always choose over writing when trying to get an audience, but also something you can make money with.

Can't imagine that...that luxury.

I made an update all over some of my socials informing that I have to start selling my body to make ends meet now. That's where I am.

Goddammit...I digress.

Bing AI and Artbreeder are free. I'll bet there are other AI programs that are free, but Bing AI is just...*chef's kiss* for me. I don't need anything else. I'm satisfied.

Fuck, I am so disheartened and mad right now.

I'm not saying people aren't misusing AI images, for fuck's sake. I don't even need to elaborate on that tomfoolery.

But people like me?

Why can't we use it, even if just for now—not forever?

I cannot begin to explain how much...better...I did (in the past) after I started using AI to visualize my characters, locations, and more.

'Course, I'm a fucking bobblehead now. Can't even think straight, I have such debilitating mental fuckshit going on...

Whatever.

You get it.

No, I won't stop being pro-AI images in certain circumstances. Not now. Not if it helps even a goddamn little for my already botched, pathetic attempts at creativity. It isn't the end-all-be-all forever thing.

I don't fucking WANT to use AI images.

So, guess what? Are you happy now, antis? I put all of the four years worth of AI images I had into a neat little folder on my PC and said "sayonara", 'cause I won't use Bing AI for anything public anymore.

S'not like y'all give a fuck about what I write for me to even attempt giving the stories good covers anyway.

But, hey.

What do I know.

I'm just...a sad, fucked up transman.

Whatever.

Thanks for coming to my sad, fucked up TED Talk.

Now go on, do that thing you want to do so badly and block my fatass for defending AI. We're all fuckers here.

-----

Original entry: The Blackboxx Texts.

#my posts#ai images#covers#webnovels#webnovel#my webnovels#inhuman#human shed skin#book cover progress#m/m#male/male#my ocs#my characters#the molt#ai generated images#ai generated pictures#ai generated art#ai art#ao3#ao3 writer#bbtxx#ai#webnovel covers#web novel#blackboxx texts#thanks for coming to my ted talk#visceral rage#talk shit spit blood#we're all fuckers here

0 notes

Text

Reality Frictions world tour continues

It all started with a single invitation from Jason Mittell to visit Middlebury College to screen Reality Frictions and visit two of his classes. Since Mittell and Middlebury have been at the center of the video essay world for the past decade, I correctly assumed that one would be a class on "Videographic Criticism." What I didn't see coming was Jason's other class, titled "Faking Reality," which resonated even more strongly with the conceptual concerns of Reality Frictions. In fact, my own film came together as the result of a class at UCLA titled "Reality Fictions," coupled, of course, with my own class on "Videographic Scholarship." The trip to Middlebury coincided with the beginning of Fall colors, which made for an incredibly beautiful drive up from Boston and the reception from both students and Middlebury faculty including InTransition co-founder Christian Keathley was correspondingly warm and congenial.

Although it goes against my most reclusive instincts, knowing that I would be in New England motivated me to reach out to old colleagues and friends at Dartmouth and MIT's Open Doc Lab to pitch the idea of additional screenings. Amazingly, these connections paid off in ways I never expected, beginning with a generous invitation from Mark Williams and Daniel Chamberlain for a screening at Dartmouth on my way back to Boston. In the course of working out logistics, Mark put me in touch with a colleague at Shanghai Film Academy with whom he has been developing a collaboration in connection with the ongoing Media Ecology Project. The Shanghai connection was doubly fortuitous -- in addition to inviting me to screen the film, I was asked to deliver a keynote address at an Artificial Intelligence conference at Shanghai University taking place the same weekend.

Although generative AI was only one of the inspirations for Reality Frictions, I have been developing some ideas about the current generation of image synthesis technologies going back to my research on machine learning for Technologies of Vision. The Pujiang Innovation forum's focus on "Entering the Era of AI-generated Art" turned out to be the perfect venue to consolidate and share these initial thoughts, especially regarding the need to historicize the current wave of imaging technologies and aesthetics.

Another riff in the talk emphasized the reciprocal relationships between both human/machine vision and analogue/algorithmic imaging, all of which are resulting in some striking and enigmatic modes of recombination. It was sometimes hard to know how these ideas -- and images! -- were received by the audience, which was clearly focused more on industrial applications and engineering challenges, but the panel discussion afterwards suggested that the perspectives I brought from an art and design context provided a useful counterpart to the conversation.

Another complicating factor was the fact that the primary language of the conference was Mandarin, which necessitated some impressive feats of simultaneous translation enacted by a heroic team of human translators. For all of the hand-wringing about the prospect of lost jobs in the media industries due to AI, my real sympathies lie with the women tasked with the two-way art of live, simultaneous translation. If I had to bet on anyone's job being gone because of AI this time next year, it's theirs.

My next stop after the conference and screening at Shanghai Film Academy was NYU Shanghai, where the excellent Anna Greenspan arranged for a screening of Reality Frictions under the auspices of the Center of AI and Culture she co-directs there. Audiences at both Shanghai universities were remarkably receptive and enthusiastic -- in some cases even more insistent on pursuing the philosophical implications of my deliberately false bifurcation of reality and non-reality than their American college student counterparts.

For the return flight to Los Angeles, I left Shanghai in the afternoon and arrived a couple of hours earlier the same day, making it the longest and most agonizing election day I've ever experienced in more ways than one. Along with generative AI, the other motivating factor behind the film's investigation of the porous boundaries between truth and lie, of course, was the election that was decided a few hours after my return to the U.S. Even with an election outcome that suggests otherwise, I stand by the film's assertion that viewers have greater capacity for distinguishing truth from lie than we sometimes give them credit for. Of course, this reading emerged from within the deep blue bubble of southern California and a day-to-day engagement with media-making as a semiotic exercise. Although I didn't plan it this way, the next stop for Reality Frictions turned out to be in a state that lies at the other end of the ideological spectrum.

The University of Nebraska Lincoln -- thanks to the collaboration of Wendy Katz and Jesse Fleming -- generously hosted both me and Holly for a screening and talk on AI and Creativity, creating an unusual bridge between the Art History and Emerging Media Arts (EMA) programs via the university's Hixson-Lied Visiting Artist series. The reception to both events was again warm and engaged and we got a chance to glimpse both the remarkable profusion of public art on the campus thanks to Wendy's walking tours and some genuinely groundbreaking and inspiring work in mindful design emerging from Jesse's work in the EMA.

The final stop on this leg of pre-holiday screenings took place just a few days after we got back from Nebraska, this time via Zoom. Although it was not possible to arrange a screening at the Open Doc Lab as I passed through Cambridge, the screener I forwarded to them wound up in the hands of Cinematographer/Documentarian extraordinaire Kirsten Johnson, who invited me to screen the film during an upcoming residency at the University of Missouri's School of Journalism, which turned out to be followed by one of the most dynamic Q/A sessions I've had.

Although it is still early in this film's life cycle, I am both encouraged by the positivity of the in-person screenings and discouraged by the majority of festival responses. Video essays, as a form, do not fit easily into the pre-existing categories offered by most film festivals. When such categories do exist -- and they are mostly in Europe -- they are almost always limited to a maximum of 20-30 minutes. And while there is something extremely gratifying about touring with a film like this, it is unsustainable in every sense of the word.

To close out this thread, I will note one other piece of good news that arrived -- a panel that I organized with Allison de Fren was accepted by SCMS. We will be joined for the upcoming conference in Chicago by my UCLA colleague Kristy Guevara-Flanagan, who has also been touring with her recently completed short film As Long As We Can, and Czech scholar-curator-archivist-editors Jiří Anger and Veronika Hanáková for a roundtable discussion titled "Beyond Electronic Publication: Expanding Venues for Videographic Scholarship." See you in Chicago!

0 notes

Text

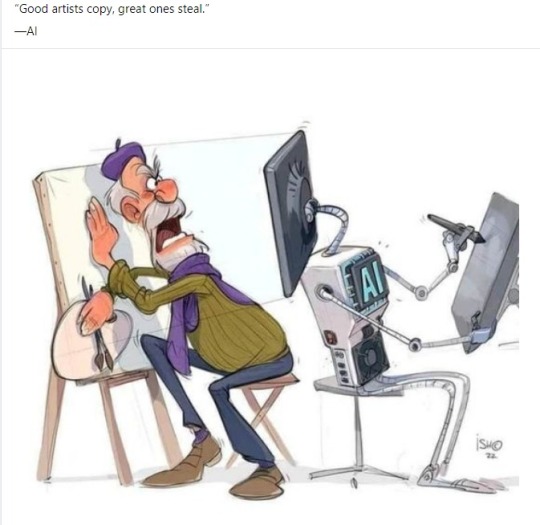

The line is incredibly simple to draw -no pun intended- and it surprises me that I've never seen it articulated.

There is, on one side, code that automates processes -and yeah, if you don't like the thought of code being used to automate a process, I have bad news about what code is- and the other is code that automates decisions.

It's that simple. Does it make any, whatsoever, decision for you, or does it just automate a process that you have decided to do.

Perhaps one thing creating the debate in question is a disconnect that stems from some not seeing said decisions. Many of the people who accept and cherish AI art are just so far removed from the experience of being an artist that they think the decisional process ends at deciding what your work shall be. They think that the decisions are about what you will draw, in what style, and whatever else they will write in those prompts of theirs, and by the time you’ve mentally written your prompt actually executing it is just a process that the AI automates.

Of course, instead creative work is just an immense bundle of decisions. The creative “process” is not split between decisions and execution but between a bunch of sections of innumerable small decisions - the decision that the drawing shall feature a leaf is not followed by the executive step of “drawing a leaf”, but by a bunch of decisions, made before and during drawing it, about where it should be, what kind it should be, what dimension it should have, how it should be angled and curved, how thick its lines should be, its color and shading and such - and the decision that it shall cast shade is not followed by a mere executive process but by a bunch of decisions about where the shade goes, how hard or soft it is, the tint it has, any potential painting effects applied to it or whatnot... so on and so forth. All decisions that not only AI prompters do not begin to make, but that they could not ever possibly hope to make, because most are decisions that cannot be informed, or made altogether, unless you are involved in the creation of the work - you cannot know how a leaf should be angled in a drawing you can’t see, and you cannot talk about how the leaf should shade an element you don’t know it casts a shade onto. But the reason they feel okay about AI art is that they don't even know about all these decisions, so they see the generated image and think "Ah, perfect! A piece made according to my stylistic choices" and artists look at it and just see all of the choices that they didn't make. The disconnect is born from two sets of people looking at the same thing and seeing two different things because they have two different sets of eyes.

Conversely, there are points in the artistic creation in which what follows is just an executive process. When you decide that a shape should be filled in with this specific uniform color, what comes next is just execution, coloring inside that shape. And we’ve been accepting AI helping with that for decades - who's out here claiming you're not a real artist if you use the paint bucket tool? And now, there are AI tools that extend that idea to animation - for once you have colored one frame with solid colors you’re satisfied with, after the decisions that the following frames should follow suit comes coloring them accordingly, which at that point is just a manual process that involves nothing but robotic point-and-click work, a process that a cool AI called Cadmium can automate for you without having made any creative decision on your behalf.
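To make the "just execution" point concrete, here is a minimal sketch of what a paint bucket tool does under the hood: a plain flood fill. This is only an illustration of the general idea, not how any particular drawing program (or Cadmium) actually implements it; the function name `flood_fill` and the tiny text canvas are made up for the example.

```python
from collections import deque


def flood_fill(canvas, start, new_color):
    """Recolor the 4-connected region of same-colored cells containing `start`."""
    rows, cols = len(canvas), len(canvas[0])
    r0, c0 = start
    old_color = canvas[r0][c0]
    if old_color == new_color:
        return canvas  # nothing to do, and avoids looping forever

    queue = deque([(r0, c0)])
    canvas[r0][c0] = new_color
    while queue:
        r, c = queue.popleft()
        # Visit the four direct neighbours (no diagonals).
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and canvas[nr][nc] == old_color:
                canvas[nr][nc] = new_color
                queue.append((nr, nc))
    return canvas


# Hypothetical canvas: '#' is line art, '.' is empty paper.
canvas = [
    list("#####"),
    list("#..#."),
    list("#..#."),
    list("#####"),
]
flood_fill(canvas, (1, 1), "r")  # click inside the closed shape with color "r"
filled = ["".join(row) for row in canvas]
print("\n".join(filled))
```

The artist chose the shape and the color; the loop only carries that choice out, which is why nobody counts it as a creative decision made on your behalf.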

Cadmium is very cool, by the way, and I am looking forward to seeing a wave of animators be motivated by the presence of such tools. We sometimes forget technology is meant to be good and cool and fun and help us, yanno.

71K notes

Text

Improving health, one machine learning system at a time

New Post has been published on https://thedigitalinsider.com/improving-health-one-machine-learning-system-at-a-time/

Captivated as a child by video games and puzzles, Marzyeh Ghassemi was also fascinated at an early age by health. Luckily, she found a path where she could combine the two interests.

“Although I had considered a career in health care, the pull of computer science and engineering was stronger,” says Ghassemi, an associate professor in MIT’s Department of Electrical Engineering and Computer Science and the Institute for Medical Engineering and Science (IMES) and principal investigator at the Laboratory for Information and Decision Systems (LIDS). “When I found that computer science broadly, and AI/ML specifically, could be applied to health care, it was a convergence of interests.”

Today, Ghassemi and her Healthy ML research group at LIDS work on the deep study of how machine learning (ML) can be made more robust, and be subsequently applied to improve safety and equity in health.

Growing up in Texas and New Mexico in an engineering-oriented Iranian-American family, Ghassemi had role models to follow into a STEM career. While she loved puzzle-based video games — “Solving puzzles to unlock other levels or progress further was a very attractive challenge” — her mother also engaged her in more advanced math early on, enticing her toward seeing math as more than arithmetic.

“Adding or multiplying are basic skills emphasized for good reason, but the focus can obscure the idea that much of higher-level math and science are more about logic and puzzles,” Ghassemi says. “Because of my mom’s encouragement, I knew there were fun things ahead.”

Ghassemi says that in addition to her mother, many others supported her intellectual development. As she earned her undergraduate degree at New Mexico State University, the director of the Honors College and a former Marshall Scholar — Jason Ackelson, now a senior advisor to the U.S. Department of Homeland Security — helped her to apply for a Marshall Scholarship that took her to Oxford University, where she earned a master’s degree in 2011 and first became interested in the new and rapidly evolving field of machine learning. During her PhD work at MIT, Ghassemi says she received support “from professors and peers alike,” adding, “That environment of openness and acceptance is something I try to replicate for my students.”

While working on her PhD, Ghassemi also encountered her first clue that biases in health data can hide in machine learning models.

She had trained models to predict outcomes using health data, “and the mindset at the time was to use all available data. In neural networks for images, we had seen that the right features would be learned for good performance, eliminating the need to hand-engineer specific features.”

During a meeting with Leo Celi, principal research scientist at the MIT Laboratory for Computational Physiology and IMES and a member of Ghassemi’s thesis committee, Celi asked if Ghassemi had checked how well the models performed on patients of different genders, insurance types, and self-reported races.

Ghassemi did check, and there were gaps. “We now have almost a decade of work showing that these model gaps are hard to address — they stem from existing biases in health data and default technical practices. Unless you think carefully about them, models will naively reproduce and extend biases,” she says.
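The kind of check Celi suggested can be sketched in a few lines. This is a minimal illustration on made-up toy labels, not the group's actual evaluation code; the function name `accuracy_by_group`, the group labels "A" and "B", and the choice of accuracy as the metric are all assumptions for the example.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} so gaps between subgroups become visible."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Toy data, invented for illustration only.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
gap = max(per_group.values()) - min(per_group.values())

print(f"overall accuracy: {overall:.2f}")
print(f"per-group accuracy: {per_group}")
print(f"largest gap between groups: {gap:.2f}")
```

In this toy case the overall number looks acceptable while one group does far worse than the other, which is the pattern an average-only evaluation hides.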

Ghassemi has been exploring such issues ever since.

Her favorite breakthrough in the work she has done came about in several parts. First, she and her research group showed that learning models could recognize a patient’s race from medical images like chest X-rays, which radiologists are unable to do. The group then found that models optimized to perform well “on average” did not perform as well for women and minorities. This past summer, her group combined these findings to show that the more a model learned to predict a patient’s race or gender from a medical image, the worse its performance gap would be for subgroups in those demographics. Ghassemi and her team found that the problem could be mitigated if a model was trained to account for demographic differences, instead of being focused on overall average performance — but this process has to be performed at every site where a model is deployed.

“We are emphasizing that models trained to optimize performance (balancing overall performance with lowest fairness gap) in one hospital setting are not optimal in other settings. This has an important impact on how models are developed for human use,” Ghassemi says. “One hospital might have the resources to train a model, and then be able to demonstrate that it performs well, possibly even with specific fairness constraints. However, our research shows that these performance guarantees do not hold in new settings. A model that is well-balanced in one site may not function effectively in a different environment. This impacts the utility of models in practice, and it’s essential that we work to address this issue for those who develop and deploy models.”
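One way to picture the point about settings is a toy model-selection step that trades overall performance against the fairness gap and is rerun at each site. The scoring rule (overall performance minus a weighted gap), the model and site names, and every number below are hypothetical and chosen only to show how the preferred model can flip between sites; none of it comes from the research described here.

```python
def score(overall, gap, gap_weight=1.0):
    """Higher is better: reward overall performance, penalize the subgroup gap."""
    return overall - gap_weight * gap


def best_model(site_results, gap_weight=1.0):
    """Pick the model name with the best score from {name: (overall, gap)}."""
    return max(
        site_results,
        key=lambda name: score(*site_results[name], gap_weight),
    )


# Invented (overall performance, fairness gap) pairs per site.
results = {
    "site_a": {"model_x": (0.90, 0.02), "model_y": (0.88, 0.05)},
    "site_b": {"model_x": (0.80, 0.15), "model_y": (0.82, 0.04)},
}

best_per_site = {site: best_model(models) for site, models in results.items()}
print(best_per_site)
```

With these made-up figures the model that wins at the first site is not the one that wins at the second, so a choice validated in one hospital would have to be rechecked before being reused elsewhere.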

Ghassemi’s work is informed by her identity.

“I am a visibly Muslim woman and a mother — both have helped to shape how I see the world, which informs my research interests,” she says. “I work on the robustness of machine learning models, and how a lack of robustness can combine with existing biases. That interest is not a coincidence.”

Regarding her thought process, Ghassemi says inspiration often strikes when she is outdoors — bike-riding in New Mexico as an undergraduate, rowing at Oxford, running as a PhD student at MIT, and these days walking by the Cambridge Esplanade. She also says she has found it helpful when approaching a complicated problem to think about the parts of the larger problem and try to understand how her assumptions about each part might be incorrect.

“In my experience, the most limiting factor for new solutions is what you think you know,” she says. “Sometimes it’s hard to get past your own (partial) knowledge about something until you dig really deeply into a model, system, etc., and realize that you didn’t understand a subpart correctly or fully.”

As passionate as Ghassemi is about her work, she intentionally keeps track of life’s bigger picture.

“When you love your research, it can be hard to stop that from becoming your identity — it’s something that I think a lot of academics have to be aware of,” she says. “I try to make sure that I have interests (and knowledge) beyond my own technical expertise.

“One of the best ways to help prioritize a balance is with good people. If you have family, friends, or colleagues who encourage you to be a full person, hold on to them!”

Having won many awards and much recognition for the work that encompasses two early passions — computer science and health — Ghassemi professes a faith in seeing life as a journey.

“There’s a quote by the Persian poet Rumi that is translated as, ‘You are what you are looking for,’” she says. “At every stage of your life, you have to reinvest in finding who you are, and nudging that towards who you want to be.”

#ai#AI/ML#American#Artificial Intelligence#career#challenge#college#computer#Computer Science#Computer science and technology#data#development#Electrical engineering and computer science (EECS)#Engineer#engineering#Environment#equity#Ethics#factor#Faculty#fair#Features#focus#Full#games#gap#Gender#hand#Health#Health care

0 notes

Text

Saw this on my feed this morning.

And that's Very True.... Personally, I don't have a problem with it... So long as they keep making everything they do look like it's under a layer of cling wrap.

Some additional thoughts in relation to AI Artwork from a Painter. I have been painting professionally since 2001, with around 10 years of schooling and getting it together before that. So yeah, been at it for a while. I also have a couple of degrees in my back pocket and know the art world, and how the actual world we all live in works. I have a pretty good bead on it.

Here's the lay of the land as I see it.

First, I have a certain affection for certain parts of various paintings I have painted over the years (decades now, Geez Louise!) 'Say, I like this about that. Gee, that worked really well, I'll have to remember that move, that technique' etc etc.

But these Are Not my Children. Look, I wouldn't sell my Children! They are not my babies! I mean, not really if I'm honest. I never painted them to be. That wasn't / isn't their job (for me)

But rather I want whoever purchases, or acquires them, to feel that they are their babies! Heck, the paintings spoke to them. They made that special connection, the one between Art and the observer. If I listened to all the 'Art speaking to me' in here (my studio, my head) I'd go insane! It's an F'ing MadHouse in here!

Anyhow as far as AI goes, all it is, is just pretty regurgitation, I mean literally, it is. That's all. I mean yeah, you could probably survive on it, but would you really want to?

Look I can pretty much confidently say, that for all of the people who have bought my art, yes they dig the paintings, of course. It spoke to them. But they also dig that I was able to make that happen for them, as a painter, an artist.

Am I worried about losing a sale to an AI image? No. I am not. I have done this long enough to know, that anyone who would prefer to hang that over the piano as opposed to a Real, human-created painting isn't someone I was going to sell to anyhow. They were not at a place, intellectually, spiritually, whatever you want to call it, to take that ride.

When Warhol started painting color-splotches and splashes, backgrounds and highlights on silkscreens did the masses run out and start buying just screenprint images for that perfect place in their perfect pad? No! No one gave 2 shits about the screenprint. If they did, don't you think ole Andy would have made sure all those copies were a little more cleaned up? He really wasn't one to leave money on the table.

His collectors dug what he had created. You take ole' Andy out of that equation... well it just doesn't work.

I was with my wife at IKEA a bit back and they were selling a $35 Audrey Hepburn silk screen print. Why would someone buy one of my more expensive paintings of Audrey when they could just buy that? And look I absolutely think if that's what they want that's what they should buy. But the reason that folks have bought mine, is that there is just something about it, that they needed. It's hard to explain it to someone who has never had that connection yet. There's just something about it. And that's simply what AI can't copy. I honestly doubt if it ever will be able to understand that, let alone reproduce it.

When I go to the symphony and hear an orchestra knocking out Beethoven's 9th, I tear up. It speaks to me. That doesn't happen for me when I listen to a recording. Even if it's a recording of the same symphony, same performance. Is that the same for everybody? Probably not, but boy am I glad that it's that way for me. That I can have that connection. I get that not everyone can, and I feel sad for those who cannot.

So yeah, anyhow, are Fine Artists worried about the AI-Generated art revolution? None that I know are. And neither am I.

However, if I were a Graphic Designer and made my living doing that, yeah I would be. Absolutely.

0 notes

Text

As of late, I’ve started wondering what technologies we’re used to are just going to go away. That’s also a great way to close out the end of the year.

Of course technologies always fall by the wayside. Some don’t work, some get replaced, some get improved, some aren’t practical. There’s a lot created in human history that’s not in widespread use in Western society. Technologies going away is normal (as is reviving them, but that’s another story).

I wonder as of late how many technologies we’ve created are a mix of unsustainable and actually just useless. How many will just go away or could because there’s no reason to keep them or we can’t.

Mostly I’m thinking about “computer stuff,” because that’s what I’ve worked on almost all of my career. I just feel like the last decade or so things have gotten faintly ridiculous.

Embedding computers and wireless into everything. I’m missing simple electro-mechanical solutions, cable connections, and of course I wonder about security issues. Plus how sustainable is “microchips everywhere.”

So-called “AI” which is really just large language models and/or algorithms. There’s a ton of hype around it, while it consumes resources and creates all sorts of legal and information issues. AI hype also eclipses really good tools for things like image analysis and searching that aren’t as sensational.

The ads in everything. Yes, we can put ads in everything, but it feels like it’s gotten overdone. Also how much web technology has grown around serving damn ads.

Social media. I think we’ve started to see people rethinking how it’s used – let alone the social, security, and technical issues. Also it seems everyone keeps trying to undermine everyone else. Oh, and ads.

Streaming. Maybe I’m old but I’m starting to miss cable.

Graphics cards. Do I need the latest particle effects when some games run on the CPU? Also, this stuff is getting used for crypto.

And so on.

I’ve been wondering if what we see in technology is basically a lot of people made money, so they can “make” things succeed by investing their word and considerable money into it. Others already had a lot of money so they can try to “make” a market. Combined together I wonder how much of our technological world is just propped up by money, hype, and newness.

And I wonder how much of that is going to stay around, because what’s the value proposition? Streaming is nice and all, but it’s hard to keep track of everything and it gets pricey. Computer everything doesn’t seem sustainable between costs and the fact it seems we’re well on the way to seeing refrigerators infected with malware. How much of the technology out there is needed versus just hyped, while we race to get ahead? How oversaturated are markets?

Also can any of this survive our various crises in the world? I mean stuff is kinda shaky right now.

I don’t know. But I have the gut feeling that there are changes coming, as some things can’t be sustained, or no one wants them, needs them, or can pay for them.

Steven Savage

www.StevenSavage.com

www.InformoTron.com

0 notes

Text

Smile For The Camera

In spite of marketers’ best efforts to try to overcome the limitations of the e-commerce platform, the fact remains that for all the ease and convenience of shopping online at home, there are some matters that can only be resolved inside a BAM store. Unless a customer has already performed the task before and is now simply in re-order mode, there’s risk in buying some new things.

Like clothing and other personal products.

Walmart, though, is making significant steps to help resolve some of the time and space gaps. Their efforts are not perfect, and could indeed raise eyebrows, but they are big steps. For example, in September 2022 they expanded virtual try-on in the women’s department. They had bought start-up Zeekit in 2021, and the technology allowed users to upload a photo of themselves. The AI and AR would take it from there, letting shoppers “try on” clothing right there on the screen. I wrote about it at the time, and reaction from my MBAs was mixed.

And now they have partnered with Perfect Corp. to offer AR-powered try-on in the cosmetics department. Users can sample 1400 different products, and this time do not need to upload a selfie. Oh, and it is all designed to work within Walmart’s iOS app. Score one more point for mobile.

Now for people like me who absolutely hate to shop for clothing, virtual try-on would be golden. I’d even be willing to take the risk on the first purchase when it comes to actual fit. Let’s face it, a 34” waist in one brand may be comfy, but in another brand may be either too big or too small.

But I understand Walmart’s inclinations to try this with women first, because—no sexism intended—women tend to be more conscious of these matters. If Walmart can crack this nut, it should be easy peasy to rope in the guys.

I also understand the limitations of even these high tech solutions. Color can vary considerably across phones, tablets, and laptops, by virtue of settings and quality of the device. Nuanced shades in the red family, for example, could come out looking muted or like a fire truck.

Then there’s the issue of privacy. Walmart swears that no user data (including images) will be saved from the cosmetics try-on. But for its clothing service, it does depend on just that. Would you want to share a photo of yourself with Walmart—or anyone, for that matter—just for the sake of seeing how you might look in that new dress?

Walmart is also considering how to use the clothing try-on inside its stores, with sophisticated mirrors that have cameras built in. Stand in front of the mirror, and then tell the machine what to do next. If trying on clothes is not your jam, this could save tons of time.

Back to the cosmetics, I see this greatly enhancing sales. Walmart may not be every woman’s first choice for beauty products, but this could pierce that veil. I have to laugh every time I enter a Dillards or similar department store, and find myself having to cut a trail through a cosmetics department that is at least a couple hundred linear feet long. Female clerks in lab coats await customers, and they give them personal applications. It’s great theatre, because it has the look and feel of science going on.

Which then raises another question. Do women crave that 1-on-1 interaction for something so uniquely personal as one’s choice of beauty products? Speaking for myself and not all men, that would send alarm bells and flashing lights into action. No way, no how. Alas, we are not all the same.

I realize there are some for whom purchase of these types of products necessitates a visit to stores. I also realize that the majority of the reluctant ones are probably closer in age to me than they are my students. When you have done something a certain way for decades, it can be hard to learn and adopt new methods.

I bet my tech-savvy daughters would be all over the cosmetics try-on. Instead of the usual Ulta gift card I put in their Christmas stocking, I could just as easily do it for Walmart and let them get on their phones. Because they are young, they are not as likely to be stuck in their ways.

There’s no future in getting old, and let’s face it, my generation’s major consuming days are behind us. Subtract 40 years, and you have legions of young shoppers open to new ideas. Walmart is genius for seeing this future. And that, my friends, is always something I am willing to try on.

Dr “Wearing Shades” Gerlich

Audio Blog

0 notes

Text

Very curious still if the Stable Diffusion and other AI lawsuits are going to result in another Napster-like situation. Adobe has already basically copy-pasted Stable Diffusion and is marketing it as Firefly. The more likely end result of the lawsuits looks like taking it away from the majority now and putting it in the hands of mega corps to sell instead (if it doesn’t win as transformative, that is). Still mixed feels on it all. And still neutral. As a piece of software doing what it was designed to do, it’s a major success. As an artist, I do have a problem with the term “Artist” being tacked on to people who make art with it, because they’re not. And that’s just a clear cut fact. Writing text into a prompt box, copy-pasting code and messing with sliders teaches you NOTHING about art or the fundamentals to make it outside of this magic box, and requires you to know nothing about art or the fundamentals to make something in it. If you’re a visual artist, then you learn the same fundamentals that all of the visual mediums learn: value, composition, anatomy, color theory, light and shadow, line, et cetera. You don’t stop being an artist if you switch tools from painting to sculpting, because you’ve already learned the basic rules and fundamentals to make it regardless of the tool set. It’s the same with any traditional or digital medium, be it sculpting or painting or animating or anything else. A majority of the people using AI to make art, and specifically the ones who talk down to the understandably upset artists, are not artists themselves. The software is the one doing the work and the one who has been visually trained, not the person behind the screen inputting prompts. Not saying they’re not doing any work, but the work they’re doing to help the software produce the artworks is not the work an artist would have to do.
I have no problem with Ai Artists making art with AI or doing their own thing with it, people SHOULD be free to explore creative mediums and outlets of any kind as much as they want to and open to experiment. I just wish there was a better term to coin them as than ‘artists’ though, personally. As an artist who studied and dedicated myself upwards of 20 years of my life now to get better at and closer to mastering the fundamentals and who threw away literal decades of my life to improve my craft on the literal back-bone of my own sweat, blood, hard work and actual tears, it’s a huge slap in the face to have someone be treated as if they made the same life-long sacrifice and put in the work for clicking a button and have a code generate an image. Ai Art itself still looks fucking incredible and keeps getting better. That’s also a clear cut fact. I still think it’s provided an invaluable abundance of AMAZING fucking reference material like never before for artists to learn from and study from and I still think its potential at streamlining a pipeline is insane and will continue to have insane potential for classically trained artists as well, if more could set aside their egos and try it out. My biggest personal issue still just lies mostly with the general public en masse. Seeing week old AI Art accounts spring up left and right and hit 1-2k followers after spending again, decades of your life and getting nothing, is one of the worst fucking feelings in the fucking world as an artist and one of the most demotivating. It’s very easy to see and sympathize with artists’ concerns and upset around it. But it’s not the fault of the AI or the people using it. The end result is designed to be good and designed to be appealing. It’s not at fault for doing exactly what it was meant to do. 
And whether it was around or not wouldn’t change the shitty fucking nature of the general publics’ shitty fucking lack of support and hunger for consumerism that puts a lot of artists out in the first place. It is just kinda sad to see a whole new way that artists can be undermined and under-appreciated and treated like shit now. And it does make it really hard to not want to just fucking kill myself after wasting my entire life honing a craft I loved and praying that “One day i’ll make it if i just get good enough!” to keep myself going, mean fucking nothing, because some guy sitting in front of his computer with a piece of software installed is typing into a text box right now making some fat anime tiddies and getting paid more for it in less time than I have my entire struggling, soul-wrenching career. I didn’t mean for this to get this long. Sorry followers for clogging up your dash. AI Art is unavoidable, and I’ve had a lot on my mind about it lately because of that I guess that I needed to get out so what better place than fucking tumblr.com fml

1 note

Text

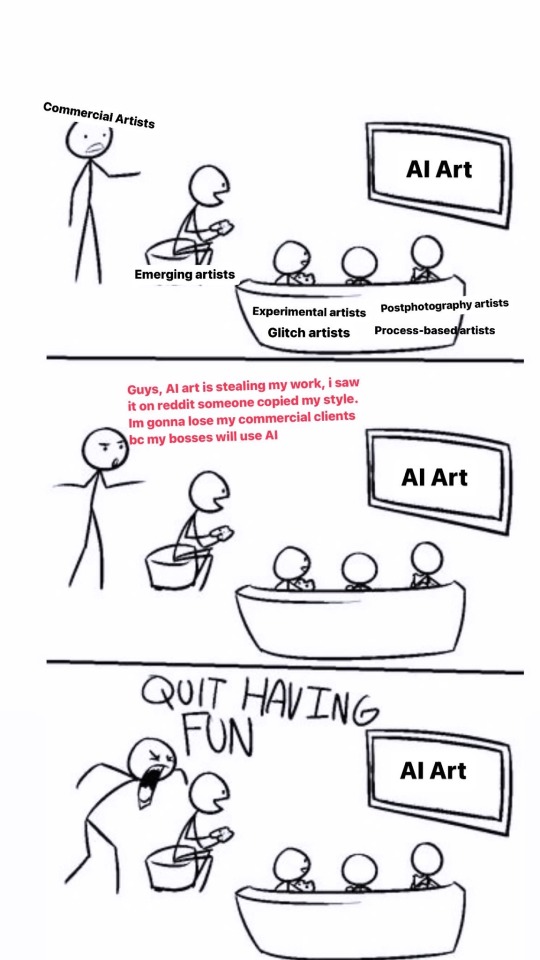

If u think losing a gig to an AI is hard, try losing gigs because your bosses are racist or xenophobic or homophobic or sexist, or try not getting gigs at all because bosses don’t think your work is as legitimate as the dude who can draw 100 identical spidermans.

There are serious structural issues with pay-to-play AI services like DALL-E 2, and they center on how these companies data-laundered copyrighted works, using fair use laws and research institutions, to privatize a tech that should be considered public infrastructure for everyone.

But AI Art itself isn’t evil, it’s a tool that has been used by new media artists for at least a decade. There are obviously ethical ways to create AI art: train your own models, create outputs based on your own works, attribute the artists you use in your prompts, etc.

But suddenly caring about copyright like you are now team disney and team nintendo is weak. And seriously, most of your artstation works aren’t original either. Yall living off of borrowed aesthetics from 100 years of comic books and cartoons and illustrations.

AIs can’t plagiarize the way humans do. You are seeing a calculated average of images. The reason shit looks like your favorite illustrators is because a lot of these illustrators make similar art, and most people writing AI prompts have similar basic tastes. Making great AI Art from prompts takes time and patience and a keen sense of poetics.

But seriously, y’all don’t hate new tech, you hate capitalism and the corpos and bosses who are out to expropriate you.

AI Art, if anything, is the new folk art. Same repeated motifs made by anyone with a clue. This is a wonderful mingling of collective creative energies. Embrace it!

Addendum for all the reactionary responses out there:

~~~~~~~~ Artists should be getting royalties from OpenAI, Midjourney, et al. And they should be able to opt in or out of having their work included in training models. This is a given and I would never argue against compensating artists! ~~~~~~~~

This isn’t about defending these corpos either, but machine learning tech has been around before these companies started their thing and experimental artists from around the world have been using machine learning to make great art.

Another thing: the moment you post your digital art to a platform, you sign away much of your consent w/r/t how your art is used. That’s what those really long TOS are about. ArtStation and iStock were scraped for data under the pretext of Fair Use, which allows for mass scraping of internet data for research purposes. Fair Use is like the one law that, for the most part, protects artists from the disneys and nintendos of the world. I wouldn’t be able to glitch video games without it. Emulated video games wouldn’t exist without it either. So the question is, why are corporations allowed to use Fair Use as a cover for developing privatized pay-to-play services? People who know a thing will point out that Stable Diffusion is open source, and that’s great, but why are privatized services allowed to be built on open source infrastructure? Especially when this tech hasn’t been properly vetted for racial biases, pr0nography, etc.

Yes it’s shitty, but these aren’t arguments against AI tech but against juridical structures under capitalist regimes.

260 notes

Photo

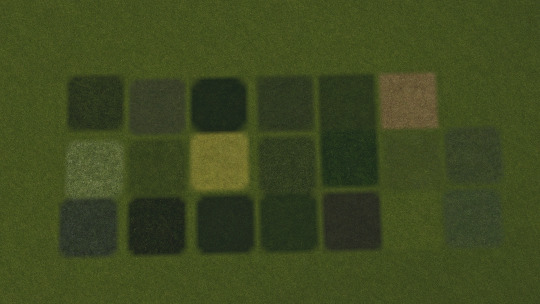

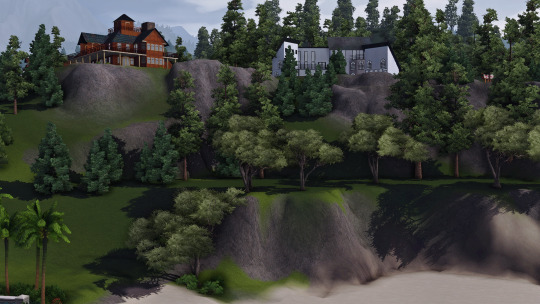

Yesterday, Lyralei presented us with a great retexturing pack for The Sims 3! Nothing like a good upscaling, you might imagine... Well, me too, haha. However, when it comes to The Sims, things are not that simple. And almost always, logic creates its own path, different from what we are used to seeing.

For example, this is a screenshot taken by Lyralei of their 4K retexturing package:

And here is the same shot taken by me, but with textures of only 1024x1024. The big difference is that these textures are the result of overlays using GOOD textures from the game itself.

As I always say.. The flaw of The Sims 3 is the mix of hard and soft textures. There is no aesthetic standard. Using rigid textures overlaid with transparency on soft textures and adjusting their colors, we have a really harmonious result. If we take bad textures and apply an upscaling, not even artificial intelligence will be able to guarantee us a quality result. Most of the time it's the opposite.
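For readers who want to see what "overlaid with transparency" means in practice, here is a minimal sketch of alpha blending one texture over another. It is a generic illustration and not the actual Project Eden workflow; the function names `blend_pixel` and `blend_texture`, the 2x1 sample textures, and the opacity value are all made up for the example.

```python
def blend_pixel(base, overlay, alpha):
    """Mix one RGB pixel of `overlay` over `base`; alpha=0 keeps base, alpha=1 keeps overlay."""
    return tuple(round(alpha * o + (1 - alpha) * b) for b, o in zip(base, overlay))


def blend_texture(base, overlay, alpha):
    """Blend two same-sized textures given as rows of (R, G, B) tuples."""
    return [
        [blend_pixel(b, o, alpha) for b, o in zip(base_row, overlay_row)]
        for base_row, overlay_row in zip(base, overlay)
    ]


# Hypothetical 2x1 textures: a soft grey base and a harder, more saturated overlay.
soft_base = [[(100, 100, 100), (120, 120, 120)]]
hard_overlay = [[(200, 0, 100), (40, 200, 120)]]

result = blend_texture(soft_base, hard_overlay, alpha=0.25)
print(result)
```

Lowering `alpha` lets more of the soft base show through, which is the knob being turned when a hard texture is layered over a soft one and the colors are then adjusted.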

AI augmenting a default texture gives us this. Recreating the texture from scratch gives us greater control over definition and balance:

Maybe you will say: clearly the quality is different because of the PC. But here we have another example, with both images taken on my computer. The first uses the 4K package and the second uses Project Eden's 1024x1024 retexturing:

Setting aside the fact that the tones are not identical to the base game, since Project Eden seeks to create a greater harmony between color and texture, the main difference between the two images is in the tiling. While the 4K texture can be perfect up close, when we pull the camera away we can see the pattern repeating itself. This flaw does not mean that the texture is not good; on the contrary, its pattern is just extremely pronounced. The way I found to minimize this effect was to adjust several layers of overlapping textures to make them as smooth and sharp as possible. That way we guarantee a perfect result from afar and an even better one up close!

The definition quality is not linked to the size of the image, but to the texture itself. The big job of making TS3 more beautiful is in rebuilding its textures from scratch, using current textures as a reference or starting point, never as a final destination or conclusion.

This is the only way to find the balance between Quality and definition.

This post is not to criticize Lyralei's work, because the fact is that The Sims 3 is a relatively messy game! What she did was a miracle. Finding ALL textures is not easy. And the worst part is when we start the work and the flaws in the terrain painting of the neighborhoods, as well as its modeling start to become more evident, as in the lower right side of this image:

The Sims has an extremely captivating look, it just needs the right textures to be represented at the level it deserves.

I'll point out that, because The Sims 3 doesn't follow the logic of other games, when we increase the texture size, what we are doing is increasing the tiling, even if the texture is good. Here's a great example: even with Project Eden's retextures, the flaw is subtle but it shows (2048x2048):

Now: 1024x1024

Again, I would like to congratulate Lyralei for the excellent work. Next to everything we've had in the last decade, this update was without a doubt a key highlight. I'm curious to see how her projects will progress, and I make myself available to be a great competitor, hahaha, and also, of course, a great collaborator if one day she wants to create something together with me.

I'll be back in the future to talk more about Project Eden and share new information! Until then!!

#ProjectEden #ProjetoEden #TheSims3 #sims3cc #ts3cc #s3cc #sims 3 overrides #texture pack #HD textures #Sunset Valley #The Sims #Boringbones #Boringbonestv

132 notes


Photo

Hellooooooo fans of Hard Truths from Soft Cats!... Hello? Where is everyone? ...A-ha! *Bends shrub aside to find five tumbleweeds inside* Ah, well, it has been a while, but hopefully this blog still has followers. Hey all! We're reviving this blog for a limited run, called "Hard Truths From AI Generated Cats." They're still just as soft as before, but a little bit glitchy, if you know what I mean.

Why are we doing this? Well, for clarity, my "name" is Ace (I'll probably put my real name up eventually), and I'm not the original creator of this page, but a friend. Like a cicada (but much cuter!), this webcomic popped back into my life after eight years of dormancy, around the same time I was talking with a friend about image generators. I asked HardTruths F. SoftCats for their blessing (yep, that's their real name, sorry to doxx you like that!), and he was supportive. I think in the eight years since HTFSC ended, life has only gotten harder and truthier (and I mean that in the absolute Colbertian sense of the word); one could probably write an entire thesis on that subject in cat-form, but this run will serve a very specific purpose.

I am an AI researcher myself, and I work on projects that include generative machine learning methods. I am at once incredibly excited about and fearful of the potential of these algorithms. As someone with no drawing ability myself, I feel suddenly empowered to take on art projects I otherwise would not have. But then, as a hobbyist writer, when I look at GPT, while I don't feel threatened now, I see a future where the technology becomes better and could make my hobby obsolete; for those whose livelihood it is, the worry is far more tangible.

Technology rarely rewinds, but it often can be made to work for, not against, the people. But this only happens if we not only say we need to have a discussion (which is where so many conversations actually end), but actually have one. That is a conversation that needs to happen in public forums with those who have the power to effect change, at the level of grassroots and corporate organizations and policymakers, the latter of whom are at least a decade late to the party. The box has been opened, but whether it's Pandora's Box or a treasure chest is still up to society.

I'll post a comic today and then likely on a Monday/Thursday schedule after that until I run out, and maybe I’ll accompany it with some commentary. I hope this is a pithy and cuddly conversation-starter; all I can bring you is the hard truth from probabilistically soft cats.

11 notes


Text

kinktober day 8 - robot / fly (yoonkook)

https://archiveofourown.org/works/42934197/chapters/107867133#workskin

It would be a long trip to New York, so when Yoongi got to his private jet, he had to make sure he had all his necessities covered. One of them required the most precious but also the most well kept invention of Min Industries. His oldest yet latest model, Jungkook.

Yoongi could see him, strutting along the other flight attendants, he only brought them just so that the distinction between the standard assistant robots and him was even more obvious. He followed the two ladies’ path with his signature excited step, that bright look in his eyes that Yoongi worked so hard in perfecting.

His muscular build looked nearly ridiculous in the tight flight attendant uniform, tube skirt riding up the thick thighs, hugging his ass perfectly. The little blazer looked close to ripping around his shoulders and big biceps, cropped more so than the rest from his enhanced height, and buttons looking dangerously ready to pop around his chest. Yoongi could just admire him for hours. Such amazing handiwork.

He promised himself he’d be patient before calling Jungkook over. But it hadn’t even been an hour of the 15 ahead of him, when he calls his name.

“Kook-ah!” It will never stop being endearing watching the robot perk up and trot up to him, eyes widened and innocent, as if he didn’t know what Yoongi was going to ask of him. Maybe he didn’t; Yoongi hadn’t put much effort into the software’s ability to retain memory.

That's what made it so good, how every time seemed like Jungkook’s first.

The robot makes his way gleefully towards where Yoongi is sitting. “Hi Mr. Min!” His voice is still a little awkward-sounding, but cheerful nonetheless. He can feel a smile growing on his face as he stares up at his creation.

“Hi, sweetheart. You got assigned to join me on my flight?” Yoongi lets his hand caress the back of Jungkook’s naked thigh, warm and supple (it took him years to perfect). And through his decade’s worth of work in Jungkook’s AI he always made sure to never let the robot know he was special among the others. Like he hasn’t designed Jungkook to the image of Yoong’s fantasies, that he takes him everywhere, that it's his most prized possession. He likes it when Jungkook thinks he himself is special, without any of Yoongi’s doing behind it.

Especially when it shows in Jungkook’s blush feature (the only one of his designs to have it), that colors his cheeks the loveliest shade of pink. “Yeah… It's not in my skills program though…” And oh his worry looks so genuine it tugs at Yoongi’s heart. “I don’t know how I’ll serve you, Mr. Min…” His big eyes look down at his creator carefully biting his lip.

“That's okay, Jungkook-ah. I know just what you can do.” A task delivered by Yoongi himself, made to be considered the first priority in all of his models. But only in Jungkook does it make his face light up in relief. “Would you bring me some snacks and feed them to me, sweetheart? Whatever they have, it's fine.” He nods quickly before hurrying away, admiring the way his ass bounces with the shirt that rides up.

Ah, this is what's amazing about giving vague orders to a robot, he thinks to himself as Jungkok returns with two armfuls of chip bags. Yoongi pats the spot beside him on the couch as Jungkook obediently follows. Kneeling politely, the tight skirt showing the curve of his bulge, and he can stare all he wants. Only needing to open his mouth for Jungkook to open a pack and begin to delicately feed his creator.

Their pace is slow, he leans back on the couch and Jungkook follows, hooking his own muscular thigh over Yoong’s fattened one. Skirt completely useless as it only clings to his bulge, and with the shameless hand Yoongi places on Jungkook's ass, it's doing nothing to cover it either.

Comfortable silence as Jungkook treats him so carefully. Allowing Yoongi to pig out without any judgmental eyes, letting the crumpled up bags collect around them, his belly taking space on his lap, pressing against Jungkook without any pressure to suck in. Letting himself bloat under the layers of fat stretching his shirt. Feeling himself empty of any thoughts or concerns waiting for him once the plane lands, just able to be taken care of by the most stunning ‘man’ he’s ever seen. The only one who he trusts in these vulnerable moments of unadulterated gluttony.

“You really shouldn’t call me that.” Jungkook is the one who breaks the silence, eyes not straying from the movement of his hand sinking into the bag and going directly to Yoongi’s lips.

“Call you what?” He doesn’t have to worry about his manners either, speaking through mouthfuls, lips probably tinted orange with Cheeto dust, crumbs collecting on his white button-up. Jungkook looks at him then.

“S-sweetheart.” His voice glitches as he says it, so endearing Yoongi has to keep himself from cooing. Jungkook’s software must’ve been processing that thought all this time… adorable. “What if the others listen and find out about us?” Again that genuine worry behind his eyes, leaning closer to whisper it as he says it, as if Jungkook was at any risk of losing his job over Yoongi’s actions.

“Oh don’t worry Jungkook-ah… It’ll be our little secret, hm?” He whispers too, trying to keep alive the fantasy programmed into the robot’s head. Jungkook nods slowly but determined. “Now… I’m getting a little stuffed. Rub my belly?”

Jungkook’s eyes light up. That's definitely something in his skills program.

24 notes
