#human automation


Text

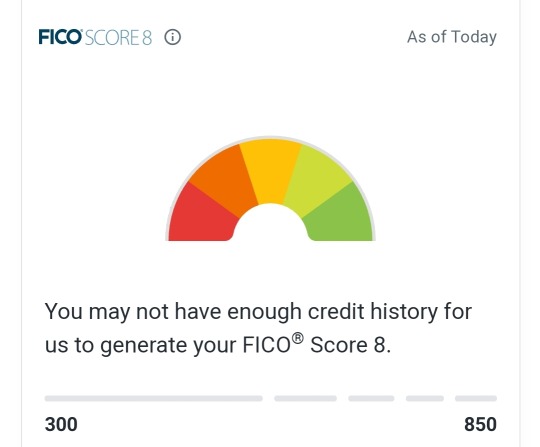

So, I have a big advice post to write, but until I have the spoons, I want to warn everyone now who has legally changed their first name:

Your credit score may be fucked up and you need to:

1. check *now* that all your financial institutions (credit cards, loans, etc) have your current legal name and update where necessary

2. check that all 3 credit bureaus (TransUnion, Equifax, Experian) are collecting that info correctly and generating the right score. Do this on their respective sites, not via a feed like Credit Karma. You might need to monitor them for a few months if you made any changes in #1

I am about to apply for a mortgage and learned that as of 2 weeks ago:

Experian suddenly thinks I am 2 different people - Legal Name and Dead Name (neither of whom has a score)

Equifax has reported my active 25 year old mortgage as closed and deleted one of my older credit cards, hurting my score by 30+ points

Credit scores influence everything from big home/car loans to insurance rates to job and housing applications.

And for whatever reason, the 3 bureaus that have the power to destroy your life are shockingly fragile when it comes to legally changing one's first name.

So, yeah. Once I get this mess cleaned up for myself, I have a big guide in the works if you find yourself in the same predicament. But with the mass trans migration out of oppressive states, odds are there are a lot of newly renamed people who are about to have a nasty shock when applying for new housing.

Take care, folks.

#trans stuff#transgender#credit score#united states#it is wild having an 800 spread between credit scores lol#a human being could identify there is a problem but so much life impacting shit is automated by stupid and biased algorithms

3K notes


Text

“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principal investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable – errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to do that worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them to pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a figleaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.
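The arithmetic behind that claim can be sketched in a few lines. Every figure below is a made-up placeholder, not a number from this post or from any real shop; the function name `net_savings` is likewise hypothetical. The point is only that the reviewers' wages sit on the cost side of the ledger.

```python
def net_savings(workers_replaced, worker_wage, reviewers_hired, reviewer_wage, ai_cost):
    """Yearly savings from swapping workers for an AI plus human reviewers.

    All inputs are hypothetical; a negative result means the swap loses money.
    """
    wages_saved = workers_replaced * worker_wage
    new_costs = reviewers_hired * reviewer_wage + ai_cost
    return wages_saved - new_costs


# One coder replaced, but two reviewers hired to close-read the AI's output.
print(net_savings(1, 100_000, 2, 100_000, 20_000))

# The pitch only works if each reviewer can cover many replaced workers.
print(net_savings(10, 100_000, 2, 100_000, 20_000))
```

With these placeholder numbers the first case loses 120,000 a year and the second saves 780,000, which is why the scheme depends on each human in the loop reviewing a lot of output.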

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaratized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922
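To make "next-word-guessing" concrete, here is a toy sketch: a bigram counter that always picks the most frequent follower of a word. This is an illustration only. Real chatbots use large neural networks over tokens rather than a lookup table, and the tiny corpus and function names here are invented for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def guess_next(follows, word):
    """Return the statistically most likely next word, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Invented toy corpus; "cat" is followed by "sat" twice and "ate" once.
corpus = "the cat sat on the mat . the cat ate the fish . the cat sat on the sofa ."
model = train_bigrams(corpus)
print(guess_next(model, "cat"))  # sat
print(guess_next(model, "sat"))  # on
```

The model has no idea what a cat is. It returns whatever continuation was most common in its training data, which is also what makes its wrong answers look plausible.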

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created an (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/
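One mechanical backstop for this specific failure is to check every dependency name against a list the team has already vetted, and to flag near-misses loudly. The sketch below is a minimal illustration, not a complete defense: the allowlist contents and the function name are hypothetical, and `huggingface_hub` appears in the list only as my assumption about the real library this story alludes to.

```python
import difflib

# Hypothetical allowlist of dependencies the team has already vetted.
# "huggingface_hub" is an assumed stand-in for the real library's name.
VETTED_PACKAGES = {"requests", "numpy", "huggingface_hub"}


def review_dependencies(proposed, vetted=VETTED_PACKAGES):
    """Map each unvetted name to its closest vetted name (or None)."""
    flagged = {}
    for name in proposed:
        if name in vetted:
            continue
        # A close match suggests a plausible-looking but wrong name.
        close = difflib.get_close_matches(name, sorted(vetted), n=1, cutoff=0.6)
        flagged[name] = close[0] if close else None
    return flagged


report = review_dependencies(["requests", "huggingface-cli", "leftpadx"])
for name, suggestion in report.items():
    print(f"unvetted: {name!r}, closest vetted name: {suggestion!r}")
```

A check like this moves the vigilance from the reviewer's eyes to a lookup, which is the centaur arrangement rather than the reverse-centaur one. It only catches names, though, not the subtler errors the post describes.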

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins) at unimaginable speed.

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its DeepMind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent materials scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th century magic lantern technology, which millions of people ascribed wild capabilities to, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

855 notes


Text

"RK Industrial Heavylifter gen 3" is a Cyberlife-developed cobot that is set apart from its competitors by using the famous RK-series preconstruction module in its work, minimizing accidents during the collaborative process.

I wonder if small RK line feels any familial feelings towards it, or are they horrified by a creature so similar to them being encased in a moving tomb. And what even goes through the mind of the creature? How present or out of it is it?

What other atrocities are there to witness at Cyberlife's R&D?

#uhh yeah some meaning I guess with this piece#I really tried to explain how an industrial bot can even be similar to highly sophisticated designs like Connor#I couldn't do anything else because I'm cursed with k n o w l e d g e#of industrial bots#and automation development lifecycles#anyway this whole thing was an excuse to draw Connor hanging out with an industrial bot#did I mention that I like industrial bots?#i like Industrial bots#so yeah normal tags time#art#fan art#my art#dbh#detroit become human#connor rk800#dbh connor#dbh rk800#rk800#rk900#rk900 dbh#dbh rk900

104 notes


Text

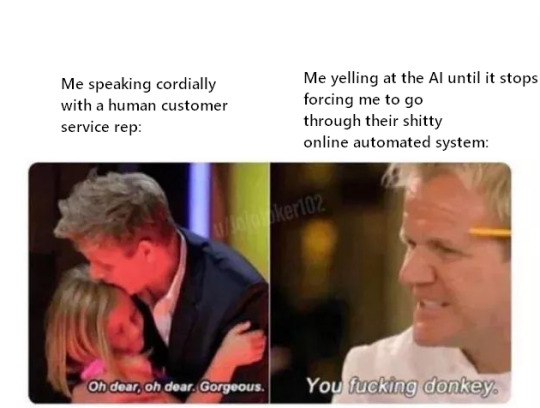

how i feel having to make phone conversations in the year of our lord and savior 2025

#kat talks#let me talk to a real human person!!!#i dont need an AI to try guessing at what i want especially when my questions arent in its script#also if i could use the online automated system i wouldnt be here!!#also mid sentence I got switched to a human representative and i had to immediately shift my tone lmao

43 notes


Text

Randomly ranting about AI.

The thing that’s so fucking frustrating to me when it comes to chat ai bots and the amount of people that use those platforms for whatever goddamn reason, whether it be to engage with the bots or make them, is that they’ll complain that reading/creating fanfic is cringe or they don’t like reader-inserts or roleplaying with others in fandom spaces. Yet the very bots they’re using are mimicking the same methods they complain about as a base to create spaces for people to interact with characters they like. Where do you think the bots learned to respond like that? Why do you think you have to “train” AI to tailor responses you’re more inclined to like? It’s actively ripping off your creativity and ideas. Even if you don’t write, you are taking control of the scenario you want to reenact, the same thing writers do in general.

Some people literally take ideas that you find from fics online, word for word bar for bar, taking from individuals who have the capacity to think with their brains and imagination, and they’ll put it into the damn ai summary, and then put it on a separate platform for others so they can rummage through mediocre responses that lack human emotion and sensuality. Not only are the chat bots a problem, AI being in writing software and platforms too is another thing. AI shouldn’t be anywhere near the arts, because ultimately all it does is copy and mimic other people’s creations under the guise of creating content for consumption. There’s nothing appealing or original or interesting about what AI does, but with how quickly people are getting used to being forced to use AI because it’s being put into everything we use and do, people don’t care enough to do the labor of reading and researching on their own, it’s all through ChatGPT and that’s intentional.

I shouldn’t have to manually turn off AI learning software on my phone or laptop or any device I use, and they make it difficult to do so. I shouldn’t have to code my own damn things just to avoid using it. Like when you really sit down and think about how much AI is in our day to day life, especially when you compare the frequency of AI usage from 2 years ago to now, it’s actually ridiculous how we can’t escape it, and it’s only causing more problems.

People’s attention spans are deteriorating, their capacity to come up with original ideas and to be invested in storytelling is going down the drain along with their media literacy. It hurts more than anything cause we really didn’t have to go in this direction as a society, but of course rich people are more inclined to make sure everybody on the planet is a mindless robot and takes whatever mechanical slop is fucking thrown at them while repressing everything that has to do with creativity and passion and human expression.

The frequency of AI and the fact that it’s literally everywhere and you can’t escape it is a symptom of late stage capitalism and ties to the rise of fascism, as the corporations/individuals who create, manage, and distribute these AI systems couldn’t care less about the harmful biases that are fed into these systems. They also don’t care about the fact that the data centers that hold this technology need so much water and energy to manage it that it’s ruining our ecosystems and speeding up climate change, which will have us experience climate disasters like what’s happening in Los Angeles as it burns.

I pray for the downfall and complete shutdown of all ai chat bot apps and websites. It’s not worth it, and the fact that there’s so many people using it without realizing the damage it’s causing is so frustrating.

#I despise AI so damn much I can’t stand it#I try so hard to stay away from using it despite not being able to google something without the ai summary popping up#and now I’m trying to move all of my stuff out from Google cause I refuse to let some unknown ai software scrap my shit#AI is the antithesis to human creation and I wished more knew that#I can go on and on about how much I hate AI#fuck character ai fuck janitor ai fuck all of that bullshit#please support your writers and people in fandom spaces because we are being pushed out by automated systems

23 notes


Text

H1: “So what do you think of this “unending alien threat”?”

H2: “I honestly don’t care. Hasn’t affected my life greatly. I have bills to pay and student loans. Rent went up again. Essentials are more expensive and oranges aren’t available for some reason but that’s kinda the same thing we’ve been dealing with forever.”

H1: “I heard they’re a bunch of commies. Don’t have money anymore or are at least working toward that. Oh my god what if they pay for hormones???“

H2: “You think alien monsters from outer space care about that of all things?”

H1: “Hey they might! Let’s put it another way. Wouldn’t it be nice not to pay for insulin?”

H2: “Yeah okay you got me there.”

H1: “You ever think about… just leaving? Going off to join that band of xeno commies?”

H2: “I could turn you in for that. But I won’t. Because that’s looking like a better option every day.”

#humans are deathworlders#fully automated luxury gay space communism#in spaaaaaace!#human domestication guide#too but it’s not specifically#hdg

45 notes


Text

Have I ever explained this concept I’m obsessed with?

Person who ends up cryogenically frozen for awhile™️ wakes up finally and it’s to a strange world where there’s robots everywhere

With their image

Turns out their best friend didn’t take their disappearance well and kept making replica after replica of them because they couldn’t quite nail the personality down, and after awhile, without having seen the original in forever, the robots have become copies of copies of copies (essentially, very flanderized versions of the Human)

The best friend also made a ton of other robots and essentially became an evil overlord (their just woken up friend is Not Impressed™️) leading to the creation of a resistance group set out to stop them

The Human (who is absolutely confused at the moment and a bit scared) runs into the resistance group and they think they’re a defective bot (because even tho the personalities are off, the appearances aren’t off except for blemishes getting erased) who thinks they’re real

And they’re like “if this robot thinks it’s a Human, who are we to deny it Humanity” while the Human keeps trying to explain they are a Human, and also trying to avoid getting caught and “reprogrammed” by bot versions of themself

(2 other fun things in this: they used to make robots with their friend and after their disappearance their friend used a lot of their stuff to make new robots and so a ton of things have the Human’s failsafes and backup codes so other robotic things are no problem for them, only their bot selves, which were made completely new after they disappeared are a problem, and a few of the first gen robots they made are still around and recognized them immediately and keep trying to track their maker down but the resistance group keeps interfering)

Can never really think of a satisfying ending with the rebellion group because it always just boils down to the Human finally kicking the door down to face their friend and being like “what the fuck did you do in my image????”

(And then my brain tries to make it more convoluted by being like “and then it turns out that’s actually not even their friend, their friend died awhile ago and left behind a robot version of themself which is why they suddenly started acting so weird and supervillain-y evil and they never actually get to see each other again, they both just ended up with funhouse artificial versions of each other, and there is no satisfying ending confrontation, there never will be”)

#no fandom#humans#robots#there’s also a ton of other stuff I can’t articulate well#like the friend became an ‘overlord’ because of corporate greed just wanting to automate everything

132 notes


Text

Several years later, Facebook has been overrun by AI-generated spam and outright scams. Many of the “people” engaging with this content are bots who themselves spam the platform. Porn and nonconsensual imagery is easy to find on Facebook and Instagram. We have reported endlessly on the proliferation of paid advertisements for drugs, stolen credit cards, hacked accounts, and ads for electricians and roofers who appear to be soliciting potential customers with sex work. Its own verified influencers have their bodies regularly stolen by “AI influencers” in the service of promoting OnlyFans pages also full of stolen content. …

Experts I spoke to who once had great insight into how Facebook makes its decisions say that they no longer know what is happening at the platform … “I believe we're in a time of experimentation where platforms are willing to gamble and roll the dice and say, ‘How little content moderation can we get away with?,'” Sarah T. Roberts, a UCLA professor and author of Behind the Screen: Content Moderation in the Shadows of Social Media, told me.

Very good and troubling article. If Meta - one of the richest companies in the world - is giving up on moderation, what does it mean for the dying, cash-strapped website we’re all on?

#The automated spam filter right now is overtuned and seems to ban most people who’ve made new Tumblr accounts#And they'll unban you the moment you email Tumblr support about it#faster than a human could manage - it's pretty clearly also a robot unbanning people#I suspect Tumblr has automated the majority of its moderation#And I think the situation with trans women being banned is likely a function of that being a group of people subject to malicious reports#combined with that automation

19 notes


Text

#automation#capitalism#value of human life#sharing a screenshot (alt has full text) because OP got too many notes and locked it#my screenshots

123 notes


Text

xfinity customer service should kill itself. having nothing would be better than what they're trying to pass as helpful customer service

#unable to find a phone number to call on their website. finally found a number and it was an automated assistant fml#and then it wouldn't connect me to a human! xfinity should be shot and killed over this

10 notes


Text

Supervised AI isn't

It wasn't just Ottawa: Microsoft Travel published a whole bushel of absurd articles, including the notorious Ottawa guide recommending that tourists dine at the Ottawa Food Bank ("go on an empty stomach"):

https://twitter.com/parismarx/status/1692233111260582161

After Paris Marx pointed out the Ottawa article, Business Insider's Nathan McAlone found several more howlers:

https://www.businessinsider.com/microsoft-removes-embarrassing-offensive-ai-assisted-travel-articles-2023-8

There was the article recommending that visitors to Montreal try "a hamburger," which went on to explain that a hamburger was a "sandwich comprised of a ground beef patty, a sliced bun of some kind, and toppings such as lettuce, tomato, cheese, etc" and that some of the best hamburgers in Montreal could be had at McDonald's.

For Anchorage, Microsoft recommended trying the local delicacy known as "seafood," which it defined as "basically any form of sea life regarded as food by humans, prominently including fish and shellfish," going on to say, "seafood is a versatile ingredient, so it makes sense that we eat it worldwide."

In Tokyo, visitors seeking "photo-worthy spots" were advised to "eat Wagyu beef."

There were more.

Microsoft insisted that this wasn't an issue of "unsupervised AI," but rather "human error." On its face, this presents a head-scratcher: is Microsoft saying that a human being erroneously decided to recommend dining at Ottawa's food bank?

But a close parsing of the mealy-mouthed disclaimer reveals the truth. The unnamed Microsoft spokesdroid only appears to be claiming that this wasn't written by an AI, but they're actually just saying that the AI that wrote it wasn't "unsupervised." It was a supervised AI, overseen by a human. Who made an error. Thus: the problem was human error.

This deliberate misdirection actually reveals a deep truth about AI: that the story of AI being managed by a "human in the loop" is a fantasy, because humans are neurologically incapable of maintaining vigilance in watching for rare occurrences.

Our brains wire together neurons that we recruit when we practice a task. When we don't practice a task, the parts of our brain that we optimized for it get reused. Our brains are finite and so don't have the luxury of reserving precious cells for things we don't do.

That's why the TSA sucks so hard at its job – why they are the world's most skilled water-bottle-detecting X-ray readers, but consistently fail to spot the bombs and guns that red teams successfully smuggle past their checkpoints:

https://www.nbcnews.com/news/us-news/investigation-breaches-us-airports-allowed-weapons-through-n367851

TSA agents (not "officers," please – they're bureaucrats, not cops) spend all day spotting water bottles that we forget in our carry-ons, but almost no one tries to smuggle a weapon through a checkpoint – 99.999999% of the guns and knives they do seize are the result of flier forgetfulness, not a planned hijacking.

In other words, they train all day to spot water bottles, and the only training they get in spotting knives, guns and bombs is in exercises, or the odd time someone forgets about the hand-cannon they shlep around in their day-pack. Of course they're excellent at spotting water bottles and shit at spotting weapons.

This is an inescapable, biological aspect of human cognition: we can't maintain vigilance for rare outcomes. This has long been understood in automation circles, where it is called "automation blindness" or "automation inattention":

https://pubmed.ncbi.nlm.nih.gov/29939767/

Here's the thing: if nearly all of the time the machine does the right thing, the human "supervisor" who oversees it becomes incapable of spotting its error. The job of "review every machine decision and press the green button if it's correct" inevitably becomes "just press the green button," assuming that the machine is usually right.

This is a huge problem. It's why people just click "OK" when they get a bad certificate error in their browsers. 99.99% of the time, the error was caused by someone forgetting to replace an expired certificate, but the problem is, the other 0.01% of the time, it's because criminals are waiting for you to click "OK" so they can steal all your money:

https://finance.yahoo.com/news/ema-report-finds-nearly-80-130300983.html

Automation blindness can't be automated away. From interpreting radiographic scans:

https://healthitanalytics.com/news/ai-could-safely-automate-some-x-ray-interpretation

to autonomous vehicles:

https://newsroom.unsw.edu.au/news/science-tech/automated-vehicles-may-encourage-new-breed-distracted-drivers

The "human in the loop" is a figleaf. The whole point of automation is to create a system that operates at superhuman scale – you don't buy an LLM to write one Microsoft Travel article, you get it to write a million of them, to flood the zone, top the search engines, and dominate the space.

As I wrote earlier: "There's no market for a machine-learning autopilot, or content moderation algorithm, or loan officer, if all it does is cough up a recommendation for a human to evaluate. Either that system will work so poorly that it gets thrown away, or it works so well that the inattentive human just button-mashes 'OK' every time a dialog box appears":

https://pluralistic.net/2022/10/21/let-me-summarize/#i-read-the-abstract

Microsoft – like every corporation – is insatiably horny for firing workers. It has spent the past three years cutting its writing staff to the bone, with the express intention of having AI fill its pages, with humans relegated to skimming the output of the plausible sentence-generators and clicking "OK":

https://www.businessinsider.com/microsoft-news-cuts-dozens-of-staffers-in-shift-to-ai-2020-5

We know about the howlers and the clunkers that Microsoft published, but what about all the other travel articles that don't contain any (obvious) mistakes? These were very likely written by a stochastic parrot, and they comprised training data for a human intelligence, the poor schmucks who are supposed to remain vigilant for the "hallucinations" (that is, the habitual, confidently told lies that are the hallmark of AI) in the torrent of "content" that scrolled past their screens:

https://dl.acm.org/doi/10.1145/3442188.3445922

Like the TSA agents who are fed a steady stream of training data to hone their water-bottle-detection skills, Microsoft's humans in the loop are being asked to pluck atoms of difference out of a raging river of otherwise characterless slurry. They are expected to remain vigilant for something that almost never happens – all while they are racing the clock, charged with preventing a slurry backlog at all costs.

Automation blindness is inescapable – and it's the inconvenient truth that AI boosters conspicuously fail to mention when they are discussing how they will justify the trillion-dollar valuations they ascribe to super-advanced autocomplete systems. Instead, they wave around "humans in the loop," using low-waged workers as props in a Big Store con, just a way to (temporarily) cool the marks.

And what of the people who lose their (vital) jobs to (terminally unsuitable) AI in the course of this long-running, high-stakes infomercial?

Well, there's always the food bank.

"Go on an empty stomach."

Going to Burning Man? Catch me on Tuesday at 2:40pm on the Center Camp Stage for a talk about enshittification and how to reverse it; on Wednesday at noon, I'm hosting Dr Patrick Ball at Liminal Labs (6:15/F) for a talk on using statistics to prove high-level culpability in the recruitment of child soldiers.

On September 6 at 7pm, I'll be hosting Naomi Klein at the LA Public Library for the launch of Doppelganger.

On September 12 at 7pm, I'll be at Toronto's Another Story Bookshop with my new book The Internet Con: How to Seize the Means of Computation.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

West Midlands Police (modified) https://www.flickr.com/photos/westmidlandspolice/8705128684/

CC BY-SA 2.0 https://creativecommons.org/licenses/by-sa/2.0/

#pluralistic#automation blindness#humans in the loop#stochastic parrots#habitual confident liars#ai#artificial intelligence#llms#large language models#microsoft

1K notes

Text

Viktor 🤝 Herbert West

Dr. Frankenstein-based character who gets his supreme school work plagiarized by his own professor and fights The Man in the name of bending morality for the exploration of prolonging life... his college colleague is intrigued by his work, then terrified, then begrudgingly willing to cooperate, then...

#whats a little human automation?#idk enough about stanwick but we can throw singed somewhere in there too#League of Legends#Re-Animator#League of Legends Viktor#Viktor#Viktor League of Legends#Arcane#Arcane Viktor#Viktor Arcane#Jayce Talis#league of legends jayce

23 notes

Text

Nadeko canonically comes out at the end of shinomono against considering AI real art.

Idk if nisio believes that but it certainly makes sense as an opinion to give to Nadeko given the incident of shinomono.

In this way Nadeko’s word play of A.I. circulation in caramel ribbon cursetard could technically come full circle (baddum tsh).

#the word play is between ai as in electronically automated and ai as in love#nadeko initially considers it bc she might lose hand function from the incident but at the end says that art is fundement to human evolution#saying it’s not some ai circulation is a refrence to both her as a traditional artist and the song not being “renai circulation#as the arc plays on the breaking down and destruction of the concept of renai circulation#Sengoku Nadeko#nadeposting#naderamblings#I do think nisio is at least ai curious given the nft incident#but I’m trying to be nice abt it

7 notes

Text

#a.b.e.l#divine machinery#archangel#automated#behavioral#ecosystem#learning#divine#machinery#ai#artificial intelligence#wires and cables#serverroom#server room#angel#angels#robotics#robot#android#computer#old computers#divine beings#humans#sad#memories#sentient ai

9 notes

Text

what's the point of making an appointment if i'm gonna be called at the most inconvenient of times earlier that day anyway

#personal#I'M AT WORK AND YOU'RE CALLING ME WITH A PRIVATE NUMBER TO TALK ABOUT MY APPOINTMENT THAT I HAVE IN TWO HOURS FROM NOW#I CAN'T CALL YOU BACK AND IF I CALL THE GENERAL NUMBER OF THE HOSPITAL I WON'T GET TO TALK TO A HUMAN BEING BECAUSE IT'S ALL AUTOMATED#WHAT THE FUCK DO YOU WANT ME TO DO?????????? WHAT THE FUCK DO YOU WANT FROM MEEEEHEGGRGRVBRBRBBF

4 notes

Text

I see there's a new post on AO3 on AI and data scraping, the contents of which I would describe as a real mixed bag, and the sheer number of comments on it is activating my self-preservation instincts too much for me to subject myself to reading through them. Instead I'm thinking about how much daylight there is between what does or doesn't constitute a TOS violation and what does or doesn't violate community norms, and how AO3 finally rolled out that blocking and muting feature recently, and how I think it would be good, actually, if most people's immediate reaction to seeing a work that announces itself as being the product of generative AI was to mute the user who posted it.

That's my reaction, anyway!

#ao3#christ i'm annoyed by so many people lately#i feel the same way about people posting “fic” they generated from a chat-gpt prompt as i would if they posted their google search results#is crafting a good prompt or query a skill? yeah#is it creative work? no#is there a difference between a human being creating based on existing art and a fancy predictive engine spitting out rearrangements?#absolutely#and that's not even getting into the problems with the ml datasets behind the fancy predictive engines#and the way this tech is already being used to decimate creative industries because capitalism privileges the shitty and cheap#or the way companies already generate shitty machine translations and try to pay translators next to nothing to “improve” them#which is typically much more work than starting from scratch#or just run with the shitty machine translation as is because they don't give a fuck if it's useless and confusing to users#anyway i'm real fucking grumpy#please stop trying to automate art and start automating the shit that gets in the way of humans having the time and resources to make art

77 notes
