#how to redirect 404 error page to homepage in html


How To Redirect To Multiple Websites Using JavaScript

A redirect to multiple websites happens when a visitor types a web address into the address bar and is sent to a different website (or URL) than the one typed.

Redirect To Multiple Websites Using JavaScript. In this article you will read: What is a website redirect? What is a parameter? What is the HTML code? Output result. What is a website redirect? A redirect happens when a visitor types a web address into the address bar and is sent to a website other than the one they typed…


#301 And 302 redirect#404 Redirect#अखंड रामायण#how to check website multiple redirect loop#how to redirect 404 error page to homepage in html#how to redirect multiple domains to one website#html redirect to multiple website in background#MNSGranth#redirect to 404 htaccess#redirect to multiple website in background#Redirect To Multiple Websites#redirecting multiple domains to one website#Wordpress#Wordpress Tricks


Tips To Make SEO Techniques Do The Job

Be careful about taking SEO advice from just anyone, but you do have to learn to trust someone. The tips in the following paragraphs are about as trustworthy as it gets. No frills or hyperbole here, only the basics of how you can use SEO to improve your site's rankings.

You may also be interested in Longmont SEO.

If you want searchers to find you, you have to consistently provide them with content: high-quality material that includes the keywords they are looking for. This sounds elementary, but if you are not regularly adding content to your blog or site, and if that content does not contain the keywords your audience searches for, you simply won't be seen in searches.

To make sure your page ranks highly, you must pick the best keywords for optimization. Do this by choosing keywords that are as closely related as possible to the product or service you offer, and make sure they are terms that people search for frequently.

Take any information your competitors give away and use it to your benefit. Sometimes competing websites reveal exactly which keywords they target. There are two common ways to find this information. One is to look at the META tags of the site's homepage. The other is to look at article pages, where some or all of the keywords are often set in bold.

Avoid 404 errors wherever you can. A 404 error occurs when a person tries to visit a page that no longer exists or never existed in the first place. When you move a page to a new URL, set up a 301 redirect. The redirect automatically takes users to the new URL when they arrive at the old one.

Never use an unauthorized program to submit your website to a search engine. Many search engines can detect this tactic, and if they catch you using it, they may permanently remove your website from their listings. This is called being blacklisted.

Use your keyword phrases in your HTML title tag. The title tag carries a lot of weight with search engines. If you were the reader, what words would you be likely to search for? Once you have identified those words, include them in your page title.
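As a minimal sketch of the title tag tip, the title sits inside the page's head. The keyword phrase and shop name below are made up for illustration and are not from the article.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <!-- Hypothetical keyword phrase first, hypothetical brand name last -->
  <title>Handmade Leather Wallets | Example Shop</title>
</head>
<body>
  <!-- The visible heading can repeat the same phrase the title targets -->
  <h1>Handmade Leather Wallets</h1>
</body>
</html>
```

Browsers show the title in the tab, and search engines commonly use it as the headline of the result, so it should read naturally rather than as a bare list of keywords.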

A good rule of thumb in SEO is to use your keywords and keyword phrases in your URLs whenever you can. Most search engines value keywords in the URL, so rather than using arbitrary numbers, replace them with keywords.

Keep your site away from link farms and never link to one from your site. Search engines do not like link farms, and being associated with one may eventually hurt your search rankings. If you find that a link farm links to you, contact its webmaster and ask them to remove the link.

Don't ignore long-tail keywords. When you optimize your content, long-tail keywords can bring you traffic faster than your primary keywords because they have far less competition. Do your homework and find keyword combinations that relate to your primary keywords in any of several ways. Brainstorm the different reasons people might type your keywords, and research related problems, issues, and ideas to find new long-tail keywords to use.

If you plan to use search engine optimization to increase your traffic, a great tip is to name the images on your site. People typically notice images before words. Since people cannot type an image into a search engine, they search with words, so your images need names that match those words.

A tag cloud is a good way to link users to specific topics they may be interested in, and it is a useful tool for checking whether you have focused your pages on your desired keywords. However, tag clouds can also be treated as keyword stuffing, which will hurt your search engine optimization. Limit your use of tag clouds, and keep track of whether visitors use the ones you have often enough to justify their existence.

Use your keywords in the anchor text of your internal links. Search engine spiders rely on descriptive anchor text to determine the subject of the page a link points to. Don't use phrases like "Click here": anchor text like that adds no value to your site.
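The difference between vague and descriptive anchor text can be sketched as below. The `/pricing` path and the wording of the link are assumptions chosen for illustration.

```html
<!-- Vague anchor text: the link text says nothing about the target page -->
<p>To see our pricing, <a href="/pricing">click here</a>.</p>

<!-- Descriptive anchor text: the link text itself names the target's topic -->
<p>See our <a href="/pricing">web hosting pricing plans</a>.</p>
```

Both links go to the same place; only the second tells a crawler, or a visitor using a screen reader, what to expect there.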

For search engine optimization, use any domain extension available for your URL. There is a pervasive myth that a .com is better for search engines; it is not. The major search engines have no preference for .com, so if it isn't available for the keywords you want, a .net or a .info works perfectly well and can save you money too.

If you are unsure what to type into a search engine to get the results you are looking for, it can help a great deal to ask a friend, colleague, or professor. That way you won't struggle to find the information you need online.

To do search engine optimization properly, you must first pick the right keywords for your site. This is a critical step. There are several free research tools online to help with this. One of the easiest to use is Google Trends, which lets you compare the popularity of up to five keywords.

As you can tell from this article, the best SEO tips are short, sweet, and to the point. They don't give you the runaround with fluff, and they don't promise that you'll be instantly rich if you just do this or that. This is real advice for the real site owner. Apply it wisely and watch your rankings rise.


Top 10 SEO Mistakes & How To Fix Them Right Now

No matter what your company does, there will be hundreds of companies that do a similar thing. So how do you stand out from the competition and drive traffic to your website? The answer is simple: SEO. Search engine optimization is a complex matter that can make or break your site’s visibility and traffic. By fine-tuning your SEO, you can enjoy a significant rise in website visitors, but improper SEO can hurt your site a lot. If you feel like your site can do better, or you are not satisfied with user engagement, it’s time to go through our list of the biggest SEO mistakes that most websites make and see if anything rings a bell.

1. Low site speed

You want your site to be ranked in top search results by Google so users can easily find it. Google, in turn, wants the users to be happy, and this is the primary reason behind its decision to include site speed in its search ranking algorithms. Many things can affect the speed of your site, including poorly written code, lots of unnecessary plugins, or heavy images. You may not even suspect it, but some of these things can drag your site’s performance down.

The first step is to analyze your site with Google’s PageSpeed Insights. It is an immensely helpful tool that detects the biggest problems with your site and suggests how to fix them. You can also request an SEO audit from a reliable SEO company to get an in-depth analysis of the existing problems and recommendations on how to resolve them.

The next steps will depend on the audit results. If there are a few minor issues, you will be able to fix them yourself. But if the problem is more serious, you might need to reach out for help. In e-commerce, for example, it is common practice to hire developers who work with the specific e-commerce platform, especially if it’s a complex one like Magento. In this case, store owners will need a knowledgeable Magento 2 developer who can get the store back on track and speed it up to stop losing conversions.

2. Poor site structure

A well-organized site structure is essential. It helps users easily navigate through the site, and this, in turn, leads to better user experience. At the same time, it also helps search bots “understand” your site, see which content is the most important and index the pages correspondingly. So what makes a good site structure? It implies:

Easy and clear navigation

Proper categorization

Internal linking

No duplicate pages

Breadcrumbs (recommended)

Unfortunately, many websites have complicated and confusing navigation, broken links, duplicate content, and other issues that prevent Google from indexing them properly. Think of a good site structure as a pyramid with the homepage at the top, from which the user gradually moves down to categories, subcategories, etc. Also check out this guide on setting up a site structure with a detailed explanation of each step.

One more thing to consider is an XML sitemap. The XML sitemap is basically a list of the most essential pages of your site, and it helps Google see and index these pages. It is highly recommended for any website to have an XML sitemap. To make it visible to Google, you’ll need to add your sitemap in Google Search Console (the “Sitemaps” section).

3. Broken links

You may not even remember a newsletter that you sent to your customers a few months ago, but they may still click on the links in it. And it may happen that these links are broken and lead to a 404 page. There are a few reasons for a 404 error: the user typed the URL incorrectly, the page was deleted, or the site structure was changed. Broken links can be both internal and external, meaning another site might have a broken link that is supposed to lead to your site.

How do you keep an eye on all your links and ensure none of them result in a 404 error? There are many tools that help you track your links and identify the broken ones. First, there are crawl tools that analyze the internal links on your site and report the broken ones. Then, there are backlink tools that, as you might have guessed, analyze external sources that may contain a broken link to your site. You can also track 404 errors with Google Analytics.

Broken links are a serious issue. They harm the ranking of your site and significantly worsen the user’s experience. But before rushing to fix them, estimate how many users (or conversions) you are losing to 404 errors and then prioritize your tasks accordingly.

4. Non-optimized images

When people hear SEO, they immediately think of keywords, links, XML sitemaps, and other “technical” stuff. What many site owners tend to overlook is image optimization. Images are an essential part of your SEO strategy. They help your site get noticed and draw users to it. After all, people do lots of image searches, and you want your website to appear in those search results. An e-commerce store is an excellent example of a site that demands proper image optimization. With a variety of product images, such a store wants to be noticed among the competition and let the users know that it has the needed products.

Naming the image

Every image needs an informative name so Google can understand what the image is about. Describe its key features and think about what keywords users may search for. Then, add one or two keywords to the image name, so it looks something like “white-flat-shoes-women.jpg”. But don’t try to fill the image’s name with keywords only; make the name descriptive and useful.

Alt attributes

The alt attribute text is shown when the browser cannot display the image and shows text instead. Alt attributes help both users and search bots understand what the missing image is about, so you need to write these attributes for every image on your site. As with the image name, alt attributes should contain keywords and describe the image in a clear and neat manner.

Also keep an eye on image size and type. Image types like JPG and PNG suit different purposes, and image size can affect how fast the site loads.
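Put together, a descriptive file name and an alt attribute might look like the sketch below. The file name comes from the article's own example; the `/images/` path, the alt wording, and the dimensions are assumptions.

```html
<!-- Descriptive file name plus alt text that says what the picture shows -->
<img src="/images/white-flat-shoes-women.jpg"
     alt="Women's white flat shoes with a rounded toe"
     width="600" height="400">
```

The `width` and `height` attributes are optional, but they let the browser reserve space for the image before it has downloaded.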

5. Non-optimized text

The content of your site serves a few crucial functions: it informs users about your products or services and, at the same time, affects your ranking. If the text does not have enough keywords, is not unique or relevant, does not contain any links, and does not bring value to the user, it can seriously hurt your ranking. The trick is, Google assesses sites by their value for users. If a bot sees high bounce rates or identifies copy-pasted content on your pages, it will rank your site lower or even hide it from the search results.

One of the biggest mistakes that many e-commerce marketers make with their text is leaving the manufacturer’s product description untouched. That is, they copy and paste it without the slightest alteration. But think about this: how many stores are selling the same products and have pasted in the same text? When it sees several identical texts, Google will rank more “reliable” sites higher than yours. Try writing unique product or service descriptions and creating unique copy across your site. And don’t forget the keywords; we will talk about them in just a moment.

6. Issues with keywords

Ah, the keywords. The special words that can draw traffic to your site and significantly raise its ranking. However, for some sites, keywords remain terra incognita. The most common issues with keywords are:

Overuse of keywords: the copy seems unnatural

Misuse of keywords: use of irrelevant keywords, for example

Missing keywords: there are no or very few keywords across your site

It is recommended to place keywords all across the site, including the URLs and H1 tags. But how do you choose the relevant ones? Google offers an array of tools to search for keywords, including the Google AdWords Keyword Tool and Google Analytics itself. Also study your competition and see which keywords are used most often. But when choosing keywords, always make sure they are relevant to the content on your site.

7. Duplicate content

Duplicate content means the same content appears on more than one of your pages, and this is bad for your ranking. The thing is, when Google sees pages with identical content, it cannot decide which one is canonical. As a result, the overall ranking drops, and so does site visibility. There are a few ways to fix the issue:

Use robots.txt to prevent individual pages from being crawled

Apply a 301 redirect to send one URL to another page

Use noindex, nofollow tags to prevent the page from being indexed/crawled

Use the rel canonical tag to let the search engine know which page is canonical

The problem may also be that the content itself is identical across pages. In this case, do a rewrite or create unique content for every page, as we discussed above.
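Two of these fixes live in the page's head and can be sketched as follows. The `example.com` URL is a placeholder, and the two options are alternatives, not something to combine on the same page.

```html
<!-- Option A, on the duplicate page: name the preferred (canonical) URL.
     The URL below is a hypothetical placeholder. -->
<head>
  <link rel="canonical" href="https://www.example.com/products/white-flat-shoes">
</head>

<!-- Option B, on a page that should stay out of search results entirely -->
<head>
  <meta name="robots" content="noindex, nofollow">
</head>
```

One caveat worth keeping in mind: a crawler can only see a `noindex` tag on a page it is allowed to fetch, so blocking that same page in robots.txt would hide the tag from it.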

8. Misuse of the H1 tag

The H1 tag is an HTML tag that indicates a heading on a web page. This tag is at the top of the tag hierarchy on a page and helps search engines understand the content on the page. When a bot lands on a page, it reads the HTML code and immediately “understands” what the page is about thanks to a proper tag hierarchy. So if your site has several H1 tags on a page or poor tag organization, it will confuse the bot and, as a result, hurt your ranking. Some best practices to follow when creating an H1 tag:

Add keywords to it

Try not to make it too lengthy

Use a proper hierarchy: h1, h2, h3, etc.

Keep one H1 tag per page

The use of the H1 tag also affects user behavior. It is more pleasant to interact with a well-organized page than to guess what the whole thing is about.
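A heading hierarchy that follows these practices could look like the sketch below. The heading texts are invented for illustration.

```html
<body>
  <!-- Exactly one h1: the topic of the whole page -->
  <h1>White Flat Shoes for Women</h1>

  <!-- h2 for major sections, h3 for their subsections; no levels skipped -->
  <h2>Materials</h2>
  <h3>Leather</h3>
  <h3>Canvas</h3>

  <h2>Sizing Guide</h2>
</body>
```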

9. Content with zero value

By now, you may think: how does this relate to SEO mistakes? But valuable content is actually an essential part of your SEO strategy. In an attempt to drive traffic to the site, some entrepreneurs add many irrelevant but popular keywords to it. While this may work at first, users will soon see that the content does not match the keywords, and they will simply leave. The search bot, in turn, will see a high bounce rate on the page and will understand that your site does not offer any value to users. As a result, your ranking will drop drastically, and you may even get blacklisted. Quality content also contributes to link building. If your content is indeed informative and brings value, people will share it, and that means a higher ranking.

10. Lack of mobile optimization

This one is really important. In 2018, Google rolled out mobile-first indexing. That means the mobile version of your site is now the first thing that Google will include in the index. Because the number of mobile users is growing drastically, website owners need to adapt to this change and transform their websites accordingly. A mobile-friendly site usually means:

Light-weight design for faster loading

Interactive and clickable elements

Larger fonts

Mobile-friendly images

Easy navigation

If the site is not adapted for mobile, users will leave it faster than you’ll notice, and that will deal a blow to your conversions.
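A few of these points can be sketched in a page's head. The viewport meta tag is not mentioned in the article; it is added here as the usual starting point for a mobile layout, and the specific sizes are assumptions.

```html
<head>
  <meta charset="utf-8">
  <!-- Render at the device's width instead of a zoomed-out desktop layout -->
  <meta name="viewport" content="width=device-width, initial-scale=1">
  <style>
    /* Larger base font for small screens (size is an assumed value) */
    body { font-size: 18px; }
    /* Images never overflow the screen width */
    img { max-width: 100%; height: auto; }
    /* Padding gives navigation links a bigger tap target */
    nav a { display: inline-block; padding: 12px 16px; }
  </style>
</head>
```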

Summary

Search engine optimization is essential for any website. It determines the site’s visibility and, hence, helps build its reputation online. Invest some time in building a solid SEO strategy and start by fixing the SEO mistakes above. Also, don’t forget to run regular SEO audits. In return, you will be rewarded with a high ranking, satisfied users, and an inflow of conversions. If you need any help with your website's SEO, reach out to Wowbix Digital Marketing today!


Your Website Must Have These Pages

When you are knee-deep into the digital marketing ecosystem, a website is your bread and butter. Having a well-designed website isn’t just important for more engagement, but it is also crucial for conversions. Undoubtedly every business is unique and every website should be different, however as per the top website SEO services in UAE, there are certain pages that every website must have to draw traffic and keep the audience hooked. Does your website have them all? Read on to find out!

1 Homepage

Your website’s homepage is like your front door. It will be one of your most viewed pages so you have to convey the right message to establish your credibility as a legitimate business. Your homepage should clearly state a brief explanation of how you can help your potential customer through your offerings.

2 About Page

The About Us section answers some important questions for your visitors, such as your company’s history, who owns the business, and what your vision and mission are.

3 Product/ services (if you offer any)

If you offer any product or service, a product page comes in handy. Don’t cram everything onto one page. Create a layout responsive enough to help your customers make an informed decision.

4 Contact Page

For both budding and established businesses, the Contact Us page serves as the main lead driver of the website. Whether you include a calendar scheduler, a contact form, a phone number, an email address, or an appointment booking app, a contact page is the most effective way to help your customers reach you.

5 Testimonials Page

The testimonials page contains reviews from your previous clients. Believe it or not, opinions coming from seemingly objective third parties can increase your brand credibility and be extremely persuasive. So, having a testimonials page on your site is a must.

6 Blog Section

The fact that you are reading this blog now proves that blogging is a tried-and-true method to optimize any website for more traffic. Instead of loading up different product pages for each individual keyword, you can create a blog to increase your ranking, build authority and spread brand awareness.

7 Latest News

In this section, you can post links that take your customers to articles, advertisements, press releases, and other accomplishments of the business.

8 Privacy Policy

The privacy policy is a page explaining how your website gathers, uses, manages and discloses customers’ data.

9 Sitemap Page

Sitemaps typically come in XML and HTML formats, and they act as an index of all the web pages on your site. Where possible, link to your sitemap from the footer of every page. Many plugins can help you build an HTML sitemap.
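A hand-written HTML sitemap with a footer link might look like the sketch below. All file names (`/sitemap.html`, `/about.html`, and so on) are hypothetical; a plugin would normally generate the list for you.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <title>Sitemap</title>
</head>
<body>
  <main>
    <h1>Sitemap</h1>
    <!-- One link per page; the paths are placeholders -->
    <ul>
      <li><a href="/">Homepage</a></li>
      <li><a href="/about.html">About</a></li>
      <li><a href="/contact.html">Contact</a></li>
      <li><a href="/blog/">Blog</a></li>
    </ul>
  </main>

  <!-- The same footer would be repeated on every page of the site -->
  <footer>
    <a href="/sitemap.html">Sitemap</a>
    <a href="/privacy-policy.html">Privacy Policy</a>
  </footer>
</body>
</html>
```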

10 Site Not Found Page

Also known as a 404 error page, this is the page your visitors see when a webpage has moved, expired or is no longer working. Because a 404 error page is an ordinary HTML page, you can customize it any way you want. Just be sure to add a link back to your homepage, so your visitors can get back to your site easily.
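A custom 404 page with a link back to the homepage can be sketched as below. The optional meta refresh line, the five-second delay, and the wording are assumptions; the web server also has to be configured separately to serve this file with a 404 status, which HTML alone cannot do.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <title>Page Not Found</title>
  <!-- Optional: send the visitor to the homepage ("/") after 5 seconds.
       Delete this line to keep visitors on the 404 page until they click. -->
  <meta http-equiv="refresh" content="5; url=/">
</head>
<body>
  <h1>404: Page Not Found</h1>
  <p>The page you asked for has moved, expired, or never existed.</p>
  <!-- The link back to the homepage that the article recommends -->
  <p><a href="/">Return to the homepage</a></p>
</body>
</html>
```

Whether to redirect automatically is a judgment call: a visible link lets visitors understand what happened, while an instant redirect to the homepage can leave them confused about where their page went.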

Ask the experts!

As a website owner, you are not just sharing information and soliciting responses; you also need conversions from your site. If you want to include all the necessary web pages on your site, optimize your pages like a pro, and get more ROI, then it’s best to go for the top website SEO services in UAE. The professionals can help you create a responsive and dependable web framework that keeps your customers hooked.

So, did you get started yet?

Source: https://seooutofthebox.com/your-website-must-have-these-pages/


What is a 301, 302 Redirect and How to fix it- in Hindi

301 and 302 redirects, 404 (Page Not Found), 403 (Forbidden), and 500 (Server Error): learn what 301 and 302 are and how to fix them in WordPress, all in one place, and fix them yourself now.

301, 302 Redirect along with 404 – Page Not Found, 403 – Forbidden, and 500 – Server Error: all the information in one place. 301, 302 Redirect. What is a 301 (permanent) redirect? 301 is an HTTP status code that a web server sends to a browser. A 301 indicates a permanent redirect from one URL to another, which means that the old URL…


#301 And 302 redirect#301 Redirect#301 vs 302 redirect#302 Redirect#404 Redirect#difference between 301 and 302 redirect#how to redirect 404 error page to homepage in html#Html 404 errors#what's the difference between a 301 and a 302 redirect


6 Easy Ways to Help Reduce Your Website’s Page Load Time

New Post has been published on https://tiptopreview.com/6-easy-ways-to-help-reduce-your-websites-page-load-time/


The adage “patience is a virtue” doesn’t apply online.

Even a one-second delay can drastically reduce pageviews, customer satisfaction and conversions. The speed of your site even affects your organic search rankings.

So what’s the biggest factor contributing to your page speed?

Size.

It takes a browser time to download the code that makes up your page. It has to download your HTML, your stylesheets, your scripts and your images. It can take a while to download all that data.

As web users expect more engaging site designs, the size of a site’s resource files will continue to grow. Each new feature requires a new script or stylesheet that weighs down your site just a little more.

How do you make sure your site is up to speed?

There are some great resources for analyzing this. Google’s PageSpeed Insights, HubSpot’s Website Grader, and GTMetrix are some of the most popular. All three services will analyze your site and tell you where you’re falling behind.

A little warning: the results can be a bit daunting sometimes, but most fixes are relatively quick and easy. You might not fix everything the speed service recommends, but you should fix enough to make the site experience better for your visitors.

Let’s learn how to speed up things.

While a few modern content management systems like HubSpot implement speed-enhancing options out-of-the-box, more common systems, like WordPress and Joomla, require a little manual labor to get up to speed.

Now let’s look at some essential speed solutions that every webmaster should consider.

How to Reduce Page Load Time

Scale down your images.

Cache your browser for data storage.

Reduce CSS load time.

Keep scripts below the fold.

Add asynchronous pages.

Minimize redirects.

1. Scale down your images.

Images are one of the most common bandwidth hogs on the web. The first way to optimize your images is to scale them appropriately, so they don’t affect page loading time as much.

Many webmasters use huge images and then scale them down with CSS. What they don’t realize is that the browser still downloads them at full size. For example, if an image is 1000 x 1000 pixels but you display it at 100 x 100 pixels, the browser must download 100 times as many pixels as necessary.
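The wasteful and the better approach can be sketched side by side. The file names and paths are hypothetical; the point is only that the second file is saved at the size it is displayed.

```html
<!-- Wasteful: a 1000 x 1000 file that CSS shrinks to 100 x 100.
     The browser still downloads the full-size file. -->
<img src="/images/logo-1000.png" alt="Company logo"
     style="width: 100px; height: 100px;">

<!-- Better: a file already saved at 100 x 100, shown at its real size -->
<img src="/images/logo-100.png" alt="Company logo"
     width="100" height="100">
```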

Take a look at the size difference when we scaled down one of our images:

Just by changing the dimensions of my image, from 598 x 398 to 600 x 232, the file size decreased immensely. If you resize your images before uploading them, you won’t have to remember to do it when you put them on your page.

Sometimes though, scaling images will make your photos blurry. The clarity can be lost and the image becomes distorted. If that usually happens to you, go with the second option: compressing.

Compressing images will drastically reduce file size with little or no visible loss of quality. There are several free online tools for image compression, such as tinypng.com, that can reduce your image sizes. You can see size reductions anywhere from 25% to 80%.

For example, I took that first image, at 133 KB, and compressed it using a free web app called Squoosh. When the compression finished, the file was 87% smaller and showed no visible loss of quality.

2. Cache your browser for data storage.

Why make visitors download the same things every time they load a page? Enabling browser caching lets you temporarily store some data on a visitor’s computer, so they don’t have to wait for it to load every time they visit your page.

How long the data is stored depends on the visitor’s browser configuration and your server-side cache settings. To set up browser caching on your server, contact your hosting company.

3. Reduce CSS load time.

Your CSS loads before people see your site. The longer it takes for them to download your CSS, the longer they wait. An optimized CSS means your files will download faster, giving your visitors quicker access to your pages.

Start by asking yourself, “Do I use all of my CSS?” If not, get rid of the superfluous code in your files. Every little bit of wasted data can add up until your website’s snail-pace speed scares away your visitors.

Next, you should minimize your CSS files. Extra spaces in your stylesheets increase file size. CSS minimization removes those extra spaces from your code to ensure your file is at its smallest size.
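As a small illustration of what minification does, here is the same rule before and after, written as inline style blocks. The `.button` class and its colors are made up; a real minifier would process your whole stylesheet file.

```html
<!-- Before: readable, but every space and line break is downloaded -->
<style>
  .button {
    color: #ffffff;
    background-color: #0055aa;
  }
</style>

<!-- After: the same rule with whitespace and the final semicolon removed -->
<style>.button{color:#ffffff;background-color:#0055aa}</style>
```

The browser treats both versions identically; only the number of bytes sent over the network changes.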

See if your CMS already minimizes your CSS or if there’s an option for it. HubSpot, for example, already minimizes your CSS by default, whereas WordPress websites require an additional plugin such as WP Hummingbird to optimize those files.

If your CMS does not have a minimize CSS option, you can use a free online service like CSS Minifier. Simply paste in your CSS and hit “Compress” to see your newly minimized stylesheet.

Minimizing your resource files is a great way to knock some size off your files. Trust me — those little spaces add up quickly.

4. Keep scripts below the fold.

JavaScript files can load after the rest of your page, but if you put them all before your content, as many companies do, they will load before your content does.

This means your visitors must wait until your JavaScript files load before they see your page. The simplest solution is to place your external JavaScript files at the bottom of your page, just before the closing body tag. Now more of your site can load before your scripts.
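Placing a script at the bottom of the page can be sketched like this. The file paths `/css/main.css` and `/js/app.js` are placeholders.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <title>Example Page</title>
  <!-- Stylesheets stay in the head so the page is styled as it appears -->
  <link rel="stylesheet" href="/css/main.css">
</head>
<body>
  <h1>Content the visitor sees first</h1>
  <p>This text is parsed and shown before the script below is fetched.</p>

  <!-- External script placed last, just before the closing body tag -->
  <script src="/js/app.js"></script>
</body>
</html>
```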

Another method that allows even more control is to use the defer or async attributes when placing external .js files on your site. Both defer and async are very useful, but make sure you understand the difference before you use them.

The async attribute downloads a script while the rest of the page loads and runs it as soon as it arrives, so scripts can run out of order. Basically, whichever file finishes downloading first runs first. This might be fine for some scripts, but can be disastrous for others.

For instance, let’s say one of your pages loads a large library script followed by a small script that depends on it. If both have the async attribute, the small script will probably arrive first and run before the library exists, and it will fail.

So use async for scripts that don’t depend on other scripts or on the page’s content, such as an analytics snippet.

The defer attribute also downloads your scripts while the page loads, but waits to run them until the browser has finished parsing your content. It also runs the scripts in the order they appear on the page. To illustrate, think of your homepage: all of the text, images, and layout would appear first, and the deferred scripts would run afterward, in order.

Just make sure your scripts run without breaking your site. All you need to do is add a simple word in your <script> tags. For example, you can take your original script:

<script type="text/javascript" src="/path/filename.js"></script>

And add the little code to ensure it loads when you want:

<script type="text/javascript" src="/path/filename.js" defer></script>

<script type="text/javascript" src="/path/filename.js" async></script>

The importance of your scripts will determine if they get an attribute and which attribute you add. More essential scripts should probably have the async attribute so they can load quickly without holding up the rest of your content. The nonessential ones, however, should wait until the end to ensure visitors see your page faster.

But always make sure you test each script to ensure the attribute doesn’t break your site.

5. Add asynchronous pages.

Most web pages download content little by little from different sources. For instance, the body of a web page holds the content that the browser displays. The head, however, usually points to external resources, such as the stylesheets and scripts the page depends on.

With synchronous loading, which is how a lot of pages are rendered, the browser works through the page from beginning to end, head to body, and that takes a while. If the browser reaches a script, it must download and run that script before it can continue with the rest of the page.

In the meantime, the visitor is waiting for ages for a web page to appear. On the visitor’s computer, the browser is busy enough that it seems like everything just stops.

Asynchronous loading instead lets the browser download scripts in parallel with the rest of the page. Because scripts no longer block one another or the content in a chain, other parts of the page can load in tandem.

Asynchronous loading can be implemented by adding a few attributes to the script tags in your page’s HTML. Read how to design them in this post.

6. Minimize redirects.

How many redirects does your website have? If you recently did a website migration or acquired subdomains, it’s likely you have a redirect or two. These redirects make pages take longer to load.

Redirects happen when a URL has moved and the server sends the browser on to a new address. They are not the same as errors like “Error: 404 not found,” which pop up when users type in an incorrect web address or are taken to a broken page on a website. When a page is redirected, another page takes its place; with a 404, an error message replaces the screen.

Visitors have to wait through an extra HTTP request while they’re being redirected, increasing the amount of time taken to load the page. Let’s say your “About” page has been moved to a different address.

When visitors type in the address, let’s say mywebsite.com/about, they should be taken instantly to that page. Instead, mywebsite.com/about answers with an HTTP redirect, which then takes the visitor to mywebsite.com/aboutus. That’s an additional round trip users have to go through just to get to their intended destination.

Instead of hosting multiple redirects, remove them. In this post, you’ll learn how to find all of your redirect pages, alternatives, and most importantly, how to remove them.
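To get a feel for how many extra hops a visitor goes through, you can model your redirects as a simple lookup table and follow them. This is a minimal sketch in Python, not a crawler: the paths are made up for illustration and the function name `redirect_chain` is an assumption of this example.

```python
from typing import Dict, List


def redirect_chain(redirects: Dict[str, str], start: str, max_hops: int = 10) -> List[str]:
    """Return every address visited from `start`, following the redirect table."""
    chain = [start]
    current = start
    while current in redirects:
        current = redirects[current]
        if current in chain:
            raise ValueError(f"redirect loop detected at {current}")
        chain.append(current)
        if len(chain) - 1 > max_hops:
            raise ValueError("too many redirects")
    return chain


# Hypothetical redirect table: old address -> where it currently points.
redirects = {
    "/about": "/company",
    "/company": "/aboutus",
}

chain = redirect_chain(redirects, "/about")
print(" -> ".join(chain))
print("extra hops:", len(chain) - 1)
```

Any address with more than one hop is a candidate for pointing straight at its final destination.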

As online users demand a richer online experience, the size of our pages will continue to grow. There will be flashier JavaScript, more CSS tricks and more third-party analytics to weigh down our websites.

A little attention will go a long way — remember, just a one-second delay is all it takes to lose a lead.


Page Redirects in WordPress or ClassicPress

Page redirects in WordPress or ClassicPress are not the most straightforward topic if you are dealing with them for the first time. Many people have heard of page redirects before but aren’t always sure when to use them or how to implement them. They are sometimes needed when maintaining a WordPress or WooCommerce site. In the following blog post, you will learn everything you need to know about page redirects (in WordPress and otherwise). This includes what they are and why they matter, when to use what type of redirect, where to apply them, and different ways of correctly implementing page redirects on your WordPress site. To start, let’s take a look at what they are.

What Are Page Redirects and Why Do You Need Them?

Page redirects are basically like a mail-forwarding notice at the post office. When you move, you can get one of those and any mail that was sent to your old house will automatically be delivered to your new mailing address. Redirects are the same thing for web pages, only that, instead of letters and parcels, they send visitors and search spiders to another web address.

Implementing page redirects can be necessary for many reasons:

- A mistake in your title and URL that you want to correct
- Attempting to add/target a different keyword with your page
- The entire permalink structure of your site has changed
- Some external link is pointing to the wrong address and you want visitors to find the right page
- You want to change parts of your URL, like remove www or switch to HTTPS (or both)
- You have moved to an entirely new domain (or merged another site with yours) and want the traffic and SEO value of the old URL to land on the new one

Why Do They Matter?

From the above list, it’s probably already obvious why page redirects are a good idea. Of course, if your entire site moves, you don’t want to start from scratch but instead benefit from the traffic and links you have already built. However, even if you only change one page, implementing a redirect makes sense. That’s because having non-existent pages on your site is bad for both visitors and search engine optimization. When someone tries to visit them, they will see a 404 error page. This is not a pleasant experience and usually very annoying (as entertaining as 404 pages can be). Because of that, search engines are also not big fans of this kind of error and might punish you for it. Also, you want them to understand your site structure and index it correctly, don’t you? Therefore, it’s a good idea to leave a “this page no longer exists, please have a look over here” message whenever necessary.

Different Redirect Codes and What They Mean

When talking about redirects, you need to know that there are several different types. These are categorized by the HTTP status codes assigned to them, similar to the aforementioned 404 error code for a missing page. However, redirects are all in the 300 category:

- 301: This is the most common kind. It means that a page has moved permanently and the new version can from now on be found at another location. This page redirect passes on 90-99 percent of SEO value.
- 302: This means a page has moved temporarily. The original URL is currently not available but will come back, and you can use the new location in the meantime. It passes no link value.
- 303: Only used for form submissions to stop users from re-submitting when someone uses the browser back button. This is probably not relevant to you unless you are a developer.
- 307: The same as a 302 but for HTTP 1.1. It means something has been temporarily moved.
- 308: The permanent version of the 307.
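The codes listed above are standard HTTP status codes, so most programming languages already know their official names. As a small illustration, the Python sketch below looks them up with the standard library’s `http.HTTPStatus`. The `KIND` labels simply restate the descriptions from this post; they are this example’s own wording, not part of the HTTP standard.

```python
from http import HTTPStatus

# Labels taken from the descriptions in this post (an assumption of this
# example, not official terminology).
KIND = {
    301: "permanent",
    302: "temporary",
    303: "after form submission",
    307: "temporary",
    308: "permanent",
}


def describe(code):
    """Return the code, its official reason phrase, and the kind of redirect."""
    status = HTTPStatus(code)  # raises ValueError for unknown codes
    return f"{code} {status.phrase} ({KIND[code]})"


for code in sorted(KIND):
    print(describe(code))
```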

When to Use What?

Of course, the biggest question is when to use which type of page redirect. While there are several options, you usually only need two of them: 301 and 302. Out of those, probably more than 90 percent of the time, you will use a 301. That’s because for the rest (except 303), it’s not always clear how search engines handle them, so you basically stick to those two options. As for when to use which, much of it you can already understand from what the code tells the browser or search spider. However, here’s a detailed description:

- 301: Use this when you are planning on deleting a page and want to point visitors to another relevant URL, or when you want to change a page’s permalink (including the domain).
- 302: Use this, for example, when making changes to a page that visitors are not supposed to see, or when you redirect them to a temporary sales page that will soon turn back to the original. That way, search engines won’t de-index the existing page.

Redirects and Page Speed

While page redirects are great tools for webmasters and marketers, the downside is that they can have an effect on page speed. As you can imagine, they represent an extra step in the page loading process. While that’s not much, in a world where visitors expect page load times of mere seconds, it matters. In addition, page redirects use up crawl budget from search engines, so you can potentially keep them from discovering your whole site by having too many of them. Therefore, here are some important rules for their usage:

- Avoid redirect chains: This means several hops from an old to a new page. This is especially important when you redirect http to https and www to non-www. These should all resolve to the same domain directly (https://domain.com), not ping-pong from one to the next.
- Don’t redirect links that are in your control: If there is a faulty link inside a menu, inline, or similar, change it manually. Don’t be lazy.
- Try to correct external links: If the fault is with an incoming link, consider reaching out to the originator and asking them to correct it on their end.

In essence, keep page redirects to a minimum. To see if you have multiple redirects in place, you can use the Redirect Mapper.
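One way to apply the “avoid redirect chains” rule is to rewrite your redirect list so that every old address points straight at its final destination. Below is a minimal Python sketch of that idea. The function name `flatten_redirects` and the domain.com addresses are assumptions for illustration; your real list would come from your plugin or server configuration.

```python
from typing import Dict


def flatten_redirects(redirects: Dict[str, str]) -> Dict[str, str]:
    """Point every source directly at the last address in its chain."""
    flat = {}
    for source, target in redirects.items():
        seen = {source}
        # Keep following until the target is not redirected any further.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat


# Hypothetical chain: http+www -> http non-www -> https non-www.
redirects = {
    "http://www.domain.com/": "http://domain.com/",
    "http://domain.com/": "https://domain.com/",
    "https://www.domain.com/": "https://domain.com/",
}

for old, new in flatten_redirects(redirects).items():
    print(old, "->", new)
```

After flattening, every variant resolves to https://domain.com/ in a single hop instead of two.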

How to Find Pages to Redirect and Prepare the Right URLs

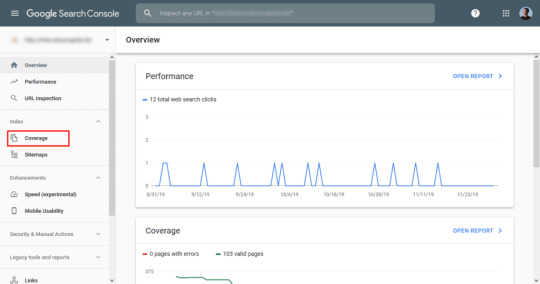

So, besides when you do a site or page move, how do you find pages to redirect? A good place to start is the 404 errors/crawl errors in Google Search Console. You find them under Coverage. Note that Search Console now only shows 404 errors that threaten your pages from being indexed and not all of them. Therefore, to track down non-existent pages, you can also use a crawler like Screaming Frog. Some of the WordPress plugins below also help you with that. You can also take a look at SEMRush, a popular SEO management tool used by experts and beginners alike, which offers a free trial.

Then, to prepare your page redirects:

- Get the correct to and from URL: This means staying consistent in the format. For example, if you are using a trailing slash, do it for both URLs. Also, always redirect to the same website version, meaning your preferred domain including www/non-www, http/https, etc.
- Get the slug, not the URL: This means /your-page-slug instead of http://yoursite.com/your-page-slug. This way, you make your redirects immune to any changes to the domain, such as switching from www to non-www or from http to https.
- Redirect to relevant pages: Meaning similar in topic and intent. Don’t just use the homepage or something else. Try to anticipate search intent and how you can further serve it.
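The “slug, not the URL” and “consistent trailing slash” rules above are easy to automate. Here is a small Python sketch using the standard library’s `urllib.parse`. The function name `to_slug` is hypothetical, and it assumes each input is either a full URL including http:// or https://, or a path that already starts with a slash.

```python
from urllib.parse import urlsplit


def to_slug(url_or_path, trailing_slash=True):
    """Strip scheme and domain, and normalise the trailing slash."""
    path = urlsplit(url_or_path).path or "/"
    path = path.rstrip("/")
    # The site root always keeps its slash; other paths follow the setting.
    if trailing_slash or not path:
        path += "/"
    return path


print(to_slug("http://yoursite.com/your-page-slug"))
print(to_slug("/your-page-slug/", trailing_slash=False))
print(to_slug("https://www.yoursite.com/"))
```

Running both the old and the new address through the same function keeps the two sides of each redirect in the same format.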

How to Correctly Implement Page Redirects in WordPress

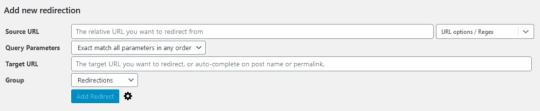

You have different methods of implementing page redirects in WordPress. Basically, you can either use a plugin or do it (somewhat) manually via .htaccess. Both come with pros and cons:

- Plugin: Easy to use and nontechnical, but potentially slower because many of them use wp_redirect, which can cause performance issues.
- .htaccess: This is a server file and very powerful. For example, you can include directives for using gzip compression in it. Using this is faster because page redirects are set up at the server level, not somewhere above it. However, making a mistake can mess up and/or disable your entire site.

Let’s go over both options:

1. Using a Plugin

You have different plugin options for redirects in WordPress. Among them are:

- Redirection: This is the most popular solution in the WordPress directory. It can redirect via Core, htaccess, and Nginx server redirects.
- Simple 301 Redirects: Easy to use, few options, does just what you need and nothing more.
- Safe Redirect Manager: With this plugin, you can choose which redirect code you want to use (remember what we talked about earlier!). It also only redirects to white-listed hosts for additional security.
- Easy Redirect Manager: Suitable for 301 and 302 redirects. The plugin is well designed and comes with many options.

All of the plugins work in a very similar way. They provide you with an interface where you can enter a URL to redirect and where it should lead instead.

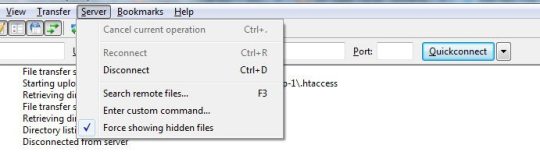

Some of them, like the Redirection plugin, also have additional functionality. For example, this plugin also tracks whenever a visitor lands on a page that doesn’t exist so you can set up appropriate page redirects.

2. Using .htaccess

.htaccess usually resides on your server inside your WordPress installation. You can access it by connecting via FTP.

Be aware though that it is hidden by default, so you might have to switch on the option to show hidden files in your FTP client of choice.

The first thing you want to do is download and save it in a safe place so you have a copy of your old file in case something goes wrong. After that, you can edit the existing file (or another local copy) with any text or code editor. A simple redirect from one page on your site to another can be set up like this:

<IfModule mod_rewrite.c>
RewriteEngine On
Redirect 301 /old-blog-url/ /new-blog-url/
</IfModule>

If the <IfModule> brackets already exist (as they should when you are using WordPress), all you need is this:

Redirect 301 /old-blog-url/ /new-blog-url/

Just be sure to include it right before the closing bracket. You can also use patterns in redirects. For example, the code below is used to redirect all visitors from the www to the non-www version of a website.

RewriteCond %{HTTP_HOST} ^www\.mydomain\.com$
RewriteRule (.*) http://mydomain.com/$1 [R=301,L]

To explore more options and if you don’t want to write them out manually, there is this useful tool that creates redirect directives for you. When you are done, save/re-upload and you should be good to go. Be sure to test thoroughly!
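If you have a long list of pages to move, you can generate the Redirect lines instead of typing them. The following Python sketch builds them from a dictionary of old and new slugs. The function name `htaccess_redirects` and the example slugs are assumptions of this illustration; the output still has to be pasted into your .htaccess by hand and tested.

```python
from typing import Dict


def htaccess_redirects(mapping: Dict[str, str], status: int = 301) -> str:
    """Build one Apache `Redirect` line per old-path -> new-path pair."""
    lines = []
    for old, new in mapping.items():
        if not old.startswith("/"):
            raise ValueError(f"old path must start with '/': {old}")
        if " " in old or " " in new:
            raise ValueError("paths must not contain spaces")
        lines.append(f"Redirect {status} {old} {new}")
    return "\n".join(lines)


# Hypothetical slugs for illustration.
pages = {
    "/old-blog-url/": "/new-blog-url/",
    "/old-contact/": "/contact/",
}
print(htaccess_redirects(pages))
```

Passing `status=302` produces temporary redirects instead.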

Conclusion

Page redirects in WordPress can be very useful and have a very important function. They keep visitors and search engines from landing on non-existent pages and are, therefore, a matter of both usability and SEO. Above, you have learned all you need to know about their usage and how to implement them. You are now officially ready to start sending visitors and search spiders wherever you want. Note that these aren’t the only ways to implement page redirects. However, they are the most common and recommended. If you want to know less common ways, check this article on CSS Tricks. What do you use to implement page redirects in WordPress? Any more tools or tips? Share them in the comments section below, and if you enjoyed this post, why not check out this article on WordPress Building Trends For 2020! Post by Xhostcom Wordpress & Digital Services, subscribe to newsletter for more!


SEO Local Platinum

The LVPro | Local Platinum offering includes:

- Keyword Topics: SEO work will focus on 6 keyword topics.
- Trackable Keywords: We will focus on 30 target keywords and track 150 keywords.
- Optimized Pages: We will recommend or perform optimization of website pages every month.
- Locally Relevant Link Building: We will build backlinks (from local search engines & directories), business listings and NAP enabled citations every month to improve local presence.
- Content Optimization: We will post industry-specific editorial content and create/modify website content to boost rankings.

THE LVPro PROCESS:

- Site Assessment and Intake: We audit your website and the online presence of your business, and gather information about your target audience and goals.
- Keyword Research: We research the best 30 keywords based on the industry vertical and physical location to bring the most effective results. Target keywords are approved by mutual consensus.
- Content and On Page Optimization: From our site assessment and keyword research, we implement high-quality content optimization on your site to engage visitors. Extensive on page optimization includes Google tools integration, plagiarism check, page load time & mobile friendly check and implementation of page title, meta description, header tags, internal linking, local schema setup, image alt and hyperlink optimization.
- Local Optimization: Once the content optimization is in place, we focus on building the local presence by submitting the business to top local search engines and directories including Google My Business, Bing Local, Apple Maps, Facebook and more.
- Monthly Link Building: With monthly link building via informational content posting, guest blogging, local citations and NAP enabled directory submissions, we rank the website for target keywords with an authoritative backlink portfolio.

Applicable Add Ons

- Call Tracking (Local No.), 1 Month: Additional work to optimize the website. $40 Monthly (TEMPORARILY FOR FREE)
- Call Tracking (Toll Free No.), 1 Month: Additional work to optimize the website. $40 Monthly (TEMPORARILY FOR FREE)

What you can Expect

- Customizable dashboard: Track your campaign progress and view campaign performance, with email notifications included.
- Affordable SEO with no obligation: LVPro offers comprehensive, high-quality, US-based SEO at reasonable prices. We’re so confident in our work and our pricing that we don’t have long term contracts!
- Experience and technology: We have helped over 45,000 businesses boost their search rankings and we manage over 5 million keywords. This experience, coupled with our industry-leading technology, gives us access to a vast amount of data that keeps us ahead of other SEO providers.
- Complete transparency and measurable results: LVPro’s powerful reporting dashboard shows where your SEO dollars are going.
- Campaign monitoring: LVPro monitors every SEO campaign to identify areas of improvement. We also provide automated weekly and monthly updates on campaign performance to ensure your goals are being met.

LVPro Reporting & Customer Dashboard Creation; Global Website Audit; Page Specific Audit - up to 30 Pages; 3 Competitors Analysis; Backlink Profile Analysis and Link Detox - Up to 150 Domains; Keyword Research, Grouping & URL Mapping; Website Duplicate Content Check - up to 30 Pages; Google Penalty Check; Website Form Conversion and Event Tracking - up to 6 Goals; Review Widget Installation to Generate Positive Reviews; Call Tracking (if included); HeatMap, ScrollMap, Overlay and Confetti Report Setup for Homepage; User Testing Video; Page Title & Meta Tags Creation - up to 30 Pages; 6 Website Content Writing (250 Words Per Page); Internal Linking, Image, Hyperlink and Heading Tag Optimization - up to 30 Pages; Image GEO Tagging - up to 30 Images; Homepage & 2 Landing Page Speed Reporting on Desktop & Mobile; Robots.txt Optimization; Page URL Canonicalization - up to 30 Pages; Adding Rich Snippets - up to 30 Pages; Voice Search Optimization; Schema Implementation (Local Business Information, Map, Image, Logo and Reviews) - up to 30 Pages; FAQ Schema Implementation; Google My Business (GMB) Listing Setup and Optimization with Description; GMB Website Embed - up to 30 Pages; 4 GMB Posts; 6 GMB Q&A’s; 5 GPS Listing Submissions; Google My Map Syndication with Driving Directions; Weekly SEO Status Reports; Month 1 SEO Performance Report.

6 Editorial / Guest Blog with Social Boost; 1 Press Release Writing, Distribution & Social Boost; 1 Infographic Creation, Distribution & Social Boost; 1 Brand Interview; 2 Q&A Posting; FAQ Page Content; Google Analytics, Search Console and Bing Webmasters Setup; Analytics Spam Filtering; Broken Link Fixing, GSC 404 Error Correction & 301 Page Redirection - up to 30 Pages; User HTML Sitemap Creation, Uploading & Linking; XML Sitemap Creation & Submission to Google & Bing; Local Citation Audit with NAP Inconsistency Fixing, Optimizing Existing Listings & Troubleshooting Duplicate Listings; Bing Local, Apple Maps & Facebook Local Listings; Yelp Advanced Optimization; 6 Authority Business Listings; 12 Industry Specific + 2nd Tier Local Citations; Website Social Boost - 6 URLs X 20 Sites; Promotional Video & Business Presentation - 2 Videos & PPTs X 10 sites; GEO Tagged Photo Submissions - 6 Photos X 5 Sites; 6 Social Network Citations; Local & Social Community Setup; 6 Coupon Distribution (if provided); Weekly SEO Status Reports; Month 2 SEO Performance Report.

6 Editorial / Guest Blogs with Social Boost; 6 Social Network Citations; 6 Authority Business Listings; 12 Industry Specific + 2nd Tier Local Citations; 4 GMB Posts; Continued SEO Enhancements to Improve SEO Performance; Bi-Monthly SEO KPI Audit; Bi-Monthly Content Gap Analysis, Backlink Gap Analysis & Keyword Opportunity Assessment; One Time User Experience Video; Weekly SEO Status Reports; Monthly SEO Performance Reports.

FAQ's

Once your order is successfully placed, we will assign you an account manager within 24 business hours who will be your primary point of contact and will reach out to you to get things started. Following the launch of the campaign on the dashboard, we will work on the deliverables as mentioned and reach out weekly and monthly with updates on progress and any changes required to help improve performance.

Local SEO targets local search specifically. This means that your audience is within a few miles of your business location and that your online presence is meant to drive foot traffic to an actual establishment. This is done through several methods including using geo-centric keywords, producing locally relevant content, building citations on local niches and directories, etc.

National SEO is location neutral. It’s ideal for websites that generate revenue exclusively online or are just looking to generate awareness through a wide audience. If you’re running a nationwide campaign or an e-commerce campaign, this is the perfect methodology for you.

Yes! As long as a business offers a viable product or service, SEO can move their website higher in search rankings and drive more sales.

LVPro provides SEO services for business owners across the globe. Supported languages are English (North America, UK, Australia) and Spanish.

Good SEO can’t be done overnight; it takes time. Many of our customers start to see positive momentum in 3-4 months. In fact, over 82% of our small business clients can reach the 1st page of Google after 6 months if the right conditions are met.

- Keyword Optimization: It takes a lot to rank well for a keyword, so we do exhaustive research to find the very best keywords for the business.
- Website Optimization: We perform a full-service cleanup as well as exhaustive upgrades to the website code and user experience.
- Business Profile Development: To make sure Google and prospective customers know that the business is active, we create and maintain up-to-date profiles on trusted online business directories.
- Link Portfolio Development: Get the word out about the business. We help by creating a diverse portfolio of links to the website and placing them in strategic locations all over the web: on popular industry sites, news sites, blogs, articles, and more.
- Custom Content Creation: We create and post high-quality content that will keep the website fresh and at the top of the search rankings and keep potential customers interested.
- Service and Performance Reporting: We provide reports that show businesses at a glance how their campaign is performing, as well as an in-depth review of all the work we’ve done on their behalf.
- Campaign Monitoring and SEO Consulting: Our goal is to help small businesses succeed online. To help achieve this we actively monitor every SEO campaign to identify areas for improvement. We also provide monthly consultations to review each campaign and ensure client goals are being met.

The keywords we suggest are unique to every business and are based on extensive research. We consider many factors including your specific products or services, industry, location, competition, and more. Our goal is to find the keywords that will provide the most impact for your business.

LVPro Local SEO is offered in 5 different packages to fit the needs and budgets of all small businesses. Our Local SEO packages are based on monthly pricing. Packages with higher pricing will include more activities, but all of our packages include dedicated client support and full transparency into the work being performed. Our LVPro pricing sheet provides complete details on pricing and tasks included in each package.

LVPro provides total transparency into the work that we perform. Each client will be given access to a custom reporting dashboard that shows every SEO action being performed both on and off their website. Clients will be able to track every action performed, as well as monitor keyword movement and overall performance. We also provide weekly and monthly updates for all SEO customers to review campaign activity and performance, ensuring that their goals are being achieved.

In most situations client support is handled through you, the reseller. Weekly and monthly campaign progress reports are delivered by LVPro to you and your clients via dashboard. Any client questions or concerns regarding their SEO campaign can be brought to LVPro by you, the reseller. We highly recommend using our dashboard for communication. For help selling to a potential client or any product related questions, you can reach out to us via email [email protected] For any active clients, you can contact your assigned account manager directly through dashboard conversation.

All Services rendered by LVPro are on a prepaid subscription basis. Depending on the amount of work completed at the time of cancellation, this may mean receiving a full refund, a partial refund, no refund, or owing additional fees. To cancel a service, you can reach out to your assigned account manager or our support team via email at [email protected]


7 Steps to Grow your Church Using SEO

The question for many pastors and church planters is, “How can I increase traffic to my church website?”

Instead of straining your budget and subjecting your church to costly SEO, here are seven simple tactics that will help your church’s website rise in the local Google search rankings.

Though these techniques are more for the DIY (do it yourself) crowd, if you wish to learn how search engine optimization (SEO) can help grow your church membership, you will want to get your free SEO analysis by completing the short form in the footer and we’ll be happy to follow up with what we can do to make sure you receive the maximum exposure possible online. Watch this short video to learn everything you need to know about SEO.


Seven tips for improving your church website rankings.

1. Distribute the power of your home page’s links to your most relevant main pages. Put simply, spread the link juice around your website.

As one could assume, your homepage draws in many more visitors than any other part of your website. It is important that link authority is easily directed from your homepage to your most important subpages. Your safest bet is plain HTML links.

Even if you have already made sure that your most crucial category pages are incorporated in the top nav, it is critical that you include links to your most prized products, articles (content) and landing pages. Footer and navigational links will not pass much authority; however, links in the body of a homepage are more likely to attract views and direct traffic to your important subpages. And always remember: where the viewer goes, so does Googlebot.

Tip: Create simple and straightforward links so that visitors can easily find their way around your website.

2. Use smaller images that will load faster. No one likes to carry more weight than they need to…

When designing a website, it is important to consider the file size and resolution of an image prior to including it on the webpage. Although it is tempting to put large images on a website for the sake of quality, they can drastically slow down the site’s load time.

An image with 600 dots per inch that was shrunk down using only the height and width attributes not only gives visitors the idea that the website designer was lazy, but also discourages them from using the website further. Remember, a slow website will time out on some devices and browsers, leading the visitor to click away, never to return. An image that is too large for the webpage can also significantly slow down the load time, hindering the visitor’s experience on the website. And as Google uses page load time as a ranking factor, this can and will affect your local search ranking.

Lucky for you, it’s incredibly simple to change that image to a smaller, more reasonable size, and then re-upload it. This will not only increase your website’s aesthetic appeal but also optimize your site speed.

Make sure to check the file sizes of your images prior to publishing your website. An easy tool to use is WebPageTest, which will check the file sizes of all the aspects of your page. Teach your design team to not upload images that are too large to your webpage. Checking images before including them on a website is a good habit to get into. A powerful tool for optimizing images is JPEGmini by Beamr.
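If your images sit in a folder on your computer before you upload them, you can also check their file sizes with a few lines of code. The Python sketch below lists every image above a size limit, largest first. The function name `oversized_images`, the 200 KB limit and the folder name in the usage note are assumptions for illustration; pick a limit that suits your own site.

```python
import os

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".webp"}


def oversized_images(folder, max_bytes=200_000):
    """Return (path, size) pairs for images above max_bytes, largest first."""
    results = []
    for root, _dirs, files in os.walk(folder):
        for name in files:
            extension = os.path.splitext(name)[1].lower()
            if extension in IMAGE_EXTENSIONS:
                path = os.path.join(root, name)
                size = os.path.getsize(path)
                if size > max_bytes:
                    results.append((path, size))
    return sorted(results, key=lambda item: item[1], reverse=True)
```

For example, `oversized_images("website-images")` would return the images inside a (hypothetical) website-images folder that should be resized before they go live.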

3. Ensure that other sites aren’t linking to pages that are returning a 404 message.

If URLs are returning a 404 error (dead link) on your website, it not only keeps the visitor from getting the information but also damages your site’s link authority. Google Search Console is a simple and reliable tool that allows you to check for 404 pages on your site and whether or not they are being linked to. If it reveals that you have an externally linked page that leads to a 404, it is crucial that you fix it immediately.

TIP: To check 404s using Google Search Console, just navigate to ‘Crawl’, then ‘Crawl Errors’, then ‘Not Found’, and select every URL that is returning a 404 error page.

Google prioritizes the errors, and for the most prominent ones it includes their external links. Click the URL and then select the “Linked From” tab, which will display the URLs linking to the 404s. Ensure that these dead URLs are permanently redirected to the correct page on your site.

As your link recovery skills develop, it is worth supplementing GSC with other helpful tools like Link Research Tools, the Ahrefs Broken Links report and Link Juice Recovery Tool. However, we’ll focus on more pressing matters right now.

4. Optimize videos, articles or microsites that aren’t on your home page. Web 2.0’s make for amazing “supporting sites” to your main website.

While videos, articles, and microsites are a fantastic way to gather support for your business, they are not nearly as effective if you’re hosting the content on other domains. You will lose the opportunity for higher SEO rankings if your content’s links are pointing to another business’s website.

For example, when Victoria’s Secret’s article “12 Things Women do Every Day That are Fearless” was posted on Buzzfeed’s front page, they missed the opportunity to increase traffic on their website because the article didn’t link back to their main webpage. Buzzfeed benefited, but Victoria’s Secret lost all that link juice that Buzzfeed was throwing off.

By far, the best way to increase your website’s ranking is to include videos, articles, and microsites on your website. From there, you can expand and find ways to direct even more traffic to your website through your most prominent products and landing pages.

One technique that is proving extremely powerful is to leverage site builders or so called web 2.0’s. Examples include Tumblr, Wiseintro, Wix, etc. The way this works is to build a microsite or landing page on one of these sites that serves as a feeder to your main site. Not only does it allow you to dedicate an entire site to a very narrow aspect or topic relating to what you do, but it allows you to benefit from the power of the domain. Essentially, Google gives you credit for having such a powerful “friend” connecting to your website.

5. Utilize social hubs and forums to discover important keywords prior to your competitors.

If you notice a persistent mention or question in social media, you can take advantage of the keyword before your competitors! Then, you can easily find a way to include it in your website and improve your SEO rank (score).

Discovering recurring keywords before your competitors will not merely improve your ranking, but give you a head start on the popular questions online consumers are likely to ask. Becoming the first to jump on popular keywords will set you up as a leader among other businesses.

The keywords you discover will not always directly relate to your product. A good example of this is a company who makes baby furniture including the phrase “baby names” in their website content. Even if the phrase is barely related to your product, if it is being searched by your direct target market it is a good idea to include it in your website plan. Learn how our brand authority service can grow your church.

6. Augment the “visual richness” of your SERP listing with intriguing (rich) snippets.

Snippets are the cherry on top of a delicious ice cream and hot fudge sundae. The sundae (your website) is already great; however, there’s always room for improvement. Leveraging snippets is one of my most recommended SEO strategies. Along with an enticing title tag and meta description, your snippet will help your listing stand out above all others.

For example, if you are interested in a safe and trustworthy crib for your newborn baby, you will be most interested in a listing that looks like it’s a remarkable product with great quality.

Every new snippet added to your website is a new opportunity for your business. Ratings on your website provide reliable reviews of your product from previous consumers. The “in stock” status and the price show online customers what they need to know in order to make a buying decision about your product.

Although rich snippets won’t directly increase your ranking, they will draw more potential customers to your website by increasing your click-through rate.
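Rich snippets such as ratings, price, and stock status are usually driven by structured data embedded in the page. As a minimal sketch, assuming schema.org Product markup in JSON-LD form, the following builds that markup for a product page. The product name, rating figures, and price are invented for illustration, and product_json_ld is a hypothetical helper, not part of any library.

```python
import json


def product_json_ld(name, rating, review_count, price, currency, in_stock):
    """Build schema.org Product markup as a JSON-LD string."""
    availability = ("https://schema.org/InStock" if in_stock
                    else "https://schema.org/OutOfStock")
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        # Star rating and number of reviews shown in the listing.
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
        # Price and "in stock" status.
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": availability,
        },
    }
    return json.dumps(data, indent=2)


# Hypothetical product; paste the printed tag into the page's HTML.
markup = product_json_ld("Example Convertible Crib", 4.7, 128, 249.0, "USD", True)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Search engines decide for themselves whether to display a rich result, so markup like this makes a listing eligible rather than guaranteeing one.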

7. Utilize link analysis techniques to decipher your competitors’ best links.

Although authoritative links are incredibly important when vying for high search rankings, obtaining them is a confusing process for many. Finding hubs (sites that link out to many websites on the same topic) is an easy way to get on track. Hubs can be review sites, trade magazines, citywide resource or review sites, or even blogs. An easy way to uncover these hub sites is with Majestic’s “Clique Hunter” tool or SEOprofiler. It shouldn’t be a big deal for hubs to link to you, because they are already linking to similar websites. In fact, many just need you to complete a short form on the site with your information, and your listing will be added within minutes, hours, or a few weeks at the longest.

An easy way to build your links is to search for websites linking to other churches in your area and analyze which of those sites may be willing to link to you too. From there, reach out to the sites that make the most sense to approach. With this technique, your competitors have already done the hard work of qualifying sites that might link to sites like yours, and you get the benefit.
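If you export backlink lists for a few competitors from your link analysis tool, the hub-finding step comes down to counting which domains link to several of them but not yet to you. The sketch below assumes the data has already been loaded into plain Python sets. The domain names are made up, and find_hubs is a hypothetical helper rather than a feature of Majestic or SEOprofiler.

```python
from collections import Counter


def find_hubs(competitor_backlinks, my_backlinks, min_competitors=2):
    """Return (domain, count) pairs for domains that link to at least
    `min_competitors` competitors and do not link to you yet."""
    counts = Counter()
    for domains in competitor_backlinks.values():
        # set() ensures a domain is counted once per competitor.
        counts.update(set(domains))
    hubs = [(domain, n) for domain, n in counts.items()
            if n >= min_competitors and domain not in my_backlinks]
    # Most widely shared hubs first, then alphabetical for stable output.
    return sorted(hubs, key=lambda item: (-item[1], item[0]))


# Hypothetical backlink exports for three churches in the same city.
competitor_backlinks = {
    "firstchurch.example": {"citydirectory.example", "localnews.example",
                            "faithblogs.example"},
    "gracechapel.example": {"citydirectory.example", "faithblogs.example",
                            "randomforum.example"},
    "hillsidechurch.example": {"citydirectory.example", "localnews.example"},
}
my_backlinks = {"localnews.example"}

for domain, n in find_hubs(competitor_backlinks, my_backlinks):
    print(f"{domain} links to {n} competitors")
```

The domains at the top of the list are the ones most worth contacting first.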

If your head is spinning, feel free to reach out and we can take a look at what will be required to get your church the massive exposure in your city that you deserve. Complete this short form to get started with a no obligation discussion.


Website Mistakes You’re Probably Making & Can Fix Quickly

Really bad website mistakes can hide even in really nice-looking websites. Chances are you’re making most of these mistakes, and you can identify and fix them now.

You’re here because you’re looking for ways to improve your website, right?

So, I’m guessing that there are some website mistakes that are keeping your site from:

• generating the traffic you want
• engaging visitors to keep them active
• generating the leads or sales you desire

Chances are that you’re having a hard time identifying what these issues are, because your site looks fantastic, right?

This is a common problem, partly because website design services, themes, and platforms for creating great-looking sites are so readily available. There’s nothing wrong with that, but it means that having a nice-looking site simply isn’t enough.

For many businesses a website is expected to be a (if not THE) primary source of revenue generation, and this simply can’t happen when website mistakes that leave the user out of the equation are happening.

People forget that if the website is not user-friendly, visitors will bounce.

As you reassess your website for these common mistakes, consider how it will funnel sales and how you want it to accomplish that. Don’t lose sight of the fact that the primary purpose and ultimate goal is to attract visitors to take action.

Let’s take a look at the website mistakes most businesses are making and how to correct them.

Website Mistake #1 – Poor or No Responsiveness

Although everyone in the online field knows the significance of having a responsive website design, many business owners don’t know to prioritize it.

Responsive design increases the accessibility of your website across devices, such as smartphones, tablets, and desktops.

Even Google judges it as a critical factor in determining the performance and quality of the platform. There are three configurations one can consider using to be mobile friendly.

Separate URLs: A desktop website and a mobile site are built separately, each with its own URLs.

Dynamic serving: Pages open at the same URLs, but their HTML and CSS vary depending on the type of device the visitor is using.

Responsive web design: Pages open on all devices with the same set of URLs and the same HTML, but with different CSS styles applied to display the page correctly. CSS media queries are the technique that adapts the page to the device.

When determining what is best for your business, keep in mind what Google says in its developer guide:

We recommend using responsive web design because it:

Makes it easier for users to share and link to your content with a single URL.

Helps Google’s algorithms accurately assign indexing properties to the page rather than needing to signal the existence of corresponding desktop/mobile pages.

Requires less engineering time to maintain multiple pages for the same content.

Reduces the possibility of the common mistakes that affect mobile sites.

Requires no redirection for users to have a device-optimized view, which reduces load time. Also, user agent-based redirection is error-prone and can degrade your site’s user experience (see Pitfalls when detecting user agents for details).

Saves resources when Googlebot crawls your site. For responsive web design pages, a single Googlebot user agent only needs to crawl your page once, rather than crawling multiple times with different Googlebot user agents to retrieve all versions of the content. This improvement in crawling efficiency can indirectly help Google index more of your site’s content and keep it appropriately fresh.

Website Mistake #2 – Hard to Scan Content

On average, a visitor reads only about 28% of the content on a web page. Hence, it’s critical to make your content easy to scan.

You don’t have to cut down your information. Instead, focus on its proper presentation through sub-heads, short paragraphs, highlighted formatting, bullets & numbers, etc. This kind of approach can help you increase the consumption rate of your content among people.

Check out this tutorial on how to format your website content the right way.

Website Mistake #3 – Poor Graphics and Photos

Most businesses need to resort to the use of stock photos to convey the essence of their content to their readers. Photos are great because they serve as visual clues for the reader to help understand the points being made.

But using generic stock photos can be damaging as they can obscure the message.

It’s always better to have original images. If budget, time, or resources are a challenge, you can always use stock photos; just make sure to be prudent with them. The photos that you select should either answer your visitor’s questions through a story or teach and show. The images have to fulfill one of these two rules. Otherwise, you could just be wasting your efforts, time, and space.

In this context, it is also necessary to note that images and graphics have to be responsive. Their shapes and sizes should adapt to the screen size.

Website Mistake #4 – Unclear Navigation Menu

The navigation menu and the breadcrumb trail are two essential things that help users explore your platform without any confusion. However, if the navigation menu is not visible or distinct from other visual elements, users can get lost on your website.

We’ve all been on one of those websites where you get lost chasing a piece of content never to find your way back to any sort of organized category.

Ultimately, you end up just exiting. But if the navigational components are precise, visitors can focus on your platform and spend some time there.

Some companies get extra experimental with their ideas and introduce moving, bouncing, rolling, or other animated navigation designs. But beware of these creative mistakes. Users can accidentally click them and leave the page from which you could drive a conversion. You don’t want these kinds of mistakes on your website.

Website Mistake #5 – Carousels on homepage

I’m one of those designers who has mixed feelings about home page carousels, because the real estate they occupy is so valuable. That being said, they can still create issues for the user.

The fact is that only 1% of site visitors actually click on a slide, and it’s almost always the first slide.

People can also easily mistake them for ads and avoid them altogether. If either of these things happens, you’re ultimately killing the effectiveness of your home page. Important content gets pushed down and, more importantly, missed. Your website visitors can feel distracted or annoyed at not being able to see what they wanted to see. Consequently, they may not convert.

Aside from that, image carousels play a big part in slow loading. Other common design mistakes include clumsy fonts, 404 errors, missing favicons, and the absence of necessary design elements. If you’re looking for more scientific proof that sliders damage user experience and work against your goals, you’ll want to take a look at this article by Yoast.

Techniques to Improve Website Design Mistakes

Extensive planning: Cover your buyer’s journey in your website design with proper planning – where they go first, what they read, what converts them, etc.

Social share and follow buttons: These non-pushy tools help your visitors quickly share your content on their social media accounts. You can use them as a good source of traffic.

Calls-to-action: Include call-to-action buttons at the right places to guide your users on the next step.

Images: Choose website photos smartly. These should look genuine and apt for your theme.

Navigation: Content layout, responsive design, and navigation bars are essential for improving the user experience on your website.

Expansive homepage: You can make your home page longer with a suitable value proposition, intro video, products & services, etc.

White space: It makes your website more readable by giving each design element room to stand out.

SEO: Weave in SEO techniques from the development stage of the website for excellent optimization and results.

Testing: Use A/B tests, heat maps, or multivariate tests to find out which elements are working with users and which are not. It can make your updating and upgrading job easy.
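For the testing item above, it helps to check whether a difference between two page versions is likely real or just noise. The sketch below uses a standard two-proportion z-test, which is one common way to read an A/B test and not necessarily what any particular testing tool does. The visitor and conversion numbers are invented, and ab_test is a hypothetical helper name.

```python
import math


def ab_test(conv_a, visitors_a, conv_b, visitors_b):
    """Compare two conversion rates with a two-proportion z-test.

    Returns (rate_a, rate_b, z, p_value), where p_value is two-sided.
    """
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        raise ValueError("Cannot test when nobody or everybody converted.")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical results: version A (old button) vs. version B (new button).
rate_a, rate_b, z, p = ab_test(conv_a=100, visitors_a=1000,
                               conv_b=150, visitors_b=1000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p:.4f}")
```

A small p-value (0.05 is a common, somewhat arbitrary cutoff) suggests the change in conversion rate is unlikely to be chance alone.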

For any business, creating a successful website can mean a lot of work. But if you don’t want to miss out on leads and conversions, identifying and correcting these common website mistakes will make a huge impact. If you need help, then choose a reputable web design company for assistance.

After all, your website should be a solution and not just a placeholder for ineffective content.


Dominate SERP With This Proven SEO Advice

Search engines operate in a strange new world. They are often pictured as spiders crawling through cyberspace, picking up “keywords” and adding them to an index of identifying words. They use these words to direct searchers to sites. Search engines run on artificial intelligence that differs from our own. The following tips are designed to help you bridge that gap and help your business gain a valuable friend in these search engine “spiders.”

When setting up site SEO, don’t forget about your site’s URL. Having a domain is better than a subdomain, if you can set one up. Also, any URL longer than about 10 words risks being classified as spam. You want about 3 to 4 words in the domain and no more than 6 or 7 in the page name.

A great way to optimize your site for search engines is to use internal links, which are links from one page of your site to another. They make it easier for customers and other viewers to find their way around, and they will end up boosting the amount of traffic you get.

Plan your website so that the structure is clean and you avoid going too deeply into directories. Every page you write for your website should be no more than three clicks away from the homepage. People, and search engines, like to find the information they are looking for, quickly and easily.
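The three-click rule can be checked with a breadth-first search over your internal links, starting from the homepage. This is a minimal sketch that assumes you already have a map of which pages link to which (for example, from a crawl of your own site). The page paths and the function names click_depths and too_deep are hypothetical.

```python
from collections import deque


def click_depths(links, home="/"):
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, home="/", max_clicks=3):
    """List reachable pages that need more than `max_clicks` clicks."""
    depths = click_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)


# Hypothetical site structure: page -> pages it links to.
site_links = {
    "/": ["/products/", "/blog/"],
    "/products/": ["/products/cribs/"],
    "/products/cribs/": ["/products/cribs/oak/"],
    "/products/cribs/oak/": ["/products/cribs/oak/reviews/"],
    "/blog/": [],
}
print(too_deep(site_links))
```

Pages that no other page links to never appear in the result, so they are worth checking separately.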

You can bring in new traffic to your web site by posting on forums in your chosen niche. Most forums allow links either in your signature area or on your profile, but read the rules first. When you comment on the forums, make sure your comments are helpful. Answer questions, ask pertinent questions, be friendly and have fun.

Before venturing into the world of optimizing your search engine results, it can be beneficial to learn the lingo. Many terms such as HTML and SERP will come up regularly, and understanding them can be a huge benefit as you grow your page hits. There are many books and websites to help you learn the lingo fast.

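Decide whether or not you want to use a link farm. Link farms are sites without content that just have thousands of links. They are generally seen as a negative thing, and search engines treat them as spam: Google’s guidelines classify them as link schemes, and sites that rely on them risk being penalized or dropped from the results. Any rise in the ranks they provide tends to be short-lived. Weigh that risk before deciding what is most important to you: rapport with other sites, or a quick bump in search engine rankings.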

Audit click-through patterns to see how your customers end up buying (or not). There is software that will track every click visitors make. If you see that a certain page is leading many customers to a purchase, consider making it more prominent on your site and using similar language on other pages of your site.

A good rule of thumb to follow for ultimate search engine optimization is to never change or retire a page URL without providing a 301 redirect to the updated page. The infamous “404 page not found” error is the worst page that can be displayed for your site, so avoid it by implementing a 301 redirect.
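How you set up a 301 redirect depends on your server or CMS, but the underlying logic is the same everywhere: look up the retired URL and answer with status 301 plus the new location. The sketch below shows that logic using Python’s built-in http.server module. The paths in REDIRECTS and LIVE_PAGES are made up, and resolve is a hypothetical helper. It illustrates the idea and is not a replacement for your host’s redirect settings.

```python
from http.server import BaseHTTPRequestHandler

# Hypothetical map of retired URLs to their replacements.
REDIRECTS = {
    "/old-services.html": "/services/",
    "/2015/contact-us": "/contact/",
}
# Hypothetical set of pages that still exist.
LIVE_PAGES = {"/", "/services/", "/contact/"}


def resolve(path):
    """Return (status_code, new_location) for a requested path."""
    if path in REDIRECTS:
        return 301, REDIRECTS[path]  # moved permanently
    if path in LIVE_PAGES:
        return 200, None
    return 404, None  # no page and no redirect defined


class RedirectHandler(BaseHTTPRequestHandler):
    """Minimal handler that sends only the status line and headers."""

    def do_GET(self):
        status, location = resolve(self.path)
        self.send_response(status)
        if location:
            # The Location header tells browsers and crawlers where to go.
            self.send_header("Location", location)
        self.end_headers()


print(resolve("/old-services.html"))
```

To try it locally, the handler can be passed to http.server.HTTPServer, for example HTTPServer(("localhost", 8000), RedirectHandler).serve_forever().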