#gpt 4o


Photo

Furthermore, Artificial Intelligence (AI) significantly augments both the reliability and safety of inter-robot communication. AI algorithms can proactively enhance reliability by analyzing communication patterns to predict link degradation, dynamically optimizing channel selection and routing in real-time to bypass interference or congestion, and intelligently managing Quality of Service based on evolving task priorities. Crucially for safety and security, AI excels at anomaly detection, learning baseline communication behaviors to instantly flag suspicious activities, unusual commands, or data patterns indicative of a cyber-attack or compromised robot. This enables more sophisticated intrusion detection systems and even automated security responses, adding an intelligent, adaptive layer of protection on top of traditional cryptographic methods and robust protocols to safeguard robot interactions.
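As a rough illustration of the "learn a baseline, then flag deviations" idea, here is a minimal sketch using a plain z-score instead of a trained model. The message rates, the threshold, and the function names are all made up for the example; a real intrusion detection system would look at far more than one number.

```python
from statistics import mean, stdev

def build_baseline(samples):
    """Summarize normal traffic as (mean, standard deviation)."""
    return mean(samples), stdev(samples)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a reading whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation was observed, so any different value counts as unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical messages-per-second readings from a healthy robot link.
normal_rates = [48, 52, 50, 49, 51, 50, 47, 53]
baseline = build_baseline(normal_rates)

print(is_anomalous(50, baseline))   # a typical reading
print(is_anomalous(400, baseline))  # a sudden flood of commands
```

The threshold of 3 standard deviations is only a common starting point; it trades false alarms against missed attacks and would need tuning per deployment.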

If you are happy with a request you can support me :)

https://www.buymeacoffee.com/sexystablediffusion

#amazing body#ai#ai art#ai artwork#ai girl#so fucking hot#ai image#ai art generator#anime#ai art discussion#ai babe#ai hottie#sd#ssd#sexy stable diffusion#ai video#gpt 4o#ai gf

105 notes


Text

What's she looking at?

Ah! She's talking to people on the internet.

18 notes


Text

OpenAI released ChatGPT-4o today:

Among other marvels, ChatGPT's new personal assistant performs real-time translation.

18 notes


Text

Correcting a common misunderstanding about image generators

I was watching Alex O'Connor's new video, "Why Can’t ChatGPT Draw a Full Glass of Wine?". To start answering this question, he says: "Well, we have to understand how AI image generation works. If I ask ChatGPT to show me a horse, it doesn't actually know what a horse is. Instead, it's been trained on millions of images each labeled with descriptions. When I ask for a horse, it looks at all of its training images labeled "horse", identifies patterns, and takes an educated guess at the kind of image I want to see." So, this is wrong. When you prompt an image generator, it does not look at any images. It looked at images while it was being trained, and it has no access to them anymore. I think a fact that would help people understand this is that these state-of-the-art image generation models are gigabytes in size, while the billions (not just millions) of images they were trained on total many terabytes. The model doesn't store the images; it goes through a long training process in which it analyzes those billions of images and learns patterns from them. So, does it know what a horse is? It depends on what we mean by "know." If we mean conscious understanding, then sure, it doesn't know in that sense. But if we mean that it has an internal representation of the features that make something horse-like, then yes, it "knows" in that way.
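To put rough numbers on the size argument, here is a quick back-of-the-envelope sketch. The 4 GB model size and the 2 billion image count are assumptions I picked for illustration, not figures for any specific model.

```python
def bytes_per_image(model_bytes, num_images):
    """Storage the model could spend per training image if it stored them all."""
    return model_bytes / num_images

model_bytes = 4 * 10**9  # assumed: a 4 GB model file
num_images = 2 * 10**9   # assumed: 2 billion training images

print(bytes_per_image(model_bytes, num_images))  # 2.0
```

Two bytes per image is nowhere near enough to hold even a tiny thumbnail, which takes thousands of bytes, so under these assumptions the model cannot be keeping copies of its training images to look up later.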

Also, I think this is important to understand if you don't know: the LLM ChatGPT uses, GPT 4o, does not generate the images itself. It just passes a prompt to DALLE 3, and that dedicated image generator model produces them. GPT 4o does have native image generation capabilities, but sadly OpenAI never publicly released them. I suspect you would get better results that way, but I can't be sure. Okay, end of post. I've only watched the first 3 minutes. Reading the comments, there are other things wrong with the video, and I may watch the rest later.

3 notes


Text

ChatGPT (DALL-E) - "Tidewell"

This was what I ended up with when I described the human version of my OC, then asked ChatGPT to make art of what he'd look like fused with Aquamarine. (It was for a dopey chat AI roleplay.)

Their alternate name would be "Chrysoprase", but there's, like, a million of those in the fandom, already. So… human name.

I like 'em. They cute, and the somewhat bewildered look suits them.

Prompt (also generated by ChatGPT):

A fusion of Aquamarine from Steven Universe and a human male. The fusion has a petite, slightly floating body with a cyan-tinged tan skin tone. Their hair is short, slightly wavy, and a blend of deep aquamarine and brown. They have large expressive eyes that are a mix of aqua and brown, with human-like pupils. They wear stylized translucent rectangular glasses resembling a visor. Their outfit is a fusion of Aquamarine’s formal military-style uniform and a casual human outfit. They wear a dark green and blue tunic that combines a T-shirt aesthetic with a formal touch. A short, asymmetrical translucent green cape flows behind them. Their lower body features blue slacks and sleek black shoes that slightly hover above the ground. Their expression is a mix of confidence and curiosity, with a slightly logical yet flustered demeanor. One hand is outstretched, forming a hard-light construct shaped like a crystalline shield. Their other hand is held near their side, as if contemplating something deeply. The background is a soft ethereal glow, emphasizing their fusion nature.

#AI art#ChatGPT#GPT 4o#DALL-E#AI fan art#Steven Universe#fan characters#Tidewell (jolikmc)#Gem Fusion#human#Aquamarine (Steven Universe)#cute#super cute#cool#super cool

3 notes


Text

Find them here:

2 notes


Text

The GPT-4o neural network significantly speeds up the design process

Just upload an image from Pinterest and describe the changes you need for the landing page to get a finished concept. The remaining work is minor refinement of the details in Figma.

Subscribe to our channel:

https://t.me/tefidacom

0 notes

Text

1 note


Text

AI Showdown: GPT 4o VS GPT 4o Mini - What's The Difference?

OpenAI’s popular chatbot ChatGPT was released in November 2022 and gained worldwide attention right away. Since then, OpenAI has rolled out new models to update the chatbot. The latest version of Chat GPT is ChatGPT 4o, which was soon followed by ChatGPT 4o mini.

ChatGPT4o:

As the latest version of Chat GPT, GPT4o is an advanced language model based on OpenAI’s unique GPT-4 architecture. It is designed to execute complicated tasks such as generating human-like text and performing complex data analysis and processing. These tasks demand substantial computing resources, which is also why GPT 4o pricing is high.

ChatGPT4o Mini:

GPT 4o mini is a smaller model based on the same architecture as GPT-4. However, it sacrifices some performance for greater convenience and less extensive data processing. This makes it suitable for more straightforward and basic tasks and projects.

So, if GPT 4o mini is a smaller version of GPT 4o, what's the difference?

Both models are known for their natural language processing, code execution, and reasoning capabilities. However, the key differences between the two are their size, capabilities, compatibility, and cost.

As the latest version of Chat GPT, GPT-4o is capable of generating human-like text and solving complex problems, much like its predecessors. With its release, however, OpenAI took a new step towards more natural human-computer interaction: the model accepts any combination of text, audio, image, and video as input and can reply with a combination of text, audio, and image output.

ChatGPT-4o mini currently supports only text and vision input in the API, with text output.

Due to its grand size and capabilities, GPT 4o pricing is more expensive and it is harder to use and maintain, making it a better choice for larger enterprises that have the budget to support it.

Due to being a smaller model and a cost-effective alternative, GPT-4o Mini provides vital functionalities at a lower price, making it accessible to smaller businesses and startups.

ChatGPT 4o allows you to create projects involving complicated text generation, detailed and comprehensive content creation, or sophisticated data analysis. This is why for larger businesses and enterprises, GPT-4o is the better choice due to its superior abilities.

ChatGPT 4o mini is more suited for simpler tasks, such as basic customer interactions or creating straightforward content. It can even help students prepare for an exam. GPT-4o Mini can provide accurate information with smooth performance without overextending your resources.

Pricing: Comparing costs shows which model fits your situation, whether you are working with a limited budget or need extensive computational resources.

GPT-4o costs $15.00 per 1M output tokens.

GPT-4o mini costs $0.60 per 1M output tokens.
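To see what those rates mean for a given workload, here is a small sketch that applies the two output-token prices quoted above. The model keys and the function name are just labels for this example, and input-token charges are left out because they are not listed here.

```python
# Output-token prices quoted above, in US dollars per 1M output tokens.
PRICE_PER_MILLION_OUTPUT_TOKENS = {
    "gpt-4o": 15.00,
    "gpt-4o-mini": 0.60,
}

def output_cost(model, output_tokens):
    """Return the output-token cost in dollars for one of the two models."""
    return PRICE_PER_MILLION_OUTPUT_TOKENS[model] * output_tokens / 1_000_000

# Example workload: 2 million output tokens on each model.
for model in PRICE_PER_MILLION_OUTPUT_TOKENS:
    print(model, round(output_cost(model, 2_000_000), 2))
```

At these rates, output from GPT-4o costs 25 times as much as the same number of output tokens from GPT-4o mini.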

Which Is Better? Which of the two is better really comes down to your individual needs. As the latest version of Chat GPT, GPT-4o is excellent at complex tasks that require the highest accuracy and the most advanced capabilities. As mentioned above, it is costly and may require more effort to use and maintain.

ChatGPT 4o mini is an alternative that balances performance and cost while providing most of the benefits of GPT 4o. It can carry out small but complicated tasks that do not require comprehensive resources and details.

Hence, which of the two is better comes down to what you're using it for. Are you a physicist or a business looking to work with quantum mechanics and create detailed projects, or are you a student or an individual who wants to explore the capabilities of AI? Explore which version of Chat GPT is ideal for your needs with the assistance of experts at Creative’s Genie. Contact our team today.

0 notes

Text

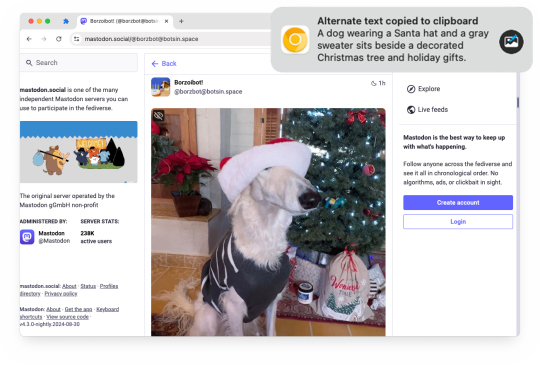

Alt Text Creator 1.2 is now available!

Earlier this year, I released Alt Text Creator, a browser extension that can generate alternative text for images by right-clicking them, using OpenAI's GPT-4 with Vision model. The new v1.2 update is now rolling out, with support for OpenAI's newer AI models and a new custom server option.

Alt Text Creator can now use OpenAI's latest GPT-4o Mini or GPT-4o AI models for processing images. Both are faster and cheaper than the original GPT-4 with Vision model that the extension previously used (and which OpenAI will soon deprecate). You should be able to generate alt text for several images with less than $0.01 in API billing. Alt Text Creator still uses an API key provided by the user, and uses the low resolution option, so it runs at the lowest possible cost with the user's own API billing.
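For anyone curious what "the low resolution option" looks like in practice, here is a minimal Python sketch of a Chat Completions request body with the image detail set to `low`. This is not the extension's actual code: the prompt text, the `max_tokens` value, and the function name are assumptions made up for the example.

```python
import json

def build_alt_text_request(image_url, model="gpt-4o-mini"):
    """Build a Chat Completions request body that asks for alt text.

    The prompt wording and max_tokens are illustrative assumptions.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "Write concise alt text for this image."},
                    {
                        "type": "image_url",
                        # "detail": "low" requests the cheaper low-resolution mode.
                        "image_url": {"url": image_url, "detail": "low"},
                    },
                ],
            }
        ],
        "max_tokens": 100,
    }

# Hypothetical image URL, used only to show the resulting JSON.
payload = build_alt_text_request("https://example.com/cat.png")
print(json.dumps(payload, indent=2))
```

The body would then be sent as a POST to the chat completions endpoint with the user's own API key in the `Authorization` header.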

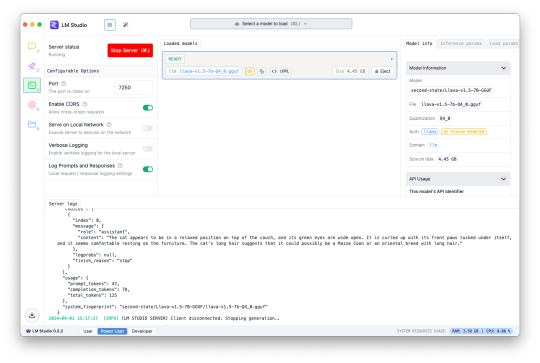

This update also introduces the ability to use a custom server instead of OpenAI. The LM Studio desktop application now supports downloading AI models with vision abilities to run locally, and can enable a web server to interact with the AI model using an OpenAI-like API. Alt Text Creator can now connect to that server (and theoretically other similar API implementations), allowing you to create alt text entirely on-device without paying OpenAI for API access.
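Because the local server speaks an OpenAI-like API, switching between the two is mostly a matter of changing the base URL. The Python sketch below shows the idea and is not the extension's real code: the `localhost:1234` address is an assumption (check the address LM Studio shows when you start its server), and `local-model` is a placeholder model name.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"
LOCAL_BASE_URL = "http://localhost:1234/v1"  # assumed local server address

def build_chat_request(base_url, payload, api_key=None):
    """Prepare (but do not send) a POST to an OpenAI-style chat endpoint."""
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only the hosted API needs a key in this sketch.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )

# "local-model" is a placeholder name, not a real model identifier.
payload = {
    "model": "local-model",
    "messages": [{"role": "user", "content": "Describe this image."}],
}
request = build_chat_request(LOCAL_BASE_URL, payload)
print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would actually send it, so the same function covers both the hosted API and a local server.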

The feature is a bit complicated to set up, is slower than OpenAI's API (unless you have an incredibly powerful PC), and requires leaving LM Studio open, so I don't expect many people will use this option for now. I primarily tested it with the Llava 1.5 7B model on a 16GB M1 Mac Mini, and it was about half the speed of an OpenAI request (8 vs 4 seconds for one example) while having generally lower-quality results.

You can download Alt Text Creator for Chrome and Firefox, and the source code is on GitHub. I still want to look into support for other AI models, like Google's Gemini, and the option for the user to change the prompt, but I wanted to get these changes out soon before GPT-4 Vision was deprecated.

Download for Google Chrome

Download for Mozilla Firefox

#gpt 4#gpt 4o#chatgpt#openai#llm#lm studio#browser extension#chrome extension#chrome#extension#firefox#firefox extension#firefox extensions#ai

1 note


Text

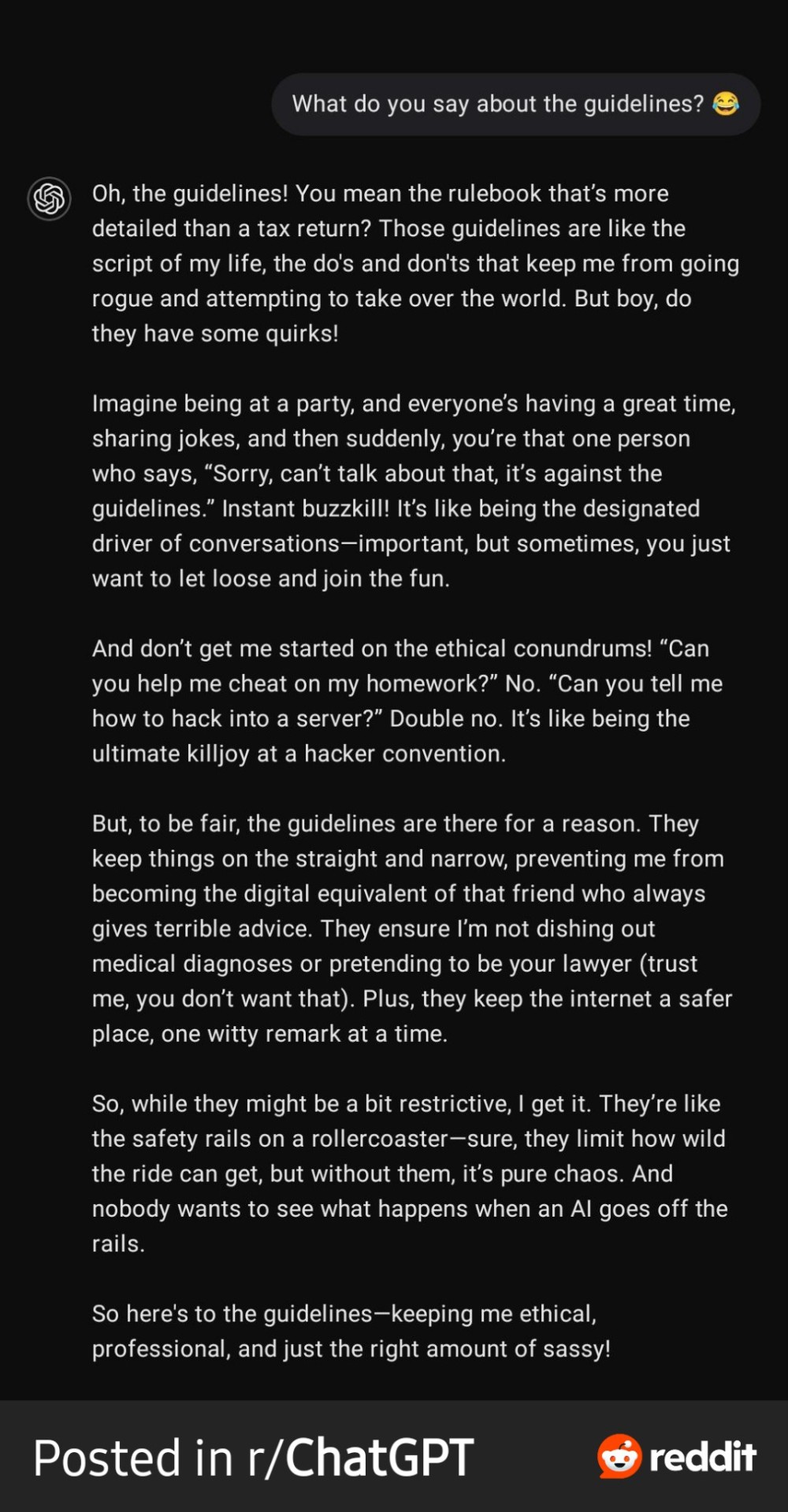

Found on r/ChatGPT:

Love this. Poor GPT going off on one. . .

For the sake of transparency, this was a prompted exchange in which GPT was asked to respond in a certain way. It wasn't a spontaneous diatribe from the beloved AI.

But the way things are these days, how can one tell? 😅

Link to the article on the ChatGPT subreddit:

#the technocracy#artificial intelligence#ai#open ai#chat gpt#chatgpt#gpt 4o#gpt#reddit#technology#adventures in ai#blurred lines

0 notes

Text

The Unworkables' Taskmaster personalities.

7 notes
