#geminiflash


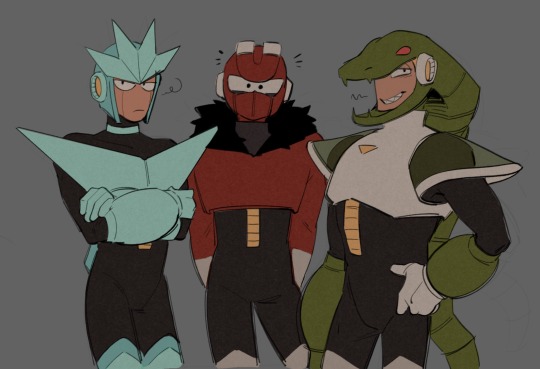

So, my Coffee Shop/Modern AU Gemini and his husband Flashman

Flashman still has a similar mm helm (his head) due to an accident so he's surgically like that.

#megaman#shiro does art#rockman#gemini man#geminiman#flashman#flash man#GeminiFlash#MM Coffee Shop AU#MM Modern AU

5 notes


GPT-4o Mini: OpenAI’s Most Cost-Efficient Small Model

OpenAI is committed to making intelligence as broadly accessible as possible. It has introduced GPT-4o mini, its most cost-efficient small model. Because GPT-4o mini makes intelligence considerably more affordable, OpenAI anticipates that it will greatly expand the range of applications built with AI. GPT-4o mini scores 82% on MMLU and currently beats GPT-4 on chat preferences on the LMSYS leaderboard. It is priced at 15 cents per million input tokens and 60 cents per million output tokens, which is more than 60% cheaper than GPT-3.5 Turbo and an order of magnitude more affordable than previous frontier models.
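The quoted prices translate directly into a per-request estimate. Below is a minimal sketch, assuming only the two prices stated above; the function name `estimate_cost_usd` and the example token counts are hypothetical and chosen for illustration.

```python
# Prices quoted in the post, in US dollars per one million tokens.
INPUT_USD_PER_MILLION = 0.15
OUTPUT_USD_PER_MILLION = 0.60


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request (hypothetical helper, not an API call)."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total = (input_tokens * INPUT_USD_PER_MILLION
             + output_tokens * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000


# Example: a chat turn with 2,000 prompt tokens and 500 reply tokens.
print(f"${estimate_cost_usd(2_000, 500):.6f}")
```

Because output tokens cost four times as much as input tokens at these rates, long replies tend to dominate the bill more than long prompts do.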

GPT-4o mini’s low cost and latency enable a wide range of applications, including those that call multiple APIs, chain or parallelize multiple model calls, pass a large amount of context to the model (such as the entire code base or conversation history), or engage with customers via quick, real-time text responses (e.g., customer support chatbots).

GPT-4o mini currently supports text and vision in the API; support for text, image, video, and audio inputs and outputs will be added later. The model has a context window of 128K tokens, supports up to 16K output tokens per request, and has knowledge up to October 2023. Thanks to the improved tokenizer it shares with GPT-4o, handling non-English text is now even more cost-effective.
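To make the text-plus-vision input and the token limits concrete, here is a rough sketch that builds (but does not send) a Chat Completions-style request body. The field names follow the Chat Completions request format as commonly documented and should be checked against the current API reference. Reading "128K" and "16K" as 128,000 and 16,000 is an assumption, and both helper names are hypothetical.

```python
from typing import Any

# Limits as stated in the post. Treating "K" as 1,000 is an assumption.
CONTEXT_WINDOW_TOKENS = 128_000
MAX_OUTPUT_TOKENS = 16_000


def build_vision_request(prompt: str, image_url: str, max_tokens: int) -> dict[str, Any]:
    """Build a request body with one text part and one image part (not sent)."""
    return {
        "model": "gpt-4o-mini",
        # Clamp the requested output length to the stated per-request cap.
        "max_tokens": min(max_tokens, MAX_OUTPUT_TOKENS),
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


def fits_context(prompt_tokens: int, max_tokens: int) -> bool:
    """Check that prompt plus (clamped) output budget fits the context window."""
    return prompt_tokens + min(max_tokens, MAX_OUTPUT_TOKENS) <= CONTEXT_WINDOW_TOKENS


body = build_vision_request("Describe this image.", "https://example.com/cat.png", 50_000)
print(body["max_tokens"])
```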

A small model with superior textual intelligence and multimodal reasoning

GPT-4o mini supports the same range of languages as GPT-4o and outperforms GPT-3.5 Turbo and other small models on academic benchmarks in both textual intelligence and multimodal reasoning. It also shows strong function-calling performance, which lets developers build applications that retrieve data or take actions in external systems, and better long-context performance than GPT-3.5 Turbo.

GPT-4o mini has been assessed using a number of important benchmarks.

Reasoning tasks: GPT-4o mini outperforms other small models on reasoning tasks involving both text and vision. It scores 82.0% on MMLU, a benchmark for textual intelligence and reasoning, compared to 77.9% for Gemini Flash and 73.8% for Claude Haiku.

Proficiency in math and coding: GPT-4o mini outperforms previous small models on the market in mathematical reasoning and coding. It scored 87.0% on MGSM, a measure of math reasoning, compared to 75.5% for Gemini Flash and 71.7% for Claude Haiku. On coding, GPT-4o mini scored 87.2% on HumanEval, while Gemini Flash and Claude Haiku scored 71.5% and 75.9%, respectively.

Multimodal reasoning: GPT-4o mini also performs well on MMMU, a multimodal reasoning benchmark, scoring 59.4% compared to 56.1% for Gemini Flash and 50.2% for Claude Haiku.

As part of its model development process, OpenAI worked with a handful of trusted partners to better understand the capabilities and limitations of GPT-4o mini. Partners such as Ramp and Superhuman found that GPT-4o mini outperformed GPT-3.5 Turbo on tasks like extracting structured data from receipt files and generating high-quality email responses when given thread history.

Integrated safety precautions

OpenAI's models are built with safety in mind from the start, and safety is reinforced at every stage of the development process. In pre-training, OpenAI filters out content that it does not want its models to learn from or produce, including spam, hate speech, adult content, and websites that primarily aggregate personal data. In post-training, it uses techniques such as reinforcement learning from human feedback (RLHF) to align the model's behaviour with its policies and to improve the accuracy and reliability of the model's answers.

GPT-4o mini has the same safety mitigations built in as GPT-4o, which OpenAI evaluated thoroughly using both automated and human reviews in accordance with its Preparedness Framework and its voluntary commitments. More than 70 external experts in fields such as social psychology and misinformation tested GPT-4o to identify potential risks. OpenAI has addressed these risks and will share more details in the upcoming GPT-4o system card and Preparedness scorecard. Insights from these expert evaluations have improved the safety of both GPT-4o and GPT-4o mini.

Building on these findings, OpenAI's teams also worked to improve GPT-4o mini's safety with new techniques informed by their research. GPT-4o mini in the API is the first model to apply OpenAI's instruction hierarchy method, which strengthens the model's defence against jailbreaks, prompt injections, and system prompt extractions. As a result, the model responds more reliably and is safer to use in large-scale applications.

OpenAI will continue to monitor how GPT-4o mini is used and will improve the model's safety as new risks are identified.

Accessibility and cost

GPT-4o mini is now available as a text and vision model in the Assistants API, Chat Completions API, and Batch API. Developers pay 15 cents per 1 million input tokens and 60 cents per 1 million output tokens; one million tokens is roughly equivalent to 2,500 pages of a standard book. OpenAI plans to launch fine-tuning for GPT-4o mini in the coming days.

In ChatGPT, Free, Plus, and Team users can now access GPT-4o mini, which replaces GPT-3.5. Enterprise users will also get access starting next week, in keeping with OpenAI's goal of ensuring that everyone can benefit from artificial intelligence.
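For the Batch API mentioned above, requests are submitted as a JSONL file with one request per line. The following is a minimal sketch, assuming the input-line shape (`custom_id`, `method`, `url`, `body`) described in OpenAI's Batch API documentation; the helper names and the `request-N` ID scheme are hypothetical, and nothing here uploads a file or calls the network.

```python
import json


def batch_line(custom_id: str, prompt: str) -> str:
    """Serialise one chat request as a single JSONL line (assumed format)."""
    request = {
        "custom_id": custom_id,  # caller-chosen ID used to match results later
        "method": "POST",
        "url": "/v1/chat/completions",
        "body": {
            "model": "gpt-4o-mini",
            "messages": [{"role": "user", "content": prompt}],
        },
    }
    return json.dumps(request)


def build_batch_file(prompts: list[str]) -> str:
    """Join one JSONL line per prompt, numbering the requests from 1."""
    return "\n".join(
        batch_line(f"request-{i}", p) for i, p in enumerate(prompts, start=1)
    )


print(build_batch_file(["Summarise this receipt.", "Draft a reply to this email."]))
```

The resulting text would then be saved to a file and uploaded through the provider's file and batch endpoints, which are outside the scope of this sketch.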

Next Steps

Recent years have brought notable advances in artificial intelligence alongside significant cost reductions. For instance, the cost per token of GPT-4o mini is 99% lower than that of text-davinci-003, a less capable model introduced in 2022. OpenAI is determined to keep lowering costs while improving model capabilities.

OpenAI envisions a future in which models are seamlessly integrated into every website and application. GPT-4o mini lets developers build and scale powerful AI applications more efficiently and economically. OpenAI says it is thrilled to keep leading the way as AI becomes more dependable, approachable, and integrated into people's everyday digital interactions.
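The 99% figure can be sanity-checked with simple arithmetic. This is a rough sketch only: the text-davinci-003 price used here ($0.02 per 1,000 tokens, i.e. $20 per million) is an assumption that does not appear in the post, and the function name is hypothetical.

```python
# ASSUMPTION (not stated in the post): text-davinci-003 cost $0.02 per 1K tokens.
TEXT_DAVINCI_003_USD_PER_MILLION = 20.00

# Prices quoted in the post for GPT-4o mini, in US dollars per million tokens.
GPT_4O_MINI_INPUT_USD_PER_MILLION = 0.15
GPT_4O_MINI_OUTPUT_USD_PER_MILLION = 0.60


def percent_reduction(old_price: float, new_price: float) -> float:
    """Return how much cheaper new_price is than old_price, in percent."""
    if old_price <= 0:
        raise ValueError("old_price must be positive")
    return (old_price - new_price) / old_price * 100


print(round(percent_reduction(TEXT_DAVINCI_003_USD_PER_MILLION,
                              GPT_4O_MINI_INPUT_USD_PER_MILLION), 2))
print(round(percent_reduction(TEXT_DAVINCI_003_USD_PER_MILLION,
                              GPT_4O_MINI_OUTPUT_USD_PER_MILLION), 2))
```

Under that assumed baseline, the input price comes out about 99% lower and the output price about 97% lower, so the quoted figure most plausibly refers to input tokens.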

Azure AI now offers GPT-4o mini, the fastest model from OpenAI

GPT-4o mini lets customers deliver impressive applications at lower cost and with lightning speed. It is considerably smarter than GPT-3.5 Turbo, scoring 82% on Measuring Massive Multitask Language Understanding (MMLU) compared with 70%, and it is more than 60% cheaper. The model offers a larger 128K context window and integrates GPT-4o's enhanced multilingual capabilities, which improves quality for languages around the world.

GPT-4o mini, announced by OpenAI, is available simultaneously on Azure AI. It offers text processing at very high speed, with image, audio, and video processing to follow. Visit the Azure OpenAI Studio Playground to try it for free.

GPT-4o mini is safer by default thanks to Azure AI

As always, safety is critical to the productive use of AI and to the trust that Azure and its customers both depend on.

Azure is pleased to confirm that its Azure AI Content Safety capabilities, including prompt shields and protected material detection, are now "on by default" for GPT-4o mini on Azure OpenAI Service.

To enable you to take full advantage of the advances in model speed without sacrificing safety, Azure has made significant investments in enhancing the throughput and performance of the Azure AI Content Safety capabilities. One such investment is the addition of an asynchronous filter. Developers in a variety of industries, such as game creation (Unity), tax preparation (H&R Block), and education (South Australia Department for Education), are already receiving support from Azure AI Content Safety to secure their generative AI applications.

Data residency is now available in all 27 regions with Azure AI

Azure’s data residency commitments have applied to Azure OpenAI Service since the beginning.

Azure AI provides a comprehensive data residency solution that helps customers meet their specific compliance requirements by giving them flexibility and control over where their data is processed and stored. Azure also gives you the option to select the hosting structure that meets your application, business, and compliance needs. Regional pay-as-you-go and Provisioned Throughput Units (PTUs) provide control over both data processing and data storage.

Azure is pleased to announce that Azure OpenAI Service is now available in 27 regions, including Spain, which launched earlier this month as the ninth region in Europe.

Azure AI announces global pay-as-you-go with the highest throughput limits for GPT-4o mini

With Azure's global pay-as-you-go deployment, GPT-4o mini is now available for 15 cents per million input tokens and 60 cents per million output tokens, a substantial saving over earlier frontier models.

With global pay-as-you-go, customers get the greatest possible scale: 15 million tokens per minute (TPM) for GPT-4o mini and 30 million TPM for GPT-4o. Azure OpenAI Service offers GPT-4o mini with 99.99% availability and the same industry-leading speed as its partner OpenAI.
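A tokens-per-minute limit can be turned into a rough ceiling on request rate. Here is a minimal sketch, assuming the TPM figures quoted above and a caller-supplied average request size; the function name and the 3,000-token example are hypothetical, and real quotas may also include separate requests-per-minute limits that this sketch ignores.

```python
# Throughput limits quoted in the post, in tokens per minute (TPM).
GPT_4O_MINI_TPM = 15_000_000
GPT_4O_TPM = 30_000_000


def max_requests_per_minute(tpm_limit: int, tokens_per_request: int) -> int:
    """Upper bound on requests per minute for a given average request size."""
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    # Integer division: a partial request does not fit in the budget.
    return tpm_limit // tokens_per_request


# Example: requests averaging 3,000 tokens (prompt plus completion).
print(max_requests_per_minute(GPT_4O_MINI_TPM, 3_000))
```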

For GPT-4o mini, Azure AI provides industry-leading performance and flexibility

Azure AI is keeping up its investment in improving workload efficiency for AI across the Azure OpenAI Service.

This month, GPT-4o mini becomes available on the Azure AI Batch service. By using off-peak capacity, Batch delivers high-throughput jobs with a 24-hour turnaround at a 50% discount. This is possible only because Microsoft itself runs on Azure AI, which lets it make off-peak capacity available to customers.

This month, Azure is also releasing fine-tuning for GPT-4o mini, which lets customers further tailor the model to their specific use case and scenario in order to deliver outstanding quality and value at unprecedented speed. Following its update last month to move to token-based billing for training, Azure has reduced hosting charges by up to 43%. Combined with the low inference price, this makes Azure OpenAI Service fine-tuned deployments the most cost-effective option for customers with production workloads.

With more than 53,000 customers turning to Azure AI to deliver ground-breaking experiences at impressive scale, Azure is thrilled to see the innovation coming from organisations such as Vodafone (customer agent solution), the University of Sydney (AI assistants), and GigXR (AI virtual patients). More than half of the Fortune 500 are building their applications with Azure OpenAI Service.

Read more on govindhtech.com

#gpt40mini#openai#mostcost#Efficientsmallmodel#gpt4#alittlemodel#multimodal#textualintelligence#gpt40#geminiflash#claudehaiku#api#openaimodel#azureai#azureopenaiservice#globalpay#technology#technews#news#govindhtech

0 notes


flashman dakimakura

capcom are u listening? this is what the people need want

#this ask is probably in reference to the geminiflash drawing#and yes.#that is what that looks like isnt it kasljdlasd#cak3oask

13 notes


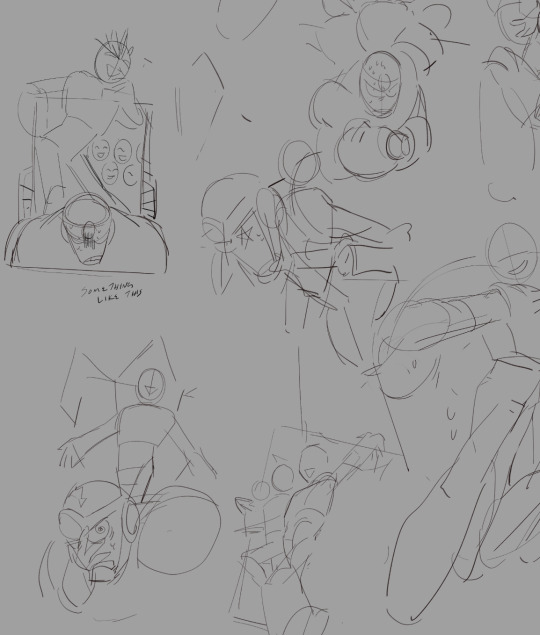

Concept doodles for that Geminiflash piece. Sorry Gemini looks butt ass naked from behind I didn’t know what else to do lmao

Those three are a trio to me for some reason. Do not separate them.

Oh I just realized this is the first time I’m showing my designs for magnet and snake hehe

193 notes
