#epistemic status: uncertain


Text

Spicy take of uncertain epistemic status: I feel like a relatively small but still significant part of the disdain towards The Game Awards that some folks in the Western games press display stems from the fact that the foreign-language games press they usually disdain or ignore gets to sit at the same table and cast the same votes they do.

0 notes

Text

I sometimes think of Tolkien’s creatures in terms of the ratio of spirit-to-body (fëa to hroä, I suppose) that makes up their self—Valar being completely spirit, animals being completely body, with elves being much more spirit and humans being much more body (with plenty of room for individual variation, of course. It’s a continuum).

#not a perfect system but it’s a decently applicable theory#epistemic status: uncertain but i kinda like it#edit: mother of FUCK i plut the accent on hröa in the wrong plae#place*

79 notes


Text

Gender Identity and the Ideological Turing Test

@ozymandias271 has been running an Ideological Turing Test on Gender Identity vs. Blanchard Bailey theories of transness (supporters of one or the other write essays for both as if they were a supporter of it), and it has driven home for me how much these are in different categories of things.

I hadn’t intended to vote, and don’t think I voted on a single Gender Identity entry. But I found myself spontaneously forming opinions on the Blanchard Bailey ones and voting. The truth isn’t out yet and it’s possible I was very wrong, but even so I think the fact that I could not even form an opinion on the Gender Identity essays points to an important thing.

I have a gears level understanding of Blanchard-Bailey. I could see how changing an input would change its prediction, and I can see how changing their model would change its reaction to the same input. This gears level understanding lets me say things like “I can see the evidence that those things exist but why on earth would you insist those are the only two possible cases?”

Not only do I not have a gears level understanding of Gender Identity theory, it feels to me like a gears level understanding is impossible. GI says that what goes on inside people’s heads is a black box that we must just accept. What makes someone a woman? She says she’s a woman. But why is she choosing that over being a man? Because she’s a woman. What is she basing that choice on? She feels better as a woman. What are the implications of choosing being a woman over a man? Whatever an individual decides...

I could pass an Ideological Turing Test on gender identity, but as a Chinese room, where I could pass Blanchard-Bailey with actual understanding. I’m also left with the vague feeling that trying to have a gears level understanding is shameful, which I super do not appreciate.

My own theory that the phrase gender identity is increasingly doing the work of “identity”, full stop, feels better to me. It’s not predictive now because we’re in a weird transition period where several definitions are in flux to the point of uselessness. But it predicts we will stabilize with fairly well understood definitions of what people mean when they say “Being Male/Female is an Important Part of My Identity”, and people for whom those predictions aren’t valid will say “yeah, I have a testosterone/estrogen type body but it’s not an important part of my self-identity, it’s more important you know I am Christian/a nerd/a tiger.” The same way that for some people race is a physiological trait and for others it’s a deep part of who they are.

I wish the commenters were focusing on “is this person making a good argument for the side” and ignoring “is this person using the quirks of phrasing you’d expect from this side.” Some of that is because humans like to win, but I think another part is that the phrasing is the most there part of gender identity. It’s not a theory of transness, it’s a signaling mechanism for demonstrating you are emotionally safe for trans people. Which is a good thing to have. I just wish it wasn’t marketing itself as a scientific explanation.

15 notes


Link

There are a lot of complaints these days about white men, particularly online where confrontations are much less threatening. People make those complaints in lieu of gathering actual power, which is hard and takes time away from endlessly refreshing Twitter. These categorical claims about white men are existentially harmless no matter where they arise - who gives a shit? - but they are also quite weird when they come from white men. And boy, do woke white men love to complain about white men! Let’s check in with Dr. Grist.

…

Much could be said here! First and foremost is the fact that “ideas don’t arise from specific individual minds but from the flow of history and our contingent place within that history” is just a crude approximation of Marxism, a philosophy developed by a couple of white men and which famously has a lot of white male admirers. Or, if you squint a different way, you could maybe call this thinking a simplistic consequence of French poststructuralism, an intellectual tradition developed almost exclusively by white men. Etc. Honestly the entirety of 20th century philosophical development points squarely in the direction that Roberts is arguing, so his claim that it would appear deeply threatening to the vast population of white men seems a little odd. I am almost charmed by all of this, in the sense that Roberts has expressed a profoundly undergraduate vision of the history of ideas, one that ham-handedly mirrors more sophisticated and forceful versions developed by white men, and then posits it as both somehow novel and uniquely threatening to white men. Almost charmed, that is, because such a desperate play for the approval of other people (most of them white) dressed up as truth-telling can’t be genuinely charming.

It should go without saying that what Roberts is saying is utterly self-undermining. If Roberts believes that the opinions of white men are inherently suspect because they arise from a situated and contingent position within history, then his own position is inherently suspect because Dave Roberts is a white man. If, on the other hand, Roberts’s point is merely that white male opinions exist within the same contingent and uncertain epistemic status as everyone else’s, then that means that there is no reason to trust white men more but also no reason to trust us less. It’s just the interplay of different ideas, all arising from the inherent confusion of history. Which would mean that the ideas that should and will rule are those that arise from the interchange of ideas, from combat between them… in other words, from the processes of Reason, which Roberts dismisses here with his usual mixture of confidence and confusion.

…

I am kind of fascinated by the “I hate white dudes” white dudes. Take Will Stancil. I don’t really know what a Will Stancil is or does, other than that Will Stancil fucking loves to complain about white men on Twitter.

…

Or take Will Wilkinson, who turned on an absolute fucking dime from snide Cato Institute libertarian to weepy woke white man when the political wind changed direction. I don’t generally take cues on how to be progressive from guys whose lives were subsidized by the Koch brothers for years and years, but I especially don’t when it’s those who so obviously “read the room” and realized that a career in media was going to take a lot of performative sneering about white guys.

…

You guys, seriously - if criticizing white men can have any valence at all, it cannot admit exceptions; if you admit exceptions, you are necessarily not really critiquing white men. By the very nature of making these critiques publicly, you are inherently asking the people reading them to see you as the exception. You are, all of you, saying “I am the good white man,” which puts you in the company of literally millions of other aging white guys who have gotten very worried about their place in the world and see a market opportunity in making themselves out to be unlike other white men. Which, you know, fine. Hustle on, player. The problem is that it renders the substance of your critique totally incoherent. Dave Roberts is asking us all, with a straight face, to take seriously that he has a very low opinion of white men in a way specifically designed to improve our opinion of him, a white man. And so are Will Stancil and Will Wilkinson and Noah Blatarsky and dozens upon dozens of other white dudes I could name. They want to earn credit for being willing to critique their own identity category but cannot help but exempt themselves from that critique!

I find this all untoward. I find it cynical and gross to go through the motions of pretending to indict yourself when doing so is really just a strategy to elevate yourselves above others. It’s like religious types who self-flagellate specifically to appear more holy than others, a pantomime of humility driven by hubris. It’s a special kind of hypocrisy. These are the kinds of guys who loudly mock “not all men,” but their entire careers amount to one long performance of “not all men.”

4 notes


Text

Institutional Myth in Contemporary Art

I’ve spent a lot of time in & around the New York visual art scene the past few years, and it’s been a very strange & uncanny but also informative experience. A lot of the preference falsification and undead prestige cultures of, say, academia, or science, or politics are in play, but here the emperor seems almost fully denuded, instead of partially clothed, which helps clear the fog. I’ve been trying to understand, and see clearly, through a culture which I’ve previously accused (jokingly, but seriously) of gaslighting me and other participants, & thought some of the resulting thoughts might be of interest to folks around here who either are inside visual arts communities, and feel similarly enshrouded, or who are outside of it but have never quite ‘understood’ what’s going on in visual arts scenes.

Sarah Perry, in “Business As Magic,” defines an institutional myth as a public narrative advanced by a number of simultaneous institutions and individuals with aligned self-interests, who are uncoordinated (there is no “conspiracy”) but nevertheless converge upon and reinforce a shared message (as if there were). The four characteristics of such a myth are:

Language of Morality: Beliefs about trivialities are not myths. Institutional myths “refer to widespread social values and emphatically use semantics coming from those value systems” (Piber & Pietch, 2006). Myths deal with sacred matters and moral consideration, and often use language borrowed from religion.

Zone of Ignorance: Myths are protected from falsification or excessive inquiry by social forces. “Follow the sacred and there you will find a circle of motivated ignorance,” says Jonathan Haidt. […]

Idealization and Ambiguity: Myths are not merely falsehoods, but idealized, simplified accounts of complex matters. Myths attach to values that are difficult to precisely define and difficult to measure. A myth is so vague and uncertain that its epistemic status is in some ways unknowable. […]

Symbolic Evaluation: Since myths deal in subjects that are inherently ambiguous and in some sense unknowable, the evaluation of myths is “restricted to symbolic considerations, which leave many interpretations possible” (Piber & Pietch, 2006). Symbolic ritual activity is directed toward the sacred value, with no demonstrable causal connection to any particular measurable outcome. Empirical falsification is inherently difficult, and therefore can be avoided.

Perry applies this frame to discuss national security as the institutional myth whose moralistic invocations of terrorism, and sacredness zone around 9/11, created an unchallengeable narrative in early 2000s American political culture, leading ultimately to heightened military involvement in the ME. I think it applies also to a very different kind of narrative, advanced by progressive cultural institutions & interested individuals, rather than conservative political ones, this being the myth of visual art’s intrinsic and inalienable value to society. (This narrative is heavily incentivized by both government grant structures and private donor prestige cycles.)

Language of Morality. Contemporary visual art is advanced as not just capable of addressing current cultural, ethical, & political crises—that capability is assumed, as evidenced by the high valuation and large attention directed at explicitly activist work—but one of the key technologies in doing so.

Zone of Ignorance. One important supplementary myth of the visual arts world which insulates it from criticism is that outsiders to its culture are incapable of advancing meaningful criticism against it, since they lack the deep disciplinary knowledge necessary to “properly” perceive contemporary works:

When I showed the poem to Sischy, she was not amused. “Forgive my lack of a sense of humor,” she said, “but what I see in that poem is just another reinforcement of stereotypes about the art world. It’s like a Tom Stoppard play, where you have an entire broadway audience snickering about things they haven’t understood. It makes outsiders feel clever about things they know nothing about… [T]his poem reflects the gap that exists between the serious literary audience and the serious art audience.”

That’s Janet Malcolm in 1986’s “Girl of the Zeitgeist,” quoting Artforum Editor in Chief Ingrid Sischy. The “poem” under discussion is by art critic Robert Hughes—his writing for Time magazine, instead of a dedicated New York arts publication, is enough for Sischy to dismiss him as outsider.

There is an extent to which many of the “effects” which contemporary art tries to achieve in viewers are inaccessible to outsiders; they dialogue with the discipline’s history, techniques, other contemporary artists, etc. However, the majority of critics who are familiar enough with contemporary visual art to bother formulating public opposition to it are well aware of what the artworks under discussion are 1) trying to, and 2) do in fact accomplish in viewers. What is being objected to is the scope and value of that accomplishment. Is a work which claims to “think through” crucial contemporary political issues, merely by using fabric with a contested history of use (e.g. produced in the Middle East, used by American military, etc…), actually “thinking through” anything substantial, and if so what conclusions can we actually come to through the invocation of this contested fabric? The successful delegitimization of outside critiques also suppresses the reality that many, perhaps even most, insiders to visual art are themselves disillusioned with the current state of visual art. This reality is hidden through preference falsification not just to others but to oneself, insofar as once one “becomes” an insider, one has invested such tremendous amounts of time and psychic energy which would be devalued by such an admission. Further, the futility of launching such critiques against contemporary art —the clear public record of critics/thinkers/writers being delegitimized as necessarily ignorant— discourages insiders from receiving the same fate, say, that Robert Hughes receives. In other words, no outsider is capable of criticizing, and no true insider would. Either way, aggressions against the zone of ignorance are handily suppressed. This is to say nothing of the self-selection effects in which those skeptical of visual art’s impact on culture & society instead go into filmmaking, or research, etc. 
Continuing (Tumblr won't let me start my numbering at 3, so treat #1 & 2 as #3 & 4 in actuality):

Idealization and Ambiguity. The precise benefit and value of contemporary art is kept vague, or even undefinable—the value is intrinsic and inalienable, but also unquantifiable. It is argued to exist, but is also so self-evident it does not need identification.

Symbolic Evaluation. As The Sublemon has argued, experimental art rarely embodies a true experimental spirit because it lacks success-failure criteria, and its results are not actively evaluated, are treated as beside the point, as if the mere conducting of the experiment were where value lies. (As opposed to the value lying in the extraction of knowledge possible in the experiment’s wake.) Part of this is the rise of precarity in visual arts culture, which leads to (as Bourdieu puts it), mutual admiration societies of hobbyists who support each other in order to rise to the top. Rigorous criticism, with the real potential of failure, is suppressed; paradoxically, art that claims to be highly experimental and risk-taking never seems to fail. Similarly, there is very little real attempt to evaluate the claims of contemporary art’s general value, purpose, or function. Occasionally such questions are summarily asked in the op-ed pages of a major newspaper, but the discipline itself evades, in almost all its theoretical production, real inquiry into these topics.

These qualities of institutional myth resemble Bourdieu’s theory of illusio: “the tendency of participants to engage in [a field’s] game and believe in its significance, that is, believe that the benefits promised by the field are desirable. […] Whatever the combatants on the ground may battle over, no one questions whether the battles in question are meaningful. The considerable investments in the game guarantee its continued existence. Illusio is thus never questioned” (Henrik Lundberg & Göran Heidegren).

Lastly, Perry argues that within corporations, energy must be split between turning an efficient profit and perpetuating the public-facing institutional myth. In arts organizations, the two efforts are the same; money comes in via grants and donations that are the result of persuading both individuals and other institutions of the worthiness of the myth.

4 notes


Note

how did you deconvert?

I dunno if I'd call it deconversion, because I was born into it. I was "saved" at the age of six, when I recited a little prayer out of my devotional book and then went and told my mom that I'd given my heart to Jesus, could I be baptized? It was just kind of assumed that every kid in the family would end up doing something similar.

I got some radical notions in my head at a fairly young age, like "hell can't be literal eternal torture if God is benevolent, because then God would be evil, so hell must not be eternal torture." I also learned that the Bible was assembled by committee, and that called its "literal truth" status into question. So I was already not a fundamentalist by the time I was about twelve. Then I got really into having theological fights with Mormons in high school, mostly because my dad had deconverted from Mormonism and had lots of anti-Mormonism material he loved to talk about. Also because I was a straitlaced nerd and the other straitlaced nerds I was friends with were Mormon. At some point I started applying the same arguments to my own beliefs as I did to Mormonism, and identified as an "agnostic theist" for a few years. By the time I was eighteen, that had turned into "agnostic atheist" which is about where I stand now. The big tipping point was a realization that even something with deity-level powers would have to make a very strong argument for me to worship it. It would be easy to frame it as an accumulation of evidence against my faith, and point to all of the little inconsistencies that pinged my bullshit meter over the years. It would also be easy to point to a few instances of late-night study and pretend that my deconversion was well-reasoned. But the truth is that it was messy and I spent a lot of years uncertain and the tips from "agnostic theist" to "lol why would a deity need my prayers" to "agnostic atheist" weren't epistemically (sp?) sound. Those just kinda happened while I wasn't looking.
In my defense, my "salvation" was even more fraught with reasoning errors, as I was only six. The best I can do is revisit the idea every few years with my updated and hopefully improved reasoning processes to see if anything changes. Nothing has, yet.

12 notes


Text

Failing gracefully with advice for abusers

epistemic status: fairly uncertain. I’ve put a lot of thought into this, but advice for dealing with abuse is anti-inductive. I have no domain expertise here, beyond being a survivor who once

Abuse victims often believe they are abusers. Abusers often believe they are victims of their victims. This makes some of the “how to react if you’re an abuser” advice horrifying.

Is there a way to create advice for people who think they are abusers that fails gracefully?

One way to start may be with advice that’s difficult to go wrong with.

“Do not lay hands on your partner, unless it’s in immediate self-defense from an act of physical violence initiated towards you.”

“Treat a single no or objection from your partner on matters of bodily autonomy (sex and any form of touch) as sacrosanct. Even if you think it’s intended to hurt you. Even if you think it’s intended to control you. Never nonconsensually touch your partner. Never pressure your partner into physical contact.”

“If your partner isn’t economically dependent on you, and you think you may be an abuser, you should break up with your partner. You always have a right to break up with your partner, even if, especially if, you are an abuser. If you’re an abuser, you’re doing them a favor. Anything else you do is harm mitigation.”

The area where things get hairy is economic dependence. Dumping your victim onto the streets is not an okay thing to do, especially if you have been a factor in limiting their economic independence. An abuser may be obligated to give economic support to their victim until the victim achieves self-support.

Since many abusers will not break up with their partners, and many victims who think they are abusers cannot break up with their partners, harm mitigation advice is useful.

Abuse is fundamentally about control and violations of autonomy. This is the thing that separates abusive relationships from merely unhealthy ones.

My thought would be to recommend moving in the direction of disentanglement and independence. If one partner is economically dependent on the other partner, try to reduce or remove economic dependence. Both partners should obtain friends outside the relationship. If they are cohabiting, the cohabitation should be ended if possible.

This advice is reversible. A victim doing this moves in the direction of escape. An abuser doing this moves in the direction of not abusing their victim.

#cw abuse#cw violence#cw intimate partner violence#cw domestic violence#cw dating abuse#practical morality#cw discourse#poking tumblr with a stick#cw intimate partner abuse#cw dating violence#cw domestic abuse

43 notes


Text

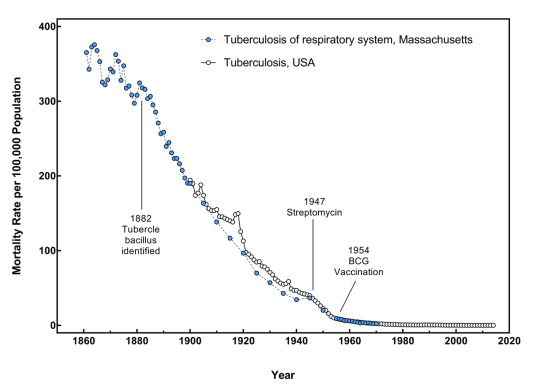

OP's post seemed off to me, so I looked up "History of Tuberculosis" in Wikipedia, the Online Encyclopedia that Anyone Can Edit™. (epistemic status: wikipedia)

A short timeline of tuberculosis (TB) treatments:

1816: development of the stethoscope by René Laennec, a useful diagnostic tool for TB

1840s: The beginnings of "TB sanatoriums", places to shunt TB patients for treatment (with the side effect of reducing the number of symptomatic TB carriers in the general population, reducing contagion)

1869: TB demonstrated to be contagious by Jean Antoine Villemin

1880s-1890s: systematized sanatoriums for TB patients, coercion of TB patients into sanatoriums: further reductions in contagion

1882: TB discovered by Robert Koch to be caused by an infectious agent

1882: Robert Koch discovers Mycobacterium tuberculosis and a way of testing for its presence in the gunk coughed up by patients

1886: Peter Dettweiler claims his sanatorium has cured 132 in 1022 patients, after opening in 1877.

late 1880s: surgical treatments for TB developed and spread

1895: Wilhelm Röntgen discovers X-ray, X-ray used to track progression of TB

1901: UK royal commission on TB

1902: International Conference on TB

1800s-1900s (uncertain date) bans on public spitting in UK and US

1916: best-condition treatments in British sanatoria reduce case fatality rate to 50% of patients dead after 5 years.

1921: first use of successful BCG vaccine against TB in humans, developed in 1906. Widespread vaccination campaigns didn't really kick off until after WWII.

1944: streptomycin developed, first antibiotic effective against TB

OP's chart (from Wikipedia, natch) tracks the mortality rate as a proportion of the total population of the USA (infected and non-infected), not the mortality rate as a proportion of known cases. I think the chart is less reflective of a decline in the case fatality rate than it is of a decline in the total number of TB cases.
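To make the distinction concrete, here is a minimal sketch with made-up numbers (the populations, case counts, and death counts below are hypothetical and do not come from the chart or from Wikipedia). It shows how deaths per 100,000 people can fall fivefold while the case fatality rate stays exactly the same, simply because there are fewer cases.

```python
def deaths_per_100k(deaths, population):
    """Mortality rate over the whole population, infected or not."""
    return deaths * 100_000 / population


def case_fatality_rate(deaths, cases):
    """Share of known cases that end in death."""
    return deaths / cases


# Hypothetical "before" and "after" snapshots, invented for illustration only.
before = {"population": 1_000_000, "cases": 10_000, "deaths": 5_000}
after = {"population": 1_000_000, "cases": 2_000, "deaths": 1_000}

for label, snap in (("before", before), ("after", after)):
    print(
        label,
        "deaths per 100k:", deaths_per_100k(snap["deaths"], snap["population"]),
        "CFR:", case_fatality_rate(snap["deaths"], snap["cases"]),
    )
```

In this toy setup nothing about treatment improved; the only thing that changed is how many people caught the disease in the first place.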

OP is correct that TB vaccines were not widely deployed in the US. They postdated most of the decline in TB cases.

So, yes, OP is correct that nonmedical interventions work to reduce the total fatality rate. The decline really begins around 1870, just after it's realized that TB is contagious. That realization is when people get serious about shoving TB patients into sanatoriums. This removes TB patients as disease vectors in the general population.

Quarantining contagious people is indeed a highly-effective method of preventing disease spread.

Unlike with TB, the US has decided that coercing COVID patients into quarantine is not feasible. Maybe that's because of the CFR and maybe it's a lack of quarantine wards and maybe that's just politics. Instead, we've opted for widespread vaccination and masking as prophylactics against contagion.

97% of the decline in tuberculosis mortality is not attributable to antibiotics or vaccines.

In fact, no tuberculosis vaccine has ever been widely used in the US.

Found this interesting in light of recent discussion about non-pharmaceutical interventions for other infectious diseases.

65 notes


Text

Herodotus and Presocratic Philosophy-III (Truth)

“2.5 Conclusion

The philosophical projects of Xenophanes and Parmenides both highlighted the importance of inquiry beyond truth, a feature of philosophy that aligns closely with Herodotus’ preoccupation with ‘what is said’ and ‘what seems’. On the other hand, Parmenides and the succeeding philosophical tradition never advocated for a robust skepticism, and the importance of truth to their projects was paramount, if difficult of access. These epistemological advances in philosophy have been ignored in the context of Herodotus’ establishment of his ‘peculiar’ narratorial persona. In the Histories, the rarity of Herodotus’ affirmations on truth might lead one to interpret the text as unconcerned with the ‘true reality’ lying behind uncertain perception. However, given the intense debate on the status of truth claims among Presocratic intellectuals, Herodotus’ epistemocritical narrator can be intellectually situated: the extremely high premium on truth and its rarity in his historiē underwrites his competence in handling the material constituting the Histories, rather than weakening it. This does not lead the historian to a demotion of phenomenological truths, in contrast to some of his contemporaries – the text does include an account of truth derived by the senses as well as reasoning faculties. In domesticating the language of ‘veridical’ εἰµί, Herodotus goes further, by creatively coopting the vocabulary of philosophy that was explicitly formulated in opposition to phenomenal truth and using it for his own purposes. The importance of Herodotus’ innovation here can scarcely be exaggerated: historiography stakes out a distinct epistemic space among the set of possible positions in the field of philosophy. Herodotus emerges not as a Homeric warrior, grappling with truth, but as a Presocratic sophos aware of the limits of human wisdom. Like Xenophanes, the Histories are intent upon attaining a ‘better’ record of historical action, rather than a simply ‘true’ one.”

Kingsley, Katie Scarlett, “The New Science: Herodotus' Historical Inquiry and Presocratic Philosophy”, doctoral dissertation, Princeton 2016, pp. 58-59 (the dissertation is available at https://dataspace.princeton.edu/bitstream/88435/dsp01qn59q647s/1/Kingsley_princeton_0181D_11926.pdf)

0 notes

Text

Epistemic status: full of question marks. exploratory? dreaming big, perhaps of things impossibly big. Right, so,

I saw a couple papers (which I didn’t entirely understand, but seemed neat) about different theories of probabilities which use infinitesimals in the probability values (one of which was this one (<-- not sure that link will actually work. Let me know if it doesn’t?), and the other one I can’t find right now but was similar, used the same system I think, though it also dealt with uniform probability distributions over the rational interval from 0 to 1) and they built their stuff using nonstandard models of the natural numbers, where, if I’m understanding correctly, they got some nonstandard integer to represent “how many standard natural numbers there are”, or something like that. Also, they defined limits in a way that was like, as some variable ranging over the finite subsets of some infinite set gets larger in the sense of subsets, then the value “approaches” the limit in some sense, and I think this was in a way connected to ultrafilters and the nonstandard models of arithmetic they were using. I did not actually follow quite how they were defining these limits.

(haha I still don’t actually understand ultrafilters in such a way that I remember quite what they are without looking it up.)

Intro to the Idea:

So, I was wondering if maybe it might work out in an interesting way to apply this theory of probability to markov chains when, say, all of the states are in one (aperiodic) class, and are recurrent, but none of them are positive recurrent (or, alternatively, some of them could be positive recurrent, but almost all of them would not be), in order to get a stationary distribution with appropriate infinitesimals?

Feasibility of the Idea:

It seems to me like that should be possible to work out, because I think the system the authors of those papers define is well-defined and such, and can probably handle this situation fine? Yeah, uh, it does handle infinite sequences of coin tosses, and assigns positive (infinitesimal) probability to the event “all of them land heads” (and also a different infinitesimal probability which is twice as large to the event “all of them after the first one land heads (and the first one either lands heads or tails)” ), and I think that suggests that it can probably handle events like, uh, “in a random walk on Z where left and right have probability 1/2 each, the proportion(?) of the steps which are in the same state as the initial state, is [????what-sort-of-thing-goes-here????]”, because they could just take all the possible cases where the proportion(?) is [????what-sort-of-thing-goes-here????], and each of these cases would correspond to a particular infinite sequence of coin tosses, which each have the same infinitesimal probability, and then those could just be “added up” using the limit thing they defined, and that should end up with the probability of the proportion(?) being [????what-sort-of-thing-goes-here????].
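A rough numerical sketch of why an ordinary real number is awkward for that bracketed slot: for the simple symmetric random walk on Z, the expected fraction of time spent at the starting state shrinks toward 0 as the walk gets longer. The function name and the choice to compute the expectation exactly (instead of simulating) are my own illustrative assumptions, not anything taken from the papers.

```python
def expected_fraction_at_origin(n):
    """Expected fraction of the time points 0, 1, ..., 2n at which a simple
    symmetric random walk on Z, started at 0, is sitting at 0."""
    p = 1.0      # probability of being at 0 at time 0
    total = p
    for k in range(1, n + 1):
        # P(at 0 at time 2k) = C(2k, k) / 4**k, obtained from the previous
        # value by multiplying with (2k - 1) / (2k).
        p *= (2 * k - 1) / (2 * k)
        total += p
    # Odd times contribute nothing: the walk cannot be at 0 after an odd
    # number of steps.
    return total / (2 * n + 1)


for n in (10, 1_000, 100_000):
    print(2 * n, "steps:", expected_fraction_at_origin(n))
```

The printed fractions keep falling, roughly like one over the square root of the number of steps, so in the standard setting the limiting proportion is just 0. That is the gap an infinitesimal-valued answer would presumably be meant to fill.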

Building Up the Idea:

So, if that all ends up working out with normal markov chains, then the next step I see would be to attempt to apply it to markov chains where the matrix of transition probabilities itself has values which are infinitesimal, or have infinitesimal components, and, again, trying to get stationary distributions out of those (which would again have infinitesimal values for some of the states). Specifically, one case that I think would be good to look at would be the case where a number is picked uniformly at random from the natural numbers and combined with the current state in order to get the next state (or, perhaps, a pair of natural numbers). Or from some other countably infinite set. So, then (if that all worked out), you could talk about stuff like a stationary distribution of a uniform random walk on Z^N and stuff like that.

The Idea:

If all that stuff works out, then take this Markov chain:

State space: S∞, the symmetric group on N, or alternatively the normal subgroup of S∞ comprised of its elements which fix all but at most finitely many of the elements of N.

Initial state (if relevant): The identity permutation.

State transitions: Pick two natural numbers uniformly at random from N, and apply the transposition of those two natural numbers to the current state.

Desired outcome: A uniform probability distribution on S∞ (or on the normal subgroup of it of finite permutations)!!! (Likely with infinitesimal components in the probabilities, but one can take the standard part of these probabilities, and at least under conditioning maybe these would even be positive.)
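A finite truncation of this chain can be worked out exactly, which at least shows what the hoped-for behaviour looks like in the ordinary setting. The sketch below replaces N by {0, ..., n-1} and S∞ by S_n; the function names are hypothetical, and it assumes the two indices are drawn independently, so they may coincide (in which case the state is left unchanged).

```python
from fractions import Fraction
from itertools import permutations


def step_distribution(dist, n):
    """Push a distribution on S_n through one random-transposition step.

    Indices i and j are drawn independently and uniformly from range(n);
    when i == j the swap does nothing, which keeps the chain aperiodic.
    """
    new = {perm: Fraction(0) for perm in dist}
    weight = Fraction(1, n * n)
    for perm, prob in dist.items():
        if prob == 0:
            continue
        for i in range(n):
            for j in range(n):
                swapped = list(perm)
                swapped[i], swapped[j] = swapped[j], swapped[i]
                new[tuple(swapped)] += prob * weight
    return new


def distribution_after(n, steps):
    """Exact distribution after `steps` moves, starting from the identity."""
    dist = {perm: Fraction(0) for perm in permutations(range(n))}
    dist[tuple(range(n))] = Fraction(1)
    for _ in range(steps):
        dist = step_distribution(dist, n)
    return dist


if __name__ == "__main__":
    final = distribution_after(3, 20)
    uniform = Fraction(1, 6)
    print(max(abs(p - uniform) for p in final.values()))
```

For finite n the uniform distribution is stationary and the chain converges to it. If the two indices were instead forced to be distinct, every step would flip the parity of the permutation and the finite chain would alternate between even and odd permutations rather than converge, so that detail of the transition rule matters. Whether anything like this survives the passage to S∞ is exactly the open question.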

Issues and Limitations:

Even if this all works out, I’m not sure it would really give me quite what I want. Notably, in the systems described in the papers I read-but-did-not-fully-understand, the standard parts of the probabilities of some events depended on the ultrafilter chosen.

Also, if every one (or even “most”) of the permutations had an infinitesimal probability (i.e. a nonzero probability with standard part 0) in the stationary distribution, that probably wouldn’t really do what I want it to do. Or, really, no, that’s not quite the problem. If the exact permutations had infinitesimal probability, that could be fine, but I would hope that the events of the form “the selected permutation begins with the finite prefix σ” would all have probabilities with positive standard part.

But it seems plausible, even likely, that if this all worked, then for any natural number n, the probability that a permutation selected from such a stationary distribution would send 1 to a number less than n would be infinitesimal. (Not a particular infinitesimal: the specific infinitesimal would depend on n, and would increase as n increased, but would remain infinitesimal for any finite n.)

This is undesirable! I wouldn’t be able to sample prefixes to this in the same way that I can sample prefixes to infinite sequences of coin tosses.

(Alternatively, it might turn out that the standard part of the probability that the selected permutation matches the identity permutation on the first m integers is 1, as a result of the probability that a transposition includes one of the first m integers being infinitesimal. That might be less unfortunate, but still seems bad? I’m less certain that this outcome would be undesirable.)
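The first guess above (that the probability of sending 1 below n is infinitesimal) has a simple finite analogue. As an assumption made purely for illustration, replace N by {1, ..., K} and take σ uniform on S_K, where K is a hypothetical truncation size that does not appear in the papers. Then σ(1) is uniform on {1, ..., K}, so:

```latex
\[
  \Pr\bigl[\sigma(1) < n\bigr] \;=\; \frac{n-1}{K},
  \qquad
  \lim_{K \to \infty} \frac{n-1}{K} \;=\; 0
  \quad \text{for each fixed } n .
\]
```

This quantity increases with n but vanishes in the limit for every finite n, which is the same shape as the “depends on n, increases with n, but stays infinitesimal” behaviour guessed at above. It is only a heuristic; it says nothing about which of the two outcomes the infinitesimal-valued system would actually produce.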

I think that what I really want would require having a probability distribution on N which, while not uniform, would be the “most natural” probability distribution on N, in the same way that the uniform distribution on {1,2,3,4} appears to be the “most natural” probability distribution on {1,2,3,4} when one has no additional information, and also that said distribution on N would have positive (standard parts of) probabilities for each natural number.

To have this would require both finding such a distribution, and also showing that that distribution really is the “most natural” probability distribution on N. Demonstrating that such a distribution really is the “most natural” seems likely to be rather difficult, especially given that I am uncertain of exactly what I mean when I say “most natural”.

...

If I should have put a read-more in here, sorry. I can edit the post to put one in if you tell me to. edit: forgot to put in the “σ” . did that now.

1 note

Text

On The Fae and Things That Are Not Metaphors

(Epistemic status: Potentially a memetic hazard. This is a narrative about actualizing as a member of society.) There are stories, faerie tales we call them. I don’t mean Disney, I mean the real faerie tales. The ones with the Seelie, the Unseelie, where the outcome is uncertain, almost arbitrary. The ones where the viewpoint character breaks The Rules and maybe they get out of it…or maybe they…

View On WordPress

4 notes

Text

Resolving to blog more

Someone recounted to me at a rationalist meeting some months ago that Scott Alexander writes his SSC posts really rapidly, with not much more thought going into the writing than what needs to be there. He’s cranking out essays at lightning rate as a passion project, whereas I struggle a lot longer to get a tenth as much done for my freaking schoolwork. I think it’s partly a perfectionist impulse - but also it’s a “wait this paper doesn’t even make sense, what the hell am i doing” impulse. I don’t even think that i put the bar of acceptable minimum quality too low, it’s that i’m not actually that good at rigorous thinking. Whatever it is, it’s really cut me hard in school, to the point where i’ve avoided writing stuff because of neuroses.

But i was going through his old stuff, and I realized. Yes, part of it is intelligence and an incredibly omnivorous curiosity, both of which are noticeable even in his accessible earliest LJ entries in 2006, but a lot of it is just the culmination of over a decade of practice. But also he was a philosophy major, so epistemic status uncertain here. His old LJ, it just looks like a regular blog. What he’s doing, where his interests lie. He’s writing for some people, but it looks like mostly for himself. I think I write pretty well when i work at it, but not at top speed. If I had a decade, how good could I be?

So. This isn’t the perfect platform, nor is my internet personality as clean as I’d like it to be. I’m not quite sure what I’d like to get out of it (what could i write about, how could i make it good). I guess the things I have to talk about are mostly Rationality, Gender, maybe art/illustration? Artists, fandoms, and crowdfunding, maybe. I have this problem where I’m majoring in fandom but oops, I’m not in any mainstream fandoms, just these weird dorky nerd ones which are qualitatively different. Maybe self improvement. Maaaaaaybe politics.

This isn’t the first time that i’ve pledged to write more, and i’m not quite sure how i could make it stick. “10,000 hours” has become an old rag by now, but this is the point at which i want to start making a go of it.

This took nearly half an hour to type and revise, and while it’s better and more complete than my initial set of thoughts, I think I could have articulated and reanalyzed in a lot less time. There’s a lot of mess in here. I want to work on being faster and more streamlined.

1 note

Text

Each Of Us Know Different Things: Epistemological Approaches to Quantum theory

“To see why the quantum state might represent what someone knows, consider another case where we use probabilities. Before your friend rolls a die, you guess what side will face up. If your friend rolls a standard six-sided die, you’d usually say there is about a 17 percent (or one in six) chance that you’ll be right, whatever you guess. Here the probability represents something about you: your state of knowledge about the die. Let’s say your back is turned while she rolls it, so that she sees the result—a six, say—but not you. As far as you are concerned, the outcome remains uncertain, even though she knows it. Probabilities that represent a person’s uncertainty, even though there is some fact of the matter, are called epistemic, from one of the Greek words for knowledge.

This means that you and your friend could assign very different probabilities, without either of you being wrong. You say the probability of the die showing a six is 17 percent, whereas your friend, who has seen the outcome already, says that it is 100 percent. That is because each of you knows different things, and the probabilities are representations of your respective states of knowledge. The only incorrect assignments, in fact, would be ones that said there was no chance at all that the die showed a six.”

The above passages come from an article in Nautilus magazine. The article is titled: Is Quantum Theory About Reality or What We Know?, and it discusses epistemological approaches to quantum theory.

I sort of unknowingly (no pun intended) took this approach at my book group a few weeks ago while reading Deepak Chopra’s new book (Chopra is a very anthropocentric subjective idealist, btw). In the book, Chopra was using quantum theory to make a case for subjective idealism by citing the famous thought experiment about a dead cat dreamed up by Erwin Schrödinger. Schrödinger used the thought experiment to illustrate the problem he saw with the Copenhagen Interpretation of quantum mechanics, which essentially states that an object in a physical system can simultaneously exist in all possible configurations, but observing the system forces the system to collapse and forces the object into just one of those possible states. So, as Berkeley would say, to be is to be perceived. And this is what Chopra also wants to argue: reality, at its most fundamental level, is more like the human mind than anything else.

Approaching this from a panpsychist/panexperientialist point of view, I speculated that if we understand that physics, like biology, is the study of living organisms that also have various degrees of subjectivity like humans (i.e. physics studies the smaller organisms and biology studies slightly larger ones), then yes, those organisms which constitute the box are also constantly prehending/feeling/taking account of things in their environment, and certainly do participate in creating reality. So, like the dice example mentioned in the passages above, the organisms that make up the box for example, which again are also perceiving subjects, help to end the quantum superposition, collapsing it, and thus already have knowledge of the cat’s status inside the box even if the outside observers are left with epistemic uncertainty. I think it’s reasonable to speculate that both the observers outside the box (the scientists) and the observers inside the box (the simpler organisms constituting the box) can assign different probabilities without being wrong.

Each Of Us Know Different Things: Epistemological Approaches to Quantum theory was originally published on TURRI

0 notes

Text

@WilliamBaude: Constitutional Liquidation

My latest article on James Madison and constitutional practice, with some criticisms and related links

Earlier this month, the Stanford Law Review published my latest article, Constitutional Liquidation. I've blogged about some of the ideas here over the years, and also discussed them with what feels like nearly everybody I've encountered in the past few years, but the final abstract is below:

James Madison wrote that the Constitution's meaning could be "liquidated" and settled by practice. But the term "liquidation" is not widely known, and its precise meaning is not understood. This Article attempts to rediscover the concept of constitutional liquidation, and thereby provide a way to ground and understand the role of historical practice in constitutional law.

Constitutional liquidation had three key elements. First, there had to be a textual indeterminacy. Clear provisions could not be liquidated, because practice could "expound" the Constitution but could not "alter" it. Second, there had to be a course of deliberate practice. This required repeated decisions that reflected constitutional reasoning. Third, that course of practice had to result in a constitutional settlement. This settlement was marked by two related ideas: acquiescence by the dissenting side, and "the public sanction"—a real or imputed popular ratification.

While this Article does not provide a full account of liquidation's legal status at or after the Founding, liquidation is deeply connected to shared constitutional values. It provides a structured way for understanding the practice of departmentalism. It is analogous to Founding-era precedent, and could provide a salutary improvement over the modern doctrine of stare decisis. It is consistent with the core arguments for adhering to tradition. And it is less susceptible to some of the key criticisms against the more capacious use of historical practice.

Apart from the article, I wanted to share links to a few related things.

1. Last fall, I presented the article in an especially lively discussion at Harvey Mansfield's Program on Constitutional Government. You can watch a video of the proceedings, which include discussions of judicial review, judicial supremacy, the Affordable Care Act, and much more.

2. Curt Bradley and Neil Siegel already have a critique up on SSRN. In Historical Gloss, Madisonian Liquidation, and the Originalism Debate, they defend the rival concept of "historical gloss" and argue:

We argue that a narrow account of liquidation, offered by Professor Caleb Nelson, most clearly distinguishes liquidation from gloss, but that it does so in ways that are normatively problematic. We further argue that a broader account of liquidation, recently offered by Professor William Baude, responds to those normative concerns by diminishing the distinction between liquidation and gloss, but that significant differences remain that continue to raise normative problems for liquidation. Finally, we question whether either scholar's account of liquidation is properly attributed to Madison.

3. Finally, one lynchpin of Madison's theory of liquidation was the difference between clear text, which could not be liquidated, and unclear or ambiguous questions, which could. This puts a lot of pressure on how we decide when text is "clear," which was until recently a woefully underexamined problem in legal interpretation. But there are two great new articles on this, one by Ryan Doerfler and one by Richard Re.

Here's Doerfler, Going 'Clear':

This Article proposes a new framework for evaluating doctrines that assign significance to whether a statutory text is "clear." As previous scholarship has failed to recognize, such doctrines come in two distinct types. The first, which this Article calls evidence-management doctrines, instruct a court to "start with the text," and to proceed to other sources of statutory meaning only if absolutely necessary. Because they structure a court's search for what a statute means, the question with each of these doctrines is whether adhering to it aids or impairs that search — the character of the evaluation is, in other words, mostly epistemic. The second type, which this Article calls uncertainty-management doctrines, instead tell a court to decide a statutory case on some ground other than statutory meaning if, after considering all the available sources, what the statute means remains opaque. The idea underlying these doctrines is that if statutory meaning is uncertain, erring in some direction constitutes "playing it safe." With each such doctrine, the question is thus whether erring in the identified direction really is "safer" than the alternative(s) — put differently, evaluation of these doctrines is fundamentally practical.

This Article goes on to address increasingly popular categorical objections to "clarity" doctrines. As this Article explains, the objection that nobody knows how clear a text has to be to count as "clear" rests partly on a misunderstanding of how "clarity" determinations work — such determinations are sensitive to context, including legal context, in ways critics of these doctrines fail to account for. In addition, the objection that "clarity" doctrines are vulnerable to willfulness or motivated reasoning is fair but, as this Article shows, applies with equal force to any plausible alternative.

And here's Re, Clarity Doctrines:

Clarity doctrines are a pervasive feature of legal practice. But there is a fundamental lack of clarity regarding the meaning of legal clarity itself, as critics have pointed out. This article explores the nature of legal clarity as well as its proper form. In short, the meaning of legal clarity in any given doctrinal context should turn on the purposes of the relevant doctrine. And the reasons for caring about clarity generally have to do with either (i) the deciding court's certainty about the right answer or (ii) the predictability that other interpreters (apart from the deciding court) would converge on a given answer. In general, debates about what type and degree of clarity to require often reflect implicit disagreements about the relevant clarity doctrine's goals. So by challenging a doctrine's accepted purposes, reformers can justify changes in clarity doctrines. To show as much, this article discusses a series of clarity doctrines and illuminates several underappreciated avenues for reform, particularly as to federal habeas corpus, Chevron, qualified immunity, constitutional avoidance, and the rule of lenity. Finally, this article acknowledges, but also discusses ways of mitigating, several anxieties about clarity doctrines, including worries that major clarity doctrines are too pluralistic, malleable, or awkward.

I'm excited and heartened by these pieces.

0 notes