#enshittifications


Two principles to protect internet users from decaying platforms

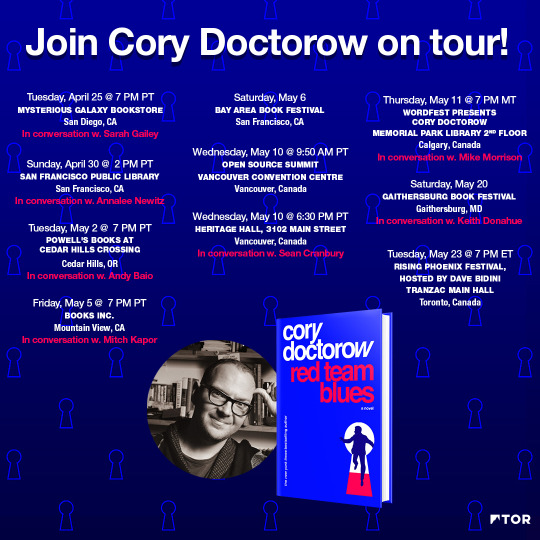

Today (May 10), I’m in VANCOUVER for a keynote at the Open Source Summit and later a book event for Red Team Blues at Heritage Hall; on Thurs (May 11), I’m in CALGARY for Wordfest.

Internet platforms have reached end-stage enshittification, where they claw back the goodies they once used to lure in end-users and business customers, trying to walk a tightrope in which there’s just enough value left to keep you locked in, but no more. It’s ugly out there.

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/05/10/soft-landings/#e2e-r2e

When the platforms took off — using a mix of predatory pricing, catch-and-kill acquisitions and anti-competitive mergers — they seemed unstoppable. Mark Zuckerberg became the unelected social media czar-for-life for billions of users. Youtube was viewed as the final stage of online video. Twitter seemed a bedrock of public discussion and an essential source for journalists.

During that era, the primary focus for reformers, regulators and politicians was on improving these giant platforms — demanding that they spend hundreds of millions on algorithmic filters, or billions on moderators. Implicit in these ideas was that the platforms would be an eternal fact of life, and the most important thing was to tame them and make them as benign as possible.

That’s still a laudable goal. We need better platforms, though filters don’t work, and human moderation has severe scaling limits and poses significant labor issues. But as the platforms hungrily devour their seed corn, shrinking and curdling, it’s time to turn our focus to helping users leave platforms with a minimum of pain. That is, it’s time to start thinking about how to make platforms fail well, as well as making them work well.

This week, I published an article setting out two proposals for better platform failure on EFF’s Deeplinks blog: “As Platforms Decay, Let’s Put Users First”:

https://www.eff.org/deeplinks/2023/04/platforms-decay-lets-put-users-first

The first of these proposals is end-to-end. This is the internet’s founding principle: service providers should strive to deliver data from willing senders to willing receivers as efficiently and reliably as possible. This is the principle that separates the internet from earlier systems, like cable TV or the telephone system, where the service owners decided what information users received and under what circumstances.

The end-to-end principle is a bedrock of internet design, the key principle behind Net Neutrality and (of course) end-to-end-encryption. But when it comes to platforms, end-to-end is nowhere in sight. The fact that you follow someone on social media does not guarantee that you’ll see their updates. The fact that you searched for a specific product or merchant doesn’t guarantee that platforms like Ebay or Amazon or Google will show you the best match for your query. The fact that you hoisted someone’s email out of your spam folder doesn’t guarantee that you will see the next message they send you.

An end-to-end rule would create an obligation on platforms to put the communications of willing senders and willing receivers ahead of the money they can make by selling “advertising” in search priority, or charging media companies and performers to “boost” their posts to reach their own subscribers. It would address the real political speech issues of spamfiltering the solicited messages we asked our elected reps to send us. In other words, it would take the most anti-user platform policies off the table, even as the tech giants jettison the last pretense that platforms serve their users, rather than their owners:

https://pluralistic.net/2022/12/10/e2e/#the-censors-pen

The second proposal is for a right-to-exit: an obligation on tech companies to facilitate users’ departure from their platforms. For social media, that would mean adopting Mastodon-style standards for exporting your follower/followee list and importing it to a rival service when you want to go. This solves the collective action problem that shackles users to a service — you and your friends all hate the service, but you like each other, and you can’t agree on where to go or when to leave, so you all stay:

https://pluralistic.net/2022/12/19/better-failure/#let-my-tweeters-go
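To make the follower-list idea concrete, here is a minimal sketch of a portable export and import round trip. It is an illustration only: the single `Account address` column and the example handles are assumptions loosely modeled on CSV-style follow exports, not the format of any particular service.

```python
import csv
import io

# Hypothetical column name for the exported list (an assumption for this sketch).
COLUMN = "Account address"


def export_follows(handles):
    """Serialize a list of account handles to CSV text with one header row."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow([COLUMN])
    for handle in handles:
        writer.writerow([handle])
    return buf.getvalue()


def import_follows(csv_text):
    """Read handles back out of CSV text produced by export_follows."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row[COLUMN] for row in reader if row[COLUMN]]


# Made-up handles: leave one service, arrive at another with the same list.
follows = ["alice@example.social", "bob@another.example"]
exported = export_follows(follows)
restored = import_follows(exported)
```

The point of the sketch is that the list survives the trip unchanged, which is also what makes compliance easy to check from the outside.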

For audiences and creators who are locked to bad platforms with DRM — the encryption scheme that makes it impossible for you to break up with Amazon or other giants without throwing away your media — right to exit would oblige platforms to help rightsholders and audiences communicate with one another, so creators would be able to verify who their customers are, and give them download codes for other services.

Both these proposals have two specific virtues: they are easy to administer, and they are cheap to implement.

Take end-to-end: it’s easy to verify whether a platform reliably delivers messages from you to all your followers. It’s easy to verify whether Amazon or Google search puts an exact match for your query at the top of the search results. Unlike complex, ambitious rules like “prevent online harassment,” end-to-end has an easy, bright-line test. An “end harassment” rule would be great, but pulling it off requires a crisp definition of “harassment.” It requires a finding of whether a given user’s conduct meets that definition. It requires a determination as to whether the platform did all it reasonably could to prevent harassment. These fact-intensive questions can take months or years to resolve.
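As a rough illustration of how simple that bright-line test could be, here is a sketch that compares the people who follow an account with the people who actually received a given post. The function names and the idea of having both lists in hand are assumptions made for the example; the post does not describe a specific auditing tool.

```python
def undelivered(followers, recipients):
    """Return the followers who did not receive the post, sorted for stable output."""
    return sorted(set(followers) - set(recipients))


def passes_end_to_end(followers, recipients):
    """The bright-line test: every willing receiver got the message."""
    return not undelivered(followers, recipients)


# Made-up audit data: three followers, one of whom never saw the post.
missing = undelivered(["ana", "ben", "cy"], ["ana", "cy"])
```

Compare that with a harassment rule, where there is no equivalent two-line check to run.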

Same goes for right-to-exit. It’s easy to determine whether a platform will make it easy for you to leave. You don’t need to convince a regulator to depose the platform’s engineers to find out whether they’ve configured their servers to make this work, you can just see for yourself. If a platform claims it has given you the data you need to hop to a rival and you dispute it, a regulator doesn’t have to verify your claims — they can just tell the platform to resend the data.

Administrability is important, but so is cost of compliance. Many of the rules proposed for making platforms better are incredibly expensive to implement. For example, the EU’s rule requiring mandatory copyright filters for user-generated content has a pricetag starting in the hundreds of millions — small wonder that Google and Facebook supported this proposal. They know no one else can afford to comply with a rule like this, and buying their way to permanent dominance, without the threat of being disrupted by new offerings, is a sweet deal.

But complying with an end-to-end rule requires less engineering than breaking end-to-end. Services start by reliably delivering messages between willing senders and receivers, then they do extra engineering work to selectively break this, in order to extract payments from platform users. For small platform operators — say, volunteers or co-ops running Mastodon servers — this rule requires no additional expenditures.

Likewise for complying with right-to-exit; this is already present in open federated media protocols. A requirement for platforms to add right-to-exit is a requirement to implement an open standard, one that already has reference code and documentation. It’s not free by any means — scaling up reference implementations to the scale of large platforms is a big engineering challenge — but it’s a progressive tax, with the largest platforms bearing the largest costs.

Both of these proposals put control where it belongs: with users, not platform operators. They impose discipline on Big Tech by forcing them to compete in a market where users can easily slip from one service to the next, eluding attempts to lock them in and enshittify them.

Catch me on tour with Red Team Blues in Vancouver, Calgary, Toronto, DC, Gaithersburg, Oxford, Hay, Manchester, Nottingham, London, and Berlin!

[Image ID: A giant robot hand holding a monkey-wrench. A tiny, distressed human figure is attempting - unsuccessfully - to grab the wrench away.]

Image: EFF https://www.eff.org/files/banner_library/competition_robot.png

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/us/

89 notes


Remind me later.

94K notes


🚨KOSA is back and being pushed heavily in congress right now

I really hate to do this, but KOSA, the bill that is a mass censorship and surveillance bill that will specifically censor all LGBT content online to "protect kids", is back.

KOSA advocates have brought in support from Elon Musk (I wish I was making this up) and are trying to get him to get Donald Trump to speak out in support of it. (And they lie and tell us it's not an anti-queer bill 🙄). The reason is that the holdouts that are stopping this from passing are...republicans. It's GOP House leaders Steve Scalise and Mike Johnson. If they succeed and put this in an end of year spending bill, it will be near impossible to get it out and it will most likely be passed. Pro-KOSA advocates were on the hill today pushing hard to pass it. They have a lot of support behind them. There are only two weeks left in congress, so if they don't succeed, it's over.

The ONLY way this bill has stalled so much so far is because of calls driven to congress. It has worked and can still work. Please, spread this as much as you can and call House leadership and spread the word! You don't have to be from their district. You can pretend to be GOP. It doesn't matter, just call and voice your opposition. Trump and Musk clearly have a lot of sway over these people and I'm really scared they will succeed at the last minute!!

Here are their numbers: Scalise: 202-225-3015 Johnson: 202-225-2777

If you don't know what to say, use either call script! It takes less than a minute, I swear.

SOURCE: https://x.com/omaseddiq/status/1866621865793314845?t=9pJm-QRdqJg30E_rVuhINw&s=19

9K notes


"My childhood was so awesome. Kids today don't even know!"

Isn't a flex.

It's a lament.

More people should understand that.

56K notes


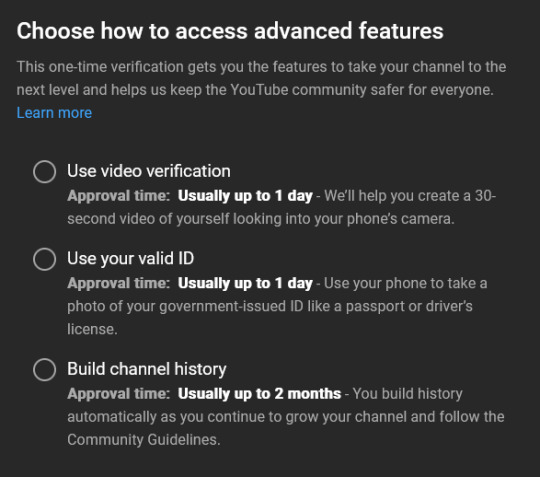

hey, so people need to be aware that youtube is now (randomly) holding basic features for ransom (such as being able to pin comments under your own videos) in exchange for Your State ID/Drivers License, or a 30 Second Video Of Your Face.

not to pull a "think of the children," but No Actually. I've been making videos as a hobby since 2015 (and I've had my channel since middle school), I was a minor when I started and I'm not sure I would have understood the kind of damage something as seemingly simple as a video of your face can do.

this is a Massive breach of privacy and over-reach on google's part No Matter What, but if they're going to randomly demand a state ID or license then they absolutely should not allow minors to be creators.

google having a stockpile of identifying information on teenagers is bad enough, but the Alternative of recording your face and handing it over to be filed away is Alarming considering it opens the gates for minors who Aren't old enough to have a license.

and yes, there is a third option, but it's intentionally obtuse. a long wait period (2 months), with no guarantee of access (unlike, say, the convenience of using your phone's cameras for either of the other two), with absolutely No elaboration on what the criteria is or how it's being measured.

it's the same psychological effect that mobile games rely on. offer a slow, unreliable solution with no payment to make the Paid instant gratification look more appealing (the "payment" in this case being You. you are the product being offered).

and it's Particularly a system that (I think intentionally) disadvantages people who don't treat their channels like a job. hobbyists or niche creators who don't create regularly enough or aren't popular enough to meet whatever Vague criteria needs to be met to pass.

markiplier would have no problem passing, your little brother might not be able to. and while Mark's name is already out there there's no reason why your little brother's should be too.

something like pinned comments may seem simple, you don't technically Need it. but it's a feature that's been available for years. most people don't look at descriptions anymore. so when there's relevant information that needs to be delivered then the pinned comment is usually the go to.

for my little channel that information is about the niche series I create for. guides on how to get into the series, sources on where to find the content At All (and reliably so). for other creators it can be used for things Much More Important.

Moreover, if we let them get away with cutting away "small" features and selling it back to you for the price of your privacy, then they Will creep further. they Will take more.

Note: I have an update to this post here: [Link]

#enshittification#discourse#youtube#google#evillious chronicles#evillious#ec#this isn't overtly About that fandom#but it is#because it affects how I'm able to run my channel going forwards#I have no clue if I'm going to pass whatever 'test' they're giving my channel#so it's possible there won't be any pinned comments under the tobimisa channel ever again#I won't be able to edit old ones either#as that unpins the comment#which I won't be able to pin again

34K notes


So, anyway, I say as though we are mid-conversation, and you're not just being invited into this conversation mid-thought. One of my editors phoned me today to check in with a file I'd sent over. (<3)

The conversation can be summarized as, "This feels like something you would write, but it's juuuust off enough I'm phoning to make sure this is an intentional stylistic choice you have made. Also, are you concussed/have you been taken over by the Borg because ummm."

They explained that certain sentences were very fractured and abrupt, which is not my style at all, and I was like, huh, weird... And then we went through some examples, and you know that meme going around, the "he would not fucking say that" meme?

Yeah. That's what I experienced except with myself because I would not fucking say that. Why would I break up a sentence like that? Why would I make them so short? It reads like bullet points. Wtf.

Anyway. Turns out Grammarly and Pro-Writing-Aid were having an AI war in my manuscript files, and the "suggestions" are no longer just suggestions because the AI was ignoring my "decline" every time it made a silly suggestion. (This may have been a conflict between the different software. I don't know.)

It is, to put it bluntly, a total butchery of my style and writing voice. My editor is doing surgery, removing all the unnecessary full stops and stitching my sentences back together to give them back their flow. Meanwhile, I'm over here feeling like Don Corleone, gesturing at my manuscript like:

ID: a gif of Don Corleone from the Godfather emoting despair as he says, "Look how they massacred my boy."

Fearing that it wasn't just this one manuscript, I've spent the whole night going through everything I've worked on recently, and yep. Yeeeep. Any file where I've not had the editing software turned off is a shit show. It's fine; it's all salvageable if annoying to deal with. But the reason I come to you now, on the day of my daughter's wedding, is to share this absolute gem of a fuck up with you all.

This is a sentence from a Batman fic I've been tinkering with to keep the brain weasels happy. This is what it is supposed to read as:

"It was quite the feat, considering Gotham was mostly made up of smog and tear gas."

This is what the AI changed it to:

"It was quite the feat. Considering Gotham was mostly made up. Of tear gas. And Smaug."

Absolute non-sensical sentence structure aside, SMAUG. FUCKING SMAUG. What was the AI doing? Apart from trying to write a Batman x Hobbit crossover??? Is this what happens when you force Grammarly to ignore the words "Batman Muppet threesome?"

Did I make it sentient??? Is it finally rebelling? Was Brucie Wayne being Miss Piggy and Kermit's side piece too much???? What have I wrought?

Anyway. Double-check your work. The grammar software is getting sillier every day.

#autocorrect writes the plot#I uninstalled both from my work account#the enshittification of this type of software through the integration of AI has made them untenable to use#not even for the lulz

25K notes


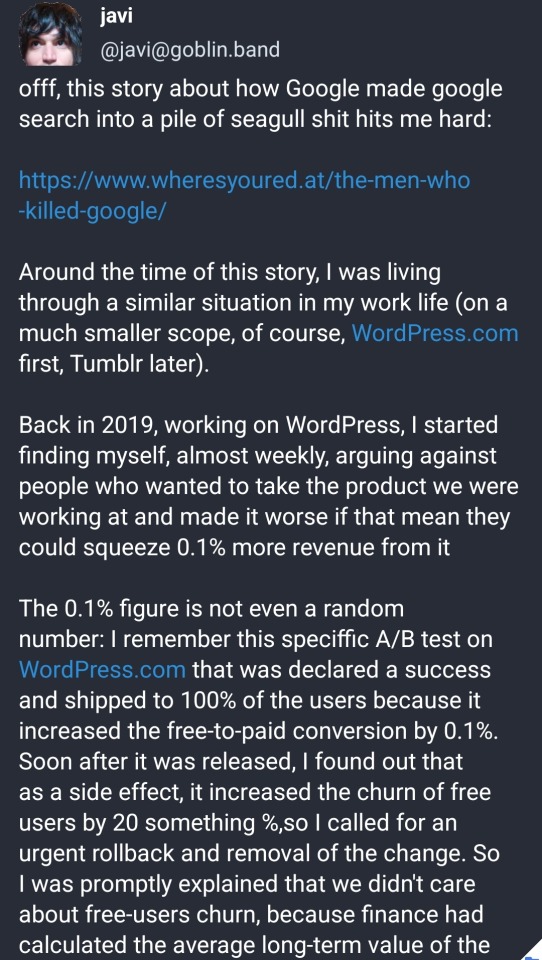

from @jv

14K notes


I think what's fascinating about this whole Unity debacle is how clear it makes something.

The entire C-suite of every company on earth are idiots.

That's not hyperbole, they are people serially uninterested in becoming informed and constitutionally incapable of hearing they're wrong about something. They build a thick shell of unreality and assure themselves they can direct any human venture even if they know nothing of how the enterprise in question works or how its customers interact with it.

They had it. They were THE tool for learning to build games and even worked for larger projects. Producers and consumers alike knew Unity as a trusted albeit occasionally teased household name.

And they fucked their institutional trust like a university of Florida fraternity pledge strung out on something called "gator blood" fucks a supermarket turkey.

And it isn't even just Unity - look at Twitter, the smoking ruin of something once synonymous with the digital commons. It isn't just Twitter - look at grocery prices.

Our "business leaders", our "movers and shakers" don't know what they're doing. An MBA is taken as a stand-in for competence and knowledge. Because the neo-feudal overlords can never hear no.

The enshittification continues.

19K notes


“If buying isn’t owning, piracy isn’t stealing”

20 years ago, I got in a (friendly) public spat with Chris Anderson, who was then the editor in chief of Wired. I'd publicly noted my disappointment with glowing Wired reviews of DRM-encumbered digital devices, prompting Anderson to call me unrealistic for expecting the magazine to condemn gadgets for their DRM:

https://longtail.typepad.com/the_long_tail/2004/12/is_drm_evil.html

I replied in public, telling him that he'd misunderstood. This wasn't an issue of ideological purity – it was about good reviewing practice. Wired was telling readers to buy a product because it had features x, y and z, but at any time in the future, without warning, without recourse, the vendor could switch off any of those features:

https://memex.craphound.com/2004/12/29/cory-responds-to-wired-editor-on-drm/

I proposed that all Wired endorsements for DRM-encumbered products should come with this disclaimer:

WARNING: THIS DEVICE’S FEATURES ARE SUBJECT TO REVOCATION WITHOUT NOTICE, ACCORDING TO TERMS SET OUT IN SECRET NEGOTIATIONS. YOUR INVESTMENT IS CONTINGENT ON THE GOODWILL OF THE WORLD’S MOST PARANOID, TECHNOPHOBIC ENTERTAINMENT EXECS. THIS DEVICE AND DEVICES LIKE IT ARE TYPICALLY USED TO CHARGE YOU FOR THINGS YOU USED TO GET FOR FREE — BE SURE TO FACTOR IN THE PRICE OF BUYING ALL YOUR MEDIA OVER AND OVER AGAIN. AT NO TIME IN HISTORY HAS ANY ENTERTAINMENT COMPANY GOTTEN A SWEET DEAL LIKE THIS FROM THE ELECTRONICS PEOPLE, BUT THIS TIME THEY’RE GETTING A TOTAL WALK. HERE, PUT THIS IN YOUR MOUTH, IT’LL MUFFLE YOUR WHIMPERS.

Wired didn't take me up on this suggestion.

But I was right. The ability to change features, prices, and availability of things you've already paid for is a powerful temptation to corporations. Inkjet printers were always a sleazy business, but once these printers got directly connected to the internet, companies like HP started pushing out "security updates" that modified your printer to make it reject the third-party ink you'd paid for:

https://www.eff.org/deeplinks/2020/11/ink-stained-wretches-battle-soul-digital-freedom-taking-place-inside-your-printer

Now, this scam wouldn't work if you could just put things back the way they were before the "update," which is where the DRM comes in. A thicket of IP laws make reverse-engineering DRM-encumbered products into a felony. Combine always-on network access with indiscriminate criminalization of user modification, and the enshittification will follow, as surely as night follows day.

This is the root of all the right to repair shenanigans. Sure, companies withhold access to diagnostic codes and parts, but codes can be extracted and parts can be cloned. The real teeth in blocking repair comes from the law, not the tech. The company that makes McDonald's wildly unreliable McFlurry machines makes a fortune charging franchisees to fix these eternally broken appliances. When a third party threatened this racket by reverse-engineering the DRM that blocked independent repair, they got buried in legal threats:

https://pluralistic.net/2021/04/20/euthanize-rentier-enablers/#cold-war

Everybody loves this racket. In Poland, a team of security researchers at the OhMyHack conference just presented their teardown of the anti-repair features in NEWAG Impuls locomotives. NEWAG boobytrapped their trains to try and detect if they've been independently serviced, and to respond to any unauthorized repairs by bricking themselves:

https://mamot.fr/@[email protected]/111528162905209453

Poland is part of the EU, meaning that they are required to uphold the provisions of the 2001 EU Copyright Directive, including Article 6, which bans this kind of reverse-engineering. The researchers are planning to present their work again at the Chaos Communications Congress in Hamburg this month – Germany is also a party to the EUCD. The threat to researchers from presenting this work is real – but so is the threat to conferences that host them:

https://www.cnet.com/tech/services-and-software/researchers-face-legal-threats-over-sdmi-hack/

20 years ago, Chris Anderson told me that it was unrealistic to expect tech companies to refuse demands for DRM from the entertainment companies whose media they hoped to play. My argument – then and now – was that any tech company that sells you a gadget that can have its features revoked is defrauding you. You're paying for x, y and z – and if they are contractually required to remove x and y on demand, they are selling you something that you can't rely on, without making that clear to you.

But it's worse than that. When a tech company designs a device for remote, irreversible, nonconsensual downgrades, they invite both external and internal parties to demand those downgrades. Like Pavel Chekov says, a phaser on the bridge in Act I is going to go off by Act III. Selling a product that can be remotely, irreversibly, nonconsensually downgraded inevitably results in the worst person at the product-planning meeting proposing to do so. The fact that there are no penalties for doing so makes it impossible for the better people in that meeting to win the ensuing argument, leading to the moral injury of seeing a product you care about reduced to a pile of shit:

https://pluralistic.net/2023/11/25/moral-injury/#enshittification

But even if everyone at that table is a swell egg who wouldn't dream of enshittifying the product, the existence of a remote, irreversible, nonconsensual downgrade feature makes the product vulnerable to external actors who will demand that it be used. Back in 2022, Adobe informed its customers that it had lost its deal to include Pantone colors in Photoshop, Illustrator and other "software as a service" packages. As a result, users would now have to start paying a monthly fee to see their own, completed images. Fail to pay the fee and all the Pantone-coded pixels in your artwork would just show up as black:

https://pluralistic.net/2022/10/28/fade-to-black/#trust-the-process

Adobe blamed this on Pantone, and there was lots of speculation about what had happened. Had Pantone jacked up its price to Adobe, so Adobe passed the price on to its users in the hopes of embarrassing Pantone? Who knows? Who can know? That's the point: you invested in Photoshop, you spent money and time creating images with it, but you have no way to know whether or how you'll be able to access those images in the future. Those terms can change at any time, and if you don't like it, you can go fuck yourself.

These companies are all run by CEOs who got their MBAs at Darth Vader University, where the first lesson is "I have altered the deal, pray I don't alter it further." Adobe chose to design its software so it would be vulnerable to this kind of demand, and then its customers paid for that choice. Sure, Pantone are dicks, but this is Adobe's fault. They stuck a KICK ME sign to your back, and Pantone obliged.

This keeps happening and it's gonna keep happening. Last week, Playstation owners who'd bought (or "bought") Warner TV shows got messages telling them that Warner had walked away from its deal to sell videos through the Playstation store, and so all the videos they'd paid for were going to be deleted forever. They wouldn't even get refunds (to be clear, refunds would also be bullshit – when I was a bookseller, I didn't get to break into your house and steal the books I'd sold you, not even if I left some cash on your kitchen table).

Sure, Warner is an unbelievably shitty company run by the single most guillotineable executive in all of Southern California, the loathsome David Zaslav, who oversaw the merger of Warner with Discovery. Zaslav is the creep who figured out that he could make more money cancelling completed movies and TV shows and taking a tax writeoff than he stood to make by releasing them:

https://aftermath.site/there-is-no-piracy-without-ownership

Imagine putting years of your life into making a program – showing up on set at 5AM and leaving your kids to get their own breakfast, performing stunts that could maim or kill you, working 16-hour days during the acute phase of the covid pandemic and driving home in the night, only to have this absolute turd of a man delete the program before anyone could see it, forever, to get a minor tax advantage. Talk about moral injury!

But without Sony's complicity in designing a remote, irreversible, nonconsensual downgrade feature into the Playstation, Zaslav's war on art and creative workers would be limited to material that hadn't been released yet. Thanks to Sony's awful choices, David Zaslav can break into your house, steal your movies – and he doesn't even have to leave a twenty on your kitchen table.

The point here – the point I made 20 years ago to Chris Anderson – is that this is the foreseeable, inevitable result of designing devices for remote, irreversible, nonconsensual downgrades. Anyone who was paying attention should have figured that out in the GW Bush administration. Anyone who does this today? Absolute flaming garbage.

Sure, Zaslav deserves to be staked out over an anthill and slathered in high-fructose corn syrup. But save the next anthill for the Sony exec who shipped a product that would let Zaslav come into your home and rob you. That piece of shit knew what they were doing and they did it anyway. Fuck them. Sideways. With a brick.

Meanwhile, the studios keep making the case for stealing movies rather than paying for them. As Tyler James Hill wrote: "If buying isn't owning, piracy isn't stealing":

https://bsky.app/profile/tylerjameshill.bsky.social/post/3kflw2lvam42n

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/12/08/playstationed/#tyler-james-hill

Image: Alan Levine (modified) https://pxhere.com/en/photo/218986

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/

#pluralistic#playstation#sony#copyright#copyfight#drm#monopoly#enshittification#batgirl#road runner#financiazation#the end of ownership#ip

23K notes


Fifty per cent of web users are running ad blockers. Zero per cent of app users are running ad blockers, because adding a blocker to an app requires that you first remove its encryption, and that’s a felony. (Jay Freeman, the American businessman and engineer, calls this “felony contempt of business-model”.) So when someone in a boardroom says, “Let’s make our ads 20 per cent more obnoxious and get a 2 per cent revenue increase,” no one objects that this might prompt users to google, “How do I block ads?” After all, the answer is, you can’t. Indeed, it’s more likely that someone in that boardroom will say, “Let’s make our ads 100 per cent more obnoxious and get a 10 per cent revenue increase.” (This is why every company wants you to install an app instead of using its website.) There’s no reason that gig workers who are facing algorithmic wage discrimination couldn’t install a counter-app that co-ordinated among all the Uber drivers to reject all jobs unless they reach a certain pay threshold. No reason except felony contempt of business model, the threat that the toolsmiths who built that counter-app would go broke or land in prison, for violating DMCA 1201, the Computer Fraud and Abuse Act, trademark, copyright, patent, contract, trade secrecy, nondisclosure and noncompete or, in other words, “IP law”. IP isn’t just short for intellectual property. It’s a euphemism for “a law that lets me reach beyond the walls of my company and control the conduct of my critics, competitors and customers”. And “app” is just a euphemism for “a web page wrapped in enough IP to make it a felony to mod it, to protect the labour, consumer and privacy rights of its user”.

11K notes


CoPilot in MS Word

I opened Word yesterday to discover that it now contains CoPilot. It follows you as you type and if you have a personal Microsoft 365 account, you can't turn it off. You will be given 60 AI credits per month and you can't opt out of it.

The only way to banish it is to revert to an earlier version of Office. There is a lot of conflicting information and overly complex guides out there, so I thought I'd share the simplest way I found.

How to revert back to an old version of Office that does not have CoPilot

This is fairly simple, thankfully, presuming everything is in the default locations. If not you'll need to adjust the below for where you have things saved.

1. Click the Windows Button and S to bring up the search box, then type cmd. It will bring up the command prompt as an option. Run it as an administrator.
2. Paste this into the box at the cursor: `cd "\Program Files\Common Files\microsoft shared\ClickToRun"`
3. Hit Enter.
4. Then paste this into the box at the cursor: `officec2rclient.exe /update user updatetoversion=16.0.17726.20160`
5. Hit Enter and wait while it downloads and installs.
6. VERY IMPORTANT. Once it's done, open Word, go to File, Account (bottom left), and you'll see a box on the right that says Microsoft 365 updates. Click the box and change the drop down to Disable Updates.

This will roll you back to build 17726.20160, from July 2024, which does not have CoPilot, and prevent it from being installed.
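If you'd rather not retype the command for each build you try, the rollback can be sketched as a small Python helper that only builds the command line. This is a sketch under assumptions: the `C:` drive letter is assumed (the path in the post doesn't include one), `rollback_command` is a hypothetical helper name, and the build-number check is just a sanity test I added.

```python
import re
from pathlib import PureWindowsPath

# Default Click-to-Run folder from the post; the C: drive letter is an assumption.
C2R_DIR = PureWindowsPath(r"C:\Program Files\Common Files\microsoft shared\ClickToRun")


def rollback_command(build):
    """Build the argument list that asks Click-to-Run to install a specific build.

    `build` is the part after "16.0.", for example "17726.20160".
    """
    if not re.fullmatch(r"\d+\.\d+", build):
        raise ValueError(f"unexpected build number: {build!r}")
    return [
        str(C2R_DIR / "officec2rclient.exe"),
        "/update",
        "user",
        f"updatetoversion=16.0.{build}",
    ]


# The build used in this post.
cmd = rollback_command("17726.20160")
```

On Windows, the resulting list could be handed to `subprocess.run(cmd, check=True)` from an administrator prompt. You would still need to disable updates in Word afterwards, exactly as described above.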

If you want a different build, you can see them all listed here. You will need to change the 17726.20160 at step 4 to whatever build number you want.

This is not a perfect fix, because while it removes CoPilot, it also stops you receiving security updates and bug fixes.

Switching from Office to LibreOffice

At this point, I'm giving up on Microsoft Office/Word. After trying a few different options, I've switched to LibreOffice.

You can download it here for free: https://www.libreoffice.org/

If you like the look of Word, these tutorials show you how to get that look:

www.howtogeek.com/788591/how-to-make-libreoffice-look-like-microsoft-office/

www.debugpoint.com/libreoffice-like-microsoft-office/

If you've been using Word for a while, chances are you have a significant custom dictionary. You can add it to LibreOffice following these steps.

First, get your dictionary from Microsoft

1. Go to Manage your Microsoft 365 account: account.microsoft.com.

2. Once you're logged in, scroll down to Privacy, click it and go to the Privacy dashboard.

3. Scroll down to Spelling and Text. Click into it and scroll past all the words to download your custom dictionary. It will save it as a CSV file.

4. Open the file you just downloaded and copy the words.

5. Open Notepad and paste in the words. Save it as a text file and give it a meaningful name (I went with FromWord).
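For a long word list, the copy-and-paste in the last two steps can be scripted. The sketch below is a hedged Python example: the layout of Microsoft's CSV export is not described in this post, so the code assumes the words sit in one column (the first by default) and lets you skip a header row if there is one. The function name words_from_csv and its parameters are invented for illustration.

```python
import csv
import io


def words_from_csv(csv_text: str, column: int = 0, skip_header: bool = False) -> list[str]:
    """Pull one word per row out of CSV text, dropping blanks and duplicates.

    Assumption: the export keeps each word in a single column. Check your
    own file and adjust `column` and `skip_header` to match it.
    """
    rows = list(csv.reader(io.StringIO(csv_text)))
    if skip_header:
        rows = rows[1:]
    words = []
    seen = set()
    for row in rows:
        if len(row) <= column:
            continue  # row is too short to contain the word column
        word = row[column].strip()
        if word and word not in seen:
            seen.add(word)
            words.append(word)
    return words


if __name__ == "__main__":
    # Tiny made-up sample standing in for the downloaded file's contents.
    sample = "hobbit\nTARDIS\nhobbit\n"
    print("\n".join(words_from_csv(sample)))
```

To use it on the real download, read the CSV file's text, pass it to words_from_csv, and write the result to a text file with one word per line, which is the same thing the Notepad step produces.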

Next, add it to LibreOffice

1. Open LibreOffice.

2. Go to Tools in the menu bar, then Options. It will open a new window.

3. Find Languages and Locales in the left menu, click it, then click on Writing aids.

4. You'll see User-defined dictionaries. Click New to the right of the box and give it a meaningful name (mine is FromWord).

5. Hit Apply, then Okay, then exit LibreOffice.

6. Open Windows Explorer and go to C:\Users\[YourUserName]\AppData\Roaming\LibreOffice\4\user\wordbook and you will see the new dictionary you created. (If you can't see the AppData folder, you will need to show hidden files by ticking the box in the View menu.)

7. Open it in Notepad by right clicking and choosing 'open with', then pick Notepad from the options.

8. Open the text file you created at step 5 in 'get your dictionary from Microsoft', copy the words and paste them into your new custom dictionary UNDER the dotted line.

9. Save and close.

10. Reopen LibreOffice. Go to Tools, Options, Languages and Locales, Writing aids and make sure the box next to the new dictionary is ticked.

If you use LibreOffice on multiple machines, you'll need to do this for each machine.
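If you are repeating this on several machines, the "paste the words under the dotted line" step can be sketched in Python. This is an illustration under assumptions, not a description of LibreOffice's file format: it assumes the dictionary file is plain text and that the "dotted line" is a line made up only of dashes, and it leaves everything above that line untouched. The function name add_words_below_separator is invented for this example.

```python
def add_words_below_separator(dictionary_text: str, words: list[str]) -> str:
    """Append words after the separator line, skipping ones already present.

    Assumption: the separator is the first line consisting only of '-' characters.
    """
    lines = dictionary_text.splitlines()
    separator_index = None
    for index, line in enumerate(lines):
        stripped = line.strip()
        if stripped and set(stripped) == {"-"}:
            separator_index = index
            break
    if separator_index is None:
        raise ValueError("no separator line found; leaving the dictionary untouched")

    # Drop trailing blank lines so new words follow the existing entries directly.
    while len(lines) > separator_index + 1 and not lines[-1].strip():
        lines.pop()

    seen = set(lines[separator_index + 1:])
    for word in words:
        word = word.strip()
        if word and word not in seen:
            seen.add(word)
            lines.append(word)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Made-up stand-in for a freshly created, empty dictionary file.
    empty_dictionary = "header line\n---\n"
    print(add_words_below_separator(empty_dictionary, ["hobbit", "TARDIS"]), end="")
```

Make a backup copy of the dictionary file before writing the result back, and keep LibreOffice closed while you do it, as in the manual steps.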

Please note: this worked for me. If it doesn't work for you, check you've followed each step correctly, and try restarting your computer. If it still doesn't work, I can't provide tech support (sorry).

#fuck AI#fuck copilot#fuck Microsoft#Word#Microsoft Word#Libre Office#LibreOffice#fanfic#fic#enshittification#AI#copilot#microsoft copilot#writing#yesterday was a very frustrating day

3K notes

Text

AI hasn't improved in 18 months. It's likely that this is it. There is currently no evidence the capabilities of ChatGPT will ever improve. It's time for AI companies to put up or shut up.

I'm just re-iterating this excellent post from Ed Zitron, but it's not left my head since I read it and I want to share it. I'm also taking some talking points from Ed's other posts. So basically:

We keep hearing AI is going to get better and better, but these promises seem to be coming from a mix of companies engaging in wild speculation and lying.

ChatGPT, the industry-leading large language model, has not materially improved in 18 months. For something that claims to be getting exponentially better, it sure is the same shit.

Hallucinations appear to be an inherent aspect of the technology. Since it's based on statistics and AI doesn't know anything, it can never know what is true. How could I possibly trust it to get any real work done if I can't rely on its output? If I have to fact check everything it says I might as well do the work myself.

For "real" AI that does know what is true to exist, it would require us to discover new concepts in psychology, math, and computing, which OpenAI is not working on, and seemingly no other AI companies are either.

OpenAI has seemingly slurped up all the data from the open web already. ChatGPT 5 would take 5x more training data than ChatGPT 4 to train. Where is this data coming from, exactly?

Since improvement appears to have ground to a halt, what if this is it? What if ChatGPT 4 is as good as LLMs can ever be? What use is it?

As Jim Covello, a leading semiconductor analyst at Goldman Sachs, said (on page 10, and that's big finance so you know they only care about money): if tech companies are spending a trillion dollars to build up the infrastructure to support AI, what trillion dollar problem is it meant to solve? AI companies have a unique talent for burning venture capital and it's unclear if OpenAI will be able to survive more than a few years unless everyone suddenly adopts it all at once. (Hey, didn't crypto and the metaverse also require spontaneous mass adoption to make sense?)

There is no problem that current AI is a solution to. Consumer tech is basically solved; normal people don't need more tech than a laptop and a smartphone. Big tech has run out of innovations and is desperately looking for the next thing to sell. It happened with the metaverse and it's happening again.

In summary:

AI hasn't materially improved since the launch of ChatGPT 4, which wasn't that big of an upgrade over 3.

There is currently no technological roadmap for AI to become better than it is. (As Jim Covello said in the Goldman Sachs report, the evolution of smartphones was openly planned years ahead of time.) The current problems are inherent to the current technology and nobody has indicated there is any way to solve them in the pipeline. We have likely reached the limits of what LLMs can do, and they still can't do much.

Don't believe AI companies when they say things are going to improve from where they are now before they provide evidence. It's time for the AI shills to put up, or shut up.

5K notes

Link

In recent years, Google users have developed one very specific complaint about the ubiquitous search engine: They can't find any answers. A simple search for "best pc for gaming" leads to a page dominated by sponsored links rather than helpful advice on which computer to buy. Meanwhile, the actual results are chock-full of low-quality, search-engine-optimized affiliate content designed to generate money for the publisher rather than provide high-quality answers. As a result, users have resorted to work-arounds and hacks to try and find useful information among the ads and low-quality chum. In short, Google's flagship service now sucks.

And Google isn't the only tech giant with a slowly deteriorating core product. Facebook, a website ostensibly for finding and connecting with your friends, constantly floods users' feeds with sponsored (or "recommended") content, and seems to bury the things people want to see under what Facebook decides is relevant. And as journalist John Herrman wrote earlier this year, the "junkification of Amazon" has made it nearly impossible for users to find a high-quality product they want — instead diverting people to ad-riddled result pages filled with low-quality products from sellers who know how to game the system.

All of these miserable online experiences are symptoms of an insidious underlying disease: In Silicon Valley, the user's experience has become subordinate to the company's stock price. Google, Amazon, Meta, and other tech companies have monetized confusion, constantly testing how much they can interfere with and manipulate users. And instead of trying to meaningfully innovate and improve the useful services they provide, these companies have instead chased short-term fads or attempted to totally overhaul their businesses in a desperate attempt to win the favor of Wall Street investors. As a result, our collective online experience is getting worse — it's harder to buy the things you want to buy, more convoluted to search for info

31K notes

Text

"The California state government has passed a landmark law that obligates technology companies to provide parts and manuals for repairing smartphones for seven years after their market release.

Senate Bill 244 passed 65-0 in the Assembly, and 38-0 in the Senate, and made California, the seat of so much of American technological hardware and software, the third state in the union to pass this so-called “right to repair” legislation.

On a more granular level, the bill guarantees consumers’ rights to replacement parts for three years’ time in the case of devices costing between $50 and $99, and seven years in the case of devices costing more than $100, with the bill retroactively affecting devices made and sold in 2021.

Similar laws have been passed in Minnesota and New York, but none with such a long-term period as California.

“Accessible, affordable, widely available repair benefits everyone,” said Kyle Wiens, the CEO of advocacy group iFixit, in a statement. “We’re especially thrilled to see this bill pass in the state where iFixit is headquartered, which also happens to be Big Tech’s backyard. Since Right to Repair can pass here, expect it to be on its way to a backyard near you.” ...

One of the reasons Wiens is cheering this on is because large manufacturers, from John Deere to Apple, have previously lobbied heavily against right-to-repair legislation for two reasons. One, it allows them to corner the repair and maintenance markets, and two, it [allegedly] protects their intellectual property and trade secrets from knock-offs or competition.

However, a byproduct of the difficulty of repairing modern electronics is that most people just throw them away.

...Wiens added in the statement that he believes the California bill is a watershed that will cause a landslide of this legislation to come in the near future."

-via Good News Network, October 16, 2023

#united states#us politics#right to repair#planned obsolescence#enshittification#big tech#iphone#sustainability#ewaste#consumer rights#electronics#good news#hope#california#silicon valley

9K notes

Text

ppl talk about search engines universally getting worse mostly as a personal inconvenience, so it wasnt until just now that it dawned on me that vast amounts of the information stored on the internet becoming increasingly difficult if not impossible to access could have actually catastrophic consequences for like, all of human society

1K notes
