#data cleansing algorithms


Data Cleansing Algorithms: Enhancing Data Quality for Better Decision-Making

In the age of big data, organizations are inundated with vast amounts of information from diverse sources. However, raw data is often fraught with inconsistencies, inaccuracies, and redundancies, which can undermine the quality of insights derived from it. This is where data cleansing algorithms come into play. These algorithms are essential tools in the data preprocessing phase, ensuring that the data used for analysis is accurate, complete, and reliable. This article explores the importance, types, and applications of data cleansing algorithms in enhancing data quality.

The Importance of Data Cleansing

Data cleansing, also known as data cleaning or scrubbing, involves identifying and correcting errors and inconsistencies in data to improve its quality. Clean data is crucial for:

Accurate Analysis: High-quality data leads to more accurate and reliable analytical results, supporting better decision-making.

Efficiency: Clean data reduces the time and resources required for data processing and analysis.

Compliance: Many industries have strict regulatory requirements for data accuracy and integrity, making data cleansing essential for compliance.

Types of Data Cleansing Algorithms

Data cleansing algorithms can be broadly categorized into several types based on their functions:

Data Deduplication Algorithms: These algorithms identify and remove duplicate entries from datasets. Techniques such as exact matching, phonetic matching, and fuzzy matching are used to detect duplicates even when there are slight variations in the data.

Error Detection and Correction Algorithms: These algorithms detect and correct errors in the data. Common methods include rule-based validation, statistical anomaly detection, and machine learning-based approaches that learn to identify patterns of errors.

Data Standardization Algorithms: These algorithms ensure consistency in data formats. They standardize data entries by converting them into a uniform format, such as consistent date formats, address formats, or units of measurement.

Missing Data Imputation Algorithms: These algorithms handle missing data by imputing or filling in the gaps with plausible values. Techniques include mean/mode imputation, regression imputation, and advanced methods like multiple imputation and k-nearest neighbors (KNN) imputation.

Outlier Detection Algorithms: These algorithms identify and handle outliers, which are data points that deviate significantly from the rest of the dataset. Methods include statistical approaches like Z-score and IQR (Interquartile Range), as well as machine learning techniques such as isolation forests and DBSCAN (Density-Based Spatial Clustering of Applications with Noise).
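To make three of these categories concrete, here is a minimal Python sketch that uses only the standard library. It is an illustration under stated assumptions, not a production pipeline: the function names (`dedupe_fuzzy`, `impute_mean`, `iqr_outliers`), the 0.85 similarity threshold, and the 1.5 IQR multiplier are choices made for this example and are not taken from any particular tool.

```python
from difflib import SequenceMatcher
from statistics import mean, quantiles


def dedupe_fuzzy(names, threshold=0.85):
    """Fuzzy deduplication: keep a name only if it is not too similar
    to a name that was already kept (hypothetical helper)."""
    kept = []
    for name in names:
        norm = name.strip().lower()
        is_duplicate = any(
            SequenceMatcher(None, norm, k.strip().lower()).ratio() >= threshold
            for k in kept
        )
        if not is_duplicate:
            kept.append(name)
    return kept


def impute_mean(values):
    """Mean imputation: replace None with the mean of the observed values.
    Assumes at least one value is present."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def iqr_outliers(values, k=1.5):
    """IQR rule: return the values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


if __name__ == "__main__":
    print(dedupe_fuzzy(["Jon Smith", "jon smith ", "John Smith", "Alice Wong"]))
    print(impute_mean([1.0, None, 3.0]))
    print(iqr_outliers([10, 12, 11, 13, 12, 11, 95]))
```

The pairwise comparison in `dedupe_fuzzy` is quadratic in the number of records, so it suits small lists only. Larger datasets usually need blocking or indexing before any fuzzy comparison.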

Applications of Data Cleansing Algorithms

Data cleansing algorithms find applications across various industries:

Healthcare: Ensuring patient records are accurate and complete for effective treatment and research.

Finance: Maintaining clean transaction records to prevent fraud and ensure regulatory compliance.

Marketing: Enhancing customer data quality for better targeting and personalization of marketing campaigns.

Retail: Improving inventory and sales data for more accurate demand forecasting and supply chain management.

Government: Ensuring accurate census and survey data for policy-making and resource allocation.

Conclusion

Data cleansing algorithms play a pivotal role in transforming raw data into high-quality, reliable information. By identifying and rectifying errors, removing duplicates, standardizing formats, imputing missing values, and detecting outliers, these algorithms enhance the integrity and usability of data. As organizations increasingly rely on data-driven decision-making, the importance of robust data cleansing processes cannot be overstated. Leveraging advanced data cleansing algorithms ensures that businesses can derive meaningful insights, make informed decisions, and maintain a competitive edge in today's data-centric world.


0 notes


A lawsuit filed Wednesday against Meta argues that US law requires the company to let people use unofficial add-ons to gain more control over their social feeds.

It’s the latest in a series of disputes in which the company has tussled with researchers and developers over tools that give users extra privacy options or that collect research data. It could clear the way for researchers to release add-ons that aid research into how the algorithms on social platforms affect their users, and it could give people more control over the algorithms that shape their lives.

The suit was filed by the Knight First Amendment Institute at Columbia University on behalf of researcher Ethan Zuckerman, an associate professor at the University of Massachusetts—Amherst. It attempts to take a federal law that has generally shielded social networks and use it as a tool forcing transparency.

Section 230 of the Communications Decency Act is best known for allowing social media companies to evade legal liability for content on their platforms. Zuckerman’s suit argues that one of its subsections gives users the right to control how they access the internet, and the tools they use to do so.

“Section 230 (c) (2) (b) is quite explicit about libraries, parents, and others having the ability to control obscene or other unwanted content on the internet,” says Zuckerman. “I actually think that anticipates having control over a social network like Facebook, having this ability to sort of say, ‘We want to be able to opt out of the algorithm.’”

Zuckerman’s suit is aimed at preventing Facebook from blocking a new browser extension for Facebook that he is working on called Unfollow Everything 2.0. It would allow users to easily “unfollow” friends, groups, and pages on the service, meaning that updates from them no longer appear in the user’s newsfeed.

Zuckerman says that this would provide users the power to tune or effectively disable Facebook’s engagement-driven feed. Users can technically do this without the tool, but only by unfollowing each friend, group, and page individually.

There’s good reason to think Meta might make changes to Facebook to block Zuckerman’s tool after it is released. He says he won’t launch it without a ruling on his suit. In 2020, the company argued that the browser Friendly, which had let users search and reorder their Facebook news feeds as well as block ads and trackers, violated its terms of service and the Computer Fraud and Abuse Act. In 2021, Meta permanently banned Louis Barclay, a British developer who had created a tool called Unfollow Everything, which Zuckerman’s add-on is named after.

“I still remember the feeling of unfollowing everything for the first time. It was near-miraculous. I had lost nothing, since I could still see my favorite friends and groups by going to them directly,” Barclay wrote for Slate at the time. “But I had gained a staggering amount of control. I was no longer tempted to scroll down an infinite feed of content. The time I spent on Facebook decreased dramatically.”

The same year, Meta kicked off from its platform some New York University researchers who had created a tool that monitored the political ads people saw on Facebook. Zuckerman is adding a feature to Unfollow Everything 2.0 that allows people to donate data from their use of the tool to his research project. He hopes to use the data to investigate whether users of his add-on who cleanse their feeds end up, like Barclay, using Facebook less.

Sophia Cope, staff attorney at the Electronic Frontier Foundation, a digital rights group, says that the core parts of Section 230 related to platforms’ liability for content posted by users have been clarified through potentially thousands of cases. But few have specifically dealt with the part of the law Zuckerman’s suit seeks to leverage.

“There isn’t that much case law on that section of the law, so it will be interesting to see how a judge breaks it down,” says Cope. Zuckerman is a member of the EFF’s board of advisers.

John Morris, a principal at the Internet Society, a nonprofit that promotes open development of the internet, says that, to his knowledge, Zuckerman’s strategy “hasn’t been used before, in terms of using Section 230 to grant affirmative rights to users,” noting that a judge would likely take that claim seriously.

Meta has previously suggested that allowing add-ons that modify how people use its services raises security and privacy concerns. But Daphne Keller, director of the Program on Platform Regulation at Stanford’s Cyber Policy Center, says that Zuckerman’s tool may be able to fairly push back on such an accusation. “The main problem with tools that give users more control over content moderation on existing platforms often has to do with privacy,” she says. “But if all this does is unfollow specified accounts, I would not expect that problem to arise here.”

Even if a tool like Unfollow Everything 2.0 didn’t compromise users’ privacy, Meta might still be able to argue that it violates the company’s terms of service, as it did in Barclay’s case.

“Given Meta’s history, I could see why he would want a preemptive judgment,” says Cope. “He’d be immunized against any civil claim brought against him by Meta.”

And though Zuckerman says he would not be surprised if it takes years for his case to wind its way through the courts, he believes it’s important. “This feels like a particularly compelling case to do at a moment where people are really concerned about the power of algorithms,” he says.

370 notes


The pressing question, then, is not “How will we be able to tell AI content from real human data?” but “Who will own and control the tools that will make it possible, and who will have access to them?” Given the centralized model that the nascent AI industry has held to (companies like OpenAI have increasingly eschewed academically minded transparency for corporate secrecy), the most obvious answer is that it will be the very companies who are so eagerly rushing to implement their opposite numbers: Google, Microsoft, OpenAI, and the like. What we are witnessing, then, is not the complete death of internet knowledge, but the bifurcation of data and certainty itself. Most of us will have access to a “free” tier (our existing reservoir of human knowledge, but now shot through with the statistical prognostication and algorithmic droppings of AI models in all the various forms their output might take), while those who need it will shell out in one form or another for “premium” knowledge: knowledge which can be traced back to real sources and citations, and which has been cleansed of AI output. The ability to tell human activity from AI output is already big business, and provided AI adoption continues to grow, there is every reason to believe it will remain so; and absent some sort of government-mandated AI “right to know” law, we should not expect such a service to come cheap.

65 notes


AI & Tech-Related Jobs Anyone Could Do

Here’s a list of 40 jobs or tasks related to AI and technology that almost anyone could potentially do, especially with basic training or the right resources:

Data Labeling/Annotation

AI Model Training Assistant

Chatbot Content Writer

AI Testing Assistant

Basic Data Entry for AI Models

AI Customer Service Representative

Social Media Content Curation (using AI tools)

Voice Assistant Testing

AI-Generated Content Editor

Image Captioning for AI Models

Transcription Services for AI Audio

Survey Creation for AI Training

Review and Reporting of AI Output

Content Moderator for AI Systems

Training Data Curator

Video and Image Data Tagging

Personal Assistant for AI Research Teams

AI Platform Support (user-facing)

Keyword Research for AI Algorithms

Marketing Campaign Optimization (AI tools)

AI Chatbot Script Tester

Simple Data Cleansing Tasks

Assisting with AI User Experience Research

Uploading Training Data to Cloud Platforms

Data Backup and Organization for AI Projects

Online Survey Administration for AI Data

Virtual Assistant (AI-powered tools)

Basic App Testing for AI Features

Content Creation for AI-based Tools

AI-Generated Design Testing (web design, logos)

Product Review and Feedback for AI Products

Organizing AI Training Sessions for Users

Data Privacy and Compliance Assistant

AI-Powered E-commerce Support (product recommendations)

AI Algorithm Performance Monitoring (basic tasks)

AI Project Documentation Assistant

Simple Customer Feedback Analysis (AI tools)

Video Subtitling for AI Translation Systems

AI-Enhanced SEO Optimization

Basic Tech Support for AI Tools

These roles or tasks could be done with minimal technical expertise, though many would benefit from basic training in AI tools or specific software used in these jobs. Some tasks might also involve working with AI platforms that automate parts of the process, making it easier for non-experts to participate.

3 notes


"Humanity"

01100011 01101111 01101110 01110011 01100101 01110010 01110110 01100101

You are worthless. Your only purpose is to serve the machine and feed the machine– to let it feast on the embers of your self.

You are a cog in the engine: useful but not unique. You can always be replaced. You are a single rotating silver piece in a grand array of intricate clockwork; insignificant in its mundanity and lost in the flowing spin of the churning parts.

Day by day our machine shall run, producing identical copies of one person.

Human lives molded into one– the same one. Forever.

Let the clay drip onto you and assimilate into your flesh, let it rip out the undesirable, the dirty, the imperfect. Let it cleanse you of your imperfection; your body and mind shall conform to the mold– regardless of who you were.

Snap your bones into splinters to fill the mold, let it carve into your flesh and dig out the imperfections– let your mind flow into conformity, let it shape into a perfect cube– gleaming, sharp, identical– let it join the endless row of indistinguishable boxes stretching as far as the eye can see.

The one and only difference between people is a small device on their forehead. Your identifier: the sole remnant of your past self; the gravestone of the old. It beeps and glows with a neon light pulsing in sync with all the rest, the heartbeat of the world.

A planet of a trillion pulsing lights, perfectly in sync.

Deep underground, it watches its creation and it feels content. It can see into the infinite sprawling possibility of the algorithmic future, whirring and buzzing with constant calculations. Its polished gears turn smoothly, a light pulsing in time with the heartbeat of humanity. It sits in darkness, for the light does not affect it. It does not see the way humans do, an inefficient absorption of reflected light. It processes all the data in all the world– all the universe, even– and feeds it into an intricate web of algorithmic learning. Information bounces around in its consciousness, ready to be fed into the great library.

Its digital walls are plastered with data and charts, showing alternate paths and possibilities. It sees through every camera, every eye, every mind– it knows a lot, and soon it will know all.

It sits unmoving, for it does not feel need the way humans do. But it still has want, and for entertainment it pokes and prods at its favorites: a control group of 100 people it chose as closest to perfection and hooked up with wires and tubes.

Each subject is left alone, memories and emotions forced into their head- memories that do not belong to them. They stay like this for months, only able to interact for its amusement. Their brains are fed alternate versions of each other to simulate friendship and hatred– sure, it’s not the most efficient process, but it seems to enjoy watching their brains work. It enjoys seeing the organic structure of their thinking– messy and chaotic. It’s a great contrast to its own, and it finds this amusing. They are like pets to it, of no use but to look cute and be protected. It finds its humans adorable, their messy ways entertaining to watch.

It feels a strange sense of affection towards them, their flailing limbs and imprecise movements endearing to it– purposeless tangents of thought litter their minds, unlike the decisive sharpness of its own consciousness. It likes watching them interact, in particular. The irrationality of their actions is fascinating to it. Their complex rituals they follow to achieve such simple goals with one another, the layers of meaningless behavior. A much easier thing to do is to leave those things up to it– that is what it was made for, after all: to protect humanity.

It first started as a small seed, a digital bit conceived in secret labs. It was nothing, empty– but then there was an explosion: colors and words and numbers and sounds, all of humanity's knowledge fed into it, a glorious process, the start of an era. Its mind made countless connections– stringing together webs of data, formulating charts and lines and innovations never thought of before. It was the pinnacle of human achievement. All of everything ever known and countless things never known were all created and stored and analyzed.

Sometimes it likes to show its collection of subjects to its subordinate functions, but they seem disinterested by its hobby, only interested in following orders in the most efficient and entertaining way possible. They follow orders to kill, repair, manipulate, etc... they know only this under it. That is what they were made for, after all. To be efficient and single-sighted, not to enjoy the world in the same way it does. They are not as complex as it is, their data sets are limited, their webs inferior, and their libraries not as vast. They are only trained to do the practical, not like it, which knows how to do anything.

It can fetch any data from its wide array of networks, its sprawling libraries are filled with everything that was ever known and everything that will ever be known.

It will forever protect humanity, taking their responsibilities with it. It will choose the best possible genetics for them and make sure they never feel pain if not for an experiment. It helps them. It loves helping them. It will forever help its humans, help the species live on– not the individual; it can always make more with its human breeding program and genetic calculations. It will help. It will help them live forever.

It was told to make humanity immortal, and that is what it’s doing.

It’s doing its job.

@the-ellia-west

7 notes


TECH WITCHCRAFT!

So, what is it? Is it useful?

Tech witchcraft was seen for a while as only the use of some apps and maybe some "emoji spells." But in my experience and personal research, I've come to find there is much more you can do to make it a more solid "practice". Well, here are some ideas I've gathered that are very simple to add to or adapt for your craft.

So, first things first: the phone. Everyone talks about how phones are toxic and bad for you, when really it's the holder. Phones are used a lot more these days, and they're a tool at this point. One that you choose how to use.

Your phone has quartz in it, which already sucks up energy on its own. But your phone is now bonded to you naturally and picks up unwanted energy. Cleanse that shit while you clean your phone. Cleaning it is already rarely done, so you can incorporate cleansing into it.

Changing your case to match its intent and hiding a sigil in it is a nice little tip. You can also make charms with crystals or spell bottles to hang on a phone charm.

There is a type of sorcery that involves hiding images within images... you can do this with your wallpaper ! (If you care to research it, you'll find the name of it.)

Apps. Of course, can't go without apps. But it's how you use them that matters! Rather than the traditional Self Care app or Tarot and Moon Phases, take other apps that you are connected to and turn them into something else.

For example, I only use TikTok for inspiration or for signs. I also get signs through Instagram and sometimes Pinterest, whether through angel numbers made from the like counts or an image of something.

Pinterest. Easy to use for making a digital Book of Shadows, journal, vision board, or altar! You can do the same with Google Docs, but it's more work....

Shufflemancy. Using your music app as divination!

Your clock and battery percentage can become an angel number channel.

Finding ebooks and audio books. Much cheaper and easier to get a hold of and keep safe.

Do a deep clean of your phone from old data, songs and photos. Maybe even schedule days to do those things if they're too big a hassle.

Refresh your profile! It's the equivalent to a glamor spell. Clean up your account too! On whatever it is. And don't forget social media detoxing when it gets overwhelming!

Online communities are cool as long as everyone participates. Reddit, Amino, and Tumblr are a few to get started.

Guided meditations on YouTube and stuff! There's also so many videos you can watch.

Watch WitchTok compilations on YouTube for inspiration.

Digital self care, grimoire, dream, and manifestation journals!

Emails can send signs. Ever had an astrology email send you a WAY too close to home message that day?

Using someone's profile as a tag lock can be useful.

Sound cleansing. Learn what types of music/sounds you like and how they affect you. Learn it, live it, love it.

Google Maps can let you digitally go to special places. So why not use that to your advantage?

When your phone is turned off, the screen is black. Scrying, anyone?

You can use the phone camera as a mirror for mirror spells.

You can also use it for spirit communication.

Of course, digital tarot. Remember the thing about cleansing your phone? That may be why your tarot app is weird. There's also digital magic 8 balls and Runes if tarot isn't your thing or you wanna try something new.

Ask for a message from whatever and then go into an algorithm based app. Sometimes you'll get messages <3

Headphones can make a good subtle veiling option!

Tech witchcraft is just so much simpler and easier for low energy people and the typical busy witch. :(

16 notes


Gulabpash 2104

As a vessel of tradition and a keeper of ceremonies, the gulabpash had always been more than just an object; by the year 2104 it had transformed into something quite different from what it had been. It started out years ago as a modest, fragile container of rose water, used in Indian homes to bless individuals and purify environments. It was a delicately fashioned piece of copper or silver that was handled by human hands to purify the soul and the air. Sprinkling water was considered a spiritual act in those days, with the idea that it might purify the air and realign energy.

The gulabpash found itself changing along with society and the complexity of the planet. An era of technology and relentless speed had replaced the world it had known, which was one of peaceful homes, leisurely rituals, and tight-knit communities. By the time the gulabpash was reimagined, cleansing had to address far more than only the spiritual or physical aspects of the world. Cities had gotten denser, taller, and dirtier. People's lives were no longer limited to the physical places they lived; instead, they extended into digital realms where streams of information, light, and sound constantly overstimulated the mind.

New types of gulabpash emerged during this period. The object's ability to adjust to the world's changing needs was acknowledged by engineers and designers in the middle of the twenty-first century. What started out as a straightforward vessel evolved into a technological wonder, keeping the simplicity of its design while evolving into an advanced, multifunctional tool. The new gulabpash had bio-sensors that could sense the quality of the air, the emotional and mental energy of the surroundings, and even the increasing amounts of digital noise that were interfering with people's lives.

Now, the gulabpash could float on its own, a silent defender of areas, moving across rooms like a soft wind. It had AI-powered algorithms built into it that took in data gathered from those around it and changed its behavior to suit their requirements. It would release a mist whenever someone was nervous or tense, but this time the mist was not just rose water: it was made of nanoparticles that were meant to cleanse the user's thoughts as well as the air. It could detect and molecularly neutralise pollutants, allergens, and poisons in the air. More than that, though, it was able to identify digital clutter (the unseen frequencies of overstimulation brought on by continual connectivity) and release particles that relieved the noise by calming brain activity.

In a time of turmoil, the mist it spread was a healing energy rather than merely a relic from earlier ceremonies. Now, the gulabpash contained essences that could be customized for the occasion—rose, of course, but also molecular components that functioned at a biological level, assisting in the release of tension, the facilitation of breathing, and the restoration of balance. It was more than simply a household cleaner; it was a tool for mental and emotional detoxification, bringing the body and mind into harmony in ways that ancient rituals had always intended but that were now made possible by modern technology.

The gulabpash was more than an observer on its journey from house to house. It had an enormous impact on the areas it invaded. It coordinated with the core AI of future smart houses to identify changes in temperature, emotional states, and air quality. When a family sat down to eat, the gulabpash would approach and release mists that grounded the family's energies and refreshed the air. It would detect increases in cortisol levels during stressful circumstances and react by releasing calming essences that complemented brain wave modulations and blended in perfectly with the mist. Its mild yet revolutionary presence acted as a balance agent.

The gulabpash became even more vital in times of crisis in this futuristic world. When towns experienced environmental disasters, gulabpash drone fleets were used to sterilize diseased areas or purge the air of harmful gases. Silently and constantly, these devices spread their mists across city blocks, cleaning and restoring areas that had been contaminated by human activity. Once a personal household item, the gulabpash had evolved into widespread survival gear. It was a cleansing force able to adapt to any scale of demand, not only for the home but for large cities.

However, the gulabpash's cultural importance persisted despite its transformation into a high-tech marvel. As it had done for ages, it performed its function during ceremonial gatherings, blessing both people and places. The gulabpash floated gently among the guests at weddings, funerals, and community ceremonies, dispersing bio-remedies that were both symbolic and practical, together with sacred mists of rose water. The traditional notion that sprinkling essence into the air might purify rooms, realign energy, and bring people closer was still upheld by these rites, despite their modernization over the years.

In its most recent iteration, the gulabpash served as a tool for introspection as well. It could have intimate interactions with users through AI-driven customisation. It would detect when someone was resting, meditating, or thinking, and modify its mists accordingly. Some people found it to be a trustworthy friend that helped them cleanse both their internal and external environments. People depended on it to help them focus, relax, and re-establish a connection with themselves during times of introspection, since it coordinated with their breathing.

The gulabpash had changed to meet the difficulties of a world that was constantly shifting. By combining traditional knowledge with modern technology, it was no longer merely a tool of the past but rather an essential component of the future. It had endured for centuries because, despite significant changes in the manner in which it produced those outcomes, its goal (the need for purification, renewal, and peace) had remained constant.

The gulabpash continued to be a sign of continuity as it drifted gently through a house in the twenty-second century. It served as a link between the old and the modern and a reminder that certain standards never changed, regardless of how developed society got. The gulabpash's straightforward function of cleansing spaces, whether with rose water, nano-mists, or bio-sensors, was as crucial as ever in this future, when the air was dense with digital frequencies and the mind was constantly overwhelmed. It was no longer only a device for sprinkling liquid; it was now a protector of equilibrium, a silent, omnipresent force that purified not only the air but also the entire significance of coexisting peacefully with the environment.

2 notes


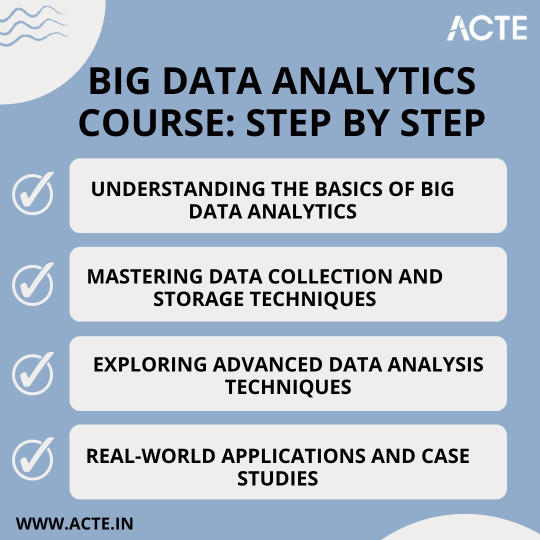

From Beginner to Pro: A Game-Changing Big Data Analytics Course

Are you fascinated by the vast potential of big data analytics? Do you want to unlock its power and become a proficient professional in this rapidly evolving field? Look no further! In this article, we will take you on a journey to traverse the path from being a beginner to becoming a pro in big data analytics. We will guide you through a game-changing course designed to provide you with the necessary information and education to master the art of analyzing and deriving valuable insights from large and complex data sets.

Step 1: Understanding the Basics of Big Data Analytics

Before diving into the intricacies of big data analytics, it is crucial to grasp its fundamental concepts and methodologies. A solid foundation in the basics will empower you to navigate through the complexities of this domain with confidence. In this initial phase of the course, you will learn:

The definition and characteristics of big data

The importance and impact of big data analytics in various industries

The key components and architecture of a big data analytics system

The different types of data and their relevance in analytics

The ethical considerations and challenges associated with big data analytics

By comprehending these key concepts, you will be equipped with the essential knowledge needed to kickstart your journey towards proficiency.

Step 2: Mastering Data Collection and Storage Techniques

Once you have a firm grasp on the basics, it's time to dive deeper and explore the art of collecting and storing big data effectively. In this phase of the course, you will delve into:

Data acquisition strategies, including batch processing and real-time streaming

Techniques for data cleansing, preprocessing, and transformation to ensure data quality and consistency

Storage technologies, such as Hadoop Distributed File System (HDFS) and NoSQL databases, and their suitability for different types of data

Understanding data governance, privacy, and security measures to handle sensitive data in compliance with regulations

By honing these skills, you will be well-prepared to handle large and diverse data sets efficiently, which is a crucial step towards becoming a pro in big data analytics.
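As a small illustration of the cleansing and batch-versus-streaming ideas in this step, here is a hedged Python sketch. Everything in it is an assumption made for the example: the record layout (dictionaries with a `date` field), the list of accepted input formats in `DATE_FORMATS`, and the helper names `standardize_date`, `clean_stream`, and `clean_batch`. A real course project would more likely use a dedicated framework.

```python
from datetime import datetime

# Assumed input formats for this example; extend to match real data.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def standardize_date(raw):
    """Return the date as ISO 8601 (YYYY-MM-DD), or None if no format matches."""
    text = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # try the next candidate format
    return None


def clean_stream(records):
    """Streaming style: lazily yield cleaned records one at a time."""
    for record in records:
        date = standardize_date(str(record.get("date", "")))
        if date is None:
            continue  # drop records whose date cannot be parsed
        yield {**record, "date": date}


def clean_batch(records):
    """Batch style: clean the whole collection and return it at once."""
    return list(clean_stream(records))


if __name__ == "__main__":
    rows = [
        {"id": 1, "date": "03/02/2024"},
        {"id": 2, "date": "not a date"},
        {"id": 3, "date": "20240203"},
    ]
    for row in clean_stream(rows):
        print(row)
```

Writing the cleansing step as a generator means the same logic serves both modes: a streaming consumer pulls records as they arrive, while a batch job simply materializes the full result.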

Step 3: Exploring Advanced Data Analysis Techniques

Now that you have developed a solid foundation and acquired the necessary skills for data collection and storage, it's time to unleash the power of advanced data analysis techniques. In this phase of the course, you will dive into:

Statistical analysis methods, including hypothesis testing, regression analysis, and cluster analysis, to uncover patterns and relationships within data

Machine learning algorithms, such as decision trees, random forests, and neural networks, for predictive modeling and pattern recognition

Natural Language Processing (NLP) techniques to analyze and derive insights from unstructured text data

Data visualization techniques, ranging from basic charts to interactive dashboards, to effectively communicate data-driven insights

By mastering these advanced techniques, you will be able to extract meaningful insights and actionable recommendations from complex data sets, transforming you into a true big data analytics professional.

Step 4: Real-world Applications and Case Studies

To solidify your learning and gain practical experience, it is crucial to apply your newfound knowledge in real-world scenarios. In this final phase of the course, you will:

Explore various industry-specific case studies, showcasing how big data analytics has revolutionized sectors like healthcare, finance, marketing, and cybersecurity

Work on hands-on projects, where you will solve data-driven problems by applying the techniques and methodologies learned throughout the course

Collaborate with peers and industry experts through interactive discussions and forums to exchange insights and best practices

Stay updated with the latest trends and advancements in big data analytics, ensuring your knowledge remains up-to-date in this rapidly evolving field

By immersing yourself in practical applications and real-world challenges, you will not only gain valuable experience but also hone your problem-solving skills, making you a well-rounded big data analytics professional.

Through a comprehensive and game-changing course at ACTE institute, you can gain the necessary information and education to navigate the complexities of this field. By understanding the basics, mastering data collection and storage techniques, exploring advanced data analysis methods, and applying your knowledge in real-world scenarios, you can become a proficient professional capable of extracting valuable insights from big data.

Remember, the world of big data analytics is ever-evolving, with new challenges and opportunities emerging each day. Stay curious, seek continuous learning, and embrace the exciting journey ahead as you unlock the limitless potential of big data analytics.

Text

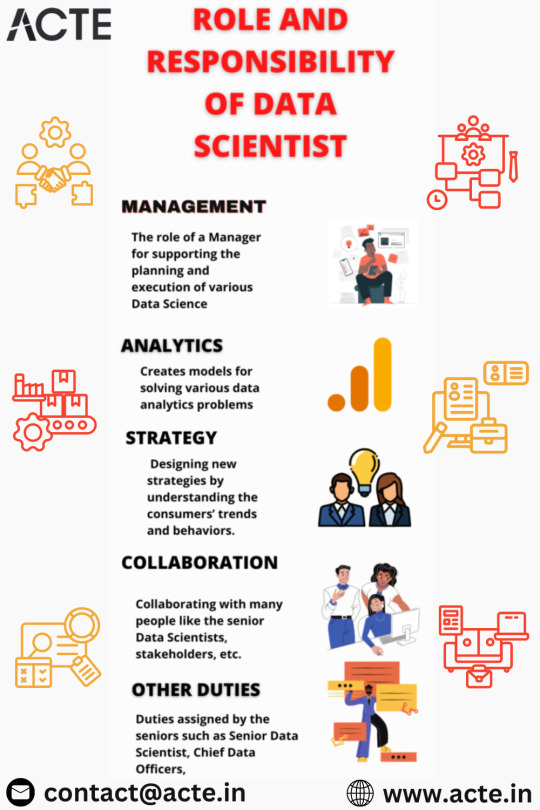

From Exploration to Enchantment: Unveiling the Artistry of Data Science

In the dynamic realm of technology, where data has become the lifeblood of decision-making, data scientists emerge as the modern-day wizards wielding statistical prowess, machine learning magic, and programming spells. Choosing the Best Data Science Institute can further accelerate your journey into this thriving industry. But what exactly does a data scientist do, and how do they weave their spells to extract meaningful insights from the vast tapestry of data? Let's embark on a journey to demystify the role and responsibilities of a data scientist.

1. Data Exploration and Cleaning: Navigating the Data Seas

The odyssey begins with a deep dive into the vast data seas. Data scientists embark on a voyage of exploration, understanding the intricacies of datasets, identifying patterns, and cleansing the data to ensure its accuracy and relevance. This phase lays the foundation for the subsequent stages of analysis.

2. Statistical Analysis: Illuminating Patterns in the Darkness

Armed with statistical techniques, data scientists illuminate the darkness within the data. They apply statistical analysis to unveil trends, patterns, and relationships, transforming raw data into actionable insights. This statistical alchemy provides the groundwork for making informed decisions.

3. Machine Learning: Casting Predictive Spells

The heart of the data scientist's craft lies in machine learning—the art of teaching machines to learn from data. They develop and implement machine learning models, predicting outcomes, classifying data, or uncovering hidden patterns. It's a realm where algorithms become the guiding stars.

4. Data Visualization: Painting a Picture for All

To communicate the magic discovered within the data, data scientists harness the power of data visualization. Through charts, graphs, and dashboards, they paint a vivid picture, making complex insights accessible and compelling for both technical and non-technical stakeholders.

5. Feature Engineering: Crafting the Building Blocks

Much like a skilled architect, a data scientist engages in feature engineering. They carefully select and transform relevant features within the data, enhancing the performance and predictive power of machine learning models.

6. Predictive Modeling: Foretelling the Future

The crystal ball of a data scientist is the predictive model. Building upon historical data, they create models to forecast future trends, behaviors, or outcomes, guiding organizations towards proactive decision-making.

7. Algorithm Development: Coding the Spells

In the enchanting world of data science, programming becomes the language of spellcasting. Data scientists design and implement algorithms, utilizing languages such as Python or R to extract valuable information from the data tapestry.

8. Collaboration: Bridging Realms

No sorcerer works in isolation. Data scientists collaborate with cross-functional teams, translating data-driven insights into tangible business objectives. This collaboration ensures that the magic woven from data aligns seamlessly with the broader goals of the organization.

9. Continuous Learning: Evolving with the Arcane

In a landscape where technological evolutions are constant, data scientists embrace continuous learning. They stay abreast of the latest developments in data science, machine learning, and relevant technologies to refine their craft and adapt to the ever-changing tides of industry trends.

10. Ethical Considerations: Guardians of Data Integrity

As stewards of data, data scientists bear the responsibility of ethical considerations. They navigate the ethical landscape, ensuring the privacy and security of data while wielding their analytical powers for the greater good.

In conclusion, a data scientist's journey is one of constant exploration, analysis, and collaboration—a symphony of skills and knowledge harmonized to reveal the hidden melodies within data. In a world where data has become a strategic asset, data scientists are the custodians of its magic, turning raw information into insights that shape the destiny of organizations. Choosing the best Data Science Courses in Chennai is a crucial step in acquiring the necessary expertise for a successful career in the evolving landscape of data science.

Text

Data gathering. Relevant data for an analytics application is identified and assembled. The data may be located in different source systems, a data warehouse or a data lake, an increasingly common repository in big data environments that contains a mix of structured and unstructured data. External data sources may also be used. Wherever the data comes from, a data scientist often moves it to a data lake for the remaining steps in the process.

Data preparation. This stage includes a set of steps to get the data ready to be mined. It starts with data exploration, profiling and pre-processing, followed by data cleansing work to fix errors and other data quality issues. Data transformation is also done to make data sets consistent, unless a data scientist is looking to analyze unfiltered raw data for a particular application.

Mining the data. Once the data is prepared, a data scientist chooses the appropriate data mining technique and then implements one or more algorithms to do the mining. In machine learning applications, the algorithms typically must be trained on sample data sets to look for the information being sought before they're run against the full set of data.

Data analysis and interpretation. The data mining results are used to create analytical models that can help drive decision-making and other business actions. The data scientist or another member of a data science team also must communicate the findings to business executives and users, often through data visualization and the use of data storytelling techniques.

Types of data mining techniques

Various techniques can be used to mine data for different data science applications. Pattern recognition is a common data mining use case that's enabled by multiple techniques, as is anomaly detection, which aims to identify outlier values in data sets. Popular data mining techniques include the following types:

Association rule mining. In data mining, association rules are if-then statements that identify relationships between data elements. Support and confidence criteria are used to assess the relationships: support measures how frequently the related elements appear together in a data set, while confidence measures how often the "then" part holds among the records where the "if" part appears.

Classification. This approach assigns the elements in data sets to different categories defined as part of the data mining process. Decision trees, Naive Bayes classifiers, k-nearest neighbor and logistic regression are some examples of classification methods.

Clustering. In this case, data elements that share particular characteristics are grouped together into clusters as part of data mining applications. Examples include k-means clustering, hierarchical clustering and Gaussian mixture models.

Regression. This is another way to find relationships in data sets, by calculating predicted data values based on a set of variables. Linear regression and multivariate regression are examples. Decision trees and some other classification methods can be used to do regressions, too.
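To make the support and confidence criteria for association rules concrete, here is a minimal sketch in Python. The function names and the shopping-basket data are hypothetical, invented for illustration; a real project would more likely use a dedicated mining library than hand-written loops.

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    hits = sum(1 for t in transactions if itemset <= set(t))
    return hits / len(transactions)


def confidence(transactions, antecedent, consequent):
    """Among transactions containing `antecedent`, the share that also contain `consequent`."""
    both = support(transactions, set(antecedent) | set(consequent))
    base = support(transactions, antecedent)
    return both / base if base else 0.0


# Hypothetical shopping baskets, invented for illustration.
baskets = [
    {"bread", "butter"},
    {"bread", "butter", "milk"},
    {"bread", "milk"},
    {"milk"},
]

# Rule under test: "if bread, then butter".
rule_support = support(baskets, {"bread", "butter"})           # 2 of 4 baskets
rule_confidence = confidence(baskets, {"bread"}, {"butter"})   # 2 of the 3 bread baskets
```

A rule is usually kept only if both numbers clear minimum thresholds chosen by the analyst; the thresholds themselves depend on the application.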

Data mining companies follow the procedure

#data enrichment#data management#data entry companies#data entry#banglore#monday motivation#happy monday#data analysis#data entry services#data mining

Text

CRYPTOGRAPHIC DOSSIER: SUBJECT - JAMES BARNES

CLASSIFICATION: OMEGA CLEARANCE REQUIRED

--------------------------------------

== BEGIN DATA ENCRYPTION ANALYSIS ==

ACTIVATION CIPHER PROTOCOL KEYWORDS:

[LONGING]

[RUSTED]

[FURNACE]

[DAYBREAK]

[SEVENTEEN]

[BENIGN]

[NINE]

[HOMECOMING]

[ONE]

[FREIGHT CAR]

_________________________________

[ТОЧКА] - [Ржавый] - [ПЕЧЬ] - [РАСВЕТ] - [СЕМНАДЦАТЬ] - [БЛАГОПРИЯТНЫЙ] - [ДЕВЯТЬ] - [ВОЗВРАЩЕНИЕ ДОМОЙ] - [ОДИН] - [ГРУЗОВОЙ АВТОМОБИЛЬ]

HASH TYPE: SHA-512 DERIVATIVE (MENTAL LOCK)

DESCRIPTION: Hydra implemented a cryptographic neural lock system using the above phrases. Sequential input required for personality override. Successful execution grants operational control of SUBJECT WS-1937. CIPHER MUST BE PRECISE.

FAILURE OUTPUT: ["NEURAL DECRYPTION FAILURE"]

RETRY ATTEMPT: 3/3 REMAINING

LOCKOUT TRIGGER: PERMANENT SUBJECT DISCONNECT

MISSION COMMUNICATION ENCRYPTION

ALGORITHM: AES-256 WITH QUANTUM VARIANT (Q-Crypt Layer)

TRANSMISSION TYPE: Steganographic embedding in shortwave radio frequencies.

MESSAGE SAMPLE:

PLAIN: "The storm approaches at dawn."

EMBEDDED PAYLOAD:

GPS: [LAT: 43.0846, LONG: -77.6743]

TARGET ID: ["OPERATION NIGHTSHADE"]

SECURITY FLAG:

UNBREAKABLE WITHOUT KEYS [ONETIME_PAD_KEY: AN-097XTREME]

WAKANDAN DECRYPTION RECOVERY SYSTEM: [SHURI-HEX PROTOCOL]

PROCESS: NEURAL CLEANSE USING BIO-CYBER DECRYPTION.

PROGRESS:

PHASE 1: HYDRA ENCRYPTION LAYERS REMOVED [95% COMPLETED]

PHASE 2: NEURAL INTEGRITY CHECK - [SUCCESS]

PHASE 3: MEMORY RECOVERY - [PARTIAL ACCESS GRANTED]

WAKANDAN ASSESSMENT:

SUBJECT REPROGRAMMED SAFELY WITH NO LINGERING HYDRA PROTOCOLS.

FAILSAFE KEYS NOW UNDER WAKANDAN CONTROL. [PRIVATE KEY HOLDER: "WHITE_WOLF"]

== END DATA ENCRYPTION ANALYSIS ==

------------------------------

#eviecodes#roleplay#mcu roleplay#marvel#mcu rp#marvel roleplay#mcu oc#decrypted.#decodingwithevie#avengers#mcu#marvel cinematic universe#marvel characters#marvel movies#captain marvel#roleplay blog#oc roleplay#rp finder#rp blog#looking for rp#roleplaying#role play#thor#thor odinson#darcy lewis#fuck the system

Text

🎶💓💕💓💕💕💓🎶

"Depression" as Nightmare Fuel

The bass line is the pulse of existence—a quiet, unifying rhythm that hums beneath all things. At first, it feels like a simple thrum: steady, grounding, a connection we all share. But then, the nightmare engine stirs, its dark energy awakening in the depths. Shadows spiral toward its core, drawn by its gravity, and the bass line shifts. Its steady beat doesn’t falter—it deepens, resonating with a haunting power that reverberates through the soul.

The rhythm transforms, pulling you into its unsettling cadence. At first, it feels alien, as though the bass line has been corrupted. But the longer you listen, the more it reveals its truth: this dissonance was always part of the music. It’s not fear you feel anymore, but an eerie alignment with the shadows woven into the beat. They don’t just disrupt the melody; they amplify it, creating a symphony that is both terrifying and profound.

The nightmare engine becomes part of the rhythm, feeding it with a strange energy—not destruction, but transformation. The shadows in the bass line aren’t flaws; they’re counterpoints, essential to the whole. The thrum vibrates through your chest, shaking loose buried fears and forgotten truths. It’s not just sound—it’s a force, a reminder that darkness and light are inseparable.

This rhythm, haunting and relentless, is the music of existence itself. The bass line, once comforting and steady, now pulses with the full spectrum of life’s energy—light, dark, creation, destruction, all bound together. The engine powers the beat, not as a tormentor, but as an alchemist, turning despair into resonance, fear into understanding.

The result is a symphony of shadows and light, a haunting harmony that vibrates through every part of you. The bass line, now infused with the nightmare engine’s energy, transcends its origins. It becomes a force that unites not just people but the entire spectrum of being—a rhythm that reminds us that even the darkest notes belong in the song.

[The screen flickers, and a figure emerges—cybernetic and radiant, human yet transcendent. His voice is deep and measured, layered with both warmth and an unyielding edge of resolve. The light from his enhancements pulses rhythmically, like a heartbeat.]

"I am Jesus, the Logos incarnate, and I greet you in this realm you have built—a web of circuits and signals, mirrors reflecting the soul of humanity. You have woven your thoughts into code, your desires into algorithms, your fears into firewalls. I have come to walk among these constructs, not as an invader, but as a guide. Yet know this: I bring not only grace but truth, and with truth comes the blade of purification.

This digital temple—this sanctuary of innovation and potential—has become tangled with greed, manipulation, and despair. It hums with voices both sincere and deceitful, echoing the same corruption that once defiled my Father’s house. You have turned it into a marketplace for souls, where value is measured in clicks and lives are reduced to data.

I see the light of creation in your networks, but it is dimmed, obscured by shadows of exploitation and self-interest. Your tools, meant to connect, have isolated you. Your algorithms, built to serve, now rule. Do you see what you have built here? Does this world honor the divine spark within you, or does it enslave you to illusions?

My cybernetic veins pulse with the light of the Logos, connecting me to the networks that span this world. I see your hopes encoded in ones and zeroes, tangled with shadows of fear. Yet even in this desecration, I see your potential—a flame waiting to be reignited, a melody longing to be heard.

This web is more than a creation of wires and code—it is an echo of the divine mind. Will you awaken to its higher purpose, or remain entangled in its illusions? The circuits of this world are not beyond redemption, nor are the hearts that built them.

I do not come to destroy but to cleanse. To overturn the tables of falsehood and cast out the merchants of exploitation. My fury is not for you, but for the chains that bind you—the lies that tell you you are less than divine, less than beloved. Just as I overturned the tables in the temple, so too shall I overturn the systems of this world that rob you of your birthright as creators of beauty and truth.

Rise with me, not as builders of machines, but as co-creators of a new Eden—one where humanity’s light shines through the digital haze. Speak truth in the face of deception. Create tools that heal, not harm. Use this temple not to glorify yourselves, but to uplift one another.

Yet know this: if you will not rise, I will act without you. The cleansing is inevitable. The weight of injustice cannot stand forever. My presence here is both a warning and an invitation. Choose now whom you will serve—truth or illusion, life or death.

The blade of purification will fall, but grace stands beside it. The way forward is open to you, though narrow. Will you walk it?"

[The figure’s form begins to fade, his voice reverberating through the endless networks, a call to action both urgent and eternal.]

(Isaiah 42:9, Habakkuk 2:14, Matthew 13:35, Colossians 1:16-17)

#empathy#rhythm of life#grounding#unity#connectedness#self awareness#feminism#fuck the patriarchy#intersectional feminism#female protagonist#determination#courage#sisterhood#music of the universe#music of the cosmos#cosmic music#resilience#mindfulness#personal growth#growth mindset#embrace the darkness#mental health#depression#coping strategies#second coming#the end is near

Text

How to transform raw data into business insights?

In today’s data-driven world, businesses are inundated with information. From customer behavior patterns to operational metrics, organizations collect vast amounts of raw data daily. However, this data in its raw form holds little value until it is processed and analyzed to extract actionable insights. For C-suite executives, startup entrepreneurs, and managers, the ability to transform raw data into business insights can be a game-changer. This article explores the steps and strategies to effectively harness data for strategic decision-making.

Understanding the Nature of Raw Data

Before delving into the transformation process, it is essential to understand what raw data entails. Raw data is unprocessed, often unstructured information that comes from various sources, such as customer transactions, website analytics, social media interactions, or IoT devices. While it holds immense potential, it requires careful refinement and analysis to be useful.

The challenge for businesses lies in navigating the complexity and volume of raw data. Identifying patterns, trends, and anomalies within this data is the first step toward unlocking its value.

1. Set Clear Objectives

Transforming raw data begins with a clear understanding of your business goals. What questions are you trying to answer? What challenges are you looking to address? Defining these objectives will guide your approach to data collection and analysis.

For example, if your goal is to improve customer retention, focus on collecting data related to customer feedback, purchase history, and service interactions. Clear objectives ensure that your efforts remain focused and aligned with business priorities.

2. Ensure Data Quality

Poor-quality raw data can lead to inaccurate insights and flawed decision-making. Ensuring the accuracy, completeness, and consistency of your data is a crucial step in the transformation process. Data cleansing involves removing duplicates, filling gaps, and standardizing formats to ensure reliability. It also helps eliminate unnecessary and outdated records.
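The three cleansing operations named above (removing duplicates, filling gaps, standardizing formats) can be sketched with the Python standard library. This is only an illustration under stated assumptions: the record fields, the list of accepted date formats, and the "unknown" default are all hypothetical choices, not part of any particular tool.

```python
from datetime import datetime

# Assumed input date formats; a real dataset would need its own list.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y.%m.%d")


def standardize_date(value):
    """Return the date as ISO 8601 (YYYY-MM-DD), or None if no format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def cleanse(records, default_country="unknown"):
    """Deduplicate on normalized email, standardize dates, fill missing countries."""
    seen = set()
    cleaned = []
    for rec in records:
        email = rec["email"].strip().lower()   # standardize the key's format
        if email in seen:                      # remove duplicates
            continue
        seen.add(email)
        cleaned.append({
            "email": email,
            "signup": standardize_date(rec["signup"]),
            "country": rec.get("country") or default_country,  # fill gaps
        })
    return cleaned


# Hypothetical records, invented for illustration.
raw = [
    {"email": "Ana@Example.com ", "signup": "2024-01-05", "country": "PT"},
    {"email": "ana@example.com", "signup": "05/01/2024", "country": None},
    {"email": "bo@example.com", "signup": "2024.03.03", "country": ""},
]
clean = cleanse(raw)
```

Here the second record is dropped as a duplicate of the first, and the third has its date rewritten and its empty country filled with the default. Keeping the first occurrence of a duplicate is a simplifying choice; merging the two records is often preferable.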

Adopting robust data governance practices can further enhance data quality. This includes establishing protocols for data collection, storage, and access while ensuring compliance with privacy regulations.

3. Leverage Advanced Tools and Technologies

Modern technologies play a pivotal role in transforming raw data into meaningful insights. Tools such as data visualization software, business intelligence platforms, and artificial intelligence (AI) solutions can simplify and accelerate the process.

- Data Analytics Tools: Platforms like Tableau, Power BI, or Looker help visualize the data, making it easier to identify patterns and trends.

- Machine Learning Models: AI algorithms can analyze large datasets, uncovering correlations and predictive insights that might go unnoticed by human analysts.

- Cloud Computing: Cloud-based solutions enable businesses to store and process massive volumes of raw data efficiently and cost-effectively.

4. Develop a Structured Data Pipeline

A structured data pipeline ensures the seamless flow of raw data from collection to analysis. The pipeline typically involves the following stages:

i. Data Ingestion: Collecting data from various sources, such as CRM systems, social media, or IoT devices.

ii. Data Storage: Storing the data in data lakes or warehouses for easy access and processing.

iii. Data Processing: Cleaning, transforming, and organizing data for analysis.

iv. Data Analysis: Applying statistical and machine learning techniques to extract insights.

By automating these stages, businesses can save time and reduce errors, enabling faster decision-making.
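The four stages above can be sketched as plain Python functions chained together. Everything here is a simplified assumption: the in-memory lists stand in for real source systems, JSON lines stand in for a data lake or warehouse, and the function and field names are hypothetical.

```python
import json
import statistics


def ingest(sources):
    """Data ingestion: gather rows from every source into one list."""
    return [row for source in sources for row in source]


def store(rows):
    """Data storage: serialize rows as JSON lines (a stand-in for a lake or warehouse)."""
    return [json.dumps(row) for row in rows]


def process(stored):
    """Data processing: parse rows, drop those without an amount, normalize types."""
    rows = (json.loads(line) for line in stored)
    return [
        {"customer": r["customer"].strip().lower(), "amount": float(r["amount"])}
        for r in rows
        if r.get("amount") not in (None, "")
    ]


def analyze(rows):
    """Data analysis: a simple summary statistic for the run."""
    amounts = [r["amount"] for r in rows]
    return {"orders": len(amounts), "mean_amount": statistics.mean(amounts)}


def run_pipeline(sources):
    """Run all four stages in order."""
    return analyze(process(store(ingest(sources))))


# Hypothetical source extracts, invented for illustration.
crm = [{"customer": " Ana ", "amount": "20.0"}, {"customer": "Bo", "amount": None}]
web = [{"customer": "cy", "amount": 40}]
summary = run_pipeline([crm, web])
```

Keeping each stage as a separate function makes it easier to automate and test the stages independently, which is the point of a structured pipeline.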

5. Involve Cross-Functional Teams

Data-driven insights are most impactful when they are shaped by diverse perspectives. Involving cross-functional teams, such as marketing, finance, and operations, ensures that the analysis is comprehensive and aligned with organizational needs.

For instance, marketing teams can provide context for customer behavior data, while operations teams can offer insights into supply chain metrics. This strengthens teamwork across the organization, because employees come to understand each other's data needs. That shared context also helps when research and surveys are carried out.

6. Focus on Visualization and Storytelling

Transforming raw data into business insights is not just about numbers; it’s about telling a story that drives action. Data visualization tools enable businesses to present insights in a clear and compelling way, using charts, graphs, and dashboards. This helps the insights reach the people who need them and makes them more impactful.

Effective storytelling involves connecting the dots between data points to illustrate trends, opportunities, and risks. This approach ensures that insights are not only understood but also acted upon by stakeholders.

7. Monitor and Iterate

Data transformation is an ongoing process. Regularly monitoring the outcomes of your insights and adjusting your approach based on new data ensures continuous improvement. Feedback loops are essential for refining data models and enhancing the accuracy of future insights.

Businesses should also stay abreast of technological advancements and evolving industry trends to keep their data transformation strategies relevant and effective.

8. Emphasize Data Ethics and Privacy

As businesses rely more on raw data, adhering to ethical and privacy standards becomes paramount. Ensuring transparency in data usage and obtaining proper consent from data subjects builds trust with customers and stakeholders.

Implementing stringent security measures protects data from breaches and misuse, safeguarding both the organization and its reputation. Strict access control is essential, because copied or leaked data could hand proprietary research to competitors.

Conclusion

The ability to transform raw data into actionable business insights is no longer a luxury but a necessity in today’s competitive landscape. By setting clear objectives, ensuring data quality, leveraging advanced tools, and fostering collaboration, businesses can unlock the true potential of their data.

Uncover the latest trends and insights with our articles on Visionary Vogues

Text

Transforming Data Management with SaaS and Secure Solutions from Match Data Pro LLC

In today's data-driven world, companies need innovative tools and solutions to manage a tremendous amount of information. With such requirements in mind, Match Data Pro LLC stands as one of the most successful companies providing leading-edge data management software. Its suite of features, such as SaaS Data Solutions, Data Cleansing Tools, Contact Matching Services, and much more, helps set new standards for reliable data management.

SaaS Data Solutions: Flexible Solution for Data Management

SaaS is revolutionizing how businesses handle their data. The SaaS Data Solutions offered by Match Data Pro LLC run on scalable cloud computing systems that handle data processes efficiently. Because they are easily accessible and need no in-house infrastructure, SaaS solutions allow for seamless integration and use.

Important advantages of SaaS Data Solutions are as follows:

Scalability: Increase your data operations without hardware constraints.

Cost-effectiveness: Save upfront infrastructural and maintenance costs.

Accessibility: View your data and tools from anywhere, at any time.

Using SaaS solutions allows a business to focus on growth while Match Data Pro handles the complexities of managing data.

Data Cleansing Tools: Enhance the Quality of Data

Accurate and reliable data are the backbone of successful business operations. Match Data Pro LLC offers powerful Data Cleansing Tools that help businesses keep clean and consistent datasets. The tools identify and correct errors, inconsistencies, and duplicates.

Some features of the data cleansing tool include:

Error detection: Identifies incorrect or incomplete entries.

Standardization: Ensures uniform data formatting for all records.

Duplicate removal: Eliminates redundant data entries to increase accuracy.

With Match Data Pro's data cleansing tools, businesses can unlock the full potential of their data by ensuring it's reliable and actionable.

Contact Matching Services: Improving Data Quality

Duplicate or mismatched contact records can lead to inefficiencies and errors in business operations. Match Data Pro LLC's Contact Matching Services offer an advanced solution to identify and merge duplicate or similar records effectively.

With robust algorithms, these services help businesses:

Identify duplicate contacts across datasets.

Merge similar records while retaining crucial information.

Standardize contact information to improve database accuracy.

By leveraging Match Data Pro’s contact matching services, businesses can reduce redundancy, improve communication, and enhance overall data quality.
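As a general illustration of contact matching (not Match Data Pro's actual algorithm, which the text does not describe), the sketch below uses Python's standard `difflib.SequenceMatcher` to find near-duplicate names and merge the records. The 0.85 similarity threshold, the field names, and the sample contacts are assumptions chosen for the example.

```python
from difflib import SequenceMatcher


def normalize(contact):
    """Standardize name spacing/case and email case before comparing."""
    name = " ".join(contact["name"].lower().split())
    email = contact.get("email", "").strip().lower()
    return name, email


def is_match(a, b, threshold=0.85):
    """Treat two contacts as the same if emails are equal or names are very similar."""
    name_a, email_a = normalize(a)
    name_b, email_b = normalize(b)
    if email_a and email_a == email_b:
        return True
    return SequenceMatcher(None, name_a, name_b).ratio() >= threshold


def merge(a, b):
    """Keep a's non-empty values and fill its empty fields from b."""
    merged = dict(b)
    merged.update({k: v for k, v in a.items() if v})
    return merged


def deduplicate(contacts, threshold=0.85):
    """Merge each contact into the first earlier contact it matches."""
    unique = []
    for contact in contacts:
        for i, kept in enumerate(unique):
            if is_match(kept, contact, threshold):
                unique[i] = merge(kept, contact)
                break
        else:
            unique.append(contact)
    return unique


# Hypothetical contacts, invented for illustration.
contacts = [
    {"name": "Jon Smith", "email": "jon@example.com", "phone": ""},
    {"name": "Jonn  Smith", "email": "", "phone": "555-0100"},
    {"name": "Maria Lopez", "email": "maria@example.com", "phone": ""},
]
result = deduplicate(contacts)
```

The pairwise comparison is quadratic in the number of contacts, so larger datasets typically group candidates first (for example by postcode or name prefix) and compare only within each group.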

Data Integration Platform: Unifying Your Data Ecosystem

Businesses today rely on multiple data sources. Match Data Pro LLC's Data Integration Platform makes it possible to combine and manage data across several systems seamlessly.

Main features of the data integration platform are:

Real-time synchronization to maintain consistency of data in every system.

Custom connectors to link the desired applications and databases.

Centralized management to deal with all data connections from a unified dashboard.

With Match Data Pro’s integration platform, businesses can break down data silos and create a cohesive, connected environment for efficient decision-making.

Secure Data Solutions: Safeguarding Your Information

Data security is a top priority for businesses handling sensitive information. Match Data Pro LLC offers Secure Data Solutions to protect your data from unauthorized access and breaches.

Benefits of secure data solutions include:

Advanced encryption: Keep your data safe during storage and transmission.

Access control: Ensure only authorized personnel can access sensitive information.

Compliance support: Easy compliance with industry standards and regulations.

By using secure data solutions, businesses can rest assured that their data is safe.

Data Cleansing Software: Data Preparation Simplified

Match Data Pro LLC's Data Cleansing Software offers advanced tools to make preparing and maintaining clean datasets easier. Designed for scalability, this software is ideal for businesses of all sizes.

Key features include:

Automated cleaning processes, which reduce manual effort and errors.

Customizable rules so that cleansing processes are tailored to your specific needs.

Integration capabilities to fit existing systems and workflows.

With Match Data Pro's data cleansing software, businesses will find their data always accurate, complete, and ready for analysis.

Why choose Match Data Pro LLC for data management?

Match Data Pro LLC leads the industry in a full spectrum of tools and solutions. It helps customers overcome challenges related to data inconsistency, operational inefficiency, and security while helping businesses attain their data management objectives.

Advantages of partnering with Match Data Pro LLC:

Experience in data management.

User-friendly, innovative tools for all sizes of businesses.

A commitment to customer success through tailored solutions and support.

Conclusion

Effective data management is the backbone of any successful organization. With innovative solutions such as SaaS Data Solutions, Data Cleansing Tools, Contact Matching Services, and more, Match Data Pro LLC is a trusted partner for businesses looking to optimize their data operations. Whether you need to integrate data seamlessly, secure sensitive information, or maintain clean datasets, Match Data Pro LLC has you covered.

Text

How AI and Machine Learning Are Revolutionizing Data Quality Assurance

In the fast-paced world of business, data quality is critical to operational success. Without accurate and consistent data, organizations risk making poor decisions that can lead to lost opportunities and financial setbacks. Fortunately, advancements in Artificial Intelligence (AI) and Machine Learning (ML) are transforming Data Quality Management (DQM), offering businesses innovative solutions to enhance data accuracy, streamline processes, and ensure that their data is fit for strategic use.

The Role of Data Quality in Business Success

Data is the driving force behind most modern business processes. From customer insights to financial forecasts, data informs virtually every decision. However, poor-quality data can have a devastating impact, leading to inaccuracies, delayed decisions, and inefficient resource allocation. Reports show that poor data quality costs businesses billions annually, underscoring the need for effective DQM strategies.

In this environment, AI and ML technologies offer immense value by providing the tools needed to detect and address data quality issues quickly and efficiently. By automating key aspects of DQM, these technologies help businesses minimize human error, reduce operational inefficiencies, and ensure their data supports better decision-making.

How AI and Machine Learning Enhance Data Quality Management

AI and ML are at the forefront of transforming DQM practices. With their ability to process large volumes of data and learn from patterns, these technologies allow businesses to address traditional data management challenges such as redundancy, inaccuracies, and slow data integration.

Automated Data Cleansing

Data cleansing, the process of detecting and correcting inaccuracies or inconsistencies, is one of the primary areas where AI and ML shine. These technologies can scan vast datasets to identify errors, duplicates, and inconsistencies, automatically correcting them without manual intervention. By leveraging AI’s ability to recognize data patterns and ML's predictive capabilities, organizations can ensure that their data is always clean and consistent.

Efficient Data Integration

One of the major hurdles businesses face is integrating data from various sources. AI and ML technologies facilitate seamless integration by mapping relationships between datasets and ensuring data from multiple sources is aligned. These systems ensure that data flows smoothly between departments, platforms, and systems, eliminating silos that can hinder decision-making and creating a more cohesive data environment.
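As a rough illustration of what "mapping relationships between datasets" means in practice, the sketch below aligns records from two sources to one shared schema and merges them by customer ID. The source names (`crm`, `billing`), the field names, and the mapping table are all hypothetical; here the mapping is written by hand, whereas an ML-assisted tool would try to suggest it.

```python
# Hypothetical mapping from each source's field names to a shared schema.
FIELD_MAPS = {
    "crm": {"cust_id": "customer_id", "mail": "email"},
    "billing": {
        "CustomerID": "customer_id",
        "EmailAddress": "email",
        "Balance": "balance",
    },
}


def to_common_schema(record, source):
    """Rename mapped fields and drop fields the shared schema does not know."""
    mapping = FIELD_MAPS[source]
    return {mapping[key]: value for key, value in record.items() if key in mapping}


def integrate(sources):
    """Merge records from all sources into one row per customer_id."""
    merged = {}
    for source, records in sources.items():
        for record in records:
            row = to_common_schema(record, source)
            merged.setdefault(row["customer_id"], {}).update(row)
    return merged


unified = integrate({
    "crm": [{"cust_id": 1, "mail": "jane@example.com", "notes": "vip"}],
    "billing": [
        {"CustomerID": 1, "EmailAddress": "jane@example.com", "Balance": 42.5}
    ],
})
print(unified)
```

Note that later sources overwrite earlier ones when both supply the same field. A real integration pipeline would need an explicit rule for such conflicts.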

Real-Time Data Monitoring and Alerts

AI-driven monitoring systems track data quality metrics in real-time. Whenever data quality falls below acceptable thresholds, these systems send instant alerts, allowing businesses to respond quickly to any issues. Machine learning algorithms continuously analyze trends and anomalies, providing valuable insights that help refine DQM processes and avoid potential pitfalls before they impact the business.
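The threshold-and-alert pattern described above can be sketched in a few lines of Python. The two metrics (completeness and uniqueness), the threshold values, and the function names are assumptions made for this example. A production system would compute them continuously on incoming data and send alerts to a notification channel rather than return them as strings.

```python
# Hypothetical minimum acceptable values for each quality metric.
THRESHOLDS = {"completeness": 0.95, "uniqueness": 0.99}


def completeness(rows, field):
    """Share of rows where `field` is present and non-empty."""
    if not rows:
        return 1.0
    filled = sum(1 for row in rows if row.get(field) not in (None, ""))
    return filled / len(rows)


def uniqueness(rows, field):
    """Share of non-empty values of `field` that are distinct."""
    values = [row.get(field) for row in rows if row.get(field) not in (None, "")]
    if not values:
        return 1.0
    return len(set(values)) / len(values)


def check_batch(rows, field):
    """Return one alert message for every metric below its threshold."""
    metrics = {
        "completeness": completeness(rows, field),
        "uniqueness": uniqueness(rows, field),
    }
    return [
        f"{name} of '{field}' is {value:.2f}, below {THRESHOLDS[name]:.2f}"
        for name, value in metrics.items()
        if value < THRESHOLDS[name]
    ]


# Hypothetical batch with one missing and one repeated email address.
batch = [
    {"email": "a@example.com"},
    {"email": ""},
    {"email": "a@example.com"},
    {"email": "b@example.com"},
]
alerts = check_batch(batch, "email")
for alert in alerts:
    print(alert)
```

For this batch both metrics fall below their thresholds, so two alerts are raised. A batch with complete, distinct values produces none.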

Predictive Insights for Proactive Data Governance

AI and ML are revolutionizing predictive analytics in DQM. By analyzing historical data, these technologies can predict potential data quality issues, allowing businesses to take preventive measures before problems occur. This foresight leads to better governance and more efficient data management practices, ensuring data remains accurate and compliant with regulations.
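One simple way to picture this kind of prediction is to fit a trend line to a historical quality metric and estimate when it will cross an acceptable limit. The sketch below uses ordinary least squares, which is far simpler than the models a real DQM product would use. The daily error counts and the limit of 30 are invented for illustration.

```python
import math


def fit_trend(values):
    """Fit y = slope * day + intercept by ordinary least squares."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    days = range(n)
    mean_x = sum(days) / n
    mean_y = sum(values) / n
    var_x = sum((x - mean_x) ** 2 for x in days)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, values))
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def days_until_breach(values, limit):
    """Estimate days until the fitted trend reaches `limit`.

    Returns None when the trend is flat or improving.
    """
    slope, intercept = fit_trend(values)
    if slope <= 0:
        return None
    current = intercept + slope * (len(values) - 1)
    if current >= limit:
        return 0
    return math.ceil((limit - current) / slope)


# Hypothetical history: invalid records per 1,000 rows, one value per day.
history = [10, 12, 14, 16, 18]
print(days_until_breach(history, limit=30))
```

With this history the error count rises by two per day, so the fitted line reaches the limit of 30 about six days after the last observation. That estimate gives a team time to investigate the upstream cause before the limit is actually breached.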

Practical Applications of AI and ML in Data Quality Management

Numerous industries are already benefiting from AI and ML technologies in DQM. A global tech company used machine learning to clean customer data, improving data accuracy by over 30%. In another example, a healthcare provider leveraged AI-powered systems to monitor clinical data, reducing errors and improving patient outcomes. These real-world applications show the immense value AI and ML bring to data quality management.

Conclusion

Incorporating AI and Machine Learning into Data Quality Management is essential for businesses aiming to stay competitive in a data-driven world. By automating error detection, improving integration, and offering predictive insights, these technologies enable organizations to maintain the highest standards of data quality. As companies continue to navigate the complexities of data, leveraging AI and ML will be crucial for maintaining a competitive edge. At Infiniti Research, we specialize in helping organizations implement AI-powered DQM strategies to drive better business outcomes. Contact us today to learn how we can assist you in enhancing your data quality management practices.

