#data annotation outsourcing

Guide to Partnering with a Data Annotation Service Provider

Data annotation demand has grown rapidly with the rise of AI and ML projects. Partnering with a third party is a comprehensive way to obtain accurate, efficiently annotated data. Check out some of the factors to consider when hiring an outsourced data annotation service company.

#data annotation#data annotation service#image annotation services#video annotation services#audio annotation services#image labeling services#data annotation solution#data annotation outsourcing

Quality data annotation is crucial to building smarter, scalable AI/ML models that enhance efficiency and accuracy. Accurate annotation transforms raw data into valuable assets, enabling machine learning algorithms to make intelligent predictions and decisions. Precise, consistent labeling also supports model scalability, making models easier to adapt to new applications and industries. Learn how data annotation lays a solid foundation for AI innovation and improves outcomes in diverse fields, from healthcare to finance and beyond.

#data annotation#data annotation services.#data annotation machine learning#data annotation company#data annotation companies#data annotation outsourcing

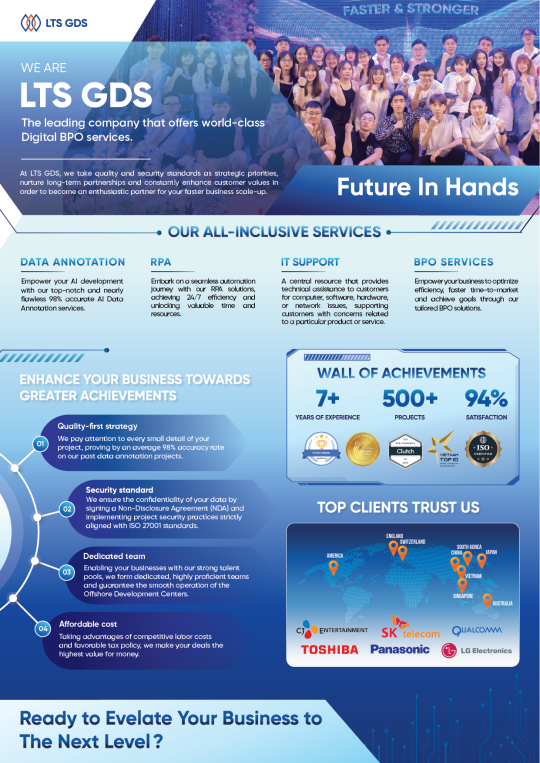

Our IT Services

With more than 7 years of experience in the data annotation industry, LTS Global Digital Services has been honored to receive major domestic awards and the trust of significant customers in the US, Germany, Korea, and Japan. In addition, having completed hundreds of projects in fields such as Automobile, Retail, Manufacturing, Construction, and Sports, our company confidently delivers projects with accuracy of up to 99.9%, a figure confirmed by 97% of the customers using our service.

If you are looking for an outsourcing company that meets the above criteria, contact LTS Global Digital Services for advice and a trial!

Reasons To Outsource Your Data Annotation: The Ultimate Guide

Businesses want to improve the efficiency and accuracy of their data processing while staying within budget. They collect and analyze data to gain valuable insights, and a critical part of this process is data annotation: labeling and categorizing data to improve its accuracy and usability. However, annotating data is time-consuming and requires significant resources. This is why many companies outsource this work to professional service providers. Let’s explore everything about data annotation and the reasons to outsource your data annotation work.

#ai#cogitotech usa#annotation#data annotation#data collection#machine learning#content#data annotation companies#cogito ai#data quality#Outsourcing Data Labeling

Data labeling in machine learning is the process of assigning relevant tags or annotations to a dataset, which helps the algorithm learn and make accurate predictions.

Beyond Paperless: The Unexpected Reasons Businesses Need Printers

In today's digital world, the concept of a paperless office has gained significant traction. With the proliferation of cloud storage, electronic signatures, and digital workflows, it's easy to assume that traditional printers have become obsolete. However, the reality is quite the opposite. Despite the push towards digitization, printers continue to be indispensable tools for businesses. In this article, we'll explore the unexpected reasons why businesses still need printers and how they contribute to efficiency, security, and overall productivity.

The Convenience Factor

In a world where convenience is king, printers play a crucial role in streamlining everyday tasks. While digital documents have their advantages, there are still numerous instances where physical copies are necessary. Consider the following scenarios:

Client Meetings: Despite the prevalence of digital presentations, having hard copies of reports, proposals, and contracts can enhance the professionalism of client meetings.

Legal Documents: Many legal processes still require physical signatures and notarization, making printers essential for handling contracts, agreements, and other legal paperwork.

On-the-Go Printing: In fast-paced environments, the ability to quickly print boarding passes, event tickets, or last-minute documents can be a lifesaver.

Security and Compliance

Beyond convenience, printers play a critical role in maintaining the security and compliance of sensitive information. While digital files are susceptible to cyber threats, physical documents provide an added layer of security. Here's how printers contribute to safeguarding sensitive data:

Confidentiality: Printing sensitive documents in-house reduces the risk of unauthorized access compared to outsourcing printing services.

Regulatory Compliance: Many industries, such as healthcare and finance, have strict regulations regarding the handling of sensitive information. Printers equipped with secure printing features help businesses comply with these regulations.

Data Protection: By utilizing secure printing methods, businesses can prevent unauthorized access to printed documents, mitigating the risk of data breaches.

The Human Touch

In a world dominated by screens and digital interactions, the tactile experience of handling physical documents can have a profound impact. The act of reviewing a printed report, annotating a document with a pen, or sharing a physical handout fosters a sense of connection and engagement that digital files often lack. This human touch can enhance collaboration, creativity, and overall communication within a business environment.

FAQs

Q: With the rise of e-signatures, do businesses still need physical copies of documents? A: While e-signatures have streamlined many processes, certain legal and regulatory requirements still necessitate physical copies of documents. Additionally, some individuals may prefer physical documents for review and record-keeping purposes.

Q: How can printers contribute to environmental sustainability? A: Modern printers are designed with energy-efficient features and support sustainable printing practices such as duplex printing and toner-saving modes, reducing overall environmental impact.

Q: Are there security risks associated with network-connected printers? A: Like any networked device, printers can be vulnerable to cyber threats. However, implementing secure printing protocols and regularly updating printer firmware can mitigate these risks.

Conclusion

In conclusion, the reasons covered in this article highlight the enduring relevance of printers in today's business landscape. From enhancing convenience and security to fostering human connections, printers continue to be indispensable tools for modern workplaces. As businesses navigate the complexities of digital transformation, it's clear that the role of printers goes beyond paper: they are essential enablers of productivity, security, and efficiency. By embracing the synergy of digital and physical workflows, businesses can harness the full potential of printers to drive success in the digital age.

Best data extraction services in the USA

In today's fiercely competitive business landscape, choosing the right web data extraction services provider is crucial. Outsource Bigdata stands out by offering access to high-quality data through a carefully designed, automated, AI-augmented process for extracting valuable insights from websites. Our team ensures data precision and reliability, supporting better decision-making.

For more details, visit: https://outsourcebigdata.com/data-automation/web-scraping-services/web-data-extraction-services/.

About AIMLEAP

Outsource Bigdata is a division of Aimleap. AIMLEAP is an ISO 9001:2015 and ISO/IEC 27001:2013 certified global technology consulting and service provider offering AI-augmented Data Solutions, Data Engineering, Automation, IT Services, and Digital Marketing Services. AIMLEAP has been recognized as a ‘Great Place to Work®’.

With a special focus on AI and automation, we have built numerous AI & ML solutions, including AI-driven web scraping solutions, AI data labeling, AI-Data-Hub, and self-service BI solutions. Since starting in 2012, we have successfully delivered IT & digital transformation projects, automation-driven data solutions, on-demand data, and digital marketing for more than 750 fast-growing companies in the USA, Europe, New Zealand, Australia, Canada, and more.

- ISO 9001:2015 and ISO/IEC 27001:2013 certified
- Served 750+ customers
- 11+ years of industry experience
- 98% client retention
- Great Place to Work® certified
- Global delivery centers in the USA, Canada, India & Australia

Our Data Solutions

APISCRAPY: AI-driven web scraping & workflow automation platform

APISCRAPY is an AI-driven web scraping and automation platform that converts any web data into ready-to-use data. The platform can extract data from websites, process data, automate workflows, classify data, and integrate ready-to-consume data into a database or deliver it in any desired format.

AI-Labeler: AI-augmented annotation & labeling solution

AI-Labeler is an AI-augmented data annotation platform that combines artificial intelligence with human involvement to label, annotate, and classify data, allowing faster development of robust and accurate models.

AI-Data-Hub: On-demand data for building AI products & services

An on-demand AI data hub offering curated, pre-annotated, and pre-classified data, allowing enterprises to obtain high-quality data easily and efficiently and use it to train and develop AI models.

PRICESCRAPY: AI-enabled real-time pricing solution

An AI- and automation-driven pricing solution that provides real-time price monitoring, pricing analytics, and dynamic pricing for companies across the world.

APIKART: AI-driven data API solution hub

APIKART is a data API hub that allows businesses and developers to access and integrate large volumes of data from various sources through APIs, so companies can leverage that data and integrate the APIs into their own systems and applications.

Locations:
USA: 1-30235 14656
Canada: +1 4378 370 063
India: +91 810 527 1615
Australia: +61 402 576 615
Email: [email protected]

The Best Labelbox Alternatives for Data Labeling in 2025

Whether you're training machine learning models, building AI applications, or working on computer vision projects, effective data labeling is critical for success. Labelbox has been a go-to platform for enterprises and teams looking to manage their data labeling workflows efficiently. However, it may not suit everyone’s needs due to high pricing, lack of certain features, or compatibility issues with specific use cases.

If you're exploring alternatives to Labelbox, you're in the right place. This blog dives into the top Labelbox alternatives, highlights the key features to consider when choosing a data labeling platform, and provides insights into which option might work best for your unique requirements.

What Makes a Good Data Labeling Platform?

Before we explore alternatives, let's break down the features that define a reliable data labeling solution. The right platform should help optimize your labeling workflow, save time, and ensure precision in annotations. Here are a few key features you should keep in mind:

Scalability: Can the platform handle the size and complexity of your dataset, whether you're labeling a few hundred samples or millions of images?

Collaboration Tools: Does it offer features that improve collaboration among team members, such as user roles, permissions, or integration options?

Annotation Capabilities: Look for robust annotation tools that support bounding boxes, polygons, keypoints, and semantic segmentation for different data types.

AI-Assisted Labeling: Platforms with auto-labeling capabilities powered by AI can significantly speed up the labeling process while maintaining accuracy.

Integration Flexibility: Can the platform seamlessly integrate with your existing workflows, such as TensorFlow, PyTorch, or custom ML pipelines?

Affordability: Pricing should align with your budget while delivering a strong return on investment.

With these considerations in mind, let's explore the best alternatives to Labelbox, including their strengths and weaknesses.

Top Labelbox Alternatives

1. Macgence

Strengths:

Offers a highly customizable end-to-end solution that caters to specific workflows for data scientists and machine learning engineers.

AI-powered auto-labeling to accelerate labeling tasks.

Proven expertise in handling diverse data types, including images, text, and video annotations.

Seamless integration with popular machine learning frameworks like TensorFlow and PyTorch.

Known for its attention to data security and adherence to compliance standards.

Weaknesses:

May require time for onboarding due to its vast range of features.

Limited online community documentation compared to Labelbox.

Ideal for:

Organizations that value flexibility in their workflows and need an AI-driven platform to handle large-scale, complex datasets efficiently.

2. Supervisely

Strengths:

Strong collaboration tools, making it easy to assign tasks and monitor progress across teams.

Extensive support for complex computer vision projects, including 3D annotation.

A free plan that’s feature-rich enough for small-scale projects.

Intuitive user interface with drag-and-drop functionality for ease of use.

Weaknesses:

Limited scalability for larger datasets unless opting for the higher-tier plans.

Auto-labeling tools are slightly less advanced compared to other platforms.

Ideal for:

Startups and research teams looking for a low-cost option with modern annotation tools and collaboration features.

3. Amazon SageMaker Ground Truth

Strengths:

Fully managed service by AWS, allowing seamless integration with Amazon's cloud ecosystem.

Uses machine learning to create accurate annotations with less manual effort.

Pay-as-you-go pricing, making it cost-effective for teams already on AWS.

Access to a large workforce for outsourcing labeling tasks.

Weaknesses:

Requires expertise in AWS to set up and configure workflows.

Limited to the AWS ecosystem, which might pose constraints for non-AWS users.

Ideal for:

Teams deeply embedded in the AWS ecosystem that want an AI-powered labeling workflow with access to a scalable workforce.

4. Appen

Strengths:

Combines advanced annotation tools with a global workforce for large-scale projects.

Emphasizes accuracy and quality assurance through human-in-the-loop workflows.

Highly customizable solutions tailored to specific enterprise needs.

Weaknesses:

Can be expensive, particularly for smaller organizations or individual users.

Requires external support for integration into custom workflows.

Ideal for:

Enterprises with complex projects that require high accuracy and precision in data labeling.

Use Case Scenarios: Which Platform Fits Best?

For startups with smaller budgets and less complex projects, Supervisely offers an affordable and intuitive entry point.

For enterprises requiring precise accuracy on large-scale datasets, Appen delivers high quality at a premium price.

If you're heavily integrated with AWS, SageMaker Ground Truth is a practical, cost-effective choice for your labeling needs.

For tailored workflows and cutting-edge AI-powered tools, Macgence stands out as the most flexible platform for diverse projects.

Finding the Best Labelbox Alternative for Your Needs

Choosing the right data labeling platform depends on your project size, budget, and technical requirements. Start by evaluating your specific use cases—whether you prioritize cost efficiency, advanced AI tools, or integration capabilities.

For those who require a customizable and AI-driven data labeling solution, Macgence emerges as a strong contender to Labelbox, delivering robust capabilities with high scalability. No matter which platform you choose, investing in the right tools will empower your team and set the foundation for successful machine learning outcomes.

Source: https://technologyzon.com/blogs/436/The-Best-Labelbox-Alternatives-for-Data-Labeling-in-2025

Zoetic BPO Services Reviews: Is It the Right Partner for Your Non-Voice Process Needs?

In today’s digital age, non-voice process BPO services have gained immense popularity due to their efficiency, cost-effectiveness, and ability to streamline business operations. Companies worldwide are outsourcing data entry, email support, chat support, and other back-office tasks to specialized BPO service providers. If you are looking for a genuine and reliable company to get non-voice BPO projects, Zoetic BPO Services stands out as a trusted name in the industry.

What is a Non-Voice Process?

A non-voice process involves back-office operations that do not require direct customer interaction through voice calls. Instead, the work is handled through text-based and back-office services such as:

Data Entry Services

Email Support Services

Chat Support Services

Form Processing

Content Moderation

Image and Video Annotation

These services are crucial for businesses looking to enhance productivity without increasing overhead costs.

Why Choose Zoetic BPO Services for Non-Voice Process Projects?

When selecting a BPO service provider, reliability, quality, and transparency are critical factors. Here’s why Zoetic BPO Services is the best company to partner with:

1. Authentic and Verified BPO Projects

Zoetic BPO Services provides genuine non-voice projects, ensuring that businesses and freelancers get real work opportunities without falling into scams.

2. Expertise in Non-Voice Processes

With years of experience, Zoetic BPO Services has built a reputation for delivering high-quality, error-free non-voice services to businesses across various domains.

3. Cost-Effective and Scalable Solutions

The company offers affordable BPO solutions, allowing businesses to scale their operations seamlessly without exceeding their budget.

4. Data Security and Compliance

Handling sensitive data requires strict security measures. Zoetic BPO Services ensures data confidentiality and follows industry standards to maintain compliance with regulatory requirements.

5. Positive Client Feedback

If you search for Zoetic BPO Services Reviews, you will find numerous positive testimonials from businesses and individuals who have successfully secured profitable non-voice BPO projects through them.

FAQs

1. What types of non-voice projects does Zoetic BPO Services offer?

Zoetic BPO Services provides a variety of non-voice projects, including data entry, email support, chat support, form processing, and more.

2. Are the projects provided by Zoetic BPO Services genuine?

Yes, Zoetic BPO Services is known for offering legitimate and verified non-voice projects, ensuring trust and transparency.

3. How can I apply for a non-voice project at Zoetic BPO Services?

You can visit their official website or contact their support team to explore available BPO opportunities.

4. Is there any training provided for non-voice projects?

Yes, depending on the project requirements, Zoetic BPO Services offers training and guidance to ensure quality output.

5. How can I check Zoetic BPO Services Reviews?

You can search for Zoetic BPO Services Reviews online or visit their website to read testimonials from satisfied clients.

#bpocompany#dataentryprojects#outsourcing#dataentry#dataentryservices#dataentrywork#zoeticbposervices#bpo#business#outsourcingservices

Human-in-the-loop data annotation significantly improves the precision of machine learning models. By integrating human feedback, models can better understand complex data patterns and enhance their accuracy. This collaborative approach combines automated processing with human insight, ensuring higher quality results. Leveraging human expertise in data labeling helps in refining model predictions, leading to more reliable and efficient AI systems. This method is essential for tasks requiring nuanced understanding and precise outcomes.
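One common way to combine automated processing with human insight is confidence-based routing: a model pre-labels each item, confident predictions are accepted automatically, and uncertain ones go to a human annotator whose decision overrides the model. The minimal Python sketch below illustrates that idea; the `Prediction` class, the function names, and the 0.9 threshold are all hypothetical and not taken from any particular vendor's workflow.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's score for its predicted label, assumed to be in [0, 1]


def route_predictions(
    predictions: List[Prediction], threshold: float = 0.9
) -> Tuple[List[Prediction], List[Prediction]]:
    """Split model pre-labels into an auto-accept list and a human-review queue.

    The 0.9 default threshold is a hypothetical value; tune it per project.
    """
    accepted, needs_review = [], []
    for p in predictions:
        if p.confidence >= threshold:
            accepted.append(p)
        else:
            needs_review.append(p)
    return accepted, needs_review


def merge_labels(
    accepted: List[Prediction], human_labels: Dict[str, str]
) -> Dict[str, str]:
    """Build the final label set; human decisions override model pre-labels."""
    labels = {p.item_id: p.label for p in accepted}
    labels.update(human_labels)
    return labels


if __name__ == "__main__":
    preds = [
        Prediction("img_001", "cat", 0.97),
        Prediction("img_002", "dog", 0.62),
        Prediction("img_003", "cat", 0.91),
    ]
    accepted, review = route_predictions(preds)
    print([p.item_id for p in review])  # ['img_002'] goes to a human annotator
    # Suppose the reviewer corrects img_002 to "fox"
    print(merge_labels(accepted, {"img_002": "fox"}))
```

The corrected labels can then be fed back into the next training round, which is where the accuracy gains described above would come from.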

#data annotation#data annotation outsourcing#data annotation company#data annotation companies#data annotation machine learning

A Guide to Choosing a Data Annotation Outsourcing Company

Clarify the Requirements: Before evaluating outsourcing partners, it's crucial to clearly define your data annotation requirements. Consider aspects such as the type and volume of data needing annotation, the complexity of annotations required, and any industry-specific or regulatory standards to adhere to.

Expertise and Experience: Seek out outsourcing companies with a proven track record in data annotation. Assess their expertise within your industry vertical and their experience handling similar projects. Evaluate factors such as the quality of annotations, adherence to deadlines, and client testimonials.

Data Security and Compliance: Data security is paramount when outsourcing sensitive information. Ensure that the outsourcing company has robust security measures in place to safeguard your data and comply with relevant data privacy regulations such as GDPR or HIPAA.

Scalability and Flexibility: Opt for an outsourcing partner capable of scaling with your evolving needs. Whether it's a small pilot project or a large-scale deployment, ensure the company has the resources and flexibility to meet your requirements without compromising quality or turnaround time.

Cost and Pricing Structure: While cost is important, it shouldn't be the sole determining factor. Evaluate the pricing structure of potential partners, considering factors like hourly rates, project-based pricing, or subscription models. Strike a balance between cost and quality of service.

Quality Assurance Processes: Inquire about the quality assurance processes employed by the outsourcing company to ensure the accuracy and reliability of annotated data. This may include quality checks, error detection mechanisms, and ongoing training of annotation teams.

Prototype: Consider requesting a trial run or pilot project before finalizing an agreement. This allows you to evaluate the quality of annotated data, project timelines, and the proficiency of annotators. For complex projects, negotiate a Proof of Concept (PoC) to gain a clear understanding of requirements.
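To make a trial run measurable, you can label a small sample in-house as a gold set and compare the vendor's labels against it. The Python sketch below reports raw accuracy and Cohen's kappa (agreement corrected for chance). The function names and the idea of a gold set are illustrative assumptions; what accuracy or kappa counts as acceptable is a project decision, not something this code decides.

```python
from collections import Counter
from typing import Dict, List, Sequence


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Chance-corrected agreement between two label sequences (Cohen's kappa)."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and equally long")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected by chance, given each rater's own label frequencies
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical category for every item
    return (observed - expected) / (1.0 - expected)


def pilot_report(gold: List[str], vendor: List[str]) -> Dict[str, object]:
    """Compare a vendor's pilot labels with an in-house gold set."""
    kappa = cohens_kappa(gold, vendor)  # also validates lengths
    mismatches = [i for i, (g, v) in enumerate(zip(gold, vendor)) if g != v]
    return {
        "accuracy": 1 - len(mismatches) / len(gold),
        "kappa": kappa,
        "mismatched_items": mismatches,
    }


if __name__ == "__main__":
    gold = ["car", "car", "person", "bike", "car", "person"]
    vendor = ["car", "car", "person", "car", "car", "bike"]
    report = pilot_report(gold, vendor)
    print(report["mismatched_items"])  # [3, 5]
    print(round(report["kappa"], 2))   # 0.43 (exactly 3/7)
```

Kappa is reported alongside accuracy because a vendor can score a high raw agreement simply by favoring the most common class; kappa discounts that chance agreement.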

For detailed information, see the full article here!

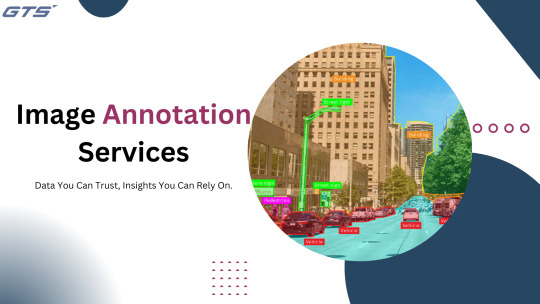

Maximizing Efficiency in AI Projects with Image Annotation Services

Introduction:

Artificial Intelligence (AI) has revolutionized numerous sectors by streamlining processes, enhancing decision-making capabilities, and improving user interactions. A fundamental component that underpins the effectiveness of any robust AI model is data preparation, particularly through image annotation. As the demand for AI applications, especially in the realm of computer vision, continues to expand, the significance of image annotation services becomes increasingly critical in optimizing the performance and precision of AI initiatives.

This article will delve into the significance of image annotation services, the various forms of image annotations, and how these services can greatly enhance the results of AI projects.

Understanding Image Annotation Services

Image annotation is the process of labeling images, or specific objects within them, so that AI systems can understand them. This involves assigning meaningful tags to raw images, enabling AI technologies to interpret, identify, and categorize visual information. Annotation tasks can range from recognizing and classifying objects in images to delineating specific boundaries, points, or areas of interest.

These annotations serve as the essential "ground truth" for AI and machine learning algorithms, making them vital for developing models capable of executing intricate tasks such as object detection, facial recognition, and medical imaging analysis.
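As a concrete picture of what "ground truth" looks like once delivered, the sketch below packages bounding-box labels for one image into a COCO-style dictionary, a widely used interchange layout for object detection. It is a simplified sketch: the `to_coco` helper and the example file name are hypothetical, segmentation fields are omitted, and `area` is approximated by the box area.

```python
import json
from typing import List, Tuple

# (category_name, x_min, y_min, x_max, y_max) in pixel coordinates
Box = Tuple[str, float, float, float, float]


def to_coco(image_id: int, file_name: str, width: int, height: int,
            boxes: List[Box], categories: List[str]) -> dict:
    """Package bounding-box labels for one image as a COCO-style dictionary."""
    cat_ids = {name: i for i, name in enumerate(categories, start=1)}
    annotations = []
    for ann_id, (name, x1, y1, x2, y2) in enumerate(boxes, start=1):
        w, h = x2 - x1, y2 - y1
        annotations.append({
            "id": ann_id,
            "image_id": image_id,
            "category_id": cat_ids[name],
            "bbox": [x1, y1, w, h],  # COCO stores boxes as [x, y, width, height]
            "area": w * h,           # box area; no segmentation mask in this sketch
            "iscrowd": 0,
        })
    return {
        "images": [{"id": image_id, "file_name": file_name,
                    "width": width, "height": height}],
        "annotations": annotations,
        "categories": [{"id": i, "name": n} for n, i in cat_ids.items()],
    }


if __name__ == "__main__":
    record = to_coco(1, "street_001.jpg", 1920, 1080,
                     [("car", 100, 200, 400, 350), ("person", 500, 300, 560, 480)],
                     ["car", "person"])
    print(json.dumps(record, indent=2))
```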

The Importance of Image Annotation Services for AI Projects

Although image annotation may appear straightforward, it demands a high level of accuracy, consistency, and specialized knowledge. Human annotators must grasp the subtleties of visual data and apply labels appropriately. Therefore, utilizing image annotation services is crucial for enhancing the efficiency of AI projects.

1. Precision in Model Training : AI models depend on precise data for effective predictions and classifications. Accurate image annotation is essential for ensuring that models learn from high-quality, well-labeled datasets, which enhances their performance. Inaccurate annotations can lead to misinterpretations by AI models, resulting in errors and inefficiencies within the system.

2. Enhanced Scalability : As AI initiatives expand, the demand for image annotation increases significantly. Delegating this responsibility to specialized image annotation services enables organizations to efficiently manage extensive datasets, thereby accelerating the training process without compromising quality. This scalability ensures that AI projects adhere to timelines and can accommodate growing data requirements.

3. Proficiency in Complex Annotation Tasks : Certain AI initiatives necessitate sophisticated annotation methods, such as pixel-level segmentation or 3D object detection. Professional image annotation services possess the expertise required to tackle these intricate tasks, guaranteeing that AI models receive the most precise and comprehensive annotations available.

4. Economic Viability : Establishing an in-house team of annotators for large-scale AI projects can incur substantial costs and require considerable time. Image annotation services offer a more economically viable alternative by providing access to a skilled workforce without the overhead expenses linked to hiring and training a complete team. This approach allows organizations to allocate resources to the primary aspects of their AI projects while outsourcing annotation tasks to experts.

5. Accelerated Time-to-Market : In the realm of AI projects, time is often a crucial element, particularly in competitive sectors. Image annotation services can dramatically reduce the time needed for image annotation, ensuring that AI models are trained swiftly and effectively. This expedited development cycle results in a quicker time-to-market for AI applications, providing businesses with a competitive advantage.

Types of Image Annotations

Various types of image annotations are employed in artificial intelligence projects, tailored to meet the specific requirements of each initiative. Among the most prevalent types are:

Bounding Boxes : Bounding boxes represent the most basic form of annotation, where annotators create rectangular outlines around objects within an image. This technique is frequently utilized in object detection tasks to instruct AI models on how to recognize and categorize objects present in images.

Polygonal Annotation : For objects with more complex shapes, polygonal annotation is utilized to delineate the exact contours of an object. This approach is particularly advantageous for irregularly shaped items or those with detailed boundaries, such as in satellite imagery analysis or medical imaging.

Semantic Segmentation : Semantic segmentation entails labeling each pixel in an image to categorize various regions. This annotation type is beneficial for applications that necessitate precise recognition, such as in autonomous driving or medical diagnostics.

Keypoint Annotation : Keypoint annotation consists of marking specific points within an image, such as facial landmarks in facial recognition tasks or joint locations in human pose estimation. This assists AI models in comprehending the relationships among different components of the image.

3D Cubes and LIDAR Annotation : In sophisticated AI applications, particularly in the realm of autonomous vehicles, 3D annotation techniques are employed. This involves labeling three-dimensional objects or utilizing LIDAR data to aid AI systems in understanding spatial relationships within a three-dimensional environment.
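The 2D annotation types above can be represented with simple data structures. The Python sketch below is one hypothetical representation (the class and field names are illustrative, not a standard schema): boxes as corner coordinates, polygons as ordered vertices with the shoelace formula for area, keypoints as named points, and a semantic segmentation mask as one class id per pixel. 3D cuboids and LiDAR point labels are left out to keep it short.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class BoundingBox:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


@dataclass
class PolygonAnnotation:
    label: str
    points: List[Tuple[float, float]]  # vertices (x, y) in drawing order

    def area(self) -> float:
        """Polygon area via the shoelace formula."""
        total = 0.0
        n = len(self.points)
        for i in range(n):
            x1, y1 = self.points[i]
            x2, y2 = self.points[(i + 1) % n]
            total += x1 * y2 - x2 * y1
        return abs(total) / 2.0

    def to_bounding_box(self) -> BoundingBox:
        """Tightest axis-aligned box around the polygon."""
        xs = [x for x, _ in self.points]
        ys = [y for _, y in self.points]
        return BoundingBox(self.label, min(xs), min(ys), max(xs), max(ys))


@dataclass
class Keypoint:
    name: str  # e.g. "left_elbow" in pose estimation
    x: float
    y: float
    visible: bool = True


def class_pixel_counts(mask: List[List[int]]) -> Dict[int, int]:
    """Count pixels per class in a semantic segmentation mask (one class id per pixel)."""
    counts: Dict[int, int] = {}
    for row in mask:
        for class_id in row:
            counts[class_id] = counts.get(class_id, 0) + 1
    return counts


if __name__ == "__main__":
    roof = PolygonAnnotation("roof", [(0, 0), (4, 0), (0, 3)])
    print(roof.area())                     # 6.0
    print(roof.to_bounding_box().area())   # 12
    print(class_pixel_counts([[0, 0, 1], [0, 1, 1]]))  # {0: 3, 1: 3}
```

The triangle example shows why polygons matter for irregular shapes: the polygon covers 6 square pixels, while its tightest bounding box covers 12, so half the box is background.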

Advantages of Delegating Image Annotation to Experts

Although managing image annotation internally may appear viable, there are numerous advantages to entrusting this task to specialized services:

Uniformity and Standardization : Expert services employ established annotation protocols and tools to guarantee that each image is annotated uniformly. This uniformity is essential for training machine learning models, as discrepancies in annotations can adversely impact model performance.

Accelerated Turnaround : Delegating annotation tasks enables quicker completion of AI projects. Professional services are equipped to manage extensive datasets and can deliver high-quality annotations in a timely manner, thereby expediting the model training phase.

Access to Cutting-Edge Tools and Technology : Image annotation providers frequently utilize sophisticated software and tools that enhance both the efficiency and precision of the annotation process. These tools may feature capabilities such as auto-tagging, collaborative workflows, and quality assurance mechanisms to optimize the annotation workflow.

Quality Assurance and Error Identification : Reputable image annotation services implement rigorous quality control measures to identify and rectify errors. This minimizes the risk of incorrect labels impacting the training of the AI model, thereby contributing to the overall success of the AI initiative.
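One simple uniformity check for bounding boxes is to have two annotators label the same image and compare their boxes with intersection over union (IoU). The Python sketch below flags objects whose boxes overlap less than a threshold or that only one annotator labeled. It assumes, for simplicity, that matching objects already share an id across annotators, and the 0.5 threshold is a hypothetical default; real review pipelines may match boxes differently.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0 = no overlap, 1 = identical)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = max(0.0, a[2] - a[0]) * max(0.0, a[3] - a[1])
    area_b = max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def flag_disagreements(boxes_a: Dict[str, Box], boxes_b: Dict[str, Box],
                       threshold: float = 0.5) -> List[str]:
    """Return object ids that only one annotator labeled or whose boxes overlap too little."""
    flagged = []
    for obj_id in sorted(set(boxes_a) | set(boxes_b)):
        if obj_id not in boxes_a or obj_id not in boxes_b:
            flagged.append(obj_id)
        elif iou(boxes_a[obj_id], boxes_b[obj_id]) < threshold:
            flagged.append(obj_id)
    return flagged


if __name__ == "__main__":
    annotator_a = {"car_1": (0, 0, 10, 10), "car_2": (20, 20, 30, 30),
                   "person_1": (40, 40, 50, 60)}
    annotator_b = {"car_1": (1, 1, 10, 10), "car_2": (25, 20, 35, 30),
                   "bike_1": (0, 0, 5, 5)}
    print(flag_disagreements(annotator_a, annotator_b))
    # ['bike_1', 'car_2', 'person_1']: car_1 agrees (IoU 0.81), the rest go to review
```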

Conclusion

Image annotation services are vital for improving the efficiency and effectiveness of AI projects. By outsourcing the annotation process to professionals, organizations can ensure the precision, scalability, and rapidity of their AI systems. These services not only enhance the quality of training data but also assist companies in saving time, lowering costs, and maintaining a competitive advantage in the swiftly evolving landscape of AI technologies.

Investing in professional image annotation services represents a prudent decision for any organization aiming to fully harness the potential of AI. Whether you’re working on autonomous driving, facial recognition, or any other computer vision project, image annotation will be a key factor in maximizing your AI project’s efficiency and success.

Image annotation services play a pivotal role in maximizing the efficiency of AI projects by providing high-quality, labeled data that enhances the accuracy of machine learning models. Partnering with experts like Globose Technology Solutions ensures precise annotations tailored to specific project needs, driving faster development cycles and more effective AI solutions. The right image annotation strategy accelerates project timelines, optimizes resource allocation, and ultimately empowers businesses to achieve their AI goals with greater precision and efficiency.

Struggling with Data Labeling? Try These Image Annotation Services

Introduction:

In the era of artificial intelligence and machine learning, data is the driving force. However, raw data alone isn’t enough; it needs to be structured and labeled to be useful. For businesses and developers working on AI models, especially those involving computer vision, accurate image annotation is crucial. But data labeling is no small task. It’s time-consuming, resource-intensive, and requires a meticulous approach.

If you’ve been struggling with data labeling, you’re not alone. The good news is that professional image annotation services can make this process seamless and efficient. Here’s a closer look at why data labeling is challenging, the importance of image annotation, and the best services to help you get it done.

The Challenges of Data Labeling

Time-Consuming Process

Labeling thousands or even millions of images can take an enormous amount of time, delaying project timelines and slowing innovation.

High Cost of In-House Teams

Building and maintaining an in-house team for data labeling can be costly, especially for small and medium-sized businesses.

Need for Precision

AI models require accurate and consistent labels. Even minor errors in annotation can significantly impact the performance of your AI systems.

Scaling Issues

As your dataset grows, so do the challenges of managing, labeling, and ensuring quality control at scale.
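To make the need for precision concrete: a common way to measure how closely two bounding boxes agree, for example an annotator’s box and a reviewer’s reference box, is intersection over union (IoU). The sketch below assumes boxes are given as (x_min, y_min, x_max, y_max) in pixels; it is an illustration, not any particular vendor’s QA method.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap rectangle (zero if the boxes do not touch)
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


reference = (10, 10, 110, 110)   # reviewer's box
annotator = (20, 10, 120, 110)   # same box shifted 10 px to the right
print(round(iou(reference, annotator), 3))
```

Shifting a 100 by 100 pixel box by only 10 pixels already lowers IoU to about 0.82 (9/11), which shows how small labeling slips become measurable errors. A review pass could send back any box whose IoU against the reference falls below a chosen threshold; the right threshold depends on the project.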

The Importance of Image Annotation

Image annotation involves adding metadata or labels to images, helping AI systems understand what’s in a picture. These annotations are used to train models for tasks such as:

Object detection

Image segmentation

Facial recognition

Autonomous driving systems

Medical imaging analysis

Without proper annotation, AI models cannot interpret visual data effectively, leading to inaccurate predictions and unreliable outputs.
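As a minimal illustration of what adding labels to an image means in practice, the sketch below stores one image with its bounding-box labels and rejects boxes that fall outside the frame. The class and field names are hypothetical; the only external convention used is COCO’s box layout of [x, y, width, height] measured from the top-left corner.

```python
from dataclasses import dataclass, field


@dataclass
class BoxLabel:
    category: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def to_coco_bbox(self):
        # COCO stores boxes as [x, y, width, height] from the top-left corner
        return [self.x_min, self.y_min, self.x_max - self.x_min, self.y_max - self.y_min]


@dataclass
class AnnotatedImage:
    file_name: str
    width: int
    height: int
    labels: list = field(default_factory=list)

    def add_box(self, category, x_min, y_min, x_max, y_max):
        # Reject boxes that extend past the image or have zero area
        if not (0 <= x_min < x_max <= self.width and 0 <= y_min < y_max <= self.height):
            raise ValueError(f"box out of bounds for {self.file_name}")
        self.labels.append(BoxLabel(category, x_min, y_min, x_max, y_max))


img = AnnotatedImage("street_001.jpg", width=640, height=480)
img.add_box("car", 100, 200, 300, 350)
print(img.labels[0].to_coco_bbox())
```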

Top Image Annotation Services to Streamline Your Projects

If you’re ready to take your AI projects to the next level, here are some top-notch image annotation services to consider:

Offers a range of high-quality image and video annotation services tailored to various industries, including healthcare, retail, and automotive. With a focus on precision and scalability, they ensure your data labeling needs are met efficiently.

Key Features:

Bounding boxes, polygons, and semantic segmentation

Annotation for 2D and 3D data

Scalable solutions for large datasets

Affordable pricing plans

Scale AI

Scale AI provides a comprehensive suite of data annotation services, including image, video, and text labeling. Their platform combines human expertise with machine learning tools to deliver high-quality annotations.

Key Features:

Rapid turnaround times

Detailed quality assurance

Customizable annotation workflows

Labelbox

Labelbox is a popular platform for managing and annotating datasets. Its intuitive interface and robust toolset make it a favorite for teams working on complex computer vision projects.

Key Features:

Integration with ML pipelines

Flexible annotation tools

Collaboration-friendly platform

CloudFactory

CloudFactory specializes in combining human intelligence with automation to deliver precise image annotations. Their managed workforce is trained to handle intricate labeling tasks with accuracy.

Key Features:

Workforce scalability

Specialized training for annotators

Multilingual support

Amazon SageMaker Ground Truth

Amazon’s SageMaker Ground Truth is a powerful tool for building labeled datasets. It uses machine learning to automate annotation and reduce manual effort.

Key Features:

Active learning integration

Pay-as-you-go pricing

Automated labeling workflows
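Active learning and automated labeling generally follow the same pattern: a model labels what it is confident about and routes the rest to people. The sketch below shows that pattern in a generic form using uncertainty sampling. It is not the Ground Truth API, and the confidence threshold and batch size are hypothetical parameters.

```python
def route_predictions(predictions, accept_threshold=0.95, human_batch_size=100):
    """Split model predictions into auto-accepted labels, a human review queue, and a backlog.

    predictions: list of (item_id, label, confidence) tuples, confidence in [0, 1].
    """
    auto_labeled = []
    uncertain = []
    for item_id, label, confidence in predictions:
        if confidence >= accept_threshold:
            auto_labeled.append((item_id, label))
        else:
            uncertain.append((item_id, label, confidence))
    # Uncertainty sampling: the least-confident items go to human annotators first
    uncertain.sort(key=lambda p: p[2])
    human_queue = [item_id for item_id, _, _ in uncertain[:human_batch_size]]
    deferred = [item_id for item_id, _, _ in uncertain[human_batch_size:]]
    return auto_labeled, human_queue, deferred
```

After humans label the queued items, the model would typically be retrained and the loop repeated, so the share of items needing manual work can shrink over time.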

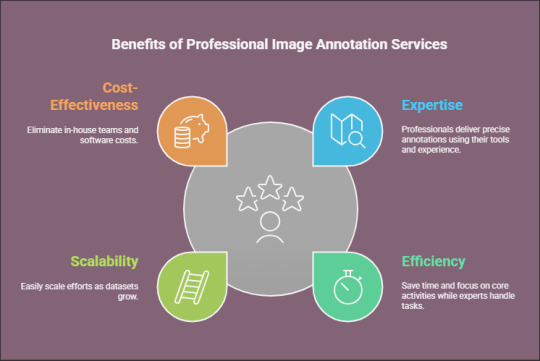

Why Choose Professional Image Annotation Services?

Outsourcing your image annotation tasks offers several benefits:

Expertise: Professionals have the tools and experience to deliver precise annotations.

Efficiency: Save time and focus on your core business activities while experts handle the data labeling.

Scalability: Easily scale your annotation efforts as your dataset grows.

Cost-Effectiveness: Eliminate the need for in-house teams and costly software investments.

Conclusion

Data labeling doesn’t have to be a bottleneck for your AI projects. By leveraging professional image annotation services like Globose Technology Solutions and others, you can ensure your models are trained on high-quality, accurately labeled datasets. This not only saves time and resources but also enhances the performance of your AI systems.

So, why struggle with data labeling when you can rely on experts to do it for you? Explore the services mentioned above and take the first step toward seamless, efficient, and accurate image annotation today.


Strategies for Efficient Video Data Acquisition in AI Initiatives

Introduction

In the field of artificial intelligence (AI), video data serves as a fundamental element for training advanced models that can interpret motion, identify objects, and recognize various activities. The increasing need for high-quality video data collection is evident in applications ranging from autonomous vehicles to surveillance systems. Nevertheless, collecting and organizing video data for AI projects is intricate and requires careful planning and implementation. This article discusses effective strategies for video data acquisition to enhance the success of your AI projects.

The Significance of Video Data Collection

Video data provides a valuable reservoir of information for AI systems:

Temporal Context: Capturing sequences of frames allows for context and motion analysis.

Practical Applications: Crucial for tasks such as object tracking, action recognition, and scene comprehension.

Improved Model Training: High-quality video data significantly enhances the precision and reliability of AI models.
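Temporal context usually reaches a model as short clips of consecutive frames. The minimal sketch below, assuming frames are indexed from 0, cuts a video into fixed-length, possibly overlapping clips; clip_len and stride are illustrative parameters rather than recommended values.

```python
def sliding_clips(num_frames, clip_len, stride):
    """Return (start, end) frame index pairs, end exclusive, for fixed-length clips."""
    if clip_len <= 0 or stride <= 0:
        raise ValueError("clip_len and stride must be positive")
    # Only full-length clips are produced; a trailing remainder shorter than clip_len is dropped
    return [(start, start + clip_len)
            for start in range(0, num_frames - clip_len + 1, stride)]


print(sliding_clips(10, clip_len=4, stride=2))
```

With 10 frames, a clip length of 4 and a stride of 2, the clips start at frames 0, 2, 4 and 6, so neighboring clips share two frames of motion context.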

Strategies for Efficient Video Data Acquisition

1. Articulate Your Objectives Precisely

Prior to initiating video data acquisition, it is essential to delineate your project objectives:

What is the intended use of the dataset?

Which artificial intelligence models will leverage the data?

What particular annotations or labels are necessary (e.g., bounding boxes, object tracking, activity recognition)?

2. Select Appropriate Equipment

The quality of your video data depends on your recording equipment:

Utilize high-definition cameras for enhanced image clarity.

Choose stabilized recording devices to minimize motion blur.

Ensure adequate lighting to improve visibility in the videos.

3. Strategy for Data Diversity

Utilizing diverse datasets enhances the performance of AI models:

Capture videos across a variety of settings (indoor, outdoor, urban, rural).

Incorporate different lighting conditions (daytime, nighttime, dusk, dawn).

Record a range of scenarios and subjects to reduce bias.
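A diversity plan is easier to enforce when every clip is tagged with its recording conditions. The sketch below counts clips per condition and reports values that are under-covered; the tag names ("setting", "lighting") and the min_count threshold are hypothetical.

```python
from collections import Counter


def coverage_gaps(clips, required, min_count):
    """Report condition values that have fewer than min_count clips.

    clips: list of dicts such as {"setting": "urban", "lighting": "night"}.
    required: dict mapping a tag name to the values the dataset must cover.
    Returns {tag: {value: count}} for every under-covered value.
    """
    gaps = {}
    for tag, values in required.items():
        counts = Counter(clip.get(tag) for clip in clips)
        # Counter returns 0 for values that never appear
        short = {value: counts[value] for value in values if counts[value] < min_count}
        if short:
            gaps[tag] = short
    return gaps
```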

4. Maintain Consistent Data Formats

Uniformity facilitates data processing and annotation:

Standardize on a single video format (e.g., MP4, AVI, or MKV) across the dataset.

Ensure consistent frame rates and resolutions throughout recordings.

Document essential metadata such as timestamps, camera angles, and locations.
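Format consistency can be checked automatically once each clip’s properties are known. This sketch assumes the properties have already been extracted (for example with a probing tool such as ffprobe) into a simple record; the expected container, frame rate and resolution are placeholder values for a hypothetical project.

```python
from dataclasses import dataclass


@dataclass
class ClipMetadata:
    path: str
    container: str      # e.g. "mp4"
    fps: float
    width: int
    height: int
    timestamp: str      # recording start time, e.g. ISO 8601
    camera_angle: str
    location: str


def check_clip(meta, expected_container="mp4", expected_fps=30.0, expected_size=(1920, 1080)):
    """Return a list of problems; an empty list means the clip matches the project spec."""
    problems = []
    if meta.container.lower() != expected_container:
        problems.append(f"container {meta.container} != {expected_container}")
    if abs(meta.fps - expected_fps) > 0.01:
        problems.append(f"fps {meta.fps} != {expected_fps}")
    if (meta.width, meta.height) != expected_size:
        problems.append(f"resolution {meta.width}x{meta.height} != "
                        f"{expected_size[0]}x{expected_size[1]}")
    # The metadata fields the guidelines ask us to document must not be empty
    for name in ("timestamp", "camera_angle", "location"):
        if not getattr(meta, name):
            problems.append(f"missing {name}")
    return problems
```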

5. Establish Quality Control Protocols

Quality is imperative in the collection of video data:

Conduct regular reviews of videos for clarity, stability, and relevance.

Eliminate or re-record videos that exhibit excessive noise, inadequate lighting, or irrelevant content.

Employ automation tools to identify potential issues during the collection process.
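As an example of the automated checks mentioned above, the sketch below flags frames that are too dark or frozen (nearly identical to the previous frame). To stay dependency-free it assumes grayscale frames given as nested lists of 0-255 values; a real pipeline would decode frames with a video library, and both thresholds are hypothetical.

```python
def mean_brightness(frame):
    """Average pixel value of a grayscale frame given as rows of 0-255 ints."""
    total = sum(sum(row) for row in frame)
    count = sum(len(row) for row in frame)
    return total / count


def mean_abs_diff(frame_a, frame_b):
    """Mean absolute per-pixel difference between two frames of the same size."""
    total = sum(abs(a - b) for row_a, row_b in zip(frame_a, frame_b)
                for a, b in zip(row_a, row_b))
    return total / sum(len(row) for row in frame_a)


def flag_frames(frames, dark_threshold=40.0, frozen_threshold=1.0):
    """Return (frame_index, reason) pairs for frames that look too dark or frozen."""
    issues = []
    for i, frame in enumerate(frames):
        if mean_brightness(frame) < dark_threshold:
            issues.append((i, "too dark"))
        # A frame almost identical to its predecessor suggests a stalled camera or feed
        if i > 0 and mean_abs_diff(frame, frames[i - 1]) < frozen_threshold:
            issues.append((i, "frozen"))
    return issues
```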

6. Ethical Practices in Data Collection

Privacy and ethical considerations are vital in video data collection:

Secure consent from individuals featured in the videos.

Remove or anonymize personal information where necessary.

Comply with local and international data privacy regulations (e.g., GDPR, CCPA).
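Removing personal information covers both the pixels (faces, license plates) and the metadata. For the metadata side, one option is to drop direct identifiers and replace subject IDs with keyed pseudonyms, sketched below with the standard library’s HMAC-SHA256. The field names and key handling are assumptions. Under the GDPR, pseudonymized data still counts as personal data, so this complements, rather than replaces, consent and anonymization of the video itself.

```python
import hashlib
import hmac


def pseudonymize(subject_id: str, secret_key: bytes) -> str:
    """Map a subject identifier to a stable pseudonym using HMAC-SHA256."""
    digest = hmac.new(secret_key, subject_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return "subj_" + digest[:16]


def scrub_record(record: dict, secret_key: bytes,
                 drop_fields=("name", "email", "phone")) -> dict:
    """Return a copy of a metadata record with direct identifiers removed or pseudonymized."""
    clean = {k: v for k, v in record.items() if k not in drop_fields}
    if "subject_id" in clean:
        # The same ID and key always give the same pseudonym, so clips stay linkable
        clean["subject_id"] = pseudonymize(clean["subject_id"], secret_key)
    return clean
```

The key should be stored separately from the dataset; anyone holding it can re-link pseudonyms to known IDs.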

7. Leverage Automation Tools

Automation streamlines large-scale video data collection:

Use drones for aerial video capture in remote or expansive areas.

Deploy IoT devices for real-time video recording in specific locations.

Utilize scripts and APIs to integrate data directly into storage pipelines.
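For the pipeline integration step, a common pattern is a manifest file that lists every stored clip, one JSON object per line (JSON Lines). Amazon SageMaker Ground Truth, for instance, reads input manifests in which each line carries a source-ref key pointing to an S3 object. The sketch below builds such a file; the bucket name and extra fields are hypothetical, and a given video labeling job type may require a different manifest layout.

```python
import json


def build_manifest(object_keys, bucket, extra=None):
    """Return JSON Lines text with one {"source-ref": "s3://bucket/key", ...} object per line.

    extra optionally maps an object key to additional fields (e.g. a camera ID).
    """
    extra = extra or {}
    lines = []
    for key in object_keys:
        entry = {"source-ref": f"s3://{bucket}/{key}"}
        entry.update(extra.get(key, {}))
        lines.append(json.dumps(entry))
    return "".join(line + "\n" for line in lines)


print(build_manifest(["clips/a.mp4"], "my-video-bucket", {"clips/a.mp4": {"camera": "north"}}))
```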

8. Annotate Your Video Data

Annotation adds context and prepares data for training:

Use professional annotation tools for tasks like object detection or activity tracking.

Ensure annotations are consistent and aligned with project requirements.

Outsource annotation tasks to expert services like GTS.AI.
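Checking that annotations align with project requirements can be partly automated by validating them against a label schema before they reach training. The sketch assumes activity annotations stored as frame ranges with an exclusive end; the allowed label set is a hypothetical project schema.

```python
ALLOWED_LABELS = {"walking", "running", "standing"}  # hypothetical project schema


def validate_annotations(annotations, num_frames, allowed=ALLOWED_LABELS):
    """Return error strings for annotations that break the project schema.

    Each annotation is a dict {"label": str, "start": int, "end": int}, end exclusive.
    """
    errors = []
    for i, ann in enumerate(annotations):
        if ann["label"] not in allowed:
            errors.append(f"#{i}: unknown label {ann['label']!r}")
        # The range must be non-empty and lie inside the clip
        if not (0 <= ann["start"] < ann["end"] <= num_frames):
            errors.append(f"#{i}: frame range {ann['start']}-{ann['end']} invalid")
    return errors
```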

Addressing Obstacles in Video Data Acquisition

Storage Needs: Video files are large, so storage solutions must scale as the dataset grows.

Processing Duration: The preprocessing of video data to ensure uniformity can be labor-intensive.

Financial Limitations: The procurement of high-quality equipment and annotation services can incur significant costs.

Mitigating Bias: Achieving diversity within datasets demands additional effort and strategic planning.
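The storage point comes down to simple arithmetic: size in bytes equals bitrate in bits per second times duration in seconds, divided by 8. The sketch assumes constant-bitrate video and decimal units; the 8 Mbit/s figure below is a hypothetical example, since real bitrates vary with codec, resolution and scene content.

```python
def storage_gb(bitrate_mbps, hours, cameras=1):
    """Estimate storage in gigabytes (10**9 bytes) for constant-bitrate video.

    bytes = bitrate (bits/s) * duration (s) / 8
    """
    bits = bitrate_mbps * 1_000_000 * hours * 3600 * cameras
    return bits / 8 / 1_000_000_000


print(storage_gb(8, hours=1))
print(storage_gb(8, hours=24, cameras=10))
```

At 8 Mbit/s, one hour from one camera is about 3.6 GB, so ten cameras recording around the clock need roughly 864 GB per day, before any backups or preprocessed copies.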

How GTS.AI Facilitates Video Data Acquisition

At GTS.AI, we are dedicated to assisting businesses and researchers in optimizing their video data collection and annotation workflows. Our offerings include:

Specialized Video Annotation: Customized to meet the specific requirements of your project, encompassing object detection, activity recognition, and more.

Data Quality Management: Upholding high standards through comprehensive validation processes.

Ethical Standards: Adhering to global data privacy regulations.

Tailored Solutions: Providing flexible and scalable services to accommodate your distinct needs.

Conclusion

The successful collection of video data serves as a crucial basis for developing AI systems capable of comprehending motion and context. By implementing the strategies presented in this guide, you can establish comprehensive video datasets that foster innovation within your projects. Collaborating with specialists such as GTS.AI helps ensure that your data collection endeavors are conducted efficiently, ethically, and with significant impact. Are you prepared to enhance your AI initiatives? Reach out to us today!


The Role of Video Data Annotation in AI Development

Introduction:

In the swiftly advancing domain of artificial intelligence (AI), the annotation of video data is crucial for the creation of effective AI models. As the appetite for AI-driven applications increases, the necessity for precisely labeled video data becomes increasingly vital. This article delves into the importance of video data annotation in AI development and its influence on the efficacy of AI models.

Defining Video Data Annotation

Video data annotation refers to the practice of labeling or tagging video content with metadata to render it comprehensible for AI systems. This process includes the identification and categorization of various components within a video frame, such as objects, actions, and scenes. These annotations empower AI models to learn from and interpret video data, thereby facilitating tasks like object detection, action recognition, and scene comprehension.

The Significance of Video Data Annotation in AI Development

Improves Model Precision: High-quality video data annotation guarantees that AI models are trained on accurately labeled data. This enhances the models' capacity to identify patterns and generate precise predictions, resulting in superior performance in practical applications.

Facilitates Automation of Complex Tasks: Video data annotation is vital for training AI models to execute intricate tasks such as autonomous driving, surveillance, and video content analysis. Annotated video data provides the essential knowledge that AI systems require to comprehend and respond to dynamic environments.

Accommodates a Variety of Use Cases: Numerous industries, from healthcare to retail, utilize video data annotation to create AI solutions customized to their unique requirements. For example, in healthcare, annotated videos can assist in surgical training and patient monitoring, while in retail, they can improve customer experiences through personalized recommendations and enhanced security surveillance.

Key Techniques in Video Data Annotation

Object Detection: The process of identifying and labeling various objects present within video frames.

Action Recognition: The task of annotating the actions executed by individuals in the video.

Scene Segmentation: The division of video content into meaningful segments based on scene changes.

Temporal Labeling: The act of marking events or actions that are specific to certain timeframes within a video timeline.
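Temporal labels are often stored as time ranges and expanded to one label per frame for training. A minimal sketch, assuming a constant frame rate and that frame i is shown at time i / fps; the label names and the "background" default are hypothetical.

```python
import math


def segments_to_frame_labels(segments, num_frames, fps, default="background"):
    """Expand (label, start_sec, end_sec) segments into one label per frame.

    Frame i gets a segment's label when start_sec <= i / fps < end_sec.
    Later segments overwrite earlier ones where they overlap.
    """
    labels = [default] * num_frames
    for label, start_sec, end_sec in segments:
        first = max(0, math.ceil(start_sec * fps))         # first frame at or after start
        last = min(num_frames, math.ceil(end_sec * fps))   # exclusive bound
        for i in range(first, last):
            labels[i] = label
    return labels


print(segments_to_frame_labels([("walking", 0.5, 1.25), ("running", 1.25, 2.0)], 10, fps=4))
```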

Challenges in Video Data Annotation

Despite its critical role, video data annotation faces numerous challenges:

High Volume of Data: The vast amounts of data generated by videos necessitate extensive manual annotation, rendering the process both time-consuming and labor-intensive.

Consistency and Accuracy: Maintaining consistency and accuracy across annotations is essential for training dependable AI models. This requires the establishment of clear guidelines and quality control measures.

Evolving AI Needs: As AI models advance in sophistication, the complexity of annotations also escalates, demanding ongoing updates and refinements to annotation methodologies.
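For the consistency challenge, a standard measure of agreement between two annotators who labeled the same items is Cohen’s kappa, which corrects raw agreement p_o for the agreement p_e expected by chance: kappa = (p_o - p_e) / (1 - p_e). A minimal sketch with illustrative activity labels follows.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement from each annotator's own label frequencies
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label throughout; kappa is undefined, treat as full agreement
        return 1.0
    return (observed - expected) / (1 - expected)


a = ["walk", "walk", "run", "run", "walk", "run"]
b = ["walk", "run", "run", "run", "walk", "walk"]
print(round(cohens_kappa(a, b), 2))
```

Here the annotators agree on 4 of 6 clips (p_o of about 0.67), but chance agreement is 0.5, so kappa is only about 0.33. A low kappa like this is often a sign that the annotation guidelines need tightening.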

Leveraging Professional Video Data Annotation Services

To address these challenges, many organizations opt for professional video data annotation services. Companies such as GTS.AI provide comprehensive video annotation solutions, utilizing advanced tools and skilled annotators to produce high-quality labeled data. By outsourcing video data annotation, businesses can concentrate on their primary AI development activities while ensuring their models are trained on optimal data.

Conclusion

Video data annotation serves as a fundamental element in AI development, allowing models to effectively interpret and learn from video content. As the applications of AI continue to proliferate across various industries, the need for accurate and reliable video annotation services will increase. Investing in professional annotation services can significantly improve the accuracy and performance of AI models, fostering innovation and efficiency in AI-driven solutions.
