#data annotation services

Explore tagged Tumblr posts

Text

What is a Data pipeline for Machine Learning?

As machine learning technologies continue to advance, the need for high-quality data has become increasingly important. Data is the lifeblood of computer vision applications, as it provides the foundation for machine learning algorithms to learn and recognize patterns within images or video. Without high-quality data, computer vision models will not be able to effectively identify objects, recognize faces, or accurately track movements.

Machine learning algorithms require large amounts of data to learn and identify patterns, and this is especially true for computer vision, which deals with visual data. By providing annotated data that identifies objects within images and provides context around them, machine learning algorithms can more accurately detect and identify similar objects within new images.

Moreover, data is also essential in validating computer vision models. Once a model has been trained, it is important to test its accuracy and performance on new data. This requires additional labeled data to evaluate the model's performance. Without this validation data, it is impossible to accurately determine the effectiveness of the model.

Data Requirement at multiple ML stage

Data is required at various stages in the development of computer vision systems.

Here are some key stages where data is required:

Training: In the training phase, a large amount of labeled data is required to teach the machine learning algorithm to recognize patterns and make accurate predictions. The labeled data is used to train the algorithm to identify objects, faces, gestures, and other features in images or videos.

Validation: Once the algorithm has been trained, it is essential to validate its performance on a separate set of labeled data. This helps to ensure that the algorithm has learned the appropriate features and can generalize well to new data.

Testing: Testing is typically done on real-world data to assess the performance of the model in the field. This helps to identify any limitations or areas for improvement in the model and the data it was trained on.

Re-training: After testing, the model may need to be re-trained with additional data or re-labeled data to address any issues or limitations discovered in the testing phase.

In addition to these key stages, data is also required for ongoing model maintenance and improvement. As new data becomes available, it can be used to refine and improve the performance of the model over time.

Types of Data used in ML model preparation

The team has to work on various types of data at each stage of model development.

Streamline, structured, and unstructured data are all important when creating computer vision models, as they can each provide valuable insights and information that can be used to train the model.

Streamline data refers to data that is captured in real-time or near real-time from a single source. This can include data from sensors, cameras, or other monitoring devices that capture information about a particular environment or process.

Structured data, on the other hand, refers to data that is organized in a specific format, such as a database or spreadsheet. This type of data can be easier to work with and analyze, as it is already formatted in a way that can be easily understood by the computer.

Unstructured data includes any type of data that is not organized in a specific way, such as text, images, or video. This type of data can be more difficult to work with, but it can also provide valuable insights that may not be captured by structured data alone.

When creating a computer vision model, it is important to consider all three types of data in order to get a complete picture of the environment or process being analyzed. This can involve using a combination of sensors and cameras to capture streamline data, organizing structured data in a database or spreadsheet, and using machine learning algorithms to analyze and make sense of unstructured data such as images or text. By leveraging all three types of data, it is possible to create a more robust and accurate computer vision model.

Data Pipeline for machine learning

The data pipeline for machine learning involves a series of steps, starting from collecting raw data to deploying the final model. Each step is critical in ensuring the model is trained on high-quality data and performs well on new inputs in the real world.

Below is the description of the steps involved in a typical data pipeline for machine learning and computer vision:

Data Collection: The first step is to collect raw data in the form of images or videos. This can be done through various sources such as publicly available datasets, web scraping, or data acquisition from hardware devices.

Data Cleaning: The collected data often contains noise, missing values, or inconsistencies that can negatively affect the performance of the model. Hence, data cleaning is performed to remove any such issues and ensure the data is ready for annotation.

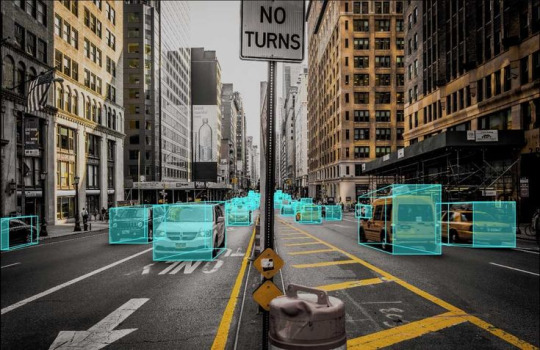

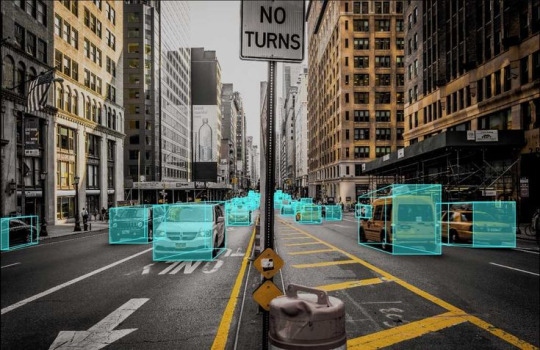

Data Annotation: In this step, experts annotate the images with labels to make it easier for the model to learn from the data. Data annotation can be in the form of bounding boxes, polygons, or pixel-level segmentation masks.

Data Augmentation: To increase the diversity of the data and prevent overfitting, data augmentation techniques are applied to the annotated data. These techniques include random cropping, flipping, rotation, and color jittering.

Data Splitting: The annotated data is split into training, validation, and testing sets. The training set is used to train the model, the validation set is used to tune the hyperparameters and prevent overfitting, and the testing set is used to evaluate the final performance of the model.

Model Training: The next step is to train the computer vision model using the annotated and augmented data. This involves selecting an appropriate architecture, loss function, and optimization algorithm, and tuning the hyperparameters to achieve the best performance.

Model Evaluation: Once the model is trained, it is evaluated on the testing set to measure its performance. Metrics such as accuracy, precision, recall, and score are computed to assess the model's performance.

Model Deployment: The final step is to deploy the model in the production environment, where it can be used to solve real-world computer vision problems. This involves integrating the model into the target system and ensuring it can handle new inputs and operate in real time.

TagX Data as a Service

Data as a service (DaaS) refers to the provision of data by a company to other companies. TagX provides DaaS to AI companies by collecting, preparing, and annotating data that can be used to train and test AI models.

Here’s a more detailed explanation of how TagX provides DaaS to AI companies:

Data Collection: TagX collects a wide range of data from various sources such as public data sets, proprietary data, and third-party providers. This data includes image, video, text, and audio data that can be used to train AI models for various use cases.

Data Preparation: Once the data is collected, TagX prepares the data for use in AI models by cleaning, normalizing, and formatting the data. This ensures that the data is in a format that can be easily used by AI models.

Data Annotation: TagX uses a team of annotators to label and tag the data, identifying specific attributes and features that will be used by the AI models. This includes image annotation, video annotation, text annotation, and audio annotation. This step is crucial for the training of AI models, as the models learn from the labeled data.

Data Governance: TagX ensures that the data is properly managed and governed, including data privacy and security. We follow data governance best practices and regulations to ensure that the data provided is trustworthy and compliant with regulations.

Data Monitoring: TagX continuously monitors the data and updates it as needed to ensure that it is relevant and up-to-date. This helps to ensure that the AI models trained using our data are accurate and reliable.

By providing data as a service, TagX makes it easy for AI companies to access high-quality, relevant data that can be used to train and test AI models. This helps AI companies to improve the speed, quality, and reliability of their models, and reduce the time and cost of developing AI systems. Additionally, by providing data that is properly annotated and managed, the AI models developed can be exp

2 notes

·

View notes

Text

Image Annotation Services

Image annotation services play a crucial role in training AI and machine learning models by accurately labeling visual data. These services involve tagging images with relevant information to help algorithms recognize objects, actions, or environments. High-quality image annotation services ensure better model performance in autonomous driving, facial recognition, and medical imaging applications. Whether it’s bounding boxes, polygons, or semantic segmentation, precise annotations are essential for AI accuracy. Partnering with expert providers guarantees scalable and reliable image labeling solutions.

0 notes

Text

Abode Enterprise

Abode Enterprise is a reliable provider of data solutions and business services, with over 15 years of experience, serving clients in the USA, UK, and Australia. We offer a variety of services, including data collection, web scraping, data processing, mining, and management. We also provide data enrichment, annotation, business process automation, and eCommerce product catalog management. Additionally, we specialize in image editing and real estate photo editing services.

With more than 15 years of experience, our goal is to help businesses grow and become more efficient through customized solutions. At Abode Enterprise, we focus on quality and innovation, helping organizations make the most of their data and improve their operations. Whether you need useful data insights, smoother business processes, or better visuals, we’re here to deliver great results.

#Data Collection Services#Web Scraping Services#Data Processing Service#Data Mining Services#Data Management Services#Data Enrichment Services#Business Process Automation Services#Data Annotation Services#Real Estate Photo Editing Services#eCommerce Product Catalog Management Services#Image Editing service

1 note

·

View note

Text

Drive Innovation with Custom Data Annotation Services Built for Your Success

In the ever-evolving world of artificial intelligence (AI) and machine learning (ML), data is the lifeblood that powers intelligent systems. The quality of data used for training AI models determines their success, and one crucial aspect of data preparation is Data Annotation Services. Through accurate and efficient annotation, businesses can transform raw data into structured formats, allowing AI systems to make predictions, detect patterns, and provide actionable insights.

What Are Data Annotation Services?

Data Annotation Services involve labeling or tagging raw data to make it usable for machine learning algorithms. This process includes identifying objects, categorizing text, tagging images, and providing contextual information across various data types, such as text, audio, video, and images. Annotations enable machines to understand data in the same way humans do, facilitating the learning process that drives AI systems.

Custom data annotation is designed to cater to specific industry needs. Whether you’re in healthcare, retail, finance, or automotive, customized solutions ensure your data is appropriately labeled to achieve optimal AI model performance.

Why Custom Data Annotation is Crucial

While there are generic data annotation solutions available, the complexity of AI applications often requires tailored services to ensure that data is accurately prepared for your specific use case. Customized data annotation addresses:

Domain Expertise: Annotators with knowledge of your industry can ensure the annotations are contextually relevant and accurate. For example, in healthcare, annotations might require familiarity with medical terms, while in retail, product categorization may involve understanding nuances in consumer behavior.

Precision and Quality: Custom services ensure that your data is annotated with high accuracy, which improves the overall performance of your AI models. High-quality annotations minimize the risk of errors, leading to better predictions and more reliable outputs from your machine learning models.

Scalability: As your data requirements grow, customized services can scale accordingly. Whether you're handling millions of images, extensive customer data, or large video datasets, a tailored solution ensures you get the volume of annotations needed in the time frame required.

Time Efficiency: Custom annotation processes are designed to optimize workflows, speeding up the process of data preparation and reducing the time to deploy AI applications. By working with an experienced partner, you can ensure that your team focuses on core business tasks while annotation is handled efficiently.

Key Benefits of Custom Data Annotation Services

1. Enhanced Accuracy for AI Training

Customized annotation ensures that the data fed into AI models is labeled precisely. This accuracy improves the training phase, allowing AI systems to learn faster and more effectively. Whether for image recognition, speech-to-text translation, or sentiment analysis, high-quality data annotations are crucial for building reliable AI applications.

2. Cost Efficiency

Investing in accurate data annotation from the start prevents the need for expensive retraining or correction of AI models later on. Custom services help identify and address potential issues early in the process, leading to better long-term outcomes and reduced overall costs.

3. Improved Model Performance

Customized data annotations lead to better model outcomes, as AI systems trained on quality data tend to perform better in real-world scenarios. With properly annotated data, machine learning models can achieve higher levels of accuracy, efficiency, and reliability.

4. Increased Flexibility

Different industries require unique data annotation solutions. Custom services allow you to define the criteria for your annotations, ensuring that your datasets are tailored to meet the specific needs of your business. This flexibility allows you to stay competitive and adapt quickly to new challenges and opportunities in your sector.

5. Data Security and Compliance

Data privacy and security are major concerns, particularly in industries like healthcare and finance. Customized annotation services ensure that your data is handled securely and in compliance with relevant regulations, such as GDPR or HIPAA, to protect sensitive information.

Applications of Custom Data Annotation

Custom data annotation has applications across a wide variety of industries:

Healthcare: Annotating medical images, patient records, and diagnostic data to train AI models for disease detection, medical research, and more.

E-Commerce: Categorizing products, analyzing customer reviews, and providing personalized recommendations based on annotated data.

Autonomous Vehicles: Annotating images and videos for object detection, road sign recognition, and other tasks that enable self-driving vehicles to navigate safely.

Finance: Labeling financial transactions, risk assessments, and fraud detection datasets to train machine learning algorithms for better predictions.

Entertainment: Annotating content for recommendation systems, video indexing, and content moderation.

How to Choose the Right Data Annotation Partner

To achieve the best results, selecting the right data annotation partner is essential. Here are some key factors to consider:

Industry Experience: Ensure your provider has experience in your specific industry. They should understand the nuances of your data and the type of annotation required for optimal results.

Quality Assurance: The annotation process should include stringent quality control measures. This ensures that the data provided is accurate, consistent, and ready for AI model training.

Advanced Tools and Technology: Look for a partner who uses cutting-edge tools, such as AI-assisted annotation, which enhances both speed and accuracy.

Security: Make sure that your data is handled securely and in compliance with privacy regulations, particularly if you're working with sensitive or confidential information.

The Future of Data Annotation

The future of data annotation is bright, with technology advancing rapidly. AI-assisted tools and automation are already transforming the way data is annotated, significantly speeding up the process. However, human annotators still play a vital role in ensuring quality, especially for complex and nuanced data.

With the rise of 3D and multi-modal data, there is an increasing demand for more sophisticated annotation methods. The future will see more industries relying on customized annotation services to drive innovation and harness the full potential of AI.

Conclusion

Custom Data Annotation Services are a key driver of AI success. By ensuring your data is accurately labeled, you enable machine learning models to perform at their best, improving business outcomes and unlocking new possibilities. Whether you're in healthcare, finance, retail, or any other industry, tailored annotation services can provide the edge needed to stay competitive and innovate.

Ready to leverage the power of custom Data Annotation Services for your business? Contact us today to learn how our solutions can help you drive innovation and success in your AI initiatives. Together, we can unlock the full potential of your data.

Visit Us, https://www.tagxdata.com/

0 notes

Text

Achieve exceptional AI/ML model performance with quality data annotation services. Enhance your algorithms with accurately labeled datasets tailored to your business needs. Properly annotated data ensures your machine learning models deliver precise and reliable results, boosting efficiency and innovation. Leverage expert services to optimize your AI development. Explore how data annotation accelerates smart model building and revolutionizes your AI capabilities.

0 notes

Text

Top 5 Applications of Video Annotation Services Across Industries

In an era where data is the backbone of innovation, Video Annotation services have emerged as a critical tool in numerous industries. The ability to train machine learning models using labeled data has revolutionized the healthcare and retail sectors. By providing labeled datasets, Data Annotation services ensure that algorithms can accurately interpret visual information, leading to smarter automation, enhanced decision-making, and improved efficiency.

Here, we explore the top five applications of Video Annotation services across diverse industries, showcasing how they transform processes and drive advancements.

1. Autonomous Vehicles

Autonomous vehicles heavily rely on machine learning algorithms to interpret their surroundings and make split-second decisions. Annotation services play an essential role in this by helping train these algorithms. Detailed video labeling identifies and categorizes objects like pedestrians, traffic signals, vehicles, and road signs.

Video Annotation services ensure that vehicles can "see" and understand the environment in this domain. By consistently feeding annotated data into algorithms, these cars become safer, more reliable, and capable of handling complex road conditions. The precision of annotations ensures that the vehicle can differentiate between objects such as cyclists, roadblocks, or sudden obstacles, significantly reducing the risk of accidents.

2. Healthcare and Medical Research

The healthcare industry is another beneficiary of Data Annotation services. Video data captured during surgeries or diagnostic procedures, such as MRI scans or ultrasound footage, can be annotated to highlight specific areas of interest. This aids in training AI models to detect anomalies, classify diseases, and predict outcomes.

In the realm of surgical robotics, Video Annotation services are invaluable. They assist in recognizing patterns during surgical procedures, enhancing robotic precision, and ensuring better patient outcomes. Furthermore, annotated medical videos can train AI systems for tasks such as tumor detection, making diagnostics faster and more accurate.

3. Retail and Customer Insights

Retailers leverage Video Annotation services to analyze customer behavior, optimize store layouts, and enhance the shopping experience. By annotating in-store surveillance footage, retailers can monitor traffic patterns, identify popular product sections, and detect customer engagement with certain products.

This information allows businesses to refine their marketing strategies, improve product placement, and optimize staffing decisions. Additionally, with advancements in personalized marketing, Data Annotation services help retailers categorize customers based on age, gender, and behavior, enabling them to offer tailored shopping experiences that increase sales and customer satisfaction.

4. Security and Surveillance

Security agencies utilize Video Annotation services to enhance surveillance systems and boost public safety. Annotated video footage enables AI-driven systems to detect unusual behavior, recognize individuals of interest, and track movement patterns in real-time.

For instance, security teams can use annotated video feeds in large public gatherings to identify potential threats or abnormal behavior, ensuring faster response times. Moreover, Annotation services are instrumental in facial recognition, vehicle identification, and crowd management, making security systems far more efficient than traditional methods.

In forensic investigations, annotated video footage accurately analyzes events, aiding law enforcement agencies in crime prevention and investigation.

5. Sports Analytics

Sports teams and coaches increasingly adopt Video Annotation services to analyze player performance, game tactics, and opponent strategies. Annotating match footage categorizes and analyzes key moments such as goals, fouls, player movements, and tactical formations.

This application of Data Annotation services goes beyond simple performance tracking. Advanced AI systems can predict injury risks by analyzing player movements, suggest optimal strategies by reviewing opponent plays, and enhance player training by identifying areas for improvement. The ability to break down game footage into granular details allows coaches and analysts to make data-driven decisions that can significantly impact a game's outcome.

Conclusion

The transformative power of Video Annotation services is evident across multiple industries. From enhancing road safety in autonomous vehicles to improving patient outcomes in healthcare, Data Annotation services play a pivotal role in unlocking the potential of machine learning and AI technologies. As industries evolve, the demand for precise, efficient, and high-quality Annotation services will only increase, making them indispensable for future innovation.

These applications represent just the tip of the iceberg, as video annotation continues to open new possibilities and redefine how industries function.

0 notes

Text

Lidar Annotation Services

Elevate your Lidar data precision with SBL's specialized Lidar Annotation Services. Our detailed 3D point cloud annotations drive superior accuracy in object detection and localization for autonomous systems and robotics. Partner with us to advance your AI initiatives seamlessly. Read more at https://www.sblcorp.ai/services/data-annotation-services/lidar-annotation-services/

0 notes

Text

Data Annotation Services

In the realm of artificial intelligence, Data Annotation Services play a pivotal role. These services meticulously label data, transforming raw information into structured formats that machine learning algorithms can comprehend. The precision of annotations directly impacts the efficacy of AI models, making this process indispensable.

Accurate Data Annotation Services ensure that AI systems can recognize patterns, interpret nuances, and make informed decisions. Whether it’s tagging images for computer vision applications, transcribing audio for speech recognition, or categorizing text for natural language processing, the quality of data annotation is paramount.

Expert annotators utilize sophisticated tools and techniques to handle diverse data types. They bring a nuanced understanding of context, ensuring that every piece of data is correctly labeled. This meticulous attention to detail accelerates AI training, enhances model accuracy, and ultimately drives innovation.

By leveraging professional Data Annotation Services, businesses can unlock the full potential of their AI initiatives. High-quality annotated data is the bedrock upon which powerful, intelligent systems are built, paving the way for advancements in technology and automation. For more information, visit this resource.

0 notes

Text

Challenges and Best Practices in Data Annotation

Data annotation is a crucial step in training machine learning models, but it comes with its own set of challenges. Addressing these challenges effectively through best practices can significantly enhance the quality of the resulting AI models.

Challenges in Data Annotation

Consistency and Accuracy: One of the major challenges is ensuring consistency and accuracy in annotations. Different annotators might interpret data differently, leading to inconsistencies. This can degrade the performance of the machine learning model.

Scalability: Annotating large datasets manually is time-consuming and labor-intensive. As datasets grow, maintaining quality while scaling up the annotation process becomes increasingly difficult.

Subjectivity: Certain data, such as sentiment in text or complex object recognition in images, can be highly subjective. Annotators’ personal biases and interpretations can affect the consistency of the annotations.

Domain Expertise: Some datasets require specific domain knowledge for accurate annotation. For instance, medical images need to be annotated by healthcare professionals to ensure correctness.

Bias: Bias in data annotation can stem from the annotators' cultural, demographic, or personal biases. This can result in biased AI models that do not generalize well across different populations.

Best Practices in Data Annotation

Clear Guidelines and Training: Providing annotators with clear, detailed guidelines and comprehensive training is essential. This ensures that all annotators understand the criteria uniformly and reduces inconsistencies.

Quality Control Mechanisms: Implementing quality control mechanisms, such as inter-annotator agreement metrics, regular spot-checks, and using a gold standard dataset, can help maintain high annotation quality. Continuous feedback loops are also critical for improving annotator performance over time.

Leverage Automation: Utilizing automated tools can enhance efficiency. Semi-automated approaches, where AI handles simpler tasks and humans review the results, can significantly speed up the process while maintaining quality.

Utilize Expert Annotators: For specialized datasets, employ domain experts who have the necessary knowledge and experience. This is particularly important for fields like healthcare or legal documentation where accuracy is critical.

Bias Mitigation: To mitigate bias, diversify the pool of annotators and implement bias detection mechanisms. Regular reviews and adjustments based on detected biases are necessary to ensure fair and unbiased data.

Iterative Annotation: Use an iterative process where initial annotations are reviewed and refined. Continuous cycles of annotation and feedback help in achieving more accurate and reliable data.

For organizations seeking professional assistance, companies like Data Annotation Services provide tailored solutions. They employ advanced tools and experienced annotators to ensure precise and reliable data annotation, driving the success of AI projects.

#datasets for machine learning#Data Annotation services#data collection#AI for machine learning#business

0 notes

Text

Data Annotation Services

Data Annotation Services

.

Who We Are

We at Evertech BPO services are dedicated for offering our clients with industry best outsourcing services. They are able to get success in their endeavors while our experts are taking care of the data management requirements. For your success, quality focused and client centric solutions are offered by us within the expected timeline.

.

consistent efforts at building long-term relationships with our clients backed by a commitment to delivering on-time and qualitative services have been pivotal to our consistent growth above market standards.

.

Why Choose Us

TRUSTED OUTSOURCING PARTNER

.

We at Evertech BPO is working with the vision to become the one stop destination for all the requirements of clients. We are dedicated to offer our clients with value. Best practices are implemented by us to offer clients with cost effective solutions within the anticipated time frame.

.

Each and every aspect of the project is fulfilled based on the demands of clients. The team at Evertech BPO has the knowledge, experience, tools and technology to provide excellent services to clients. We are dedicated in serving the client with the services that can meet their demands and satisfy them.

.

Our services :

* Data Entry Services

* Data Processing Services

* Data Conversion Services

* Data Enrichment & Data Enhancement Services

* Data Annotation Services

* Web Research Services

* Photo Editing Services

* Scanning Services

* Virtual Assistant Services

* Web Scrapping Services

.

Contact us :

We have a expert teams don’t hesite to contact us

Phone Number :�� +91 90817 77827

.

Email Address : [email protected]

.

Website : https://www.evertechbpo.com/

Contact us : https://www.evertechbpo.com/contact-us/

0 notes

Text

https://justpaste.it/exzat

#Data Annotation Services#Images annotation#Videos annotation#Videos annotation Services#Images annotation Services#Data collection company#Data collection#datasets#data collection services

0 notes

Text

https://pixelannotation.mystrikingly.com/blog/exploring-the-use-of-2d-bounding-boxes-in-retail-and-e-commerce-applications

0 notes

Text

What is Data Annotation Tech?

Data annotation technology refers to the process of labeling or tagging data to make it understandable and usable for machine learning algorithms. In the context of machine learning and artificial intelligence, annotated data is essential for training models. Data annotation involves adding metadata, such as labels, tags, or other annotations, to different types of data, such as images, text, audio, or video.

Recommended to Read: Data Annotation Tech: A Better Option For Career

Data annotation aims to create a labeled dataset that a machine learning model can use to learn patterns and make predictions or classifications. Different types of data annotation techniques are used depending on the nature of the data and the task at hand. Some common data annotation methods include:

Image Annotation: This involves labeling objects or regions in images, such as bounding boxes around objects, segmentation masks, or key points.

Text Annotation: For natural language processing tasks, text annotation may involve labeling entities, sentiments, or relationships within the text.

Audio Annotation: In audio processing, data annotation may include labeling specific sounds or segments within an audio file.

Video Annotation: Similar to image annotation, video annotation involves labeling objects or actions in video frames.

3D Point Cloud Annotation: In tasks related to computer vision in three-dimensional space, annotating point clouds involves labeling specific points or objects in a 3D environment.

Data annotation is a crucial step in the machine learning pipeline, as it provides the supervised learning algorithms with labeled examples to learn from. The quality and accuracy of the annotations directly impact the performance of the trained model. There are various tools and platforms available to streamline the data annotation process, and the field continues to evolve with advancements in computer vision and natural language processing technologies.

1 note

·

View note

Text

Enhance AI Accuracy with Expert Data Annotation Services for Your Business Needs

In today’s data-driven world, businesses rely heavily on artificial intelligence (AI) to improve efficiency, drive innovation, and maintain a competitive edge. However, the accuracy and performance of AI models are only as good as the data they are trained on. This is where data annotation services play a crucial role in the development and enhancement of AI systems.

Data annotation refers to the process of labeling or tagging data to provide context and structure for machine learning (ML) algorithms. It is the foundation of AI training, helping machines interpret and understand information accurately. By utilizing expert data annotation, businesses can improve the quality of their AI models, ensuring that they operate effectively and make more precise decisions.

Why Data Annotation Services Matter for AI Accuracy

Foundation for Machine Learning Models

AI systems, particularly machine learning models, rely on large datasets to learn patterns, make predictions, and provide valuable insights. However, these systems cannot function effectively without properly labeled data. Data annotation is essential because it turns raw data into structured information that machine learning models can understand. Whether it’s image recognition, natural language processing, or speech recognition, accurate annotations are critical for training models to achieve high performance.

Improved AI Accuracy

Data annotation directly impacts the accuracy of AI algorithms. The better the quality of annotations, the more precise the AI model’s predictions will be. Whether you're working with text, images, or videos, accurate annotations ensure that the AI can learn the correct features, leading to better decision-making, reduced errors, and higher precision in results.

Scalability of AI Projects As businesses scale their AI projects, the amount of data they need to process grows exponentially. Without the support of expert, handling vast quantities of data with precision becomes a daunting task. Annotation services provide the necessary infrastructure to manage and label data on a large scale, ensuring that AI systems remain efficient as they expand.

Types of Data Annotation Services

Image Annotation

Bounding Boxes: A rectangular box is drawn around specific objects within an image. This is useful for object detection tasks, such as identifying cars, animals, or faces in images.

Semantic Segmentation: This technique involves labeling each pixel in an image to identify and separate different objects or regions. It’s often used in tasks like autonomous vehicle navigation or medical image analysis.

Keypoint Annotation: Points are annotated on important features in an image (such as facial features or hand gestures) to train models in recognizing human actions, poses, or objects.

Text Annotation

Entity Recognition: Involves labeling specific pieces of text as entities (e.g., names, dates, locations) for applications like chatbots, voice assistants, and content extraction systems.

Sentiment Annotation: Labels are applied to text to indicate the sentiment expressed (positive, negative, or neutral). This is useful for sentiment analysis in customer feedback or social media monitoring.

Part-of-Speech Tagging: Words in a sentence are labeled with their grammatical roles, which is essential for applications like natural language processing (NLP) and text translation.

Audio Annotation

Speech-to-Text Transcription: This involves converting spoken language into written text. It is widely used in virtual assistants, transcription services, and voice recognition systems.

Sound Event Detection: Labels are added to specific sound events in audio files, like identifying the sound of a doorbell or a phone ringing. This is used in surveillance systems or automated monitoring applications.

Video Annotation

Object Tracking: Annotating moving objects across frames in a video to help AI systems track objects over time. This is essential in applications like surveillance, autonomous driving, and sports analytics.

Action Recognition: Identifying and tagging actions or activities in video clips, such as walking, running, or waving. This technique is commonly used in security surveillance, human-computer interaction, and sports analytics.

How Data Annotation Services Enhance AI Models

Increased Efficiency in AI Training

Annotating data efficiently and accurately saves time during the AI training process. High-quality annotated datasets allow machine learning algorithms to train faster and more effectively, producing models that are ready for deployment in a shorter time.

Customization for Business-Specific Needs

Expert provide customized solutions based on the unique needs of your business. Whether you are developing a healthcare application, a retail recommendation system, or a fraud detection system, annotated data tailored to your specific use case helps create more accurate models that are optimized for your business objectives.

Better Accuracy in Predictions and Decisions

Accurate data annotations ensure that AI models make better predictions, leading to smarter decisions. For instance, in an e-commerce application, annotated data can help the AI understand user preferences, which can then be used to personalize product recommendations, enhancing customer experience and boosting sales.

Improved Model Validation

Data annotation also help in validating AI models by providing clear examples of correct and incorrect outputs. These examples allow businesses to fine-tune their AI models, ensuring that they operate at peak performance. Regular validation and re-annotation can help maintain the effectiveness of AI systems over time.

How to Choose the Right Data Annotation Services

Industry Expertise

Whether it’s healthcare, retail, finance, or autonomous driving, choosing a provider with domain-specific knowledge ensures that the annotations are highly relevant and accurate for your AI needs.

Quality Assurance

Ensure the service provider offers stringent quality control processes to maintain the accuracy and consistency of annotations. This includes double-checking annotations, using experienced annotators, and having mechanisms in place for error detection and correction.

Scalability and Speed

As your business grows, the amount of data you need to annotate will likely increase. Choose a provider that can scale their services and maintain speed without sacrificing quality. A reliable data annotation service will be able to handle large datasets efficiently and meet tight deadlines.

Cost-Effectiveness

While quality should never be compromised, it's also essential to consider the cost-effectiveness of the data annotation services. Look for a provider that offers competitive pricing while ensuring top-notch results.

Conclusion

In conclusion, data annotation services are crucial for enhancing the accuracy of AI models. By turning raw data into structured, labeled information, businesses can develop AI systems that deliver more accurate predictions, better customer experiences, and smarter decision-making. Whether you're building a computer vision model, natural language processing system, or audio recognition tool, accurate data annotation ensures that your AI solutions are capable of performing at their best.

To ensure the best possible outcomes for your AI projects, partner with a trusted data annotation service provider that understands your industry needs and delivers high-quality annotations efficiently. Start enhancing the accuracy of your AI models today—contact a professional data annotation service provider and take your AI initiatives to the next level.

Visit Us, https://www.tagxdata.com/

0 notes

Text

Data annotation is the backbone of machine learning, ensuring models are trained with accurate, labeled datasets. From text classification to image recognition, data annotation transforms raw data into actionable insights. Explore its importance, methods, and applications in AI advancements. Learn how precise annotations fuel intelligent systems and drive innovation in diverse industries.

0 notes