#cloudml


Photo

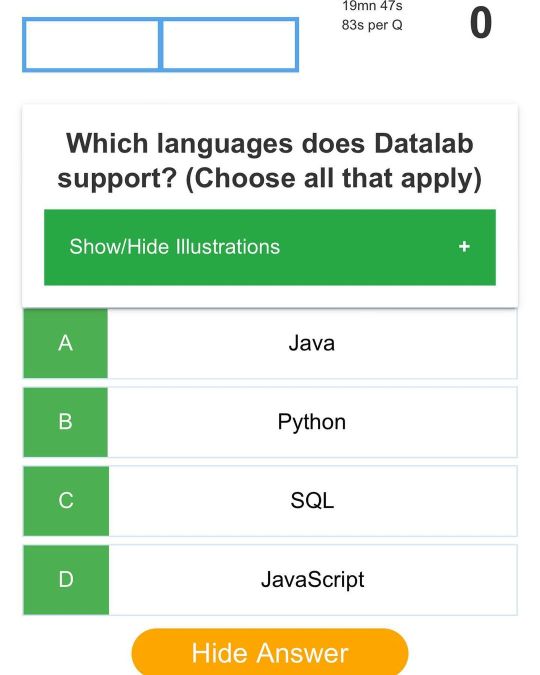

GCP Professional Machine Learning Engineer Quizzes, Practice Exams: Framing, Architecting, Designing, Developing ML Problems & Solutions ML Jobs Interview Q&A

iOS: https://apps.apple.com/ca/app/gcp-machine-learning-eng-pro/id1611101916

Windows: https://www.microsoft.com/en-ca/p/gcpprofmachinelearningengineer/9nf14pr19bx5

Use this app to learn about machine learning on GCP and prepare for the GCP Professional Machine Learning Engineer exam. The GCP Professional Machine Learning Engineer certification validates expertise in building, training, tuning, and deploying machine learning (ML) models on GCP.

The app provides hundreds of quizzes and practice exams about:

- Machine Learning Operation on GCP
- Framing ML problems
- Architecting ML solutions
- Designing data preparation and processing systems
- Developing ML models
- Modelling
- Data Engineering
- Computer Vision
- Exploratory Data Analysis
- ML implementation & Operations
- Machine Learning Basics Questions and Answers
- Machine Learning Advanced Questions and Answers
- Scorecard
- Countdown timer
- Machine Learning Cheat Sheets
- Machine Learning Interview Questions and Answers
- Machine Learning Latest News

The app covers machine learning basics and advanced topics, including monitoring, optimizing, and maintaining ML solutions; automating and orchestrating ML pipelines; NLP; modelling; etc.

#GCPML #MachineLEarning #GCPProfessionalMachineLearningEngineer #GoogleMl #CloudMl #NLP #AI #GCpAI https://www.instagram.com/p/Ca8bZVMJUos/?utm_medium=tumblr


Text

Cloud ML Engine Your Friend on cloud

What we are doing here: a little theory (not of relativity, but of Cloud ML Engine), a bit of TensorFlow (not Stack Overflow), and hands-on practice in the following:

Create a TensorFlow training application and validate it locally.

Run your training job on a single worker instance in the cloud.

Run your training job as a distributed training job in the cloud.

Optimize your hyperparameters by using hyperparameter tuning.

Deploy a model…

View On WordPress


Text

Fwd: Job: Washington.MachineLearningEvolution

Begin forwarded message:

> From: [email protected]
> Subject: Job: Washington.MachineLearningEvolution
> Date: 18 November 2021 at 05:19:18 GMT
> To: [email protected]
>
> Hello EvolDir Members:
>
> Hiring Now: NSF-funded Consultant or Postdoctoral Fellow (1 year, with potential for extension), Washington, D.C., USA; remote work space possible
>
> Project title: NSF Convergence Accelerator Track E: Innovative seafood traceability network for sustainable use, improved market access, and enhanced blue economy
>
> Project Overview: This proposal will build a cross-cutting traceability network to accelerate the path towards accurate and inclusive monitoring and management of marine bioresources, whose sustainability is vital to feed the global population. Leveraging wide-ranging expertise in fisheries science, marine biology, environmental anthropology, computer science, trade policy, and the fisheries industry, we will develop a powerful tool to achieve long-lasting & transferable solutions. Addressing the global challenge of feeding the human population will require the ocean as a solution.
>
> This NSF Convergence Accelerator project will:
>
> 1. Develop a prototype traceability tool that allows affordable identification of species and area of capture for wild octopus fisheries within the United States and abroad using our proposed machine learning (ML) model “SeaTraceBlueNet” trained on legacy data of environmental metadata, species occurrence and images;
>
> 2. Develop a community-based citizen-science network (fishers, researchers, industry partners, students, etc.) to gather new data (images, metadata and environmental DNA (eDNA)), train on and test the portable eDNA kits and SeaTraceBlueNet dashboard prototype to build the collaborative capacity to establish a standardized traceability system; and,
>
> 3. Set a system in place to connect traceability, sustainability and legality to support the development of a blue economy around the octopus value chain, incorporating the best practices and existing standards from stakeholders.
>
> Background and skills sought:
>
> Expertise (5+ years of combined work and/or academic experience) preferably in one of the following fields: Bioinformatics, Machine Learning, Computer Sciences, Biological Sciences, Molecular Biology
>
> Note: PhD is not required, as long as applicant shows demonstrated abilities in the following.
>
> Experience required:
>
> Software: Agile software i.e., Jira, GitHub, Anaconda, pyCharm, Jupyter notebooks, OpenCV
>
> Must have languages: Python, SQL, bash scripting, linux command-line
>
> Optional: C++, R, Spark, Hive
>
> Programming environments and infrastructure: Cloud, HPC, Linux, Windows
>
> Familiar with Machine learning platform and libraries such as, TensorFlow, PyTorch, Caffe, Keras, Scikit-learn, scipy, etc. Implementing computer vision models such as ResNet, Deep learning models using Recurrent Neural Networks (CNNs, LSTMs, DNNs), using Support Vector Machines (SVMs) models, probabilistic and un/regression models, data processing and handling activities including data wrangling, computer vision.
>
> Bonus skills: Using BERT NLP models, computer software like OpenVino, targeting GPUs, familiarity with GCP tools and applications, such as BigTable, cloudSQL, DataFlow, CloudML, DataProc, etc., dashboard development and implementation, Compiling and configuring HPC environments, developing applications using MPI, OpenMPI, pyMIC, using job schedulers such as PBS, Scrum; Bioinformatics tools such as BLAST, Qiime2
>
> Job Physical Location: Washington, D.C. USA; remote work station is an option.
>
> Compensation: $65,000 (annual) with benefits, and some travel
>
> Although US citizenship is not required, proper work status in the USA is required. Unfortunately, we cannot sponsor a visa at this time.
>
> Expected start date: November/December 2021 (up to 12 months depending on start date)
>
> Application Deadline: Applications will be reviewed and interviews will take place on a rolling basis until the position is filled.
>
> Application submission process: Please send the following documents via email to Demian A. Willette, Loyola Marymount University (demian.willette @ lmu.edu).
>
> 1. Curriculum Vitae: Including all relevant professional and academic experience; contracts, collaborations, on-going projects, grants funded, list of publications (URLs provided), presentations, workshops, classes; Machine Learning and bioinformatics skills and languages; GitHub/Bitbucket; any experience with processing molecular sequence data (genomics and/or metabarcoding)
>
> 2. Reference contacts: Names, affiliations and contact information (email, phone) for up to three professional references that we will contact in the event that your application leads to an interview.
>
> 3. Transcripts: Transcript showing date of completion of your most relevant degree(s) and grades.
>
> Please send all inquiries to: Demian A. Willette, Loyola Marymount University (demian.willette @ lmu.edu)
>
> Cheryl Ames

via IFTTT


Text

Data Engineering on GCP | Google Cloud Data Engineer | GKCS

Course Description

This four-day instructor-led class gives participants a hands-on introduction to designing and building data processing systems on Google Cloud Platform. Through a combination of presentations, demos, and hands-on labs, participants will learn how to design data processing systems, build end-to-end data pipelines, analyze data, and carry out machine learning. The Data Engineering on GCP course covers structured, unstructured, and streaming data.

Objectives

This Google Cloud Data Engineer course teaches participants the following skills:

Design and build data processing systems on Google Cloud Platform

Process batch and streaming data by implementing autoscaling data pipelines on Cloud Dataflow

Derive business insights from extremely large datasets using Google BigQuery

Train, evaluate and predict using machine learning models using Tensorflow and Cloud ML

Leverage unstructured data using Spark and ML APIs on Cloud Dataproc

Enable instant insights from streaming data

Audience

This class is intended for people responsible for the following:

Extracting, loading, transforming, cleaning, and validating data

Designing pipelines and architectures for data processing

Creating and maintaining machine learning and statistical models

Querying datasets, visualizing query results and creating reports

Prerequisites

To get the most out of this course, participants should have:

Completed Google Cloud Fundamentals- Big Data and Machine Learning course #8325 OR have equivalent experience

Basic proficiency with a common query language such as SQL

Experience with data modeling, extract, transform, load activities

Experience developing applications using a common programming language such as Python

Familiarity with Machine Learning and/or statistics

Content

The course includes presentations, demonstrations, and hands-on labs.

Leveraging Unstructured Data with Cloud Dataproc on Google Cloud Platform

Module 1: Google Cloud Dataproc Overview

Module 2: Running Dataproc Jobs

Module 3: Integrating Dataproc with Google Cloud Platform

Module 4: Making Sense of Unstructured Data with Google’s Machine Learning APIs

Module 5: Serverless data analysis with BigQuery

Module 6: Serverless, autoscaling data pipelines with Dataflow

Module 7: Getting started with Machine Learning

Module 8: Building ML models with Tensorflow

Module 9: Scaling ML models with CloudML

Module 10: Feature Engineering

Module 11: Architecture of streaming analytics pipelines

Module 12: Ingesting Variable Volumes

Module 13: Implementing streaming pipelines

Module 14: Streaming analytics and dashboards

Module 15: High throughput and low-latency with Bigtable

For more IT Training on Google Cloud Courses visit GK Cloud Solutions.

#cloudcomputing#google#cloud#googlecloud#gcp#googlecloudplatform#googlecloudcertified#gcpcloud#googlecloudpartners#cloudcertification#cloudtraining#googlecloudtraining#googlecloudplatformgcp#googlecloudpartner#gcloud#googlecloudcommunity#googlecloudcertification#googleclouddataengineer#dataengineeringongcp#gcpdataengineer#googlecloudprofessionaldataengineer#dataengineeringwithgooglecloudprofessionalcertificate#dataengineeringongooglecloudplatform#googlecloudcertifiedprofessionaldataengineer#dataengineeringwithgooglecloud#googlecloudcertifieddataengineer#dataengineergooglecloud#googlecloudplatformdataengineer#googledataengineercourse#googlecloudprofessionaldataengineercertified



Text

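Xiaomi: ML Engine, a machine learning platform engine built on native K8s extensions

Compared with traditional scheduling systems, Kubernetes is a major step forward in its many extensible interfaces, such as CSI, CNI, CRI, and CRD. In ecosystem terms, Kubernetes is backed by the CNCF community, and its ecosystem of components grows richer by the day. In terms of cloud-native design philosophy, Kubernetes is founded on declarative APIs and, building up from there, integrates well with microservices, serverless, CI/CD, and more, so quite a few companies have begun choosing K8s as the basis for their machine learning platforms. At the ArchSummit Global Architect Summit (Beijing), InfoQ interviewed Chu Xiangyang (褚向陽), a senior software engineer in Xiaomi's AI department, who talked about Xiaomi's machine learning platform and ML Engine, its machine learning platform engine built on native K8s extensions.

In 2015, Chu Xiangyang, now a senior software engineer in Xiaomi's AI department, joined the startup Shurenyun (數人云) and began developing PaaS platforms, making him one of the earlier engineers in China to work on containerized PaaS platforms. He later moved to JD.com's advertising department, where he built up experience with GPUs that laid the groundwork for his later work on Xiaomi's machine learning platform. In the interview, Chu said that, compared with a general-purpose PaaS platform, a machine learning platform is more focused on the business: it requires a clear understanding of machine learning workloads and of where their difficulties lie. During his time at Xiaomi, Chu has helped build and optimize Xiaomi CloudML …

from Xiaomi: ML Engine, a machine learning platform engine built on native K8s extensions, via KKNEWS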


Text

Data Science for Startups: Introduction

I recently changed industries and joined a startup company where I’m responsible for building up a data science discipline. While we already had a solid data pipeline in place when I joined, we didn’t have processes in place for reproducible analysis, scaling up models, and performing experiments. The goal of this series of blog posts is to provide an overview of how to build a data science platform from scratch for a startup, providing real examples using Google Cloud Platform (GCP) that readers can try out themselves.

This series is intended for data scientists and analysts who want to move beyond the model training stage and build data pipelines and data products that can be impactful for an organization. However, it could also be useful for other disciplines that want a better understanding of how to work with data scientists to run experiments and build data products. It is intended for readers with programming experience, and will include code examples primarily in R and Java.

Why Data Science?

One of the first questions to ask when hiring a data scientist for your startup is: how will data science improve our product? At Windfall Data, our product is data, and therefore the goal of data science aligns well with the goal of the company: to build the most accurate model for estimating net worth. At other organizations, such as a mobile gaming company, the answer may not be so direct, and data science may be more useful for understanding how to run the business than for improving products. However, in these early stages it’s usually beneficial to start collecting data about customer behavior, so that you can improve products in the future.

Some of the benefits of using data science at a startup are:

Identifying key business metrics to track and forecast

Building predictive models of customer behavior

Running experiments to test product changes

Building data products that enable new product features

Many organizations get stuck on the first two or three steps, and do not utilize the full potential of data science. The goal of this series of blog posts is to show how managed services can be used for small teams to move beyond data pipelines for just calculating run-the-business metrics, and transition to an organization where data science provides key input for product development.

Series Overview

Here are the topics I am planning to cover in this blog series. As I write new sections, I may add or move around sections. Please leave a comment at the end of this post if there are other topics that you feel should be covered.

Introduction (this post): Provides motivation for using data science at a startup and provides an overview of the content covered in this series of posts. Similar posts include functions of data science, scaling data science and my FinTech journey.

Tracking Data: Discusses the motivation for capturing data from applications and web pages, proposes different methods for collecting tracking data, introduces concerns such as privacy and fraud, and presents an example with Google PubSub.

Data pipelines: Presents different approaches for collecting data for use by an analytics and data science team, discusses approaches with flat files, databases, and data lakes, and presents an implementation using PubSub, DataFlow, and BigQuery. Similar posts include a scalable analytics pipeline and the evolution of game analytics platforms.

Business Intelligence: Identifies common practices for ETLs, automated reports/dashboards and calculating run-the-business metrics and KPIs. Presents an example with R Shiny and Data Studio.

Exploratory Analysis: Covers common analyses used for digging into data such as building histograms and cumulative distribution functions, correlation analysis, and feature importance for linear models. Presents an example analysis with the Natality public data set. Similar posts include clustering the top 1% and 10 years of data science visualizations.

Predictive Modeling: Discusses approaches for supervised and unsupervised learning, and presents churn and cross-promotion predictive models, and methods for evaluating offline model performance.

Model Production: Shows how to scale up offline models to score millions of records, and discusses batch and online approaches for model deployment. Similar posts include Productizing Data Science at Twitch, and Productizing Models with DataFlow.

Experimentation: Provides an introduction to A/B testing for products, discusses how to set up an experimentation framework for running experiments, and presents an example analysis with R and bootstrapping. Similar posts include A/B testing with staged rollouts.

Recommendation Systems: Introduces the basics of recommendation systems and provides an example of scaling up a recommender for a production system. Similar posts include prototyping a recommender.

Deep Learning: Provides a light introduction to data science problems that are best addressed with deep learning, such as flagging chat messages as offensive. Provides examples of prototyping models with the R interface to Keras, and productizing with the R interface to CloudML.
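The Experimentation entry above mentions analyzing an A/B test with bootstrapping. The series presents that analysis in R; the following is only a minimal Python sketch of the general idea, a percentile bootstrap for the difference in means between two groups. The function name, its parameters, and the sample data are hypothetical and do not come from the series.

```python
import random


def bootstrap_diff_ci(control, treatment, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile-bootstrap interval for mean(treatment) - mean(control).

    Hypothetical helper for illustration; not taken from the series.
    """
    rng = random.Random(seed)  # fixed seed so repeated runs give the same interval
    diffs = []
    for _ in range(n_resamples):
        # Resample each group with replacement, keeping the group sizes.
        c = rng.choices(control, k=len(control))
        t = rng.choices(treatment, k=len(treatment))
        diffs.append(sum(t) / len(t) - sum(c) / len(c))
    diffs.sort()
    lo_idx = max(0, int((alpha / 2) * n_resamples))
    hi_idx = min(n_resamples - 1, int((1 - alpha / 2) * n_resamples) - 1)
    return diffs[lo_idx], diffs[hi_idx]


if __name__ == "__main__":
    # Synthetic 0/1 conversion outcomes, made up for this example.
    control = [1] * 40 + [0] * 160
    treatment = [1] * 55 + [0] * 145
    print(bootstrap_diff_ci(control, treatment))
```

If the resulting interval excludes zero, the observed lift is unlikely to be resampling noise alone. A real experiment would also need to consider sample-size planning and multiple comparisons.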

The series is also available as a book in web and print formats.

Tooling

Throughout the series, I’ll be presenting code examples built on Google Cloud Platform. I chose this cloud option because GCP provides a number of managed services that make it possible for small teams to build data pipelines, productize predictive models, and utilize deep learning. It’s also possible to sign up for a free trial with GCP and get $300 in credits. This should cover most of the topics presented in this series, but it will quickly expire if your goal is to dive into deep learning on the cloud.

For programming languages, I’ll be using R for scripting and Java for production, as well as SQL for working with data in BigQuery. I’ll also present other tools such as Shiny. Some experience with R and Java is recommended, since I won’t be covering the basics of these languages.

Ben Weber is a data scientist in the gaming industry with experience at Electronic Arts, Microsoft Studios, Daybreak Games, and Twitch. He also worked as the first data scientist at a FinTech startup.

author: Ben Weber

link: https://towardsdatascience.com/@bgweber


Text

Fwd: Job: Smithsonian_NHM.MachineLearningEvolution

Begin forwarded message:

> From: [email protected]
> Subject: Job: Smithsonian_NHM.MachineLearningEvolution
> Date: 3 October 2021 at 05:47:06 BST
> To: [email protected]
>
> Hello EvolDir Members:
>
> Hiring Now: NSF-funded sub-contract or Postdoctoral Fellow (1 year, with potential for extension), located in Washington, D.C., USA.
>
> Project title: NSF Convergence Accelerator Track E: Innovative seafood traceability network for sustainable use, improved market access, and enhanced blue economy
>
> Project Overview: This proposal will build a cross-cutting traceability network to accelerate the path towards accurate and inclusive monitoring and management of marine bioresources, whose sustainability is vital to feed the global population. Leveraging wide-ranging expertise in fisheries science, marine biology, environmental anthropology, computer science, trade policy, and the fisheries industry, we will develop a powerful tool to achieve long-lasting & transferable solutions. Addressing the global challenge of feeding the human population will require the ocean as a solution.
>
> This NSF Convergence Accelerator project will:
>
> 1. Develop a prototype traceability tool that allows affordable identification of species and area of capture for wild octopus fisheries within the United States and abroad using our proposed machine learning (ML) model “SeaTraceBlueNet” trained on legacy data of environmental metadata, species occurrence and images;
>
> 2. Develop a community-based citizen-science network (fishers, researchers, industry partners, students, etc.) to gather new data (images, metadata and environmental DNA (eDNA)), train on and test the portable eDNA kits and SeaTraceBlueNet dashboard prototype to build the collaborative capacity to establish a standardized traceability system; and,
>
> 3. Set a system in place to connect traceability, sustainability and legality to support the development of a blue economy around the octopus value chain, incorporating the best practices and existing standards from stakeholders.
>
> Qualifications: Demonstrated expertise in Machine Learning (and Bioinformatics, preferably). Although a PhD is not required, professional work experience and a degree in a Machine Learning-related field is required.
>
> Note: Applicants must be current residents of the United States. However, US citizenship is not required. Unfortunately, we cannot sponsor a visa at this time.
>
> Experience sought:
>
> Software: Agile software i.e., Jira, GitHub, Anaconda, pyCharm, Jupyter notebooks, OpenCV, BLAST
>
> Languages: Python, SQL, bash scripting, linux command-line
>
> Optional: C++, R, Spark, Hive
>
> Programming environments and infrastructure to be used: Cloud, HPC, Linux, Windows
>
> Desired: Familiar with Machine learning platform and libraries such as, TensorFlow, PyTorch, Caffe, Keras, Scikit-learn, scipy, etc. Implementing computer vision models such as ResNet, Deep learning models using Recurrent Neural Networks (CNNs, LSTMs, DNNs), using Support Vector Machines (SVMs) models, probabilistic and un/regression models, data processing and handling activities including data wrangling, computer vision.
>
> Bonus skills/knowledge of: Using BERT NLP models, computer software like OpenVino, targeting GPUs, familiarity with GCP tools and applications, such as BigTable, cloudSQL, DataFlow, CloudML, DataProc, etc., dashboard development and implementation, Compiling and configuring HPC environments, developing applications using MPI, OpenMPI, pyMIC, using job schedulers such as PBS, Scrum, can use Linux, Qiime2
>
> Job Physical Location: Smithsonian Institution’s National Museum of Natural History, Laboratory of Analytical Biology (LAB), Washington, D.C. USA
>
> Compensation: Salary includes health and dental benefits, computer provided for the duration of the position
>
> Expected start date: October/November 2021 (up to 12 months depending on start date)
>
> Application Deadline: Applications will be reviewed and interviews will take place on a rolling basis until the position is filled.
>
> Application submission process: Please send the following documents via email to Demian A. Willette, Loyola Marymount University (demian.willette “at” lmu.edu)
>
> 1. Curriculum Vitae: Including all relevant professional and academic experience; any grants, publications (URLs provided), presentations, workshops; Machine Learning and bioinformatics skills and languages; GitHub/Bitbucket; experience analyzing molecular sequence data (if applicable)
>
> 2. Reference contacts: Names, affiliations and contact information (email, phone) for up to three professional references that we will contact in the event that your application leads to an interview.
>
> 3. Transcripts: Transcript showing date of completion of most recent relevant degree.
>
> Inquiries: Demian A. Willette, Loyola Marymount University (demian.willette “at” lmu.edu)
>
> Cheryl Ames

via IFTTT


Photo

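Running Unity ML-Agents on Google Cloud Machine Learning Engine https://ift.tt/2MAubPt

Overview

I am looking for a suitable environment for running Unity ML-Agents (hereafter ML-Agents) in the cloud. As part of that search, I tested whether it runs on Google Cloud Machine Learning Engine (hereafter Cloud ML).

ML-Agents is a library for reinforcement learning built on TensorFlow. Cloud ML is an execution engine for machine learning frameworks such as TensorFlow, so there is no way ML-Agents would fail to run on it. (Or so I assumed.)

What is Google Cloud Machine Learning Engine?

Google Cloud Machine Learning Engine is an "engine", not a development environment: watch out for the constraints https://www.gixo.jp/blog/10960/

To describe ML Engine in one phrase, it is "a cloud service for running machine learning". As a result, unlike other cloud services such as DataRobot, you cannot do development on the service itself.

Unity ML-Agents

Unity Machine Learning Agents beta https://unity3d.com/jp/machine-learning

Unity ML-Agents offers a flexible way to rapidly and efficiently develop and test new AI algorithms across a range of fields, including next-generation robotics and games.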

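Procedure

Prerequisites

This assumes that Cloud ML, Unity, and ML-Agents are already set up. If you do not have an environment yet, see the articles below.

Trying Cloud Machine Learning Engine on a Mac https://cloudpack.media/42306

Installing Unity on a Mac with Homebrew (Unity Hub, Japanese localization) https://cloudpack.media/42142

Setting up a Unity ML-Agents environment on a Mac https://cloudpack.media/42164

Building the app in Unity

With ML-Agents, reinforcement learning proceeds while the Unity app is actually running. You would normally build the app for Mac on a Mac, or for Windows on Windows, but this time it has to run on Cloud ML, so a Linux build is needed.

For the app, use the [3DBall] app included with ML-Agents. Build it by following the steps in the article below, skipping the ones that concern Docker.

Running Unity ML-Agents with Docker on a Mac https://cloudpack.media/42285

The unity-volume folder should now contain the following file and folder.

    > ll ml-agents/unity-volume/
    3DBall.x86_64
    3DBall_Data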

Preparing to run on Cloud ML

This is the groundwork for running on Cloud ML.

Setting variables

Set variables for the Cloud Storage bucket name, the region in which to use Cloud ML, and so on. Choose any values you like for BUCKET_NAME and JOB_NAME.

fish

    > set -x BUCKET_NAME your-bucket-name
    > set -x REGION us-central1
    > set -x JOB_NAME gcp_ml_cloud_run_01

bash

    > export BUCKET_NAME=your-bucket-name
    > export REGION=us-central1
    > export JOB_NAME=gcp_ml_cloud_run_01

Specifying hyperparameters

Since the goal here is only to verify that things work, reduce the number of training steps. The ML-Agents library has a YAML file for hyperparameters, trainer_config.yaml. Set max_steps there so that training stops after 5,000 steps.

    > cd your-directory/ml-agents
    > vi python/trainer_config.yaml

    Ball3DBrain:
        normalize: true
        batch_size: 64
        buffer_size: 12000
        summary_freq: 1000
        time_horizon: 1000
        lambd: 0.99
        gamma: 0.995
        beta: 0.001
    +   max_steps: 5000

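Uploading the app and the hyperparameter file

On Cloud ML, the files used for training are placed in Cloud Storage. Here we copy the Unity app's file and folder and the hyperparameter settings file to Cloud Storage. Note that a Linux build will not start without its Data folder. A folder is awkward to move around as-is, so compress it into a ZIP file.

    > cd your-directory/ml-agents

    # Compress the Data folder (run inside unity-volume so the archive
    # unpacks to 3DBall_Data/ and sits where the gsutil command below expects it)
    > cd unity-volume
    > zip -r 3DBall_Data.zip 3DBall_Data/
    > cd ..

    # Copy to the Cloud Storage bucket
    # Unity app files
    > gsutil cp unity-volume/3DBall.x86_64 gs://$BUCKET_NAME/data/3DBall.x86_64
    > gsutil cp unity-volume/3DBall_Data.zip gs://$BUCKET_NAME/data/3DBall_Data.zip

    # Hyperparameter settings file
    > gsutil cp python/trainer_config.yaml gs://$BUCKET_NAME/data/trainer_config.yaml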
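The entry paths inside the ZIP matter here. The archive is later unpacked into the working directory next to 3DBall.x86_64, so its entries need to start with 3DBall_Data/ rather than a longer prefix. As a minimal sketch of that packaging step in Python rather than with the zip command, the helper below stores paths relative to the folder's parent. The function name zip_folder is hypothetical and is not part of ML-Agents.

```python
import os
import zipfile


def zip_folder(folder, zip_path):
    """Zip `folder` so that every entry starts with the folder's own name.

    Hypothetical helper for illustration; `zip_path` should live outside `folder`.
    """
    folder = os.path.abspath(folder)
    parent = os.path.dirname(folder)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(folder):
            for name in files:
                full = os.path.join(root, name)
                # Store the path relative to the parent, e.g. "3DBall_Data/<file>".
                zf.write(full, os.path.relpath(full, parent))
    return zip_path


if __name__ == "__main__":
    # Paths follow the article's layout; adjust them to your checkout.
    zip_folder("unity-volume/3DBall_Data", "unity-volume/3DBall_Data.zip")
```

Unpacking such an archive with extractall() into a directory recreates 3DBall_Data/ directly under it.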

Preparing the training entry-point file

python/learn.py, included with ML-Agents, is the file that starts training. Make the following preparations so that it can run on Cloud ML.

Package the Python code

Add Cloud Storage integration

Packaging the Python code

Cloud ML runs an uploaded Python package, so prepare for that.

    # Add a file so that setup.py in the python folder works on Cloud ML
    > touch python/MANIFEST.in
    > vi python/MANIFEST.in
    +include requirements.txt

    # Create a package for running learn.py
    > mkdir python/tasks
    > touch python/tasks/__init__.py
    > cp python/learn.py python/tasks/learn.py

Customizing learn.py

Add code to learn.py that fetches the files from Cloud Storage and saves the training results back to Cloud Storage. The file names are hard-coded here. This is only a verification run, so please bear with it. The gsutil command is used for fetching from and uploading to Cloud Storage, but implementing this in Python would work just as well. Cut corners wherever you can ^^

    > vi python/tasks/learn.py

python/tasks/learn.py

    # Added imports
    import shlex
    import subprocess
    import zipfile
    # End of added imports

    (omitted)

    # General parameters
    (omitted)
    fast_simulation = not bool(options['--slow'])
    no_graphics = options['--no-graphics']

    # Added from here
    # Make the logs visible in Stackdriver Logging
    logging.basicConfig(level=logging.INFO)

    # Cloud Storage paths
    trainer_config_file = 'gs://your-bucket-name/data/trainer_config.yaml'
    app_file = 'gs://your-bucket-name/data/3DBall.x86_64'
    app_data_file = 'gs://your-bucket-name/data/3DBall_Data.zip'

    # Paths used when saving into the execution environment
    file_name = env_path.strip().replace('.x86_64', '')
    cwd = os.getcwd() + '/'
    app_data_local_dir = os.path.join(cwd, file_name + '_Data')
    app_local_file = os.path.join(cwd, file_name + '.x86_64')
    app_data_local_file = app_data_local_dir + '.zip'

    # Copy from Cloud Storage; conveniently, gsutil is available in the Cloud ML environment
    logger.info(subprocess.call(['gsutil', 'cp', trainer_config_file, cwd]))
    logger.info(subprocess.call(['gsutil', 'cp', app_file, cwd]))
    logger.info(subprocess.call(['gsutil', 'cp', app_data_file, cwd]))

    # Unpack the Data archive so the Unity app can run
    with zipfile.ZipFile(app_data_local_file) as existing_zip:
        existing_zip.extractall(cwd)

    # Make the Unity app executable
    logger.info(subprocess.call(shlex.split('chmod u+x ' + app_local_file)))
    # End of additions

    (omitted)

    tc.start_learning()

    # Added from here
    # Upload the model files to Cloud Storage
    # TODO: compress them
    models_local_path = './models/{run_id}'.format(run_id=run_id)
    models_storage_path = 'gs://your-bucket-name/{run_id}/models'.format(run_id=run_id)
    logger.info(subprocess.call(['gsutil', 'cp', '-r', models_local_path, models_storage_path]))
    # End of additions
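The article notes that the Cloud Storage transfers could be implemented in Python instead of shelling out to gsutil. The following is a minimal sketch of what that might look like with the google-cloud-storage client library. It assumes the library is installed and credentials are available in the execution environment, which the article does not confirm for this Cloud ML runtime. The helper names split_gs_uri, download, and upload are hypothetical.

```python
def split_gs_uri(uri):
    """Split 'gs://bucket/path/to/object' into (bucket, object_name)."""
    prefix = "gs://"
    if not uri.startswith(prefix):
        raise ValueError("not a gs:// URI: " + uri)
    bucket, _, object_name = uri[len(prefix):].partition("/")
    if not bucket or not object_name:
        raise ValueError("expected gs://bucket/object, got: " + uri)
    return bucket, object_name


def download(uri, local_path):
    """Download one object to a local file (assumes google-cloud-storage is installed)."""
    # Imported lazily so split_gs_uri stays usable without the library.
    from google.cloud import storage

    bucket_name, object_name = split_gs_uri(uri)
    client = storage.Client()
    client.bucket(bucket_name).blob(object_name).download_to_filename(local_path)


def upload(local_path, uri):
    """Upload one local file to an object (assumes google-cloud-storage is installed)."""
    from google.cloud import storage

    bucket_name, object_name = split_gs_uri(uri)
    client = storage.Client()
    client.bucket(bucket_name).blob(object_name).upload_from_filename(local_path)
```

These helpers move one file at a time. The recursive `gsutil cp -r` used for the models folder would need a loop over the folder's files, for example with os.walk.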

Creating the package

The gcloud command used to operate Cloud ML can also build the package automatically, but here we package it manually.

> cd python
> python setup.py sdist
(omitted)
Writing unityagents-0.4.0/setup.cfg
Creating tar archive
removing 'unityagents-0.4.0' (and everything under it)

> ll dist
total 96
-rw-r--r-- 1 user users 45K 8 6 11:14 unityagents-0.4.0.tar.gz

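Running it on Cloud ML

Now that the preparation is done, let's submit a job to Cloud ML and run the training.

The parameter list contains a bare --. Everything after it is passed as arguments to learn.py, the module specified with --module-name, so it must not be removed. (I got burned by this once.)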

> cd any-directory/ml-agents
> gcloud ml-engine jobs submit training $JOB_NAME \
    --python-version=3.5 \
    --runtime-version 1.8 \
    --module-name tasks.learn \
    --packages python/dist/unityagents-0.4.0.tar.gz \
    --region $REGION \
    --staging-bucket gs://$BUCKET_NAME \
    -- \
    3DBall \
    --run-id $JOB_NAME \
    --train

Job [gcp_ml_cloud_run_01] submitted successfully.
Your job is still active. You may view the status of your job with the command

  $ gcloud ml-engine jobs describe gcp_ml_cloud_run_01

or continue streaming the logs with the command

  $ gcloud ml-engine jobs stream-logs gcp_ml_cloud_run_01
jobId: gcp_ml_cloud_run_01
state: QUEUED

Updates are available for some Cloud SDK components. To install them,
please run:
  $ gcloud components update
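To make the role of the -- separator concrete, here is a small illustration of the convention. It is not gcloud's actual parser, only a sketch of the idea that arguments before the separator belong to the tool and arguments after it are handed to the module. The function name `split_on_double_dash` is hypothetical.

```python
def split_on_double_dash(argv):
    """Return (args_before, args_after) around the first bare '--'."""
    if '--' not in argv:
        return list(argv), []
    idx = argv.index('--')
    return list(argv[:idx]), list(argv[idx + 1:])


command = [
    '--module-name', 'tasks.learn',
    '--region', 'us-central1',
    '--',
    '3DBall', '--run-id', 'gcp_ml_cloud_run_01', '--train',
]
gcloud_args, module_args = split_on_double_dash(command)
print(module_args)
# ['3DBall', '--run-id', 'gcp_ml_cloud_run_01', '--train']
```

Without the separator, nothing marks where the arguments for learn.py begin, so a flag such as --train would be treated as an option of the submit command itself.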

Okay.

Let's check that the job was registered.

> gcloud ml-engine jobs describe $JOB_NAME
createTime: '2018-08-06T02:20:53Z'
etag: sm-_r3BqJBo=
jobId: gcp_ml_cloud_run_01
startTime: '2018-08-06T02:21:24Z'
state: RUNNING
trainingInput:
  args:
  - 3DBall
  - --run-id
  - gcp_ml_cloud_run_01
  - --train
  packageUris:
  - gs://your-bucket-name/gcp_ml_cloud_run_01/xxxx/unityagents-0.4.0.tar.gz
  pythonModule: tasks.learn
  pythonVersion: '3.5'
  region: us-central1
  runtimeVersion: '1.8'
trainingOutput:
  consumedMLUnits: 0.01
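If you want to check the job state from a script rather than by eye, the `state:` line of the describe output is enough. The sketch below reads that line from captured text. Only QUEUED and RUNNING appear in this article; treating SUCCEEDED, FAILED and CANCELLED as the finished states is an assumption based on the ML Engine job states, and both function names are hypothetical.

```python
# Assumed set of states in which a job will not change any more.
TERMINAL_STATES = {'SUCCEEDED', 'FAILED', 'CANCELLED'}


def parse_job_state(describe_output):
    """Return the top-level 'state' value from describe output, or None."""
    for line in describe_output.splitlines():
        # Only match the unindented key, not nested fields.
        if line.startswith('state:'):
            return line.split(':', 1)[1].strip()
    return None


def is_finished(state):
    """True once the job has reached one of the assumed terminal states."""
    return state in TERMINAL_STATES


sample = """jobId: gcp_ml_cloud_run_01
startTime: '2018-08-06T02:21:24Z'
state: RUNNING
trainingInput:
  region: us-central1
"""
state = parse_job_state(sample)
print(state, is_finished(state))
# RUNNING False
```

In practice the text would come from running `gcloud ml-engine jobs describe $JOB_NAME` through `subprocess` and repeating the check at an interval until `is_finished` returns True.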

Let's look at the logs to see whether the job ran.

> gcloud ml-engine jobs stream-logs $JOB_NAME
(omitted)
INFO 2018-08-06 11:22:50 +0900 master-replica-0 List of nodes to export :
INFO 2018-08-06 11:22:50 +0900 master-replica-0 action
INFO 2018-08-06 11:22:50 +0900 master-replica-0 value_estimate
INFO 2018-08-06 11:22:50 +0900 master-replica-0 action_probs
INFO 2018-08-06 11:22:51 +0900 master-replica-0 Restoring parameters from ./models/gcp_ml_cloud_run_01/model-5001.cptk
INFO 2018-08-06 11:22:51 +0900 master-replica-0 Froze 16 variables.
(omitted)
INFO 2018-08-06 11:22:54 +0900 master-replica-0 Module completed; cleaning up.
INFO 2018-08-06 11:22:54 +0900 master-replica-0 Clean up finished.
INFO 2018-08-06 11:22:54 +0900 master-replica-0 Task completed successfully.
INFO 2018-08-06 11:27:32 +0900 service Job completed successfully.

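Okay.

ML-Agents has now run on Cloud ML, and the result files should have been written to the models folder. Because learn.py copies the models folder to Cloud Storage, you can copy that folder to your local machine, embed the .bytes file in the Unity app, and run it.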

> gsutil ls gs://your-bucket-name/$JOB_NAME/models
gs://your-bucket-name/gcp_ml_cloud_run_01/models/3DBall_gcp_ml_cloud_run_01.bytes
gs://your-bucket-name/gcp_ml_cloud_run_01/models/checkpoint
gs://your-bucket-name/gcp_ml_cloud_run_01/models/model-5001.cptk.data-00000-of-00001
gs://your-bucket-name/gcp_ml_cloud_run_01/models/model-5001.cptk.index
gs://your-bucket-name/gcp_ml_cloud_run_01/models/model-5001.cptk.meta
gs://your-bucket-name/gcp_ml_cloud_run_01/models/raw_graph_def.pb

You can also check the results with TensorBoard.

> gsutil cp -r gs://your-bucket-name/$JOB_NAME/models any-local-folder/
> tensorboard --logdir=any-local-folder/models

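That confirms it runs. Nailed it ^^

From here, by making learn.py a bit more practical and using services such as Cloud Pub/Sub and Cloud Functions, it looks like you could build a nice setup where simply uploading a Unity app to Cloud Storage automatically kicks off reinforcement learning ^^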
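As a rough idea of what that automation could involve, the sketch below builds a training job description from an upload notification. The `trainingInput` fields mirror the ones shown in the `jobs describe` output earlier in this article. Everything else is an assumption: the function names are hypothetical, the event is assumed to be a dict with `bucket` and `name` keys, and learn.py would still need to stop hard-coding its Cloud Storage paths for this to be useful.

```python
import os

UNITY_SUFFIX = '.x86_64'


def build_training_job(job_id, env_name, package_uri, region='us-central1'):
    """Build a job description shaped like the describe output above."""
    return {
        'jobId': job_id,
        'trainingInput': {
            'args': [env_name, '--run-id', job_id, '--train'],
            'packageUris': [package_uri],
            'pythonModule': 'tasks.learn',
            'pythonVersion': '3.5',
            'region': region,
            'runtimeVersion': '1.8',
        },
    }


def job_from_upload_event(event, job_id, package_uri):
    """Return a job description for an uploaded Unity binary, else None.

    Assumption: event is a dict carrying the uploaded object's 'bucket'
    and 'name', as a storage-triggered function would receive.
    """
    file_name = os.path.basename(event['name'])
    if not file_name.endswith(UNITY_SUFFIX):
        return None  # Ignore uploads that are not Unity executables.
    env_name = file_name[:-len(UNITY_SUFFIX)]
    return build_training_job(job_id, env_name, package_uri)


event = {'bucket': 'your-bucket-name', 'name': 'data/3DBall.x86_64'}
job = job_from_upload_event(
    event, 'gcp_ml_cloud_run_02',
    'gs://your-bucket-name/packages/unityagents-0.4.0.tar.gz')
print(job['trainingInput']['args'])
# ['3DBall', '--run-id', 'gcp_ml_cloud_run_02', '--train']
```

Submitting the resulting description to Cloud ML, and choosing a unique job ID per upload, are left out here because they depend on how the function is deployed.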

References

Google Cloud Machine Learning Engine is an "engine", not a development environment ~Watch out for the constraints~ https://www.gixo.jp/blog/10960/

Unity Machine Learning Agents beta https://unity3d.com/jp/machine-learning

Trying out Cloud Machine Learning Engine on a Mac https://cloudpack.media/42306

Installing Unity on a Mac with homebrew (Unity Hub, Japanese localization) https://cloudpack.media/42142

Setting up a Unity ML-Agents environment on a Mac https://cloudpack.media/42164

Running Unity ML-Agents in Docker on a Mac https://cloudpack.media/422854

Packaging a training application https://cloud.google.com/ml-engine/docs/tensorflow/packaging-trainer?hl=ja

[mac] Commands for working with zip files https://qiita.com/griffin3104/items/948e38aab62bbb0d0610

Checking and changing permissions on Linux (for absolute beginners) https://qiita.com/shisama/items/5f4c4fa768642aad9e06

17.5.1. Using the subprocess module https://docs.python.jp/3/library/subprocess.html

Accessing files in Google Cloud Storage from CloudML https://qiita.com/mikebird28/items/543581ef04476a76d3e0

Tips for running Python on Google Cloud ML Engine https://rooter.jp/ml/google-cloud-ml-engine-python-tips/

Compressing and extracting zip files in Python with zipfile https://note.nkmk.me/python-zipfile/

I tried every gsutil command, so here is an explanation (part 1) https://www.apps-gcp.com/gsutil-command-explanation-part1/

Original article:

"Running Unity ML-Agents on Google Cloud Machine Learning Engine"

August 28, 2018 at 02:00PM
