#cloudcomposer


How Can We Run Apache Airflow on Google Cloud?

Apache Airflow on Google Cloud

Are you considering running Apache Airflow on Google Cloud? It is a popular choice for managing complex sequences of tasks, such as Extract, Transform, and Load (ETL) jobs or data analytics pipelines. Apache Airflow is a sophisticated tool for scheduling work and graphing dependencies. It uses a Directed Acyclic Graph (DAG) to order and relate the many tasks in your workflows, including scheduling a task to run at a specific time.

What are the different configuration options for Apache Airflow on Google Cloud? Making the wrong choice could lead to lower availability or higher costs: the infrastructure could fail, or you may need to build multiple environments such as dev, staging, and prod. This post examines three ways to run Apache Airflow on Google Cloud and discusses the advantages and disadvantages of each. Terraform code for every approach is available on GitHub so you can try it yourself.

Note that the Terraform in this article uses a directory structure. The files under modules have the same format as ordinary Terraform code. If you are a developer, think of the modules directory as a kind of library; the actual business code lives in main.tf. Work the way you would in development: start from main.tf and keep shared code in directories such as modules and libraries.

Apache Airflow best practices

Let’s look at three ways to run Apache Airflow.

Compute Engine

Installing and running Airflow directly on a Compute Engine virtual machine instance is a common way to use Airflow on Google Cloud. This approach has the following advantages:

It costs less than the others.

You only need to understand virtual machines.

Nevertheless, there are drawbacks as well:

You have to maintain the virtual machine yourself.

It offers lower availability.

These drawbacks can be significant, but Compute Engine is a quick way to build a proof of concept for adopting Airflow.

First, create a Compute Engine instance with the following Terraform code (some code is omitted for brevity). The allow block is the firewall configuration. The Airflow web server uses port 8080 by default, so that port should be open. Feel free to change the other settings.

main.tf

    module "gcp_compute_engine" {
      source       = "./modules/google_compute_engine"
      service_name = local.service_name

      region       = local.region
      zone         = local.zone
      machine_type = "e2-standard-4"
      allow = {
        …
        2 = {
          protocol = "tcp"
          ports    = ["22", "8080"]
        }
      }
    }

The google_compute_engine directory, referenced as source in main.tf above, contains the code and files that take the variables passed in and actually build the instance. Note how it receives machine_type.

modules/google_compute_engine/google_compute_instance.tf

    resource "google_compute_instance" "default" {
      name         = var.service_name
      machine_type = var.machine_type
      zone         = var.zone
      …
    }
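For var.machine_type and the other inputs to resolve, the module has to declare them as input variables. The article does not show that file, so the following is only a sketch of what it might look like; the file name modules/google_compute_engine/variables.tf and the descriptions are assumptions.

```hcl
# Hypothetical modules/google_compute_engine/variables.tf (not shown in the article).
# Each variable matches an argument passed to the module block in main.tf.

variable "service_name" {
  type        = string
  description = "Name given to the Compute Engine instance."
}

variable "machine_type" {
  type        = string
  description = "Compute Engine machine type, for example e2-standard-4."
}

variable "zone" {
  type        = string
  description = "Zone in which the instance is created."
}

# main.tf also passes region and allow, so the module would need matching
# declarations for those as well. Their exact shape is not shown in the
# article, so they are left out of this sketch.
```

Terraform rejects a module argument that has no matching variable declaration, which is why every value passed in main.tf needs a counterpart here.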

Use Terraform to run the code you wrote above:

$ terraform apply

After a short wait, a Compute Engine instance is created. Next, connect to the instance and install Airflow, then start Airflow once the installation finishes.

You can now access Airflow from your browser! If you plan to run Airflow on Compute Engine, take extra care with your firewall settings: even if the password is compromised, Airflow should be reachable only by authorized users. The sample opens access with only the bare minimum of firewall settings.
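One way to tighten access is to restrict the source IP ranges allowed to reach port 8080. The sketch below uses a standalone google_compute_firewall resource rather than the article's module; the resource name, the default network, the airflow tag, and the 203.0.113.0/24 range (a reserved documentation range) are all placeholders to replace with your own values.

```hcl
# Illustrative only: allow the Airflow web UI from one trusted CIDR range.
resource "google_compute_firewall" "airflow_web" {
  name    = "allow-airflow-web" # hypothetical rule name
  network = "default"           # assumes the instance is on the default network

  allow {
    protocol = "tcp"
    ports    = ["8080"] # Airflow web server default port
  }

  # Only clients in this range can reach the port. Placeholder value.
  source_ranges = ["203.0.113.0/24"]

  # Applies the rule only to instances carrying this network tag (assumed tag).
  target_tags = ["airflow"]
}
```

With a rule like this, a leaked password alone is not enough to reach the login page from an arbitrary network.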

After logging in, you should see a screen like the one below. Airflow also displays its example DAGs. Take a look around the screen.

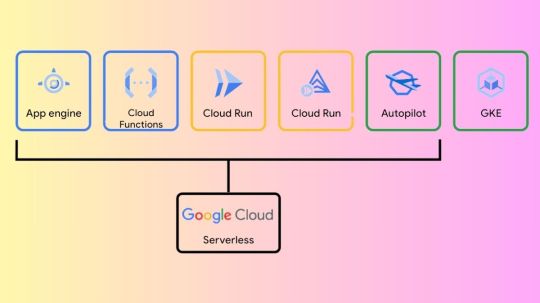

GKE Autopilot

Google Kubernetes Engine (GKE), Google’s managed Kubernetes service, makes it very easy to run Apache Airflow on Google Cloud. You can also run in GKE Autopilot mode, which automatically scales your cluster to match demand and helps you avoid running out of compute resources. Because GKE Autopilot is serverless, you do not have to manage your own Kubernetes nodes.

GKE Autopilot provides scalability and high availability, and you can draw on the robust Kubernetes ecosystem. For example, the kubectl command gives you fine-grained control over workloads, and you can monitor Airflow alongside the other business services in your cluster. However, if you are not very familiar with Kubernetes, you may end up spending a lot of time managing Kubernetes rather than focusing on Airflow.
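As a rough idea of what the Autopilot option involves, an Autopilot cluster can be declared with the google_container_cluster resource by setting enable_autopilot. This is a minimal sketch, not the article's code: the cluster name and the use of local.region are assumptions, and Airflow itself would still have to be deployed onto the cluster separately, which is not shown.

```hcl
# Illustrative only: a GKE cluster in Autopilot mode.
resource "google_container_cluster" "airflow" {
  name     = "airflow-autopilot" # hypothetical cluster name
  location = local.region        # Autopilot clusters are regional; assumes local.region is defined

  # Autopilot mode: Google provisions and scales the nodes,
  # so no node pools are declared here.
  enable_autopilot = true
}
```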

Cloud Composer

The third option is Cloud Composer, a fully managed data workflow orchestration service on Google Cloud. As a managed service, Cloud Composer simplifies installing Airflow and relieves you of maintaining the Airflow infrastructure. However, it offers fewer options. One unusual limitation is that storage cannot be shared between DAGs. Because you have less control over CPU and memory usage, you may also need to balance that usage yourself.
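For comparison, a Cloud Composer environment can be declared with the google_composer_environment resource. The following is a minimal sketch under stated assumptions: the environment name, the use of local.region, and the image version alias are placeholders, and a real project may also need a service account and IAM roles configured, which are omitted here.

```hcl
# Illustrative only: a managed Airflow environment on Cloud Composer.
resource "google_composer_environment" "airflow" {
  name   = "airflow-composer" # hypothetical environment name
  region = local.region       # assumes local.region is defined

  config {
    software_config {
      # Composer and Airflow version alias. Assumed value; check which
      # versions are currently supported before using it.
      image_version = "composer-2-airflow-2"
    }
  }
}
```

Compared with the Compute Engine and GKE sketches, there is no instance or cluster to describe here, which reflects the trade-off in the text: less to maintain, but fewer knobs to turn.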

Conclusion

If you plan to use Airflow in production, you must weigh three things: availability, performance, and cost. This post has covered three different ways of running Apache Airflow on Google Cloud, each with its own advantages and disadvantages.

Keep in mind that these are only the minimum criteria for choosing an Airflow environment. If you are using Airflow for a side project, writing some Python code to define a DAG may be enough. To run Airflow in production, however, you will also need to configure the Executor (LocalExecutor, CeleryExecutor, KubernetesExecutor, and so on), Airflow Core (concurrency, parallelism, SQL pool size, and so on), and other components as needed.

Read more on Govindhtech.com

#Google#googlecloud#apacheairflow#computeengine#GKEautopilot#cloudcomposer#govindhtech#news#technews#Technology#technologynews#technologytrends


Link

#GCP#BigQuery#datawarehousing#dataproc#dataflow#datafusion#cloudcomposer#datapipe#dataorchestration#dataautomation#learning#learningneverstops#learningeveryday#continouslearning#selflearning#keeplearning#happylearning#learnsomethingnew#learningisfun#quarentinediaries#newskills


Photo

P'Tiw treated me to #shrimpfriedrice (#ข้าวผัดกุ้ง), so I'm having a late-night bite while trying the @AcloudGuru #Playground to test #CloudComposer & #CloudDataproc (at Uthai Thani) https://www.instagram.com/p/CkBWlm1puMf/?igshid=NGJjMDIxMWI=


Photo

@devopsdotcom : Google Looks to Simplify Building of Workflows https://t.co/YmSUpVpR30 @mvizard #apache #cloudcomposer #google #opensource https://t.co/xhmUmN04vQ
